Abstract

In this study, we aimed to predict mechanical ventilation requirement and mortality using computational modeling of chest radiographs (CXRs) for coronavirus disease 2019 (COVID-19) patients. This two-center, retrospective study analyzed 530 deidentified CXRs from 515 COVID-19 patients treated at Stony Brook University Hospital and Newark Beth Israel Medical Center between March and August 2020. Linear discriminant analysis (LDA), quadratic discriminant analysis (QDA), and random forest (RF) machine learning classifiers to predict mechanical ventilation requirement and mortality were trained and evaluated using radiomic features extracted from patients’ CXRs. Deep learning (DL) approaches were also explored for the clinical outcome prediction task and a novel radiomic embedding framework was introduced. All results are compared against radiologist grading of CXRs (zone-wise expert severity scores). Radiomic classification models had mean area under the receiver operating characteristic curve (mAUCs) of 0.78 ± 0.05 (sensitivity = 0.72 ± 0.07, specificity = 0.72 ± 0.06) and 0.78 ± 0.06 (sensitivity = 0.70 ± 0.09, specificity = 0.73 ± 0.09), compared with expert scores mAUCs of 0.75 ± 0.02 (sensitivity = 0.67 ± 0.08, specificity = 0.69 ± 0.07) and 0.79 ± 0.05 (sensitivity = 0.69 ± 0.08, specificity = 0.76 ± 0.08) for mechanical ventilation requirement and mortality prediction, respectively. Classifiers using both expert severity scores and radiomic features for mechanical ventilation (mAUC = 0.79 ± 0.04, sensitivity = 0.71 ± 0.06, specificity = 0.71 ± 0.08) and mortality (mAUC = 0.83 ± 0.04, sensitivity = 0.79 ± 0.07, specificity = 0.74 ± 0.09) demonstrated improvement over either artificial intelligence or radiologist interpretation alone. Our results also suggest instances in which the inclusion of radiomic features in DL improves model predictions over DL alone. The models proposed in this study and the prognostic information they provide might aid physician decision making and efficient resource allocation during the COVID-19 pandemic.

Keywords: COVID-19, radiography, radiomics, deep learning, artificial intelligence, machine learning

1. Introduction

Coronavirus disease 2019 (COVID-19), an illness caused by novel severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2), has spread rapidly across the world, with over 200 million cases internationally and over 35 million cases in the United States as of 5 August 2021 [1]. Advanced cases of the disease can progress to acute respiratory distress syndrome requiring mechanical ventilation [2,3,4,5,6,7]. Thus far, over 4.2 million people have died internationally [1]. The ability to identify patients that might progress to critical illness from initial clinical presentation can better guide clinical management strategies and improve patient outcomes [2,3,4]. Several studies have demonstrated that radiologic imaging may be useful in this regard [2,7,8].

In the United States, chest radiographs (CXRs) are the primary imaging modality for the monitoring of COVID-19, and the American College of Radiology has recommended that computed chest tomography (CT) be reserved only for selected patients with limited specific clinical indications including severe disease [2,5,9]. However, CXRs have lower resolution than CT images and provide 2-Dimensional (2D) rather than 3D representations of the lungs. These features make CXRs more difficult to interpret than CTs. Early reports suggested that radiologist diagnosis of COVID-19 from CXR had a sensitivity of 69%, compared to a sensitivity of up to 97% on CT [10,11]. Nevertheless, portable radiography is the preferred and often the only available imaging modality in high-volume hospital settings.

Recent studies have qualitatively described the association of ground-glass opacities and lung consolidations with disease severity and progression on CXR and CT [2,5,6,7,11,12]. Specifically, the presence of opacities in multiple lobes has been shown to predict severe illness, and several CXR scoring systems have been developed to assess disease severity based upon this premise [2,6,7]. Studies have also evaluated various clinical biomarkers and comorbidities as predictors of disease progression, and there is some evidence that imaging data might complement these models [4,5,6,13,14,15,16]. However, current studies to model clinical outcomes in COVID-19 primarily rely on less commonly used CTs or qualitative analysis of CXRs [2,4,5,6,10,12]. In this multi-site study, we utilized quantitative techniques to better evaluate the role of CXR in predicting patient outcomes.

Computational radiology employs machine learning to interpret medical images. Two general approaches include deep learning (DL) and radiomic analysis [8,17]. DL makes use of neural networks to iteratively learn features from CXRs using convolution operations. Radiomic features are distinct, handcrafted attributes that can be directly related to the visual characteristics of an image. While recent studies have used these techniques to study COVID-19, few have applied them to multi-institutional CXR cohorts [8,12,18,19,20,21,22].

In this study, we developed computational models to identify clinically actionable information from baseline CXRs taken from COVID-19 patients. A baseline CXR refers to any CXR taken on the first day for which CXR data exist for a patient treated for COVID-19 infection. First, we developed a baseline model using radiologist assessment of CXR severity (zone-wise expert scores) to predict mechanical ventilation requirement and mortality in order to determine the efficacy of machine learning approaches. We then employed machine learning classifiers to predict patient outcomes using computer-extracted radiomic features from baseline CXR. Our third experiment predicted mechanical ventilation requirement and mortality using DL of patient baseline CXRs. Fourth, we proposed a combined DL model using both processed CXRs and corresponding radiomic features to predict clinical outcomes. A novel synergistic approach utilizing radiomic-embedded maps for DL is presented and may provide new interpretations of predefined radiomic features. Figure 1 displays a general flowchart of experiments.

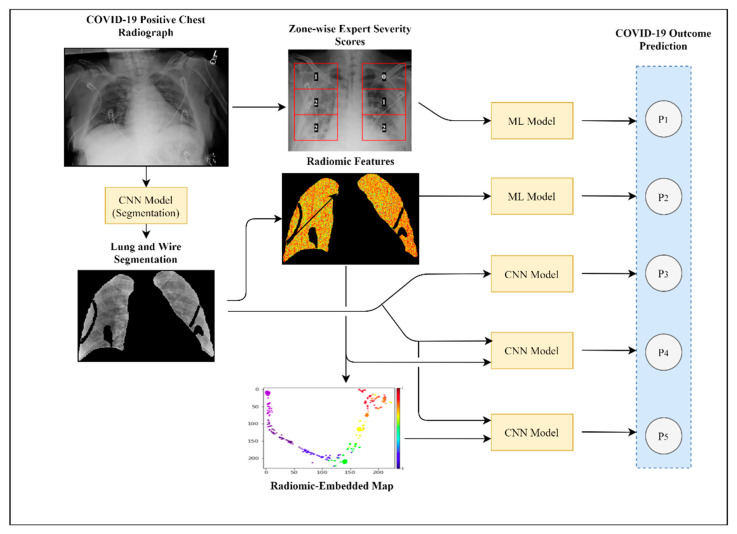

Figure 1.

Study pipeline. Visualized here is the schema for the experiments performed in this study. Experiment 1 demonstrated the use of radiologist expert scoring of CXRs for clinical outcome prediction. In Experiment 2, we extracted predefined radiomic features from segmented CXRs and input them into machine learning models such as linear discriminant analysis, quadratic discriminant analysis, and random forest classifiers. Experiment 3 used a CNN deep learning model to predict COVID-19 patient outcomes using segmented CXRs as inputs. In Experiment 4, we investigated two separate methods (P4 and P5) of integrating radiomic features with segmented CXRs for DL analysis.

Related Work

Machine learning methods have been applied extensively to the study of COVID-19, analyzing both clinical variables and medical images for disease diagnosis and prognosis [3,8,12,13,14,15,18,19,20,21,23,24]. In the domain of computational radiology, many studies have focused primarily on CT image analysis, though further work is now being performed on CXRs [19,20,24]. However, few studies attempt to predict COVID-19 patient clinical outcomes using CXRs, and the public datasets often studied have been critiqued for potentially biasing results [8,19,23]. Below, we perform a brief survey of related and relevant works.

First, several scoring systems based upon radiologist interpretation have been proposed for the grading of COVID-19 severity using CXRs. Balbi et al. have described their own proposed Brixia score and measurements of diseased lung involvement and their correlation with mortality in COVID-19 patients [6]. Similarly, Toussie et al. and Shen et al. have proposed CXR scoring systems that they have shown to correlate significantly with various outcomes including survival, hospitalization, and intubation [2,22].

In general, DL has been widely used in the field of natural and medical image analysis. In this work, we employed both ResNet and U-Net DL architectures, modifying them for our particular use cases [25,26]. ResNet has been previously applied to a variety of classification tasks using medical images, and U-Net is the commonly widely utilized DL architecture for medical image segmentation [27,28]. The use of these architectures is commonplace for medical image classification and segmentation tasks and has historically performed well for numerous tasks.

Computational approaches have also been employed to predict clinical courses for COVID-19 patients. Vaid et al. utilized clinical variables including measurements of inflammation, biomarkers, and other lab values to predict COVID-19 mortality with an AUC of up to 0.84 [3]. Chassagnon et al. utilized a U-Net segmentation pipeline, followed by radiomic feature extraction, using CT data in order to predict long-term survival, with an AUC of up to 0.86 [20]. Studying CXRs, Ferreira Jr. et al. validated the relationship between several radiomic features and COVID-19 diagnosis and prognosis in a small cohort of 49 COVID-19 positive patients [29]. Kwon et al. utilized DL in combination with clinical variables to achieve AUCs of up to 0.88 and 0.82 for intubation and mortality prediction, respectively [24].

Our method combined aspects of each of these approaches to provide a robust, interpretable method for clinical outcome prediction in the context of COVID-19. We analyzed CXRs, a more frequently used modality when compared with CT. Furthermore, our study contained a large dataset of images taken from multiple institutions; the inherent variability in intensity distribution between these datasets demonstrates the robustness of our model on CXRs obtained under different conditions. We also compared radiomic and DL approaches for outcome prediction, investigating their relative benefits for different prediction tasks.

2. Materials and Methods

2.1. Cohort Description

In this two-center, IRB-approved study, anonymized frontal CXRs were obtained from patients suspected of COVID-19 on presentation at Stony Brook University Hospital (SBUH) and Newark Beth Israel Medical Center (NBIMC) between March and June 2020 (Figure 2). A total of 559 baseline CXRs for 538 patients at SBUH were analyzed. For this study, 17 CXRs of pediatric patients or with poor image quality taken from 16 patients were discarded. A total of 174 baseline CXRs from 174 patients were included from NBIMC. Of these, 5 CXRs were discarded due to indistinguishable lung fields. We considered all CXRs taken on the first day for which CXR data exist for a patient as baseline CXRs. Hence, a patient may have multiple baseline CXRs, though these would all be taken on the same day.

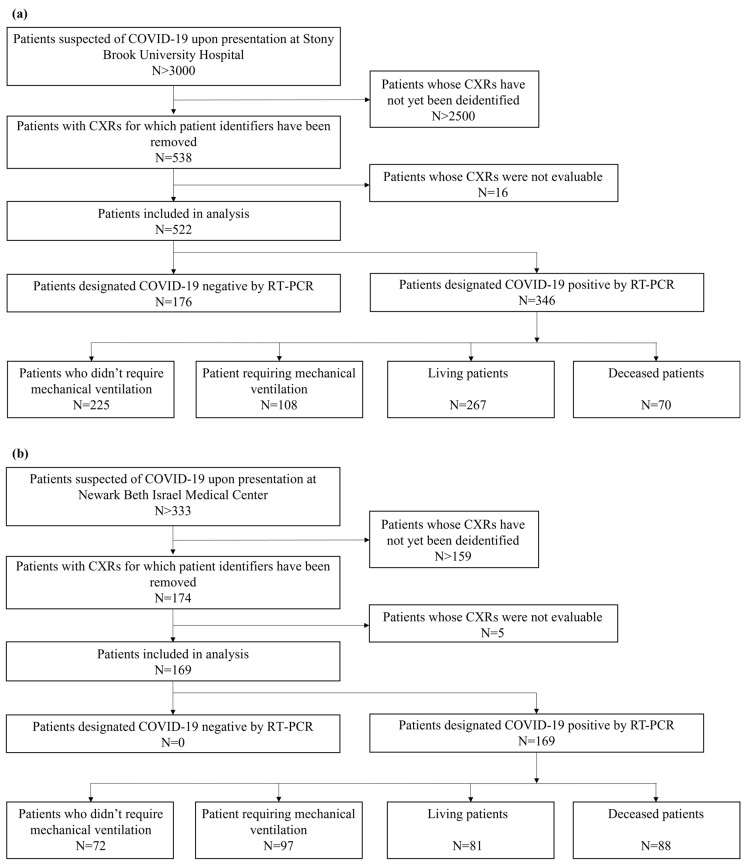

Figure 2.

Summary of patient inclusion and exclusion criteria: (a) displays criteria for SBUH and (b) displays criteria for NBIMC. Ineligibility criteria included pediatric patients, existing intubation status prior to CXR acquisition, poor image orientation, or indistinguishable lung fields on CXR.

In total, 711 CXRs taken from 691 patients (363 males and 328 females) were analyzed in this study. The mean age of patients studied was 56 years old (median = 57 years, standard deviation = 17.774 years, Table 1). COVID-19 positivity was tested for each patient via reverse transcriptase–polymerase chain reaction (RT-PCR). In total, 530 CXRs from 515 patients who tested positive for COVID-19 (Table 2) and 181 CXRs from 176 patients found not to be infected with COVID-19 at SBUH were analyzed. CXRs taken from COVID-19 positive patients were used in outcome prediction experiments, whereas those from both COVID-19 positive and negative patients were used to build lung and artifact segmentation models. Of the 530 CXRs from positive patients, 217 baseline CXRs were taken for 205 patients that later required mechanical ventilation. A total of 164 CXRs were from 158 patients who later died from the disease. Representative CXR images are displayed in Figure 3.

Table 1.

Total patient demographics table.

| Stony Brook University Hospital Patients (n = 522) | Newark Beth Israel Medical Center Patients (n = 169) | |

|---|---|---|

| Sex | 267 (175 COVID-19+) male 255 (171 COVID-19+) female | 96 male 73 female |

| Age | 55 ± 18.630 (p = 0.0989 *) 57 ± 16.969 (COVID-19+, p = 0.1170 *) |

59 ± 14.256 (p = 0.6821 *) |

* p-values for age difference between sexes using a Wilcoxon rank-sum test.

Table 2.

COVID-19 positive patient outcome table.

| Age | Number of COVID-19 Positive Patients | Number Requiring Mechanical Ventilation | Number Deceased | |

|---|---|---|---|---|

| 18–19 (n = 1) |

Male | 1 | 0 | 0 |

| Female | 0 | 0 | 0 | |

| 20–29 (n = 22) |

Male | 11 | 1 | 1 |

| Female | 11 | 4 | 2 | |

| 30–39 (n = 47) |

Male | 29 | 8 | 4 |

| Female | 18 | 4 | 2 | |

| 40–49 (n = 75) |

Male | 42 | 11 | 5 |

| Female | 33 | 9 | 4 | |

| 50–59 (n = 130) |

Male | 64 | 24 | 17 |

| Female | 66 | 30 | 13 | |

| 60–69 (n = 108) |

Male | 60 | 33 | 24 |

| Female | 48 | 27 | 18 | |

| 70–79 (n = 79) |

Male | 41 | 24 | 20 |

| Female | 38 | 17 | 19 | |

| 80+ (n = 53) |

Male | 23 | 6 | 11 |

| Female | 30 | 7 | 18 | |

| Total (n = 515) |

Male | 271 | 107 | 82 |

| Female | 244 | 98 | 76 |

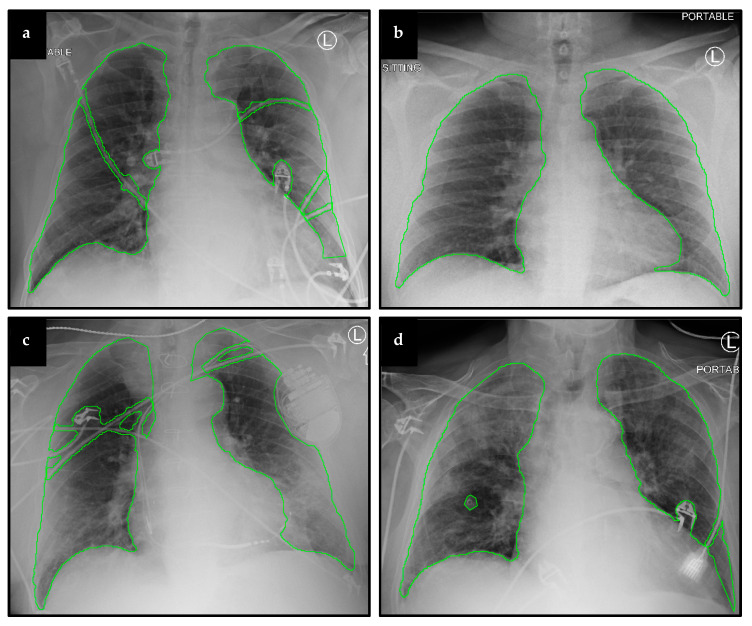

Figure 3.

Representative CXRs and segmentation results. Displayed here are baseline CXRs taken from patients that later (a) required mechanical ventilation, (b) did not require ventilation, (c) survived the disease, and (d) did not survive.

2.2. Image Preprocessing

2.2.1. Lung and Artifact Segmentation

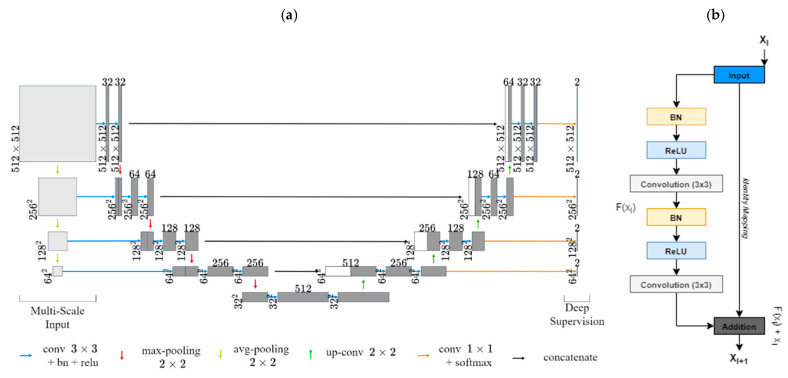

A segmentation pipeline was developed to avoid learning of features unrelated to lung fields. In order to segment lungs and artifacts from CXR images, two residual U-Net DL models were employed [26,30]. Both network architectures were augmented using multiscale image inputs for better intermediate feature representations with deep supervision (Figure 4) [31]. Lung fields and artifacts such as EKG leads, pacemakers, and other non-anatomical objects were first manually segmented for a dataset of 100 CXRs, excluding heart shadows. These segmentations were used to train the two networks, one for lung segmentation and the other for artifact segmentation. A focal Tversky loss function to penalize false positive predictions was employed (alpha = 0.3, gamma = 1.0) [32]. This was to avoid misidentification of high-intensity objects as lungs and to mitigate misclassification of lungs as unwanted artifacts. The trained models were then used to generate lung and artifact masks for the remaining 611 CXRs. Each of these masks was manually reviewed and errors in segmentation, if any, were corrected.

Figure 4.

Network architecture for lung and image artifact segmentation: (a) visualizes our multiscale input residual U-Net architecture. (b) displays an example residual block.

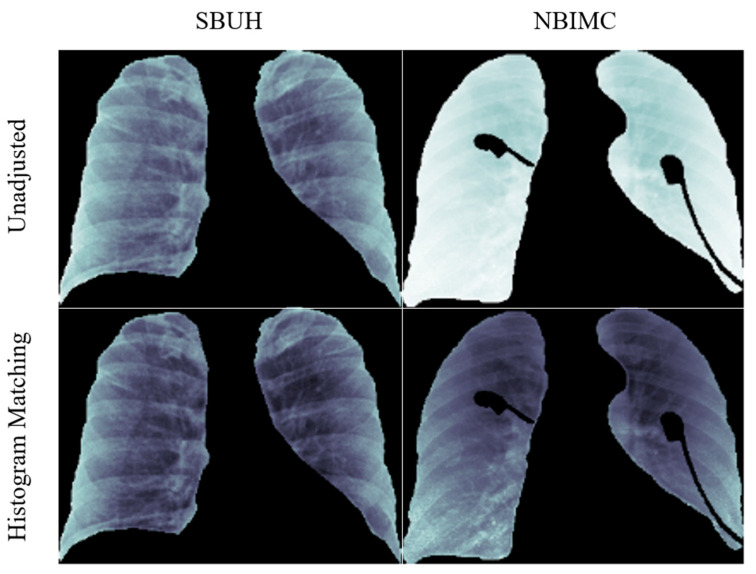

2.2.2. Average Histogram Matching (HM)

It should be noted that CXRs from the two institutions, SBUH and NBIMC, fall within two distinct data domains differing in pixel intensity distribution. To mitigate image differences, an average histogram matching (HM) was employed (Figure 5). A total of 80 CXR images were chosen randomly from the SBUH dataset to create an average cumulative distribution. Every CXR from both SBUH and NBIMC was then mapped to this average cumulative function using an HM approach, bringing all CXRs into the same intensity range [33].

Figure 5.

Results of histogram matching. Displayed are the results of HM preprocessing on CXR images from SBUH and NBIMC.

For both ventilation and mortality classification, models were trained and evaluated in a cross-validation setting. To this end, 217 ventilation-positive and 300 ventilation-negative CXRs were used for ventilation classification, whereas 164 CXRs from deceased patients and 357 CXRs from recovered patients were used for mortality classification. For each iteration of cross-validation evaluation, folds were chosen such that training and testing folds each contained an equal number of positive and negative samples.

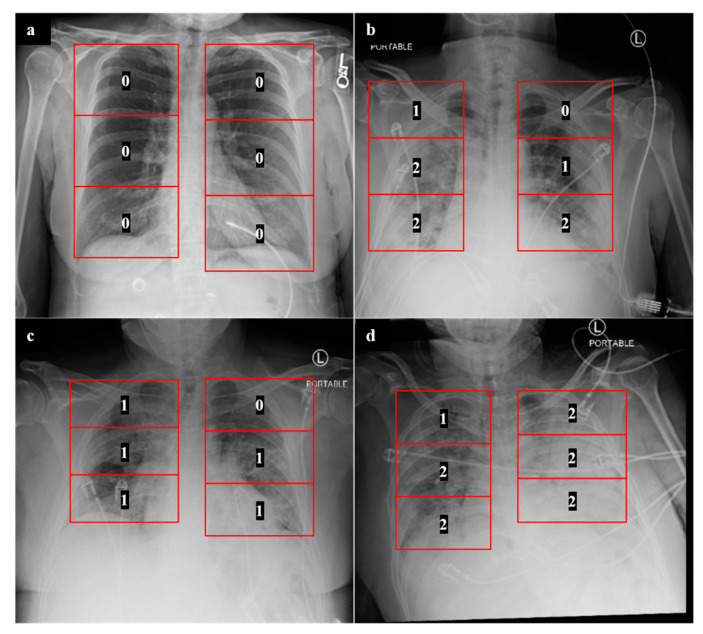

2.3. Experiment 1: Outcome Classification Using Radiologist Severity Scores

In order to develop a clinical baseline model, we adopted a previously described CXR scoring system for COVID-19 patients [7]. Scoring of CXRs was performed by radiology residents (G.S., R.G., S.A., N.S., C.M., and J.P.). Any ambiguous scores were further confirmed by one of two attending radiologists (J.G. and A.G.). For each lung, a severity score of 0, 1, or 2 was assigned to each of three lung zones: lower, middle, and upper (Figure 6), with a maximum possible score of 12 for both lungs combined. A score of 0 was assigned to lung zones with no radiographic findings, a score of 1 was assigned to zones with the presence of ground-glass opacities, and a score of 2 was assigned to zones with consolidative opacities with or without air bronchograms. The formulation of this system and the assignment of different scores to 6 lung zones is in line with other described COVID-19 CXR scoring systems [2,6]. Once these scores were assigned for each CXR, a multiple logistic regression model was developed to predict mechanical ventilation requirement and mortality based upon these zone-wise expert scores. This approach was evaluated in a cross-validation setting and served as a human-based comparison for the machine learning models discussed below.

Figure 6.

Zone-wise expert scores for CXRs. The numbers displayed are examples of zone-wise expert scores obtained for COVID-19 patients who (a) did not require mechanical ventilation and recovered, (b) required mechanical ventilation and recovered, and (c,d) required mechanical ventilation and are deceased.

2.4. Experiment 2: Outcome Classification Using Radiomic Features

Radiomic features were extracted from CXRs for clinical outcome prediction in order to provide interpretable insights into which textural features might be most predictive of mortality and mechanical ventilation. In total, 143 radiomic features from the Haralick, Gabor, Laws energy, histogram of gradients, and grey intensity feature families were computed for each baseline CXR [34,35,36,37]. Features were extracted solely from segmented lung fields, excluding artifacts. Descriptions of various radiomic features can be found in Table 3. Each of these features has been previously studied in medical applications including in the study of COVID-19 [29,34,35,36,37]. In this study, we performed an exploratory analysis of these various well-studied radiomic features in order to determine their relative value in predicting clinical outcomes for COVID-19 patients. For each radiomic feature, statistics including measures of median, skewness, standard deviation, and kurtosis were calculated. These statistics and clinical factors including expert scores and patient age/sex were used for classifier construction.

Table 3.

Selected predictive radiomic feature descriptions.

| Radiomic Feature Family | Features Used for Clinical Outcome Prediction | Description |

|---|---|---|

| Laws Energy | L5E5, E5S5, W5E5, L5E5, W5R5, S5E5, R5E5, W5W5, S5E5, S5W5, S5L5, L5S5, E3S3, R5R5 | Combinations of these filters at different window sizes (3 × 3, 5 × 5) enable identification of various qualitative patterns such as waves, ripples, edges, and spots. |

| Gabor Wavelet | θ = 1.571 λ = 1.786, θ = 0.785 λ = 1.276, θ = 1.963 λ = 1.276, θ = 1.178 λ = 1.786, θ = 1.178 λ = 0.765 | Computes oriented textures via changes in direction and scale to capture microarchitectures in lung regions. Each descriptor quantifies response to a given Gabor filter at a specific wavelength (λ) and orientation (θ) |

| Haralick | Entropy, Correlation, Information | Features are extracted from the grey level co-occurrence matrix (GLCM) of an image. Measures various characteristics regarding local disorder, homogeneity, and heterogeneity. |

| Gradient | X, Y, Diagonal | Measures changes in intensity values within an image in different directions. |

| Grey | Standard Deviation, Mean | Standard measures of intensity information. |

For prediction of future mechanical ventilation requirement and mortality, random forest (RF), linear discriminant analysis (LDA), and quadratic discriminant analysis (QDA) classifiers were trained and cross-validated on radiomic features from baseline CXRs [38,39]. For each of 50 iterations in a 5-fold cross-validation setting, feature reduction among radiomic and clinical features was performed on the training set using a Wilcoxon rank-sum test, Student’s t-test, or a maximum relevance minimum redundancy approach [40]. Highly correlated features (Pearson correlation threshold = 0.9) were removed to reduce redundancy. Ablation studies were performed to assess the relative performance of radiomic classification with and without HM and with and without clinical features.

2.5. Experiment 3: Outcome Classification Using Convolutional Neural Networks

Convolutional neural networks (CNNs) were employed to predict future mechanical ventilation requirement and patient mortality from baseline CXRs. Additional preprocessing steps for DL included automatic cropping of CXRs to a tight boundary around the lungs, resizing input images to 224 × 224 pixels, and the application of min–max normalization to rescale image intensity values between 0 and 1.

For each classification experiment a ResNet-50 pretrained on ImageNet was utilized [25]. Data augmentation techniques such as flipping, rotation, and translation were used to reduce overfitting. The fully connected (FC) layer of each architecture was replaced by a custom layer with an input size of 512 by 1 (no clinical variables included) or 520 by 1 (expert scores and patient age/sex included) and output size of 2 by 1 to match our desired binary classification scheme. The FC layer was trained without the use of pretrained weights. Dropout layers with a probability of 0.1 were included after FC layers to improve the generalizability of classification. For each model, a binary cross-entropy loss function and an Adam optimizer with a learning rate of 0.00001 were used for network training [41]. The learning rate was decreased by a factor of 0.01 after each 10th epoch. Models were trained and evaluated in a cross-validation setting in which new training, validation, and testing splits were chosen for each of five iterations.

Class activation maps (CAMs) were also generated using network outputs prior to the global average pooling layer in the ResNet-50 architecture. These CAMs enable a degree of visualization of a network’s “attention” in making predictions, thereby providing a soft validation of the prognostically relevant regions as determined by the network.

In addition, t-distributed stochastic neighbor embedding (t-SNE) was used to visualize features extracted using ResNet-50 models for mortality and mechanical ventilation predictions using network outputs prior to the final FC layer [42].

2.6. Experiment 4: Outcome Classification Using Convolutional Neural Networks and Radiomic-Map Embedding

DL of radiomic and imaging features was explored using two different approaches.

2.6.1. Feed-Forward Concatenation of Radiomic Features

In this approach, the features used for classifier development in Experiment 2 were first normalized to within a range of 0 to 1 before being concatenated to the output of the upsampling layer of the ResNet-50 architecture used in Experiment 3. The following feed-forward layer was then modified to contain 512 + n neurons, where n is the number of chosen radiomic features for the desired classification problem. If clinical data including expert scores and patient age/sex were also included, the number of neurons was instead 520 + n. In this experiment, model weights for the initial image feature extractor layers were used from Experiment 3, whereas the weights for the altered feed-forward layer were randomly initialized. The entire model was then trained. This process was identical for both mechanical ventilation and mortality prediction.

2.6.2. Radiomic-Embedded Feature Maps

Radiomic features from Experiment 2 were used to create radiomic-embedded feature maps for each CXR. t-SNE (random state = 1) was employed to perform feature reduction and to convert radiomic data to a 2D representation [42]. To assess the predictive capability of a model trained using both radiomic-embedded feature maps and CXR images as inputs, the same general procedure employed in Experiment 3 was used. A key difference was a change in the first input convolution filter of the ResNet-50 architecture to receive a 2-channel CXR and radiomic-embedded map input rather than a 3-channel input. All other network configurations are identical to those described in Experiment 2. Dataset splits of each of these classifiers were identical to those detailed in Experiment 2.

3. Results

Results for Experiments 1, 2, 3, and 4 are summarized in Table 4, Table 5, Table 6 and Table 7 and are reported as mean ± 95% confidence interval based on fivefold cross-validation results.

Table 4.

Expert scores clinical outcome prediction results.

| Classification Type | Sensitivity | Specificity | AUC |

|---|---|---|---|

| Ventilation Requirement | 0.67 ± 0.08 | 0.69 ± 0.07 | 0.75 ± 0.02 |

| Mortality | 0.69 ± 0.08 | 0.76 ± 0.08 | 0.79 ± 0.05 |

Table 5.

Radiomics clinical outcome prediction results.

| Classification Type | Image Adjustment | Clinical Features | Sensitivity | Specificity | AUC |

|---|---|---|---|---|---|

| Ventilation Requirement | Unadjusted | None | 0.64 ± 0.07 | 0.67 ± 0.07 | 0.72 ± 0.05 |

| Expert Scores, patient age and sex | 0.67 ± 0.08 | 0.73 ± 0.07 | 0.77 ± 0.05 | ||

| Histogram Matching | None | 0.72 ± 0.07 | 0.72 ± 0.06 | 0.78 ± 0.05 | |

| Expert Scores, patient age and sex | 0.71 ± 0.06 | 0.71 ± 0.08 | 0.79 ± 0.04 | ||

| Mortality | Unadjusted | None | 0.72 ± 0.09 | 0.72 ± 0.08 | 0.77 ± 0.05 |

| Expert Scores, patient age and sex | 0.79 ± 0.07 | 0.74 ± 0.09 | 0.83 ± 0.04 | ||

| Histogram Matching | None | 0.70 ± 0.09 | 0.73 ± 0.09 | 0.78 ± 0.06 | |

| Expert Scores, patient age and sex | 0.77 ± 0.08 | 0.71 ± 0.09 | 0.80 ± 0.06 |

Bold text indicates highest metrics obtained.

Table 6.

Deep learning clinical outcome prediction results.

| Ventilation Requirement | Mortality | ||||

|---|---|---|---|---|---|

| Unadjusted | Histogram Matching | Unadjusted | Histogram Matching | ||

| Sensitivity | CXR | 0.55 ± 0.09 | 0.64 ± 0.09 | 0.56 ± 0.15 | 0.59 ± 0.13 |

| CLC | 0.63 ± 0.08 | 0.61 ± 0.01 | 0.58 ± 0.17 | 0.67 ± 0.09 | |

| REM | 0.54 ± 0.08 | 0.68 ± 0.05 | 0.66 ± 0.07 | 0.64 ± 0.07 | |

| REM CLC | 0.58 ± 0.09 | 0.62 ± 0.08 | 0.61 ± 0.14 | 0.77 ± 0.07 | |

| RAD | 0.63 ± 0.06 | 0.66 ± 0.04 | 0.58 ± 0.12 | 0.59 ± 0.12 | |

| RAD CLC | 0.62 ± 0.07 | 0.67 ± 0.07 | 0.59 ± 0.07 | 0.69 ± 0.08 | |

| Specificity | CXR | 0.72 ± 0.08 | 0.73 ± 0.07 | 0.72 ± 0.07 | 0.74 ± 0.04 |

| CLC | 0.66 ± 0.08 | 0.76 ± 0.05 | 0.65 ± 0.06 | 0.71 ± 0.09 | |

| REM | 0.59 ± 0.05 | 0.63 ± 0.02 | 0.58 ± 0.08 | 0.73 ± 0.07 | |

| REM CLC | 0.65 ± 0.07 | 0.68 ± 0.06 | 0.63 ± 0.08 | 0.60 ± 0.09 | |

| RAD | 0.69 ± 0.06 | 0.75 ± 0.06 | 0.67 ± 0.03 | 0.76 ± 0.03 | |

| RAD CLC | 0.69 ± 0.06 | 0.78 ± 0.05 | 0.71 ± 0.02 | 0.67 ± 0.03 | |

| AUC | CXR | 0.70 ± 0.07 | 0.75 ± 0.02 | 0.72 ± 0.07 | 0.75 ± 0.04 |

| CLC | 0.69 ± 0.03 | 0.77 ± 0.02 | 0.70 ± 0.07 | 0.74 ± 0.04 | |

| REM | 0.61 ± 0.03 | 0.71 ± 0.02 | 0.67 ± 0.04 | 0.76 ± 0.04 | |

| REM CLC | 0.64 ± 0.02 | 0.72 ± 0.02 | 0.68 ± 0.02 | 0.77 ± 0.01 | |

| RAD | 0.70 ± 0.03 | 0.77 ± 0.03 | 0.69 ± 0.07 | 0.74 ± 0.06 | |

| RAD CLC | 0.72 ± 0.02 | 0.78 ± 0.02 | 0.71 ± 0.04 | 0.75 ± 0.07 | |

All columns used preprocessed patient CXRs as inputs to DL networks in addition to: CLC―clinical features including patient age and sex; REM―radiomic-embedded feature maps; RAD―concatenation of radiomic features to DL feature outputs. Bold text indicates highest metrics obtained.

Table 7.

Top 10 features used in radiomic classifiers.

| Classification Type | Image Adjustment | Clinical Features | Radiomic Features |

|---|---|---|---|

| Ventilation Requirement | Unadjusted | None | 1. Laws L5E5 2. Gabor XY θ = 1.571 λ = 1.786 3. Gradient Diagonal 4. Laws E5S5 5. Laws W5E5 6. Laws L5E5 7. Laws W5R5 8. Laws S5E5 9. Haralick Entropy Ws7 10. Haralick Correlation Ws7 |

| Expert Scores, Patient Age and Sex | 1. ES Lower Left 2. Age 3. ES Middle Left 4. Sex 5. ES Middle Right 6. Laws W5E5 7. Laws W5R5 8. Laws E5S5 9. Gradient Diagonal 10. ES Lower Right 11. Laws R5E5 12. Laws E5E5 13. Laws E3S3 14. Laws R5W5 15. Laws W5W5 16. Laws S5E5 17. Laws S5W5 18. Laws S5L5 19. Gradient dy |

||

| Histogram Matching | None | 1. Gradient Y 2. Laws E5S5 3. Laws L5S5 | |

| Expert Scores, Patient Age and Sex | 1. Laws E3S3 2. LawsR5R5 3. ES Middle Right 4. ES Lower Right 5. Gabor XY θ = 0.785 λ = 1.276 6. ES Middle Left 7. ES Lower Left 8. Gabor XY θ = 1.963 λ = 1.276 9. Grey Standard Deviation 10. Laws L5S5 11. Gabor XY θ = 1.178 λ = 1.786 12. Haralick Entropy Ws3 13. Gradient Sobel Y 14. Gabor XY θ = 1.178 λ = 0.765 15. Haralick Information Ws5 | ||

| Mortality | Unadjusted | None | 1. Haralick Correlation Ws5 |

| Expert Scores, Patient Age and Sex | 1. Age 2. Haralick Correlation Ws5 3. ES Middle Right 4. ES Lower Left | ||

| Histogram Matching | None | 1. Laws R5E5 2. Gradient Y 3. Laws E3S3 4. Haralick Entropy Ws 5 | |

| Expert Scores, Patient Age and Sex | 1. Age 2. ES Lower Left 3. ES Middle Right 4. Laws R5E5 5. ES Upper Right6. ES Lower Right 7. Gradient Y 8. Gradient Sobel YX 9. Laws E3 S3 10. Gradient dx 11. Haralick Entropy |

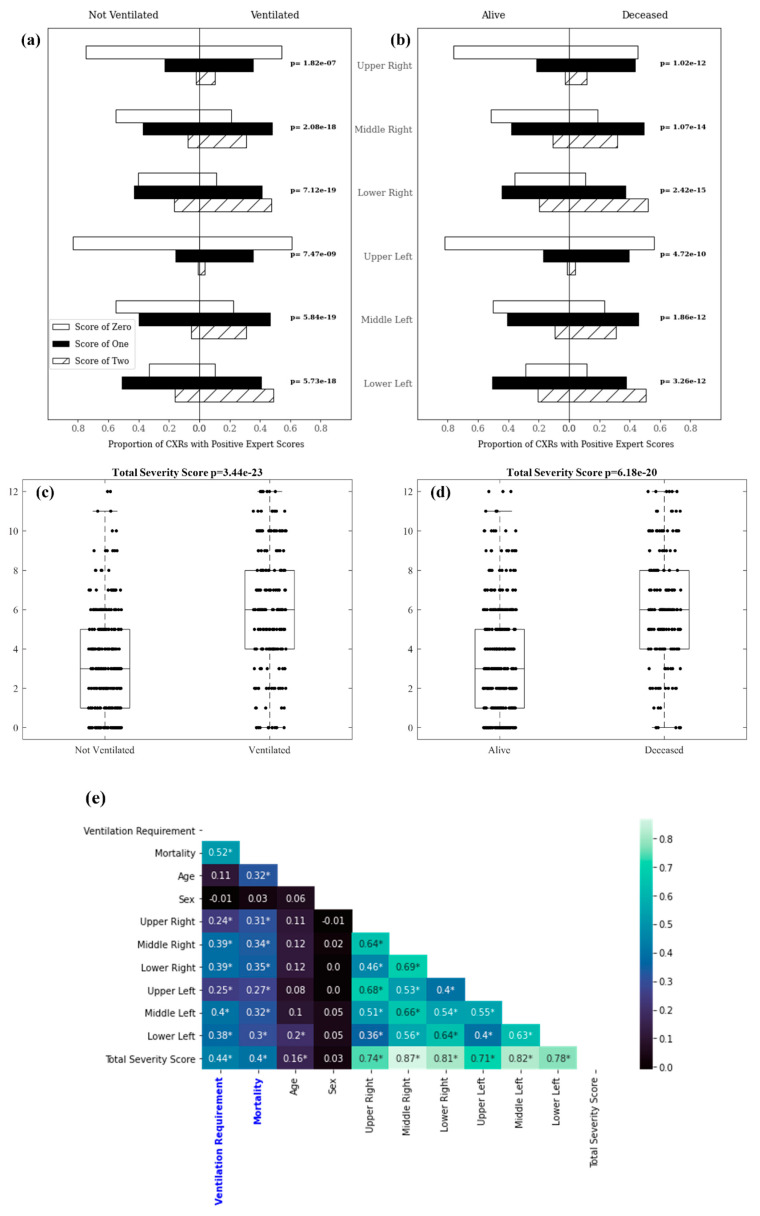

3.1. Experiment 1: Outcome Classification Using Radiologist Severity Scores

For Experiment 1, expert scores predicted mechanical ventilation, with a mean cross-validated AUC (mAUC) of 0.75, a specificity of 69%, and a sensitivity of 67%. Expert scores were able to predict mortality with an mAUC of 0.79, a specificity of 76%, and a sensitivity of 69%. The distribution of zone-wise export scores among patients in each clinical outcome class is shown in Figure 7a,b. Figure 7c,d visualizes distributions of total expert scores among patients in each clinical outcome class. The distribution of expert scores within each lung region along with the distribution of total expert scores was statistically significant between patients requiring mechanical ventilation, compared with those who did not, as well as between deceased and recovered patients. Correlations between zone-wise expert scores for each lung region and clinical outcomes/variables are shown in Figure 7e. Total severity score (the sum of scores from all lung regions) correlated most strongly with both future ventilation requirement (0.44) and mortality (0.40), respectively. These correlations were stronger than correlations for individual lung zones with clinical outcomes and for patient age or sex with clinical outcomes.

Figure 7.

Zone-wise expert scores distribution: (a) and (b) depict the proportion of patients whose CXRs had lung zone scores of 0, 1, and 2 in each pictured population; (c) and (d) visualize the distribution of total zone-wise scores assigned to CXRs for patients in each population; (e) displays correlations between zone-wise severity scores and clinical outcomes. Asterisks denote statistical significance at the level of p = 0.01 as determined by a Pearson’s correlation coefficient.

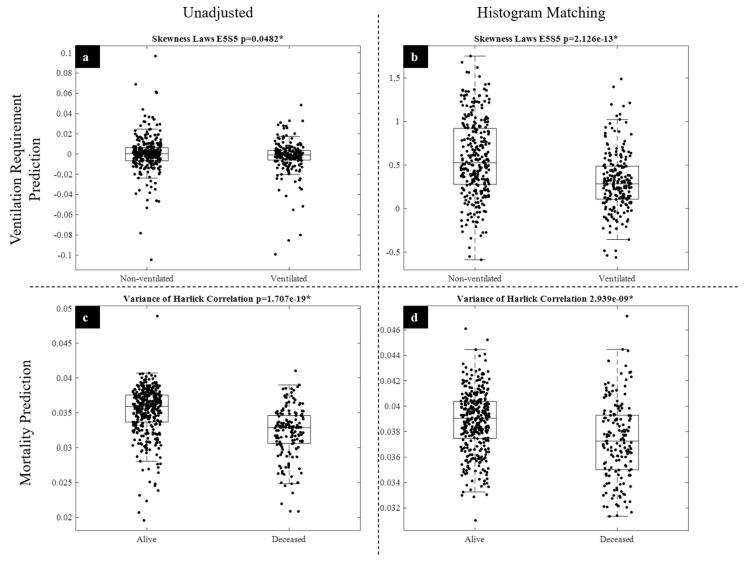

3.2. Experiment 2: Outcome Classification Using Radiomic Features

For Experiment 2, a machine learning classifier trained to predict the need for mechanical ventilation using radiomic features extracted from non-HM-adjusted images yielded an mAUC of 0.72, a specificity of 67%, and a sensitivity of 64%. Using radiomic features from HM-adjusted images achieved an mAUC of 0.78, a specificity of 72%, and a sensitivity of 72% for mechanical ventilation prediction. A machine learning classifier used to predict mortality in COVID-19 positive patients using radiomic features from non-HM-adjusted images had an mAUC of 0.77, a specificity of 72%, and a sensitivity of 72%. Using radiomic features from HM-adjusted images resulted in an mAUC of 0.78, a specificity of 73%, and a sensitivity of 70% for mortality prediction. The inclusion of zone-wise expert scores and patient age and sex improved both mechanical ventilation and mortality prediction when combined with radiomic features to yield an mAUC of 0.79, specificity of 71%, and sensitivity of 71% for mechanical ventilation prediction and an mAUC of 0.83, specificity of 74%, and sensitivity of 79% for mortality prediction.

The top features for radiomic outcome classification are listed in Table 7. Please see Table 3 for detailed descriptions of these features. Among the most discriminating radiomic features identified for predicting mechanical ventilation requirement and mortality were the Laws E5S5 energy and Haralick correlation features, respectively (Figure 8). The Laws E5S5 filter is a composite edge and spot detection filter, whereas the Haralick correlation measures the similarity of a pixel to its neighbors using a grey-level co-occurrence matrix.

Figure 8.

Radiomic feature distribution. Visualized are the relative effects of HM on the distribution of highly discriminative features for ventilation requirement and mortality prediction. (a,b) visualize the distribution of the skewness of the Laws E5S5 radiomic feature for ventilated and non-ventilated patients before (a) and after (b) HM. (c,d) display the distribution of the variance of the Haralick Correlation for alive and deceased patients before (c) and after (d) HM.

3.3. Experiment 3: Outcome Classification Using Convolutional Neural Networks

In Experiment 3, a ResNet-50 model trained solely using non-HM-adjusted CXRs to predict future mechanical ventilation requirement had an mAUC of 0.70, a specificity of 72%, and a sensitivity of 55% on cross-validation. Using HM-adjusted images as input for DL resulted in improved mechanical ventilation requirement prediction with an mAUC of 0.75, a specificity of 73%, and a sensitivity of 64%. A ResNet-50 model trained using non-HM-adjusted CXRs to predict mortality yielded an mAUC of 0.72, a specificity of 72%, and a sensitivity of 56%. Using HM-adjusted images for DL training resulted in improved mortality prediction with an mAUC of 0.75, a specificity of 74%, and a sensitivity of 59%.

3.4. Experiment 4: Outcome Classification Using Convolutional Neural Networks and Radiomic-Map Embedding

For Experiment 4, we found that the inclusion of radiomic features improved DL prediction of both mechanical ventilation and mortality. DL models trained using radiomic-embedded feature maps improved the prediction of mortality over DL of CXRs alone but did not increase performance when predicting mechanical ventilation requirement. Using feed-forward concatenation of radiomic features to DL features, our model obtained an mAUC of 0.77, a specificity of 75%, and a sensitivity of 66% for mechanical ventilation requirement prediction. Using radiomic-embedded features a DL model produced an mAUC of 0.74. a specificity of 76%, and a sensitivity of 59% for mortality prediction. The inclusion of clinical features including expert scores and patient age/sex improved predictions for mechanical ventilation requirement with an mAUC of 0.78, a specificity of 78%, and a sensitivity of 67%. For mortality prediction, the inclusion of clinical features improved model predictions to obtain an mAUC of 0.77, a specificity of 60%, and a sensitivity of 77%. Ultimately, the inclusion of radiomic features improved DL prediction of clinical outcomes (Table 6).

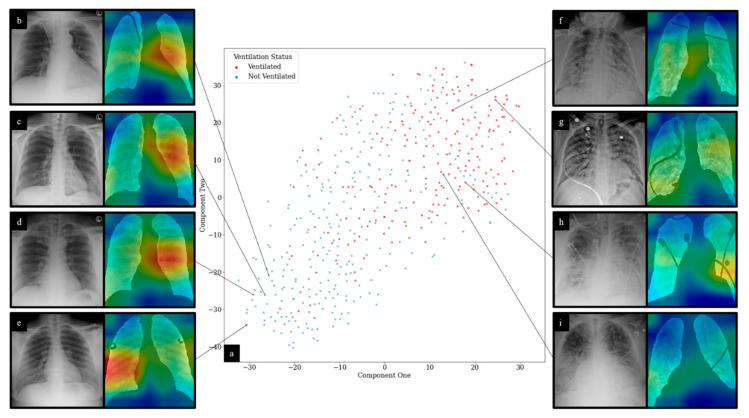

For DL experiments, representative CAMs are shown in Figure 9. An expert reader (J.G, 15 years of experience) noted that for CXRs from patients that required mechanical ventilation, CAM maximal signal intensity was shown to correlate with areas of dense infiltrates. For selected CXRs for patients who did not require mechanical ventilation, CXRs appeared to demonstrate no focal consolidation or infiltrates. The maximal CAM signal for these CXRs was observed in left middle lung zones, predominantly along the perihilar region. For all CAMs generated, network activations were shown to be most significantly located within lung fields. t-SNE feature reduction for deep features is also visualized in Figure 9. Clustering for features from patients that did and did not require mechanical ventilation was observed.

Figure 9.

t-SNE and CAM visualization of DL predictions: (a) displays t-SNE clustering of DL network outputs for ventilation prediction; (b–d) demonstrate no focal consolidation or infiltrates. CAMs show maximal signal intensity in the left middle lung zone predominantly along the perihilar region; (e) shows no focal consolidation or infiltrates. CAM shows maximal signal intensity in the right mid to lower lung zone; (f) demonstrates diffuse patchy infiltrates bilaterally, predominantly in the mid to lower lung zones. CAM shows the highest signal intensities in the right mid to lower lung zones in areas of dense infiltrates. Additionally, noted is slightly increased CAM activity in the left lower lobe around the areas of dense infiltrates; (g) demonstrates diffuse patchy infiltrates bilaterally. CAM shows the highest signal intensities in the right lower and left upper lung zones around areas of slightly dense infiltrates; (h) shows diffuse infiltrates bilaterally with relative sparing of the right upper lobe. CAM shows the highest signal intensities in the right mid and left mid to lower lung zones in areas of dense infiltrates; (i) demonstrates diffuse bilateral reticular opacities with interlobular septal thickening along with superimposed dense infiltrates predominantly in the lower lobes. CAM shows the highest signal intensity in the right lower lung zone around areas of dense infiltrates. CXR interpretation performed by J.G. (15 years of experience).

4. Discussion

In this work, we presented models for baseline CXR analysis demonstrating high sensitivities for future mechanical ventilation requirement (71%) and mortality (79%) prediction. These models outperform expert score-based classification that yields sensitivities of 67% and 69% for mechanical ventilation requirement and mortality, respectively. These results highlight the value that quantitative modeling of CXRs can have for the prognostic prediction of COVID-19. Previous non-imaging models have been proposed with high sensitivities for various clinical outcomes using biomarkers such as serum lactate dehydrogenase, lymphocyte counts, and coagulation factors in the setting of COVID-19 [4,13,14,15]. We demonstrated that these models might be complemented by imaging-based approaches. The ability to discern actionable prognostic information from baseline CXR has significant implications for decision making and triage in the COVID-pandemic, especially in high-volume hospital settings. Determining which patients might progress to severe disease would enable healthcare providers to make informed decisions regarding treatments. Furthermore, the ubiquitous nature of CXR in the management of COVID-19 makes a quantitative predictor of outcomes using the modality a convenient and useful tool for physicians.

Previous studies have applied DL to the analysis of COVID-19 CXRs [8,18,19,23]. However, at least one study has reported potential deficiencies in these approaches, including insufficiencies in a commonly used public dataset, neglecting to segment lung fields, and a failure to account for large differences between disparate public datasets [23]. Most significantly, there has been some suggestion that a few studies on a large multi-institutional public dataset may have produced models that learn to distinguish between data taken from different institutions rather than distinguishing meaningful differences in underlying pathology [23]. Nevertheless, new evaluation methods and improvements in data quality might improve experiments performed on these public datasets [43]. Previous studies have also not explicitly accounted for foreign objects in lung fields, which can obscure pathological findings. Here, we further presented a method for dataset homogenization between two separate institutions using HM, addressing any potential discrimination between datasets by our models. Furthermore, we developed a unique CXR preprocessing pipeline to segment lungs and artifacts.

Radiomic features can provide insight into what characteristics of a patient’s CXR are significant in making clinical predictions and can be more informative to a physician than exclusively DL approaches. From our results, it can be observed that radiomic features play an interesting role in outcome prediction for COVID-19. A small subset of radiomic features was shown to be effective in predicting outcome for both mechanical ventilation requirement (3 features) and mortality (1 feature). Radiomic feature classification of future mechanical ventilation requirement improved with HM while also reducing the number of features required for accurate outcome prediction (10 vs. 3 features). Interestingly, the opposite effect was observed for mortality prediction; the number of features needed for outcome prediction increased following HM (one vs. four features). For ventilation prediction, classifier performances improved following HM, whereas HM slightly worsened mortality prediction performance. Laws energy filters appear to be important in making mechanical ventilation requirement predictions, and Figure 8 demonstrates the observed improvement in Laws E5S5 feature discrimination between classes following HM. For mortality prediction, Laws energy filters are also selected as discriminatory features following HM. However, the performance of these features in predicting mortality is not as strong as the use of Haralick features prior to HM. Notably, the Haralick correlation feature does not seem to be “improved” by HM and becomes less valuable in class discrimination for mortality prediction (Figure 8). The variable effect of postprocessing techniques on different radiomic feature families warrants further exploration in future experiments. Here, we showed that two different feature families (Haralick and Laws energy) might have unique roles in predicting different clinical outcomes and might be variably affected by HM.

In this work, we also explored the relative value of two methods of radiomic feature inclusion in deep learning: radiomic feature embedding and feed-forward concatenation of radiomic features. Notably, the inclusion of radiomic features improved DL predictions for both clinical outcome tasks. For mechanical ventilation requirement prediction, feed-forward radiomic feature concatenation was superior to radiomic feature appending. The opposite was observed for mortality prediction. This again indicates that different machine learning approaches and selective model invocation may be required for different clinical prediction tasks. We also found that HM uniformly improves DL prediction of clinical outcomes.

We also demonstrated that radiomic and DL analysis of CXRs can achieve competitive or superior results in predicting clinical outcomes when compared with expert scoring of CXR severity. This is of particular significance in high-volume or low-resource healthcare settings where expert annotations may be harder to obtain. Moreover, the combination of DL and radiomic approaches with zone-wise expert scoring of CXRs performs even more accurately in the outcome prediction task, indicating that the two might be applied synergistically to further improve predictions. Furthermore, our models have demonstrated validity on a multi-institutional dataset and might provide a more consistent method of CXR evaluation than human scoring.

There are certain limitations in our work. First, we used baseline CXRs that are likely to be nonuniform in the interval between COVID-19 infection and image acquisition. While this is representative of the clinical reality that patients receive baseline CXRs at varying time points in their disease course, future studies might build improved time-to-event prediction models using data with a more uniform temporal distribution. It is also important to note that the two clinical outcomes studied in this work are neither independent nor mutually exclusive; generally, a patient requiring mechanical ventilation is more likely to succumb to their disease than one that does not. Furthermore, a limited number of clinical features were studied, and our models might benefit from including co-morbidities such as a history of cancer, chronic obstructive pulmonary disease, hypertension, etc. Other studies have previously validated the utility of measures such as these in predicting COVID-19 progression and clinical outcomes [3,16]. Additionally, in this study, we did not control for code status among patients, which might influence results. For instance, a patient’s disease might progress to an emergent situation requiring mechanical ventilation, but the patient might have a standing order to not initiate such a procedure [22]. Future experiments might attempt to control this confounding variable if these data are made readily available. Finally, additional validation is necessary to demonstrate the robustness of classification models in the broader context of COVID-19 treatment in other hospitals and locations.

This work, along with several other recent studies, established the value of computational analysis of CXRs in order to study clinical outcomes in COVID-19 [2,21,22,24,44]. In most cases, these studies analyze CXRs taken at a single time point, although modeling of sequential CXR data might enable an improved analysis of the temporal evolution of COVID-19, as observed on imaging data.

5. Conclusions

In summary, we presented a complete pipeline for computational evaluation of CXR in COVID-19 patients. Both radiomic and DL classification models enable us to predict mechanical ventilation requirement and mortality from baseline CXRs. Each of these approaches outperforms or performs competitively with predictions made using expert severity assessment of CXRs, indicating the potential for increased efficacy and efficiency in modeling COVID-19 outcomes using machine learning approaches. Furthermore, we demonstrated the improvement that a novel radiomic embedding approach has on DL predictions of COVID-19 outcomes. The ability to make early predictions of disease outcomes may aid in triage, clinical decision making, and efficient hospital resource allocation as the COVID-19 pandemic progresses.

Acknowledgments

Research reported in this publication was enabled by the Renaissance School of Medicine at Stony Brook University’s “COVID-19 Data Commons and Analytic Environment”, a data quality initiative instituted by the Office of the Dean and supported by the Department of Biomedical Informatics.

Author Contributions

Conceptualization, P.P., G.S. and J.B.; methodology, J.B., S.K. and P.P.; software, S.K. and J.B.; validation, P.P., J.G. and A.G.; formal analysis, J.B., S.K., G.S., R.G., S.A., N.S., C.M. and J.P.; investigation, J.B., S.K., G.S., R.G., S.A., N.S., C.M. and J.P.; resources, P.P., J.G., T.P., A.G. and N.M.; data curation, P.P., J.G., T.P., A.G., N.M., J.B., S.K., G.S., R.G., S.A., N.S., C.M. and J.P.; writing—original draft preparation, J.B. and S.K.; writing—review and editing, P.P., J.G., T.P., A.G., N.M., J.B., S.K., G.S., R.G., S.A., N.S., C.M. and J.P.; visualization, J.B. and S.K.; supervision, P.P., J.G. and A.G.; project administration, P.P., J.G. and A.G.; funding acquisition, P.P. All authors have read and agreed to the published version of the manuscript.

Funding

Research reported in this publication was funded by the Office of the Vice President for Research and Institute for Engineering-Driven Medicine Seed Grants, 2019 at Stony Brook University. J.B. supported by NIGMS T32GM008444.

Institutional Review Board Statement

The study was conducted according to the guidelines of the Declaration of Helsinki and approved by the Institutional Review Boards of Stony Brook University Hospital and Newark Beth Israel Medical Center. All data were deidentified prior to analysis.

Informed Consent Statement

All patient data were deidentified prior to analysis.

Data Availability Statement

A portion of the data reported in this study will be made available through the Cancer Imaging Archive COVID-19 imaging collection.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript, or in the decision to publish the results.

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1.Dong E., Du H., Gardner L. An Interactive Web-Based Dashboard to Track COVID-19 in Real Time. Lancet Infect. Dis. 2020;20:533–534. doi: 10.1016/S1473-3099(20)30120-1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Toussie D., Voutsinas N., Finkelstein M., Cedillo M.A., Manna S., Maron S.Z., Jacobi A., Chung M., Bernheim A., Eber C., et al. Clinical and Chest Radiography Features Determine Patient Outcomes In Young and Middle Age Adults with COVID-19. Radiology. 2020;271:E197–E206. doi: 10.1148/radiol.2020201754. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Vaid A., Somani S., Russak A.J., De Freitas J.K., Chaudhry F.F., Paranjpe I., Johnson K.W., Lee S.J., Miotto R., Richter F., et al. Machine Learning to Predict Mortality and Critical Events in a Cohort of Patients With COVID-19 in New York City: Model Development and Validation. J. Med. Internet Res. 2020;22:e24018. doi: 10.2196/24018. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Liang W., Liang H., Ou L., Chen B., Chen A., Li C., Li Y., Guan W., Sang L., Lu J., et al. Development and Validation of a Clinical Risk Score to Predict the Occurrence of Critical Illness in Hospitalized Patients With COVID-19. JAMA Intern. Med. 2020;180:1081–1089. doi: 10.1001/jamainternmed.2020.2033. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Yang R., Li X., Liu H., Zhen Y., Zhang X., Xiong Q., Luo Y., Gao C., Zeng W. Chest CT Severity Score: An Imaging Tool for Assessing Severe COVID-19. Radiol. Cardiothorac. Imaging. 2020;2:e200047. doi: 10.1148/ryct.2020200047. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Balbi M., Caroli A., Corsi A., Milanese G., Surace A., Di Marco F., Novelli L., Silva M., Lorini F.L., Duca A., et al. Chest X-Ray for Predicting Mortality and the Need for Ventilatory Support in COVID-19 Patients Presenting to the Emergency Department. Eur. Radiol. 2020;31:1999–2012. doi: 10.1007/s00330-020-07270-1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Khullar R., Shah S., Singh G., Bae J., Gattu R., Jain S., Green J., Anandarangam T., Cohen M., Madan N., et al. Effects of Prone Ventilation on Oxygenation, Inflammation, and Lung Infiltrates in COVID-19 Related Acute Respiratory Distress Syndrome: A Retrospective Cohort Study. J. Clin. Med. 2020;9:4129. doi: 10.3390/jcm9124129. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Shi F., Wang J., Shi J., Wu Z., Wang Q., Tang Z., He K., Shi Y., Shen D. Review of Artificial Intelligence Techniques in Imaging Data Acquisition, Segmentation and Diagnosis for COVID-19. arXiv. 2020 doi: 10.1109/RBME.2020.2987975.2004.02731 [DOI] [PubMed] [Google Scholar]

- 9.ACR Recommendations for the Use of Chest Radiography and Computed Tomography (CT) for Suspected COVID-19 Infection. [(accessed on 15 June 2020)]. Available online: https://www.acr.org/Advocacy-and-Economics/ACR-Position-Statements/Recommendations-for-Chest-Radiography-and-CT-for-Suspected-COVID19-Infection.

- 10.Wong H.Y.F., Lam H.Y.S., Fong A.H.-T., Leung S.T., Chin T.W.-Y., Lo C.S.Y., Lui M.M.-S., Lee J.C.Y., Chiu K.W.-H., Chung T., et al. Frequency and Distribution of Chest Radiographic Findings in COVID-19 Positive Patients. Radiology. 2020;296:E72–E78. doi: 10.1148/radiol.2020201160. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Ai T., Yang Z., Hou H., Zhan C., Chen C., Lv W., Tao Q., Sun Z., Xia L. Correlation of Chest CT and RT-PCR Testing in Coronavirus Disease 2019 (COVID-19) in China: A Report of 1014 Cases. Radiology. 2020;296:E32–E40. doi: 10.1148/radiol.2020200642. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Chaganti S., Balachandran A., Chabin G., Cohen S., Flohr T., Liu S., Mellot F., Murray N., Nicolaou S., Parker W., et al. Quantification of Tomographic Patterns Associated with COVID-19 from Chest CT. arXiv. 2020 doi: 10.1148/ryai.2020200048.2004.01279v5 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Yan L., Zhang H.-T., Goncalves J., Xiao Y., Wang M., Guo Y., Sun C., Tang X., Jing L., Zhang M., et al. An Interpretable Mortality Prediction Model for COVID-19 Patients. Nat. Mach. Intell. 2020;2:283–288. doi: 10.1038/s42256-020-0180-7. [DOI] [Google Scholar]

- 14.Ji D., Zhang D., Chen Z., Xu Z., Zhao P., Zhang M., Zhang L., Cheng G., Wang Y., Yang G., et al. Clinical Characteristics Predicting Progression of COVID-19. Social Science Research Network; Rochester, NY, USA: 2020. [Google Scholar]

- 15.Zhou Y., He Y., Yang H., Yu H., Wang T., Chen Z., Yao R., Liang Z. Development and Validation a Nomogram for Predicting the Risk of Severe COVID-19: A Multi-Center Study in Sichuan, China. PLoS ONE. 2020;15:e0233328. doi: 10.1371/journal.pone.0233328. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Lu J.Q., Musheyev B., Peng Q., Duong T.Q. Neural Network Analysis of Clinical Variables Predicts Escalated Care in COVID-19 Patients: A Retrospective Study. PeerJ. 2021;9:e11205. doi: 10.7717/peerj.11205. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Parekh V.S., Jacobs M.A. Deep Learning and Radiomics in Precision Medicine. Expert Rev. Precis. Med. Drug Dev. 2019;4:59–72. doi: 10.1080/23808993.2019.1585805. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Cohen J.P., Morrison P., Dao L. COVID-19 Image Data Collection. arXiv. 20202003.11597 [Google Scholar]

- 19.Ozturk T., Talo M., Yildirim E.A., Baloglu U.B., Yildirim O., Rajendra Acharya U. Automated Detection of COVID-19 Cases Using Deep Neural Networks with X-Ray Images. Comput. Biol. Med. 2020;121:103792. doi: 10.1016/j.compbiomed.2020.103792. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Chassagnon G., Vakalopoulou M., Battistella E., Christodoulidis S., Hoang-Thi T.-N., Dangeard S., Deutsch E., Andre F., Guillo E., Halm N., et al. AI-Driven Quantification, Staging and Outcome Prediction of COVID-19 Pneumonia. Med. Image Anal. 2021;67:101860. doi: 10.1016/j.media.2020.101860. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Konwer A., Bae J., Singh G., Gattu R., Ali S., Green J., Phatak T., Gupta A., Chen C., Saltz J., et al. Predicting COVID-19 Lung Infiltrate Progression on Chest Radiographs Using Spatio-Temporal LSTM Based Encoder-Decoder Network; Proceedings of the Fourth Conference on Medical Imaging with Deep Learning, PMLR; 25 August 2021; pp. 384–398. [Google Scholar]

- 22.Shen B., Hoshmand-Kochi M., Abbasi A., Glass S., Jiang Z., Singer A.J., Thode H.C., Li H., Hou W., Duong T.Q. Initial Chest Radiograph Scores Inform COVID-19 Status, Intensive Care Unit Admission and Need for Mechanical Ventilation. Clin. Radiol. 2021;76:473.e1–473.e7. doi: 10.1016/j.crad.2021.02.005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Maguolo G., Nanni L. A Critic Evaluation of Methods for COVID-19 Automatic Detection from X-Ray Images. arXiv. 2020 doi: 10.1016/j.inffus.2021.04.008.2004.12823 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Kwon Y.J., Toussie D., Finkelstein M., Cedillo M.A., Maron S.Z., Manna S., Voutsinas N., Eber C., Jacobi A., Bernheim A., et al. Combining Initial Radiographs and Clinical Variables Improves Deep Learning Prognostication of Patients with COVID-19 from the Emergency Department. Radiol. Artif. Intell. 2020;3:e200098. doi: 10.1148/ryai.2020200098. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.He K., Zhang X., Ren S., Sun J. Deep Residual Learning for Image Recognition. arXiv. 20151512.03385 [Google Scholar]

- 26.Ronneberger O., Fischer P., Brox T. U-Net: Convolutional Networks for Biomedical Image Segmentation. arXiv. 20151505.04597 [Google Scholar]

- 27.Wang J., Zhu H., Wang S.-H., Zhang Y.-D. A Review of Deep Learning on Medical Image Analysis. Mob. Netw. Appl. 2021;26:351–380. doi: 10.1007/s11036-020-01672-7. [DOI] [Google Scholar]

- 28.Siddique N., Paheding S., Elkin C.P., Devabhaktuni V. U-Net and Its Variants for Medical Image Segmentation: A Review of Theory and Applications. IEEE Access. 2021;9:82031–82057. doi: 10.1109/ACCESS.2021.3086020. [DOI] [Google Scholar]

- 29.Ferreira Junior J.R., Cardona Cardenas D.A., Moreno R.A., de Sá Rebelo M.d.F., Krieger J.E., Gutierrez M.A. Novel Chest Radiographic Biomarkers for COVID-19 Using Radiomic Features Associated with Diagnostics and Outcomes. J. Digit. Imaging. 2021;34:1–11. doi: 10.1007/s10278-021-00421-w. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Zhang Z., Liu Q., Wang Y. Road Extraction by Deep Residual U-Net. IEEE Geosci. Remote Sens. Lett. 2018;15:749–753. doi: 10.1109/LGRS.2018.2802944. [DOI] [Google Scholar]

- 31.Lee C.-Y., Xie S., Gallagher P., Zhang Z., Tu Z. Deeply-Supervised Nets. arXiv. 20141409.5185 [Google Scholar]

- 32.Abraham N., Khan N.M. A Novel Focal Tversky Loss Function with Improved Attention U-Net for Lesion Segmentation. arXiv. 20181810.07842 [Google Scholar]

- 33.Chen C., Dou Q., Chen H., Heng P.-A. Semantic-Aware Generative Adversarial Nets for Unsupervised Domain Adaptation in Chest X-Ray Segmentation. arXiv. 20181806.00600 [Google Scholar]

- 34.Haralick R.M., Shanmugam K., Dinstein I. Textural Features for Image Classification. IEEE Trans. Syst. Man Cybern. 1973;SMC-3:610–621. doi: 10.1109/TSMC.1973.4309314. [DOI] [Google Scholar]

- 35.Jain A.K., Farrokhnia F. Unsupervised Texture Segmentation Using Gabor Filters. Pattern Recognit. 1991;24:1167–1186. doi: 10.1016/0031-3203(91)90143-S. [DOI] [Google Scholar]

- 36.Laws K.I. Textured Image Segmentation. University of Southern California; Los Angeles, CA, USA: 1980. [Google Scholar]

- 37.Dalal N., Triggs B. Histograms of Oriented Gradients for Human Detection; Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05); San Diego, CA, USA. 20–26 June 2005; pp. 886–893. [Google Scholar]

- 38.Tin Kam Ho The Random Subspace Method for Constructing Decision Forests. IEEE Trans. Pattern Anal. Mach. Intell. 1998;20:832–844. doi: 10.1109/34.709601. [DOI] [Google Scholar]

- 39.Hastie T., Tibshirani R., Friedman J.H. The Elements of Statistical Learning: Data Mining, Inference, and Prediction. 2nd ed. Springer; New York, NY, USA: 2009. (Springer series in statistics). [Google Scholar]

- 40.Peng H., Long F., Ding C. Feature Selection Based on Mutual Information Criteria of Max-Dependency, Max-Relevance, and Min-Redundancy. IEEE Trans. Pattern Anal. Mach. Intell. 2005;27:1226–1238. doi: 10.1109/TPAMI.2005.159. [DOI] [PubMed] [Google Scholar]

- 41.Kingma D.P., Ba J. Adam: A Method for Stochastic Optimization. arXiv. 20171412.6980 [Google Scholar]

- 42.Sharma A., Vans E., Shigemizu D., Boroevich K.A., Tsunoda T. DeepInsight: A Methodology to Transform a Non-Image Data to an Image for Convolution Neural Network Architecture. Sci. Rep. 2019;9:11399. doi: 10.1038/s41598-019-47765-6. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 43.Cohen J.P., Morrison P., Dao L., Roth K., Duong T.Q., Ghassemi M. COVID-19 Image Data Collection: Prospective Predictions Are the Future. arXiv. 20202006.11988 [Google Scholar]

- 44.Wong A., Lin Z.Q., Wang L., Chung A.G., Shen B., Abbasi A., Hoshmand-Kochi M., Duong T.Q. Towards Computer-Aided Severity Assessment via Deep Neural Networks for Geographic and Opacity Extent Scoring of SARS-CoV-2 Chest X-Rays. Sci. Rep. 2021;11:9315. doi: 10.1038/s41598-021-88538-4. [DOI] [PMC free article] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Data Availability Statement

A portion of the data reported in this study will be made available through the Cancer Imaging Archive COVID-19 imaging collection.