Abstract

Celiac Disease (CD) and Environmental Enteropathy (EE) are common causes of malnutrition and adversely impact normal childhood development. CD is an autoimmune disorder that is prevalent worldwide and is caused by an increased sensitivity to gluten. Gluten exposure destructs the small intestinal epithelial barrier, resulting in nutrient mal-absorption and childhood under-nutrition. EE also results in barrier dysfunction but is thought to be caused by an increased vulnerability to infections. EE has been implicated as the predominant cause of under-nutrition, oral vaccine failure, and impaired cognitive development in low-and-middle-income countries. Both conditions require a tissue biopsy for diagnosis, and a major challenge of interpreting clinical biopsy images to differentiate between these gastrointestinal diseases is striking histopathologic overlap between them. In the current study, we propose a convolutional neural network (CNN) to classify duodenal biopsy images from subjects with CD, EE, and healthy controls. We evaluated the performance of our proposed model using a large cohort containing 1000 biopsy images. Our evaluations show that the proposed model achieves an area under ROC of 0.99, 1.00, and 0.97 for CD, EE, and healthy controls, respectively. These results demonstrate the discriminative power of the proposed model in duodenal biopsies classification.

Keywords: Convolutional neural networks, Medical imaging, Celiac Disease, Environmental Enteropathy

1. Introduction and Related Works

Under-nutrition is the underlying cause of approximately 45% of the 5 million under 5-year-old childhood deaths annually in low and middle-income countries (LMICs) [1] and is a major cause of mortality in this population. Linear growth failure (or stunting) is a major complication of under-nutrition, and is associated with irreversible physical and cognitive deficits, with profound developmental implications [32]. A common cause of stunting in LMICs is EE, for which there are no universally accepted, clear diagnostic algorithms or non-invasive biomarkers for accurate diagnosis [32], making this a critical priority [28]. EE has been described to be caused by chronic exposure to enteropathogens which results in a vicious cycle of constant mucosal inflammation, villous blunting, and a damaged epithelium [32]. These deficiencies contribute to a markedly reduced nutrient absorption and thus under-nutrition and stunting [32]. Interestingly, CD, a common cause of stunting in the United States, with an estimated 1% prevalence, is an autoimmune disorder caused by a gluten sensitivity [15] and has many shared histological features with EE (such as increased inflammatory cells and villous blunting) [32]. This resemblance has led to the major challenge of differentiating clinical biopsy images for these similar but distinct diseases. Therefore, there is a major clinical interest towards developing new, innovative methods to automate and enhance the detection of morphological features of EE versus CD, and to differentiate between diseased and healthy small intestinal tissue [4].

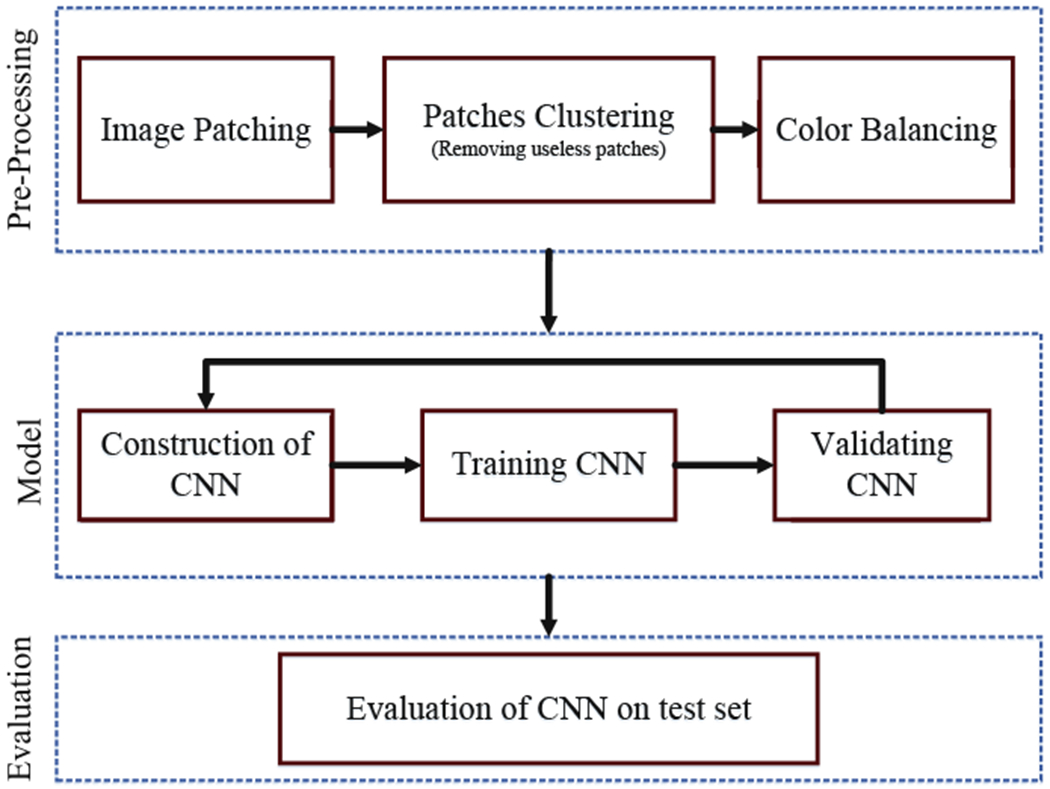

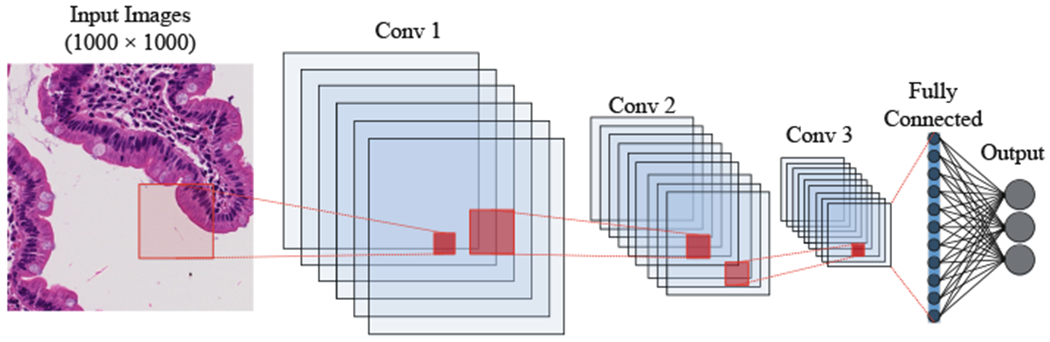

The overview of the methodology used is shown in Fig. 1.

Fig. 1.

Overview of methodology

In this paper, we propose a CNN-based model for classification of biopsy images. In recent years, Deep Learning architectures have received great attention after achieving state-of-the-art results in a wide variety of fundamental tasks such classification [13,18–20,24,29,35] or other medical domains [12,36]. CNNs in particular have proven to be very effective in medical image processing. CNNs preserve local image relations, while reducing dimensionality and for this reason are the most popular machine learning algorithm in image recognition and visual learning tasks [16]. CNNs have been widely used for classification and segmentation in various types of medical applications such as histopathological images of breast tissues, lung images, MRI images, medical X-Ray images, etc. [11,24]. Researchers produced advanced results on duodenal biopsies classification using CNNs [3], but those models are only robust to a single type of image stain or color distribution. Many researchers apply a stain normalization technique as part of the image pre-processing stage to both the training and validation datasets [27]. In this paper, varying levels of color balancing were applied during image pre-processing in order to account for multiple stain variations.

The rest of this paper is organized as follows: In Sect. 2, we describe the different data sets used in this work, as well as, the required pre-processing steps. The architecture of the model is explained in Sect. 4. Empirical results are elaborated in Sect. 5. Finally, Sect. 6 concludes the paper along with outlining future directions.

2. Data Source

For this project, 121 Hematoxylin and Eosin (H&E) stained duodenal biopsy glass slides were retrieved from 102 patients. The slides were converted into 3118 whole slide images, and labeled as either EE, CD, or normal. The biopsy slides for EE patients were from the Aga Khan University Hospital (AKUH) in Karachi, Pakistan (n = 29 slides from 10 patients) and the University of Zambia Medical Center in Lusaka, Zambia (n = 16). The slides for CD patients (n = 34) and normal (n = 42) were retrieved from archives at the University of Virginia (UVa). The CD and normal slides were converted into whole slide images at 40x magnification using the Leica SCN 400 slide scanner (Meyer Instruments, Houston, TX) at UVa, and the digitized EE slides were of 20x magnification and shared via the Environmental Enteric Dysfunction Biopsy Investigators (EEDBI) Consortium shared WUPAX server. Characteristics of our patient population are as follows: the median (Q1, Q3) age of our entire study population was 31 (20.25, 75.5) months, and we had a roughly equal distribution of males (52%, n = 53) and females (48%, n = 49). The majority of our study population were histologically normal controls (41.2%), followed by CD patients (33.3%), and EE patients (25.5%).

3. Pre-processing

In this section, we cover all of the pre-processing steps which include image patching, image clustering, and color balancing. The biopsy images are unstructured (varying image sizes) and too large to process with deep neural networks; thus, requiring that images are split into multiple smaller images. After executing the split, some of the images do not contain much useful information. For instance, some only contain the mostly blank border region of the original image. In the image clustering section, the process to select useful images is described. Finally, color balancing is used to correct for varying color stains which is a common issue in histological image processing.

3.1. Image Patching

Although effectiveness of CNNs in image classification has been shown in various studies in different domains, training on high resolution Whole Slide Tissue Images (WSI) is not commonly preferred due to a high computational cost. However, applying CNNs on WSI enables losing a large amount of discriminative information due to extensive downsampling [14]. Due to a cellular level difference between Celiac, Environmental Entropathy and normal cases, a trained classifier on image patches is likely to perform as well as or even better than a trained WSI-level classifier. Many researchers in pathology image analysis have considered classification or feature extraction on image patches [14]. In this project, after generating patches from each images, labels were applied to each patch according to its associated original image. A CNN was trained to generate predictions on each individual patch.

3.2. Clustering

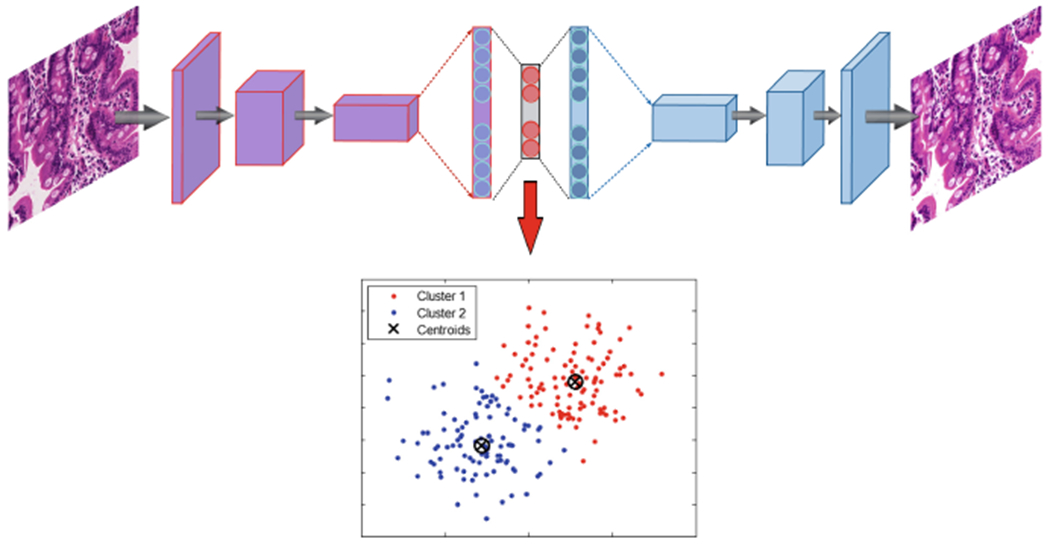

In this study, after image patching, some of created patches do not contain any useful information regarding biopsies and should be removed from the data. These patches have been created from mostly background parts of WSIs. A two-step clustering process was applied to identify the unimportant patches. For the first step, a convolutional autoencoder was used to learn embedded features of each patch and in the second step we used k-means to cluster embedded features into two clusters: useful and not useful. In Fig. 2, the pipeline of our clustering technique is shown which contains both the autoencoder and k-mean clustering.

Fig. 2.

Structure of clustering model with autoencoder and K-means combination

An autoencoder is a type of neural network that is designed to match the model’s inputs to the outputs [10]. The autoencoder has achieved great success as a dimensionality reduction method via the powerful reprehensibility of neural networks [33]. The first version of autoencoder was introduced by DE. Rumelhart et al. [30] in 1985. The main idea is that one hidden layer between input and output layers has much fewer units [23] and can be used to reduce the dimensions of a feature space. For medical images which typically contain many features, using an autoencoder can help allow for faster, more efficient data processing.

A CNN-based autoencoder can be divided into two main steps [25]: encoding and decoding.

| (1) |

Where is a convolutional filter, with convolution among an input volume defined by I = {I1, …, ID} which it learns to represent the input by combining non-linear functions:

| (2) |

where is the bias, and the number of zeros we want to pad the input with is such that: dim(I) = dim(decode(encode(I))) Finally, the encoding convolution is equal to:

| (3) |

The decoding convolution step produces n feature maps zm=1,…,n. The reconstructed results is the result of the convolution between the volume of feature maps and this convolutional filters volume F(2)[7,9].

| (4) |

| (5) |

Where Eq. 5 shows the decoding convolution with I dimensions. The input’s dimensions are equal to the output’s dimensions.

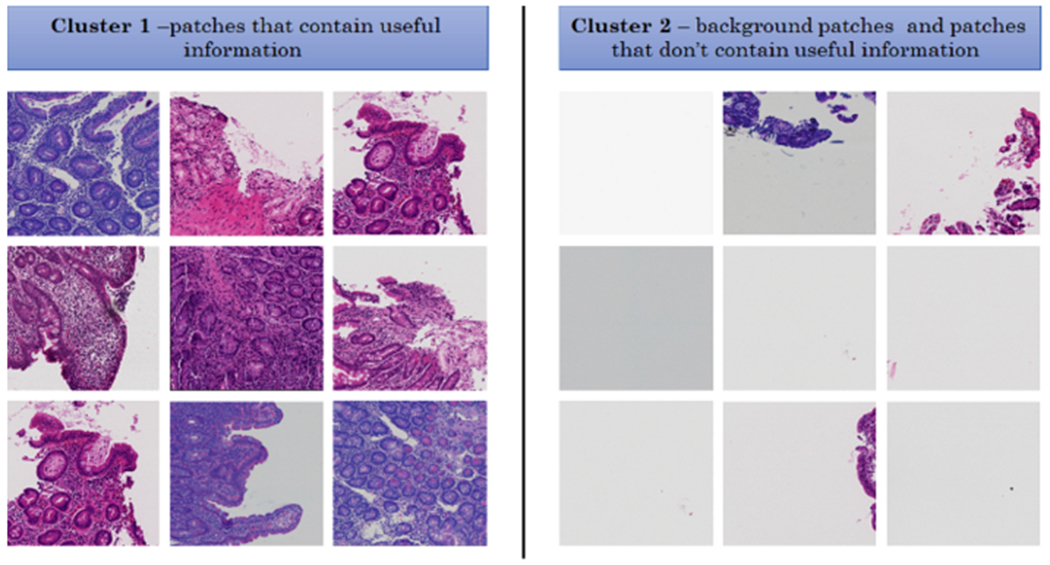

Results of patch clustering has been summarized in Table 1 and Fig. 3. Obviously, patches in cluster 1, which were deemed useful, are used for the analysis in this paper.

Table 1.

The clustering results for all patches into two clusters

| Total | Cluster 1 | Cluster 2 | |

|---|---|---|---|

| Celiac Disease (CD) | 16, 832 | 7, 742 (46%) | 9, 090 (54%) |

| Normal | 15, 983 | 8, 953 (56%) | 7, 030 (44%) |

| Environmental Enteropathy (EE) | 22, 625 | 2, 034 (9%) | 20, 591 (91%) |

| Total | 55, 440 | 18, 729 (34%) | 36, 711 (66%) |

Fig. 3.

Some samples of clustering results - cluster 1 includes patches with useful information and cluster 2 includes patches without useful information (mostly created from background parts of WSIs)

3.3. Color Balancing

The concept of color balancing for this paper is to convert all images to the same color space to account for variations in H&E staining. The images can be represented with the illuminant spectral power distribution I(λ), the surface spectral reflectance S(λ), and the sensor spectral sensitivities C(λ) [5,6]. Using this notation [6], the sensor responses at the pixel with coordinates (x, y) can be thus described as:

| (6) |

where w is the wavelength range of the visible light spectrum, ρ and C(λ) are three-component vectors.

| (7) |

where RGBin is raw images from biopsy and RGBout is results for CNN input. In the following, a more compact version of Eq. 7 is used:

| (8) |

where α is exposure compensation gain, Iω refers the diagonal matrix for the illuminant compensation and A indicates the color matrix transformation.

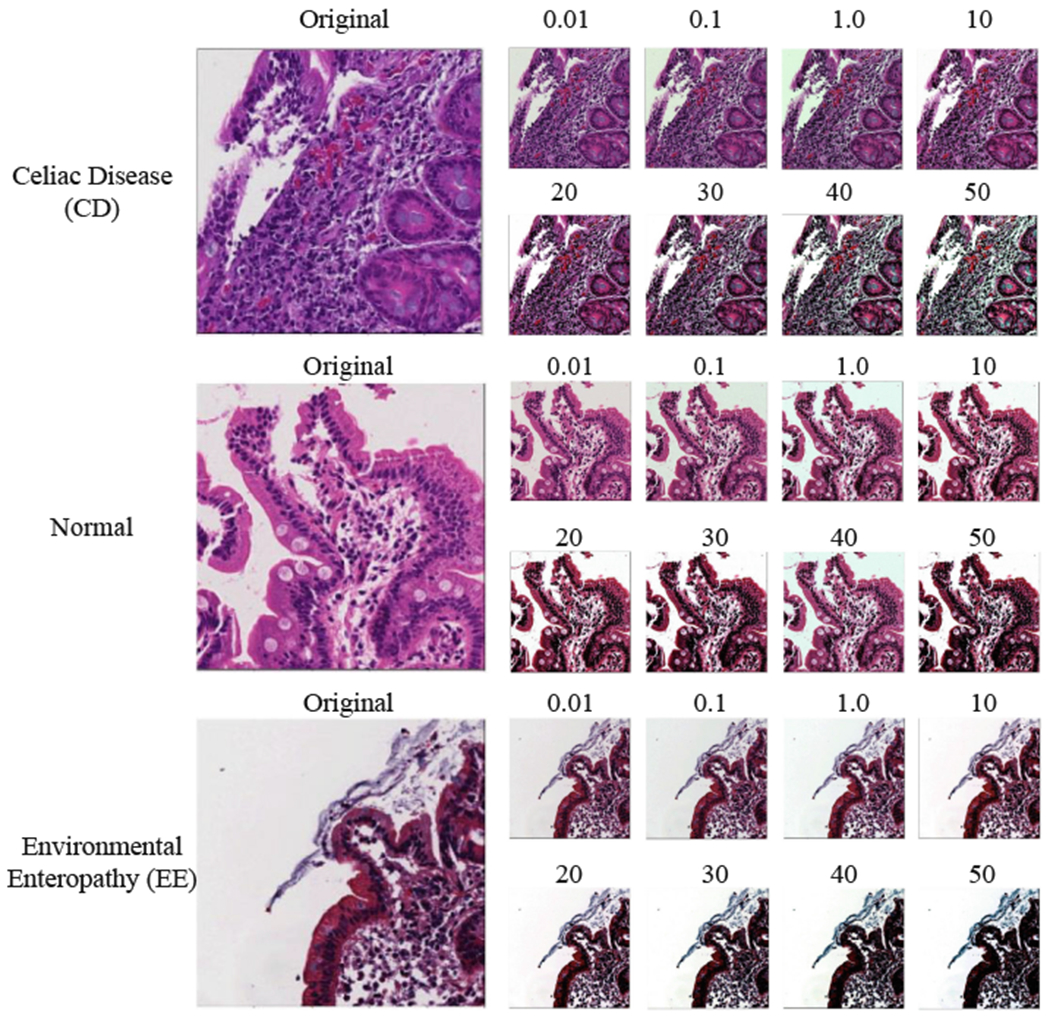

Figure 4 shows the results of color balancing for three classes (Celiac Disease (CD), Normal and Environmental Enteropathy (EE)) with different color balancing percentages between 0.01 and 50.

Fig. 4.

Color balancing samples for the three classes

4. Method

In this section, we describe Convolutional Neural Networks (CNN) including the convolutional layers, pooling layers, activation functions, and optimizer. Then, we discuss our network architecture for diagnosis of Celiac Disease and Environmental Enteropathy. As shown in Fig. 5, the input layers starts with image patches (1000 × 1000) and is connected to the convolutional layer (Conv 1). Conv 1 is connected to the pooling layer (MaxPooling), and then connected to Conv 2. Finally, the last convolutional layer (Conv 3) is flattened and connected to a fully connected perception layer. The output layer contains three nodes which each node represent one class.

Fig. 5.

Structure of convolutional neural net using multiple 2D feature detectors and 2D max-pooling

4.1. Convolutional Layer

CNN is a deep learning architecture that can be employed for hierarchical image classification. Originally, CNNs were built for image processing with an architecture similar to the visual cortex. CNNs have been used effectively for medical image processing. In a basic CNN used for image processing, an image tensor is convolved with a set of kernels of size d × d. These convolution layers are called feature maps and can be stacked to provide multiple filters on the input. The element (feature) of input and output matrices can be different [22]. The process to compute a single output matrix is defined as follows:

| (9) |

Each input matrix I–i is convolved with a corresponding kernel matrix Ki,j, and summed with a bias value Bj at each element. Finally, a non-linear activation function (see Sect. 4.3) is applied to each element [22].

In general, during the back propagation step of a CNN, the weights and biases are adjusted to create effective feature detection filters. The filters in the convolution layer are applied across all three ‘channels’ or Σ (size of the color space) [13].

4.2. Pooling Layer

To reduce the computational complexity, CNNs utilize the concept of pooling to reduce the size of the output from one layer to the next in the network. Different pooling techniques are used to reduce outputs while preserving important features [31]. The most common pooling method is max pooling where the maximum element is selected in the pooling window.

In order to feed the pooled output from stacked featured maps to the next layer, the maps are flattened into one column. The final layers in a CNN are typically fully connected [19].

4.3. Neuron Activation

The implementation of CNN is a discriminative trained model that uses standard back-propagation algorithm using a sigmoid (Eq. 10), (Rectified Linear Units (ReLU) [26] (Eq. 11) as activation function. The output layer for multiclass classification includes a Softmax function (as shown in Eq. 12).

| (10) |

| (11) |

| (12) |

4.4. Optimizor

For this CNN architecture, the Adam optimizor [17] which is a stochastic gradient optimizer that uses only the average of the first two moments of gradient (v and m, shown in Eqs. 13, 14, 15 and 16). It can handle non-stationary of the objective function as in RMSProp, while overcoming the sparse gradient issue limitation of RMSProp [17].

| (13) |

| (14) |

| (15) |

| (16) |

where mt is the first moment and vt indicates second moment that both are estimated. and .

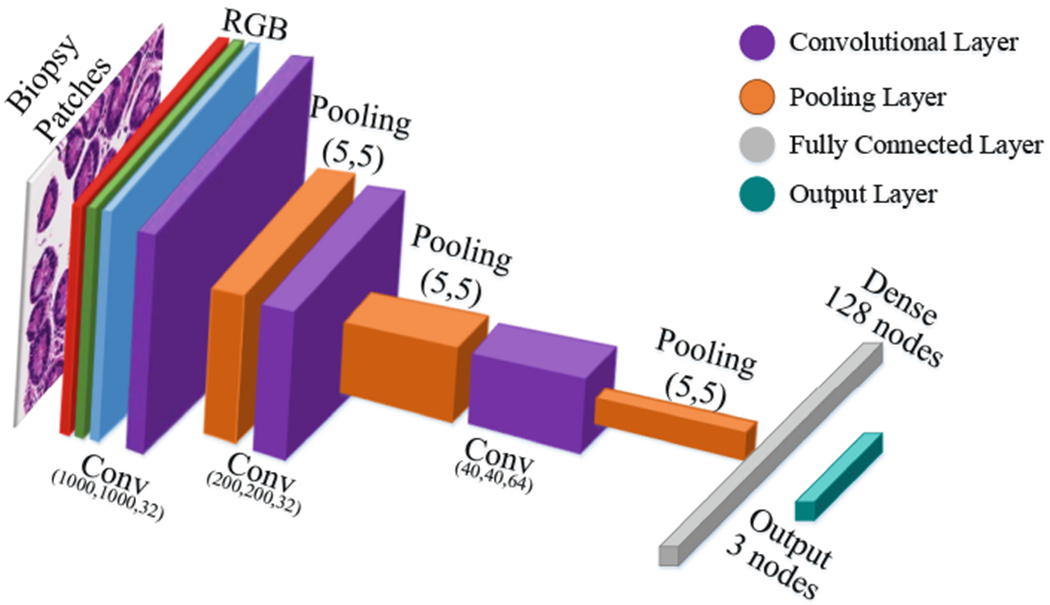

4.5. Network Architecture

As shown in Table 2 and Fig. 6, our CNN architecture consists of three convolution layer each followed by a pooling layer. This model receives RGB image patches with dimensions of (1000 × 1000) as input. The first convolutional layer has 32 filters with kernel size of (3, 3). Then we have Pooling layer with size of (5, 5) which reduce the feature maps from (1000 × 1000) to (200 × 200). The second convolutional layers with 32 filters with kernel size of (3, 3). Then Pooling layer (MaxPooling 2D) with size of (5, 5) reduces the feature maps from (200 × 200) to (40 × 40). The third convolutional layer has 64 filters with kernel size of (3, 3), and final pooling layer (MaxPooling 2D) is scaled down to (8 × 8). The feature maps as shown in Table 2 is flatten and connected to fully connected layer with 128 nodes. The output layer with three nodes to represent the three classes: (Environmental Enteropathy, Celiac Disease, and Normal).

Table 2.

CNN architecture for diagnosis of diseased duodenal on biopsy images

| Layer (type) | Output shape | Trainable parameters | |

|---|---|---|---|

| 1 | Convolutional layer | (1000, 1000, 32) | 869 |

| 2 | Max pooling | (200, 200, 32) | 0 |

| 3 | Convolutional layer | (200, 200, 32) | 9, 248 |

| 4 | Max pooling | (40, 40, 32) | 0 |

| 5 | Convolutional layer | (40, 40, 64) | 18, 496 |

| 6 | Max pooling | (8, 8, 64) | 0 |

| 8 | dense | 128 | 524, 416 |

| 10 | Output | 3 | 387 |

Fig. 6.

Our convolutional neural networks’ architecture

The optimizer used is Adam (see Sect. 4.4) with a learning rate of 0.001, β1 = 0.9, β2 = 0.999 and the loss considered is sparse categorical crossentropy [8]. Also for all layers, we use Rectified linear unit (ReLU) as activation function except output layer which we use Softmax (see Sect.4.3).

5. Empirical Results

5.1. Evaluation Setup

In the research community, comparable and shareable performance measures to evaluate algorithms are preferable. However, in reality such measures may only exist for a handful of methods. The major problem when evaluating image classification methods is the absence of standard data collection protocols. Even if a common collection method existed, simply choosing different training and test sets can introduce inconsistencies in model performance [34]. Another challenge with respect to method evaluation is being able to compare different performance measures used in separate experiments. Performance measures generally evaluate specific aspects of classification task performance, and thus do not always present identical information. In this section, we discuss evaluation metrics and performance measures and highlight ways in which the performance of classifiers can be compared.

Since the underlying mechanics of different evaluation metrics may vary, understanding what exactly each of these metrics represents and what kind of information they are trying to convey is crucial for comparability. Some examples of these metrics include recall, precision, accuracy, F-measure, micro-average, and macro-average. These metrics are based on a “confusion matrix” that comprises true positives (TP), false positives (FP), false negatives (FN) and true negatives (TN) [21]. The significance of these four elements may vary based on the classification application. The fraction of correct predictions over all predictions is called accuracy (Eq. 17). The proportion of correctly predicted positives to all positives is called precision, i.e. positive predictive value (Eq. 18).

| (17) |

| (18) |

| (19) |

| (20) |

5.2. Experimental Setup

The following results were obtained using a combination of central processing units (CPUs) and graphical processing units (GPUs). The processing was done on a Xeon E5 – 2640 (2.6 GHz) with 32 cores and 64 GB memory, and the GPU cards were two Nvidia Titan Xp and a Nvidia Tesla K20c. We implemented our approaches in Python using the Compute Unified Device Architecture (CUDA), which is a parallel computing platform and Application Programming Interface (API) model created by Nvidia. We also used Keras and TensorFlow libraries for creating the neural networks [2, 8].

5.3. Experimental Results

In this section we show that CNN with color balancing can improve the robustness of medical image classification. The results for the model trained on 4 different color balancing values are shown in Table 3. The results shown in Table 4 are also based on the trained model using the same color balancing values. Although in Table 4, the test set is based on a different set of color balancing values: 0.5, 1.0, 1.5 and 2.0. By testing on a different set of color balancing, these results show that this technique can solve the issue of multiple stain variations during histological image analysis.

Table 3.

F1-score for train on a set with color balancing of 0.001, 0.01, 0.1, and 1.0. Then, we evaluate test set with same color balancing

| Precision | Recall | f1-score | Support | |

|---|---|---|---|---|

| Celiac Disease (CD) | 0.89 | 0.99 | 0.94 | 22,196 |

| Normal | 0.99 | 0.83 | 0.91 | 22, 194 |

| Environmental Enteropathy (EE) | 0.96 | 1.00 | 0.98 | 22, 198 |

Table 4.

F1-score for train with color balancing of 0.001, 0.01, 0.1, and 1.0 and test with color balancing of 0.5, 1.0, 1.5 and 2.0

| Precision | Recall | f1-score | Support | |

|---|---|---|---|---|

| Celiac Disease (CD) | 0.90 | 0.94 | 0.92 | 22, 196 |

| Normal | 0.96 | 0.80 | 0.87 | 22, 194 |

| Environmental Enteropathy (EE) | 0.89 | 1.00 | 0.94 | 22, 198 |

As shown in Table 3, the f1-score of three classes (Environmental Enteropathy (EE), Celiac Disease (CD), and Normal) are 0.98, 0.94, and 0.91 respectively. In Table 4, the f1-score is reduced, but not by a significant amount. The three classes (Environmental Enteropathy (EE), Celiac Disease (CD), and Normal) f1-scores are 0.94, 0.92, and 0.87 respectively. The result is very similar to MA. Boni et al. [3] which achieved 90.59% of accuracy in their mode, but without using the color balancing technique to allow differently stained images.

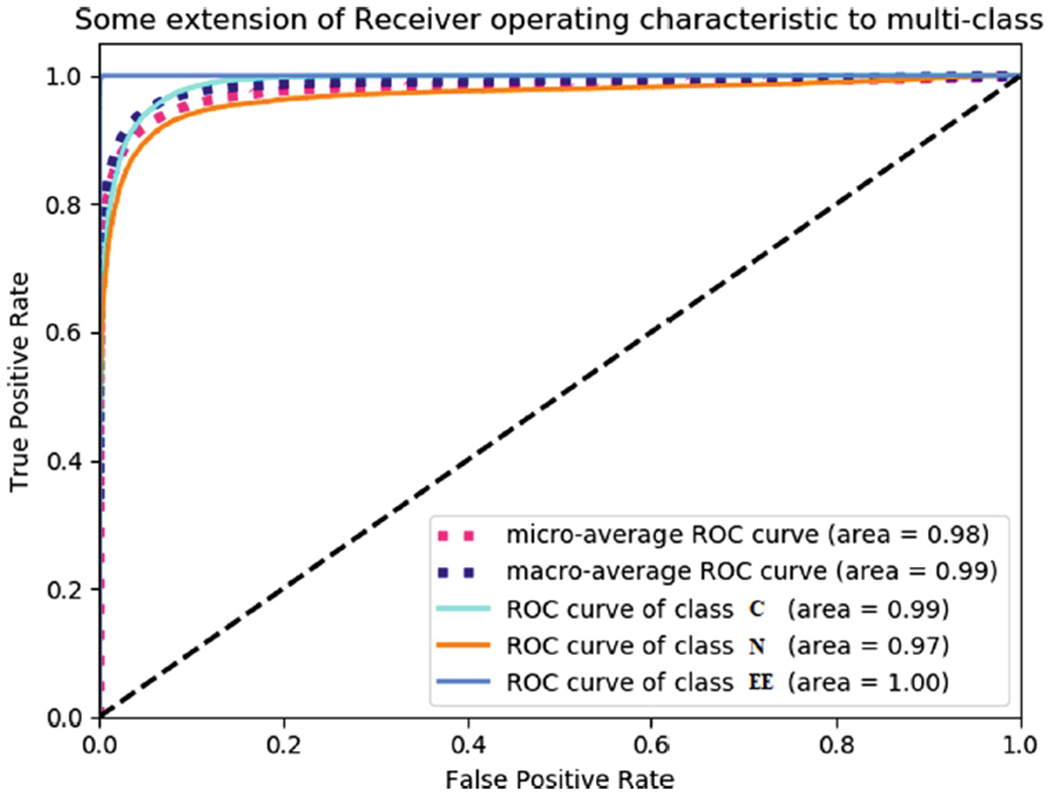

In Fig. 7, Receiver operating characteristics (ROC) curves are valuable graphical tools for evaluating classifiers. However, class imbalances (i.e. differences in prior class probabilities) can cause ROC curves to poorly represent the classifier performance. ROC curve plots true positive rate (TPR) and false positive rate (FPR). The ROC shows that AUC of Environmental Enteropathy (EE) is 1.00, Celiac Disease (CD) is 0.99, and Normal is 0.97.

Fig. 7.

Receiver operating characteristics (ROC) curves for three classes also the figure shows micro-average and macro-average of our classifier

As shown in Table 5, our model performs better compared to some other models in terms of accuracy. Among the compared models, only the fine-tuned ALEXNET [27] has considered the color staining problem. This model proposes a transfer learning based approach for the classification of stained histology images. They also applied stain normalization before using images for fine tuning the model.

Table 5.

Comparison accuracy with different baseline methods

6. Conclusion

In this paper, we proposed a data driven model for diagnosis of diseased duodenal architecture on biopsy images using color balancing on convolutional neural networks. Validation results of this model show that it can be utilized by pathologists in diagnostic operations regarding CD and EE. Furthermore, color consistency is an issue in digital histology images and different imaging systems reproduced the colors of a histological slide differently. Our results demonstrate that application of the color balancing technique can attenuate effect of this issue in image classification.

The methods described here can be improved in multiple ways. Additional training and testing with other color balancing techniques on data sets will continue to identify architectures that work best for these problems. Also, it is possible to extend the model to more than four different color balance percentages to capture more of the complexity in the medical image classification.

Acknowledgments.

This research was supported by University of Virginia, Engineering in Medicine SEED Grant (SS & DEB), the University of Virginia Translational Health Research Institute of Virginia (THRIV) Mentored Career Development Award (SS), and the Bill and Melinda Gates Foundation (AA, OPP1138727; SRM, OPP1144149; PK, OPP1066118)

References

- 1.Who. children: reducing mortality. fact sheet; 2017. http://www.who.int/mediacentre/factsheets/fs178/en/. Accessed 30 Jan 2019 [Google Scholar]

- 2.Abadi M, Agarwal A, Barham P, Brevdo E, Chen Z, Citro C, Corrado GS, Davis A, Dean J, Devin M, et al. : Tensorflow: large-scale machine learning on heterogeneous distributed systems. arXiv preprint arXiv: arXiv:1603.04467 (2016) [Google Scholar]

- 3.Al Boni M, Syed S, Ali A, Moore SR, Brown DE: Duodenal biopsies classification and understanding using convolutional neural networks. American Medical Informatics Association; (2019) [PMC free article] [PubMed] [Google Scholar]

- 4.Bejnordi BE, Veta M, Van Diest PJ, Van Ginneken B, Karssemeijer N, Litjens G, Van Der Laak JA, Hermsen M, Manson QF, Balkenhol M, et al. : Diagnostic assessment of deep learning algorithms for detection of lymph node metastases in women with breast cancer. JAMA 318(22), 2199–2210 (2017) [DOI] [PMC free article] [PubMed] [Google Scholar]