Abstract

Artificial neural networks (ANN) and data analysis (DA) are powerful tools for supporting decision-making. They are employed in diverse fields, including nanotechnology; for example, in predicting the size of silver nanoparticles. To our knowledge, we are the first to use ANN to predict liposome size (LZ). Liposomes are lipid nanoparticles used in different biomedical applications that can be produced in Dean-forces-based microdevices such as the Periodic Disturbance Micromixer (PDM). In this work, ANN and DA techniques are used to build an LZ prediction model from the two most relevant variables in a PDM, the Flow Rate Ratio (FRR) and the Total Flow Rate (TFR), while the temperature, solvents, and concentrations were kept constant. The ANN was designed in MATLAB and fed data from 60 experiments, split into 70% training, 15% validation, and 15% testing. For DA, a regression analysis was used. The ANN model showed a correlation coefficient of 0.98147 for training and 0.97247 for all data, compared with 0.8882 obtained by DA.

Keywords: artificial neural networks, micromixer, liposome, data analysis

1. Introduction

A liposome is a vesicle frequently made of phospholipids and cholesterol [1]. Liposomes are used in different applications such as transfection [2], drug delivery [3], chemotherapy [4], cosmetics [5], and many others. Mechanical dispersion [6], solvent dispersion [7], and detergent removal [8] are among the methods used to produce liposomes, but microfluidic micromixers have been demonstrated to be a robust and scalable method of making size-controlled liposomes [9].

Liposome size (LZ) is an important factor in efficient cancer drug delivery [10]. That is why being able to determine the size of the liposomes before manufacturing them would allow for a lean process [11].

Data analysis (DA) tools were previously used to predict the LZ of liposomes fabricated in micromixers [9,12,13,14]. These models treated LZ as a dependent variable of the Flow Rate Ratio (FRR) and Total Flow Rate (TFR). The implementation of an artificial neural network (ANN) could allow for the development of more accurate models [15]. Artificial intelligence strategies have become a common tool in pharmaceutical research [16]. An ANN works as a “universal algebraic function” that accounts for noise in experimental data [17], which can help predict the liposome size by finding patterns and relationships in the two data inputs. Comparative studies of both techniques are required to determine which one performs best [18].

Currently, there is no ANN-based model for the prediction of LZ; however, this technique was previously used successfully to predict silver nanoparticle size [19,20] and droplet size in microfluidic devices [21], and to identify operating parameters in microfluidic devices [22,23,24].

The Periodic Disturbance Mixer (PDM) is a micromixer designed for liposome production based on Dean forces. This device has a polynomial equation that estimates LZ using the FRR and TFR [14].

In this work, we compared LZ prediction models based on DA and on an ANN, a two-layer feed-forward network known as Fitnet [25], for a PDM in which temperature, geometry, solvents, and lipids were kept constant and the FRR and TFR were varied. We demonstrated that the ANN model had a higher correlation coefficient (R) and a lower mean square error (MSE) than the DA model.

2. Materials and Methods

2.1. Experimental Setup

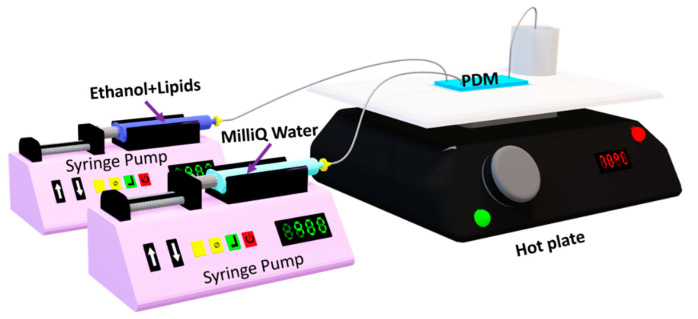

To allow a fair comparison between the DA and ANN models, the experimental setup used in “Surface Response Based Modeling of Liposome Characteristics in a Periodic Disturbance Mixer” [14] was preserved. It consisted of PDM devices and three syringe pumps (2 Harvard Apparatus 11 plus 70-2212, 1 Norm-Ject 10 mL). One syringe pump was for the lipid-ethanol mixture, the second for the MilliQ water, and the last one for ethanol, used only for channel cleaning. Each syringe was connected using a 0.22 µm filter and tubing, as shown in Figure 1. The PDM was placed on a hot plate at 70 °C. The final mixture was collected from the outlet, also on the hot plate, in 4 mL scintillation vials prepared with MilliQ water for a final lipid concentration of 0.1 mg/mL for each sample. Samples were cooled down to room temperature and then stored at 4 °C.

Figure 1.

Experimental setup [26].

The lipid mixture consisted of 1,2-dimyristoyl-sn-glycero-3-phosphocholine (DMPC), cholesterol, and dihexadecyl phosphate (DHP) at a molar ratio of 5:4:1, all dissolved in ethanol at a final concentration of 5 mM.

2.2. Data Collection

The FRR and TFR were selected according to preceding work [14]. The experiments were first planned with Design of Experiments (DoE) and response surface methodology strategies, taking into consideration micromixer operating conditions and equipment restrictions. All the experiments were between 3 and 18 mL/h for the TFR and between 1 and 12 for the FRR, which is dimensionless.

The size distribution by intensity and the polydispersity index (PDI) were measured by Dynamic Light Scattering (DLS) with a Zetasizer Nano S90 (Malvern, Worcestershire, United Kingdom). To obtain statistically meaningful results for liposome size, the LZ was taken as the average of three independent measurements per sample.

2.3. Prediction Models

The liposome size prediction model based on DA techniques was previously reported [9,13]. It consisted of a reduced quadratic response surface model (Figure A1), based on the Central Composite Circumscribed Rotatable (CCCR) design and fitted to 29 samples using the TFR and FRR as independent variables. The TFR² and TFR × FRR terms were excluded because they were not statistically significant. The DA model is shown in Equation (1).

| (1) |
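As an illustration of how a reduced quadratic model of this kind can be fitted, the following Python sketch solves the least-squares problem for LZ = b0 + b1·FRR + b2·TFR + b3·FRR². The choice of terms is an assumption based on the description above (the TFR² and TFR × FRR terms removed); the function names and the synthetic data are hypothetical, and the coefficients are not those of Equation (1).

```python
def fit_reduced_quadratic(frr, tfr, lz):
    """Least-squares fit of LZ = b0 + b1*FRR + b2*TFR + b3*FRR**2 (assumed form)."""
    # Design matrix: one row [1, FRR, TFR, FRR^2] per sample.
    rows = [[1.0, f, t, f * f] for f, t in zip(frr, tfr)]
    k = 4
    # Normal equations (A^T A) b = A^T y, stored as an augmented matrix.
    aug = [[sum(r[i] * r[j] for r in rows) for j in range(k)]
           + [sum(r[i] * y for r, y in zip(rows, lz))] for i in range(k)]
    # Gaussian elimination with partial pivoting.
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, k):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, k + 1):
                aug[r][c] -= factor * aug[col][c]
    # Back substitution.
    coeffs = [0.0] * k
    for i in reversed(range(k)):
        s = sum(aug[i][j] * coeffs[j] for j in range(i + 1, k))
        coeffs[i] = (aug[i][k] - s) / aug[i][i]
    return coeffs


def predict_lz_quadratic(coeffs, frr, tfr):
    """Evaluate the fitted surface at one operating point."""
    b0, b1, b2, b3 = coeffs
    return b0 + b1 * frr + b2 * tfr + b3 * frr * frr


# Synthetic, noise-free data generated from hypothetical coefficients.
frr = [f for f in (1, 4, 7, 10, 12) for _ in range(4)]
tfr = [t for _ in range(5) for t in (3, 8, 13, 18)]
lz = [200.0 - 20.0 * f - 5.0 * t + 1.0 * f * f for f, t in zip(frr, tfr)]
print(fit_reduced_quadratic(frr, tfr, lz))
```

With real measurements the recovered coefficients would also carry experimental noise, which is why the significance of each term has to be checked before it is kept in the model.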

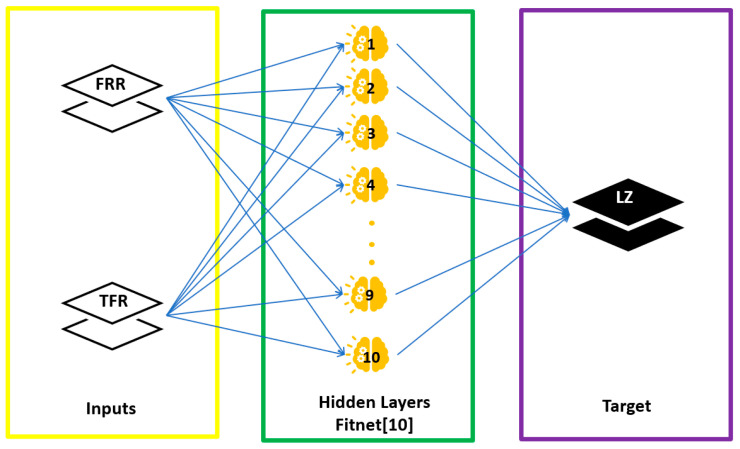

For the AI approach, the prediction model was an ANN: a two-layer feed-forward network with sigmoid hidden neurons (hidden layer) and a linear output neuron (output layer) (Figure 2). It had two input variables and one output variable (Table 1) and was built from 60 samples (Figure A2 and Figure A3). A total of 70% of the data were used for training, 15% for validation, and 15% for testing. This ANN was programmed in MATLAB using the neural network tools launched with the nnstart command.

Figure 2.

Schematic diagram demonstrating the model architecture of ANN prediction model.

Table 1.

Variables of the ANN.

| Variables | Units | Meaning |

|---|---|---|

| FRR (input) | - | The flow rate ratio is the ratio of the volumetric flow rate of the water phase to that of the solvent/lipid phase [27]. |

| TFR (input) | mL/h | The total flow rate is the sum of the flow rates of the water phase and the solvent/lipid phase [28]. |

| LZ (output) | nm | The liposome size is the average of three independent measurement repetitions of size distribution by intensity. |

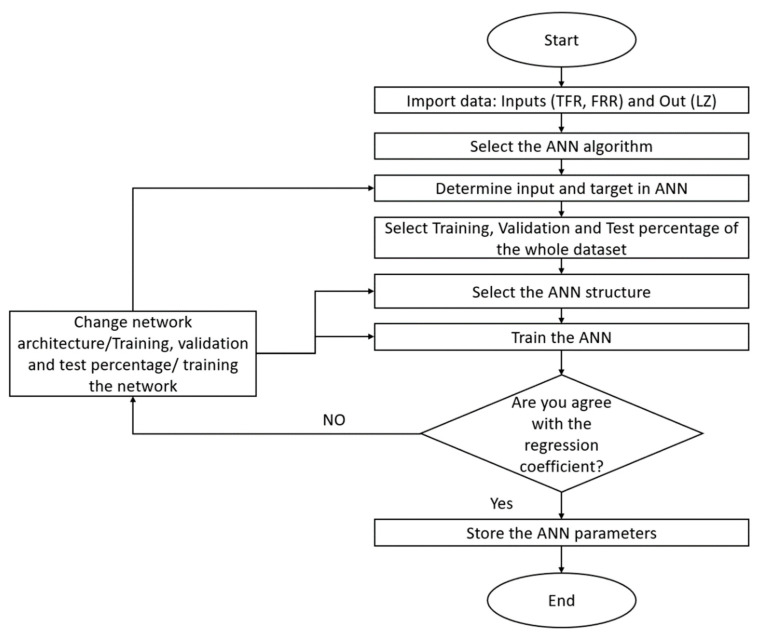

The flow chart in Figure 3 shows the process used to program the ANN prediction model. The first step consisted of importing the input and output data into the workspace and opening the ANN fitting tool (Fitnet) with the nftool command. The second step was to select the inputs and targets of the ANN from the workspace variables, and the third step was to select the percentages of the dataset used for training, validation, and testing. The fourth step was to set the number of neurons in the hidden layer. Finally, the fifth step was the training, for which Levenberg–Marquardt was chosen as the training algorithm. If the correlation coefficient was satisfactory, the network parameters were stored; if not, the ANN was retrained.

Figure 3.

Flowchart used in MATLAB environment.

3. Results and Discussions

3.1. ANN Prediction Model

The LZ prediction model was developed in MATLAB with nftool. A heuristic approach was used to select the best training, validation, and testing parameters. The data used are shown in Table A1. A total of 42 samples were employed for training, 9 for validation, and 9 for testing. All samples were assigned to these subsets at random.
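The random 70/15/15 division can be sketched in a few lines of Python. This is only an illustration of the idea, not the routine MATLAB uses internally; the function name and the `seed` parameter are hypothetical.

```python
import random


def split_indices(n_samples, train=0.70, val=0.15, seed=0):
    """Randomly divide sample indices into training, validation, and test sets."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # seeded so the split is repeatable
    n_train = round(train * n_samples)
    n_val = round(val * n_samples)
    return (indices[:n_train],
            indices[n_train:n_train + n_val],
            indices[n_train + n_val:])  # the remainder is the test set


train_idx, val_idx, test_idx = split_indices(60)
print(len(train_idx), len(val_idx), len(test_idx))  # 42 9 9
```

Because the subsets are drawn at random, retraining with a different division can change the reported R and MSE of each subset.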

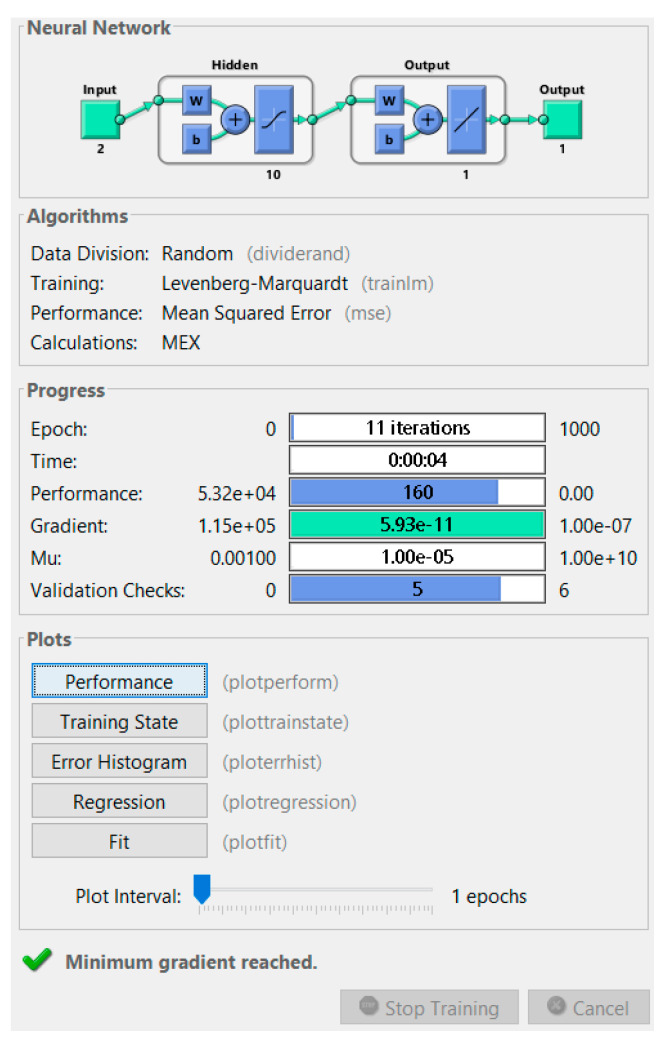

The two layers of the ANN consisted of the hidden layer with 10 neurons and the output layer with a single neuron. The number of neurons in the hidden layer was selected based on the seed neurons’ number according to Equation (2) [16,29]:

| (2) |

where n is the number of samples.

| (3) |

Training was carried out with 10 neurons in the hidden layer. The ANN was retrained until the correlation coefficient for all data was close to 1.

Figure 4 shows the MATLAB training progress report, which summarizes the ANN characteristics and performance.

Figure 4.

MATLAB Training progress report.

The performance analysis of the ANN is based on the correlation coefficient (R) and the Mean Square Error (MSE). R is a statistical measure of the strength of the relationship between two variables and is given by Equation (4) [30].

| $R = \dfrac{\sum_{i}(x_i - \bar{x})(y_i - \bar{y})}{\sqrt{\sum_{i}(x_i - \bar{x})^2 \, \sum_{i}(y_i - \bar{y})^2}}$ | (4) |

where $R$ is the correlation coefficient, $x_i$ are the values of the x-variable in a sample, $\bar{x}$ is the mean of the values of the x-variable, $y_i$ are the values of the y-variable in a sample, and $\bar{y}$ is the mean of the values of the y-variable.
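The correlation coefficient of Equation (4) can be computed directly from its definition. The sketch below is a plain Python version with a hypothetical function name; here x holds the measured sizes and y the ANN predictions listed in Table 4.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equally long sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Numerator: co-variation of x and y around their means.
    cov = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    # Denominator: product of the two spreads.
    var_x = sum((xi - mean_x) ** 2 for xi in x)
    var_y = sum((yi - mean_y) ** 2 for yi in y)
    return cov / math.sqrt(var_x * var_y)


measured = [120.2, 73.8, 77.24, 64.7, 199.1]        # Table 4, measured LZ (nm)
predicted = [121.02, 74.988, 78.980, 64.288, 199.08]  # Table 4, ANN LZ (nm)
print(pearson_r(measured, predicted))
```

A value near 1 means the predictions rise and fall with the targets; it does not by itself show that the two agree in absolute value, which is why the MSE is reported alongside R.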

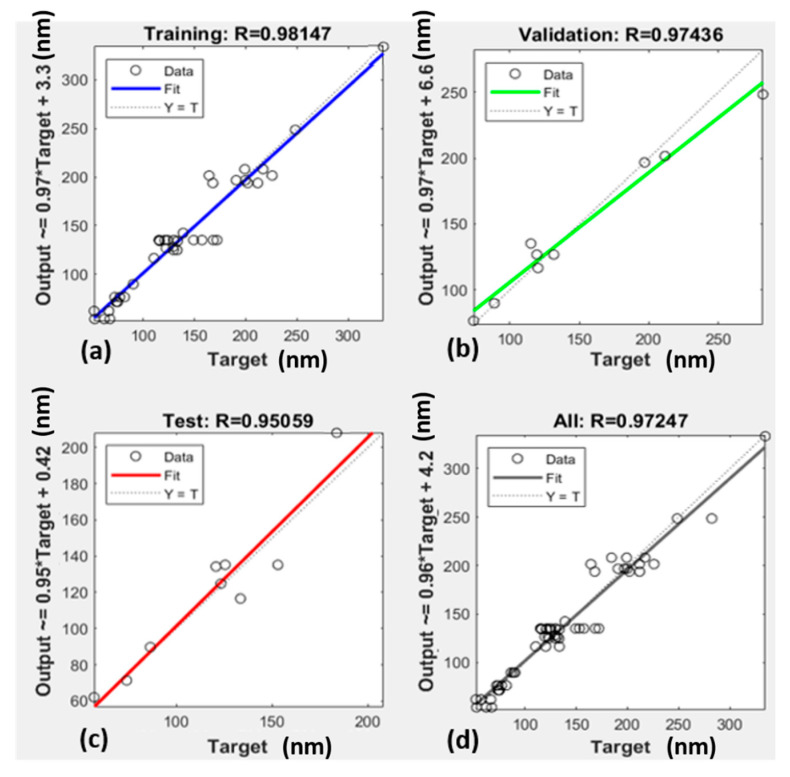

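The MSE, Equation (5), is used to determine how close the model predictions are to the measured data, where $y_i$ is the measured value, $\hat{y}_i$ is the predicted value, and $n$ is the number of samples. An MSE value close to 0 indicates that the model fits the data [31]. Table 2 shows the performance analysis, and Figure 5 shows the plots of the data and the model line.

| $\mathrm{MSE} = \dfrac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2$ | (5) |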

Table 2.

MSE and R coefficient for training, validation, and testing.

| | MSE | R |

|---|---|---|

| Training | 156.7893 | 0.98147 |

| Validation | 290.50693 | 0.97436 |

| Testing | 328.40462 | 0.95059 |

| All | - | 0.97247 |

Figure 5.

Linear Regression of ANN; (a) ANN training model Regression analysis for LZ; (b) ANN testing model Regression analysis for LZ; (c) ANN validation model Regression analysis for LZ; (d) ANN all data model Regression analysis for LZ.

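The square error of each sample is defined in Equation (6), where $y_i$ is the target value and $\hat{y}_i$ is the value predicted by the ANN.

| $\mathrm{SE}_i = \left(y_i - \hat{y}_i\right)^2$ | (6) |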

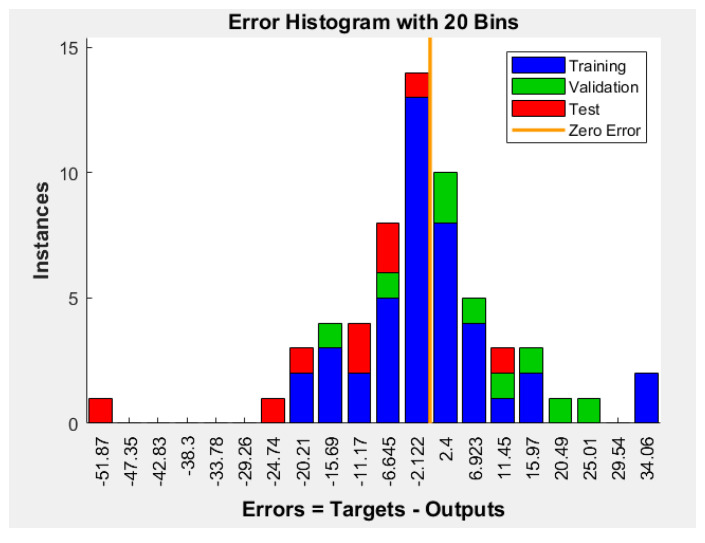

Figure 6 is the histogram of the errors between target values and predicted values after training the ANN. In this plot, the y-axis represents the number of samples from the dataset, and the x-axis is divided into 20 bins. The width of each bin was calculated with Equation (7) and, in the case of our ANN, was 4.2965 nm [19].

| (7) |

| (8) |
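A 20-bin error histogram of this kind can be sketched as follows. The sketch assumes that the bin width is the error range divided by the number of bins; the function name and the example errors are hypothetical and are not the errors of the trained ANN.

```python
def error_histogram(errors, n_bins=20):
    """Return (bin_width, counts) for equally wide bins spanning the error range."""
    lo, hi = min(errors), max(errors)
    width = (hi - lo) / n_bins  # assumed definition of the bin width
    if width == 0:
        raise ValueError("all errors are identical; cannot build bins")
    counts = [0] * n_bins
    for e in errors:
        # The largest error is placed in the last bin rather than a 21st one.
        idx = min(int((e - lo) / width), n_bins - 1)
        counts[idx] += 1
    return width, counts


# Hypothetical errors (target minus prediction, in nm).
example_errors = [-12.5, -4.0, -1.2, -0.3, 0.0, 0.4, 1.1, 2.7, 6.3, 14.0]
width, counts = error_histogram(example_errors)
print(width, counts)
```

A histogram concentrated in the bins around zero indicates that most predictions are close to their targets.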

Figure 6.

Error histogram with 20 bins plot.

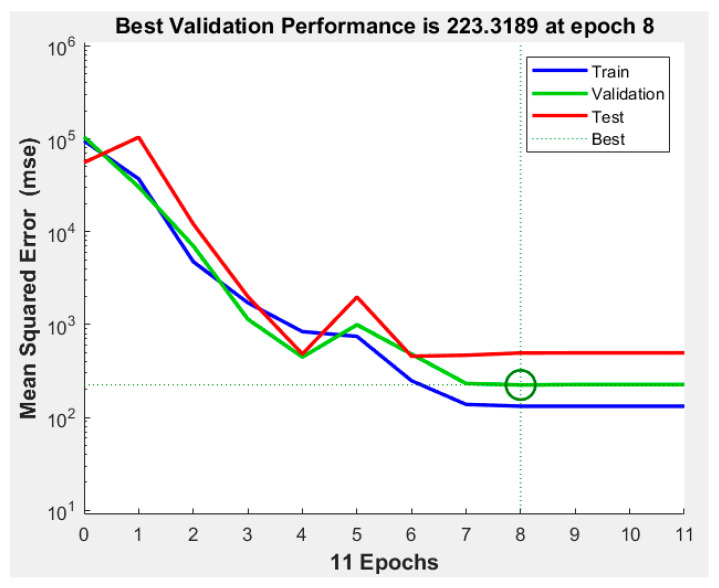

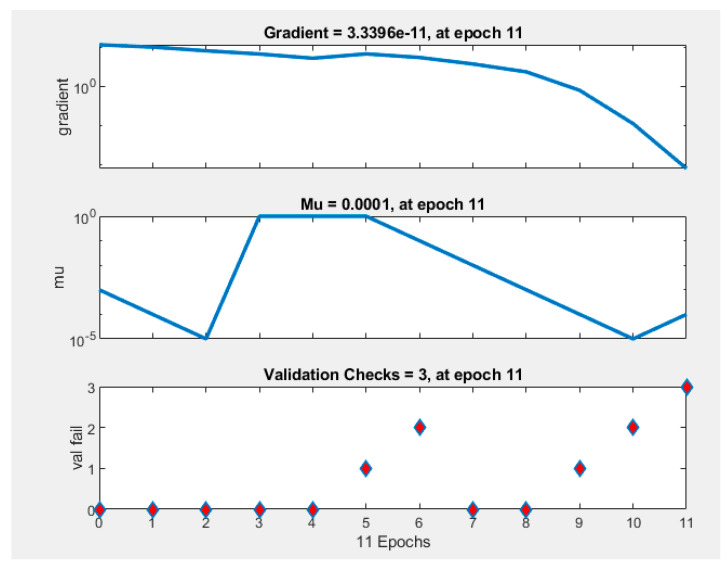

The best validation performance was recorded at epoch 8 (Figure 7). An epoch is one cycle over the complete training dataset [32]. Figure 8 shows that the validation error failed to improve in the three epochs after epoch 8, and the process was stopped at epoch 11.
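The "validation checks" counter reported by the training tool can be understood as the number of consecutive epochs since the validation error last improved. The following Python sketch illustrates that bookkeeping; the function name and the validation-error curve are hypothetical, and the criterion that actually ended the authors' training run is not stated here.

```python
def validation_checks(val_errors):
    """Return (best_epoch, checks): checks counts epochs since the best one."""
    best_epoch = 0
    best_error = val_errors[0]
    checks = 0
    for epoch, err in enumerate(val_errors):
        if err < best_error:
            # New best validation error: remember it and reset the counter.
            best_epoch, best_error, checks = epoch, err, 0
        elif epoch > 0:
            checks += 1
    return best_epoch, checks


# Hypothetical validation MSE per epoch (epochs numbered from 0 to 11).
curve = [900, 700, 560, 450, 380, 340, 315, 300, 290, 292, 295, 301]
print(validation_checks(curve))  # (8, 3)
```

Keeping the weights from the best epoch rather than the last one is what protects the model from overfitting the training subset.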

Figure 7.

Best validation performance in ANN.

Figure 8.

Training state performance plot. At epoch 11, the gradient is 3.3396 × 10⁻¹¹, Mu = 0.0001, and validation checks = 3.

The code generated for the ANN is in Appendix C.

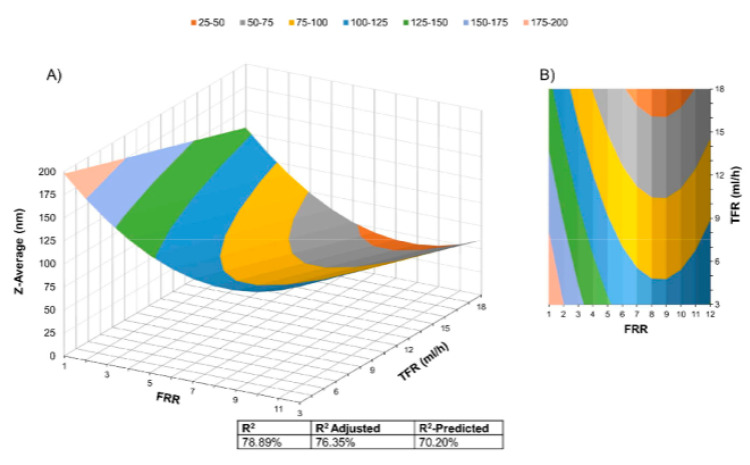

3.2. DA Prediction Model

According to López [14], the performance of the LZ model was evaluated by R², R²-adjusted, and R²-predicted. R² ranges from 0 to 1, where 0 indicates that the model does not describe the process, and 1 shows that all data are on the regression line [21]. The adjusted R² is a variant of R² that has been adjusted for the number of predictors in the model. R²-adjusted increases if a new term improves the model more than would be expected by chance, and it decreases when a predictor improves the model by less than expected by chance. R²-predicted indicates how well the regression model predicts responses for new observations [33].

This model had R² = 78.89%, R²-adjusted = 76.35%, and R²-predicted = 70.20%.
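The relationship between these statistics can be checked with a short Python sketch. It uses the standard formula for the adjusted R² and assumes 29 samples and three predictors (FRR, TFR, and FRR²) for the reduced quadratic model; the number of predictors is an assumption, and the function name is hypothetical.

```python
import math


def adjusted_r2(r2, n_samples, n_predictors):
    """Standard adjusted R^2: penalizes R^2 for the number of predictors."""
    return 1.0 - (1.0 - r2) * (n_samples - 1) / (n_samples - n_predictors - 1)


r2 = 0.7889  # reported R^2 of the DA model
print(round(adjusted_r2(r2, n_samples=29, n_predictors=3), 4))  # 0.7636
print(round(math.sqrt(r2), 4))  # multiple R, 0.8882
```

Under these assumptions the adjusted value agrees with the reported 76.35% to within the rounding of R², and the square root of R² gives the multiple R used to compare the DA model with the ANN.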

3.3. Model Comparison

The models were compared by their R values. For the DA model, the multiple R (Equation (A1)) was used, and for the ANN model, the R for all data. Table 3 shows a better R for the ANN model, with 0.97247 versus 0.8882 for the DA model.

Table 3.

Regression coefficient comparison between DA and ANN models.

| Model | R |

|---|---|

| DA | 0.8882 |

| ANN | 0.97247 |

3.4. Experimental Validation

After obtaining the two prediction models, five sets of FRR and TFR values were randomly selected for the experimental validation of the models; sets with the same operating conditions were excluded. The validation samples were produced with the same protocol used to collect the modeling data and were characterized with the same equipment. The MSE was used to evaluate the performance of the two models.

Table 4 shows the MSE of the external validation for the ANN and DA models. The square error was calculated for each measurement, and the MSE was calculated with Equation (5). The value obtained was 1.057 for the ANN model and 373.44 for the DA model.

Table 4.

Experimental validation performances using ANN and DA model.

| Sample | FRR | TFR (mL/h) | Measured LZ (nm) | ANN LZ (nm) | Square Error | DA LZ (nm) | Square Error |
|---|---|---|---|---|---|---|---|
| 1 | 10.40 | 5.20 | 120.2 | 121.02 | 0.674 | 103.083 | 292.99 |
| 2 | 12.02 | 10.5 | 73.8 | 74.988 | 1.412 | 92.99 | 368.26 |
| 3 | 6.5 | 10.5 | 77.24 | 78.980 | 3.030 | 80.995 | 14.100 |
| 4 | 5 | 18.0 | 64.7 | 64.288 | 0.169 | 61.009 | 13.623 |
| 5 | 3.3 | 3.1 | 199.1 | 199.08 | 0.000 | 164.774 | 1178.27 |
| | | | | MSE | 1.057 | MSE | 373.44 |
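The square errors and MSE values in Table 4 can be recomputed from the tabulated sizes with a few lines of Python. The function names are hypothetical; the numbers are copied from Table 4.

```python
measured = [120.2, 73.8, 77.24, 64.7, 199.1]          # measured LZ (nm)
ann_pred = [121.02, 74.988, 78.980, 64.288, 199.08]   # ANN LZ (nm)
da_pred = [103.083, 92.99, 80.995, 61.009, 164.774]   # DA LZ (nm)


def squared_errors(targets, predictions):
    """Per-sample square error between targets and predictions."""
    return [(t - p) ** 2 for t, p in zip(targets, predictions)]


def mse(targets, predictions):
    """Mean of the per-sample square errors."""
    errors = squared_errors(targets, predictions)
    return sum(errors) / len(errors)


print(round(mse(measured, ann_pred), 2))  # 1.06
print(round(mse(measured, da_pred), 2))   # 373.45
```

The recomputed values differ from the tabulated 1.057 and 373.44 only in the last digit, which is consistent with the predictions in the table having been rounded before publication.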

According to Table 4, the MSE obtained with the external validation data was 373.44 for the DA model compared with 1.057 for the ANN model. Because the MSE of the ANN model is much closer to 0, the ANN model fits the data better than the DA model. This result is consistent with that obtained through R.

4. Conclusions

This comparative study aimed to find out whether DA or an ANN was the more efficient method to estimate LZ. Previous research showed that ANNs were a favorable option for predicting the size of silver nanoparticles. This study confirmed that the ANN was a better approach than DA for predicting LZ.

The external validation data showed an MSE of 1.057 for the ANN model, in contrast with 373.44 for the DA model. In terms of R, the DA model showed 0.8882 versus 0.97247 for the ANN model on all data.

This work is a first step toward a universal model: it shows that training an ANN improves the correlation coefficient compared with DA, which suggests that expanding the number of variables involved in the model could improve its performance and make it more general.

To generate a universal model, it is important to have an adequate database covering the different micromixers currently designed, as well as the types of lipids, solvents, temperatures, flow rates, and mixing percentages. Such a database would make it possible to design a more complex neural network that takes all the variables of the system into account and determines the size of the liposomes generated under given specifications.

Currently, micromixers have not been implemented for the mass production of liposomes. However, a model that predicts the size of the liposome under given conditions could help promote this technology as one of the most viable, since it would not require expensive laboratory equipment.

Acknowledgments

This work was supported by CONACyT (859557), Concordia University, and ÉTS.

Appendix A

Figure A1.

Surface Response Model: The graphical representation of the model generated from DA techniques is shown.

Appendix B

Table A1.

Liposome size of the 60 samples used in ANN modeling.

| Sample | FRR | TFR (mL/h) | LZ (nm) | Sample | FRR | TFR (mL/h) | LZ (nm) |
|---|---|---|---|---|---|---|---|
| 1 | 10.40 | 15.80 | 67.52 | 31 | 8.7 | 3.1 | 225.8 |
| 2 | 10.40 | 5.20 | 133.5 | 32 | 5.5 | 8.0 | 130 |
| 3 | 2.60 | 5.20 | 119.4 | 33 | 8.7 | 12.9 | 123.3 |
| 4 | 2.60 | 15.80 | 86.48 | 34 | 5.5 | 8.0 | 157.3 |
| 5 | 6.50 | 10.50 | 81.81 | 35 | 2.3 | 12.9 | 201.9 |
| 6 | 10.40 | 15.80 | 62.1 | 36 | 8.7 | 3.1 | 211.6 |
| 7 | 12 | 18 | 62.12 | 37 | 2.3 | 3.1 | 217 |
| 8 | 2.60 | 5.20 | 122.4 | 38 | 2.3 | 12.9 | 168 |
| 9 | 2.60 | 15.80 | 88.74 | 39 | 5.5 | 8.0 | 115.3 |
| 10 | 6.50 | 10.50 | 72.23 | 40 | 5.5 | 8.0 | 115.1 |
| 11 | 10.40 | 15.80 | 52.71 | 41 | 5.5 | 8.0 | 115.8 |
| 12 | 10.40 | 5.20 | 110.4 | 42 | 5.5 | 8.0 | 121.1 |
| 13 | 2.60 | 5.20 | 131.6 | 43 | 8.7 | 3.1 | 164.3 |
| 14 | 2.60 | 15.80 | 90.27 | 44 | 8.7 | 12.9 | 133.1 |
| 15 | 6.50 | 10.50 | 77.18 | 45 | 5.5 | 8.0 | 129.7 |
| 16 | 6.50 | 3.00 | 133.5 | 46 | 2.3 | 3.1 | 184.4 |
| 17 | 1 | 10.50 | 190.7 | 47 | 2.3 | 3.1 | 199.1 |
| 18 | 6.50 | 18.00 | 66.63 | 48 | 5.5 | 8.0 | 168.4 |
| 19 | 12.02 | 10.50 | 75.09 | 49 | 5.5 | 8.0 | 172 |
| 20 | 6.50 | 3.00 | 120.7 | 50 | 2.3 | 12.9 | 211.8 |
| 21 | 1 | 10.50 | 197 | 51 | 5.5 | 8.0 | 153 |
| 22 | 6.50 | 18.00 | 57.14 | 52 | 5.5 | 1.0 | 248.5 |
| 23 | 12.02 | 10.50 | 74.14 | 53 | 10.0 | 8.0 | 129.4 |
| 24 | 6.50 | 10.50 | 73.81 | 54 | 5.5 | 1.0 | 282.2 |
| 25 | 6.50 | 3.00 | 116 | 55 | 5.5 | 8.0 | 123.9 |
| 26 | 1 | 10.50 | 199.7 | 56 | 5.5 | 8.0 | 125.5 |
| 27 | 6.50 | 18.00 | 52.14 | 57 | 5.5 | 15.0 | 139 |
| 28 | 1 | 18 | 170.8 | 58 | 1.0 | 8.0 | 334.4 |
| 29 | 7 | 18 | 66.83 | 59 | 5.5 | 8.0 | 149.2 |
| 30 | 8.7 | 12.9 | 129.7 | 60 | 5.5 | 8.0 | 123.6 |

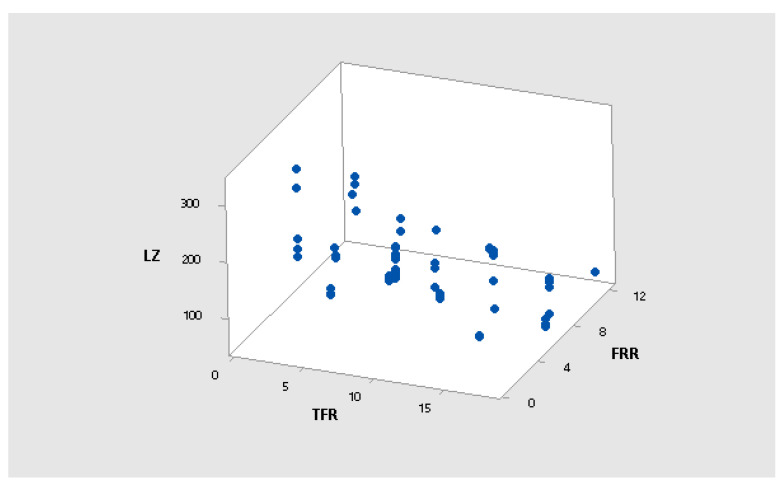

Figure A2.

3D scatter plot of LZ vs. FRR vs. TFR.

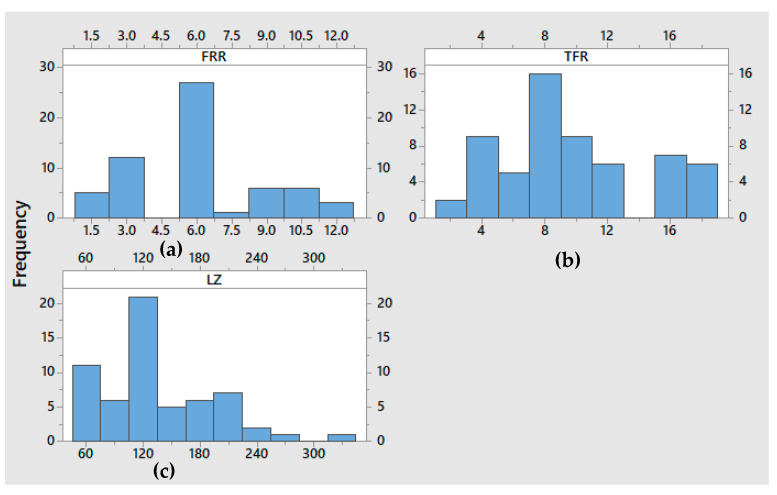

Figure A3.

Histogram plots: (a) FRR vs. frequency, (b) TFR (mL/h) vs. frequency, and (c) LZ vs. frequency.

Calculation of R Multiple for the DA Model

R can also be calculated as the square root of the coefficient of determination (R²), which has a value between 0 and 1 [34]. For the DA model, R² = 78.89%, so:

| $R = \sqrt{R^2} = \sqrt{0.7889} \approx 0.8882$ | (A1) |

Appendix C

The MATLAB code used to predict Liposome size with the FRR and TFR is the following:

function [Y,Xf,Af] = NNF(X,~,~)

%MYNEURALNETWORKFUNCTION neural network simulation function.

% Input 1

x1_step1.xoffset = [0.98;1];

x1_step1.gain = [0.181159420289855;0.105263157894737];

x1_step1.ymin = -1;

% Layer 1

b1 = [2.2573853979793150337;-1.4046714885651678806;-0.06359410449612903915;2.4008609171923636083;-4.4499579001366207365;-2.1788007408023939426;1.9336419725129390113;1.5637078772026233864;3.1849009565352139894;-3.9614189749061110568];

IW1_1 = [-0.13325121336401893335 5.2173462914386723455;5.4213832360022484735 -1.4835535160204569305;-1.5555047045296084285 -0.2443457973733824673;-1.3002680928841963137 3.7318380950631935278;-4.4848684284470383687 3.9942795789850293886;-4.0123400901236037086 -0.30723043350150791575;0.62332724735082822853 -1.7349856439016557719;-10.160464649116889291 -0.81073858216054561776;-3.2670487431816437329 -4.7683452983060288233;-2.5036559886036706679 -2.5286182815795008594];

% Layer 2

b2 = 0.66271782412591195843;

LW2_1 = [1.1438804226171266354 0.37704234783574158696 2.1235959889470081841 -1.2879092489544090583 -1.1746725602152801038 -0.016734561333196285027 -1.1347244592438969768 -0.53324023662588582173 0.11886571077459864854 0.58852216120455558279];

% Output 1

y1_step1.ymin = -1;

y1_step1.gain = 0.00708566569829236;

y1_step1.xoffset = 52.14;

% ===== SIMULATION ========

% Format Input Arguments

isCellX = iscell(X);

if ~isCellX

X = {X}; % wrap a plain matrix in a cell so both input types are handled alike

end

% Dimensions

TS = size(X,2); % timesteps

if ~isempty(X)

Q = size(X{1},1); % samples/series

else

Q = 0;

end

% Allocate Outputs

Y = cell(1,TS);

% Time loop

for ts=1:TS

% Input 1

X{1,ts} = X{1,ts}'; % one column per sample

Xp1 = mapminmax_apply(X{1,ts},x1_step1);

% Layer 1

a1 = tansig_apply(repmat(b1,1,Q) + IW1_1*Xp1);

% Layer 2

a2 = repmat(b2,1,Q) + LW2_1*a1;

% Output 1

Y{1,ts} = mapminmax_reverse(a2,y1_step1);

Y{1,ts} = Y{1,ts}'; % back to one row per sample

end

% Final Delay States

Xf = cell(1,0);

Af = cell(2,0);

% Format Output Arguments

if ~isCellX

Y = cell2mat(Y);

end

end

% ===== MODULE FUNCTIONS ========

% Map Minimum and Maximum Input Processing Function

function y = mapminmax_apply(x,settings)

y = bsxfun(@minus,x,settings.xoffset);

y = bsxfun(@times,y,settings.gain);

y = bsxfun(@plus,y,settings.ymin);

end

% Sigmoid Symmetric Transfer Function

function a = tansig_apply(n,~)

a = 2 ./ (1 + exp(-2*n)) - 1; % element-wise division, equivalent to tanh(n)

end

% Map Minimum and Maximum Output Reverse-Processing Function

function x = mapminmax_reverse(y,settings)

x = bsxfun(@minus,y,settings.ymin);

x = bsxfun(@rdivide,x,settings.gain);

x = bsxfun(@plus,x,settings.xoffset);

end
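For readers without MATLAB, the forward pass above can be transcribed into Python with the standard library only. This is a sketch rather than the authors' code: it assumes that the first input is the FRR and the second is the TFR (in mL/h), it relies on the identity tansig(n) = tanh(n), and the function name `predict_lz` is hypothetical. The weights are copied from the listing above.

```python
import math

# Input normalisation (mapminmax); input order assumed to be [FRR, TFR].
X_OFFSET = [0.98, 1.0]
X_GAIN = [0.181159420289855, 0.105263157894737]
Y_MIN = -1.0
# Hidden layer: 10 tansig neurons (biases and input weights).
B1 = [2.2573853979793150337, -1.4046714885651678806, -0.06359410449612903915,
      2.4008609171923636083, -4.4499579001366207365, -2.1788007408023939426,
      1.9336419725129390113, 1.5637078772026233864, 3.1849009565352139894,
      -3.9614189749061110568]
IW = [[-0.13325121336401893335, 5.2173462914386723455],
      [5.4213832360022484735, -1.4835535160204569305],
      [-1.5555047045296084285, -0.2443457973733824673],
      [-1.3002680928841963137, 3.7318380950631935278],
      [-4.4848684284470383687, 3.9942795789850293886],
      [-4.0123400901236037086, -0.30723043350150791575],
      [0.62332724735082822853, -1.7349856439016557719],
      [-10.160464649116889291, -0.81073858216054561776],
      [-3.2670487431816437329, -4.7683452983060288233],
      [-2.5036559886036706679, -2.5286182815795008594]]
# Output layer: one linear neuron.
B2 = 0.66271782412591195843
LW = [1.1438804226171266354, 0.37704234783574158696, 2.1235959889470081841,
      -1.2879092489544090583, -1.1746725602152801038, -0.016734561333196285027,
      -1.1347244592438969768, -0.53324023662588582173, 0.11886571077459864854,
      0.58852216120455558279]
# Output de-normalisation (reverse mapminmax).
OUT_GAIN = 0.00708566569829236
OUT_OFFSET = 52.14


def predict_lz(frr, tfr):
    """Predicted liposome size (nm) for one operating point."""
    # Scale each input to the [-1, 1] range used during training.
    xp = [(v - off) * g + Y_MIN
          for v, off, g in zip((frr, tfr), X_OFFSET, X_GAIN)]
    # Hidden layer: tansig(n) is mathematically identical to tanh(n).
    a1 = [math.tanh(b + w[0] * xp[0] + w[1] * xp[1]) for b, w in zip(B1, IW)]
    # Linear output neuron.
    a2 = B2 + sum(w * a for w, a in zip(LW, a1))
    # Map the normalised output back to nanometres.
    return (a2 - Y_MIN) / OUT_GAIN + OUT_OFFSET


print(predict_lz(2.3, 3.1))
```

The example evaluates the network at the operating conditions of sample 47 in Table A1 (FRR = 2.3, TFR = 3.1 mL/h), whose measured size is 199.1 nm, so the printed value can be compared against that measurement.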

Author Contributions

Conceptualization, I.O., V.N., I.S. and S.C.-L.; methodology, I.O. and R.R.L.; software, I.O.; validation, I.O. and R.R.L.; formal analysis, I.O.; investigation, I.O. and R.R.L.; resources, V.N. and I.S.; data curation, I.O.; writing—original draft preparation, I.O.; writing—review and editing, I.O., V.N., I.S. and S.C.-L.; visualization, I.O. and R.R.L.; supervision, V.N., I.S. and S.C.-L.; project administration, I.O.; funding acquisition, V.N. and I.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Conflicts of Interest

The authors declare no conflict of interest.


References

1. Banerjee R. Liposomes: Applications in medicine. J. Biomater. Appl. 2001;16:3–21. doi: 10.1106/RA7U-1V9C-RV7C-8QXL.
2. Gao X., Huang L. A novel cationic liposome reagent for efficient transfection of mammalian cells. Biochem. Biophys. Res. Commun. 1991;179:280–285. doi: 10.1016/0006-291X(91)91366-K.
3. Sharma A., Sharma U.S. Liposomes in drug delivery: Progress and limitations. Int. J. Pharm. 1997;154:123–140. doi: 10.1016/S0378-5173(97)00135-X.
4. Gregoriadis G., Swain C., Wills E., Tavill A. Drug-carrier potential of liposomes in cancer chemotherapy. Lancet. 1974;303:1313–1316. doi: 10.1016/S0140-6736(74)90682-5.
5. Betz G., Aeppli A., Menshutina N., Leuenberger H. In vivo comparison of various liposome formulations for cosmetic application. Int. J. Pharm. 2005;296:44–54. doi: 10.1016/j.ijpharm.2005.02.032.
6. Anwekar H., Patel S., Singhai A. Liposome-as drug carriers. Int. J. Pharm. Life Sci. 2011;2:945–951.
7. Mozafari M.R. Liposomes: An overview of manufacturing techniques. Cell. Mol. Biol. Lett. 2005;10:711.
8. Jiskoot W., Teerlink T., Beuvery E.C., Crommelin D.J. Preparation of liposomes via detergent removal from mixed micelles by dilution. Pharm. Weekbl. 1986;8:259–265. doi: 10.1007/BF01960070.
9. Kastner E., Verma V., Lowry D., Perrie Y. Microfluidic-controlled manufacture of liposomes for the solubilisation of a poorly water soluble drug. Int. J. Pharm. 2015;485:122–130. doi: 10.1016/j.ijpharm.2015.02.063.
10. Nagayasu A., Uchiyama K., Kiwada H. The size of liposomes: A factor which affects their targeting efficiency to tumors and therapeutic activity of liposomal antitumor drugs. Adv. Drug Deliv. Rev. 1999;40:75–87. doi: 10.1016/S0169-409X(99)00041-1.
11. Shah S., Dhawan V., Holm R., Nagarsenker M.S., Perrie Y. Liposomes: Advancements and innovation in the manufacturing process. Adv. Drug Deliv. Rev. 2020;154–155:102–122. doi: 10.1016/j.addr.2020.07.002.
12. Sedighi M.J., Billingsley M.M., Haley R.M., Wechsler M.E., Peppas N.A., Langer R. Rapid optimization of liposome characteristics using a combined microfluidics and design-of-experiment approach. Drug Deliv. Transl. Res. 2019;9:404–413. doi: 10.1007/s13346-018-0587-4.
13. Balbino T.A., Aoki N.T., Gaperini A.A.M., Oliveira C.L.P., Azzoni A.R., Cavalcanti L.P., de la Torre L.G. Continuous flow production of cationic liposomes at high lipid concentration in microfluidic devices for gene delivery applications. Chem. Eng. J. 2013;226:423–433. doi: 10.1016/j.cej.2013.04.053.
14. López R.R., Ocampo I., Sánchez L.-M., Alazzam A., Bergeron K.-F., Camacho-León S., Mounier C., Stiharu I., Nerguizian V. Surface response based modeling of liposome characteristics in a periodic disturbance mixer. Micromachines. 2020;11:235. doi: 10.3390/mi11030235.
15. Bishop C.M. Pattern Recognition and Machine Learning. Springer; Berlin/Heidelberg, Germany: 2006. Pattern recognition; p. 128.
16. Agatonovic-Kustrin S., Beresford R. Basic concepts of artificial neural network (ANN) modeling and its application in pharmaceutical research. J. Pharm. Biomed. Anal. 2000;22:717–727. doi: 10.1016/S0731-7085(99)00272-1.
17. Almeida J.S. Predictive non-linear modeling of complex data by artificial neural networks. Curr. Opin. Biotechnol. 2002;13:72–76. doi: 10.1016/S0958-1669(02)00288-4.
18. Karazi S., Issa A., Brabazon D. Comparison of ANN and DoE for the prediction of laser-machined micro-channel dimensions. Opt. Lasers Eng. 2009;47:956–964. doi: 10.1016/j.optlaseng.2009.04.009.
19. Shabanzadeh P., Shameli K., Ismail F., Mohagheghtabar M. Application of artificial neural network (ann) for the prediction of size of silver nanoparticles prepared by green method. Dig. J. Nanomater. Biostruct. 2013;8:1133–1144.
20. Shabanzadeh P., Senu N., Shameli K., Ismail F., Zamanian A., Mohagheghtabar M. Prediction of silver nanoparticles’ diameter in montmorillonite/chitosan bionanocomposites by using artificial neural networks. Res. Chem. Intermed. 2015;41:3275–3287. doi: 10.1007/s11164-013-1431-6.
21. Mottaghi S., Nazari M., Fattahi S.M., Nazari M., Babamohammadi S. Droplet size prediction in a microfluidic flow focusing device using an adaptive network based fuzzy inference system. Biomed. Microdevices. 2020;22:61. doi: 10.1007/s10544-020-00513-4.
22. Damiati S.A., Rossi D., Joensson H.N., Damiati S. Artificial intelligence application for rapid fabrication of size-tunable PLGA microparticles in microfluidics. Sci. Rep. 2020;10:19517. doi: 10.1038/s41598-020-76477-5.
23. Lashkaripour A., Rodriguez C., Mehdipour N., Mardian R., McIntyre D., Ortiz L., Campbell J., Densmore D. Machine learning enables design automation of microfluidic flow-focusing droplet generation. Nat. Commun. 2021;12:25. doi: 10.1038/s41467-020-20284-z.
24. Rizkin B.A., Shkolnik A.S., Ferraro N.J., Hartman R.L. Combining automated microfluidic experimentation with machine learning for efficient polymerization design. Nat. Mach. Intell. 2020;2:200–209. doi: 10.1038/s42256-020-0166-5.
25. Neuron Model–MATLAB & Simulink. [(accessed on 10 June 2021)]. Available online: https://www.mathworks.com/help/deeplearning/ug/neuron-model.html#bss323q-3.
26. López R.R., de Rubinat P.G.F., Sánchez L.-M., Alazzam A., Stiharu I., Nerguizian V. Lipid fatty acid chain length influence over liposome physicochemical characteristics produced in a periodic disturbance mixer; Proceedings of the 2020 IEEE 20th International Conference on Nanotechnology (IEEE-NANO); Online. 28 July 2020; Piscataway, NJ, USA: IEEE; 2020. pp. 324–328.
27. Anna S.L., Bontoux N., Stone H.A. Formation of dispersions using “flow focusing” in microchannels. Appl. Phys. Lett. 2003;82:364–366. doi: 10.1063/1.1537519.
28. Lan W., Li S., Luo G. Numerical and experimental investigation of dripping and jetting flow in a coaxial micro-channel. Chem. Eng. Sci. 2015;134:76–85. doi: 10.1016/j.ces.2015.05.004.
29. Li J.-Y., Chow T.W., Yu Y.-L. The estimation theory and optimization algorithm for the number of hidden units in the higher-order feedforward neural network; Proceedings of the ICNN’95-International Conference on Neural Networks; Perth, Australia. 27 November–1 December 1995; Piscataway, NJ, USA: IEEE; 1995. pp. 1229–1233.
30. Angelini C. Regression Analysis. Elsevier; Amsterdam, The Netherlands: 2019.
31. Imbens G.W., Newey W.K., Ridder G. Mean-Square-Error Calculations for Average Treatment Effects. Harvard University; Cambridge, MA, USA: 2005.
32. He K., Meeden G. Selecting the number of bins in a histogram: A decision theoretic approach. J. Stat. Plan. Inference. 1997;61:49–59. doi: 10.1016/S0378-3758(96)00142-5.
33. Minitab Blog Editor. Regression Analysis: How Do I Interpret R-squared and Assess the Goodness-of-Fit? [(accessed on 10 June 2021)]. Available online: https://blog.minitab.com/en/adventures-in-statistics-2/regression-analysis-how-do-i-interpret-r-squared-and-assess-the-goodness-of-fit.
34. Kasuya E. On the Use of R and R Squared in Correlation and Regression. Ecol. Res. 2019;34:235–236. doi: 10.1111/1440-1703.1011.
