Abstract

Background

Virtual reality (VR) enables data visualization in an immersive and engaging manner, and it can be used for creating ways to explore scientific data. Here, we use VR for visualization of 3D histology data, creating a novel interface for digital pathology to aid cancer research.

Methods

Our contribution includes 3D modeling of a whole organ and embedded objects of interest, fusing the models with associated quantitative features and full resolution serial section patches, and implementing the virtual reality application. Our VR application is multi-scale in nature, covering two object levels representing different ranges of detail, namely organ level and sub-organ level. In addition, the application includes several data layers, including the measured histology image layer and multiple representations of quantitative features computed from the histology.

Results

In our interactive VR application, the user can set visualization properties, select different samples and features, and interact with various objects, which is not possible in the traditional 2D-image view used in digital pathology. In this work, we used whole mouse prostates (organ level) with prostate cancer tumors (sub-organ objects of interest) as example cases, and included quantitative histological features relevant for tumor biology in the VR model.

Conclusions

Our application enables a novel way for exploration of high-resolution, multidimensional data for biomedical research purposes, and can also be used in teaching and researcher training. Due to automated processing of the histology data, our application can be easily adopted to visualize other organs and pathologies from various origins.

Supplementary Information

The online version contains supplementary material available at 10.1186/s12885-021-08542-9.

Keywords: Virtual reality, Histology, 3D, Digital pathology, Visualization

Background

Histological assessments remain the cornerstone of pathological diagnostics and research. Currently, traditional microscopy is being increasingly substituted by digital pathology, in which tissue sections are imaged to digital high-resolution whole slide images (WSI). In addition to enabling detailed analysis of the histological samples, the WSIs enable digital analysis and quantification of histological features. Although still nontrivial, the digitalization also allows alignment of serial sections into a common coordinate space, resulting in histology data represented in 3D and thus replicating the three-dimensional nature of the original tissue [1–3]. Advanced visualization methods that reach beyond observing single images on a 2D screen are required to obtain full benefits from these three-dimensional datasets.

In addition to modeling the tissue based on histological sections only, also fusion models of histological serial sections and native 3D imaging modalities such as micro-computed tomography (μCT) [4–10] or magnetic resonance imaging (MRI) [11–13] are possible. Even manually created 3D models representing epithelium with textures acquired from histological samples have been made for teaching purposes [14]. However, applicability of 3D imaging modalities depends on the physical sample size and such measurement devices are not always available, while manual modeling from histology data is time-consuming and subjective. Further, unlike non destructive 3D imaging, modeling based on serial sections is possible also for already sectioned and processed samples. Here, archived clinical sample sets could be accessed to enable retrospective clinical studies holding the potential to advance histopathology and to open up new research areas.

One challenge in 3D histology visualization is the large volume of the data - the size of full resolution images is typically in the range of gigapixels. Reading multiple full resolution WSIs to a computer memory is time-consuming and makes data visualization slow due to long loading times. Modeling of 3D histology data creates a further challenge, not to mention adding feature representations on top.

Virtual reality (VR) can be used for realistic and immersive data visualization in various applications of medical imaging. However, to the best of our knowledge, a tool for modeling and visualization of sparse 3D histology data in VR has not yet emerged. Common 3D visualization tools in e.g. Python or MATLAB can be used to visualize the data and quantitative features, but they do not provide the possibility to fully interact with the data, and also do not allow detailed exploration. Commercial tools, such as Amira (Thermo-Fischer Scientific, MA, USA) and VGSTUDIO MAX (Volume Graphics GmbH, Heidelberg, Germany) for interactive exploration and analysis of volumetric data exist, enabling visualization in 3D. Trivial use of histological visualization requires user-friendly interfaces accessible to pathologists and biomedical researchers without computational knowledge. With well-thought design choices, VR can provide a comfortable and compelling experience for viewing and interacting with scientific data. It is emerging as a popular tool for training simulations in medicine [15, 16] and for surgical planning [17, 18]. In bioinformatics, virtual reality has been used for e.g. visualization of complex molecular structures [19–22] and tracing neurons [23]. 3D representations in VR can concretize complex structures in a manner that is not possible to achieve with a 2D screen - the same holds true for 3D printing [24], but VR enables more exible and detailed visualizations. The spatial connectivity of objects of interest is easy to grasp in VR. In addition, datapoints from e.g. quantitative features can be embedded to the 3D representation for visualization and exploration of additional information.

In this study, we use sparse stacks of histological sections that span through whole organ samples to create realistic 3D organ models in virtual reality. We combine the created whole organ models with models of internal sub-organ objects of interest, and embed quantitative feature visualizations to the models. In our virtual reality application, the user can interact with features to see image patches from full-resolution serial sections they are connected to, and further, study the objects of interest in corresponding sub-organ level with embedded serial sections.

Important aspects that have been taken into consideration are performance optimization for the VR application, automation in data processing and level con-struction, and comfortable user experience. In our prototype application, we use objects in two different scales, with mouse prostates as whole organs and prostate cancer tumors as objects of interest (sub-organ), associated with quantitative features studied for their relevance for the organ anatomy and tumor biology. With our use-case, we show how the organ level view in the VR application enables detection of cancer growth pattern differences, validated in the detailed histology level view, and explored for the associated quantitative details.

Although presented here within a specific case study using mouse prostatic tissue samples, out VR framework is designed to be generic and could be used for any tissue samples and for different staining and imaging modalities under certain assumptions. The current VR implementation makes the assumption that the serially sectioned tissue block has been successfully aligned into a 3D reconstruction and that there is high-resolution image data available for tile-level feature calculation and for illustrating the tile images within the VR model. Our application presents as a novel, generic interface for digital pathology in 3D. Here, we present a use case in cancer research, but the platform is aimed to be used for research, teaching and education purposes, and we anticipate that many use cases will emerge as the technology matures and 3D histology and volumetric imaging of tissue gain popularity.

Methods

Data and ethical permissions

Data [25] from mice heterozygous for tumor suppressor Pten Pten+/- [26] was used in the study. The prostates were collected at the age of 11 months, at which point intraglandular, noninvasive high grade PIN tumors have formed. The prostates, including ventral, dorsal and lateral lobes attached to and surrounding the urethra, were handled as a single block, fixed in PAXgene™ (PreAnalytiX GmbH, Hombrechtikon, Switzerland) and embedded in paraffin. Each prostate was cut into 5 μm thick serial sections, which were placed on glass slides and stained with hematoxylin and eosin (HE) [25]. All animal experimentation and care procedures were carried out in accordance with guidelines and regulations of the national Animal Experiment Board of Finland, and were approved by the board of laboratory animal work of the State Provincial Offices of South Finland (licence no ESAVI/6271/04.10.03/2011) [25].

Image acquisition

The HE-stained slides were scanned and stored in JPEG2000 format [27]. The pixel size of the acquired WSIs was 0.46 μm. Tumors were manually annotated with the freehand tool of ImageJ [28] into binary tumor masks. For the VR application, any other means for obtaining the ROI masks, including staining-guided or AI-based segmentation would be equally applicable. Approximately 300 sections were acquired from a single prostate, from which every tenth section was HE-stained. Thus, each image stack has approximately 30 sections scanned into WSIs with 50 μm distance between consecutive sections.

Image registration

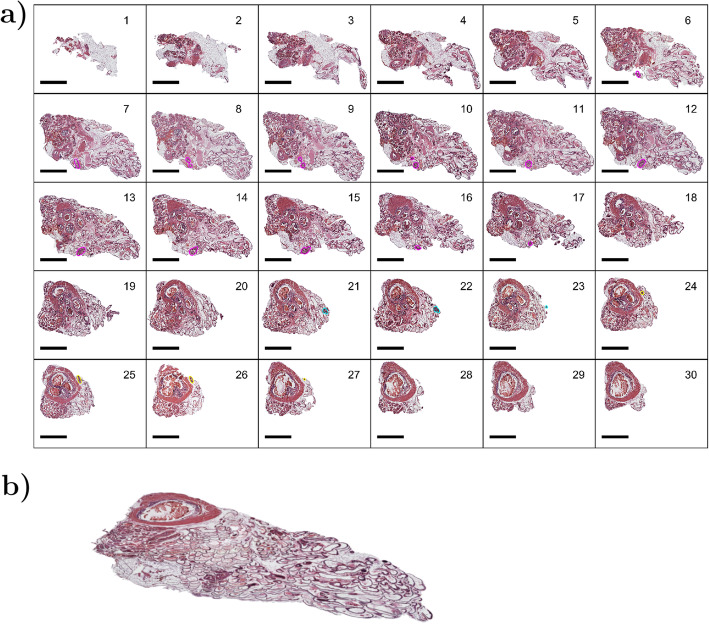

Histological sections have initially different relative orientations and locations in WSIs. Thus, an image registration step was performed to elastically co-register all images in a stack. We used a well performing algorithm from an earlier study and utilized the parameters found via Bayesian optimization for histological data [3]. An existing implementation from TrakEM2 package in Fiji/ImageJ was used for all registrations [29, 30]. The registration method searches corresponding locations in WSIs using normalized cross-correlation from adjacent sections and joins these together with virtual springs. Tissue’s physical properties are also modeled with triangular spring mesh. This spring system is then allowed to bend the tissue until an equilibrium is found, denoting completed registration [31]. The final registration is obtained from the deformed spring mesh and a piece-wise linear transformation is applied accordingly. Resulting transformations were applied to binary masks of both tissue region and tumor annotations. All registered histological sections of one prostate sample with tumor annotations are presented in Fig. 1a, and the whole 3D stack after registration visualized in Fig. 1b. In general, our VR implementation assumes input data sections to be aligned, thus any method for registration, even manual rotation when applicable, can be used in place of the method described here.

Fig. 1.

Every serial section of an example prostate illustrated, with tumors outlined in magenta, yellow and cyan (a) (scalebar = 1 mm). Image stack visualized in 3D with Blender (b)

Feature extraction

The quantitative hand-crafted features, originally created for use with machine learning algorithms and successfully applied in analysis of mouse prostatic lesions in 2D [32, 33], were computed with MATLAB R2017b [34]. The features include histograms of oriented gradients (HOG) [35], local binary patterns (LBP) [36], amount of and distance between nuclei, and intensity features, to name a few. Features were computed from small image patches, with an approximate size of 400 x 400 pixels for features in the prostate level, and 100 x 100 for those in the tumor level. It should be noted, however, that the VR framework is not limited to showing 2D features, and can be appended with 3D feature data in the future. Computing the features in 3D is here partly limited by the fact that only 1:10 of the sections have been H&E stained, thus the gaps limit z-resolution and hinder feature extraction in 3D. Averaging 2D layers in z direction enables partly taking into account the 3D structure, but this is more a means for smoothing the visual appearance in 3D.

Implementation

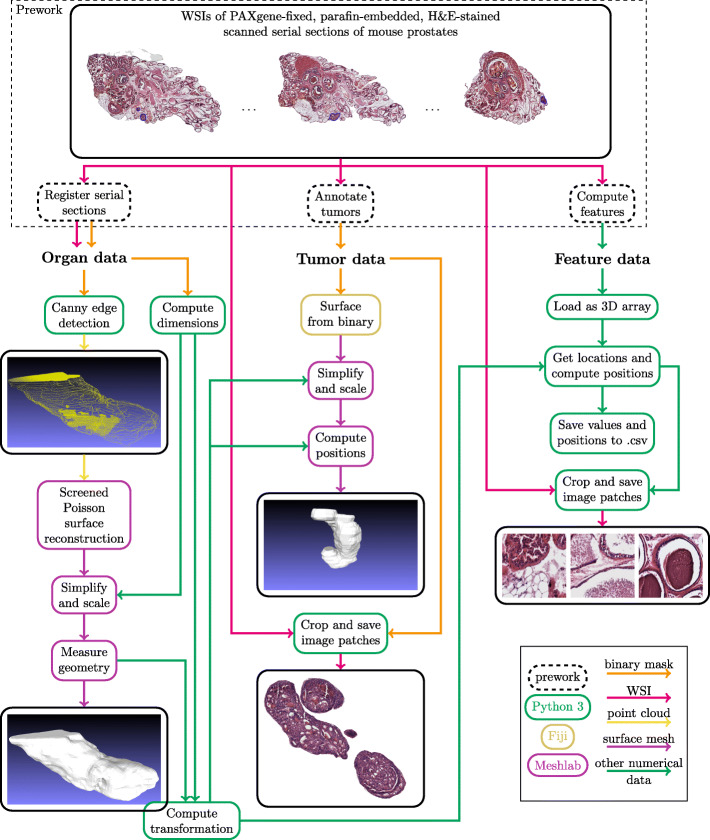

The implementation was automated as far as possible to enable visualization of new organ samples with minimal effort. Data processing was mainly done with Python. Open source software FiJi (ImageJ) [30] and Meshlab [37] were used for generating and processing the digital surface models (DSM). With data processing the original image stack data was transformed to formats that are fast and simple to load and process with a game engine. In addition, model and feature positioning was performed. Automated positioning of tumors and features in relation to the prostate is essential for combining the data in VR. The data processing steps are presented in Fig. 2. Unreal Engine 4 (version 4.21.2) [38] (UE4) was used for VR development. Implementation was done with Blueprint Visual Scripting system and C++ programming.

Fig. 2.

Data processing framework illustrated. The arrows represent the data types passed as input to functions. Colored squares represent programming language or program used for functions. Examples of real inputs and outputs are inside solid black squares

Modeling of organs

Organ DSMs were generated from binary masks with Screened Poisson surface reconstruction [39] from point clouds using Meshlab. To be precise, MeshlabXML scripting interface [40] for Meshlab was used in Python, and Meshlab macros were recorded when functions not implemented in MeshlabXML were needed. Parameters for pre-recorded macros were not changed at any point, since most of the data processing in Meshlab is done in Python with MeshlabXML and the macros only handle tasks that do not require data-specific parameters.

Point cloud acquisition was performed in the following manner. The full resolution binary masks were loaded and resized to 5% of original size to reduce the amount of data points. Distance between serial sections in relation to image size was computed from known data properties (0.46 μm pixel size and 50 μm section distance). Then, borders of each mask were extracted with Canny edge detection and saved as a point cloud with correct vertical positions. To get planar surfaces from top and bottom layers, all points from these masks were included.

For transforming the point cloud to a surface mesh, a Meshlab macro was recorded. First, the point cloud was loaded and point cloud simplification was performed. Then, normals for points were computed and smoothed. Finally, the point cloud was transformed into a mesh with Screened Poisson surface reconstruction and duplicate faces were removed. The macro was saved to be later called directly from Python. The reconstruction from point cloud results in smooth models with no trace of sparse, separate layers. While this method suits well for organ modeling, it fails with smaller objects like tumors. If object width is too small when compared to the distance between sections, the surface of the object is hard to define. Generated models might have ill-posed form and unwanted holes.

The final processing of the model was performed with MeshlabXML. First, the model was scaled to fixed height. Since all samples have the same amount of sections, their size in VR is comparable if their height is the same. Then, rotation was performed to get suitable alignment. Finally, mesh properties of the organ model were acquired with measure_geometry function and saved to a properties file to be used with tumor models and features.

Modeling of tumors

Compared to whole organs, tumor volumes are typically fairly small, making them poorly compatible with similar Screened Poisson surface reconstruction that was used for whole organs. Thus, a Fiji macro was recorded to create models from tumor masks as follows. First, the masks were transformed to binary masks and dilated twice. The voxel depth was set to 1.2 from image properties to approximate correct tumor height. This step, however, is not essential since correct size will be computed later. The surface model was created with Fiji’s 3D viewer plugin. This model was then saved as a binary STL file, as done previously in [24]. The data path was defined as an input parameter for the final macro file. Now, the Fiji macro was ready to be used from Python with syscommand argument.

The properties of the prostate mesh were used to correctly scale and align the tumor models created with Fiji. The desired size S0 and center position P0 were computed from the relation between original tumor and prostate masks, and the size and position of the final prostate model. Tumor mesh center P1 and size S1 were acquired with measure_geometry function of MeshlabXML. The model was scaled with factor S0/S1, and translated by P0 - P1.

Finally, patches of full-sized serial sections were cropped to acquire histological images representing the tumor. The patches were acquired based on the furthest edges of tumor masks, using the tumor masks as alpha channels. Equally sized patches are easier to align in the VR application, and alpha channel is used to hide locations where tumor is not present. Images were saved as RGBA PNG format.

Feature processing

The features used in this implementation were pre-computed with MATLAB as previously described [32, 33]. MATLAB feature arrays with location information were loaded to Python with scipy module’s loadmat function. Features were loaded for each section separately, after which a 3D array was created to cover the whole prostate.

All necessary feature information was saved to comma-separated values (CSV) files for fast loading to Unreal Engine. For each pre-computed feature a separate CSV file was created. The location in relation to the prostate model was computed from scale and location information of the model. The information for each instance of each feature includes position, feature value, normalized feature value, and tumor information telling which tumor, if any, the feature resides in.

Each pixel in the feature map was given an individual index. The index is used to match the feature instance to the image patch from which it was computed from. The full-sized sections were cropped and patches were saved with corresponding indices as PNG files for fast image loading in UE4.

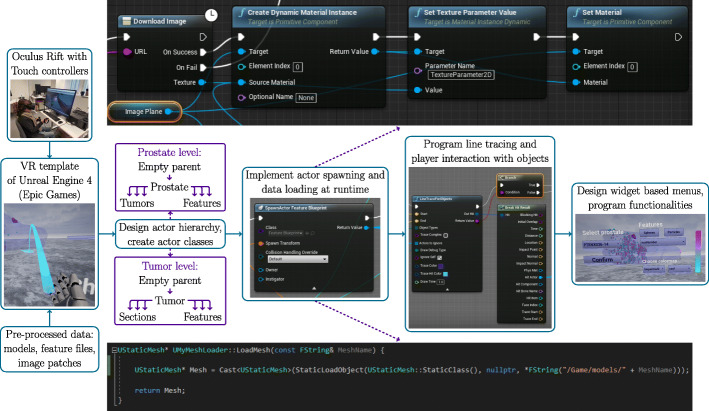

VR implementation for Oculus Rift with unreal engine 4

Most of the VR programming was performed with Blueprint Visual Scripting system in UE4. In addition, some necessary utilities were programmed with C++ and used as Blueprint nodes. VR implementation process is presented in Fig. 3.

Fig. 3.

VR implementation steps illustrated. The example on top shows how images (cropped serial sections) are loaded with Blueprint nodes. The example in bottom is the C++ function used for loading meshes

Templates for character motion and controller mapping (MotionControllerPawn & BP_MotionController) were used as a starting point for VR implementation. These templates include teleportation movement, hand meshes and grabbing functions. Two levels were created, one for the whole organ and one for the detailed view of an object of interest. At this point, only basic level components like floor, walls, light, menu and player were added. All data, including models and features, are loaded dynamically during runtime. Organ and tumor models were imported to Project Content file. After initial import, all models can easily be reimported if changes are made to models. Feature files and images were saved in an external folder from which they are loaded when needed.

All data objects inherit the base Actor class of UE4. Intuitive Actor hierarchy was designed so that there is an empty parent for the main object in the level, which then parents Actors that lay inside the main object. The empty parent is spawned at the center of the main object based on mesh boundaries. All in-game positioning, scaling and rotation operations are applied to the empty parent, which all children in the hierarchy inherit while keeping their relative positions. Actor-based Blueprint classes were created for each object type (prostate, tumor, feature). In addition, a game instance class was created for variables that need to be accessed from both levels. These variables, including prostate sample ID, tumor index and feature name, can be changed from an interactive widget menu in both levels.

Prostate and tumor Actors are spawned at level start. Since feature type and threshold-based visibility can be changed by the user, some optimization strategies were designed for the feature Actor class. Each feature has an ID corresponding to image patches, and this ID is the same for each different feature type at the same location. When a level (or a new prostate) is loaded for the first time, a map structure with feature ID as a key and reference to spawned feature Actor as a value is created. When the feature type is changed, we can simply query the map for the corresponding feature ID and change necessary properties (feature value, normalized value) of the feature Actor. When the threshold value is changed, we need to change visibility, collision properties, and color of the feature. However, changing these values for hundreds or thousands of features each time the slider moves makes the application lag. To create a smooth slider change where the user can see the effect in real-time, only the visibility of the feature is changed when the slider moves. Only when slider grab ends, collision properties and color of the feature are changed. For features that have value below threshold, collision and visibility are disabled. Colormap range is changed to match the range of visible features, threshold being the minimum value represented by the colormap. Thus, full colormap is always in use, otherwise it would be difficult to separate smaller sets of features by color.

Interaction with objects is based on line tracing, where a direct line is drawn from hand mesh. If the line collides with an object, actions are performed. A laser beam from the hand is drawn for ease of use, otherwise it would be difficult to target e.g. a specific feature sphere. Actors to ignore -property of the line trace Blueprint node is used for excluding specific objects from line trace. For example, the right hand can grab spheres inside the prostate and tumors, and the left hand can only select tumors to switch to the tumor level. In the organ level, the user can grab features and the corresponding image patch is visualized. The image is loaded from the external folder and applied as a texture to the image plane Actor that is parented by the feature. When grabbing ends, the Actor with the image plane is destroyed to prevent memory problems resulting from too many textures.

Since this project concentrates on the visualization of scientific data, the colors play an important role. Thus, colormaps for feature visualization were provided and a colorbar was added to the menu. The user can select the preferred colormap from a widget-based dropdown menu. The colormaps were acquired from Python and a CSV file was loaded to Unreal Engine. The feature threshold can also be changed from the menu, and colors are scaled accordingly so that the full colormap is always in use. To achieve clear view on features and tumors inside the prostate, a glass-like material was used for prostate models in main level. Slightly transparent, colored material was used for tumors in main level, with different color for each tumor for better separation. The glass-like material was applied to tumors in detailed sub-organ level to make serial sections clearly visible.

User interface and controls in VR

Widget-based user interface was created to both levels for feature and sample selection and visualization options. In the main level, the user can select the prostate sample from the menu and adjust sample rotation and scale. These selections are applied to the prostate model and all objects (features and tumors) inside the model. In addition, UI controls include several feature visualization options. The user can select which feature to visualize and what is the preferred visualization method - interactive spheres or particle system. Feature visualization threshold and colormap can be adjusted from the UI menu, and the applied colorbar is shown in the menu.

In the detailed sub-organ level the feature selection and visualization options are the same as in the main level. In addition, the user can adjust the scale of the tumor, and show or hide all serial sections (ROIs cropped from WSIs) inside the tumor. The user can return to the main level by selecting the corresponding menu button.

The movement in both levels in VR is performed with either teleportation or left stick movement. Teleportation should be used when moving longer distances to prevent VR sickness, but moving with stick is convenient when moving short distances inside the model. Teleportation is activated by pressing and holding second button of either controller, and releasing when teleportation spot is at desired location. In addition, the user can rotate with right stick. The user can also navigate to menu location by pressing button two on right controller. Screenshots are captured and saved by pressing right hand trigger.

In the main level, a feature sphere can be grabbed by pressing and holding right index trigger when pointing at the sphere. This opens a view with the corresponding image patch, which can be zoomed by simultaneously pressing and holding left hand trigger and moving controllers in opposite directions. When right index trigger is released, the feature sphere is returned to the original position. The user can transport to detailed sub-organ level by pointing at tumor of interest and pressing left index trigger, and re-spawn at the same position when returning from sub-organ level.

In the detailed sub-organ level, the tumor can be rotated by pressing right trigger and holding it while rotating the right controller. The vertical position of the tumor can be adjusted by holding left hand trigger and moving the controller. The serial sections inside the tumor can be hidden by pointing at the section with left controller and pressing the index trigger. Hidden sections can be made visible similarly.

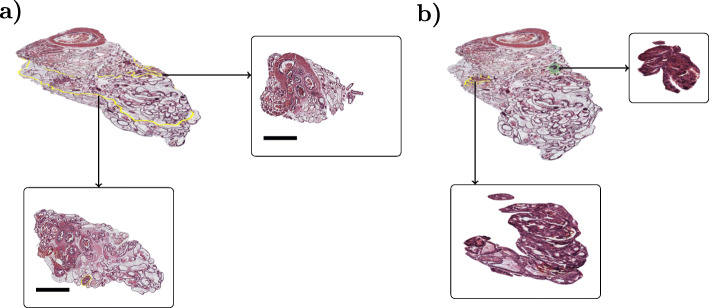

Results

To visualize a whole organ from serial sections, we used sparsely spaced histological WSIs representing whole tissues. Histological image stacks, rendered with Blender [41], are visualized in Fig. 4. In Fig. 4a example sections from the stack, outlined in yellow, are presented. Manually annotated tumors are presented in Fig. 4b, outlined in the image stack and rendered individually.

Fig. 4.

Image stack composed of histological sections from a mouse prostate visualized in Blender. (a) Two example sections are shown in detailed view in the two boxes and highlighted in yellow within the image stack (scale bar = 1 mm). (b) Manually annotated tumour locations (marked in yellow and green within the image stack/3D rendering of the very same prostate shown in (a) with two tumour image stacks visualized in the corresponding boxes

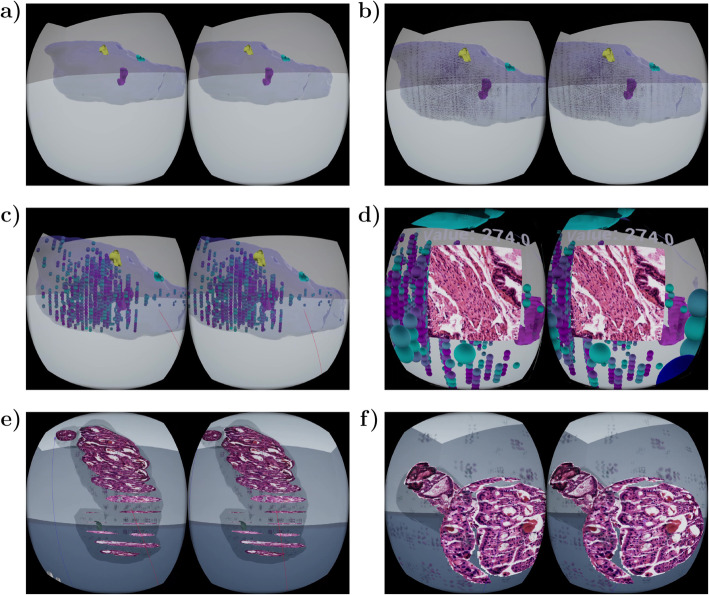

The application prototype consists of two different levels. At the organ level, the user can view the whole organ with its structures (Fig. 5a), pathologies, and even the associated quantitative features (Fig. 5b-c), as well as examine the features interactively (Fig. 5d). In our use-case, this is the level where the prostate with tumors and the computationally calculated features can be viewed and interacted with. At the sub-organ level, the user can interact with single areas of interest (in the example case the tumors; Fig. 5e) and view the serial sections and features computed from these interest locations (Fig. 5f). Application properties and navigation in both levels is demonstrated in Supplementary Video S11. The video presents the left and right eye field of view (FOV) unedited and as realistically as possible, showing almost similar information on both sides, which on the video, unlike when viewed with VR glasses, looks to be duplicated information.

Fig. 5.

VR application view examples were captured during gameplay. Prostate and tumor models are visualized in organ level view (a). Features can be presented as particle systems illustrating feature values spatially (b) or as grabbable spheres for interactive exploration (c). In organ level view, the user can interact with tumors (left hand) and features (right hand). When a feature sphere is grabbed, the corresponding image patch is visualized (d). A tumor with full-resolution HE stained serial sections and with associated quantitative features is visualized in tumor view (e). The tumor can be rotated and serial sections made invisible when studying the tumor in detail (f). In all figures, the virtual reality view is represented unedited as it appears in right and left eye FOVs

Patches of full resolution serial sections are visualized in both levels. Since loading of entire full resolution serial sections is time-consuming, the sections are cropped to smaller patches representing objects of interest to enable image loading without delay. To create a comfortable user experience, controllers are fully utilized for easy navigation and data exploration. User friendly menus are added to levels for full control on selecting the data and visualization method. In addition, ambient music was created for both levels to make the application even more immersive. New samples can be added to the application without manual effort due to automated data processing and dynamic loading of data to levels.

Organ level view: virtualization of a whole prostate model

In the main level of the VR application, prostate outline is visualized together with tumors (Fig. 5a) and quantitative features (Fig. 5b-c), as shown in Supplementary Video S1. The interactive spatial visualization of the whole organ allows intuitive examination of the tumor locations within the organ as well as tumor shapes and growth patterns, in a manner not possible through conventional 2D approaches. Furthermore, through visualization of different quantitative feature values spatially, it is possible to examine which features are connected to and how to the areas of interest (in this case the tumors and their growth patterns) and other parts of the organ. Here, the tumors are rendered as transparent for clear visibility of the structures and features inside the tumor. The features can be selected from a drop-down menu and a slider is used to define the threshold, which, in order to effectively handle outlier values, is defined as percentiles instead of absolute feature values. Only the features belonging to percentile with highest values are visible. Feature visibility changes instantly when slider value is adjusted, making it easy to find a suitable threshold. With easy feature selection and instant thresholding, this level helps in glancing data volumes in exploratory manner and to define visually which features can be used to describe specific properties of the tumors or the prostate. Especially for an expert biologist, the image patches corresponding to specific feature spheres can provide insight to which features are connected to specific tissue structures or histological appearances.

There are two different modes for feature visualization: visualizing all features simultaneously as particle systems (Fig. 5b), and visualizing features whose values are above or below a given threshold as spheres (Fig. 5c). The color of a sphere and particles represents the value of the feature based on a user-selected colormap. The colormap covers the range of values of visible features, thus, regardless of feature threshold, the visible feature with lowest value is assigned a color from the beginning of the colormap, and visible feature with highest value gets color from the end of the colormap. Since changing the color of an object requires more computations than changing just visibility, during threshold slider change the colors of individual spheres remain the same and are changed when slider is released. With the particle system, all features can be visualized at once, since small particles are light to render and do not block the view as solid objects would. It is also possible to plot all spheres at once, however, with large volumes this may cause lag and make the level too crowded for interaction with spheres, possibly decreasing quality of user experience. To examine a feature in a given area, the user can grab a feature sphere to expose the feature value and visualize an image showing the patch from which a given feature was computed from as a cropped area from full resolution histological sections. The user can also zoom the image with two-handed gestures, similarly to two-finger zoom on touch displays. To make particle systems more informative, the feature value affects particle’s spawn rate and lifetime. When a feature’s value is high, particles spawn more frequently and stay alive longer, making these areas more occupied by particles. Thus, areas with higher feature values can be distinguished even from a distance, as demonstrated in Fig. 5b and Supplementary Video S1.

Detailed view on sub-organ region of interest: multiscale virtual tumor view

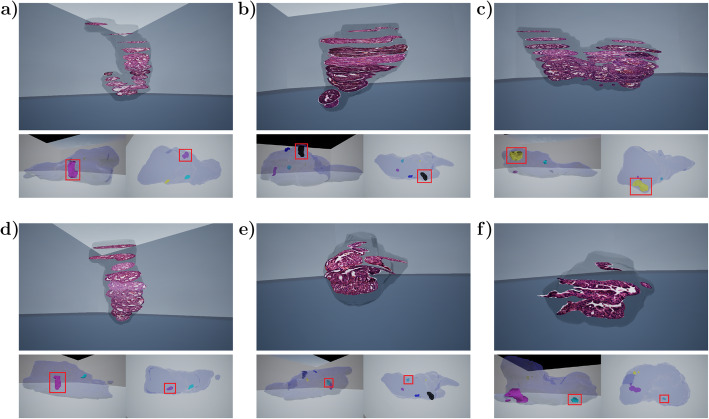

In the main (organ) level, the user can select an object of interest which is visualized and can be examined in more detail at a specific sub-organ level. In this level, the user can study how the tumor appears in the histological sections, and which features are associated to that tumor or its parts (Fig. 6). Inside the tumor, each histological section of the tumor is visualized at their correct 3D spatial location. This allows, for example, exploration of the tumor histology according to the spatial location within the tumor, aiding in evaluation of tumor heterogeneity and research on associated tumor growth patterns.

Fig. 6.

Tumors explored in detail in virtual tumor view. Examples of six tumors are shown, with the corresponding histological sections visible (upper figures). Tumor locations in the prostate are presented below each tumor figure in two orientations, highlighted with red boxes

The user can adjust the rotation and vertical position of the tumor directly by holding designated buttons and rotating or moving the controller. This design enables easy adjustment of the tumor model to get the best, eye-level view of the histological sections inside. Histological sections can then be inspected in detail. To enable browsing sections back and forth, a section can be made invisible such that the consecutive section is in full view, enabling studying all the sections in a tumor.

The histological images were acquired from full resolution sections, and with a high-resolution VR headset they can be studied in high detail. The tumor scale can also be changed, which simultaneously changes the scale of serial sections. Similarly to the organ level, features can be plotted inside the tumor as particles and as transparent spheres. If the user selects to view the features, the feature spheres are rendered transparent to enable visibility of the histological sections underneath. This allows easy visualization and examination of which features are associated with certain histologies.

Volumetric visualization and tumor exploration in prostate cancer: tumor growth pattern differences associated to spatial features beyond histology

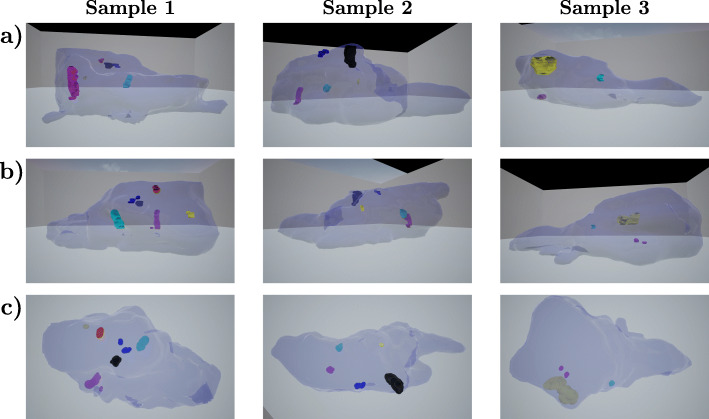

The usability and utilization of our VR model was tested with prostate tissues to study the spatial organization of the tissue as well as tumors of prostate cancer. Prostates of mice genetically heterogeneous with Pten tumor suppressor Pten+/-; [26] form intraglandular, noninvasive high grade prostatic intraepithelial neoplasia (PIN) tumors which were here studied at the age of 11 months. To evaluate heterogeneity in morphology of the tissue and location of the tumors within and between different prostates, six prostate samples were compared by visualizing a 3D model of the whole organs. In Fig. 7, three of these visualizations are presented as still images from three different angles each. The VR application made exploration of the tissue shape and locations of areas of interest simple and intuitive.

Fig. 7.

Three prostate samples with tumors visualized from different angles. Top (a) and center (b) images are from both lateral sides of the prostates, bottom (c) images were captured from above, corresponding to the urethral side of the prostate. Height of each sample is approximately 1.5 mm. Tumors have different colors for better separation

Each prostate had several tumors, and their amount, location and size were found to vary greatly between the samples - a variation which is not evident from 2D histology especially when routinely only a few sections per mouse prostate are examined in such cases. These tumors are currently also challenging to be visualized by many imaging modalities such as PET, making this approach appealing in visualizing them as 3-dimensional structures with high resolution. Most tumors in these samples localize to the lateral lobes of the prostate, which is previously known based on 2D histology and also evident here in relation to the anatomical shape and orientation of the tissue. Each prostate sample had one tumor that was clearly larger than the rest. Tumors were found to often localize near the outer borders of the prostate. Especially, the largest tumors were noted to reach to the outer areas of the tissue. Since mouse prostate is an unencapsulated organ with edges free to expand to the abdominal cavity, the localization effect is likely due to growth pressure in the tissue being smaller towards the edges, thus giving a growth advantage.

The specific sub-organ view was used to study each tumor in more detail. This mode allows easy comparison between tumors to examine tumor heterogeneity and the associated features. In Fig. 6, six tumors with various shapes and sizes are presented as examples, showing views of the full-resolution histological sections from the tumor area together with their locations in the prostates. The histological sections visible inside the tumor at the correct locations, and the ability to select any given section for individual examination (see Fig. 2f), enable detailed histological analysis of intratumoral appearance and heterogeneity. For example, areas of increased cellular density towards a tumor edge likely represent growth fronts, while the cellularly sparse and secretion-containing areas are more benign. From these visualizations it is easy to note how the tumors grow along the prostate glandular structures with occasional elongated and even bended shapes.

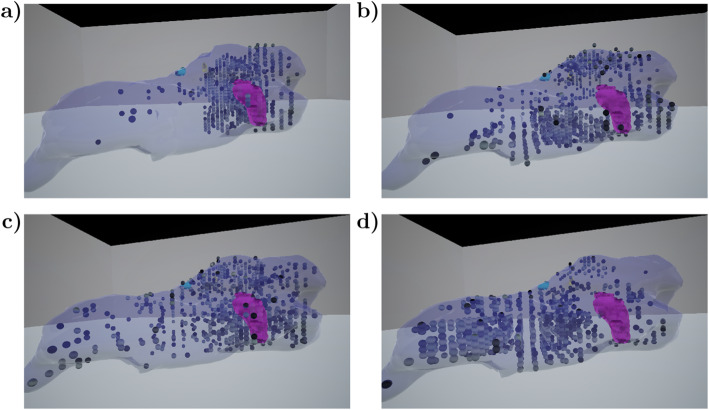

The prostate samples used here have previously been used to develop quantitative histological analysis to computationally study descriptive features and spatial heterogeneity in 2D histological sections [33]. Here, we computed quantitative histological features and included in the VR to explore them in the 3D context. Visualiza-tion of the small image patches to explore the histological appearance that resulted in the quantitated values, enabled exploration of tissue heterogeneity both at the whole tissue and tumor levels. In Fig. 8, still images for four features visualized in a prostate are illustrated as examples. By setting the threshold relatively high, the locations with the most intense feature values can be highlighted. While certain features have strongest signals (i.e., largest values) at specific anatomical parts of the prostate, some are spread evenly throughout the prostate. While only a fraction of the computed features is intuitive in association of 2D histology, visualizing the features in VR enables better understanding of the heterogeneity of anatomical feature distributions and allows discovery of features linked to 3D anatomical properties of the tissue. As an example of an expected result, the amount of nuclei is highest anatomically near the urethra running through the prostate (visible as a distinct red circle on top of the image stacks in Fig. 4), representing a high density of cells in the urethral muscle layer, intraurethral glands and ducts, as compared to the sparse prostate glandular structures distal to the urethra (Fig. 8a). The exploration reveals several anatomically concentrated features not intuitively ex-plained, such as energy (sum of squared elements) in the grayscale co-occurrence matrix (Fig. 8b). Certain non-intuitive features, like features representing local texture, can clearly be observed to concentrate on certain anatomical locations and tissue types areas, such as histogram of oriented gradients features in Fig. 8c and local binary patterns (Fig. 8d, [36]). While additional research is required to understand the potential biological relevance of many of these observations, the ability of these features to distinguish tissue areas and types is likely to become useful in future quantitative analysis of tissue alterations.

Fig. 8.

Quantitative features presented in the 3D prostate volume demonstrate variation of signal intensities between features and in different anatomical parts of the prostate. Approximately 20% of highest ranking features were plotted with bone colormap, where lighter colors represent higher values. Amount of nuclei is highest near urethra, representing high density of cells in ducts and urethral muscle layer compared to sparse prostate glandular structures distal to the urethra (a). Energy (sum of squared elements) in grayscale co-occurrence matrix is strongest at the top and bottom of the prostate (b). Histogram of oriented gradients features with 9 pixel window are spread around the prostate (c). Local binary patterns tend to have strong signals around lateral and ventral prostate (d)

Discussion

We used mouse prostates with prostate cancer tumors as a use-case for VR modeling and visualization. The current case study is performed with prostate cancer material previously assessed by traditional 2D histopathology [25] and by computational 2D analysis [33]. In this work, the data was visualized and explored by using virtual reality, and investigated by a domain expert in comparison to the 2D studies. The VR application was found particularly useful in associating specific 2D histological features and areas of interest to specific 3D tissue environments, as well as in understanding tumor shapes and localization patterns in an organ.

With the VR visualization, the tumors could be explored in ways that are not possible through standard 2D visualization of histological section - not even when several sections are utilized. The ability to observe the tissue and the tumors from multiple angles clearly enhances the information gained of how tumors are dis-tributed in the tissue, their growth patterns and their heterogeneity. Considering that these tumors are too small to currently visualize by live imaging techniques, the observations enabled by the VR 3D visualization are previously unencountered and underline the usefulness of VR as a tissue exploration tool.

Biologically, it is interesting that each prostate sample had one tumor that was clearly larger than the rest. The tumors in this Pten+/- prostate cancer model are multifocal. Thus, observation of a "major tumor" within each prostate does not reflect a primary tumor from which the others have metastasized, but rather must represent a tumor that is either initiated early or has a stronger growth potential than the others. While these tumors were localized close to the edges of the tissue, they may have gained a spatial growth advantage due to growth pressure in the tissue being smaller where it is easier to expand towards the abdominal cavity. The observation, however, raises questions as to whether the largest, growth-advantaged tumor has a signaling capacity to keep the others’ growth slower in a similar fashion as has been indicated for primary tumors affecting the dormancy of its metastatic cells [42].

Traditionally, quantitative features have been used in machine learning algorithms, and many methods enable defining which features have highest influence on classification results. We have previously used this prostate tumor material in a study where we used feature-based machine learning for analyzing spatial heterogeneity and genetic alterations of neoplastic alterations in these prostate tumors in a 2D setting [33]. Now, with our 3D VR application, we were able to easily explore firstly the spatial distribution of the important computational features to associate them to anatomical locations and, secondly, through visualization of the corresponding histology, study what properties they capture.

In this study, the models were created based on sparse histological serial sections. Long distances between consecutive sections make 3D modeling challenging and results in models with visible discrete steps between sections. In addition to modeling prostates with tumors, we could create models of other sub-organ structures of the prostate, like glands and urethra. This would provide a more informative, comprehensive model of the whole organ and would be excellent especially for teaching purposes. The inner structures could be studied in sub-organ level, with embedded serial sections. With sections 50 μm apart, a single structure has discontinuities between consecutive sections, making this step computationally challenging and e.g. region growing algorithms inapplicable for the task. Yet, this is a natural next step of the VR prostate project.

Conclusions

In this study, we created a VR application for visualizing tissue histology in 3D. In addition to studying the gross anatomy of tissue and its regions of interest, our application can be used to study detailed histology of the samples. Furthermore, quantitative features computed from the serial sections were visualized. Our application is a novel tool for both research and teaching, with a fully immersive experience of virtual walking inside an organ and interacting with the regions of interest and quantitative features to explore the tissue properties. The virtual reality environment separates our work from the existing software for multi-modal 3D visualization.

The application can also be extended to cover more detailed, e.g. single-cell level exploration of the data, as well as other types of data besides the structure models and features. For example, we could present immunohistochemistry results in the application, embed individual nuclei to the models, or visualize spatial sequencing data of genomic or gene expression results acquired from the serial sections.

In this research, whole murine prostates were used as samples. However, other organs from other subjects, even human, could easily be visualized with the same methodology. The only requirement is that serial sections can be acquired and imaged from the sample. With automated processing of the data, our method is easy to adopt for visualization of histological data from other sources.

Supplementary Information

Additional file 1: Supplementary Video 1. Properties of the virtual reality application, including navigation in both organ and tumor level, and various functionalities and features are demonstrated in Supplementary Video S1. Supplement available at: https://github.com/BioimageInformaticsTampere/The-Virtual-Prostate.

Acknowledgements

We thank Hannu Hakkola for composing ambient music for the VR application.

Abbreviations

- 2D/3D

Two/three dimensional

- μCT

Micro-computed tomography

- μm

Micrometer

- AI

Artificial intelligence

- CSV

Comma-separated values

- DSM

Digital surface models

- HE

Hematoxylin and eosin

- HOG

Histograms of oriented gradients

- LBP

Local binary patterns

- MRI

Magnetic resonance imaging

- PET

Positron emission tomography

- PIN

Prostatic intraepithelial neoplasia

- PNG

Portable network graphics file format

- ROI

Region of interest

- STL

Stereolithography language file format

- UE4

Unreal Engine 4

- VR

Virtual reality

- WSI

Whole slide image

Authors’ contributions

KL designed and implemented the application. LL provided data annotations, histological knowledge, end-user testing and biological interpretation. MV and KK designed the pre-processing methods for segmentation and registration of images, and helped implement the feature extraction methods. PR conceived and supervised the study, and implemented the feature extraction methods. KL, LL and PR wrote the manuscript. All authors reviewed and accepted the manuscript. The authors read and approved the final manuscript.

Funding

We are grateful for Academy of Finland (P.R., grants #313921 and #314558), and Academy of Finland Center of Excellence programme (project no. 312043) for funding the development of computational analysis pipeline and virtual modeling. PR & LL gratefully acknowledge funding support from ERAPerMed programme (ABCAP consortium 2020–2022) and Cancer Foundation Finland. L.L. gratefully acknowledges funding from Academy of Finland (#317871 and #334774) and the Finnish Cancer Institute for prostate cancer related research. Funders participated in personnel costs during the research. Funders did not participate in the study design, implementation, or preparation of the manuscript.

Availability of data and materials

Video with visual representation of data used in this study is available at the supplementary site: https://github.com/BioimageInformaticsTampere/The-Virtual-Prostate

Declarations

Ethics approval and consent to participate

All animal experimentation and care procedures were carried out in accordance with guidelines and regulations of the national Animal Experiment Board of Finland, and were approved by the board of laboratory animal work of the State Provincial Offices of South Finland (licence no ESAVI/6271/04.10.03/2011) [25].

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Footnotes

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1.Roberts N, Magee D, Song Y, Brabazon K, Shires M, Crellin D, Orsi NM, Quirke R, Quirke P, Treanor D. Toward routine use of 3d histopathology as a research tool. Am J Pathol. 2012;180(5):1835–1842. doi: 10.1016/j.ajpath.2012.01.033. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Magee D, Song Y, Gilbert S, Roberts N, Wijayathunga N, Wilcox R, et al. Histopathology in 3d: From three-dimensional reconstruction to multi-stain and multi-modal analysis. J Pathol Inform. 2015;6. [DOI] [PMC free article] [PubMed]

- 3.Kartasalo K, Latonen L, Vihinen J, Visakorpi T, Nykter M, Ruusuvuori P. Comparative analysis of tissue reconstruction algorithms for 3d histology. Bioinformatics. 2018;34(17):3013–3021. doi: 10.1093/bioinformatics/bty210. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Silva JM, Zanette I, Noel PB, Cardoso MB, Kimm MA, Pfeier F. Three-dimensional non-destructive soft-tissue visualization with x-ray staining micro-tomography. Sci Rep. 2015;5(1):1–7. doi: 10.1038/srep14088. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Dullin C, Ufartes R, Larsson E, Martin S, Lazzarini M, Tromba G, Missbach-Guentner J, Pinkert-Leetsch D, Katschinski DM, Alves F. ct of ex-vivo stained mouse hearts and embryos enables a precise match between 3d virtual histology, classical histology and immunochemistry. PloS one. 2017;12(2):0170597. doi: 10.1371/journal.pone.0170597. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Topperwien M, van der Meer F, Stadelmann C, Salditt T. Three-dimensional virtual histology of human cerebellum by x-ray phase-contrast tomography. Proc Natl Acad Sci. 2018;115(27):6940–6945. doi: 10.1073/pnas.1801678115. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Busse M, Muller M, Kimm MA, Ferstl S, Allner S, Achterhold K, Herzen J, Pfeier F. Three-dimensional virtual histology enabled through cytoplasm-specific x-ray stain for microscopic and nanoscopic computed tomography. Proc Nat Acad Sci. 2018;115(10):2293–2298. doi: 10.1073/pnas.1720862115. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Handschuh S, Beisser C, Ruthensteiner B, Metscher B. Microscopic dual-energy ct (microdect): a exible tool for multichannel ex vivo 3d imaging of biological specimens. J Microsc. 2017;267(1):3–26. doi: 10.1111/jmi.12543. [DOI] [PubMed] [Google Scholar]

- 9.Topperwien M, Markus A, Alves F, Salditt T. Contrast enhancement for visualizing neuronal cytoarchitecture by propagation-based x-ray phase-contrast tomography. NeuroImage. 2019;199:70–80. doi: 10.1016/j.neuroimage.2019.05.043. [DOI] [PubMed] [Google Scholar]

- 10.Ferstl S, Busse M, Muller M, Kimm MA, Drecoll E, Burkner T, Allner S, Dierolf M, Pfeier D, Rummeny EJ, et al. Revealing the microscopic structure of human renal cell carcinoma in three dimensions. IEEE Transact Med Imaging. 2019;39(5):1494–1500. doi: 10.1109/TMI.2019.2952028. [DOI] [PubMed] [Google Scholar]

- 11.Gibson E, Gaed M, Gomez JA, Moussa M, Pautler S, Chin JL, et al. 3d prostate histology image reconstruction: quantifying the impact of tissue deformation and histology section location. J Pathol Inform. 2013;4. [DOI] [PMC free article] [PubMed]

- 12.Schmidt KF, Ziu M, Schmidt NO, Vaghasia P, Cargioli TG, Doshi S, Albert MS, Black PM, Carroll RS, Sun Y. Volume reconstruction techniques improve the correlation between histological and in vivo tumor volume measurements in mouse models of human gliomas. J Neuro-Oncol. 2004;68(3):207–215. doi: 10.1023/B:NEON.0000033364.43142.bf. [DOI] [PubMed] [Google Scholar]

- 13.Bart S, Mozer P, Hemar P, Lenaour G, Comperat E, Renard-Penna R, Chartier-Kastler E, Troccaz J. Proceedings of Surgetica. Montpellier: Sauramps Medical; 2005. Mri-histology registration in prostate cancer; pp. 361–367. [Google Scholar]

- 14.Sieben A, Oparka R, Erolin C. Histology in 3d: development of an online interactive student resource on epithelium. J Visual Commun Med. 2017;40(2):58–65. doi: 10.1080/17453054.2017.1332480. [DOI] [PubMed] [Google Scholar]

- 15.McGuire LS, Alaraj A. Comprehensive Healthcare Simulation: Neurosurgery. Cham: Springer; 2018. Competency assessment in virtual reality-based simulation in neurosurgical training; pp. 153–157. [Google Scholar]

- 16.Liebig T, Holtmannspotter M, Crossley R, Lindkvist J, Henn P, Lonn L, Gallagher AG. Metric-based virtual reality simulation: a paradigm shift in training for mechanical thrombectomy in acute stroke. Stroke. 2018;49(7):239–242. doi: 10.1161/STROKEAHA.118.021089. [DOI] [PubMed] [Google Scholar]

- 17.Shirk JD, Thiel DD, Wallen EM, Linehan JM, White WM, Badani KK, et al. Effect of 3-dimensional virtual reality models for surgical planning of robotic-assisted partial nephrectomy on surgical outcomes: a randomized clinical trial. JAMA Netw Open. 2019;2(9):1911598. [DOI] [PMC free article] [PubMed]

- 18.Frajhof L, Borges J, Homann E, Lopes J, Haddad R. Virtual reality, mixed reality and augmented reality in surgical planning for video or robotically assisted thoracoscopic anatomic resections for treatment of lung cancer. J Visual Surg. 2018;4(7):143. doi: 10.21037/jovs.2018.06.02. [DOI] [Google Scholar]

- 19.Wiebrands M, Malajczuk CJ, Woods AJ, Rohl AL, Mancera RL. Molecular dynamics visualization (mdv): stereoscopic 3d display of biomolecular structure and interactions using the unity game engine. J Integr Bioinform. 2018;15(2). [DOI] [PMC free article] [PubMed]

- 20.Chavent M, Vanel A, Tek A, Levy B, Robert S, Ran B, et al. Gpu-accelerated atom and dynamic bond visualization using hyperballs: A unified algorithm for balls, sticks, and hyperboloids. J Comput Chem. 2011;32(13):2924–35. [DOI] [PubMed]

- 21.Trellet M, Ferey N, Flotynski J, Baaden M, Bourdot P. Semantics for an integrative and immersive pipeline combining visualization and analysis of molecular data. J Integr Bioinform. 2018;15(2). [DOI] [PMC free article] [PubMed]

- 22.Biere N, Ghaar M, Doebbe A, Jager D, Rothe N, Friedrich BM, et al. Heuristic modeling and 3d stereoscopic visualization of a Chlamydomonas reinhardtii cell. J Integr Bioinform. 2018;15(2). [DOI] [PMC free article] [PubMed]

- 23.Usher W, Klacansky P, Federer F, Bremer P-T, Knoll A, Yarch J, Angelucci A, Pascucci V. A virtual reality visualization tool for neuron tracing. IEEE Trans Vis Comput Graph. 2017;24(1):994–1003. doi: 10.1109/TVCG.2017.2744079. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Liimatainen K, Latonen L, Kartasalo K, Ruusuvuori P. 2019 41st annual international conference of the IEEE engineering in medicine and biology society (EMBC) IEEE; 2019. 3d-printed whole prostate models with tumor hotspots using dual-extruder printer; pp. 2867–2871. [DOI] [PubMed] [Google Scholar]

- 25.Latonen L, Scaravilli M, Gillen A, Hartikainen S, Zhang F-P, Ruusuvuori P, Kujala P, Poutanen M, Visakorpi T. In vivo expression of mir-32 induces proliferation in prostate epithelium. Am J Pathol. 2017;187(11):2546–2557. doi: 10.1016/j.ajpath.2017.07.012. [DOI] [PubMed] [Google Scholar]

- 26.Cristofano AD, Pesce B, Cordon-Cardo C, Pandol PP. Pten is essential for embryonic development and tumour suppression. Nat Genet. 1998;19(4):348–355. doi: 10.1038/1235. [DOI] [PubMed] [Google Scholar]

- 27.Tuominen V, Isola J. Linking whole-slide microscope images with dicom by using jpeg2000 interactive protocol. J Digit Imaging. 2010;23(4):454–462. doi: 10.1007/s10278-009-9200-1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28.Schneider C, Rasband W, Eliceiri K. Nih image to imagej: 25 years of image analysis. Nat Methods. 2012;9(7):671–675. doi: 10.1038/nmeth.2089. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Cardona A, et al. Trakem2 software for neural circuit reconstruction. PLoS ONE. 2012;7(6). 10.1371/journal.pone.0038011. [DOI] [PMC free article] [PubMed]

- 30.Schindelin J, Arganda-Carreras I, Frise E, Kaynig V, Longair M, Pietzsch T, Preibisch S, Rueden C, Saalfeld S, Schmid B, Tinevez JY, White DJ, Hartenstein V, Eliceiri K, Tomancak P, Cardona A. Fiji: an open-source platform for biological-image analysis. Nat Methods. 2012;9(7):676–682. doi: 10.1038/nmeth.2019. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31.Saalfeld S, Fetter R, Cardona A, Tomancak P. Elastic volume reconstruction from series of ultra-thin microscopy sections. Nat Methods. 2012;9(7):717–720. doi: 10.1038/nmeth.2072. [DOI] [PubMed] [Google Scholar]

- 32.Ruusuvuori P, Valkonen M, Nykter M, Visakorpi T, Latonen L. Feature-based analysis of mouse prostatic intraepithelial neoplasia in histological tissue sections. J Pathol Inform. 2016;7. [DOI] [PMC free article] [PubMed]

- 33.Valkonen M, Ruusuvuori P, Kartasalo K, Nykter M, Visakorpi T, Latonen L. Analysis of spatial heterogeneity in normal epithelium and preneoplastic alterations in mouse prostate tumor models. Sci Rep. 2017;7(1):44831. doi: 10.1038/srep44831. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34.MATLAB . Version 9.3.0 (R2017b) Natick: The MathWorks Inc.; 2017. [Google Scholar]

- 35.Dalal N, Triggs B. 2005 IEEE computer society conference on computer vision and pattern recognition (CVPR’05) IEEE; 2005. Histograms of oriented gradients for human detection; pp. 886–893. [Google Scholar]

- 36.Ojala T, Pietikainen M, Maenpaa T. European conference on computer vision. Springer; 2000. Gray scale and rotation invariant texture classi cation with local binary patterns; pp. 404–420. [Google Scholar]

- 37.Cignoni P, Callieri M, Corsini M, Dellepiane M, Ganovelli F, Ranzuglia G. MeshLab: an Open-Source Mesh Processing Tool. In: Scarano V, Chiara RD, Erra U, editors. Eurographics Italian Chapter Conf. The Eurographics Assoc. 2008. [Google Scholar]

- 38.Epic Games, I.: Unreal Engine 4. Version 4.21.2.

- 39.Kazhdan M, Hoppe H. ACM Transactions on Graphics (ToG) 2013. Screened poisson surface reconstruction; p. 29. [Google Scholar]

- 40.3DLIRIOUS . MeshlabXML. GitHub; 2016. [Google Scholar]

- 41.Community BO. Blender - a 3D Modelling and Rendering Package. Amsterdam: Blender Foundation, Stichting Blender Foundation; 2018. [Google Scholar]

- 42.Fares J, Fares MY, Khachfe HH, Salhab HA, Fares Y. Molecular principles of metastasis: a hallmark of cancer revisited. Signal Transduct Target Ther. 2020;5(28). [DOI] [PMC free article] [PubMed]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Supplementary Materials

Additional file 1: Supplementary Video 1. Properties of the virtual reality application, including navigation in both organ and tumor level, and various functionalities and features are demonstrated in Supplementary Video S1. Supplement available at: https://github.com/BioimageInformaticsTampere/The-Virtual-Prostate.

Data Availability Statement

Video with visual representation of data used in this study is available at the supplementary site: https://github.com/BioimageInformaticsTampere/The-Virtual-Prostate