Abstract

Despite the increasing use of social media for research recruitment, few studies have reported on the demographic representativeness and costs of this approach. It was hypothesized that cost, reach, enrollment, and demographic representativeness would differ by social media recruitment approach. Participants were 18–25 year-olds at moderate to high risk of skin cancer based on phenotypic and behavioral characteristics. Paid Instagram, Facebook, and Twitter ads, unpaid social media posts by study staff, and unpaid referrals were used to recruit participants. Demographic and other characteristics of the sample were compared with the 2015 National Health Interview Survey (NHIS) sample. Analyses demonstrated significant differences among recruitment approaches regarding cost efficiency, study participation, and representativeness. Costs were compared across the 4,274 individuals who completed eligibility screeners over a 7-month period. By approach, the share of the screened sample, number screened, and cost per individual screened were: Instagram, 44.6%, 1,907, $9; Facebook, 31.5%, 1,345, $8; Twitter, 1%, 42, $178; and unpaid posts by study staff (10.6%) and referrals (6.5%), $1. The lowest rate of study enrollment among individuals screened was for Twitter. Most demographic and skin cancer risk factors of study participants differed from those of the 2015 NHIS sample and across social media recruitment approaches. Considering recruitment costs and number of participants enrolled, Facebook and Instagram appeared to be the most useful approaches for recruiting 18–25 year-olds. Findings suggest that project budget, target population and representativeness, and participation goals should inform selection and/or combination of existing and emerging online recruitment approaches.

Keywords: Social media, Recruitment, Skin cancer prevention, Cost

Implications.

Practice: Specific social media platforms may be selected to recruit unique or hard-to-reach populations for health promotion campaigns and interventions (e.g., Facebook for young adults, Instagram for young women, Reddit for young men).

Policy: Recruitment from sources in addition to or other than social media (e.g., probability-based research samples) may be necessary to obtain samples representative of the U.S. young adult population for public health surveillance.

Research: Project budget, target population, and participation goals should inform selection and/or combination of existing and emerging online recruitment approaches.

Introduction

Online and social media recruitment approaches are increasingly utilized for public health intervention research. We identified four reviews of over 100 studies using Facebook research recruitment that noted several advantages over traditional media channels (e.g., newspaper, radio, television), including faster recruitment, better representation, ability to access young and hard-to-reach demographics, and cost effectiveness [1–4]. At the same time, Facebook recruitment can be costly and remains limited by the over-representation of young white women and lower internet access among some populations, particularly older adults and individuals with a lower socioeconomic status [1–4]. Additionally, thoroughness of reporting on Facebook recruitment approaches is inconsistent, and cost data are often not included in publications [2].

Compared to Facebook, fewer publications have reported on recruitment outcomes from other social media platforms such as Instagram, Twitter, or Reddit. However, several reviews have drawn conclusions similar to the Facebook reviews regarding advantages and disadvantages in terms of cost, efficiency, and sample representativeness [5–15]. Of the few studies that have compared two or more social media channels [16–21], results vary as to effectiveness, cost-effectiveness, and representativeness, which likely differ by the demographics of the target population. Greater understanding of these metrics can inform study design as well as generalizability of results.

The RE-AIM (Reach, Effectiveness, Adoption, Implementation, Maintenance) model [22] is an implementation science framework developed to help characterize the implementation potential of behavioral interventions. The goal of the current study was to investigate the Reach component of the RE-AIM model by comprehensively assessing and comparing cost, reach, enrollment, and representativeness of several online recruitment approaches (e.g., Facebook, Instagram) in order to inform future recruitment efforts. The parent project for this study is a national randomized hybrid effectiveness-dissemination trial of an online skin cancer risk reduction intervention for young adults (NCT03313492).

Study aims for the present analyses were:

1) To assess and compare the reach, paid and unpaid costs of recruitment, and study enrollment by online approach. Because the approaches differ in nature and target population, we hypothesized that reach, cost, and enrollment would vary by recruitment approach. For instance, because Instagram targets a younger population than Facebook, we expected to reach more young adults more cost-effectively using Instagram compared with Facebook.

2) To compare study participant demographic characteristics and skin cancer risk factors (a) across various online approaches and (b) with a nationally representative sample of young adults. We hypothesized that participant characteristics such as age, sex, educational attainment, income, sexual orientation, race, ethnicity, and sun sensitivity would vary by social media recruitment approach. We also compared the UV4Me2 sample to the characteristics of young adults from the 2015 National Health Interview Survey (NHIS). We expected that the current sample would differ from NHIS respondents on skin cancer risk factors such as race, ethnicity, and sun sensitivity, since those are associated with study eligibility criteria (e.g., fair skin, family history of melanoma, history of sunburns) for the skin cancer risk reduction intervention trial [23].

Methods

Overall Procedures

Recruitment

The current study is part of an ongoing project assessing a digital skin cancer risk reduction program for young adults (NCT03313492). Potential participants were young adults, defined as persons between the ages of 18 and 25 years, who were targeted through the use of custom audiences within the social media platforms. Recruitment of study participants was conducted through unpaid social media posts and paid advertising on Facebook, Instagram, and Twitter between September 2018 and April 2019. Participants were recruited using unpaid posts by the study team, current study participants, and professional partners (e.g., nonprofit skin cancer prevention organizations) on websites and social media. Unpaid posts by study participants or professional partners may have been re-posts of existing paid or unpaid study posts or posts created by the individuals or organizations themselves. With the help of an advertising agency, paid campaigns were launched using social media platform advertisement management systems. Paid advertisements were initially launched on Instagram and Twitter. During the recruitment period, the campaign was adjusted to replace Twitter with Facebook because Twitter recruitment results were low. Thus, two display advertisements and two “carousel” (rotating sequence of images) advertisements were delivered on Instagram and on Facebook for 28 and 19 weeks, respectively, and paid display advertisements were delivered on Twitter for 7 weeks. The goal was cost-effective recruitment, not similar advertising dosage across social media platforms.

Social media advertisement fees were based on the number of advertisements delivered to the target audience. Audience demographic and behavioral characteristics including age range, location (USA), and interests, such as outdoor activities and physical fitness, were identified to more efficiently target the intended audience. Images selected either for display or carousel advertisements featured individuals or groups of young adults of different genders engaging in audience-relevant outdoor activities (e.g., hiking, walking on the beach, snowboarding in the winter), events, and sports. Advertisement content focused on potential health, appearance, and financial benefits of participation (e.g., “Healthy skin is beautiful skin.”). Social media platforms use ever-changing automated algorithms to optimize campaigns in terms of cost-efficiency. For example, Facebook displays more successful advertisements more frequently and less successful advertisements less frequently over time. The study team also optimized the campaign by replacing under- or over-performing advertisements. For example, since men were recruited more slowly than women, advertisements showing single women were replaced with advertisements with men or mixed gender groups. Additionally, advertisements were changed seasonally (e.g., showing a snowboarder during the winter). The objective of the advertisements was to encourage potential participants to click the call-to-action buttons such as “Sign Up” or “Learn More” that directed them to a study-specific landing webpage or sign-up webpage. The landing page included relevant images and brief information about the study and reasons why individuals might want to participate, including brief testimonials from prior participants. Individuals were then instructed to create an account on the sign-up webpage with a phone number, email, and password. Once an individual indicated their interest and created a study account, they were automatically directed to complete an eligibility screener.

Eligibility and Enrollment

Participants eligible for the UV4Me2 study were 18–25 years old, English speakers, living in a U.S. state or Washington DC, had regular internet access, reported phenotypic, familial, and/or behavioral risk factors (e.g., fair skin, family history of melanoma, history of sunburns) that put them at moderate to high risk of developing skin cancer [23], and did not have a personal history of skin cancer. If eligible, individuals were invited to complete the online informed consent form. Upon consent, participants were directed to the 10-min online baseline survey. If enrollees completed the baseline survey, they received a $5 electronic gift card. Participants were informed that the total study incentives would be up to $120 for completing five online surveys over the course of 12 months, plus periodic gift card raffles.

Measures overview

Measures included advertisement displays and advertisement and recruitment costs by recruitment approach. See Table 1 for definitions of terms. Participant referral approach (e.g., Facebook paid advertisements, Instagram paid advertisements) was collected using Google Analytics or, if unavailable, participant self-report. The total number of people who completed a brief online screening questionnaire, the proportion screened who were eligible, the proportion eligible who consented to the study, and the proportion consented who completed online baseline surveys were calculated by recruitment approach. Participants reported their demographic characteristics and skin cancer-related behaviors. To assess representativeness of the participating sample, participant demographics were compared with 2015 NHIS data for the same age group. The NHIS [24, 25] was selected for comparison because it is designed to produce nationally representative information on the health of U.S. civilian, non-institutionalized adults.

Table 1.

Overview of study variables

| Aims and variables | Definitions |
|---|---|
| Recruitment approach | Instagram, Facebook, Twitter, Reddit, Family/Other Referral, Organic (e.g., unpaid social media posts by study staff) |
| Aim 1. Reach, cost, and study enrollment | |
| Impressions | Number of times advertisements were displayed |
| Reach | Number of people who saw advertisements |
| Advertisement costs | Costs of serving advertisements recorded by social media advertisement analytics platforms |
| Labor costs | Labor costs of recruitment, tracked by project staff |
| Screened | Individual completed a brief online screening questionnaire |
| Eligible | Individual determined to be eligible based on responses to screening questionnaire |
| Consented | Eligible individual who submitted an online informed consent form |
| Enrolled | Consented individual who completed an online baseline survey |
| Excluded | Participant excluded by study staff due to suspicious activity (e.g., attempting to re-enroll using a different name or email address) |
| Aim 2. Representativeness | |
| Demographics | Age, sex, sexual orientation, educational attainment, annual income |
| Skin cancer-related variables | Race, ethnicity, degree of tanning/burning that occurs in the sun, whether participants had received a full body skin cancer examination by a healthcare provider in the last 12 months |

Aim 1. Reach, Enrollment, and Cost by Approach

Measures

Recruitment approach.

We assessed eligibility and enrollment overall and by recruitment approach (i.e., Facebook, Instagram, Close Other Referral, Reddit, Twitter, Other/Unknown) over approximately seven months. Approaches were tracked by placing a unique identifying pixel in each of our authorized web ads on Facebook, Instagram, and Twitter so that we knew when an individual referred from a pre-specified approach accessed the study website. Additionally, participants were asked how they found out about the study to identify informal word of mouth diffusion (e.g., referred by a friend, Reddit). Close Other Referral could have been via an organic social media post or other non-social media means (e.g., text, email, phone, in person).

Impressions and reach.

The number of impressions (i.e., times the advertisements were displayed) and the number of people reached (i.e., people who saw the advertisements) for each approach were calculated.

Study enrollment.

The numbers of participants who were screened, eligible, and consented to participate in the study were assessed. As occurs in other Internet-based and survey research, some participants may attempt to enroll more than once or provide inaccurate responses in order to be deemed eligible and earn study incentives [26–28]. Participants were excluded from the study when their responses were likely of poor quality, for example because they provided a non-unique or non-working e-mail address or phone number [26].

Recruitment costs.

Recruitment costs were tracked as part of a more comprehensive effort to collect costs required to deliver the intervention. Recruitment included time spent by program staff and costs incurred by the advertising agency. Program staff time was tracked using a Microsoft Excel-based cost data collection instrument that investigators developed to collect cost data using an activity-based costing approach with recruitment being a separate activity [29–32]. Program staff reported the number of hours spent on each activity (including recruitment) on a monthly basis. Recruitment was defined as identifying and recruiting individuals to participate in the program from approaches that may be used in a real-world setting, including Facebook, Instagram, Twitter, and word of mouth. This time included writing, editing, posting, and monitoring advertisements and obtaining expertise about improvement strategies for recruitment. Investigators assigned a recruitment approach (Facebook, Instagram, Twitter, or unpaid) to each time entry. Even though unpaid advertising did not require payments for the advertisements, program staff dedicated time to developing the unpaid advertisements and posting the advertisements. Program staff time was valued using their salary information that included wages and fringe benefits (average of $65 per hour). Costs incurred by the advertising agency included labor and non-labor costs and were tracked via invoices.

Analyses

We calculated reach, enrollment rates, and costs spent on each advertising approach to estimate cost-effectiveness of each recruitment approach. For each advertising approach, the primary measure of cost-effectiveness was calculated as cost per participant enrolled. A supplemental measure of cost-effectiveness was cost per 1,000 impressions. Thus, we assessed average cost-effectiveness of these approaches. Those with lower cost-effectiveness ratios are preferred as they have lower costs per participant recruited. We also calculated incremental cost-effectiveness ratios, defined as comparing each approach with its next best alternative (approach that enrolled the next highest number of participants) by dividing the difference in costs between the two approaches by the difference in the number of participants.
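The two ratios described above can be illustrated with a short sketch. It uses the total costs and enrollment counts reported in Table 2; the class and function names are illustrative assumptions, and the ordering rule for the incremental ratio (each approach compared with the one that enrolled the next-highest number of participants) is one reading of the definition given here, not necessarily the exact procedure the investigators used.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Approach:
    name: str
    total_cost: float  # USD, labor plus non-labor (Table 2)
    enrolled: int      # participants who completed the baseline survey (Table 2)


APPROACHES = [
    Approach("Facebook", 10548, 652),
    Approach("Instagram", 16799, 733),
    Approach("Twitter", 7456, 11),
    Approach("Unpaid", 1135, 358),
]
by_name = {a.name: a for a in APPROACHES}


def average_cer(approach):
    """Average cost-effectiveness ratio: cost per participant enrolled."""
    return approach.total_cost / approach.enrolled


def incremental_cers(approaches):
    """Incremental ratio for each approach versus the approach that enrolled
    the next-highest number of participants (assumed reading of the text).
    Assumes no two approaches enrolled the same number of participants."""
    ranked = sorted(approaches, key=lambda a: a.enrolled, reverse=True)
    ratios = {}
    for current, alternative in zip(ranked, ranked[1:]):
        extra_cost = current.total_cost - alternative.total_cost
        extra_enrolled = current.enrolled - alternative.enrolled
        ratios[(current.name, alternative.name)] = extra_cost / extra_enrolled
    return ratios


for a in APPROACHES:
    print(f"{a.name}: ${average_cer(a):.2f} per participant enrolled")
for (current, alternative), ratio in incremental_cers(APPROACHES).items():
    print(f"{current} vs {alternative}: ${ratio:.2f} per additional participant")
```

A negative incremental ratio in this sketch means the approach with more enrollees also cost less, so it dominates its comparator.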

Aim 2. Representativeness

Measures

The NHIS is conducted annually by the National Center for Health Statistics, part of the Centers for Disease Control and Prevention, using a multistage clustered sample design involving in-person home interviews [24, 25]. The 2015 NHIS data were selected because they included the most recent skin cancer risk data available from NHIS. Items that were comparable between the 2015 NHIS and UV4Me2 surveys were included. Standard demographic items assessed included age, sex, sexual orientation, race/ethnicity, educational attainment, and annual income. Additional items asked about the degree of tanning/burning that occurs after 1–2 weeks (2 weeks for NHIS) in the sun and whether participants had received a full body skin cancer examination by a health care provider (doctor only for NHIS) in the last 12 months. Some response categories in the UV4Me2 study were collapsed to be consistent with those available from the NHIS. For example, race/ethnicity was created from the race and ethnicity variables in the UV4Me2 survey. The NHIS sample was restricted to the age group included in the UV4Me2 dissemination study (18–25 years old).

Analyses

To examine whether the distributions of participant categorical characteristics (e.g., sex) were similar to characteristics of participants from the NHIS in the same age group, we applied goodness of fit chi-square tests for each variable. To determine whether participant characteristics differed by recruitment method, we used chi-square tests. We used exact methods (Monte Carlo estimation) to calculate the p-values because some cell counts were small. Residuals (standardized differences from what were expected under the null hypothesis of no association between characteristic and recruitment method) were used to detect which recruitment methods were more or less successful at recruiting different types of participants. Standardized residuals greater than three were considered significant.
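As a rough illustration of the approach-by-characteristic comparison, the sketch below computes a chi-square statistic, a Monte Carlo p-value, and adjusted standardized residuals for a small contingency table. The counts are hypothetical and are not study data. The p-value here is estimated by permuting column labels, which is one common way to obtain a Monte Carlo estimate; it is an assumption and may differ from the procedure in the statistical software the authors used.

```python
import random
from math import sqrt


def expected_counts(table):
    """Expected cell counts under no association (row total * column total / n)."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    return [[r * c / n for c in col_totals] for r in row_totals]


def chi_square(table):
    expected = expected_counts(table)
    return sum((o - e) ** 2 / e
               for obs_row, exp_row in zip(table, expected)
               for o, e in zip(obs_row, exp_row))


def adjusted_residuals(table):
    """(O - E) / sqrt(E * (1 - row share) * (1 - column share)) for each cell."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    expected = expected_counts(table)
    return [[(table[i][j] - expected[i][j])
             / sqrt(expected[i][j] * (1 - row_totals[i] / n) * (1 - col_totals[j] / n))
             for j in range(len(col_totals))]
            for i in range(len(row_totals))]


def monte_carlo_p(table, n_sim=500, seed=0):
    """Estimate the p-value by shuffling column labels (margins stay fixed)."""
    rng = random.Random(seed)
    rows, cols = [], []
    for i, row in enumerate(table):
        for j, count in enumerate(row):
            rows.extend([i] * count)
            cols.extend([j] * count)
    observed_stat = chi_square(table)
    hits = 0
    for _ in range(n_sim):
        rng.shuffle(cols)
        sim = [[0] * len(table[0]) for _ in table]
        for i, j in zip(rows, cols):
            sim[i][j] += 1
        if chi_square(sim) >= observed_stat:
            hits += 1
    return (hits + 1) / (n_sim + 1)


# Hypothetical counts: rows are two recruitment approaches, columns are two categories.
hypothetical = [[90, 10], [40, 60]]
stat = chi_square(hypothetical)
p_value = monte_carlo_p(hypothetical)
residuals = adjusted_residuals(hypothetical)
flagged = [(i, j) for i, row in enumerate(residuals)
           for j, r in enumerate(row) if abs(r) > 3]
print(stat, p_value, flagged)
```

Cells listed in `flagged` are those whose residuals exceed the threshold of three in absolute value, mirroring the rule stated above.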

Results

Aim 1. Reach, Enrollment, and Cost by Approach

Table 2 reports non-labor, labor, and total costs spent on each advertising approach. Total expenditures across all types of advertising, including unpaid social media advertising, were $35,938, for an average cost of $20 per participant enrolled. In terms of total cost, Instagram costs were highest ($16,799), followed by Facebook ($10,548), Twitter ($7,456), and unpaid advertising ($1,135). Unpaid advertising included time spent by program staff but did not include time spent by partner organizations or current participants, which was likely minimal for both. With the exception of unpaid advertising, where all costs were labor, labor costs for the three paid approaches accounted for 39–40% of total costs, and most of those were incurred by the advertising agency. The majority of the time spent on recruitment by program staff was spent on unpaid recruitment and included the time of program staff to post unpaid ads.
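The labor share quoted above can be checked directly from the cost components reported in Table 2. The following is a minimal sketch; the dictionary layout and function names are illustrative assumptions.

```python
# Cost components in USD, as reported in Table 2.
COSTS = {
    "Facebook": {"non_labor": 6418, "ad_agency": 4021, "program_staff": 109},
    "Instagram": {"non_labor": 10008, "ad_agency": 6529, "program_staff": 262},
    "Twitter": {"non_labor": 4532, "ad_agency": 2771, "program_staff": 153},
    "Unpaid": {"non_labor": 0, "ad_agency": 0, "program_staff": 1135},
}


def total_cost(parts):
    return sum(parts.values())


def labor_share(parts):
    """Fraction of total cost that is labor (ad agency plus program staff)."""
    labor = parts["ad_agency"] + parts["program_staff"]
    return labor / total_cost(parts)


for name, parts in COSTS.items():
    print(f"{name}: total ${total_cost(parts):,}, labor {labor_share(parts):.1%}")

grand_total = sum(total_cost(parts) for parts in COSTS.values())
print(f"All approaches: ${grand_total:,}")
```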

Table 2.

Recruitment results by approach platform

| Recruitment results | Facebook | Instagram | Twitter | Unpaid | Total |
|---|---|---|---|---|---|
| Costs (total) | $10,548 | $16,799 | $7,456 | $1,135 | $35,938 |
| Non-labor | $6,418 | $10,008 | $4,532 | $0 | $20,958 |
| Labor | | | | | |
| Ad agency | $4,021 | $6,529 | $2,771 | $0 | $13,321 |
| Program staff | $109 | $262 | $153 | $1,135 | $1,659 |
| Ad reach | N (% of row above) | N (% of row above) | N (% of row above) | N (% of row above) | N (% of row above) |
| Impressions | 418,517 | 1,336,323 | 633,211 | n/a | 2,388,051 |
| Reach | 135,291 (32.3%) | 682,852 (51.1%) | n/a (n/a) | n/a (n/a) | 818,143 (34.3%) |
| Number of participants | N (% of row above) | N (% of row above) | N (% of row above) | N (% of row above) | N (% of row above) |
| Screened | 1,345 (1.0%) | 1,907 (0.3%) | 42 (n/a) | 980 (n/a) | 4,274 (0.5%) |
| Eligible | 779 (57.9%) | 1,038 (54.4%) | 20 (47.6%) | 400 (40.8%) | 2,237 (52.3%) |
| Consented | 676 (86.8%) | 804 (77.5%) | 14 (70.0%) | 370 (92.5%) | 1,864 (83.3%) |
| Enrolled | 652 (96.4%) | 733 (91.2%) | 11 (78.6%) | 358 (96.8%) | 1,754 (94.1%) |
| Excluded by staff (% of screened) | 4 (0.3%) | 6 (0.3%) | 0 (0.0%) | 226 (23.1%) | 236 (5.5%) |
| Cost per advertisement reach | | | | | |
| Impressions (1,000) | $25 | $13 | $12 | n/a | $15 |
| Reach (1,000) | $78 | $25 | n/a | n/a | $20 |
| Cost per person | | | | | |
| Screened | $8 | $9 | $178 | $1 | $8 |
| Eligible | $14 | $16 | $373 | $3 | $16 |
| Consented | $16 | $21 | $533 | $3 | $19 |
| Enrolled | $16 | $23 | $678 | $3 | $20 |

Table 2 also presents the number of impressions, individuals reached, and the number of study participants screened, eligible, consented, enrolled, and who were excluded by study staff from each recruitment approach. Across all approaches combined, the study content was displayed almost 2.4 million times and seen by over 800,000 people. However, only 4,274 participants were screened. Of these 4,274, 1,754 (~41%) were enrolled in the study. Instagram advertisements resulted in the highest number of impressions and participants. Twitter was second in the number of impressions but had the lowest number of participants enrolled in the study.
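The stage-to-stage percentages in Table 2 follow from dividing each count by the count in the preceding stage. A minimal sketch of that arithmetic, using the participant counts from Table 2 (the variable and function names are illustrative assumptions):

```python
STAGES = ("screened", "eligible", "consented", "enrolled")

# Participant counts per stage, as reported in Table 2.
FUNNEL = {
    "Facebook": (1345, 779, 676, 652),
    "Instagram": (1907, 1038, 804, 733),
    "Twitter": (42, 20, 14, 11),
    "Unpaid": (980, 400, 370, 358),
}


def step_rates(counts):
    """Percent of each stage that reached the next stage."""
    return [100 * later / earlier for earlier, later in zip(counts, counts[1:])]


def overall_rate(counts):
    """Percent of screened individuals who ultimately enrolled."""
    return 100 * counts[-1] / counts[0]


# Column-wise sums give the Total column of Table 2.
totals = tuple(sum(stage_counts) for stage_counts in zip(*FUNNEL.values()))

for name, counts in {**FUNNEL, "Total": totals}.items():
    steps = ", ".join(f"{stage} {rate:.1f}%"
                      for stage, rate in zip(STAGES[1:], step_rates(counts)))
    print(f"{name}: {steps}; enrolled of screened {overall_rate(counts):.1f}%")
```

Comparing `overall_rate` across approaches shows which approach converted the smallest share of screened individuals into enrolled participants.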

Finally, Table 2 reports cost per 1,000 impressions and per 1,000 people reached and costs per person screened, eligible, consented, and enrolled. Twitter had the lowest cost per 1,000 impressions ($12), followed by Instagram ($13). However, when cost effectiveness was measured in terms of the number of participants enrolled in the study, Facebook was the most cost-effective out of the three paid approaches, with a cost-effectiveness ratio of $16 per person enrolled, followed by Instagram ($23 per enrollment). Twitter’s cost per person who enrolled was substantially higher ($678) than the other two approaches. Given that the only cost incurred for unpaid advertising was the labor cost of program staff, the cost for this type of advertising was a low $1–3 per study participant. However, it should be noted that many more participants recruited by unpaid means (e.g., referral by friends) were excluded by study staff for suspicious activity such as attempting to re-enroll. Our findings from the incremental cost-effectiveness analysis were the same as from the average cost-effectiveness analysis presented in Table 2; thus, we only report the average cost-effectiveness ratios.

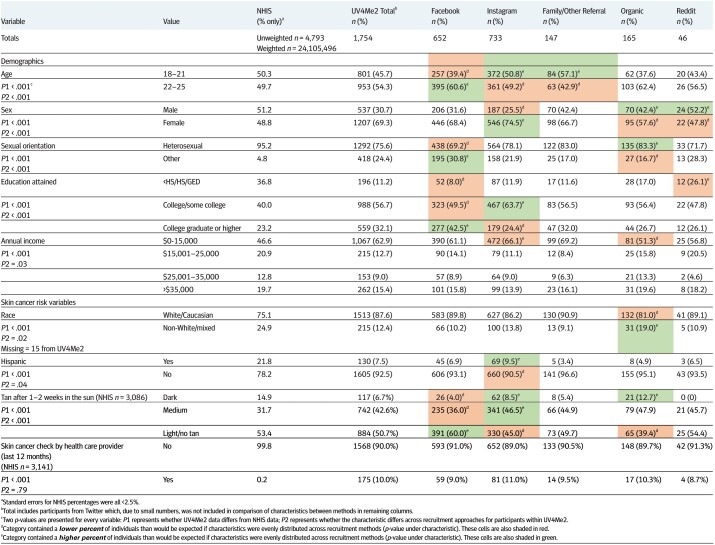

Aim 2. Representativeness

Table 3 presents the demographic and skin cancer risk variables available in both the current UV4Me2 dissemination study and the NHIS. The UV4Me2 results differed significantly from the NHIS data on all variables assessed. UV4Me2 participants were significantly more likely to be older, female, non-Hispanic White, more highly educated, have lower income, and be non-heterosexual (all ps < 0.01). UV4Me2 participants were also more likely to have sun-sensitive skin and to have been screened for skin cancer by a health care provider in the last year (ps < 0.001).

Table 3.

Demographics and skin cancer risk factors by approach

All variables also differed significantly by social media recruitment approach, except provider skin cancer screening, which was consistently around 10% across Facebook, Instagram, and other approaches. For example, participants from Instagram were more likely to be younger, female, or Hispanic than others, whereas participants from Facebook were less likely to be younger or heterosexual. Participants in the Family/Other Referral and Reddit Referral groups differed from the other groups on fewer characteristics.

Discussion

Using social media approaches for both recruitment of study participants and intervention delivery is increasing [1–4]. However, few studies have reported on the representativeness of study participants recruited from these approaches, as well as the reach, enrollment, and cost of social media recruitment approaches [2]. It is important to understand these factors in order to make decisions about study methodology and budgeting. The current study compared the cost, reach, enrollment, and representativeness of paid and unpaid social media recruitment approaches, including Instagram, Facebook, and Twitter, for an online skin cancer risk reduction intervention trial for young adults.

Our results demonstrated that young adult research participants can be enrolled relatively quickly and cost-effectively by using paid advertisements on Instagram and Facebook. However, $23 per participant for Instagram and $16 per participant for Facebook may not be feasible for some organizations and budgets depending on the nature of the program, population, and sample size goals. Unpaid posts on Reddit show promise. Paid Twitter advertisements were not cost-effective. Although organic unpaid recruitment appeared to be cost-effective, it alone would not have been sufficient to recruit an adequate number of study participants and might require additional precautions to minimize attempts at re-enrollment [27, 28]. Twitter produced a large number of impressions; however, when cost effectiveness was measured as the number of people who actually enrolled in the study, Facebook appears to be the most cost-effective approach out of the paid approaches for recruiting young adult participants. It should be noted that the use of an advertising agency is helpful but not necessary to advertise on social media.

It is important to emphasize that although participants can be enrolled relatively quickly and cost-effectively from paid social media advertisements, rates of individuals completing screeners for such studies are low. Thus, these social media methods may not yield broadly representative samples. In terms of representativeness, participants recruited from social media approaches for UV4Me2 differed significantly on demographic and skin cancer risk factors from the NHIS sample. NHIS participants are intended to be representative of the U.S. population [24, 25]. It is not surprising that the current sample differed from NHIS respondents on skin cancer risk factors such as race, ethnicity, and sun sensitivity, since those are associated with the study eligibility criteria (e.g., hair color and number of freckles) for the skin cancer risk reduction intervention trial [23]. Since our goal was to enroll young adults at risk for skin cancer who would take part in an online skin cancer risk reduction intervention, it is appropriate for our sample to differ somewhat from the general population. Young adults who do not engage with social media may also be unlikely to engage with online interventions.

There may be several factors contributing to differences among the recruitment sources. It is important to note that participants in the Instagram and Facebook groups responded to paid ads as opposed to organic posts, which may be one reason these populations differed from others. It is not surprising that participants referred by other participants were likely to be similar to them since they were often friends or family. Organic, Instagram, and Facebook recruitment each resulted in samples that differed from other samples to a similar degree.

The strengths of this study are that it compared several paid and unpaid social media recruitment approaches, assessed cost, included a large sample, and compared the study sample with a large, nationally representative sample. There are several limitations to the study. First, although Facebook, Instagram, and Twitter are well-established and popular platforms, the social media landscape is constantly changing, so study findings may not be generalizable far into the future. Second, the samples for the unpaid groups were small, and we compared overall campaigns rather than specific advertisements or paid and unpaid Instagram and Facebook ads/posts. Thus, a different study and/or marketing team may obtain different results. Third, a limited number of variables were available from study participants to be compared with the NHIS data.

Finally, as occurs in other Internet-based and survey research, a portion of participants attempted to enroll more than once or provided inaccurate responses in order to be deemed eligible and earn study incentives. There are several methods to attempt to prevent this from occurring [27, 28]. For example, in the current study, one method we used was to require a code to be entered upon registration that had been sent to the participant’s unique cell phone number. However, we still had to drop approximately 12% of the sample due to suspicious behavior (e.g., repeated name or email address). Although we improved upon the efficacy study in which we had excluded about 22% of the sample [27], the number of suspicious “participants” was still higher than we had hoped. Given the disproportionally high number of participants excluded from unpaid social media recruitment, and since the time program staff spent identifying suspicious participants is not accounted for, the cost for this strategy is underestimated.

These findings offer several directions for future work. First, it is important to consider the potential biases, costs, and benefits of using various recruitment sources. Although this depends on the nature of the research (e.g., activities and number of follow-up time-points), it may be worthwhile to consider other approaches, such as probability-based consumer opinion panels or quota systems, to obtain samples more representative of the general U.S. population, if budget allows, and to use specific social media approaches to recruit unique or hard-to-reach populations. For example, Instagram may work well to recruit young women, whereas Reddit might work better to recruit young men. Another cost-effective option for recruiting specific populations might be to combine a probability-based panel sample with requests that participants from that sample refer their own acquaintances. Such referral-based sampling would likely result in a sample that is similar to the original probability-based sample of participants. This method may require additional identity checks if studies offer incentives, since participants are sometimes tempted to refer themselves [27, 28]. Additionally, the current paper focused only on reach and enrollment. A subsequent paper will examine study retention and intervention outcomes.

In conclusion, these results demonstrate significant differences among social media recruitment approaches in terms of cost efficiency, representativeness, and study participation rates. Findings suggest that project budget, target population, and participation goals should inform selection and/or combination of existing and emerging online recruitment approaches.

Acknowledgments

The authors thank Zhaomeng Niu, PhD, for assistance with data analysis, Oxford Communications for assistance with study recruitment, and ITX, Corp. for programming the data and content management system.

Funding

This study was funded by the National Cancer Institute [R01CA204271, PI: Heckman; P30CA006927 and P30CA072720, Cancer Center Support Grants].

Compliance With Ethical Standards

Authors’ Statement of Conflict of Interest and Adherence to Ethical Standards: The authors declare that they have no conflicts of interest.

Human Rights: All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional and/or national research committee and with the 1964 Helsinki declaration and its later amendments or comparable ethical standards. This study was approved by the Rutgers University IRB.

Informed Consent: Informed consent was obtained from all individual participants included in the study.

Welfare of Animals: This article does not contain any studies with animals performed by any of the authors.

Transparency Statements: The study was pre-registered at clinicaltrials.gov (NCT03313492). The analysis plan was not formally pre-registered.

Data Availability: De-identified data from this study are not available in a public archive. De-identified data from this study will be made available (as allowable according to institutional IRB standards) by emailing the corresponding author. Analytic code used to conduct the analyses presented in this study is not available in a public archive. It may be available by emailing the corresponding author. Materials used to conduct the study are not publicly available.

REFERENCES

- 1. Amon KL, Campbell AJ, Hawke C, Steinbeck K. Facebook as a recruitment tool for adolescent health research: a systematic review. Acad Pediatr. 2014;14(5):439–447.e4.

- 2. Reagan L, Nowlin SY, Birdsall SB, et al. Integrative review of recruitment of research participants through Facebook. Nurs Res. 2019;68(6):423–432.

- 3. Thornton L, Batterham PJ, Fassnacht DB, Kay-Lambkin F, Calear AL, Hunt S. Recruiting for health, medical or psychosocial research using Facebook: systematic review. Internet Interv. 2016;4:72–81.

- 4. Whitaker C, Stevelink S, Fear N. The use of Facebook in recruiting participants for health research purposes: a systematic review. J Med Internet Res. 2017;19(8):e290.

- 5. Bell CJ, Spruit JL, Kavanaugh KL. Exposing the risks of social media recruitment in adolescents and young adults with cancer: #Beware. J Adolesc Young Adult Oncol. 2020;9(5):601–607.

- 6. Darmawan I, Bakker C, Brockman TA, Patten CA, Eder M. The role of social media in enhancing clinical trial recruitment: scoping review. J Med Internet Res. 2020;22(10):e22810.

- 7. Hudnut-Beumler J, Po’e E, Barkin S. The use of social media for health promotion in Hispanic populations: a scoping systematic review. JMIR Public Health Surveill. 2016;2(2):e32.

- 8. Comabella CC, Wanat M. Using social media in supportive and palliative care research. BMJ Support Palliat Care. 2015;5(2):138–145.

- 9. Lafferty NT, Manca A. Perspectives on social media in and as research: a synthetic review. Int Rev Psychiatry. 2015;27(2):85–96.

- 10. Luo T, Li M, Williams D, et al. Using social media for smoking cessation interventions: a systematic review. Perspect Public Health. 2020;141(1):50–63.

- 11. Sanchez C, Grzenda A, Varias A, et al. Social media recruitment for mental health research: a systematic review. Compr Psychiatry. 2020;103:152197.

- 12. Schwab-Reese LM, Hovdestad W, Tonmyr L, Fluke J. The potential use of social media and other internet-related data and communications for child maltreatment surveillance and epidemiological research: scoping review and recommendations. Child Abuse Negl. 2018;85:187–201.

- 13. Shieh C, Khan I, Umoren R. Engagement design in studies on pregnancy and infant health using social media: systematic review. Prev Med Rep. 2020;19:101113.

- 14. Sinnenberg L, Buttenheim AM, Padrez K, Mancheno C, Ungar L, Merchant RM. Twitter as a tool for health research: a systematic review. Am J Public Health. 2017;107(1):e1–e8.

- 15. Topolovec-Vranic J, Natarajan K. The use of social media in recruitment for medical research studies: a scoping review. J Med Internet Res. 2016;18(11):e286.

- 16. Cahill TJ, Wertz B, Zhong Q, et al. The search for consumers of web-based raw DNA interpretation services: using social media to target hard-to-reach populations. J Med Internet Res. 2019;21(7):e12980.

- 17. Ford KL, Albritton T, Dunn TA, Crawford K, Neuwirth J, Bull S. Youth study recruitment using paid advertising on Instagram, Snapchat, and Facebook: cross-sectional survey study. JMIR Public Health Surveill. 2019;5(4):e14080.

- 18. Guillory J, Wiant KF, Farrelly M, et al. Recruiting hard-to-reach populations for survey research: using Facebook and Instagram advertisements and in-person intercept in LGBT bars and nightclubs to recruit LGBT young adults. J Med Internet Res. 2018;20(6):e197.

- 19. Herbell K, Zauszniewski JA. Facebook or Twitter?: Effective recruitment strategies for family caregivers. Appl Nurs Res. 2018;41:1–4.

- 20. Hulbert-Williams NJ, Pendrous R, Hulbert-Williams L, Swash B. Recruiting cancer survivors into research studies using online methods: a secondary analysis from an international cancer survivorship cohort study. Ecancermedicalscience. 2019;13:990.

- 21. Wisk LE, Nelson EB, Magane KM, Weitzman ER. Clinical trial recruitment and retention of college students with type 1 diabetes via social media: an implementation case study. J Diabetes Sci Technol. 2019;13(3):445–456.

- 22. Glasgow RE, Vogt TM, Boles SM. Evaluating the public health impact of health promotion interventions: the RE-AIM framework. Am J Public Health. 1999;89(9):1322–1327.

- 23. Glanz K, Schoenfeld E, Weinstock MA, Layi G, Kidd J, Shigaki DM. Development and reliability of a brief skin cancer risk assessment tool. Cancer Detect Prev. 2003;27(4):311–315.

- 24. National Center for Health Statistics. National Health Interview Survey: Questionnaires, Datasets, and Related Documentation. May 6, 2019. Retrieved from https://www.cdc.gov/nchs/nhis/nhis_questionnaires.htm

- 25. Parsons VL, Moriarity C, Jones K, Moore T, Davis K. Design and Estimation for the National Health Interview Survey, 2006–2015. National Center for Health Statistics. Vital Health Stat 2. 2014;(165):1–53.

- 26. Bowen AM, Daniel CM, Williams ML, Baird GL. Identifying multiple submissions in Internet research: preserving data integrity. AIDS Behav. 2008;12(6):964–973.

- 27. Handorf EA, Heckman CJ, Darlow S, Slifker M, Ritterband L. A hierarchical clustering approach to identify repeated enrollments in web survey data. PLoS One. 2018;13(9):e0204394.

- 28. Meade AW, Craig SB. Identifying careless responses in survey data. Psychol Methods. 2012;17(3):437–455.

- 29. Finkelstein EA, Khavjou O, Will JC. Cost-effectiveness of WISEWOMAN, a program aimed at reducing heart disease risk among low-income women. J Womens Health (Larchmt). 2006;15(4):379–389.

- 30. Honeycutt A, Clayton L, Khavjou O, et al. Guide to analyzing the cost-effectiveness of community public health prevention approaches. Research Triangle Park, NC: US Department of Health and Human Services; 2006.

- 31. Khavjou OA, Honeycutt AA, Hoerger TJ, Trogdon JG, Cash AJ. Collecting costs of community prevention programs: communities putting prevention to work initiative. Am J Prev Med. 2014;47(2):160–165.

- 32. Subramanian S, Ekwueme DU, Gardner JG, Trogdon J. Developing and testing a cost-assessment tool for cancer screening programs. Am J Prev Med. 2009;37(3):242–247.