Abstract

The electrocardiogram (ECG) is a widespread diagnostic tool in healthcare and supports the diagnosis of cardiovascular disorders. Deep learning methods are a successful and popular technique to detect indications of disorders from an ECG signal. However, there are open questions around the robustness of these methods to various factors, including physiological ECG noise. In this study, we generate clean and noisy versions of an ECG dataset before applying symmetric projection attractor reconstruction (SPAR) and scalogram image transformations. A convolutional neural network is used to classify these image transforms. For the clean ECG dataset, F1 scores for SPAR attractor and scalogram transforms were 0.70 and 0.79, respectively. Scores decreased by less than 0.05 for the noisy ECG datasets. Notably, when the network trained on clean data was used to classify the noisy datasets, performance decreases of up to 0.18 in F1 scores were seen. However, when the network trained on the noisy data was used to classify the clean dataset, the decrease was less than 0.05. We conclude that physiological ECG noise impacts classification using deep learning methods and careful consideration should be given to the inclusion of noisy ECG signals in the training data when developing supervised networks for ECG classification.

This article is part of the theme issue ‘Advanced computation in cardiovascular physiology: new challenges and opportunities’.

Keywords: electrocardiogram, physiological noise, robustness, deep learning, convolutional neural network, symmetric projection attractor reconstruction

1. Introduction

(a) Deep learning and physiological electrocardiogram signal noise

Electrocardiogram (ECG) signals have long been used to support the diagnosis of cardiovascular disorders. Deep learning methods show encouraging results in ECG classification tasks and have recently seen a rapid increase in popularity [1]. Noise and interference on the ECG signal are established causes of error in ECG diagnosis and interpretation [2] and have been noted to affect both manual (clinician) and automated (machine learning) detection of ECG abnormalities [3]. A desirable property of a deep network is that its performance is robust to perturbations in the input data. The robustness to ECG noise of deep learning methods used to detect cardiovascular disorders is not well understood, and no studies have directly addressed the issue. Sources of noise that degrade the quality of a dataset include both label noise (mislabelled data) and ECG signal noise (physiological noise on the signal). Here, we focus on ECG signal noise, to gain an understanding of how physiological ECG noise impacts the robustness of deep learning methods.

(b) Transfer learning with deep networks

While custom network architectures can be developed and trained from scratch to classify ECG signals [1], transfer learning is a popular method for using pretrained deep networks with new data. Transfer learning refers to the retraining of a pretrained network; for example, a network pretrained using ImageNet [4] data can be retrained using ECG data to classify ECG data. This training method is useful when there is a lack of data, computational resources or time, or to prototype models and carry out exploratory analysis.

ECG datasets often contain fewer than the large number of samples required to train a deep network from scratch, and in this case transfer learning is an attractive option. Furthermore, many well-known network architectures have a demonstrated record of high performance. The focus of this study is to evaluate the robustness of deep learning to physiological ECG noise, rather than to optimize ECG classification performance of a custom architecture. Using an established pretrained network provides a solid foundation for this focus.

Convolutional neural networks (CNNs) are a class of deep network widely used for image classification and, alongside recurrent neural networks (RNNs), commonly used for ECG classification [1]. Both one-dimensional CNNs applied to the raw ECG signal and two-dimensional CNNs applied to ECG image transforms have been used to detect cardiovascular disorders from the ECG signal.

(c) Detecting cardiovascular disorders from the electrocardiogram signal

Extensive work has been carried out to develop methods, including deep networks, that extract information from an ECG signal to support clinical decision-making [1,5]. ECG image transformations are methods that convert a one-dimensional ECG signal to a two-dimensional image which can then be passed to a two-dimensional CNN for training and classification.

The use of ECG image transforms allows both the use of two-dimensional CNNs pretrained on the popular image dataset ImageNet [6], and the exploration of the impact of these image transforms on network robustness to noise. ECG image transforms capture frequency domain or morphology information that describes the underlying signal. Existing ECG image transforms and their applications include: the continuous wavelet transform (scalogram) for biometrics [7], grey-level co-occurrence matrix for morphological arrhythmia detection [8], recurrence plot to classify arrhythmias [9], distance distribution matrix to identify congestive heart failure [10] and the symmetric projection attractor reconstruction (SPAR) method for genetic mutation detection [11].

Networks trained to classify ECG image transforms are less common than networks trained to classify the ECG signal directly and their utility for pathology classification is still being explored. In particular, the impact of using ECG image transforms on network robustness is unclear.

(d) Objectives

The main objectives of this study are to

- (i) Study the impact of the inclusion of physiological ECG noise in the input data on classification performance of a CNN (the robustness);

- (ii) Assess the impact of the inclusion of physiological noise in the training data on the robustness of a CNN;

- (iii) Determine whether different ECG image transforms increase or decrease robustness to different noise types.

2. Methods

(a) Electrocardiogram dataset

Twelve lead ECG signals from the first source of data made available for the PhysioNet/Computing in Cardiology Challenge 2020 [12] were used, and all data used were open access. All subjects with one of the following three diagnostic classes were selected: atrial fibrillation (AF), healthy (Normal) and ST depression (STD). There were 2678 subjects in total: 976 AF, 918 Normal and 784 STD. Data were recorded at 500 Hz and signals were 8–138 s long.

Beyond selecting all subjects with precisely one of the three chosen diagnostic class labels, no further selection criteria were applied. Although the choice of classes was not clinically motivated, there were several motivations behind it. Firstly, it provided approximately balanced class sizes: although addressing the impact of imbalanced class sizes on deep learning methods is important, as is developing models that can assign more than one class label, the focus of this study was the evaluation of robustness. Secondly, it reduced the number of classes the model had to identify, as a simpler model allowed a more thorough investigation of robustness. Finally, the healthy ‘Normal’ category was chosen alongside two pathology classes to study any differences in robustness to physiological noise between healthy and pathological ECG signals.

Furthermore, as ECG image transforms were used, one ECG rhythm-based class (AF) and one ECG morphology-based class (STD) were chosen to assess any differing performance with different ECG image transforms. AF is a heart condition resulting in irregular heart rhythm and is characterized by small irregular f (fibrillatory) waves on the ECG [13]. STD is a morphological feature of an ECG signal and can be indicative of several conditions [14].

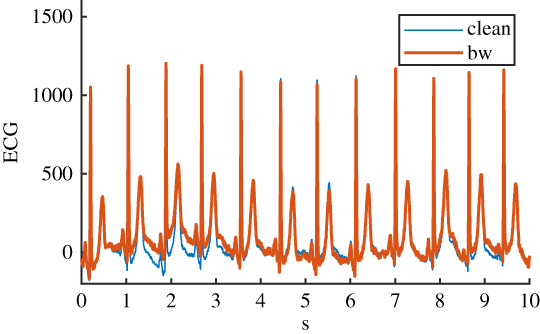

(b) Physiological electrocardiogram signal noise datasets

To explore the robustness of CNNs to physiological ECG signal noise, the raw ECG dataset described in §2a was filtered to remove as much existing physiological noise as possible resulting in a clean ECG dataset. Then, four physiological noise types were applied with a specified signal-to-noise ratio (SNR). Hereafter, ‘datasets’ refers to these six versions of the original ECG dataset: raw ECG signals, clean ECG signals and four applied ECG noise types. Each dataset contained signals for all 2678 subjects. Filtering of the ECG data was carried out using the ECGdeli toolbox for Matlab [15]. This filtering included: baseline wander removal, low pass filter (150 Hz), high pass filter (0.05 Hz), notch filter (49 Hz to 51 Hz) and isoline correction.

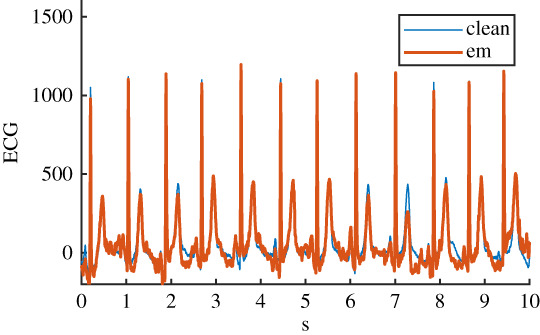

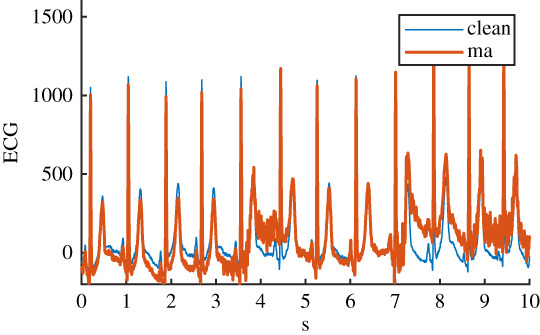

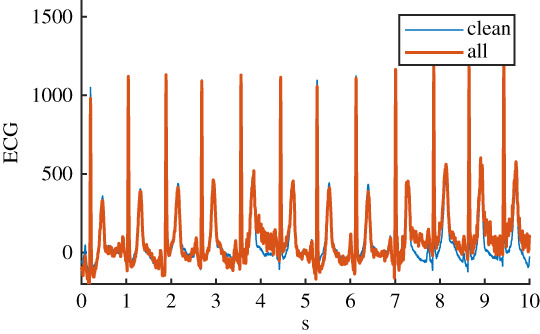

The four physiological noise recordings were originally recorded and prepared for the MIT-BIH noise stress test database [16,17] and were adapted for application to 12-lead ECG in a more recent study [18]. Thirty minutes of each of the four noise types were available. Here, physiological noise was defined as noise that originated from the subject but excluded electrical activity from the heart. The four noise types were: baseline wander (bw), electrode movement (em), motion artefact (ma) and a linear combination of all three (all). Details of the six ECG datasets can be seen in table 1.

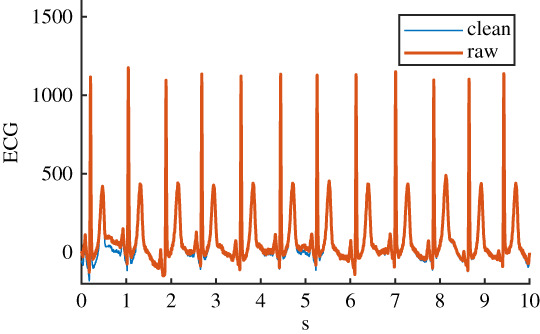

Table 1.

Details of the six ECG datasets, including an example using an ECG signal from the Normal class for each of the six variations. There are 2678 signals in each of the six datasets. Datasets are: raw, clean, baseline wander (bw), electrode movement (em), motion artefact (ma), combination of three noise types (all). Details of filtering are provided: isoline correction (Isoline corr.), baseline wander removal (Base. rem.), low pass filter (Lo pass), high pass filter (Hi pass), notch filter (notch). Details of any added noise is provided. The clean ECG signal is shown in blue on all plots for comparison.

| name | example signal | filtering | added noise |
|---|---|---|---|
| raw | | Isoline corr. | none |
| clean | | Isoline corr., Base. rem., Lo pass, Hi pass, notch | none |
| bw | | Isoline corr., Base. rem., Lo pass, Hi pass, notch | baseline wander |
| em | | Isoline corr., Base. rem., Lo pass, Hi pass, notch | electrode movement |
| ma | | Isoline corr., Base. rem., Lo pass, Hi pass, notch | motion artefact |
| all | | Isoline corr., Base. rem., Lo pass, Hi pass, notch | baseline wander, electrode movement, motion artefact |

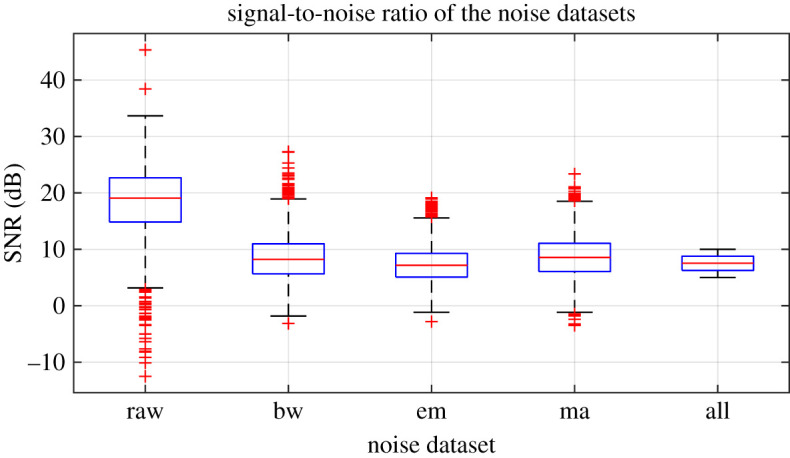

Before the noise was added to the clean ECG dataset, it was scaled to give an SNR in a specified range. The raw ECG dataset had a high overall SNR (figure 1) and so was essentially free of noise, with the exception of a small number of records that contained a significant amount of noise. For the noisy datasets, an SNR interval of 5 dB to 10 dB was specified to give a more uniform noise level and to ensure that the signals were sufficiently noisy for an effect to be detected. The procedure for applying noise to each of the 2678 clean signals was as follows: (i) determine the length of the current clean ECG signal, (ii) randomly select a segment of the same length from the 30 minutes of available noise, (iii) randomly select an SNR between 5 and 10 dB, (iv) using the clean signal, calculate the scaling factor required to scale the chosen ‘all’ noise segment to the specified SNR, (v) scale the ‘all’ noise segment, and the three individual noise segments, using this scaling factor, (vi) add the four noise segments to the clean signal to create four noisy signals. The resulting SNRs for the four noisy datasets can be seen in figure 1. SNRs for the raw signal were calculated using the clean signal, and the difference between the raw and clean signal.
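The six-step noise procedure can be illustrated with a minimal pure-Python sketch. It is not the authors' implementation: the function names are hypothetical, power is assumed to be the mean squared amplitude, and the same segment start is assumed for all four noise recordings (so that the ‘all’ segment and the three individual segments line up in time).

```python
import math
import random


def signal_power(x):
    """Mean squared amplitude of a sequence of samples (assumed power definition)."""
    return sum(v * v for v in x) / len(x)


def snr_db(signal, noise):
    """Signal-to-noise ratio in decibels, from the two mean powers."""
    return 10.0 * math.log10(signal_power(signal) / signal_power(noise))


def scale_for_snr(signal, noise, target_db):
    """Factor k such that snr_db(signal, k * noise) equals target_db."""
    ratio = 10.0 ** (target_db / 10.0)
    return math.sqrt(signal_power(signal) / (signal_power(noise) * ratio))


def add_noise(clean, noise_records, rng, snr_range=(5.0, 10.0)):
    """Create one noisy copy of `clean` per noise type.

    `noise_records` maps a noise name to a long recording. It must contain
    an 'all' entry, and all recordings are assumed to have equal length.
    """
    n = len(clean)                                         # (i) signal length
    start = rng.randrange(len(noise_records["all"]) - n + 1)  # (ii) random segment
    target_db = rng.uniform(*snr_range)                    # (iii) random SNR
    segments = {name: rec[start:start + n] for name, rec in noise_records.items()}
    k = scale_for_snr(clean, segments["all"], target_db)   # (iv) factor from 'all'
    noisy = {
        name: [c + k * s for c, s in zip(clean, seg)]      # (v) scale, (vi) add
        for name, seg in segments.items()
    }
    return noisy, k, target_db, segments
```

Because the factor is derived from the ‘all’ segment only, the individual noise types end up with SNRs that differ from the sampled target, which is consistent with the spread of SNRs reported per dataset in figure 1.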

Figure 1.

Signal-to-noise ratios (SNRs) for the noisy datasets. SNRs for the noise datasets calculated using the scaled noise signal and the clean signal. SNRs for the raw signal calculated using the clean signal and the difference between the raw and clean signals. (Online version in colour.)

(c) Electrocardiogram image transforms

Two transformation methods were used to generate ECG images for each of the six datasets: an attractor generated using the SPAR method [19,20] and a scalogram generated using the continuous wavelet transform. Lead II is commonly used to assess cardiac rhythm [21] and was chosen to generate the ECG image transforms.

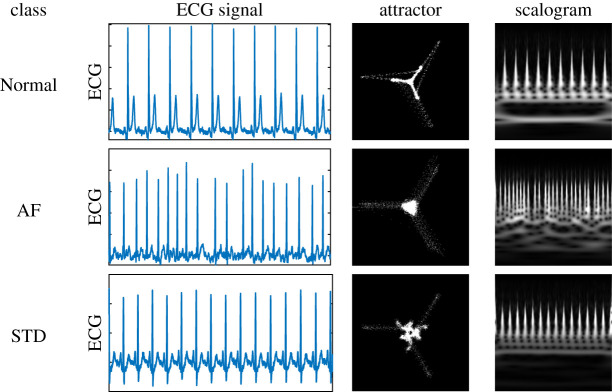

The SPAR method uses delay coordinates to replot the data in three dimensions, then projects the resulting three-dimensional plot to two dimensions from which a density plot is generated. The resulting shape is known as an attractor. An ECG attractor captures morphology information of the signal but factors out heart rate. The continuous wavelet transform was generated using an analytic Morse wavelet, with 16 wavelet bandpass filters per octave and the scalogram was obtained by plotting the absolute value of the resulting coefficients. An ECG scalogram captures frequency domain information of the signal. See figure 2 for a representative ECG, attractor and scalogram for each of the three classes. Both image types were generated with dimension 150 × 150. All image transforms were greyscale and were generated using the full ECG signal. As two image transformations were applied to each of the six datasets, there were 12 image datasets in total. Hereafter, ‘image dataset’ refers to one of these 12.
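As a rough illustration of the delay-coordinate and projection steps of SPAR, the sketch below uses three-point delay coordinates and projects them onto the plane orthogonal to the direction (1, 1, 1), following the general description of the method in [19,20]. The function names, the choice of delay `tau` (passed in as a number of samples) and the simple histogram used for the density are assumptions made for illustration; they are not taken from this study.

```python
import math


def spar_projection(x, tau):
    """Project delay coordinates (x(t), x(t - tau), x(t - 2 tau)) to two dimensions.

    The projection plane is orthogonal to (1, 1, 1), so any constant offset
    added to the signal cancels in both output coordinates.
    """
    v, w = [], []
    for t in range(2 * tau, len(x)):
        a, b, c = x[t], x[t - tau], x[t - 2 * tau]
        v.append((a + b - 2.0 * c) / math.sqrt(6.0))
        w.append((a - b) / math.sqrt(2.0))
    return v, w


def density_image(v, w, bins=150):
    """Count (v, w) points on a bins x bins grid (a plain 2-D histogram)."""
    lo_v, lo_w = min(v), min(w)
    span_v = (max(v) - lo_v) or 1.0   # avoid division by zero for flat input
    span_w = (max(w) - lo_w) or 1.0
    image = [[0] * bins for _ in range(bins)]
    for p, q in zip(v, w):
        col = min(int((p - lo_v) / span_v * bins), bins - 1)
        row = min(int((q - lo_w) / span_w * bins), bins - 1)
        image[row][col] += 1
    return image
```

The cancellation of constant offsets in both coordinates gives some intuition for why slowly varying baseline shifts have limited effect on the attractor.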

Figure 2.

Representative ECG signal for each class, along with the corresponding attractor and scalogram. Note that attractor images have increased contrast for better visibility. (Online version in colour.)

(d) Transfer learning with CNNs

To classify the ECG image transforms, four CNNs pretrained using ImageNet were initially assessed: AlexNet, GoogLeNet, VGG-16 and ResNet-50. Results using these networks identified ResNet-50 as the best performing and so this network was used for the main study. Transfer learning was used to adapt each of the networks for the image transforms. To do this, all layers of each network were frozen and the last learnable layer and classification layer were replaced and retrained using the ECG image transforms. All image transforms were rescaled to either 224 × 224 or 227 × 227 depending on the requirements of the network input layer.

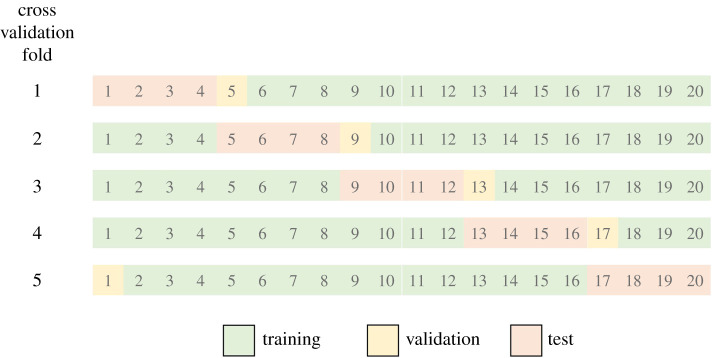

Each of the 12 image datasets was used to train the network. Five-fold cross validation was used. For each cross validation fold, the data were split into training (75%), validation (5%) and test (20%) data (figure 3). Predefined cross validation folds were used, thus giving consistency for the different datasets. To prevent overfitting, networks were set to stop training when the validation loss was larger than the previous smallest value five times consecutively.
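The split and the stopping rule described above can be sketched as follows. This is a hedged illustration rather than the study's code: the function names are hypothetical, the folds are not stratified by class, a fixed seed stands in for the predefined folds, and a validation loss equal to the current best is assumed to count as no improvement.

```python
import random


def five_fold_splits(n_items, val_fraction=0.05, seed=0):
    """Yield (train, val, test) index lists for five folds.

    Each fold holds out a different ~20% of items as test data. Validation
    takes `val_fraction` of all items from the remainder, which leaves
    roughly 75% for training.
    """
    indices = list(range(n_items))
    random.Random(seed).shuffle(indices)        # fixed seed: reproducible folds
    folds = [indices[i::5] for i in range(5)]
    n_val = round(val_fraction * n_items)
    for k in range(5):
        test = folds[k]
        rest = [i for j, fold in enumerate(folds) if j != k for i in fold]
        yield rest[n_val:], rest[:n_val], test


def stop_epoch(val_losses, patience=5):
    """Return the epoch index at which training stops, or None if it never does.

    Training stops once the validation loss has failed to drop below the
    smallest value seen so far for `patience` consecutive epochs.
    """
    best = float("inf")
    misses = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best:
            best, misses = loss, 0
        else:
            misses += 1
            if misses == patience:
                return epoch
    return None
```

Reusing the same seed for every image dataset keeps each subject in the same fold across datasets, which is what makes comparisons between clean and noisy versions like for like.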

Figure 3.

Diagram of how the data was split into training (75%), validation (5%) and test (20%) data for each of the five cross validation folds. (Online version in colour.)

To carry out the study of robustness, and investigate the impact that physiological ECG noise has on classification performance, three variations of the network training and testing were carried out:

- (i) Network training and validation using a single image dataset, used to classify test data from the same image dataset;

- (ii) Network training and validation using a clean ECG image dataset, used to classify test data from all other image datasets;

- (iii) Network training and validation using a noisy ECG image dataset, used to classify test data from all other image datasets.

(e) Performance metrics

As the classes were slightly imbalanced, the F1 score was used to evaluate the performance of the networks. The F1 score is the harmonic mean of precision and recall and is calculated as follows:

$$F_1 = 2 \times \frac{\text{precision} \times \text{recall}}{\text{precision} + \text{recall}},$$

where precision is the fraction of predicted labels of a specific class that are correct and recall is the fraction of a specific class that is correctly identified. To account for the multiple classes, the macro averaged F1 score was used, whereby the F1 score was calculated for each class individually and the arithmetic mean of these was taken. Unless specified, the F1 scores reported below refer to overall network performance (macro averaged F1 score) as opposed to individual class F1 score.
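A short pure-Python sketch of the per-class and macro averaged F1 calculation is given below, using the harmonic-mean definition of F1. The function names are hypothetical, and returning 0.0 when a denominator is zero is an assumed convention, not something stated in the study.

```python
def f1_per_class(y_true, y_pred, labels):
    """F1 score for each label, treating that label as the positive class."""
    scores = {}
    pairs = list(zip(y_true, y_pred))
    for label in labels:
        tp = sum(1 for t, p in pairs if t == label and p == label)
        fp = sum(1 for t, p in pairs if t != label and p == label)
        fn = sum(1 for t, p in pairs if t == label and p != label)
        precision = tp / (tp + fp) if tp + fp else 0.0   # assumed zero-division rule
        recall = tp / (tp + fn) if tp + fn else 0.0
        total = precision + recall
        scores[label] = 2.0 * precision * recall / total if total else 0.0
    return scores


def macro_f1(y_true, y_pred, labels):
    """Arithmetic mean of the per-class F1 scores."""
    scores = f1_per_class(y_true, y_pred, labels)
    return sum(scores.values()) / len(labels)
```

Because each class contributes equally to the mean regardless of its size, the macro average does not let the largest class dominate the overall score.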

3. Results

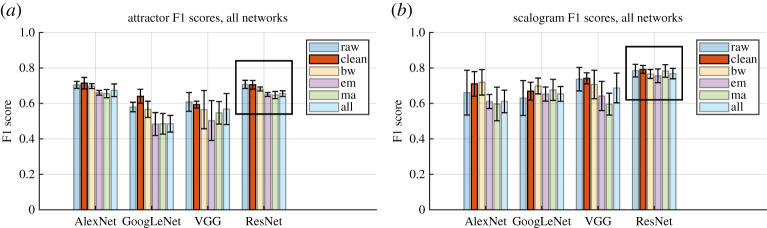

(a) Network comparison: training and test data from the same dataset

Overall network performance for four different networks trained and tested on each of the six datasets can be seen in figure 4. Using F1 scores as a metric of overall network performance, ResNet-50 is the best performing network and has the lowest standard deviation across the five cross validation folds, although for the attractor images AlexNet has similar performance. Furthermore, unlike the other networks, ResNet-50 performance is fairly consistent across the datasets, with all F1 scores in the range 0.65–0.71 for the SPAR images, and in the range 0.76–0.79 for the scalogram images. ResNet-50 has been used for the remainder of the analysis.

Figure 4.

Comparison of network performance when training and test data were taken from the same dataset, for (a) the attractor images and (b) the scalogram images. Each cluster of bars shows F1 scores for a single network, where each bar represents an ECG dataset: raw, clean, baseline wander (bw), electrode movement (em), motion artefact (ma) and all three combined (all). The raw data performance (raw) is shown for comparison. Error bars show standard deviation across the five cross validation folds. Black boxes highlight results for the chosen network. (Online version in colour.)

(b) Noise comparison: training and test data from the same dataset

When networks were trained and tested using data from the same dataset, classification performance F1 scores for the noisy datasets were consistently lower than for the clean dataset. These results can be seen within the black boxes in figure 4. It should be noted that the error bars overlap more for scalogram than for the attractor images, suggesting that the performance decrease with noise is less significant. F1 score changes from the clean dataset to the all noise dataset were 0.70 to 0.65 for the attractor images and 0.79 to 0.77 for the scalogram images. ResNet-50 appeared to perform most consistently across all noise types, with the smallest F1 score decrease in performance. Other networks showed larger changes and in some cases performance increased in the presence of noise (see figure 4b, AlexNet for example).

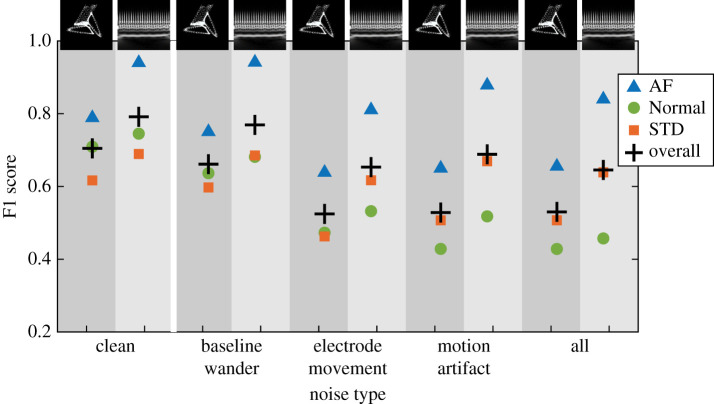

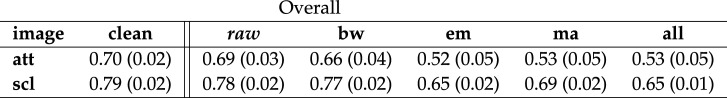

(c) Using a network trained on clean data to classify noisy data

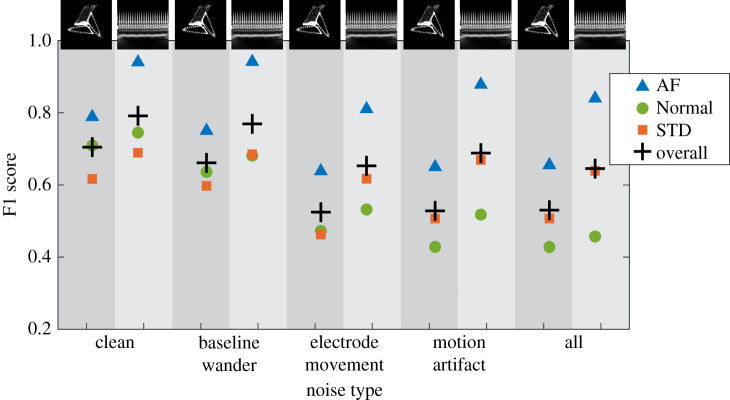

ResNet-50 was chosen for further study. When the network that had been trained on the clean ECG image dataset was used to classify test data from the noisy datasets, F1 scores decreased by up to 0.2. A summary of the overall F1 scores is given in table 2. A summary of results, including a breakdown of the F1 scores by class, can be seen in figure 5.

Table 2.

Summary of overall network F1 scores for the ResNet-50 network trained using the clean dataset, then tested using the noisy datasets. Values shown are the arithmetic mean and standard deviation across the five cross validation folds. Results shown for attractor (att) and scalogram (scl) image transforms. Note that results for clean data are a duplication of data shown in table 4. The results for raw data are shown for comparison.


Figure 5.

Breakdown of ResNet-50 F1 scores by class for the network trained using the clean dataset then tested using the noisy datasets. Here + is overall network F1, triangle is AF, circle is Normal and square is STD. Attractor and scalogram F1 scores are shown side by side, indicated by the background shading. From left to right, results are shown for clean, baseline wander, electrode movement and all three combined. (Online version in colour.)

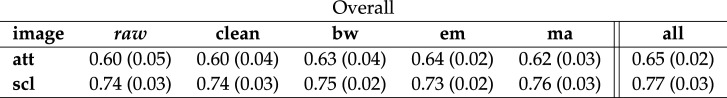

(d) Using a network trained on noisy data to classify clean and noisy data

A network that had been trained on the all noise ECG image dataset was used to classify test data from the clean and noisy datasets. See the overall F1 scores in table 3 and a summary of results for all classes in figure 6. In this case, the performance decrease was smaller than when the network trained on clean data was used to classify noisy data. The network trained on the all noise ECG image dataset performed fairly consistently when classifying all other ECG image datasets.

Table 3.

Summary of overall network F1 scores for the ResNet-50 network trained using the all noise image dataset then tested using all other datasets. Values shown are the arithmetic mean and standard deviation across the five cross validation folds. Results shown for attractor (att) and scalogram (scl) images. Note that results for all noise are a duplication of data shown in table 4. The results for raw data are shown for comparison.


Figure 6.

Breakdown of ResNet-50 F1 scores by class for the network trained using the all noise dataset then tested using all other datasets. Here + is overall network F1, triangle is AF, circle is Normal and square is STD. Attractor and scalogram F1 scores are shown side by side, indicated by the background shading. From left to right, results are shown for clean, baseline wander, electrode movement and all three combined. (Online version in colour.)

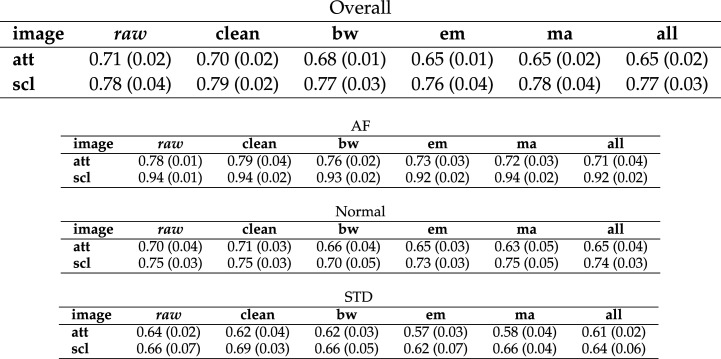

(e) Class comparison: training and test data from the same dataset

The F1 scores seen so far have been the macro averaged (overall network) F1 scores, that is the arithmetic mean of the F1 scores for each of the three classes individually. Individual class F1 scores for networks whose training and test data were taken from the same ECG dataset can be seen in figure 7. A summary of F1 scores for each class individually can be seen in table 4.

Figure 7.

Breakdown of ResNet-50 F1 scores by class when training and test data was taken from the same dataset. Here + is the overall network F1 score, triangle is AF, circle is Normal and square is STD. Attractor and scalogram F1 scores are shown side by side, indicated by the background shading. Results for clean data are shown in the left-hand columns, followed by results for baseline wander, electrode movement, motion artefact and all three combined. (Online version in colour.)

Table 4.

Summary of network F1 scores for the ResNet-50 network whose training and test data was taken from the same dataset. Values shown are the arithmetic mean and standard deviation across the five cross validation folds. Results shown for attractor (att) and scalogram (scl) images. From top to bottom the table shows results for: overall network performance on all three classes, for AF (atrial fibrillation) only, Normal only and STD (ST depression) only. Results with the raw dataset are included for comparison.


4. Discussion

Deep learning is a well-established and rapidly growing approach for ECG classification [1], where classification performance is often determined by both the quality of the training data used and the chosen model architecture. In this paper, a ResNet-50 architecture has been used to classify SPAR attractor and scalogram image transforms of an ECG signal. We have explored how robust the networks are to physiological ECG noise, and also how including or excluding physiological noise from the training data impacts robustness. The key findings with regard to choice of training data can be summarized as follows:

- (i) Train network using clean data if filtering of future unseen (test) data is known. A network trained and tested on one data type (clean or noisy) has better performance than a network trained on clean data and tested on noisy data or vice versa. Therefore, for example, if it is known that all data the network will have to classify in the future will be filtered, then use filtered data to train the network.

- (ii) Train network using noisy data if filtering of future unseen (test) data is unknown. A network trained on noisy data and tested on clean data has a smaller performance decrease than a network trained on clean data and tested on noisy data.

It is perhaps unsurprising that adding physiological ECG noise to the data on which the network is trained makes the network more robust and generalizable than a network trained on clean data alone, as adding (non-physiological) noise to training data is a known method for improving deep network robustness and preventing overfitting [22,23].

(a) Robustness

Noise and interference are common causes of ECG misclassification [2] and it is beneficial to gain an understanding of how physiological ECG noise affects deep network robustness. Here, we have demonstrated to what degree ECG classification performance was decreased in the presence of different physiological noise types, and found that electrode movement and motion artefact have a bigger impact on performance than baseline wander for attractor images, while networks trained using scalogram image transforms appear to be more robust (see table 4, overall network scores). The SPAR attractor method inherently factors out baseline wander (see [20] for details) which may account for the smaller performance decrease for baseline wander. This result for baseline wander may also be partially due to the fact that some of the ECG signals were short (less than 10 s) so only a minimal amount of baseline wander could be applied.

When the network trained on clean data was used to classify images from the different noisy datasets, performance measured by F1 score decreased minimally for both image types when baseline wander was applied (less than 0.04). When motion artefact, electrode movement or all types of noise combined were added to the input data, classification performance using the network trained on clean data decreased by approximately 0.1 for the scalograms and approximately 0.2 for the attractors. A possible explanation for the higher robustness of scalogram image transforms to the motion artefact and electrode movement noise could be that the relevant frequency information for these noise types is distinct from the frequency information used for classification in these images. We also note that performance for the raw data was very similar to that for the clean data in all cases, which is not surprising as most signals contained very little noise (figure 1).

Eventually, ECG classification networks may be used in a clinical setting and the type or level of noise in this setting will be unknown beforehand. In this situation, it is beneficial to have prior understanding of the robustness of a trained network. While the best performance was found using a network trained on data with the same type of noise as the input data, it is noteworthy that the ‘penalty’ of using a network trained on noisy data was much less than when using a network trained on clean data to classify noisy data (§3c and d). Interestingly, the classification of Normal ECG signals was most affected when using the network trained on clean data to classify noisy data (figure 5). Overall the network appears to be more robust to unseen noise on the input data when noise has been included in the training data.

While these results reveal interesting effects of noise on classification, figure 4 demonstrates the strong dependence on the choice of network and that further work is required in this area. Performance differences in the presence of noise for different networks suggest the need to test individual network plus dataset combinations for robustness and, perhaps more importantly, to develop datasets and techniques for evaluating robustness of networks. Work is underway in this area (see [24,25], for example).

Resistance to adversarial attacks is an increasingly desirable quality as deep learning models for ECG classification become more widely used, especially in a clinical setting. Imperceptible (to a human) changes in an ECG have been shown to cause misclassification by a deep network [25], but would likely have little effect on the image transforms. Adversarial training, where intentionally placed noise is used to generate additional input data, has been shown to increase network stability [26]. The use of ECG image transforms (rather than the raw signal) in deep learning models may be one way of increasing robustness of a network to adversarial attacks, but further work is required in this area to quantify how robust image transformation methods are to imperceptible changes on the original ECG, compared to methods using the original ECG.

(b) Electrocardiogram preprocessing

Prior to image transformation, the ECG signals were filtered to remove as much real physiological noise as possible before applying the noise types. As real ECG signals were used, the amount of original noise remaining in each clean signal before the noise was applied was different despite the same method being used to filter all ECG signals. One implication of this situation is that, while we can use results of this study to draw conclusions about the impact of various noise types individually, it should be remembered that there may be traces of other noise types in the underlying ECG signal in addition to the newly applied physiological noise. This result echoes the findings of the original physiological noise dataset, where it was noted that it was almost impossible to record electrode movement or motion artefact without baseline wander [16], suggesting that the results on electrode movement or motion artefact alone are less meaningful.

An alternative approach to the generation of the noisy datasets would be to use clean synthetic ECG signals and add the noise to these. This approach would ensure as far as possible that there is only one type of noise being applied to the ECG when that is intended. However, a synthetic ECG dataset may not be as representative of real ECG data and it may be more difficult to translate findings using a synthetic dataset than a real dataset. Further work is required in this area. Finally, other studies have shown a decrease in performance with increasing SNR [27]. Here, the physiological noise was applied with a small, fixed range for the SNR and further work is required to understand how noise at different SNR levels affects performance. In particular, with regard to our finding that including noise in the training data increases network robustness, it would be valuable to determine the levels of noise required.

(c) Image transforms

A notable difference in performance between the frequency-based scalogram image transforms and the morphology-based SPAR images was for AF, see figure 7, where the scalogram F1 score for AF was at least 0.15 higher than the attractor F1 score for all noise types. AF is characterized by irregular f waves, known as fibrillatory waves [13], which are expected to be more easily identifiable on the scalogram, suggesting that machine learning methods incorporating frequency-based techniques are better suited to identifying conditions characterized by frequency-based features such as fibrillatory waves. Among the criteria for diagnosis of AF is a heart rate above 100 bpm [13], and this information would be captured in the scalogram image but not in the attractor image, which factors out heart rate and focusses exclusively on the ECG waveform morphology. Future models that combine the attractor image with additional information such as heart rate may improve the performance of the attractor for AF.

By contrast, classification performance for STD using attractor images is similar to that for scalogram images, despite the attractor images having lower overall performance. STD is characterized by an ST segment below the baseline of the ECG, which affects the morphology of the ECG waveform. The attractor captures ECG waveform morphology in detail [20], which may explain why ST segment depression is identified comparably well using SPAR images.
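To make concrete why the attractor reflects waveform shape rather than rate or baseline level, the following is a minimal sketch of the delay-coordinate projection underlying SPAR, following the general construction described in the SPAR literature [19,20]. Three delay coordinates spaced by one third of the cycle length are projected onto the plane orthogonal to the direction (1, 1, 1). The function name `spar_projection`, the assumption that the cycle length in samples is already known, and the synthetic waveform are illustrative. The step that turns the projected points into a density image is omitted.

```python
import numpy as np


def spar_projection(signal, period_samples):
    """Return (v, w) coordinates of a three-point delay embedding with delay = period / 3."""
    x_all = np.asarray(signal, dtype=float)
    tau = int(round(period_samples / 3.0))
    if tau < 1 or x_all.size <= 2 * tau:
        raise ValueError("signal too short for the requested period")
    x = x_all[2 * tau:]      # x(t)
    y = x_all[tau:-tau]      # x(t - tau)
    z = x_all[:-2 * tau]     # x(t - 2 tau)
    # Projection onto the plane orthogonal to (1, 1, 1).
    v = (x + y - 2.0 * z) / np.sqrt(6.0)
    w = (x - y) / np.sqrt(2.0)
    return v, w


# Synthetic periodic waveform (not an ECG) sampled at an assumed 500 Hz, 1 s cycle.
fs = 500
t = np.arange(0, 10 * fs) / fs
beat = np.sin(2 * np.pi * 1.0 * t) + 0.4 * np.sin(2 * np.pi * 2.0 * t)

v0, w0 = spar_projection(beat, period_samples=fs)
v1, w1 = spar_projection(beat + 0.8, period_samples=fs)  # constant baseline offset added
offset_invariant = bool(np.allclose(v0, v1) and np.allclose(w0, w1))
```

Because the delay is tied to the cycle length, the same waveform shape traced at a different rate maps to the same region of the (v, w) plane, and a constant baseline offset cancels in both coordinates.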

Signals in the dataset were between 8 and 138 s long and this may have impacted classification performance. The SPAR image transformation method normalizes for signal length, whereas scalogram images for signals with different lengths appear different and can potentially capture information for a wider range of frequencies. It will be beneficial to understand the impact of signal length, and whether there is an optimal ECG signal length for detecting different cardiovascular disorders using different machine learning methods.

(d) . Utility and performance

Machine learning models for ECG classification are ultimately being developed to support diagnosis and hopefully lead to improved patient outcomes. Models that use ECG image transforms as opposed to the raw signal are less common [1] and more complex as they involve both image transformation and CNN training stages which may hinder their utility in a clinical setting. In that setting, methods such as machine learning using ECG intervals or even deep learning applied to the ECG signal itself may be more desirable. However, the work reported here identified possible benefits of the approach and developed some ideas that are more broadly applicable. Firstly, it presents an alternative approach to detecting cardiovascular conditions in an ECG signal that may be more robust to different physiological noise types. Secondly, as seen in §3e, different image transforms (i.e. frequency or morphology based) are better suited to classification of different conditions.

In terms of performance F1 scores, while it is difficult to make a head-to-head comparison of different deep networks’ performance, results found here are comparable to results in the literature. One model for detecting AF from ambulatory ECG had an F1 score of 0.83; ambulatory ECG is more likely to have noise, but the paper does not comment on the impact of this [28]. For the scalogram images, the best F1 scores for Normal and AF were 0.75 and 0.94, respectively. Another model used a one-dimensional CNN to classify ECG signals and gave F1 scores for Normal and AF of 0.92 and 0.81, respectively [29], although two things should be noted here. Firstly, the AF dataset was almost seven times smaller than the Normal dataset, which will reduce classification performance for AF [30]. Secondly, the ECG data was collected using a handheld device as opposed to the 12-lead data used in the present study. The device used to record the data should be taken into account when interpreting classification results.
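Since the comparison above rests on per-class F1 scores, a minimal sketch of how such scores follow from a confusion matrix may be useful. The counts below are invented for illustration and are not results from this study or from the cited works; `per_class_f1` is a hypothetical helper.

```python
import numpy as np


def per_class_f1(confusion):
    """Per-class F1 from a square confusion matrix (rows: true class, columns: predicted)."""
    confusion = np.asarray(confusion, dtype=float)
    true_pos = np.diag(confusion)
    predicted = confusion.sum(axis=0)  # column sums: how often each class was predicted
    actual = confusion.sum(axis=1)     # row sums: how often each class truly occurred
    denom = predicted + actual
    # F1 = 2TP / (2TP + FP + FN) = 2TP / (predicted + actual); set to 0 where undefined.
    return np.divide(2.0 * true_pos, denom, out=np.zeros_like(denom), where=denom > 0)


# Invented counts for three classes in the order Normal, AF, STD.
confusion = [[80, 5, 15],
             [4, 90, 6],
             [20, 10, 70]]
f1 = per_class_f1(confusion)
```

Because F1 combines precision and recall for each class separately, a class with few examples can score poorly even when overall accuracy is high, which is why the relative size of the AF and Normal datasets matters when comparing reported values.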

On a similar theme, the lead chosen to generate the ECG image transforms (lead II) contributed to the classification performance for the different classes. For example, while the raised heart rate (greater than 100 bpm) characteristic of AF can be seen on any of the 12 leads, the first chest lead is often the best lead to spot the irregular fibrillatory waves [13]. In addition, while STD is often present in multiple leads, it is not always present in lead II [13] and it would be beneficial to evaluate the classification performance of different ECG leads. The ECG preprocessing may have altered ST changes on the ECG to some extent [31] which would also contribute to the low F1 scores for STD.

Before any model of this sort could be reliably implemented in the clinic, a better understanding of how it has chosen to classify signals is required. Interpretability tools such as LIME [32] and layer-wise relevance propagation [33] are being implemented to understand how these models classified the ECG image transforms, which will additionally help to clarify which regions of the image (i.e. a particular frequency or morphological feature) were most helpful in assigning a particular pathology class label.

(e) . Limitations

Regarding the generation of the noisy ECG datasets, it should be noted that filtering can perform differently on healthy or pathological ECG signals [34]; there may therefore have been a different amount of noise on the Normal, AF and STD signals even before the new physiological noise was applied. In addition, the paper describing the generation of the physiological noise used noted that it was almost impossible to record motion artefact without baseline wander [16], suggesting that the results on electrode movement or motion artefact alone are less meaningful.

There is controversy around the use of ECG transforms generated using the wavelet transform (including scalograms), due to varying frequency resolutions, meaning patterns within an individual subject are identified rather than between subjects [1]. Future work to evaluate the impact of normalization and scalogram limit fixing will be beneficial in this area.
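One reading of the distinction between per-image scaling and fixed scalogram limits can be sketched as follows. This is an illustration under stated assumptions, not the processing used in this study: the small arrays stand in for scalogram magnitudes, and the limits `vmin` and `vmax` are hypothetical values that would in practice be chosen from the training data.

```python
import numpy as np


def scale_per_image(mag):
    """Min-max scale one image to [0, 1]; absolute magnitude information is lost."""
    mag = np.asarray(mag, dtype=float)
    span = mag.max() - mag.min()
    return (mag - mag.min()) / span if span > 0 else np.zeros_like(mag)


def scale_fixed_limits(mag, vmin, vmax):
    """Clip to shared limits and scale to [0, 1]; equal magnitudes map to equal intensities."""
    mag = np.asarray(mag, dtype=float)
    return (np.clip(mag, vmin, vmax) - vmin) / (vmax - vmin)


# Two toy "scalograms" with the same pattern but different overall magnitude.
weak = np.array([[0.0, 0.1], [0.2, 0.4]])
strong = 5.0 * weak

same_after_per_image = bool(np.allclose(scale_per_image(weak), scale_per_image(strong)))
weak_fixed = scale_fixed_limits(weak, vmin=0.0, vmax=2.0)
strong_fixed = scale_fixed_limits(strong, vmin=0.0, vmax=2.0)
```

With per-image scaling the two inputs become indistinguishable, whereas shared limits preserve the difference in magnitude between recordings at the cost of clipping values outside the chosen range.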

Finally, transfer learning models are very sensitive to the choice of network hyperparameters such as the learning rate and number of training epochs, so it is unwise to take the values of the performance metrics as definitive. However, these results give insight into how robust these networks are when ECG data with physiological noise is classified. Future work is required to investigate how different network and dataset combinations perform in the presence of ECG noise. There was a slight class imbalance which may have impacted performance; augmented datasets or a custom loss function could address this imbalance in future work.
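As one example of the kind of custom loss function that could address class imbalance, the sketch below weights the cross-entropy by inverse class frequency. It is a generic illustration rather than a method evaluated in this study. The helper names and the toy label counts are assumptions, and every class is assumed to occur at least once in the labels.

```python
import numpy as np


def inverse_frequency_weights(labels, n_classes):
    """Weights proportional to 1 / class frequency; a balanced set gives all ones."""
    counts = np.bincount(labels, minlength=n_classes).astype(float)
    # Assumes every class occurs at least once, otherwise a count of zero divides here.
    return counts.sum() / (n_classes * counts)


def weighted_cross_entropy(probs, labels, weights):
    """Weighted mean negative log-probability assigned to the true class."""
    probs = np.asarray(probs, dtype=float)
    labels = np.asarray(labels)
    picked = probs[np.arange(labels.size), labels]
    sample_w = weights[labels]
    return float(np.sum(sample_w * -np.log(picked)) / np.sum(sample_w))


# Toy imbalanced labels: 6 of class 0, 3 of class 1, 1 of class 2.
labels = np.array([0] * 6 + [1] * 3 + [2] * 1)
weights = inverse_frequency_weights(labels, n_classes=3)

# Uninformative predictions (probability 1/3 for every class) as a sanity check.
uniform_probs = np.full((labels.size, 3), 1.0 / 3.0)
loss = weighted_cross_entropy(uniform_probs, labels, weights)
```

With these weights, errors on the rarest class contribute most to the loss, which counteracts the tendency of a network to favour the majority class.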

5. Conclusion

We have shown that ECG image transform classification using a CNN is impacted by physiological noise, in particular by electrode movement and motion artefacts. We have also found that different image transform methods may be more appropriate for classifying different cardiovascular conditions, for example a frequency-based image for an ECG frequency-based condition. With regard to robustness, we found that a network trained on clean data finds it harder to classify noisy data than a network trained on noisy data does to classify clean data. This aligns well with empirical findings that deep network performance is improved by the addition of noise to the training data. Finally, when the future reliability and robustness of a machine learning model used to detect cardiovascular conditions in ECG signals is being evaluated, attention should be paid to the level and type of noise in the data used to train the network.

Acknowledgements

Thanks to Claudia Nagel from Karlsruhe Institute of Technology for advice on the ECGdeli software and help obtaining the Lund noise model code. Thanks to the University of Lund for permission to use the noise model and for providing the code. Thanks to Spencer Thomas from the National Physical Laboratory for advice on transfer learning. Thanks to Frederic Brochu from the National Physical Laboratory for advice on parallelizing the code and HPC guidance.

Data accessibility

Data used was extracted from a larger open source dataset available as part of the 2020 PhysioNet/Computing in Cardiology Challenge: Perez Alday EA et al. [12]. https://physionetchallenges.github.io/2020/.

Authors' contributions

J.V. conceived of and designed the study, ran transfer learning and prepared the first draft of the manuscript. P.J.A. generated the noisy signals and ECG image datasets and edited the manuscript. A.S. provided input for transfer learning. J.V., P.J.A., P.M.H. and A.S. developed the noise model. P.J.A., P.M.H. and N.S. were involved in funding acquisition. All authors provided input on the shape of the study and the manuscript. All authors read and approved the manuscript for publication.

Competing interests

P.J.A. has a patent WO2015121679A1 ‘Delay coordinate analysis of periodic data’ which covers the foundations of the SPAR method used in this paper.

Funding

This project 18HLT07 MedalCare has received funding from the EMPIR programme co-financed by the Participating States and from the European Union’s Horizon 2020 research and innovation programme.

References

- 1. Hong S, Zhou Y, Shang J, Xiao C, Sun J. 2020. Opportunities and challenges of deep learning methods for electrocardiogram data: a systematic review. Comput. Biol. Med. 122, 103801. (doi:10.1016/j.compbiomed.2020.103801)

- 2. Luo S, Johnston P. 2010. A review of electrocardiogram filtering. J. Electrocardiol. 43, 486-496. (doi:10.1016/j.jelectrocard.2010.07.007)

- 3. Ribeiro AH et al. 2020. Automatic diagnosis of the 12-lead ECG using a deep neural network. Nat. Commun. 11, 1-9. (doi:10.1038/s41467-020-16172-1)

- 4. ImageNet. See http://www.image-net.org.

- 5. Aurore L, Mincholé A, Martínez JP, Laguna P, Rodriguez B. 2017. Computational techniques for ECG analysis and interpretation in light of their contribution to medical advances. J. R. Soc. Interface 15, 20170821. (doi:10.1098/rsif.2017.0821)

- 6. Deng J, Dong W, Socher R, Li L-J, Li K, Fei-Fei L. 2009. ImageNet: a large-scale hierarchical image database. In 2009 IEEE Conf. on Computer Vision and Pattern Recognition, Miami, FL, 20–25 June 2009, pp. 248–255.

- 7. Byeon YH, Pan SB, Kwak KC. 2019. Intelligent deep models based on scalograms of electrocardiogram signals for biometrics. Sensors 19, 935. (doi:10.3390/s19040935)

- 8. Sun W, Zeng N, He Y. 2019. Morphological arrhythmia automated diagnosis method using gray-level co-occurrence matrix enhanced convolutional neural network. IEEE Access 7, 67 123-67 129. (doi:10.1109/ACCESS.2019.2918361)

- 9. Mathunjwa BM, Lin Y-T, Lin C-H, Abbod MF, Shieh J-S. 2021. ECG arrhythmia classification by using a recurrence plot and convolutional neural network. Biomed. Signal Proces. 64, 102262. (doi:10.1016/j.bspc.2020.102262)

- 10. Li Y et al. 2018. Combining convolutional neural network and distance distribution matrix for identification of congestive heart failure. IEEE Access 6, 39 734-39 744. (doi:10.1109/ACCESS.2018.2855420)

- 11. Aston PJ, Lyle JV, Bonet-Luz E, Huang CLH, Zhang Y, Jeevaratnam K, Nandi M. 2019. Deep learning applied to attractor images derived from ECG signals for detection of genetic mutation. Comput. Cardiol. 45, 097. (doi:10.22489/cinc.2019.097)

- 12. Perez Alday EA, Gu A, Shah A, Liu C, Sharma A, Seyedi S, Bahrami Rad A, Reyna M, Clifford G. 2020. Classification of 12-lead ECGs: the PhysioNet - Computing in Cardiology Challenge 2020 (version 1.0.1). PhysioNet.

- 13. Goldberger AL, Goldberger ZD, Shvilkin A. 2018. Goldberger’s clinical electrophysiology: a simplified approach, 9th edn. Philadelphia, PA: Elsevier Inc.

- 14. Pollehn T, Brady WJ, Perron AD, Morris F. 2002. The electrocardiographic differential diagnosis of ST segment depression. Emerg. Med. J. 19, 129-135. (doi:10.1136/emj.19.2.129)

- 15. Pilia N, Nagel C, Lenis G, Becker S. 2020. ECGdeli - An open source ECG delineation toolbox for MATLAB. See https://github.com/KIT-IBT/ECGdeli.

- 16. Moody GB, Muldrow WK, Mark RG. 1984. A noise stress test for arrhythmia detectors. Comput. Cardiol. 11, 381-384.

- 17. Goldberger AL et al. 2003. PhysioBank, PhysioToolkit, and PhysioNet: components of a new research resource for complex physiologic signals. Circ. 101, e215-e220. (doi:10.1161/01.CIR.101.23.e215)

- 18. Petrėnas A, Marozas V, Solos̆enko A, Kubilius R, Skibarkienė J, Oster J, Sörnmo L. 2017. Electrocardiogram modeling during paroxysmal atrial fibrillation: application to the detection of brief episodes. Physiol. Meas. 38, 2058. (doi:10.1088/1361-6579/aa9153)

- 19. Aston PJ, Christie MI, Huang YH, Nandi M. 2018. Beyond HRV: Attractor reconstruction using the entire cardiovascular waveform data for novel feature extraction. Phys. Meas. 39, 024001. (doi:10.1088/1361-6579/aaa93d)

- 20. Nandi M, Venton J, Aston PJ. 2018. A novel method to quantify arterial pulse waveform morphology: attractor reconstruction for physiologists and clinicians. Physiol. Meas. 39, 104008. (doi:10.1088/1361-6579/aae46a)

- 21. Meek S, Morris F. 2002. ABC of clinical electrocardiography. Introduction. I-Leads, rate, rhythm, and cardiac axis. BMJ (ed. Clinical Research) 324, 415-418. (doi:10.1136/bmj.324.7334.415)

- 22. Goodfellow I, Bengio Y, Courville A. 2016. Deep learning book. Cambridge, MA: MIT Press.

- 23. An G. 1996. The effects of adding noise during backpropagation training on a generalisation performance. Neural Comput. 8, 643-674. (doi:10.1162/neco.1996.8.3.643)

- 24. Strodthoff N, Wagner P, Schaeffter T, Samek W. 2020. Deep learning for ECG analysis: benchmarks and insights from PTB-XL. (http://arxiv.org/abs/2004.13701v1 [cs.LG]).

- 25. Han X, Hu Y, Foschini L, Chinitz L, Jankelson L, Ranganath R. 2020. Deep learning models for electrocardiograms are susceptible to adversarial attack. Nat. Med. 26, 360-363. (doi:10.1038/s41591-020-0791-x)

- 26. Kurakin A, Goodfellow IJ, Bengio S. 2017. Adversarial machine learning at scale. (http://arxiv.org/abs/1611.01236v2 [cs.CV]).

- 27. Oster J, Clifford GD. 2015. Impact of the presence of noise on the RR interval-based atrial fibrillation detection. J. Electrocardiol. 48, 947-951. (doi:10.1016/j.jelectrocard.2015.08.013)

- 28. Hannun AY, Rajpurkar P, Haghpanahi M, Tison GH, Bourn C, Turakhia MP, Ng AY. 2019. Cardiologist-level arrhythmia detection and classification in ambulatory electrocardiograms using a deep neural network. Nat. Med. 25, 65-69. (doi:10.1038/s41591-018-0268-3)

- 29. Goodfellow SD et al. 2018. Towards understanding ECG rhythm classification using convolutional neural networks and attention mappings. Proc. 3rd ML Healthc. Conf. 85, 83-101.

- 30. Johnson JM, Khoshgoftaar TM. 2019. Survey on deep learning with class imbalance. J. Big Data 6, 1-54. (doi:10.1186/s40537-018-0162-3)

- 31. Lenis G, Pilia N, Loewe A, Schulze WHW, Dössel O. 2017. Comparison of baseline wander removal techniques considering the preservation of ST changes in the ischemic ECG: a simulation study. Comput. Math. Method M. 2017, 9295029. (doi:10.1155/2017/9295029)

- 32. Ribeiro MT, Singh S, Guestrin C. 2016. ‘Why should I trust you?’: Explaining the predictions of any classifier. Proceedings of the 22nd ACM SIGKDD Int. Conf. on Knowledge Discovery and Data Mining, San Francisco, CA, 13–17 August 2016.

- 33. Bach S, Binder A, Montavon G, Klauschen F, Müller KR, Samek W. 2015. On pixel-wise explanations for non-linear classifier decisions by layer-wise relevance propagation. PLoS ONE 10, 1-46. (doi:10.1371/journal.pone.0130140)

- 34. Yang M, Liu B, Zhao M, Li F, Wang G, Zhou F. 2013. Normalizing electrocardiograms of both healthy persons & cardiovascular disease patients for biometric authentication. PLoS ONE 8, e71523. (doi:10.1371/journal.pone.0071523)
