Abstract

A novel intelligent navigation technique for accurate image-guided COVID-19 lung biopsy is presented, which systematically combines augmented reality (AR), customized haptic-enabled surgical tools, and a deep neural network to achieve customized surgical navigation. Clinical data from 341 COVID-19-positive patients, together with a negative control group of 1598, were collected for model synergy and evaluation. Biomechanical force data from the experiment are fed to a WPD-CNN-LSTM (WCL) model to learn a new patient-specific COVID-19 surgical model, and a ResNet is employed for intraoperative force classification. To boost user immersion and improve the user experience, intraoperative guiding images are combined with the haptic-AR navigational view. Furthermore, a 3-D user interface (3DUI), including all requisite surgical details, was developed with a guaranteed real-time response. Twenty-four thoracic surgeons were invited to objective and subjective experiments for performance evaluation. The root-mean-square error of the proposed WCL model is 0.0128, and the classification accuracy is 97%, which demonstrates that the AR with deep learning (DL) intelligent model outperforms the existing perception navigation techniques. This article presents a novel framework for interventional surgical integration for COVID-19 and opens new research on the integration of AR, haptic rendering, and deep learning for surgical navigation.

Keywords: AR-based, COVID-19, lung biopsy, surgical navigation, WPD-CNN-LSTM (WCL) model

I. Introduction

Since January 2020, a series of unexplained cases of pneumonia with high infection and mortality rates have been discovered in China [1]–[3]. Fever (78%), cough (76%), dyspnea (55%), and muscle pain (44%) were considered the typical symptoms of coronavirus disease 2019 (COVID-19), while laboratory tests found that 25% of infected patients had leukopenia and 63% had lymphocytopenia [4]. However, various atypical cases with nonspecific chest computerized tomography (CT) findings and negative results of the nucleic acid amplification test (NAAT) for 2019-nCoV have also been reported by clinicians [5]. Since very few autopsy studies have been reported, pathological characterization and a novel, reliable biopsy diagnostic strategy are urgently required, which may help to interrupt the further spread of COVID-19 [6], [7].

Because of the high false-negative diagnosis rate, clinicians in China have already combined multimodal data with RT-PCR in the confirmed diagnosis process. The CT imaging findings were variable and nonspecific, including consolidation, bilateral and peripheral disease, linear opacities, and a “crazy-paving” pattern [8]. We reasonably believe that the current diagnosis of COVID-19 is deficient and urgently requires an effective auxiliary diagnosis scheme. The recent advancements in AI have provided numerous tools and practical solutions [9]. Although the implementation of clinical-decision support models for medical diagnosis faces challenges with reliability and interpretability, it is worth attention in the current difficult situation [10], [11].

In this article, inspired by the aforementioned approaches, an AI-based implementation is introduced into the intraoperative guiding step to assist image-guided COVID-19 lung biopsy. A new haptic augmented reality (AR) enabled guiding strategy is proposed for precise and reliable surgery. A customized deep learning algorithm is developed to predict the biopsy procedure from the time series. The three main contributions of this article are summarized as follows.

1) A novel intelligent hybrid model that combines a WPD-CNN-based long short-term memory (LSTM) network with ResNet is proposed for surgical guidance in COVID-19 lung biopsy.

2) A haptic-AR interventional navigation prototype has been developed for the demonstration and evaluation of the new lung biopsy techniques.

3) Subjective and objective evaluations of the prototype have been conducted by thoracic surgeons to assess the immersion and user experience.

II. Related Work

A. Deep Learning Based COVID-19 Diagnostic Strategies

The recent advancements in AI have provided numerous tools and practical solutions for the detection of COVID-19 [12], [13]. Although the implementation of clinical-decision support models for medical diagnosis faces challenges with reliability and interpretability, such models deserve attention in the current difficult situation. Wang and Song et al. [14] developed a novel automatic CT image analysis system to assist in the diagnosis and prognosis of COVID-19 [15]. The predicted score is above 0.8. The system provides reasonable clues about its judgment factors, which helps doctors make a diagnosis. Abdel-Basset and Chang [16] presented a deep learning-based X-ray image detection method for patients with coronavirus infection, using a ResNet50+SVM model. Ozturk et al. [17] designed the DarkCovidNet architecture. A total of 1125 images were used to build the model, and the reported accuracy is 98.08% and 87.02% for binary and multiclass classification, respectively. Apart from that, data analytics and visualization for COVID-19 have also been studied [18], [19]. Hemdan et al. [20] proposed COVIDX-Net, in which the VGG19 and DenseNet201 models had the highest accuracy (90%). These two models were applied in a CAD system to identify the patient's COVID-19 status from X-ray images. Gozes et al. [21] developed a lung CT detection platform that can be used to assess disease progression accurately and rapidly and to guide treatment and patient management. Furthermore, Abdel-Basset et al. [22] introduced an IoT-based medical decision model to help doctors make real-time decisions for intelligent therapy.

B. AR-Haptic-Enabled Intraoperative Navigation

Minimally invasive image-guided surgery (IGS), with its light trauma and rapid recovery time, has brought a significant revolution to the field of biopsy and interventional surgery. Medical navigation systems in IGS suffer from poor ergonomics, mainly due to the lack of depth perception and detailed haptic feedback. Apart from the visual perception compensated by AR navigation, haptic assistance has been preliminarily attempted in remote robotic surgery (RRS) for medical applications. Lung biopsy, the routine diagnostic procedure in the examination of lung diseases, shows a high misoperation rate in clinical manipulation due to excessive puncture force, which may lead to irreparable damage to the patient's lung. Citardi et al. [23] focused on kinesthetic feedback, and Yi et al. [24] found that tactile feedback could also be a solution in the RRS system. Beyond the haptics explored in RRS, a few haptic navigation approaches in the operating room have also been reported [25]. In the area of open surgical navigation, Wei et al. [27] introduced vibration feedback for surgical instruments for route navigation. In a similar study, Jia and Pan [28] employed such haptic-enabled modalities for resection surgery navigation; furthermore, they improved the laparoscopic surgical simulator with haptic feedback and compared the task execution speed and accuracy under visual feedback, haptic feedback, and combined feedback. Coles et al. [29] also reviewed haptic navigation in conventional surgery and reached the same result. The results from these domains, as well as from RRS applications, are inspiring: haptic, visual, and multimodal perceptual feedback may find a joint point in a geographic information system (GIS) COVID-19 lung biopsy navigation application.

III. New System Design

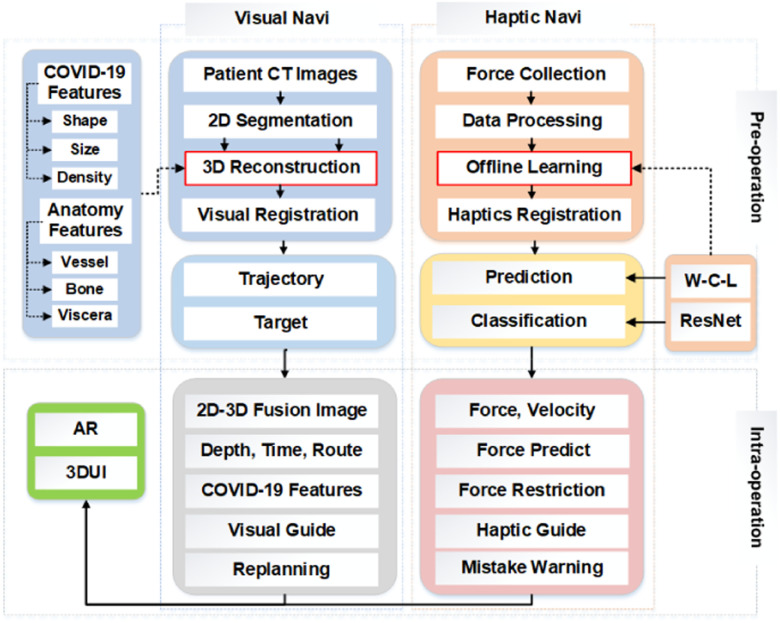

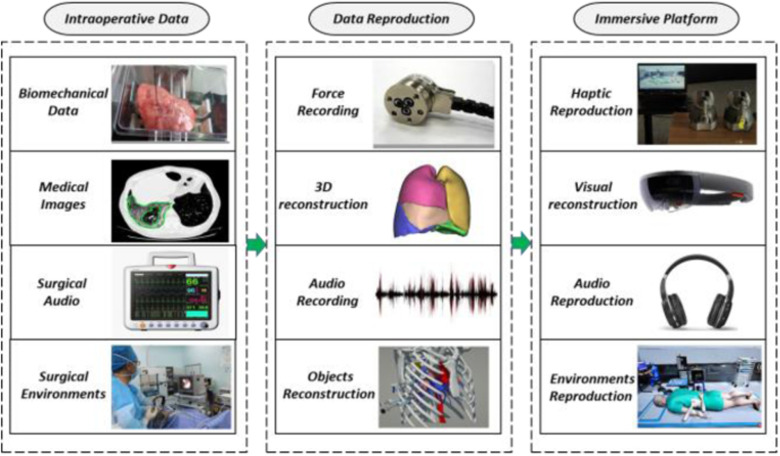

The new haptic-AR navigation framework for COVID-19 lung biopsy has two main procedures, preoperation and intraoperation with deep learning, as shown in Fig. 1. This section covers the implementation details of both the visual and the haptic navigation. A new WPD-CNN-based LSTM model is developed to estimate the lung biopsy accuracy, and a deep residual network (ResNet) is employed for viscera classification during the biopsy. After that, we present the system evaluation approaches, which assess the performance of different kinds of feedback in interventional therapy navigation.

Fig. 1.

Procedures of the haptic-AR-guided COVID-19 lung biopsy simulation; both visual and haptic navigation clues are addressed in the framework. A WPD-CNN-based LSTM model is developed to estimate the lung biopsy accuracy, and a deep ResNet is employed for viscera classification during the biopsy. The 3-D user interface (3DUI) and AR are designed as the real-time visual display terminal.

A. Learning-Based Preoperation for Lung Biopsy

The preoperative setup includes four modules: a deep training module for classification and prediction during the lung biopsy, COVID-19 patient-specific CT 3-D rendering, training data collection and analysis, and the biopsy route planning algorithm with system integration.

1). Designing Deep Training Module

We design a hybrid deep learning model to predict and classify the puncture force at the next moment during the lung biopsy. The wavelet packet decomposition (WPD) is applied in the data preprocessing. In its standard form, the wavelet packet function is defined as

$$W_{j,k}^{n}(t) = 2^{j/2}\, W^{n}\!\left(2^{j} t - k\right)$$

where $j$ is the scale parameter, $k$ is the translation parameter, and $n$ is the oscillation parameter.
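The two-level decomposition into four subseries can be illustrated with a minimal sketch. The source does not state which wavelet basis is used, so the Haar wavelet here is an assumption chosen for brevity, and the force samples are made up. Note also that this textbook version halves the length at each level, which may differ from how the subseries are prepared in the actual pipeline.

```python
import math

def haar_split(signal):
    """One Haar analysis step: return (approximation, detail), each half as long."""
    if len(signal) % 2:
        raise ValueError("signal length must be even")
    s = 1.0 / math.sqrt(2.0)
    approx = [(signal[i] + signal[i + 1]) * s for i in range(0, len(signal), 2)]
    detail = [(signal[i] - signal[i + 1]) * s for i in range(0, len(signal), 2)]
    return approx, detail

def wpd_two_level(signal):
    """Two-level wavelet packet decomposition: both branches are split again,
    which yields four subseries (unlike the plain wavelet transform, which
    only splits the approximation branch)."""
    a, d = haar_split(signal)
    aa, ad = haar_split(a)
    da, dd = haar_split(d)
    return [aa, ad, da, dd]

# Made-up force samples (N); the length must be divisible by 4.
force = [0.10, 0.12, 0.15, 0.11, 0.40, 0.42, 0.39, 0.41]
subseries = wpd_two_level(force)
```

Because the Haar filters are orthonormal, the total energy of the four subseries equals the energy of the input, which is a convenient sanity check for any implementation.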

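In addition to the features of the different frequency signals produced by WPD, the force features are also extracted by a convolutional neural network (CNN). LSTM was proposed to address the long-term and short-term dependence problem of the recurrent neural network (RNN); it has been widely applied and demonstrates satisfactory performance on tasks such as time-series processing. In its standard form, the LSTM cell is formulated as follows:

$$
\begin{aligned}
f_t &= \sigma\left(W_f [h_{t-1}, x_t] + b_f\right) \\
i_t &= \sigma\left(W_i [h_{t-1}, x_t] + b_i\right) \\
\tilde{c}_t &= \tanh\left(W_c [h_{t-1}, x_t] + b_c\right) \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t \\
o_t &= \sigma\left(W_o [h_{t-1}, x_t] + b_o\right) \\
h_t &= o_t \odot \tanh(c_t)
\end{aligned}
$$

where $x_t$ is the input at time $t$, $h_t$ and $c_t$ are the hidden and cell states, $f_t$, $i_t$, and $o_t$ are the forget, input, and output gates, $W$ and $b$ are the weights and biases, $\sigma$ is the sigmoid function, and $\odot$ is the element-wise product.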
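To make the gating mechanism concrete, the following sketch runs a single-unit LSTM cell in its standard formulation over a few samples. It is an illustration only: the weights are hypothetical constants rather than trained values, the input is scalar, and the force samples are made up.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, p):
    """One step of a single-unit LSTM; p maps gate name -> (w_x, w_h, b)."""
    def pre(gate):
        w_x, w_h, b = p[gate]
        return w_x * x + w_h * h_prev + b

    f = sigmoid(pre("f"))          # forget gate: how much old cell state to keep
    i = sigmoid(pre("i"))          # input gate: how much new candidate to admit
    o = sigmoid(pre("o"))          # output gate: how much cell state to expose
    c_tilde = math.tanh(pre("c"))  # candidate cell state
    c = f * c_prev + i * c_tilde   # updated cell state
    h = o * math.tanh(c)           # updated hidden state
    return h, c

# Hypothetical weights (w_x, w_h, b), identical for every gate for simplicity.
params = {gate: (0.5, 0.1, 0.0) for gate in ("f", "i", "o", "c")}

h, c = 0.0, 0.0
for x in [0.10, 0.12, 0.15, 0.11]:  # made-up force samples
    h, c = lstm_step(x, h, c, params)
```

The additive update of the cell state is what lets information persist over many time steps, which is the property that motivates using LSTM rather than a plain RNN for the force series.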

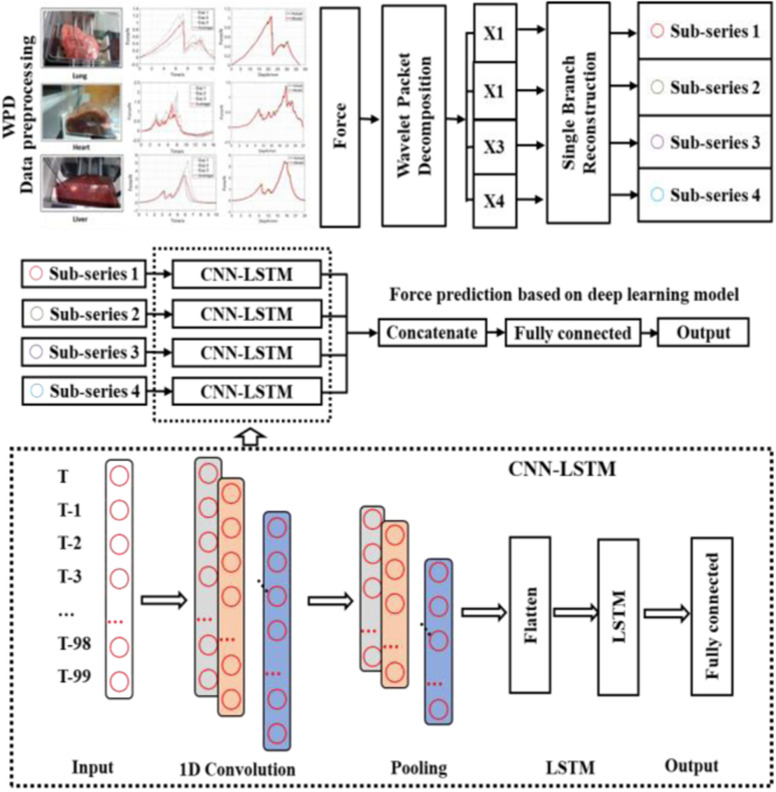

Fig. 2 shows the new WPD-CNN-LSTM (WCL) model proposed for the real-time haptic force feedback analysis. The process has three key steps, which are as follows.

1) Collect the measured force data in the lung and decompose the original input series into four subseries by two-level WPD. The sequence length is 100: the predicted data are generated from the previous 100 time steps within four channels. In this article, each subseries has dimension (9401, 100).

2) CNN is applied to extract the features of every subseries. The four subseries matrices are fed into four independent CNN channels. The output of the $i$th channel is denoted as $F_i$.

3) After feature extraction using WPD and CNN, LSTM is applied to conduct the prediction. In Fig. 2, four independent LSTMs are designed, and their outputs are concatenated and input to the fully connected layer to complete the final prediction. This can be formulated as

$$
\begin{aligned}
F_i &= \mathrm{CNN}_i(x_i), \\
h_i &= \mathrm{LSTM}_i(F_i), \quad i = 1, \dots, 4, \\
\hat{y} &= \mathrm{FC}\left(h_1 \oplus h_2 \oplus h_3 \oplus h_4\right)
\end{aligned}
$$

where $F_i$ represents the feature representation of WPD-CNN, $\mathrm{LSTM}_i$ denotes the LSTM layer, $x_i$ denotes the subseries, $h_i$ represents the output of the corresponding LSTM layer, $\oplus$ denotes the concatenation operator, $\mathrm{FC}$ denotes the fully connected layer, and $\hat{y}$ denotes the output.

Fig. 2.

Diagram of our real-time WPD- and CNN-based LSTM predictive model for COVID-19 surgical force guidance. WPD is used for the data preprocessing, and CNN and LSTM are employed for the haptic prediction.
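Step 1 predicts the next force value from the previous 100 time steps. A minimal sketch of how such input windows and next-step targets could be built is given below. The series length of 9501 is an assumption chosen only so that the result matches the (9401, 100) dimension quoted in the text; the source does not state the raw length, and the ramp signal is synthetic.

```python
def make_windows(series, length=100):
    """Return (inputs, targets): each input holds `length` consecutive samples,
    and its target is the sample that immediately follows the window."""
    inputs, targets = [], []
    for start in range(len(series) - length):
        inputs.append(series[start:start + length])
        targets.append(series[start + length])
    return inputs, targets

# Synthetic ramp standing in for one WPD subseries (assumed length 9501).
series = [0.001 * t for t in range(9501)]
X, y = make_windows(series, length=100)
print(len(X), len(X[0]))  # 9401 100
```

Each of the four subseries would be windowed in the same way before being fed to its own CNN channel.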

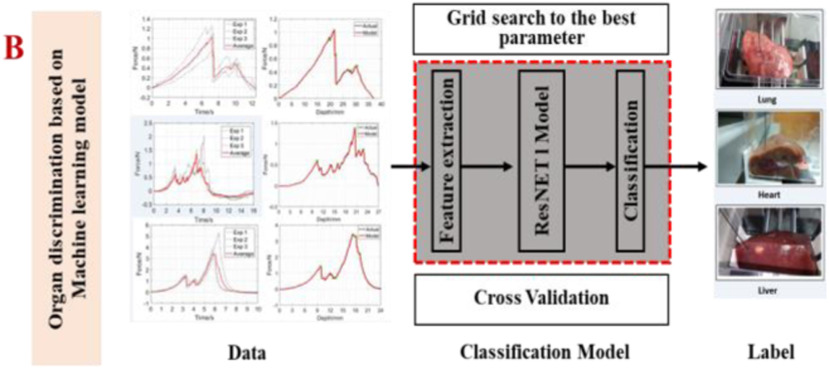

Meanwhile, the ResNet and common machine learning models are used to recognize different organs according to the force feedback captured by the sensors. The ResNet addresses the network degradation that occurs as the number of layers increases by adding a shortcut connection in each residual block, which lets the gradient flow directly to the bottom layers. The residual block is described as

$$\mathbf{y} = \mathrm{Block}_{k}(\mathbf{x}) + \mathbf{x}$$

where $\mathrm{Block}_{k}$ denotes the convolutional block with $k$ filters, $\mathbf{x}$ is the block input, and $\mathbf{y}$ is the block output. Finally, the classification result is output through a fully connected layer, as shown in Fig. 3.
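The shortcut connection can be illustrated with a small one-dimensional sketch. It uses a single channel and hand-written kernels, whereas a real ResNet block has many learned filters and normalization layers, so the function and kernel names here are illustrative assumptions rather than the configuration used in the experiments.

```python
def conv1d_same(x, kernel):
    """1-D cross-correlation with zero padding; output length equals input length.
    Assumes an odd kernel length."""
    pad = len(kernel) // 2
    padded = [0.0] * pad + list(x) + [0.0] * pad
    return [sum(k * padded[i + j] for j, k in enumerate(kernel))
            for i in range(len(x))]

def relu(x):
    return [max(0.0, v) for v in x]

def residual_block(x, kernel1, kernel2):
    """y = ReLU(F(x) + x), where F is conv -> ReLU -> conv."""
    fx = conv1d_same(relu(conv1d_same(x, kernel1)), kernel2)
    return relu([a + b for a, b in zip(fx, x)])

signal = [1.0, -2.0, 3.0]
# With all-zero kernels F(x) vanishes, so the block reduces to ReLU(x):
# the input still reaches the output through the shortcut.
passthrough = residual_block(signal, [0.0, 0.0, 0.0], [0.0, 0.0, 0.0])
```

The zero-kernel case shows why stacking such blocks does not degrade the signal: even when the convolutional branch contributes nothing, the shortcut carries the input forward.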

Fig. 3.

ResNet used as the real-time force feedback classification model. After the data collection from the biomechanics experiment, the parameters are chosen for the ResNet classification algorithm.

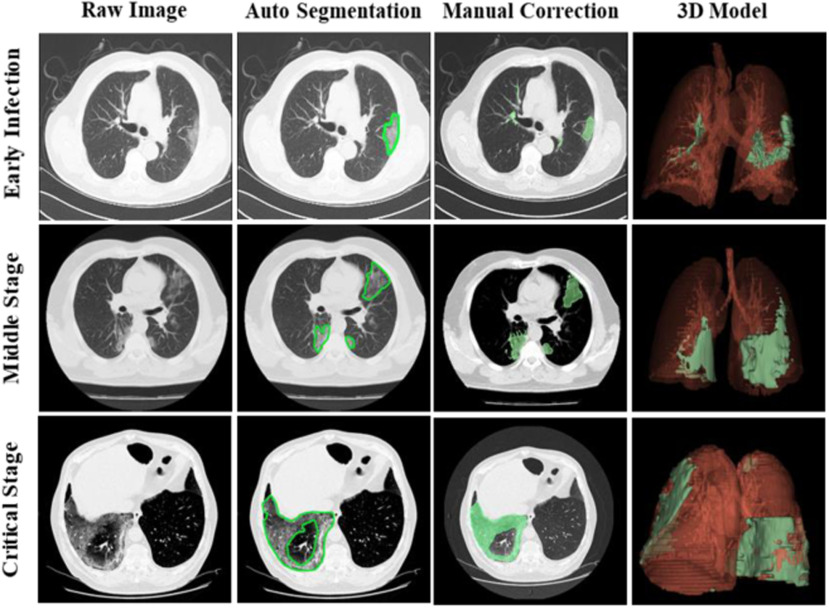

2). COVID-19 Patient-Specific CT 3-D Rendering

The CT images for the visual rendering are provided by the hospital; Fig. 4 shows some example images. The sample case for the clinical stage is a 55-year-old male who presented to the hospital in Kunming. He had a two-day history of pharyngalgia, headache, rhinorrhea, and fever. He had no contact with any COVID-19 patients. Apart from a history of hypertension, the patient had smoked for 30 years. The patient's chest CT scan (February 8, 2020) demonstrated a unilateral peripheral distribution of ground-glass opacities, as shown in Fig. 4. First, we imported the patients' CT images, which are in the digital imaging and communications in medicine (DICOM) format, to reconstruct a surgical simulation demo. After that, four professional thoracic surgeons manually corrected the COVID-19 infection region of interest, which is shown in the third column of Fig. 4. The 3-D mesh model is reconstructed with the marching cubes algorithm. We implemented this process with SDKs such as VTK, CTK, ITK, and IGSTK for the visual rendering.

Fig. 4.

Visual rendering procedures for three COVID-19 patients from the hospital dataset: the first row is a newly infected patient, the second row is a middle-stage patient, and the third row is a critical-stage patient in mortal danger. After the manual correction by the thoracic surgeons, the reconstructed 3-D model of the patient-specific lung structure and the COVID-19 infection region (green part) is shown in the fourth column.

Finally, we employed shader programming to paint the vertex colors. The virtual COVID-19 patient is equipped with various triggers, which are designed to respond when the biopsy puncture touches the multiple layers of the lung demo. This helps judge which rib (costa) will be punctured by the trocar needle inserted along the current route.

3). Training Data Collection and Analysis

Following the aforementioned haptic navigation framework, which relies on highly immersive tactile feedback, a biomechanics platform is designed to collect haptic information from porcine viscera (heart, liver, and lung) obtained from a butcher. Classification and prediction are applied to verify the haptic navigation technology for COVID-19 lung biopsy. Specifically, in interventional surgery, the most common misoperation is a vascular puncture. Besides, a mistaken biopsy of the wrong target is another common situation. Six main parts make up the customized intervention needle. With the patients' consent and without affecting the surgical operation, an 18G COOK trocar needle with a miniature force sensor is employed as the force collection module. Apart from that, the data recording module with the servo motor system and the linear and vertical guideways have also been implemented.

4). Biopsy Route Plan Algorithm and System Integration

In automobile navigation, the GPS provides several alternative routes for the driver to choose from, each based on a different emphasis, such as the shortest distance, congestion avoidance, or the shortest time. The same holds for surgical navigation; however, avoiding dangerous areas, such as vessels or dense neurological areas, takes priority over time and distance. Specifically, in our COVID-19 lung biopsy, nearly 40% of the blood flows through the lung, so the area to avoid first is the lung artery. In addition, the system should consider the distance and the surgical time. Therefore, we implement the automatic addressing algorithm in three layers. The first is the puncture position planning layer, followed by the route planning layer, which considers the vessels, the position of the COVID-19 infection, and the distance. The last layer provides the surgical indications specific to the chosen route. Fig. 5 shows the whole lung biopsy navigation workflow of our system, in which intraoperative data are collected and processed.

Fig. 5.

Force rendering, visual rendering, and audio rendering procedures are reconstructed in our intelligent framework; the stored force data, CT-based AR visual clues, and OR-based audio are provided for the surgical environment reconstruction.
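The priority ordering described for route planning (avoid vessels first, then consider distance and time) can be sketched as a filter followed by a sort. This is a hypothetical illustration, not the system's planning algorithm: the `Route` fields, the 5 mm clearance threshold, and the candidate values are all assumptions.

```python
from dataclasses import dataclass

@dataclass
class Route:
    name: str
    vessel_clearance_mm: float  # minimum distance from the needle path to a vessel
    length_mm: float            # path length from skin entry to the lesion
    est_time_min: float         # estimated procedure time

def rank_routes(routes, min_clearance_mm=5.0):
    """Discard routes that pass too close to a vessel (the foremost constraint),
    then order the remaining ones by path length and estimated time."""
    safe = [r for r in routes if r.vessel_clearance_mm >= min_clearance_mm]
    return sorted(safe, key=lambda r: (r.length_mm, r.est_time_min))

# Hypothetical candidate routes.
candidates = [
    Route("A", vessel_clearance_mm=2.0, length_mm=60.0, est_time_min=5.0),
    Route("B", vessel_clearance_mm=8.0, length_mm=80.0, est_time_min=7.0),
    Route("C", vessel_clearance_mm=6.0, length_mm=70.0, est_time_min=9.0),
]
ranked = rank_routes(candidates)
print([r.name for r in ranked])  # ['C', 'B']
```

Route A is the shortest but is rejected because it runs too close to a vessel, which mirrors the statement that safety outranks distance and time.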

B. User-Oriented Intraoperation

Because of the potential risks and ethical concerns, the force and visual navigation framework is not initially implemented in real-world COVID-19 lung biopsy surgery. In this article, we employ virtual reality to construct an immersive multiperceptual intraoperative environment that simulates the real surgery.

1). 3DUI Design

A haptic-AR navigation 3DUI is developed for the AR device; its main parts include both the visual and the haptic intraoperative details. There are three main kinds of AR display technologies used during operations. Compared with video-based and projection-based AR navigation systems, the see-through display system, which uses a semitransparent free-form lens to reflect the digital content from the near-eye microdisplay so that it overlaps with the patient, provides an intuitive and portable surgical experience. In this article, we choose the see-through AR display pattern with the Microsoft HoloLens mixed reality head-mounted display (HMD). The C-arm image or ultrasound image is the essential navigational clue during interventional surgery, so we put the real-time CT images on the main left part of the 3DUI. The real-time AR navigation interface is placed in the top right of the UI and serves as the manipulation platform for the haptic-AR surgical simulator. We introduce this module to mimic the real operation in the OR. Apart from these two components, the coronal, sagittal, and axial CT images also synchronously display the needle track during the surgical simulation as a part of AR navigation. Following the GPS interface, we integrate the navigation clues at the bottom of the 3DUI, including the operation time, intervention depth, force limit, speed limit, the matching tissue layer, and the warning of mispuncture during the surgery.

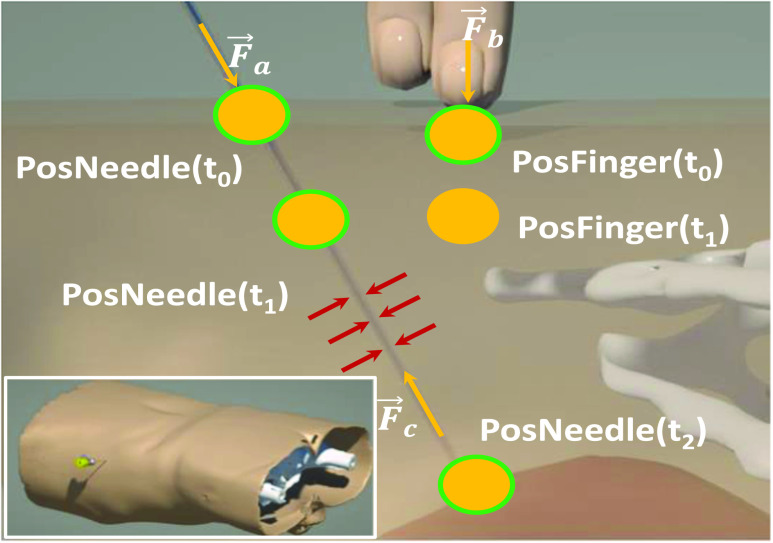

2). Anatomical-Based Rendering of Multiobject Force

Apart from the physics-based visual rendering, there is the force rendering pipeline. To reconstruct the force, we develop an anatomical-based rendering method. The data are obtained, using the biomechanics test platform in Fig. 5, from fresh porcine lung bought from a butcher. To support the dual-hand operation realistically, we compute the palpation and needle insertion forces in one haptic rendering loop. Fig. 6 shows the force rendering algorithms, which are universal and indispensable for most kinds of image-guided medical simulations. $F_n$, $F_f$, and $F_r$ are the external needle force, finger pressure, and tissue resistance, respectively. The needle-insertion force rendering is based on the aforementioned deep learning algorithms: given the specific puncture velocity, needle diameter, and time, the system outputs the real-time force and sends it to the haptic device for rendering.

Fig. 6.

Anatomical-based rendering of the needle and finger forces during the simulation: $F_n$ and $F_f$ denote the external pressure of the needle tip and the finger force, and $F_r$ denotes the resistant force when the needle passes through the soft tissue.

The force $F_f$ is the palpation force with which the doctor locates the best puncture position by finger pressing. Because the target lies inside the lung, the force feedback to the fingertip is the resultant of the rigid ribs and the multilayer soft tissue at the surface. Here, this procedure is divided into two statuses. First, the fingertip collides with the skin surface, and the virtual proxy does not penetrate the visual objects; there is no force feedback at this stage. Second, while the fascia plane is not exceeded and the external force increases, the proxy begins to penetrate the objects. Since the skin is 0.8 mm thick and the human muscle and fat are 8.4 and 39 mm thick, respectively, we render the fingertip force with a function defined over these layer thicknesses.
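The layer thicknesses quoted in the text (skin 0.8 mm, muscle 8.4 mm, fat 39 mm) suggest a depth-dependent force model. The sketch below is a stand-in, not the function used in the system: it assumes the anatomical order skin, fat, muscle, models each layer as a linear spring, and uses hypothetical stiffness values.

```python
# Thicknesses in mm are taken from the text; the layer order is assumed.
LAYERS = [("skin", 0.8), ("fat", 39.0), ("muscle", 8.4)]

# Stiffness per layer in N/mm: hypothetical values for illustration only.
STIFFNESS = {"skin": 0.30, "fat": 0.05, "muscle": 0.20}

def layer_at_depth(depth_mm):
    """Return the tissue layer that contains the proxy at a given depth."""
    if depth_mm < 0:
        return None  # fingertip has not reached the skin: no force feedback
    boundary = 0.0
    for name, thickness in LAYERS:
        boundary += thickness
        if depth_mm <= boundary:
            return name
    return "beyond muscle"

def fingertip_force(depth_mm):
    """Accumulate a linear spring force over the layers traversed so far."""
    force, remaining = 0.0, max(0.0, depth_mm)
    for name, thickness in LAYERS:
        travelled = min(remaining, thickness)
        force += STIFFNESS[name] * travelled
        remaining -= travelled
        if remaining <= 0:
            break
    return force
```

A piecewise model of this kind reproduces the two statuses described above: zero force before contact, and a force that grows as the proxy penetrates successive layers.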

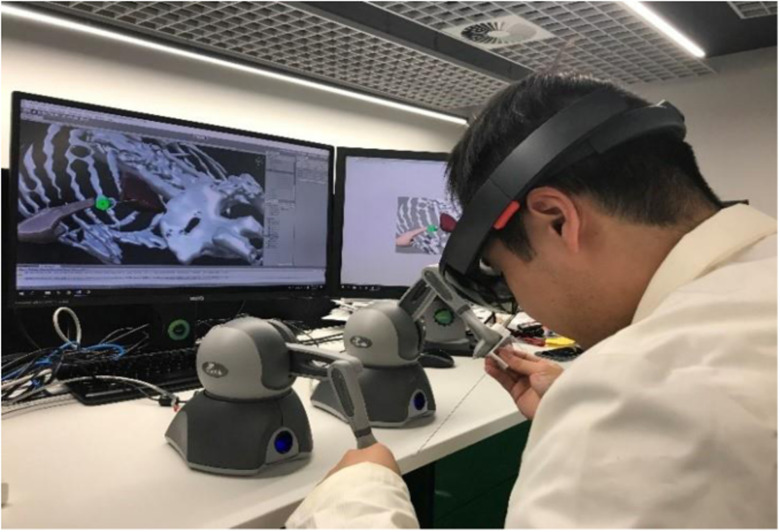

When the COVID-19 lung biopsy navigation framework is implemented, we integrate the hardware system, including a Microsoft HoloLens AR HMD with two Phantom Omni haptic rendering devices (6-DOF input and 3-DOF output). Owing to the physically based rendering (PBR) and the high refresh rate (> 900 Hz) required by the haptic rendering, we connect the PC and the HoloLens through the holographic remoting feature, which offloads the heavy rendering computation to the PC. The whole system is shown in Fig. 7.

Fig. 7.

Haptic-AR-enabled COVID-19 lung biopsy surgical navigation prototype.

C. System Evaluation

Since the simulator cannot record all data fully automatically, a dedicated laboratory staff member records the relevant data from the moment a doctor starts the experiment. Twenty-four medical students, 16 novices (two years of internship), and eight professional surgeons conduct the experiments to evaluate our framework. The experiment tasks include all COVID-19 lung biopsy procedures.

1). Evaluation Metrics

The performance indicators of the participants are recorded for the objective evaluation. The first penetration trajectory (Path I) starts from the trailing edge of the 12th rib and reaches the target. This route is the one most recommended by thoracic surgeons, since it avoids the rib region and the dense distribution of blood vessels. Path II begins from the lower middle part between the 11th and 12th ribs, crosses the middle pulmonary lobe, and reaches the target position. Path III, the hardest trajectory, penetrates from the upper pulmonary lobe and needs to avoid mispuncture of the lung lobe [30]. Furthermore, to prevent lung perforation during the surgery, there are two short pauses during the intervention process: when the needle tip touches the lung capsule and when it touches the target COVID-19 infection lesion.

To fulfil the real-world surgical requirements, we choose the objective evaluation metrics based on these three surgical paths and ask all the trainees to pause when they perceive (visually or by touch) the resistance of the lung capsule and the target lesion. The target indicators of the system evaluation are reported in Table I.

TABLE I. Objective Metrics for the System Evaluation.

| Objective metrics | Abbreviation |
|---|---|
| Total operation time (min) (Paths I, II, III) | T-I, II, III |
| Fluoroscopy time (min) (Paths I, II, III) | FT-I, II, III |
| Needle pathway length (cm) (Paths I, II, III) | NPL-I, II, III |
| Hands pathway length (cm) (Paths I, II, III) | HPL-I, II, III |
| Numbers of puncture (Paths I, II, III) | NP-I, II, III |
| Numbers of rib injury (Paths I, II, III) | NRI-I, II, III |
| Blood vessel injury (Paths I, II, III) | BVI-I, II, III |
| Blood loss (mL) (Paths I, II, III) | BL-I, II, III |
| Numbers of mispuncture (Paths I, II, III) | NMP-I, II, III |
| Numbers of lung capsular injury (Paths I, II, III) | NII-I, II, III |
| Numbers of lesion perforations (Paths I, II, III) | NPP-I, II, III |
| Point 1 punctured accuracy (Path I, cm) | P1 |
| Point 2 punctured accuracy (Path I, cm) | P2 |
| Point 3 punctured accuracy (Path II, cm) | P3 |
| Point 4 punctured accuracy (Path II, cm) | P4 |
| Point 5 punctured accuracy (Path III, cm) | P5 |
| Point 6 punctured accuracy (Path III, cm) | P6 |
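Pathway-length metrics such as NPL and HPL can be obtained by summing the distances between successive tracked positions. The source does not describe how these lengths are computed, so the following is only a sketch that assumes the needle tip (or hand) position is sampled as 3-D points in centimetres.

```python
import math

def pathway_length(points):
    """Total length of the polyline through successive 3-D sample points."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))

# Made-up tip positions (cm): a 5 cm move followed by a 12 cm move.
track = [(0.0, 0.0, 0.0), (3.0, 4.0, 0.0), (3.0, 4.0, 12.0)]
print(pathway_length(track))  # 17.0
```

A hesitant or corrective motion lengthens the polyline without bringing the tip closer to the target, which is why pathway length is a useful proxy for operator skill.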

2). Subjective Questionnaire

The subjective evaluation includes two main components: the navigation effects and the assessment of the haptic-AR system. The navigation effects part rates the performance of each combination of navigational methods. The haptic-AR navigation simulator questionnaire collects feedback on the visual and haptic rendering performance from the expert and novice participants. The system expectations matrix assesses the probable applications of the system from the surgeons' perspective, as reported in Table II.

TABLE II. Subjective Metrics for the System Evaluation.

| Subjective questionnaires | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| **Navigation effects** | | | | | |
| Traditional navigation | | | | | |
| Traditional + AR navigation | | | | | |
| Traditional + haptic navigation | | | | | |
| Traditional + haptic + AR navigation | | | | | |
| Haptic + AR navigation | | | | | |
| **System evaluation** | | | | | |
| AR graphic performance | | | | | |
| Haptic performance | | | | | |
| Visual fatigue | | | | | |
| Haptic fatigue | | | | | |
| Overall appraisal | | | | | |
| **Expectations** | | | | | |
| Surgical navigation | | | | | |
| Surgical planning | | | | | |
| Novice training | | | | | |
| Resident practice | | | | | |
| Surgical rehearsal | | | | | |

The bold entities are used to distinguish the different types of evaluation.

IV. Results

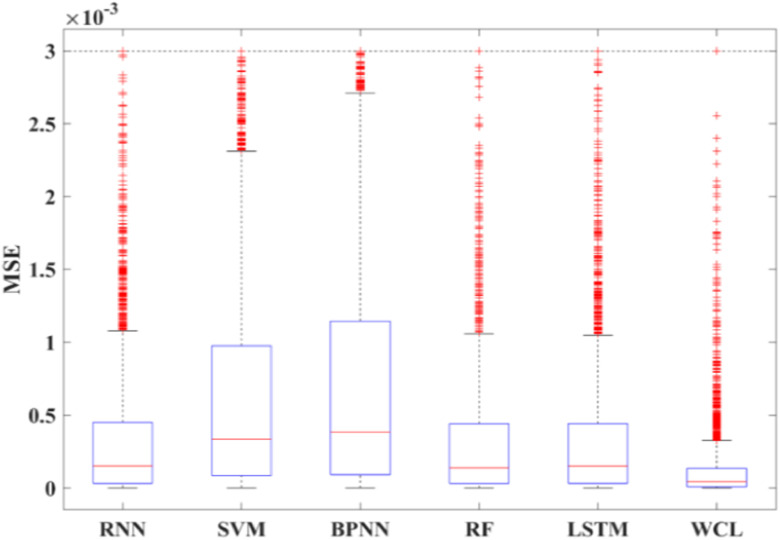

A. Learning Accuracy

The training force data are separated into 100 groups based on the time series; each puncture force is collected by the ATI force sensor at a 1000-Hz sampling frequency, and each group contains about 10 000 effective samples. For the lung puncture data, the results at the next moment predicted from the previous 100 time steps are reported in Table III. The evaluation metrics include the root-mean-square error (RMSE), mean absolute error (MAE), and mean-squared error (MSE). The smallest values indicate the model with the best prediction performance.

TABLE III. Performance Comparison Between the Proposed WCL Model and the General Machine Learning Methods.

| Model | RMSE | MAE | MSE |
|---|---|---|---|
| BPNN | 0.0307 | 0.0241 | 0.000940 |
| RNN | 0.0200 | 0.0152 | 0.000402 |
| Random forest | 0.0190 | 0.0146 | 0.000363 |
| SVM | 0.0269 | 0.0217 | 0.000724 |
| General LSTM | 0.0189 | 0.0148 | 0.000359 |
| **Our proposed WCL** | **0.0128** | **0.0087** | **0.000164** |

The bold entities indicate the high performance of the model we proposed.

Compared to BPNN, RNN, random forest, SVM, and LSTM, our proposed WPD-CNN-LSTM model presents the best prediction results. Boxplots of the MSE of different models are shown in Fig. 8.

Fig. 8.

Boxplot comparing the different models; the MSE results demonstrate that the proposed WCL method shows the best performance.
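For reference, the three error metrics used in Table III can be computed as in the short sketch below. The function name and the sample values are illustrative and do not come from the experiment.

```python
import math

def regression_errors(y_true, y_pred):
    """Return RMSE, MAE, and MSE between measured and predicted values."""
    n = len(y_true)
    residuals = [p - t for t, p in zip(y_true, y_pred)]
    mse = sum(r * r for r in residuals) / n
    mae = sum(abs(r) for r in residuals) / n
    return {"RMSE": math.sqrt(mse), "MAE": mae, "MSE": mse}

# Made-up measured and predicted forces.
errors = regression_errors([1.0, 2.0, 3.0], [1.0, 2.0, 5.0])
```

Since RMSE is the square root of MSE, the two columns of Table III can be cross-checked: for the WCL row, 0.0128 squared is approximately 0.000164, which agrees with the reported MSE.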

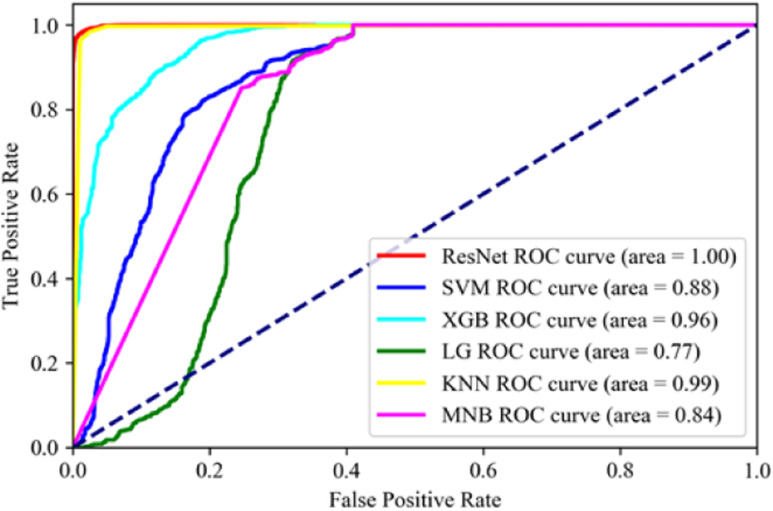

Segments of 50 consecutive data points of heart, liver, and lung at different times are randomly selected as training data, so that a total of 18 272 samples are used to train the model. The remaining 7831 samples are used for testing. We smooth the dataset, normalize it, and perform five-fold cross-validation. The recognition results for heart, liver, and lung based on the force feedback data under the different models are reported in Table IV. Accuracy, precision, recall, and F1-score are used for performance evaluation. Meanwhile, the area under the ROC curve (AUC) is shown in Fig. 9.

TABLE IV. Performance of Different Classification Models.

| Model | Accuracy | Precision | Recall | F1-score |
|---|---|---|---|---|
| KNN | 0.96 | 0.96 | 0.95 | 0.95 |
| XGBoost | 0.81 | 0.79 | 0.76 | 0.77 |
| SVM | 0.72 | 0.68 | 0.65 | 0.65 |
| Logistic regression | 0.68 | 0.46 | 0.58 | 0.51 |
| Multinomial NaiveBayes | 0.50 | 0.51 | 0.46 | 0.44 |
| **ResNet we used** | **0.97** | **0.96** | **0.97** | **0.97** |

The bold entities indicate the high performance of the model we proposed.

Fig. 9.

ROC curves of the different algorithms; the ResNet shows the highest performance among the tested algorithms.

Given the data in Table IV and Fig. 9, we can see that the ResNet network can achieve excellent results in the organ recognition.

In terms of the area under the ROC curve, our ResNet model achieves 1, which outperforms the values of 99%, 96%, 88%, 84%, and 77% obtained by the compared models (KNN, XGBoost, SVM, multinomial NaiveBayes, LSTM, logistic regression, and FCN).
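The four classification metrics in Table IV can be computed for a three-class problem as sketched below. The source does not state how the per-class scores are averaged, so macro averaging is an assumption here, and the labels are a made-up toy example.

```python
def macro_metrics(y_true, y_pred, labels):
    """Accuracy plus macro-averaged precision, recall, and F1-score."""
    pairs = list(zip(y_true, y_pred))
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    precisions, recalls, f1s = [], [], []
    for label in labels:
        tp = sum(t == label and p == label for t, p in pairs)
        fp = sum(t != label and p == label for t, p in pairs)
        fn = sum(t == label and p != label for t, p in pairs)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        precisions.append(precision)
        recalls.append(recall)
        f1s.append(f1)
    k = len(labels)
    return {
        "accuracy": accuracy,
        "precision": sum(precisions) / k,
        "recall": sum(recalls) / k,
        "f1": sum(f1s) / k,
    }

# Toy example: one heart sample is misclassified as liver.
truth = ["heart", "heart", "liver", "liver", "lung", "lung"]
preds = ["heart", "liver", "liver", "liver", "lung", "lung"]
scores = macro_metrics(truth, preds, ["heart", "liver", "lung"])
```

Macro averaging weights each organ equally, so a class with few samples influences the score as much as a class with many.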

B. Objective Comparison

The results of the objective comparison of the different groups are reported in Table V. The abbreviations are the same as those in Table I. The experts perform better than the novices on Paths I, II, and III. As the surgical difficulty increases, the surgical faults increase for both the experts and the novices.

TABLE V. Results of Objective Comparison in Different Groups on the Haptic-AR Navigation Framework.

| OM | I-E1 | I-E2 | I-E3 | I-E4 | I-N1 | I-N2 | I-N3 | I-N4 | II-E1 | II-E2 | II-E3 | II-E4 | II-N1 | II-N2 | II-N3 | II-N4 | III-E1 | III-E2 | III-E3 | III-E4 | III-N1 | III-N2 | III-N3 | III-N4 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| T | 6.8 | 6.2 | 5.7 | 5.1 | 10.4 | 8.7 | 9.4 | 9.1 | 9.1 | 8.8 | 7.1 | 6.1 | 14.1 | 10.1 | 12.7 | 9.8 | 9.7 | 9.1 | 8.3 | 7.7 | 17.1 | 14.5 | 15.1 | 13.8 |
| FT | 6.2 | 5.7 | 4.1 | 3.7 | 9.8 | 6.9 | 10.1 | 7.2 | 8.1 | 7.7 | 7.0 | 5.9 | 13.5 | 9.7 | 11 | 10 | 8.1 | 7.5 | 7.2 | 6.8 | 16.8 | 12.8 | 14.5 | 12.6 |
| NPL | 47 | 41 | 57 | 61 | 141 | 109 | 124 | 121 | 77 | 70 | 67 | 54 | 198 | 161 | 188 | 154 | 88 | 101 | 74 | 80 | 210 | 177 | 191 | 169 |
| HPL | 87 | 51 | 101 | 91 | 257 | 174 | 241 | 209 | 102 | 104 | 88 | 90 | 374 | 301 | 344 | 297 | 147 | 124 | 104 | 110 | 371 | 341 | 355 | 314 |
| NP | 4.1 | 2.7 | 2.4 | 1.9 | 7.7 | 5.8 | 7.1 | 6.1 | 6.3 | 7.2 | 4.2 | 3.7 | 9.1 | 7.1 | 8.6 | 7.7 | 9.8 | 8.1 | 6.9 | 6.1 | 12.1 | 10.1 | 11.2 | 10.8 |
| NRI | 1.5 | 0.7 | 0.5 | 0.5 | 3.2 | 2.1 | 3.3 | 2.4 | 2.1 | 1.8 | 2.3 | 1.9 | 3.5 | 2.1 | 2.8 | 2.3 | 2.0 | 2.1 | 1.8 | 1.7 | 4.7 | 3.6 | 3.5 | 4.1 |
| BVI | 2.1 | 3.1 | 2.4 | 2.3 | 3.8 | 3.2 | 4.1 | 2.6 | 1.9 | 2.1 | 2.1 | 1.7 | 3.2 | 2.1 | 3.3 | 2.4 | 2.7 | 2.4 | 2.5 | 2.8 | 4.1 | 3.4 | 2.9 | 3.3 |
| BL | 11 | 17 | 10 | 11 | 61 | 47 | 60 | 48 | 30 | 41 | 28 | 22 | 87 | 58 | 80 | 50 | 70 | 64 | 48 | 44 | 107 | 87 | 114 | 82 |
| NMP | 3.1 | 1.7 | 1.2 | 1.4 | 6.7 | 4.5 | 5.5 | 4.1 | 5.1 | 3.3 | 3.1 | 2.1 | 7.1 | 6.2 | 6.8 | 5.7 | 8.1 | 7.2 | 4.9 | 5.1 | 10.4 | 8.7 | 8.9 | 7.4 |
| NII | 0.5 | 0.5 | 0 | 0.5 | 2.1 | 1.7 | 1.9 | 1.7 | 1.8 | 1.7 | 1.2 | 0.5 | 3.5 | 2.1 | 2.2 | 1.2 | 3.1 | 4.1 | 3.7 | 3.4 | 5.4 | 5.1 | 5.8 | 5.2 |
| NPP | 0.5 | 0 | 0.5 | 0.5 | 1.9 | 1.4 | 1.2 | 1.6 | 0.9 | 0.4 | 1.1 | 1.0 | 2.7 | 1.3 | 1.1 | 2.1 | 3.3 | 2.5 | 1.8 | 2.1 | 4.8 | 3.8 | 3.2 | 3.4 |
| P1 | 0.2 | 0.3 | 0.1 | 0.2 | 0.9 | 0.5 | 0.7 | 0.4 | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - |
| P2 | 0.5 | 0.4 | 0.2 | 0.3 | 1.3 | 1.2 | 1.4 | 1 | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - |
| P3 | - | - | - | - | - | - | - | - | 0.4 | 0.1 | 0.1 | 0 | 1.4 | 0.8 | 1.1 | 1.2 | - | - | - | - | - | - | - | - |
| P4 | - | - | - | - | - | - | - | - | 0.8 | 0.5 | 0.4 | 0 | 2.1 | 1.1 | 1.9 | 1.7 | - | - | - | - | - | - | - | - |
| P5 | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | 2.1 | 2.3 | 1.4 | 1.2 | 2.1 | 1.8 | 2.1 | 1.7 |
| P6 | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | 2.9 | 3.2 | 2.0 | 1.8 | 3.1 | 2.2 | 2.9 | 1.9 |

Columns are grouped by path (I, II, III); within each path, E1–E4 are the experts and N1–N4 are the novices.

Bold entries are used to distinguish the different experimental groups of the objective evaluation.
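The expert-versus-novice comparison can be checked directly from Table V. As an illustration only, the short Python sketch below averages the row labeled T over the four experts and the four novices on each path; the values are copied from Table V, whereas the variable and function names (`row_t`, `group_means`) are hypothetical. The same computation applies to any other row.

```python
from statistics import mean

# Row "T" of Table V, per path: (experts E1-E4, novices N1-N4).
row_t = {
    "I": ([6.8, 6.2, 5.7, 5.1], [10.4, 8.7, 9.4, 9.1]),
    "II": ([9.1, 8.8, 7.1, 6.1], [14.1, 10.1, 12.7, 9.8]),
    "III": ([9.7, 9.1, 8.3, 7.7], [17.1, 14.5, 15.1, 13.8]),
}


def group_means(row):
    """Return {path: (expert mean, novice mean)} for one row of the table."""
    return {
        path: (mean(experts), mean(novices))
        for path, (experts, novices) in row.items()
    }


means = group_means(row_t)
for path, (expert_mean, novice_mean) in means.items():
    print(f"Path {path}: expert mean = {expert_mean:.3f}, novice mean = {novice_mean:.3f}")
```

For this row, the expert mean is lower than the novice mean on every path, and both group means grow from path I to path III.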

V. Discussion

To realize an intelligent and trustworthy COVID-19 lung biopsy surgical navigator, a haptic-AR-based framework has been developed. Based on the training results, the COVID-19 lung biopsy can be accomplished with or without assistance, and both visual and numerical feedback are provided.

A. Performance by Haptic-AR Navigation

The main improvement offered by the augmented reality navigation is its natural ergonomic pattern and immersive perception for the lung biopsy. Compared with the traditional visual-based rendering method, AR navigation can significantly improve the surgical performance of novice doctors. Furthermore, AR overlay demonstrations can help them identify patient-specific structures. Another interesting finding is that in complex and delicate procedures, such as needle puncture into the lung, AR's 3-D spatial-visual advantage is of great help to beginners. Once the biopsy position is found, most experts focus on completing the operation with the C-arm image, and some experts even remove the HoloLens to conduct the remaining operations. Because we were unable to establish an evaluation system based on animal experiments or clinical trials, AR immersion remains a limitation of our approach, which will be addressed in our future work. The most significant difference observed in the experiment is that the experts are faster and more accurate when performing tasks under haptic guidance. For the novices, the tactile cues appear to contribute little during surgery, which may be because they are still accumulating clinical experience.

B. Performance by Hybrid Deep Haptic Navigation

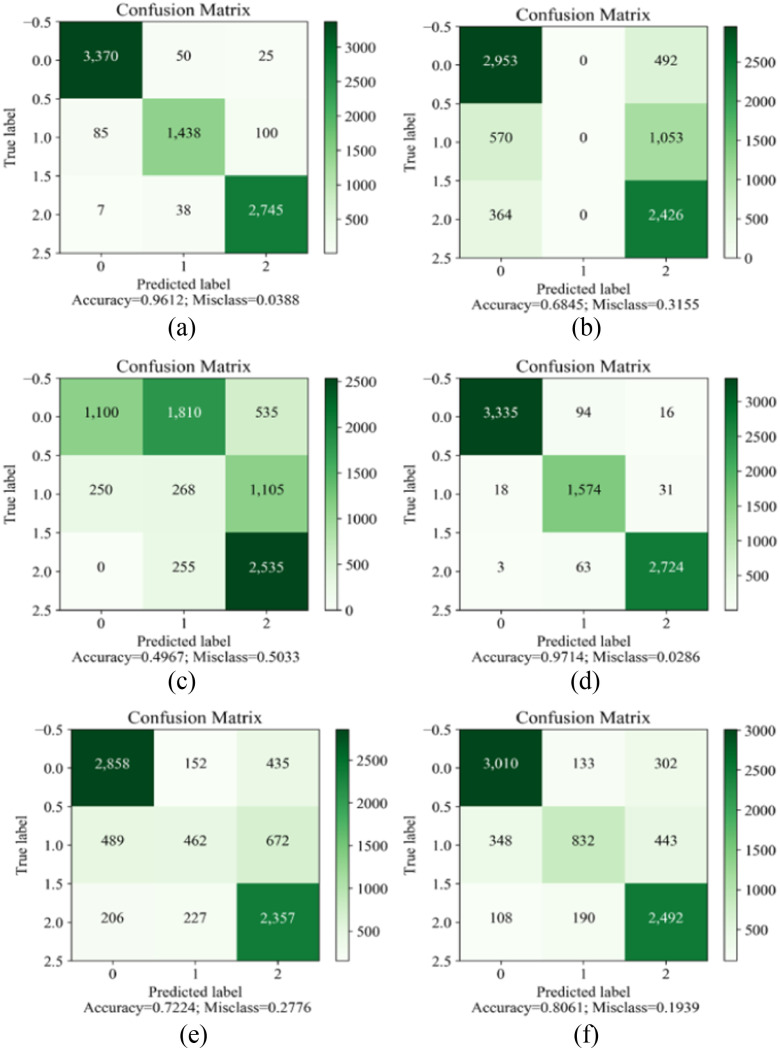

Compared with the traditional LSTM model, the hybrid WCL reduces the RMSE, MAE, and MSE by 0.061 (N), 0.0061 (N), and 0.000195 (N), respectively, and the comprehensive performance improves by more than 30%. This indicates that, after the WPD and CNN processing, the new WCL model obtains more accurate features and more accurate prediction results. The force feedback time series can be decomposed into low-frequency and high-frequency subseries: WPD extracts these frequency features, and a 1D-CNN then extracts the feature information in each frequency subseries.

In Table IV, the accuracy of the ResNet method is 97%, which indicates that the ResNet can recognize different organs using only the past 0.1 s of data. In contrast, the accuracy is 96% for the KNN, 81% for XGBoost, 72% for the SVM, 68% for logistic regression, and 50% for multinomial NaiveBayes. The ROC curve in Fig. 9 and the confusion matrix in Fig. 10 provide further supporting evidence that the ResNet is superior in this specific task.

In the offline process, we train the proposed WCL and ResNet models on the force feedback data. After the model parameters are optimized and adjusted, the models are saved. In the online application, new experiments are performed with the saved models: the organ type and the force feedback at the next moment are predicted and displayed on the monitor.
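To make the decomposition of the force feedback series into low-frequency and high-frequency subseries concrete, the following Python sketch implements a full wavelet packet tree with the Haar wavelet. This is an illustrative sketch only: the Haar basis, the decomposition level of 2, the function names, and the synthetic force signal are all assumptions made for the example and are not the settings of the WCL model. In practice, a library such as PyWavelets would normally be used instead of hand-written filters.

```python
import math


def haar_step(signal):
    """Split an even-length sequence into Haar low-pass and high-pass halves."""
    scale = 1.0 / math.sqrt(2.0)
    low = [(signal[i] + signal[i + 1]) * scale for i in range(0, len(signal), 2)]
    high = [(signal[i] - signal[i + 1]) * scale for i in range(0, len(signal), 2)]
    return low, high


def wavelet_packet_decompose(signal, level):
    """Return the 2**level subseries of a full Haar wavelet packet tree.

    Unlike the plain wavelet transform, both the low- and the high-frequency
    branch are split again at every level. Subseries are returned in natural
    (tree) order, not sorted by frequency.
    """
    if len(signal) % (2 ** level) != 0:
        raise ValueError("signal length must be divisible by 2**level")
    nodes = [list(signal)]
    for _ in range(level):
        next_nodes = []
        for node in nodes:
            low, high = haar_step(node)
            next_nodes.extend([low, high])
        nodes = next_nodes
    return nodes


# Synthetic force signal (assumption): a slow ramp plus a fast alternating ripple.
force = [0.10 * t + 0.05 * (-1) ** t for t in range(16)]
subseries = wavelet_packet_decompose(force, level=2)
print(len(subseries), [len(s) for s in subseries])
```

Because the Haar filters are orthonormal, the total energy of the subseries equals that of the original signal, so no information is lost before the subseries are passed to the feature extractor.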

Fig. 10. Confusion matrix for different algorithms. (a) K-nearest neighbors. (b) Logistic regression. (c) Multinomial NaiveBayes. (d) ResNet. (e) Support vector machine. (f) XGBoost.
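As a reading aid for Fig. 10, the sketch below shows how a confusion matrix and the corresponding accuracy are obtained from true and predicted labels. The class names and label sequences are hypothetical placeholders and do not reproduce our data; rows index the true class and columns the predicted class.

```python
def confusion_matrix(true_labels, predicted_labels, classes):
    """Count samples per (true class, predicted class) pair."""
    index = {c: i for i, c in enumerate(classes)}
    matrix = [[0] * len(classes) for _ in classes]
    for t, p in zip(true_labels, predicted_labels):
        matrix[index[t]][index[p]] += 1
    return matrix


def accuracy(matrix):
    """Fraction of samples on the diagonal (correctly classified)."""
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    return correct / total


# Hypothetical tissue classes and predictions, for illustration only.
classes = ["skin", "muscle", "lung"]
true = ["skin", "skin", "muscle", "muscle", "lung", "lung", "lung", "skin"]
pred = ["skin", "skin", "muscle", "lung", "lung", "lung", "lung", "muscle"]

cm = confusion_matrix(true, pred, classes)
for name, row in zip(classes, cm):
    print(name, row)
print("accuracy:", accuracy(cm))
```

Off-diagonal entries show which classes are confused with each other, which the single accuracy figure cannot reveal.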

VI. Conclusion

In this article, we proposed a new haptic-AR-enabled guiding strategy for precise and reliable surgery. We developed a customized deep learning algorithm to predict the stress during the procedure from time-series data. In addition, to achieve better ergonomic performance, we visualized all the navigational cues from our haptic-AR guide system. We are among the first to apply deep learning to surgical prediction and classification cues for COVID-19 lung biopsy, which may provide a new strategy for COVID-19 therapy.

Funding Statement

This work was supported by the National Natural Science Foundation of China under Grant 62062069, Grant 62062070, and Grant 62005235.

Contributor Information

Yonghang Tai, Email: taiyonghang@126.com.

Kai Qian, Email: 4346@ynnu.edu.cn.

Xiaoqiao Huang, Email: hxq@ynnu.edu.cn.

Jun Zhang, Email: junzhang@ynnu.edu.cn.

Mian Ahmad Jan, Email: mianjan@awkum.edu.pk.

Zhengtao Yu, Email: ztyu@hotmail.com.

References

- [1] Zhu N. et al., “A novel coronavirus from patients with pneumonia in China, 2019,” New England J. Med., vol. 382, pp. 727–733, 2020.

- [2] Huang C. et al., “Clinical features of patients infected with 2019 novel coronavirus in Wuhan, China,” Lancet, vol. 395, pp. 497–506, 2020.

- [3] Wang D. et al., “Clinical characteristics of 138 hospitalized patients with 2019 novel coronavirus-infected pneumonia in Wuhan, China,” JAMA, vol. 323, pp. 1061–1069, 2020.

- [4] Holshue M. L. et al., “First case of 2019 novel coronavirus in the United States,” New England J. Med., vol. 382, pp. 929–936, 2020.

- [5] Chan J. F. et al., “A familial cluster of pneumonia associated with the 2019 novel coronavirus indicating person-to-person transmission: A study of a family cluster,” Lancet, vol. 395, pp. 514–523, 2020.

- [6] Chang L. M. et al., “Epidemiologic and clinical characteristics of novel coronavirus infections involving 13 patients outside Wuhan, China,” JAMA, vol. 323, pp. 1092–1093, 2020.

- [7] Nkengasong J., “China's response to a novel coronavirus stands in stark contrast to the 2002 SARS outbreak response,” Nat. Med., vol. 26, pp. 310–311, 2020.

- [8] Abdel-Basset M., Chang V., Hawash H., Chakrabortty R. K., and Ryan M., “FSS-2019-nCov: A deep learning architecture for semi-supervised few-shot segmentation of COVID-19 infection,” Knowl.-Based Syst., vol. 212, 2020, Art. no. 106647.

- [9] Elmi-Terander A. et al., “Surgical navigation technology based on augmented reality and integrated 3D intraoperative imaging: A spine cadaveric feasibility and accuracy study,” Spine (Phila Pa 1976), vol. 41, no. 21, pp. E1303–E1311, 2016.

- [10] Salihoglu S. and Widom J., “GPS: A graph processing system,” in Proc. 25th Int. Conf. Sci. Statist. Database Manage., 2013, pp. 29–34.

- [11] Hofmann-Wellenhof B., Lichtenegger H., and Collins J., Global Positioning System: Theory and Practice. Vienna, Austria: Springer, 2001.

- [12] Liu L., Vel O. D., Han Q. L., Zhang J., and Xiang Y., “Detecting and preventing cyber insider threats: A survey,” IEEE Commun. Surv. Tut., vol. 20, no. 2, pp. 1397–1417, Apr.–Jun. 2018.

- [13] Sun N., Zhang J., Rimba P., Gao S., Zhang L. Y., and Xiang Y., “Data-driven cybersecurity incident prediction: A survey,” IEEE Commun. Surv. Tut., vol. 21, no. 2, pp. 1744–1772, Apr.–Jun. 2019.

- [14] Wang S. et al., “A fully automatic deep learning system for COVID-19 diagnostic and prognostic analysis,” Eur. Respir. J., vol. 56, 2020, Art. no. 2000775.

- [15] Song Y. et al., “Deep learning enables accurate diagnosis of novel coronavirus (COVID-19) with CT images,” 2020, medRxiv.

- [16] Abdel-Basset M. and Chang V., “HSMA_WOA: A hybrid novel slime mould algorithm with whale optimization algorithm for tackling the image segmentation problem of chest X-ray images,” Appl. Soft Comput., vol. 95, 2020, Art. no. 106642.

- [17] Ozturk T., Talo M., Yildirim E. A., Baloglu U. B., Yildirim O., and Acharya U. R., “Automated detection of COVID-19 cases using deep neural networks with X-ray images,” Comput. Biol. Med., vol. 121, 2020, Art. no. 103792.

- [18] Chang V., “Computational intelligence for medical imaging simulations,” J. Med. Syst., vol. 42, 2018, Art. no. 10.

- [19] Chang V., “Data analytics and visualization for inspecting cancers and genes,” Multimedia Tools Appl., vol. 77, pp. 17693–17707, 2018.

- [20] Hemdan E. E. D., Shouman M. A., and Karar M. E., “COVIDX-Net: A framework of deep learning classifiers to diagnose COVID-19 in X-ray images,” 2020, arXiv:2003.11055.

- [21] Gozes O. et al., “Rapid AI development cycle for the coronavirus (COVID-19) pandemic: Initial results for automated detection & patient monitoring using deep learning CT image analysis,” 2020, arXiv:2003.05037.

- [22] Abdel-Basset M., Manogaran G., Gamal A., and Chang V., “A novel intelligent medical decision support model based on soft computing and IoT,” IEEE Internet Things J., vol. 7, no. 5, pp. 4160–4170, May 2020.

- [23] Citardi M. J., Yao W., and Luong A., “Next-generation surgical navigation systems in sinus and skull base surgery,” Otolaryngologic Clin. North Amer., vol. 50, no. 3, pp. 617–632, 2017.

- [24] Ni D. et al., “A virtual reality simulator for ultrasound-guided biopsy training,” IEEE Comput. Graph. Appl., vol. 31, no. 2, pp. 36–48, Mar./Apr. 2011.

- [25] Selmi S. Y., Fiard G., Promayon E., Vadcard L., and Troccaz J., “A virtual reality simulator combining a learning environment and clinical case database for image-guided prostate biopsy,” in Proc. 26th IEEE Int. Symp. Comput.-Based Med. Syst., 2013, pp. 179–184.

- [26] Yi N., Guo X. J., Li X. R., Xu X. F., and Ma W. J., “The implementation of haptic interaction in virtual surgery,” in Proc. Int. Conf. Elect. Control Eng., 2010, pp. 2351–2354.

- [27] Wei L., Najdovski Z., Abdelrahman W., Nahavandi S., and Weisinger H., “Augmented optometry training simulator with multi-point haptics,” in Proc. IEEE Int. Conf. Syst., Man, Cybern., 2012, pp. 2991–2997.

- [28] Jia S. and Pan Z., “A preliminary study of suture simulation in virtual surgery,” in Proc. Int. Conf. Audio, Lang., Image Process., 2010, pp. 1340–1345.

- [29] Coles T. R., Meglan D., and John N. W., “The role of haptics in medical training simulators: A survey of the state of the art,” IEEE Trans. Haptics, vol. 4, no. 1, pp. 51–66, Jan.–Mar. 2011.

- [30] Tai Y., Wei L., Zhou H., Nahavandi S., and Shi J., “Tissue and force modelling on multi-layered needle puncture for percutaneous surgery training,” in Proc. IEEE Int. Conf. Syst., Man, Cybern., 2017, pp. 2923–2927.