Abstract

To counter the outbreak of COVID-19, the accurate diagnosis of suspected cases plays a crucial role in timely quarantine, medical treatment, and preventing the spread of the pandemic. Considering the limited training cases and resources (e.g., time and budget), we propose a Multi-task Multi-slice Deep Learning System (M Lung-Sys) for multi-class lung pneumonia screening from CT imaging, which consists of only two 2D CNNs, i.e., slice- and patient-level classification networks. The former learns feature representations from abundant CT slices instead of limited CT volumes, and, for overall pneumonia screening, the latter recovers the temporal information by refining and aggregating features across slices. In addition to distinguishing COVID-19 from Healthy, H1N1, and CAP cases, our M Lung-Sys is also able to locate the areas of relevant lesions without any pixel-level annotation. To demonstrate the effectiveness of our model, we conduct extensive experiments on a chest CT imaging dataset with a total of 734 patients (251 healthy people, 245 COVID-19 patients, 105 H1N1 patients, and 133 CAP patients). Quantitative results on a range of metrics indicate the superiority of our proposed model on both slice- and patient-level classification tasks. More importantly, the generated lesion location maps make our system interpretable and more valuable to clinicians.

Keywords: COVID-19, CT imaging, deep learning, multi-class pneumonia screening, weakly-supervised learning, lesion localization

I. Introduction

Coronavirus disease 2019 (COVID-19), caused by a novel coronavirus (SARS-CoV-2, previously known as 2019-nCoV), is highly contagious and has become increasingly prevalent worldwide. The disease may lead to acute respiratory distress or multiple organ failure in severe cases [1], [2]. According to WHO statistics, as of June 28th, 2020, 495,760 of the 9,843,073 confirmed cases worldwide had died. Thus, accurate and efficient diagnosis of COVID-19 is vital not only for the timely treatment of patients, but also for the distribution and management of hospital resources during the outbreak.

The standard diagnostic method in use is real-time polymerase chain reaction (RT-PCR), which detects viral nucleotides from specimens obtained by oropharyngeal swab, nasopharyngeal swab, bronchoalveolar lavage, or tracheal aspirate [3]. Early reports of RT-PCR sensitivity vary considerably, ranging from 42% to 71%, and an initially negative RT-PCR result may turn positive for COVID-19 after up to four days [4]. Recent studies have shown that typical computed tomography (CT) findings of COVID-19 include bilateral pulmonary parenchymal ground-glass and consolidative pulmonary opacities, with a peripheral lung distribution [5], [6]. In contrast to RT-PCR, chest CT scans have demonstrated about 56–98% sensitivity in detecting COVID-19 at initial manifestation and can help rectify false negatives obtained from RT-PCR during early stages of disease development [7], [8].

However, COVID-19 and other types of pneumonia share several similar visual manifestations on CT scans, which makes it difficult and time-consuming for doctors to differentiate among a large number of cases and results in a specificity of only about 25–53% [4], [7]. Among these, CAP (community-acquired pneumonia) and influenza pneumonia are the most common types of pneumonia, as shown in Figure 1; it is therefore essential to differentiate COVID-19 pneumonia from them. Recently, Liu et al. compared the chest CT characteristics of COVID-19 pneumonia with those of influenza pneumonia, and found that COVID-19 pneumonia was more likely to have a peripheral distribution, with the absence of nodules and tree-in-bud signs [9]. Lobar or segmental consolidation with or without cavitation is common in CAP [10]. Although these typical lesions are easy to identify, the CT features of COVID-19, H1N1 and CAP pneumonia are very diverse.

Fig. 1.

Typical images of COVID-19, H1N1 and CAP. The red arrows indicate the locations of different lesions, marked by clinical experts. (a) COVID-19: CT shows ground-glass opacity (GGO) with consolidation and a crazy-paving sign, distributed mainly along the subpleural lungs. (b) H1N1 (influenza A(H1N1)pdm09): the consolidation and small centrilobular nodules are mainly located at the bronchovascular bundles. (c) CAP: segmental consolidation with GGO.

In the past few decades, artificial intelligence using deep learning (DL) technology has achieved remarkable progress in various computer vision tasks [11]–[15]. Recently, the superiority of DL has made it widely favored in medical image analysis. Specifically, several studies focus on classifying different diseases, such as autism spectrum disorder [16], [17] or Alzheimer's disease in the brain [18]–[20]; breast cancers [21]–[23]; diabetic retinopathy and glaucoma in the eye [24]–[26]; and lung cancer [27], [28] or pneumonia [29], [30] in the chest. Some efforts have also been made to partition images from different modalities (e.g., CT, X-ray, MRI) into meaningful segments [31]–[33], including pathology, organs or other biological structures.

Existing studies [30], [34], [35] have demonstrated the promising performance of deep learning for COVID-19 diagnosis. However, as initial studies, these works have several limitations. First, [36]–[39] utilized pixel-wise annotations for segmentation, which require labor-intensive manual labeling. This is unrealistic in practice, especially during an infectious disease pandemic. Second, performing diagnosis or risk assessment on slice-level CT images only [34], [40]–[45] is of limited value to clinicians: since a volumetric CT exam normally includes hundreds of slices, it is still inconvenient for clinicians to go through the predicted result of each slice one by one. Although 3D convolutional neural networks (3D CNNs) are one option for tackling these limitations, their high hardware requirements (e.g., GPUs), computational costs and training time make them inflexible for applications [43], [46], [47].

To this end, we propose a Multi-task Multi-slice Deep Learning System (M Lung-Sys) for multi-class lung pneumonia screening, which can jointly diagnose and locate COVID-19 from chest CT images. Using only category-level labels, our system can successfully distinguish COVID-19 from H1N1, CAP and healthy cases, and automatically locate relevant lesions on CT images (e.g., GGO) for better interpretability, which is important for assisting clinicians in practice. To achieve this, we devise two 2D CNN-based networks in our system. The first is a slice-level classification network, which, like a radiologist, diagnoses every single CT slice from coarse (normal or abnormal) to fine (disease categories). As the name suggests, it ignores the temporal information among the slices of a CT volume and focuses on the spatial information among pixels in each slice. Meanwhile, the learned spatial features can be further leveraged to locate abnormalities without any pixel-level annotation. To recover the temporal information and provide more value to clinicians, we introduce a novel patient-level classification network, with specifically designed refinement and aggregation modules, for diagnosis from CT volumes. Taking advantage of the learned spatial features, the patient-level classification network can be trained easily and efficiently.

In summary, the contributions of this paper are four-fold: 1) We propose an M Lung-Sys for multi-class lung pneumonia screening from CT images. Specifically, it can distinguish COVID-19 from healthy, H1N1 and CAP cases on either a single CT slice or the CT volume of a patient. 2) In addition to predicting the probability of each pneumonia category, our M Lung-Sys simultaneously outputs lesion localization maps for each CT slice, which are valuable to clinicians for diagnosis because they show why our system gives a particular prediction, rather than simply providing a statistic. 3) Compared with 3D CNN based approaches [47], [48], our proposed system achieves competitive performance at a lower cost and without requiring large-scale training data.¹ 4) Extensive experiments are conducted on a multi-class pneumonia dataset with 251 healthy people, 245 COVID-19 patients, 105 H1N1 patients and 133 CAP patients. Our system achieves an accuracy of 95.21% in screening the multi-class pneumonia test cases. The quantitative and qualitative results demonstrate that our system has great potential for clinical application.

II. Dataset

A. Patients

The Ethics Committee of Shanghai Public Health Clinical Center, Fudan University approved the protocol of this study and waived the requirement for patient-informed consent (YJ-2020-S035-01). A search of the medical records in our hospital information system yielded a final dataset of 245 patients with COVID-19 pneumonia, 105 patients with H1N1 pneumonia, 133 patients with CAP and 251 healthy subjects without pneumonia. Of the 734 enrolled subjects, 415 (56.5%) were men, and the mean age was 41.8 ± 15.9 years (range, … years). The patient demographic statistics are summarized in Table I. The available CT scans were directly downloaded from the hospital Picture Archiving and Communication System (PACS), and non-chest CTs were excluded. Consequently, 734 three-dimensional (3D) volumetric chest CT exams were acquired for our algorithm study.

TABLE I. Summary of Training and Testing Sets.

| Category | Patients (Train) | Patients (Test) | Slices (Train) | Slices (Test) | Age (years) | Sex (M/F) |
|---|---|---|---|---|---|---|
| Healthy | 149 | 102 | 42,834 | 30,448 | 32.4 ± 11.8 | 131/120 |
| COVID-19 | 149 | 96 | 18,919 | 13,382 | 51.5 ± 15.9 | 143/102 |
| H1N1 | 64 | 41 | 1,098 | 883 | 28.5 ± 14.6 | 62/43 |
| CAP | 80 | 53 | 7,067 | 4,105 | 48.5 ± 17.4 | 79/54 |

All the COVID-19 cases (mean age, … years; range, … years) and H1N1 cases (mean age, … years; range, … years) were acquired from January 20 to February 24, 2020 and from May 24, 2009 to January 18, 2010, respectively. All patients were diagnosed according to the diagnostic criteria of the National Health Commission of China and confirmed by RT-PCR detection of viral nucleic acids. Patients with normal CT imaging were excluded.

The CAP patients (mean age, … years; range, … years) and healthy subjects without pneumonia (mean age, … years; range, … years) were randomly selected between January 3, 2019 and January 30, 2020. All the CAP cases were confirmed positive by bacterial culture, and the healthy subjects without pneumonia, who underwent physical examination, had normal CT imaging.

B. Selection and Annotation

To develop the algorithm and evaluate its performance fairly, we do not use any CT volumes from re-examinations; that is, only one 3D volumetric CT exam per patient is enrolled in our dataset. As shown in Figure 2, all eligible patients were then randomly assigned to a training set and a testing set using computer-generated random numbers. Unlike other studies [45], [47], which employ a small number of cases (10–15%) for testing, we use around 40% of each category to evaluate the effectiveness and practicability of our system.
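As a rough illustration of the patient-level split described above, the sketch below shuffles patient IDs within each category and holds out about 40% of them, so that no patient contributes slices to both sets. The function name `split_patients`, the fixed seed and the rounding rule are assumptions for illustration only; the paper's own split (102/96/41/53 test patients) was drawn with its own random numbers and is not reproduced exactly.

```python
import random


def split_patients(patients_by_class, test_fraction=0.4, seed=0):
    """Hypothetical stratified split: shuffle patient IDs per category, hold out ~test_fraction.

    Splitting by patient (not by slice) keeps every slice of a CT volume in one set.
    """
    rng = random.Random(seed)  # computer-generated random numbers, fixed seed for reproducibility
    train, test = {}, {}
    for label, ids in patients_by_class.items():
        ids = sorted(ids)      # deterministic order before shuffling
        rng.shuffle(ids)
        n_test = round(len(ids) * test_fraction)
        test[label], train[label] = ids[:n_test], ids[n_test:]
    return train, test


# Category sizes from the dataset description; the patient IDs are made up.
counts = {"Healthy": 251, "COVID-19": 245, "H1N1": 105, "CAP": 133}
patients = {c: [f"{c}-{i:03d}" for i in range(n)] for c, n in counts.items()}
train, test = split_patients(patients)
for c in counts:
    print(c, len(train[c]), len(test[c]))
```

Stratifying per category keeps the class proportions of the testing set close to those of the full dataset, which matters here because H1N1 has far fewer patients than the other classes.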

Fig. 2.

The flow chart of patient selection.

The annotation was performed at both the patient and the slice level. First, each CT volume was automatically labeled with a one-hot category vector based on CT reports and clinical diagnosis (i.e., 0: Healthy; 1: COVID-19; 2: H1N1; 3: CAP). Since each volumetric exam contains … images, with the number of slices varying from 24 to 495, five experts subsequently annotated each CT slice for training according to four principles: (1) the quality of annotation is supervised by a senior clinician; (2) if a slice is determined to have any lesion, it is labeled with the category of the corresponding CT volume; (3) except for healthy cases, all slices considered normal are discarded; (4) all slices from healthy people are annotated as Healthy. Note that since we evaluate our model on whole CT volumes (i.e., realistic, arbitrary-length data), the discarded slices are removed only for training. The resulting numbers of annotated slices for the four categories are listed in Table I. The training set was used for algorithm development [n = 442; healthy persons, n = 149; COVID-19 patients, n = 149; H1N1 patients, n = 64; CAP patients, n = 80], and the testing set was used for algorithm testing [n = 292; healthy persons, n = 102; COVID-19 patients, n = 96; H1N1 patients, n = 41; CAP patients, n = 53].
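The annotation principles (2)–(4) amount to a small labeling rule. The sketch below is a hypothetical helper, not the authors' tooling: it assumes each slice already carries an expert "has lesion" flag, and returns the one-hot volume label and the slices kept for training together with their labels. Principle (1), senior-clinician supervision, is a process step and is not modeled.

```python
CATEGORIES = ["Healthy", "COVID-19", "H1N1", "CAP"]  # index = class ID used in the paper
HEALTHY = 0


def one_hot(label, num_classes=len(CATEGORIES)):
    """One-hot category vector for a CT volume."""
    return [1 if i == label else 0 for i in range(num_classes)]


def training_slices(volume_label, has_lesion):
    """Apply principles (2)-(4): return (slice_index, slice_label) pairs kept for training.

    has_lesion: per-slice expert flags (hypothetical input format).
    """
    kept = []
    for idx, lesion in enumerate(has_lesion):
        if volume_label == HEALTHY:
            kept.append((idx, HEALTHY))        # (4) every healthy slice is labeled Healthy
        elif lesion:
            kept.append((idx, volume_label))   # (2) lesion slices inherit the volume's category
        # (3) lesion-free slices of non-healthy volumes are dropped (training only)
    return kept


print(one_hot(1))                                      # COVID-19 volume
print(training_slices(1, [False, True, True, False]))  # keeps slices 1 and 2
```

Because the rule is applied only when building the training set, evaluation can still run on every slice of a volume, as the text requires.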

III. Methodology

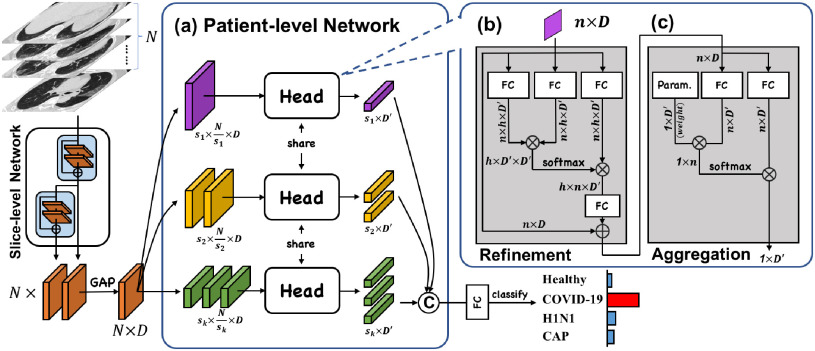

Figure 3 shows the schematic of our proposed Multi-task Multi-slice Deep Learning System (M Lung-Sys), which consists of two components: Image Preprocessing and the Classification Network. Specifically, Image Preprocessing receives raw CT exams and prepares them for model training or inference (Section III-A). The Classification Network is divided into two subnets (stages), i.e., slice-level and patient-level classification networks, for joint COVID-19 screening and lesion localization. Concretely, the slice-level classification network is trained with multiple CT slice images and predicts the corresponding slice-level category, i.e., Healthy, COVID-19, H1N1 or CAP (Section III-B). The patient-level classification network has only four layers and takes as input a volume of CT slice features extracted by the former network, rather than images, to output the patient-level label (Section III-C). The two classification networks are trained separately because they address different tasks, but they can be used together in an end-to-end manner for efficiency. More importantly, for cases classified as positive (i.e., COVID-19, H1N1 or CAP), our system can locate suspected areas of abnormality without any pixel-level annotations (Section III-B).

Fig. 3.

The schematic of our M Lung-Sys. The red/black arrows indicate the training/inference phases. Both classification networks are trained separately due to different tasks, but can be used concurrently in an end-to-end manner. The details of the classification networks are illustrated in Figure 5 and Figure 6.


A. Image Preprocessing

The pixel values of CT images reflect the absorption of x-rays by different human tissues (e.g., bone, lung, kidney), measured in Hounsfield units (HU) [49]. If raw images are used directly for classification, noise or irrelevant information, such as equipment characteristics, will inevitably be introduced, making the model's predictions inaccurate and unreliable. Therefore, guided by radiologists' prior knowledge, we introduce two straightforward yet effective preprocessing approaches.

1). Lung Crop

Given chest CT images, the lungs are among the most important organs that radiologists examine for abnormalities. Considering the high cost and time consumption of manual labeling, instead of training an extra deep learning network for lung segmentation [47], [48], [50], we propose a hand-crafted algorithm that automatically segments the image into ‘lungs’ and ‘other,’ and then crops the lung area using the minimum bounding rectangle with a given margin. As illustrated in Figure 4, the lung segmentation and cropping algorithm proceeds as follows:

- Step 1: Load the raw CT scan image (Figure 4 (a)).
- Step 2: Set a threshold to separate the lung area from other tissues, such as bone and fat (Figure 4 (b)). In this paper, we set the threshold of HU as ….
- Step 3: To alleviate the effect of the ‘trays’ that the patient lies on during CT scanning, we apply a morphological opening operation [51] (Figure 4 (c)). Specifically, we set the kernel size as ….
- Step 4: Remove the background (e.g., trays, bone and fat) based on 8-connected components labeling [52] (Figure 4 (d)).
- Step 5: Apply the morphological opening operation again to eliminate the noise caused by Step 3 (Figure 4 (e)).
- Step 6: Compute the minimum bounding rectangle with a margin of 10 pixels for lung cropping, and then resize the cropped image (Figure 4 (f)).
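The six steps can be prototyped with NumPy alone. This is a minimal sketch under stated assumptions, not the paper's implementation: the HU threshold (-400) and the 3 × 3 opening kernel are placeholder values because the paper's settings are missing from this text, Step 4 is approximated by discarding 8-connected components that touch the image border (outside air and trays), and the final resize uses nearest-neighbour sampling to an assumed output size.

```python
from collections import deque

import numpy as np

HU_THRESHOLD = -400.0  # hypothetical: the paper's HU threshold is not given here
KERNEL_SIZE = 3        # hypothetical: square kernel for morphological opening
MARGIN = 10            # Step 6: margin (pixels) around the lung bounding box


def _shifted_views(mask, k):
    """Yield the k*k shifted views of a False-padded binary mask (one per kernel offset)."""
    r = k // 2
    padded = np.pad(mask, r, mode="constant", constant_values=False)
    h, w = mask.shape
    for dy in range(k):
        for dx in range(k):
            yield padded[dy:dy + h, dx:dx + w]


def binary_opening(mask, k):
    """Erosion followed by dilation with a k x k square structuring element."""
    eroded = np.logical_and.reduce(list(_shifted_views(mask, k)))
    return np.logical_or.reduce(list(_shifted_views(eroded, k)))


def drop_border_components(mask):
    """Remove 8-connected components that touch the image border (assumed background)."""
    h, w = mask.shape
    keep = mask.copy()
    seen = np.zeros_like(mask, dtype=bool)
    for sy, sx in zip(*np.nonzero(mask)):
        if seen[sy, sx]:
            continue
        seen[sy, sx] = True
        queue, component, touches_border = deque([(sy, sx)]), [], False
        while queue:  # breadth-first flood fill over the 8-neighbourhood
            y, x = queue.popleft()
            component.append((y, x))
            touches_border |= y in (0, h - 1) or x in (0, w - 1)
            for ny in range(max(y - 1, 0), min(y + 2, h)):
                for nx in range(max(x - 1, 0), min(x + 2, w)):
                    if mask[ny, nx] and not seen[ny, nx]:
                        seen[ny, nx] = True
                        queue.append((ny, nx))
        if touches_border:
            for y, x in component:
                keep[y, x] = False
    return keep


def crop_lungs(hu, out_size=(224, 224)):
    """Steps 2-6 on one slice in HU; returns the resized crop and its (y0, y1, x0, x1) box."""
    mask = hu < HU_THRESHOLD                   # Step 2: low-HU pixels (lungs and outside air)
    mask = binary_opening(mask, KERNEL_SIZE)   # Step 3
    mask = drop_border_components(mask)        # Step 4
    mask = binary_opening(mask, KERNEL_SIZE)   # Step 5
    ys, xs = np.nonzero(mask)
    if ys.size == 0:                           # nothing found: keep the full slice
        box = (0, hu.shape[0], 0, hu.shape[1])
    else:                                      # Step 6: bounding box plus margin, clipped to image
        box = (max(int(ys.min()) - MARGIN, 0), min(int(ys.max()) + MARGIN + 1, hu.shape[0]),
               max(int(xs.min()) - MARGIN, 0), min(int(xs.max()) + MARGIN + 1, hu.shape[1]))
    crop = hu[box[0]:box[1], box[2]:box[3]]
    rows = np.arange(out_size[0]) * crop.shape[0] // out_size[0]  # nearest-neighbour resize
    cols = np.arange(out_size[1]) * crop.shape[1] // out_size[1]
    return crop[np.ix_(rows, cols)], box


# Synthetic slice: air outside the body, soft tissue, and two rectangular "lungs".
hu = np.full((64, 64), -1000.0)
hu[8:56, 8:56] = 40.0
hu[20:40, 15:28] = -800.0
hu[20:40, 36:50] = -800.0
img, box = crop_lungs(hu, out_size=(32, 32))
```

The pure-Python flood fill is slow on full 512 × 512 slices; in practice a library routine for morphology and connected-component labeling would replace the two helpers.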

Fig. 4.

Detailed procedures of lung cropping during image preprocessing.

2). Multi-Value Window-Leveling

In order to simulate the window-leveling a radiologist performs when reading CT scans, we further apply multi-value window-leveling to all images. More concretely, the window center is assigned randomly from … to …, and the window width is fixed at 1200. This preprocessing provides at least two benefits: (1) it generates many more CT samples for training, i.e., data augmentation; (2) during inference, assessments based on multi-value window-leveled CT images are more accurate and reliable.
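A minimal sketch of multi-value window-leveling, assuming the usual linear mapping of [center - width/2, center + width/2] to [0, 1] with clipping outside that interval. The width of 1200 comes from the text; `CENTER_RANGE` is a hypothetical placeholder because the paper's center range is missing here. `random_window` illustrates the training-time augmentation, and `multi_window` stacks several windowed copies of one slice, e.g. for inference over multiple window settings.

```python
import numpy as np

WINDOW_WIDTH = 1200.0            # from the text
CENTER_RANGE = (-700.0, -500.0)  # hypothetical range for the random window center (HU)


def window_level(hu, center, width=WINDOW_WIDTH):
    """Map HU values in [center - width/2, center + width/2] linearly to [0, 1], clipping outside."""
    low = center - width / 2.0
    return np.clip((hu - low) / width, 0.0, 1.0)


def random_window(hu, rng, center_range=CENTER_RANGE, width=WINDOW_WIDTH):
    """Training-time augmentation: window the slice with a randomly drawn center."""
    center = rng.uniform(*center_range)
    return window_level(hu, center, width)


def multi_window(hu, centers, width=WINDOW_WIDTH):
    """Stack several windowed copies of the same slice: shape (len(centers), H, W)."""
    return np.stack([window_level(hu, c, width) for c in centers])


rng = np.random.default_rng(0)
slice_hu = rng.uniform(-1000.0, 400.0, size=(4, 4))
augmented = random_window(slice_hu, rng)
views = multi_window(slice_hu, centers=[-700.0, -600.0, -500.0])
```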

B. Slice-Level Classification Network

After the above image preprocessing, slices from CT volumes are first fed into the slice-level classification network. Considering the outstanding performance of residual networks (ResNets) [53] on the 1000-category ImageNet classification task, we use ResNet-50 [53] as our backbone and initialize it with ImageNet [54] pre-trained weights. This network consists of four blocks (i.e., ResBlock1–4) with a total of 50 layers, including convolutional and fully connected layers. The blocks share a similar structure but contain different numbers of layers. The skip connections (identity mappings) in the blocks make it feasible to train deeper networks that learn stronger representations. To perform pneumonia classification and alleviate the limitations discussed in Section I, we improve the network in three aspects, i.e., multi-task learning for radiologist-like diagnosis, weakly-supervised learning for slice-level lesion localization (attention), and coordinate maps for learning location information, as shown in Figure 5.

Fig. 5.

The details of our proposed slice-level classification network. We improve the network from three aspects: (1) multi-task learning to diagnose like a radiologist; (2) weakly-supervised learning for slice-level lesion localization (attention); (3) coordinate maps for location information. The symbol ‘©’ indicates a concatenation operation.

1). Multi-Task Learning

Typically, given a CT slice, a radiologist first checks for abnormalities and then makes a decision based on them. To act like an experienced radiologist, we introduce a multi-task learning scheme [55] by dividing the network into two stages. Specifically, image features obtained from the first three ResBlocks are fed into an extra classifier to determine whether the slice has any lesion characteristics. Then, the features are further passed through ResBlock4 to determine the fine-grained category, i.e., Healthy, COVID-19, H1N1 or CAP.
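One plausible way to train the two stages jointly is to sum a 4-class cross-entropy on the ResBlock4 head and a binary "with/without lesion" cross-entropy on the extra classifier. The sketch below assumes that form and a hypothetical weight `lam`; the paper's actual loss weighting is not stated in this section. The coarse label is derived from the fine label, which is consistent with the annotation principles (every retained non-healthy training slice contains a lesion).

```python
import numpy as np

HEALTHY = 0


def cross_entropy(logits, labels):
    """Mean softmax cross-entropy; logits (N, K), integer labels (N,)."""
    shifted = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    labels = np.asarray(labels)
    return float(-log_probs[np.arange(len(labels)), labels].mean())


def multitask_loss(coarse_logits, fine_logits, fine_labels, lam=1.0):
    """Fine 4-class loss (ResBlock4 head) + lam * coarse lesion loss (extra head after ResBlock3).

    lam is a hypothetical weighting, not taken from the paper.
    """
    coarse_labels = (np.asarray(fine_labels) != HEALTHY).astype(int)  # 1 = 'with lesion'
    return cross_entropy(fine_logits, fine_labels) + lam * cross_entropy(coarse_logits, coarse_labels)


labels = np.array([0, 1, 2, 3])
loss = multitask_loss(np.zeros((4, 2)), np.zeros((4, 4)), labels)  # uninformative logits
```

With all-zero logits both heads are maximally uncertain, so the loss equals log 4 + log 2 = log 8, which is a convenient sanity check.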

2). Weakly-Supervised Learning for Lesion Localization

Instead of using pixel-wise annotations or bounding-box labels to learn to locate infection areas, we devise a weakly-supervised learning approach that uses only the category labels. Specifically, the weights of the extra classifier described in Section III-B1 have a dimension of $C \times 2$, where $C$ is the dimension of the feature and ‘2’ denotes the number of classes (i.e., ‘with lesion’ and ‘without lesion’). These learned weights can be regarded as two prototypical features of the corresponding two classes. Similar to the Class Activation Map [56], we first select one prototypical feature according to the predicted class, and then calculate the distance between it and each point of the image feature extracted from the first three ResBlocks. Intuitively, the closer a point is to the prototypical feature of ‘with lesion’, the higher the probability that the corresponding area of the input CT slice is an infection region, e.g., GGO. As one output of our M Lung-Sys, such location maps complement the final predicted diagnosis and provide interpretability for our network, making the assistance to clinicians more comprehensive and flexible. More visualization samples are shown in Figures 10, 11 and 12. Furthermore, we regard the lesion location map as an attention map and take full advantage of it to help the slice-level differential diagnosis, as shown in Figure 5.
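The prototype-based location map can be sketched in a few lines. This is a minimal CAM-style illustration under stated assumptions: the extra classifier is taken to be a linear layer on globally average-pooled ResBlock3 features, similarity is measured with the inner product as in CAM (the exact distance used in the paper is not specified in this section), the column order of the weight matrix is assumed, and upsampling the map to the slice resolution is omitted.

```python
import numpy as np


def lesion_location_map(features, weights):
    """CAM-style location map from ResBlock3 features and the extra (lesion) classifier.

    features: (C, H, W) image feature from the first three ResBlocks.
    weights:  (C, 2) classifier weights; column 0 = 'without lesion', column 1 = 'with lesion'
              (column order is an assumption).
    Returns the predicted class and an (H, W) map scaled to [0, 1].
    """
    pooled = features.mean(axis=(1, 2))        # assumed global average pooling -> (C,)
    pred = int(np.argmax(pooled @ weights))    # predicted coarse class
    prototype = weights[:, pred]               # prototypical feature of that class
    # Inner-product similarity between the prototype and every spatial feature vector.
    sim = np.tensordot(prototype, features, axes=([0], [0]))  # (H, W)
    sim = sim - sim.min()
    peak = sim.max()
    return pred, (sim / peak if peak > 0 else sim)


features = np.zeros((2, 3, 3))
features[1, 1, 1] = 9.0  # strong 'lesion-like' response at the centre
pred, attention = lesion_location_map(features, np.eye(2))
```

The same normalized map can then serve as the single-channel attention input that is passed on to ResBlock4.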

Fig. 10.

The visualizations of location maps from COVID-19, H1N1 and CAP cases. Each row has four groups. In each group, the left image is the raw CT slice and the right one shows the abnormality areas. Best viewed in color and zoomed in.

Fig. 11.

The visualizations of our system outputs for one COVID-19 patient. We show a CT sequence by sampling every five slices. The lesion location maps, with the predicted slice-level diagnosis on the upper right, are attached at the bottom of the corresponding raw CT slices. At the end of the sequence, the probability of each category predicted by the patient-level classification network is provided, i.e., 0: Healthy; 1: COVID-19; 2: H1N1; 3: CAP. Best viewed in color and zoomed in.

Fig. 12.

The visualizations of our system outputs for one COVID-19 patient. We show a CT sequence by sampling every five slices. The lesion location maps, with the predicted slice-level diagnosis on the upper right, are attached at the bottom of the corresponding raw CT slices. At the end of the sequence, the probability of each category predicted by the patient-level classification network is provided, i.e., 0: Healthy; 1: COVID-19; 2: H1N1; 3: CAP. Best viewed in color and zoomed in.

3). Coordinate Maps

From the literature [57], it is known that COVID-19 infections have several spatial characteristics. For example, they are frequently distributed bilaterally in the lungs, predominantly in the peripheral lower zones. However, convolutional neural networks primarily extract texture features. To explicitly capture spatial information, and inspired by [58], we integrate into our slice-level classification network coordinate maps of size $3 \times h \times w$, where $h$ and $w$ are the height and width of the image feature extracted from the first three ResBlocks, to facilitate the distinction among COVID-19, H1N1 and CAP. The first two channels of the coordinate maps are instantiated and filled with the $x$ and $y$ coordinates, respectively, and we further normalize them to fall in the range $[-1, 1]$. The last channel encodes the distance $d$ from the point $(x, y)$ to the center $(0, 0)$, i.e., $d = \sqrt{x^2 + y^2}$. Specifically, these three additional channels are fed into ResBlock4 together with the image feature and attention map to learn representations with spatial information.
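The three coordinate channels can be constructed in a CoordConv-like way, as in the minimal sketch below. It assumes evenly spaced coordinates mapped linearly onto [-1, 1], so that the center of the feature map sits at (0, 0); the function name `coordinate_maps` is hypothetical, and how the maps are combined with the image feature and attention map before ResBlock4 (e.g., channel concatenation) is not shown.

```python
import math


def coordinate_maps(h, w):
    """Return three h x w channels: normalized x, normalized y, distance to center."""

    def to_unit_range(i, n):
        # Map index 0..n-1 linearly onto [-1, 1]; a single index maps to 0.
        return 0.0 if n == 1 else -1.0 + 2.0 * i / (n - 1)

    x_map = [[to_unit_range(j, w) for j in range(w)] for _ in range(h)]
    y_map = [[to_unit_range(i, h) for _ in range(w)] for i in range(h)]
    # Euclidean distance from each point (x, y) to the center (0, 0).
    d_map = [[math.hypot(x, y) for x, y in zip(xr, yr)] for xr, yr in zip(x_map, y_map)]
    return [x_map, y_map, d_map]


maps = coordinate_maps(3, 5)  # e.g., a 3 x 5 feature map
```

The corners of the distance channel take the value $\sqrt{2}$ and the central point takes 0, so peripheral regions, where COVID-19 lesions are reported to concentrate, receive consistently larger values.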

C. Patient-Level Classification Network

As mentioned in Section I, performing diagnosis or risk assessment on individual CT slices alone is of limited value to clinicians. Although several studies [47], [48] exploit temporal information with 3D CNNs for patient-level differential diagnosis, they require thousands of patient-level samples for deep model training, which makes the cost particularly high. To overcome these limitations, we further propose a patient-level classification network. It takes a volume of CT slice-level features as input rather than 3D images and comprises only four layers, so it can be trained at lower hardware, time and data cost. Details are described below. Note that we concatenate the features from ResBlock3 and ResBlock4 in Section III-B as the input.

1). Feature Refinement and Aggregation Head

Inspired by [12], [59], we introduce a three-layer head to conduct feature refinement and aggregation, so that the image features from different slices can be correlated with each other and aggregated into one final feature for patient-level classification. The key intuition behind this is to utilize the attention mechanism to exploit the correlation between different CT slices, and accomplish the refinement and aggregation based on the explored correlation. As shown in Figure 6, the head includes a feature refinement module with two layers and a feature aggregation module with one layer, the structures of which are similar.

Fig. 6.

The details of our proposed patient-level classification network. It takes a volume of slice-level features as input, and feeds them into a feature refinement and aggregation head, so that the image features from different slices can be correlated with each other and aggregated into one final feature for patient-level classification.

Formally, for the feature refinement module, given a volume of CT image features with feature dimension $C$, we first use three parallel FC layers to map the input into three different feature spaces for dimension reduction and self-attention [12]. Then, we calculate the distance, as attention, between each pixel in different slices using the features from the first two spaces, and refine the features from the third space based on this attention. Finally, another FC layer expands the feature dimension back to $C$, so that the skip-connection operation [53] can be applied. Similar to [59], we also apply a multi-head mechanism2 to strengthen the refinement ability. Without loss of generality, we define the input volume feature as $F \in \mathbb{R}^{N \times C}$, where $N$ is the number of slices. In the overall formulation of our refinement module, the attention indicates the correlation between each pixel in different slices, the $i$-th FC layer has its own parameters, and the reshape operation is omitted for simplicity.
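One possible reading of the refinement layer is multi-head self-attention across slices with a residual connection, sketched below in pure Python for transparency (a real implementation would use PyTorch tensors). The softmax over scaled dot products is our assumption (a standard Transformer-style choice), as are the reduced width `c_reduced`, the omitted biases and the class name `FeatureRefinement`; none of these details are given here.

```python
import math
import random


def matmul(a, b):
    """Multiply an n x k matrix by a k x m matrix (both nested lists)."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(a):
    return [list(col) for col in zip(*a)]


def softmax_rows(a):
    """Row-wise softmax, shifted by the row maximum for numerical stability."""
    out = []
    for row in a:
        m = max(row)
        exps = [math.exp(v - m) for v in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def random_matrix(rows, cols, rng):
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]


class FeatureRefinement:
    """Hypothetical refinement layer over N slice features of dimension C."""

    def __init__(self, c, c_reduced, num_heads, seed=0):
        if c_reduced % num_heads:
            raise ValueError("c_reduced must be divisible by num_heads")
        rng = random.Random(seed)
        self.num_heads = num_heads
        self.w_q = random_matrix(c, c_reduced, rng)  # FC layer 1 (reduction)
        self.w_k = random_matrix(c, c_reduced, rng)  # FC layer 2 (reduction)
        self.w_v = random_matrix(c, c_reduced, rng)  # FC layer 3 (reduction)
        self.w_o = random_matrix(c_reduced, c, rng)  # FC layer 4: back to C

    def __call__(self, f):
        q, k, v = matmul(f, self.w_q), matmul(f, self.w_k), matmul(f, self.w_v)
        d = len(q[0]) // self.num_heads
        heads = []
        for h in range(self.num_heads):
            cols = slice(h * d, (h + 1) * d)
            qh = [row[cols] for row in q]
            kh = [row[cols] for row in k]
            vh = [row[cols] for row in v]
            scores = matmul(qh, transpose(kh))  # N x N slice-to-slice scores
            attn = softmax_rows([[s / math.sqrt(d) for s in row] for row in scores])
            heads.append(matmul(attn, vh))  # N x d refined values
        # Concatenate the heads for each slice, then expand back to C.
        merged = [[x for head in heads for x in head[n]] for n in range(len(f))]
        out = matmul(merged, self.w_o)
        return [[a + b for a, b in zip(x, y)] for x, y in zip(f, out)]  # skip connection


rng = random.Random(1)
volume = [[rng.random() for _ in range(8)] for _ in range(5)]  # N = 5, C = 8
refined = FeatureRefinement(c=8, c_reduced=4, num_heads=2)(volume)
```

Two such layers stacked would correspond to the two-layer refinement module. Because no positional encoding is used in this sketch, reordering the input slices simply reorders the refined outputs.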

With regard to the feature aggregation module, its structure, as well as its equations, is similar to the refinement module, except that we remove the multi-head mechanism and the last FC layer, and replace the first FC layer with a learnable parameter, so that all $N$ CT image features can be aggregated into one. Details can be found in Figure 6 (c).
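The aggregation step can be read as attention pooling with a learnable query vector taking the place of the first FC layer, which turns the N x N attention into a 1 x N weighting over slices. The sketch below follows that reading; the scaled-dot-product softmax, the width `c_reduced` and the class name `FeatureAggregation` are assumptions for illustration.

```python
import math
import random


def matmul(a, b):
    """Multiply an n x k matrix by a k x m matrix (both nested lists)."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row):
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


class FeatureAggregation:
    """Hypothetical single-head attention pooling of N slice features."""

    def __init__(self, c, c_reduced, seed=0):
        rng = random.Random(seed)
        # Learnable query vector that replaces the first (query) FC layer.
        self.query = [rng.gauss(0.0, 0.1) for _ in range(c_reduced)]
        self.w_k = [[rng.gauss(0.0, 0.1) for _ in range(c_reduced)] for _ in range(c)]
        self.w_v = [[rng.gauss(0.0, 0.1) for _ in range(c_reduced)] for _ in range(c)]

    def weights(self, f):
        """One attention weight per slice; the weights sum to 1."""
        keys = matmul(f, self.w_k)  # N x c_reduced
        scale = math.sqrt(len(self.query))
        return softmax([sum(q * k for q, k in zip(self.query, key)) / scale for key in keys])

    def __call__(self, f):
        a = self.weights(f)
        values = matmul(f, self.w_v)  # N x c_reduced
        # Weighted sum over slices; no output FC layer, as in the text.
        return [sum(w * v[j] for w, v in zip(a, values)) for j in range(len(values[0]))]


rng = random.Random(2)
slices = [[rng.random() for _ in range(6)] for _ in range(7)]  # N = 7, C = 6
pooled = FeatureAggregation(c=6, c_reduced=3)(slices)
```

Since the query does not depend on the input, the pooled vector is invariant to the order of the slices, which matches the goal of collapsing all N features into one.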

2). Multi-Scale Learning

If only a small number of slices contain lesions (e.g., at an early stage of infection), key information may be lost when the features of all $N$ slices are aggregated into one. Therefore, we introduce a multi-scale learning mechanism to aggregate features at different scales. As illustrated in Figure 6 (a), given a set of scales $S$, for each scale $s \in S$, we first divide the input feature $F$ evenly into $s$ parts, $\{F_1, \dots, F_s\}$. Then, a shared feature refinement and aggregation head is applied to each part. In the end, we concatenate the aggregated features from all parts at all scales, and feed the result into one FC layer that reduces its dimension to produce the final patient-level feature for classification.
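The partitioning behind the multi-scale mechanism can be sketched as follows. Two assumptions are labeled here: when N is not divisible by s, the parts differ in size by at most one slice, and an element-wise mean (`mean_pool`, hypothetical) stands in for the shared refinement and aggregation head. The final FC layer is omitted; the sketch only shows that the concatenated vector has length (sum of the scales) x C before that layer.

```python
def split_evenly(features, parts):
    """Split N slice features into `parts` contiguous chunks whose sizes
    differ by at most one (assumed behavior when N is not divisible)."""
    n = len(features)
    if parts > n:
        raise ValueError("cannot split fewer slices than parts")
    base, extra = divmod(n, parts)
    chunks, start = [], 0
    for i in range(parts):
        size = base + (1 if i < extra else 0)
        chunks.append(features[start:start + size])
        start += size
    return chunks


def mean_pool(chunk):
    """Stand-in for the shared feature refinement and aggregation head."""
    return [sum(col) / len(chunk) for col in zip(*chunk)]


def multi_scale_aggregate(features, scales=(1, 2, 3, 4), head=mean_pool):
    """Aggregate every part at every scale and concatenate the results."""
    pooled = []
    for s in scales:
        for chunk in split_evenly(features, s):
            pooled.extend(head(chunk))
    return pooled  # length = sum(scales) * C, before the final FC layer


volume = [[float(i), 1.0] for i in range(10)]  # N = 10 slices, C = 2
patient_vector = multi_scale_aggregate(volume)
```

With S = [1,2,3,4] there are 1 + 2 + 3 + 4 = 10 parts, so a lesion confined to a few slices dominates at least one small part instead of being diluted across the whole volume.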

IV. Experiments

A. Implementation Details

We implement our framework with PyTorch [60]. All CT slices are resized to $512 \times 512$. We set two hyper-parameters to 512 and 12, respectively, and use four scales in the feature refinement and aggregation head, i.e., S = [1,2,3,4]. For training, the slice-level/patient-level classification network is trained with two/one NVIDIA 1080Ti GPUs for a total of 110/90 epochs; the initial learning rate is 0.01/0.001 and is reduced by a factor of 0.1 every 40/30 epochs. Both classification networks are trained separately with the standard cross-entropy loss. Random flipping is adopted for data augmentation. During inference, our system runs end to end, since the input of the patient-level classification network is the output of the slice-level one, which allows it to be applied efficiently. We additionally use multiple window centers for multi-scale window-leveling and average the final predicted features/scores for assessment.
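The step-decay schedules and the multi-window test-time averaging above can be sketched as follows. The learning rates, decay factor, step sizes and epoch counts come from the text; the window center and width in the example are hypothetical values, since the centers actually used are not given here, and the helper names are illustrative.

```python
def step_lr(epoch, base_lr, step, gamma=0.1):
    """Step decay: multiply base_lr by gamma once every `step` epochs."""
    return base_lr * gamma ** (epoch // step)


def window_level(hu_values, center, width):
    """Clip Hounsfield units to [center - width/2, center + width/2], scale to [0, 1]."""
    low, high = center - width / 2, center + width / 2
    return [(min(max(v, low), high) - low) / (high - low) for v in hu_values]


def average_scores(score_lists):
    """Average the class-probability vectors predicted under different windows."""
    return [sum(col) / len(score_lists) for col in zip(*score_lists)]


# Slice-level network: 0.01, x0.1 every 40 epochs, 110 epochs in total.
slice_lrs = [step_lr(e, 0.01, 40) for e in range(110)]
# Patient-level network: 0.001, x0.1 every 30 epochs, 90 epochs in total.
patient_lrs = [step_lr(e, 0.001, 30) for e in range(90)]

# Hypothetical lung window: center -600 HU, width 1200 HU.
pixels = window_level([-1000, -600, 0, 200], center=-600, width=1200)
```

In PyTorch the same schedule is usually expressed with `torch.optim.lr_scheduler.StepLR(optimizer, step_size=40, gamma=0.1)`; the plain function is shown only to make the resulting learning rates explicit (0.01, then 0.001, then 0.0001 for the slice-level network).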

B. Statistical Analysis

For the statistical analysis, we apply a range of metrics to thoroughly evaluate the performance of the model, following standard protocol. Concretely, ‘sensitivity,’ also known as the true positive rate (TPR), indicates the percentage of positive patients who are correctly identified. ‘Specificity,’ referred to as the true negative rate (TNR), represents the percentage of negative persons who are correctly classified. ‘Accuracy’ is the percentage of subjects that are true positives (TP) or true negatives (TN). ‘False positive/negative error’ (FPE/FNE) measures the percentage of negative/positive persons who are misclassified as positive/negative. ‘False disease prediction error’ (FDPE) calculates the percentage of positive persons whose disease types (i.e., COVID-19, H1N1 or CAP) are predicted incorrectly. Receiver operating characteristic (ROC) curves and the area under the curve (AUC) are used to show the performance of the classifiers. We also report the $p$-values comparing our model with other competitors to demonstrate the significance level.3
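The metrics defined above can all be read off a 4 x 4 confusion matrix in which Healthy is the negative class. The sketch below is one interpretation, not the authors' evaluation code: the class order is assumed, accuracy is taken as correct predictions over all subjects, and the FDPE denominator is assumed to be all positive subjects, with positives predicted as Healthy counted in FNE instead. Under these definitions FPE equals 1 minus the Healthy sensitivity and FNE equals 1 minus the Healthy specificity, which agrees with Table II up to rounding (e.g., 2.83 = 100 - 97.17).

```python
HEALTHY = 0  # class order assumed: 0 Healthy, 1 COVID-19, 2 H1N1, 3 CAP


def screening_metrics(cm):
    """Screening metrics from a confusion matrix.

    cm[t][p] is the number of subjects with true class t predicted as class p.
    """
    k = len(cm)
    total = sum(sum(row) for row in cm)
    per_class = {}
    for c in range(k):
        tp = cm[c][c]
        fn = sum(cm[c]) - tp
        fp = sum(cm[t][c] for t in range(k)) - tp
        tn = total - tp - fn - fp
        per_class[c] = {"sensitivity": tp / (tp + fn), "specificity": tn / (tn + fp)}
    negatives = sum(cm[HEALTHY])
    positives = total - negatives
    # Healthy subjects predicted as any disease.
    fpe = (negatives - cm[HEALTHY][HEALTHY]) / negatives
    # Diseased subjects predicted as Healthy.
    fne = sum(cm[t][HEALTHY] for t in range(k) if t != HEALTHY) / positives
    # Diseased subjects predicted as a different disease.
    fdpe = sum(cm[t][p] for t in range(k) for p in range(k)
               if t != HEALTHY and p not in (HEALTHY, t)) / positives
    accuracy = sum(cm[c][c] for c in range(k)) / total
    return {"per_class": per_class, "accuracy": accuracy,
            "FPE": fpe, "FNE": fne, "FDPE": fdpe}


toy_cm = [[9, 1, 0, 0], [1, 8, 1, 0], [0, 0, 5, 0], [0, 1, 0, 4]]  # made-up counts
toy = screening_metrics(toy_cm)
```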

C. Experimental Results

1). Patient-Level Performance

The main purpose of our system is to assist the diagnosis of COVID-19 at the patient level rather than at the slice level as in [40]–[44], which is more meaningful and practical in real-world applications. Therefore, we first evaluate our system on the patient-level testing set with 102 Healthy, 96 COVID-19, 41 H1N1 and 53 CAP cases. The competitors include one 2D CNN-based method, COVNet [30], and three 3D CNN-based models, Med3D-50 [61], Med3D-18 [61] and DeCovNet [62]. The results are shown in Table II and Figure 7.

TABLE II. Comparing our Model With Several Competitors on Patient-Level Diagnosis. ‘FPE,’ ‘FNE’ and ‘FDPE’ Denote the Metrics of False Positive Error, False Negative Error and False Disease Prediction Error, Respectively. The Numbers in Square Brackets Represent the 95% Confidence Interval. ‘$p$’ Means the $p$-Value Comparing our Model With Other Competitors.

| Method | Healthy Sen. | Healthy Spec. | Healthy AUC | COVID-19 Sen. | COVID-19 Spec. | COVID-19 AUC | H1N1 Sen. | H1N1 Spec. | H1N1 AUC | CAP Sen. | CAP Spec. | CAP AUC | Overall Acc. | FPE | FNE | FDPE |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| COVNet | 51.92 | 96.88 | 96.30 | 95.88 | 80.74 | 95.15 | 58.33 | 94.86 | 84.82 | 60.71 | 85.83 | 77.89 | 68.84 [63.70, 74.32] | 48.08 | 3.13 | 18.97 |
| Med3D-50 | 69.70 | 90.59 | 91.44 | 75.00 | 88.83 | 88.22 | 93.02 | 97.24 | 97.70 | 79.39 | 90.96 | 92.09 | 76.37 [71.57, 80.83] | 30.30 | 9.41 | 10.45 |
| Med3D-18 | 93.26 | 100 | 99.99 | 95.05 | 99.49 | 99.81 | 92.86 | 100 | 100 | 98.16 | 93.67 | 99.41 | 94.52 [91.78, 96.92] | 6.74 | 0.00 | 4.62 |
| DeCovNet | 99.03 | 100 | 100 | 95.96 | 96.04 | 98.87 | 100 | 98.03 | 99.85 | 75.51 | 97.93 | 96.51 | 93.83 [91.10, 96.58] | 0.96 | 0.00 | 8.91 |
| Ours | 97.17 | 95.86 | 98.88 | 98.99 | 97.49 | 99.93 | 100 | 99.61 | 100 | 81.19 | 100 | 97.71 | 95.21 [92.81, 97.26] | 2.83 | 4.15 | 1.57 |
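The method used to obtain the bracketed 95% confidence intervals is not stated in this section. A common choice is the percentile bootstrap over test patients, sketched below as an assumption rather than as the authors' procedure; the function name and the 2,000 resamples are illustrative. The example uses the patient-level test set described above (102 + 96 + 41 + 53 = 292 patients), where an accuracy of 95.21% corresponds to about 278 correct diagnoses.

```python
import random


def bootstrap_accuracy_ci(correct, n_boot=2000, alpha=0.05, seed=0):
    """Percentile-bootstrap confidence interval for accuracy.

    correct: list of 0/1 flags, one per test patient (1 = correct diagnosis).
    Returns (accuracy, lower, upper) in percent.
    """
    rng = random.Random(seed)
    n = len(correct)
    accuracy = 100.0 * sum(correct) / n
    stats = []
    for _ in range(n_boot):
        # Resample patients with replacement and recompute accuracy.
        resample = [correct[rng.randrange(n)] for _ in range(n)]
        stats.append(100.0 * sum(resample) / n)
    stats.sort()
    k = int(alpha / 2 * n_boot)  # number of resamples cut from each tail
    return accuracy, stats[k], stats[n_boot - 1 - k]


flags = [1] * 278 + [0] * 14  # 278 of 292 patients classified correctly
acc, low, high = bootstrap_accuracy_ci(flags)
```

The resulting interval is close to, but need not exactly match, the reported [92.81, 97.26], since the resampling scheme and seed used for the table are unknown.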

Fig. 7.

ROC plots for patient-level classification. Best viewed in color and zoom in.

For the overall performance, our system achieves 95.21% accuracy, with only 2.83% false positive error and 4.15% false negative error. Although it may be difficult for clinicians to differentiate COVID-19 from other kinds of viral pneumonia or from CAP based on CT features, our system, as expected, is confused on only a small number of cases, i.e., 1.57% false disease prediction error, which beats the second-best model (Med3D-18, 4.62%) by a margin of 3.05 points. For H1N1, it obtains 100% sensitivity and 99.61% specificity, a promising result. Moreover, our system improves the sensitivity for COVID-19 to 98.99%, compared with 95.05% for Med3D-18 and 95.96% for DeCovNet. However, we observe that the specificity for Healthy is about 4 points lower than that of Med3D-18 and DeCovNet, and the sensitivity for Healthy is about 2 points lower than that of DeCovNet, which suggests that our model is somewhat oversensitive to noise. On the other hand, Med3D-50 unexpectedly performs much worse on most metrics, in sharp contrast to Med3D-18. Our explanation is that it may be difficult to train a 3D CNN with so many parameters on a limited dataset, which is consistent with our motivation for using a 2D CNN-based network.

In addition to performance, we also compare the computational cost of our system with that of the other competitors. As shown in Table III, our M Lung-Sys makes full use of the training data (CT slices and volumes) and has the lowest computational cost in terms of training time and GPU requirements. Combining the results in Table II and Table III, our method achieves better performance with fewer computing resources, which makes it more practical for assisting diagnosis.

TABLE III. The Comparison of Computational Costs, i.e., the Max Training Epoch, the Input Size, the Required GPUs, Training Time and the Available Training Data, Between our System and Several Competitors. The Shape of the Input Size is Batch-Size × Channel × Slice × Image Height × Width. The ‘N’ Denotes the Arbitrary Number of Slices When Training the Patient-Level Classification Network, and the Unit of the ‘Data’ is the Slice/Volume for ‘Slice-Level’/Other Methods. Note That the Input Size Is Limited by the GPU Memory.

| Methods | Epoch | Input Size | GPUs | Time (h) | Data |
|---|---|---|---|---|---|
| COVNet | 110 | 4 256 256 | 4 | 2.5 | 442 |
| Med3D-50 | 220 | 6 256 256 | 6 | 4.5 | 442 |
| Med3D-18 | 220 | 8 256 256 | 4 | 4 | 442 |
| DeCovNet | 220 | 16 256 256 | 4 | 2.5 | 442 |
| Slice-level | 110 | 32 512 512 | 2 | 2 | 70k |
| Patient-level | 90 | 16 N N 1 1 | 1 | 0.3 | 442 |

2). Slice-Level Performance

Another advantage of our proposed M Lung-Sys is that the input can flexibly be either CT slices or volumes, i.e., slice-level or patient-level diagnosis. We therefore further evaluate our model at the slice level, using a total of 48,818 CT slices from the four categories (i.e., Healthy, COVID-19, H1N1 and CAP) for testing. As shown in Figure 8, our model achieves AUCs of 98.40%, 98.99%, 100.00% and 94.58% for the four categories, respectively. This demonstrates the strong performance of our proposed M Lung-Sys on slice-level diagnosis.
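The per-category AUCs in Figures 7 and 8 are one-vs-rest values. A rank-based (Mann-Whitney) computation, which scales to tens of thousands of slices because it only needs one sort, can be sketched as below. The function name is hypothetical and the sketch is not the authors' evaluation code; in practice a library routine such as scikit-learn's `roc_auc_score` would typically be used.

```python
def one_vs_rest_auc(scores, labels, positive_class):
    """Rank-based (Mann-Whitney) AUC of one class against all others.

    scores: predicted probability of `positive_class` for each sample.
    labels: true class index of each sample. Tied scores get average ranks.
    """
    order = sorted(range(len(scores)), key=lambda i: scores[i])
    ranks = [0.0] * len(scores)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and scores[order[j + 1]] == scores[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1  # 1-based average rank of the tie group
        i = j + 1
    pos_ranks = [r for r, y in zip(ranks, labels) if y == positive_class]
    n_pos, n_neg = len(pos_ranks), len(scores) - len(pos_ranks)
    if n_pos == 0 or n_neg == 0:
        raise ValueError("need at least one positive and one negative sample")
    return (sum(pos_ranks) - n_pos * (n_pos + 1) / 2) / (n_pos * n_neg)


# Labels use 0: Healthy, 1: COVID-19, 2: H1N1, 3: CAP; scores are P(COVID-19).
covid_auc = one_vs_rest_auc([0.9, 0.8, 0.3, 0.1], [1, 1, 0, 2], positive_class=1)
```

Here `covid_auc` is 1.0 because both COVID-19 samples score above every other sample; a tie between a positive and a negative contributes one half.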

Fig. 8.

ROC plots for slice-level classification.

D. Ablation Study

1). Improvements in Patient-Level Classification Network

It is worth mentioning that our proposed slice-level classification network is strong and the extracted features are highly discriminative. Even simple parameter-free mathematical operations can obtain competitive results on patient-level diagnosis, while the proposed multi-scale mechanism and the refinement and aggregation head further boost performance. To verify this, we conduct experiments with different variants of the patient-level classification network, as shown in Table IV. More specifically, ‘Non-parametric Assessment’ denotes a simple variant without parameters for differential diagnosis (we refer readers to Appendix A for details). ‘Max pooling’ indicates that the input features are directly aggregated by a max pooling operation. ‘Single-scale + A. Head’ refers to a variant without the multi-scale mechanism (i.e., S = [3]) and without the feature refinement module. ‘Multi-scale + A. Head’ is similar to the previous variant but applies the multi-scale strategy (i.e., S = [1,2,3,4]).

TABLE IV. Improvements of Different Components in the Patient-Level Classification Network. ‘R.&A. Head’ is our Proposed Refinement and Aggregation Head, and ‘A. Head’ is a Variant Without the Refinement Module. ‘Non-Parametric Assessment’ Denotes That we Perform Patient-Level Classification Only With Several Non-Parametric Mathematical Operations. ‘FPE,’ ‘FNE’ and ‘FDPE’ Indicate the Metric of False Positive Error, False Negative Error and False Disease Prediction Error, Respectively. The Numbers in Square Brackets Represent the 95% Confidence Interval. ‘$p$’ Means the $p$-Value Comparing our Model With Other Competitors.

| Methods | Accuracy | FPE | FNE | FDPE |
|---|---|---|---|---|
| Non-parametric Assessment | 94.18 [91.44, 97.58] | 10.66 | 0.0 | 3.11 |
| Max pooling | 92.12 [89.04, 95.21] | 2.91 | 6.15 | 4.12 |
| Single-scale + A. Head | 93.15 [90.06, 95.56] | 4.95 | 5.32 | 2.59 |
| Multi-scale + A. Head | 94.18 [91.44, 96.58] | 2.89 | 5.22 | 2.04 |
| Multi-scale + R.&A. Head (Ours) | 95.21 [92.81, 97.26] | 2.83 | 4.15 | 1.57 |

From the results in Table IV and Figure 9, we highlight the following observations: (1) Using only the non-parametric assessment method, we achieve a competitive accuracy of 94.18%, which suggests that our slice-level classification network acquires strong feature representations. However, the large gap between the false positive error and the false negative error also reflects its inferior robustness, since a higher value of its hyper-parameter may result in more healthy cases being misdiagnosed due to noise. (2) From the second row to the last, the accuracy, false negative error and false disease prediction error improve steadily as more specifically designed components are added, which clearly demonstrates the benefits of our proposed feature refinement and aggregation head and multi-scale mechanism. (3) We notice that the false positive error gets worse with ‘Single-scale + A. Head’ (4.95% vs. 2.91% for max pooling) and decreases dramatically once the multi-scale mechanism is involved (2.89%). We argue that this does not suggest the inferiority of our proposed ‘A. Head’ (since the overall accuracy is improved by about 1 point), but reflects the importance and rationality of the multi-scale mechanism.

Fig. 9.

The ROC curves of different variants in patient-level classification network. ‘R.&A. Head’ is our refinement and aggregation head, and ‘A. Head’ is a variant without the refinement module. Best viewed in color and zoom in.

2). Improvements in Slice-Level Classification Network

To explicitly demonstrate the advantages of the improvements in our slice-level classification network, we compare it with several variants on patient-level diagnosis. Without loss of generality, we choose the non-parametric assessment method as the patient-level classification network. Concretely, since the backbone of our slice-level classification network is ResNet-50, which is widely adopted by other works [30], [42], we directly train a vanilla ResNet-50 for four-way classification as a baseline. Based on this, we conduct further experiments by gradually adding different improvements, including multi-task learning, lesion location (attention) maps and coordinate maps.

All results are listed in Table V. Compared with the baseline, our model improves on all four metrics. For example, the overall accuracy increases from 89.04% to 94.18%, and the false positive error is reduced by about 12 points (from 22.46% to 10.66%). Although the multi-task mechanism yields a few more false disease predictions (from 3.65% to 4.74%), it dramatically reduces both false positive and false negative errors. We expect this two-task approach, which acts like a radiologist, to better distinguish between healthy people and patients. Furthermore, when the coordinate maps are introduced, fewer positive samples are misclassified as the wrong type of disease and some noisy negative cases are correctly diagnosed as negative, decreasing both the false positive and the false disease prediction errors. These results suggest that the components of the slice-level classification network play important roles in extracting discriminative features, e.g., attention maps for awareness of small lesions, and coordinate maps for capturing location diversity among different types of pneumonia.

TABLE V. Improvements of Different Components in the Slice-Level Classification Network. ‘FPE,’ ‘FNE’ and ‘FDPE’ Indicate the Metrics of False Positive Error, False Negative Error and False Disease Prediction Error, Respectively. The Numbers in Square Brackets Represent the 95% Confidence Interval. ‘$p$’ Means the $p$-Value Comparing our Model With Other Competitors.

| Method | Multi-task | Attention | Coordinate | Accuracy | FPE | FNE | FDPE |
|---|---|---|---|---|---|---|---|
| ResNet-50 | | | | 89.04 [85.27, 92.47] | 22.46 | 1.04 | 3.65 |
| | ✔ | | | 92.12 [89.04, 94.86] | 13.40 | 0.00 | 4.74 |
| | ✔ | ✔ | | 93.49 [90.41, 95.89] | 11.88 | 0.00 | 3.57 |
| Ours | ✔ | ✔ | ✔ | 94.18 [91.44, 97.58] | 10.66 | 0.00 | 3.11 |

E. Visualizations of Lesion Localization

As one of its contributions, our M Lung-Sys can perform lesion localization using only category labels, i.e., weakly-supervised learning. To qualitatively evaluate this, we randomly select several CT slices of three categories from the testing set, and show the visualizations of lesion location maps in Figure 10. For each group, the left image is the raw CT slice after lung cropping, and the right image depicts the detected area of abnormality. Note that a warmer color indicates that the corresponding region has a higher probability of being infected. Several observations can be made from Figure 10:

1) First of all, the quality of the location maps is competitive. All highlighted areas are concentrated in the left or right lung region, and most abnormal manifestations, such as ground-glass opacities (GGO), are captured by our model, which is trained without any pixel-level or bounding-box labels. In addition, we observe no extraneous area, such as the vertebrae, skin or other tissues, being mistaken for a lesion. Our system can even precisely detect small lesions with a relatively high response, as shown in the top-right image of Figure 10 (a). Overall, our system achieves visual localization of abnormal areas with good interpretability, which is crucial for assisting clinicians in diagnosis and improving the efficiency of medical systems.

2) Second, we find that the location maps are consistent with the experience and conclusions of radiologists. Several studies have found that COVID-19 typically presents GGO with or without consolidation in a predominantly peripheral distribution [63]–[65], which has already been used as guidance for COVID-19 diagnosis endorsed by the Society of Thoracic Radiology, the American College of Radiology, and RSNA [66]. In contrast, H1N1 pneumonia most commonly presents a predominantly peribronchovascular distribution [67], [68]. Lobar or segmental consolidation and cavitation suggest a bacterial etiology [10]. Therefore, the location maps can reflect the characteristics of lesion distributions to some extent, which may be a valuable indicator for clinicians when analyzing or differentiating these three diseases.

To fully demonstrate the practicability and effectiveness of our system, we further simulate its real-world application and present its outputs, i.e., the diagnostic assessment of diseases and the localization of lesions. Concretely, we randomly select two COVID-19 patients and feed their CT exams into our system. The full outputs are illustrated in Figures 11 and 12. Due to page limits, we show each CT sequence by sampling every five slices. The lesion location maps, with the predicted slice-level diagnosis on the upper right, are attached at the bottom of the corresponding raw CT slices. At the end of the sequence, the probability of each category predicted by the patient-level classification network is provided (i.e., 0: Healthy; 1: COVID-19; 2: H1N1; 3: CAP). As can be seen, our system accurately locates the lesion areas in each slice,4 and these areas show good continuity across the sequence, both of which are very important for assisting clinicians in diagnosing COVID-19.

V. Discussion

In this paper, we proposed a multi-task multi-slice deep learning system (M Lung-Sys) to assist clinicians by simultaneously screening multi-class lung pneumonia, i.e., Healthy, COVID-19, H1N1 and CAP, and locating lesions from both slice-level and patient-level CT exams. Unlike previous studies, which incur high hardware, time and data costs to train 3D CNNs, our system divides this procedure into two stages: slice-level classification and patient-level classification. We first use the slice-level classification network to classify each CT slice. A multi-task learning mechanism makes our model diagnose like a radiologist, first checking whether each CT slice contains any abnormality and then determining what kind of disease it is. Attention maps and coordinate maps are further applied to provide awareness of small lesions and to capture location diversity among different types of pneumonia. With these components, our system achieves 94.18% accuracy, 10.66% false positive error and 0.0% false negative error. Then, to recover the temporal information in CT slice sequences and enable CT volume screening, we proposed a patient-level classification network that takes as input a volume of CT slice features extracted by the slice-level classification network. This network performs feature interaction and aggregation across slices for patient-level diagnosis. Consequently, it further improves our system by dramatically reducing false positives and false disease predictions and by raising the accuracy by 1.03 points (from 94.18% to 95.21%).

Above all, from a clinical perspective, our system can perform differential diagnosis not only with a single CT slice but also with a whole CT volume. The combined outputs of risk assessment (the predicted diagnosis) and lesion location maps make it more flexible and valuable to clinicians. For example, clinicians can easily estimate the percentage of infected lung area or quickly check the lesions at any time before making a decision. As illustrated in Figures 11 and 12, these lesion location maps help clinicians understand why the deep learning model gives a particular prediction, rather than just being faced with a statistic.
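As a rough illustration of how a lesion location map could be turned into a percentage of infected lung area, the sketch below min-max normalizes the map, thresholds it, and counts the above-threshold pixels inside a lung mask. The lung mask, the 0.5 threshold, and the function name `infected_fraction` are all assumptions for illustration; the text above does not specify how such an estimate is computed.

```python
import numpy as np


def infected_fraction(lesion_map, lung_mask, threshold=0.5):
    """Fraction of lung pixels whose normalized lesion score reaches `threshold`.

    lesion_map: lesion scores, e.g. (H, W) for a slice or (T, H, W) for a volume.
    lung_mask:  boolean array of the same shape marking lung pixels (assumed given).
    """
    lesion_map = np.asarray(lesion_map, dtype=np.float64)
    lung_mask = np.asarray(lung_mask, dtype=bool)
    if lesion_map.shape != lung_mask.shape:
        raise ValueError("lesion_map and lung_mask must have the same shape")
    lung_pixels = lung_mask.sum()
    if lung_pixels == 0:
        return 0.0
    lo, hi = lesion_map.min(), lesion_map.max()
    # Min-max normalize over the whole map so the threshold ignores raw scale;
    # a constant map carries no localization signal and is treated as all zeros.
    if hi > lo:
        norm = (lesion_map - lo) / (hi - lo)
    else:
        norm = np.zeros_like(lesion_map)
    infected = (norm >= threshold) & lung_mask
    return float(infected.sum() / lung_pixels)


if __name__ == "__main__":
    scores = np.array([[0.0, 1.0], [0.2, 0.9]])
    print(infected_fraction(scores, np.ones((2, 2), dtype=bool)))  # 0.5
```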

Despite this strong performance, three limitations of our system remain to be addressed. First, although clinicians can easily distinguish COVID-19 from healthy cases, Table 2 shows that our system may still misclassify some healthy people. Examining these failure cases, we find that the main challenge lies in pulsatile artifacts in pulmonary CT imaging. Second, our framework, which contains separate slice-level and patient-level classification networks, is not yet end-to-end trainable. Although the two-stage design adds only negligible training and testing time, we expect that end-to-end training would be more conducive to learning and combining spatial and temporal information. Third, our proposed localization maps accurately show the location of abnormal regions, which helps clinicians in diagnosis; however, they cannot yet automatically visualize the distinctive lesion distribution of each disease.

In the future, we will attempt to tackle the first and third limitations by improving the attention mechanism to enhance the feature representations; further developing the coordinate maps may also be a promising option. Admittedly, overcoming the third limitation is a very challenging and ambitious objective, but we will continue to pursue research along this line. As for the second limitation, we plan to refine our method into a unified framework in which, through end-to-end training, spatial and temporal information can complement each other.

VI. Conclusion

In conclusion, we proposed a novel multi-task multi-slice deep learning system (M Lung-Sys) for multi-class lung pneumonia screening from chest CT images. Unlike previous 3D CNN approaches, which incur a substantial training cost, our system uses two 2D CNN networks, i.e., slice-level and patient-level classification networks, to learn discriminative features in the spatial and temporal domains, respectively. With these designs, our system can not only be trained at much lower cost in time, data, and GPU resources, but can also perform differential diagnosis with either a single CT slice or a CT volume. More importantly, without any pixel-level annotation for training, our system simultaneously outputs lesion localization for each CT slice, which is valuable to clinicians for diagnosis, allowing them to understand why our system gives a particular prediction rather than just being faced with a statistic. Given the strong experimental results on 292 test cases with multi-class lung pneumonia (102 healthy people, 96 COVID-19 patients, 41 H1N1 patients, and 53 CAP patients), our system shows great potential for clinical application.


Acknowledgment

The authors would like to thank all of the clinicians, patients, and researchers who gave valuable time, effort, and support to this project, especially in data collection and annotation. Additionally, we appreciate the contributions to this paper by Wenxuan Wang (for help with paper revision), Junlin Hou (for her suggestions on 3D baselines), and Longquan Jiang (for assistance with data processing).

Appendix A. Non-Parametric Assessment

Given a CT exam consisting of a sequence of slices, we feed the slices into our slice-level classification network to obtain two kinds of probabilities for each slice: the probability of whether the slice contains any lesion, and the predicted probability of the multi-class pneumonia assessment (i.e., 0: Healthy; 1: COVID-19; 2: H1N1; 3: CAP). We then derive the final probabilities of the four classes for each slice from these two outputs.

Intuitively, if all or most of the slices are predicted as Healthy, the patient has a very high chance of being healthy. Otherwise, the patient is diagnosed with COVID-19, H1N1, or CAP, according to the CT imaging manifestations. Our proposed non-parametric holistic assessment at the patient level is formulated to simulate this process.

A hyper-parameter controls the degree of 'most of', that is, the proportion of healthy (normal) slices required for a Healthy diagnosis. Normally, the chest CT slices of healthy people should be nearly or completely all normal. Therefore, without loss of generality, we set this hyper-parameter to a reasonable and acceptable value in this paper.
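The intuition behind this non-parametric assessment can be sketched in code. Where the formulas are not spelled out, the sketch makes its own assumptions: a slice's Healthy probability is taken as the probability of containing no lesion; each disease class receives the lesion probability multiplied by the multi-class probabilities renormalized over the three disease classes; and a patient who is not diagnosed as Healthy is assigned the disease with the largest summed probability over the slices not predicted as Healthy. The function names, the class constants, and the default threshold `tau=0.9` are hypothetical.

```python
import numpy as np

HEALTHY, COVID19, H1N1, CAP = 0, 1, 2, 3


def slice_probabilities(p_lesion, p_class):
    """Combine the two slice-level outputs into 4-class probabilities.

    p_lesion: (T,) probability that each slice contains any lesion.
    p_class:  (T, 4) multi-class probabilities (0 Healthy, 1 COVID-19, 2 H1N1, 3 CAP).
    Assumed rule: P(Healthy) = 1 - p_lesion; P(disease k) = p_lesion times
    p_class[k] renormalized over the three disease classes.
    """
    p_lesion = np.asarray(p_lesion, dtype=np.float64)
    p_class = np.asarray(p_class, dtype=np.float64)
    disease = p_class[:, 1:]
    disease = disease / np.clip(disease.sum(axis=1, keepdims=True), 1e-12, None)
    return np.column_stack([1.0 - p_lesion, p_lesion[:, None] * disease])


def patient_diagnosis(slice_probs, tau=0.9):
    """Non-parametric patient-level decision (sketch).

    Healthy if the fraction of slices predicted Healthy is at least `tau`;
    otherwise the disease with the largest summed probability over the
    slices that were not predicted Healthy.
    """
    if not 0.0 < tau <= 1.0:
        raise ValueError("tau must lie in (0, 1]")
    slice_probs = np.asarray(slice_probs, dtype=np.float64)
    labels = slice_probs.argmax(axis=1)
    healthy_ratio = np.mean(labels == HEALTHY)
    if healthy_ratio >= tau:
        return HEALTHY
    # healthy_ratio < tau <= 1 guarantees at least one abnormal slice here.
    abnormal = slice_probs[labels != HEALTHY]
    # +1 maps the index within the disease columns back to the class id.
    return int(abnormal[:, 1:].sum(axis=0).argmax()) + 1
```

Under these assumptions, a single abnormal slice among many normal ones changes the diagnosis only if it pushes the healthy-slice ratio below `tau`, which mirrors the 'most of' criterion in the text.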

Funding Statement

This work was supported in part by NSFC Project under Grant 62076067, in part by STCSM Projects under Grants 20441900600 and 19ZR1471800, and in part by Shanghai Municipal Science and Technology Major Project under Grant 2017SHZDZX01.

Footnotes

Large-scale patient-level training sets, which 3D CNN-based methods require, are very difficult to obtain due to various complex factors, e.g., time limitations and patient privacy. However, even a small number of CT volumes can provide plenty of slice-level samples with category labels, which can be used to train a 2D CNN system.

In this paper, we use the bootstrap method to sample a large number of test sets with replacement (e.g., 1000 groups), and then calculate the p-values from the groups of results evaluated by our model and by each competitor, respectively.
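A minimal sketch of a paired bootstrap comparison in the spirit of this footnote follows. The footnote does not state how the p-value is computed from the resampled groups, so the sketch assumes a common one-sided choice: the fraction of resampled test sets on which our model's accuracy does not exceed the competitor's. The function name, the use of per-case accuracy as the metric, and the fixed seed are assumptions.

```python
import numpy as np


def bootstrap_p_value(ours_correct, other_correct, n_groups=1000, seed=0):
    """One-sided paired bootstrap p-value for an accuracy difference.

    ours_correct, other_correct: per-case 0/1 correctness on the same test set.
    Each group resamples case indices with replacement, so both models are
    scored on identical cases; the p-value is the share of groups in which
    our accuracy is not higher than the competitor's.
    """
    ours = np.asarray(ours_correct, dtype=np.float64)
    other = np.asarray(other_correct, dtype=np.float64)
    if ours.shape != other.shape or ours.ndim != 1:
        raise ValueError("expected two 1-D arrays of equal length")
    rng = np.random.default_rng(seed)
    n = ours.shape[0]
    # One row of resampled case indices per bootstrap group.
    idx = rng.integers(0, n, size=(n_groups, n))
    diff = ours[idx].mean(axis=1) - other[idx].mean(axis=1)
    return float(np.mean(diff <= 0.0))
```

Resampling case indices jointly for both models, rather than for each model separately, keeps the comparison paired, so per-case difficulty affects both accuracies in the same way.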

For slices classified as Healthy, our system detects and visualizes the areas 'without lesions' (described in Section III-B2), so almost the entire image is highlighted.

Contributor Information

Xuelin Qian, Email: xlqian15@fudan.edu.cn.

Huazhu Fu, Email: hzfu@ieee.org.

Weiya Shi, Email: shiweiya@shphc.org.cn.

Tao Chen, Email: eetchen@fudan.edu.cn.

Yanwei Fu, Email: yanweifu@fudan.edu.cn.

Fei Shan, Email: shanfei@shphc.org.cn.

Xiangyang Xue, Email: xyxue@fudan.edu.cn.
