Abstract

Strong evidence supporting the top-down modulation of attention has come from studies in which participants learned to suppress a singleton in a heterogeneous four-item display. These studies have been criticized on the grounds that the displays are so sparse that the singleton is not actually salient. We argue that similar evidence of suppression has been found with substantially larger displays where salience is not in question. Additionally, we examine the results of applying salience models to four-item displays, and find prominent markers of salience at the location of the singleton. We conclude that small heterogeneous displays do not preclude strong salience signals. Beyond that, we reflect on how further basic research on salience may speed resolution of the attentional capture debate.

Luck, Gaspelin, Folk, Remington, & Theeuwes (2021) should be thanked for their efforts to resolve a controversy that has been going on for some 25 years. In this commentary we look at a narrow slice of this controversy, and use it to explore a much larger question: What is salience?

In a series of studies, Gaspelin, Luck, and colleagues (e.g., Gaspelin, Leonard & Luck, 2015) have come up with what would seem to be strong evidence of top-down inhibitory control of attention. Their paradigm involves randomly intermixed search and probe trials (see the section on “Evidence for singleton suppression” in the target article). The search displays provide participants the opportunity to learn to inhibit one color; the inhibition is then demonstrated by performance in the probe trials, although analyses of some aspects of the search data also demonstrate inhibition. In another series of studies, Chang and Egeth (e.g., 2019) adapted this method to make the results more clearly related to perception rather than memory, and showed that top-down control can enhance as well as inhibit stimuli. However, all these demonstrations have come under attack on the grounds that the singletons used in these studies are not salient enough to induce participants to use a priority map. Instead, it has been suggested that their search displays probably induced serial search.

What is the alleged problem? Two chief criticisms have been leveled (see, e.g., Wang & Theeuwes, 2020). First, the search displays typically contain few elements, frequently only four (see, for example, Figure 1). It seems widely accepted that saliency should increase as the number of elements in the display increases. (For example, in a red/green display, the salience of a single red item is said to increase as the number of green items increases.) This has given warrant to those who would like to claim that four items are too few to generate salience. Second, the search task is designed to prevent participants from using singleton detection mode, and instead forces them to use a feature-search strategy. That is accomplished by using displays with heterogeneous nontargets. However, Duncan and Humphreys (1989) long ago showed that increasing display heterogeneity has detrimental effects on target detection time. The claim is that saliency, of both the target and of the irrelevant singleton, is reduced as heterogeneity increases (see, e.g., Wang & Theeuwes, 2020, who showed that the suppression demonstrated by Gaspelin et al. (2015) did not occur when 10-element heterogeneous displays were used). “Wang and Theeuwes (2020) argued that in these type of displays with a limited number of heterogeneous elements, there is no capture by the color singleton not because of some assumed top-down feature suppression but simply because there is no salient priority signal to begin with” (Luck et al., 2021, p. 16). There may be other problems with small displays (e.g., Kerzel & Burra, 2020); what we address here is the argument that the small displays used by Gaspelin and colleagues and by Chang and Egeth lack salience.

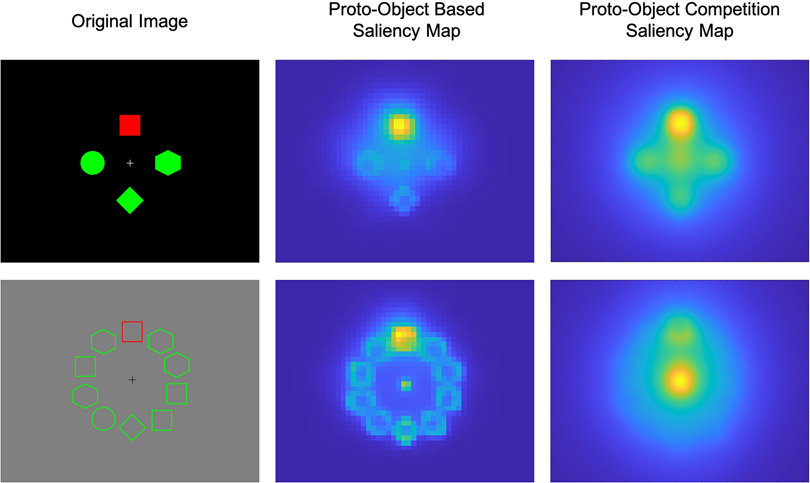

Figure 1.

Top row: Left shows a stimulus typical of those used by Gaspelin, Leonard, and Luck (2015) and by Chang and Egeth (2019); center shows the output of the Russell et al. (2014) saliency model when applied to that stimulus; and right shows the output of the Jeck et al. (2019) model. Bottom row: Left shows a stimulus typical of those used by Theeuwes and his colleagues (e.g., Wang & Theeuwes, 2020); center and right show the outputs of the two saliency models applied to that stimulus.

The arguments that stem from the Duncan and Humphreys analysis are quite reasonable. However, it doesn’t follow that they are sufficient to undermine the conclusions drawn from the studies using size-four displays. That is, even if the salience were lower in size-four displays it might still be potent enough to guide and even capture attention. We should note first that results showing top-down control have been obtained with displays of sizes up to 10, using both feature-search and singleton-search tasks (e.g., Gaspar & McDonald, 2014; Gaspelin et al., 2015). Additionally, in forthcoming work Stilwell and Gaspelin (2021) report suppression in displays of size 10 and 30. They were able to obtain this result by using displays like those in Wang and Theeuwes (2020) but correcting a design flaw in that experiment; with large numbers of items in the probe, one encounters floor effects on report accuracy, which make below-baseline (i.e., suppression) effects difficult to observe in the probe task. Stilwell and Gaspelin avoided this problem by using large display sizes in their search task, but only four items in their probe displays. These results show that it is not necessary to rely on the data from search tasks that used four items in the search displays to conclude that top-down control is operative. However, it is instructive to look more closely at that condition for what it tells us about salience.

Is it true that the N = 4 displays have low salience? Consider the stimulus displays in Figure 1. To us, the red items in both displays look highly salient. However, intuition may not be a good guide, as people might well disagree about that judgement. Another approach is to run the stimuli through a program that implements a computational model of salience. There are many such models. We chose two recent ones that were readily available to us (Jeck, Qin, Egeth, & Niebur, 2019; Russell, Mihalaş, von der Heydt, Niebur, & Etienne-Cummings, 2014). In Figure 1 we show stimuli like those frequently used by Theeuwes, and like those used by Gaspelin and Luck and by Chang and Egeth. Figure 1 also shows the salience maps that each model generated for those stimuli. First, we note that one can see the display as showing a collection of four (top) or ten (bottom) objects (circles, squares, diamonds, etc.). At this scale, the salience of individual objects is determined by the usual center-surround differences in the various submodalities that are part of each computational model. But this collection can also be seen as a single perceptual object with four, or ten, parts. At this larger scale, there is only one object in the scene, which makes it by default salient. Our computational models take both of these views into account. The saliency maps produced by the models thus show a local maximum at the location of the small-scale object that differs most from the others (the red square at the top), as well as another maximum in the center of the large-scale composite object. For present purposes, the chief observation is that there is a prominent “hot spot” at the location of the irrelevant singleton, and this is the case for both the N = 4 and N = 10 stimuli. We take this to mean that the singleton is, indeed, salient even with as few as four items, and even though the nontargets are heterogeneous, as must be the case in a feature-search condition.
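To make the notion of a feature-contrast "hot spot" concrete, the following is a deliberately crude sketch and not an implementation of the Russell et al. (2014) or Jeck et al. (2019) models. It assumes that each item is reduced to a single RGB color, ignores shape, spatial layout, and scale, and scores each item by its mean color distance to all other items. The function name `toy_feature_contrast` and the color values are hypothetical choices made only for illustration.

```python
import math

def toy_feature_contrast(colors):
    """Score each item by its mean Euclidean RGB distance to every other item.

    This is a toy stand-in for feature contrast. It is NOT the proto-object
    models discussed in the text and it ignores spatial layout entirely.
    """
    n = len(colors)
    return [
        sum(math.dist(c, other) for j, other in enumerate(colors) if j != i) / (n - 1)
        for i, c in enumerate(colors)
    ]

# Hypothetical colors: green nontargets and one red singleton.
GREEN = (0.0, 1.0, 0.0)
RED = (1.0, 0.0, 0.0)

display_4 = [GREEN, GREEN, RED, GREEN]   # singleton at index 2
display_10 = [GREEN] * 9 + [RED]         # singleton at index 9

for name, display in (("N=4", display_4), ("N=10", display_10)):
    scores = toy_feature_contrast(display)
    peak = max(range(len(scores)), key=scores.__getitem__)
    print(name, "peak at item", peak, "is the red singleton:", display[peak] == RED)
```

Under these assumptions the highest score falls on the color singleton at both display sizes. This mirrors the qualitative pattern in Figure 1 and says nothing about the absolute salience values the full models produce.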

The numerical salience values of the color singleton are higher for the 10-item displays than the 4-item displays for the Russell et al. (2014) model, as we might expect from Duncan and Humphreys (1989). Interestingly, this is reversed in the Jeck et al. (2019) model.

The Gaspelin and Luck and the Chang and Egeth studies are not the only ones that have been criticized for using displays that lack salience because they are said to be overly sparse. The same argument has emerged in the literature concerning whether “pop out” is due strictly to stimulus salience or whether it may be modulated by what has occurred on previous trials (a “history” effect). Such effects may be strong when salience is supposedly low (i.e., at small set sizes), and weak when salience is supposedly high and dominates any priming effect from previous trials. One strong piece of evidence supporting this argument is that feature-priming effects, which are robust at small display sizes (e.g., 3 or 4), were reduced or eliminated in a tightly packed 12-item display (Meeter & Olivers, 2006). However, Becker and Ansorge (2013) subsequently performed similar experiments, but used eye tracking instead of manual responses to provide a better measure of early attentional processing. They found that the proportion of first target fixations and the latency of those fixations showed no evidence that priming was eliminated at larger set sizes.

It is worth going into a bit more detail about the effects of display size. The broad claim is that saliency of, for example, a red item among green items should increase as the number of green items increases. Why should this be? One reason this might be the case can be traced back to an argument made by Sagi and Julesz (1987). They argued that for a target to be detected preattentively, it must be within some small critical distance of a nontarget, thus creating a local feature gradient. As the number of elements in a display increases, with total area held constant, the probability of a target and a nontarget falling within that critical distance increases. (Sagi and Julesz were concerned with accuracy, not reaction time, but it seems clear enough how this logic can be applied to make predictions about reaction time.) Bacon and Egeth (1991) subjected this argument to a direct test. They independently manipulated display numerosity and target-nontarget separation. Search time was unaffected by separation, but did decrease with numerosity. Why was that? In a subsequent experiment, when grouping among the nontargets was inhibited, it was found that RT no longer decreased with increasing display numerosity. It was concluded that nontarget grouping is a critical factor, and that “salience” (here, the feature gradient created by juxtaposition of a target and one or more nontargets) is not.
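The geometric premise of the critical-distance argument can be illustrated with a small Monte Carlo sketch. It assumes that items are placed independently and uniformly at random in a unit square (real search displays usually place items at fixed or constrained positions), and the critical distance of 0.15 is an arbitrary, hypothetical value. The function name `prob_neighbor_within` is likewise introduced only for this example.

```python
import math
import random

def prob_neighbor_within(n_items, critical_dist, n_trials=20000, seed=0):
    """Estimate P(at least one nontarget lies within critical_dist of the target).

    Assumption: the target and the n_items - 1 nontargets are placed
    independently and uniformly at random in a unit square of fixed area.
    """
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_trials):
        tx, ty = rng.random(), rng.random()          # target position
        for _ in range(n_items - 1):
            nx, ny = rng.random(), rng.random()      # one nontarget position
            if math.hypot(nx - tx, ny - ty) <= critical_dist:
                hits += 1
                break                                # one close neighbor suffices
    return hits / n_trials

# Hypothetical critical distance, in units of the display width.
CRITICAL_DIST = 0.15

for n in (4, 10, 30):
    p = prob_neighbor_within(n, CRITICAL_DIST)
    print(f"display size {n:2d}: estimated probability {p:.2f}")
```

The estimated probability rises with display size, which is all the premise requires. The sketch does not show that local feature gradients drive search performance. The Bacon and Egeth (1991) results described above suggest that nontarget grouping, not target-nontarget separation, accounts for the numerosity effect.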

So, what is salience? It seems that although salience is a basic concept in vision science, and we have some good approximations of it, it is not yet fully understood. Some evidence for this comes from a recent analysis of how well saliency models can detect odd-one-out targets. Kotseruba, Wloka, Rasouli, and Tsotsos (2019) collected large numbers of both abstract and natural scenes with one singleton each and ran nearly three dozen saliency models on them. They found that the models typically detect odd-one-out targets only after many iterations, in some cases more than would be expected by chance.

Many of these models go back to the functional definition of saliency as integrated local feature gradients, formalized by Koch and Ullman (1985). This concept has been extremely useful, and continues to be so, in particular after its quantitative implementation by Itti, Koch, and Niebur (1998) that made it accessible to a large community of vision scientists and technologists. A large number of studies have shown that this class of models can predict rather well where humans fixate, again both in abstract and in natural scenes. Their predictive power goes beyond eye movements, all the way to making conscious decisions and using hand/arm movements to indicate what observers consider the most salient image regions (e.g., Jeck et al., 2019).

Why, then, did the models do so poorly on detecting singletons in the Kotseruba et al. paradigm? Although that study does not compare model performance with human behavior (except for a small number of images outside their main data set), one might have expected that saliency models would perform well on the task. This is clearly not the case. A possible explanation is given by a closer look at the differences in the models' performance for different image types. Many models work relatively well for those features that can be easily defined in terms of basic image properties, like color, which is why we felt justified applying salience models to our sample stimuli (see Figure 1). The Jeck et al. (2019) and the Russell et al. (2014) models were not included in the Kotseruba et al. survey, but we were confident in selecting them because they do a good job of detecting singletons and correlating with human performance.

However, many models perform worse in those cases where the difference between the target (the singleton) and the distractors is less easily quantified, and is therefore difficult to implement in a computer program. One example is size: psychophysical evidence suggests that objects whose size differs from that of their neighbors are salient (Wolfe & Horowitz, 2004). In principle, size is easily quantifiable, but measuring and comparing the size of different objects requires a definition of what an object is, a highly non-trivial endeavor. It may thus not be surprising that many of the models tested by Kotseruba et al. found color-defined singletons quite effectively but did very poorly on finding objects whose size differs from those in their surround.

It is possible that the problem is not the basic architecture of the many saliency models that are based on the integration of multiple feature gradients at multiple scales. Instead, the problem may occur one stage earlier, in the definition of the underlying “features” themselves. For instance, future work in understanding perceptual organization will hopefully allow us to gain more insight into the representation of objects, which will then allow quantification of their characteristic features, including size. Many of the problems of current saliency models may then be solved by using this representation as their input. Yet it is also possible that this approach is too narrow. The mere existence of a large number of computational models with different architectures may indicate a lack of deep understanding of what saliency is, and even whether it is a unified concept. Advances in this area will no doubt facilitate completion of the project of “resolving the attentional capture debate” (Luck et al., 2021).

Acknowledgments

We would like to acknowledge support from NIH grant R01DA040990 and NSF grant 1835202 for Ernst Niebur, and NIH grant R01 MH113652 for Howard Egeth. Thanks are due to Danny Jeck and Takeshi Uejima for help in applying saliency models to sample stimuli.

Footnotes

We have no known conflicts of interest to disclose.

References

- Bacon WF, & Egeth HE (1991). Local processing in preattentive feature detection. Journal of Experimental Psychology: Human Perception and Performance, 17(1), 77–90.

- Becker SI, & Ansorge U (2013). Higher set sizes in pop-out search displays do not eliminate priming or enhance target selection. Vision Research, 81, 18–28. 10.1016/j.visres.2013.01.009

- Chang S, & Egeth HE (2019). Enhancement and suppression flexibly guide attention. Psychological Science, 30(12), 1724–1732. 10.1177/0956797619878813

- Duncan J, & Humphreys GW (1989). Visual search and stimulus similarity. Psychological Review, 96(3), 433–458.

- Gaspar JM, & McDonald JJ (2014). Suppression of salient objects prevents distraction in visual search. Journal of Neuroscience, 34(16), 5658–5666. 10.1523/JNEUROSCI.4161-13.2014

- Gaspelin N, Leonard CJ, & Luck SJ (2015). Direct evidence for active suppression of salient-but-irrelevant sensory inputs. Psychological Science, 26(11), 1740–1750.

- Itti L, Koch C, & Niebur E (1998). A model of saliency-based fast visual attention for rapid scene analysis. IEEE Transactions on Pattern Analysis and Machine Intelligence, 20(11), 1254–1259.

- Jeck DM, Qin M, Egeth H, & Niebur E (2019). Unique objects attract attention even when faint. Vision Research, 160, 60–71.

- Kerzel D, & Burra N (2020). Capture by context elements, not attentional suppression of distractors, explains the PD with small search displays. Journal of Cognitive Neuroscience, 32(6), 1170–1183.

- Koch C, & Ullman S (1985). Shifts in selective visual attention: towards the underlying neural circuitry. Human Neurobiology, 4(4), 219–227.

- Kotseruba J, Wloka C, Rasouli A, & Tsotsos JK (2019). Do saliency models detect odd-one-out targets? New datasets and evaluations. Proceedings of the British Machine Vision Conference (BMVC), Cardiff, UK, 9th–12th September 2019.

- Luck SJ, Gaspelin N, Folk CL, Remington RW, & Theeuwes J (2021). Progress toward resolving the attentional capture debate. Visual Cognition, 29(1), 1–21.

- Meeter M, & Olivers CNL (2006). Intertrial priming stemming from ambiguity: A new account of priming in visual search. Visual Cognition, 13(2), 202–222. 10.1080/13506280500277488

- Russell AF, Mihalaş S, von der Heydt R, Niebur E, & Etienne-Cummings R (2014). A model of proto-object based saliency. Vision Research, 94, 1–15. 10.1016/j.visres.2013.10.005

- Sagi D, & Julesz B (1985). “Where” and “What” in vision. Science, 228, 1214–1219.

- Stilwell BT, & Gaspelin N (2021, May). Even highly salient distractors are proactively suppressed. The Annual Meeting of the Vision Sciences Society, Virtual conference.

- Wang B, & Theeuwes J (2020). Salience determines attentional orienting in visual selection. Journal of Experimental Psychology: Human Perception and Performance, 46(10), 1051–1057. 10.1037/xhp0000796

- Wolfe JM, & Horowitz TS (2004). What attributes guide the deployment of visual attention and how do they do it? Nature Reviews Neuroscience, 5, 495–501.