Abstract

The Global Wheat Head Detection (GWHD) dataset was created in 2020 and assembled 193,634 labelled wheat heads from 4700 RGB images acquired with various platforms in 7 countries/institutions. With an associated competition hosted on Kaggle, GWHD_2020 attracted attention from both the computer vision and agricultural science communities. This first experience revealed several avenues for improvement regarding data size, head diversity, and label reliability. To address these issues, the 2020 dataset has been reexamined, relabeled, and complemented with 1722 images from 5 additional countries, adding 81,553 wheat heads. We now release in 2021 a new version of the Global Wheat Head Detection dataset, which is bigger, more diverse, and less noisy than GWHD_2020.

1. Introduction

Quality training data is essential when deploying deep learning (DL) techniques to obtain general models that scale to all possible cases. Increasing dataset size, diversity, and quality is expected to be more effective than increasing network complexity and depth [1]. Datasets like ImageNet [2] for classification or MS COCO [3] for instance detection are crucial for researchers to develop and rigorously benchmark new DL methods. Similarly, the importance of plant- or crop-specific datasets is recognized within the plant phenotyping community [4–13]. These datasets allow benchmarking the performance of the algorithms used to estimate phenotypic traits, while encouraging computer vision experts to improve them further [10, 14–17]. The emergence of affordable RGB cameras and platforms, including UAVs and smartphones, makes in-field image acquisition easily accessible. These high-throughput methods are progressively replacing manual measurements of important traits such as wheat head density. Wheat is grown worldwide, and the number of heads per unit area is one of the main components of yield potential. Creating a robust deep learning model that performs well in all situations requires a dataset of images covering a wide range of genotypes, sowing densities and patterns, plant states and stages, and acquisition conditions. To answer this need for a large and diverse wheat head dataset with consistent, high-quality labeling, we developed the Global Wheat Head Detection dataset (GWHD_2020) [18] in 2020. It was used to benchmark methods proposed by the computer vision community and to recommend best practices for acquiring images and keeping track of metadata.

The GWHD_2020 dataset results from the harmonization of several datasets coming from nine different institutions across seven countries and three continents. As of July 2021, 27 publications [19–45] had already reported wheat head detection models using GWHD_2020 as the standard training/testing data. A “Global Wheat Detection” competition hosted by Kaggle was also organized, attracting 2245 teams from around the world [14] and leading to improvements in wheat head detection models [23, 25, 31, 41]. However, issues with the GWHD_2020 dataset were detected during the competition, including labeling noise and an unbalanced test dataset.

To provide a better benchmark dataset for the community, the GWHD_2021 dataset was organized with the following improvements: (1) the GWHD_2020 dataset was checked again to eliminate a few poor-quality images, (2) images were relabeled to fix labeling inconsistencies, (3) a wider range of developmental stages from the GWHD_2020 sites was included, and (4) datasets from 5 new countries (the USA, Mexico, Republic of Sudan, Norway, and Belgium) were added. The resulting GWHD_2021 dataset contains 275,187 wheat heads from 16 institutions distributed across 12 countries.

2. Materials and Methods

The first version, GWHD_2020, used for the Kaggle competition, was divided into several subdatasets. Each subdataset gathered all images from one location acquired with one sensor, mixing several developmental stages. However, wheat head detection models may be sensitive to developmental stage and acquisition conditions: at the beginning of head emergence, part of the head is barely visible because it has not fully emerged from the last leaf sheath and may be masked by the awns. Further, during ripening, wheat heads tend to bend and overlap, leading to more erratic labeling. The subdatasets therefore had to be redefined to help investigate the effect of developmental stage on model performance. A subdataset is now defined as “a consistent set of images acquired over the same experimental unit, during the same acquisition session with the same vector and sensor.” A subdataset therefore defines a domain. This new definition required splitting the original GWHD_2020 subdatasets into smaller ones: uq_1 was split into 6 much smaller subdatasets, arvalis_1 into 3, arvalis_3 into 2, and utokyo_1 into 2. However, utokyo_2, a collection of images taken by farmers at different stages and in different fields, was kept as a single subdataset. Overall, the 11 original subdatasets in GWHD_2020 were distributed into 19 subdatasets for GWHD_2021.
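The subdataset (domain) definition above can be read as a grouping key over image metadata. The sketch below is a hypothetical illustration, not the authors' tooling: the record type `ImageMeta` and its fields `experimental_unit`, `session_date`, `platform` (the "vector"), and `sensor` are assumed names, not the dataset's actual schema.

```python
from collections import defaultdict
from typing import Dict, List, NamedTuple, Tuple


class ImageMeta(NamedTuple):
    # Hypothetical metadata record; field names are illustrative only.
    path: str
    experimental_unit: str
    session_date: str  # identifies one acquisition session
    platform: str      # the acquisition "vector", e.g. "Handheld", "Cart"
    sensor: str


DomainKey = Tuple[str, str, str, str]


def group_into_domains(images: List[ImageMeta]) -> Dict[DomainKey, List[str]]:
    """Group images sharing experimental unit, session, vector, and sensor."""
    domains: Dict[DomainKey, List[str]] = defaultdict(list)
    for img in images:
        key = (img.experimental_unit, img.session_date, img.platform, img.sensor)
        domains[key].append(img.path)
    return dict(domains)
```

Under this key, images of the same field taken on different dates fall into different domains, which is why a multi-date subdataset such as uq_1 ends up split into several subdatasets.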

In total, 1722 new images were added to GWHD_2020, constituting a major improvement. Part of the new images come from institutions already contributing to GWHD_2020 and were collected in a different year and/or at a different location. This was the case for Arvalis (Arvalis_7 to Arvalis_12), the University of Queensland (UQ_7 to UQ_11), Nanjing Agricultural University (NAU_2 and NAU_3), and the University of Tokyo (Utokyo_1). In addition, 14 new subdatasets were included from 5 new countries: Norway (NMBU), Belgium (University of Liège [46]), the United States of America (Kansas State University [47], TERRA-REF [7]), Mexico (CIMMYT), and the Republic of Sudan (Agricultural Research Council). All these images were acquired at a ground sampling distance between 0.2 and 0.4 mm, i.e., similar to that of the GWHD_2020 images. Because none of them had been labeled, a sample was drawn by taking at most one image per microplot; each selected image was randomly cropped to a 1024 × 1024 px patch, hereafter called an image for simplicity.
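The sampling and cropping step can be sketched as follows. This is a minimal illustration under stated assumptions: the `(microplot_id, image_path)` input format, random selection within each microplot, and the helper names are hypothetical, since the text does not describe the actual implementation. The last helper converts the 0.2 to 0.4 mm ground sampling distance into the ground footprint of a 1024 px patch.

```python
import random
from collections import defaultdict
from typing import Dict, List, Tuple


def sample_one_per_microplot(images: List[Tuple[str, str]], rng: random.Random) -> List[str]:
    """Keep one image per microplot from (microplot_id, image_path) pairs.

    Picking the image at random within each microplot is an assumption.
    """
    by_plot: Dict[str, List[str]] = defaultdict(list)
    for plot_id, path in images:
        by_plot[plot_id].append(path)
    # Iterate in sorted microplot order so a seeded rng gives reproducible picks.
    return [rng.choice(paths) for _, paths in sorted(by_plot.items())]


def random_crop_box(width: int, height: int, rng: random.Random,
                    size: int = 1024) -> Tuple[int, int, int, int]:
    """Return (x0, y0, x1, y1) of a random size x size window inside the image."""
    if width < size or height < size:
        raise ValueError("image is smaller than the crop size")
    x0 = rng.randint(0, width - size)  # randint includes both end points
    y0 = rng.randint(0, height - size)
    return x0, y0, x0 + size, y0 + size


def patch_side_cm(gsd_mm: float, size: int = 1024) -> float:
    """Ground side length (cm) of a size-pixel patch at a GSD given in mm/pixel."""
    return size * gsd_mm / 10.0
```

At the quoted GSD range, a 1024 px patch covers roughly 20.5 cm (`patch_side_cm(0.2)`) to 41 cm (`patch_side_cm(0.4)`) of ground per side.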

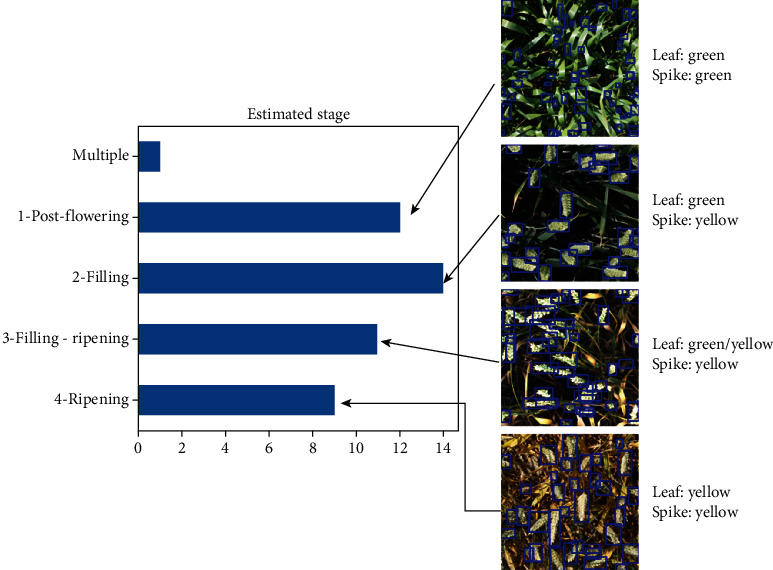

With the addition of 1722 images and 81,553 wheat heads, the GWHD_2021 dataset contains about 6500 images and 275,000 wheat heads. The increase in the number of subdatasets from 18 to 47 brings greater diversity between them, as can be observed in Figure 1. The subdatasets are described in Table 1. However, the new definition of a subdataset also made the subdatasets more unbalanced: the smallest ones contain only a few tens of images (e.g., 20 for Arvalis_8), while the largest (Ethz_1) contains 747 images. This imbalance could potentially be exploited to improve model training. Each subdataset was visually assigned to one of four development stage classes based on the color of leaves and heads: postflowering, filling, filling-ripening, and ripening. Examples of the different stages are presented in Figure 2. Although approximate, this metadata is expected to help improve model training.
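One hypothetical way to exploit this imbalance, not something prescribed in this paper, is to sample training images with weights inversely proportional to their subdataset size, so that every subdataset is drawn equally often in expectation. A minimal sketch using three image counts from Table 1 (the function names are illustrative):

```python
import random
from typing import Dict, List


def per_image_weights(images_per_domain: Dict[str, int]) -> Dict[str, float]:
    """Weight of one image in each domain: 1 / (D * n_d).

    Summed over the n_d images of a domain this gives 1 / D, so each of the
    D domains receives the same total sampling probability.
    """
    n_domains = len(images_per_domain)
    return {d: 1.0 / (n_domains * n) for d, n in images_per_domain.items()}


def sample_domains(images_per_domain: Dict[str, int], k: int, seed: int = 0) -> List[str]:
    """Draw k images with replacement and return the domain label of each draw."""
    weights = per_image_weights(images_per_domain)
    population = [d for d, n in images_per_domain.items() for _ in range(n)]
    w = [weights[d] for d in population]
    return random.Random(seed).choices(population, weights=w, k=k)


counts = {"Ethz_1": 747, "Arvalis_8": 20, "UQ_3": 14}  # image counts from Table 1
```

With uniform sampling, Ethz_1 would supply about 96% of the draws; with these weights each of the three subdatasets supplies about a third. In PyTorch, the same per-image weights could be passed to `torch.utils.data.WeightedRandomSampler`.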

Figure 1.

Sample images of the Global Wheat Head Detection 2021. The blue boxes correspond to the interactively labeled heads.

Table 1.

The subdatasets of GWHD_2020 and GWHD_2021. The column “GWHD_2020 subdataset name” gives the GWHD_2020 subdataset from which each GWHD_2021 subdataset was split; it is empty for new subdatasets.

| GWHD_2021 subdataset name | GWHD_2020 subdataset name | Owner | Country | Location | Acquisition date | Platform | Development stage | Number of images | Number of wheat heads |
|---|---|---|---|---|---|---|---|---|---|
| Ethz_1 | ethz_1 | ETHZ | Switzerland | Usask | 06/06/2018 | Spidercam | Filling | 747 | 49603 |
| Rres_1 | rres_1 | Rothamsted | UK | Rothamsted | 13/07/2015 | Gantry | Filling-ripening | 432 | 19210 |
| ULiège-GxABT_1 | | Uliège/Gembloux | Belgium | Gembloux | 28/07/2020 | Cart | Ripening | 30 | 1847 |
| NMBU_1 | | NMBU | Norway | NMBU | 24/07/2020 | Cart | Filling | 82 | 7345 |
| NMBU_2 | | NMBU | Norway | NMBU | 07/08/2020 | Cart | Ripening | 98 | 5211 |
| Arvalis_1 | arvalis_1 | Arvalis | France | Gréoux | 02/06/2018 | Handheld | Postflowering | 66 | 2935 |
| Arvalis_2 | arvalis_1 | Arvalis | France | Gréoux | 16/06/2018 | Handheld | Filling | 401 | 21003 |
| Arvalis_3 | arvalis_1 | Arvalis | France | Gréoux | 07/2018 | Handheld | Filling-ripening | 588 | 21893 |
| Arvalis_4 | arvalis_2 | Arvalis | France | Gréoux | 27/05/2019 | Handheld | Filling | 204 | 4270 |
| Arvalis_5 | arvalis_3 | Arvalis | France | VLB∗ | 06/06/2019 | Handheld | Filling | 448 | 8180 |
| Arvalis_6 | arvalis_3 | Arvalis | France | VSC∗ | 26/06/2019 | Handheld | Filling-ripening | 160 | 8698 |
| Arvalis_7 | | Arvalis | France | VLB∗ | 06/2019 | Handheld | Filling-ripening | 24 | 1247 |
| Arvalis_8 | | Arvalis | France | VLB∗ | 06/2019 | Handheld | Filling-ripening | 20 | 1062 |
| Arvalis_9 | | Arvalis | France | VLB∗ | 06/2020 | Handheld | Ripening | 32 | 1894 |
| Arvalis_10 | | Arvalis | France | Mons | 10/06/2020 | Handheld | Filling | 60 | 1563 |
| Arvalis_11 | | Arvalis | France | VLB∗ | 18/06/2020 | Handheld | Filling | 60 | 2818 |
| Arvalis_12 | | Arvalis | France | Gréoux | 15/06/2020 | Handheld | Filling | 29 | 1277 |
| Inrae_1 | inrae_1 | INRAe | France | Toulouse | 28/05/2019 | Handheld | Filling-ripening | 176 | 3634 |
| Usask_1 | usask_1 | USaskatchewan | Canada | Saskatchewan | 06/06/2018 | Tractor | Filling-ripening | 200 | 5985 |
| KSU_1 | | Kansas State University | US | KSU | 19/05/2016 | Tractor | Postflowering | 100 | 6435 |
| KSU_2 | | Kansas State University | US | KSU | 12/05/2017 | Tractor | Postflowering | 100 | 5302 |
| KSU_3 | | Kansas State University | US | KSU | 25/05/2017 | Tractor | Filling | 95 | 5217 |
| KSU_4 | | Kansas State University | US | KSU | 25/05/2017 | Tractor | Ripening | 60 | 3285 |
| Terraref_1 | | TERRA-REF project | US | Maricopa, AZ | 02/04/2020 | Gantry | Ripening | 144 | 3360 |
| Terraref_2 | | TERRA-REF project | US | Maricopa, AZ | 20/03/2020 | Gantry | Filling | 106 | 1274 |
| CIMMYT_1 | | CIMMYT | Mexico | Ciudad Obregon | 24/03/2020 | Cart | Postflowering | 69 | 2843 |
| CIMMYT_2 | | CIMMYT | Mexico | Ciudad Obregon | 19/03/2020 | Cart | Postflowering | 77 | 2771 |
| CIMMYT_3 | | CIMMYT | Mexico | Ciudad Obregon | 23/03/2020 | Cart | Postflowering | 60 | 1561 |
| Utokyo_1 | utokyo_1 | UTokyo | Japan | NARO-Tsukuba | 22/05/2018 | Cart | Ripening | 538 | 14185 |
| Utokyo_2 | utokyo_1 | UTokyo | Japan | NARO-Tsukuba | 22/05/2018 | Cart | Ripening | 456 | 13010 |
| Utokyo_3 | utokyo_2 | UTokyo | Japan | NARO-Hokkaido | Multi-years | Handheld | Multiple | 120 | 3085 |
| Ukyoto_1 | | UKyoto | Japan | Kyoto | 30/04/2020 | Handheld | Postflowering | 60 | 2670 |
| NAU_1 | NAU_1 | NAU | China | Baima | n.a. | Handheld | Postflowering | 20 | 1240 |
| NAU_2 | | NAU | China | Baima | 02/05/2020 | Cart | Postflowering | 100 | 4918 |
| NAU_3 | | NAU | China | Baima | 09/05/2020 | Cart | Filling | 100 | 4596 |
| UQ_1 | uq_1 | UQueensland | Australia | Gatton | 12/08/2015 | Tractor | Postflowering | 22 | 640 |
| UQ_2 | uq_1 | UQueensland | Australia | Gatton | 08/09/2015 | Tractor | Postflowering | 16 | 39 |
| UQ_3 | uq_1 | UQueensland | Australia | Gatton | 15/09/2015 | Tractor | Filling | 14 | 297 |
| UQ_4 | uq_1 | UQueensland | Australia | Gatton | 01/10/2015 | Tractor | Filling | 30 | 1039 |
| UQ_5 | uq_1 | UQueensland | Australia | Gatton | 09/10/2015 | Tractor | Filling-ripening | 30 | 3680 |
| UQ_6 | uq_1 | UQueensland | Australia | Gatton | 14/10/2015 | Tractor | Filling-ripening | 30 | 1147 |
| UQ_7 | | UQueensland | Australia | Gatton | 06/10/2020 | Handheld | Ripening | 17 | 1335 |
| UQ_8 | | UQueensland | Australia | McAllister | 09/10/2020 | Handheld | Ripening | 41 | 4835 |
| UQ_9 | | UQueensland | Australia | Brookstead | 16/10/2020 | Handheld | Filling-ripening | 33 | 2886 |
| UQ_10 | | UQueensland | Australia | Gatton | 22/09/2020 | Handheld | Filling-ripening | 53 | 8629 |
| UQ_11 | | UQueensland | Australia | Gatton | 31/08/2020 | Handheld | Postflowering | 42 | 4345 |
| ARC_1 | | ARC | Sudan | Wad Medani | 03/2021 | Handheld | Filling | 30 | 888 |
| Total | | | | | | | | 6515 | 275187 |

∗VLB: Villiers-le-Bâcle; VSC: Villers-Saint-Christophe. ∗∗Utokyo_1 and Utokyo_2 were acquired at the same location with different sensors. ∗∗∗Utokyo_3 is a special subdataset made of images from many farmers' fields in Hokkaido between 2016 and 2019. Italic: Europe; bold: North America; underline: Asia; bold italic: Oceania; bold underline: Africa.

Figure 2.

Distribution of development stages. The x-axis gives the number of subdatasets per development stage.

3. Dataset Diversity Analysis

Compared with GWHD_2020, the GWHD_2021 dataset puts more emphasis on documenting the metadata of each subdataset, as discussed in David et al. [18]. In addition to the acquisition platform, each subdataset was reviewed and assigned a development stage, except for Utokyo_3 (formerly utokyo_2), which is a collection of images from various farmer fields and development stages. Overall, the GWHD_2021 dataset covers all development stages from postanthesis to ripening (Figure 2).

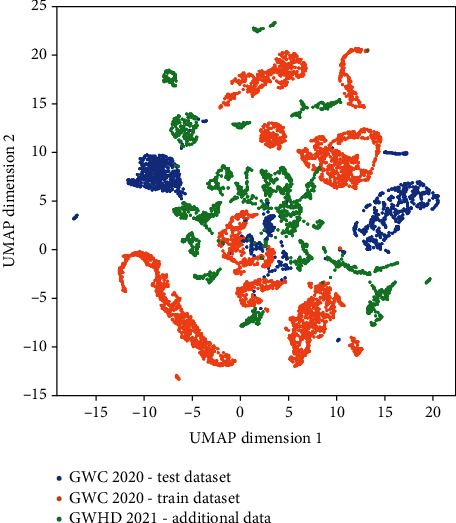

The diversity of images within the GWHD_2021 dataset was documented using the method proposed by Tolias et al. [48]. Deep learning image features were first extracted with a VGG-16 network pretrained on ImageNet, which is considered to represent the general features of RGB images well. We then took the output of the last convolutional layer, of size 14 × 14 × 512, and summed it over the spatial dimensions into a single 512-channel vector, which was then normalized. The UMAP dimensionality reduction algorithm [49] was then used to project these representations into a 2D space; UMAP was chosen because it tends to preserve existing clusters when projecting into a low-dimensional space. This 2D space is expected to capture the main features of the images. The results (Figure 3) show that the test dataset used for GWHD_2020 was biased relative to the training dataset. The subdatasets added in 2021 populate the 2D space more evenly, which is expected to improve model robustness.
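The descriptor step can be sketched as follows, assuming the activations are already available as a NumPy array in height × width × channel order (for a 224 × 224 input, VGG-16's last convolutional layer, before the final max-pooling, yields 14 × 14 × 512). L2 normalization and the use of the `umap-learn` package are assumptions of this sketch; the text specifies neither the norm nor the UMAP implementation and parameters.

```python
import numpy as np


def sum_pooled_descriptor(feature_map: np.ndarray) -> np.ndarray:
    """Collapse an (H, W, C) activation map, e.g. 14 x 14 x 512, into a unit-norm C-vector."""
    if feature_map.ndim != 3:
        raise ValueError("expected an (H, W, C) array")
    # Sum the activations of every channel over all H * W spatial positions.
    v = feature_map.reshape(-1, feature_map.shape[-1]).sum(axis=0)
    norm = np.linalg.norm(v)  # L2 norm (assumed; the text only says "normalized")
    return v / norm if norm > 0 else v


def project_2d(descriptors: np.ndarray, seed: int = 0) -> np.ndarray:
    """Project (N, C) descriptors to (N, 2) with UMAP (requires umap-learn)."""
    import umap  # imported lazily so pooling works without umap-learn installed

    return umap.UMAP(n_components=2, random_state=seed).fit_transform(descriptors)
```

Channels-first frameworks such as PyTorch return (C, H, W) maps, which need `np.transpose(fm, (1, 2, 0))` before pooling. Stacking one descriptor per image gives the (N, 512) matrix passed to `project_2d`.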

Figure 3.

Distribution of the images along the first two UMAP dimensions for the GWHD_2021 dataset. Colors distinguish the additional subdatasets and the GWHD_2020 training and test datasets.

4. Presentation of Global Wheat Challenge 2021 (GWC 2021)

The results of the Kaggle challenge based on GWHD_2020 have been analyzed by the authors [14]. The findings emphasize that competition design is critical for obtaining solutions that improve the robustness of wheat head detection models. The Kaggle competition used a metric averaged across all test images, without distinguishing between subdatasets, and biased toward a strict match with the labels. This artificially increased the influence of the largest subdatasets, such as utokyo_1 (now split into Utokyo_1 and Utokyo_2), on the global score. Furthermore, the metrics commonly used to score agreement with labeled objects on large datasets such as MS COCO appear less suitable when some heads are labeled with more uncertainty, as was the case in several situations depending on development stage, illumination conditions, and head density. The weighted domain accuracy was therefore proposed as a new metric [14]. The accuracy computed over image i belonging to domain d, $AI_d(i)$, is classically defined as

$$AI_d(i) = \frac{TP}{TP + FN + FP} \tag{1}$$

where TP, FN, and FP are, respectively, the numbers of true positives, false negatives, and false positives found in image i. The weighted domain accuracy (WDA) is the weighted average of all domain accuracies:

$$WDA = \frac{1}{D} \sum_{d=1}^{D} \frac{1}{n_d} \sum_{i=1}^{n_d} AI_d(i) \tag{2}$$

where D is the number of domains (subdatasets) and $n_d$ is the number of images in domain d. The training, validation, and test datasets used are presented in Section 5.
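Equations (1) and (2) can be sketched in Python as below. This is a minimal illustration, not the official scoring code: this section does not specify how predictions are matched to labeled heads, so greedy one-to-one matching at an IoU threshold of 0.5 is an assumption, as is scoring an image with no labeled and no predicted heads as 1.0.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def count_matches(preds: Sequence[Tuple[float, Box]], gts: Sequence[Box],
                  thr: float = 0.5) -> Tuple[int, int, int]:
    """Greedy one-to-one matching for one image; returns (TP, FN, FP).

    preds are (score, box) pairs; higher-scoring predictions are matched first.
    """
    unmatched = set(range(len(gts)))
    tp = 0
    for _, box in sorted(preds, key=lambda p: p[0], reverse=True):
        best_j, best_iou = None, thr
        for j in unmatched:
            overlap = iou(box, gts[j])
            if overlap >= best_iou:
                best_j, best_iou = j, overlap
        if best_j is not None:
            unmatched.remove(best_j)
            tp += 1
    return tp, len(unmatched), len(preds) - tp


def image_accuracy(tp: int, fn: int, fp: int) -> float:
    """Equation (1): TP / (TP + FN + FP)."""
    total = tp + fn + fp
    return tp / total if total else 1.0  # assumption for empty images


def weighted_domain_accuracy(records: Iterable[Tuple[str, int, int, int]]) -> float:
    """Equation (2): mean over domains of the mean image accuracy in each domain.

    records holds one (domain, TP, FN, FP) tuple per image.
    """
    per_domain: Dict[str, List[float]] = defaultdict(list)
    for domain, tp, fn, fp in records:
        per_domain[domain].append(image_accuracy(tp, fn, fp))
    domain_means = [sum(acc) / len(acc) for acc in per_domain.values()]
    return sum(domain_means) / len(domain_means)
```

For example, with two images in domain A (accuracies 0.5 and 1.0) and one in domain B (0.5), WDA = (0.75 + 0.5) / 2 = 0.625, whereas a plain per-image average gives 2/3: the single image of the smaller domain weighs as much as the whole larger domain, which is the rebalancing the metric is designed for.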

The results of the Global Wheat Challenge 2021 are summarized in Table 2. The reference method is a Faster R-CNN with the same parameters as in the GWHD_2020 paper [18], trained on the training set of the Global Wheat Challenge 2021 split of GWHD_2021. The full leaderboard can be found at https://www.aicrowd.com/challenges/global-wheat-challenge-2021/leaderboards.

Table 2.

Presentation of the Global Wheat Challenge 2021 results.

| Solution name | WDA |
|---|---|
| randomTeamName (1st place) | 0.700 |
| David_jeon (2nd place) | 0.695 |
| SMART (2nd place) | 0.695 |
| Reference (Faster R-CNN) | 0.492 |

5. How to Use/FAQ

How to download? The dataset can be downloaded from Zenodo: https://zenodo.org/record/5092309

What is the license of the dataset? The dataset is released under the MIT license, which allows reuse, modification, and redistribution provided that the copyright and license notice are retained.

How to cite the dataset? Please cite the present paper when using the GWHD_2021 dataset. However, preferably cite [18] when discussing wheat head detection challenges or the difficulty of building a large dataset.

How to benchmark? Depending on the objectives of the study, we recommend one of two training/validation/test splits (Table 3):

- The Global Wheat Challenge 2021 split, when the dataset is used for phenotyping purposes, to allow direct comparison with the winning solutions.

- The “GlobalWheat-WILDS” split, used in the WILDS paper [50], when working on out-of-domain distribution shift problems.

Table 3.

Presentation of the different splits which can be used with the GWHD_2021.

| Split | Training | Validation | Test |
|---|---|---|---|
| Global Wheat Challenge 2021 | Ethz_1, Rres_1, Inrae_1, Arvalis (all), NMBU (all), ULiège-GxABT (all) | UQ_1 to UQ_6, Utokyo (all), NAU_1, Usask_1 | UQ_7 to UQ_11, Ukyoto_1, NAU_2 and NAU_3, ARC_1, CIMMYT (all), KSU (all), Terraref (all) |
| GlobalWheat-WILDS | Ethz_1, Rres_1, Inrae_1, Arvalis (all), NMBU (all), ULiège-GxABT (all) | UQ (all), Utokyo (all), Ukyoto_1, NAU (all) | CIMMYT (all), KSU (all), Terraref (all), Usask_1, ARC_1 |

We further recommend reporting the weighted domain accuracy to allow comparison with previous work.
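To assign each subdataset to a split, a small lookup encoding Table 3 can be used. This is a sketch that assumes subdataset names follow Table 1 (prefix, underscore, index); how names are stored in the released files is not described here, and the function names are hypothetical. The GWC 2021 test set is read as UQ_7 to UQ_11, the last UQ subdataset listed in Table 1.

```python
from typing import Tuple

# Training subdatasets are identical in both splits of Table 3.
TRAIN_PREFIXES = {"ethz", "rres", "inrae", "arvalis", "nmbu", "uliège-gxabt"}


def _parse(name: str) -> Tuple[str, int]:
    """Split a Table 1 name such as 'Arvalis_7' into ('arvalis', 7)."""
    prefix, _, index = name.rpartition("_")
    return prefix.lower(), int(index)


def gwc2021_split(name: str) -> str:
    """Set of a subdataset under the Global Wheat Challenge 2021 split."""
    prefix, index = _parse(name)
    if prefix in TRAIN_PREFIXES:
        return "train"
    if ((prefix == "uq" and index <= 6) or (prefix == "nau" and index == 1)
            or prefix in {"utokyo", "usask"}):
        return "val"
    if prefix in {"uq", "nau", "ukyoto", "arc", "cimmyt", "ksu", "terraref"}:
        return "test"
    raise ValueError(f"unknown subdataset: {name}")


def wilds_split(name: str) -> str:
    """Set of a subdataset under the GlobalWheat-WILDS split."""
    prefix, _ = _parse(name)
    if prefix in TRAIN_PREFIXES:
        return "train"
    if prefix in {"uq", "utokyo", "ukyoto", "nau"}:
        return "val"
    if prefix in {"cimmyt", "ksu", "terraref", "usask", "arc"}:
        return "test"
    raise ValueError(f"unknown subdataset: {name}")
```

Keeping the split logic in one place makes it easy to check that every subdataset of Table 1 lands in exactly one set; note, for instance, that Usask_1 is a validation domain in the challenge split but a test domain in GlobalWheat-WILDS.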

6. Conclusion

The second edition of the Global Wheat Head Detection dataset, GWHD_2021, together with the organization of a second Global Wheat Challenge, is an important step in illustrating the usefulness of open data shared across organizations for improving high-throughput phenotyping methods. Compared with GWHD_2020, it adds five new countries, 22 new subdatasets, 1722 new images, and 120,000 newly labeled wheat heads. Its revised organization and additional diversity make it more representative of the images researchers and agronomists can acquire across the world. The revised metric used to evaluate the models during the Global Wheat Challenge 2021 can help researchers benchmark one-class localization models across a wide range of acquisition conditions. GWHD_2021 is expected to accelerate the development of robust solutions. However, developing countries are still underrepresented, and we welcome new contributions from South America, Africa, and South Asia. We have started to include nadir-view photos from smartphones to obtain a more comprehensive dataset and train reliable models for such affordable devices. Additional work is required to adapt this approach to other vectors, such as cameras mounted on unmanned aerial vehicles, or to high-resolution cameras working in other spectral domains. Finally, we plan to release wheat head masks alongside the bounding boxes, building on the very large number of boxes already available, and to provide more associated metadata.

Acknowledgments

We would like to thank the company “Human in the loop”, which corrected and labeled the new datasets. The help of Frederic Venault (INRAe Avignon) in checking the labelled images was also invaluable. The work received support from ANRT through the CIFRE grant of Etienne David, cofunded by Arvalis for project management. The labelling work was supported by several companies and projects, including the following. Canada: the Global Institute for Food Security, University of Saskatchewan, which supported the organization of the competition. France: this work was supported by the French National Research Agency under the Investments for the Future Program, referred to as ANR-16-CONV-0004 PIA #Digitag, Institut Convergences Agriculture Numérique; Hiphen supported the organization of the competition. Japan: Kubota supported the organization of the competition. Australia: the Grains Research and Development Corporation (UOQ2002-008RTX machine learning applied to high-throughput feature extraction from imagery to map spatial variability and UOQ2003-011RTX INVITA—a technology and analytics platform for improving variety selection) supported the competition.

Data Availability

The dataset is available on Zenodo (https://zenodo.org/record/5092309).

Conflicts of Interest

The authors declare that there is no conflict of interest regarding the publication of this article.

References

- 1. Sambasivan N., Kapania S., Highfill H., Akrong D., Paritosh P., Aroyo L. M. Everyone wants to do the model work, not the data work: data cascades in high-stakes AI. New York, NY, USA: 2021.

- 2. Deng J., Dong W., Socher R., Li L.-J., Li K., Fei-Fei L. ImageNet: a large-scale hierarchical image database. 2009 IEEE Conference on Computer Vision and Pattern Recognition; 2009; pp. 248–255.

- 3. Lin T.-Y., et al. Microsoft COCO: common objects in context. European Conference on Computer Vision. 2014. pp. 740–755.

- 4. Cruz J. A., Yin X., Liu X., et al. Multi-modality imagery database for plant phenotyping. Machine Vision and Applications. 2016;27(5):735–749. doi: 10.1007/s00138-015-0734-6.

- 5. Guo W., Zheng B., Potgieter A. B., et al. Aerial imagery analysis – quantifying appearance and number of sorghum heads for applications in breeding and agronomy. Frontiers in Plant Science. 2018;9:p. 1544. doi: 10.3389/fpls.2018.01544.

- 6. Hughes D. P., Salathé M. CoRR; 2015. An open access repository of images on plant health to enable the development of mobile disease diagnostics through machine learning and crowdsourcing. http://arxiv.org/abs/1511.08060.

- 7. LeBauer D., et al. Data from: TERRA-REF, an open reference data set from high resolution genomics, phenomics, and imaging sensors. Dryad. 2020. doi: 10.5061/DRYAD.4B8GTHT99.

- 8. Leminen Madsen S., Mathiassen S. K., Dyrmann M., Laursen M. S., Paz L. C., Jørgensen R. N. Open plant phenotype database of common weeds in Denmark. Remote Sensing. 2020;12(8):p. 1246. doi: 10.3390/rs12081246.

- 9. Lu H., Cao Z., Xiao Y., Zhuang B., Shen C. TasselNet: counting maize tassels in the wild via local counts regression network. Plant Methods. 2017;13(1):p. 79. doi: 10.1186/s13007-017-0224-0.

- 10. Madec S., Irfan K., David E., et al. The P2S2 segmentation dataset: annotated in-field multi-crop RGB images acquired under various conditions. Lyon, France: 2019. https://hal.inrae.fr/hal-03140124.

- 11. Scharr H., Minervini M., French A. P., et al. Leaf segmentation in plant phenotyping: a collation study. Machine Vision and Applications. 2016;27(4):585–606. doi: 10.1007/s00138-015-0737-3.

- 12. Thapa R., Zhang K., Snavely N., Belongie S., Khan A. The Plant Pathology challenge 2020 data set to classify foliar disease of apples. Applications in Plant Sciences. 2020;8(9, article e11390). doi: 10.1002/aps3.11390.

- 13. Wiesner-Hanks T., Stewart E. L., Kaczmar N., et al. Image set for deep learning: field images of maize annotated with disease symptoms. BMC Research Notes. 2018;11(1):p. 440. doi: 10.1186/s13104-018-3548-6.

- 14. David E., Ogidi F., Guo W., Baret F., Stavness I. Global Wheat Challenge 2020: analysis of the competition design and winning models. 2021.

- 15. Hani N., Roy P., Isler V. MinneApple: a benchmark dataset for apple detection and segmentation. IEEE Robotics and Automation Letters. 2020;5(2):852–858. doi: 10.1109/LRA.2020.2965061.

- 16. Minervini M., Fischbach A., Scharr H., Tsaftaris S. A. Finely-grained annotated datasets for image-based plant phenotyping. Pattern Recognition Letters. 2016;81:80–89. doi: 10.1016/j.patrec.2015.10.013.

- 17. Tsaftaris S. A., Scharr H. Sharing the right data right: a symbiosis with machine learning. Trends in Plant Science. 2019;24(2):99–102. doi: 10.1016/j.tplants.2018.10.016.

- 18. David E., Madec S., Sadeghi-Tehran P., et al. Global Wheat Head Detection (GWHD) dataset: a large and diverse dataset of high-resolution RGB-labelled images to develop and benchmark wheat head detection methods. Plant Phenomics. 2020;2020, article 3521852:12. doi: 10.34133/2020/3521852.

- 19. Yu G., Wu Y., Xiao J., Cao Y. A novel pyramid network with feature fusion and disentanglement for object detection. Computational Intelligence and Neuroscience. 2021;2021:13. doi: 10.1155/2021/6685954.

- 20. Ayalew T. W., Ubbens J. R., Stavness I. Unsupervised domain adaptation for plant organ counting. European Conference on Computer Vision. 2020. pp. 330–346.

- 21. Datta M. N., Rathi Y., Eliazer M. Wheat heads detection using deep learning algorithms. Annals of the Romanian Society for Cell Biology. 2021:5641–5654.

- 22. Fourati F., Mseddi W. S., Attia R. Wheat head detection using deep, semi-supervised and ensemble learning. Canadian Journal of Remote Sensing. 2021;47(2):198–208. doi: 10.1080/07038992.2021.1906213.

- 23. Fourati F., Souidene W., Attia R. An original framework for wheat head detection using deep, semi-supervised and ensemble learning within Global Wheat Head Detection (GWHD) dataset. 2020. https://arxiv.org/abs/2009.11977.

- 24. Gomez A. S., Aptoula E., Parsons S., Bosilj P. Deep regression versus detection for counting in robotic phenotyping. IEEE Robotics and Automation Letters. 2021;6(2):2902–2907. doi: 10.1109/LRA.2021.3062586.

- 25. Gong B., Ergu D., Cai Y., Ma B. Real-time detection for wheat head applying deep neural network. Sensors. 2021;21(1):p. 191. doi: 10.3390/s21010191.

- 26. He M.-X., Hao P., Xin Y. Z. A robust method for wheatear detection using UAV in natural scenes. IEEE Access. 2020;8:189043–189053. doi: 10.1109/ACCESS.2020.3031896.

- 27. Jiang Y., Li C., Xu R., Sun S., Robertson J. S., Paterson A. H. DeepFlower: a deep learning-based approach to characterize flowering patterns of cotton plants in the field. Plant Methods. 2020;16(1):p. 156. doi: 10.1186/s13007-020-00698-y.

- 28. Jiang B., Xia J., Li S. Few training data for objection detection. Proceedings of the 2020 4th International Conference on Electronic Information Technology and Computer Engineering; November 2020; pp. 579–584.

- 29. Karwande A., Kulkarni P., Marathe P., Kolhe T., Wyawahare M., Kulkarni P. Machine Learning and Information Processing: Proceedings of ICMLIP 2020. Vol. 1311. Springer; 2021. Computer vision-based wheat grading and breed classification system: a design approach; p. 403.

- 30. Kattenborn T., Leitloff J., Schiefer F., Hinz S. Review on convolutional neural networks (CNN) in vegetation remote sensing. ISPRS Journal of Photogrammetry and Remote Sensing. 2021;173:24–49. doi: 10.1016/j.isprsjprs.2020.12.010.

- 31. Khaki S., Safaei N., Pham H., Wang L. WheatNet: a lightweight convolutional neural network for high-throughput image-based wheat head detection and counting. 2021. https://arxiv.org/abs/2103.09408.

- 32. Kolhar S. U., Jagtap J. Bibliometric Review on Image Based Plant Phenotyping. p. 16.

- 33. Li J., Li C., Fei S., et al. Wheat ear recognition based on RetinaNet and transfer learning. Sensors. 2021;21(14):p. 4845. doi: 10.3390/s21144845.

- 34. Lucks L., Haraké L., Klingbeil L. Detektion von Weizenähren mithilfe neuronaler Netze und synthetisch erzeugter Trainingsdaten. tm-Technisches Messen; 2021.

- 35. Misra T., Arora A., Marwaha S., et al. Web-SpikeSegNet: deep learning framework for recognition and counting of spikes from visual images of wheat plants. IEEE Access. 2021;9:76235–76247. doi: 10.1109/ACCESS.2021.3080836.

- 36. Riera L. G., Carroll M. E., Zhang Z., et al. Deep multiview image fusion for soybean yield estimation in breeding applications. Plant Phenomics. 2021;2021, article 9846470:12. doi: 10.34133/2021/9846470.

- 37. Smith D. T., Potgieter A. B., Chapman S. C. Scaling up high-throughput phenotyping for abiotic stress selection in the field. Theoretical and Applied Genetics. 2021;134(6):1845–1866. doi: 10.1007/s00122-021-03864-5.

- 38. Suzuki Y., Kuyoshi D., Yamane S. Transfer learning algorithm for object detection. Bulletin of Networking, Computing, Systems, and Software. 2021;10(1):1–3.

- 39. Trevisan R., Pérez O., Schmitz N., Diers B., Martin N. High-throughput phenotyping of soybean maturity using time series UAV imagery and convolutional neural networks. Remote Sensing. 2020;12(21):p. 3617. doi: 10.3390/rs12213617.

- 40. Velumani K., Lopez-Lozano R., Madec S., et al. Estimates of maize plant density from UAV RGB images using Faster-RCNN detection model: impact of the spatial resolution. 2021. https://arxiv.org/abs/2105.11857.

- 41. Wu Y., Hu Y., Li L. European Conference on Computer Vision. Springer; 2020. BTWD: bag of tricks for wheat detection; pp. 450–460.

- 42. Wang H., Duan Y., Shi Y., Kato Y., Ninomiya S., Guo W. EasyIDP: a Python package for intermediate data processing in UAV-based plant phenotyping. Remote Sensing. 2021;13(13):p. 2622. doi: 10.3390/rs13132622.

- 43. Wang Y., Qin Y., Cui J. Occlusion robust wheat ear counting algorithm based on deep learning. Frontiers in Plant Science. 2021;12:p. 1139. doi: 10.3389/fpls.2021.645899.

- 44. Yang B., Gao Z., Gao Y., Zhu Y. Rapid detection and counting of wheat ears in the field using YOLOv4 with attention module. Agronomy. 2021;11(6):p. 1202. doi: 10.3390/agronomy11061202.

- 45. Lu H., Liu L., Li Y. N., Zhao X. M., Wang X. Q., Cao Z. G. TasselNetV3: Explainable Plant Counting With Guided Upsampling and Background Suppression. IEEE Transactions on Geoscience and Remote Sensing. 2021:1–15. doi: 10.1109/tgrs.2021.3058962.

- 46. Dandrifosse S., Carlier A., Dumont B., Mercatoris B. Registration and fusion of close-range multimodal wheat images in field conditions. Remote Sensing. 2021;13(7):p. 1380. doi: 10.3390/rs13071380.

- 47. Wang X., Xuan H., Evers B., Shrestha S., Pless R., Poland J. High-throughput phenotyping with deep learning gives insight into the genetic architecture of flowering time in wheat. GigaScience. 2019;8(giz120). doi: 10.1101/527911.

- 48. Tolias G., Sicre R., Jégou H. Particular object retrieval with integral max-pooling of CNN activations. 2015. https://arxiv.org/abs/1511.05879.

- 49. McInnes L., Healy J., Melville J. UMAP: uniform manifold approximation and projection for dimension reduction. 2020.

- 50. Koh P. W., Sagawa S., Marklund H., et al. WILDS: a benchmark of in-the-wild distribution shifts. 2021. https://arxiv.org/abs/2012.07421.
