Abstract

Aim

The objectives of this scoping review are to identify the challenges to conducting evidence synthesis during the COVID-19 pandemic and to propose recommendations addressing the identified gaps.

Methods

We followed the Joanna Briggs Institute (JBI) methodology for scoping reviews to map the literature on the challenges of, and solutions for, conducting evidence synthesis. We searched several databases from the start of the pandemic in December 2019 until 10 June 2021.

Results

A total of 28 publications were included in the review. The challenges cited in the included studies were categorised into four distinct but interconnected themes: upstream, evidence synthesis, downstream and contextual challenges. These were further refined into issues with primary studies, databases, team capacity, process, resources, and context.

Proposals to address these challenges included transparency in the registration and reporting of primary studies; online platforms that enable collaboration, data sharing and searching; the use of computable evidence; and coordination of efforts at an international level.

Conclusion

This review has highlighted the importance of artificial intelligence, a framework for international collaboration and a sustained funding model for addressing many of these shortcomings and ensuring we are ready for similar challenges in the future.

Keywords: Evidence synthesis, Methodology, International collaboration, Data sharing

What is new?

Key findings

- There are several limitations in the current evidence synthesis methodologies.

What this adds to what was known?

- Recommendations to address the limitations of the methodologies are fragmented.
- Robust methodologies to cope with the high research output published in response to COVID-19 are urgently needed.
- A framework of artificial intelligence, international collaboration and funding is necessary.

What is the implication and what should change now?

- The review provides insight for methodologists to plan for similar situations.
- Further work is urgently needed in this area.

1. Background

The speed and scale at which research on the COVID-19 pandemic is published are challenging for decision makers, clinicians, patients and the public [1], [22], mainly because of the difficulty of synthesizing all these data in a timely way (Ruano, Gómez-García, Pieper, & Puljak, 2020). Living and rapid reviews have partly addressed the challenge of keeping pace with the plethora of published papers ([3,10,14,15,29]; Negrini, Mg, Côté, & Arienti, 2021).

Several evidence synthesis groups have initiated projects to address these challenges. A number of repositories, such as WHO COVID, LitCovid, the Cochrane COVID-19 Register and L•OVE COVID, were developed to identify COVID-19 articles available across multiple sources [38]. Other projects aim to map or prioritize evidence, or to add extra information with a particular focus. For instance, COVID-END selects the best evidence syntheses based on explicit criteria ([2], [32]; Patil, 2020; Zheng et al., 2020), and LIT-COVID traces public health interventions and national policies (Zheng, 2020). The L•OVE COVID initiative was created by a network of experts, supported by artificial intelligence, to provide a comprehensive map of questions for health decision making (https://iloveevidence.com/).

The COVID-NMA project aims to build an evidence ecosystem for the COVID-19 pandemic [12]. Similarly, the eCOVID-19 RecMap, which catalogues COVID-19 recommendations, provides users with direct links to living evidence maps, in particular to the evidence on the population and intervention combination they selected for their guideline question (Lotfi et al., 2021). Some groups are combining their efforts to cover as many steps of the evidence ecosystem as possible [12]. Examples include the COVID-NMA programme's use of the Cochrane COVID-19 register and L•OVE COVID as evidence sources (Metzendorf, 2021 and [13]), and the Australian living guidelines' use of L•OVE COVID to inform their recommendations for practice.

The BMJ Living NMA, the PAHO Ongoing Living Review and the eCOVID-19 RecMap use L•OVE COVID as a key source of the evidence they process (Melo, 2021). Furthermore, collaboration between different projects is reshaping these efforts. For instance, the Norwegian Institute of Public Health stopped maintaining its Map of COVID-19 evidence and is now collaborating on L•OVE COVID.

These initiatives, and other experiences reported by knowledge users, have also uncovered many shortcomings of the current research synthesis model, such as the low quality of primary studies, the complexity of identifying relevant studies, the lack of robustness of critical appraisal techniques and data analysis (Palayew et al., 2020; Ruano et al., 2020), the unnecessary duplication of reviews, and the time taken to produce a systematic review [13,33].

These challenges, together with the need to produce timely evidence for policymakers in an environment where there is insufficient reliable evidence to guide practice and policy, have drawn significant attention to new ways of handling the high volume of research on topics important to researchers, clinicians and policy makers (Pearson, 2021). To date, no review has systematically scoped and synthesized this information; therefore, this review was undertaken to collate the available data on the challenges of evidence synthesis uncovered by COVID-19 and to explore solutions. The objectives of this scoping review are to:

- Identify the challenges to conducting evidence synthesis during the COVID-19 pandemic
- Propose recommendations on how to address the identified challenges

2. Methods

2.1. Inclusion criteria

The methodology of this scoping review is based on published methodologies [40]. The concept of interest was the challenges to evidence synthesis in the context of the COVID-19 pandemic. We included qualitative and quantitative study designs (experimental, descriptive, and observational studies) and considered only studies published in English. We registered the review protocol on the Open Science Framework (10.17605/OSF.IO/79EUB).

2.2. Search strategy

We used a three-step search strategy. First, we undertook an initial limited search of Ovid MEDLINE on 9 June 2021 and examined the text words in the titles and abstracts of the retrieved articles, and the index terms used to describe them. Second, we used all identified keywords and index terms to search across all included databases (see Information sources, below). Third, we manually searched the reference lists of identified articles for additional publications and information, as recommended by [40] and Peters et al. (2021).

2.3. Information sources

We searched the following databases: Ovid MEDLINE, Embase and Public Health Reports, from the start of the pandemic on 1 December 2019 until 10 June 2021. The search combined terms for the concepts of evidence synthesis (e.g., evidence synthesis, knowledge synthesis, systematic reviews) and COVID-19 (e.g., pandemic, Coronavirus). Because of the dearth of literature on the topic, we included any type of publication (e.g., letters, editorials and any other information found on the topic). We also searched the Journal of Clinical Epidemiology, which had a special issue on the topic. Finally, we used citationchaser to find any relevant references of the included publications, as detailed by [36].
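To illustrate how two concepts are typically combined, the sketch below assumes the usual Boolean convention: synonyms within a concept are joined with OR, and the concept blocks are joined with AND. The term lists are only the examples named above, the function names are hypothetical, and the generic syntax is an assumption; this is not the exact Ovid MEDLINE or Embase strategy used in the review.

```python
def or_block(terms):
    """Join the synonyms for one concept with OR, quoting multi-word phrases."""
    quoted = [f'"{t}"' if " " in t else t for t in terms]
    return "(" + " OR ".join(quoted) + ")"


def build_query(*concepts):
    """AND together one OR-block per concept."""
    return " AND ".join(or_block(c) for c in concepts)


# Example terms named in the Methods; a real strategy would add index terms
# (e.g. MeSH, Emtree), database-specific field tags and truncation.
evidence_synthesis = ["evidence synthesis", "knowledge synthesis", "systematic reviews"]
covid = ["COVID-19", "pandemic", "Coronavirus"]

query = build_query(evidence_synthesis, covid)
print(query)
# ("evidence synthesis" OR "knowledge synthesis" OR "systematic reviews") AND (COVID-19 OR pandemic OR Coronavirus)
```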

2.4. Data extraction

One author (HK) extracted data relevant to the scoping review question from the included publications, as outlined by [39], [40] and Peters et al. (2021). The following data were extracted: authors, year of publication, study type, challenges cited by the authors, and recommendations made by the authors to address the challenges.
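The extraction fields listed above map naturally onto a simple record type. The sketch below is a hypothetical form, not the tool the authors used; the class and field names are our own labels, and the example values are transcribed from the Bell (2021) row of Table 1.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ExtractionRecord:
    """One row of a hypothetical extraction form holding the fields listed in Section 2.4."""
    authors: str
    year: int
    study_type: str
    challenges: List[str] = field(default_factory=list)
    recommendations: List[str] = field(default_factory=list)


# Illustrative record transcribed from the Bell (2021) row of Table 1.
bell_2021 = ExtractionRecord(
    authors="Bell R.J.",
    year=2021,
    study_type="Editorial",
    challenges=[
        "Outdated reviews",
        "Review process is laborious and slow to complete as new evidence is added",
    ],
    recommendations=[
        "Weekly reviews to ensure the currency of the evidence",
        "Prioritise topics for updating of the evidence",
    ],
)
print(bell_2021.study_type, len(bell_2021.challenges))  # Editorial 2
```

Keeping challenges and recommendations as lists, rather than single free-text cells, makes the later theme coding a per-item step.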

2.5. Mapping the results

To map the included research, we identified a set of themes based on discussion among the team members and a review of previous research studies ([34]; Chen, 2021; Akl, 2020; Shokraneh, 2020). We categorized the challenges into four levels: upstream (challenges related to processes that precede evidence synthesis (ES)), ES level, downstream (challenges related to processes that follow ES), and contextual. Upstream issues comprised factors associated with the production and management of the evidence that feeds evidence synthesis; downstream issues comprised factors that influence the dissemination of evidence syntheses; and contextual issues related to the social context in which evidence syntheses are produced and disseminated.
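The two-level scheme (sub-theme within theme) can be expressed as a lookup table. In this minimal sketch the sub-theme labels are taken from the Results subsection headings; the dictionary and function names are hypothetical, and the mapping is our reading of those headings rather than a coding frame published by the authors.

```python
# Sub-theme (Results subsection heading) -> top-level theme.
THEME_OF = {
    "evidence production": "upstream",
    "evidence management": "upstream",
    "inefficiency of the process": "ES level",
    "duplication of work": "ES level",
    "funding": "ES level",
    "evidence dissemination/publication": "downstream",
    "context": "contextual",
}


def themes_for(sub_themes):
    """Return the set of top-level themes covered by one publication's coded sub-themes."""
    unknown = [s for s in sub_themes if s not in THEME_OF]
    if unknown:
        raise ValueError(f"uncoded sub-theme(s): {unknown}")
    return {THEME_OF[s] for s in sub_themes}


# A hypothetical publication coded with two sub-themes spans two themes.
print(sorted(themes_for(["evidence production", "funding"])))  # ['ES level', 'upstream']
```

Raising an error on an unknown label is a design choice: it forces every coded sub-theme to be placed in the frame explicitly instead of being silently dropped.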

3. Results

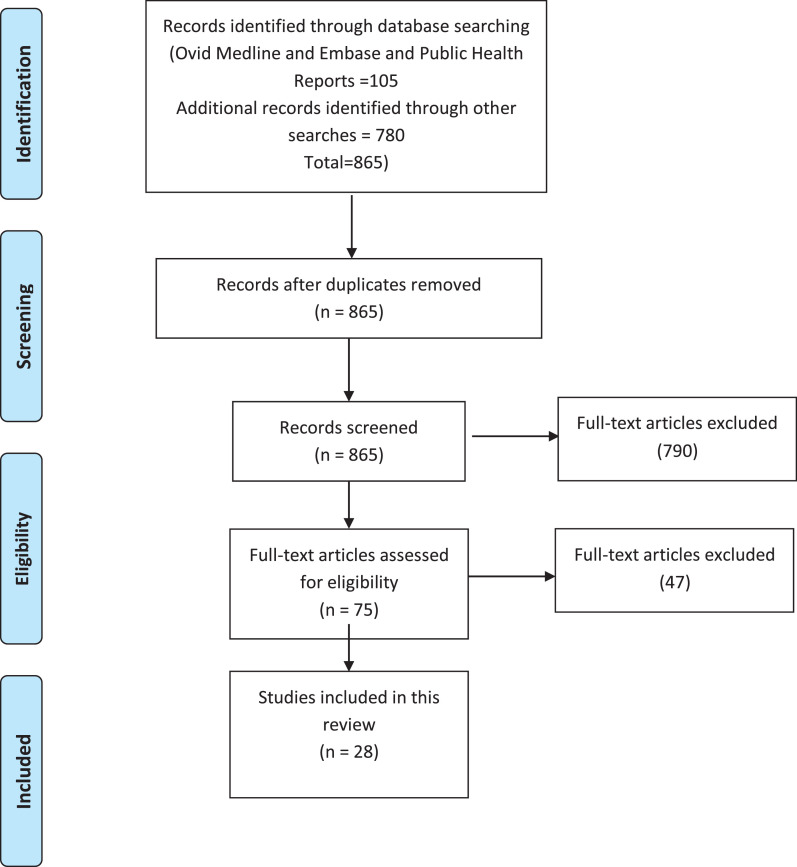

We identified a total of 105 citations through the electronic database search and 780 additional citations using citationchaser. After removing duplicates, we screened the titles and abstracts of 865 unique citations and excluded 790. Screening the full text of the remaining 75 references yielded 28 eligible publications. The results of the search and selection processes are summarized in the PRISMA flow chart in Figure 1.

Fig. 1.

PRISMA flow chart summarizing the results of the search strategy (Tricco et al., 2018).
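The record counts reported above are internally consistent, and the two numbers the text does not state can be derived by subtraction. The short check below uses only the figures given in the text; the derived values (20 duplicates removed, 47 full texts excluded) are inferred here, not quoted from Figure 1.

```python
# Record counts reported in the Results text.
database_records = 105
citation_chasing_records = 780
unique_after_dedup = 865
excluded_title_abstract = 790
included = 28

identified = database_records + citation_chasing_records           # records before deduplication
duplicates_removed = identified - unique_after_dedup                 # inferred, not stated
full_text_assessed = unique_after_dedup - excluded_title_abstract    # stated as 75
excluded_full_text = full_text_assessed - included                   # inferred, not stated

print(identified, duplicates_removed, full_text_assessed, excluded_full_text)  # 885 20 75 47
```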

3.1. Publication characteristics

Table 1 presents a descriptive summary of the included publications. Five of them (18%) were published in 2021 and the remainder in 2020. The included publications comprised eight discussion papers (29%), seven commentaries (25%), five research studies (18%), three methodology publications (11%), two letters to the editor (7%), two editorials (7%) and one literature review (4%).
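The percentages above are the counts divided by the 28 included publications and rounded to whole numbers, as the following check reproduces. The variable names are our own.

```python
TOTAL = 28  # included publications

by_type = {
    "discussion paper": 8,
    "commentary": 7,
    "research study": 5,
    "methodology publication": 3,
    "letter to the editor": 2,
    "editorial": 2,
    "literature review": 1,
}
assert sum(by_type.values()) == TOTAL  # the categories account for every publication

pct = {kind: round(100 * n / TOTAL) for kind, n in by_type.items()}
for kind, n in by_type.items():
    print(f"{kind}: n={n} ({pct[kind]}%)")

print(f"published in 2021: {round(100 * 5 / TOTAL)}%")  # 18%
print("sum of rounded percentages:", sum(pct.values()))  # 101
```

Because each share is rounded separately, the seven percentages sum to 101% rather than 100%; this is a rounding artefact, not a counting error.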

Table 1.

Characteristics of studies included in the review

| Study name | Article description | Methodology | Challenges | Recommendations |
|---|---|---|---|---|
| [4] | Mapping COVID-19 research | Literature review | Low methodological quality and poor reporting of trials | The need to improve the methodology and reporting of studies through a robust peer review process |
| (Alper, Richardson, Lehmann, & Subbian) 2020 | COVID-19 Knowledge Accelerator initiative | Commentary | Inefficiencies across multiple steps in generating evidence | The need to have computable evidence |
| [6] | Analysis of COVID-19 research across the science and social science research landscape | Research study | High output of research data addressing the COVID-19 pandemic and lack of collaboration between researchers from different disciplines | The need for a complete and in-depth approach that considers various scientific disciplines in COVID-19 research, to benefit not only the scientific community but also evidence-based policymaking |
| (Bell) 2021 | Evidence synthesis and COVID-19 | Editorial | Outdated reviews; the review process is laborious and slow to complete as new evidence is added | The need to have weekly reviews conducted to ensure the currency of the evidence; the need to prioritise topics for updating of the evidence |
| [9] | Investigation of the presence of publication bias in COVID-19 studies | Research study | Reporting of only positive studies | Pre-registration of studies and public sharing of data for all study types; meta-analysis of observational studies should also be undertaken |
| (Biesty et al.) 2020 | Investigation of qualitative synthesis methodologies to respond to the COVID-19 pandemic | Discussion paper | Potential criticism of rapid reviews; concerns about the generalisation of qualitative evidence; time limitation | The need to produce a rapid qualitative approach for the Cochrane Collaboration to guide decisions, based on worked examples and case studies |

3.2. Challenges

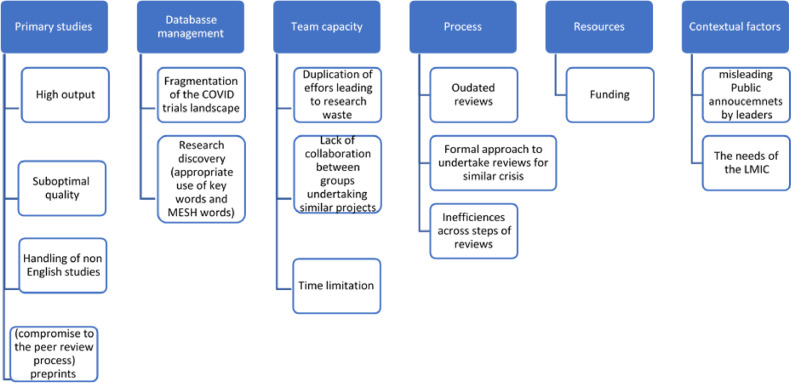

The main challenges identified in the 28 publications were upstream challenges (20 publications), ES level challenges (four), downstream challenges (three) and contextual challenges (four), as shown in Figure 2. Table 2 shows which challenges each included publication discussed. Many publications addressed more than one theme.
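Because a publication can discuss more than one theme, the per-theme counts do not partition the 28 publications: 20 + 4 + 3 + 4 = 31. A minimal sketch of the counting rule, assuming each publication is coded with a list of themes and contributes at most once to each theme; the publication IDs and their coding are invented.

```python
from collections import Counter


def theme_counts(coded):
    """Count publications per theme; a publication counts at most once per theme."""
    counts = Counter()
    for themes in coded.values():
        counts.update(set(themes))  # set() avoids double-counting within one publication
    return counts


# Hypothetical coding of four publications (not the review's data).
coded = {
    "pub_A": ["upstream"],
    "pub_B": ["upstream", "ES level"],
    "pub_C": ["upstream", "upstream", "contextual"],
    "pub_D": ["downstream"],
}
counts = theme_counts(coded)
print(counts["upstream"], sum(counts.values()), len(coded))  # 3 6 4

# The same arithmetic applied to the counts reported in the text:
reported = {"upstream": 20, "ES level": 4, "downstream": 3, "contextual": 4}
extra = sum(reported.values()) - 28  # 31 theme assignments across 28 publications
print(extra)  # 3
```

As in the toy example, the theme totals exceed the number of publications whenever some publications are counted under more than one theme.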

Fig. 2.

Types of challenges for research synthesis communities as a result of the COVID-19 pandemic.

Table 2.

Discussion of challenges across the included studies

| Study name | Primary studies | Databases | Team capacity | Process | Resources | Contextual factors |
|---|---|---|---|---|---|---|
| [4] | X | | | | | |
| (Alper, Richardson, Lehmann, & Subbian) 2020 | X | | | | | |
| [6] | X | X | | | | |
| (Bell) 2021 | X | | | | | |
| [9] | X | X | | | | |
| (Biesty et al.) 2020 | X | | | | | |
| (Chen, Allot, & Lu) 2020 | X | X | | | | |
| [18] | X | X | | | | |
| [20] | X | | | | | |
| [21] | X | X | | | | |
| [27] | X | | | | | |
| [30] | X | | | | | |
| [34] | X | X | X | | | |
| (Hanney, Kanya, Pokhrel, Jones, & Boaz) 2020 | X | X | X | | | |
| (Murad et al.) 2020 | X | X | | | | |
| (Nakagawa et al., 2020) | X | X | X | | | |
| (Negrini, Mg, Côté, & Arienti, 2021) | X | | | | | |
| (Nouri et al., 2020) | X | X | | | | |
| (Nussbaumer-Streit, Klerings, & Gartlehner, 2020) | X | X | | | | |
| (Oikonomidi et al., 2020) | X | X | X | | | |
| (Page et al., 2020) | X | X | | | | |
| (Palayew et al., 2020) | X | X | X | X | | |
| (Ruano, Gómez-García, Pieper, & Puljak, 2020) | X | | | | | |
| (Schünemann et al., 2020) | X | | | | | |
| (Shokraneh & Russell-Rose) 2020 | X | X | | | | |
| (Stewart et al., 2020) | X | X | X | | | |
| (Tricco et al., 2020) | X | X | X | | | |
| (van Schalkwyk et al., 2020) | X | | | | | |

3.3. Upstream challenges: Evidence production

During the COVID-19 pandemic, evidence production challenged evidence synthesis through a high research output in a relatively short time [4,6]. Moreover, the peer review process lacked rigor because of the need to publish information quickly [7,10]. This substantially increased the chance of problematic primary studies feeding the evidence synthesis pipeline. Another cited challenge was the need to search for and handle non-English studies (Nussbaumer-Streit et al., 2019; Robson et al., 2018).

Suggestions to address these challenges included adherence to published guidance on data inclusion and reporting, to enable transparent and accurate reporting. [27] suggested full access to individual participant data (IPD) to enable reanalysis and reproducibility and to increase confidence in the results generated by reviews. IPD would also facilitate subgroup analyses, as was the case in the RECOVERY trial [39]. By combining IPD from various studies, the authors generated the evidence for the benefit of dexamethasone in reducing mortality in hospitalized patients [19]. Such access could be provided through platforms for sharing patient-level data, together with monitoring processes for trial and data quality (Page et al., 2020).
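To make the IPD argument concrete, the sketch below shows one standard way of combining participant-level data across trials, a two-stage fixed-effect meta-analysis: each trial's IPD is first reduced to a log odds ratio, and the trial estimates are then pooled with inverse-variance weights. It is a generic illustration under stated assumptions (a binary mortality outcome, invented data, helper names of our own); it is not the analysis used in RECOVERY or in [19]. With real IPD, the first stage could also be rerun within subgroups defined by participant covariates.

```python
import math


def make_trial(t_deaths, t_total, c_deaths, c_total):
    """Hypothetical helper: expand summary counts into one (arm, died) row per participant."""
    return ([("treatment", True)] * t_deaths
            + [("treatment", False)] * (t_total - t_deaths)
            + [("control", True)] * c_deaths
            + [("control", False)] * (c_total - c_deaths))


def log_odds_ratio(rows):
    """Stage 1: log odds ratio (treatment vs control) and its variance for one trial."""
    n = {(arm, died): 0 for arm in ("treatment", "control") for died in (True, False)}
    for arm, died in rows:
        n[(arm, died)] += 1
    a, b = n[("treatment", True)], n[("treatment", False)]
    c, d = n[("control", True)], n[("control", False)]
    if 0 in (a, b, c, d):
        a, b, c, d = a + 0.5, b + 0.5, c + 0.5, d + 0.5  # continuity correction for empty cells
    return math.log((a * d) / (b * c)), 1 / a + 1 / b + 1 / c + 1 / d


def pool_fixed_effect(ipd_by_trial):
    """Stage 2: inverse-variance fixed-effect pooling of the per-trial log odds ratios."""
    estimates = [log_odds_ratio(rows) for rows in ipd_by_trial.values()]
    weights = [1 / var for _, var in estimates]
    pooled = sum(w * y for w, (y, _) in zip(weights, estimates)) / sum(weights)
    return pooled, math.sqrt(1 / sum(weights))


# Invented data for two trials (deaths / participants in each arm).
trials = {
    "trial_1": make_trial(10, 100, 20, 100),
    "trial_2": make_trial(15, 150, 25, 150),
}
log_or, se = pool_fixed_effect(trials)
print(f"pooled OR {math.exp(log_or):.2f} "
      f"(95% CI {math.exp(log_or - 1.96 * se):.2f} to {math.exp(log_or + 1.96 * se):.2f})")
```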

The few suggestions on handling non-English studies (Nussbaumer-Streit et al., 2019) included searching the literature without language restrictions and establishing databases, such as CDC's COVID-19 Research Articles Downloadable Database, the WHO COVID-19 Database and Cochrane's COVID-19 Study Register, that allow downloads with no language restrictions (Nussbaumer-Streit et al., 2019; Nussbaumer-Streit, Klerings, & Gartlehner, 2020) [28].

3.4. Upstream challenges: Evidence management

A major hindrance to evidence synthesis has been the fragmentation of the COVID-19 primary literature across databases ([16], [17], [27], [34]) and the inconsistency in its indexing (Nussbaumer-Streit et al., 2019; Nussbaumer-Streit et al., 2020; Shokraneh & Russell-Rose, 2020). This makes finding relevant studies challenging, especially in an emerging area where the name of the virus was reported inconsistently.

The fragmentation of the literature creates a need for platforms for sharing individual participant data on COVID-19. Examples include the Yale University Open Data Access Project (https://yoda.yale.edu/), clinicaldatarequest.com and the Vivli platform (https://vivli.org/vivliwp/wp-content/uploads/2020/01/2020_01_02-DRF-Worksheet.pdf).
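One practical consequence of inconsistent naming is that a search or screen keyed to a single term misses records. The sketch below, assuming a simple regular-expression approach, flags titles that use any of several names for the virus and the disease. The variant list is illustrative and incomplete, the function name is hypothetical, and the approach is not a substitute for each database's controlled vocabulary.

```python
import re

# Illustrative, non-exhaustive name variants seen in early COVID-19 records.
VARIANTS = [
    r"covid[\s-]?19",
    r"sars[\s-]?cov[\s-]?2",
    r"2019[\s-]?ncov",
    r"novel coronavirus",
]
PATTERN = re.compile("|".join(VARIANTS), re.IGNORECASE)


def mentions_virus(text):
    """Return True if a title or abstract uses any of the listed name variants."""
    return PATTERN.search(text) is not None


for title in ["Clinical features of 2019-nCoV pneumonia",
              "SARS-CoV-2 transmission in households",
              "Influenza vaccine uptake"]:
    print(mentions_virus(title), title)
```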

3.5. ES level challenges: inefficiency of the process

Inefficiencies across the steps of the systematic review process are a major challenge for the evidence synthesis community, as a review can take up to two years to produce. During that time, the review may become outdated because of the rate at which primary studies are published [5,9,30]. This was exacerbated during the COVID-19 pandemic by the high output of primary studies (Murad et al., 2020).

Suggestions to address these challenges include improving the reliability of approaches to conducting rapid reviews (Tricco et al., 2020). Others include building a repository or registry of all primary studies conducted in the area and regularly updating reviews with any new evidence that can be shared with relevant stakeholders ([3]; Murad et al., 2020). One suggestion was to develop a rapid qualitative evidence synthesis approach for the Cochrane Collaboration to guide decisions, based on worked examples and case studies [10]. Another was to establish rapid review teams that use simplified processes and shortcuts to produce summary reviews on request within 1–3 days [30].

Computable, machine-readable meta-analysis was suggested as a way to maximize the capability of artificial intelligence in undertaking complex, time-consuming reviews [5]. Machine learning is already used by researchers for some steps of systematic reviews ([25]; Leaman, Wei, Allot, & Lu, 2020; Poux et al., 2017). Another suggestion was to conduct weekly reviews to keep the evidence current, and to prioritize topics for updating [8].
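As one example of the kind of machine learning mentioned above, title and abstract screening can be prioritised by a classifier trained on records already screened, so that likely-relevant records are screened first. The sketch below uses multinomial naive Bayes with add-one smoothing, chosen here only because it is short; the included studies do not specify this algorithm, and the class name and training titles are invented.

```python
import math
import re
from collections import Counter


def tokenize(text):
    return re.findall(r"[a-z]+", text.lower())


class NaiveBayesScreener:
    """Multinomial naive Bayes with Laplace (add-one) smoothing.

    Needs at least one relevant and one irrelevant training record.
    """

    def fit(self, texts, labels):
        self.word_counts = {True: Counter(), False: Counter()}
        self.doc_counts = Counter(labels)
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = set(self.word_counts[True]) | set(self.word_counts[False])
        return self

    def _log_likelihood(self, tokens, label):
        counts = self.word_counts[label]
        denom = sum(counts.values()) + len(self.vocab)
        # Words never seen in training are ignored.
        return sum(math.log((counts[t] + 1) / denom) for t in tokens if t in self.vocab)

    def score(self, text):
        """Log-odds that a record is relevant; higher scores are screened first."""
        tokens = tokenize(text)
        prior = math.log(self.doc_counts[True] / self.doc_counts[False])
        return prior + self._log_likelihood(tokens, True) - self._log_likelihood(tokens, False)

    def rank(self, texts):
        return sorted(texts, key=self.score, reverse=True)


# Invented training records standing in for already-screened titles.
screener = NaiveBayesScreener().fit(
    ["rapid review methods for covid evidence synthesis",
     "living systematic review of covid treatments",
     "knee surgery outcomes in athletes",
     "dietary fibre and bowel health"],
    [True, True, False, False],
)
print(screener.rank(["bowel surgery in athletes", "evidence synthesis during the pandemic"]))
# ['evidence synthesis during the pandemic', 'bowel surgery in athletes']
```

In a real workflow the classifier would be retrained as screening decisions accumulate, and every record would still be screened by a human; the ranking only changes the order.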

3.6. ES level challenges: Duplication of work

Duplication of systematic reviews on the same topic has also been cited as a major challenge that leads to research waste. One underlying reason is the lack of information sharing and the absence of a coherent, sustained strategy engaging relevant stakeholders from the international community (Clark, Scott, & Glasziou, 2020; Kadykalo, Haddaway, Rytwinski, & Cooke, 2021; [34]). Another is the lack of collaboration between researchers from different disciplines [6].

Suggestions to address this challenge include building a repository of all primary studies conducted in the area and regularly updating reviews with any new evidence, which can be shared with relevant stakeholders ([3]; Murad et al., 2020).

3.7. ES level challenges: Funding

Funding was cited as a major hurdle to research synthesis, as much of the work is currently undertaken through crowdsourcing and by volunteers ([37]; Palayew et al., 2020; Stewart, El-Harakeh, & Cherian, 2020). Securing funds is important to build team capacity and ensure the sustainability of any project [11]. Hanney et al. (2020) highlighted the need for adequate funding to enable the design of a large, coherent and sustained strategy, engagement of stakeholders, and evaluation of impacts on health systems and partnership participation.

Adequate funding of research teams would enable organizations to maintain the quality of the data they produce. A more sustained funding model is required to ensure the quality, viability and maintenance of future research synthesis initiatives ([37]; Palayew et al., 2020; Stewart et al., 2020).

3.8. Downstream challenges: Evidence dissemination/publication

During the pandemic, more researchers published preprints to make research data quickly accessible (Oikonomidi, Boutron, Pierre, Cabanac, & Ravaud, 2020; van Schalkwyk, Hird, Maani, Petticrew, & Gilmore, 2020). A recent study [31] found that releasing preprints resulted in more citations and higher Altmetric Attention Scores for the publications. However, this practice can propagate misinformation (Nouri et al., 2020). In addition, matching preprints to their subsequent peer-reviewed publications and dealing with changes between versions can be time consuming, and may uncover data errors, especially if several revisions were required [18,31].
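Matching a preprint to its later peer-reviewed version often starts from title similarity. A minimal sketch using the standard library's `difflib.SequenceMatcher`; the function names, the 0.85 threshold and the example titles are hypothetical, and in practice candidate matches would also be checked against authors, DOIs and manual review, since titles frequently change between versions.

```python
from difflib import SequenceMatcher


def normalise(title):
    """Lower-case and collapse whitespace before comparing titles."""
    return " ".join(title.lower().split())


def best_match(preprint_title, published_titles, threshold=0.85):
    """Return the most similar published title, or None if no candidate reaches threshold."""
    best, best_ratio = None, 0.0
    for candidate in published_titles:
        ratio = SequenceMatcher(None, normalise(preprint_title), normalise(candidate)).ratio()
        if ratio > best_ratio:
            best, best_ratio = candidate, ratio
    return best if best_ratio >= threshold else None


published = [
    "Effect of Drug X on Mortality in COVID-19 patients",
    "Mask wearing in schools",
]
print(best_match("Effect of drug X on mortality in COVID-19", published))
print(best_match("Vitamin D and influenza", published))  # None
```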

Another challenge relates to the favoured reporting of ‘positive’ studies (selective reporting bias), as in the case of remdesivir and hydroxychloroquine [4], [9], [23]. The authors reported that COVID-19 put clinicians and researchers under considerable pressure to find interventions that would benefit patients. They describe “white hat bias” as arising from personal beliefs and the urgent need to find an effective intervention. Observational studies are also a typical setting for white hat bias because of their potential for selection and reporting bias.

Authors suggested clear guidance on the inclusion of preprints in reviews and transparent reporting of preprints within reviews. Greater transparency and better reporting of trial findings have been highlighted as more important than ever in the constantly changing environment of the pandemic, where human lives rely on up-to-date evidence that is free of bias (Moynihan et al., 2019).

3.9. Context challenges

Contextual challenges included misleading public health announcements and biased reporting of study results by politicians and national leaders. This in turn resulted in the inappropriate application of values and preferences when choosing a treatment, because the treatment had been promoted by leaders and approval bodies [21]. Regulators such as the FDA authorized some treatments without full information on their efficacy, as in the case of hydroxychloroquine [23].

Suggestions to address these challenges include adherence to evidence-based medicine principles and appropriate application of GRADE in grading evidence for decision making. These strategies help decision makers weigh the uncertainty of the evidence and guard against the inappropriate application of values and preferences ([21]; Schünemann et al., 2020). Stewart et al. (2020) pointed to contextual challenges specific to low- and middle-income countries (LMICs). These include limited access to computer hardware and software, restrictions on database access, constrained data storage capacity, poor data coverage, and low internet bandwidth.

4. Discussion

This review has mapped challenges associated with every step of the research synthesis process. The challenges cited in the included studies were categorized into four distinct but interconnected themes: upstream, evidence synthesis level, downstream and contextual challenges. These were further divided into issues with primary studies, database management, team capacity, process, resources, and context.

The challenges described in this review are faced by multiple stakeholders in the evidence ecosystem: journal editors, peer reviewers and systematic reviewers. Editors must balance the rigor of peer review against the speed of publication needed to make an article available to clinicians and researchers ([8]; Alexander, 2020). Peer reviewers face the suboptimal quality of some publications, due to review authors choosing alternative methodologies such as rapid reviews, and at times outdated reviews whose searches were more than 12 months old (Fretheim, 2020). Systematic reviewers, in turn, face a different kind of challenge, including limited time to produce a review and a lack of collaboration between groups working on similar topics, which at times leads to duplicated effort and research waste ([34]; Ruano, 2020).

Several issues uncovered in this review are critical to the evidence synthesis community and need to be addressed urgently, not only for COVID-19 studies but for other topics [24]. These are the low quality of primary studies, the duplication of efforts leading in some cases to research waste, and the limited ability to handle non-English studies, which may lead to omission of important literature ([34]; Stewart, 2020). Authors' recommendations for handling these issues include sharing platforms, wide collaboration on topics, and clear policies on the inclusion of preprints and the handling of non-English studies (Clyne, 2021; van Schalkwyk, 2020).

Despite the shortcomings identified in this review, the included studies also highlighted some major advances: the extraordinarily rapid production of new evidence, particularly in large platform trials that made use of technological advances; the rapid availability of evidence to the public through preprints; and the willingness of many researchers to share data in various ways endorsed by many journals (Shokraneh, 2020; van Schalkwyk, 2020). These efforts have been strengthened by recent WHO initiatives to produce trustworthy guidelines, with standing panels that efficiently produce guidance and evidence summaries for clinicians on several topics (Lamontagne, 2020; WHO, 2020; [2], [41]).

The results of this review suggest that ongoing initiatives have already attempted to address some of the shortcomings identified. Examples include COVID-NMA, the eCOVID-19 RecMap and L•OVE COVID [35]. These initiatives have leveraged several advances in evidence synthesis, such as living searches, automation tools, prioritization of topics, crowdsourcing, shared platforms, collaboration, and new synthesis methodologies such as living and rapid reviews, as detailed in Table 3.

Table 3.

Examples of advances in evidence synthesis

| Advance in evidence synthesis | Examples of projects incorporating the advance |
|---|---|
| Living searches | Epistemonikos, eCOVID-19 RecMap |
| Automation tools | COVID-END, Epistemonikos, COVID-NMA, PAHO Ongoing Living Review |
| Prioritization of topics | COVID-END, eCOVID-19 RecMap |
| Crowdsourcing | Cochrane, COVID-NMA |
| Shared platforms | LIT-COVID, eCOVID-19 RecMap, COVID-NMA |
| Collaboration and partnership | LIT-COVID, Epistemonikos, eCOVID-19 RecMap, COVID-NMA, PAHO Ongoing Living Review |
| New methodologies for evidence synthesis | Rapid and living reviews |

However, a more structured approach is required to use the resources and advances already employed in these initiatives to advance the field. [3] proposed an aspirational conceptual model in which all of the above advances are combined into an evidence ecosystem 2.0 that leverages crowdsourcing and web-based platforms allowing collaborators from different countries or continents to work together and increase their efficiency. The model also uses artificial intelligence and automation to assist with the ongoing, effective identification, coding and abstraction of data from eligible studies. Finally, open-source tools can be used to build the evidence universe cost-effectively.

The use of computable evidence and artificial intelligence is important for addressing many of the gaps identified in undertaking systematic reviews. [3] highlighted shortfalls of current research synthesis methodologies that are consistent with the findings of this review. The authors emphasized the need to enable current technologies and tools to replace the laborious work undertaken by researchers. However, many of these tools lack validation and interoperability with other systems and tools. A more concerted effort is required to ensure that the available tools are usable within the systems researchers already use to undertake reviews, such as EPPI-Reviewer (Thomas and Brunton, 2007) [26].

This review has a few limitations. It included non-peer-reviewed publications, such as commentaries and editorials, which may have introduced selection bias into the results. Moreover, we limited our search to English-language publications because of a lack of resources, which may have led to the omission of other relevant literature.

The findings of this scoping review should be useful to systematic review teams developing robust methodologies to cope with the high output of primary research and with the limitations of current research synthesis methodology. The review may also provide insight for research methodologists preparing for similar situations, so that reliable synthesized evidence can be delivered efficiently to policymakers, clinicians, and researchers.

5. Conclusion

This review has highlighted the importance of artificial intelligence, a framework for international collaboration and a sustained funding model for addressing many of these shortcomings and ensuring we are ready for similar challenges in the future.

Author statement

This review was conceptualised by HK and EA. HK drafted and wrote the manuscript. All other authors commented on the draft.

Acknowledgement

The authors would like to thank Dr Neal Haddaway (Senior Research Fellow at SEI Headquarters in Stockholm) for his initial comments on the first draft of the manuscript.

Funding

This work received no funding from any organisation.

Footnotes

Declaration of Competing Interests: None to declare. The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Supplementary material associated with this article can be found in the online version at doi:10.1016/j.jclinepi.2021.10.017.

Appendix. Supplementary materials

References

- 1.Abu-Raya B., Migliori G.B., O'Ryan M., Edwards K., Torres A., Alffenaar J.W., et al. Coronavirus disease-19: an interim evidence synthesis of the world association for infectious diseases and immunological disorders (Waidid) Front Med. 2020;7 doi: 10.3389/fmed.2020.572485. (no pagination) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Akl E.A., Morgan R.L., Rooney A.A., Beverly B., Katikireddi S.V., Agarwal A., et al. Developing trustworthy recommendations as part of an urgent response (1–2 weeks): a GRADE concept paper. J Clin Epidemiol. 2021;129:1–11. doi: 10.1016/j.jclinepi.2020.09.037. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Akl E.A., Haddaway N.R., Rada G., Lotfi T. Evidence synthesis 2.0: when systematic, scoping, rapid, living, and overviews of reviews come together. NA. 2020;123:162–165. doi: 10.1016/j.jclinepi.2020.01.025. [DOI] [PubMed] [Google Scholar]

- 4.Alexander P.E., Debono V.B., Mammen M.J., Iorio A., Aryal K., Deng D., et al. COVID-19 coronavirus research has overall low methodological quality thus far: case in point for chloroquine/hydroxychloroquine. J Clin Epidemiol. 2020;123:120–126. doi: 10.1016/j.jclinepi.2020.04.016. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Alper B.S., Richardson J.E., Lehmann H.P., Subbian V. It is time for computable evidence synthesis: The COVID-19 Knowledge Accelerator initiative. J Am Med Inform Assoc. 2020;27(8):1338–1339. doi: 10.1093/jamia/ocaa114. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Aristovnik A., Ravšelj D., Umek L. A Bibliometric Analysis of COVID-19 across. Sci Social Sci Res Landscape. 2020;12(21) doi: 10.3390/su12219132. 9132-NA. [DOI] [Google Scholar]

- 7. Aronson J.K., Heneghan C., Mahtani K.R., Plüddemann A. A word about evidence: ‘rapid reviews’ or ‘restricted reviews’? BMJ Evid Based Med. 2018;23(6):204–205. doi: 10.1136/bmjebm-2018-111025.

- 8. Bell R.J. Evidence synthesis in the time of COVID-19. Climacteric. 2021;24(3):211–213. doi: 10.1080/13697137.2021.1904676.

- 9. Bellos I. A metaresearch study revealed susceptibility of Covid-19 treatment research to white hat bias: first, do no harm. J Clin Epidemiol. 2021;136:55–63. doi: 10.1016/j.jclinepi.2021.03.020.

- 10. Biesty L., Meskell P., Glenton C., Delaney H., Smalle M., Booth A., et al. A QuESt for speed: rapid qualitative evidence syntheses as a response to the COVID-19 pandemic. Syst Rev. 2020;9(1):256. doi: 10.1186/s13643-020-01512-5.

- 11. Boland L., Kothari A., McCutcheon C., Graham I.D. Building an integrated knowledge translation (IKT) evidence base: colloquium proceedings and research direction. Health Res Policy Syst. 2020;18(1):1–7. doi: 10.1186/s12961-019-0521-3.

- 12. Boutron I., Chaimani A., Meerpohl J.J., Hróbjartsson A., Devane D., Rada G., et al. The COVID-NMA Project: Building an Evidence Ecosystem for the COVID-19 Pandemic. American College of Physicians; 2020.

- 13. Boutron I., Créquit P., Williams H., Meerpohl J., Craig J.C., Ravaud P. Future of evidence ecosystem series: 1. Introduction Evidence synthesis ecosystem needs dramatic change. J Clin Epidemiol. 2020;123:135–142. doi: 10.1016/j.jclinepi.2020.01.024.

- 14. Carollo A., Balagtas J.P.M., Neoh M.J.-Y., Esposito G. A scientometric approach to review the role of the medial preoptic area (MPOA) in parental behavior. Brain Sci. 2021;11(3):393. doi: 10.3390/brainsci11030393.

- 15. Carroll C., Booth A., Leaviss J., Rick J. “Best fit” framework synthesis: refining the method. BMC Med Res Methodol. 2013;13(1):37. doi: 10.1186/1471-2288-13-37.

- 16. Chen Q., Allot A., Lu Z. Keep up with the latest coronavirus research. Nature. 2020;579(7798):193. doi: 10.1038/d41586-020-00694-1.

- 17. Chen Q., Allot A., Lu Z. LitCovid: an open database of COVID-19 literature. Nucleic Acids Res. 2021;49(D1):D1534–D1540. doi: 10.1093/nar/gkaa952.

- 18. Clyne B., Walsh K.A., O'Murchu E., Sharp M.K., Comber L., O'Brien K.K., et al. Using Preprints in Evidence Synthesis: Commentary on experience during the COVID-19 pandemic. J Clin Epidemiol. 2021. doi: 10.1016/j.jclinepi.2021.05.010.

- 19. Desborough J., Dykgraaf S.H., Phillips C., Wright M., Maddox R., Davis S., et al. Lessons for the global primary care response to COVID-19: a rapid review of evidence from past epidemics. Family Practice; 2021. p. 15.

- 20. DeMets D.L., Fleming T.R. Achieving effective informed oversight by DMCs in COVID clinical trials. J Clin Epidemiol. 2020;126:167–171. doi: 10.1016/j.jclinepi.2020.07.001.

- 21. Djulbegovic B., Guyatt G. Evidence-based medicine in times of crisis. J Clin Epidemiol. 2020;126:164–166. doi: 10.1016/j.jclinepi.2020.07.002.

- 22. Dewey C., Hingle S., Goelz E., Linzer M. Supporting clinicians during the COVID-19 pandemic. American College of Physicians; 2020.

- 23. Di Castelnuovo A., Costanzo S., Antinori A., Berselli N., Blandi L., Bruno R., et al. Use of hydroxychloroquine in hospitalised COVID-19 patients is associated with reduced mortality: Findings from the observational multicentre Italian CORIST study. Eur J Intern Med. 2020;82:38–47. doi: 10.1016/j.ejim.2020.08.019.

- 24. Djulbegovic B., Guyatt G. Evidence-based medicine in times of crisis. J Clin Epidemiol. 2020;126:164–166. doi: 10.1016/j.jclinepi.2020.07.002.

- 25. Dunn A.G., Bourgeois F.T. Is it time for computable evidence synthesis? J Am Med Inform Assoc. 2020;27(6):972–975. doi: 10.1093/jamia/ocaa035.

- 26. EPPI-Reviewer. Retrieved from https://eppi.ioe.ac.uk/cms/Default.aspx?alias=eppi.ioe.ac.uk/cms/er4.

- 27. Ewers M., Ioannidis J.P.A., Plesnila N. Access to data from clinical trials in the COVID-19 crisis: open, flexible, and time-sensitive. J Clin Epidemiol. 2021;130:143–146. doi: 10.1016/j.jclinepi.2020.10.008.

- 28. Fan J., Gao Y., Zhao N., Dai R., Zhang H., Feng X., et al. Bibliometric analysis on COVID-19: a comparison of research between English and Chinese studies. Front Public Health. 2020;8:477. doi: 10.3389/fpubh.2020.00477.

- 29. Fell M.J. The economic impacts of open science: a rapid evidence assessment. Publications. 2019;7(3):46. doi: 10.3390/publications7030046.

- 30. Fretheim A., Brurberg K.G., Forland F. Rapid reviews for rapid decision-making during the coronavirus disease (COVID-19) pandemic, Norway, 2020. Euro Surveill. 2020;25(19):05. doi: 10.2807/1560-7917.ES.2020.25.19.2000687.

- 31. Fu D.Y., Hughey J.J. Meta-research: releasing a preprint is associated with more attention and citations for the peer-reviewed article. eLife. 2019;8:e52646. doi: 10.7554/elife.52646.

- 32. Grimshaw J.M., Tovey D.I., Lavis J.N. COVID-END: an international network to better co-ordinate and maximize the impact of the global evidence synthesis and guidance response to COVID-19. 2020.

- 33. Gurevitch J., Koricheva J., Nakagawa S., Stewart G. Meta-analysis and the science of research synthesis. Nature. 2018;555(7695):175–182. doi: 10.1038/nature25753.

- 34. Haddaway N.R., Akl E.A., Page M.J., Welch V., Keenan C., Lotfi T. Open synthesis and the coronavirus pandemic in 2020. J Clin Epidemiol. 2020;126:184–191. doi: 10.1016/j.jclinepi.2020.06.032.

- 35. Haddaway N.R., Akl E.A., Page M.J., Welch V.A., Keenan C., Lotfi T. Open synthesis and the coronavirus pandemic in 2020. J Clin Epidemiol. 2020;126:184–191. doi: 10.1016/j.jclinepi.2020.06.032.

- 36. Haddaway N.R., Grainger M.J., Gray C.T. citationchaser: an R package for forward and backward citations chasing in academic searching (Version 0.0.3). 2021. Retrieved from https://github.com/nealhaddaway/citationchaser

- 37. Hanney S., Kanya L., Pokhrel S., Jones T.H., Boaz A. What is the evidence on policies, interventions and tools for establishing and/or strengthening national health research systems and their effectiveness? [Internet]. 2020.

- 38. Hanney S.R., Kanya L., Pokhrel S., Jones T.H., Boaz A. How to strengthen a health research system: WHO's review, whose literature and who is providing leadership? Health Res Policy Syst. 2020;18(1):72. doi: 10.1186/s12961-020-00581-1.

- 39. Horby P., Landray M. Low-cost dexamethasone reduces death by up to one third in hospitalised patients with severe respiratory complications of COVID-19. RECOVERY Trial Press Release; 2020. Available at: https://www.ox.ac.uk/news/2020-06-16-low-cost-dexamethasone-reduces-death-one-thirdhospitalised-patients-severe. Accessed July.

- 40. Khalil H., Peters M., Godfrey C.M., McInerney P., Soares C.B., Parker D. An evidence-based approach to scoping reviews. Worldviews Evid Based Nurs. 2016;13(2):118–123. doi: 10.1111/wvn.12144.

- 41. WHO. Coronavirus disease (COVID-19) pandemic. 2021. Retrieved from https://www.who.int/emergencies/diseases/novel-coronavirus-2019
