Summary

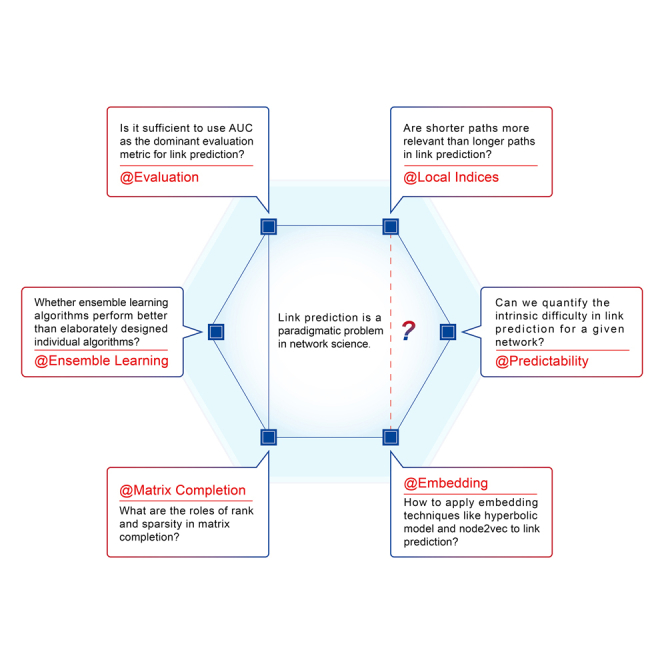

Link prediction is a paradigmatic problem in network science, which aims at estimating the existence likelihoods of nonobserved links based on the known topology. After a brief introduction of the standard problem and evaluation metrics of link prediction, this review summarizes representative progress on local similarity indices, link predictability, network embedding, matrix completion, ensemble learning, and some other topics, mainly extracted from related publications in the last decade. Finally, this review outlines some long-standing challenges for future studies.

Subject areas: Computer science, Network, Network topology


Introduction

Networks are a natural and powerful tool to characterize a huge number of social, biological, and information systems that consist of interacting elements, and network science is currently one of the most active interdisciplinary research domains (Barabasi, 2016; Newman, 2018). Link prediction is a paradigmatic problem in network science that attempts to uncover missing links or predict future links (Lü and Zhou, 2011). It has already found many theoretical and practical applications, such as reconstruction of networks (Squartini et al., 2018; Peixoto, 2018), evaluation of evolving models (Wang et al., 2012; Zhang et al., 2015), inference of biological interactions (Csermely et al., 2013; Ding et al., 2014), online recommendation of friends and products (Aiello et al., 2012b; Lü et al., 2012), and so on.

Thanks to a few pioneering works (Liben-Nowell and Kleinberg, 2007; Clauset et al., 2008; Zhou et al., 2009; Guimerà and Sales-Pardo, 2009), link prediction has been one of the most active research domains in network science. Early contributions were already summarized by a well-known survey article (Lü and Zhou, 2011), and this review will first define the standard problem and discuss some well-known evaluation metrics and then introduce most representative achievements in the last decade (mostly published after (Lü and Zhou, 2011)), including local similarity indices, link predictability, network embedding, matrix completion, ensemble learning, and some others. Lastly, this review will show limitations of existing studies as well as open challenges for future studies.

Evaluation

Consider a simple network $G(V, E)$, where $V$ and $E$ are the sets of nodes and links, the directionalities and weights of links are ignored, and multiple links and self-connections are not allowed. We assume that there are some missing links or future links in the set of nonobserved links $U \setminus E$, where $U$ is the universal set containing all potential links. The task of link prediction is to find out those missing or future links. To test the algorithm’s accuracy, the set of observed links, $E$, is divided into two parts: the training set $E^T$ is treated as known information, while the probe set $E^P$ is used for algorithm evaluation, and no information in $E^P$ is allowed to be used for prediction. The majority of known studies applied “random division”, namely $E^P$ is randomly drawn from $E$. In the case of predicting future links, “temporal division” is usually adopted, where $E^P$ contains the most recently appeared links (Lichtenwalter et al., 2010). In some real networks, missing links have different topological features from observed links. For example, missing links are more likely to be associated with low-degree nodes since interactions between hubs are more easily known. In such situations, we may apply “biased division” to ensure that $E^P$ consists of links with similar topological features to missing links (Zhu et al., 2012).
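As a minimal sketch of the “random division” described above, the observed links can be shuffled and split into a training set and a probe set. The function name `random_division`, the 10% default, and the toy link list are illustrative assumptions rather than part of any cited study.

```python
import random


def random_division(links, probe_fraction=0.1, seed=0):
    """Randomly split observed links into (training set, probe set)."""
    rng = random.Random(seed)          # fixed seed for reproducibility
    shuffled = list(links)
    rng.shuffle(shuffled)
    n_probe = int(round(probe_fraction * len(shuffled)))
    probe = set(shuffled[:n_probe])    # held out, never used for prediction
    train = set(shuffled[n_probe:])    # treated as known information
    return train, probe


# Toy example: a chain of 21 nodes, i.e. 20 observed links.
links = [(i, i + 1) for i in range(20)]
train, probe = random_division(links)
```

Temporal or biased division would replace the shuffle with a sort by timestamp or a degree-dependent sampling rule, respectively.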

Performance evaluation metrics can be roughly divided into two categories: threshold-dependent metrics (e.g., fixed-threshold accuracy) and threshold-independent metrics (e.g., area under a threshold curve). Precision and recall are the two most widely used metrics in the former category. Precision is defined as the ratio of relevant items selected to the number of items selected. That is to say, if we take the top-L links as the predicted ones, among which $L_r$ links are correctly predicted, then the precision equals $L_r/L$. Recall is defined as the ratio of relevant items selected to the total number of relevant items, say $L_r/|E^P|$. An obvious drawback of threshold-dependent metrics is that we generally do not have a reasonable way to determine the threshold, like the number of predicted links L or the threshold score for the existence of links. A widely adopted way is setting $L = |E^P|$, at which precision = recall (Lü and Zhou, 2011; Liben-Nowell and Kleinberg, 2007). Although $|E^P|$ is generally unknown, an experiential and reasonable setting is $|E^P| = 0.1|E|$ because 10% of links in the probe set are usually enough for us to get statistically solid results while the removal of 10% of links will probably not destroy the structural features of the target network (Lü et al., 2015).
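The two threshold-dependent metrics above can be sketched in a few lines. The helper name `precision_recall_at_L` and the toy scores are hypothetical, and ties are broken simply by sort order, which is an assumption of this sketch.

```python
def precision_recall_at_L(scores, probe, L):
    """scores: dict mapping each nonobserved link to its score.
    probe: set of held-out links; L: number of predicted links."""
    ranked = sorted(scores, key=scores.get, reverse=True)[:L]
    hits = sum(1 for link in ranked if link in probe)  # correctly predicted links
    return hits / L, hits / len(probe)


scores = {("a", "b"): 0.9, ("a", "c"): 0.8, ("b", "d"): 0.4, ("c", "d"): 0.1}
probe = {("a", "b"), ("c", "d")}
# Setting L equal to the probe-set size makes precision and recall coincide.
precision, recall = precision_recall_at_L(scores, probe, L=len(probe))
```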

Some studies argued that a single value might not well reflect the performance of a predictor (Lichtenwalter et al., 2010; Yang et al., 2015). Therefore, robust evaluation based on threshold-dependent metrics should cover a range of thresholds (e.g., by varying L), which is actually close to the consideration of threshold curves. The precision–recall (PR) curve (Yang et al., 2015) and the receiver operating characteristic (ROC) curve (Hanley and McNeil, 1982) are frequently considered in the literature. The former shows precision with respect to recall at all thresholds, and the latter represents the performance trade-off between true positives and false positives at different thresholds. We say algorithm X is strictly better than algorithm Y only if X’s threshold curve completely dominates Y’s curve (mathematically speaking, a curve A dominates another curve B in a space if B is always equal to or below A (Provost et al., 1998)). Davis and Goadrich (2006) have proved that a curve dominates in ROC space if and only if it dominates in PR space.

As the condition of domination is too rigid, we usually use areas under threshold curves as evaluation metrics. The area under the ROC curve (AUC) can be interpreted as the probability that a randomly chosen link in $E^P$ is assigned a higher existence likelihood than a randomly chosen link in $U \setminus E$. If all likelihoods are generated from an independent and identical distribution, the AUC value should be about 0.5. Therefore, the degree to which the value exceeds 0.5 indicates how much better the algorithm performs than pure chance. Thus far, AUC is the most frequently used metric in link prediction, probably because it is highly interpretable, easy to interpolate, and easy to visualize. Meanwhile, readers should be aware of some remarkable disadvantages of AUC. For example, AUC is inadequate to evaluate the early retrieval performance, which is critical in real applications, especially in many biological scenarios (Saito and Rehmsmeier, 2015), and AUC gives misleadingly high scores to algorithms that can successfully rank many negatives at the bottom, while this ability is less significant in imbalanced learning (Yang et al., 2015; Lichtenwalter and Chawla, 2012). A typical viewpoint in the early studies is that AUC is suitable for imbalanced learning because AUC is not sensitive to the ratio of positives to negatives and thus can reflect an algorithm’s ability independently of the data distribution (Fawcett, 2006). However, a recent perspective in machine learning and big data is that talking about the performance or ability of a classification algorithm without specified datasets is meaningless, so this previous advantage has drawn increasing criticism (Yang et al., 2015; Lichtenwalter and Chawla, 2012). Hand argued that AUC is fundamentally incoherent because AUC uses different misclassification cost distributions for different classifiers (Hand, 2009).
Hand’s criticism is deep, but AUC indeed measures relative ranks instead of absolute loss and is irrelevant to misclassification cost so that to dissect AUC in the narration involving misclassification cost may be unfair. To overcome the above disadvantages, scientists have proposed a number of alternatives of AUC, such as H measure (Hand 2009), concentrated ROC (Swamidass et al., 2010), and normalized discounted cumulative gain (Wang et al., 2013). At the same time, the area under the precision-recall curve (AUPR) becomes increasingly popular, especially for biological studies. Though the AUPR score is less interpretable than AUC, each point in the PR curve has an explicit meaning, and the absolute accuracy metrics (e.g., precision and recall) are usually closer to practical requirements than relative ranks.
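The probabilistic interpretation of AUC given above translates directly into a pairwise comparison. The sketch below counts ties as one half, a common convention that is assumed here; the function name `auc` and the toy scores are illustrative only.

```python
def auc(probe_scores, nonexistent_scores):
    """Probability that a probe link outscores a nonexistent link (ties count 0.5)."""
    wins = 0.0
    for s_pos in probe_scores:
        for s_neg in nonexistent_scores:
            if s_pos > s_neg:
                wins += 1.0
            elif s_pos == s_neg:
                wins += 0.5
    return wins / (len(probe_scores) * len(nonexistent_scores))


# Two probe links and three nonexistent links with hypothetical scores.
value = auc([0.9, 0.4], [0.5, 0.1, 0.4])
```

For large networks the exhaustive double loop is usually replaced by random sampling of pairs, which approximates the same quantity.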

In summary, empirical comparisons and systematic analyses of evaluation metrics for link prediction are important tasks at this stage because many publications use AUC as the sole metric, while an ongoing empirical study (by Y.-L. Lee and T. Zhou, unpublished) shows that in about 1/3 of pairwise comparisons, AUC and AUPR give different ranks of algorithms, and a recent large-scale experimental study also shows inconsistent results by AUC and AUPR (Muscoloni and Cannistraci, 2021). Before a comprehensive and explicit picture is obtained, my suggestion is that we should at least report both AUC and AUPR simultaneously, and only if an algorithm obviously beats another one in both metrics for a network can we say the former performs better in this case.

Local similarity indices

A similarity-based algorithm will assign a similarity score to each nonobserved link, and the one with a higher score is of a larger likelihood to be a missing link. Liben-Nowell and Kleinberg (2007) indicated that a very simple index named “common neighbor” (CN), say

$$S^{\mathrm{CN}}_{xy} = |\Gamma_x \cap \Gamma_y| \qquad \text{(Equation 1)}$$

with $\Gamma_x$ and $\Gamma_y$ being the sets of neighbors of nodes x and y, performs very well in link prediction for social networks. Zhou et al. (2009) proposed the “resource allocation” (RA) index via weakening the weights of large-degree common neighbors, namely

$$S^{\mathrm{RA}}_{xy} = \sum_{z \in \Gamma_x \cap \Gamma_y} \frac{1}{k_z} \qquad \text{(Equation 2)}$$

where $k_z$ is the degree of node z. The simplicity, elegance, and good performance of CN, RA, and some other alternatives (Lü and Zhou, 2011) have led to increasing attention on local similarity indices.
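A minimal sketch of the CN and RA indices, assuming the network is stored as a dictionary of neighbor sets (the helper names and the toy network are illustrative):

```python
def build_adjacency(links):
    """Map every node to the set of its neighbors (undirected, simple network)."""
    adj = {}
    for x, y in links:
        adj.setdefault(x, set()).add(y)
        adj.setdefault(y, set()).add(x)
    return adj


def cn_index(adj, x, y):
    """Common neighbor index: number of shared neighbors."""
    return len(adj[x] & adj[y])


def ra_index(adj, x, y):
    """Resource allocation index: each common neighbor z contributes 1/k_z."""
    return sum(1.0 / len(adj[z]) for z in adj[x] & adj[y])


adj = build_adjacency([(1, 2), (1, 3), (2, 4), (3, 4), (3, 5)])
cn = cn_index(adj, 1, 4)   # common neighbors of 1 and 4 are nodes 2 and 3
ra = ra_index(adj, 1, 4)   # 1/k_2 + 1/k_3 = 1/2 + 1/3
```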

In the recent decade, probably, the most impressive achievement on local similarity indices is the proposal of the local community paradigm (Cannistraci et al., 2013a), which suggests that two nodes are more likely to link together if their common neighbors are densely connected. Accordingly, Cannistraci et al. (2013a) proposed the CAR index where the CN index is multiplied by the number of observed links between common neighbors, as follows:

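$$S^{\mathrm{CAR}}_{xy} = S^{\mathrm{CN}}_{xy} \times \sum_{z \in \Gamma_x \cap \Gamma_y} \frac{|\gamma_z|}{2} \qquad \text{(Equation 3)}$$

where $\gamma_z$ is the subset of z’s neighbors that are also common neighbors of x and y. Analogously, the RA index can be improved by accounting for the local community paradigm as follows:

$$S^{\mathrm{CRA}}_{xy} = \sum_{z \in \Gamma_x \cap \Gamma_y} \frac{|\gamma_z|}{k_z} \qquad \text{(Equation 4)}$$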

By integrating the idea of Hebbian learning rule, the above index is further extended and renamed as Cannistraci-Hebb (CH) index (Muscoloni et al., 2018):

| (Equation 5) |

where is the internal degree of z, say the number of z’s neighbors that are also in , and is the external degree of z, say the number of z’s neighbors that are not in . The core idea of CH index is to consider the negative impacts of external local community links (see Muscoloni et al. (2020) for more CH indices according to the core idea). Extensive empirical analyses (Muscoloni et al., 2020; Zhou et al., 2021) indicated that the introduction of local community paradigm and Hebbian learning rule could considerably improve the performance of routine local similarity indices.
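The local community paradigm can be illustrated with a small sketch of the CAR index and the community-aware RA variant. It assumes the forms CAR = CN × (number of links among common neighbors) and CRA = Σ |γ_z| / k_z, with γ_z the neighbors of z that are themselves common neighbors of x and y. Function names and the toy network are hypothetical, and the CH index is deliberately not covered.

```python
def build_adjacency(links):
    adj = {}
    for x, y in links:
        adj.setdefault(x, set()).add(y)
        adj.setdefault(y, set()).add(x)
    return adj


def car_index(adj, x, y):
    common = adj[x] & adj[y]
    # Each link between two common neighbors is seen from both ends, hence / 2.
    local_community_links = sum(len(adj[z] & common) for z in common) / 2
    return len(common) * local_community_links


def cra_index(adj, x, y):
    common = adj[x] & adj[y]
    # |gamma_z| / k_z for every common neighbor z.
    return sum(len(adj[z] & common) / len(adj[z]) for z in common)


# Nodes 2 and 3 are common neighbors of 1 and 4 and are linked to each other.
adj = build_adjacency([(1, 2), (1, 3), (2, 4), (3, 4), (2, 3)])
car = car_index(adj, 1, 4)
cra = cra_index(adj, 1, 4)
```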

In most known studies, the presence of many 2-hop paths between a pair of nodes is considered to be the strongest evidence indicating the existence of a corresponding missing link or future link. Although in local path index (Lü et al., 2009) and Katz index (Katz, 1953) longer paths are taken into account, they are considered to be less significant than 2-hop paths. Surprisingly, some recent works have argued that 3-hop-based similarity indices perform better than 2-hop-based indices. Pech et al. (2019) assumed that the existence likelihood of a link is a linear sum of all its neighbors’ contributions. After some algebra, Pech et al. (2019) obtained a global similarity index called linear optimization (LO) index, as follows:

$$S^{\mathrm{LO}} = \alpha A \left(\alpha A^2 + I\right)^{-1} A^2 \qquad \text{(Equation 6)}$$

where A and I are the adjacency matrix and the identity matrix, respectively, and $\alpha$ is a free parameter. Clearly, the number of 3-hop paths can be interpreted as a degenerate index of LO. Indeed, Daminelli et al. (2015) had already applied 3-hop-based indices to predict missing links in bipartite networks, while almost no one at that time had tried 3-hop-based indices on unipartite networks. Kovács et al. (2019) noted the bipartite nature of the protein-protein interaction networks (not fully bipartite, but of high bipartivity (Holme et al., 2003)) and independently proposed a degree-normalized index (called L3 index) based on 3-hop paths as follows:

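$$S^{\mathrm{L3}}_{xy} = \sum_{u,v} \frac{a_{xu} a_{uv} a_{vy}}{\sqrt{k_u k_v}} \qquad \text{(Equation 7)}$$

where $a_{xu}$ denotes an element of the adjacency matrix A and $k_u$ is the degree of node u.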

They showed its advantage compared with 2-hop-based indices in predicting protein-protein interactions. Muscoloni et al. (2018) further proposed a theory that generalized 2-hop-based indices to n-hop-based indices with n > 2 and demonstrated the superiority of 3-hop-based indices over 2-hop-based indices on protein-protein interaction networks, world trade networks, and food webs. For example, in their framework (Muscoloni et al., 2018), the n-hop-based RA index reads as follows:

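$$S^{\mathrm{RA}(n)}_{xy} = \sum_{z_1, z_2, \ldots, z_{n-1} \in P_n(x,y)} \frac{1}{\left(k_{z_1} k_{z_2} \cdots k_{z_{n-1}}\right)^{1/(n-1)}} \qquad \text{(Equation 8)}$$

where $P_n(x,y)$ is the set of all n-hop simple paths connecting x and y, and $z_1, z_2, \ldots, z_{n-1}$ are the intermediate nodes on the considered path. Accordingly, the L3 index is exactly the same as the 3-hop-based RA index.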
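A short sketch of the L3 index (equivalently, the 3-hop-based RA index) follows. It assumes a dictionary-of-sets adjacency and enumerates the paths x-u-v-y directly; the function name and the toy chain are illustrative.

```python
from math import sqrt


def l3_index(adj, x, y):
    """Sum over 3-hop paths x-u-v-y of 1 / sqrt(k_u * k_v)."""
    score = 0.0
    for u in adj[x]:
        for v in adj[y]:
            if v in adj[u]:  # the link (u, v) closes a 3-hop path
                score += 1.0 / sqrt(len(adj[u]) * len(adj[v]))
    return score


# A chain 0-1-2-3: the only 3-hop path between 0 and 3 passes through 1 and 2.
adj = {0: {1}, 1: {0, 2}, 2: {1, 3}, 3: {2}}
score = l3_index(adj, 0, 3)
```

For a nonobserved link (x, y) in a simple network, every walk counted this way is a simple path, since u and v cannot coincide with each other, with x, or with y.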

We have implemented extensive experiments on 137 real networks (Zhou et al., 2021), suggesting that (i) 3-hop-based indices outperform 2-hop-based indices subject to AUC, while 3-hop-based and 2-hop-based indices are competitive on precision; (ii) CH indices perform the best among all considered candidates; and (iii) 3-hop-based indices are more suitable for disassortative networks with lower densities and lower average clustering coefficient. Furthermore, we have showed that a hybrid of 2-hop-based and 3-hop-based indices via collaborative filtering techniques can result in overall better performance (Lee and Zhou, 2021).

Link predictability

Quantifying link predictability of a network allows us to evaluate link prediction algorithms for this network and to see whether there is still a large space to improve the current prediction accuracy. Lü et al. (2015) raised a hypothesis that missing links are difficult to predict if their addition causes huge structural changes, and thus, a network is highly predictable if the removal or addition of a set of randomly selected links does not significantly change structural features of this network. Denote by $A$ the adjacency matrix of a simple network $G(V, E)$ and by $\Delta A$ the adjacency matrix corresponding to a set of randomly selected links $\Delta E$ from $E$. After the removal of $\Delta E$, the remaining network, with link set $E^R = E \setminus \Delta E$, is also a simple network so that the corresponding adjacency matrix, $A^R = A - \Delta A$, can be diagonalized as follows:

$$A^R = \sum_{k=1}^{N} \lambda_k x_k x_k^T \qquad \text{(Equation 9)}$$

where $N = |V|$, and $\lambda_k$ and $x_k$ are the kth eigenvalue and the corresponding orthogonal and normalized eigenvector of $A^R$, respectively. Considering $\Delta A$ as a perturbation to $A^R$, which results in an updated eigenvalue $\lambda_k + \Delta\lambda_k$ and a corresponding eigenvector $x_k + \Delta x_k$, we have the following equation:

$$\left(A^R + \Delta A\right)\left(x_k + \Delta x_k\right) = \left(\lambda_k + \Delta\lambda_k\right)\left(x_k + \Delta x_k\right) \qquad \text{(Equation 10)}$$

Similar to the process to get the expectation value of the first-order perturbation Hamiltonian, we neglect the second-order small terms and the changes of eigenvectors and then obtain the following equation:

$$\Delta\lambda_k \approx \frac{x_k^T \Delta A \, x_k}{x_k^T x_k} \qquad \text{(Equation 11)}$$

as well as the perturbed matrix:

$$\tilde{A} = \sum_{k=1}^{N} \left(\lambda_k + \Delta\lambda_k\right) x_k x_k^T \qquad \text{(Equation 12)}$$

which can be considered as the linear approximation of A if the expansion is only based on $A^R$. If the perturbation does not significantly change the structural features, the eigenvectors of $A^R$ and those of A should be almost the same, and thus, $\tilde{A}$ should be very close to A according to Equation (12). We rank all links in $U \setminus E^R$ in descending order according to their values in $\tilde{A}$ and select the top-L links to form the set $E^L$, where $L = |\Delta E|$. Links in $E^R$ and $E^L$ constitute the perturbed network, and if this network is close to G (because $\tilde{A}$ is close to A), $E^L$ should be close to $\Delta E$. Therefore, Lü et al. (2015) finally proposed an index called structural consistency to measure the inherent difficulty in link prediction as follows:

$$\sigma_c = \frac{|E^L \cap \Delta E|}{|\Delta E|} \qquad \text{(Equation 13)}$$

The above perturbation method can also be applied to predict missing links, and the resulting “structural perturbation method” (SPM) is still one of the most accurate methods so far (Muscoloni and Cannistraci, 2021).
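The perturbation procedure can be sketched with NumPy as below. This is a simplified illustration under stated assumptions: a single random removal rather than an average over many, no special treatment of degenerate eigenvalues (which the original method handles more carefully), and hypothetical function names and toy network.

```python
import numpy as np


def perturbed_matrix(AR, dA):
    """First-order perturbed matrix: eigenvalues shifted, eigenvectors kept."""
    lam, X = np.linalg.eigh(AR)                  # columns of X are unit eigenvectors
    d_lam = np.einsum("ik,ij,jk->k", X, dA, X)   # x_k^T dA x_k for every k
    return (X * (lam + d_lam)) @ X.T


def structural_consistency(A, removal_fraction=0.1, seed=0):
    rng = np.random.default_rng(seed)
    A = np.asarray(A, dtype=float)
    iu, ju = np.triu_indices(A.shape[0], k=1)    # all node pairs i < j
    link_ids = np.flatnonzero(A[iu, ju] > 0)
    L = max(1, int(round(removal_fraction * link_ids.size)))
    removed = rng.choice(link_ids, size=L, replace=False)

    dA = np.zeros_like(A)
    dA[iu[removed], ju[removed]] = 1.0
    dA = dA + dA.T                               # symmetric perturbation
    AR = A - dA                                  # remaining network

    A_tilde = perturbed_matrix(AR, dA)
    candidates = np.flatnonzero(AR[iu, ju] == 0)  # pairs not linked in AR
    scores = A_tilde[iu[candidates], ju[candidates]]
    top = candidates[np.argsort(-scores, kind="stable")[:L]]
    return len(set(top.tolist()) & set(removed.tolist())) / L


# Toy network: two 5-cliques joined by a single link.
A = np.zeros((10, 10))
for block in (range(0, 5), range(5, 10)):
    for i in block:
        for j in block:
            if i != j:
                A[i, j] = 1.0
A[4, 5] = A[5, 4] = 1.0
sigma_c = structural_consistency(A)
```

Ranking the entries of the perturbed matrix for all nonobserved pairs, instead of comparing with the removed set, gives the corresponding link predictor.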

Koutra et al. (2015) found that the major part of a seemingly complicated real network can be represented by a few elemental substructures like cliques, stars, chains, bipartite cores, and so on. Inspired by this study, Xian et al. (2020) claimed that a network is more regular and thus more predictable if it can be well represented by a small number of subnetworks. To reduce the tremendous complexity caused by countless subnetworks, they further set a strong restriction that candidate subnetworks are ego networks of all nodes, where an ego network (also called egocentric network) is a subnetwork induced by a central node (known as the ego) and all other nodes directly connected to the ego (called alters); see (Wang et al., 2016a) for example. Obviously, the ego network of node i can be represented by the ith row or ith column of the adjacency matrix A, and if a network can be perfectly represented by all ego networks, there exists a matrix $Z$ such that A = AZ. Intuitively, if a network G is very regular, the corresponding representation Z should have three properties: (i) G can be well represented by its ego networks so that AZ is close to A; (ii) G can be well represented by a small number of ego networks so that Z is of low rank since the redundant ego networks correspond to zero rows in Z; and (iii) each ego network of G can be represented by very few other ego networks so that Z is sparse. Accordingly, the best representation matrix $Z^*$ can be obtained by solving the following optimization problem:

| (Equation 14) |

where and are tradeoff parameters. Based on , Xian et al. (2020) proposed an ad hoc index named structural regularity index, as follows:

| (Equation 15) |

where r is the rank of , is the number of zero entries in , denotes the proportion of identical ego networks, and characterizes the density of zero entries of the reduced echelon form of . Clearly, a lower r and a larger will result in a smaller , corresponding to a more predictable network.

Xian et al. (2020) suggested that the structural regularity corresponds to redundant information in the adjacency matrix, which can be characterized by a low-rank and sparse representation matrix. Sun et al. (2020) proposed a more direct method to measure such redundancy. Their train of thought is that a more predictable network contains more structural redundancy and thus can be compressed by a shorter binary string. As the shortest possible compression length can be calculated by a lossless compression algorithm, they used the obtained normalized shortest compression length of a network to quantify its structure predictability.

To validate their methods, Lü et al. (2015) and Xian et al. (2020) tested on many real networks whether the structural consistency and the structural regularity index are strongly correlated with the prediction accuracies of a few well-known algorithms. This is rough because no algorithm can stand for the theoretically best algorithm. Sun et al. (2020) adopted an improved method that uses the best performance among a number of known algorithms for each tested network to approximate the performance of the theoretically best predicting algorithm. Garcia-Perez et al. (2020) analyzed the ensemble of simple networks, where each can be constructed by generating a link between any node pair i and j with a known linking probability $p_{ij}$. For such a theoretical benchmark, the best possible algorithm is to rank unobserved links with the largest linking probabilities in the top positions, and the theoretical limit of precision can be easily obtained. They showed that if the size of $\Delta A$ is too small compared with A, the evaluation of predictability by $\sigma_c$ is less accurate.

Structural consistency, structural regularity, and compression length are all ad hoc methods. They can be used to probe the intrinsic difficulty in link prediction but cannot mathematically formulate the limit of prediction. Mathematically speaking, there could be a God algorithm that correctly predicts all missing links, except for those indistinguishable from nonexistent links. A link (i, j) is indistinguishable from another link (u, v) if and only if there is a certain automorphism f such that f(i) = u and f(j) = v, or f(i) = v and f(j) = u. This extremely rigid definition from automorphism-based symmetry makes virtually all real networks have predictability very close to 1, which is indeed meaningless in practice. Using synthetic networks with known prediction limits is a potentially promising way to evaluate predictors as well as indices for predictability (Garcia-Perez et al., 2020; Muscoloni and Cannistraci, 2018b), but the results may be irrelevant to real-world networks.

All above studies target static networks, while a considerable fraction of real networks are time varying (named as temporal networks) (Holme and Saramäki, 2012). Temporal networks are usually more predictable since one can utilize both topological and temporal patterns. Ignoring topological correlations, the randomness, and thus predictability of a time series can be quantified by the entropy rate (Xu et al., 2019). Tang et al. (2020) listed weights of all possible links as an expanded vector with dimension (self-connections are allowed, directionalities are considered), and thus, the evolution of a temporal network can be fully described by a matrix , where T is the number of snapshots under consideration. After , the evolution of a temporal network can be treated as a stochastic vector process, and how to measure the predictability of temporal networks is transformed to a solved problem based on the generalized Lempel-Ziv algorithm (Kontoyiannis et al., 1998). An obvious defect is that the vector dimension is too big, resulting in huge computational complexity. Tang et al. (2020) thus replaced by a much smaller matrix where only links occurring of snapshots are taken into consideration. An intrinsic weakness of Lempel-Ziv algorithm is that it tends to overestimate the predictability, and thus, in many situations the estimated values are very close to 1 (Xu et al., 2019; Song et al., 2010). Tang et al. (2020) proposed a clever method that compares the predictability of the target network with the corresponding null network, and thus, the normalized predictability is able to characterize the topological-temporal regularity in addition to the least predictable one.

Network embedding

A network embedding algorithm will produce a function $f: V \to \mathbb{R}^d$ with $d \ll |V|$ so that every node is represented by a low-dimensional vector (Cui et al., 2018). Then, the existence likelihood of a nonobserved link (u, v) can be estimated by the inner product, the cosine similarity, the Euclidean distance, or the geometrical shortest path of the two learned vectors $f(u)$ and $f(v)$ (Cui et al., 2018; Cannistraci et al., 2013b). Early methods cannot handle large-scale networks because they usually rely on solving for the leading eigenvectors (Tenenbaum et al., 2000; Roweis and Saul, 2000).

Mikolov et al. (2013a, 2013b) proposed a language embedding algorithm named SkipGram that represents every word in a given vocabulary by a low-dimensional vector. Such representation can be obtained by maximizing the co-occurrence probability among words appearing within a window t in a sentence, via some stochastic gradient descent methods. Based on SkipGram, Perozzi et al. (2014) proposed the so-called DeepWalk algorithm, where nodes and truncated random walks are treated as words and sentences. Grover and Leskovec (2016) proposed the node2vec algorithm that learns the low-dimensional representation by maximizing the likelihood of preserving neighborhoods of nodes. Grover and Leskovec argued that the choice of neighborhoods plays a critical role in determining the quality of the representation. Therefore, instead of simple definitions of the neighborhood of an arbitrary node u, such as nodes with distance no more than a threshold to u (like the breadth-first search) and nodes sampled from a random walk starting from u (like the depth-first search), they utilized a flexible neighborhood sampling strategy by biased random walks, which smoothly interpolates between breadth-first search and depth-first search. Considering a random walk that just traversed link (z, v) and now resides at node v, the transition probability from v to any of its immediate neighbors x is proportional to an unnormalized probability $\pi_{vx}$. The node2vec algorithm sets the unnormalized probability as follows:

$$\pi_{vx} = \alpha_{pq}(z, x) \, w_{vx} \qquad \text{(Equation 16)}$$

where $w_{vx}$ is the weight of link (v, x) and

$$\alpha_{pq}(z, x) = \begin{cases} 1/p, & d_{zx} = 0 \\ 1, & d_{zx} = 1 \\ 1/q, & d_{zx} = 2 \end{cases} \qquad \text{(Equation 17)}$$

with $d_{zx}$ being the shortest-path distance between nodes z and x.

Obviously, the sampling strategy in DeepWalk is a special case of node2vec with p = 1 and q = 1. By tuning p and q, node2vec can achieve better performance than DeepWalk in link prediction.
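The biased walk of node2vec can be sketched as a single transition step. The snippet assumes a dictionary-of-sets adjacency, unit weights unless a weight is supplied, and a hypothetical function name; it normalizes the bias-weighted link weights over the neighbors of the current node.

```python
def node2vec_transition(adj, weights, z, v, p=1.0, q=1.0):
    """Transition probabilities from v, given that the walk just traversed (z, v)."""
    unnormalized = {}
    for x in adj[v]:
        if x == z:
            alpha = 1.0 / p        # distance 0 from z: return to the previous node
        elif x in adj[z]:
            alpha = 1.0            # distance 1 from z: stay close to z
        else:
            alpha = 1.0 / q        # distance 2 from z: move outward
        w = weights.get((v, x), weights.get((x, v), 1.0))
        unnormalized[x] = alpha * w
    total = sum(unnormalized.values())
    return {x: pi / total for x, pi in unnormalized.items()}


adj = {0: {1, 2}, 1: {0, 2, 3}, 2: {0, 1}, 3: {1}}
biased = node2vec_transition(adj, {}, z=0, v=1, p=2.0, q=0.5)
uniform = node2vec_transition(adj, {}, z=0, v=1)  # p = q = 1, as in DeepWalk
```

With q < 1 the walk prefers node 3, which lies farther from the previous node, mimicking depth-first exploration; with q > 1 it stays near the previous node.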

Tang et al. (2016) argued that DeepWalk lacks a clear objective function tailored for network embedding and proposed the LINE algorithm that learns node representations on the basis of a carefully designed objective function that preserves both the first-order and second-order proximity. The first-order proximity is captured by the observed links and thus can be formulated as follows:

$$O_1 = -\sum_{(u,v) \in E} w_{uv} \log p_1(u, v) \qquad \text{(Equation 18)}$$

where $w_{uv}$ is the weight of the observed link (u, v) and

$$p_1(u, v) = \frac{1}{1 + \exp\left(-f(u)^T f(v)\right)} \qquad \text{(Equation 19)}$$

describes the likelihood of the existence of (u, v) given the embedding $f$. Of course, one can adopt other alternatives of Equation (19). The second-order proximity assumes that nodes sharing many connections to other nodes are similar to each other. Accordingly, each node is also treated as a specific context and nodes with similar distributions over contexts are assumed to be similar. Then, the second-order proximity can be characterized by the objective function:

$$O_2 = -\sum_{(v,u) \in E} w_{vu} \log p_2(u \mid v) \qquad \text{(Equation 20)}$$

where $p_2(u \mid v)$ denotes the probability that node v will generate a context u, namely

$$p_2(u \mid v) = \frac{\exp\left(f'(u)^T f(v)\right)}{\sum_{z \in V} \exp\left(f'(z)^T f(v)\right)} \qquad \text{(Equation 21)}$$

with $f'(u)$ being the context representation of u. Clearly, $O_2$ is naturally suitable for directed networks. By minimizing $O_1$ and $O_2$, LINE learns two kinds of node representations that, respectively, preserve the first-order and second-order proximity and takes their concatenation as the final representation.
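To make the first-order objective concrete, the following sketch evaluates it for a given embedding, assuming the sigmoid-of-inner-product likelihood. The function name, the dictionary layout, and the toy embeddings are illustrative assumptions; an actual LINE implementation would minimize this quantity by stochastic gradient descent with negative sampling.

```python
import math


def line_first_order_loss(embedding, weighted_links):
    """Negative weighted log-likelihood of the observed links."""
    loss = 0.0
    for (u, v), w in weighted_links.items():
        inner = sum(a * b for a, b in zip(embedding[u], embedding[v]))
        p1 = 1.0 / (1.0 + math.exp(-inner))   # likelihood that (u, v) exists
        loss -= w * math.log(p1)
    return loss


links = {("a", "b"): 2.0}
uninformative = {"a": [0.0, 0.0], "b": [0.0, 0.0]}
aligned = {"a": [1.0, 1.0], "b": [1.0, 1.0]}
loss_flat = line_first_order_loss(uninformative, links)
loss_aligned = line_first_order_loss(aligned, links)
```

Embeddings that place linked nodes close together (large inner product) yield a smaller loss than an uninformative embedding.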

In addition to DeepWalk, LINE, and node2vec, other well-known network embedding algorithms that have been applied in link prediction include DNGR (Cao et al., 2016), SDNE (Wang et al., 2016b), HOPE (Ou et al., 2016), GraphGAN (Wang et al., 2018), and so on. On the one hand, embedding is currently a very hot topic in network science and is thought to be a promising method for link prediction. On the other hand, some very recent empirical studies (Muscoloni et al., 2020; Mara et al., 2020; Ghasemian et al., 2020) involving more than a thousand networks showed negative evidence that network embedding algorithms perform worse than some elaborately designed mechanistic algorithms. This is not bad news because one can expect that some link prediction algorithms will enlighten and energize researchers in network embedding and thus make contributions to other aspects of network analyses like community detection, classification, and visualization.

Another notable embedding method is based on the hyperbolic network model (Krioukov et al., 2010; Papadopoulos et al., 2012), where each node is represented by only two coordinates (i.e., the polar coordinates $(r, \theta)$) in a hyperbolic disk. The hyperbolic network models are very simple yet can reproduce many topological characteristics of real networks, such as sparsity, scale-free degree distribution, clustering, small-world property, community structure, self-similarity, and so on (Krioukov et al., 2010; Papadopoulos et al., 2012; Muscoloni and Cannistraci, 2018a). Papadopoulos et al. (2014) applied the hyperbolic model to the Internet at the Autonomous Systems (AS) level and showed better performance than traditional methods (e.g., CN index and Katz index) in predicting missing links associated with low-degree nodes (see again (Zhu et al., 2012) for biased sampling preferring low-degree nodes). Wang et al. (2016c) proposed a link prediction algorithm for networks with community structure based on hyperbolic embedding, showing good performance for community networks with power-law degree distributions. Muscoloni and Cannistraci proposed the so-called nonuniform popularity-similarity-optimization (nPSO) model as a generative model to grow random networks embedded in the hyperbolic space (Muscoloni and Cannistraci, 2018a), and they leveraged the nPSO model as a synthetic benchmark for link prediction algorithms, showing that the algorithm only accounting for hyperbolic distance does not perform well in the presence of communities (Muscoloni and Cannistraci, 2018b). They further proposed a variant of geometric embedding named minimum curvilinear automata (MCA) (Muscoloni and Cannistraci, 2018c), whose link prediction accuracy is higher than the simple hyperbolic distance (Muscoloni and Cannistraci, 2018b) but lower than coalescent embedding in hyperbolic space (Muscoloni et al., 2017). Kitsak et al. (2020) proposed an embedding algorithm named HYPERLINK in hyperbolic space, whose goal is to obtain more accurate prediction of missing links, so that HYPERLINK performs better than previous hyperbolic embedding algorithms (most of these algorithms are designed to reproduce topological features, not to predict missing links). HYPERLINK is often competitive with other well-known link predictors, and it is particularly good at predicting missing links that are really hard to predict.

Matrix completion

Matrix completion aims to reconstruct a target matrix, given a subset of known entries. Since links can be fully conveyed by the adjacency matrix A, it is natural to regard link prediction as a matrix completion task. Denote by $\kappa$ the set of node pairs corresponding to known entries in A that can be utilized in the matrix completion task. In most studies, $\kappa$ contains only observed links, while we should be aware that $\kappa$ can also contain some known absent links. The matrix completion problem can be formulated in line with supervised learning, as follows:

$$\min_{\theta} \frac{1}{|\kappa|} \sum_{(i,j) \in \kappa} \ell\left(a_{ij}, \hat{a}_{ij}(\theta)\right) + \Omega(\theta) \qquad \text{(Equation 22)}$$

where $\theta$ is the parameter vector, $\hat{a}_{ij}(\theta)$ is the predicted value of the model, $\ell(\cdot, \cdot)$ is a loss function, and $\Omega(\theta)$ is a regularization term preventing overfitting. The most frequently used loss functions are the squared loss $\ell(a, \hat{a}) = (a - \hat{a})^2$ and the logistic loss $\ell(a, \hat{a}) = \log\left(1 + e^{-a\hat{a}}\right)$ (with labels $a \in \{-1, +1\}$).

Matrix factorization is a very popular method for matrix completion, which has already achieved great success in a closely related domain, the design of recommender systems (Koren et al., 2009). We consider a simple network with symmetric A and assume A can be approximately factorized as $A \approx UU^T$ with $U \in \mathbb{R}^{N \times d}$ and $d \ll N$; then, we need to solve the following optimization problem:

$$\min_{U} \frac{1}{|\kappa|} \sum_{(i,j) \in \kappa} \ell\left(a_{ij}, u_i^T u_j\right) + \Omega(U) \qquad \text{(Equation 23)}$$

where $u_i$ and $u_j$ are the ith and jth rows of U, respectively, written as column vectors. Notice that $u_i^T$ is the transpose of $u_i$, not the ith row of $U^T$. Though without topological interpretation, $u_i$ can be treated as a lower-dimensional representation of node i, and matrix factorization can also be considered as a kind of matrix embedding algorithm (Qiu et al., 2019). If we adopt the squared loss function and the Frobenius norm for $\Omega(U)$, then we get a specific optimization problem:

$$\min_{U} \frac{1}{|\kappa|} \sum_{(i,j) \in \kappa} \left(a_{ij} - u_i^T u_j\right)^2 + \lambda \|U\|_F^2 \qquad \text{(Equation 24)}$$

where $\lambda$ is a tradeoff parameter. Menon and Elkan (2011) suggested that one can directly optimize for AUC on the training set, given some known absent links. Accordingly, the objective function Equation (23) can be rewritten in terms of AUC as follows:

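$$\min_{U} \frac{1}{|\kappa^+||\kappa^-|} \sum_{(i,j) \in \kappa^+} \sum_{(k,l) \in \kappa^-} \ell\left(1, u_i^T u_j - u_k^T u_l\right) + \Omega(U) \qquad \text{(Equation 25)}$$

where $\kappa^+$ and $\kappa^-$ are the sets of known present and known absent links, respectively. Clearly, $\kappa^+ \cup \kappa^- = \kappa$ and $\kappa^+ \cap \kappa^- = \emptyset$. Menon and Elkan (2011) showed that the usage of an AUC-based loss function can improve the AUC value by around 10% compared with a routine loss function like Equation (24).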
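The squared-loss factorization with a Frobenius-norm regularizer can be sketched with plain gradient descent. The sketch below assumes an objective of the form mean squared error over known entries plus λ‖U‖²_F. The function names, learning rate, number of epochs, and the toy network of two disjoint triangles are illustrative choices, not those of Menon and Elkan (2011).

```python
import random


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def objective(U, known, lam):
    """Mean squared error over known entries plus lam * squared Frobenius norm."""
    fit = sum((a - dot(U[i], U[j])) ** 2 for (i, j), a in known.items()) / len(known)
    return fit + lam * sum(x * x for row in U for x in row)


def factorize(n, known, d=2, lam=0.01, lr=0.2, epochs=1000, seed=0):
    """known maps node pairs (i, j) with i != j to their known entries a_ij."""
    rng = random.Random(seed)
    U = [[0.1 * rng.gauss(0.0, 1.0) for _ in range(d)] for _ in range(n)]
    for _ in range(epochs):
        grad = [[2.0 * lam * x for x in row] for row in U]  # regularizer gradient
        for (i, j), a in known.items():
            err = dot(U[i], U[j]) - a
            for t in range(d):
                grad[i][t] += 2.0 * err * U[j][t] / len(known)
                grad[j][t] += 2.0 * err * U[i][t] / len(known)
        U = [[x - lr * g for x, g in zip(row, g_row)] for row, g_row in zip(U, grad)]
    return U


# Known entries: every pair of 6 nodes, with links forming two disjoint triangles.
triangle_links = {(0, 1), (0, 2), (1, 2), (3, 4), (3, 5), (4, 5)}
known = {(i, j): (1.0 if (i, j) in triangle_links else 0.0)
         for i in range(6) for j in range(i + 1, 6)}
U_init = factorize(6, known, epochs=0)
U = factorize(6, known)
```

After training, the inner product of two rows of `U` serves as the predicted value for the corresponding node pair; in practice, stochastic gradient descent or alternating least squares would replace the full-batch loop.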

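The factorization in Equation (24) is easily extended to directed networks (Menon and Elkan, 2011), bipartite networks (Natarajan and Dhillon, 2014), temporal networks (Ma et al., 2018), and so on. For example, if $A$ is asymmetric, we can replace $UU^{T}$ by $U\Lambda U^{T}$ with $\Lambda\in\mathbb{R}^{d\times d}$, and thus, Equation (24) can be extended to the following:

$$\min_{U,\Lambda}\ \frac{1}{|\kappa|}\sum_{(i,j)\in\kappa}\left(a_{ij}-\mathbf{u}_i^{T}\Lambda\mathbf{u}_j\right)^{2}+\lambda\left(\|U\|_F^{2}+\|\Lambda\|_F^{2}\right). \tag{26}$$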

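Analogously, for bipartite networks like gene-disease associations (Natarajan and Dhillon, 2014) and user-product purchases (Shang et al., 2010), if $A\in\mathbb{R}^{M\times N}$, then we can replace $UU^{T}$ by $WH^{T}$, where $W\in\mathbb{R}^{M\times d}$ and $H\in\mathbb{R}^{N\times d}$. Accordingly, we get the optimization problem for bipartite networks as follows:

$$\min_{W,H}\ \frac{1}{|\kappa|}\sum_{(i,j)\in\kappa}\left(a_{ij}-\mathbf{w}_i^{T}\mathbf{h}_j\right)^{2}+\lambda\left(\|W\|_F^{2}+\|H\|_F^{2}\right), \tag{27}$$

where $\mathbf{w}_i$ and $\mathbf{h}_j$ are the ith and jth rows of $W$ and $H$, respectively. More details can be found in Menon and Elkan (2011), Natarajan and Dhillon (2014), and Ma et al. (2018).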

The explicit features of nodes, such as tags associated with users and products (Zhang et al., 2011), can also be incorporated in the matrix factorization framework. Menon and Elkan (2011) suggested a direct combination of explicit features and latent features learned from the observed topology. Denoting by $\mathbf{x}_i$ the vector of explicit features of node i, the predicted values $\hat{a}_{ij}$ in Equation (22) are then replaced by

$$\hat{a}_{ij}+\boldsymbol{\omega}^{T}\left(\mathbf{x}_i+\mathbf{x}_j\right), \tag{28}$$

where $\boldsymbol{\omega}$ is a vector of parameters. Experiments showed that the incorporation can considerably improve the prediction accuracy (Menon and Elkan, 2011). Jain and Dhillon (2013) proposed a so-called inductive matrix completion (IMC) algorithm that uses explicit features to reduce the computational complexity. In IMC, the predicted value can be expressed as $\hat{a}_{ij}=\mathbf{x}_i^{T}QQ^{T}\mathbf{x}_j$, where $\mathbf{x}_i\in\mathbb{R}^{s}$ is the vector of i's explicit features, and $Q\in\mathbb{R}^{s\times t}$ is a low-rank matrix with small t, which describes the latent relationships between explicit features and topological structure. Q can be learned from observed links by the following optimization problem:

$$\min_{Q}\ \frac{1}{|\kappa|}\sum_{(i,j)\in\kappa}\ell\left(a_{ij},\mathbf{x}_i^{T}QQ^{T}\mathbf{x}_j\right)+\Omega(Q). \tag{29}$$

Notice that, in Equation (24), the number of parameters to be learned is $Nd$, while in Equation (29), we only need to learn $st$ parameters. The numbers of latent features (d and t) could be more or less the same as they are largely dependent on the topological structure, while the number of nodes N is usually much larger than the number of explicit features s. Therefore, the computational complexity of IMC should be much lower than that of brute-force factorization methods. The original IMC was proposed for bipartite networks (Jain and Dhillon, 2013) and has already found successful applications in the design of recommender systems (Jain and Dhillon, 2013) and the prediction of gene- and RNA-disease associations (Natarajan and Dhillon, 2014; Lu et al., 2018; Chen et al., 2018).

Pech et al. (2017) argued that low rank is the most critical property in matrix completion. They assumed that the observed network can be decomposed into two parts as $A=X^{*}+E$, where $X^{*}$ is called the backbone preserving the network organization pattern, and $E$ is a noise matrix, in which positive and negative entries are spurious and missing links, respectively. Pech et al. (2017) considered only two simple properties: the low rank of $X^{*}$ and the sparsity of $E$. Accordingly, $X^{*}$ and $E$ can be determined by solving the following optimization problem:

$$\min_{X^{*},E}\ \operatorname{rank}(X^{*})+\lambda\|E\|_0\quad\text{subject to}\quad A=X^{*}+E, \tag{30}$$

where $\|E\|_0$ is the $\ell_0$-norm counting the number of nonzero entries of $E$. The predicted links can be obtained by sorting entries in $X^{*}$ that correspond to zero entries in $A$. This method is straightforwardly named the low rank (LR) algorithm. Although simple, LR performs better than well-known similarity-based algorithms reported in Zhou et al. (2009) and Cannistraci et al. (2013a), the hierarchical structure model (Clauset et al., 2008), and the stochastic block model (Guimerà and Sales-Pardo, 2009), while performing slightly worse than LOOP (Pan et al., 2016), the structural perturbation model (Lü et al., 2015), and Cannistraci-Hebb automata (Muscoloni et al., 2020).

Ensemble learning

In an early survey (Lü and Zhou, 2011), we noticed the low stability of individual link predictors and thus suggested ensemble learning as a powerful tool to integrate them. Ensemble learning is a popular method in machine learning, which constructs and integrates a number of individual predictors to achieve better algorithmic performance (Zhou, 2012). Roughly speaking, ensemble learning techniques can be divided into two classes: the “parallel ensemble” where individual predictors do not strongly depend on each other and can be implemented simultaneously (e.g., bagging (Breiman, 1996) and random forests (Breiman, 2001)) and the “sequential ensemble” where the integration of individual predictors has to be implemented in a sequential way (e.g., boosting (Freund and Schapire, 1997) and stacking (Wolpert, 1992)). In the following, we will, respectively, introduce how parallel ensemble and sequential ensemble are applied to link prediction.

Given an observed network, an individual link predictor will produce a ranking of all unobserved links. Pujari and Kanawati (2012) proposed an aggregation approach on the rankings produced by individual algorithms. If there are R rankings produced by R individual predictors, an unobserved link e's Borda count $B_k(e)$ in the kth ranking can be defined as the number of links ranked ahead of e (there are many variants of Borda count and here we use the simplest one). Pujari and Kanawati used a weighted aggregation to obtain the final score of any unobserved link e, as follows:

$$B(e)=\sum_{k=1}^{R}w_k B_k(e), \tag{31}$$

where $w_k$ is set to be proportional to the precision of the kth predictor trained by the observed network. Clearly, smaller $B(e)$ indicates higher existence likelihood. In addition to rank aggregation, a similar weighting technique can also be applied in integrating likelihood scores. If every unobserved link is assigned a score (higher score indicates higher existence likelihood) by each predictor, then the final score of any unobserved link e can be defined in a weighted form as follows:

$$s(e)=\sum_{k=1}^{R}w_k s_k(e), \tag{32}$$

where $s_k(e)$ is the score from the kth predictor. Different from rank aggregation, $s_k(e)$ should be normalized before the weighted sum to ensure scores from different predictors are comparable. An alternative aggregation method is the ordered weighted averaging (OWA) (Yager, 1988), where the R predictors are ordered according to their importance to the final prediction, as $s_{(1)},s_{(2)},\ldots,s_{(R)}$, and then the final score of any unobserved link e is

$$s(e)=\sum_{r=1}^{R}w_r s_{(r)}(e), \tag{33}$$

where $w_r\in[0,1]$ and $\sum_{r=1}^{R}w_r=1$. Without prior information, the most usual way to determine the weights is using the maximum entropy method, which maximizes $-\sum_{r=1}^{R}w_r\ln w_r$ subject to $\frac{1}{R-1}\sum_{r=1}^{R}(R-r)w_r=\alpha$ and $\sum_{r=1}^{R}w_r=1$, where $\alpha$ is a tunable parameter measuring the extent to which the ensemble (33) is like an or operation. If $\alpha$ is very large (close to 1), then $w_1\approx 1$ and $w_r\approx 0$ for $r>1$, that is to say, only the first predictor works. He et al. (2015) applied OWA to aggregate nine local similarity indices. These indices are ordered according to their normalized values (irrelevant to their qualities), which is essentially unreasonable. Therefore, although He et al. (2015) reported considerable improvement, later experiments (Wu et al., 2019; Zhang et al., 2020) indicated that the method in He et al. (2015) does not work well because the position of a predictor is irrelevant to its quality. In contrast, if the order is relevant to the predictors' qualities (e.g., according to their precisions trained by the target network), OWA will bring remarkable improvement compared with individual predictors (Wu et al., 2019).

As some link prediction algorithms scale superlinearly with network size, Duan et al. (2017) argued that solving smaller problems multiple times is more efficient than solving a single large problem. They considered a latent factor model (similar to the one described by Equation (23)) and developed several ways for the bagging decomposition, such as bagging with random nodes together with their immediate neighbors and bagging preferring dense components. They showed that those bagging techniques can largely reduce computational complexity without sacrificing prediction accuracy.

Considering the family of stochastic block models (Guimerà and Sales-Pardo, 2009), Valles-Catala et al. (2018) showed that the integration (via Markov Chain Monte Carlo sampling according to Bayesian rules) of individually less plausible models can result in higher predictive performance than the single most plausible model.
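The weighted rank aggregation of Equation (31) and the OWA combination of Equation (33) can be sketched as follows. This is a minimal illustration under stated assumptions: links are represented as node-pair tuples, the predictor weights are supplied by the caller, and the maximum-entropy OWA weights are computed with the standard orness measure of Yager (1988), for which the solution is a geometric sequence found here by bisection. All function names are hypothetical.

```python
import math


def aggregate_ranks(rankings, weights):
    """Weighted Borda aggregation: smaller totals mean higher likelihood."""
    total = {}
    for ranking, w in zip(rankings, weights):
        # 'ahead' is the Borda count: number of links ranked ahead of 'link'.
        for ahead, link in enumerate(ranking):
            total[link] = total.get(link, 0.0) + w * ahead
    return sorted(total, key=total.get)


def maxent_owa_weights(R, alpha, iters=200):
    """Maximum-entropy OWA weights with orness alpha in (0, 1).

    The maximizer is geometric, w_r proportional to exp(beta * (R - r));
    beta is located by bisection on the orness constraint.
    """
    def weights(beta):
        expo = [beta * (R - r) for r in range(1, R + 1)]
        top = max(expo)  # subtract the maximum for numerical stability
        raw = [math.exp(e - top) for e in expo]
        z = sum(raw)
        return [x / z for x in raw]

    def orness(w):
        return sum((R - r) * w[r - 1] for r in range(1, R + 1)) / (R - 1)

    lo, hi = -50.0, 50.0
    for _ in range(iters):
        mid = (lo + hi) / 2
        if orness(weights(mid)) < alpha:
            lo = mid  # orness grows with beta, so move right
        else:
            hi = mid
    return weights((lo + hi) / 2)


def owa_score(scores_by_importance, weights):
    """Scores listed from the most to the least important predictor."""
    return sum(w * s for w, s in zip(weights, scores_by_importance))


# Three hypothetical predictors ranking three unobserved links.
rankings = [
    [(1, 2), (1, 3), (2, 3)],
    [(1, 3), (1, 2), (2, 3)],
    [(1, 3), (2, 3), (1, 2)],
]
final_ranking = aggregate_ranks(rankings, weights=[0.5, 0.3, 0.2])
uniform = maxent_owa_weights(3, 0.5)
skewed = maxent_owa_weights(3, 0.95)
```

With orness 0.5 the weights are uniform, while an orness close to 1 concentrates nearly all weight on the first (most important) predictor, in line with the limiting behavior described above.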

Boosting is a typical sequential ensemble algorithm that trains a base learner from the initial training set and adjusts the weights of instances in the training set (wrongly predicted instances gain weight while easily predicted instances lose weight) to train the next learner. This operation continues until some preset conditions are reached. The most representative boosting algorithm is AdaBoost (Freund and Schapire, 1997), which was originally designed for binary classification and thus can be directly applied in link prediction. AdaBoost is an additive model as

$$H(x)=\sum_{t=1}^{T}\alpha_t h_t(x), \tag{34}$$

where $h_t$ is the tth base learner, $\alpha_t$ is a scalar coefficient, and T is a preset terminal time. H aims to minimize the expected value of an exponential loss function:

$$\ell_{\exp}(H\mid W)=\mathbb{E}_{x\sim W}\left[e^{-f(x)H(x)}\right], \tag{35}$$

where $f(x)\in\{-1,+1\}$ denotes the true class of the instance x and W is the original weight distribution. Without specific requirements, we usually set $W(x)=1/m$ for every instance x where m is the number of instances. We set $W_1=W$ and learn $h_1$ from $W_1$, and then, $\alpha_1$ is determined by minimizing $\ell_{\exp}(\alpha_1 h_1\mid W_1)$. The weight of an instance x in the second step is updated as follows:

$$W_2(x)=\frac{W_1(x)\,e^{-\alpha_1 f(x)h_1(x)}}{Z_1}, \tag{36}$$

where $Z_1$ is the normalization factor. Obviously, if the instance x can be correctly classified, its weight will decrease; otherwise, its weight will increase. This process iterates until reaching the terminal time T. When applying AdaBoost in link prediction, we need to be aware of the following three issues. (i) The base learner should be sensitive to $W_t$, so we cannot use unsupervised algorithms or supervised algorithms insensitive to $W_t$. (ii) In addition to positive instances (observed links), negative instances should be sampled from unobserved links. Though this introduces some noise, the influence is negligible if the network is sparse. (iii) The negative instances should be undersampled to keep the data balanced. Comar et al. (2011) proposed the so-called LinkBoost algorithm, which is an extension of AdaBoost to link prediction with a typical matrix factorization model being the base learner. Instead of undersampling negative instances, they suggested a cost-sensitive loss function that penalizes misclassifying links as nonlinks about N times more heavily than misclassifying nonlinks as links. They further considered a degree-sensitive loss function that penalizes misclassification of links between low-degree nodes more than between high-degree nodes.
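The reweighting loop described above can be sketched in a few lines. This is a minimal illustration, not LinkBoost: it assumes weighted decision stumps as the base learner (chosen because they are sensitive to the instance weights), classes encoded as +1 and -1, and the standard closed form $\alpha_t=\frac{1}{2}\ln\frac{1-\epsilon_t}{\epsilon_t}$ that minimizes the exponential loss for a base learner with weighted error $\epsilon_t$. The feature vectors and all function names are hypothetical.

```python
import math


def stump_output(x, f, thr, sign):
    """Decision stump: 'sign' if feature f reaches the threshold, else -sign."""
    return sign if x[f] >= thr else -sign


def train_stump(X, y, w):
    """Return (weighted error, feature, threshold, sign) of the best stump."""
    best = None
    for f in range(len(X[0])):
        for thr in sorted({x[f] for x in X}):
            for sign in (1, -1):
                err = sum(wi for xi, yi, wi in zip(X, y, w)
                          if stump_output(xi, f, thr, sign) != yi)
                if best is None or err < best[0]:
                    best = (err, f, thr, sign)
    return best


def adaboost(X, y, T=10):
    """Train an additive model H(x) = sum_t alpha_t * h_t(x)."""
    m = len(X)
    w = [1.0 / m] * m  # uniform initial weight distribution
    model = []
    for _ in range(T):
        err, f, thr, sign = train_stump(X, y, w)
        if err >= 0.5:  # base learner no better than chance: stop
            break
        err = min(max(err, 1e-12), 1 - 1e-12)  # avoid log(0)
        alpha = 0.5 * math.log((1 - err) / err)
        model.append((alpha, f, thr, sign))
        # Correctly classified instances lose weight, the others gain weight.
        w = [wi * math.exp(-alpha * yi * stump_output(xi, f, thr, sign))
             for xi, yi, wi in zip(X, y, w)]
        z = sum(w)  # normalization factor
        w = [wi / z for wi in w]
    return model


def classify(model, x):
    """Sign of the additive model; ties are resolved as +1."""
    score = sum(a * stump_output(x, f, thr, s) for a, f, thr, s in model)
    return 1 if score >= 0 else -1


# Hypothetical one-feature data that no single stump can separate.
X = [[0], [1], [2], [3], [4], [5]]
y = [1, 1, -1, -1, 1, 1]
model = adaboost(X, y, T=3)
```

In a link prediction setting, each instance would be a node pair described by features such as similarity scores, with observed links as positives and sampled unobserved pairs as negatives.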

Stacking (Wolpert, 1992) is another powerful approach in sequential ensemble. It trains a group of primary learners from the initial training set and uses the outputs of primary learners as input features to train the secondary learner that provides the final prediction. If both primary learners (i.e., input features) and training instances are directly generated by the same training set, the risk of overfitting will be very high. Therefore, the original training set D, usually containing similar numbers of positive and negative instances for data balance, is divided into J sets of the same size as $D_1,D_2,\ldots,D_J$. Denoting by $h_r^{(j)}$ the primary learner using the rth algorithm and trained from the jth fold of the training set $\bar{D}_j=D\setminus D_j$, for each instance $x\in D_j$, its feature vector is $\mathbf{z}(x)=(z_1(x),z_2(x),\ldots,z_R(x))$, where $z_r(x)=h_r^{(j)}(x)$ and R is the number of primary algorithms. This J-fold division ensures all features of any instance x are obtained by primary learners trained without x. Some scientists have already used similar techniques (e.g., using various regressions to integrate results from primary predictors and other features (Zhang et al., 2020; Zhang, 2017; Fire et al., 2013)), but they were not aware of the stacking model and did not employ any measures to avoid overfitting. Li et al. (2020) proposed a stacking model for link prediction, which uses logistic regression and XGBoost to learn 4 similarity indices. Their method is inspiring, but they only considered 4 primary predictors and tested on two very small networks, with some experimental results (e.g., the AUC values of the CN index) far different from well-known results, and thus, the reported results and conclusion are questionable. Ghasemian et al. (2020) proposed a stacking model that considers 203 primary link predictors on 550 disparate networks. Using a standard supervised random forest algorithm (Breiman, 2001) as the secondary learner, Ghasemian et al. (2020) argued that the stacking model is remarkably superior to individual predictors for real networks and can approach the theoretical optima for synthetic networks with known highest prediction accuracies. In addition, they showed that social networks are more predictable than biological and technological networks. However, a recent large-scale experiment (Muscoloni and Cannistraci, 2021) suggested that the above stacking model does not perform better than SPM (Lü et al., 2015) and Cannistraci-Hebb automata (Muscoloni et al., 2020). Wu et al. (2019) proposed an alternative sequential ensemble strategy called network reconstruction, which reconstructs the network via one link prediction algorithm and predicts missing links by another prediction algorithm.
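The J-fold construction of stacking features can be sketched as below. This is a minimal illustration under assumptions: instances are (node pair, label) tuples, folds are formed by index modulo J, and the two primary learners are deliberately trivial, hypothetical stand-ins for real link predictors. The memorizing learner is included only to make data leakage visible.

```python
def out_of_fold_features(D, fit_fns, J):
    """Return Z where Z[i] holds the R primary-learner outputs for D[i],
    each produced by a learner trained without the fold containing D[i]."""
    Z = [None] * len(D)
    for j in range(J):
        held_out = range(j, len(D), J)  # indices of fold D_j
        rest = [D[i] for i in range(len(D)) if i % J != j]  # D without D_j
        learners = [fit(rest) for fit in fit_fns]
        for i in held_out:
            x, _label = D[i]
            Z[i] = [h(x) for h in learners]
    return Z


def fit_memorizer(train):
    """Hypothetical primary learner that only recalls training instances."""
    seen = {x: label for x, label in train}
    return lambda x: float(seen.get(x, 0))


def fit_mean_label(train):
    """Hypothetical primary learner that outputs the fraction of positives."""
    mean = sum(label for _, label in train) / len(train)
    return lambda x: mean


# Balanced toy training set: node pairs labeled 1 (link) or 0 (nonlink).
D = [((0, 1), 1), ((0, 2), 0), ((1, 2), 1),
     ((1, 3), 0), ((2, 3), 1), ((0, 3), 0)]
labels = [label for _, label in D]

Z = out_of_fold_features(D, [fit_memorizer, fit_mean_label], J=3)

# Without the fold division, the memorizer simply reproduces the labels.
leaky = [fit_memorizer(D)(x) for x, _ in D]
```

Here the out-of-fold memorizer feature is uninformative for every instance, whereas the leaky version equals the labels exactly, which is the overfitting risk that the J-fold division is meant to remove. A secondary learner would then be trained on the pairs of `Z` and `labels`.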

Discussion

In this review, to improve the readability, we classify representative works in the last decade into five groups. Of course, some novel and interesting methods, such as evolutionary algorithm (Bliss et al., 2014), ant colony approach (Sherkat et al., 2015), structural Hamiltonian analysis (Pan et al., 2016), and leading eigenvector control (Lee et al., 2021), do not belong to any of the above groups, and readers are encouraged to read other recent surveys (Wang et al., 2015; Martínez et al., 2016; Kumar et al., 2020) as complements of the present review.

Computational complexities of some representative algorithms mentioned in this review are reported in Table 1. Those algorithms are roughly categorized into three classes according to their computational complexities: the ones of "high" scalability can be applied to large-scale networks with millions of nodes, the ones of "medium" scalability can be applied to mid-sized networks with tens to hundreds of thousands of nodes, and the ones of "low" scalability can only deal with small networks with up to a few thousand nodes using a common desktop computer. Given the size and sparsity of the target network, as well as the available computational power, this table helps readers find suitable algorithms.

Table 1.

Computational complexities of some representative link prediction algorithms

| Algorithm | References | Complexity | Scalability |
|---|---|---|---|
| CN | Liben-Nowell and Kleinberg (2007) | | High |
| RA | Zhou et al. (2009) | | High |
| LP | Zhou et al. (2009) | | Medium |
| CH | Muscoloni et al. (2018) | | Medium |
| L3 | Kovács et al. (2019) | | Medium |
| SPM | Lü et al. (2015) | | Medium |
| DeepWalk | Perozzi et al. (2014) | | Medium |
| LINE | Tang et al. (2016) | | Medium |
| NetSMF | Qiu et al. (2019) | | Medium |
| IMC | Jain and Dhillon (2013) | | Medium |
| LR | Pech et al. (2017) | | Medium |
| HSM | Clauset et al. (2008) | | Low |
| SBM | Guimerà and Sales-Pardo (2009) | | Low |
| LOOP | Pan et al. (2016) | | Low |

is the number of nodes, is the average degree, is usually a large number depending on the number of random walks, the length of each walk, and the implemented deep learning model, is the number of iterations, and is the representation dimension or the preseted rank for matrix factorization and low-rank decomposition.

Very recently, a notable issue is the application of neural networks in link prediction, which may be partially facilitated by the dramatic advances of deep learning techniques. Zhang and Chen (2017) trained a fully connected neural network on the adjacency matrices of enclosing subgraphs (with a fixed size) of target links. They applied a variant of the Weisfeiler-Lehman algorithm to determine the order of nodes in each adjacency matrix, ensuring that nodes with closer distances to the target link are ranked in higher positions. Zhang and Chen (2018) further proposed a novel framework based on graph neural networks, which can learn multiple types of information, including general structural features and latent and explicit node features. In this framework, a node's order in the enclosing subgraph can be determined only by its closeness to the target link and the subgraph size can be flexible. Wang et al. (2020) directly represented the adjacency matrix of a network as an image and then learned hierarchical feature representations by training generative adversarial networks. Some preliminary experimental results suggested that the performance of those methods (Zhang and Chen, 2017, 2018; Wang et al., 2020) is highly competitive with many other state-of-the-art algorithms. Despite the promising results, at present, features and models are simply pieced together without intrinsic connections. The above pioneering works (Zhang and Chen, 2017, 2018; Wang et al., 2020) provide a good start, but we still need in-depth and comprehensive analyses to push forward related studies.

Although most link prediction algorithms only account for structural information, attributes of nodes (e.g., expression levels of genes (Natarajan and Dhillon, 2014) and tags of citation and social networks (Zhang et al., 2011; Wang et al., 2019)) can be utilized to improve the prediction performance. It is easy to treat attributes as independent information additional to structural features and work out a method that directly combines the two, while what is lacking but valuable is to uncover nontrivial relationship between attributes and structural roles and then design more meaningful algorithms. Beyond explicit attributes, we should also pay attention to dynamical information. It is known to us that limited time series obtained from some dynamical processes can be used to reconstruct network topology (Shen et al., 2014) while even a small fraction of missing links in modeling dynamical processes can lead to remarkable biases (Nicolaou and Motter, 2020); however, studies about how to make use of the correlations between topology and dynamics to predict missing links or how to take advantage of link prediction algorithms to improve estimates of dynamical parameters are rare.

By an elaborately designed model, Gu et al. (2017) showed that there is no ground truth in ranking influential spreaders even with a given dynamics. Peel et al. (2017) proved that there is no ground truth and no free lunch for community detection. The latter implies that no detection algorithm can be optimal on all inputs. Fortunately, we have ground truth in link prediction; however, extensive experiments (Ghasemian et al., 2020) also imply that no known link predictor performs best or worst across all inputs. If link prediction is a no-free-lunch problem, then no single algorithm performs better or worse than any other when applied to all possible inputs. This raises the question of whether the study of prediction algorithms is valuable. The answer is of course YES (Guimerà, 2020) because we actually have free lunches: what we are interested in, the real networks, have statistics far different from those of all possible networks. As Ghasemian et al. (2020) argued that the ensemble models are usually superior to individual algorithms, a related question is whether the study of individual algorithms is valuable. The answer is still YES. Firstly, a recent large-scale experimental study (Muscoloni and Cannistraci, 2021) indicated that the performance of the stacking model is worse than that of elaborately designed individual algorithms, like SPM (Lü et al., 2015) and Cannistraci-Hebb automata (Muscoloni et al., 2020). Secondly, an individual algorithm could be highly cost-effective for its competitive performance and low complexity in time and space. Above all, individual algorithms, especially the mechanistic algorithms, may provide significant insights about network organization and evolution. In some real applications like friend recommendation, predictions with explanations are more acceptable (Barbieri et al., 2014), and such explanations cannot be obtained by ensemble learning. In addition, an alogical reason is that some elegant individual models (e.g., HSM (Clauset et al., 2008), SBM (Guimerà and Sales-Pardo, 2009), SPM (Lü et al., 2015), HYPERLINK (Kitsak et al., 2020), etc.) bring us inimitable esthetic perception that cannot be experienced elsewhere.

Along with fruitful algorithms proposed recently, the design of novel and effective algorithms for general networks is increasingly hard. We expect a larger fraction of algorithms in the future studies will be designed for networks of particular types (e.g., directed networks (Zhang et al., 2013), weighted networks (Zhao et al., 2015), multilayer networks (Bacco et al., 2017), temporal networks (Bu et al., 2019), hypergraphs and bipartite networks (Daminelli et al., 2015; Benson et al., 2018), networks with negative links (Leskovec et al., 2010; Tang et al., 2015), etc.) and networks with domain knowledge (e.g., drug-target interactions (Wu et al., 2018), disease-associated relations (Zeng et al., 2018), protein-protein interactions (Kovács et al., 2019; Lei and Ruan, 2013), criminal networks (Berlusconi et al., 2016), citation networks (Liu et al., 2019), academic social networks (Kong et al., 2019), knowledge graphs (Nickel et al., 2015), etc.). We should take serious consideration about properties and requirements of target networks and domains in the algorithm design, instead of straightforward (and thus less valuable) extensions of general algorithms. For example, if we attempt to recommend friends in an online social network based on link prediction (Aiello et al., 2012b), we need to consider how to explain our recommendations to improve the acceptance rate (Barbieri et al., 2014), how to use the acceptance/rejection information to promote the prediction accuracy (Wu et al., 2013), and how to avoid recommending bots to real users (Aiello et al., 2012a). These considerations will bring fresh challenges in link prediction.

Early studies often compared very few algorithms on several small networks according to one or two metrics. Recent large-scale experiments (Mara et al., 2020; Ghasemian et al., 2020; Muscoloni et al., 2020; Muscoloni and Cannistraci, 2021; Zhou et al., 2021) indicated that the above methodology may result in misleading conclusions. Future studies ought to implement systematic analyses involving more synthetic and real networks, benchmarks, state-of-the-art algorithms, and metrics. Researchers can find benchmark datasets for networks from Open Graph Benchmark (OGB, ogb.stanford.edu), Pajek (mrvar.fdv.uni-lj.si/pajek), and Link Prediction Benchmarks (LPB, www.domedata.cn/LPB). If relevant results cannot be published in an article with limited space, they should be made public (preferably together with data and code) on accessible websites like GitHub, OGB, and LPB.

Lastly, we would like to emphasize that the soul of a network lies in its links instead of nodes; otherwise, we should pay more attention to set theory rather than graph theory. Therefore, in network science, link prediction is a paradigmatic and fundamental problem with lasting attraction and vitality. Beyond an algorithm predicting missing and future links, link prediction is also a powerful analysis tool, which has already been utilized in evaluating and inferring network evolving mechanisms (Wang et al., 2012; Zhang et al., 2015; Zhang, 2017), testing privacy-protection algorithms (as an attacking method) (Xian et al., 2021), evaluating and designing network embedding algorithms (Dehghan-Kooshkghazi et al., 2021; Gu et al., 2021), and so on. Though the last decade has witnessed plentiful and substantial achievements, the study of link prediction is just unfolding and more efforts are required toward a full picture of how links emerge and vanish.

Acknowledgments

I acknowledge Yan-Li Lee for valuable discussion and assistance. This work was partially supported by the National Natural Science Foundation of China (Grant Nos. 11975071 and 61673086), the Science Strength Promotion Programmer of University of Electronic Science and Technology of China under Grant No. Y03111023901014006, and the Fundamental Research Funds for the Central Universities of China under Grant No. ZYGX2016J196.

Author contributions

T.Z. conceived and designed the project. T.Z. wrote the manuscript.

Declaration of interests

The author declares no competing financial interests.

References

- Aiello L.M., Deplano M., Schifanella R., Ruffo G. Proceedings of the international AAAI conference on web and social media. AAAI Press; 2012a. People are strange when you're a stranger: Impact and influence of bots on social networks; pp. 10–17.

- Aiello L.M., Barrat A., Schifanella R., Cattuto C., Markines B., Menczer F. Friendship prediction and homophily in social media. ACM Trans. Web. 2012b;6:9.

- Bacco C. De, Power E.A., Larremore D.B., Moore C. Community detection, link prediction, and layer interdependence in multilayer networks. Phys. Rev. E. 2017;95:042317. doi: 10.1103/PhysRevE.95.042317.

- Barabasi A.-L. Cambridge University Press; 2016. Network Science.

- Barbieri N., Bonchi F., Manco G. Proceedings of the 20th ACM SIGKDD international conference on knowledge discovery and data mining. ACM Press; 2014. Who to follow and why: link prediction with explanations; pp. 1266–1275.

- Benson A.R., Abebe R., Schaub M.T., Jadbabaie A., Kleinberg J. Simplicial closure and higher-order link prediction. PNAS. 2018;115:E11221–E11230. doi: 10.1073/pnas.1800683115.

- Berlusconi G., Calderoni F., Parolini N., Verani M., Piccardi C. Link prediction in criminal networks: a tool for criminal intelligence analysis. PLoS One. 2016;11:e0154244. doi: 10.1371/journal.pone.0154244.

- Bliss C.A., Frank M.R., Danforth C.M., Dodds P.S. An evolutionary algorithm approach to link prediction in dynamic social networks. J. Comput. Sci. 2014;5:750–764.

- Breiman L. Bagging predictors. Mach. Learn. 1996;24:123–140.

- Breiman L. Random forests. Mach. Learn. 2001;45:5–32.

- Bu Z., Wang Y., Li H.J., Jiang J., Wu Z., Cao J. Link prediction in temporal networks: integrating survival analysis and game theory. Inf. Sci. 2019;498:41–61.

- Cannistraci C.V., Alanis-Lobato G., Ravasi T. From link-prediction in brain connectomes and protein interactomes to the local-community-paradigm in complex networks. Sci. Rep. 2013a;3:1613. doi: 10.1038/srep01613.

- Cannistraci C.V., Alanis-Lobato G., Ravasi T. Minimum curvilinearity to enhance topological prediction of protein interactions by network embedding. Bioinformatics. 2013b;29:i199. doi: 10.1093/bioinformatics/btt208.

- Cao S., Lu W., Xu Q. Proceedings of the AAAI conference on artificial intelligence. AAAI Press; 2016. Deep neural networks for learning graph representations; pp. 1145–1152.

- Chen X., Wang L., Qu J., Guan N.N., Li J.Q. Predicting miRNA-disease association based on inductive matrix completion. Bioinformatics. 2018;34:4256–4265. doi: 10.1093/bioinformatics/bty503.

- Clauset A., Moore C., Newman M.E.J. Hierarchical structure and the prediction of missing links in networks. Nature. 2008;453:98–101. doi: 10.1038/nature06830.

- Comar P.M., Tan P.N., Jain A.K. Proceedings of the 11th international conference on data mining. IEEE Press; 2011. Linkboost: a novel cost-sensitive boosting framework for community-level network link prediction; pp. 131–140.

- Csermely P., Korcsmáros T., Kiss H.J., London G., Nussinov R. Structure and dynamics of molecular networks: a novel paradigm of drug discovery: a comprehensive review. Pharmacol. Ther. 2013;138:333–408. doi: 10.1016/j.pharmthera.2013.01.016.

- Cui P., Wang X., Pei J., Zhu W. A survey on network embedding. IEEE Trans. Knowl. Data Eng. 2018;31:833–852.

- Daminelli D., Thomas J.M., Durán C., Cannistraci C.V. Common neighbours and the local-community-paradigm for topological link prediction in bipartite networks. New J. Phys. 2015;17:113037.

- Davis J., Goadrich M. Proceedings of the 23rd international conference on machine learning. ACM Press; 2006. The relationship between precision–recall and ROC curves; pp. 233–240.

- Dehghan-Kooshkghazi A., Kamiński B., Prałat Ł., Théberge F. Evaluating node embeddings of complex networks. arXiv. 2021; 2102.08275.

- Ding H., Takigawa I., Mamitsuka H., Zhu S. Similarity-based machine learning methods for predicting drug–target interactions: a brief review. Brief. Bioinform. 2014;15:734–747. doi: 10.1093/bib/bbt056.

- Duan L., Ma S., Aggarwal C., Ma T., Huai J. An ensemble approach to link prediction. IEEE Trans. Knowl. Data Eng. 2017;29:2402–2416.

- Fawcett T. An introduction to ROC analysis. Pattern Recognit. Lett. 2006;27:861–874.

- Fire M., Tenenboim-Chekina L., Puzis R., Lesser O., Rokach L., Elovici Y. Computationally efficient link prediction in a variety of social networks. ACM Trans. Intell. Syst. Technol. 2013;5:10.

- Freund Y., Schapire R.E. A decision-theoretic generalization of on-line learning and an application to boosting. J. Comput. Syst. Sci. 1997;55:119–139.

- Garcia-Perez G., Aliakbarisani R., Ghasemi A., Serrano M.A. Precision as a measure of predictability of missing links in real networks. Phys. Rev. E. 2020;101:052318. doi: 10.1103/PhysRevE.101.052318.

- Ghasemian A., Galstyan A., Airoldi E.M., Clauset A. Stacking models for nearly optimal link prediction in complex networks. PNAS. 2020;117:23393–23400. doi: 10.1073/pnas.1914950117.

- Grover A., Leskovec J. Proceedings of the 22nd ACM SIGKDD international conference on knowledge discovery and data mining. ACM Press; 2016. node2vec: scalable feature learning for networks; pp. 855–864.

- Gu J., Lee S., Saramäki J., Holme P. Ranking influential spreaders is an ill-defined problem. EPL. 2017;118:68002.

- Gu W., Gao F., Li R., Zhang J. Learning universal network representation via link prediction by graph convolutional neural network. J. Soc. Comput. 2021;2:43–51.

- Guimerà R., Sales-Pardo M. Missing and spurious interactions and the reconstruction of complex networks. PNAS. 2009;106:22073–22078. doi: 10.1073/pnas.0908366106.

- Guimerà R. One model to rule them all in network science. PNAS. 2020;117:25195–25197. doi: 10.1073/pnas.2017807117.

- Hand D.J. Measuring classifier performance: a coherent alternative to the area under the ROC curve. Mach. Learn. 2009;77:103–123. [Google Scholar]

- Hanely J.A., McNeil B.J. The meaning and use of the area under a receiver operating characteristic (ROC) curve. Radiology. 1982;143:29–36. doi: 10.1148/radiology.143.1.7063747. [DOI] [PubMed] [Google Scholar]

- He Y.-L., Liu J.N.K., Hu Y.-X., Wang X.-Z. OWA operator based link prediction ensemble for social network. Expert Syst. Appl. 2015;42:21–50.

- Holme P., Liljeros F., Edling C.R., Kim B.J. Network bipartivity. Phys. Rev. E. 2003;68:056107. doi: 10.1103/PhysRevE.68.056107.

- Holme P., Saramäki J. Temporal networks. Phys. Rep. 2012;519:97–125.

- Jain, P., and Dhillon, I.S. (2013). Provable inductive matrix completion. arXiv: 1306.0626.

- Katz L. A new status index derived from sociometric analysis. Psychometrika. 1953;18:39–43.

- Kitsak M., Voitalov I., Krioukov D. Link prediction with hyperbolic geometry. Phys. Rev. Res. 2020;2:043113.

- Kong X., Shi Y., Yu S., Liu J., Xia F. Academic social networks: modeling, analysis, mining and applications. J. Netw. Comput. Appl. 2019;132:86–103.

- Kontoyiannis I., Algoet P.H., Suhov Y.M., Wyner A.J. Nonparametric entropy estimation for stationary processes and random fields, with applications to English text. IEEE Trans. Inf. Theor. 1998;44:1319–1327.

- Koren Y., Bell R., Volinsky C. Matrix factorization techniques for recommender systems. Computer. 2009;42:30–37.

- Koutra D., Kang U., Vreeken J., Faloutsos C. Summarizing and understanding large graphs. Stat. Anal. Data Mining. 2015;8:183–202.

- Kovács I.A., Luck K., Spirohn K., Wang Y., Pollis C., Schlabach S., Bian W., Kim D.K., Kishore N., Hao T. Network-based prediction of protein interactions. Nat. Commun. 2019;10:1240. doi: 10.1038/s41467-019-09177-y.

- Krioukov D., Papadopoulos F., Kitsak M., Vahdat A., Boguna M. Hyperbolic geometry of complex networks. Phys. Rev. E. 2010;82:036106. doi: 10.1103/PhysRevE.82.036106.

- Kumar A., Singh S.S., Singh K., Biswas B. Link prediction techniques, applications, and performance: a survey. Physica A. 2020;553:124289.

- Lee Y.-L., Zhou T. Collaborative filtering approach to link prediction. Physica A. 2021;578:126107.

- Lee Y.-L., Dong Q., Zhou T. Link prediction via controlling the leading eigenvector. Appl. Math. Comput. 2021;411:126517.

- Lei C., Ruan J. A novel link prediction algorithm for reconstructing protein–protein interaction networks by topological similarity. Bioinformatics. 2013;29:355–364. doi: 10.1093/bioinformatics/bts688.

- Leskovec J., Huttenlocher D., Kleinberg J. Proceedings of the 19th international conference on world wide web. ACM Press; 2010. Predicting positive and negative links in online social networks; pp. 641–650.

- Li K., Tu L., Chai L. Ensemble-model-based link prediction of complex networks. Comput. Netw. 2020;166:106978.

- Liben-Nowell D., Kleinberg J. The link-prediction problem for social networks. J. Am. Soc. Inf. Sci. Technol. 2007;58:1019–1031.

- Lichtenwalter R.N., Lussier J.T., Chawla N.V. Proceedings of the 16th ACM SIGKDD international conference on knowledge discovery and data mining. ACM Press; 2010. New perspectives and methods in link prediction; pp. 243–252.

- Lichtenwalter R.N., Chawla N.V. Proceedings of the 2012 IEEE/ACM international conference on advances in social networks analysis and mining. IEEE Press; 2012. Link prediction: fair and effective evaluation; pp. 376–383.

- Liu H., Kou H., Yan C., Qi L. Link prediction in paper citation network to construct paper correlation graph. EURASIP J. Wirel. Commun. Netw. 2019;2019:233.

- Lu C., Yang M., Luo F., Wu F.-X., Li M., Pan Y., Li Y., Wang J. Prediction of lncRNA-disease associations based on inductive matrix completion. Bioinformatics. 2018;34:3357–3364. doi: 10.1093/bioinformatics/bty327.

- Lü L., Jin C.-H., Zhou T. Similarity index based on local paths for link prediction of complex networks. Phys. Rev. E. 2009;80:046122. doi: 10.1103/PhysRevE.80.046122.

- Lü L., Zhou T. Link prediction in complex networks: a survey. Physica A. 2011;390:1150–1170.

- Lü L., Medo M., Yeung C.-H., Zhang Y.-C., Zhang Z.-K., Zhou T. Recommender systems. Phys. Rep. 2012;519:1–49.

- Lü L., Pan L., Zhou T., Zhang Y.-C., Stanley H.E. Toward link predictability of complex networks. PNAS. 2015;112:2325–2330. doi: 10.1073/pnas.1424644112.

- Ma X., Sun P., Wang Y. Graph regularized nonnegative matrix factorization for temporal link prediction in dynamic networks. Physica A. 2018;496:121–136.

- Mara A.C., Lijffijt J., De Bie T. Proceedings of the 7th IEEE international conference on data science and advanced analytics. IEEE Press; 2020. Benchmarking network embedding models for link prediction: are we making progress? pp. 138–147.

- Martínez V., Berzal F., Cubero J.C. A survey of link prediction in complex networks. ACM Comput. Surv. 2016;49:69.

- Menon A.K., Elkan C. Proceedings of the joint European conference on machine learning and knowledge discovery in databases. Springer; 2011. Link prediction via matrix factorization; pp. 437–452.

- Mikolov, T., Chen, K., Corrado, G., and Dean, J. (2013a). Efficient estimation of word representations in vector space. arXiv: 1301.3781.

- Mikolov T., Sutskever I., Chen K., Corrado G., Dean J. Distributed representations of words and phrases and their compositionality. Adv. Neural Inf. Process. Syst. 2013;26:3111–3119.

- Muscoloni A., Thomas J.M., Ciucci S., Bianconi G., Cannistraci C.V. Machine learning meets complex networks via coalescent embedding in the hyperbolic space. Nat. Commun. 2017;8:1615. doi: 10.1038/s41467-017-01825-5.

- Muscoloni A., Abdelhamid I., Cannistraci C.V. Local-community network automata modeling based on length-three-paths for prediction of complex network structures in protein interactomes, food web and more. bioRxiv. 2018 doi: 10.1101/346916.

- Muscoloni A., Cannistraci C.V. A nonuniform popularity-similarity optimization (nPSO) model to efficiently generate realistic complex networks with communities. New J. Phys. 2018;20:052002.

- Muscoloni A., Cannistraci C.V. Leveraging the nonuniform PSO network model as a benchmark for performance evaluation in community detection and link prediction. New J. Phys. 2018;20:063022.

- Muscoloni, A., and Cannistraci, C.V. (2018c). Minimum curvilinear automata with similarity attachment for network embedding and link prediction in the hyperbolic space. arXiv: 1802.01183.

- Muscoloni, A., Michieli, U., and Cannistraci, C.V. (2020). Adaptive network automata modeling of complex networks. Preprints: 202012.0808.

- Muscoloni, A., and Cannistraci, C.V. (2021). Short note on comparing stacking modelling versus Cannistraci-Hebb adaptive network automata for link prediction in complex networks. Preprints: 202105.0689.

- Natarajan N., Dhillon I.S. Inductive matrix completion for predicting gene-disease associations. Bioinformatics. 2014;30:i60. doi: 10.1093/bioinformatics/btu269.

- Newman M.E.J. Oxford University Press; 2018. Networks.

- Nickel M., Murphy K., Tresp V., Gabrilovich E. A review of relational machine learning for knowledge graphs. Proc. IEEE. 2015;104:11–33.

- Nicolaou Z.G., Motter A.E. Missing links as a source of seemingly variable constants in complex reaction networks. Phys. Rev. Res. 2020;2:043135.

- Ou M., Cui P., Zhang J., Zhu W. Proceedings of the 22nd ACM SIGKDD international conference on knowledge discovery and data mining. ACM Press; 2016. Asymmetric transitivity preserving graph embedding; pp. 1105–1114.

- Pan L., Zhou T., Lü L., Hu C.-K. Predicting missing links and identifying spurious links via likelihood analysis. Sci. Rep. 2016;6:22955. doi: 10.1038/srep22955.

- Papadopoulos F., Kitsak M., Serrano M.A., Boguna M., Krioukov D. Popularity versus similarity in growing networks. Nature. 2012;489:537–540. doi: 10.1038/nature11459.

- Papadopoulos F., Psomas C., Krioukov D. Network mapping by replaying hyperbolic growth. IEEE/ACM Trans. Netw. 2014;23:198–211.

- Pech R., Hao D., Pan L., Cheng H., Zhou T. Link prediction via matrix completion. EPL. 2017;117:38002.

- Pech R., Hao D., Lee Y.-L., Yuan Y., Zhou T. Link prediction via linear optimization. Physica A. 2019;528:121319.

- Peel L., Larremore D.B., Clauset A. The ground truth about metadata and community detection in networks. Sci. Adv. 2017;3:e1602548. doi: 10.1126/sciadv.1602548.

- Peixoto T.P. Reconstructing networks with unknown and heterogeneous errors. Phys. Rev. X. 2018;8:041011.

- Perozzi B., Al-Rfou R., Skiena S. Proceedings of the 20th ACM SIGKDD international conference on knowledge discovery and data mining. ACM Press; 2014. DeepWalk: online learning of social representations; pp. 701–710.

- Provost F., Fawcett T., Kohavi R. Proceedings of the 15th international conference on machine learning. Morgan Kaufmann Publishers; 1998. The case against accuracy estimation for comparing induction algorithms; pp. 445–453.

- Pujari M., Kanawati R. Proceedings of the 21st international conference on world wide web. ACM Press; 2012. Supervised rank aggregation approach for link prediction in complex networks; pp. 1189–1196.

- Qiu J., Dong Y., Ma H., Li J., Wang C., Wang K., Tang J. Proceedings of the world wide web conference. ACM Press; 2019. NetSMF: large-scale network embedding as sparse matrix factorization; pp. 1509–1520.

- Roweis S.T., Saul L.K. Nonlinear dimensionality reduction by locally linear embedding. Science. 2000;290:2323–2326. doi: 10.1126/science.290.5500.2323.

- Saito T., Rehmsmeier M. The precision-recall plot is more informative than the ROC plot when evaluating binary classifiers on imbalanced datasets. PLoS One. 2015;10:e0118432. doi: 10.1371/journal.pone.0118432.

- Shang M.-S., Lü L., Zhang Y.-C., Zhou T. Empirical analysis of web-based user-object bipartite networks. EPL. 2010;90:48006.

- Shen Z., Wang W.-X., Fan Y., Di Z., Lai Y.-C. Reconstructing propagation networks with natural diversity and identifying hidden sources. Nat. Commun. 2014;5:4323. doi: 10.1038/ncomms5323.

- Sherkat E., Rahgozar M., Asadpour M. Structural link prediction based on ant colony approach in social networks. Physica A. 2015;419:80–94.

- Song C., Qu Z., Blumm N., Barabási A.-L. Limits of predictability in human mobility. Science. 2010;327:1018–1021. doi: 10.1126/science.1177170.

- Squartini T., Caldarelli G., Cimini G., Gabrielli A., Garlaschelli D. Reconstruction methods for networks: the case of economic and financial systems. Phys. Rep. 2018;757:1–47.

- Swamidass S.J., Azencott C.A., Daily K., Baldi P.A. A CROC stronger than ROC: measuring, visualizing and optimizing early retrieval. Bioinformatics. 2010;26:1348–1356. doi: 10.1093/bioinformatics/btq140.

- Sun J., Feng L., Xie J., Ma X., Wang D., Hu Y. Revealing the predictability of intrinsic structure in complex networks. Nat. Commun. 2020;11:574. doi: 10.1038/s41467-020-14418-6.

- Tang J., Chang S., Aggarwal C., Liu H. Proceedings of the 8th ACM international conference on web search and data mining. ACM Press; 2015. Negative link prediction in social media; pp. 87–96.

- Tang J., Qu M., Wang M., Zhang M., Yan J., Mei Q. Proceedings of the 24th international conference on world wide web. ACM Press; 2016. LINE: large-scale information network embedding; pp. 1067–1077.

- Tang D., Du W., Shekhtman L., Wang Y., Havlin S., Cao X., Yan G. Predictability of real temporal networks. Natl. Sci. Rev. 2020;7:929–937. doi: 10.1093/nsr/nwaa015.

- Tenenbaum J.B., de Silva V., Langford J.C. A global geometric framework for nonlinear dimensionality reduction. Science. 2000;290:2319–2323. doi: 10.1126/science.290.5500.2319.

- Valles-Catala T., Peixoto T.P., Sales-Pardo M., Guimera R. Consistencies and inconsistencies between model selection and link prediction in networks. Phys. Rev. E. 2018;97:062316. doi: 10.1103/PhysRevE.97.062316.

- Wang W.-Q., Zhang Q.-M., Zhou T. Evaluating network models: a likelihood analysis. EPL. 2012;98:28004.

- Wang Y., Wang L., Li Y., He D., Chen W., Liu T.Y. Proceedings of the 26th annual conference on learning theory. COLT Press; 2013. A theoretical analysis of NDCG ranking measures; pp. 25–54.

- Wang P., Xu B., Wu Y., Zhou X. Link prediction in social networks: the state-of-the-art. Sci. China Inf. Sci. 2015;58:1–38.

- Wang Q., Gao J., Zhou T., Hu Z., Tian H. Critical size of ego communication networks. EPL. 2016;114:58004.

- Wang D., Cui P., Zhu W. Proceedings of the 22nd ACM SIGKDD international conference on knowledge discovery and data mining. ACM Press; 2016. Structural deep network embedding; pp. 1225–1234.

- Wang Z., Wu Y., Li Q., Jin F., Xiong W. Link prediction based on hyperbolic mapping with community structure for complex networks. Physica A. 2016;450:609–623.

- Wang H., Wang J., Wang J., Zhao M., Zhang M., Zhang F., Xie X., Guo M. Proceedings of the AAAI conference on artificial intelligence. AAAI Press; 2018. GraphGAN: graph representation learning with generative adversarial nets; pp. 2508–2515.

- Wang J., Zhang Q.-M., Zhou T. Tag-aware link prediction algorithm in complex networks. Physica A. 2019;523:105–111.

- Wang X.-W., Chen Y., Liu Y.-Y. Link prediction through deep generative model. iScience. 2020;23:101626. doi: 10.1016/j.isci.2020.101626.