Abstract

This paper reviews the hypothesis of harmonic cancellation according to which an interfering sound is suppressed or canceled on the basis of its harmonicity (or periodicity in the time domain) for the purpose of Auditory Scene Analysis. It defines the concept, discusses theoretical arguments in its favor, and reviews experimental results that support it, or not. If correct, the hypothesis may draw on time-domain processing of temporally accurate neural representations within the brainstem, as required also by the classic equalization-cancellation model of binaural unmasking. The hypothesis predicts that a target sound corrupted by interference will be easier to hear if the interference is harmonic than inharmonic, all else being equal. This prediction is borne out in a number of behavioral studies, but not all. The paper reviews those results, with the aim to understand the inconsistencies and come up with a reliable conclusion for, or against, the hypothesis of harmonic cancellation within the auditory system.

Keywords: pitch perception, auditory scene analysis, segregation, harmonicity, harmonic cancellation

Introduction

Our environment is cluttered with sound sources, but to act effectively we must focus on one or a few and ignore the others. This is hard because the mixing process, by which sounds from the various sources add up before entering the ears, cannot be undone. We usually do not know the mixing matrix (i.e., the delays and gains applied to each source before adding) and, even if we did, that matrix is generally not invertible. Recovering individual sources is thus impossible except in very simple cases. Nonetheless, we sometimes feel that we can follow an individual source, for example, a voice within a conversation, or an instrument within an ensemble, as if it were alone. The ability to make sense of a complex acoustic scene in terms of individual sources is known as Auditory Scene Analysis (Bregman, 1990).

Auditory Scene Analysis is sometimes discussed as a process of “grouping” elements (e.g., partials) to form sources or objects (Bregman, 1990), for example, according to Gestalt principles. However, such “elements” are conceptual rather than operational. While sinusoids and clicks serve well as synthesis parameters, it may not be possible to extract them from the sound due to theoretical limits (e.g., time–frequency uncertainty tradeoff, Gábor, 1947) and physiological limits (e.g., temporal and frequency resolution of cochlear analysis, Moore & Glasberg, 1983; Plack & Moore, 1990). If they cannot be accessed, postulating that they can be grouped is perhaps misleading.

Fortunately, perfect isolation of each source is usually not necessary. According to the principle of unconscious inference (Helmholtz, 1867; Kersten et al., 2004), we need only to recover enough information to infer the presence or nature of a target. Regularities within the world, internalized as models within the perceptual system, allow us to fill in missing parts. This process, which manipulates incomplete information “under the hood,” provides us with the illusion of perceiving each object just as if true unmixing had taken place. Information about the source is partial but, thanks to inference, it appears to us that it is complete (al Haytham, 1030; Hatfield, 2002; Imbert, 2020).

For this to work, it is essential that the sensory representation be stripped of the influence of background objects. If not, a different background might lead to a different percept, defeating the goal of perceiving the target as if it were in isolation. In other words, the sensory representation should be made invariant to the presence of interfering sources. This is analogous to invariance with respect to intra-class variability in pattern classification (Duda et al., 2012).

Several aspects of auditory processing might contribute to this goal. If target and background differ by their spectral content, cochlear filtering can be used to split sensory input into channels dominated by the target, distinct from those that reflect the background. Discarding the latter then yields a representation that is invariant to the presence of the background—albeit incomplete because of the missing channels. Likewise, if target and background occur at different points in time, temporal resolution properties of the auditory system (Moore et al., 1988; Plack & Moore, 1990) can be used to discard time intervals contaminated by the background.

Putting both elements together, the target can be “glimpsed” within spectro-temporal gaps of the background (Cooke, 2006). The glimpsed “pixels” of the time-frequency representation are handed over to subsequent processing together with a mask to indicate their position. Discarded pixels are not merely set to zero: they are given zero weight (Cooke et al., 1997). Spectro-temporal glimpsing has been proposed in speech processing applications (Wang & Brown, 2006; Wang, 2008), and to account for human perceptual abilities and derive predictive measures of intelligibility (e.g., Best et al., 2019; Josupeit et al., 2020).

Binaural disparity is another potentially useful cue. In addition to head shadow effects that produce favorable target-to-masker ratios within certain frequency channels at either ear (Grange & Culling, 2016), perception benefits from binaural interaction, which is commonly understood to follow the well-known equalization cancellation (EC) model (Durlach, 1963), and its extensions (e.g. Culling & Summerfield, 1994; Breebaart et al., 2001; Akeroyd, 2004). Signals at each ear are differentially time-shifted and scaled (“equalization”), and then subtracted one from the other (“cancellation”) to suppress interaurally coherent sound from a competing source. The internal time shift and scale factor are tuned to match the interfering source. The EC model is assumed to involve temporally accurate neural patterns processed by specialized neural circuitry within the auditory brainstem (Tollin & Yin, 2005; Joris & van der Heijden, 2019).

To summarize this viewpoint, Auditory Scene Analysis entails canceling and/or ignoring irrelevant features of the sensory input, and matching the remainder to an internal model to produce a reliable percept. The process draws on spectro-temporal analysis within the cochlea, complemented by neural time-domain signal processing within the brain, to provide the brain with a rich—albeit incomplete—representation within which a target can be “glimpsed.” The glimpses are then interpreted according to a Helmholtzian inference process.

The remainder of this paper asks whether this process can be extended to include, as a cue, the harmonic (periodic) structure of interference such as a competing talker. So-called “double-vowel” experiments found that vowels mixed in pairs are easier to identify if their fundamental frequencies (F0s) differ (Brokx & Nooteboom, 1982; McKeown, 1992; Culling & Darwin, 1993; Assmann & Summerfield, 1994), suggesting that harmonic structure somehow assists segregation. Furthermore, it appears that this effect is driven mainly by the harmonicity of the background, for example, the competing vowel (Lea, 1992; Summerfield & Culling, 1992; de Cheveigné et al., 1997). This is the harmonic cancellation hypothesis.

To set the stage, I assume a “segregation module” that works hand in hand with a “pattern-matching” module (Figure 1). The segregated sensory pattern (dark red arrow) is accompanied by a “reliability mask” (gray arrow) to assist matching of a pattern that is incomplete or distorted by the segregation process. Sensory representations might consist of a spectral profile (e.g., place-rate representation), or a temporal, or place-time pattern. Examples of the latter are a matrix of autocorrelation functions (ACFs), one per channel (autocorrelogram), or the sum over channels of these ACFs (summary ACF, SACF) (Licklider, 1959; Lyon, 1984; Meddis & Hewitt, 1992). The flow of sensory information in this figure is purely bottom-up: the only top-down influence is attentional control (dotted arrow). Top-down transfer of a sensory-like pattern is also conceivable (“schema-driven” segregation), but not considered here.

Figure 1.

Segregation and matching. Sensory input is stripped of correlates of interfering sources, and the selected pattern, possibly incomplete, is passed on for pattern-matching (or model-fitting), together with a mask that indicates which parts are missing or unreliable. Initial stages are under attentional control.

We want to know whether harmonic cancellation is instantiated in the auditory system, but it is often easier to reason in terms of the acoustic waveform, for clarity and to distinguish theoretical from implementation limits: if a principle fails in abstract terms, consideration of biological constraints is premature. That said, references to “cochlear filtering” or “neural processing” will sometimes creep into the discussion without warning. I beg your patience when this occurs.

Harmonic Cancellation—Possible Mechanisms

How might harmonic cancellation be implemented? This section investigates several hypotheses, including frequency-domain, time-domain, and hybrid models. A later section will ask which—if any—is used by the auditory system. The busy reader might want to read about frequency domain and time domain models, then skip to the Psychophysics section and come back for details as needed. There are also interesting things to be found in the Appendix.

Frequency Domain

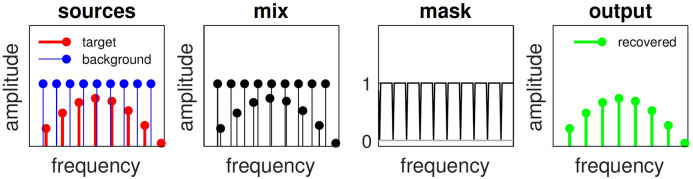

Conceptually, harmonic cancellation is straightforward: just zero all spectral components at multiples of $1/T$, where $T$ is the period of the background, as shown in Figure 2 (Parsons, 1976; Stubbs & Summerfield, 1988). Target components emerge intact (right panel), except in the event, vanishingly unlikely in this idealized world, that a target component falls on the harmonic series of the background.

Figure 2.

Harmonic cancellation in the idealized frequency domain. Left: line spectra of a “target” sound (red) and a “background” (blue). Next to left: mixture. Next to right: harmonic mask with zeros at all harmonics of background. Right: recovered target.
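The idealized case can be sketched numerically. The example below is a minimal illustration, not the procedure used for the figures: the sampling rate, signal length, partial counts, and function names are all assumptions, chosen so that every partial falls exactly on a DFT bin (which is what makes the case "idealized"). It zeros every bin at a multiple of the background fundamental $1/T$ and checks that the target survives intact.

```python
import cmath
import math

FS = 4000   # sampling rate in Hz (assumed for illustration)
N = 80      # analysis length in samples: bin spacing is FS / N = 50 Hz

def dft(x):
    """Naive discrete Fourier transform (adequate for short signals)."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]

def idft(spectrum):
    """Naive inverse DFT, returning the real part of each sample."""
    n = len(spectrum)
    return [sum(spectrum[k] * cmath.exp(2j * math.pi * k * t / n)
                for k in range(n)).real / n
            for t in range(n)]

def harmonic_complex(f0, n_partials, amplitude):
    """Sum of equal-amplitude cosine partials at multiples of f0."""
    return [amplitude * sum(math.cos(2 * math.pi * f0 * h * t / FS)
                            for h in range(1, n_partials + 1))
            for t in range(N)]

def cancel_harmonics(x, f0_background):
    """Zero every DFT bin that lies on a multiple of f0_background (= 1/T)."""
    n = len(x)
    bins_per_f0 = round(f0_background * n / FS)
    spectrum = dft(x)
    for k in range(n):
        # min(k, n - k) folds negative-frequency bins onto positive ones
        if min(k, n - k) % bins_per_f0 == 0:
            spectrum[k] = 0.0
    return idft(spectrum)

target = harmonic_complex(250, 3, 0.25)      # partials at 250, 500, 750 Hz
background = harmonic_complex(200, 4, 1.0)   # partials at 200, 400, 600, 800 Hz
mixture = [t + b for t, b in zip(target, background)]

recovered = cancel_harmonics(mixture, 200)
max_error = max(abs(r - t) for r, t in zip(recovered, target))
print(f"largest deviation from the clean target: {max_error:.2e}")
```

The target is limited to three partials so that none coincides with a background harmonic (a fourth partial at 1000 Hz would be removed along with the fifth background harmonic). With signals that are not bin-aligned, spectral leakage prevents this clean separation.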

A practical implementation, however, needs to deal with two issues: one is limited frequency resolution of the spectral representation, the other is the spectral widening expected when analyzing a time-limited or otherwise non-stationary signal. Figure 3(a) shows short-term amplitude spectra of two harmonic sounds, a 200 Hz “background” with a flat spectral envelope (blue), and a weaker 238 Hz “target” with a broad peak centered at 1 kHz (red).

Figure 3.

Harmonic cancellation in the frequency domain using a short-term Fourier representation, or a filter bank. (a) 238 Hz target (red) and 200 Hz background (blue) analyzed by a filter bank with 100 Hz resolution, (b) mixture, (c) harmonic mask, (d) target recovered from mixture (green), and same in the absence of the background (thin red), (e) same analysis but using a filter bank with non-uniform frequency resolution. Filter bandwidth depends on center frequency (CF) according to estimates of cochlear frequency resolution from Moore and Glasberg (1983) as implemented by Slaney (1993).

This spectral transform has limited frequency resolution (or, equivalently, infinite resolution but the signals are time-limited, in this case eight cycles of a 200 Hz fundamental, shaped with a Hanning window). When target and masker are mixed, here with a target-to-masker ratio (TMR) of −12 dB, the spectrum of the mix (Figure 3(b), black) is almost entirely dominated by the background (Figure 3(a), blue). This differs radically from the idealized picture of Figure 2.

If we multiply the spectrum of the mix with a harmonic mask with zeros at the harmonics of the background (Figure 3(c)), we obtain a “recovered” spectral pattern (d, green) very different from the true target (a, red). Two terms contribute to this difference. One is multiplicative distortion from the masking procedure (compare d, red to a, red), the other is additive distortion due to the incompletely canceled background (compare d, green to d, red). The former can, in principle, be taken into account by a pattern-matching stage if it has access to the nature of that distortion, for example, via the gray arrow in Figure 1. The latter is more serious because it is unknown and cannot be compensated for, and because it implies that we miss our goal of invariance with respect to the background. The shape of the harmonic mask (Figure 3(c)) affects the balance between error terms but a different mask would not yield a radically different result. The contrast between Figure 2 (conceptual model) and Figure 3 (feasible implementation) is sobering.

Spectral resolution is critical. Cochlear filters are narrower, on a linear frequency scale, at low than at high CFs (Figure 3(e)). From this figure, it would seem that low-frequency target features might be recovered, but perhaps not high-frequency (compare green and thin red). This illustration used a bank of gammatone filters (Slaney, 1993) with equivalent rectangular bandwidths (ERBs) from psychophysical estimates (Moore & Glasberg, 1983). If cochlear filters were narrower (e.g., Shera et al., 2002; Sumner et al., 2018) a wider frequency range might be recoverable (not shown), but resolution would still be limited if the stimulus were short or non-stationary.

In summary, frequency-domain cancellation requires (a) a spectral representation with resolution sufficient to cancel background partials while retaining enough of the target to support pattern matching, (b) an estimate of the background period $T$, and (c) a pattern-matching process that tolerates distortion of target spectral patterns. How to estimate the background period is discussed in the Appendix (Period Estimation).

Time Domain

Harmonic cancellation can also be implemented in the time domain by a simple filter with impulse response

$$h(t) = \delta_0(t) - \delta_T(t) \tag{1}$$

where $T$ is the period of the interfering sound and $\delta_T$ is the Kronecker delta function translated to $T$ (Figure 4(a), left). The filtered version of a signal $s(t)$ is simply $s(t) - s(t - T)$. The magnitude transfer function of this filter has deep dips at all harmonics of $1/T$ (Figure 4(a), right).

Figure 4.

Harmonic cancellation in the time domain. (a) Impulse response of the cancellation filter (left) and corresponding magnitude transfer function (right). (b) Input (left) and output (right) of the cancellation filter for the background 100 Hz vowel /a/ (top), target 132 Hz vowel /e/ (middle), and mixture at TMR = −12 dB (bottom). (c) Schematic diagram of a circuit implementing the cancellation filter (Equation (1)) (left) and neural circuit with similar function (right). A spike on the direct pathway (black) is transmitted unless it coincides with a spike on the delayed pathway (red). The delay can be applied to the positive/excitatory input, instead of negative/inhibitory, with equivalent results.

Figure 4(b) shows a background vowel stimulus /a/ with fundamental 100 Hz (top), a weaker target vowel /i/ with fundamental 132 Hz (middle), and their mixture (bottom), before (left) and after (right) filtering with a cancellation filter with lag equal to the period of the background vowel. The response consists of initial and final one-period glitches, separated by a short steady-state portion, in red. The steady-state portion is zero for the background (top). For the target, it is a distorted version of the target waveform (compare middle right, red, to middle left). For the mixture, it is the same as for the target alone (compare middle right, red, to bottom right, red). In other words, this part of the pattern is invariant with respect to the presence of a background of period $T$, which is what we need. This contrasts with frequency-domain cancellation for which none of the recovered pattern was background-invariant.
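The invariance property can be checked with a few lines of code. The sketch below is illustrative only: it uses synthetic harmonic complexes rather than vowels, and the sampling rate (chosen so that both periods are a whole number of samples), partial counts, and names are assumptions. The filter subtracts from the signal a copy of itself delayed by one background period.

```python
import math

FS = 13200                # sampling rate in Hz (assumed; gives whole-sample periods)
BACKGROUND_PERIOD = 132   # samples per period of a 100 Hz background
TARGET_PERIOD = 100       # samples per period of a 132 Hz target
N = 1000                  # signal length in samples

def harmonic_complex(period, n_partials, amplitude):
    """Sum of equal-amplitude sine partials with the given period in samples."""
    return [amplitude * sum(math.sin(2 * math.pi * h * n / period)
                            for h in range(1, n_partials + 1))
            for n in range(N)]

def cancellation_filter(x, lag):
    """y[n] = x[n] - x[n - lag], treating the signal as zero before it starts."""
    return [x[n] - (x[n - lag] if n >= lag else 0.0) for n in range(len(x))]

background = harmonic_complex(BACKGROUND_PERIOD, 10, 1.0)
target = harmonic_complex(TARGET_PERIOD, 10, 0.25)   # about 12 dB weaker
mixture = [b + t for b, t in zip(background, target)]

out_background = cancellation_filter(background, BACKGROUND_PERIOD)
out_target = cancellation_filter(target, BACKGROUND_PERIOD)
out_mixture = cancellation_filter(mixture, BACKGROUND_PERIOD)

# Samples after the one-period onset glitch
steady = range(BACKGROUND_PERIOD, N)
residual_background = max(abs(out_background[n]) for n in steady)
mismatch = max(abs(out_mixture[n] - out_target[n]) for n in steady)
print(f"background residual: {residual_background:.2e}")
print(f"mixture vs. target-alone output: {mismatch:.2e}")
```

Both printed values are at the level of floating-point rounding: the background is removed, and the filtered mixture matches the filtered target after the onset. This sketch omits the final glitch because the output is truncated to the input length.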

In summary, time-domain cancellation requires (a) a time-domain signal representation such that Equation (1) can be implemented, (b) an estimate of the background period (see Appendix, Period Estimation), (c) a pattern matching process capable of selecting the intervals of perfect cancellation, and compensating for distortion of the target within these intervals.

Hybrid Models

A hybrid model combines spectral and temporal processing, for example, cochlear filter bank analysis followed by time-domain harmonic cancellation within the brainstem. There is a rich literature based on this idea for the purpose of auditory modeling and sound processing applications (e.g., Lyon, 1983, 1988; Weintraub, 1985; Meddis & Hewitt, 1992; Assmann & Summerfield, 1990). A benefit of the filter bank is that TMR varies across channels, some favoring the target and others the background (Figure 5(a)), which may be useful if the dynamic range of temporal processing is limited.

Figure 5.

(a) TMR within each channel of a model cochlear filter bank for an input consisting of a 124 Hz harmonic target mixed with a 100 Hz harmonic background with overall TMR = 0 dB (black), −12 dB (dotted blue), or +12 dB (dotted red). Thanks to the filter bank, the TMR is enhanced in certain channels within which the target can be “glimpsed.” (b) Linear operations can be swapped. Filtering the signal before the filter bank is equivalent to applying the same filter to each channel after the filter bank.

It is worth remembering that linear, time-invariant operators can be swapped: a time-domain cancellation filter applied to the acoustic waveform can instead be applied to each channel after filtering: the result is the same (Figure 5(b)). Cochlear filtering and transduction are both non-linear and non-stationary (e.g., adaptation), but the “equivalence” of Figure 5(b) may nonetheless be useful conceptually. I review briefly here a selection of hybrid schemes for harmonic cancellation, described in detail in the Appendix (Hybrid Models). In brief:

Hybrid Model 1: Cancellation-enhanced spectral patterns. A time-domain cancellation filter is applied to each channel of the cochlear filter bank, resulting in cleaner spectral patterns for pattern matching.

Hybrid Model 2: Channel rejection on the basis of periodicity. Channels dominated by the background periodicity are discarded, and the remaining channels are used to form a time-domain pattern for pattern matching, as in the concurrent vowel identification model of Meddis and Hewitt (1992).

Hybrid Model 3: Cancellation filtering of selected channels. As in Hybrid Model 2, channels dominated by the background are discarded, and channels dominated by the target are left intact. In contrast to Hybrid Model 2, channels with intermediate TMR are processed by a cancellation filter. The result is used for time-domain pattern matching.

Hybrid Model 4: Channel-specific cancellation filter. The parameter $T$ of the cancellation filter can differ between channels, in contrast to other models that use the same $T$ for all channels. The result is used for time-domain pattern matching.

Hybrid Model 5: Synthetic delays. The “synthetic delay” mechanism of de Cheveigné and Pressnitzer (2006) is used to implement the relatively long delays required by the temporal model of harmonic cancellation. The result is used for time-domain pattern matching.

Hybrid Model 6: Logan’s theorem. This is not a specific model but a processing principle. A narrowband signal can be reconstructed perfectly from its zero crossings (and hence also from its half-wave rectified version) (Logan, 1977). This implies that, despite the non-linearities, the temporal model can be implemented after transduction as if it were applied to the acoustic waveform (the theorem does not say how).

These examples illustrate how peripheral filtering and temporal processing might work hand-in-hand to enhance a spectral model (Hybrid Model 1) or a temporal model (Hybrid Models 2–6) of harmonic cancellation. To summarize, a wide variety of mechanisms can implement harmonic cancellation: spectral, temporal, and hybrid.
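The equivalence of Figure 5(b), on which several of these hybrid schemes rely, is easy to verify for linear filters. The sketch below is a minimal check under stated assumptions: the "channel" is an arbitrary short FIR filter standing in for one filter-bank channel (a real cochlear model would use gammatone or similar filters, and would add non-linear transduction, which breaks exact equivalence), and the lag and test signal are made up for illustration.

```python
import math

def convolve(x, h):
    """Direct FIR convolution; output length is len(x) + len(h) - 1."""
    y = [0.0] * (len(x) + len(h) - 1)
    for i, xi in enumerate(x):
        for j, hj in enumerate(h):
            y[i + j] += xi * hj
    return y

LAG = 20   # cancellation lag in samples (assumed value)

# Impulse response of the cancellation filter: +1 at lag 0, -1 at lag LAG
cancellation = [1.0] + [0.0] * (LAG - 1) + [-1.0]

# Hypothetical stand-in for one filter-bank channel: a windowed cosine
channel = [math.cos(2 * math.pi * 0.1 * n) * math.sin(math.pi * (n + 0.5) / 32) ** 2
           for n in range(32)]

signal = [math.sin(0.3 * n) + 0.5 * math.sin(0.71 * n + 1.0) for n in range(200)]

cancel_then_channel = convolve(convolve(signal, cancellation), channel)
channel_then_cancel = convolve(convolve(signal, channel), cancellation)

max_difference = max(abs(a - b)
                     for a, b in zip(cancel_then_channel, channel_then_cancel))
print(f"largest difference between the two orderings: {max_difference:.2e}")
```

The two orderings agree to within rounding error, as expected for linear, time-invariant operators.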

Alternatives to Harmonic Cancellation

It is important to consider alternatives: to the extent that they are viable, the case for harmonic cancellation is weaker. Other aspects of the spectral structure of the target or background might support segregation, even in situations that seem to implicate harmonic cancellation.

Harmonic Enhancement

According to this hypothesis, the harmonic structure of a target sound allows its extraction from a background. The idea is attractive: it fits with the Auditory Scene Analysis credo that components of a sound are “grouped” together, here on the basis of harmonicity, to form a coherent “object” that can be distinguished from other parts of the scene (Bregman, 1990). It is satisfying to hypothesize that voiced speech might be “engineered” for this purpose through evolution (e.g., Popham et al., 2018).

The mechanisms just reviewed can be re-purposed for enhancement. For example, the mask in Figure 2 can be made to select target harmonics rather than reject background harmonics. Likewise, replacing the minus by a plus in Equation (1), and setting $T$ to the period of the target, yields a harmonic enhancement filter:

$$h(t) = \delta_0(t) + \delta_T(t) \tag{2}$$

Enhancement and cancellation seem to mirror each other, but they have rather different properties. Enhancement requires the period of the target, but this is hard to estimate when TMR is small, which is unfortunately when segregation is most necessary. Cancellation works well in that situation. An enhancement filter provides only a limited boost in TMR (6 dB for the simple filter of Equation (2)) in contrast to cancellation that can reject the masker perfectly, at least in principle. A larger boost would require a longer impulse response (as explained in Appendix A of de Cheveigné, 1993, courtesy of Jean Laroche), but this might not be practical for a non-stationary signal such as speech. Anticipating, behavioral results also don’t favor the enhancement hypothesis.
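The asymmetry can be read off the magnitude transfer functions of the two filters. The worked equations below assume that Equations (1) and (2) denote the two-tap filters with a unit impulse at lag zero and a second impulse of weight $-1$ (cancellation) or $+1$ (enhancement) at lag $T$. The symbols $H_c$ and $H_e$ are introduced here for illustration only, and $k$ is any integer.

$$
\begin{aligned}
|H_c(f)| &= \left|1 - e^{-j 2\pi f T}\right| = 2\,\left|\sin(\pi f T)\right|, &\quad |H_c(k/T)| &= 0,\\
|H_e(f)| &= \left|1 + e^{-j 2\pi f T}\right| = 2\,\left|\cos(\pi f T)\right|, &\quad |H_e(k/T)| &= 2, \qquad 20\log_{10} 2 \approx 6\ \text{dB}.
\end{aligned}
$$

The cancellation filter has exact zeros at every harmonic of $1/T$, whereas the gain of the enhancement filter at those harmonics is capped at a factor of two.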

Incidentally, the term “harmonic enhancement” appears in other contexts with a different meaning: perceptual enhancement of one harmonic of a complex when it is turned on or off (e.g., Hartmann & Goupell, 2006). Hopefully no confusion will result from this overloading of the terminology.

Spectral Glimpsing

Between the lines of a harmonic spectrum are gaps where target components might be glimpsed (Deroche et al., 2013; Guest & Oxenham, 2019), and this might conceivably account for the benefit observed when a background is harmonic rather than inharmonic. Figure 5(a) shows how individual channels in the low-frequency region can preferentially reflect one source or the other, as long as partials are not too close. The spectral-glimpsing hypothesis glosses over the question of how target channels are distinguished from background channels. In that, it differs from Hybrid Model 2 above.

Waveform Interactions

The sinusoidal waveforms of two or more partials can interact within a channel of a filter bank to produce a complex “beat” pattern. This can occur between partials of the same sound (with a rate equal to the fundamental if the sound is harmonic) or partials of different sounds. The patterns that result are quite diverse (static summation, slow fluctuations, rapid beats, etc.), and they depend in a complex way on several parameters (frequencies, levels, filter shapes). The “waveform interactions” hypothesis is thus ill-defined unless further specified.
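The simplest case, two partials of equal amplitude, can be written out explicitly. This is the standard sum-to-product identity. The frequencies $f_1$ and $f_2$ are illustrative symbols, and unequal amplitudes or more than two partials give more complicated patterns.

$$
\cos(2\pi f_1 t) + \cos(2\pi f_2 t) = 2\,\cos\!\big(\pi (f_1 - f_2)\, t\big)\,\cos\!\big(\pi (f_1 + f_2)\, t\big)
$$

The envelope $2\,|\cos(\pi (f_1 - f_2) t)|$ fluctuates at a rate of $|f_1 - f_2|$: partials at 1000 Hz and 1010 Hz, for example, beat 10 times per second.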

From slow to fast: phase-dependent summation of same-frequency partials constitutes a potential confound in experiments that include a “zero ΔF0” condition (de Cheveigné, 1999c). Slow beats between closely-spaced partials from different sounds cause the short-term spectrum to cycle between shapes that might favor perception of one or the other sound, either because it momentarily resembles that of one of the sounds in isolation, or because temporal contrast effects enhance important spectral features (Summerfield et al., 1981; Assmann & Summerfield, 1994; Culling & Darwin, 1994). Faster beats might evoke a sensation of roughness signaling the presence of a target (Treurniet & Boucher, 2001), or the spectral location of such beats might provide cues to its spectral features (e.g., the location of a formant peak, or the boundary between formants of different sounds). Conversely, the lack of beats at a rate slower than F0 (or the perceptual correlate of this lack, “smoothness”) could signal the absence of a target, or the spectral location of channels dominated by harmonics of a single sound. Finally, the absence of any modulation at F0 implies that the channel is dominated by a single partial, as in the phenomenon of “synchrony capture” which might signal the position of a formant peak of a successfully isolated sound (Carney et al., 2015; Maxwell et al., 2020).

Interaction of more than two harmonics produces a phase-dependent beat pattern that is more deeply sculpted for certain phase relations, such as cosine, or “Klatt” phase that approximates natural phonation with a glottal pulse within each period. Valleys between pulses might then allow a target to be glimpsed for a favorable alignment, as might occur if sounds of different F0 are mixed (the pitch period asynchrony hypothesis, PPA, Summerfield & Assmann, 1991).

Beat patterns might be exploited to group channels by correlation (Hall et al., 1984; Sinex et al., 2002; Sinex & Li, 2007; Fishman & Steinschneider, 2010; Shamma et al., 2011) or, alternatively, beat rates in the F0 range might be compared across channels (Roberts & Bregman, 1991; Treurniet & Boucher, 2001; Roberts & Brunstrom, 2003). This requires the existence of some mechanism to analyze beat patterns and quantify their rates (see Modulation Filter Bank below).

Beat amplitude depends non-monotonically on the amplitude of sources within the stimulus, and the shape of the beat pattern is phase-dependent (for three or more partials). Beat rate affects perceptual salience (e.g., roughness) non-monotonically, and the rate itself may depend non-monotonically on F0 difference, depending on which partials happen to be close. Finally, each channel has its own pattern of beats. For these reasons, a “waveform interaction hypothesis” is hard to delineate and test (which does not imply that it is incorrect).

Modulation Filter Bank

An influential idea is that cochlear filtering and transduction are followed by analysis by a modulation filter bank within the auditory system (Kay & Matthews, 1972; Viemeister, 1979; Dau et al., 1997; Joris et al., 2004; Stein et al., 2005; Jepsen et al., 2008). Conceptually, this seems rather like reproducing internally an operation (spectral analysis) that is already carried out in the cochlea. A major difference, however, is that it occurs after demodulation of each output of the peripheral filter bank (non-linearity followed by smoothing), which makes it primarily sensitive to features of the waveform envelope, and less sensitive to carrier phase. The concept makes most sense when applied to slow fluctuations (e.g., below 30 Hz), but models have been proposed with channels up to 500 Hz, capitalizing on the smooth transition between neural coding of fine structure at low frequencies and of envelope at higher frequencies (Joris et al., 2004). A modulation filter bank applied to each peripheral channel results in a center frequency × best modulation frequency pattern that can be collapsed across channels to obtain a “summary modulation spectrum.” One could imagine a frequency-domain harmonic cancellation model applied to this “internal spectrum.” However, most estimates of modulation filter width are rather wide (quality factor $Q \approx 1$), which makes this idea unlikely to work given the issues mentioned earlier.

Alternatively, the 2D pattern could be used to tag channels for the purpose of segregation (Ewert & Dau, 2000; Meyer et al., 1997). One might consider implementing this modulation filter bank using cancellation filters, which would result in a model similar to the hybrid models reviewed previously, a major difference being the demodulation step which renders the model sensitive to envelope periodicity rather than (or in addition to) waveform periodicity.

In Summary

Multiple models have been put forward to explain how the harmonic structure of sounds within an acoustic scene can be used to analyze the scene and attend to particular sources. Some fit the definition of harmonic cancellation, others do not. The next section reviews psychophysical evidence in favor—or against—this hypothesis and its alternatives.

Psychophysics

Detection Benefits from ΔF0

When presented with a mixture of two vowels, subjects more often report that they hear two vowels if the F0s differ (de Cheveigné et al., 1997; Arehart et al., 2005, 2011; McPherson et al., 2020). Likewise, when presented with a harmonic tone with one partial mistuned, they may detect the partial as “standing out” as a separate sound (Moore et al., 1985, 1986). Such a mistuned target tone can be detected at −15 dB relative to a harmonic masker, whereas against a noise background the threshold is 15 dB higher (Micheyl et al., 2006). In each of these examples, background harmonicity seems to affect how many sources are heard. An interpretation, in the context of harmonic cancellation, is that a single entity is perceived if cancellation is perfect, and multiple entities if it leaves a residual.

Discrimination and Identification Benefit from ΔF0

Mistuning one partial of a harmonic complex allows it to be matched to a pure tone (Hartmann et al., 1990), implying not only that this “second sound” is detectable, but also that its frequency can be accessed. Subjects are more likely to identify both vowels of a concurrent pair if their F0s differ (Brokx & Nooteboom, 1982; Scheffers, 1983; Zwicker, 1984; Summerfield & Assmann, 1991; McKeown, 1992; Chalikia & Bregman, 1993; Culling & Darwin, 1993; Assmann & Summerfield, 1994; Shackleton et al., 1994; Arehart et al., 2011). The pattern of results is similar across studies: poor performance (albeit well above chance) for ΔF0 = 0, rapid improvement up to about one semitone, followed by a plateau and possibly a dip at the octave. To create the ΔF0 = 0 condition with continuous speech, the voices must be re-synthesized on a monotone, or one voice given the same F0 track as the other, so that F0s remain the same throughout the presentation. With that manipulation, a similar benefit of non-zero ΔF0 is obtained (Brokx & Nooteboom, 1982; Leclère et al., 2017).

Improved performance with ΔF0 ≠ 0 is taken to reflect a harmonicity-based segregation mechanism that fails when F0s are the same, and indeed, identification is less good if both voices are whispered (Lea, 1992), or inharmonic (de Cheveigné et al., 1997). This brings up the question as to whether each voice benefits from its harmonic structure, that of its competitor, or both. To answer that question, voices must be parametrized individually, and responses tallied separately. It cannot be answered if the performance metric is “both correct” (Brokx & Nooteboom, 1982; Scheffers, 1983; Summerfield & Assmann, 1991), or if both voices are made inharmonic at the same time (Popham et al., 2018).

Background Harmonicity is Important

In “double vowel experiments,” listeners give two answers on each trial, but it has been noted that one constituent (the “dominant” vowel) is usually identified regardless of ΔF0, whereas identification of the other depends on ΔF0 (Zwicker, 1984; McKeown, 1992; McKeown & Patterson, 1995). “Dominance” is phoneme- and subject-dependent, but this can be overridden by changing the relative level of the vowels, in which case the ΔF0 effects are mainly observed for the weaker (smaller amplitude) vowel (McKeown, 1992; de Cheveigné et al., 1997; Arehart et al., 2005). This is congruent with the harmonic cancellation hypothesis, in that estimation of the harmonic structure of the background should be easy when the target is weak. However, it could also simply result from a reduced ceiling effect for the more challenging, weaker vowel.

With the ΔF0 ≠ 0 condition as a starting point, performance degrades if the competing vowel is whispered (Lea, 1992) or made inharmonic (de Cheveigné et al., 1997), regardless of whether the target is harmonic or not. This too is consistent with the harmonic cancellation hypothesis. Similar results are reported for connected speech: Steinmetzger and Rosen (2015) found that speech reception thresholds (SRTs) were up to 11 dB lower for periodic than aperiodic maskers, while Deroche et al. (2014b) reported a 4 dB elevation in SRT for inharmonic versus harmonic maskers. Incorporating harmonic cancellation within a predictive model of speech intelligibility improved its fit to experimental data (Prud’homme et al., 2020).

Gockel et al. (2002) found that the threshold for detecting noise in a harmonic masker was 11–14 dB lower than the converse, and Gockel et al. (2003) found a similar result for loudness. This suggests that a harmonic masker might be less potent than a noise masker, as expected from harmonic cancellation. As mentioned earlier, Micheyl et al. (2006) found that a harmonic complex tone (HCT) was easier to detect within a background consisting of another HCT than within noise, and Klinge et al. (2011) found a lower threshold for detection of a tone embedded in (but mistuned from) a harmonic rather than inharmonic or noise background (see also Oh & Lutfi, 2000).

All these results are consistent with harmonic cancellation. However, harmonic cancellation is not exclusive of other mechanisms, and one might expect the auditory system to use several or all if they are effective. The next section reviews evidence for harmonic enhancement.

Target Harmonicity is Less Important

The idea that harmonicity ensures that a sound does not “fall apart into a sea of individual harmonics” is seductive (Popham et al., 2018), but studies that tried to demonstrate an advantage of target harmonicity for segregation have met with mixed results. As noted earlier, in double-vowel experiments the benefit of a ΔF0 is greatest for weak targets, and measurable for TMR as low as −25 dB (McKeown, 1992; de Cheveigné et al., 1997; Arehart et al., 2005). Estimating the F0 of a target that weak would be challenging. Replacing a voiced target by a whispered target does not impair intelligibility, regardless of whether the competitor is voiced or whispered (Lea, 1992), nor does randomly perturbing its harmonics to make it inharmonic (de Cheveigné et al., 1997). Modulating the F0 of target speech in the presence of reverberation disrupts its periodicity, but Culling et al. (1994) found no effect on SRTs (see also Deroche & Culling, 2011b).

For continuous speech, it has been hypothesized that target harmonicity (one aspect of “temporal fine structure,” TFS) could aid glimpsing within a spectro-temporally modulated noise, by tagging time–frequency regions that are voiced. However, a direct test of this hypothesis gave negative results (Shen & Pearson, 2019). There is, nonetheless, some evidence that continuity of target F0 helps to connect information over time, or to reduce informational masking if target and masker F0 ranges are non-overlapping (Darwin & Bethell-Fox, 1977).

A difficulty in testing the enhancement hypothesis is that manipulation of the target might affect its intelligibility independently of any segregation effect. Whispered speech is reportedly less intelligible than voiced speech (Ruggles et al., 2014), and reverberation, which disrupts harmonicity of an intonated target, also degrades intelligibility (Deroche & Culling, 2011b). Manipulating F0 (monotonizing, transposing, or inverting the F0 track) may also affect intrinsic intelligibility (Binns & Culling, 2007; Deroche et al., 2014a; Guest & Oxenham, 2019). Such effects might conceivably offset the benefits of harmonic enhancement, making them unmeasurable, so the best we can say is that we lack strong evidence in favor of harmonic enhancement.

An Intriguing Exception: Target Pitch

In contrast to results just reviewed, a target within a noise background is easier to detect if it is harmonic than inharmonic (McPherson et al., 2020). This inconsistency is resolved if we reflect that a harmonic target is likely detected in noise on the basis of its pitch (Scheffers, 1984; Hafter & Saberi, 2001; Gockel et al., 2006), which is probably more salient if the sound is harmonic. If frequency discrimination in noise relies on a pitch percept, it too should benefit from target harmonicity, as found by McPherson et al. (2020). Thus, we cannot with confidence attribute such benefits to enhanced segregation as opposed to an enhanced pitch percept.

It is also intriguing that the pitch of a target is easier to discriminate if mixed with a noise background rather than a harmonic background (Micheyl et al., 2006), opposite to what we expect of harmonic cancellation (indeed, in that study the same sounds were easier to detect within a harmonic background than a noise background). It would seem that background harmonicity interferes with target pitch, possibly in a way similar to the phenomenon of pitch discrimination interference (PDI) (Gockel et al., 2009; Micheyl et al., 2010). That interference is not absolute: the pitch of a mistuned partial may be heard within a harmonic background (Hartmann et al., 1990; Hartmann & Doty, 1996), and individual tones may be heard within a chord (Graves & Oxenham, 2019), consistent with skills found in competent musicians.

Is the Benefit Explained by Spectral Glimpsing?

Several results seem consistent with this hypothesis. The benefit of ΔF0 to vowel identification is mainly limited to the region of resolved partials (Culling & Darwin, 1993), and it improves with a higher background F0 at which partials are more widely spaced (Deroche et al., 2013, 2014a). Guest and Oxenham (2019) found that removing the even harmonics of a masker reduced masking of a target placed one octave above, also consistent with glimpsing within the large gaps between background partials of odd rank.

However, Deroche et al. (2013, 2014a, 2014b) argued that the larger gaps that arise when a masker is made inharmonic should reduce masking, contrary to their results. A possible explanation is that cancellation and glimpsing are both involved (Deroche et al., 2014b), consistent with Hybrid Models 2 or 3.

Is the Benefit Explained by Waveform Interactions?

As pointed out earlier, waveform interaction comes in multiple forms, and it is not always clear which version of the hypothesis is implied when it is invoked. One difficulty, common to many versions, is that the non-monotonic dependency of beat amplitude on component amplitudes implies that the magnitude (and spectral locus) of beat-dependent cues should show non-monotonic variations with level, whereas identification usually varies monotonically with TMR. Another challenge is that F0-based segregation seems to benefit mostly partials of low rank, for which, thanks to resolvability, the distribution over channels of high-amplitude beats is likely sparse (Deroche et al., 2014).

Phase effects attributable to PPA were found at 50 Hz, but not at 100 Hz or higher (Summerfield & Assmann, 1991; de Cheveigné et al., 1997; Deroche et al., 2013, 2014; Green & Rosen, 2013, but see Summers & Leek, 1998). Furthermore, reverberation should scramble the phase relations required by PPA, whereas it does not affect segregation unless F0 is modulated (Culling et al., 1994, 2003; Deroche & Culling, 2011b).

Culling and Darwin (1994) attributed effects of small ΔF0 to the ability to shop for favorable spectral patterns among those offered by slow beats. Random starting phase should reduce this benefit due to the haphazard temporal alignment of beat patterns, but de Cheveigné et al. (1997) found that the ΔF0 benefit did not depend on the phase pattern (random vs sine) of either target or background. The slow-beat hypothesis was further tested by de Cheveigné (1999c), again with limited support. The reader should refer to those two papers for a detailed discussion of several forms of the waveform interactions hypothesis. Given the diversity, it is hard to rule out that some form of waveform interaction contributes to segregation. Indeed, harmonic cancellation itself could be construed as a mechanism to exploit a particular form of waveform interaction specific to harmonically-related partials.

The Special Case of Maskers With Frequency-Shifted or Odd-Order Harmonics

In experiments that require detecting (or matching the pitch of) a mistuned partial of rank n within a harmonic complex of fundamental F0, the subject likely attends to channels with a center frequency close to nF0. The task might then be hampered by the presence, within those channels, of neighboring harmonics, in particular harmonics of rank n−1 and n+1. A cancellation filter tuned to F0 would suppress those unwanted harmonics, but it would also suppress the target unless it is mistuned. We would thus expect performance to improve with mistuning, as indeed is observed (Moore et al., 1986; Hartmann et al., 1990).

However, Roberts and Brunstrom (1998) found a similar result when the background series had been made inharmonic by shifting all partials by the same amount Δf, in which case partials are regularly spaced but harmonicity is disrupted. This suggests that spectral regularity, rather than harmonicity, might be the driving factor, which would put in doubt the harmonic cancellation account. However, that proposal hinges on the existence of a mechanism to detect spectral regularity: Roberts and Brunstrom (2001) doubted the existence of a dictionary of shifted-harmonic templates.

An alternative is that harmonic cancellation is applied locally within peripheral channels, for example based on Hybrid Model 4 (analogous to what has been proposed for the binaural EC model, Culling & Summerfield, 1994; Akeroyd, 2004; Breebaart et al., 2001). Specifically: the shifted partials (n−1)F0 + Δf and (n+1)F0 + Δf can be approximated with harmonics of rank n−1 and n+1 of a harmonic series of fundamental F0 + Δf/n. A cancellation filter tuned to that series would approximately cancel the closest offending background partials (more distant ones are attenuated by cochlear filtering). The nth zero of that filter falls at nF0 + Δf, that is, it fits the “spectral regularity” template invoked by Roberts and Brunstrom (1998), which would explain why they found that “mistuning” a partial from that position makes it easier to detect or match. An array of such CF-dependent cancellation filters, each tuned to an “equivalent F0” equal to F0 + Δf/n, would attenuate a shifted-harmonic complex across all channels, allowing “mistuning” relative to that spectrally regular (but inharmonic) pattern to be detected.
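The local approximation described above can be checked with a short derivation. This is a sketch under stated assumptions: the cancellation filter is taken to have the form y(t) = x(t) − x(t − T′), and the symbols n (rank of the partial nearest the channel's CF), Δf (the common frequency shift) and F0′ (the "equivalent" fundamental, with T′ = 1/F0′) are notation chosen here for illustration.

```latex
% Magnitude response of the assumed cancellation filter y(t) = x(t) - x(t - T'),
% with T' = 1/F_0'. Its zeros fall at integer multiples of F_0'.
|H(f)| = \left| 1 - e^{-j 2\pi f T'} \right| = 2\left| \sin\!\left( \pi f / F_0' \right) \right|,
\qquad |H(k F_0')| = 0, \quad k = 0, 1, 2, \dots

% Choosing the equivalent fundamental so that the n-th zero fits the shifted template:
F_0' = F_0 + \frac{\Delta f}{n}
\quad\Longrightarrow\quad
n F_0' = n F_0 + \Delta f

% The neighbouring zeros miss the shifted partials of rank n-1 and n+1 by \Delta f / n:
(n \pm 1) F_0' = \bigl[ (n \pm 1) F_0 + \Delta f \bigr] \pm \frac{\Delta f}{n}

% Residual gain at those two partials (exactly zero only if \Delta f = 0):
\bigl| H\bigl( (n \pm 1) F_0 + \Delta f \bigr) \bigr|
  = 2\left| \sin\!\left( \frac{\pi\, \Delta f}{n F_0'} \right) \right|
```

The residual gain shrinks as n grows or as Δf becomes small relative to F0, which is why the cancellation is only approximate and depends on the channel.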

This reasoning can be extended to the case of a background harmonic complex with only odd harmonics of F0, as it is equivalent to a series of harmonics of 2F0 each shifted by F0. This series can be canceled perfectly by a cancellation filter tuned to F0, or approximately, within each peripheral channel, by a cancellation filter tuned near 2F0 as just described. The reason for considering the latter is that it requires a shorter delay, which is relevant if there is a penalty on longer delays as has been suggested in the context of pitch perception (Moore, 2003; de Cheveigné & Pressnitzer, 2006; Bernstein & Oxenham, 2008). An array of cancellation filters, each tuned to its channel's equivalent F0, would spare anything that does not fit the series of odd harmonics, in particular an even-numbered harmonic. If so, it might explain why a single even-numbered harmonic embedded among odd-numbered harmonics is “heard out” more easily than any of the odd-numbered partials (Roberts & Bregman, 1991), and a similar explanation might underlie the benefit for identification of a speech target of removing even harmonics of the masker (Guest & Oxenham, 2019) mentioned earlier. This question is revisited in the Discussion.

In Summary

A body of evidence agrees with the hypothesis that harmonic cancellation assists auditory scene analysis, complementing the well-known benefits of peripheral frequency analysis. Dissenting results are sparse. The alternative hypothesis of harmonic enhancement, while attractive, garners little experimental support. Harmonic cancellation raises a number of issues that are discussed further in the Appendix. These include period estimation (necessary to apply cancellation), the relations between correlation and cancellation, analogies with the well-known EC model of Durlach, pattern matching with missing data, potential anatomical and physiological substrates, and the possible synergy between cochlear filtering and neural filtering.

Discussion

Periodicity (or harmonicity)—and its perceptual correlate, pitch—have long captured the attention and imagination of thinkers and scientists (Micheyl & Oxenham, 2010). A periodic sound within the right parameter range evokes a salient percept that is long-lasting in memory (McPherson et al., 2020), is robust to masking by noise (Hafter & Saberi, 2001; McPherson et al., 2020), and supports fine discrimination (e.g., Micheyl & Oxenham, 2010). However, the idea that a sound “falls apart” unless it is harmonic does not withstand a bit of reflection. A one-period tone pulse seems unitary without the aid of harmonicity, meaningless at that duration. A harmonic tone of longer duration may sound unitary, but so does noise which lacks harmonicity. An alternative proposition is that the percept evoked by a sound is unitary by default, and that “multiplicity” is inferred from the accumulation of evidence in favor of additional sources. A complex with a mistuned harmonic initially sounds like a single object but, given time and encouragement, a subject might detect something amiss and interpret it as an additional source. The process requires time (Moore et al., 1985; Hartmann et al., 1990; McKeown & Patterson, 1995), and is harder if the background is made inharmonic (Roberts & Brunstrom, 2003; Roberts & Holmes, 2006). Thus, one could argue, the harmonic nature of one part of the stimulus makes it easier to detect the presence of other parts. From this perspective, harmonicity of a source may contribute to a percept of multiplicity for mixtures in which it participates, rather than to its own unity.

That background harmonicity is crucial comes as a surprise, as it suggests that segregation must rely on an adventitious quality of the environment. Also surprising is that target harmonicity has only a minor role, as it goes against the attractive idea that communication sounds are “engineered” through evolution to be harmonic for resilience. It does make sense, however, when one realizes that cancellation works well (and enhancement poorly) at low TMR, which is when segregation is most needed. Infinite TMR improvement can be achieved, in principle, for very short stimuli for which enhancement offers more limited benefit. Cancellation meshes well with the concept that perception involves a quest for invariance to irrelevant dimensions.

Cancellation as a Model of Sound

The ability to cancel unwanted sounds is clearly useful for perception, but one might take a step further and argue that it is, in part, constitutive of perception. As a predictive model, a harmonic cancellation filter characterizes the part of input that it can cancel, just as an autoregressive model characterizes its spectral envelope, or a binaural EC model its spatial position. The residual, which by definition does not fit that model, informs us about “what else is out there.” It too can be characterized by recursively applying the same model or, alternatively, a compound model can be applied to the original sound to estimate parameters jointly (as in the multiple F0 model described in the Appendix, Period Estimation). This is related to concepts of predictive coding (Friston, 2018) and compression (Schmidhuber, 2009).

Like pattern classification (Duda et al., 2012), cancellation seeks invariance with respect to irrelevant dimensions of the input, specifically those that reflect the background. In contrast to classifiers that involve non-linear transforms, cancellation as described here is purely linear, which makes sense given that the acoustic mixing process itself is linear.

How Useful is it in Practice?

Auditory Scene Analysis benefits from multiple cues and regularities, of which harmonicity is but one. Harmonic cancellation is likely to be useful in situations where neither temporal separation, nor spectral separation, nor binaural disparities are effective to suppress interfering sources, and then only if the interference is harmonic. Thus, at best, it is one tool among many, beneficial in a restricted set of circumstances.

Measured in terms of TMR at threshold performance, the harmonicity benefit can reach 17 dB for identifying synthetic vowels, although most studies report smaller effects (Summerfield et al., 1992; Culling et al., 1994; de Cheveigné et al., 1997). This is of the same order of magnitude as reported for binaural unmasking (Colburn & Durlach, 1965; Jelfs et al., 2011). In terms of proportion of tokens recognized, the benefit appears maximal for TMR around −15 dB and vanishes below −30 dB or above +15 dB (McKeown, 1992; de Cheveigné et al., 1997; de Cheveigné, 1999b). Thanks in part to harmonicity-based segregation, a target (wide-band harmonic or noise) mixed with a harmonic background can be detected at TMRs down to −20 dB (Gockel et al., 2002; Micheyl et al., 2006), or −32 dB for a narrowband noise target (Deroche & Culling, 2011a). The benefit relative to a noise or inharmonic masker is on the order of 5–15 dB (Micheyl et al., 2006; Deroche & Culling, 2011a; Deroche et al., 2014). Overall, harmonic cancellation mainly benefits weak targets.

For vowel identification, the benefit is measurable for ΔF0s as small as 0.4% but not less (de Cheveigné, 1997b), and plateaus for ΔF0s beyond 6%. It is greater for longer stimuli (200 ms) than shorter stimuli (50 ms) (Assmann & Summerfield, 1994), but measurable for stimuli as short as four cycles of the lower F0 (23 ms at 175 Hz, McKeown & Patterson, 1995). It is reduced but not abolished if the masker’s F0 is modulated at rates as fast as 5 Hz (200 ms period) (Summerfield et al., 1992; de Cheveigné, 1997b; Deroche & Culling, 2011b), suggesting a remarkable ability to track F0 variations. However, this breaks down in the presence of reverberation, whereas a similar degradation is not observed if the masker F0 is steady-state (Culling et al., 1994; Sayles et al., 2015). Data from mistuned harmonic experiments suggest that the benefit might be limited to the spectral region below 2–3 kHz (Hartmann et al., 1990). Indeed, in concurrent vowel experiments the benefit appears to stem mainly from the region below 1 kHz that includes a vowel’s first formant (Culling & Darwin, 1993).

Real speech maskers differ from ideal harmonic maskers in that periodic portions are sparsely distributed over time (Hu & Wang, 2008), the F0 varies due to intonation, and periodicity is further degraded by articulation, irregularities in voice excitation, and added noise including reverberation. The benefit of a ΔF0 between a monotonized speech target and monotonized masker (two concurrent voices with the same F0, or harmonic complex with spectral envelope similar to speech) ranges from 3 to 8 dB (Deroche & Culling, 2013; Deroche et al., 2014a, 2017), which is also on the same order as binaural effects for similar stimuli (Deroche et al., 2017).

Learning?

Pattern-matching models of pitch perception (de Boer, 1976) postulate some form of harmonic template, or “sieve” (Schroeder, 1968; Duifhuis et al., 1982), and the same template is also required for a spectral domain model of segregation. This is non-trivial: the dictionary of templates must cover the full range of F0s, there must be some mechanism to align the templates accurately with the substrate of frequency analysis (e.g., cochlea), and each template itself is a complex affair involving multiple slots with accurate tuning. It has been proposed that templates are learned from exposure to harmonic sounds such as speech (Terhardt, 1974; Divenyi, 1979; Bowling & Purves, 2015; Saddler et al., 2020) possibly modulated by cultural preferences (McDermott & Hauser, 2004; McDermott et al., 2010, 2016; McPherson et al., 2020). The demonstration that templates can be learned from noise (Shamma & Klein, 2000; Shamma & Dutta, 2019) makes that argument more tenuous, and highlights the question of what, exactly, is being learned. Perhaps that algorithm discovers, rather than learns, the mathematical property that is exploited more directly by the cancellation filter.

The template-like properties of a time-domain cancellation filter (Equation (1), Figure 4) stem from mathematics, rather than learning. This is a big appeal: why jump through hoops when a simple solution is at hand? The organism may still need to discover that this regularity exists and is worth attending to, and the mechanism may need tuning, particularly if it involves combining frequency channels. This leaves ample room for learning, and possibly even cultural influences.

Is There Time?

In a classic chapter, de Boer (1976) likened auditory theory to a pendulum moving between “time” and “place” (spectrum). The pendulum is still swinging, and several recent papers have strengthened the case for spectral and place-rate accounts (e.g., Shera et al., 2002; Sumner et al., 2018; Verschooten et al., 2018; Whiteford et al., 2020; Su & Delgutte, 2020). Arguments for time remain (a) evidence for temporal mechanisms of binaural processing (see section Analogy with Binaural EC of the Appendix), (b) existence of specialized neural circuitry within the brain (see section Anatomy and Physiology of the Appendix), and (c) the simplicity, effectiveness and ease of implementation of a time-domain harmonic filter, in contrast to a harmonic template or sieve in the frequency domain.

Hybrid models offer the best of both worlds, but they may worry scholars who care about parsimony or falsifiability. As a case in point, if we admit that delay might arise by cross-channel interaction (de Cheveigné & Pressnitzer, 2006), it is hard to say anything for, or against, the hypothesis that processing involves neural delays. On the other hand, it would be unwise to let this blind us to the possibility that the auditory system does rely on a combination of spectral and time-domain analysis.

My personal inclination is that auditory perception involves time-domain processing within the brain, but the effectiveness of that processing is enhanced by the peripheral bandpass filter bank that helps overcome the effects of non-linear transduction and noise (based on principles related to Logan’s theorem). High-resolution mechanical filtering serves to “pre-calculate” a set of useful basis functions upon which the brain then operates in the time-domain (see sections Transforms in Filter Space and Non-Linearity of the Appendix). In this perspective, cochlear mechanics are the “last chance” to process acoustical signals with good resolution, linearity, and low noise, before handing transduced patterns over to more flexible but less accurate neural processing.

Carving Sound at its Joints

Auditory Scene Analysis is often described as a process of assembling elements across the spectrum (simultaneous grouping) or across time (sequential grouping) (Bregman, 1990), mirroring the common process of additive or concatenative synthesis by which stimuli are created in the lab. It glosses over the issue of whether these ingredients are recoverable from the mix, upon which this assumption depends. Once the coins are thrown into the melting pot, can we pull them out intact? According to classic Auditory Scene Analysis, we can: spectral analysis reveals “natural kinds” (partials), between which are found the “joints” at which sounds may be carved (Campbell et al., 2011). Indeed, according to this view, a grouping mechanism is required for any complex sound to form a coherent whole, otherwise it might shatter into as many percepts as partials (although few of us would claim to ever have heard more than a couple of such percepts within a sound). The wisdom of invoking sinusoidal partials as “natural kinds” on which Auditory Scene Analysis processes operate is rarely questioned.

In contrast, harmonic cancellation requires no analysis-into-parts or grouping. Whereas a bandpass filter is defined by what it selects (a frequency band), a cancellation filter is defined by what it removes (periodic power at period T). This is an example, like a shadow, of what Sorensen (2011) calls a “para-natural kind.” The process is effective both to characterize a periodic sound by its parameter T, and to get rid of that sound and search for more. It is an alternative way to “carve sound at its joints.”

Conclusion

The harmonic cancellation hypothesis states that the harmonic (or periodic) structure of interfering sounds can be exploited to suppress or ignore them. A large body of experimental results is consistent with this hypothesis, whereas alternative hypotheses for F0-based segregation are less well supported. In particular, harmonic enhancement, according to which harmonicity of a target makes it resilient to masking, receives little support, which is surprising because it runs counter to our intuition and is inconsistent with textbook explanations of scene analysis involving a harmonicity-based “grouping” operation. Harmonic cancellation fits well with an account of perception as seeking invariance with respect to irrelevant dimensions of the sensory pattern, and with the concept of “unconscious inference” promoted by Helmholtz. Harmonic cancellation can be implemented in the frequency domain (based on cochlear analysis) or time domain (based on the temporal processing of neural discharge patterns). Support for the latter comes from the success of the related EC model of binaural interactions, from the presence of neural structures apparently specialized for processing of temporal information, and from theoretical considerations that suggest that a time-domain implementation might be more straightforward and effective.

Appendix: Deeper Issues

The harmonic cancellation hypothesis is straightforward and well supported experimentally, but it raises a number of interesting questions that are worth considering.

Hybrid models

The hybrid harmonic cancellation models enumerated in the main text are described here in greater detail.

Hybrid Model 1: Cancellation-enhanced spectral patterns. Each channel of a filter bank is convolved with a cancellation filter tuned to T. This has the effect of sharpening spectral analysis so that the outcome is closer to the ideal (Figure 2 right). The pattern of power over channels is then handed over to a frequency-domain pattern-matching stage. This is illustrated in Figure 6(a). Two vowels, /a/ and /e/ with fundamentals 100 and 106 Hz, respectively (left), are mixed. Cues to /e/ are indistinct within the spectrum of the mix (right, black), but can be enhanced by applying to each channel a cancellation filter tuned to suppress /a/ (right, red). This model is reminiscent of periodicity tagging of tonotopic patterns (Keilson et al., 1997), or of the place-time model of Assmann and Summerfield (1990) in which a spectral profile for the target vowel was taken by sampling the ACF at the target’s period. If the spectral profile were derived from a limited window of cancellation-filtered signal, placing that window within the background-invariant part (red in Figure 4(b), right) would make the profile invariant with respect to backgrounds of period T. The pattern would still be distorted by the cancellation filtering, and spectral pattern-matching would need to take this into account.

Hybrid Model 2: Channel rejection on the basis of periodicity. Filter bank channels are divided into two groups based on TMR (estimated based on residual power at the output of a cancellation filter tuned to T). The first group consists of channels dominated by the background; these are rejected. The remaining channels are handed over to the pattern-matching stage to be matched based on their temporal pattern. This principle was employed in the concurrent vowel identification model of Meddis and Hewitt (1992), itself inspired from earlier ideas for binaural or periodicity-based segregation (Lyon, 1983, 1988; Weintraub, 1985). Spectral resolution must be sufficient so that enough channels are spared to represent the target.

Hybrid Model 3: Cancellation filtering of selected channels. Filter bank channels are divided into three groups based on TMR. Channels with large TMR are left untouched, channels with small TMR are discarded, and intermediate channels are processed by the cancellation filter. Keeping the first group intact reduces target distortion, and discarding the second group avoids contamination from noise if the cancellation filter is imperfect (as it might be due to non-linearity or noise). Cancellation filtering is reserved for channels with intermediate TMR, for which it can be effective. This model differs from Hybrid Model 2 by the presence of this third group. A similar suggestion was made by Guest and Oxenham (2019).

Hybrid Model 3 is illustrated in Figure 6(b). The black line shows the TMR per channel at the output of a filter bank in response to the mix /a/+/e/ with overall TMR = 0 dB. Channels for which TMR exceeds some threshold (+12 dB in this example) are left intact (green), channels for which TMR is below a second threshold (−12 dB in this example) are discarded (black). Channels with intermediate TMR are processed with a cancellation filter (red).
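The three-way channel split of Hybrid Model 3 can be sketched in a few lines. This is a minimal illustration, assuming the per-channel TMR is already available in dB (how the auditory system might estimate it is left open); the function name `sort_channels`, the labels, and the example values are hypothetical, and only the ±12 dB thresholds come from the example above.

```python
def sort_channels(tmr_db, upper_db=12.0, lower_db=-12.0):
    """Assign each channel to 'keep', 'cancel', or 'discard' from its TMR in dB."""
    labels = []
    for tmr in tmr_db:
        if tmr > upper_db:
            labels.append("keep")      # target-dominated: leave intact
        elif tmr < lower_db:
            labels.append("discard")   # background-dominated: reject
        else:
            labels.append("cancel")    # intermediate: apply the cancellation filter
    return labels


# Hypothetical per-channel TMR values in dB (not read from Figure 6).
example_tmr = [18.0, 5.0, -3.0, -20.0]
labels = sort_channels(example_tmr)
```

Setting the lower threshold to minus infinity leaves only the intact and filtered groups, whereas Hybrid Model 2 corresponds to having no filtered group at all.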

Hybrid Model 4: Channel-specific cancellation filter. In contrast to previous models, for which the parameter T is the same for all channels, here it is allowed to vary across channels. This is analogous to the channel-dependent versions of the EC model of binaural unmasking (Culling & Summerfield, 1994; Akeroyd, 2004; Breebaart et al., 2001). This hypothesis may be useful to explain results found with inharmonic stimuli (e.g., Roberts & Brunstrom, 1998) as discussed in the main text.

Hybrid Model 5: Synthetic delays. The cancellation filter of Equation (1) requires a delay equal to the background period (e.g., 20 ms for a 50 Hz fundamental). The existence of delays of this size in the auditory system has been questioned (e.g., Laudanski et al., 2014), and to address this issue it has been suggested that long delays might arise from cross-channel interaction (de Cheveigné & Pressnitzer, 2006). According to this model, the filter bank serves mainly that purpose: to help synthesize the delay required by Equation (1).

Hybrid Model 6: Logan’s theorem. Rather than a specific model, this is a processing principle that addresses the issue of the non-linear transduction that follows cochlear filtering. Due to half-wave rectification, each transduced signal is “blind” to one-half of every cycle, and thus one might worry that some information was lost. Logan’s theorem states instead that a narrowband signal can be reconstructed perfectly from its zero crossings, and hence also from its half-wave rectified version (Logan, 1977; Shamma & Lorenzi, 2013). To the extent that it is applicable here, the benefit of cochlear filtering would be to linearize transduction, so that neural signal processing has, in effect, full access to the acoustic waveform (see the section “Non-Linearity” below).

Figure 6.

Two hybrid models of harmonic cancellation. (a) Hybrid Model 1. Left: power as a function of CF for synthetic vowels /a/, F0 = 100 Hz (blue) and /e/, F0 = 106 Hz (red). Short lines above the plot indicate the first two formant frequencies of each vowel. Right: power as a function of CF for the mix before (black) and after (red) applying a cancellation filter tuned to suppress the period of /a/. (b) Hybrid Model 3. Black: per-channel TMR of vowel /e/ as a function of CF for a mixture of /a/+/e/ at overall TMR = 0 dB. Channels are divided into three groups: TMR > 12 dB (green, to be left intact), TMR < −12 dB (black, to be discarded), and −12 dB ≤ TMR ≤ 12 dB (red, to be filtered by a cancellation filter).

Period Estimation

Harmonic cancellation requires an estimate of the interferer period $T$. Harmonic cancellation itself can be used for that purpose: an array of cancellation filters, each tuned to a different delay (lag) covering the range of expected periods, shows a minimum in output power at a lag equal to the period. This is equivalent to searching for a peak in the ACF (Licklider, 1951; Meddis & Hewitt, 1991; de Cheveigné, 1998). The relation between cancellation and correlation is detailed in the next section.
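As an illustration, the array of cancellation filters can be sketched in a few lines. This is a minimal sketch, not a model of neural processing: it assumes a sampled signal, integer lags, and a filter of the form y[t] = x[t] − x[t − lag]; the function names and the test signal are hypothetical.

```python
import numpy as np

def cancellation_power(x, max_lag):
    """Mean output power of y[t] = x[t] - x[t - lag] for lag = 1..max_lag."""
    n = len(x) - max_lag              # same analysis window for every lag
    power = np.empty(max_lag)
    for lag in range(1, max_lag + 1):
        y = x[max_lag:] - x[max_lag - lag:max_lag - lag + n]
        power[lag - 1] = np.mean(y ** 2)
    return power

def estimate_period(x, max_lag):
    """Lag (in samples) at which the cancellation output power is smallest."""
    return int(np.argmin(cancellation_power(x, max_lag))) + 1

# Hypothetical test signal: five harmonics with a period of 80 samples.
period = 80
t = np.arange(2000)
x = sum(np.cos(2 * np.pi * k * t / period + k) for k in range(1, 6))
estimated = estimate_period(x, max_lag=120)
print(estimated)
```

The lag range is kept below twice the period here because output power is also minimal at every multiple of the period; a practical estimator would need a rule to choose among those candidates.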

From this perspective, cancellation is both an analysis tool (it cancels part of a signal to reveal the remainder) and an estimation tool (it estimates the period of the part it cancels). Applied recursively to a mixture of two sounds, it can reveal two periods: we first estimate the period of the dominant sound and cancel it, and then recurse on the remainder. These steps can be performed in parallel by searching the two-dimensional parameter space $(T, U)$ of a cascade of cancellation filters defined as $h_T(t)=\delta(t)-\delta(t-T)$ and $h_U(t)=\delta(t)-\delta(t-U)$ for a minimum in output power. This output is zero when $(T, U)=(kT_1, k'T_2)$ for integers $k$, $k'$, where $T_1$ and $T_2$ are the periods of the two sounds (de Cheveigné, 1993; de Cheveigné & Kawahara, 1999). Interestingly, a neural version of this model designed to estimate the pitch of a mistuned partial (de Cheveigné, 1999a) accurately accounted for the subtle shifts observed by Hartmann and Doty (1990) and Hartmann et al. (1996); see also Holmes and Roberts (2012).
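The parallel search over two lags can be sketched as follows. The exhaustive grid search, the restriction to pairs with the first lag smaller than the second (to remove the symmetric solution), and all names are assumptions made for illustration; the cited studies may proceed differently.

```python
import numpy as np

def cancel(x, lag):
    """Cancellation filter y[t] = x[t] - x[t - lag] (first `lag` samples dropped)."""
    return x[lag:] - x[:-lag]

def estimate_two_periods(x, min_lag, max_lag):
    """Search lag pairs (T, U), T < U, for minimum power after the filter cascade."""
    best_pair, best_power = None, np.inf
    for T in range(min_lag, max_lag):
        y = cancel(x, T)
        for U in range(T + 1, max_lag + 1):
            p = np.mean(cancel(y, U) ** 2)
            if p < best_power:
                best_pair, best_power = (T, U), p
    return best_pair

def harmonic_complex(period, n_samples, n_harmonics=4):
    """Hypothetical test sound: sum of harmonics of 1/period with arbitrary phases."""
    t = np.arange(n_samples)
    return sum(np.cos(2 * np.pi * k * t / period + k)
               for k in range(1, n_harmonics + 1))

# Mixture of a dominant sound (period 50 samples) and a weaker one (period 64).
mix = 2.0 * harmonic_complex(50, 1000) + harmonic_complex(64, 1000)
pair = estimate_two_periods(mix, min_lag=20, max_lag=80)
print(pair)
```

Because the two filters are linear and commute, the order of the cascade does not matter, which is why only pairs with T < U need to be visited.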

Associated with the period is an estimate of the degree to which the sound is, in fact, periodic. A straightforward measure is the output power of a cancellation filter tuned to the period $T$, normalized by power at the input (or by output averaged over other lags, e.g., 1,…, $T$). A value of zero indicates that the sound is perfectly periodic, and a small value indicates that it is “approximately periodic.” This same measure can be used as a criterion to detect a target in the presence of a harmonic background.

The threshold beyond which a sound should be declared “aperiodic” depends on the application, and more specifically on the distributions of “periodic” and “aperiodic” sounds as defined by the application’s needs. It is worth noting that residual aperiodic power at the output of a narrowband filter (e.g., filter bank channel) takes on relatively low values even if the stimulus is aperiodic. The threshold needs adjusting accordingly.
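The periodicity measure and its use as a detection cue can be illustrated numerically. In this sketch the normalization (output power divided by the summed power of the direct and delayed segments) is one possible choice among those mentioned above, and the signals, the function name `aperiodicity`, and the target level are hypothetical.

```python
import numpy as np

def aperiodicity(x, period):
    """Cancellation-filter output power divided by the summed input power."""
    direct, delayed = x[period:], x[:-period]
    return np.sum((direct - delayed) ** 2) / (np.sum(direct ** 2) + np.sum(delayed ** 2))

t = np.arange(4000)
period = 80
# Harmonic background, weak target unrelated to the background period, and noise.
background = sum(np.cos(2 * np.pi * k * t / period + k) for k in range(1, 6))
target = 0.1 * np.cos(2 * np.pi * t / 33.0)
noise = np.random.default_rng(0).standard_normal(4000)

a_background = aperiodicity(background, period)        # close to 0: periodic
a_mixture = aperiodicity(background + target, period)  # raised by the target
a_noise = aperiodicity(noise, period)                  # close to 1: aperiodic
print(a_background, a_mixture, a_noise)
```

With this normalization the measure is near 0 for the background alone and near 1 for white noise, so a threshold between those values, set according to the application, separates the two cases.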

Correlation and Cancellation

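We can define the running autocorrelation function (ACF) at time $t$ as

$$r_t(\tau) = \int_{t-W}^{t} x(\theta)\,x(\theta-\tau)\,d\theta \qquad (3)$$

(dropping the scaling factor 1/W for simplicity), where $W$ is the duration of a sliding integration window that serves to smooth the time course of $r_t(\tau)$. Power at time $t$ can then be defined as $r_t(0)$. Likewise, we can define a squared difference function (SDF) as power at time $t$ of the cancellation filter output

$$d_t(\tau) = \int_{t-W}^{t} \left[x(\theta) - x(\theta-\tau)\right]^2 d\theta \qquad (4)$$

ACF and SDF are then related by

$$d_t(\tau) = r_t(0) + r_{t-\tau}(0) - 2\,r_t(\tau) \qquad (5)$$

A peak in correlation, cue to the period, maps to a trough in the difference function. It is convenient to normalize ACF and SDF

$$r'_t(\tau) = \frac{2\,r_t(\tau)}{r_t(0) + r_{t-\tau}(0)} \qquad (6)$$

$$d'_t(\tau) = \frac{d_t(\tau)}{r_t(0) + r_{t-\tau}(0)} \qquad (7)$$

in which case the normalized functions are related more simply by

$$d'_t(\tau) = 1 - r'_t(\tau) \qquad (8)$$

For a periodic sound with period $T$, $r'_t(T)=1$ and $d'_t(T)=0$.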

Equation (5) is useful to derive the ACF from the SDF or vice-versa. It can also be extended to more terms, for example to implement a cascade of cancellation filters in terms of correlation. This allows different modeling strands to be unified, and justifies some flexibility when speculating about hypothetical neural implementations (see below).
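The relation between ACF and SDF can be checked numerically. The sketch below assumes discrete-time definitions (sums over the W samples ending at time t, without the 1/W factor) and assumes that both functions are normalized by the summed power of the direct and delayed windows; function and variable names are hypothetical.

```python
import numpy as np

def acf(x, t, tau, W):
    """r_t(tau): sum of x[j] * x[j - tau] over the W samples ending at t."""
    j = np.arange(t - W + 1, t + 1)
    return float(np.sum(x[j] * x[j - tau]))

def sdf(x, t, tau, W):
    """d_t(tau): cancellation filter output power over the same window."""
    j = np.arange(t - W + 1, t + 1)
    return float(np.sum((x[j] - x[j - tau]) ** 2))

x = np.random.default_rng(1).standard_normal(1000)
t, tau, W = 500, 37, 200

d = sdf(x, t, tau, W)
r = acf(x, t, tau, W)
power_sum = acf(x, t, 0, W) + acf(x, t - tau, 0, W)  # direct + delayed window power

# Unnormalized relation: d = (sum of the two powers) - 2 r
relation_5_holds = np.isclose(d, power_sum - 2 * r)

# Normalized relation: d' = 1 - r'
d_norm = d / power_sum
r_norm = 2 * r / power_sum
relation_8_holds = np.isclose(d_norm, 1 - r_norm)
print(relation_5_holds, relation_8_holds)
```

Note that the second power term is taken over the delayed window (ending at t − tau); using the power of the direct window twice would only be approximately correct for non-stationary signals.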

Analogy with Binaural EC

Durlach’s EC model has been successful in accounting for binaural unmasking (Durlach, 1963; Culling & Summerfield, 1994; Culling, 2007) and binaural pitch phenomena (Culling, Summerfield, & Marshall, 1998), and in predictive models of speech intelligibility (Beutelmann & Brand, 2006; Lavandier et al., 2012; Cosentino et al., 2014; Schoenmaker et al., 2016). Binaural interaction has also been couched in terms of inter-aural correlation rather than cancellation (Jeffress, 1948) but, as pointed out by Green (1992), an EC model can be implemented on the basis of inter-aural correlation, and vice versa, as the two are related: $(x_L - x_R)^2 = x_L^2 + x_R^2 - 2\,x_L x_R$, where $x_L$ and $x_R$ are sounds at left and right ears, respectively. A cancellation residue in one model maps to decorrelation in the other.

An interesting suggestion is that EC might operate independently within frequency channels (Culling & Summerfield, 1994; Akeroyd, 2004; Breebaart et al., 2001), rather than with parameters common to all channels as in the original EC model (Durlach, 1963). It has been further suggested that EC parameters can be estimated and applied within short-time windows (Wan et al., 2014; Hauth & Brand, 2018), which paves the way for a spectro-temporal form of the EC model that supports “glimpsing” (Beutelmann et al., 2010).

A monaural version of the EC model has been invoked to explain comodulation masking release (CMR) (Piechowiak et al., 2007).

Anatomy and Physiology

Time-domain and hybrid models entail time-domain signal processing within the brain. Anatomical and physiological specializations to support such processing include transduction and coding of acoustic temporal structure in the auditory nerve (up to 4–5 kHz or possibly higher, Heinz et al., 2001; Hartmann et al., 2019; Carcagno et al., 2019; Verschooten et al., 2019), specialized synapses in the cochlear nucleus and subsequent relays, and fast excitatory and inhibitory interaction in the medial and lateral superior olives (MSO and LSO) (Grothe, 2000; Zheng & Escabí, 2013; Keine et al., 2016; Beiderbeck et al., 2018; Stasiak et al., 2018) and other nuclei (Albrecht et al., 2014; Caspari et al., 2015; Felix et al., 2017). Some of these circuits are interpreted as serving binaural interaction, but presumably could be borrowed for other needs (see Joris & van der Heijden, 2019; Kandler et al., 2020, for recent reviews).

The time-domain cancellation filter of Figure 4(c, left), Equation (1), can be approximated by the “neural cancellation filter” of Figure 4(c, right). Spikes arriving via the direct pathway are suppressed by the coincident arrival of spikes delayed by $T$. Applied to data recorded from the auditory nerve in response to a mixture of two vowels with different $F_0$s (Palmer, 1990), that simple circuit was effective in estimating both their periods and suppressing correlates of one or the other vowel (de Cheveigné, 1993, 1997a; Guest & Oxenham, 2019). Such a mechanism would require temporally accurate neural representations (excitatory and inhibitory), delays, and an inhibitory gating or “anticoincidence” mechanism.
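The gating principle can be illustrated with idealized spike trains. This sketch is a deliberate simplification: spikes are deterministic, time is quantized into bins, and coincidence means falling in the same bin, whereas real auditory-nerve spike trains are stochastic and would require a coincidence window. All names and parameters are hypothetical.

```python
import numpy as np

def neural_cancellation(spikes, delay):
    """Pass a spike at bin t unless the delayed pathway carries a spike from t - delay."""
    out = spikes.copy()
    out[:delay] = False                  # no delayed input yet: discard the onset
    out[delay:] &= ~spikes[:-delay]      # inhibitory gating ("anticoincidence")
    return out

n = 1000
spikes_a = np.zeros(n, dtype=bool)
spikes_a[0::50] = True                   # source A fires every 50 bins
spikes_b = np.zeros(n, dtype=bool)
spikes_b[7::64] = True                   # source B fires every 64 bins
mixture = spikes_a | spikes_b

residue = neural_cancellation(mixture, delay=50)
surviving_times = np.flatnonzero(residue)
print(surviving_times)
```

With the delay set to the period of source A, every spike of A after the onset is vetoed by its delayed copy, and the residue contains only spikes of source B.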

Temporally accurate inhibitory transforms of sensory input are created in several nuclei, including cochlear nucleus (CN) (stellate-D cells), medial and lateral nuclei of trapezoid body (MNTB and LNTB), and ventral nucleus of the lateral lemniscus (VNLL) (Arnott et al., 2004; Caspari et al., 2015; Joris & Trussell, 2018). Fast interaction between direct and delayed neural patterns could in principle occur as early as the dendritic fields of cells in CN (Shore et al., 1991; Schofield, 1994; Davis & Voigt, 1997; Needham & Paolini, 2006; Xie & Manis, 2013), or as late as dendritic fields of the inferior colliculus (IC) (Caspari et al., 2015; Chen et al., 2019). A recent study reported evidence for an inhibitory “veto” mechanism at the axon initial segment of LSO principal neurons, with very narrow tuning to inter-aural time differences (Franken et al., 2021). Transmission failure at reputed “secure” synapses in CN and MNTB might conceivably reflect a similar veto mechanism (Mc Laughlin et al., 2008; Englitz et al., 2009; Stasiak et al., 2018).

The cancellation-correlation equivalence discussed earlier implies that fast interaction might also be excitatory-excitatory, the correlation pattern being later converted to a cancellation-like statistic by slower inhibitory interaction along the lines of Equations (5) and (8). Note, however, that finding a minimum of cancellation would then require subtraction of two large correlation values, which may be a problem if those values are coded by a representation (like rate of a Poisson-like process) for which the noise variance of the value increases with its mean. One might speculate that the cost of specialized fast inhibitory circuitry is recouped by the benefit of performing cancellation directly.

There is also evidence in favor of accurate rate-place spectral representations (Larsen et al., 2008; Fishman et al., 2013, 2014; Su & Delgutte, 2020) that might support a spectral version of the harmonic cancellation hypothesis, particularly as it has been argued that tuning might be narrower in humans than in most model animals (Shera et al., 2002; Verschooten et al., 2018; Sumner et al., 2018; Walker et al., 2019). Narrow tuning might also benefit a spectro-temporal mechanism, with the caveat that narrower filters are temporally more sluggish.

Sinex et al. (2002), Sinex and Li (2005), Sinex et al. (2007) report stronger responses in IC neurons for mistuned partials, consistent with the output of a cancellation filter, but they explain it by a different model based on cross-channel interaction of between-partial beat patterns, analogous to the waveform interaction models described earlier. Their model also accounts for the particular temporal structure of the response; whether that structure too could be explained by cancellation remains to be determined.

In summary, known neural circuitry might support both temporal and spectral mechanisms of harmonic cancellation. However, I am not aware of evidence as strong as that reported in favor of the EC model. A rate-frequency response such as Figure 4(a) might evade notice if attention is devoted to peaks of activity rather than dips. It could also elude discovery if the output pattern follows a latency code rather than a rate code (Chase & Young, 2007). The filter output in Figure 4(b) is evocative of ON–OFF patterns observed in the superior paraolivary nucleus (SPON) (Kandler et al., 2020), but this similarity could be fortuitous: those patterns have been attributed to gap detection or duration encoding (Kadner & Berrebi, 2008).

Smart Pattern Matching

As discussed in the main text (Harmonic Cancellation—Possible Mechanisms), each recovered target pattern is affected by two error terms: imperfect cancellation of the background, and distortion undergone by the target. In the time-domain model, the first term can be reduced to zero over part of the pattern (red segment in Figure 4(b), right). This assumes the ability to locate and isolate reliable intervals, which is commonly granted for auditory perception (Viemeister & Wakefield, 1991; Moore et al., 1988).

There remains the second error term due to filter-induced target distortion. This can be mitigated if it is known to the pattern matching stage, for example, by applying the same distortion to each pattern in the dictionary. Distortion consists of an attenuation factor applied to each target component depending on how close it falls to the harmonic series of the background, as quantified by the filter transfer function (Figure 4(a), right). This produces a “moiré effect” that can be quantified (and thus taken into account) if the $F_0$s of both background and target are known.

Target patterns can be further refined if the background is stationary over more than two periods, as illustrated in Figure 7. Specifically, if the stimulus is long enough to define $N$ distinct observation intervals temporally separated by $T$, these intervals can form $N(N-1)/2$ distinct pairs from which to infer the target. These observations are not all strictly independent, but the distortion (Figure 7, right) and noise patterns differ between pairs and this may assist inference. A perceptual mechanism operating in this fashion might seem implausibly complex. On the other hand, we cannot rule out that the trick is discovered by a learning process. The point made here is that the opportunity exists.
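The bookkeeping of interval pairs can be made concrete with a small enumeration. It assumes observation intervals indexed 0, 1, 2, … and spaced one background period apart; the function name is hypothetical.

```python
from collections import Counter
from itertools import combinations

def interval_pairs(n_intervals):
    """All pairs (i, j), i < j, of observation intervals spaced one period apart."""
    return list(combinations(range(n_intervals), 2))

pairs = interval_pairs(4)                        # four background cycles
span_counts = Counter(j - i for i, j in pairs)   # span in units of the period
print(len(pairs), dict(span_counts))             # 6 {1: 3, 2: 2, 3: 1}
```

Four cycles thus give six pairs: three with a span of one period, two with a span of two periods, and one with a span of three periods.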

Figure 7.

Left: waveform of the mix of target vowel /e/ (132 Hz) with background vowel /a/ (100 Hz) at TMR=−12 dB. Given four background cycles, intervals can be paired over spans of $T$, $2T$, and $3T$, with three, two and one repeats, respectively (blue arrows). Right: spectrum of target vowel /e/ (black line) and cancellation-filtered estimates obtained for spans $T$, $2T$, and $3T$ (symbols). Averaging over estimates (or better: taking their maximum) would yield a more accurate estimate of the target, and averaging over repeats might further attenuate uncorrelated noise (not shown).

Transforms in Filter Space

The idea that cochlear filtering works hand in hand with neural filtering is intriguing. What are the possibilities, what are the limits? As an example, the bandwidth of cochlear filters is usually seen as a hard limit on spectral resolution, but it appears that with neural filtering that limit can be overcome, as exploited by past schemes such as the “second filter” (Huggins & Licklider, 1951), stereausis (Shamma et al., 1989), lateral inhibitory network (LIN) (Shamma, 1985), phase opponency (Carney et al., 2002), synthetic delays (de Cheveigné & Pressnitzer, 2006), EC (Durlach, 1963), selectivity focusing in inferior colliculus (IC) (Chen et al., 2019), and here harmonic cancellation.

This section attempts to make sense of this situation by casting both filtering stages into a common framework. Any filter can be approximated as a finite impulse response (FIR) filter of order $N$, defined by the column vector $\mathbf{h}$ of $N$ impulse response coefficients. A signal $x(t)$ is filtered by convolving it with this impulse response. Alternatively, using matrix notation, if $\mathbf{X}$ is the matrix of time-lagged signals, with columns $x(t), x(t-1), \ldots, x(t-N+1)$, the filtered signal is obtained as the product $\mathbf{y}=\mathbf{X}\mathbf{h}$. A useful way to think of it is that the lags $[0 \ldots N-1]$ create a memory of the past signal, within which the filter can “shop” for useful information to characterize variations over time.

Extending to a $J$-channel filter bank, the filters can be defined by a matrix $\mathbf{H}$ of impulse responses of size $N \times J$, where each column of $\mathbf{H}$ represents the impulse response of one channel. The matrix of filtered signals is then obtained as the product $\mathbf{Y}=\mathbf{X}\mathbf{H}$. To relate this to the context of this paper, picture $x(t)$ as an acoustic signal, $\mathbf{H}$ as a bank of “cochlear” filters, and $\mathbf{Y}$ as a matrix of vibration waveforms at different points along the basilar membrane.
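The matrix formulation can be verified against ordinary convolution. In this sketch the lag matrix is zero-padded at the onset, the filter bank is random apart from one channel set to a cancellation filter, and the names (`lag_matrix`, `X`, `H`, `Y`, `N`, `J`) are chosen here for illustration rather than taken from a cochlear model.

```python
import numpy as np

def lag_matrix(x, n_lags):
    """Column k holds x delayed by k samples (zero-padded at the start)."""
    X = np.zeros((len(x), n_lags))
    for k in range(n_lags):
        X[k:, k] = x[:len(x) - k]
    return X

rng = np.random.default_rng(0)
x = rng.standard_normal(200)            # stand-in for an acoustic signal
N, J = 9, 3                             # filter order and number of channels
H = rng.standard_normal((N, J))         # one impulse response per column
H[:, 0] = 0.0
H[0, 0], H[8, 0] = 1.0, -1.0            # channel 0: cancellation filter, delay 8

X = lag_matrix(x, N)
Y = X @ H                               # shape (200, J): one output per channel

# Each column of Y equals the convolution of x with the matching impulse response.
matches = [np.allclose(Y[:, j], np.convolve(x, H[:, j])[:len(x)]) for j in range(J)]
print(matches)
```

Seen this way, a cancellation filter is simply one particular column of the filter matrix, which is what allows cochlear and neural filtering stages to be discussed in the same terms.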