Abstract

Background

Patient complaints are associated with adverse events and malpractice claims but underused in patient safety improvement.

Objective

To systematically evaluate the use of patient complaint data to identify safety concerns related to diagnosis as an initial step to using this information to facilitate learning and improvement.

Methods

We reviewed patient complaints submitted to Geisinger, a large healthcare organisation in the USA, from August to December 2017 (cohort 1) and January to June 2018 (cohort 2). We selected complaints more likely to be associated with diagnostic concerns in Geisinger’s existing complaint taxonomy. Investigators reviewed all complaint summaries and identified cases as ‘concerning’ for diagnostic error using the National Academy of Medicine’s definition of diagnostic error. For all ‘concerning’ cases, a clinician-reviewer evaluated the associated investigation report and the patient’s medical record to identify any missed opportunities in making a correct or timely diagnosis. In cohort 2, we selected a 10% sample of ‘concerning’ cases to test this smaller pragmatic sample as a proof of concept for future organisational monitoring.

Results

In cohort 1, we reviewed 1865 complaint summaries and identified 177 (9.5%) concerning reports. Review and analysis identified 39 diagnostic errors. Most were categorised as ‘clinical care issues’ (27, 69.2%), defined as concerns/questions related to the care that is provided by clinicians in any setting. In cohort 2, we reviewed 2423 patient complaint summaries and identified 310 (12.8%) concerning reports. The 10% sample (n=31 cases) contained five diagnostic errors. Qualitative analysis of cohort 1 cases identified concerns about return visits for persistent and/or worsening symptoms, interpersonal issues and diagnostic testing.

Conclusions

Analysis of patient complaint data and corresponding medical record review identifies patterns of failures in the diagnostic process reported by patients and families. Health systems could systematically analyse available data on patient complaints to monitor diagnostic safety concerns and identify opportunities for learning and improvement.

Keywords: diagnostic errors, healthcare quality improvement, health services research, patient safety

Introduction

Patient complaints are associated with adverse events and malpractice claims but underused in patient safety improvement efforts.1–4 Patients’ experiences offer rich information about factors that lead to adverse events5–9 but existing incident reporting mechanisms often fail to capture them. In the USA, while many healthcare organisations collect and address individual patient complaints, few organisations use systematic or rigorous processes to review and act on patient complaints for system-wide learning and improvement.

Literature on the type and frequency of patient complaints10 is emerging but gaps in knowledge of patient-reported diagnostic safety concerns remain. Diagnostic errors are frequent and harmful,11 yet they are under-reported,12 limiting our data on how and why they occur. While methods to identify diagnostic errors are still being refined, currently most measurement methods are imperfect, unreliable and/or labour intensive.13 Many of the methods to identify patient safety issues cannot specifically identify diagnostic errors.14 There are few methods to study diagnostic errors that include patient perspectives even though this was a major recommendation of the National Academy of Medicine (NAM) report ‘Improving Diagnosis in Health Care’.12 It is thus essential to develop more targeted measurement methods that are patient centred and have stronger safety signals.

Conversely, analytical methods to study patient complaints are getting more robust. For instance, the Healthcare Complaints Analysis Tool (HCAT)10 is reliable for coding and measuring the severity of complaints and helps identify unsafe and hard-to-monitor areas of care through systematic analysis of patient complaints.15 Systematic approaches are similarly needed to analyse patient complaints related to diagnostic errors. We thus evaluated the use of patient complaints to identify diagnosis-related safety concerns as an initial step to enable their use for learning and improvement.

Methods

Setting

Geisinger is one of the largest integrated health systems in the USA serving approximately 4.2 million residents; many live in rural Pennsylvania. Nearly a fifth of the population served is elderly (65+). Geisinger refunds copays and out-of-pocket expenses for certain care delivery concerns raised by patients.16

Patients report concerns to Geisinger’s Patient Experience department via telephone, email or in person. All patient liaisons are trained in communication, especially skills related to de-escalation and service recovery,17 and in enhancing patient experience. At the time of the study there were approximately 15 patient liaisons. Every complaint is discussed with the patient and/or family member prior to recording their summary statement. A patient who emails, writes a letter or leaves a message is contacted by a patient liaison to discuss the issue. The health system has an internal policy to respond to initial patient concerns within 24 hours and provide a written response within 7 days. Summary statements are categorised and entered into a commercial incident reporting system used to manage and track patient complaints.

Geisinger uses a locally developed and routinely updated taxonomy to categorise patient complaints. This is followed by a time-bound investigation conducted by the patient liaison to gather details from the patient’s perspective. Details include information about visits and interactions with clinicians, responses from involved clinicians and patient safety teams and actions to resolve the complaint. Investigation details are recorded into the incident reporting system.

Design and procedures

The research team reviewed two cohorts of patient complaints submitted to Geisinger. In both cohorts, we selected complaints based on an internally developed categorisation. From a total of 34 categories, the following categories were selected for inclusion based on increased likelihood of being associated with diagnostic safety concerns:

Accident/injury—all issues related to patient injuries.

Attitude/behaviour of clinicians/staff—all concerns/questions related to provider actions denoted as unprofessional or demonstrating poor customer service towards patient(s).

Clinical care issues—all concerns/questions related to the care that is provided by clinicians in any setting (inpatient/outpatient).

Delay in care—any concern/question where a patient experiences a perceived or actual delay in obtaining clinical care on an inpatient or outpatient basis.

Delay in test results—any concern/question where a patient experiences a perceived or actual delay in having a medical test performed or resulted on an inpatient or outpatient basis.

Delay in admission/discharge—any concern/question where a patient experiences a perceived or actual delay in the scheduled, anticipated or emergent admission to the hospital for care or a perceived or actual delay in the discharge process.

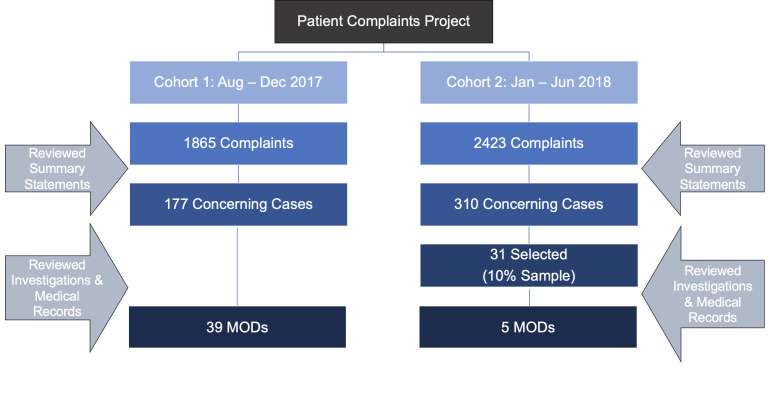

Cohort 1 involved review of all complaints that met the inclusion categorisation above and were submitted between August and December 2017. In cohort 2, we reviewed a more pragmatic sample, as proof of concept, assuming that most healthcare organisations will only choose to periodically review a random manageable sample to gain insights. Cohort 2 includes complaints submitted from January to June 2018 that met the inclusion categorisation. Figure 1 outlines the methodology for each cohort.

Figure 1. Patient complaint data flow chart. MODs, missed opportunities in diagnosis.

In both cohorts, two clinical reviewers (authors US and VV) independently reviewed all summary statements (one to two sentences only; cohort 1 n=1865; cohort 2 n=2423) and identified cases that were ‘concerning’ for a diagnostic error (figure 1) using NAM’s broad definition of diagnostic error (ie, ‘the failure to (a) establish an accurate and timely explanation of the patient’s health problem(s) or (b) communicate that explanation to the patient’12). Where the two reviewers did not agree, the first author (TDG) would review the summary statement and err on the side of including the case for further review. Cases were included as ‘concerning’ if summary statements included one or more of the following: (A) any language about a diagnosis (eg, misdiagnosis), (B) any mention of a potential patient safety issue (eg, delayed care), or (C) any clinician behaviours related to communication (eg, did not listen). Complaints related only to behavioural issues of nursing and/or staff or to clinician behaviour (eg, doctor/nurse was rude), and those unrelated to patient safety, were excluded.
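The dual-review screening rule described above can be sketched in a few lines of Python. This is an illustrative sketch only, not the study's actual tooling: the function name `screen_summary` and the `tie_breaker` parameter are hypothetical, and treating an undecided third review as an inclusion is an assumption made here to reflect the stated preference to err on the side of inclusion.

```python
from typing import Optional


def screen_summary(reviewer_1: bool, reviewer_2: bool,
                   tie_breaker: Optional[bool] = None) -> bool:
    """Return True if a summary statement is flagged as 'concerning'.

    reviewer_1 / reviewer_2: independent judgements of the two reviewers.
    tie_breaker: judgement of a third reviewer, consulted only when the
    first two disagree; None means the third reviewer is undecided.
    """
    if reviewer_1 == reviewer_2:
        # Agreement: the shared judgement stands.
        return reviewer_1
    # Disagreement: defer to the third reviewer, and include the case
    # when that reviewer is undecided (assumption: err towards inclusion).
    return True if tie_breaker is None else tie_breaker


print(screen_summary(True, False))  # disagreement, undecided third review
```

The design choice in this sketch is to favour sensitivity over specificity at the screening stage, since flagged cases go on to a fuller investigation and medical record review.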

In cohort 1, for all ‘concerning’ cases, the associated investigation reports were evaluated by a clinical reviewer (US) for a diagnostic error, defined as a missed opportunity in making a correct or timely diagnosis (MOD),18 and a timeline of events was created from the patient perspective. A second independent clinical reviewer (SK) concurrently reviewed the patient’s medical record for MODs and created a timeline from the medical record documentation. The reviewer used the Revised Safer Dx Instrument19 as a framework to identify diagnostic safety concerns and as a guide to create a timeline of events. For these MOD cases, data collected included patient demographics, number of in-person visits (mean number of visits from initial symptoms to communication of final diagnosis), type of provider and specialty, and final diagnosis. Finally, a multidisciplinary team discussed all MOD cases, as well as cases where the two reviewers did not agree, to confirm the presence or absence of MODs, irrespective of investigation outcomes. Cases for which consensus could not be reached were discussed with the senior author (HS) for adjudication (cohort 1 n=25, cohort 2 n=6). In cohort 2, the team followed the same methodology with a 10% random sample of ‘concerning’ cases (see figure 1).
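For organisations that want to reproduce the cohort 2 approach, a 10% random sample of ‘concerning’ cases could be drawn as in the minimal sketch below. The text does not describe how the sample was actually generated, so the function name `draw_review_sample`, the fixed seed and the integer case identifiers are all hypothetical; only the counts (310 concerning cases, 10% sample) come from the study.

```python
import random


def draw_review_sample(case_ids, fraction=0.10, seed=2018):
    """Draw a reproducible simple random sample without replacement.

    case_ids: identifiers of cases flagged as 'concerning'.
    fraction: share of cases to review in depth (10% in cohort 2).
    seed: fixed so that the same sample can be re-drawn for audit
          (an assumption; the study does not report a seed).
    """
    rng = random.Random(seed)
    sample_size = round(len(case_ids) * fraction)
    return sorted(rng.sample(list(case_ids), sample_size))


# Hypothetical identifiers standing in for the 310 concerning cases.
concerning_ids = list(range(1, 311))
sample = draw_review_sample(concerning_ids)
print(len(sample))  # 31
```

Fixing the seed is a convenience for auditing; a health system running periodic reviews might instead record the sampled identifiers alongside each review cycle.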

Qualitative analysis

We conducted a qualitative inductive content analysis of cohort 1 confirmed MODs to better understand complaint details from the patient perspective (eg, the written summary statements and detailed investigation notes). Two qualitative methodologists (US and TDG) familiarised themselves with each of the MOD cases while reviewing them exclusively from the perspective of the patient/family/caregiver. At this stage, they did not consider clinician or healthcare system responses to complaints and investigations, nor did they review the medical record. Each reviewer coded the data independently and met to discuss all emergent codes. Based on the discussion, they grouped experiences and identified salient themes. The analysis was presented to the research team for further discussion.

Results

In cohort 1, review of 1865 complaint summaries identified 177 (9.5%) potential diagnostic concerns. On full analysis of these 177 cases, including investigation and chart review, we identified 39 MODs (2.1%); patients were mostly female (n=27), white (n=39), with a mean age of 44 (SD=28.2, range: 9 months to 91 years). The clinical care concerns category was the most common (n=27), followed by delay in care (n=7), delay in test results (n=2), attitude/behaviour of provider (n=2) and discharged too soon (n=1). The most common diagnoses involved were cancer related (n=4), missed fracture (n=4) and Lyme disease (n=3). Patients attended a mean of 1.5 visits before being diagnosed correctly (range: 1–5). More than half of the MODs occurred in the emergency department (ED) and primary care (n=15 and n=11, respectively) (table 1). The research team’s total time investment to analyse cohort 1 was estimated to be approximately 339 hours.

Table 1. Visit characteristics associated with the complaint

| Characteristic | Cohort 1, n | Cohort 1, % | Cohort 2, n | Cohort 2, % |
| --- | --- | --- | --- | --- |
| Clinical location of visits* | | | | |
| Emergency department | 22 | 38.6 | 3 | 60.0 |
| Primary care/family medicine | 16 | 28.1 | 1 | 20.0 |
| Convenient care | 6 | 10.5 | – | – |
| Specialty† | 6 | 10.5 | 1 | 20.0 |
| Urgent care | 4 | 7.0 | – | – |
| Obstetrics-gynaecology | 3 | 5.3 | – | – |
| Clinician involved in care* | | | | |
| Physician | 40 | 70.2 | 4 | 80.0 |
| Nurse practitioner/physician assistant | 17 | 29.8 | 1 | 20.0 |
| Category of complaint | | | | |
| Clinical care (provider) | 27 | 69.2 | 2 | 40.0 |
| Delay in care | 7 | 17.9 | 1 | 20.0 |
| Delay in test results | 2 | 5.1 | 2 | 40.0 |
| Attitude/behaviour of provider | 2 | 5.1 | – | – |
| Discharged too soon | 1 | 2.6 | – | – |

*Total does not equal 39 as some patients presented to more than one clinician and/or in more than one setting before being correctly diagnosed.

†Surgery, cardiology, radiology, pulmonology, dermatology.

In cohort 2, a review of 2423 summary statements identified 310 (12.8%) potentially concerning reports. Detailed analyses on a random 10% sample (n=31) identified five MODs; mostly male (n=4) and white (n=4), with a mean age of 45 (SD=17.9, range: 21–65 years). Missed diagnoses included: cancer (n=2), dislocation (n=1), acute renal injury (n=1) and hyperglycaemia (n=1) occurring in the ED (n=3), primary care (n=1) and dermatology (n=1); most involved physicians (n=4).
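The screening yields reported for both cohorts can be checked with simple arithmetic, sketched below. The counts are taken from the text; the helper `pct` and the dictionary layout are hypothetical, and the yield among concerning cases and the hours per MOD are derived here for illustration rather than reported by the authors.

```python
def pct(numerator, denominator):
    """Percentage rounded to one decimal place."""
    return round(100 * numerator / denominator, 1)


# Counts as reported in the Results section.
cohort_1 = {"summaries": 1865, "concerning": 177, "reviewed": 177, "mods": 39}
cohort_2 = {"summaries": 2423, "concerning": 310, "reviewed": 31, "mods": 5}

for name, c in (("cohort 1", cohort_1), ("cohort 2", cohort_2)):
    flagged = pct(c["concerning"], c["summaries"])  # share flagged as concerning
    yield_reviewed = pct(c["mods"], c["reviewed"])  # derived: MODs per reviewed case
    print(name, flagged, yield_reviewed)

# Reported for cohort 1 only: MODs as a share of all summaries.
mods_per_summary = pct(cohort_1["mods"], cohort_1["summaries"])

# Derived: approximate reviewer hours per confirmed MOD in cohort 1,
# using the estimated 339 hours of total analysis time.
hours_per_mod = round(339 / cohort_1["mods"], 1)
print(mods_per_summary, hours_per_mod)
```

Under these figures, roughly one in five fully reviewed cohort 1 cases was confirmed as an MOD, which gives a sense of the review burden per confirmed case; the cohort 2 yield rests on only 31 reviewed cases and should be read with that in mind.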

Qualitative analysis

Thirty-nine MOD patient complaints from cohort 1 were included in the final qualitative content analysis. On review of the summary statement and the investigation report text, three commonalities emerged across cases despite heterogeneity among diagnoses. These included: (1) reports of return visits for same or worsening symptoms (the most salient; n=24); (2) interpersonal issues; and (3) diagnostic testing issues. Return visits involved patient-reported situations such as initial treat-and-release ED visits for a symptom (eg, abdominal pain), followed by an ED return visit where a more certain diagnosis (eg, appendicitis) was made. From the patient/caregiver perspective, several reasons for such return visits emerged within this theme: first visit was not helpful, concerns that patient was not heard, limited or no testing performed and concerns with notification of test results. Of this subset, five complaints involved more than one return visit to resolve the patient’s concerns.

Eighteen of the cases included comments related to interpersonal problems (eg, ignoring patient/caregiver suggestions). Testing issues were also found across 12 of the cases and included patient/caregiver concerns regarding perceptions of overtesting and undertesting, not communicating or acting on abnormal test results and imaging misreads. Other concerns were related to perceptions of being discharged too soon (n=4) and challenges in obtaining urgent referral for symptoms (n=1).

Discussion

Patient complaints can be used systematically to identify diagnostic safety concerns, but harvesting this information requires a considerable investment of time in reviewing complaint information and medical records. In the main cohort, we identified 39 MODs, many of which were found within the ‘clinical care issues’ category of the taxonomy used by the organisation. Qualitative analysis of the main cohort’s diagnostic error cases found that the complaint information from patients/caregivers often highlighted return visits for persistent and/or worsening symptoms. Focusing on higher risk categories within the complaint data (ie, clinical care issues, delay in care, delay in test results) coupled with medical record reviews may improve safety signals from this data source.

Patient complaints to a healthcare system are often unprompted assessments of care reflective of what matters to patients and families. Patients, families and caregivers are in the best position to communicate about their diagnostic experience. However, patient complaint mechanisms are not necessarily set up for safety monitoring.20 While our study has identified a methodology to improve signal for patient-reported diagnostic safety concerns in the complaint data, a national policy on integrating these data for learning and practice improvement is lacking. A recent systematic review of the patient complaint literature identified multiple mechanisms to ensure patient-centred complaint data collection and quality improvements—which includes a reliable coding taxonomy.21 Structured complaint data provide an opportunity for health systems to move safety concerns to the appropriate department to be addressed and tracked, and allow them to be nimble in their management and response.21

Existing complaint taxonomies, such as HCAT, provide a validated method to categorise complaints by problem, severity and harm if used by healthcare systems, and HCAT has been used to identify patient safety issues.15 22 Our study builds on such efforts and specifically explores the way patients articulate and conceptualise diagnostic safety concerns when filing a complaint. While some complaints include clear expressions of missed or delayed diagnoses, other patients express safety concerns about diagnosis through their experience with return visits, communication and testing. Analysing these complaints using this lens can make the healthcare system sensitive to the nuances of diagnostic safety from the patient perspective—for instance, focusing on patient return visits with persistent or worsening symptoms could be fertile ground for additional exploration. This work also informs the development of standardised categorisation mechanisms that capture how patients express diagnostic safety concerns. This standardisation and the subsequent analysis of patients’ experiences of diagnostic errors may highlight areas for improvement in the diagnostic process that might otherwise go undetected and have potential to cause harm. Organisations that intend to pursue diagnostic excellence should focus on systematically identifying patient-reported diagnostic concerns and generate feedback for learning.23

Several factors may influence whether a patient will complain24 and not all diagnostic errors will be captured in patient complaints. Other measurement strategies have to be used concurrently to capture them.25 To date, methods to identify diagnostic errors are still being refined, and currently most measurement methods are imperfect, unreliable and/or labour intensive.13 However, quantifying and learning from the experiences of patients who file their complaints provides insight into how some patients conceptualise diagnostic concerns and is foundational for improvement. Nevertheless, we show why it is essential to develop more targeted methods with better signals that require less review burden.23 26

Our study shows how other health systems can evaluate available data on patient complaints for diagnostic safety concerns to identify opportunities for learning and improvement. To our knowledge, this is the largest assessment of patient complaints to evaluate diagnostic safety data and while the signal is not as high as seen in triggers of electronic medical records,26 27 it is significant. We also provide a proof of concept for future organisational monitoring. Moreover, information harvested from patient complaints complements data obtained from medical record reviews which do not necessarily include an assessment of the breadth of the patient experience. This study is a first step towards more systematic analysis of patient complaints by health systems to routinely identify information on diagnostic safety concerns that could inform sustainable improvements. Our research effort to identify signals of diagnostic safety is thus foundational to creating future learning health systems that will ultimately use multiple measurement methods, including the patient voice, to improve diagnostic safety.23 28

Our study has several limitations. Patient complaint summaries and investigations are not first-person accounts. They are created by a patient liaison and are therefore subject to any unconscious biases of or interpretations by the patient liaison. While patient liaisons are trained to receive patient complaints, the research team can only assume that the patient liaison has adequately captured the complaint from the patient’s perspective. Additionally, these patient complaints are not representative of the general US patient population, the Geisinger patient population or of all patients who experience diagnostic errors. We cannot account for differences between patients/families that do file a complaint and those that do not. Because our team included diverse clinical and patient-centred expertise and we used the broad NAM definition, we used consensus methods to determine whether an error occurred and did not calculate inter-rater reliability. Additionally, the depth of our qualitative analysis was limited because the original data were gathered for informing day-to-day clinical operations and not for research purposes. Finally, it is not possible to maintain complete blinding when reviewing cases retrospectively. However, despite these limitations and given the scant data on diagnostic concerns from patients and families, this study provides insight into leveraging an existing patient-centred data source for future diagnostic safety measurement.

In conclusion, analysis of patient complaint data identifies breakdowns in the diagnostic process reported by patients and families. This work is foundational to advance research and implementation efforts to better harvest diagnostic safety signals from patient complaint data. Health systems could systematically analyse available data on patient complaints to monitor diagnostic safety concerns and identify opportunities for learning and improvement.

Acknowledgments

Our team thanks Cara E Dusick, the patient liaison manager at Geisinger, for her help guiding the team on the patient grievances process and for setting up the infrastructure to identify data for this study. We also thank the Geisinger Committee to Improve Clinical Diagnosis for their support on this project.

Footnotes

Twitter: @TDGiardina, @HardeepSinghMD

Contributors: All authors contributed equally to the planning, conduct and reporting of the work described in the article.

Funding: This work was supported by the Gordon and Betty Moore Foundation. It was also supported in part by the Houston VA HSR&D Center for Innovations in Quality, Effectiveness and Safety (CIN 13-413). In addition, TDG is supported by an Agency for Healthcare Research and Quality Mentored Career Development Award (K01-HS025474); and HS is supported by the Veterans Affairs Health Services Research and Development Service (CRE17-127 and the Presidential Early Career Award for Scientists and Engineers USA 14-274), the VA National Center for Patient Safety, and the Agency for Healthcare Research and Quality (R01HS27363).

Competing interests: None declared.

Provenance and peer review: Not commissioned; externally peer reviewed.

Data availability statement

No data are available.

Ethics statements

Patient consent for publication

Not required.

Ethics approval

The study was approved by the Institutional Review Board of Baylor College of Medicine and Geisinger.

References

1. Harrison R, Walton M, Healy J, et al. Patient complaints about hospital services: applying a complaint taxonomy to analyse and respond to complaints. Int J Qual Health Care 2016;28:240–5. doi:10.1093/intqhc/mzw003
2. Hickson GB, Federspiel CF, et al. Patient complaints and malpractice risk. JAMA 2002;287:2951–7.
3. de Vos MS, Hamming JF, Chua-Hendriks JJC, et al. Connecting perspectives on quality and safety: patient-level linkage of incident, adverse event and complaint data. BMJ Qual Saf 2019;28:180–9. doi:10.1136/bmjqs-2017-007457
4. O'Hara JK, Reynolds C, Moore S, et al. What can patients tell us about the quality and safety of hospital care? Findings from a UK multicentre survey study. BMJ Qual Saf 2018;27:673–82. doi:10.1136/bmjqs-2017-006974
5. Iedema R, Allen S, Britton K, et al. What do patients and relatives know about problems and failures in care? BMJ Qual Saf 2012;21:198–205. doi:10.1136/bmjqs-2011-000100
6. Scott J, Heavey E, Waring J, et al. Implementing a survey for patients to provide safety experience feedback following a care transition: a feasibility study. BMC Health Serv Res 2019;19:613. doi:10.1186/s12913-019-4447-9
7. Giardina TD, Haskell H, Menon S, et al. Learning from patients' experiences related to diagnostic errors is essential for progress in patient safety. Health Aff 2018;37:1821–7. doi:10.1377/hlthaff.2018.0698
8. Zengin S, Al B, Yavuz E, et al. Analysis of complaints lodged by patients attending a university hospital: a 4-year analysis. J Forensic Leg Med 2014;22:121–4. doi:10.1016/j.jflm.2013.12.008
9. Donaldson LJ. The wisdom of patients and families: ignore it at our peril. BMJ Qual Saf 2015;24:603–4. doi:10.1136/bmjqs-2015-004573
10. Reader TW, Gillespie A, Roberts J. Patient complaints in healthcare systems: a systematic review and coding taxonomy. BMJ Qual Saf 2014;23:678–89. doi:10.1136/bmjqs-2013-002437
11. Singh H, Meyer AND, Thomas EJ. The frequency of diagnostic errors in outpatient care: estimations from three large observational studies involving US adult populations. BMJ Qual Saf 2014;23:727–31. doi:10.1136/bmjqs-2013-002627
12. National Academies of Sciences, Engineering, and Medicine. Improving Diagnosis in Health Care. National Academies Press, 2015.
13. Singh H, Bradford A, Goeschel C. Operational measurement of diagnostic safety: state of the science. Diagnosis 2021;8:51–65. doi:10.1515/dx-2020-0045
14. Graber ML, Trowbridge R, Myers JS, et al. The next organizational challenge: finding and addressing diagnostic error. Jt Comm J Qual Patient Saf 2014;40:102–10. doi:10.1016/S1553-7250(14)40013-8
15. Gillespie A, Reader TW. Patient-centered insights: using health care complaints to reveal hot spots and blind spots in quality and safety. Milbank Q 2018;96:530–67. doi:10.1111/1468-0009.12338
16. Burke GF. Geisinger’s Refund Promise: Where Things Stand After One Year. NEJM Catal 2020.
17. Service Recovery Programs. Available: http://www.ahrq.gov/cahps/quality-improvement/improvement-guide/6-strategies-for-improving/customer-service/strategy6p-service-recovery.html [Accessed 21 Sep 2020].
18. Singh H. Editorial: helping health care organizations to define diagnostic errors as missed opportunities in diagnosis. Jt Comm J Qual Patient Saf 2014;40:99–AP1. doi:10.1016/S1553-7250(14)40012-6
19. Singh H, Khanna A, Spitzmueller C, et al. Recommendations for using the revised Safer Dx instrument to help measure and improve diagnostic safety. Diagnosis 2019;6:315–23. doi:10.1515/dx-2019-0012
20. de Vos MS, Hamming JF, Marang-van de Mheen PJ. The problem with using patient complaints for improvement. BMJ Qual Saf 2018;27:758–62. doi:10.1136/bmjqs-2017-007463
21. van Dael J, Reader TW, Gillespie A, et al. Learning from complaints in healthcare: a realist review of academic literature, policy evidence and front-line insights. BMJ Qual Saf 2020;29:684–95. doi:10.1136/bmjqs-2019-009704
22. Gillespie A, Reader TW. The healthcare complaints analysis tool: development and reliability testing of a method for service monitoring and organisational learning. BMJ Qual Saf 2016;25:937–46. doi:10.1136/bmjqs-2015-004596
23. Singh H, Upadhyay DK, Torretti D. Developing health care organizations that pursue learning and exploration of diagnostic excellence: an action plan. Acad Med 2020;95:1172–8. doi:10.1097/ACM.0000000000003062
24. Howard M, Fleming ML, Parker E. Patients do not always complain when they are dissatisfied: implications for service quality and patient safety. J Patient Saf 2013;9:224–31. doi:10.1097/PTS.0b013e3182913837
25. Vincent C, Burnett S, Carthey J. Safety measurement and monitoring in healthcare: a framework to guide clinical teams and healthcare organisations in maintaining safety. BMJ Qual Saf 2014;23:670–7. doi:10.1136/bmjqs-2013-002757
26. Murphy DR, Meyer AN, Sittig DF, et al. Application of electronic trigger tools to identify targets for improving diagnostic safety. BMJ Qual Saf 2019;28:151–9. doi:10.1136/bmjqs-2018-008086
27. Singh H, Giardina TD, Forjuoh SN, et al. Electronic health record-based surveillance of diagnostic errors in primary care. BMJ Qual Saf 2012;21:93–100. doi:10.1136/bmjqs-2011-000304
28. Meyer AND, Upadhyay DK, Collins CA, et al. A program to provide clinicians with feedback on their diagnostic performance in a learning health system. Jt Comm J Qual Patient Saf 2021;47:120–6. doi:10.1016/j.jcjq.2020.08.014
