Abstract

Ambiguous stimuli are useful for assessing emotional bias. For example, surprised faces could convey a positive or negative meaning, and the degree to which an individual interprets these expressions as positive or negative represents their “valence bias.” Currently, the most well-validated ambiguous stimuli for assessing valence bias are nonverbal signals (faces and scenes), which overlooks the inherent ambiguity in verbal signals. This study identified 32 words with dual-valence ambiguity (i.e., relatively high intersubject variability in valence ratings and relatively slow response times) and length-matched clearly valenced words (16 positive, 16 negative). Preregistered analyses demonstrated that the words-based valence bias correlated with the bias for faces, rs(213) = .27, p < .001, and scenes, rs(204) = .46, p < .001. That is, the same people who interpret ambiguous faces and scenes as positive also interpret ambiguous words as positive. These findings provide a novel tool for measuring valence bias and show that the bias generalizes across verbal and nonverbal signals, yielding a more robust measure of this bias.

Keywords: emotion, ambiguity, language, nonverbal, valence bias

Decision making under uncertainty is ubiquitous in daily life (e.g., financial decision making is fraught with risk and ambiguity; Chen & Epstein, 2002; Ellsberg, 1961; see Platt & Huettel, 2008, for a review) and is particularly pervasive in social behavior (see FeldmanHall & Shenhav, 2019, for a review). For instance, uncertainty may arise when judging another’s trustworthiness (King-Casas et al., 2005), gauging their thoughts (Flagan et al., 2017), or gleaning emotion from social signals (Neta et al., 2009). Indeed, humans readily extract emotional meaning from social signals including facial expressions (Ekman et al., 1987), language (Lindquist, 2009), and situational context (Frijda, 1958; Neta et al., 2013). Notably, although some signals can be clearly categorized along the valence dimension (good or bad, approach or avoid; Baumeister et al., 2007; Krieglmeyer et al., 2010), others are less clear. Ambiguity arises when a particular social signal can represent both positive and negative outcomes. For example, a wink can signal an attempt at social affiliation (e.g., a show of support; positive) or an unwanted flirtation (negative), or it can simply mean that someone has something in their eye (neutral). Depending on the context in which this signal is encountered (e.g., a job interview, a first date), our ability to resolve these ambiguities can have widespread consequences for our lives.

A growing body of work has explored stable, trait-like individual differences in interpretations of emotional (dual-valence) ambiguity (Neta et al., 2009). For instance, surprised facial expressions predict both positive/rewarding (e.g., winning the lottery) and negative/threatening outcomes (e.g., a stock market crash) and thus are a useful tool for characterizing individual differences in valence bias, or the tendency to interpret emotionally ambiguous signals as positive or negative. The valence bias is consistent with multiple theories in social and personality psychology suggesting that situational and personal factors influence how we interpret ambiguous social stimuli. Much of this work has focused on contextual, state factors that influence how we process ambiguous information (e.g., self-fulfilling prophecies, Snyder & Swann, 1978; category and stereotype-based expectancies, Trope & Thompson, 1997). For instance, just as stereotypes preserve mental resources and speed social inferences (Macrae et al., 1994), the valence bias serves as a lens through which individuals might quickly and efficiently categorize ambiguity. Such biases in impression formation are self-perpetuating (Snyder & Swann, 1978; Trope & Thompson, 1997), meaning that, in the context of valence bias, a tendency to interpret ambiguity as negative will likely lead to an increased search for confirmatory (negative) evidence. These effects likely contribute to the stability evident in one’s valence bias (Neta et al., 2009). Indeed, ambiguous information is often taken as confirmatory evidence, reinforcing stereotypes and beliefs rather than refuting them (Todd et al., 2012).

In addition, variability in valence bias, similar to other trait-like factors, powerfully influences behavior in myriad ways (Allport, 1937). For example, a more positive valence bias is associated with greater well-being, including fewer depressive symptoms (Petro et al., 2019), less self-reported anxiety (Neta et al., 2017), lower stress reactivity (Brown et al., 2017), and more physical activity (Neta et al., 2019). Interpersonally, preliminary evidence suggests that a positive bias is associated with greater empathy (Neta et al., 2009) and may facilitate ingroup affiliation and cooperation (Lazerus et al., 2016). Alternatively, a more negative bias may contribute to group conflict or out-group derogation (e.g., there is a negativity bias in perceptions of out-group motives; Lees & Cikara, 2020).

To date, valence bias has largely been studied using nonverbal cues such as facial expressions (surprised, morphed faces; Beevers et al., 2009; Neta et al., 2009) and scenes (Neta et al., 2013). Although these nonverbal social signals are important for communication and rich with emotional meaning, there is another important social signal for communicating emotion that has been relatively overlooked: language. Language is a critical component of emotion (Barrett et al., 2007) and interpersonal communication (McGlone & Giles, 2011), and its usage provides insight into both social (e.g., linguistic intergroup bias; Maass et al., 1989) and personality psychology (Nunnally & Flaugher, 1963; Pennebaker & Graybeal, 2001). However, like many other communicative signals, language is fraught with ambiguity (MacDonald et al., 1994; Piantadosi et al., 2012). For example, some words with different meanings sound the same (homophones; e.g., “break” and “brake”) or look the same (homonyms; e.g., “pen” for writing and “pen” for animals); others can serve as different parts of speech (e.g., “break” is both a noun and a verb) and can even carry opposing emotional valence (e.g., “spring break” and “heart break”).

Despite this pervasiveness of ambiguity in language, previous work has focused on arousal-based rather than valence-based ambiguity. For example, Mathews and colleagues have demonstrated a negativity bias using words that could have a negative or neutral meaning (e.g., “die”), examining one’s tendency to interpret the word as having high or low arousal (i.e., negative or neutral interpretations). Other work has explored ambiguity in which the alternative meanings are positive or neutral (Eysenck et al., 1991; Grey & Mathews, 2000; but see Joormann et al., 2015). However, the work on valence bias relies on dual-valence ambiguity, examining one’s tendency to interpret these stimuli as having a more positive versus negative meaning. Thus, a set of words with dual-valence ambiguity would provide both a novel tool for measuring valence bias and a more robust and generalizable measure than one that relies only on nonverbal signals.

The primary goal of this work is to determine the role of valence bias in processing linguistic ambiguity, thus demonstrating that responses to ambiguous words can be leveraged to characterize bias in response to ambiguity in social signals more broadly. To that end, we first identified a set of words with dual-valence emotional ambiguity (i.e., valid positive and negative meanings). We relied on the same principles used in identifying ambiguous scenes (Neta et al., 2013), operationalizing dual-valence ambiguity as words with greater intersubject variability (i.e., standard deviation) and slower reaction times in valence ratings (i.e., more time might be required to make a valence decision when multiple response alternatives are valid). Upon identifying these words (and length-matched clearly valenced words) in an exploratory pilot, we conducted a preregistered experiment to compare valence bias for words to that evoked by ambiguous faces and scenes. Specifically, we preregistered our prediction that we would see evidence for dual-valence ambiguity across all three stimulus categories. We also preregistered a prediction that the valence bias would generalize across categories, operationalized as a positive correlation between valence bias for each of the three stimulus categories, controlling for age and gender. That is, we predicted that the same individuals who tend to interpret ambiguous faces and scenes as positive would also show more positive interpretations of these words.

Pilot

Method

Participants

Amazon’s Mechanical Turk (MTurk) Workers were invited to participate in an eligibility screener that included demographic questions and an initial word rating block (US$0.20 total), with the option to earn a bonus (US$2.05) if they met the requirements and completed the entire study (total compensation US$2.25). Eligibility was based on Workers indicating that they were over 18 years old, English was their native language, and they had no history of psychological or neurological disorder. The initial word rating block consisted of 50 trials (described below), including five instances of the word “POSITIVE” and five instances of the word “NEGATIVE”; eligibility was based on correctly rating these 10 words with at least 80% accuracy. We expected a sample of 100 participants would result in sufficient variability to identify ambiguous words but collected data from slightly more than 100 participants, expecting to remove some participants due to data quality issues associated with online data collection. Of the 151 who completed the screener, 119 met the eligibility requirements and responded accurately in the screening block (n = 6 ineligible, n = 26 below 80% accuracy), and 103 chose to complete the entire study (Table 1).
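The 80% attention-check rule described above can be expressed as a small function. This is a minimal Python sketch (the authors scripted their analyses in R); the function name and the (word, rating) input format are hypothetical, and only the check words “POSITIVE” and “NEGATIVE” are scored.

```python
def passes_attention_check(responses, min_accuracy=0.80):
    """Screen one participant on the attention-check trials (hypothetical helper).

    responses: list of (word, rating) pairs from the screener block, where rating
    is "positive" or "negative". Only the check words "POSITIVE" and "NEGATIVE"
    are scored; all other words are ignored.
    """
    checks = [(word, rating) for word, rating in responses
              if word in ("POSITIVE", "NEGATIVE")]
    if not checks:
        return False  # no check trials, so eligibility cannot be established
    n_correct = sum(1 for word, rating in checks if rating.upper() == word)
    return n_correct / len(checks) >= min_accuracy


# Usage: 8 of 10 check trials correct (80%) meets the cutoff.
screener = ([("POSITIVE", "positive")] * 5
            + [("NEGATIVE", "negative")] * 3
            + [("NEGATIVE", "positive")] * 2
            + [("SUNSHINE", "positive")])
print(passes_attention_check(screener))  # True
```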

Table 1.

Demographics for Pilot and Study 1.

| Demographic | Pilot (n = 103) | Study 1 (n = 227) |
|---|---|---|
| Age (years), M (SD) | 37.15 (10.60) | 44.85 (14.44) |
| Age (years), range | 22–67 | 18–76 |
| Gender | 55% female (N = 56), 45% male (N = 47) | 53% female (N = 121), 47% male (N = 106) |
| Race | 4 Asian, 6 Black, 88 White, 2 Other, and 3 Unknown | 15 Asian, 20 Black, 175 White, 5 Other, and 12 Unknown |

Stimuli

We compiled a set of 59 “ambiguous” words that we expected might have two distinct valence interpretations: one clearly positive and one clearly negative. To identify clearly positive and negative words, we created a list of words used in both Warriner et al. (2013), which provided valence and arousal ratings of each word, and the English Lexicon Project (Balota et al., 2007), which provided lexical features of each word, including length and frequency (Lund & Burgess, 1996), number of phonemes, number of syllables, number of morphemes, lexical decision reaction time and accuracy, and naming reaction time and accuracy. These lexical characteristics were selected because they cover not only the “general fields” provided by the English Lexicon Project (length and frequency) but also morphological and phonological features associated with word length. We then eliminated words with a mean arousal rating more than 1 standard deviation (SD) from the mean arousal of the 59 ambiguous words. We classified positive words as those with a mean valence > 7 on the 1–9 scale used by Warriner et al. (2013); negative words had mean valence < 3. To ensure that all words shared similar lexical characteristics, we eliminated any words from the master list whose lexical characteristics did not fall within the minimum and maximum values for the 59 ambiguous words. The final list of pilot words included 629 total words: 59 ambiguous, 267 positive, and 303 negative words.
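The three filtering rules above (arousal within 1 SD of the ambiguous-word mean, valence cutoffs of > 7 and < 3, and lexical values within the ambiguous words’ minimum and maximum) can be sketched as follows. This is an illustrative Python version, not the authors’ code; the dictionary keys (`valence`, `arousal`, and the lexical field names) are hypothetical stand-ins for the Warriner et al. (2013) and English Lexicon Project columns, and we assume the sample SD was used.

```python
from statistics import mean, stdev


def select_clear_words(candidates, ambiguous, lexical_fields):
    """Return (positive, negative) word lists that pass the pilot filters.

    candidates, ambiguous: lists of dicts with "word", "valence" and "arousal"
    (1-9 scales) plus one numeric entry per name in lexical_fields.
    """
    arousal = [w["arousal"] for w in ambiguous]
    arousal_mean, arousal_sd = mean(arousal), stdev(arousal)  # sample SD (assumption)
    # Range of each lexical characteristic among the ambiguous words.
    bounds = {f: (min(w[f] for w in ambiguous), max(w[f] for w in ambiguous))
              for f in lexical_fields}

    positive, negative = [], []
    for w in candidates:
        if abs(w["arousal"] - arousal_mean) > arousal_sd:
            continue  # arousal more than 1 SD from the ambiguous-word mean
        if any(not lo <= w[f] <= hi for f, (lo, hi) in bounds.items()):
            continue  # some lexical value falls outside the ambiguous words' range
        if w["valence"] > 7:
            positive.append(w["word"])
        elif w["valence"] < 3:
            negative.append(w["word"])
    return positive, negative


# Usage with toy (hypothetical) values and a single lexical field.
amb = [{"word": "a", "valence": 5, "arousal": 4, "length": 4},
       {"word": "b", "valence": 5, "arousal": 6, "length": 8}]
cands = [{"word": "joy", "valence": 8, "arousal": 5, "length": 5},
         {"word": "grief", "valence": 2, "arousal": 5.5, "length": 5},
         {"word": "party", "valence": 8, "arousal": 7, "length": 5},  # arousal too far
         {"word": "ok", "valence": 8, "arousal": 5, "length": 2},     # too short
         {"word": "calm", "valence": 6, "arousal": 5, "length": 4}]   # not extreme
print(select_clear_words(cands, amb, ["length"]))  # (['joy'], ['grief'])
```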

Procedure

All tasks were created and presented using Gorilla Experiment Builder (Anwyl-Irvine et al., 2019), and the study was only accessible to participants using a computer (not a phone or tablet) within the United States. After giving informed consent, participants answered demographic questions and were shown a brief self-guided instructional walkthrough of the task before completing the screener. Using a random seed, we selected 20 positive and 20 negative words from the pilot list to include in the screener task for all participants. These 40 words, along with five instances of the word “POSITIVE” and five instances of the word “NEGATIVE” (total of 50 words) were presented randomly, one at a time, each following a 250 ms fixation cross. Each word remained on screen until the participant rated it as positive or negative by pressing “A” or “L” on their keyboard (key pairing randomized across participants). If no response was made after 2,000 ms, a reminder appeared (e.g., “Please respond as quickly as you can! A = POSITIVE. L = NEGATIVE”). Participants who rated the words “POSITIVE” and “NEGATIVE” with less than 80% accuracy were compensated for their time but not invited to continue the study. This strict cutoff for rejecting participants immediately after the screener (Chandler et al., 2013) allowed for a small margin of error but was necessary given data issues in online samples (e.g., uncontrolled environment). The remaining 589 words from the final pilot list were randomly presented across 10 blocks of 59 words, in capital letters in plain black font on a white background, using the same button-press procedure as the screener block.
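One way to implement the presentation logic above is sketched below in Python; the study itself was built in Gorilla Experiment Builder, so this is only an illustration. The seed value and function names are hypothetical, and the way the 589 remaining words are divided into 10 blocks (as evenly as possible, here nine blocks of 59 and one of 58) is an assumption.

```python
import random

CHECK_WORDS = ["POSITIVE"] * 5 + ["NEGATIVE"] * 5


def select_screener_words(positive, negative, seed=2019, k=20):
    """Draw the screener words once with a fixed seed (hypothetical value), so
    every participant rates the same 20 positive and 20 negative words."""
    rng = random.Random(seed)
    return rng.sample(positive, k) + rng.sample(negative, k)


def build_trials(screener_words, remaining_words, rng, n_blocks=10):
    """Return one participant's shuffled screener list and main-task blocks."""
    screener = list(screener_words) + CHECK_WORDS  # 40 + 10 = 50 trials
    rng.shuffle(screener)
    words = list(remaining_words)
    rng.shuffle(words)
    # Split as evenly as possible: the first `extra` blocks get one more word.
    size, extra = divmod(len(words), n_blocks)
    blocks, start = [], 0
    for i in range(n_blocks):
        end = start + size + (1 if i < extra else 0)
        blocks.append(words[start:end])
        start = end
    return screener, blocks


# Usage with placeholder word lists of the pilot sizes.
pos = [f"pos{i}" for i in range(267)]
neg = [f"neg{i}" for i in range(303)]
screener_words = select_screener_words(pos, neg)
screener, blocks = build_trials(screener_words, [f"w{i}" for i in range(589)],
                                random.Random(7))
print(len(screener), [len(b) for b in blocks])
# prints: 50 [59, 59, 59, 59, 59, 59, 59, 59, 59, 58]
```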

Analysis

All calculations described in this section were scripted using R (Version 3.6.0; R Core Team, 2019), and summary data are available at osf.io/b2trn. Trials with a reaction time less than 250 ms (n = 191) or larger than 3 SDs above the group mean (n = 204) were removed before data analysis. Reaction times below 250 ms were removed because this is a lower threshold for simple reaction time tasks (e.g., pressing a key immediately upon attending to a stimulus; Posner, 1980) and implausible for more complex (valence discrimination) tasks. This cutoff is reasonably conservative, given concerns associated with online data collection (e.g., “bots” or automated responding), and is in line with recent reaction time-based research using Gorilla Experiment Builder (Anwyl-Irvine et al., 2019). After removing these 395 trials, we removed one participant who lost 25% of their total trials based on reaction time (all other participants lost no more than 4% of trials). Thus, a total of 238 trials were removed from the final sample prior to data analysis, M (SD) = 2.33 (4.01) per participant. To examine valence ratings, we calculated the percentage of participants who rated each word as negative. For example, if half of the participants rated the word “break” as negative, then the percent negative ratings would be 50%. Mean reaction time was also calculated for each word.
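The pilot trimming and per-word summary steps can be sketched as follows. This Python sketch is illustrative (the authors used R); the trial-record keys are hypothetical, and we assume the group-level cutoff (mean + 3 SD) is computed over all raw trials and that participants losing 25% or more of their trials are dropped before the per-word summaries are computed.

```python
from collections import defaultdict
from statistics import mean, stdev


def clean_and_summarize(trials, floor_ms=250, sd_cut=3.0, max_loss=0.25):
    """Trim pilot trials and summarize each word.

    trials: list of dicts with "subject", "word", "rt" (ms) and "negative"
    (True if the word was rated negative).
    Returns ({word: {"pct_negative", "mean_rt"}}, set_of_excluded_subjects).
    """
    rts = [t["rt"] for t in trials]
    ceiling = mean(rts) + sd_cut * stdev(rts)  # 3 SDs above the group mean
    kept = [t for t in trials if floor_ms <= t["rt"] <= ceiling]

    # Drop participants who lost max_loss (25%) or more of their trials.
    n_total, n_kept = defaultdict(int), defaultdict(int)
    for t in trials:
        n_total[t["subject"]] += 1
    for t in kept:
        n_kept[t["subject"]] += 1
    excluded = {s for s in n_total if 1 - n_kept[s] / n_total[s] >= max_loss}
    kept = [t for t in kept if t["subject"] not in excluded]

    # Percent of participants rating each word as negative, and mean RT per word.
    by_word = defaultdict(list)
    for t in kept:
        by_word[t["word"]].append(t)
    summary = {
        word: {
            "pct_negative": 100 * sum(1 for t in ts if t["negative"]) / len(ts),
            "mean_rt": sum(t["rt"] for t in ts) / len(ts),
        }
        for word, ts in by_word.items()
    }
    return summary, excluded


# Usage: three participants with plausible RTs and one with implausibly fast RTs.
demo = []
for s in ("s1", "s2", "s3", "s4"):
    for i, w in enumerate(["BREAK", "JOY", "EVIL", "COURT"]):
        demo.append({"subject": s, "word": w,
                     "rt": 100 if s == "s4" else 600 + 10 * i,
                     "negative": w == "EVIL" or (w == "BREAK" and s == "s1")})
summary, excluded = clean_and_summarize(demo)
print(excluded, round(summary["BREAK"]["pct_negative"], 1))  # {'s4'} 33.3
```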

Results

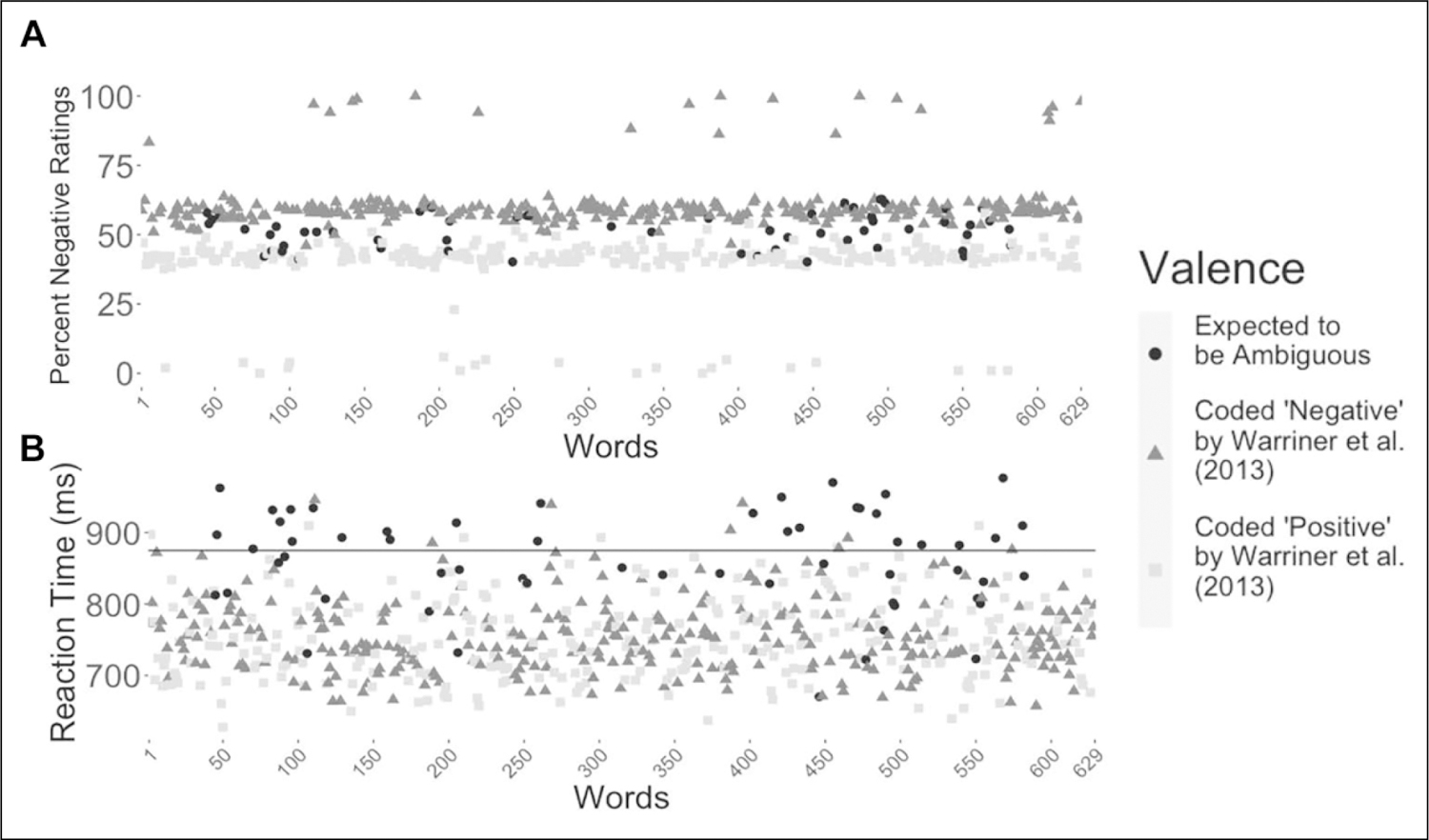

Visual inspection of the valence ratings across subjects revealed two distinct groups of words with high response consensus: one group with a clearly negative meaning (n = 20, percent negative ratings > 75%) and another group with a clearly positive meaning (n = 21, percent negative ratings < 25%; Figure 1A). We removed the two words “POSITIVE” and “NEGATIVE” from each list (given that these were included only as attention checks), resulting in a set of 19 words with a clearly negative meaning and 20 words with a clearly positive meaning.

Figure 1.

Percent negative ratings and mean reaction time for all words. (A) Nineteen words were interpreted as negative by more than 75% of the participants (top of the graph), and 20 were interpreted as positive by more than 75% of the participants (bottom of the graph; excluding the words “POSITIVE” and “NEGATIVE”). (B) Forty words had mean reaction times above 875 ms (black line), suggesting dual-valence ambiguity. For an interactive figure that shows the corresponding word for each point, visit osf.io/b2trn.

Previous work has shown that ambiguous stimuli are associated with longer reaction times in a forced-choice valence categorization task (Neta et al., 2013). Figure 1B shows that 40 words were rated more slowly than the rest (suggesting dual-valence ambiguity), with mean reaction times above a threshold of 875 ms. These words also fell between 25% and 75% in percent negative ratings (i.e., they were not clearly positively or negatively valenced). These 40 words were considered for inclusion in a final list of ambiguous words. We removed eight words for a variety of reasons: four were outliers in normative ratings (Warriner et al., 2013) or lexical characteristics (Balota et al., 2007) relative to other ambiguous words (“ABUNDANT” had low accuracy, “INHERIT” had a higher valence rating, “FACELESS” had a low SD in valence, and “HEADSTONE” had a lower frequency); one was removed because of conceptual redundancy (“COURTROOM” was removed because “COURT” was on the list); two were removed because we could not identify both a clear positive and a clear negative definition (“COSMIC” and “RECEIVE”); and one was expected to prime a more negative interpretation given recent economic events (“RECESSION”). Thus, the final list included 32 ambiguous words.
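The pilot’s classification rules can be summarized in a short function. This Python sketch assumes the thresholds stated above (> 75% negative, < 25% negative, and a mean reaction time above 875 ms for ambiguity candidates); the function name and labels are hypothetical, and the manual review that removed eight candidates is not modeled.

```python
def classify_word(pct_negative, mean_rt_ms, rt_threshold_ms=875.0):
    """Label a word from its pilot percent-negative rating and mean RT."""
    if pct_negative > 75:
        return "clearly negative"     # high consensus that the word is negative
    if pct_negative < 25:
        return "clearly positive"     # high consensus that the word is positive
    if mean_rt_ms > rt_threshold_ms:
        return "ambiguous candidate"  # mixed ratings and slow responses
    return "unclassified"             # mixed ratings but fast responses


print(classify_word(50.0, 910.0))  # ambiguous candidate
```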

Because the existing valence bias task (with faces and scenes) uses an equal number of ambiguous (50%) and clearly valenced (25% positive, 25% negative) stimuli, we removed the three negative and four positive words with the longest reaction times, resulting in a final list of 16 negative and 16 positive words with the fastest reaction times. The final lists of ambiguous and clear words (see Supplemental Table S1) did not differ in length, t(62) = ‒1.05, p = .30, d = 0.26, but did differ in reaction time, Welch’s t(55) = ‒15.81, p < .001, d = 3.95; ambiguous, M (SD) = 916.51 (28.26) ms; and clear, M (SD) = 777.40 (40.96) ms. Further, there was a significant difference in frequency, such that ambiguous words were more frequent than clearly valenced words, t(62) = 2.08, p = .04, d = 0.52. Although we did not anticipate this difference, it is perhaps not surprising: ambiguous words must have multiple definitions (at least one positive and one negative) and thus are likely to be used more often in English.
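The length and reaction time comparisons rely on standard two-sample formulas. As a check, the minimal Python sketch below recomputes Welch’s t, its Welch–Satterthwaite degrees of freedom, and Cohen’s d from the reported reaction time summaries, assuming n = 32 words per list and, for d, the pooled SD for equal group sizes. Under those assumptions it reproduces the reported t(55) = 15.81 and d = 3.95 in absolute value.

```python
from math import sqrt


def welch_t(m1, sd1, n1, m2, sd2, n2):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    v1, v2 = sd1 ** 2 / n1, sd2 ** 2 / n2  # squared standard errors of each mean
    t = (m1 - m2) / sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df


def cohens_d_equal_n(m1, sd1, m2, sd2):
    """Cohen's d using the pooled SD for two groups of equal size."""
    return (m1 - m2) / sqrt((sd1 ** 2 + sd2 ** 2) / 2)


# Reported reaction time summaries (ms): ambiguous vs. clear words, 32 each.
t, df = welch_t(916.51, 28.26, 32, 777.40, 40.96, 32)
d = cohens_d_equal_n(916.51, 28.26, 777.40, 40.96)
print(f"t({df:.0f}) = {t:.2f}, d = {d:.2f}")  # t(55) = 15.81, d = 3.95
```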

Study 1

Method

Participants

A new sample of Amazon’s MTurk Workers was invited to participate. Power analysis (G*Power) indicated a necessary sample size of at least 134 participants for a bivariate linear regression (α = .05; power = 95%) to detect a medium effect size (r = .3; Faul et al., 2009). After providing informed consent and completing the same eligibility screener used in the pilot (without the word rating block; US$0.10), eligible participants completed a valence bias task (described below; US$2.15). Of the 389 eligible Workers, 260 chose to complete the entire study (total compensation US$2.25). Participants who responded to fewer than 75% of trials after reaction time cleaning (n = 6; described below) or who did not correctly rate the clearly valenced stimuli on more than 60% of trials for two or more stimulus categories (faces, scenes, and words; n = 27) were removed. This more liberal accuracy cutoff, compared to the pilot, was taken from previous research (Neta et al., 2019), as it allows for some flexibility in ratings (e.g., a picture of a puppy is typically rated as positive but perhaps not by someone who is afraid of dogs). Participants who were inaccurate for only one stimulus category were retained, but any dependent variables (ratings and reaction times) in that stimulus category were treated as missing in the analyses. Further, a minimum of 60% accuracy on clearly valenced trials was needed to ensure an accurate representation of valence bias, consistent with previous work (Neta et al., 2013; Neta & Whalen, 2010). The final sample included 227 participants.
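The Study 1 exclusion rules combine a data-retention criterion with per-category accuracy. A hypothetical Python helper is sketched below; we assume accuracy is the proportion of clearly valenced trials rated correctly in each category, and we treat exactly 60% accuracy and exactly 75% retention as passing, which is a boundary assumption rather than something the text specifies.

```python
def screen_participant(accuracy_by_category, prop_trials_retained,
                       min_accuracy=0.60, min_retained=0.75):
    """Apply the Study 1 exclusion rules to one participant (hypothetical helper).

    accuracy_by_category: e.g. {"faces": 0.9, "scenes": 0.8, "words": 0.95},
    the proportion of clearly valenced trials rated correctly per category.
    prop_trials_retained: proportion of trials left after reaction time cleaning.
    Returns (keep, categories_to_treat_as_missing).
    """
    if prop_trials_retained < min_retained:
        return False, set()  # too few usable trials overall
    failed = {c for c, acc in accuracy_by_category.items() if acc < min_accuracy}
    if len(failed) >= 2:
        return False, failed  # inaccurate in two or more stimulus categories
    # At most one failed category: keep the participant, but that category's
    # ratings and reaction times are set to missing.
    return True, failed


print(screen_participant({"faces": 0.5, "scenes": 0.8, "words": 0.95}, 0.98))
# (True, {'faces'})
```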

Stimuli

Six task blocks (two each of faces, scenes, and words) were used to assess valence bias. As in previous work (Neta et al., 2013), each face and scene block included 12 ambiguous images and 12 clear images (six positive and six negative). The facial expressions were selected from the NimStim (Tottenham et al., 2009) and Karolinska Directed Emotional Faces (Lundqvist et al., 1998) sets, and the scenes were selected from the International Affective Picture System (Lang et al., 2008; see Supplemental Table S1). Each word block included 16 ambiguous and 16 clear (eight positive and eight negative) words identified in the pilot. All words were presented in capital letters in plain black font on a white background.

Procedure

As in the pilot, the task was administered using Gorilla Experiment Builder (Anwyl-Irvine et al., 2019) and was only accessible to participants using a computer in the United States. Participants were randomly assigned to a pseudorandom (counterbalanced) presentation order of blocks of faces, scenes, and words. Within each block, stimuli were presented in random order for 500 ms each, preceded by a 1,500 ms fixation cross. If participants did not make a response within 2,000 ms, no response was recorded and the task advanced to the next trial. Participants responded by pressing either the “A” or “L” key on their keyboard (response keys counterbalanced across participants). Valence bias for each stimulus category was calculated as the percentage of ambiguous trials on which the participant rated the item as negative, out of all ambiguous trials in that category (excluding omissions; Neta et al., 2009). For example, if a participant rated 80% of ambiguous words as negative, that individual’s valence bias for words would be 80%.
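Because omissions are excluded from the denominator, the valence bias score for one participant and stimulus category can be computed as in this minimal Python sketch. The function name and input format are hypothetical; `None` marks a trial with no response within 2,000 ms.

```python
def valence_bias(ambiguous_ratings):
    """Percent of answered ambiguous trials rated negative (omissions excluded).

    ambiguous_ratings: "negative", "positive", or None (no response) for each
    ambiguous trial in one stimulus category.
    """
    answered = [r for r in ambiguous_ratings if r is not None]
    if not answered:
        return None  # no usable trials: the score is missing
    n_negative = sum(1 for r in answered if r == "negative")
    return 100 * n_negative / len(answered)


# 16 negative and 4 positive ratings, plus 4 omissions: 16 / 20 = 80%.
print(valence_bias(["negative"] * 16 + ["positive"] * 4 + [None] * 4))  # 80.0
```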

Analysis

Preregistration (osf.io/z3k2g) and deidentified data with analysis scripts (osf.io/b2trn) are available via Open Science Framework. All data cleaning, analyses, and visualizations were completed using R (Version 3.6.0; R Core Team, 2019). Before calculating valence bias, trials with reaction times less than 250 ms (n = 582) or larger than 3 SDs above the participant mean (n = 469) were removed. As in the pilot, after removing these 1,051 trials, we removed six participants who lost 25% or more of their total trials based on nonresponse or reaction time (all other participants lost no more than 18.75% of trials, and a large majority, 94% of participants, lost no more than 5% of trials). Thus, a total of 623 trials were removed from the final sample prior to data analysis, M (SD) = 2.45 (2.50) per participant. Additionally, only participants’ first response to each stimulus presentation was retained for analysis. We completed our preregistered analysis of valence bias (percent negative ratings) as well as an unregistered analysis of reaction time to confirm that reaction times were slower for ambiguous than clear stimuli across the stimulus categories. (Note that the reaction time analyses were conducted as additional confirmation of dual-valence ambiguity, that is, longer reaction times for ambiguous than clear stimuli, and to replicate findings from the pilot, but were overlooked at the time of preregistration.) We used an analysis of variance (ANOVA) approach but capitalized on the flexibility of linear mixed effects models (i.e., the ability to handle missing data and robustness to violations of normality; Knief & Forstmeier, 2018) rather than the traditional repeated measures ANOVA. However, there is no agreed-upon method for calculating effect sizes for these models (see Rights & Sterba, 2019, for a discussion), so effect sizes are not reported for them. Full information maximum likelihood estimation was used to account for any missing data.
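The Study 1 cleaning differs from the pilot in using each participant’s own mean and in keeping only the first key press per presentation. A minimal Python sketch for one participant follows; the record format is hypothetical, and we assume the participant mean and SD are computed over all first responses before any trials are dropped.

```python
from statistics import mean, stdev


def trim_participant(responses, floor_ms=250, sd_cut=3.0):
    """Clean one participant's Study 1 trials.

    responses: list of dicts with "trial" (presentation id) and "rt" (ms, or
    None when no key was pressed within 2,000 ms), in the order they occurred.
    Returns (kept_trials, proportion_of_presentations_lost).
    """
    first = {}
    for r in responses:
        first.setdefault(r["trial"], r)  # keep only the first response per presentation
    answered = [r for r in first.values() if r["rt"] is not None]
    rts = [r["rt"] for r in answered]
    ceiling = mean(rts) + sd_cut * stdev(rts) if len(rts) > 1 else float("inf")
    kept = [r for r in answered if floor_ms <= r["rt"] <= ceiling]
    return kept, 1 - len(kept) / len(first)


# Eight usable trials, one too-fast response, one omission, and a second key
# press on trial 0 that is ignored.
demo = ([{"trial": i, "rt": 600} for i in range(8)]
        + [{"trial": 8, "rt": 120}, {"trial": 9, "rt": None}, {"trial": 0, "rt": 900}])
kept, lost = trim_participant(demo)
print(len(kept), round(lost, 2))  # 8 0.2
```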
Partial correlations were used to assess whether valence bias in response to ambiguous words was related to that of the faces and scenes while controlling for gender and age. Where applicable, non-parametric tests (Spearman’s correlations) were used.
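One common way to compute a partial Spearman correlation is to rank-transform every variable, regress the ranked covariates out of both ranked variables by least squares, and correlate the residuals. The NumPy sketch below illustrates that technique; it is not necessarily the authors’ exact implementation, and the function names are hypothetical. A binary covariate such as gender can be passed as 0/1 codes.

```python
import numpy as np


def average_ranks(values):
    """Ranks starting at 1, with tied values given their average rank."""
    values = np.asarray(values, dtype=float)
    order = np.argsort(values, kind="mergesort")
    sorted_vals = values[order]
    ranks = np.empty(len(values))
    i = 0
    while i < len(values):
        j = i
        while j + 1 < len(values) and sorted_vals[j + 1] == sorted_vals[i]:
            j += 1
        ranks[order[i:j + 1]] = (i + j) / 2 + 1  # average of positions i..j (1-based)
        i = j + 1
    return ranks


def partial_spearman(x, y, covariates):
    """Spearman correlation between x and y controlling for the covariates,
    computed from residuals of the ranked variables."""
    rx, ry = average_ranks(x), average_ranks(y)
    design = np.column_stack([np.ones(len(rx))] + [average_ranks(c) for c in covariates])
    # Least-squares fit of each ranked variable on the ranked covariates.
    res_x = rx - design @ np.linalg.lstsq(design, rx, rcond=None)[0]
    res_y = ry - design @ np.linalg.lstsq(design, ry, rcond=None)[0]
    return float(np.corrcoef(res_x, res_y)[0, 1])


# Usage: identical rank orders give a partial correlation of 1.
x = [1, 2, 3, 4, 5]
print(round(partial_spearman(x, [v ** 3 for v in x], [[3, 1, 4, 1, 5]]), 6))  # 1.0
```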

Results

Manipulation Check

Replicating and confirming the ambiguity of our new word set, we found that ambiguous words showed greater intersubject variability in valence ratings (i.e., larger SD) than clearly valenced words, t(31) = 16.47, p < .001, d = 4.35; ambiguous, M (SD) = 0.45 (0.05), and clear, M (SD) = 0.24 (0.04). Further, ambiguous words were rated more slowly than clear words, t(31) = 11.89, p < .001, d = 2.99; ambiguous, M (SD) = 814.90 (26.03), and clear, M (SD) = 718.77 (37.21).
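Because each rating is binary (negative = 1, positive = 0), a word’s intersubject SD depends only on the proportion p of participants who rated it negative: with n raters, the sample SD is √(n·p(1 − p)/(n − 1)), which peaks near 0.5 when p = .5. A short Python check of that relationship follows, assuming the sample (n − 1) SD; the function names are hypothetical.

```python
from math import sqrt
from statistics import stdev


def rating_sd(n_negative, n_total):
    """Sample SD of 0/1 ratings (1 = rated negative) across participants."""
    return stdev([1.0] * n_negative + [0.0] * (n_total - n_negative))


def rating_sd_formula(p, n):
    """Closed form for the same quantity: sqrt(n * p * (1 - p) / (n - 1))."""
    return sqrt(n * p * (1 - p) / (n - 1))


# A split word (50 of 100 rate it negative) vs. a consensus word (5 of 100).
print(round(rating_sd(50, 100), 3), round(rating_sd(5, 100), 3))  # 0.503 0.219
```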

Valence Ratings

A linear mixed effects model with fixed within-subjects factors of valence (negative, positive, and ambiguous) and stimulus (faces, scenes, and words) revealed a significant main effect of valence, F(2, 448) = 3,690.50, p < .001, such that negative stimuli were rated as more negative than ambiguous stimuli, which were rated as more negative than positive stimuli (ps < .001; negative M [SD] = 89.30 [10.29]%; ambiguous M [SD] = 48.10 [21.59]%; positive M [SD] = 5.74 [8.29]%; Bonferroni-corrected significance threshold = .02). There was also a significant main effect of stimulus, F(2, 429) = 13.65, p < .001, such that faces were rated as more negative than scenes and words (ps < .001), but scenes were not different from words (p = .18; Bonferroni-corrected significance threshold = .02). Finally, there was a significant Valence × Stimulus interaction, F(4, 860) = 25.83, p < .001, such that the effect of valence reported above was significant for all three stimulus categories (ps < .001), but there were also stimulus-related differences within each valence condition. Specifically, for the ambiguous condition, faces were rated as more negative than scenes and words (ps < .001), and scenes trended toward being rated as more negative than words (p = .009; Bonferroni-corrected significance threshold = .006). For the negative condition, words were rated as more negative than both faces and scenes (ps < .001), and faces were marginally more negative than scenes (p = .007). There were no stimulus-related differences in the positive condition that surpassed the Bonferroni-corrected threshold (ps > .07).
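The Bonferroni thresholds reported here appear consistent with dividing α = .05 by the number of pairwise comparisons: three for a three-level main effect (.05/3 ≈ .017, reported as .02) and nine for the interaction follow-ups (three pairs within each of three levels; .05/9 ≈ .006). This reading of the comparison counts is our assumption; the arithmetic is shown below in Python.

```python
from itertools import combinations

ALPHA = 0.05
levels = ["faces", "scenes", "words"]

# Pairwise comparisons among the three levels of one factor.
main_effect_pairs = list(combinations(levels, 2))   # 3 pairs
# The same three pairs tested within each of the three valence conditions.
interaction_pairs = len(main_effect_pairs) * 3      # 9 comparisons

main_threshold = ALPHA / len(main_effect_pairs)
interaction_threshold = ALPHA / interaction_pairs
print(round(main_threshold, 3), round(interaction_threshold, 3))  # 0.017 0.006
```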

Reaction Time

A similar valence (negative, positive, and ambiguous) and stimulus (faces, scenes, and words) linear mixed effects model revealed a significant main effect of valence, F(2, 456) = 279.08, p < .001, such that participants took longer to rate ambiguous than negative stimuli, which took longer than ratings of positive stimuli (ps < .001; Bonferroni-corrected significance threshold = .02). There was also a significant main effect of stimulus, F(2, 425) = 108.93, p < .001, such that participants took longer to rate words than scenes, which took longer than ratings of faces (ps < .001; Bonferroni-corrected significance threshold = .02). Finally, there was a significant Valence × Stimulus interaction, F(4, 866) = 17.15, p < .001, such that the effect of valence described above was significant for all three stimulus categories (all ps < .001), and the effect of stimulus described above was also significant or trending in all valence conditions (ps < .001 except between scenes and words for negative valence, p = .01; Bonferroni-corrected significance threshold = .006).

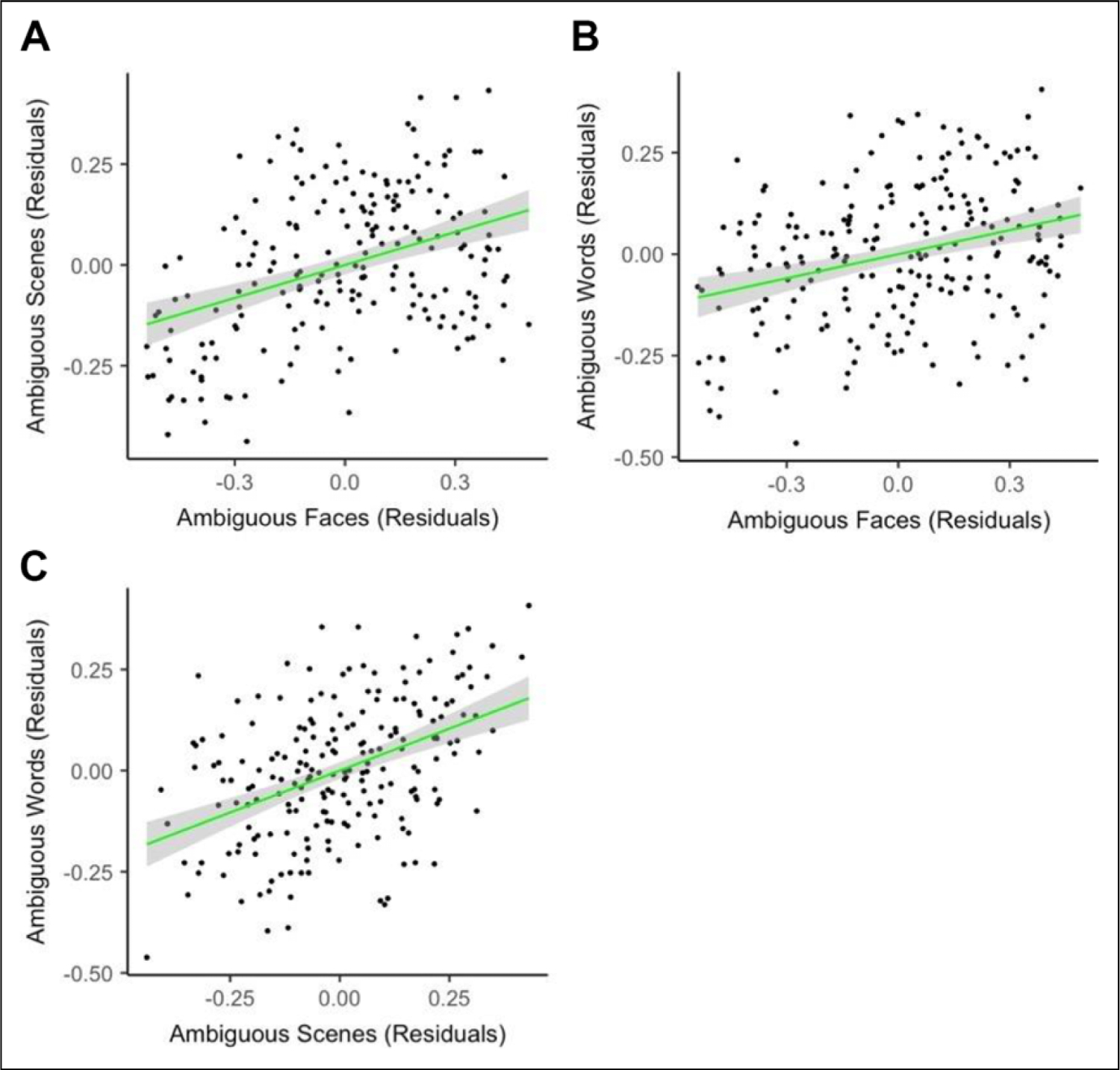

Comparing Valence Bias Across Stimulus Categories

To address one of the primary goals of the project (i.e., the generalizability of valence bias), we compared valence bias for faces, scenes, and words within participants while controlling for age and gender. Replicating previous work (Neta et al., 2013), there was a positive relationship between ratings of ambiguous faces and scenes, rs(198) = .35, p < .001. Notably, we found a similar positive relationship between faces and words, rs(213) = .27, p < .001, and between scenes and words, r(204) = .46, p < .001 (Figure 2A–C).

Figure 2.

Comparing valence bias across stimulus categories. Note. After regressing out age and gender from the percent negative ratings in each condition, we found positive associations between ratings of ambiguous (A) faces and scenes, rs(198) = .35, p < .001, (B) faces and words, rs(213) = .27, p < .001, and (C) scenes and words, r(204) = .46, p < .001.

Discussion

We identified a set of words with dual-valence ambiguity, along with length-matched clearly valenced—positive and negative—words. Notably, we showed that the valence bias as measured with nonverbal signals (faces and scenes) extends to verbal signals (words): the same participants who interpret ambiguous faces and scenes as having a positive meaning also interpret the ambiguous words as positive. This generalizability provides a more stable, robust measure of valence bias that extends across the specific features of the stimuli. Specifically, the valence bias is not exclusive to nonverbal social signals (faces and scenes), but rather our responses to ambiguity are broadly relevant to social decision making, ranging from person perception (e.g., faces) to language (single words).

The development and validation of this new stimulus set provides both a novel method for measuring valence bias and numerous advantages for future research. One advantage is the uniformity and simplicity of the stimuli; facial expressions are complex displays subject to interindividual variability in facial features (brow and mouth position) and perceiver biases (stereotypes), which influence judgments of the face (Freeman & Johnson, 2016; Oosterhof & Todorov, 2008). This set of ambiguous words is uniquely useful in its lack of salient features related to group membership that are inherent to the faces and some scenes (e.g., sex, age, race, and ethnicity). Another benefit of the words, particularly for online studies, is that task performance is less vulnerable to effects of screen resolution or other differences that might prove problematic for more complex images. Finally, the word stimuli are more readily adapted to other modalities (e.g., auditory stimuli).

Having said that, one inherent limitation of these word stimuli is that they are not suitable for very young populations (i.e., children who cannot yet read), nor will they readily generalize to non-English-speaking samples or even to non-American, English-speaking cultures, given differences in word usage. One interesting avenue for future research would be extending this work to identify dual-valence ambiguity in other languages and cultures. Another potential limitation of this work more broadly is related to well-known flaws with reaction time measurement via browser- or hardware-related differences in online studies. Although Gorilla Experiment Builder implements techniques to mitigate browser-related differences (e.g., JavaScript functions to obtain high-resolution timestamps of approximately 1 ms; Mozilla, 2019), potentially problematic differences in hardware polling rates remain; note, however, that the typical polling rate for USB input hardware is 125 Hz, or one sample every 8 ms (Anwyl-Irvine et al., 2020).

Although we found important generalizability in valence bias across stimulus categories, there were some differences. For example, ambiguous faces were interpreted as more negative than scenes and words (consistent with previous work using faces and scenes; Neta et al., 2013; Neta & Tong, 2016). In contrast, among the clearly negative stimuli, words were interpreted as more negative than faces or scenes. Although it is unclear what is driving these effects, there may be less flexibility in interpretations of clearly negative words (e.g., “evil” or “deadly”) compared to clearly negative (angry) faces. For example, schadenfreude—pleasure at another’s misfortunes—could account for positive interpretations of some angry faces (Cikara & Fiske, 2013) as could perceiver biases (stereotypes; Freeman & Johnson, 2016; Oosterhof & Todorov, 2008).

Reaction times were important for identifying (Pilot) and later confirming (Study 1) the ambiguous nature of our stimuli; as expected, reaction times were slower for ambiguous than clear stimuli. These analyses also revealed insights into stimulus-related differences in processing ambiguity. For instance, responses to faces were faster than responses to scenes and words, perhaps because scenes are more complex (more information to encode; Neta et al., 2013) and words require semantic processing (Petersen et al., 1988; Posner et al., 1988), whereas faces are processed relatively quickly and automatically (Bar et al., 2006; Willis & Todorov, 2006). These reaction time differences are consistent with divergent processing routes; for example, work using magnetoencephalography has demonstrated faster processing for faces than scenes (Sato et al., 1999). These findings are also consistent with some behavioral observations (e.g., in a matching task, images of faces are matched faster than scenes; Hariri et al., 2002) but not others (e.g., in a recognition task, words were recognized faster than faces; Kolers et al., 1985). Future work may be needed to disentangle these potentially important stimulus-related differences, especially given the relationship between reaction time and valence bias (i.e., slower reaction times are associated with a more positive bias; Neta & Tong, 2016).

Altogether, this work builds on a growing literature aiming to understand individual differences in valence bias, including research linking valence bias to important individual differences in physical (Neta et al., 2019) and psychological well-being (Brown et al., 2017; Neta et al., 2017; Petro et al., 2019). There are also clear implications for theories in social psychology that explore the link between valence bias and the contextual factors that influence how we navigate our complex social world (Snyder & Swann, 1978; Todd et al., 2012; Trope & Thompson, 1997). Although the present work has focused primarily on the link to personality (Allport, 1937) by examining stable and generalizable individual differences in bias, future work can and should extend these findings to the social realm. Notably, this new method for assessing valence bias has the potential to further our understanding of the pervasive uncertainty inherent to social behavior (FeldmanHall & Shenhav, 2019) and may prove critical for understanding interactions across social boundaries (e.g., group affiliation/conflict, linguistic intergroup bias).

Acknowledgments

We thank Nathan Petro and Karl Kuntzelman for their comments on earlier drafts and Sarah Gervais for comments on a later draft.

Funding

The author(s) disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: This work was supported by a National Science Foundation CAREER Award (principal investigator [PI]: Neta), the National Institutes of Health (NIMH111640; PI: Neta), and Nebraska Tobacco Settlement Biomedical Research Enhancement Funds.

Author Biographies

Nicholas R. Harp is a doctoral student in the Department of Psychology at the University of Nebraska–Lincoln (UNL). His research focuses on individual differences in valence bias, with an emphasis on mechanisms for promoting positivity (e.g., physical activity, mindfulness).

Catherine C. Brown is a doctoral student in the Department of Psychology at UNL. Her research interests focus on how stress influences valence bias.

Maital Neta is the Happold Associate Professor and Associate Chair in the Department of Psychology, Associate Director of the Center for Brain, Biology, and Behavior (CB3), and Director of the Cognitive and Affective Neuroscience Lab at UNL. Her research draws on a range of methods from psychology and neuroscience to examine individual differences in valence bias, or the tendency to interpret emotional ambiguity as having a positive or negative meaning.

Footnotes

Declaration of Conflicting Interests

The author(s) declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Supplemental Material

The supplemental material is available in the online version of the article.

References

- Allport GW (1937). Personality: A psychological interpretation. Holt.

- Anwyl-Irvine AL, Massonnié J, Flitton A, Kirkham N, & Evershed JK (2019). Gorilla in our midst: An online behavioral experiment builder. Behavior Research Methods. 10.3758/s13428-019-01237-x

- Balota DA, Yap MJ, Hutchison KA, Cortese MJ, Kessler B, Loftis B, Neely JH, Nelson DL, Simpson GB, & Treiman R (2007). The English lexicon project. Behavior Research Methods, 39(3), 445–459. 10.3758/BF03193014

- Bar M, Neta M, & Linz H (2006). Very first impressions. Emotion, 6(2), 269–278. 10.1037/1528-3542.6.2.269

- Barrett LF, Lindquist KA, & Gendron M (2007). Language as context for the perception of emotion. Trends in Cognitive Sciences, 11(8), 327–332. 10.1016/j.tics.2007.06.003

- Baumeister RF, Vohs KD, DeWall CN, & Zhang L (2007). How emotion shapes behavior: Feedback, anticipation, and reflection, rather than direct causation. Personality and Social Psychology Review, 11(2), 167–203. 10.1177/1088868307301033

- Beevers CG, Wells TT, Ellis AJ, & Fischer K (2009). Identification of emotionally ambiguous interpersonal stimuli among dysphoric and nondysphoric individuals. Cognitive Therapy and Research, 33(3), 283–290. 10.1007/s10608-008-9198-6

- Brown CC, Raio CM, & Neta M (2017). Cortisol responses enhance negative valence perception for ambiguous facial expressions. Scientific Reports, 7. 10.1038/s41598-017-14846-3

- Chandler J, Mueller P, & Paolacci G (2013). Nonnaïveté among Amazon Mechanical Turk workers: Consequences and solutions for behavioral researchers. Behavior Research Methods, 46, 112–130. 10.3758/s13428-013-0365-7

- Chen Z, & Epstein L (2002). Ambiguity, risk, and asset returns in continuous time. Econometrica, 70(4), 1403–1443. 10.1111/1468-0262.00337

- Cikara M, & Fiske ST (2013). Their pain, our pleasure: Stereotype content and schadenfreude. Annals of the New York Academy of Sciences, 1299, 52–59.

- Ekman P, Friesen WV, O’Sullivan M, Chan A, Diacoyanni-Tarlatzis I, Heider K, Krause R, LeCompte WA, Pitcairn T, Ricci-Bitti PE, Scherer K, Tomita M, & Tzavaras A (1987). Universals and cultural differences in the judgments of facial expressions of emotion. Journal of Personality and Social Psychology, 53(4), 712–717. 10.1037/0022-3514.53.4.712

- Ellsberg D (1961). Risk, ambiguity, and the Savage axioms. The Quarterly Journal of Economics, 75(4), 643–669. 10.2307/1884324

- Eysenck MW, Mogg K, May J, Richards A, & Mathews A (1991). Bias in interpretation of ambiguous sentences related to threat in anxiety. Journal of Abnormal Psychology, 100(2), 144–150. 10.1037/0021-843X.100.2.144

- Faul F, Erdfelder E, Buchner A, & Lang A-G (2009). Statistical power analyses using G*Power 3.1: Tests for correlation and regression analyses. Behavior Research Methods, 41, 1149–1160.

- FeldmanHall O, & Shenhav A (2019). Resolving uncertainty in a social world. Nature Human Behaviour, 3(5), 426–435. 10.1038/s41562-019-0590-x

- Flagan T, Mumford JA, & Beer JS (2017). How do you see me? The neural basis of motivated meta-perception. Journal of Cognitive Neuroscience, 29(11), 1908–1917. 10.1162/jocn_a_01169

- Freeman JB, & Johnson KL (2016). More than meets the eye: Split-second social perception. Trends in Cognitive Sciences, 20(5), 362–374. 10.1016/j.tics.2016.03.003

- Frijda NH (1958). Facial expression and situational cues. The Journal of Abnormal and Social Psychology, 57(2), 149–154. 10.1037/h0045562

- Grey S, & Mathews A (2000). Effects of training on interpretation of emotional ambiguity. The Quarterly Journal of Experimental Psychology, 53(4), 1143–1162. 10.1080/713755937

- Hariri AR, Tessitore A, Mattay VS, Fera F, & Weinberger DR (2002). The amygdala response to emotional stimuli: A comparison of faces and scenes. NeuroImage, 17(1), 317–323.

- Joormann J, Waugh CE, & Gotlib IH (2015). Cognitive bias modification for interpretation in major depression: Effects on memory and stress reactivity. Clinical Psychological Science, 3(1), 126–139. 10.1177/2167702614560748

- King-Casas B, Tomlin D, Anen C, Camerer CF, Quartz SR, & Montague PR (2005). Getting to know you: Reputation and trust in a two-person economic exchange. Science, 308(5718), 78–83. 10.1126/science.1108062

- Knief U, & Forstmeier W (2018). Violating the normality assumption may be the lesser of two evils. bioRxiv. 10.1101/498931

- Kolers PA, Duchnicky RL, & Sundstroem G (1985). Size in the visual processing of faces and words. Journal of Experimental Psychology: Human Perception and Performance, 11(6), 726–751.

- Krieglmeyer R, Deutsch R, De Houwer J, & De Raedt R (2010). Being moved: Valence activates approach-avoidance behavior independently of evaluation and approach-avoidance intentions. Psychological Science, 21(4), 607–613. 10.1177/0956797610365131

- Lang PJ, Bradley MM, & Cuthbert BN (2008). International affective picture system (IAPS): Affective ratings of pictures and instruction manual [Technical Report A–8]. University of Florida.

- Lazerus T, Ingbretsen ZA, Stolier RM, Freeman JB, & Cikara M (2016). Positivity bias in judging ingroup members’ emotional expressions. Emotion, 16(8), 1117–1125. 10.1037/emo0000227

- Lees J, & Cikara M (2020). Inaccurate group meta-perceptions drive negative out-group attributions in competitive contexts. Nature Human Behaviour, 4(3), 279–286. 10.1038/s41562-019-0766-4

- Lindquist KA (2009). Language is powerful. Emotion Review, 1(1), 16–18. 10.1177/1754073908097177

- Lund K, & Burgess C (1996). Producing high-dimensional semantic spaces from lexical co-occurrence. Behavior Research Methods, Instruments, & Computers, 28(2), 203–208. 10.3758/BF03204766

- Lundqvist D, Flykt A, & Öhman A (1998). The Karolinska directed emotional faces—KDEF (CD ROM). Stockholm: Karolinska Institute, Department of Clinical Neuroscience, Psychology Section.

- Maass A, Salvi D, Arcuri L, & Semin G (1989). Language use in intergroup contexts: The linguistic intergroup bias. Journal of Personality and Social Psychology, 57(6), 981–993.

- MacDonald MC, Pearlmutter NJ, & Seidenberg MS (1994). The lexical nature of syntactic ambiguity resolution. Psychological Review, 101(4), 676–703. 10.1037/0033-295X.101.4.676

- Macrae CN, Milne AB, & Bodenhausen GV (1994). Stereotypes as energy-saving devices: A peek inside the cognitive toolbox. Journal of Personality and Social Psychology, 66(1), 37–47.

- McGlone MS, & Giles H (2011). Language and interpersonal communication. In Knapp ML & Daly JA (Eds.), The SAGE handbook of interpersonal communication. SAGE Publications.

- Mozilla. (2019). Performance.now(). Retrieved August 19, 2020, from https://developer.mozilla.org/en-US/docs/Web/API/Performance/now

- Neta M, Cantelon J, Haga Z, Mahoney CR, Taylor HA, & Davis FC (2017). The impact of uncertain threat on affective bias: Individual differences in response to ambiguity. Emotion, 17(8), 1137–1143. 10.1037/emo0000349

- Neta M, Harp NR, Henley DJ, Beckford SE, & Koehler K (2019). One step at a time: Physical activity is linked to positive interpretations of ambiguity. PLoS One, 14(11), e0225106. 10.1371/journal.pone.0225106

- Neta M, Kelley WM, & Whalen PJ (2013). Neural responses to ambiguity involve domain-general and domain-specific emotion processing systems. Journal of Cognitive Neuroscience, 25(4), 547–557. 10.1162/jocn_a_00363

- Neta M, Norris CJ, & Whalen PJ (2009). Corrugator muscle responses are associated with individual differences in positivity-negativity bias. Emotion, 9(5), 640–648. 10.1037/a0016819

- Neta M, & Tong TT (2016). Don’t like what you see? Give it time: Longer reaction times associated with increased positive affect. Emotion, 16(5), 730–739. 10.1037/emo0000181

- Neta M, & Whalen PJ (2010). The primacy of negative interpretations when resolving the valence of ambiguous facial expressions. Psychological Science, 21(7), 901–907. 10.1177/0956797610373934

- Nunnally JC, & Flaugher RL (1963). Psychological implications of word usage. Science, 140(3568), 775–781.

- Oosterhof NN, & Todorov A (2008). The functional basis of face evaluation. Proceedings of the National Academy of Sciences of the United States of America, 105(32), 11087–11092.

- Pennebaker JW, & Graybeal A (2001). Patterns of natural language use: Disclosure, personality, and social integration. Current Directions in Psychological Science, 10(3), 90–93.

- Petersen SE, Fox PT, Posner MI, Mintun M, & Raichle ME (1988). Positron emission tomographic studies of the cortical anatomy of single-word processing. Nature, 331(6157), 585–589.

- Petro NM, Tottenham N, & Neta M (2019). Positive valence bias is associated with inverse frontoamygdalar connectivity and less depressive symptoms in developmentally mature children [Preprint]. bioRxiv. 10.1101/839761

- Piantadosi ST, Tily H, & Gibson E (2012). The communicative function of ambiguity in language. Cognition, 122(3), 280–291. 10.1016/j.cognition.2011.10.004

- Platt ML, & Huettel SA (2008). Risky business: The neuroeconomics of decision making under uncertainty. Nature Neuroscience, 11(4), 398–403. 10.1038/nn2062

- Posner MI (1980). Orienting of attention. Quarterly Journal of Experimental Psychology, 32, 3–25. 10.1080/00335558008248231

- Posner MI, Petersen SE, Fox PT, & Raichle ME (1988). Localization of cognitive operations in the human brain. Science, 240(4859), 1627–1631.

- R Core Team. (2019). R: A language and environment for statistical computing. R Foundation for Statistical Computing. https://www.R-project.org/

- Rights JD, & Sterba SK (2019). Quantifying explained variance in multilevel models: An integrative framework for defining R-squared measures. Psychological Methods, 24(3), 309–338. 10.1037/met0000184

- Sato N, Nakamura K, Nakamura A, Sugiura M, Ito K, Fukuda H, & Kawashima R (1999). Different time course between scene processing and face processing: A MEG study. Neuroreport, 10(17), 3633–3637.

- Snyder M, & Swann WB (1978). Hypothesis-testing processes in social interaction. Journal of Personality and Social Psychology, 36(11), 1202–1212.

- Todd AR, Galinsky AD, & Bodenhausen GV (2012). Perspective taking undermines stereotype maintenance processes: Evidence from social memory, behavior explanation, and information solicitation. Social Cognition, 30(1), 94–108.

- Tottenham N, Tanaka JW, Leon AC, McCarry T, Nurse M, Hare TA, Marcus DJ, Westerlund A, Casey BJ, & Nelson C (2009). The NimStim set of facial expressions: Judgments from untrained research participants. Psychiatry Research, 168(3), 242–249. 10.1016/j.psychres.2008.05.006

- Trope Y, & Thompson EP (1997). Looking for truth in all the wrong places? Asymmetric search of individuating information about stereotyped group members. Journal of Personality and Social Psychology, 73(2), 229–241.

- Warriner AB, Kuperman V, & Brysbaert M (2013). Norms of valence, arousal, and dominance for 13,915 English lemmas. Behavior Research Methods, 45(4), 1191–1207. 10.3758/s13428-012-0314-x

- Willis J, & Todorov A (2006). First impressions: Making up your mind after a 100-ms exposure to a face. Psychological Science, 17(7), 592–598. 10.1111/j.1467-9280.2006.01750.x
