Abstract

In March 2020, the World Health Organization declared COVID-19 a pandemic, warning of its dangers and its rapid spread throughout the world. In March 2021, a second wave began with a new strain of the virus that was more dangerous for some countries, including India, which recorded 400,000 new cases and more than 4,000 deaths per day. The pandemic has overloaded the medical sector, especially radiology. Deep-learning techniques have been used to reduce the burden on hospitals and to help physicians make accurate diagnoses. In this study, two deep-learning models, ResNet-50 and AlexNet, were used to classify X-ray images collected from many sources. Each network was applied to a multiclass (four-class) dataset and a two-class dataset. The images were processed to remove noise, and data augmentation was applied to the minority classes to balance the classes. The features extracted by the convolutional neural network (CNN) models were combined with features from the traditional Gray-Level Co-occurrence Matrix (GLCM) and Local Binary Pattern (LBP) algorithms into a 1-D vector for each image, producing more representative features for each disease. Network parameters were tuned for optimum performance. With the multiclass dataset (COVID-19, viral pneumonia, lung opacity, and normal), the ResNet-50 network reached an accuracy, sensitivity, specificity, and Area Under the Curve (AUC) of 95%, 94.5%, 98%, and 97.10%, respectively; with the binary dataset (COVID-19 and normal), it reached an accuracy, sensitivity, specificity, and AUC of 99%, 98%, 98%, and 97.51%, respectively.

1. Introduction

COVID-19 first appeared in Wuhan, China, in December 2019 and spread very quickly around the world. In March 2020, the World Health Organization declared it a global pandemic, which led to the closure of airports, restrictions on internal and external movement, and the paralysis of the global economy. By May 13, 2021, the total number of global cases had reached about 161,596,640, with 17,782,865 active cases, 3,352,620 deaths, and 104,362 serious or critical cases, and these numbers were still increasing daily [1]. The virus spreads through saliva droplets and nasal secretions. Symptoms include high fever, dry cough, headache, muscle aches, tingling, sneezing, sore throat, and mild to moderate respiratory illness. About 97% of people with COVID-19 have mild symptoms, and 3% become critical cases. However, the elderly and people with pre-existing conditions such as asthma, pneumonia, heart disease, and diabetes are at a higher risk of dying from COVID-19.

Two main methods for detecting COVID-19 are available. The first is real-time reverse-transcriptase polymerase chain reaction (rRT-PCR) testing of nasopharyngeal swab samples [2]. The second is chest imaging using X-rays or chest computed tomography (CCT) [3, 4]. Swab collection raises concerns about infection through contact with surfaces and gloves, which endangers medical staff, so diagnosis using CCT is considered safer for medical workers. CCT is also considered more accurate because it reveals the hazy white spots in the lungs that are signs of COVID-19. Further, CCT imaging is superior to X-ray imaging because it offers high resolution and 3D imaging over 360°, whereas X-ray imaging provides only 2D images. For example, one study reported that CCT detected 97% of COVID-19 cases, whereas the swab method achieved a diagnostic accuracy of 52% [5]. Because the growing number of daily cases burdens hospitals, doctors, and radiologists, diagnoses must be made quickly. Researchers have therefore introduced artificial intelligence techniques that analyze CCT and X-ray images to distinguish COVID-19 from other infections, such as pneumonia, and from normal cases. Deep-learning CNNs are among the most important of these techniques, helping doctors and radiologists interpret medical images, including lung images. In this study, we used X-ray images to diagnose COVID-19 and distinguish it from viral pneumonia, lung opacity, and normal cases in order to reduce the burden on hospitals and doctors.

The main contributions of this paper are as follows:

- Building deep-learning models that reduce the burden on hospitals during the COVID-19 outbreak and help physicians diagnose COVID-19 more accurately from X-ray images

- Combining the features extracted by CNN models with those of the conventional GLCM and LBP algorithms

- Extracting radiographic texture patterns, such as pulmonary consolidation, patchy ground-glass opacities, and reticular opacities, to distinguish each disease

- Using a dataset compiled from many sources, and applying data augmentation to the minority classes (but not the majority class) to balance the classes

The rest of this paper is organized as follows: Section 2 reviews related work, Section 3 describes the materials and methods applied in this paper, Section 4 analyzes the results, Section 5 compares and discusses the results against existing systems, and Section 6 presents the conclusions.

2. Literature Review

Several deep-learning techniques have been proposed to diagnose COVID-19 from CCT or X-ray images. Loey et al. combined a generative adversarial network (GAN) with deep transfer learning to diagnose COVID-19 from X-ray images [6]. Toğaçar et al. classified a dataset of COVID-19, pneumonia, and normal images. All images were preprocessed using the fuzzy color technique, and two models, MobileNetV2 and SqueezeNet, were trained on the dataset. Social mimic optimization was applied to process the features, which were then fed into a Support Vector Machine (SVM) classifier to classify each image [7]. Tabik et al. created COVIDGR-1.0, a homogeneous and balanced dataset that includes all levels of severity, to demystify the sensitivity achieved by deep-learning techniques, and presented the COVID-SDNet approach, which diagnoses COVID-19 with high accuracy [8]. Ni et al. applied MVP-Net and 3D U-Net to CCT scans of 96 COVID-19 patients from three hospitals in China to segment and detect lesions; their algorithms proved efficient in helping specialists diagnose COVID-19 faster and with high accuracy [9].

Ko et al. developed a fast-track COVID-19 classification network (FCONet) to diagnose COVID-19 from CCT images. They trained four deep-network models on the dataset, ResNet-50, Xception, VGG16, and Inception-V3, of which ResNet-50 performed best [10]. Wang et al. proposed DeCovNet to classify COVID-19 and localize lesions in 3D CT images. A pretrained UNet was applied to segment the lesion region, which was then passed into a deep 3D network to predict COVID-19 [11]. Sun et al. applied an adaptive feature selection guided deep forest (AFS-DF) to diagnose COVID-19. They extracted the most representative features from CT images and, to avoid redundant features, applied a feature-selection technique based on a pretrained deep forest. The method achieved an accuracy, sensitivity, and specificity of 91.79%, 93.05%, and 89.95%, respectively [12]. Apostolopoulos et al. presented a MobileNet v2 model to diagnose COVID-19 from X-ray images. They showed that training the model from scratch outperformed transfer learning, and the network achieved satisfactory results for the diagnosis of COVID-19 [13].

Marques et al. developed a CNN based on the EfficientNet architecture and applied it both to binary classification (COVID-19 vs. normal) and to multiclass classification (COVID-19, pneumonia, and normal) [14]. Bahadur Chandra et al. presented an automatic COVID-19 screening system (ACoS) that uses radiographic texture features of chest X-ray (CXR) images to distinguish suspected cases from normal ones [15]. Wang et al. presented the FGCNet system to detect COVID-19 from CCT images. While a CNN extracted the distinctive individual features of each image, relation-aware representations were obtained from a graph convolutional network (GCN), and the individual and relation-aware features were fused into deeper features [16].

3. Material and Methods

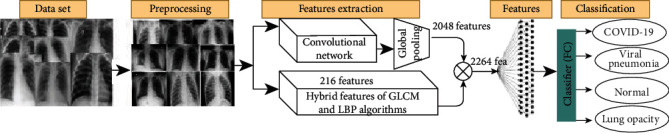

The motivation behind this work was to help doctors and radiologists detect patients with COVID-19 using deep-learning techniques. Two pretrained deep-learning models, ResNet-50 and AlexNet, were used to extract the most important distinguishing features from X-ray images. In addition, features were extracted with the conventional GLCM and LBP algorithms, and all features were fused into a 1-D feature vector. Figure 1 describes the methodology used to diagnose COVID-19.

Figure 1.

Methodology for diagnosing COVID-19.

3.1. Dataset Description

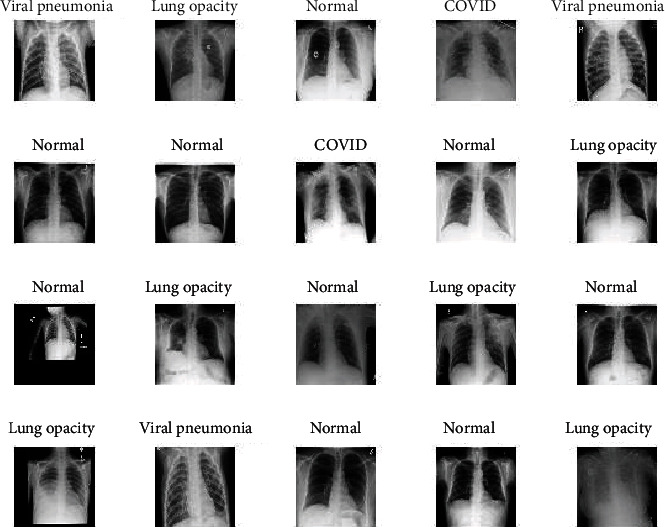

The chest X-ray database was compiled by a team of researchers from Qatar University in Doha and Dhaka University in Bangladesh, together with collaborators from Malaysia and Pakistan. The dataset consists of 21,165 X-ray images divided into four classes: 3,616 images of COVID-19-positive cases collected from several sources [17–23], 1,345 images of viral pneumonia collected from [24], 10,192 images of normal patients collected from [24, 25], and 6,012 images of lung opacity (non-COVID-19) collected from the CXR dataset of the Radiological Society of North America (RSNA) [25]. Figure 2 shows samples from the dataset used in this study.

Figure 2.

Samples from a multiclass dataset.

3.2. Preprocessing

X-ray images contain noise caused by differences in contrast, light reflections, and patient movement during acquisition. This noise complicates computation and reduces the diagnostic accuracy of CNNs, so all images were preprocessed before training and testing [26]. Moreover, because the dataset was collected from several sources, imaging intensity varies from one X-ray device to another, which necessitates normalization to reduce intensity inhomogeneity. The X-ray images were enhanced by computing the mean of each RGB color channel and then scaling the channels for color constancy. Finally, an average filter was applied, replacing each pixel with the average value of its neighbors. All images were also resized for the CNN models: 224 × 224 pixels for ResNet-50 and 227 × 227 pixels for AlexNet [27, 28].
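The preprocessing chain above can be sketched in Python with NumPy. This is a minimal sketch under stated assumptions, not the authors' MATLAB implementation: the color-constancy step is assumed to be the gray-world method (each channel is scaled so that its mean matches the mean over all three channels), the average filter is assumed to be 3 × 3, and nearest-neighbor interpolation stands in for whatever resizing method was actually used. All function names are hypothetical.

```python
import numpy as np

def gray_world_normalize(img: np.ndarray) -> np.ndarray:
    """Scale each RGB channel so that its mean equals the mean of all channels.

    Assumption: the paper's "scaling for color constancy" is modeled here
    with the gray-world method; the exact formula is not stated.
    """
    img = img.astype(np.float64)
    channel_means = img.reshape(-1, 3).mean(axis=0)          # mean of R, G, B
    scale = channel_means.mean() / np.maximum(channel_means, 1e-8)
    return np.clip(img * scale, 0.0, 255.0)

def average_filter(img: np.ndarray, k: int = 3) -> np.ndarray:
    """Replace each pixel with the mean of its k x k neighborhood (k odd, edges replicated)."""
    pad = k // 2
    padded = np.pad(img, ((pad, pad), (pad, pad), (0, 0)), mode="edge")
    h, w = img.shape[:2]
    out = np.zeros(img.shape, dtype=np.float64)
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + h, dx:dx + w]
    return out / (k * k)

def resize_nearest(img: np.ndarray, size: int) -> np.ndarray:
    """Nearest-neighbor resize to size x size pixels."""
    h, w = img.shape[:2]
    rows = (np.arange(size) * h / size).astype(int)
    cols = (np.arange(size) * w / size).astype(int)
    return img[rows][:, cols]

def preprocess(img: np.ndarray, size: int) -> np.ndarray:
    """Normalize color, smooth, then resize (224 for ResNet-50, 227 for AlexNet)."""
    return resize_nearest(average_filter(gray_world_normalize(img)), size)

# Usage on a synthetic 512 x 480 RGB image standing in for an X-ray.
rng = np.random.default_rng(0)
xray = rng.integers(0, 256, size=(512, 480, 3)).astype(np.uint8)
x_resnet = preprocess(xray, 224)
x_alexnet = preprocess(xray, 227)
```

Grayscale X-rays stored as three identical channels are left unchanged by the gray-world step, so in that case only the smoothing and resizing have an effect.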

3.3. Data Augmentation

Data augmentation improves the performance of deep-learning networks by generating modified copies of existing images rather than acquiring new data, which is valuable given the scarcity of medical images [29]. It increases data diversity during the training phase and helps address the problem of unbalanced data; increasing the number of training images also reduces overfitting. In this study, the dataset contained 21,165 images distributed into four unbalanced classes: 3,616 images of COVID-19, 1,345 images of viral pneumonia, 10,192 images of normal patients, and 6,012 images of lung opacity (non-COVID-19). Because the classes were unbalanced, augmentation was applied to balance them, using rotation, horizontal and vertical shifting, padding, horizontal and vertical flipping, and cropping [30]. Augmentation was applied to three classes (COVID-19, viral pneumonia, and lung opacity) but not to the normal class, which already contained 10,192 images. Table 1 shows the number of images before and after data augmentation.

Table 1.

Number of training images before and after data augmentation.

| Class | COVID-19 | Viral pneumonia | Normal | Lung opacity |
|---|---|---|---|---|
| No. of images before augmentation | 3,616 | 1,345 | 8,154 | 6,012 |
| No. of images after augmentation | 8,107 | 8,087 | 8,154 | 8,138 |
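The class-balancing step can be illustrated as follows. This sketch assumes that each minority class is topped up toward the size of the normal class, which roughly matches Table 1, although the reported post-augmentation counts (8,107, 8,087, and 8,138) are not exactly 8,154, so the paper's precise target rule is not known. Only flips, a 90-degree rotation, and a wrap-around shift are shown; padding and cropping are omitted, and all names are hypothetical.

```python
import numpy as np

# Training-set class sizes before augmentation (Table 1).
counts = {"COVID-19": 3616, "Viral pneumonia": 1345, "Normal": 8154, "Lung opacity": 6012}

def augmentations_needed(counts: dict, target: int) -> dict:
    """Synthetic images to add so each class reaches `target`; larger classes get 0."""
    return {name: max(0, target - n) for name, n in counts.items()}

def random_augment(img: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """Apply one randomly chosen transform to a 2-D image."""
    choice = int(rng.integers(0, 4))
    if choice == 0:
        return np.fliplr(img)                   # horizontal flip
    if choice == 1:
        return np.flipud(img)                   # vertical flip
    if choice == 2:
        return np.rot90(img)                    # 90-degree rotation (square images keep their shape)
    shift = int(rng.integers(-10, 11))
    return np.roll(img, shift, axis=1)          # horizontal shift with wrap-around (a simplification)

def balance_class(images: list, n_extra: int, rng: np.random.Generator) -> list:
    """Return the originals plus n_extra augmented copies of randomly chosen originals."""
    extra = [random_augment(images[int(rng.integers(len(images)))], rng) for _ in range(n_extra)]
    return images + extra

needed = augmentations_needed(counts, target=counts["Normal"])
# needed == {"COVID-19": 4538, "Viral pneumonia": 6809, "Normal": 0, "Lung opacity": 2142}
```

Drawing the source image at random for each copy spreads the synthetic images across the whole minority class instead of repeatedly transforming the same few originals.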

3.4. Feature Extraction

In this study, texture, shape, and color features were extracted both by the CNNs, through their convolutional layers, and by the conventional GLCM and LBP algorithms. All extracted features were then combined into a 1-D feature vector for each image.

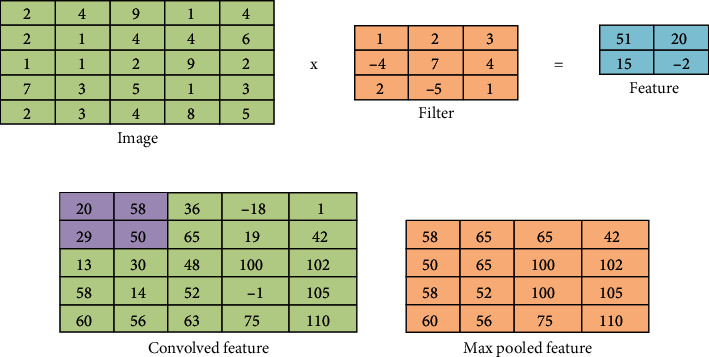

3.4.1. Convolutional and Pooling Layers

X-ray images of COVID-19 patients show radiographic texture patterns such as pulmonary consolidation, patchy ground-glass opacities, and reticular opacities. CNNs extract these features through the filters in their convolutional layers [31]. A CNN stacks several convolutional and pooling layers to extract the most representative features of COVID-19. Figure 3(a) shows how the filters in a convolutional layer slide over the image according to the stride to extract features; 9,216 features are extracted for each image. A max pooling layer is then applied to reduce the spatial size of the features extracted by the convolutional layers [32], reducing them to 2,048 features for each image. Figure 3(b) shows a max pooling layer with a filter size of two and a stride of one. A rectified linear unit (ReLU) is applied so that the network can learn complex nonlinear mappings between inputs and responses: positive inputs pass unchanged, and negative inputs are set to zero. Equation (1) gives the feature maps.

$$x_j^{l} = f\left(\sum_{i \in M_j} x_i^{l-1} * k_{ij}^{l} + b_j^{l}\right) \tag{1}$$

where $x_i^{l-1}$ denotes the local features obtained from the previous layer, $k_{ij}^{l}$ denotes the adjustable filter, and $b_j^{l}$ denotes the trainable bias. The benefit of using a bias is to prevent overfitting during network training [33]. $M_j$ denotes the set of input maps, and $f$ denotes the activation function.

Figure 3.

(a) Convolution with a filter size of 3 and a stride of 2. (b) Max pooling.

As mentioned, the pooling layers use the max operation to reduce the number of computational nodes and help prevent overfitting. The pooling layer is also responsible for downsampling the feature maps [34]. Equation (2) describes the pooling process.

$$x_j^{l} = \mathrm{down}\left(x_j^{l-1}\right) \tag{2}$$

The down(·) function denotes downsampling (here, taking the maximum over each pooling window), which summarizes the local features passed to the next layer.
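Equations (1) and (2) can be made concrete with a small NumPy sketch for a single output map. It assumes "valid" convolution (no padding), ReLU as the activation f, and max pooling as down(·); as in most deep-learning libraries, the "convolution" is computed as cross-correlation (the filter is not flipped). The function names are illustrative and are not taken from ResNet-50 or AlexNet.

```python
import numpy as np

def conv2d(x: np.ndarray, k: np.ndarray, stride: int = 1) -> np.ndarray:
    """'Valid' 2-D cross-correlation of one input map with one filter."""
    kh, kw = k.shape
    oh = (x.shape[0] - kh) // stride + 1
    ow = (x.shape[1] - kw) // stride + 1
    out = np.empty((oh, ow))
    for i in range(oh):
        for j in range(ow):
            patch = x[i * stride:i * stride + kh, j * stride:j * stride + kw]
            out[i, j] = np.sum(patch * k)
    return out

def conv_layer(inputs: list, kernels: list, b: float, stride: int = 1) -> np.ndarray:
    """Eq. (1) for one output map j: f(sum_i x_i * k_ij + b_j) with f = ReLU."""
    z = sum(conv2d(x, k, stride=stride) for x, k in zip(inputs, kernels)) + b
    return np.maximum(z, 0.0)   # ReLU: positives pass, negatives become zero

def max_pool(x: np.ndarray, size: int = 2, stride: int = 2) -> np.ndarray:
    """Eq. (2) with down(.) = maximum over each size x size window."""
    oh = (x.shape[0] - size) // stride + 1
    ow = (x.shape[1] - size) // stride + 1
    return np.array([[x[i * stride:i * stride + size, j * stride:j * stride + size].max()
                      for j in range(ow)] for i in range(oh)])

x = np.arange(49, dtype=float).reshape(7, 7)      # one 7 x 7 input map
k = np.ones((3, 3))                               # one 3 x 3 filter, as in Figure 3(a)
fmap = conv_layer([x], [k], b=-100.0, stride=2)   # (7 - 3) // 2 + 1 = 3, so a 3 x 3 map
pooled = max_pool(fmap, size=2, stride=1)         # 2 x 2 map, as in Figure 3(b)
# fmap   -> [[0, 0, 8], [98, 116, 134], [224, 242, 260]]
# pooled -> [[116, 134], [242, 260]]
```

The output size of each layer follows ⌊(W − F)/S⌋ + 1 for input width W, filter size F, and stride S, which is why a 3 × 3 filter with stride 2 turns a 7 × 7 map into a 3 × 3 map; the negative bias here makes the ReLU zero out the two smallest responses.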

3.4.2. GLCM and LBP Algorithms

The GLCM algorithm extracts texture features from the region of interest. In smooth regions, neighboring pixels have similar gray-level values, whereas in rough regions their values differ considerably. The GLCM algorithm collects spatial and statistical information from the region of interest: the spatial information describes the relationship between a center pixel and its neighbor at distance d and angle θ (0°, 45°, 90°, or 135°). In this study, 13 statistical features were extracted, including correlation, energy, mean, smoothness, kurtosis, contrast, standard deviation, variance, homogeneity, skewness, entropy, and RMS.

The LBP algorithm describes the texture of the lesion region as binary patterns and extracts features from the region of interest. It encodes each center pixel by comparing it with its neighboring pixels, which are selected according to the radius R. In this study, 203 LBP features were extracted for each image. The GLCM and LBP features were then combined so that each image is represented by a 1-D vector of 216 features.
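A minimal NumPy sketch of both texture descriptors follows. It assumes 8 gray levels, a symmetric and normalized GLCM at distance d = 1, and the basic 8-neighbor LBP with R = 1 summarized as a 256-bin histogram. Only four of the GLCM statistics are computed, and the 256-bin histogram does not reproduce the paper's 203 LBP features, whose exact binning is not stated. The function names are hypothetical; scikit-image provides optimized `graycomatrix`, `graycoprops`, and `local_binary_pattern` functions in `skimage.feature`.

```python
import numpy as np

def glcm(img: np.ndarray, dy: int, dx: int, levels: int = 8) -> np.ndarray:
    """Symmetric, normalized gray-level co-occurrence matrix for offset (dy, dx)."""
    q = (img.astype(np.float64) * levels / 256).astype(int)   # quantize 0..255 to 0..levels-1
    h, w = q.shape
    m = np.zeros((levels, levels))
    for y in range(max(0, -dy), min(h, h - dy)):
        for x in range(max(0, -dx), min(w, w - dx)):
            m[q[y, x], q[y + dy, x + dx]] += 1
    m = m + m.T                                               # count both directions
    return m / m.sum()

def glcm_features(p: np.ndarray) -> dict:
    """A subset of the GLCM statistics listed in the text."""
    i, j = np.indices(p.shape)
    nz = p[p > 0]
    return {
        "contrast": float(np.sum(p * (i - j) ** 2)),
        "energy": float(np.sum(p ** 2)),
        "homogeneity": float(np.sum(p / (1.0 + np.abs(i - j)))),
        "entropy": float(-np.sum(nz * np.log2(nz))),
    }

def lbp_histogram(img: np.ndarray) -> np.ndarray:
    """Basic LBP with R = 1 and 8 neighbors, summarized as a 256-bin histogram."""
    x = img.astype(np.int64)
    h, w = x.shape
    c = x[1:-1, 1:-1]                                         # center pixels
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]
    codes = np.zeros_like(c)
    for bit, (dy, dx) in enumerate(offsets):
        neighbor = x[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]
        codes |= (neighbor >= c).astype(np.int64) << bit      # set bit if neighbor >= center
    return np.bincount(codes.ravel(), minlength=256)

# d = 1 offsets for 0, 45, 90, and 135 degrees (rows grow downward; conventions vary).
offsets = {0: (0, 1), 45: (-1, 1), 90: (-1, 0), 135: (-1, -1)}
rng = np.random.default_rng(0)
roi = rng.integers(0, 256, size=(64, 64))
glcm_feats = [glcm_features(glcm(roi, dy, dx)) for dy, dx in offsets.values()]
lbp_feats = lbp_histogram(roi)
```

A flat region yields zero contrast and maximal energy, while a checkerboard yields the largest possible contrast for its two gray levels, which is the smooth-versus-rough distinction described above.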

3.4.3. Combined Features Extracted

After 2,048 features were obtained from the convolutional layers of the CNNs and 216 features from the GLCM and LBP algorithms, the features were combined so that each image is represented by a 1-D vector of 2,264 features.
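The fusion step amounts to concatenating the three feature groups. The sketch below assumes simple serial concatenation in the order CNN, GLCM, LBP; the order and the function name are illustrative assumptions, and the dimensions are those reported in Sections 3.4.1 and 3.4.2.

```python
import numpy as np

# Feature dimensions reported in Sections 3.4.1 and 3.4.2.
CNN_DIM, GLCM_DIM, LBP_DIM = 2048, 13, 203

def fuse_features(cnn_feats, glcm_feats, lbp_feats) -> np.ndarray:
    """Serially concatenate one image's feature groups into a single 1-D vector."""
    parts = [np.ravel(cnn_feats), np.ravel(glcm_feats), np.ravel(lbp_feats)]
    for part, expected in zip(parts, (CNN_DIM, GLCM_DIM, LBP_DIM)):
        if part.size != expected:
            raise ValueError(f"expected {expected} features, got {part.size}")
    return np.concatenate(parts)

# Placeholder vectors; real values would come from the CNN and texture extractors.
fused = fuse_features(np.zeros(CNN_DIM), np.ones(GLCM_DIM), np.ones(LBP_DIM))
print(fused.shape)   # (2264,)
```

Because CNN activations and texture statistics can have very different numeric ranges, standardizing each feature with training-set statistics before classification is a common precaution; the paper does not say whether this was done.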

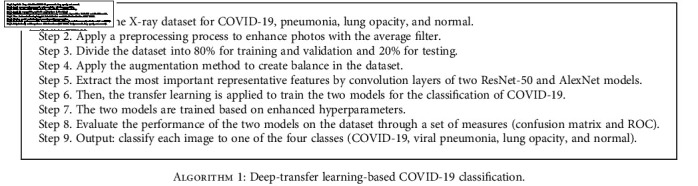

3.5. Transfer Learning

Deep-learning techniques classify medical images with high diagnostic accuracy by extracting their features. This can be done by training CNN models from scratch, by transfer learning with pretrained CNN models, or by a hybrid approach, called fine-tuning, in which the parameters of specific layers of a pretrained model are tuned [35, 36]. In this study, we used transfer learning, tuning the parameters of specific layers and replacing the final classification layers to match the new dataset. The models were first trained on the ImageNet dataset, which is divided into more than a thousand classes, and the acquired knowledge was then transferred to the new task of diagnosing a dataset containing COVID-19 patients. Algorithm 1 describes how the ResNet-50 and AlexNet models work.

Algorithm 1.

Deep-transfer learning-based COVID-19 classification.
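As a rough illustration of the "replace the last classification layer" step described in Section 3.5, the NumPy sketch below trains a new softmax layer with Adam on features that are assumed to come from the frozen pretrained layers. It is not the authors' MATLAB implementation: fine-tuning of earlier layers is not shown, the default learning rate simply mirrors the initial learning rate in Table 2, and the synthetic data and function names are hypothetical.

```python
import numpy as np

def train_new_head(feats, labels, n_classes, lr=1e-4, epochs=200, seed=0):
    """Train a fresh softmax classification layer on frozen features with Adam.

    feats: (N, D) features from the frozen pretrained layers; labels: (N,) ints.
    """
    rng = np.random.default_rng(seed)
    n, d = feats.shape
    W = rng.normal(0.0, 0.01, size=(d, n_classes))
    b = np.zeros(n_classes)
    onehot = np.eye(n_classes)[labels]
    params = [W, b]
    m = [np.zeros_like(p) for p in params]            # Adam first moments
    v = [np.zeros_like(p) for p in params]            # Adam second moments
    beta1, beta2, eps = 0.9, 0.999, 1e-8
    for t in range(1, epochs + 1):
        logits = feats @ W + b
        logits -= logits.max(axis=1, keepdims=True)   # numerical stability
        probs = np.exp(logits)
        probs /= probs.sum(axis=1, keepdims=True)
        grad_logits = (probs - onehot) / n            # gradient of mean cross-entropy
        grads = [feats.T @ grad_logits, grad_logits.sum(axis=0)]
        for p, g, mi, vi in zip(params, grads, m, v):
            mi[:] = beta1 * mi + (1 - beta1) * g
            vi[:] = beta2 * vi + (1 - beta2) * g ** 2
            m_hat = mi / (1 - beta1 ** t)
            v_hat = vi / (1 - beta2 ** t)
            p -= lr * m_hat / (np.sqrt(v_hat) + eps)  # in-place update of W or b
    return W, b

def predict(feats, W, b):
    return np.argmax(feats @ W + b, axis=1)

# Synthetic "frozen" features for 3 classes (hypothetical data).
rng = np.random.default_rng(1)
centers = rng.normal(0.0, 3.0, size=(3, 20))
labels = rng.integers(0, 3, size=300)
feats = centers[labels] + rng.normal(0.0, 1.0, size=(300, 20))
W, b = train_new_head(feats, labels, n_classes=3, lr=1e-2, epochs=300)
train_acc = np.mean(predict(feats, W, b) == labels)
```

Training only the new layer keeps the pretrained ImageNet filters intact, which is what makes transfer learning practical when the new dataset is much smaller than ImageNet.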

4. Experimental Results

Two experiments were conducted with each of the ResNet-50 and AlexNet models: the first for multiclass classification (four classes) and the second for binary classification (COVID-19 and normal). The parameters were tuned for the best performance on both the multiclass and binary datasets, as shown in Table 2.

Table 2.

Options for configuring training parameters for deep-learning networks.

| Option | ResNet-50 (multiclass) | AlexNet (multiclass) | ResNet-50 (binary) | AlexNet (binary) |
|---|---|---|---|---|
| Optimizer | Adam | Adam | Adam | Adam |
| Minibatch size | 10 | 120 | 10 | 120 |
| Max epochs | 5 | 10 | 5 | 10 |
| Iterations per epoch | 1,354 | 105 | 883 | 69 |
| Maximum iterations | 6,770 | 1,050 | 4,415 | 690 |
| Initial learning rate | 0.0001 | 0.0001 | 0.0001 | 0.0001 |
| Validation frequency | 5 | 50 | 5 | 50 |
| Training time | 674 min 32 sec | 81 min 5 sec | 442 min 58 sec | 71 min 50 sec |
| Execution environment | GPU | GPU | GPU | GPU |
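The iteration counts in Table 2 are consistent with one another: each iteration processes one minibatch, and the maximum number of iterations equals the iterations per epoch multiplied by the maximum number of epochs. The short Python check below uses only values from Table 2; the variable names are illustrative.

```python
# (iterations per epoch, max epochs) for each configuration in Table 2.
table2 = {
    ("ResNet-50", "multiclass"): (1354, 5),
    ("AlexNet", "multiclass"): (105, 10),
    ("ResNet-50", "binary"): (883, 5),
    ("AlexNet", "binary"): (69, 10),
}

# Maximum iterations = iterations per epoch x max epochs.
max_iterations = {cfg: per_epoch * epochs for cfg, (per_epoch, epochs) in table2.items()}
for (model, task), total in max_iterations.items():
    print(f"{model:9s} {task:10s} {total:,}")
```

The much smaller minibatch of ResNet-50 (10 versus 120) explains why it needs roughly ten times more iterations per epoch than AlexNet.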

4.1. Model Training

The ResNet-50 and AlexNet models were trained by transfer learning with parameter tuning. Table 2 lists the training options and training times in the MATLAB R2018b environment. The models were run on a machine with an Intel Core i5 (6th generation) processor, a 4 GB NVIDIA GPU, and 8 GB of RAM.

4.2. Results with Multiclasses

The dataset contained 21,165 images divided into four classes, as described previously, and was split into 80% for training and validation and 20% for testing (80 : 20). After parameter tuning, ResNet-50 was trained with a minibatch size of 10, and training was completed after 6,770 iterations in 674 min 32 sec. AlexNet was trained with a minibatch size of 120, and training was completed after 1,050 iterations in 81 min 5 sec.
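The 80 : 20 split can be sketched as a stratified split, which holds out the same fraction of every class. The paper does not state whether its split was stratified, so this is an assumption, and the function name is hypothetical. With the class sizes from Section 3.1, a stratified 20% hold-out gives 723 COVID-19 test images, the same number of COVID-19 test images reported in the results.

```python
import numpy as np

def stratified_split(labels, test_frac=0.2, seed=0):
    """Return (train_val_idx, test_idx) holding out about test_frac of each class."""
    labels = np.asarray(labels)
    rng = np.random.default_rng(seed)
    train_idx, test_idx = [], []
    for cls in np.unique(labels):
        idx = np.flatnonzero(labels == cls)
        rng.shuffle(idx)
        n_test = int(round(test_frac * idx.size))
        test_idx.append(idx[:n_test])
        train_idx.append(idx[n_test:])
    return np.concatenate(train_idx), np.concatenate(test_idx)

# Class order: COVID-19, viral pneumonia, normal, lung opacity (sizes from Section 3.1).
labels = np.repeat(np.arange(4), [3616, 1345, 10192, 6012])
train_val_idx, test_idx = stratified_split(labels, test_frac=0.2)
test_counts = np.bincount(labels[test_idx])   # 723, 269, 2038, 1202 test images per class
```

Applying augmentation only after the split, and only to the training portion, keeps augmented copies of test images out of the training set.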

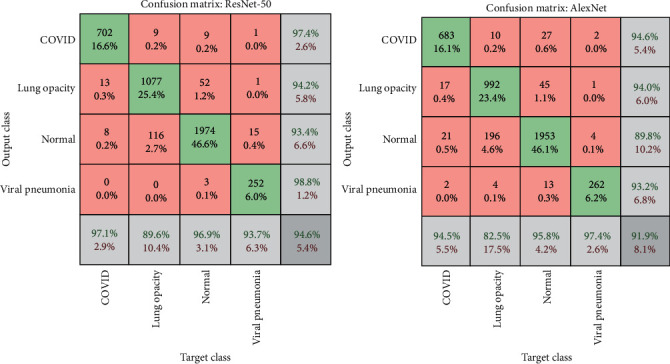

Figure 4 shows the confusion matrices of the ResNet-50 and AlexNet models. A confusion matrix contains the correctly classified images, called true positives (TP) and true negatives (TN), and the misclassified images, called false positives (FP) and false negatives (FN). From the confusion matrix, the accuracy, sensitivity, and specificity were calculated using Equations (3), (4), and (5), and the AUC was calculated using Equation (6) [37].

Figure 4.

Confusion matrix for multiclass dataset by ResNet-50 and AlexNet models.

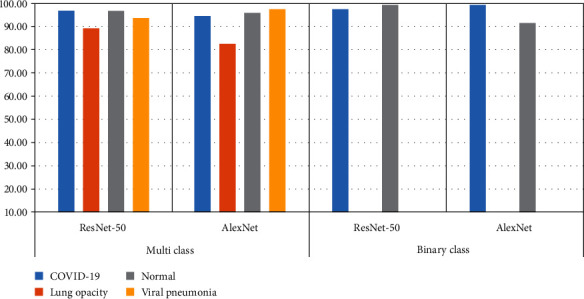

Table 3 and Figure 5 present the multiclass and binary evaluation of the ResNet-50 and AlexNet models. Both networks achieved promising results on the multiclass dataset: ResNet-50 achieved an accuracy, sensitivity, specificity, and AUC of 95%, 94.5%, 98%, and 97.10%, respectively, while AlexNet achieved 92%, 92.5%, 96.75%, and 99.63%, respectively. Table 4 shows the results for each disease. ResNet-50 reached a COVID-19 diagnostic accuracy of 97.10%, correctly classifying 702 of 723 images, while misclassifying 13 images as lung opacity and 8 as normal. AlexNet reached a COVID-19 diagnostic accuracy of 94.5%, correctly classifying 683 of 723 images, while misclassifying 17 images as lung opacity, 21 as normal, and 2 as viral pneumonia.

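$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \times 100\% \tag{3}$$

$$\text{Sensitivity} = \frac{TP}{TP + FN} \times 100\% \tag{4}$$

$$\text{Specificity} = \frac{TN}{TN + FP} \times 100\% \tag{5}$$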

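$$\text{AUC} = \int_{0}^{1} \text{TPR} \, d(\text{FPR}), \quad \text{TPR} = \frac{TP}{TP + FN}, \quad \text{FPR} = \frac{FP}{FP + TN} \tag{6}$$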
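Equations (3) to (6) can be computed directly from a confusion matrix and classifier scores, as in the NumPy sketch below. It assumes that rows are true classes and columns are predicted classes, computes sensitivity and specificity one-vs-rest for a single class, and estimates AUC with the rank-based (Mann-Whitney) formulation, which equals the area under the empirical ROC curve. How the paper averaged per-class values into its overall multiclass figures is not stated. The function names and the small 2-class matrix are hypothetical; the COVID-19 row uses the counts reported in Section 4.2, in an assumed column order of COVID-19, lung opacity, normal, viral pneumonia.

```python
import numpy as np

def accuracy(cm: np.ndarray) -> float:
    """Eq. (3) generalized: correctly classified images / all images."""
    return float(np.trace(cm) / cm.sum())

def one_vs_rest(cm: np.ndarray, k: int) -> dict:
    """Eqs. (4) and (5) for class k; rows = true class, columns = predicted class."""
    tp = cm[k, k]
    fn = cm[k, :].sum() - tp
    fp = cm[:, k].sum() - tp
    tn = cm.sum() - tp - fn - fp
    return {"sensitivity": tp / (tp + fn), "specificity": tn / (tn + fp)}

def auc_rank(scores, is_positive) -> float:
    """AUC as P(random positive scores above random negative); ties count 1/2."""
    scores = np.asarray(scores, dtype=float)
    pos_mask = np.asarray(is_positive, dtype=bool)
    pos, neg = scores[pos_mask], scores[~pos_mask]
    wins = (pos[:, None] > neg[None, :]).sum() + 0.5 * (pos[:, None] == neg[None, :]).sum()
    return float(wins / (pos.size * neg.size))

# COVID-19 row of the ResNet-50 multiclass confusion matrix (counts from Section 4.2).
covid_row = np.array([702, 13, 8, 0])
covid_sensitivity = 100 * covid_row[0] / covid_row.sum()   # 702 / 723, about 97.10%

# Hypothetical 2-class confusion matrix and scores, for illustration only.
cm = np.array([[45, 5], [10, 40]])
acc = accuracy(cm)                                  # 0.85
rates = one_vs_rest(cm, 0)                          # sensitivity 0.9, specificity 0.8
auc = auc_rank([0.9, 0.4, 0.5, 0.1], [1, 1, 0, 0])  # 0.75
```

Unlike Equations (3) to (5), the AUC needs continuous scores (for example, softmax probabilities) rather than hard predictions, which is why it can rank models differently from accuracy.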

Table 3.

Results of diagnosing diseases using deep-learning models.

| Measurement | ResNet-50 (multiclass) | AlexNet (multiclass) | ResNet-50 (binary) | AlexNet (binary) |
|---|---|---|---|---|
| Accuracy (%) | 95.00 | 92.00 | 99.00 | 93.00 |
| Sensitivity (%) | 94.50 | 92.50 | 98.00 | 95.00 |
| Specificity (%) | 98.00 | 96.75 | 98.00 | 95.00 |
| AUC (%) | 97.10 | 99.63 | 97.51 | 99.61 |

Figure 5.

Performance of the two models in detecting COVID-19 with the multiclass and binary datasets.

Table 4.

Performance evaluation results for the COVID-19 disease datasets.

| Disease type | ResNet-50 (multiclass) | AlexNet (multiclass) | ResNet-50 (binary) | AlexNet (binary) |
|---|---|---|---|---|
| COVID-19 | 97.10 | 94.50 | 97.40 | 99.30 |
| Lung opacity | 89.60 | 82.50 | N/A | N/A |
| Normal | 96.90 | 95.80 | 99.40 | 91.40 |
| Viral pneumonia | 93.70 | 97.40 | N/A | N/A |

4.3. Results with Binary Classes

In this experiment, the dataset contained 13,808 images divided into two classes: COVID-19 (3,616 images) and normal (10,192 images). The dataset was split into 80% for training and validation and 20% for testing. Table 2 lists the tuned parameters of the two networks: ResNet-50 was trained with a minibatch size of 10, and training was completed after 4,415 iterations in 442 min 58 sec, while AlexNet was trained with a minibatch size of 120, and training was completed after 690 iterations in 71 min 50 sec.

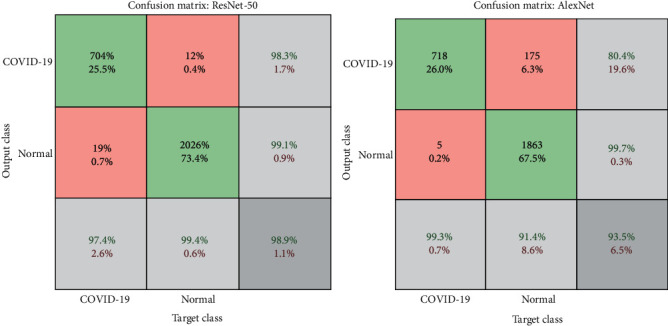

Figure 6 shows the confusion matrices for classifying COVID-19 versus normal images with the ResNet-50 and AlexNet models, and Table 3 shows the results for both networks. Both networks achieved promising results: ResNet-50 achieved an accuracy, sensitivity, specificity, and AUC of 99%, 98%, 98%, and 97.51%, respectively, while AlexNet achieved 93%, 95%, 95%, and 99.61%, respectively. Table 4 shows the results for each class. ResNet-50 reached a COVID-19 diagnostic accuracy of 97.40%, correctly classifying 704 of 723 images and misclassifying 19 as normal. AlexNet reached a COVID-19 diagnostic accuracy of 99.3%, correctly classifying 718 of 723 images and misclassifying 5 as normal.

Figure 6.

Confusion matrix for the two-class dataset by ResNet-50 and AlexNet models.

5. Comparative Study and Discussion

Features were extracted using both deep-learning models (ResNet-50 and AlexNet) and traditional algorithms (GLCM and LBP), and all features were combined into a 1-D feature vector for each image, which contributed to the models' high reliability and diagnostic accuracy. The dataset was split into 80% for training and validation and 20% for testing (80 : 20). The extracted features were fed to the fully connected layers of the ResNet-50 and AlexNet models. Two experiments were conducted with each model: one with four classes (COVID-19, viral pneumonia, normal, and lung opacity (non-COVID-19)) and one with two classes (COVID-19 and normal). All experiments achieved promising results, as shown in Tables 3 and 4. We attribute the favorable comparison with existing systems to the combination of features extracted by deep-learning and traditional algorithms.

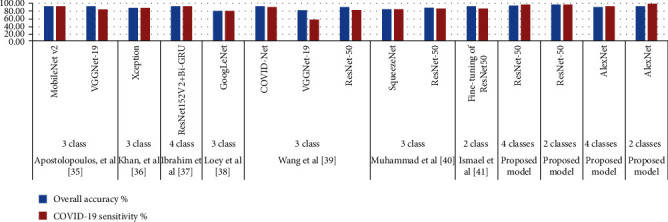

Table 5 and Figure 7 compare the performance of the proposed system with that of existing systems. The overall accuracy of the existing systems ranged from 81.50% to 93.50%, whereas our system (ResNet-50) achieved an overall accuracy of 95% and 98% with the multiclass and binary datasets, respectively. The existing systems detected COVID-19 with a sensitivity ranging from 58.70% to 94.00%, whereas ResNet-50 detected COVID-19 with an accuracy of 97.10% (multiclass) and AlexNet with an accuracy of 99.30% (binary), showing that the proposed models outperform the existing systems in detecting COVID-19.

Table 5.

Comparison of the performance of our proposed system with existing systems.

| Study | Number of classes | Technique | Overall accuracy (%) | COVID-19 sensitivity (%) |
|---|---|---|---|---|
| Apostolopoulos et al. [38] | 3 classes | MobileNet v2 | 92.80 | 94.00 |
| | | VGGNet-19 | 93.50 | 86.00 |
| Khan et al. [39] | 3 classes | Xception | 90.20 | 89.00 |
| Ibrahim et al. [40] | 4 classes | ResNet152V2+Bi-GRU | 93.36 | 92.95 |
| Loey et al. [6] | 3 classes | GoogLeNet | 81.50 | 81.80 |
| Wang et al. [41] | 3 classes | COVID-Net | 93.30 | 91.00 |
| | | VGGNet-19 | 83.00 | 58.70 |
| | | ResNet-50 | 90.60 | 83.00 |
| Muhammad et al. [42] | 3 classes | SqueezeNet | 84.40 | 84.30 |
| | | ResNet-50 | 90.00 | 87.40 |
| Ismael et al. [43] | 2 classes | Fine-tuning of ResNet50 | 92.63 | 88.00 |
| Proposed model | 4 classes | ResNet-50 | 95.00 | 97.10 |
| Proposed model | 2 classes | ResNet-50 | 98.00 | 97.40 |
| Proposed model | 4 classes | AlexNet | 92.00 | 94.50 |
| Proposed model | 2 classes | AlexNet | 93.00 | 99.30 |

Figure 7.

Comparison of models' performance on diagnostic accuracy in COVID-19.

6. Conclusion

This work provides a robust system for classifying a dataset collected from multiple sources that contains 21,165 X-ray images divided into four classes: 3,616 images of COVID-19-positive cases, 1,345 images of viral pneumonia, 10,192 images of normal patients, and 6,012 images of lung opacity (non-COVID-19). CCT and X-ray imaging are among the most accurate methods for diagnosing COVID-19. Deep-learning techniques reduce the burden on hospitals, doctors, and radiologists and help diagnose people with COVID-19 accurately, so that they can be isolated quickly to reduce the spread of the disease. In this paper, we conducted four experiments using ResNet-50 and AlexNet networks with multiclass and binary datasets. The dataset was split into 80% for training and validation and 20% for testing (80 : 20). The features extracted by the CNN models were combined with those of the traditional GLCM and LBP algorithms into a 1-D vector for each image, which produced more representative features. ResNet-50 achieved higher accuracy than AlexNet on both the multiclass and binary datasets.

On the multiclass dataset, ResNet-50 achieved an accuracy, sensitivity, specificity, and AUC of 95%, 94.5%, 98%, and 97.10%, respectively, while AlexNet achieved 92%, 92.5%, 96.75%, and 99.63%, respectively. On the binary dataset, ResNet-50 reached an accuracy, sensitivity, specificity, and AUC of 99%, 98%, 98%, and 97.51%, respectively, while AlexNet reached 93%, 95%, 95%, and 99.61%, respectively. ResNet-50 reached a COVID-19 diagnostic accuracy of 97.10% and 97.40% on the multiclass and binary datasets, respectively, whereas AlexNet reached 94.50% and 99.30%, respectively. In future work, we will explore new deep-learning algorithms to improve the system.

Data Availability

The datasets are available at https://www.kaggle.com/c/rsna-pneumonia-detection-challenge/data and https://www.kaggle.com/paultimothymooney/chest-xray-pneumonia.

Conflicts of Interest

The authors declare that they have no conflicts of interest.

References

- 1.Worldometer, D. COVID-19 coronavirus pandemic. World Health Organization . World Health Organization; 2020. [Google Scholar]

- 2.Azar A., Wessell D. E., Janus J. R., Simon L. Fractured aluminum nasopharyngeal swab during drive-through testing for COVID-19: radiographic detection of a retained foreign body. Skeletal radiology . 2020;49(11):1873–1877. doi: 10.1007/s00256-020-03582-x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.de Barry O., Obadia I., el Hajjam M., Carlier R. Y. Chest-X-ray is a mainstay for follow-up in critically ill patients with COVID-19 induced pneumonia. European Journal of Radiology . 2020;129, article 109075 doi: 10.1016/j.ejrad.2020.109075. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Herpe G., Tasu J. P. Impact of the prevalence on the predictive positive value of chest CT in the diagnosis of coronavirus disease (COVID-19) American Journal of Roentgenology . 2020;215(3):p. W39. doi: 10.2214/AJR.20.23530. [DOI] [PubMed] [Google Scholar]

- 5.Ai T., Yang Z., Hou H., et al. Correlation of chest CT and RT-PCR testing for coronavirus disease 2019 (COVID-19) in China: a report of 1014 cases. Radiology . 2020;296(2):E32–E40. doi: 10.1148/radiol.2020200642. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Loey M., Smarandache F., Khalifa N. E. Within the lack of chest COVID-19 X-ray dataset: a novel detection model based on GAN and deep transfer learning. Symmetry . 2020;12(4):p. 651. doi: 10.3390/sym12040651. [DOI] [Google Scholar]

- 7.Toğaçar M., Ergen B., Cömert Z. COVID-19 detection using deep learning models to exploit Social Mimic Optimization and structured chest X-ray images using fuzzy color and stacking approaches. Computers in Biology and Medicine . 2020;121, article 103805 doi: 10.1016/j.compbiomed.2020.103805. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Tabik S., Gomez-Rios A., Martin-Rodriguez J. L., et al. COVIDGR dataset and COVID-SDNet methodology for predicting COVID-19 based on chest X-ray images. IEEE journal of biomedical and health informatics . 2020;24(12):3595–3605. doi: 10.1109/JBHI.2020.3037127. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Ni Q., Sun Z. Y., Qi L., et al. A deep learning approach to characterize 2019 coronavirus disease (COVID-19) pneumonia in chest CT images. European Radiology . 2020;30(12):6517–6527. doi: 10.1007/s00330-020-07044-9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Ko H., Chung H., Kang W. S., et al. COVID-19 pneumonia diagnosis using a simple 2D deep learning framework with a single chest CT image: model development and validation. Journal of medical Internet research . 2020;22(6, article e19569) doi: 10.2196/19569. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Wang X., Deng X., Fu Q., et al. A weakly-supervised framework for COVID-19 classification and lesion localization from chest CT. IEEE Transactions on Medical Imaging . 2020;39(8):2615–2625. doi: 10.1109/TMI.2020.2995965. [DOI] [PubMed] [Google Scholar]

- 12.Sun L., Mo Z., Yan F., et al. Adaptive feature selection guided deep forest for COVID-19 classification with chest ct. IEEE Journal of Biomedical and Health Informatics . 2020;24(10):2798–2805. doi: 10.1109/JBHI.2020.3019505. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Apostolopoulos I. D., Aznaouridis S. I., Tzani M. A. Extracting possibly representative COVID-19 biomarkers from X-ray images with deep learning approach and image data related to pulmonary diseases. Journal of Medical and Biological Engineering . 2020;40(3):462–469. doi: 10.1007/s40846-020-00529-4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14. Marques G., Agarwal D., de la Torre Díez I. Automated medical diagnosis of COVID-19 through EfficientNet convolutional neural network. Applied Soft Computing. 2020;96: article 106691. doi: 10.1016/j.asoc.2020.106691.

- 15. Bahadur Chandra T., Verma K., Kumar Singh B., Jain D., Singh Netam S. Coronavirus disease (COVID-19) detection in chest X-ray images using majority voting based classifier ensemble. Expert Systems with Applications. 2021;165: article 113909. doi: 10.1016/j.eswa.2020.113909.

- 16. Wang S. H., Govindaraj V. V., Górriz J. M., Zhang X., Zhang Y. D. COVID-19 classification by FGCNet with deep feature fusion from graph convolutional network and convolutional neural network. Information Fusion. 2021;67:208–229. doi: 10.1016/j.inffus.2020.10.004.

- 17. https://bimcv.cipf.es/bimcv-projects/bimcv-covid19/#1590858128006-9e640421-6711

- 18. https://github.com/ml-workgroup/covid-19-image-repository/tree/master/png

- 19. https://sirm.org/category/senza-categoria/covid-19/

- 20. https://www.eurorad.org/

- 21. https://github.com/ieee8023/covid-chestxray-dataset

- 22. https://figshare.com/articles/COVID-19_Chest_X-Ray_Image_Repository/12580328

- 23. https://github.com/armiro/COVID-CXNet

- 24. https://www.kaggle.com/c/rsna-pneumonia-detection-challenge/data

- 25. https://www.kaggle.com/paultimothymooney/chest-xray-pneumonia

- 26. Senan E. M., Jadhav M. E. Analysis of dermoscopy images by using ABCD rule for early detection of skin cancer. Global Transitions Proceedings. 2021;2(1):1–7. doi: 10.1016/j.gltp.2021.01.001.

- 27. Irfan M., Iftikhar M. A., Yasin S., et al. Role of hybrid deep neural networks (HDNNs), computed tomography, and chest X-rays for the detection of COVID-19. International Journal of Environmental Research and Public Health. 2021;18(6): article 3056. doi: 10.3390/ijerph18063056.

- 28. Almalki Y. E., Qayyum A., Irfan M., et al. A novel method for COVID-19 diagnosis using artificial intelligence in chest X-ray images. Healthcare. 2021;9(5): article 522. doi: 10.3390/healthcare9050522.

- 29. Mikołajczyk A., Grochowski M. Data augmentation for improving deep learning in image classification problem. In: 2018 International Interdisciplinary PhD Workshop (IIPhDW); 2018; Poland. pp. 117–122.

- 30. Senan E. M., Alsaade F. W., Al-mashhadani M. I. A., Theyazn H. H., Al-Adhaileh M. H. Classification of histopathological images for early detection of breast cancer using deep learning. Journal of Applied Science and Engineering. 2021;24(3):323–329.

- 31. Gu J., Wang Z., Kuen J., et al. Recent advances in convolutional neural networks. Pattern Recognition. 2018;77:354–377. doi: 10.1016/j.patcog.2017.10.013.

- 32. Matsugu M., Mori K., Mitari Y., Kaneda Y. Subject independent facial expression recognition with robust face detection using a convolutional neural network. Neural Networks. 2003;16(5–6):555–559. doi: 10.1016/S0893-6080(03)00115-1.

- 33. Başaran E., Cömert Z., Çelik Y. Convolutional neural network approach for automatic tympanic membrane detection and classification. Biomedical Signal Processing and Control. 2020;56: article 101734. doi: 10.1016/j.bspc.2019.101734.

- 34. Xu C., Yang J., Lai H., Gao J., Shen L., Yan S. UP-CNN: un-pooling augmented convolutional neural network. Pattern Recognition Letters. 2019;119:34–40.

- 35. Wang G., Li W., Zuluaga M. A., et al. Interactive medical image segmentation using deep learning with image-specific fine tuning. IEEE Transactions on Medical Imaging. 2018;37(7):1562–1573. doi: 10.1109/TMI.2018.2791721.

- 36. Aldhyani T. H., Alrasheed M., Alzahrani M. Y., Ahmed H. Deep learning and Holt-trend algorithms for predicting COVID-19 pandemic. Computers, Materials & Continua. 2021;67(2):2141–2160.

- 37. Senan E. M., Al-Adhaileh M. H., Alsaade F. W., et al. Diagnosis of chronic kidney disease using effective classification algorithms and recursive feature elimination techniques. Journal of Healthcare Engineering. 2021;2021(10):1–10, article 1004767. doi: 10.1155/2021/1004767.

- 38. Apostolopoulos I. D., Mpesiana T. A. COVID-19: automatic detection from X-ray images utilizing transfer learning with convolutional neural networks. Physical and Engineering Sciences in Medicine. 2020;43(2):635–640. doi: 10.1007/s13246-020-00865-4.

- 39. Khan A. I., Shah J. L., Bhat M. M. CoroNet: a deep neural network for detection and diagnosis of COVID-19 from chest X-ray images. Computer Methods and Programs in Biomedicine. 2020;196: article 105581. doi: 10.1016/j.cmpb.2020.105581.

- 40. Ibrahim D. M., Elshennawy N. M., Sarhan A. M. Deep-chest: multi-classification deep learning model for diagnosing COVID-19, pneumonia, and lung cancer chest diseases. Computers in Biology and Medicine. 2021;132: article 104348. doi: 10.1016/j.compbiomed.2021.104348.

- 41. Wang X., Peng Y., Lu L., Lu Z., Bagheri M. ChestX-ray8: hospital-scale chest X-ray database and benchmarks on weakly-supervised classification and localization of common thorax diseases. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; 2017; Honolulu, HI, USA. pp. 2097–2106.

- 42. Muhammad G., Shamim Hossain M. COVID-19 and non-COVID-19 classification using multi-layers fusion from lung ultrasound images. Information Fusion. 2021;72:80–88. doi: 10.1016/j.inffus.2021.02.013.

- 43. Ismael A. M., Şengür A. Deep learning approaches for COVID-19 detection based on chest X-ray images. Expert Systems with Applications. 2021;164: article 114054. doi: 10.1016/j.eswa.2020.114054.

Data Availability Statement

The datasets are available at https://www.kaggle.com/c/rsna-pneumonia-detection-challenge/data and https://www.kaggle.com/paultimothymooney/chest-xray-pneumonia.