Abstract

This study aimed to develop a method for detection of femoral neck fracture (FNF), including displaced and non-displaced fractures, using a convolutional neural network (CNN) with plain X-ray and to validate its use across hospitals through internal and external validation sets. This is a retrospective study using hip and pelvic anteroposterior films for training and detecting femoral neck fracture through residual neural network (ResNet) 18 with convolutional block attention module (CBAM)++. The study was performed at two tertiary hospitals between February and May 2020 and used data from January 2005 to December 2018. Our primary outcome was favorable performance in distinguishing femoral neck fracture from negative studies in our dataset. We described the outcomes as area under the receiver operating characteristic curve (AUC), accuracy, Youden index, sensitivity, and specificity. A total of 4,189 images comprising 1,109 positive images (332 non-displaced and 777 displaced) and 3,080 negative images were collected from two hospitals. The test values after training with one hospital dataset were 0.999 AUC, 0.986 accuracy, 0.960 Youden index, 0.966 sensitivity, and 0.993 specificity. Values of external validation with the other hospital dataset were 0.977, 0.971, 0.920, 0.939, and 0.982, respectively. Values of merged hospital datasets were 0.987, 0.983, 0.960, 0.973, and 0.987, respectively. A CNN algorithm for FNF detection in both displaced and non-displaced fractures using plain X-rays could be used in other hospitals to screen for FNF after training with images from the hospital of interest.

Keywords: Femur, Fracture, Deep learning, Machine learning, Artificial intelligence, AI

Introduction

Femoral neck fracture (FNF) is a type of hip fracture that occurs at the level of the neck of the femur, is generally located within the capsule, and may result in loss of blood supply to the bone. This fracture is common in the elderly, with 250,000 hip fractures each year in the USA [1–4]. Hip fractures are associated with increased mortality, and 12–17% of patients die within 1 year of fracture [5, 6]. Patients with FNF usually visit the emergency department with femoral pain and gait disturbance after traumatic injury. However, patients may still be able to stand upright or walk in the first few hours after fracture [7]. Patient morbidity and mortality increase with time from injury and with unplanned delay of surgical treatment [8–10].

Several imaging methods are available for diagnosing hip fracture, including FNF, in the emergency department [11]. Plain X-ray is inexpensive and is commonly used as a first-line screening test for fracture in patients with related symptoms and signs. Although the sensitivity of diagnosis of hip fracture using X-ray is high, between 90 and 98%, it is difficult to detect a non-displaced fracture of the femoral neck [4, 12–14]. Unrecognized non-displaced fractures can become displaced and lead to complications such as avascular necrosis of the femoral head and malunion, which increase mortality [1, 4, 11, 15]. Because of these problems, elderly trauma patients with femoral pain are recommended to undergo computed tomography (CT) or magnetic resonance imaging (MRI) even if fractures are not confirmed on plain X-ray images in the emergency room [11, 13, 16–19]. However, these methods might not be available in all cases, and they increase the required treatment time and the cost burden on the patient.

Deep learning is widely used for detection of fractures in medical images, and some studies have addressed hip fractures including FNF and intertrochanteric fracture. However, such studies only assess the presence or absence of a fracture; there has been no external validation of deep learning algorithms for detection of femoral fracture [20–25]. This study aims to develop a convolutional neural network (CNN) for detection of FNF with datasets including non-displaced and displaced cases and to perform external validation of this CNN technique.

Methods

Study Design

This is a retrospective study using hip and pelvic anteroposterior (AP) films for learning and detecting FNF using a CNN. The study was performed at two tertiary hospitals (Seoul and Gyeonggi-do, Republic of Korea) between February 2020 and May 2020 and used data from January 2005 to December 2018. This study was approved by the Institutional Review Boards of Hanyang University Hospital (ref. no. 2020–01-005) and Seoul National University Bundang Hospital (ref. no. B-2002–595-103), and the requirement for informed consent was waived by the IRBs of both hospitals. All methods and procedures were carried out in accordance with the Declaration of Helsinki.

Dataset of Participants

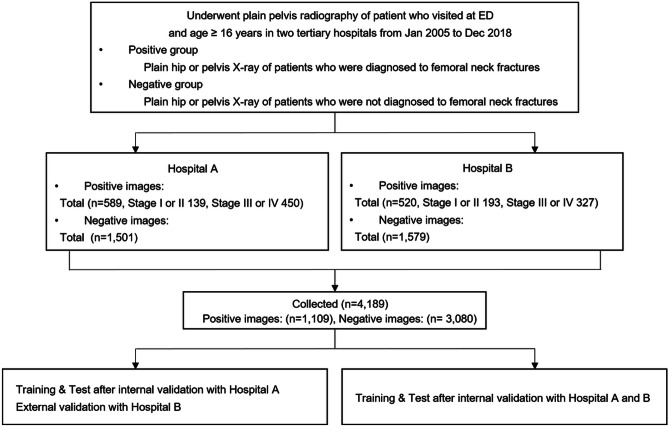

A flowchart of data collection and analysis is shown in Fig. 1.

Fig. 1.

Flowchart of data collection and analysis in the study

Extraction and Categorization of Positive Plain X-ray Images with Femoral Neck Fracture

Using ICD codes, we identified patients with FNF confirmed by additional tests such as CT or MRI in the emergency room between 2005 and 2018, gathered their hip or pelvis AP X-rays showing both hips, and extracted the pre-operative images [26]. Two emergency medicine specialists reviewed the X-rays based on the readings of the radiologist and the surgical records of the orthopedic surgeon. We excluded images with severely altered anatomical structures due to past damage or acute fractures in any other parts, including trochanteric or intertrochanteric fracture, but did not exclude patients with implants due to past surgery on the contralateral hip. Garden classification was performed based on the results of surgery, and we subclassified the fractures as Garden type I or II in cases of non-displaced FNF (difficult cases) and as Garden type III or IV in cases of displaced FNF (easy cases) [27–30].

Extraction of Negative Plain X-ray Images Without Femoral Neck Fracture

The candidate images for inclusion in the negative group were identified from X-rays of patients who visited the emergency room with complaints of hip pain or pelvic pain that was not indicative of FNF. Radiologists had reported these studies as “unremarkable study,” “non-specific finding,” or “no definite acute lesion.” We also excluded images with severe deformation of anatomical structures caused by acute fracture in another area or past damage but did not exclude patients with implants due to past surgery in the absence of anatomical deformation. Non-fracture images were sampled at random in larger numbers than fracture images, at a fracture to non-fracture ratio of 1:3. We collected these images from the same hospitals and within the same time period.

X-ray images were extracted using the picture archiving and communication system (PACS, Piview, INFINITT Healthcare, Seoul, South Korea) and stored as .jpeg format images. No personal identifying information was included when the images were saved for data collection. In addition, arbitrary numbers were assigned to images, which were then coded and managed.

Data Pre-processing and Augmentation

In general, medical images acquired from different sources have different sizes (i.e., resolution) and aspect ratios. Moreover, as it is difficult to obtain many training datasets with ground-truth labels, there is a need for proper pre-processing steps and data augmentation. First, we added zeros to the given input images to create square shapes and resized the images to a fixed 500 × 500 pixels. Next, to increase the amount of training data and secure robustness to geometric transformations such as scale, translation, and rotation, we augmented the training images by applying random transformations (e.g., flip, flop, or rotation) and randomly cropping patches of 450 × 450 pixels during the training process. Although the resulting cropped image includes both hips within the processed input image, our data pre-processing and augmentation do not aim to select regions of interest (i.e., hip regions).
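The pre-processing and augmentation steps described above can be sketched as follows. This is a minimal illustration rather than our training code: the function names are hypothetical, the padding is assumed to keep the image centered, nearest-neighbor resizing stands in for whichever interpolation a library resize would use, and rotations are assumed to be multiples of 90 degrees because the rotation range is not specified here.

```python
import numpy as np

def pad_to_square(img):
    """Zero-pad a 2-D grayscale image to a square (centering is an assumption)."""
    h, w = img.shape
    side = max(h, w)
    top, left = (side - h) // 2, (side - w) // 2
    out = np.zeros((side, side), dtype=img.dtype)
    out[top:top + h, left:left + w] = img
    return out

def resize_nearest(img, size):
    """Nearest-neighbor resize to size x size (stand-in for a library resize)."""
    h, w = img.shape
    rows = np.arange(size) * h // size
    cols = np.arange(size) * w // size
    return img[rows][:, cols]

def preprocess(img, size=500):
    """Pad to a square, then resize to the fixed 500 x 500 input."""
    return resize_nearest(pad_to_square(img), size)

def augment(img, rng, crop=450):
    """Random flip/flop, assumed 90-degree-step rotation, and random 450 x 450 crop."""
    if rng.random() < 0.5:
        img = np.fliplr(img)   # horizontal flip
    if rng.random() < 0.5:
        img = np.flipud(img)   # vertical flip ("flop")
    img = np.rot90(img, k=int(rng.integers(0, 4)))
    h, w = img.shape
    top = int(rng.integers(0, h - crop + 1))
    left = int(rng.integers(0, w - crop + 1))
    return img[top:top + crop, left:left + crop]

# Example with a synthetic 300 x 200 image
rng = np.random.default_rng(0)
fixed = preprocess(np.ones((300, 200), dtype=np.uint8))
patch = augment(fixed, rng)
```

Because the crop position is drawn anew at every call, the network sees a slightly different translation of the same radiograph in each epoch.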

Fracture Detection by Image Classification

The overall network architecture of the proposed method for FNF detection was based on image classifiers since our fracture diagnosis task can be categorized as a typical binary classification problem. Our architecture was based on CBAM, which includes residual neural network (ResNet) and spatial attention modules [31, 32]. Specifically, ResNet uses residual mapping to address the issue of vanishing gradients in a CNN. This residual mapping, which is achieved using skip connections between layers, allows the model to contain many layers. CBAM integrates an attention module with ResNet and improves the classification performance with a few additional parameters in feed-forward CNNs. CBAM sequentially generates attention maps along the two dimensions of channel and space in the given input feature map. These attention maps refine the input feature map.
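To make the residual mapping concrete, the sketch below computes y = ReLU(F(x) + x) for a toy block. It is an illustration only: dense matrix products stand in for the convolutions of a real ResNet block, and the function and weight names are hypothetical.

```python
import numpy as np

def residual_block(x, w1, w2):
    """Toy residual block: y = ReLU(F(x) + x), where F is two linear layers with a ReLU between."""
    hidden = np.maximum(x @ w1, 0.0)          # first layer of F, followed by ReLU
    return np.maximum(hidden @ w2 + x, 0.0)   # skip connection adds the input back

# If F contributes nothing (w2 = 0), the block passes a non-negative input through unchanged.
x = np.array([[1.0, 2.0]])
y = residual_block(x, np.ones((2, 3)), np.zeros((3, 2)))
```

The skip path means each block only has to learn a correction to its input, which is what keeps gradients usable as more layers are stacked.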

Effective Attention via Coarse-to-Fine Approaches

The CBAM module functions well in an image-classification task. However, since low-level activation maps are acquired in the early stages of the CNN, it is difficult to accurately generate spatial attention maps using the spatial attention module in CBAM. Therefore, we slightly modified the spatial attention module in the early stages of the CNN so that a coarse attention map could be calculated. As shown in Fig. 2 (a) and (b), we applied average-pooling and max-pooling operations along the channel axis and concatenated the results. Subsequently, additional average-pooling operations were applied before the convolution layer. These average-pooling operations produce the intermediate (coarse) feature map. By decreasing the number of average-pooling operations in each stage, we obtained different levels of activation maps of size (H/8, W/8), (H/4, W/4), (H/2, W/2), and (H, W) after average pooling in each stage of the network. On this intermediate feature map, we applied a convolution layer with filter size 5 to encode the areas to emphasize and suppress. We increased the resolution to H × W using bilinear interpolation so that the attention map has the same size as the input feature map. Finally, we multiplied the input feature map by the attention map. Using our modified attention module, we generated more accurate attention maps with fewer convolutional operations than the original CBAM, even though the number of parameters is equivalent.
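The forward pass of this coarse spatial attention can be sketched in NumPy as below. This is a hedged reading of the description, not our released implementation: the sigmoid gating is assumed from the original CBAM, the pooling factors 8, 4, 2, and 1 for stages 1 to 4 are inferred from the map sizes listed above, the bilinear interpolation uses an align-corners convention by assumption, and all function names are hypothetical.

```python
import numpy as np

def avg_pool2d(x, k):
    """Non-overlapping k x k average pooling on (C, H, W); H and W must be divisible by k."""
    c, h, w = x.shape
    return x.reshape(c, h // k, k, w // k, k).mean(axis=(2, 4))

def conv2d_same(x, weight):
    """Zero-padded convolution of (C, H, W) with a (C, k, k) kernel, giving one (H, W) map."""
    c, h, w = x.shape
    k = weight.shape[-1]
    pad = k // 2
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))
    out = np.zeros((h, w))
    for i in range(k):
        for j in range(k):
            out += (weight[:, i, j, None, None] * xp[:, i:i + h, j:j + w]).sum(axis=0)
    return out

def bilinear_resize(m, out_h, out_w):
    """Bilinear interpolation of a 2-D map to (out_h, out_w), align-corners style."""
    in_h, in_w = m.shape
    ys = np.linspace(0, in_h - 1, out_h)
    xs = np.linspace(0, in_w - 1, out_w)
    y0 = np.floor(ys).astype(int)
    x0 = np.floor(xs).astype(int)
    y1 = np.minimum(y0 + 1, in_h - 1)
    x1 = np.minimum(x0 + 1, in_w - 1)
    wy = (ys - y0)[:, None]
    wx = (xs - x0)[None, :]
    top = m[y0][:, x0] * (1 - wx) + m[y0][:, x1] * wx
    bottom = m[y1][:, x0] * (1 - wx) + m[y1][:, x1] * wx
    return top * (1 - wy) + bottom * wy

def coarse_spatial_attention(feat, weight, pool):
    """Channel-wise avg/max pooling -> spatial avg pooling -> 5x5 conv -> upsample -> rescale."""
    _, h, w = feat.shape
    pooled = np.stack([feat.mean(axis=0), feat.max(axis=0)])  # (2, H, W)
    coarse = avg_pool2d(pooled, pool)                         # (2, H/pool, W/pool)
    attn = 1.0 / (1.0 + np.exp(-conv2d_same(coarse, weight))) # sigmoid gate (assumed)
    attn = bilinear_resize(attn, h, w)                        # back to (H, W)
    return feat * attn[None, :, :]

# Stage-1 example: pooling factor 8 on a 16 x 16 feature map with 4 channels
feat = np.random.default_rng(0).random((4, 16, 16))
refined = coarse_spatial_attention(feat, np.zeros((2, 5, 5)), pool=8)
```

With an all-zero kernel the gate is 0.5 everywhere, so the output is simply half the input; a trained kernel would instead raise the gate over regions to emphasize and lower it elsewhere.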

Fig. 2.

Architecture and network comparison of the deep learning neural network for detection of FNF. a Architecture of ResNet18 with attention module. ResNet18 is composed of 8 residual blocks; every two blocks belong to the same stage and have the same number of output channels. b Diagram of CBAM++. The two outputs pooled along the channel axis are resized by additional pooling operations according to stage number and forwarded to a convolution layer. c AUC of internal validation with the Hospital A dataset and the number of network parameters. ResNet18 with CBAM++ has the lowest number of parameters and the highest AUC value

Experiments

To verify the performance of the proposed method, we performed the following two steps. (1) Phase 1: The Hospital A dataset was separated into training data (80%), internal validation data (10%), and test data (10%). We determined the optimal cut-off value using the results from internal validation. We then performed the test with the Hospital A test dataset and external validation with the Hospital B dataset. (2) Phase 2: Training, internal validation, and testing were conducted with all data from Hospitals A and B, using 80%, 10%, and 10% of each dataset, respectively.
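As an illustration of the 80%/10%/10% partition, the following sketch shuffles a list of image identifiers and slices it into three disjoint subsets. The function name, the fixed seed, and the simple (non-stratified) shuffle are assumptions made for this example; whether the split was stratified by fracture label is not stated here.

```python
import random

def split_dataset(items, seed=0, fractions=(0.8, 0.1, 0.1)):
    """Shuffle items and split them into training, internal validation, and test subsets."""
    items = list(items)
    random.Random(seed).shuffle(items)
    n_train = int(round(fractions[0] * len(items)))
    n_val = int(round(fractions[1] * len(items)))
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]   # remainder goes to the test subset
    return train, val, test

# Example with 2,090 identifiers, the size of the Hospital A dataset
train_ids, val_ids, test_ids = split_dataset(range(2090))
```

In phase 2, the same function would be applied to each hospital's dataset separately before the corresponding subsets are merged.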

Effects of Data Augmentation

To demonstrate the effect of our data augmentation, we compared AUC values with and without the augmentation technique. The AUC value on the Hospital A dataset was 0.880 when data augmentation was not applied during training. In contrast, with our data augmentation, this AUC value was 0.991. Data augmentation thus had a substantial impact when the training set of medical images was insufficient.

Network Comparison for Detection of FNF

We conducted experiments with four types of attention modules: CBAM (with spatial attention and with channel attention), CBAM- (with spatial attention and without channel attention), CBAM+ (with the proposed spatial attention and with channel attention), and CBAM++ (with the proposed spatial attention and without channel attention), using ResNet18 and ResNet50 as baselines. We trained the networks under the same conditions and evaluated the performance of each module. Figure 2c shows the number of parameters in each network and the AUC value on the internal validation set from Hospital A. With the small amount of data in our training set, the smaller networks achieved AUC values similar to those of the larger networks. Among the modules, ResNet18 with CBAM++ was the most efficient, with the smallest number of parameters and the highest AUC value. Therefore, we used ResNet18 with CBAM++ as our final model.

Visualization and Verification of the Medical Diagnosis

The proposed method is a computer-aided diagnostic system intended to help radiologists and emergency doctors in medical imaging analysis. Therefore, we not only classified whether the input X-ray image includes fractured parts, but also visualized suspicious parts. For visualization, we employed Grad-CAM to highlight the regions of the image that are important for diagnosis, since the proposed network is composed of CNNs with fully connected layers [33]. Accordingly, Grad-CAM was applied to the last convolutional layer placed before the fully connected layer to verify the medical diagnosis.
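The Grad-CAM computation itself is short once the activations of the last convolutional layer and the gradients of the fracture score with respect to them are available. The sketch below assumes both are supplied as arrays (in practice they would come from a deep learning framework's automatic differentiation); the function name is hypothetical, and the final scaling to [0, 1] is a display convention rather than part of the method.

```python
import numpy as np

def grad_cam(activations, gradients):
    """Grad-CAM heat map from (K, H, W) activations and same-shaped gradients of the class score."""
    weights = gradients.mean(axis=(1, 2))              # one importance weight per channel
    cam = np.tensordot(weights, activations, axes=1)   # weighted sum over channels -> (H, W)
    cam = np.maximum(cam, 0.0)                         # keep only positive evidence
    peak = cam.max()
    return cam / peak if peak > 0 else cam             # scale to [0, 1] for overlay

# Toy example: channel 0 supports the class, channel 1 opposes it
acts = np.zeros((2, 3, 3))
acts[0, 1, 1] = 2.0
acts[1, 0, 0] = 5.0
grads = np.stack([np.ones((3, 3)), -np.ones((3, 3))])
heat = grad_cam(acts, grads)
```

The resulting map has the spatial size of the last convolutional layer and is upsampled to the radiograph size before being overlaid.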

Primary Outcomes and Validation

Our primary outcome was favorable performance in distinguishing FNF from negative studies in our dataset. For validation, we evaluated the model with accuracy, Youden index, and AUC. Accuracy is the fraction of correct predictions over total predictions [34]. The Youden index is calculated as [(sensitivity + specificity) – 1] and is the vertical distance between the 45-degree line and the point on the ROC curve. Sensitivity, also known as recall, is the fraction of correct predictions over all FNF cases, and specificity is the fraction of correct normal predictions over all normal cases. The AUC is the area under the ROC curve, which plots the true-positive rate (sensitivity) against the false-positive rate (1 – specificity). Since the metrics other than AUC change according to the cut-off value that separates fracture from negative predictions, we used the AUC as the primary evaluation metric. In the test and external validation, we selected the optimal cut-off value where the Youden index was highest in internal validation because plain radiography of the pelvis is commonly used as a first-line screening test to diagnose hip fractures. We applied the same cut-off value, with the same algorithm, to the external validation set.
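These definitions can be written out directly. The sketch below is illustrative only: the function names and the toy scores are hypothetical, candidate cut-offs are taken from the observed scores, and the AUC is computed through its rank interpretation (the probability that a fracture image scores higher than a negative image), which equals the area under the ROC curve.

```python
def confusion_counts(scores, labels, cutoff):
    """Count TP, FN, TN, FP when scores >= cutoff are predicted as fracture (label 1)."""
    tp = sum(1 for s, y in zip(scores, labels) if y == 1 and s >= cutoff)
    fn = sum(1 for s, y in zip(scores, labels) if y == 1 and s < cutoff)
    tn = sum(1 for s, y in zip(scores, labels) if y == 0 and s < cutoff)
    fp = sum(1 for s, y in zip(scores, labels) if y == 0 and s >= cutoff)
    return tp, fn, tn, fp

def metrics(scores, labels, cutoff):
    """Accuracy, sensitivity, specificity, and Youden index at a given cut-off."""
    tp, fn, tn, fp = confusion_counts(scores, labels, cutoff)
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    return {
        "accuracy": (tp + tn) / len(labels),
        "sensitivity": sensitivity,
        "specificity": specificity,
        "youden": sensitivity + specificity - 1,
    }

def best_cutoff(scores, labels):
    """Observed score with the highest Youden index (lowest such score on ties)."""
    return max(sorted(set(scores)), key=lambda c: metrics(scores, labels, c)["youden"])

def auc(scores, labels):
    """Probability that a positive case outscores a negative case; ties count as 0.5."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

# Toy internal-validation scores (hypothetical values)
val_scores = [0.1, 0.4, 0.35, 0.8]
val_labels = [0, 0, 1, 1]
cutoff = best_cutoff(val_scores, val_labels)
val_metrics = metrics(val_scores, val_labels, cutoff)
val_auc = auc(val_scores, val_labels)
```

The cut-off chosen on the internal validation scores is then passed unchanged to `metrics` for the test and external validation sets, so only the AUC is independent of that choice.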

Statistical Analysis

Data were compiled using a standard spreadsheet application (Excel 2016; Microsoft, Redmond, WA, USA) and analyzed using NCSS 12 (Statistical Software 2018, NCSS, LLC. Kaysville, UT, USA, ncss.com/software/ncss). Kolmogorov–Smirnov tests were performed to assess whether each dataset was normally distributed. We generated descriptive statistics and present them as frequency and percentage for categorical data and as median and interquartile range (IQR) (non-normal distribution), mean and standard deviation (SD) (normal distribution), or 95% confidence interval (95% CI) for continuous data. We used a single ROC curve with cut-off analysis for internal validation and a comparison of two ROC curves from independent groups to compare the ROC curve of external validation with that of the test after training and internal validation. A two-tailed p < 0.05 was considered statistically significant.

Results

A total of 4,189 images containing 1,109 positive images (332 difficult cases and 777 easy cases) and 3,080 negative images were collected from 4,189 patients (Table 1). Hospital A contributed 2,090 images: 589 positive images of FNF (male, 28.4%; mean [SD] age, 76.8 [12.8] years) and 1,501 negative images with no fracture. Hospital B contributed 2,099 images: 520 positive images (male, 27.8%; age, 74.3 [11.7] years) and 1,579 negative images.

Table 1.

Baseline characteristics of participants who provided images in datasets

| | All datasets | Hospital A set (n = 2,090) | Hospital B set (n = 2,099) |
|---|---|---|---|
| Total | 4,189 | 2,090 | 2,099 |
| Positive images, n | 1,109 | 589 | 520 |
| Non-displaced, n | 332 | 139 | 193 |
| Displaced, n | 777 | 450 | 327 |
| Age, years | 75.7 [12.9] | 76.8 [13.8] | 74.3 [11.7] |
| Sex, male | 28.1% | 28.4% | 27.8% |
| Negative images, n | 3,080 | 1,501 | 1,579 |
| Age, years | 46.4 [16.5] | 50.9 [19.1] | 42.3 [12.2] |
| Sex, male | 48.5% | 52.0% | 45.2% |

Continuous variables are presented as mean [standard deviation] and categorical variables are presented as N (%)

Phase 1: Comparison of external validation results with those of testing after internal validation in one hospital.

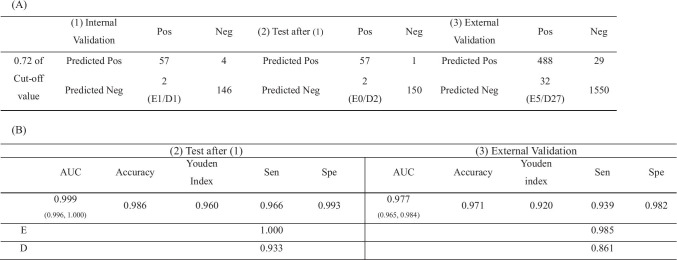

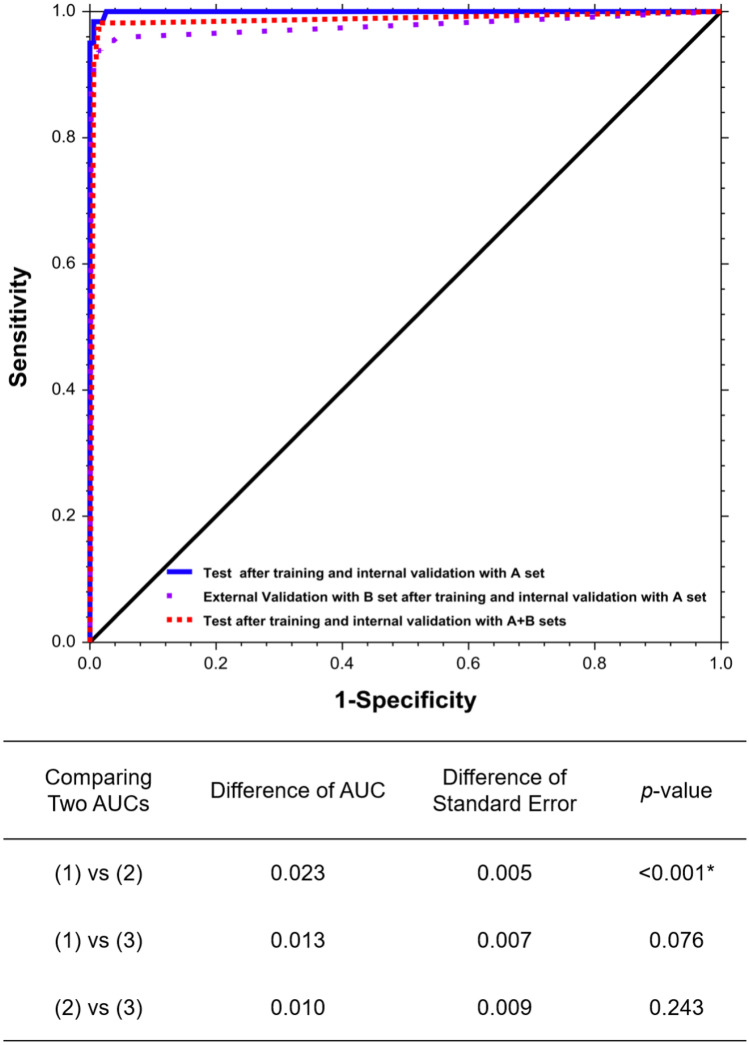

The diagnostic performance matrix and outcomes are shown in Table 2. The optimal cut-off value was 0.72, which gave the highest Youden index (0.939) on the ROC curve in internal validation; this cut-off was used to estimate values in the test and in external validation. Test values after training and internal validation with the hospital A dataset were 0.999 (0.996, 1.000) AUC, 0.986 accuracy, and 0.960 Youden index, with a sensitivity of 0.966 (1.000 in easy cases and 0.933 in difficult cases) and a specificity of 0.993. Values of external validation with the hospital B dataset were lower (p < 0.001), at 0.977 (0.965, 0.984) AUC, 0.971 accuracy, and 0.920 Youden index, with a sensitivity of 0.939 (0.985 in easy cases and 0.861 in difficult cases) and a specificity of 0.982. These results are shown in Table 2 and Fig. 3.

Table 2.

Diagnostic performance matrix (A) and outcomes (B) on the internal validation, test, and external validation with optimal cut-off values (phase 1). The optimal cut-off value was the one giving the highest Youden index in the internal validation

(1) Internal validation with Hospital A set, (2) Test after training and internal validation with Hospital A set, (3) External validation with Hospital B set. Pos, positive (fracture of femoral neck); Neg, negative (no fracture). AUC, area under the receiver operating characteristic (ROC) curve. Accuracy, the fraction of correct predictions over total predictions. Youden index, sensitivity + specificity – 1, which is the vertical distance between the 45° line and the point on the ROC curve. Sen, sensitivity; Spe, specificity. In the internal and external validation, we selected the optimal cutoff value when the Youden index value is highest in the test after training with Hospital A set. CI, confidence interval. E, easy cases subclassified as Garden type III or IV. D, difficult cases subclassified as Garden type I or II. *p < 0.05 is statistically significant

Fig. 3.

Receiver operating characteristic (ROC) curves and tests comparing two AUCs of (1) Test after training and internal validation with A set, (2) External validation with B set after training and internal validation with A set, and (3) Test after training and internal validation with A + B sets. *p < 0.05 is statistically significant

Phase 2: Evaluation of the internal test with combined image datasets.

We set the cut-off value (58.61) at the highest Youden index (0.963) on the ROC curve of internal validation after training with the merged image datasets. Test values using the merged dataset were 0.987 (0.962, 0.995) AUC, 0.983 accuracy, and 0.960 Youden index, with a sensitivity of 0.973 (1.000 in displaced images and 0.946 in non-displaced images) and a specificity of 0.987, as shown in Table 3 and Fig. 3.

Table 3.

Diagnostic performance matrix (A) and outcomes (B) on the internal validation and test after training with all datasets (phase 2). The optimal cut-off value was the one giving the highest Youden index in the internal validation

(1) Internal validation with Hospital A and B set, (2) Test after training and internal validation with Hospital A and B set. Pos, positive (fracture of femoral neck); Neg, negative (no fracture). AUC, area under the receiver operating characteristic (ROC) curve. Accuracy, the fraction of correct predictions over total predictions. Youden index, sensitivity + specificity – 1, which is the vertical distance between the 45° line and the point on the ROC curve. Sen, sensitivity; Spe, specificity. In the internal and external validation, we selected the optimal cutoff value when the Youden index value is highest in the test after training with Hospital A set. CI, confidence interval. E, easy cases subclassified as Garden type III or IV. D, difficult cases subclassified as Garden type I or II. *p < 0.05 is statistically significant

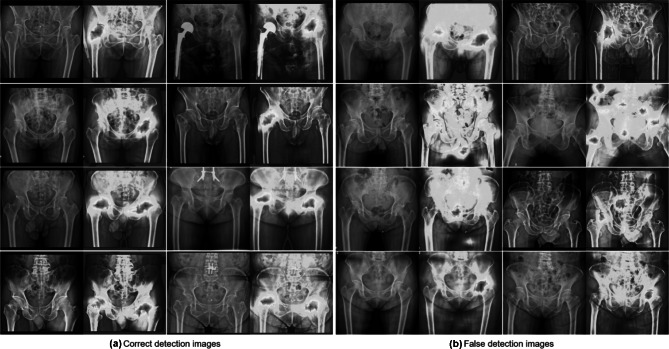

Visualization with Grad-CAM

Figure 4 shows Grad-CAM visualizations of the feature maps of our network. After training, ResNet18 with the proposed spatial attention module concentrated on the bilateral hip joints in test images, even when the images were negative.

Fig. 4.

Visualization with Gradient-weighted Class Activation Mapping (Grad-CAM) results in the external validation test. A Correct detection images. The images in the 1st and 2nd rows (true-positive images) are the original plain X-ray images and CAM-applied images with FNF, whereas the images in the 3rd and 4th rows (true-negative images) are the original plain X-ray images and CAM-applied images without fracture. B False detection images. The images in the 1st row are false-positive images, whereas the images in the 2nd, 3rd, and 4th rows are false-negative images with unidentified areas highlighted by CAM

Discussion

Traditional deep neural networks require many training datasets, but medical images with annotations are not easy to acquire and are insufficient in number to train large networks. To avoid the overfitting problem with insufficient datasets, we employed an efficient network that shows high performance with a small dataset. Thus, we developed CBAM++ as an efficient version of CBAM. In the experiments for detection of FNF with X-ray, we evaluated the performance of ResNets with different types of attention modules. We ultimately selected ResNet18 with the proposed CBAM++ as it showed the best performance with the smallest number of parameters. Moreover, we demonstrated the performance of the proposed network by providing visualization results through Grad-CAM.

FNF detection on X-ray images through deep learning, performed with images of hospital A, showed results that are equivalent to or higher than the sensitivity of medical staff, especially emergency medicine doctors. In a previous study, the sensitivity and specificity of X-ray readings by emergency physicians, excluding radiologists and orthopedic surgeons, were 0.956 and 0.822 [21]. In other recent studies of deep learning for detection of femoral fracture conducted at a single institution, ranges of outcomes were 0.906–0.955 for accuracy, 0.939–0.980 for sensitivity, and 0.890–0.980 for AUC [20–22]. Our study showed excellent corresponding outcomes of 0.986, 0.966, and 0.999, respectively. Our deep learning algorithm increased the capability for FNF detection with X-ray as the first screening tool and can be applied to clinical practice of any hospital that provides training and test datasets. Krogue et al. showed that the sensitivity using deep learning was 0.875 in displaced fracture and 0.462 in non-displaced fracture, and Mutasa et al. showed that the sensitivity using a generative adversarial network with digitally reconstructed radiographs was 0.910 in displaced fracture and 0.540 in non-displaced fracture [24, 25]. In our study, we did not classify images into normal, displaced fracture, and non-displaced fracture but detected displaced and non-displaced fracture images together. Nevertheless, the sensitivity for easy (displaced) fractures was 1.000, and that for difficult (non-displaced) fractures was 0.933. Our algorithm can aid in detection of not only Garden type III or IV, but also Garden type I or II FNF from hip or pelvic AP X-ray images.

We conducted external validation with the dataset of a second hospital after deep learning with a single dataset for detection of FNF. The external validation test results were 0.971 accuracy, 0.939 sensitivity, and 0.977 AUC. Comparing the external validation with the test after training and internal validation, the difference in AUC was 0.023 (p < 0.001), likely due to the resolution difference and degradation of data with decreasing image intensity level and contrast. However, with merged images from disparate hospitals using the same protocols, the AUC did not differ significantly between a single institution and multiple institutions (difference in AUC = 0.013, p = 0.076). This indicates that the completed model trained using one hospital dataset can be transferred to other hospitals and used as a screening tool for FNF. Hospitals that use the model would need to train it using their own positive and negative images to optimize performance in specific environments.

Limitations

There were several limitations in this study. First, the age and sex of patients according to presence or absence of fractures were not completely matched because FNF has a particularly high incidence at certain ages and in one sex. To apply the results of this study to clinical situations, the method must be verified in adult patients of various ages and sexes who visit the emergency room. Therefore, we considered that limiting the range of the control group for statistical matching would reduce clinical applicability. Second, we did not compare the performance of our model to that of physicians with respect to key factors such as clinical outcomes, the time required to reach diagnosis, and the equipment required to use the model as a screening tool. Third, there was a difference in resolution between the medical images and the input images due to resizing. Therefore, it is possible for information loss to occur when attempting to detect FNF since the medical images were downsized [35]. Finally, in the external validation of phase 1, applying the network trained with a single hospital directly to another hospital showed lower accuracy than the results using a single hospital. For this reason, a phase 2 study was conducted in which images of the two hospitals were learned together, because we do not yet know how many images are needed to apply our network equally to external data.

Conclusions

We verified a CNN algorithm for detection of both displaced and non-displaced FNF using plain X-rays. This algorithm could be transferred to other hospitals and used to screen for FNF after training with images of the selected hospitals.

Acknowledgements

We would like to thank eworld editing (www.eworldediting.com) for English language editing.

Abbreviations

- FNF: Femoral neck fracture

- CNN: Convolutional neural network

- ResNet: Residual neural network

- CBAM: Convolutional block attention module

- AUC: Area under the receiver operating characteristic curve

- CT: Computed tomography

- MRI: Magnetic resonance imaging

- AP: Anteroposterior

- ICD code: International Classification of Diseases code

- PACS: Picture archiving and communication system

- ROC curve: Receiver operating characteristic curve

- Grad-CAM: Gradient-weighted Class Activation Mapping

- IQR: Interquartile range

- SD: Standard deviation

- CI: Confidence interval

Author Contribution

Oh J and Kim TH conceived the study and designed the trial. Oh J, Bae J, Ahn C, Byun H, Chung JH, and Lee D supervised the trial conduct and were involved in data collection. Yu S, Kim T, and Yoon M analyzed all images and data. Bae J, Yu S, Oh J and Kim TH drafted the manuscript, and all authors substantially contributed to its revision. Oh J and Kim TH take the responsibility for the content of the paper.

Funding

This study was supported by the National Research Foundation of Korea (2019R1F1A1063502). This work was also supported by the research fund of Hanyang University (HY-2018) and the National Research Foundation of Korea (NRF) grant funded by the Korea government (MSIT) (NRF2019R1A4A1029800).

Declarations

Ethics Approval

This study was approved by the Institutional Review Boards of Hanyang University Hospital (ref. no. 2020–01-005) and Seoul National University Bundang Hospital (ref. no. B-2002–595-103).

Consent to Participate

The requirement for informed consent was waived by the IRBs of Hanyang University Hospital and Seoul National University Bundang Hospital. All methods and procedures were carried out in accordance with the Declaration of Helsinki.

Consent for Publication

This manuscript has not been published or presented elsewhere in part or in entirety and is not under consideration by another journal. We have read and understood your journal’s policies, and we believe that neither the manuscript nor the study violates any of these.

Conflict of Interest

The authors declare no competing interests.

Footnotes

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Junwon Bae and Sangjoon Yu contributed equally to this work as first authors.

Contributor Information

Jaehoon Oh, Email: ojjai@hanmail.net, Email: ohjae7712@gmail.com.

Tae Hyun Kim, Email: taehyunkim@hanyang.ac.kr.

References

- 1.Zuckerman JD. Hip fracture. N Engl J Med. 1996;334(23):1519–1525. doi: 10.1056/NEJM199606063342307. [DOI] [PubMed] [Google Scholar]

- 2.Cummings SR, Rubin SM, Black D. The Future of Hip-Fractures in the United-States - Numbers, Costs, and Potential Effects of Postmenopausal Estrogen. Clin Orthop Relat Res. 1990;252:163–166. [PubMed] [Google Scholar]

- 3.Melton LJ. Hip fractures: A worldwide problem today and tomorrow. Bone. 1993;14:1–8. doi: 10.1016/8756-3282(93)90341-7. [DOI] [PubMed] [Google Scholar]

- 4.Cannon J, Silvestri S, Munro M. Imaging choices in occult hip fracture. J Emerg Med. 2009;37(2):144–152. doi: 10.1016/j.jemermed.2007.12.039. [DOI] [PubMed] [Google Scholar]

- 5.Richmond J, Aharonoff GB, Zuckerman JD, Koval KJ. Mortality risk after hip fracture. J Orthop Trauma. 2003;17(1):53–56. doi: 10.1097/00005131-200301000-00008. [DOI] [PubMed] [Google Scholar]

- 6.LeBlanc KE, Muncie HL, Jr, LeBlanc LL. Hip fracture: diagnosis, treatment, and secondary prevention. Am Fam Physician. 2014;89(12):945–951. [PubMed] [Google Scholar]

- 7.Hip Fracture. OrthoInfo from the American Academy of Orthopaedic Surgeons. Available at https://orthoinfo.aaos.org/en/diseases--conditions/hip-fractures. Accessed 10 November 2020

- 8.Zuckerman JD, Skovron ML, Koval KJ, Aharonoff G, Frankel VH. Postoperative complications and mortality associated with operative delay in older patients who have a fracture of the hip. J Bone Joint Surg Am. 1995;77(10):1551–1556. doi: 10.2106/00004623-199510000-00010. [DOI] [PubMed] [Google Scholar]

- 9.Rudman N, McIlmail D. Emergency Department Evaluation and Treatment of Hip and Thigh Injuries. Emerg Med Clin North Am. 2000;18(1):29–66. doi: 10.1016/S0733-8627(05)70107-3. [DOI] [PubMed] [Google Scholar]

- 10.Bottle A, Aylin P. Mortality associated with delay in operation after hip fracture: observational study. BMJ. 2006;332(7547):947–951. doi: 10.1136/bmj.38790.468519.55. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Perron AD, Miller MD, Brady WJ. Orthopedic pitfalls in the ED: radiographically occult hip fracture. Am J Emerg Med. 2002;20(3):234–237. doi: 10.1053/ajem.2002.33007. [DOI] [PubMed] [Google Scholar]

- 12. Parker MJ. Missed hip fractures. Arch Emerg Med. 1992;9(1):23–27. doi: 10.1136/emj.9.1.23.

- 13. Rizzo PF, Gould ES, Lyden JP, Asnis SE. Diagnosis of occult fractures about the hip. Magnetic resonance imaging compared with bone-scanning. J Bone Joint Surg Am. 1993;75(3):395–401.

- 14. Jordan RW, Dickenson E, Baraza N, Srinivasan K. Who is more accurate in the diagnosis of neck of femur fractures, radiologists or orthopaedic trainees? Skeletal Radiol. 2013;42(2):173–176. doi: 10.1007/s00256-012-1472-8.

- 15. Labza S, Fassola I, Kunz B, Ertel W, Krasnici S. Delayed recognition of an ipsilateral femoral neck and shaft fracture leading to preventable subsequent complications: a case report. Patient Saf Surg. 2017;11:20. doi: 10.1186/s13037-017-0134-0.

- 16. Lubovsky O, Liebergall M, Mattan Y, Weil Y, Mosheiff R. Early diagnosis of occult hip fractures MRI versus CT scan. Injury. 2005;36(6):788–792. doi: 10.1016/j.injury.2005.01.024.

- 17. Deleanu B, Prejbeanu R, Tsiridis E, Vermesan D, Crisan D, Haragus H, et al. Occult fractures of the proximal femur: imaging diagnosis and management of 82 cases in a regional trauma center. World J Emerg Surg. 2015;10:55. doi: 10.1186/s13017-015-0049-y.

- 18. Thomas RW, Williams HL, Carpenter EC, Lyons K. The validity of investigating occult hip fractures using multidetector CT. Br J Radiol. 2016;89(1060):20150250. doi: 10.1259/bjr.20150250.

- 19. Mandell JC, Weaver MJ, Khurana B. Computed tomography for occult fractures of the proximal femur, pelvis, and sacrum in clinical practice: single institution, dual-site experience. Emerg Radiol. 2018;25(3):265–273. doi: 10.1007/s10140-018-1580-4.

- 20. Adams M, Chen W, Holcdorf D, McCusker MW, Howe PD, Gaillard F. Computer vs human: Deep learning versus perceptual training for the detection of neck of femur fractures. J Med Imaging Radiat Oncol. 2019;63(1):27–32. doi: 10.1111/1754-9485.12828.

- 21. Cheng CT, Ho TY, Lee TY, Chang CC, Chou CC, Chen CC, et al. Application of a deep learning algorithm for detection and visualization of hip fractures on plain pelvic radiographs. Eur Radiol. 2019;29(10):5469–5477. doi: 10.1007/s00330-019-06167-y.

- 22. Urakawa T, Tanaka Y, Goto S, Matsuzawa H, Watanabe K, Endo N. Detecting intertrochanteric hip fractures with orthopedist-level accuracy using a deep convolutional neural network. Skeletal Radiol. 2019;48(2):239–244. doi: 10.1007/s00256-018-3016-3.

- 23. Badgeley MA, Zech JR, Oakden-Rayner L, Glicksberg BS, Liu M, Gale W, et al. Deep learning predicts hip fracture using confounding patient and healthcare variables. NPJ Digit Med. 2019;2:31. doi: 10.1038/s41746-019-0105-1.

- 24. Krogue JD, Cheng KV, Hwang KM, Toogood P, Meinberg EG, Geiger EJ, et al. Automatic Hip Fracture Identification and Functional Subclassification with Deep Learning. Radiol Artif Intell. 2020;25(2):e190023.

- 25. Mutasa S, Varada S, Goel A, Wong TT, Rasiej MJ. Advanced Deep Learning Techniques Applied to Automated Femoral Neck Fracture Detection and Classification. J Digit Imaging. 2020;33(5):1209–1217. doi: 10.1007/s10278-020-00364-8.

- 26. International Statistical Classification of Diseases and Related Health Problems 10th Revision. Available at https://icd.who.int/browse10/2019/en. Accessed 10 November 2020.

- 27. Garden RS. Low-Angle Fixation in Fractures of the Femoral Neck. J Bone Joint Surg Br. 1961;43(4):647–663. doi: 10.1302/0301-620X.43B4.647.

- 28. Frandsen PA, Andersen E, Madsen F, Skjødt T. Garden's classification of femoral neck fractures. An assessment of interobserver variation. J Bone Joint Surg Br. 1988;70(4):588–590.

- 29. Thorngren KG, Hommel A, Norrman PO, Thorngren J, Wingstrand H. Epidemiology of femoral neck fractures. Injury. 2002;33:1–7. doi: 10.1016/S0020-1383(02)00324-8.

- 30. Van Embden D, Rhemrev SJ, Genelin F, Meylaerts SA, Roukema GR. The reliability of a simplified Garden classification for intracapsular hip fractures. Orthop Traumatol Surg Res. 2012;98(4):405–408. doi: 10.1016/j.otsr.2012.02.003.

- 31. He K, Zhang X, Ren S, Sun J. Deep Residual Learning for Image Recognition. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 2016:770–778.

- 32. Woo S, Park J, Lee J, Kweon IS. CBAM: Convolutional Block Attention Module. Proceedings of the European Conference on Computer Vision (ECCV). 2018:3–19.

- 33. Selvaraju RR, Cogswell M, Das A, Vedantam R, Parikh D, Batra D. Grad-CAM: Visual Explanations From Deep Networks via Gradient-Based Localization. Proceedings of the IEEE International Conference on Computer Vision (ICCV). 2017:618–626.

- 34. Youden WJ. Index for rating diagnostic tests. Cancer. 1950;3(1):32–35. doi: 10.1002/1097-0142(1950)3:1<32::AID-CNCR2820030106>3.0.CO;2-3.

- 35. Kwon G, Ryu J, Oh J, Lim J, Kang BK, Ahn C, et al. Deep learning algorithms for detecting and visualising intussusception on plain abdominal radiography in children: a retrospective multicenter study. Sci Rep. 2020;10(1):17582. doi: 10.1038/s41598-020-74653-1.