Abstract

The prediction and detection of radiation-related caries (RRC) are crucial for managing the side effects of head and neck cancer (HNC) radiotherapy (RT). Despite the demand for RRC prediction, no study has yet proposed and evaluated a prediction method. This study introduces a method based on an artificial neural network to predict and detect either regular caries or RRC in HNC patients undergoing RT, using features extracted from panoramic radiographs. We retrospectively analyzed the panoramic dental images of fifteen HNC patients (13 men and 2 women), comprising 420 teeth. Two dentists manually labeled the teeth to separate healthy teeth from teeth with either type of caries. They also labeled the teeth as resistant or vulnerable, as predictive labels indicating whether caries developed after RT. We extracted 105 statistical and morphological image features of the teeth using PyRadiomics. We then used an artificial neural network (ANN) classifier, first to select the best features (using maximum weights) and then to label the teeth: as caries or non-caries when detecting RRC, and as resistant or vulnerable when predicting RRC. To evaluate the method, we calculated the confusion matrix, the receiver operating characteristic (ROC) curve, and the area under the curve (AUC), and compared the results with recent methods. The proposed method achieved an accuracy of 98.8% for detecting RRC (AUC = 0.9869) and 99.2% for predicting RRC (AUC = 0.9886). The proposed method for predicting and detecting RRC using a neural network and PyRadiomics features showed reliable accuracy and could be applied before RT starts to reduce side effects on susceptible teeth.

Keywords: Dental caries, Neural networks, PyRadiomics features, Panoramic radiography, Radiotherapy

Background

Head and neck cancer (HNC) ranks first on the list of the most common cancers in the world, with an estimated US incidence of over 134,000 new cases per year when oral cavity, brain, and thyroid cancers are combined [1, 2]. Despite improved outcomes with multimodality treatment (surgery, radiotherapy, and chemotherapy), the death rate from this disease remains high [3]. Radiation therapy plays a pivotal role in HNC treatment [4–6]. Treatment options depend on several factors, such as tumor stage, the patient's clinical condition, comorbidities, the availability of technological resources, and local medical expertise [7, 8]. Radiotherapy can therefore be used alone or combined with chemotherapy or surgery, depending on the clinical situation. Currently, about 80% of HNC patients receive radiation therapy as a component of their multidisciplinary treatment [8]. In recent decades, RT has undergone major technological advances, such as intensity-modulated radiation therapy (IMRT) [9]. IMRT has contributed to increasing the therapeutic index and, in some cases, to improving HNC patients' survival rates [9]. Despite these advances, the wide variety of structures located in this region makes adverse effects one of the most significant challenges of the treatment. The most common adverse effects are mucositis, xerostomia, trismus, secondary infections, radiation caries, dysgeusia, and osteoradionecrosis [10].

Radiation caries is a type of dental caries that can occur in individuals undergoing RT in regions that include the salivary glands [11]. Patients whose oral cavity structures are irradiated show increased levels of Streptococcus mutans, Lactobacillus, and Candida. Radiation-related caries (RRC) results from changes in the salivary glands and saliva, including reduced flow, pH, and buffering capacity, increased viscosity, and altered flora [11, 12]. The residual saliva in radiation-induced xerostomia also has a low concentration of Ca²⁺ ions, resulting in higher solubility of the tooth structure and reduced remineralization. In addition to these indirect effects, there is increasing evidence that radiation directly affects the teeth. For instance, the teeth become more prone to fracture, with enamel peeling, especially in areas of strength or stress, such as the incisal, cusp, and cervical regions of the teeth [10, 11]. Damage is observable at doses higher than 30 Gy, particularly when teeth receive more than 60 Gy [13, 14]. The location of the affected teeth, the rapid course, and the generalized attack distinguish radiation caries from other caries. In daily clinical practice, caries induced by radiation therapy are detected by clinical examination and panoramic radiography [12].

Even experienced clinicians achieve only moderate accuracy in diagnosing proximal caries on dental radiographs because of time constraints, image quality, or overlapping teeth. Studies have reported a low sensitivity (40–60%) for conventional radiography in diagnosing caries [15–18]. Moreover, dentists have been shown to misdiagnose up to 40% of deep caries and up to 20% of healthy teeth [16]. Hence, it is not unusual for different dentists to reach different judgments about the same radiograph.

Recently, researchers have made several attempts to improve the quality and accuracy of caries detection on panoramic X-rays. For instance, Gray et al. investigated whether specific image enhancements and dual observers affect the detection of caries, dentin extension, and cavitation [19]. They detected caries with two receptor types, photostimulable phosphor (PSP) plates and Schick sensors. Comparing the approaches, the authors observed no clinically significant differences when using more observers, different receptors, or filters.

Machine learning (ML), within the artificial intelligence (AI) field, consists of a class of algorithms that enable computers to learn from data [20]. Among computational methods, artificial neural networks (ANNs), with their learning capability, have been used in medical image diagnosis and disease follow-up [21–23]. ANNs are inspired by the structure and function of biological neural networks and build data models to find patterns. However, few studies have applied deep ANN architectures in dentistry, and research on the detection and diagnosis of dental caries is even more limited [24, 25].

As HNC patients receiving RT tend to develop extensive caries, and ANNs have the potential to improve caries detection, we designed a pipeline that combines ANN algorithms with statistical and morphological image features of the teeth to detect and also predict RRC on panoramic radiographs of HNC patients who have received or are undergoing RT.

Methods

We conducted this retrospective study at a tertiary health institution from 2017 to 2020 on patients diagnosed with HNC and treated with radiation therapy, with or without cisplatin-based chemotherapy. The eligibility criteria were no metastases and radiation therapy with a total dose of at least 60 Gy. The exclusion criteria were an RT dose lower than 60 Gy, distant metastases, no panoramic radiograph images, and extraction of all teeth before treatment. For each patient, a pair of panoramic radiographs was acquired, one before and one after treatment. According to the institution's treatment protocol, a dentist evaluates HNC patients before radiotherapy. This dental evaluation includes a panoramic radiograph and any tooth extractions and restorations required before treatment.

A dentist followed the patients during and after radiotherapy. In the follow-up period, patients were evaluated clinically and radiologically with a panoramic radiograph. Two dentists with more than 13 years of experience visually inspected and double-checked the radiographic images to detect caries. From the patients treated during this period, we selected 15 who met the inclusion criteria. Among them, 13 were male and 2 female, with a mean age of 58.7 ± 6.8 years. All subjects had squamous cell carcinoma (SCC) histology and received radiotherapy with or without combined chemotherapy. The most frequent tumor sites were the oropharynx, oral cavity, and larynx. Most patients (93.3%) were diagnosed with advanced tumor stages (III/IV). The main RT modality was three-dimensional conformal radiotherapy (80% of patients); only 20% received IMRT. Radiation doses prescribed to the primary tumor volume ranged from 60 to 70 Gy (mean dose 66 Gy).

Panoramic Radiograph Images

We obtained the radiographs with a Sirona digital panoramic radiograph machine, operating with a tube voltage between 60 and 80 kV, a tube current between 1 and 10 mA, a focal point of , and total filtration of aluminum. For the radiographic exposure, we used the device's default program, with a predefined magnification of 1.3 times and a rotation time of 14 s. Each panoramic image has a resolution of 2440 × 1280 pixels, an image spacing of 0.108, and an intensity range of 0–65,535 (16-bit).

The patients were positioned in the digital panoramic device with the device's vertical line aligned to the patient's facial midline (midsagittal plane) and the Frankfort plane parallel to the floor. We checked each patient's alignment and positioning and inspected the images on the computer screen to verify that all anatomical structures needed for quality control measurements were visible.

We used 3D Slicer and its modules for the manual labeling of teeth [26] and the PyRadiomics package to extract the features of the labeled teeth [27]. The programming languages and feature selection tools used in this study were MATLAB, Python, and Scikit-learn modules [27, 28]. To predict and detect caries, we used an artificial neural network (ANN) classifier implemented in MATLAB [29].

Image Analysis Pipeline

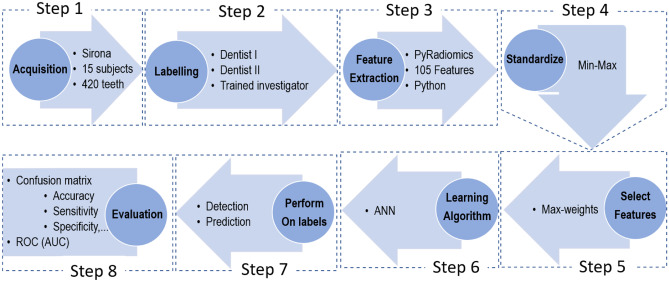

Figure 1 shows the pipeline steps we followed to design an artificial intelligence tool for caries detection and prediction.

Fig. 1.

The proposed pipeline: the sequence of image and data processing steps used to detect and predict caries. ANN = artificial neural network classifier

The pipeline has eight steps, explained one by one in detail below.

Step 1: Acquisitions

The image acquisition step is explained in detail in the "Panoramic Radiograph Images" section.

Step 2: Labeling

Two dentists (also authors) manually delineated and labeled all 420 tooth regions using the editor tools in 3D Slicer. They labeled the images for two purposes: detection and prediction. For the detection approach (the first label map), each healthy tooth was labeled "one" (class 1) and each tooth with caries "two" (class 2), on the images both before and after RT. Once all teeth were labeled, the second dentist and a postdoctoral researcher trained in medical image processing double-checked the labels. For the second label map, intended for prediction, teeth that were healthy before radiotherapy and decayed after receiving the Gy dose were labeled "one" (class 1, vulnerable teeth), whereas healthy teeth that remained free of caries after radiotherapy were labeled "two" (class 2, resistant teeth).
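The relation between the two label maps can be expressed as a small rule. The sketch below is a hypothetical illustration, not the authors' code: it assumes each tooth has an identifier shared by the pre- and post-RT label maps (the dictionaries and IDs are made up), excludes teeth with caries before RT as in Fig. 4C, and, as an additional assumption, also excludes teeth missing after RT.

```python
from typing import Dict, Optional

HEALTHY, CARIES = 1, 2        # first label map (detection)
VULNERABLE, RESISTANT = 1, 2  # second label map (prediction)


def prediction_label(pre_rt: int, post_rt: Optional[int]) -> Optional[int]:
    """Derive the prediction class of one tooth from its two detection labels.

    Returns None when the tooth is excluded from the prediction set.
    """
    if pre_rt != HEALTHY:
        return None  # carious before RT: excluded (gray in Fig. 4C)
    if post_rt is None:
        return None  # assumption: teeth lost after RT are excluded
    return VULNERABLE if post_rt == CARIES else RESISTANT


def build_prediction_map(pre: Dict[str, int], post: Dict[str, int]) -> Dict[str, int]:
    """Map hypothetical tooth IDs to prediction classes, skipping excluded teeth."""
    labels = {}
    for tooth_id, pre_label in pre.items():
        label = prediction_label(pre_label, post.get(tooth_id))
        if label is not None:
            labels[tooth_id] = label
    return labels
```

For example, a tooth healthy before RT and carious after it becomes class 1 (vulnerable), while one still healthy after RT becomes class 2 (resistant).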

Step 3: Feature Extraction

We extracted 105 features from each tooth image region using the PyRadiomics feature extraction package in Python. The 105 features comprised 12 shape-based, 16 gray-level run length matrix, 5 neighborhood gray tone difference matrix, 18 first-order statistics, 16 gray-level size zone matrix, 24 gray-level co-occurrence matrix, and 14 gray-level dependence matrix features (see Appendix Table 5 for details).
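To make the first-order group concrete, several of its statistics can be computed directly from the pixel intensities inside a tooth mask. This is a simplified NumPy sketch under stated assumptions, not PyRadiomics itself: it skips PyRadiomics preprocessing (resampling, normalization, intensity shifting) and omits the histogram-based features (e.g., Entropy, Uniformity) that depend on gray-level binning. The function name is hypothetical.

```python
import numpy as np


def first_order_features(image: np.ndarray, mask: np.ndarray, label: int = 1) -> dict:
    """Compute a subset of first-order statistics over pixels where mask == label."""
    x = image[mask == label].astype(np.float64)
    if x.size == 0:
        raise ValueError("mask contains no pixels with the requested label")
    p10, p25, p75, p90 = np.percentile(x, [10, 25, 75, 90])
    mean = x.mean()
    return {
        "Minimum": x.min(),
        "Maximum": x.max(),
        "Mean": mean,
        "Median": np.median(x),
        "10Percentile": p10,
        "90Percentile": p90,
        "InterquartileRange": p75 - p25,
        "Range": x.max() - x.min(),
        "MeanAbsoluteDeviation": np.mean(np.abs(x - mean)),
        "Variance": np.mean((x - mean) ** 2),  # population variance, as in PyRadiomics
    }
```

With a 4 × 4 test image whose mask covers the pixels with values 5, 6, 9, and 10, the function returns a Mean of 7.5 and a Variance of 4.25.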

Table 5.

List of all 105 features extracted using PyRadiomics, by category, name, and label. More details about each feature definition can be found in the PyRadiomics documentation (https://pyradiomics.readthedocs.io/en/latest/features.html)

| Label | Shape | Label | Gray-level run length matrix | Label | Neighborhood gray tone difference matrix | Label | First-order statistics | Label | Gray-level size zone matrix | Label | Gray-level co-occurrence matrix | Label | Gray-level dependence matrix |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | VoxelVolume | 13 | RunLengthNonUniformityNormalized | 29 | Contrast | 34 | Maximum | 52 | SizeZoneNonUniformity | 68 | Contrast | 92 | SmallDependenceEmphasis |
| 2 | Maximum2DDiameterRow | 14 | HighGrayLevelRunEmphasis | 30 | Strength | 35 | Energy | 53 | ZonePercentage | 69 | MaximumProbability | 93 | DependenceEntropy |
| 3 | Maximum3DDiameter | 15 | GrayLevelVariance | 31 | Coarseness | 36 | Median | 54 | GrayLevelVariance | 70 | ClusterShade | 94 | GrayLevelVariance |
| 4 | SurfaceVolumeRatio | 16 | RunPercentage | 32 | Complexity | 37 | Minimum | 55 | LargeAreaLowGrayLevelEmphasis | 71 | DifferenceVariance | 95 | LowGrayLevelEmphasis |
| 5 | Sphericity | 17 | RunVariance | 33 | Busyness | 38 | MeanAbsoluteDeviation | 56 | LowGrayLevelZoneEmphasis | 72 | JointEntropy | 96 | DependenceNonUniformity |
| 6 | MinorAxisLength | 18 | ShortRunLowGrayLevelEmphasis |  |  | 39 | InterquartileRange | 57 | HighGrayLevelZoneEmphasis | 73 | SumSquares | 97 | SmallDependenceHighGrayLevelEmphasis |
| 7 | SurfaceArea | 19 | ShortRunHighGrayLevelEmphasis |  |  | 40 | Kurtosis | 58 | ZoneVariance | 74 | ClusterProminence | 98 | SmallDependenceLowGrayLevelEmphasis |
| 8 | Maximum2DDiameterColumn | 20 | RunEntropy |  |  | 41 | 90Percentile | 59 | SizeZoneNonUniformityNormalized | 75 | MCC | 99 | LargeDependenceEmphasis |
| 9 | MajorAxisLength | 21 | LongRunHighGrayLevelEmphasis |  |  | 42 | Entropy | 60 | SmallAreaEmphasis | 76 | SumEntropy | 100 | HighGrayLevelEmphasis |
| 10 | MeshVolume | 22 | RunLengthNonUniformity |  |  | 43 | RootMeanSquared | 61 | SmallAreaHighGrayLevelEmphasis | 77 | Id | 101 | DependenceVariance |
| 11 | Maximum2DDiameterSlice | 23 | LongRunLowGrayLevelEmphasis |  |  | 44 | Uniformity | 62 | LargeAreaHighGrayLevelEmphasis | 78 | InverseVariance | 102 | LargeDependenceLowGrayLevelEmphasis |
| 12 | Elongation | 24 | LowGrayLevelRunEmphasis |  |  | 45 | Mean | 63 | ZoneEntropy | 79 | Imc1 | 103 | LargeDependenceHighGrayLevelEmphasis |
|  |  | 25 | GrayLevelNonUniformityNormalized |  |  | 46 | Variance | 64 | GrayLevelNonUniformityNormalized | 80 | JointEnergy | 104 | GrayLevelNonUniformity |
|  |  | 26 | GrayLevelNonUniformity |  |  | 47 | RobustMeanAbsoluteDeviation | 65 | GrayLevelNonUniformity | 81 | Idn | 105 | DependenceNonUniformityNormalized |
|  |  | 27 | LongRunEmphasis |  |  | 48 | 10Percentile | 66 | LargeAreaEmphasis | 82 | DifferenceEntropy |  |  |
|  |  | 28 | ShortRunEmphasis |  |  | 49 | TotalEnergy | 67 | SmallAreaLowGrayLevelEmphasis | 83 | ClusterTendency |  |  |
|  |  |  |  |  |  | 50 | Range |  |  | 84 | Imc2 |  |  |
|  |  |  |  |  |  | 51 | Skewness |  |  | 85 | Idmn |  |  |
|  |  |  |  |  |  |  |  |  |  | 86 | JointAverage |  |  |
|  |  |  |  |  |  |  |  |  |  | 87 | Correlation |  |  |
|  |  |  |  |  |  |  |  |  |  | 88 | SumAverage |  |  |
|  |  |  |  |  |  |  |  |  |  | 89 | Autocorrelation |  |  |
|  |  |  |  |  |  |  |  |  |  | 90 | DifferenceAverage |  |  |
|  |  |  |  |  |  |  |  |  |  | 91 | Idm |  |  |

Step 4: Standardize

After extracting the features of the teeth, we standardized their values using min–max scaling, which maps all values to the range 0.0 to 1.0.
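Min–max scaling maps each feature column x to (x - min) / (max - min). A minimal NumPy sketch follows; mapping constant columns to 0.0 is our assumption, since the text does not say how such columns were treated, and the function name is hypothetical.

```python
import numpy as np


def min_max_scale(features: np.ndarray) -> np.ndarray:
    """Scale each column (feature) of a teeth-by-features matrix to [0, 1].

    Constant columns, whose max equals their min, are mapped to 0.0
    instead of dividing by zero.
    """
    x = np.asarray(features, dtype=np.float64)
    col_min = x.min(axis=0)
    col_range = x.max(axis=0) - col_min
    safe_range = np.where(col_range > 0, col_range, 1.0)
    return (x - col_min) / safe_range
```

The source does not state whether the minimum and maximum were taken over all 420 teeth or over the training subset only; fitting them on the training subset (for example with scikit-learn's `MinMaxScaler`) avoids leaking test information into training.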

Step 5: Feature Selection

Using all features may not be practical for classifiers, since it may decrease precision, increase computation time, or lead to overfitting. We used two strategies to select the best features: the SelectKBest module in Scikit-learn and a maximum-weights approach that we designed for the ANN. SelectKBest can use five score functions, i.e., chi-squared (chi2), mutual information (mutual_info_classif), ANOVA F-value (f_classif), F-value regression (f_regression), and mutual information regression (mutual_info_regression), to calculate scores. It then selects the K best features (e.g., K = 1, 2, …, 104) among the 105 features according to the top K scores. In our maximum-weights approach, we run an ANN to calculate the weights and then remove the features whose weights are lower than a specific threshold. The ANN classification is then applied only to the remaining features.
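To illustrate how a SelectKBest-style ranking works, the sketch below re-implements one of the five score functions, the one-way ANOVA F-value (the statistic behind scikit-learn's `f_classif`), in NumPy and keeps the K highest-scoring features. It is a hedged illustration: in practice one would call `SelectKBest(score_func=f_classif, k=K)` from `sklearn.feature_selection` directly, and tie-breaking between equal scores may differ from scikit-learn's. The function names are hypothetical.

```python
import numpy as np


def anova_f_scores(x: np.ndarray, y: np.ndarray) -> np.ndarray:
    """One-way ANOVA F statistic of every feature column against class labels y."""
    x = np.asarray(x, dtype=np.float64)
    y = np.asarray(y)
    classes = np.unique(y)
    n, k = x.shape[0], classes.size
    grand_mean = x.mean(axis=0)
    ss_between = np.zeros(x.shape[1])
    ss_within = np.zeros(x.shape[1])
    for c in classes:
        group = x[y == c]
        group_mean = group.mean(axis=0)
        ss_between += group.shape[0] * (group_mean - grand_mean) ** 2
        ss_within += ((group - group_mean) ** 2).sum(axis=0)
    ms_between = ss_between / (k - 1)
    ms_within = ss_within / (n - k)
    # Guard against zero within-class variance (a perfectly separated feature).
    return ms_between / np.maximum(ms_within, np.finfo(np.float64).tiny)


def select_k_best(x: np.ndarray, y: np.ndarray, k: int) -> np.ndarray:
    """Return the column indices of the k highest-scoring features, sorted ascending."""
    scores = anova_f_scores(x, y)
    top = np.argsort(scores)[::-1][:k]
    return np.sort(top)
```

The returned indices are 0-based column positions; adding 1 gives labels in the style of Appendix Table 5.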

Step 6: Learning Algorithm

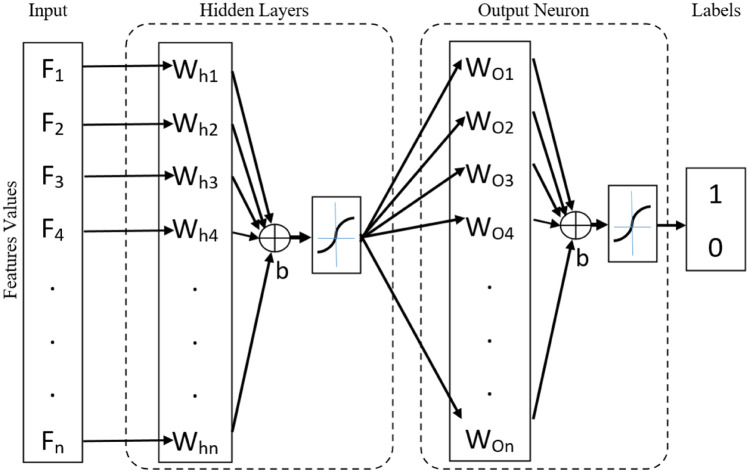

We designed the ANN using two networks, as illustrated in Fig. 2.

Fig. 2.

We defined an ANN with one hidden layer in MATLAB. The ANN input is the selected feature scores, and the output is a label, 1 or 2, representing healthy teeth vs dental caries (detection) or vulnerable vs resistant teeth (prediction). W = weights, b = bias

The first layer, i.e., the hidden layer, takes all or the selected feature scores as input. We trained the hidden layer by updating the weights (W) and biases (b) with gradient descent with momentum backpropagation. The output of the hidden layer is the input of the classifier layers. The classifier output layers use sigmoid transfer functions and were trained with the resilient backpropagation algorithm to overcome a known problem of gradient descent with sigmoid functions: when a sigmoid saturates, its gradients become very small, which slows down the weight updates.
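Resilient backpropagation (Rprop) sidesteps the small-gradient problem by using only the sign of each partial derivative: every weight keeps its own step size, which grows while the gradient sign is stable and shrinks after a sign change. The NumPy sketch below shows the iRprop- variant; the variant and its hyperparameters (η+ = 1.2, η− = 0.5, step bounds) are assumptions taken from common Rprop defaults, not settings reported for the study (MATLAB provides resilient backpropagation through its `trainrp` training function). The function names are hypothetical.

```python
import numpy as np


def rprop_step(w, grad, prev_grad, step, eta_plus=1.2, eta_minus=0.5,
               step_min=1e-6, step_max=50.0):
    """Apply one iRprop- update to the weights w.

    Only the sign of each gradient component is used. Returns the new weights,
    the gradient to remember for the next call, and the new per-weight steps.
    """
    same_sign = grad * prev_grad > 0
    sign_flip = grad * prev_grad < 0
    # Grow a weight's step while its gradient keeps the same sign.
    step = np.where(same_sign, np.minimum(step * eta_plus, step_max), step)
    # Shrink it when the sign flips (the last update overshot a minimum).
    step = np.where(sign_flip, np.maximum(step * eta_minus, step_min), step)
    # iRprop-: after a flip, skip this weight's update and forget its gradient.
    grad = np.where(sign_flip, 0.0, grad)
    return w - np.sign(grad) * step, grad, step


def minimize_quadratic(target, n_iter=200, initial_step=0.1):
    """Demo: minimize ||w - target||^2 with Rprop, starting from w = 0."""
    target = np.asarray(target, dtype=np.float64)
    w = np.zeros_like(target)
    prev_grad = np.zeros_like(target)
    step = np.full_like(target, initial_step)
    for _ in range(n_iter):
        grad = 2.0 * (w - target)
        w, prev_grad, step = rprop_step(w, grad, prev_grad, step)
    return w
```

Because the update ignores the gradient's magnitude, a saturated sigmoid with tiny gradients still produces steps of normal size.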

We used 70% of the teeth dataset to train both the hidden and the classifier layers. The remaining 30% was divided equally between validation (15%) and test (15%) sets. The software selected all three subsets randomly.
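The 70/15/15 division can be sketched as a random permutation of the tooth indices. This is a hypothetical NumPy illustration; the seed and the rounding rule are assumptions, and MATLAB's neural network tools offer a comparable random division (`dividerand`).

```python
import numpy as np


def random_split(n_samples: int, train: float = 0.70, val: float = 0.15, seed: int = 0):
    """Randomly partition sample indices into train/validation/test index arrays.

    The test set receives the remainder, so every sample appears exactly once.
    """
    rng = np.random.default_rng(seed)
    order = rng.permutation(n_samples)
    n_train = int(round(train * n_samples))
    n_val = int(round(val * n_samples))
    return order[:n_train], order[n_train:n_train + n_val], order[n_train + n_val:]
```

With 420 teeth, this yields 294 training, 63 validation, and 63 test teeth. Because the split is by tooth, teeth from the same patient can fall into different subsets; splitting by patient is a stricter alternative.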

Step 7: Perform the Classifier

Prediction and Detection of RRC

We applied the ANN classification method to the selected feature scores to obtain each tooth's label for both the prediction and the detection of RRC. To prevent overfitting, the number of hidden layers (HL) in an ANN is limited to a range [28], as follows:

(1)

where the terms are the number of input neurons, the number of output neurons, the number of samples in the training dataset, and a value in an interval that depends on the application of the ANN. We used this limit to seek a balance between the number of features and the number of HL.

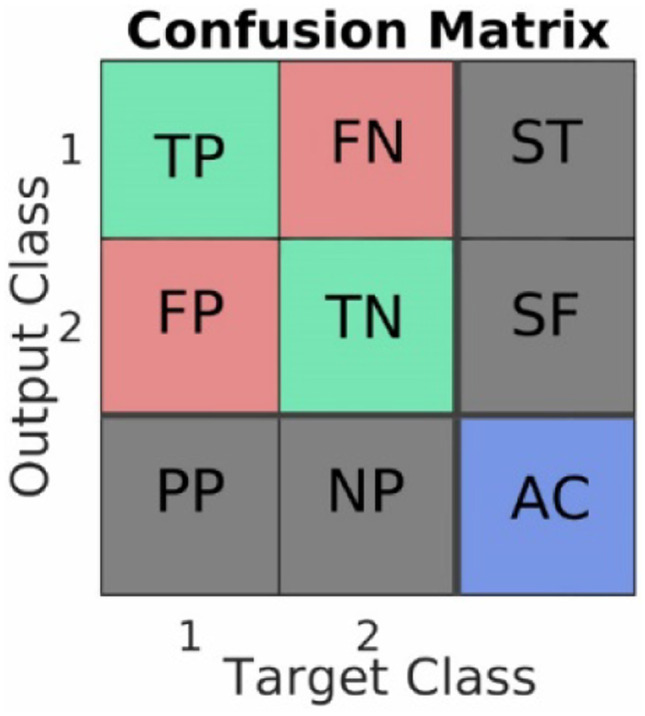

Step 8: Evaluation

Since we already had the dentists' gold-standard labels (from Step 2), we computed the confusion matrix to assess the ANN. The confusion matrix includes nine values: true-positive (TP), false-negative (FN), false-positive (FP), true-negative (TN), sensitivity (ST), specificity (SF), positive predictive value (PP), negative predictive value (NP), and accuracy (AC), arranged in a 3 × 3 matrix as shown in Fig. 3, with each element following the standard definition [30].
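The derived entries of the confusion matrix follow directly from the four counts. The sketch below uses the standard definitions; the counts in the usage line are made up for illustration and are not results of this study, and returning NaN for zero denominators is an assumption of this sketch.

```python
def confusion_metrics(tp: int, fn: int, fp: int, tn: int) -> dict:
    """Standard binary-classification metrics from the four confusion-matrix counts."""
    def ratio(num, den):
        return num / den if den else float("nan")

    return {
        "sensitivity": ratio(tp, tp + fn),              # ST: true-positive rate
        "specificity": ratio(tn, tn + fp),              # SF: true-negative rate
        "ppv": ratio(tp, tp + fp),                      # PP: positive predictive value
        "npv": ratio(tn, tn + fn),                      # NP: negative predictive value
        "accuracy": ratio(tp + tn, tp + fn + fp + tn),  # AC
    }
```

For example, `confusion_metrics(tp=90, fn=10, fp=5, tn=95)` gives a sensitivity of 0.9, a specificity of 0.95, and an accuracy of 0.925.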

Fig. 3.

Confusion matrix, a standard way to estimate a classifier's performance in labeling data into two classes. True-positive (TP), false-negative (FN), false-positive (FP), true-negative (TN), sensitivity (ST), specificity (SF), positive predictive value (PP), negative predictive value (NP), and accuracy (AC)

In addition to the confusion matrix, we report the receiver operating characteristic (ROC) curve of the best-performing ANN. Finally, at the end of the evaluation step, we compared our approach (ANN-PyRadiomics with maximum-weight feature selection) with several other methods.
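The AUC reported with each ROC curve equals the probability that a randomly chosen positive tooth receives a higher classifier score than a randomly chosen negative one, with ties counted as one half. The pure-Python sketch below computes it that way; it is an illustration, not the MATLAB routine used in the study, and the scores in the example are made up. The pairwise loop is O(n·m), which is negligible for a few hundred teeth, and `sklearn.metrics.roc_auc_score` gives the same value for binary labels.

```python
from typing import Sequence


def roc_auc(scores_pos: Sequence[float], scores_neg: Sequence[float]) -> float:
    """AUC as the probability that a random positive outscores a random negative.

    Ties count as one half; this equals the area under the empirical ROC curve.
    """
    if not scores_pos or not scores_neg:
        raise ValueError("need at least one positive and one negative score")
    wins = 0.0
    for p in scores_pos:
        for n in scores_neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(scores_pos) * len(scores_neg))
```

For positive scores [0.9, 0.8, 0.4] and negative scores [0.7, 0.3, 0.2], 8 of the 9 pairs are ordered correctly, so the AUC is 8/9 ≈ 0.889.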

Results

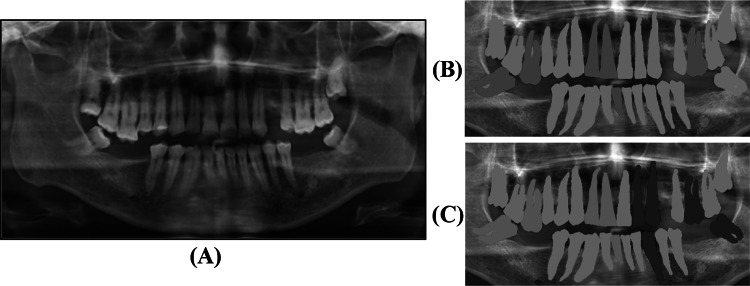

Two dentists manually labeled a total of 420 teeth, 238 healthy teeth (class 1, green labels in Fig. 4B) and 182 dental caries (class 2, red labels in Fig. 4B), with 0.5% disagreement between their labels. For prediction, the dentists also labeled 119 teeth: 75 vulnerable (class 1, green in Fig. 4C) and 44 resistant (class 2, blue in Fig. 4C), as illustrated in Fig. 4 and Table 1.

Fig. 4.

An example of A) an original panoramic dental image, B) the first manual label map, with healthy teeth (green) and dental caries (red), for the detection approach, and C) the second manual label map, with resistant (blue) and vulnerable (green) teeth, for the prediction approach. In the second label map, teeth with caries before RT are excluded (gray)

Table 1.

Manual labeling results (healthy teeth vs dental caries) before and after radiotherapy for 15 patients with head and neck cancer

| X-ray images | Healthy teeth | Dental caries | Sum |
|---|---|---|---|
| Before radiotherapy | 129 | 90 | 219 |
| After radiotherapy | 44 | 157 | 201 |

As shown in Table 1, the number of teeth differs before and after radiotherapy because 18 teeth were no longer present after treatment.

Next, we extracted 105 features from each tooth individually in PyRadiomics, using the label maps and original images. The package writes the features in an undefined order in the resulting output sheet, so values could not be compared directly by position. To solve this problem, we defined a fixed list of features and assigned each value to the feature of the same name. All scores were then scaled with the min–max method.
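The fixed-order fix can be sketched as a lookup by key. PyRadiomics names its output entries `<imageType>_<featureClass>_<featureName>` (for example, `original_glcm_Id`), alongside `diagnostics_` entries that are not features. Because some names appear in several classes (GrayLevelVariance is labels 15, 54, and 94 in Appendix Table 5), the lookup must use the class and the name together. The list below is a hypothetical five-entry excerpt, and the function name is also hypothetical; the full list would contain all 105 features in Table 5 order.

```python
from typing import Dict, List, Sequence, Tuple

# Hypothetical excerpt of the fixed (feature class, feature name) order.
FIXED_ORDER: List[Tuple[str, str]] = [
    ("shape", "Maximum2DDiameterRow"),
    ("firstorder", "10Percentile"),
    ("glrlm", "GrayLevelVariance"),
    ("glszm", "GrayLevelVariance"),
    ("glcm", "Id"),
]


def to_row(result: Dict[str, object], order: Sequence[Tuple[str, str]],
           image_type: str = "original") -> List[float]:
    """Pick features from one PyRadiomics-style result dict in a fixed order.

    Matching on class and name together keeps same-named features from
    different classes (e.g., GrayLevelVariance) apart; diagnostics keys are ignored.
    """
    row = []
    for feature_class, name in order:
        key = f"{image_type}_{feature_class}_{name}"
        if key not in result:
            raise KeyError(f"missing feature {key!r}")
        row.append(float(result[key]))
    return row
```

Applying `to_row` to every tooth's result yields rows whose columns line up, which is what makes the later min–max scaling and feature selection meaningful.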

We used SelectKBest to keep only the highest-scoring features under five score functions: chi-squared, ANOVA F-value, mutual information (MI), F-value, and mutual information regression (MIR). We assessed all five functions over a range of K values to find the best features, as presented in Table 2.

Table 2.

Results of the SelectKBest module on the 105 features extracted by PyRadiomics from the label map of the teeth. The feature numbers correspond to the labels in Appendix Table 5

| NSF | χ² | ANOVA-FV | MI | FV | MIR |
|---|---|---|---|---|---|
| 1 | 37 | 37 | 21 | 37 | 21 |
| 5 | 22, 37, 69, 72, 80 | 22, 37, 69, 72, 80 | 21, 22, 29, 40, 75 | 22, 37, 69, 72, 80 | 21, 22, 27, 40, 45 |
| 10 | 7, 22, 37, 44, 52, 69, 72, 75, 80, 96 | 22, 37, 52, 69, 72, 75, 80, 85, 87, 96 | 21, 22, 27, 29, 40, 68, 75, 81, 87, 91 | 22, 37, 52, 69, 72, 75, 80, 85, 87, 96 | 21, 22, 27, 29, 39, 40, 68, 75, 87, 91 |

Columns after NSF are the score functions. χ² chi-squared, ANOVA-FV ANOVA F-value, FV F-value, MI mutual information, MIR mutual information regression, NSF number of selected features

The employed ANN has 105 weights, one for each available feature. In the feature selection step of the pipeline, we removed the features whose weights were below a minimum value. Because we decreased the number of features, Eq. (1) allowed us to increase the number of HL. Table 3 presents the results of maximum-weight feature selection for the single best, 5 best, and 10 best features.
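The text does not define exactly how a single weight per feature is read from the trained network. The NumPy sketch below assumes one plausible rule, hypothetical rather than the authors' implementation: take the largest absolute weight connecting each input feature to the hidden layer, divide by the overall maximum so that the threshold u lies in [0, 1] as in Tables 3, 6, and 7, and keep the features at or above u. The function name is hypothetical.

```python
import numpy as np


def select_by_max_weight(w_in: np.ndarray, u: float) -> np.ndarray:
    """Keep features whose normalized maximum absolute input weight is >= u.

    w_in has shape (n_hidden, n_features), one column per input feature.
    Returns the 1-based feature labels (as in Appendix Table 5) that are kept.
    """
    importance = np.abs(np.asarray(w_in, dtype=np.float64)).max(axis=0)
    if importance.max() == 0:
        raise ValueError("all input weights are zero")
    importance = importance / importance.max()  # assumption: scale to [0, 1]
    return np.flatnonzero(importance >= u) + 1
```

With input weights [[0.1, 0.9, -0.5], [0.2, -0.3, 0.05]] and u = 0.5, the normalized importances are about 0.22, 1.0, and 0.56, so features 2 and 3 are kept. Raising u removes more features, which is the trade-off explored in Tables 6 and 7.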

Table 3.

Results of selecting the best features with the maximum-weight approach

| u | NSF | Selected features |
|---|---|---|
| 0.77 | 1 | 2 |
| 0.72 | 5 | 2, 48, 77, 98, 101 |
| 0.61 | 10 | 2, 33, 37, 48, 67, 77, 93, 98, 101, 105 |

NSF number of selected features, u upper bound used to remove the features with lower weights

As Tables 2 and 3 show, the two approaches select different features, which indicates that the choice of feature selection approach is crucial for training NN classifiers. Moreover, SelectKBest produced different results for each score function, suggesting that its feature selection decisions are less reliable.

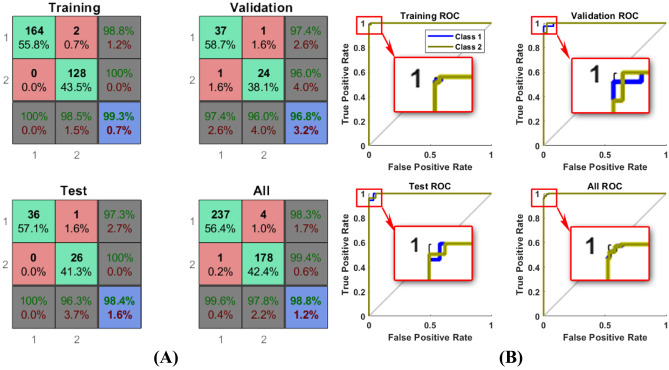

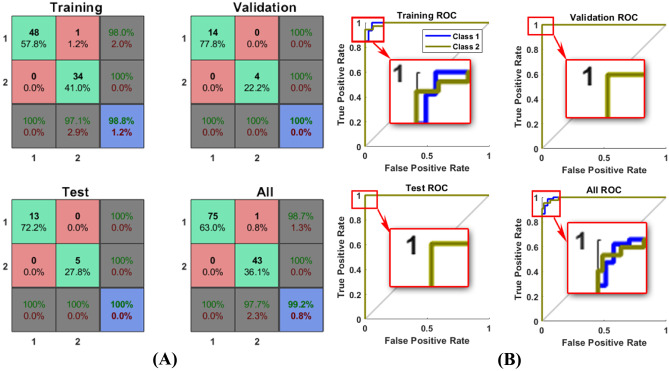

Detection

We trained the ANN for the detection approach using 70% of the teeth, selected randomly by the software. Fig. 5A shows the confusion matrices for training, validation, test, and the ANN's overall performance, and Appendix Table 6 lists the results for different feature selections and numbers of hidden layers. Fig. 5B shows the ROC curve of the ANN's best performance.

Fig. 5.

A) Confusion matrices. B) ROC curve calculated for the best ANN results on all 420 teeth in the detection approach, generated by MATLAB. Class 1: healthy teeth; class 2: dental caries

Table 6.

Results of the neural network classifier on 15 subjects including 420 teeth. NHL number of hidden layers, NSF number of selected features, u upper bound; features whose maximum weights are less than u are removed

| u | NSF | Selected features | NHL | Accuracy (%) |
|---|---|---|---|---|
| 0 | 105 | All | 1 | 78.6 |
| 0.006 | 103 | All except 3, 11 | 2 | 87.6 |
| 0.13 | 68 | 1, 2, 4, 6, 7, 8, 9, 10, 12, 13, 16, 17, 18, 20, 22, 25, 26, 27, 28, 31, 33, 34, 35, 37, 39, 40, 41, 42, 43, 44, 45, 47, 48, 49, 50, 52, 53, 58, 60, 62, 63, 66, 67, 68, 69, 70, 71, 72, 74, 75, 77, 78, 80, 81, 84, 85, 86, 90, 91, 93, 95, 96, 98, 99, 101, 103, 104, 105 | 3 | 89.8 |
| 0.17 | 50 | 1, 2, 4, 6, 7, 8, 9, 10, 12, 16, 20, 25, 26, 28, 31, 33, 34, 35, 37, 39, 40, 41, 43, 44, 47, 48, 49, 52, 53, 62, 63, 67, 69, 70, 71, 72, 74, 75, 77, 78, 80, 81, 85, 91, 93, 98, 101, 103, 104, 105 | 4 | 90.2 |
| 0.22 | 40 | 2, 4, 6, 7, 8, 9, 12, 25, 26, 28, 31, 33, 34, 35, 37, 39, 40, 41, 43, 47, 48, 49, 53, 63, 67, 70, 71, 74, 75, 77, 78, 80, 85, 91, 93, 98, 101, 103, 104, 105 | 5 | 96.0 |
| 0.26 | 33 | 2, 4, 6, 7, 8, 9, 12, 25, 28, 31, 33, 34, 37, 39, 40, 43, 48, 53, 63, 67, 70, 75, 77, 78, 80, 85, 91, 93, 98, 101, 103, 104, 105 | 6 | 94.0 |
| 0.29 | 28 | 2, 4, 6, 7, 9, 12, 25, 31, 33, 34, 37, 40, 48, 53, 63, 67, 70, 75, 77, 78, 80, 85, 91, 93, 98, 101, 104, 105 | 7 | 98.6 |
| 0.30 | 24 | 2, 4, 6, 7, 12, 31, 33, 34, 37, 40, 48, 53, 67, 70, 75, 77, 78, 85, 91, 93, 98, 101, 104, 105 | 8 | 95.2 |
| 0.35 | 21 | 2, 4, 6, 12, 33, 34, 37, 40, 48, 53, 67, 70, 75, 77, 78, 91, 93, 98, 101, 104, 105 | 9 | 98.6 |
| 0.38 | 19 | 2, 4, 6, 12, 33, 34, 37, 40, 48, 53, 67, 75, 77, 78, 93, 98, 101, 104, 105 | 10 | 98.8 |

The area under the curve (AUC) of the ROC curve was 0.9869. Among the 10 best features, the five with the highest weights in the ANN training step, i.e., 2, 48, 77, 98, and 101, were selected. We explain these features in detail below.

Maximum2DDiameterRow (Feature 2)

Maximum 2D diameter (row) is the largest pairwise Euclidean distance between the surface mesh vertices of the labeled tooth in the column-slice (usually the sagittal) plane.

10Percentile (Feature 48)

The 10th percentile of the tooth's intensity levels is the third selected feature, also with a high weight. When the histogram of each tooth is calculated, the 10th percentile of teeth with caries differs from that of teeth without caries. Therefore, this feature can help distinguish between dental caries and healthy teeth.

ID (Feature 77)

ID (also known as Homogeneity 1) is another measure of a tooth's local homogeneity. With more uniform gray levels, the denominator remains low, resulting in a higher overall value.
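The denominator mentioned above comes from the PyRadiomics definition ID = Σ_k p_{x−y}(k) / (1 + k), where p_{x−y}(k) is the total co-occurrence probability of gray-level pairs whose difference is k. The NumPy sketch below evaluates it on a single co-occurrence matrix; PyRadiomics additionally builds one matrix per direction and averages the results, which this sketch omits. The function name is hypothetical.

```python
import numpy as np


def inverse_difference(glcm: np.ndarray) -> float:
    """ID (Homogeneity 1) of one gray-level co-occurrence matrix.

    Summing p(i, j) / (1 + |i - j|) over all cells equals
    sum_k p_{x-y}(k) / (1 + k), since p_{x-y}(k) groups the cells with |i - j| = k.
    """
    p = np.asarray(glcm, dtype=np.float64)
    p = p / p.sum()  # normalize counts to joint probabilities
    i, j = np.indices(p.shape)
    return float(np.sum(p / (1.0 + np.abs(i - j))))
```

A purely diagonal matrix (all pairs share the same gray level) gives ID = 1, while a uniform 2 × 2 matrix gives 0.5 + 0.5/2 = 0.75, so more uniform gray levels indeed yield higher values.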

SmallDependenceLowGrayLevelEmphasis (Feature 98)

This feature measures the joint distribution of small dependences with lower gray-level values.

DependenceVariance (Feature 101)

This feature measures the variance in dependence size within the region of interest, i.e., the tooth. Like ID, it reflects the diversity of gray levels in a tooth. Because healthy teeth and dental caries differ in intensity levels, this feature was selected as an effective feature for separating healthy teeth from teeth with caries.

Prediction

We applied the ANN to the second label map to predict RRC. As expected, the features selected with the maximum-weights approach were not exactly the same as those selected for detection. The results are shown in Appendix Table 7 and Fig. 6.

Table 7.

Results of the neural network classifier on 15 subjects including 119 teeth (75 vulnerable and 44 resistant). NHL number of hidden layers, NSF number of selected features, u threshold used in feature selection by the maximum-weights approach

| u | NSF | Selected features | NHL | Accuracy (%) |
|---|---|---|---|---|
| 0 | 105 | All | 1 | 94.1 |
| 0.44 | 27 | 40, 55, 58, 64, 67, 68, 70, 71, 72, 75, 76, 79, 84, 88, 93, 95, 102, 105 | 2 | 91.6 |
| 0.56 | 17 | 9, 12, 13, 24, 34, 40, 55, 64, 67, 68, 71, 72, 75, 76, 79, 84, 93 | 3 | 99.2 |
| 0.61 | 12 | 9, 12, 34, 55, 64, 67, 68, 71, 72, 75, 76, 79 | 4 | 98.3 |
| 0.64 | 9 | 9, 12, 34, 55, 64, 67, 75, 76, 79 | 5 | 99.2 |
| 0.67 | 7 | 9, 55, 64, 67, 75, 76, 79 | 6 | 95.8 |
| 0.69 | 6 | 9, 64, 67, 75, 76, 79 | 7 | 99.2 |
| 0.70 | 5 | 64, 67, 75, 76, 79 | 8 | 96.6 |

Fig. 6.

A) Confusion matrices. B) ROC curve of the best performance of the ANN classifier on vulnerable (class 1) and resistant (class 2) teeth (219) of patients under radiotherapy

The area under the curve (AUC) of the ROC curve was 0.9886. As a final evaluation, Table 4 compares our approach with several recent methods.

Table 4.

Several NN approaches to either detect or predict caries in comparison to the proposed approach in this study

| Study | Number of teeth | Method | Prediction accuracy (%) | Detection accuracy (%) | AUC |
|---|---|---|---|---|---|
| Oliveira et al. (2011) [17] | 1084 | ANN | NA | 98.7 | NA |
| Lee et al. (2018) [31] | 2400 | CNN | NA | 86.3 | 0.884 |
| Patil et al. (2019) [32] | 120 images | ANN | NA | 95 | NA |
| Mohamed et al. (2020) [33] | 120 images | GONN* | 98.1 | NA | 0.92 |
| Falk et al. (2019) [34] | 226 | CNN | NA | 95 | 0.74 |
| ANN-Radiomics (ours) | 420 | ANN | 99.2 | 98.8 | 0.9886 (prediction), 0.9869 (detection) |

NA not applicable, CNN convolutional neural network

*Genetic optimized neural network (GONN); this method was used to predict fatigue, not caries

Discussion

Radiography combined with clinical findings is considered the conventional diagnostic approach for caries detection [10, 11]. A substantial proportion of head and neck cancer patients (20–40%) receiving radiation therapy develop caries during follow-up, with most cases occurring more than 6 months after the end of radiotherapy [35]. RRC starts as discrete enamel cracks and fractures and progresses to a brown or blackish discoloration [35]. Unfortunately, no method is currently available to predict RRC. To the best of our knowledge, this study is the first to investigate the detection and prediction of RRC with machine learning on panoramic radiographs of HNC patients treated with radiation therapy.

In the medical literature, several studies have investigated automatic caries detection in healthy patients, using various algorithms to learn the features and characteristics of carious areas [31, 32, 36]. Given the relatively low accuracy achieved in previous studies based on periapical radiographs, micro-CT, hyperspectral imaging, and fluorescence, we decided to build an image dataset of healthy teeth and RRC teeth to assess the PyRadiomics features. We chose panoramic radiography for this pilot study for several reasons. First, the panoramic radiograph provides valuable image information, and the PyRadiomics features can capture the demineralization of the enamel and dentin layers that the direct and indirect effects of radiation induce and that leads to RRC. Second, panoramic radiographs are widely employed in clinical practice. Third, they cost less than other dental images.

Two experienced dentists provided the image dataset, manually labeling the teeth and caries regions for PyRadiomics feature extraction. When selecting the best features to detect or predict RRC, the raw scores of each selected feature showed low discrimination between healthy teeth and RRC. We therefore used the weight of each of the best features, calculated in the ANN training step, as a model parameter.

Another essential step in achieving high accuracy was balancing the number of hidden layers against feature selection. Appendix Table 6 shows the tuned set of hyperparameters that achieved the best model fit. Classifier performance varied with the number of HL, reflecting a trade-off between the information lost through feature removal and the improved training of a deeper network. Consequently, we found a relationship between the number of selected features and the number of HL needed to reach the best accuracy. Eq. (1) expresses this balance; we increased the number of HL from 1 up to the maximum limit to reach the optimum accuracy.

The accuracy of the prediction process was 0.4 percentage points higher than that of detection (99.2% vs 98.8%). This may be explained by the morphological and statistical features of vulnerable vs resistant teeth compared with those of healthy vs carious teeth: the weights of the features selected for prediction were higher than those of the features selected for detection (see Tables 6 and 7 in the Appendix).

In this pilot study, we chose a single dichotomous characteristic to examine the feasibility of ML, combined with PyRadiomics features, for identifying and predicting RRC on panoramic radiographs. This strategy allowed us to understand which image features are altered and how ML behaves in patients with RRC. Another critical question frequently raised about ML is sample size, that is, whether a training and validation sample of fifteen HNC patients with 420 teeth is adequate to develop an ML algorithm with this method. Overfitting is a frequent problem in ANN approaches, and it can occur even when there is no real relationship between the input data and the outcome. It is a particular concern when an ANN is trained with many input parameters relative to the amount of data available to identify a relationship. In this study, restricting the inputs to the radiomic features with the largest ANN weights limited overfitting.
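The source does not describe how its training and validation sets were split. One way to probe whether fifteen patients and 420 teeth suffice, sketched below under that caveat, is to hold out all teeth of one patient at a time, so that teeth from the same mouth never appear in both training and test folds, and then compare mean training accuracy with mean held-out accuracy. The functions here and the `fit`/`predict` callbacks are hypothetical stand-ins for the ANN; the memorizing toy model in the usage lines only demonstrates how a large gap exposes overfitting.

```python
from typing import Any, Callable, List, Sequence, Tuple

Fold = Tuple[List[int], List[int]]


def leave_one_patient_out(patient_ids: Sequence[str]) -> List[Fold]:
    """Build (train, test) index lists, holding out every tooth of one patient per fold."""
    folds: List[Fold] = []
    for patient in sorted(set(patient_ids)):
        test = [i for i, pid in enumerate(patient_ids) if pid == patient]
        train = [i for i, pid in enumerate(patient_ids) if pid != patient]
        folds.append((train, test))
    return folds


def accuracy(model: Any, predict: Callable, X: Sequence, y: Sequence, idx: List[int]) -> float:
    """Fraction of samples in idx whose prediction matches the label."""
    preds = predict(model, [X[i] for i in idx])
    return sum(p == y[i] for p, i in zip(preds, idx)) / len(idx)


def train_test_gap(X, y, patient_ids, fit, predict) -> Tuple[float, float]:
    """Mean training and mean held-out accuracy over leave-one-patient-out folds.

    A large gap between the two numbers is a warning sign of overfitting.
    """
    train_accs, test_accs = [], []
    for train, test in leave_one_patient_out(patient_ids):
        model = fit([X[i] for i in train], [y[i] for i in train])
        train_accs.append(accuracy(model, predict, X, y, train))
        test_accs.append(accuracy(model, predict, X, y, test))
    return sum(train_accs) / len(train_accs), sum(test_accs) / len(test_accs)


def memorize(Xs, ys):
    # Toy "model": a lookup table of the training samples.
    return {tuple(x): label for x, label in zip(Xs, ys)}


def recall(table, Xs):
    # Perfect on seen samples, guesses class 0 on unseen ones.
    return [table.get(tuple(x), 0) for x in Xs]


X = [[i] for i in range(6)]
y = [0, 1, 1, 0, 1, 1]
patients = ["P1", "P1", "P2", "P2", "P3", "P3"]
train_acc, test_acc = train_test_gap(X, y, patients, memorize, recall)
print(train_acc, round(test_acc, 3))  # prints 1.0 0.333
```

Splitting by patient rather than by tooth matters because teeth from the same patient share exposure, anatomy, and imaging conditions, which would otherwise inflate held-out accuracy.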

Our results have the potential to be applied in clinical practice for several reasons. First, the method (ANN-PyRadiomics) can be extended to other image sources, such as computed tomography, cone-beam computed tomography, digital radiography, and intraoral radiography. Second, once the process is automated, which is the next step of our work, the AI tool can help oral radiologists and dentists detect RRC quickly and more accurately, improving the efficiency of health services. Third, the method makes it possible to develop a quantitative classification system for RRC based on numerical data obtained from PyRadiomics features. Fourth, with more patients and prospective follow-up, the ANN could be refined to detect non-cavitated caries, helping to preserve the tooth structure of HNC patients. Finally, an ANN could be trained to recognize other findings on panoramic dental images, such as cystic lesions, restorations, and root canal treatments.

At this institution, HNC patients undergoing RT are usually at an advanced stage, which limits the number of cases available for study. In addition, radiation caries spread quickly and cause many tooth losses, which further reduces the number of teeth available for investigation. This limitation led us to use more features to improve accuracy, which considerably increased the computation time. Moreover, intraoral radiography is usually the best examination for diagnosing caries. However, it would have required 14 periapical radiographs per patient before RT and 14 after RT, which was not feasible in our study and constitutes another limitation.

Conclusion

The present study is the first to develop a supervised machine learning system that detects and predicts RRC from panoramic dental radiographs of HNC patients treated with radiation, with high accuracy (98.8% and 99.2%, respectively). The neural network achieved high accuracy in selecting the best radiomic features to identify RRC on panoramic radiographs. Our findings and methodology may be applied to other imaging modalities to detect and predict the development of RRC, and they open avenues for future studies aimed at improving the dental care of HNC patients. However, because this was a pilot study, the next step is validation in a larger sample to confirm its high accuracy.

Appendix

Funding

This study was funded by the Brazilian governmental agencies CAPES (grant number 001) and FAPESP (grant number 2017/20598–9).

Declarations

Conflict of Interest

The authors declare no competing interests.

Footnotes

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Vanessa De Araujo Faria and Mehran Azimbagirad contributed equally to this work.

References

- 1. Bray F, Ferlay J, Soerjomataram I, Siegel RL, Torre LA, Jemal A. Global cancer statistics 2018: GLOBOCAN estimates of incidence and mortality worldwide for 36 cancers in 185 countries. CA: A Cancer Journal for Clinicians. 2018;68:394–424. doi: 10.3322/caac.21492.

- 2. Siegel RL, Miller KD, Jemal A. Cancer statistics, 2020. CA: A Cancer Journal for Clinicians. 2020;70:7–30. doi: 10.3322/caac.21590.

- 3. Blanchard P, Baujat B, Holostenco V, Bourredjem A, Baey C, Bourhis J, Pignon J-P. Meta-analysis of chemotherapy in head and neck cancer (MACH-NC): a comprehensive analysis by tumour site. Radiotherapy and Oncology. 2011;100:33–40. doi: 10.1016/j.radonc.2011.05.036.

- 4. Forastiere A, Koch W, Trotti A, Sidransky D. Head and neck cancer. New England Journal of Medicine. 2001;345:1890–1900. doi: 10.1056/NEJMra001375.

- 5. Epstein JB, Kish RV, Hallajian L, Sciubba J. Head and neck, oral, and oropharyngeal cancer: a review of medicolegal cases. Oral Surgery, Oral Medicine, Oral Pathology and Oral Radiology. 2015;119:177–186. doi: 10.1016/j.oooo.2014.10.002.

- 6. Cardoso M, Min M, Jameson M, Tang S, Rumley C, Fowler A, Estall V, Pogson E, Holloway L, Forstner D. Evaluating diffusion-weighted magnetic resonance imaging for target volume delineation in head and neck radiotherapy. Journal of Medical Imaging and Radiation Oncology. 2019;63:399–407. doi: 10.1111/1754-9485.12866.

- 7. Saloura V, Langerman A, Rudra S, Chin R, Cohen EEW. Multidisciplinary care of the patient with head and neck cancer. Surgical Oncology Clinics of North America. 2013;22:179–215. doi: 10.1016/j.soc.2012.12.001.

- 8. Santos FMD, Viani GA, Pavoni JF. Evaluation of survival of patients with locally advanced head and neck cancer treated in a single center. Brazilian Journal of Otorhinolaryngology. 2019. doi: 10.1016/j.bjorl.2019.06.006.

- 9. Du T, Xiao J, Qiu Z, Wu K. The effectiveness of intensity-modulated radiation therapy versus 2D-RT for the treatment of nasopharyngeal carcinoma: a systematic review and meta-analysis. PLOS ONE. 2019;14:e0219611. doi: 10.1371/journal.pone.0219611.

- 10. Sroussi HY, Epstein JB, Bensadoun RJ, Saunders DP, Lalla RV, Migliorati CA, Heaivilin N, Zumsteg ZS. Common oral complications of head and neck cancer radiation therapy: mucositis, infections, saliva change, fibrosis, sensory dysfunctions, dental caries, periodontal disease, and osteoradionecrosis. Cancer Medicine. 2017;6:2918–2931. doi: 10.1002/cam4.1221.

- 11. Deng J, Jackson L, Epstein JB, Migliorati CA, Murphy BA. Dental demineralization and caries in patients with head and neck cancer. Oral Oncology. 2015;51:824–831. doi: 10.1016/j.oraloncology.2015.06.009.

- 12. Hong CHL, Napeñas JJ, Hodgson BD, Stokman MA, Mathers-Stauffer V, Elting LS, Spijkervet FKL, Brennan MT; Dental Disease Section, Oral Care Study Group, Multinational Association of Supportive Care in Cancer (MASCC)/International Society of Oral Oncology (ISOO). A systematic review of dental disease in patients undergoing cancer therapy. Supportive Care in Cancer. 2010;18:1007–1021. doi: 10.1007/s00520-010-0873-2.

- 13. Walker MP, Wichman B, Cheng AL, Coster J, Williams KB. Impact of radiotherapy dose on dentition breakdown in head and neck cancer patients. Pract Radiat Oncol. 2011;1:142–148. doi: 10.1016/j.prro.2011.03.003.

- 14. Silva ARS, Alves FA, Antunes A, Goes MF, Lopes MA. Patterns of demineralization and dentin reactions in radiation-related caries. Caries Research. 2009;43:43–49. doi: 10.1159/000192799.

- 15. Abesi F, Mirshekar A, Moudi E, Seyedmajidi M, Haghanifar S, Haghighat N, Bijani A. Diagnostic accuracy of digital and conventional radiography in the detection of non-cavitated approximal dental caries. Iran J Radiol. 2012;9:17–21. doi: 10.5812/iranjradiol.6747.

- 16. White SC. Caries detection with xeroradiographs: the influence of observer experience. Oral Surgery, Oral Medicine, Oral Pathology. 1987;64:118–122. doi: 10.1016/0030-4220(87)90126-5.

- 17. Oliveira J, Proença H. Caries detection in panoramic dental X-ray images. In: Tavares JMRS, Jorge RMN, editors. Computational Vision and Medical Image Processing: Recent Trends. Dordrecht: Springer Netherlands; 2011. p. 175–190. doi: 10.1007/978-94-007-0011-6_10.

- 18. Choi JW. Assessment of panoramic radiography as a national oral examination tool: review of the literature. Imaging Sci Dent. 2011;41:1–6. doi: 10.5624/isd.2011.41.1.1.

- 19. Gray BM, Mol A, Zandona A, Tyndall D. The effect of image enhancements and dual observers on proximal caries detection. Oral Surgery, Oral Medicine, Oral Pathology and Oral Radiology. 2017;123:e133–e139. doi: 10.1016/j.oooo.2017.01.004.

- 20. Alpaydin E. Introduction to Machine Learning. 2nd ed. Adaptive Computation and Machine Learning. The MIT Press; 2010.

- 21. Hizukuri A, Nakayama R, Nara M, Suzuki M, Namba K. Computer-aided diagnosis scheme for distinguishing between benign and malignant masses on breast DCE-MRI images using deep convolutional neural network with Bayesian optimization. Journal of Digital Imaging. 2020. doi: 10.1007/s10278-020-00394-2.

- 22. Liu C, Pang M. Extracting lungs from CT images via deep convolutional neural network based segmentation and two-pass contour refinement. Journal of Digital Imaging. 2020. doi: 10.1007/s10278-020-00388-0.

- 23. Tufail AB, Ma YK, Zhang QN. Binary classification of Alzheimer’s disease using sMRI imaging modality and deep learning. Journal of Digital Imaging. 2020;33:1073–1090. doi: 10.1007/s10278-019-00265-5.

- 24. Hwang JJ, Jung YH, Cho BH, Heo MS. An overview of deep learning in the field of dentistry. Imaging Sci Dent. 2019;49:1–7. doi: 10.5624/isd.2019.49.1.1.

- 25. Cantu AG, Gehrung S, Krois J, Chaurasia A, Rossi JG, Gaudin R, Elhennawy K, Schwendicke F. Detecting caries lesions of different radiographic extension on bitewings using deep learning. Journal of Dentistry. 2020;100:103425. doi: 10.1016/j.jdent.2020.103425.

- 26. Fedorov A, Beichel R, Kalpathy-Cramer J, Finet J, Fillion-Robin J-C, Pujol S, Bauer C, Jennings D, Fennessy F, Sonka M. 3D Slicer as an image computing platform for the Quantitative Imaging Network. Magnetic Resonance Imaging. 2012;30:1323–1341. doi: 10.1016/j.mri.2012.05.001.

- 27. Pedregosa F, Varoquaux G, Gramfort A, Michel V, Thirion B, Grisel O, Blondel M, Louppe G, Prettenhofer P, Weiss R. Scikit-learn: machine learning in Python. arXiv preprint arXiv:1201.0490. 2012.

- 28. Demuth HB, Beale MH, De Jess O, Hagan MT. Neural Network Design. Martin Hagan; 2014.

- 29. Witten I, Frank E, Hall M. Data Mining: Practical Machine Learning Tools and Techniques. Morgan Kaufmann; 2011.

- 30. Schoonjans F. MedCalc. 2020. https://www.medcalc.org/contact.php

- 31. Lee JH, Kim D-H, Jeong SN, Choi SH. Detection and diagnosis of dental caries using a deep learning-based convolutional neural network algorithm. Journal of Dentistry. 2018;77:106–111. doi: 10.1016/j.jdent.2018.07.015.

- 32. Patil S, Kulkarni V, Bhise A. Algorithmic analysis for dental caries detection using an adaptive neural network architecture. Heliyon. 2019;5:e01579. doi: 10.1016/j.heliyon.2019.e01579.

- 33. Hashem M, Youssef AE. Teeth infection and fatigue prediction using optimized neural networks and big data analytic tool. Cluster Computing. 2020;23:1669–1682. doi: 10.1007/s10586-020-03112-3.

- 34. Schwendicke F, Elhennawy K, Paris S, Friebertshäuser P, Krois J. Deep learning for caries lesion detection in near-infrared light transillumination images: a pilot study. J Dent. 2020;92:103260. doi: 10.1016/j.jdent.2019.103260.

- 35. Palmier NR, Ribeiro ACP, Fonsêca JM, Salvajoli JV, Vargas PA, Lopes MA, Brandão TB, Santos-Silva AR. Radiation-related caries assessment through the International Caries Detection and Assessment System and the Post-Radiation Dental Index. Oral Surgery, Oral Medicine, Oral Pathology and Oral Radiology. 2017;124:542–547. doi: 10.1016/j.oooo.2017.08.019.

- 36. Oliveira LB, Massignan C, Oenning AC, Rovaris K, Bolan M, Porporatti AL, De Luca Canto G. Validity of micro-CT for in vitro caries detection: a systematic review and meta-analysis. Dentomaxillofacial Radiology. 2019:20190347. doi: 10.1259/dmfr.20190347.