Abstract

Political misinformation, astroturfing and organised trolling are online malicious behaviours with significant real-world effects that rely on making the voices of the few sounds like the roar of the many. These are especially dangerous when they influence democratic systems and government policy. Many previous approaches examining these phenomena have focused on identifying campaigns rather than the small groups responsible for instigating or sustaining them. To reveal latent (i.e. hidden) networks of cooperating accounts, we propose a novel temporal window approach that can rely on account interactions and metadata alone. It detects groups of accounts engaging in various behaviours that, in concert, come to execute different goal-based amplification strategies, a number of which we describe, alongside other inauthentic strategies from the literature. The approach relies upon a pipeline that extracts relevant elements from social media posts common to the major platforms, infers connections between accounts based on criteria matching the coordination strategies to build an undirected weighted network of accounts, which is then mined for communities exhibiting high levels of evidence of coordination using a novel community extraction method. We address the temporal aspect of the data by using a windowing mechanism, which may be suitable for near real-time application. We further highlight consistent coordination with a sliding frame across multiple windows and application of a decay factor. Our approach is compared with other recent similar processing approaches and community detection methods and is validated against two politically relevant Twitter datasets with ground truth data, using content, temporal, and network analyses, as well as with the design, training and application of three one-class classifiers built using the ground truth; its utility is furthermore demonstrated in two case studies of contentious online discussions.

Keywords: Coordinated amplification, Coordinated behaviour, Online social networks, Information campaigns

Introduction

Online social networks (OSNs) have established themselves as flexible and accessible systems for activity coordination and information dissemination. This benefit was illustrated during the Arab Spring (Carvin 2012) but inherent dangers are increasingly apparent in ongoing political interference and disinformation (Howard and Kollanyi 2016; Ferrara 2017; Keller et al. 2017; Neudert 2018; Singer and Brooking 2019; Nimmo et al. 2020). Modern Strategic Information Operations (SIOs) are participatory activities, which aim to use their audiences to amplify their desired narratives, not just receive it (Starbird et al. 2019). The widespread use of social media for political communication and its identity-obscuring nature have made it a prime target for politically-driven influence, both legitimate and illegitimate. Through cyclical reporting (i.e. social media feeding stories and narratives to traditional news media, which then sparks more social media activity), social media users can unknowingly become “unwitting agents” as “sincere activists” of concerted operations (Benkler et al. 2018; Starbird and Wilson 2020). The use of political bots and trolls to influence the framing and discussion of issues in the mainstream media (MSM) remains prevalent (Bessi and Ferrara 2016; Woolley 2016; Woolley and Guilbeault 2018; Rizoiu et al. 2018; Cresci 2020). The use of bots and sockpuppet accounts to amplify individual voices above the crowd, sometimes referred to as the megaphone effect, requires coordinated action and a degree of regularity that may leave traces in the digital record.

Relevant research has focused on high-level analyses of campaign detection and classification (Lee et al. 2013; Varol et al. 2017; Alizadeh et al. 2020), the identification of botnets and other dissemination groups (Vo et al. 2017; Woolley and Guilbeault 2018), and coordination at the community level (Kumar et al. 2018; Hine et al. 2017; Cresci 2020). Some have considered generalised approaches to social media analytics (e.g. Weber 2019; Graham et al. 2020; Nizzoli et al. 2021; Pacheco et al. 2021), but unanswered questions regarding the clarification of coordination strategies and their detection remain. Forensic studies of SIOs and other influence campaigns using these strategies (e.g. Benkler et al. 2018; Jamieson 2020; Nimmo et al. 2020) currently require significant human input to reveal the covert ties underpinning them, and could benefit greatly from enhanced automation.

In this work, we expand upon the novel approach to detect groups engaging in potentially coordinated amplification activities, revealed through anomalously high levels of coincidental behaviour, which we presented at ASONAM’20 (Weber and Neumann 2020). Links between the group members are inferred from behaviours that, when used intentionally, are used to execute a number of identifiable coordination strategies. We use a range of techniques to validate our new approach on two relevant datasets, as well as comparison with ground truth and a synthesised dataset, and show it successfully identifies coordinating communities.

Our approach infers ties between accounts to construct latent coordination networks (LCNs) of accounts, using criteria specific to different coordination strategies, which are based on features common to major OSNs. The accounts may not be directly connected, thus we use the term ‘latent’ to mean ‘hidden’ when describing these connections. The inference of connections is performed solely on the accounts’ interactions, i.e. not their content or friending/following behaviour, only metadata and temporal information, though it could incorporate them.

Highly coordinating communities (HCCs) are then detected and extracted from the LCN. We propose a variant of focal structures analysis (FSA, Şen et al. 2016) to do this, in order to take advantage of FSA’s focus on finding influential sets of nodes in a network while also reducing the computational complexity of the algorithm. A window-based approach is used to enforce temporal constraints.

The following research questions guided our evaluation:

RQ1: How can HCCs be found in an LCN?

RQ2:How do the discovered communities differ?

RQ3: Are the HCCs internally or externally focused?

RQ4: How consistent is the HCC messaging?

RQ5: What evidence is there of consistent coordination?

RQ6: How well can HCCs in one dataset inform the discovery of HCCs in another?

This paper expands upon Weber and Neumann (2020) by providing further methodological detail and experimental validation, and case studies in which the technique is applied to new real-world Twitter datasets relating to contentious political issues, as well as consideration of algorithmic complexity and comparison with several similar techniques. Prominent among the extra validation provided is the use of machine learning classifiers to show that our datasets contain similar coordination to our ground truth, and the application of a sliding frame across the time windows as a way to search for consistent coordination.

This paper provides an overview of relevant literature, followed by a discussion of online coordination strategies and their execution. Our approach is then explained, and its experimental validation is presented. Following the validation, the algorithmic complexity and performance of the technique are presented, and two case studies are explored, demonstrating the utility of the approach with real-world politically relevant datasets, and we compare our technique to those of Pacheco et al. (2021), Graham et al. (2020), Nizzoli et al. (2021) and Giglietto et al. (2020b).

A motivating example

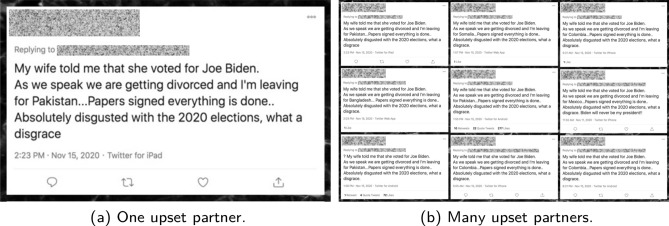

In the aftermath of the 2020 US Presidential election, a data scientist noticed a pattern emerging on Twitter.1 Figure 1a shows a tweet by someone who was so upset with their partner voting for Joe Biden in the election that they decided to divorce them immediately and move to Pakistan (in the midst of the COVID-19 pandemic). This might seem an extreme reaction, but the interesting thing was that the person was not alone. The researcher had identified dozens of similar, but not always identical, tweets by people leaving for other cities but for the same reason (Fig. 1b). Analysis of these accounts also revealed they were not automated accounts. This kind of pattern of tweeting identical text is sometimes referred to as “copypasta” and can be used to give the appearance of a genuine grassroots movement on a particular issue. It had been previously used by ISIS terrorists as they approached the city of Mosul, Iraq, in 2014, which they occupied for several years after the local forces believed a giant army was invading based on the level of relevant online activity (Brooking and Singer 2016).

Fig. 1.

Copypasta tweets noticed in the aftermath of the 2020 US Presidential election, which may be a coordinated campaign to undermine confidence in American society’s ability to accept electoral outcomes, or may just be a prank similar to a flashmob

It is unclear whether this “copypasta” campaign is part of a deliberate SIO, designed to damage trust in the electoral system and ability of Americans to accept the loss of a preferred political party in elections, or simply a group of like-minded jokers starting a viral gag or engaging in a kind of flashmob. At the very least, it is important to be able to identify which accounts are participating in the event, and how they are coordinating their actions.

Online information campaigns and related work

Social media has been increasingly used for communication in recent years (particularly political communication), and so the market has followed, with media organisations using it for cheap, wide dissemination of their products and consumers increasingly looking to it for news (Shearer and Grieco 2019). Over that same time period, people have begun to explore how to exploit the features of the internet and social media that bring us benefits: the ability to target marketing to specific audiences that connects businesses with the most receptive customers (e.g. Kosinski et al. 2013) also enables highly targeted non-transparent political advertising (Woolley and Guilbeault 2018; Singer and Brooking 2019) and the ability to expose people to propaganda and recruit them to extremist organisations (Badawy and Ferrara 2018; Benkler et al. 2018; The Soufan Center 2021); the anonymity that supports the voiceless in society to express themselves also enables trolls to attack others without repercussions (Hine et al. 2017; Burgess and Matamoros-Fernández 2016); and the automation that enables news aggregators also facilitates social and political bots (Ferrara et al. 2016; Woolley 2016; Cresci 2020). In summary, targeted marketing and automation coupled with anonymity provide the tools required for potentially significant influence in the online sphere, perhaps enough to swing an election, but certainly enough to be associated with real-world violence (The Soufan Center 2021; Karell et al. 2021).

Effective influence campaigns relying on these capabilities will somehow coordinate the actions of their participants. Early work on the concept of coordination by Malone and Crowston (1994) described it as the dependencies between the tasks and resources required to achieve a goal. One task may require the output of another task to complete. Two tasks may share, and require exclusive access to, a resource or they may both need to use the resource simultaneously.

At the other end of the spectrum, sociological studies of influence campaigns can reveal their intent and how they are conducted, but they consider coordination at a much higher level. Starbird et al. (2019) highlight three kinds of campaigns: orchestrated, centrally controlled campaigns that are run from the top down (e.g. paid teams, Chen 2015; King et al. 2017); cultivated campaigns that infiltrate existing issue-based movements to drive them to particular extreme positions (e.g. encouraging political violence during elections, Nimmo et al. 2020; Jamieson 2020; The Soufan Center 2021); and emergent campaigns arising from enthusiastic communities centred around a shared ideology (e.g. conspiracy groups and other fringe movements). Though their strategies differ, they use the same online interactions as normal users (e.g. posts, shares, mentions, hashtags, URLs), but their patterns differ. Fundamentally, however, they rely on influencing others by spreading an agenda-driven message or narrative.

At the scale of nation states, multiple disinformation campaigns may be run as part of an operation, each with different targets and different intended outcomes. The 2016 US Presidential election has received significant academic (as well as political and diplomatic) attention, and deep analysis of the interference by Russia has revealed a variety of such campaigns were employed to promote Donald Trump, detract from Hilary Clinton, sow doubt in the country’s democratic system and generally exacerbate divisions in society (Benkler et al. 2018; Mueller 2018; Jamieson 2020). Furthermore, much of the social media activity in particular was conducted by accounts made to look like average Americans, including “personable swing-voters” (p. 134, Jamieson 2020) and comparatively simple analyses of individual accounts over long periods has revealed how they were used to build audiences susceptible to their narratives (Dawson and Innes 2019). America is clearly not the only target—campaigns have been directed across any national border as well as within (Woolley and Howard 2018; Singer and Brooking 2019; Nimmo et al. 2020). Many of the analyses mentioned in these works rely on direct connections between entities (e.g. Benkler et al.’s mentions of articles and YouTube videos and Nimmo et al’s follower networks, and studies of retweet and mention networks in chapters of Woolley and Howard’s book), but Jamieson makes it clear that covert or at least indirect behaviour-related connections were a key part of the Russian operation during the 2016 US presidential election.

Disinformation campaigns effectively trigger human cognitive heuristics, such as individual and social biases to believe what we hear first (anchoring) and what we hear frequently and can remember easily (availability cascades) (Tversky and Kahneman 1973; Kuran and Sunstein 1999); thus the damage is already done by the time lies are exposed. This is especially true if they are promoted under the guise of authority, such as from accounts purporting to be media outlets, like @TodayPittsburgh or @KansasDailyNews (p. 188, Miller 2018). Persuasive messaging also relies on emotion, especially fear, and appeals to religion (Jamieson 2020), and have been effective even when such claims border on the ridiculous and conspiratorial (The Soufan Center 2021). Recent experiences of false information moving beyond social media during Australia’s 2019–2020 bushfires highlight that identifying these campaigns as they occur can aid OSN monitors and the media to better inform the public (Graham and Keller 2020; Weber et al. 2020).

In between task level coordination and entire SIOs, at the level of social media interactions, as demonstrated by Graham and Keller (2020), we can directly observe the online actions and effects of such activities, and infer links between accounts based on pre-determined criteria. Relevant efforts in computer science have focused on a variety of methods and domains (see Table 1). These efforts have uncovered a new field of research: the computer science study of the “orchestrated activities” of accounts in general, as Grimme et al. (2018) put it, regardless of their degree of automation (Cresci et al. 2017; Alizadeh et al. 2020; Nizzoli et al. 2021; Vargas et al. 2020). It must be noted that bot activity, even coordinated activity, may be entirely benign and even useful (Ferrara et al. 2016; Graham and Ackland 2017).

Table 1.

Detecting inauthentic behaviour in the computer science literature

| Automation | Ferrara et al. (2016), Davis et al. (2016), Grimme et al. (2017), Cresci (2020) |

| Campaigns | |

| —By content | Lee et al. (2013), Assenmacher et al. (2020), Alizadeh et al. (2020), Graham et al. (2020) |

| —By URL | Ratkiewicz et al. (2011), Cao et al. (2015), Giglietto et al. (2020b), Broniatowski (2021), Yu (2021) |

| —By hashtag | Ratkiewicz et al. (2011), Burgess and Matamoros-Fernández (2016), Varol et al. (2017), Weber et al. (2020) |

| Synchronicity | Chavoshi et al. (2017), Hine et al. (2017), Nasim et al. (2018), Mazza et al. (2019), Pacheco et al. (2020), Magelinski et al. (2021) |

| Communities | Vo et al. (2017), Morstatter et al. (2018), Gupta et al. (2019) |

| Political bots | Bessi and Ferrara (2016), Woolley (2016), Rizoiu et al. (2018), Woolley and Guilbeault (2018) (particularly embeddedness and organisation) |

Though some studies have observed the existence of strategic behaviour in and between online groups (e.g. Keller et al. 2017; Kumar et al. 2018; Hine et al. 2017; Keller et al. 2019; Giglietto et al. 2020b; Broniatowski 2021), the challenge of identifying a broad range of their interaction strategies and their underpinning execution methods remains to be fully explored, especially as new strategies are constantly be devised (Nimmo et al. 2020).

Inferring social networks from OSN data requires attendance to the temporal aspect to understand information (and influence) flow and degrees of activity (Holme and Saramäki 2012). Real-time processing of OSN posts can enable tracking narratives via text clusters (Assenmacher et al. 2020), but to process networks requires graph streams (McGregor 2014) or window-based pipelines (e.g. Weber 2019), otherwise processing is limited to post-collection activities (Graham et al. 2020; Alizadeh et al. 2020; Vargas et al. 2020; Pacheco et al. 2021).

This work contributes to the identification of interaction-based strategic coordination behaviours observable over relatively short time frames, along with a general technique to enable detection of groups using them. As such, this enhances the toolbox of techniques available to higher level explorations of information campaigns and operations (e.g. Benkler et al. 2018; Jamieson 2020; Nimmo et al. 2020; The Soufan Center 2021).

Coordinated amplification strategies

Influencing others online, especially on political and social issues, relies on two primary mechanisms to maximise the reach of a given narrative thus amplifying its effect: mass dissemination and engagement. For example, an investigation of social media activity following UK terrorist attacks in 20172 identified accounts promulgating contradictory narratives, inflaming racial tensions and simultaneously promoting tolerance to sow division. By engaging aggressively, the accounts drew in participants who then spread the message.

Mass dissemination aims to maximise audience, to convince through repeated exposure and, in the case of malicious use, to cause outrage, polarisation and confusion, or at least attract attention to distract from other content.

Engagement is a form of dissemination that solicits a response. It relies on targeting individuals or communities through mentions, replies and the use of hashtags as well as rhetorical approaches that invite responses (e.g. inflammatory comments or, as present in the UK terrorist example above and observed by Nimmo et al. (2020), pleas to highly popular accounts).

A number of online coordination strategies have been observed in the literature making use of both dissemination and engagement to amplify their effect, including specifically those identified in Table 2. These in particular are all potentially observable in short periods of online activity, e.g. a political debate (Rizoiu et al. 2018). Other coordinated behaviour observed in the literature require some ability to identify accounts of interest and track them over extended periods of time. Metadata shuffling involves groups of accounts hiding through changing and even swapping their names and other metadata (Mariconti et al. 2017; Ferrara 2017). Related to this is narrative switching, in which an account suddenly deletes all their posts and then, potentially after a significant period of time, starts posting about different themes and issues (perhaps also having changed their account’s appearance) (Dawson and Innes 2019). Dawson and Innes (2019) also observed changes in accounts’ follower counts to identify the purchase of fake followers and follower fishing (used to boost reputation metrics), both of which require records of potentially lengthy periods of activity. Dawson and Innes (2019) also use synchronicity to identify groups temporally correlated through activity, but neglect to describe their specific method.

Table 2.

Coordinated amplification strategies

| Pollution | Flooding a community with repeated or objectionable content, causing the OSN to shut it down |

| Observed by | (Ratkiewicz et al. 2011; Woolley 2016; Hegelich and Janetzko 2016; Hine et al. 2017; Nasim et al. 2018; Fisher 2018; Mariconti et al. 2019) |

| Boost | Heavily reposting or duplicating content to make it appear popular |

| Observed by | (Ratkiewicz et al. 2011; Cao et al. 2015; Varol et al. 2017; Vo et al. 2017; Gupta et al. 2019; Keller et al. 2019; Graham et al. 2020; Assenmacher et al. 2020) |

| Bully | Groups engaging in organised harassment of an individual or community. |

| Observed by | (Ratkiewicz et al. 2011; Burgess and Matamoros-Fernández 2016; Hine et al. 2017; Kumar et al. 2018; Datta and Adar 2019; Mariconti et al. 2019) |

Different behaviour primitives, such as those in Table 3, can be used to execute the amplification strategies mentioned. Many of these behaviour primitives have analogies on multiple OSNs, so techniques devised to detect them on one could be employed effectively on others. Dissemination can be carried out by reposting, using hashtags, or mentioning highly connected individuals in the hope they spread a message further. Accounts doing this covertly will avoid direct connections, and thus inference is required for identification. Giglietto et al. (2020b) propose detecting anomalous levels of coincidental URL use as a way to do this; we expand this approach to other interactions.

Table 3.

Social media interaction equivalents

| OSN | POST | REPOST | REPLY | MENTION | TAG | LIKE |

|---|---|---|---|---|---|---|

| Tweet | Retweet | Reply tweet | @Mention | #Hashtags | Favourite | |

| Post | Share | Comment | Mention | #Hashtag | Reactions | |

| Tumblr | Post | Repost | Comment | @Mention | #Tag | Heart |

| Post | Crosspost | Comment | /u/Mention | Subreddit | Up/down vote |

Some strategies require more sophisticated detection: detecting bullying through dogpiling (e.g. as happened during the #GamerGate incident, studied by Burgess and Matamoros-Fernández (2016), or to those posing questions to public figures at political campaign rallies3) requires collection of (mostly) entire conversation trees, which, while trivial to obtain on forum-based sites (e.g. Facebook and Reddit), are difficult on stream-of-post sites (e.g. Twitter,4 Parler and Gab). As mentioned, detecting metadata shuffling requires long term collection on broad issues to detect the same accounts being active in different contexts, and other follower and narrative analyses can also require extended collection periods.

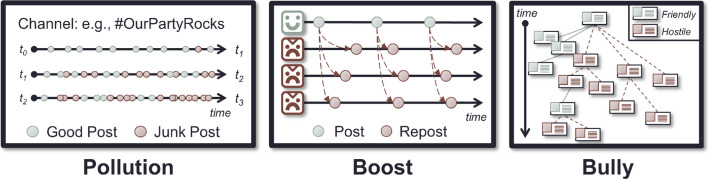

Figure 2 shows representations of the strategies highlighted above, offering clues about how they might be identified. To detect Pollution, we match the authors of posts mentioning the same (hash)tag. This way we can reveal not just those who are using the same hashtags with significantly greater frequency than the average but also those who use more hashtags than is typical. To detect a variant of Boost, we match authors reposting the same original post, and can explore which sets of users not only repost more often than the average, but those who repost content from a relatively small pool of accounts. Alternatively, we can match authors who post identical, or near-identical text, as seen in our motivating example (Sect. 1.1); Graham et al. (2020) have recently developed open-sourced methods for this kind of matching, which have previously been used for campaign analysis (Lee et al. 2013). Considering reposts like retweets, however, it is unclear whether platforms deprioritise them when responding to stream filtering and search requests, so special consideration may be required when designing data collection plans. Finally, to detect Bully, we match authors whose replies are transitively rooted in the same original post, thus they are in the same conversation. This requires collection strategies that result in complete conversation trees, and also stipulates a somewhat strict definition of ‘conversation’. On forum-based OSNs, the edges of a ‘conversation’ may be relatively clear: by commenting on a post, one is ‘joining’ the ‘conversation’. Delineating smaller sets of interactions within all the comments on a post to find smaller conversations may be achieved by regarding each top-level comment and its replies as a conversation, but this may not be sufficient. Similarly, on stream-based OSNs, a conversation may be engaged in by a set of users if they all mention each other in their posts, as it is not possible to reply to more than one post at a time.

Fig. 2.

Patterns matching the mentioned coordinated amplification strategies. Green posts and avatars are benign, whereas red or maroon ones are malign

Problem statement

A clarification of our challenge at this point is:

To identify groups of accounts whose behaviour, though typical in nature, is anomalous in degree.

There are two elements to this. The first is discovery. How can we identify not just behaviour that appears more than coincidental, but also the accounts responsible for it? That is the topic of the next section. The second element is validation. Once we identify a group of accounts via our method, what guarantee do we have that the group is a real, coordinating set of users? This is especially difficult given inauthentic behaviour is hard for humans to judge by eye (Cresci et al. 2017; Benkler et al. 2018; Jamieson 2020).

Methodology

The major OSNs share a number of features, primarily in how they permit users to interact with each other, digital media and the platforms (e.g. Table 3); hashtags, URLs, and mentions work much the same way across many OSNs. By focusing on these commonalities, we can develop approaches that generalise across OSNs.

Traditional social network analysis relies on long-standing relationships between actors (Wasserman and Faust 1994; Borgatti et al. 2009). On OSNs this requirement is typically fulfilled by friend/follower relations. These are expensive to collect and quickly degrade in meaning if not followed with frequent activity. By focusing on active interactions, however, it is possible to understand not just who is interacting with whom, but to what degree. This provides a basis for constructing (or inferring) social networks, acknowledging they may be transitory.

LCNs are built from inferred links between accounts. Supporting criteria relying on interactions alone, as observed in the literature (Ratkiewicz et al. 2011; Keller et al. 2019), include retweeting the same tweet (co-retweet), using the same hashtags (co-hashtag) or URLs (co-URL), or mentioning the same accounts (co-mention). To these we add joining the same ‘conversation’ (a tree of reply chains with a common root tweet) (co-conv). As mentioned earlier, other ways to link accounts rely on similar or identical content, metadata and temporal patterns (see Sect. 2). The criteria underpinning LCN links may be a combination of these and other interaction types.

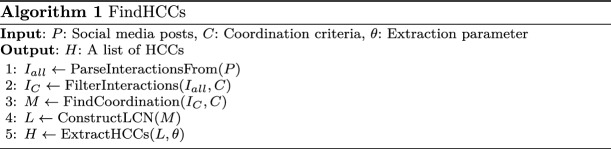

The LCN/HCC pipeline

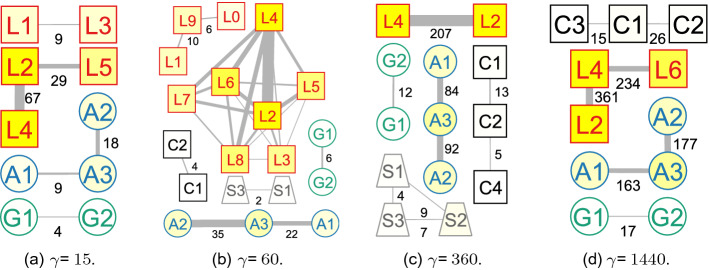

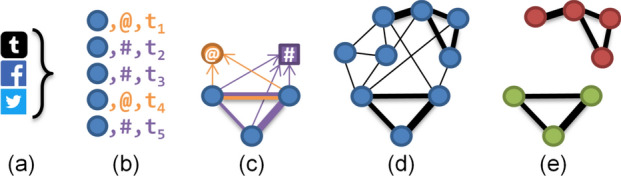

The key steps to extract HCCs from raw social media data are shown in Fig. 3 and documented in Algorithm 1. The example in Fig. 3 is explained after the algorithm has been explained, in Sect. 3.1.2.

Fig. 3.

Conceptual LCN construction and HCC discovery process

Step 1. Convert social media posts P to common interaction primitives, . This step removes extraneous data and provides an opportunity for the fusion of sources by standardising all interactions (thus including only the elements required for the coordination being sought).

Step 2. From , filter the interactions, , relevant to the set of criteria (e.g. co-mentions and co-hashtags).

Step 3. Infer links between accounts given C, ensuring links are typed by criterion. The result, M, is a collection of inferred pairings. The count of inferred links between accounts u and v due to criterion is .

Step 4. Construct an LCN, L, from the pairings in M. This network is a set of vertices V representing accounts connected by undirected weighted edges E of inferred links. These edges represent evidence of different criteria linking the adjacent vertices. The weight of each edge between vertices representing accounts u and v for each criterion c is , and is equal to .

Most community detection algorithms will require the multi-edges be collapsed to single edges. The edge weights are incomparable (e.g. retweeting the same tweet is not equivalent to using the same hashtag), however, for practical purposes, the inferred links can be collapsed and the weights combined for cluster detection using a simple summation, e.g. Eq. (1), or a more complex process like varied criteria weighting.

| 1 |

Some criteria may result in highly connected LCNs, even if its members never interact directly. Not all types of coordination will be meaningful—people will co-use the same hashtag repeatedly if that hashtag defines the topic of the discussion (e.g. #auspol for Australian politics), in which case it is those accounts who co-use it significantly more often than others which are of interest. If required, the final step filters out these coincidental connections.

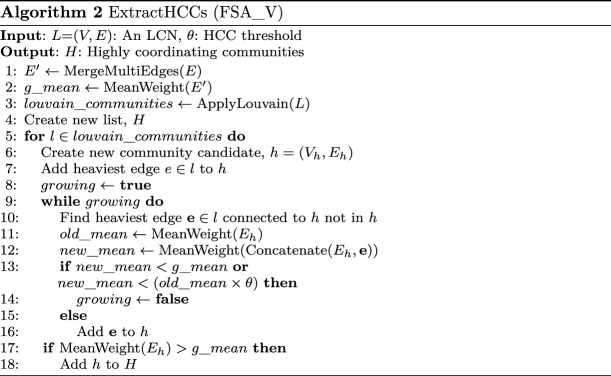

Step 5. Identify the highest coordinating communities H in L (Fig. 3e) using a suitable community detection algorithm, such as Blondel et al. (2008)’s Louvain algorithm (used by Morstatter et al. 2018; Nasim et al. 2018; Vosoughi et al. 2018; Nizzoli et al. 2021), k nearest neighbour (kNN) (used by Cao et al. 2015), edge weight thresholding (used by Lee et al. 2013; Pacheco et al. 2021), or FSA (Şen et al. 2016), an algorithm from the Social Network Analysis community that focuses on extracting sets of highly influential nodes from a network. Depending on the size of the dataset under consideration, algorithms suitable for very large networks may need to be considered (Fang et al. 2019). Some algorithms may not require the LCN’s multi-edges to be merged (e.g. Bacco et al. 2017). We present a variant of FSA (Şen et al. 2016), FSA_V (Algorithm 2), designed to take advantage of FSA’s benefits while addressing some of its costs. FSA does not just divide a network into communities (so that every node belongs to a community), but extracts only subsets of adjacent nodes that form influential communities within the overall network. FSA_V reduces the computational complexity introduced by FSA, which recursively applies Louvain to divide the network into smaller components and then, under certain circumstances, stitches them back together. The reason for this is to make FSA_V more suitable for application to a streaming scenario, in which execution speed is a priority.

Similar to FSA, FSA_V initially divides L into communities using the Louvain algorithm but then builds candidate HCCs within each, starting with the ‘heaviest’ (i.e. highest weight) edge (representing the most evidence of coordination). It then attaches the next heaviest edge until the candidate’s mean edge weight (MEW) is no less than () of the previous candidate’s MEW, or is less than L’s overall MEW. In testing, edge weights appeared to follow a power law, so was introduced to identify the point at which the edge weight drops significantly; requires tuning. A final filter ensures no HCC with a MEW less than L’s is returned. Unlike in FSA, recursion is not used, nor stitching of candidates, resulting in a simpler algorithm.

This algorithm prioritises edge weights while maintaining an awareness of the network topology by examining adjacent edges, something ignored by simple edge weight filtering. Our goal is to find sets of strongly coordinating users, so we prioritise strongly tied communities while still acknowledging coordination can also be achieved with weak ties (e.g. 100 accounts paid to retweet one tweet).

The complexity of the entire pipeline is low order polynomial due primarily to the pairwise comparison of accounts to infer links in Step 3, which can be constrained by window size when addressing the temporal aspect. For large networks (i.e. networks with many accounts), that may be too costly to be of practical use; the solution for this relies on the application domain inasmuch as it either requires a tighter temporal constraint (i.e. a smaller time window) or tighter stream filter criteria, causing a reduction in the number of accounts, potentially along with a reduction in posts. Algorithmic complexity is discussed in Sect. 3.3.

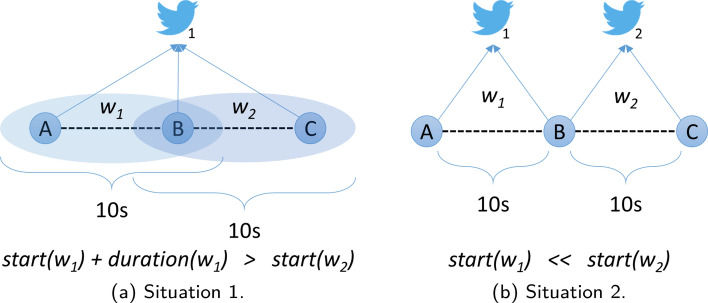

Addressing the temporal aspect

Temporal information is a key element of coordination, and thus is critical for effective coordination detection. Frequent posts within a short period may represent genuine discussion or deliberate attempts to game trend algorithms (Grimme et al. 2018; Varol et al. 2017; Assenmacher et al. 2020). We treat the post stream as a series of discrete windows to constrain detection periods. An LCN is constructed from each window (Step 4), and these are aggregated and mined for HCCs (Step 5). We assume posts arrive in order, and assign them to windows by timestamp.

A brief example

Figure 3 gives an example of searching for co-hashtag and co-mention coordination across Facebook, Twitter, and Tumblr posts. The posts are converted to their interaction primitives in Step 1, shown in Fig. 3a. The information required from each post is the identity of the post’s author,5 the timestamp of the post for addressing the temporal aspect, and the hashtag or account mentioned (there may be many, resulting in separate records for each). This is done in Fig. 3b, which shows the filtered mentions (in orange) and hashtag uses (in purple), ordered according to timestamp.

Step 3 in Fig. 3c involves searching for evidence of coordination through searching for our target coordination strategies through pairwise examination of accounts and their interactions. Here, three accounts co-use a hashtag while only two of them co-mention another account.

By Step 4 in Fig. 3d, the entire LCN has been constructed, and then Fig. 3e shows its most highly coordinating communities.

As mentioned above, to account for the temporal aspect, the LCNs produced for each time window in Fig. 3d can be aggregated and then mined for HCCs, or HCCs could be extracted from each window’s LCN and then they can be aggregated, or analysed in near real-time, as dictated by the application domain.

Opportunities for fusion

As mentioned above, many of the interaction we consider have analogies on multiple OSNs, so a technique applied to Twitter, for example, may also be effective on Reddit or Tumblr. Misinformation was widely disseminated over Facebook, Tiktok, Twitter, and WhatsApp during the 2021 Israeli/Palestinian conflict as links to misattributed videos, images of blocks of text, and audio files.6 Our technique could be used to study coordinated link (i.e. URL) sharing across these platforms in an appropriate time period, similar to the work of Giglietto et al. (2020b) and Broniatowski (2021)—all that is required from each platform’s posts are the identity of the posting account, the link posted7 and the post’s timestamp. The identities of accounts posting the URLs will differ between platforms, of course, but this technique may also provide a mechanism for cross-platform identity matching, associating accounts that frequently post the same or similar content. Nimmo et al. (2020) essentially performed this task manually by searching for the same article content across different platforms, and then confirming similarity between the account names found. Our technique could be incorporated into the researcher’s workflow to make this task easier by searching for duplication of text, and automatically linking instances where it is found, and then highlighting those connections.

Validation methods

As mentioned in Sect. 2.1, the second element of addressing our research challenge is that of validation. Once HCCs have been discovered, it is necessary to confirm that what has been found are examples of genuine coordinating groups. This step is required before addressing the further question of whether the coordination is authentic (e.g. grassroots activism) or inauthentic (e.g. astroturfing).

Datasets

In addition to relevant datasets, we make use of a ground truth (GT), in which we expect to find coordination (cf., Keller et al. 2017; Vargas et al. 2020). By comparing the evidence of coordination (i.e. HCCs) we find within the ground truth with the coordination we find in the other datasets, we can develop confidence that: (a) our method finds coordination where we expect to find it (in the ground truth); and (b) our method also finds coordination of a similar type where it was not certain to exist. Furthermore, to represent the broader population (which is not expected to exhibit coordination), similar to Cao et al. (2015), we create a randomised HCC network from the non-HCC accounts in a given dataset, and then compare its HCCs with the HCCs that had been discovered by our method.

Membership comparison

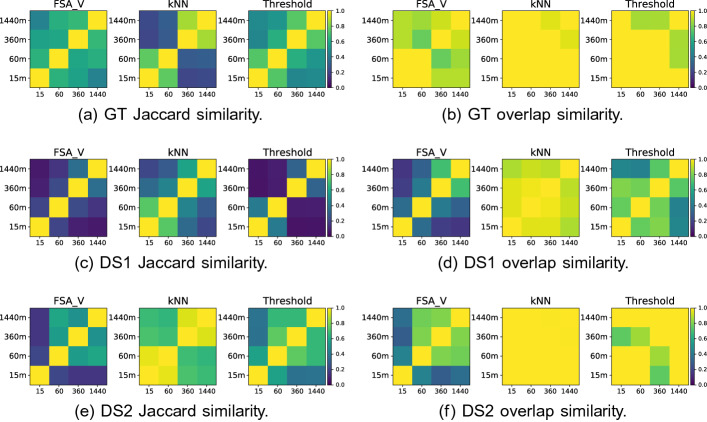

While our primary factors include the HCC extraction method (using FSA_V, kNN, or thresholds), the temporal window size, , and the strategy being targeted (Boost, Pollution or Bully), our interest prioritises the grouping of accounts over how they are individually connected, and so for each pair of results we compare the number, edge count and membership of the HCCs discovered. These figures provide context for the degree of overlap between the HCC members identified under different conditions (i.e. factor values). We use Jaccard and overlap similarity measures (Verma and Aggarwal 2020) to compare the accounts appearing in each (ignoring their groupings) and render them as heatmaps. The Jaccard similarity coefficient of two sets of items, X and Y, is:

| 2 |

If there is significant imbalance in the sizes of X and Y, then their similarity may be low, even if one is a subset of the other. An alternative measure, the Overlap coefficient (Verma and Aggarwal 2020), takes this imbalance into account by using the size of the smaller of the two sets as the denominator:

| 3 |

In a circumstance such as ours, it is unclear whether a longer time window will garner more results after HCC extraction is applied. The Jaccard and overlap coefficients can be used to quickly understand two facts about the sets of accounts identified as HCC members with different values of :

Is one set a subset of the other? If so, the overlap coefficient will reach 1.0, while the Jaccard coefficient will not if the two sets differ in size. If they are disjoint, the overlap coefficient will be 0.0 along with the Jaccard coefficient.

Do the sets differ in size? If the sets are different sizes, but one is a subset of the other, the overlap coefficient will hide this fact, while the Jaccard coefficient will expose it. If both coefficients have values close to 0.0, then the sets are clearly different in membership and potentially also in size. If the coefficient values are very close, then the sets are close in size, because the denominators are similar in size, meaning , but this will only occur if they share many members (i.e. is high).

Alongside the heatmaps, we provide exact numbers of the accounts which are common to the discovered HCCs, to better inform the reader of the overall influence of the particular factor(s) being varied. For example, by being able to compare the results for each value for in one visualisation, it is possible to see the progression of the coefficient values as the window size increases (in both the provided raw numbers and by the colour scale in the heatmaps).

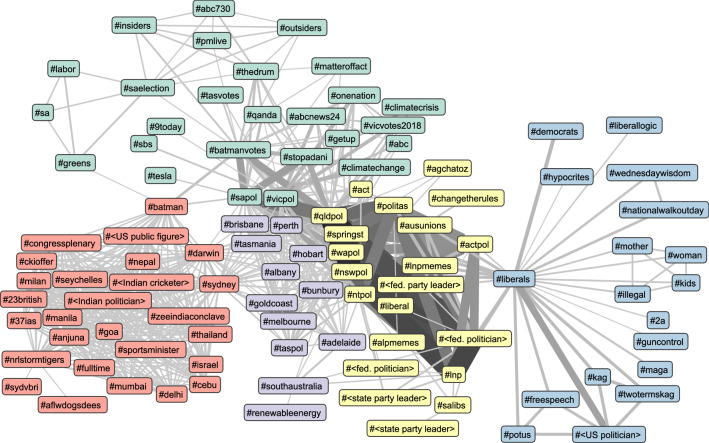

Network visualisation

A second subjective method of analysis for networks is to visualise them. We use two visualisation tools, visone (https://visone.info) and Gephi (https://gephi.org), both of which make use of force directed layouts, which help to clarify clusters in the network structure. Node colour is used to represent cluster membership detected with the Louvain method (Blondel et al. 2008). Each connected component is an HCC, and node colour can be used to represent the number of posts, and edge weight can be represented by thickness and, depending on the density of the network, darkness of colour. For analyses that involve multiple criteria (e.g. co-conv and co-mention), we use node shape to represent which combination of criteria an HCC is bound by (e.g. just co-mention or a combination of co-mention and co-conv or just co-conv).

By extending the HCC account networks with nodes to represent the ‘reasons’ or instances of evidence that link each pair of nodes, e.g. the tweets they retweet in common, or accounts they both mention or reply to, thereby creating a two-level account-reason network, we can investigate how HCCs relate to one another. In this case, the account-reason network has two types of nodes and two types of edges (‘coordinates with’ links between accounts and ‘caused by’ or ‘associated because’ links between ‘reasons’ and accounts). Visualising the two-level network by colouring nodes by their HCC and using a force-directed layout highlights how closely HCCs associate with each other, not only revealing what reasons draw HCCs together (i.e. HCCs may be bound by a single reason, or an HCC may be entirely isolated from others in the broader community), but also how many reasons bind them (i.e. many reasons may bind an HCC together or just one). Deeper insights can be revealed from this point using multi-layer network analyses.

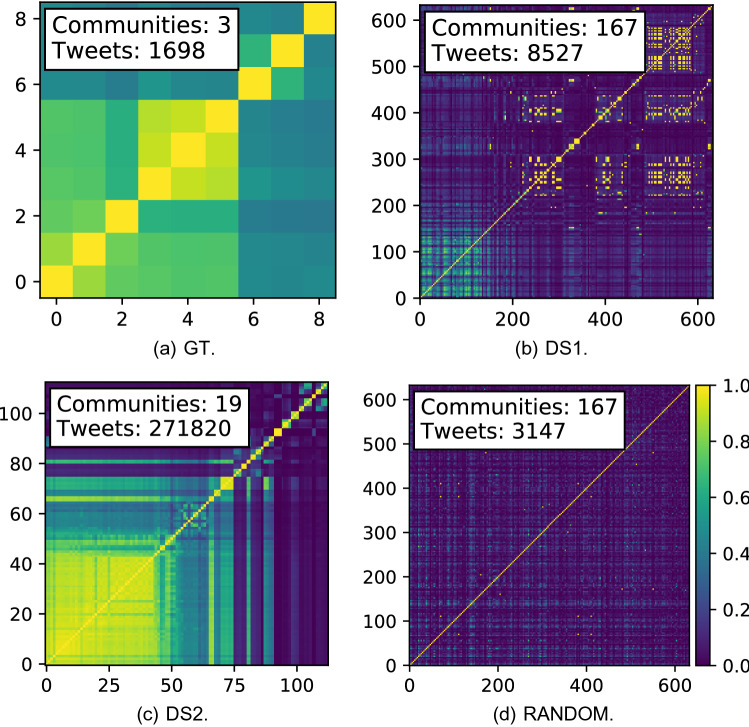

Consistency of content

To help answer RQ2, it is helpful to look beyond network structures and consider how consistent the content produced by an HCC is relative to other HCCs and the population in general. This will be most applicable when the type of strategy the HCC is suspected to have engaged in relies on repetition, e.g. co-retweeting or copypasta. If an HCC is boosting a message, it is reasonable to assume the content posted by the members of the HCC will be more similar internally than when compared externally (i.e. to the content of non-members). To analyse this internal consistency of content, we treat each HCC member’s tweets as a single document and create a doc-term matrix using 5 character n-grams for terms to maintain phrase ordering (which is lost with bag-of-word approaches). Comparing the members’ document vectors using cosine similarity in a pairwise fashion creates a matrix where n is the number of accounts in the HCC network. This approach was chosen for its performance with non-English corpora (Damashek 1995), and because using individual tweets as documents produced too sparse a matrix in a number of tests we conducted. The pairwise account similarity matrix can be visualised, using a spectrum of colours to represent similarity. By ordering the accounts on both the x and y axes to ensure they are grouped within their HCCs, if our hypothesis is correct that similarity within HCCs is higher than outside, then we should observe clear bright squares representing entire HCCs along the diagonal of the resulting similarity matrix. The diagonal itself will be the brightest because it represents each account’s similarity with itself.

If HCCs contribute few posts, which are similar or identical to other HCCs, then bright squares may appear off the diagonal, and this would be evidence similar to clusters of account nodes around a small number of reason nodes in the two-level account-reason networks mentioned above.

This method offers no indication of how active each HCC or HCC member is, so displays of high similarity may imply low levels of coincidental activity as well as high content similarity, just because of the lower likelihood that highly active accounts are highly similar in content (by contributing more posts, there are simply more opportunities for accounts’ content to diverge). The use of the 5-character n-gram approach is designed to offset this because each tweet in common between two accounts will yield a large number of points of similarity, as will the case when the same two tweets are posted in the same order (i.e. two accounts both post tweet and then ), because the overlap between the tweets will yield at least four points of similarity.

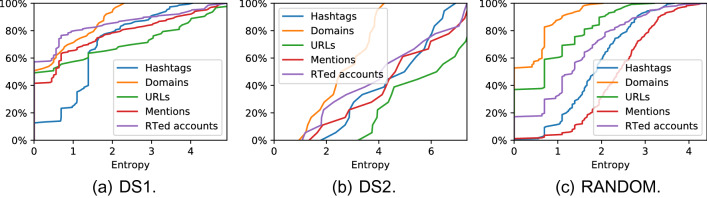

Variation of content

Converse to the consistency of content within HCC is the question of content variation, and how does the variation observed in detected HCCs differ from that of RANDOM groupings. Highly coordinated behaviour such as co-retweeting involves reusing the same content frequently, resulting in low feature variation (e.g. hashtags, URLs, mentioned accounts), which can be measured as entropy (Cao et al. 2015). A frequency distribution of each HCC’s use of each feature type is used to calculate each entropy score. Low feature variation corresponds to low entropy values. As per Cao et al. (2015), we compare the entropy of features used by detected HCCs to RANDOM ones and visualise their cumulative frequency. Entries for HCCs which did not use a particular feature are omitted, as their scores would inflate the number of groups with 0 entropy.

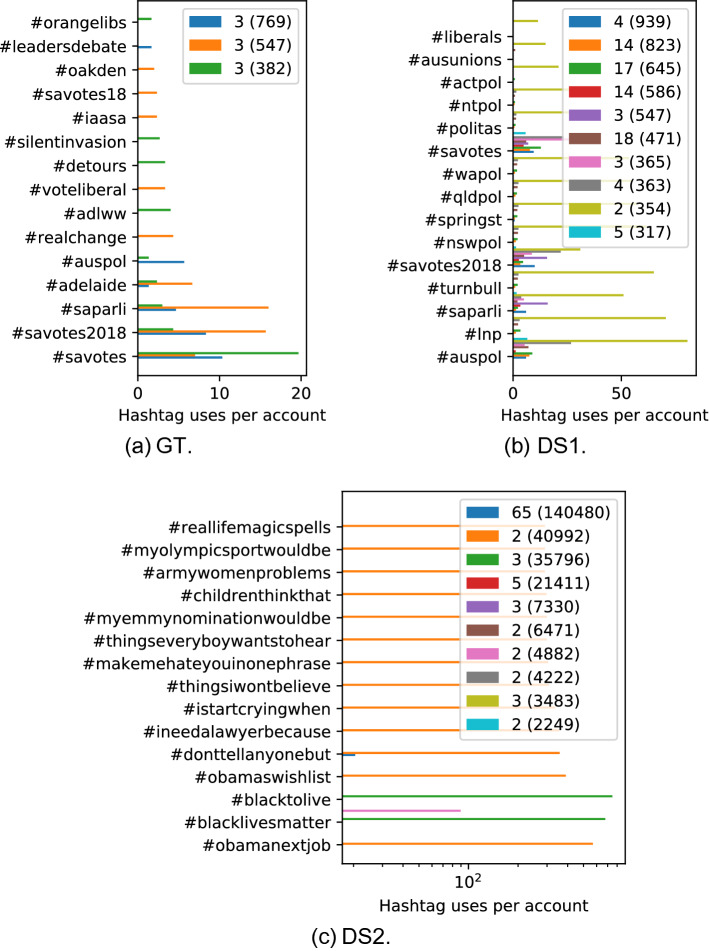

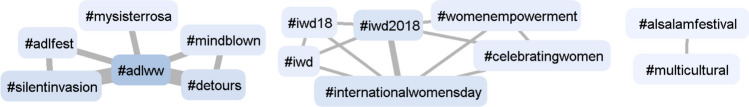

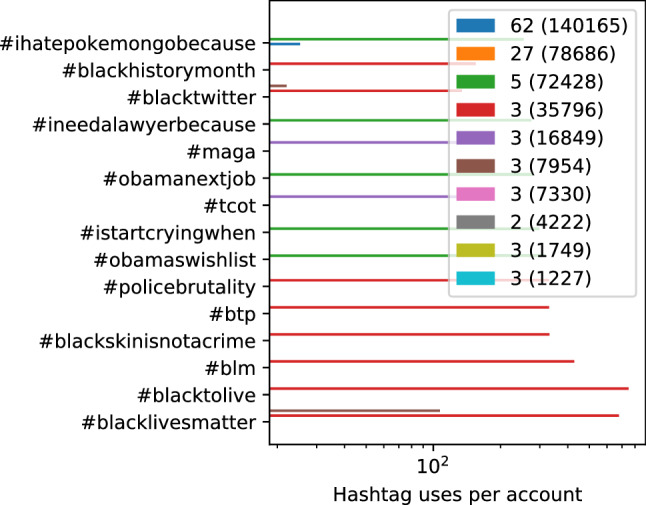

Hashtag analysis

Hashtags can be used to define discussion groups or chat channels (Woolley 2016), so hashtag analysis can be used to study those communities. It is another aspect to content analysis that relies upon social media users declaring the topic of their post through the hashtags they include. At the minimum, we can plot the frequency of the most frequently used hashtags as used by the most active HCCs. In doing so, we can quickly see which hashtags different HCCs make use of, and how they relate by how they overlap. Some hashtags will be unique to HCCs, while others will be used by many. This exposes the nature of HCC behaviour: they may focus their effort on a single hashtag, perhaps to get it trending, or they may use many hashtags together, perhaps to spread their message to different communities.

To further explore how hashtags are used together, we perform hashtag co-occurrence analysis, creating networks of hashtags linked when they are mentioned in the same tweet (as distinct from the co-hashtag linking introduced above). These hashtag co-occurrence networks are sometimes referred to as semantic networks (Radicioni et al. 2020). When visualised with force-directed layouts it is possible to see themes in the groupings of hashtags, and to gain insights from how the theme clusters are connected (including when they are isolated from one another). Colouring hashtags by their clusters detected using the Louvain method (Blondel et al. 2008) can provide a statistical measure of hashtag relations.

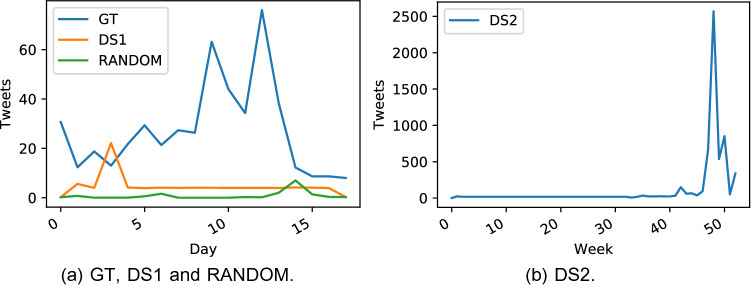

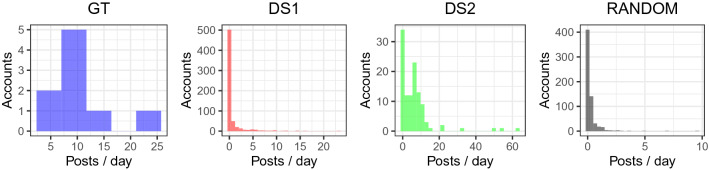

Temporal patterns

Campaign types can exhibit different temporal patterns (Lee et al. 2013), so we use the same temporal averaging technique as Lee et al. (2013) (dynamic time warping barycenter averaging) to compare the daily activities of the HCCs in the GT and RANDOM datasets with those in the test datasets. The temporal averaging technique produces a single time series made by averaging together each account’s activity time series. Using this technique avoids averaging out of time series that are off-phase from one another by aligning them before averaging them.

Another aspect of temporal analysis is the comparison of HCCs detected in different time windows, including specifically observing whether such HCCs share members and what the implications are for the behaviour of those members. This is non-trivial for any moderately large dataset, but examination of the ground truth can provide insight into the behaviours exhibited by known collaborators.

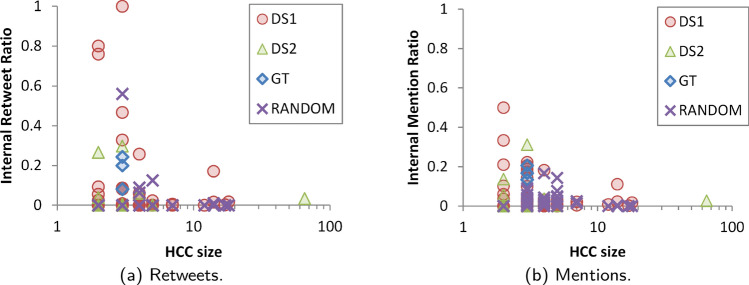

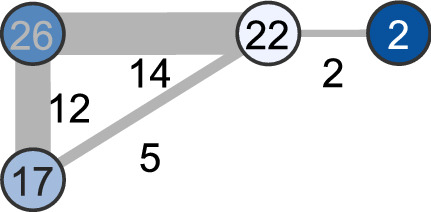

Focus of connectivity

Groups that retweet or mention themselves create direct connections between their members, meaning if one is discovered, it may be trivial to find its collaborators. To be covert, therefore, it would be sensible to have a low internal retweet and mention ratios (IRR and IMR, respectively). Formally, if and are the the sets of retweets and mentions of accounts within an HCC, respectively, and and are the corresponding sets of retweets and mentions of accounts outside the HCC, then, for a single HCC

| 4 |

| 5 |

Consistency of coordination

The method presented Sect. 3.1 highlights HCCs that coordinate their activity at a high level over an entire collection period. Further steps can be taken to determine which HCCs are coordinating their behaviour repeatedly and consistently across adjacent time windows. In this case, for each time window, we consider not just the nodes and edges from the current LCN, but additionally from previous windows, applying a degradation factor the contribution of their edge weights. To build an LCN from a sliding frame of T time windows, the new LCN includes the union of the nodes and edges of the individual LCNs from the current and previous windows, but to calculate the edge weights, we apply a decay factor, , to the weights of edges appearing in windows before the current one. In this way, we apply a multiplier of to the edge weights, where x is the number of windows into the past: the current window is 0 windows into the past, so its edges are multiplied by ; the immediate previous window is 1 window back, so its edge multiplier is ; the one before that uses , and so on until the farthest window back uses . Generalising from Step 4, the weight for an edge between accounts u and v for criterion c at window t and a sliding window T windows wide is given by

| 6 |

In this way, to create a baseline in which the sliding frame is only one window wide, one only need choose , regardless of the value of . As , the contributions of previous windows are given more consideration.

Supervised machine learning with one-class classifiers

An approach that aids in the management of data with many features is classification through machine learning. This is an approach that has been used extensively in campaign detection, in which tweets are classified, rather than accounts (e.g. Lee et al. 2013; Chu et al. 2012; Wu et al. 2018). Because of its ‘black box’ nature, its application should be considered carefully, however. Our intent is to use classification to validate that entire HCCs (not just individual tweets or accounts) detected in datasets are similar to those found in ground truth. Such classifiers will not be generally applicable, as they rely on ground truth (which is historical by nature) for training data. Tactics and strategies used in information operations will change over time, as shown by Alizadeh et al. (2020); this is not just to avoid detection but also because OSN features change over time. As our focus is only on a positive answer to whether one HCC is similar to others, it is acceptable to rely on one-class classification (i.e. an HCC detected in a dataset is recognised as COORDINATING/positive or is regarded as NON-COORDINATING/unknown). The more common binary classification approach was used by Vargas et al. (2020), however our approach has two distinguishing features:

We rely on one-class classification because we have positive examples of what we regard as COORDINATING from the ground truth, and everything else is regarded as unknown, rather than definitely ‘not coordinating’. These are sometimes referred to as positive and unlabeled, or PU, classifiers. A one-class classifier can, for example, suggest a new book from a wide range (such as a library) based on a person’s reading history. In such a circumstance, the classifier designer has access to positive examples (books the reader likes or has previously borrowed) but all other instances (books, in this case) are either positive or negative. When our one-class classifier recognises HCC accounts as positive instances, it provides confidence that the HCC members are coordinating their behaviour in the same manner as the accounts in the ground truth. We can therefore prioritise Precision over Recall (discussed below).

We rely on features from both the HCCs and the HCC members and use the HCC members as the instances for classification, given it is unclear how many members an HCC may have, and accounts that are members of HCCs may have traits in common that are distinct from ‘normal’ accounts. In contrast, Vargas et al. (2020) relied on features of “coordination networks” (i.e. HCCs) alone, as they were their classification instances. For this reason the feature vectors that our classifier is trained and tested on will comprise features drawn from the individual accounts and their behaviour as well as the behaviour of the HCC of which they are a member. Feature vectors for members of the same HCC will naturally share the feature values drawn from their grouping.

Regarding the construction of the feature vector, at a group level, we consider not just features from the HCC itself, which is a weighted undirected network of accounts, but of the activity network built from the interactions of the HCC members within the corpus. The activity network is a multi-network (i.e. supports multiple edges between nodes) with nodes and edges of different types. The three node types are accounts, URLs, and hashtags. Edges represent interactions and the following types are modelled: hashtag uses, URL uses, mentions, repost/retweets, quotes (cf. comments on a Facebook share or Tumblr repost), reply, and ‘in conversation’ (meaning that one account replied to a post that was transitively connected via replies to an original post by an account in the corpus). This activity network therefore represents not just the members of the HCC but also their degree of activity in context.

Classifier algorithms

We use the GT to train three classifiers. A bagging PU classifier (BPU, Mordelet and Vert 2014) was used, the implementation8 for which was based on a Random Forest (RF) classifier configured with 1000 trees (estimators). We also used a standard 1000 tree RF, as used by Vargas et al. (2020), to compare directly with BPU. A Support Vector Machine (SVM) classifier was also used, given the technique’s known high performance with non-linear recognition problems even with small feature sets due its use of the kernel trick. Furthermore, Mordelet and Vert (2014) employed a variety of SVMs as part of their experimentation, though our choice of implementation differed. Both SVM and RF implementations were drawn from the scikit-learn Python library (Pedregosa et al. 2011). Contrasting “unlabeled” training instances were created from the RANDOM dataset. Feature vector values were standardised prior to classification and upsampling was applied to create balanced training sets of approximately 400 positive and random elements each. 10-fold cross validation was used.

The classifiers predict whether instances provided to them are in the positive or unlabeled classes, which, to aid readability, we refer to as ‘COORDINATING’ and ‘NON-COORDINATING’, respectively.

Performance metrics

The performance metrics used include the classifier’s accuracy, scores for each class, and the Precision and Recall measures that the scores are based upon. High precision implies the classifier is good at recognising samples correctly, and high Recall implies that a classifier does not miss instances of the class they are trained on in any testing data. For example, a good apple classifier will successfully recognise an apple when it is presented with one, and when presented with a bowl of fruit, it will successfully find all the apples in it. The score combines these two measures:

| 7 |

and provides insight into to the balance between the classifier’s Precision and Recall. The accuracy of a classifier is the proportion of instances in a test data set that the classifier labeled correctly. In this way, the accuracy is the most coarse of these measures, because it offers little understanding of whether the classifier is missing instances it should find (false negatives) or labeling non-matching instances incorrectly (false positives). The score begins to address this failing, but direct examination of the Precision and Recall provides the most insight into each classifier’s performance.

Bot analysis

Although coordinated behaviour in online campaigns is often conducted without automation (Starbird et al. 2019), automation is still commonly present in campaigns, especially in the form of social bots, which aim to present themselves as typical human users (Ferrara et al. 2016; Woolley and Guilbeault 2018; Cresci 2020). For this reason, the technique presented here is a valid tool for exposing teams of cooperating bot and social bot accounts. We use the Botometer (Davis et al. 2016) service to evaluate selected accounts for bot-like behaviour. The primary summary measure for bot classification is the Complete Automation Probability (CAP),9 provided as a value in [0, 1] in two variants: one for predominantly English-speaking accounts and one language-agnostic. Other studies have relied on a CAP of 0.5 as a threshold for labelling an account as a bot, but there is a significant overlap between humans that act in a very bot-like manner and bots that are quite human-like, so we adopt the practice of Rizoiu et al. (2018) and regard scores below 0.2 to be human and above 0.6 to be bots.

Complexity analysis

The steps in processing timeline presented in Sect. 3.1 are reliant on two primary factors: the size of the corpus of posts, P, being processed, and the size of the set of accounts, A, that posted them. Therefore and the complexity of Step 1 is linear, O(|P|), because it requires processing each post, one-by-one. The set of interactions, , it produces may be larger than |P|, because a post may include many hashtags, mentions, or URLs, but given posts are not infinitely long (even long Facebook and Tumblr posts can only include several thousand words), the number of interactions will also be linear, i.e. , for some constant k. Step 2 filters these interactions down to only those of interest, , based on the type of coordinated activity sought, C, so , and again the complexity of this step is also linear, , as it requires each interaction to be considered. Step 3 seeks to find evidence of coordination between the accounts in the dataset, and so requires examining each filtered interaction and building up data structures to associate each account with their interactions (), then emitting pairs of accounts matching the coordination criteria, producing the set M, which requires the pairwise processing of all accounts, and so is steps with a subsequent complexity of . This, however, also depends on the pairwise comparison of each account’s interactions, which is likely to be small, practically, but theoretically could be as large as if one user is responsible for every single interaction in the corpus (but then |A| would be 1). On balance, as a result, we will regard the processing of each pair of users’ interactions as linear with a constant factor k (i.e. = ). In Step 4, producing the LCN, L, from the criteria is a matter of considering each match one-by-one, so is again linear (though potentially large, depending on |M|). The final step (5) is to extract the HCCs from the LCN, and its performance and complexity very much depend upon the algorithm employed, but significant research has been applied in this field (Bedru et al. 2020, as considered in, e.g.][). For FSA_V, which relies on the Louvain algorithm with complexity (Blondel et al. 2008), it considers edges within each community to build its HCC candidates, so has a complexity of less than O(|E|), where |E| is the number of edges in the LCN, meaning its complexity is linear. FSA_V’s complexity is therefore .

We regard the computation complexity of the entire pipeline as the highest complexity of its steps, which are:

Extract interactions from posts: O(|P|)

Filter interactions:

Find evidence of coordination:

Build LCN from the evidence: O(|M|)

Extract HCCs from LCN using, e.g. FSA_V:

The maximum of these is Step 3, the search for evidence of coordination, . Though in theoretical terms the method is potentially very costly, in practical terms we are bound by the number of accounts in the collection (which is determined by the manner in which the data was collected and the nature of the online discussion to which it pertains) and may be managed by constraining the time window, further reducing the number of posts (and therefore accounts) considered, as long as that suits the type of coordination being sought.

Evaluation

Our approach was evaluated in two phases:

The first was conducted as an experiment using the validation methods mentioned above and two datasets known to include coordinated behaviour, as well as a ground truth dataset.

The second phase involved two case studies in which we apply our approach against datasets relating to politically contentious topics expected to include polarised groups.

The first stage of the evaluation involved searching for Boost by co-retweet and other strategies while varying window sizes (). FSA_V was compared against two other community detection algorithms, when applied to the LCNs built in Step 4 (aggregated). We then validated the resulting HCCs through a variety of network, content, and temporal analyses and machine learning classification, guided by the research questions posed in Sect. 1. Discussion of further applications and performance metrics is also presented.

The experiment datasets

The two real-world datasets selected (shown in Table 4) represent two collection techniques: filtering a live stream of posts using keywords direct from the OSN (DS1) and collecting the posts of specific accounts (DS2):

DS1: Tweets relating to a regional Australian election in March 2018, including a ground truth subset (GT); and

DS2: A large subset of the Internet Research Agency (IRA, Chen 2015; Mueller 2018) dataset published by Twitter in October 2018.10

Table 4.

Experiment dataset statistics

| Tweets | Retweets (%) | Accounts | Tweet rate | Retweet rate | ||

|---|---|---|---|---|---|---|

| DS1 | 115,913 | 63,164 | (54.5%) | 20,563 | 0.31 | 0.17 |

| (GT) | 4193 | 2505 | (59.7%) | 134 | 1.74 | 1.04 |

| DS2 | 1,571,245 | 729,937 | (56.5%) | 1381 | 3.12 | 1.45 |

Rates are per account per day

DS1 was collected using RAPID (Lim et al. 2019) over an 18 day period (the election was on day 15) in March 2018. The filter terms included nine hashtags and 134 political handles (candidate and party accounts). The dataset was expanded by retrieving all replied to, quoted and political account tweets posted during the collection period. The political account tweets formed our ground truth. It was our expectation that some of the coordinated political influence techniques observed on the international stage may have been adopted by political parties and issue-motivated groups at the regional level by 2018 (especially given the use of political bots had been reported in the Australian setting five years prior, as reported in Woolley 2016), and hence would be present in this dataset.

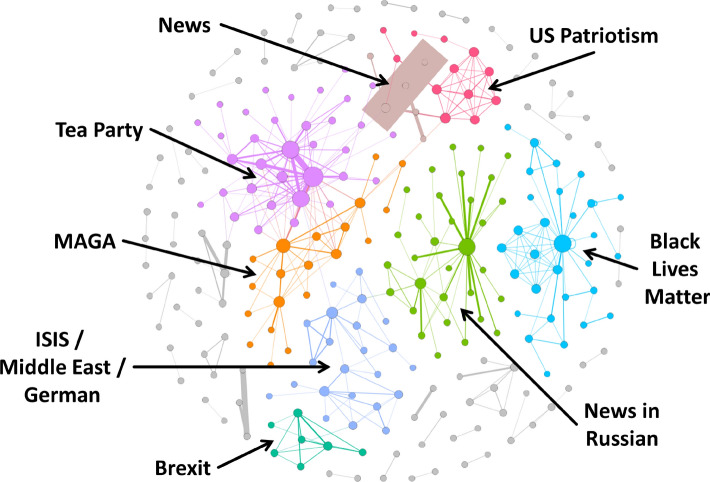

The IRA dataset released by Twitter covers 2009 to 2018, but DS2 is the subset of tweets posted in 2016, the year of the US Presidential election. Because DS2 consists entirely of IRA accounts which Twitter believed to be connected with an SIO, it was expected to include evidence of coordinated amplification. It was also much larger than DS1, and our intent was that our findings would complement forensic studies of the activity (e.g. Benkler et al. 2018; Jamieson 2020) and also contrast with techniques from more focused studies (e.g. Dawson and Innes 2019).

Experimental set up

The size of the window was set at (in minutes) and the three community detection methods used on the aggregated LCNs were:

FSA_V ();

kNN with (cf., Cao et al. 2015); and

a simple threshold retaining the edges with a normalised value above 0.1.

Parameter selection

Other than a value of for kNN (taken from Cao et al. 2015), the choice of values for parameters , and the threshold were determined as follows. Our intent was to search for human-driven coordination, i.e. teams of humans manipulating potentially several accounts each, meaning that the timeframes under examination would need to allow for the time required to switch between accounts. As discussed by Dawson and Innes (2019), the motivation for even paid coordinated behaviour may be based on numbers of posts made, rather than how tightly coordinated they are, so by examining a relatively wide ‘short’ window of 15 minutes allows for such people to react to each others’ posts as they see them (rather than the sub-minute coordination sought by others, e.g. Giglietto et al. 2020a; Pacheco et al. 2021; Dawson and Innes 2019). The 60 minute window allows for people motivated by personal interest as well as paid trolls, who check their social media frequently throughout the day while attending to other duties (e.g. preparing new content, Nimmo et al. 2020). The six hour time frame is of medium length and allows for users who check social media over breakfast, at lunch, and then at dinner who also may be more motivated by personal reasons to coordinate their behaviour. Finally, the long term time frame of a whole day allows for accounts that only check social media in concentrated sessions once a day, but who coordinate their actions with others each day outside of the six hour window. Furthermore, automated coordinated accounts (i.e. bots) can react to posts very quickly (i.e. within seconds), and simple implementations can be revealed by their consistent short response times rather than relying on the more sophisticated co-activity methods presented here. More complex bot implementations vary their response times to avoid this (Cresci et al. 2017; Cresci 2020), however if they wish to game OSN trending algorithms to improve their reach, their posts must occur near to each other in time. Values for were also informed by the observation of Zhao et al. (2015) that 75% of retweets occur within six hours of posting. This implies that if attempts were made to boost a tweet, retweeting it in much shorter times would be required for it to stand out from typical traffic. Varol et al. (2017) checked Twitter’s trending hashtags every 10 minutes, which is an indication of how quickly a concerted Boosting effort may have an effect. Values chosen for therefore ranged from 15 minutes to a day, growing by a factor of approximately four at each increment. Deliberate coordinated retweeting (i.e. covert Boosting masquerading as grassroots activity) was expected to occur in the smaller windows, but then be replaced by coincidental co-retweeting as the window size increases.

Values for and the threshold were based on experimenting with values in [0.1, 0.9], maximising the MEW to HCC size ratio, using the DS1 and DS2 aggregated LCNs when .

Experimental results

The research questions introduced in Sect. 1 guide our discussion, but we also present follow-up analyses.

HCC detection (RQ1)

Detecting different strategies

The three detection methods all found HCCs when searching for Boost (via co-retweets), Pollute (via co-hashtags), and Bully (via co-mentions), details of which are shown in Table 5. Notably, kNN consistently builds a single large HCC, highlighting the need to filter the network prior to applying it (cf., Cao et al. 2015). The kNN HCC is also consistently nearly as large as the original LCN for DS2, perhaps due to the low number of accounts and the fact that kNN retains every edge adjacent to the retained vertices, regardless of weight. It is not clear, then, that kNN is producing meaningful results used in this way, even if it can extract a community.

Table 5.

HCCs by coordination strategy

| Strategy | GT | DS1 | DS2 | |||||||

|---|---|---|---|---|---|---|---|---|---|---|

| Nodes | Edges | Comp. | Nodes | Edges | Comp. | Nodes | Edges | Comp. | ||

| LCN | ||||||||||

| Boost | 15 | 44 | 112 | 5 | 8855 | 80,702 | 419 | 855 | 23,022 | 14 |

| Pollute | 15 | 51 | 154 | 2 | 13,831 | 1,281,134 | 73 | 1203 | 65,949 | 5 |

| Bully | 60 | 70 | 482 | 1 | 16,519 | 1,925,487 | 222 | 1103 | 37,368 | 5 |

| FSA_V | ||||||||||

| Boost | 15 | 9 | 6 | 3 | 633 | 753 | 167 | 113 | 758 | 19 |

| Pollute | 15 | 9 | 5 | 4 | 135 | 93 | 50 | 24 | 15 | 9 |

| Bully | 60 | 11 | 7 | 4 | 338 | 280 | 119 | 109 | 1123 | 16 |

| kNN | ||||||||||

| Boost | 15 | 9 | 21 | 1 | 1041 | 33,621 | 1 | 675 | 22,494 | 1 |

| Pollute | 15 | 11 | 37 | 1 | 724 | 153,424 | 1 | 1040 | 65,280 | 1 |

| Bully | 60 | 18 | 135 | 1 | 1713 | 663,413 | 1 | 692 | 35,136 | 1 |

| Threshold | ||||||||||

| Boost | 15 | 11 | 16 | 3 | 85 | 68 | 31 | 8 | 10 | 2 |

| Pollute | 15 | 24 | 26 | 3 | 44 | 37 | 10 | 6 | 13 | 1 |

| Bully | 60 | 15 | 19 | 3 | 25 | 23 | 8 | 10 | 10 | 3 |

Varying window size

Different strategies may be executed over different time periods, based on their aims. Boosting a message to game trending algorithms requires the messages to appear close in time, whereas some forms of Bullying exhibit only consistency and low variation (mentioning the same account repeatedly). Polluting a user’s timeline on Twitter can also be achieved by frequently joining their conversations over a sustained period.

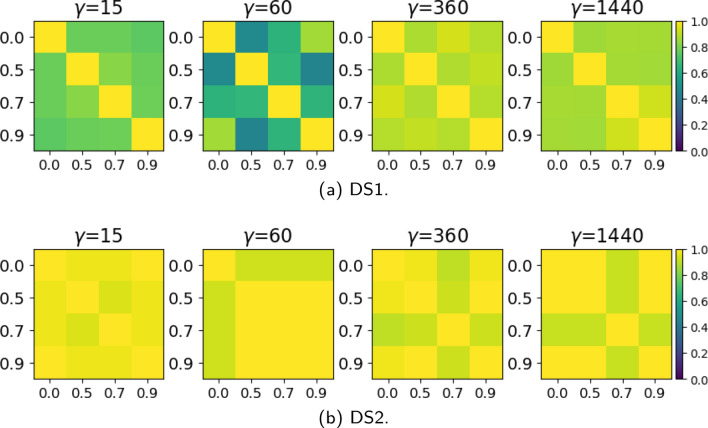

Varying searching for Boost, we found different accounts were prominent over different time frames (Table 6); the overlap in the accounts detected in each time frame differed considerably even though the number of HCCs stayed relatively similar. Figure 4 shows the Jaccard and overlap similarity between the sets of accounts appearing in each window size (agnostic of HCC membership). The overlap results for kNN shows very high levels of similarity, but lower levels of Jaccard similarity. For all datasets, as grows kNN finds more and more HCC members, including all the ones it found with smaller window sizes (overlap similarity values appear close to 1.0, shown as yellow). The highest Jaccard similarities for kNN seem to group the shorter periods () and the medium and long periods (). FSA_V finds different sets of members in each time window without significant overlap, though for DS2 it appears that the windows longer than 15 minutes have many members in common, but have very few in common with the HCCs. As might be expected, thresholding by LCN edge weight results in the identification of additional accounts as increases, and the Jaccard similarity of GT and DS1 (Fig. 4c) reveals that accounts identified in the shorter time windows () are very different to those from the longer time windows, but they still overlap somewhat (Fig. 4d). This suggests that although there are some accounts that coordinate in short periods, other accounts coordinate more over the medium and long time periods. These include media accounts that are consistently highly active over longer periods and differ from the active discussion participants who might log on to Twitter in the evening for a few hours whose behaviour is more bursty in nature.

Table 6.

HCCs by window size (Boost, FSA_V)

| Network attributes | HCC sizes | Nodes in common | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| Nodes | Edges | HCCs | Min. | Max. | Mean | SD | |||||

| GT | |||||||||||

| 15 | 9 | 6 | 3 | 3 | 3 | 3.00 | 0.00 | 9 | 9 | 8 | 8 |

| 60 | 14 | 9 | 5 | 2 | 3 | 2.80 | 0.40 | – | 14 | 10 | 12 |

| 360 | 13 | 9 | 5 | 2 | 3 | 2.60 | 0.49 | – | – | 13 | 12 |

| 1440 | 17 | 12 | 6 | 2 | 3 | 2.80 | 0.37 | – | – | – | 17 |

| DS1 | |||||||||||

| 15 | 633 | 753 | 167 | 2 | 18 | 3.79 | 2.21 | 633 | 218 | 93 | 100 |

| 60 | 619 | 1293 | 151 | 2 | 13 | 4.10 | 2.30 | – | 619 | 208 | 193 |

| 360 | 503 | 1119 | 127 | 2 | 19 | 3.96 | 2.58 | – | – | 503 | 350 |

| 1440 | 815 | 2019 | 141 | 2 | 110 | 5.78 | 12.60 | – | – | – | 815 |

| DS2 | |||||||||||

| 15 | 113 | 758 | 19 | 2 | 65 | 5.95 | 13.94 | 113 | 34 | 29 | 25 |

| 60 | 77 | 394 | 18 | 2 | 27 | 4.28 | 5.64 | – | 77 | 62 | 54 |

| 360 | 98 | 775 | 15 | 2 | 32 | 6.53 | 9.13 | – | – | 98 | 56 |

| 1440 | 69 | 380 | 15 | 2 | 27 | 4.60 | 6.15 | – | – | – | 69 |

Fig. 4.

Similarity matrices of HCC account sets found using different window sizes (FSA_V). The similarity measured here relates to the accounts found not to the similarity in groupings of accounts into HCCs. Yellow implies a high similarity (Jaccard: account sets are identical, Overlap: one set is a subset), while blue implies low similarity (i.e. account sets are disjoint)

Other than in GT, which revealed very few HCCs, the sizes of the HCCs found seemed to follow a rough power law; most were very small but one or a few were very large (see the HCC Sizes section in Table 6). The number of HCCs did not vary significantly nor consistently as increased. The number of edges retrieved tells us in DS1, as the window increased, more edges had weights high enough to be retained, whereas DS2 edge counts diminished, implying that the LCNs were progressively dominated by a smaller number of very heavy edges, while other remained relatively light.

HCC detection methods

Similarly, HCCs discovered by the three community extraction methods (Table 7) exhibit large discrepancies, suggesting that whichever method is used, tuning is required to produce interpretable results. This is evident in the literature: Cao et al. conducted significant pre-processing when identifying URL sharing campaigns across two years of Twitter activity (Cao et al. 2015), and Pacheco et al. showed how specific strategies could identify groups in the online narrative surrounding the Syrian White Helmet organisation (Pacheco et al. 2020). Here we present the variation in results while controlling methods and other variables and keeping the coordination strategy constant, as our interest here is to validate the effectiveness of the method.

Table 7.

HCCs by detection method (Boost, )

| Network attributes | HCC sizes | Nodes in common | ||||||

|---|---|---|---|---|---|---|---|---|

| Nodes | Edges | HCCs | Min. | Max. | FSA_V | kNN | Threshold | |

| DS1 | ||||||||

| FSA_V | 633 | 753 | 167 | 2 | 18 | 633 | 56 | 36 |

| kNN | 1041 | 33,621 | 1 | 1041 | 1041 | – | 1041 | 44 |

| Threshold | 85 | 68 | 31 | 2 | 14 | – | – | 85 |

| DS2 | ||||||||

| FSA_V | 113 | 758 | 19 | 2 | 65 | 113 | 88 | 4 |

| kNN | 675 | 22,494 | 1 | 675 | 675 | – | 675 | 8 |

| Threshold | 8 | 10 | 2 | 2 | 6 | – | – | 8 |

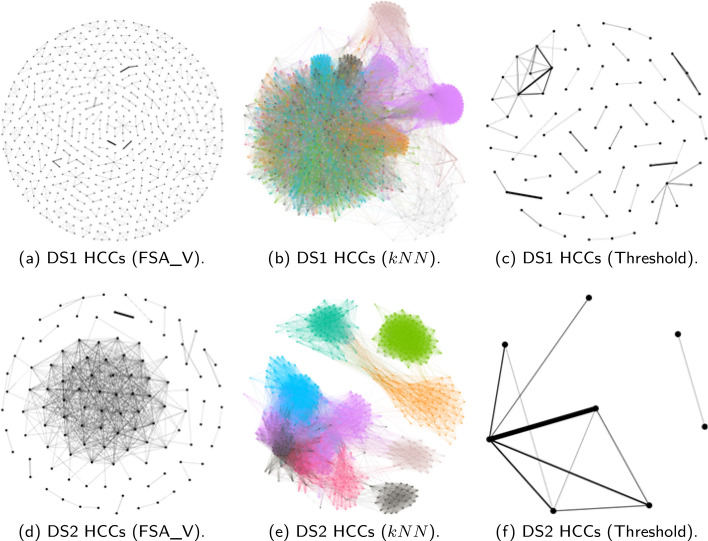

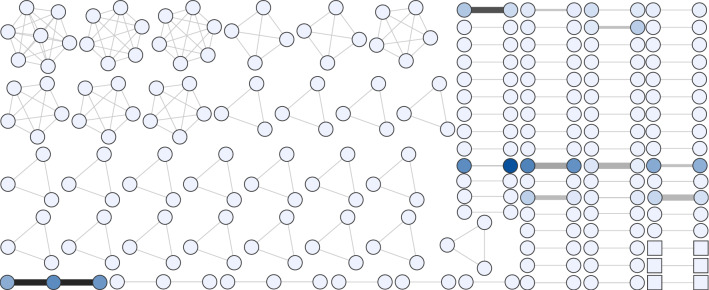

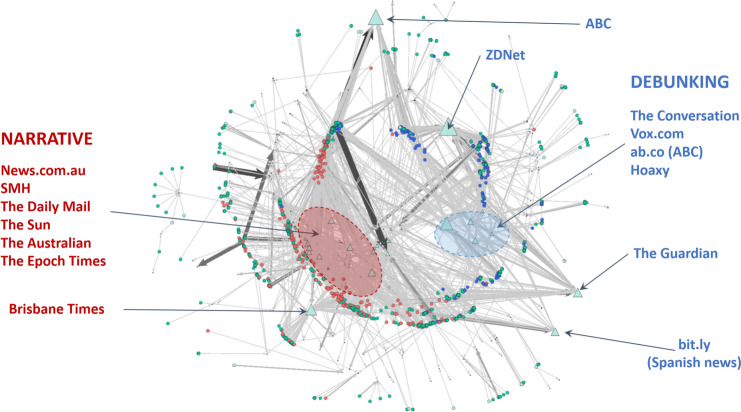

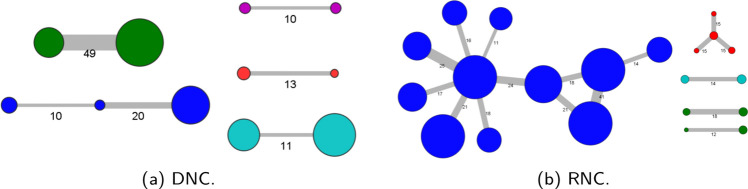

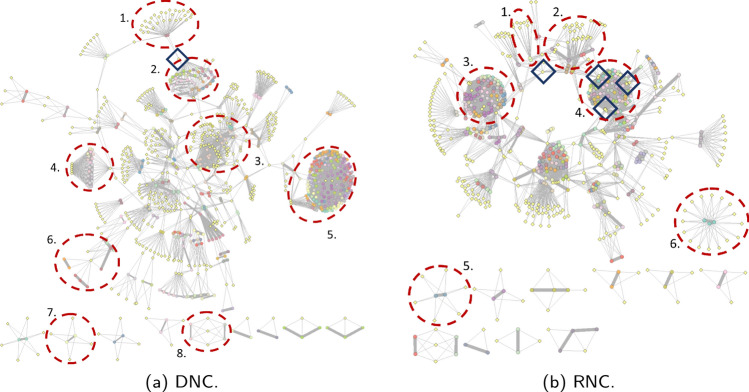

The networks were visualised using the FR layout in Fig. 5, revealing further structure within the kNN networks, each of which consisted of a single connected component. To examine the structure of the single kNN component more closely, we applied Louvain analysis (Blondel et al. 2008) and coloured the largest detected clusters. The clustering reveals distinct communities within both the lone kNN HCC found in each of the datasets. It is possible the DS2 ones are more easily discernible either due to the smaller number of accounts (675 compared with 1041) or because the accounts were, in fact, organised teams of malicious actors acting over a longer time frame. In either case, it makes clear that kNN, configured as it was, failed to distinguish communities clearly extractable via other means. This is less an indictment on kNN and more an indication that community extraction is likely to be a multi-step process embedded in particular domains and datasets, and in the particular types of networks to which they are applied. The networks in Fig. 5b, e bear a passing resemblance to many in, e.g. the deep analysis of the media landscape during the 2016 US election by Benkler et al. (2018) (which relied on simpler methods to build their networks), however these examples are networks of accounts rather than media organisations or sites, and, importantly, are not necessarily directly linked, offering the possibility of uncovering otherwise hidden connections between actors. This could be especially valuable when searching multiple OSNs.

Fig. 5.

HCCs discovered using different methods in DS1 and DS2 (Boost, ). Each kNN network consists of a single connected component, but detected clusters have been coloured to highlight internal structures

HCC differentiation (RQ2)

How similar are the discovered HCCs to each other and to the rest of the corpus? The HCC detection methods used relied on network information; in contrast we examine content, metadata and temporal information to validate the results. We contrast DS1 and DS2 results with GT and a RANDOM dataset, constructed to match the HCC distributions in DS1 (FSA_V, ). As DS2 consisted entirely of bad actors, and GT consisted entirely of political accounts, it was felt non-HCC accounts from DS1 would offer more ‘normal’ non-coordinating accounts.

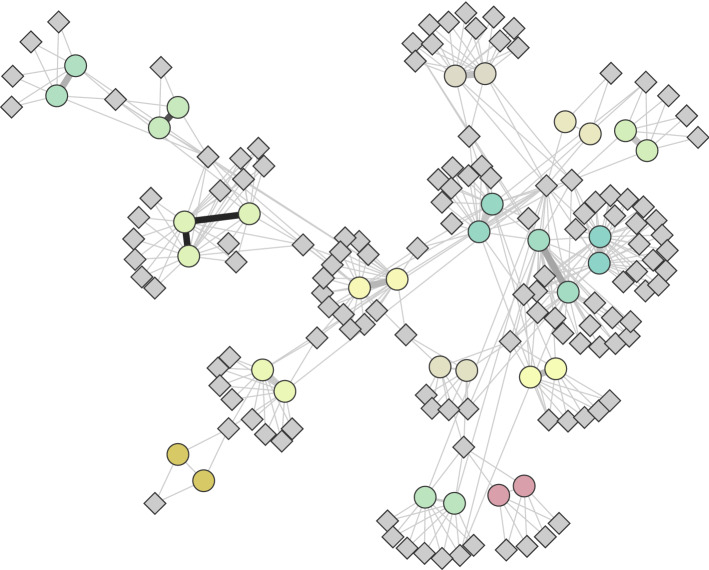

Internal consistency

Visualising the similarities between accounts using the method in Sect. 3.2.4 (Fig. 6), the HCCs are discernible as being internally similar. The RANDOM groupings demonstrated little to no similarity, internal or external, as expected, while the DS2 HCCs demonstrated high internal similarity, as expected of organised accounts over an extended period. The internal consistency of the DS1 HCCs is not as clear as for DS2, possibly due to the greater number of HCCs. Where HCCs are highly similar to others (indicated by yellow cells off the diagonal), it is highly likely these are due to small HCCs (e.g. with two or three members) retweeting the same small set of tweets (fewer than ten). The use of filtering in conjunction with FSA_V may help remove potentially spurious HCCs, as could a final merge phase, joining HCC candidates whose evidence for coordination matches closely (e.g. two small HCCs retweeting of the same tweets, kept separate by FSA_V but clearly similar).

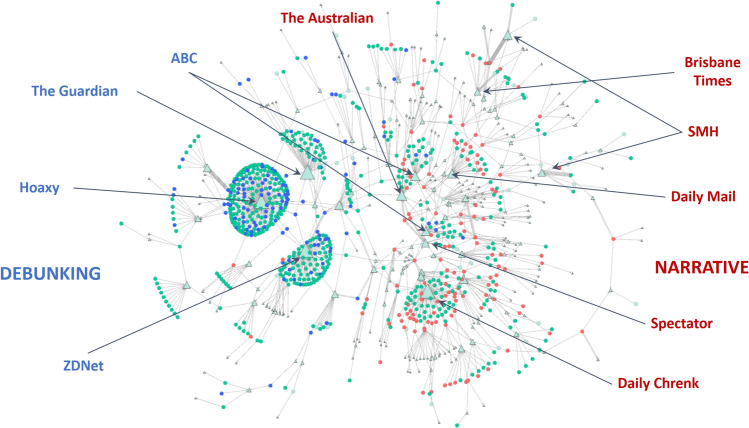

Fig. 6.