Abstract

People around the world remain vulnerable to the pandemic caused by the novel Corona Virus. Detecting the infection from chest X-ray (CXR) images at an early stage is very difficult because of the constant gene mutation of the virus. It is also hard to differentiate ordinary pneumonia from COVID-19 positive cases, as both show similar symptoms. This paper proposes a modified residual network based enhancement (ENResNet) scheme for the visual clarification of COVID-19 pneumonia impairment in CXR images, together with the classification of COVID-19 under a deep learning framework. First, a residual image is generated for each image using a residual convolutional neural network with batch normalization. Second, a module is constructed that takes normalized patches and residual images as input. The output of each module, consisting of residual images and patches, is fed into the next module, and this continues for eight consecutive modules. A feature map is generated by each module, and the final enhanced CXR image is produced by an up-sampling process. Further, we design a simple CNN model for the automatic detection of COVID-19 from CXR images using a ‘multi-term loss’ function and a ‘softmax’ classifier. The proposed model yields better results for both binary classification (COVID vs. Normal) and multi-class classification (COVID vs. Pneumonia vs. Normal) in this study. The suggested ENResNet achieves a classification accuracy and for binary classification and multi-class detection respectively in comparison with state-of-the-art methods.

Keywords: COVID-19, Image enhancement, Chest X-ray image, Deep learning, ResNet, Pneumonia

1. Introduction

The lungs are among the most vital organs of the human body. They are responsible for breathing, and any disease can severely impair their function and lead to death if treatment is delayed [1], [2]. People around the world suffer from major lung diseases such as lung cancer, tuberculosis, and pneumonia. Recently, the novel Corona Virus (SARS-CoV-2) has been spreading rapidly through micro-droplets released by sneezing and coughing, and it is becoming deadly as no vaccine or drug has been discovered yet [3]. The virus attacks the respiratory system and damages the lungs [4]. The most common symptoms observed in COVID-19 positive patients are sore throat, fever, and dry cough. Prolonged infection can lead to severe pneumonia in some cases [5].

Pneumonia causes the air sacs in the lungs to swell, making them unfit for normal breathing. Viruses other than the one that causes COVID-19 may also cause pneumonia [5]. It can also be triggered by factors such as smoking, weak immunity, bronchitis or asthma, and old age. The infection is generally treated with antibiotics, pain relievers, cough medicine, and fever reducers. In extreme cases, the affected person should be hospitalized and provided with mechanical ventilation [6]. The rapid spread of the novel Corona Virus has become a threat to health care systems globally, since not every health unit can provide adequate ventilation support to each patient. This has also resulted in a high mortality rate [7]. Researchers are still investigating the origin, nature, infection severity, and duration associated with the virus [8], [9]. In the most extreme cases, the virus affects the whole respiratory system, as pneumonia does, causing septic shock, pulmonary edema, acute respiratory distress syndrome, and multi-organ failure [10], [11].

In this situation, it is essential to diagnose the infection from its symptoms and treat it urgently. Epidemiological history and positive radiographic images (computed tomography (CT) or chest radiograph (CXR)), as well as positive pathogenic testing, can be used to diagnose infection caused by the Corona Virus. According to statistical data released by the World Health Organization (WHO), COVID-19 has spread aggressively in several countries around the world over the last few months [3], [12]. Hence, fast detection of COVID-19 can help to control the spread of the disease. Furthermore, artificial intelligence (AI) based systems can assist in the early diagnosis and treatment of COVID-19 and other infections [13]. Recently, chest CT has been observed to detect lung abnormalities at an early stage, enabling proper and rapid treatment of pneumonia caused by the Corona Virus. Both CXR and CT are tools for diagnosing pneumonia, and CT is more precise and accurate than CXR [14]. However, CXR is more popular as it is cheap, fast, and exposes the body to less radiation. Pneumonia cannot always be recognized, as white patches in the lungs can also be an effect of bronchitis or tuberculosis [15], [16].

Image pre-processing is a crucial step for anomaly detection in medical images. To increase the performance of the deep neural network for COVID-19 detection, we apply a CXR image enhancement process. Several recently developed deep learning based low-dose CT image enhancement approaches have been considered in the literature [17], [18], [19], [20]. Lore et al. suggested LLNet for image enhancement [17], and LightenNet was subsequently developed by Li et al. [18]. A residual encoder-decoder based CT image enhancement approach was introduced by Chen et al. [19]. More recently, Xu et al. designed a generative adversarial network based image enhancement model in combination with zero-sum game theory [21]. These models sometimes fail to converge on noisy CT images.

In this investigation, a modified residual network (ResNet) based CXR image enhancement method, using multiple residual network blocks and normalization of feature maps under a deep learning framework, is proposed for COVID-19 detection. First, the designed network generates a residual image from the source image using a ResNet and batch normalization. The ResNet is constructed with convolutional layers and the ReLU activation function, which extract high-level features from the chest images. Next, a block called the Residual Module (M) is designed; each module takes the original normalized patches and residual images as input and produces a feature map as output through convolutional and max-pooling layers. We connect eight such modules to form the proposed ResNet based enhancement (ENResNet) scheme for COVID-19 detection. The feature map produced by each module is fed into the next module together with the residual image, and the final feature map is obtained using a fully connected layer (FCL). The final enhanced image is obtained by a reverse, or up-sampling, process. The output image shows the affected area of a COVID-19 patient clearly. To classify a CXR image as COVID-19, pneumonia, or normal, we design a simple CNN based classification model that operates on the enhanced CXR images and uses a multi-term loss function and the softmax function. The empirical results show that the suggested model not only produces enhanced CXR images but is also more robust and accurate than most existing methods, which increases the classification accuracy in both the binary and multi-class cases. The detailed contributions are summarized as follows:

1. A modified residual network (ResNet) based chest X-ray (CXR) image enhancement approach, using multiple residual network blocks and normalization of feature maps under a deep learning framework, is proposed together with a simple CNN based classifier for COVID-19 detection. The proposed deep learning model helps to detect COVID-19 efficiently at an early stage with minimum cost.

2. The ENResNet is designed for the enhancement of CXR images and incorporates eight consecutive modules/ blocks. The residual images and normalized patches are the input to each block, which produces a feature map.

3. Chest X-ray examination is important for identifying the affected area of the chest/ lung of a COVID-19 patient for proper treatment, including comorbid patients whose chest is already affected. The primary goal of this model is to determine the area of the chest/ lung affected by COVID-19.

4. The proposed model is applied to binary classification (COVID-19 vs. normal) and to the multi-class problem (COVID-19 vs. Pneumonia vs. normal). The proposed model differentiates COVID-19 from Pneumonia (viral/ bacterial) efficiently.

The rest of this paper is organized as follows. Related work is discussed in Section 2. Section 3 presents the mathematical and architectural background. Section 4 describes the proposed deep learning model in detail. Section 5 presents the experimental results and discussion. Finally, conclusions are drawn in Section 6.

2. Related work

Image enhancement is a crucial pre-processing technique in medical imaging for the detection and localization of deformities. Because medical images are often poorly illuminated, much vital information is not clearly visible. Although COVID-19 can be detected from the chest X-ray images of affected patients, medical professionals often cannot distinguish it from ordinary pneumonia because of the poor illumination of CXR images. Enhancement is therefore needed to recover minute details and restore important information, which helps in the early detection of COVID-19. The aim of image enhancement is to improve the image features and the visibility of vital information.

Many researchers have developed models for COVID-19 detection. Artificial neural networks offer an effective way to diagnose a person affected by the Corona Virus when CT scans and clinical observation history are available [35]. CNNs have been found to be the most successful algorithms in terms of accuracy for medical imaging purposes. In an earlier investigation, a CNN based on the Inception network was applied to detect COVID-19 from CXR images [22]. In another recent work, a convolutional neural network (CNN) was developed to detect COVID-19 in CXR or CT images using multi-image augmentation [23]. Karim et al. proposed ‘DeepCOVIDExplainer’ to detect COVID-19 automatically from CXR images [24]. Farooq et al. developed ‘COVID-ResNet’, which uses a pre-trained ResNet-50 architecture under a deep learning framework for COVID-19 detection and also performs multi-class classification [25]. Khan et al. designed ‘CoroNet’ for the detection and diagnosis of COVID-19 from chest X-ray images [26]. Ozturk et al. suggested ‘DarkNet’ for COVID-19 detection from CXR images [27]. All of these deep neural networks achieve good accuracy for COVID-19 detection on CXR datasets, but they do not perform well in multi-class classification. They cannot reliably differentiate COVID-19 from ordinary Pneumonia (viral/ bacterial), since the two have similar symptoms. They also fail to produce good results on noisy images and increase the rate of false positive cases.

Artificial intelligence can be applied extensively in medical imaging, but the lack of shared data can block progress in medical research [36], [28], [13]. A recent work recognizes COVID-19 in patients from radiography images. It uses GAN based synthetic data and several deep learning algorithms, and reports excellent results in comparison with previous methods [29]. In a different design, a GAN with deep transfer learning is used for COVID-19 identification in CXR images. The major objective of that research is to gather the available COVID-19 datasets and then apply the GAN to generate more images, helping the accurate identification of the Corona Virus from chest X-ray images [32]. All the aforementioned deep learning models for COVID-19 detection use raw CXR images directly for classification. CXR and low-dose CT images contain noise and are sometimes blurred, so these models suffer from accuracy and overfitting problems. Their results are not satisfactory for the multi-class problem, since the abnormalities in CXR images of COVID-19, viral pneumonia, and bacterial pneumonia are almost the same. Table 1 and Table 2 list the pros and cons of recently developed deep learning based COVID-19 detection models.

Table 1.

List of related methods of COVID-19 detection from CXR images with pros/ cons.

| Author | Year | Methods with advantages | Drawbacks |
|---|---|---|---|
| Wang et al. [22] | 2020 | Simple CNN based model in a deep learning framework | Low accuracy; applied on limited datasets |
| Purohit et al. [23] | 2020 | Multi-image augmented deep learning model; synthetic images were created to compensate for small datasets | Average accuracy and low F1-score |
| Karim et al. [24] | 2020 | DeepCOVIDExplainer: a multi-class neural ensemble model using gradient-guided class activation maps | Applied on imbalanced datasets; produced average accuracy |
| Farooq et al. [25] | 2020 | COVID-ResNet: a residual neural network based deep learning model for COVID-19 detection | Pre-trained model with fixed image size; not applicable to real-time datasets |
| Khan et al. [26] | 2020 | CoroNet: a 4-class classifier based on the Xception architecture, pre-trained on the ImageNet dataset and trained end-to-end on a purpose-built dataset | Applied on a limited dataset; the model suffers from overfitting on large datasets |
| Ozturk et al. [27] | 2020 | DarkNet: used for real-time COVID detection in both binary and multi-class problems | Good accuracy in the binary problem but lower accuracy in the multi-class problem |
| Wang et al. [28] | 2020 | COVID-Net: a multi-class classification model (normal, pneumonia, and COVID-19) applied on real-time datasets | Weak sensitivity and positive predictive value (PPV) |
| S. Albahli [29] | 2020 | GAN based COVID detection with a synthetic data generator | Sometimes detects viral pneumonia as COVID; low positive predictive value (PPV) |

Table 2.

List of related methods of COVID-19 detection from CXR images with pros/ cons.

| Author | Year | Methods with advantages | Drawbacks |
|---|---|---|---|
| Apostolopoulos & Mpesiana [30] | 2020 | MobileNet (v2): designed on the concept of the residual network for COVID detection | Low sensitivity and specificity values for Inception and Xception; datasets were imbalanced |
| Ucar & Korkmaz [31] | 2020 | SqueezeNet: a pre-trained model for COVID detection that achieves good accuracy on augmented data in less computational time | Poor result (76.3%) on raw data; used only 76 COVID CXR images |
| Loey et al. [32] | 2020 | GAN and deep transfer learning address the overfitting problem while using only 307 original images | Validation accuracy was sometimes higher than test accuracy due to highly augmented datasets |
| Keles et al. [33] | 2021 | COV19-CNNet and COV19-ResNet: hybrid deep learning models using CNN and ResNet architectures for the detection of COVID-19 from CXR; achieved satisfactory results | Used a small number (910) of CXR images in training; the model suffers from a high false positive rate |
| Banerjee et al. [34] | 2021 | COVID-19 detection from an audio dataset using a residual neural network architecture | Dataset built from recordings of COVID-19 and non-COVID-19 coughs; accuracy was not acceptable |

Keles et al. designed a hybrid deep learning model using CNN and ResNet architectures for the detection of COVID-19 from CXR images and achieved satisfactory results [33]. The main drawback of this model is that only 910 CXR images were used, which risks a high false positive rate. Banerjee et al. proposed a deep learning model using a residual network for COVID-19 detection from an audio dataset, created from recordings of COVID-19 and non-COVID-19 coughs [34]. The results of this model are not acceptable, and it suffers from a high false positive rate. All the deep learning based models discussed above suffer from overfitting, false positives, and confusion between ordinary pneumonia and COVID-19. Some existing models also used imbalanced datasets in the training phase. To overcome these drawbacks, we have developed an enhancement based COVID-19 detection model using a residual network architecture in the deep learning framework (ENResNet).

3. Preliminaries

This section presents the preliminaries of the proposed deep learning based (ENResNet) model for COVID-19 detection.

3.1. Image enhancement

X-ray computed tomography (CT) is considered one of the most relevant imaging procedures for medical diagnosis. Low-dose X-ray images suffer from blurred edges and low contrast due to the hardware architecture, and from a low signal-to-noise ratio (SNR) of the projections. Image enhancement is therefore an essential procedure to improve the clarity of foreground objects and remove blurring for object recognition and detection tasks. The primary goal of contrast enhancement is to restore or reconstruct an image into a form that is more suitable for further processing [19], [20].

Let $I$ be a CXR image of size $M \times N$ pixels, having $L$ gray levels varying from $0$ to $L-1$. Then, the enhanced CXR image $I_e$ can be represented as:

$$I_e = T(I)$$

where $T$ is the transformation function that is calculated by the proposed ENResNet model in this investigation.

3.2. Convolutional neural networks

The Convolutional Neural Network (CNN) is the primary component of deep learning for several image processing tasks such as denoising, contrast enhancement, classification, and anomaly detection [37], [38], [17], [18]. Recently, different deep learning models have been implemented, such as CNN, RNN, LSTM, Autoencoder, etc. A CNN can be viewed as a high-level abstraction of the classical artificial neural network (ANN): it is locally connected, with a 2D convolutional structure comprising various filters linked through several affine parameters. Conventionally, two operators, convolution and pooling (average or max), are used in a CNN along with a stride. A sub-window slides over the image from the upper-left corner to the lower-right corner and extracts local features within its receptive field into a new feature map for further processing. We supply a sequence of images with size as input layers through batch normalization, fit an offset for each layer with a kernel of size . In the convolution process, the significant features of the images are extracted by the network using an activation function, which can be determined as [35]:

| (1) |

where, is the sigmoid function. is weight parameters that interconnect between two connected layers with size . The bias unit is and number of filter is k. The CNN includes rich isotropic component similar to pyramid model, like invariant against translation, scaling and slant [37], [39], [19]. CNNs can share parameters from layer to layer in the model of the full network. The W be scaled in size and b with in one layer during training period [18], [20].
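The convolution, activation, and pooling operations described above can be illustrated with a minimal pure-Python sketch. The function names `conv2d_sigmoid` and `max_pool`, the 5×5 toy image, and the 2×2 kernel are illustrative assumptions and are not taken from the paper; the sketch uses a single filter, no padding, and a stride of 1 for the convolution.

```python
import math

def conv2d_sigmoid(image, kernel, bias=0.0):
    """Valid (no padding), stride-1 sliding-window filter followed by a sigmoid.

    Like most deep learning libraries, this computes a cross-correlation
    (the kernel is not flipped).
    """
    k_rows, k_cols = len(kernel), len(kernel[0])
    out_rows = len(image) - k_rows + 1
    out_cols = len(image[0]) - k_cols + 1
    feature_map = []
    for r in range(out_rows):
        row = []
        for c in range(out_cols):
            total = bias
            for i in range(k_rows):
                for j in range(k_cols):
                    total += kernel[i][j] * image[r + i][c + j]
            row.append(1.0 / (1.0 + math.exp(-total)))  # sigmoid activation
        feature_map.append(row)
    return feature_map

def max_pool(feature_map, size=2, stride=2):
    """Keep the maximum of each size x size window, moving by `stride`."""
    pooled = []
    for r in range(0, len(feature_map) - size + 1, stride):
        row = []
        for c in range(0, len(feature_map[0]) - size + 1, stride):
            window = [feature_map[r + i][c + j]
                      for i in range(size) for j in range(size)]
            row.append(max(window))
        pooled.append(row)
    return pooled

# Toy 5x5 "image" whose intensity grows along the diagonal (illustrative only).
image = [[float(r + c) for c in range(5)] for r in range(5)]
kernel = [[1.0, 0.0], [0.0, -1.0]]  # hypothetical 2x2 diagonal-difference filter

features = conv2d_sigmoid(image, kernel)  # 4x4 feature map
pooled = max_pool(features)               # 2x2 pooled feature map
```

A 5×5 input with a 2×2 kernel gives a 4×4 feature map, which 2×2 pooling with stride 2 reduces to 2×2. This is the same sub-window sweep from the upper-left to the lower-right corner that the text describes.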

3.3. Residual neural network

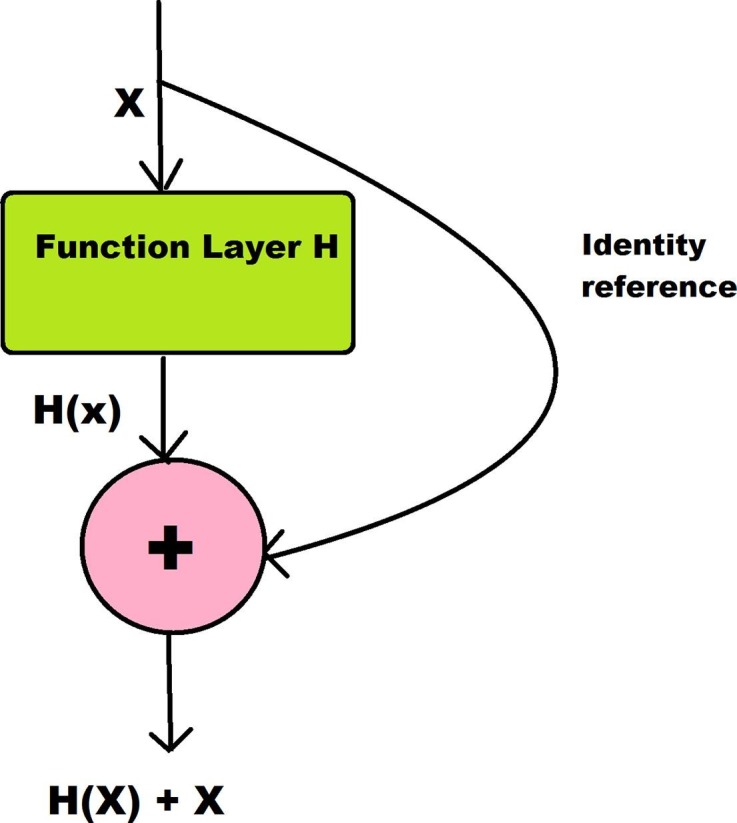

The residual neural network was introduced by He et al. (2016) for image recognition tasks to improve accuracy and reliability [37], [40]. It is designed to force the network to learn residual mappings instead of direct mappings. In a traditional CNN, the weights of each layer are not directly connected to those of earlier layers, resulting in delayed convergence. A residual network provides each layer with two connections: the activations of the previous layer and a direct (identity) reference from the previous layer. The combination of activations within each block and direct references to the next block leads to fast convergence. The network is trained with respect to these reference mappings. Each residual block extracts abstract features efficiently, which makes complex networks easier to train. Fig. 1 shows the architecture of a residual unit with its identity reference. As Fig. 1 shows, an activation vector x is received from the preceding layer of the network. The vector x passes through a transformation function, consisting of convolution, pooling, or strided operations, to give $F(x)$. Finally, the original x is added to the transformed output $F(x)$, so that the block outputs $F(x) + x$ rather than $F(x)$ alone [37], [38], [39]. This operation lets each block optimize its transformation while preserving a reference to the original x. ResNet-34, ResNet-50, and ResNet-101 have been used for various image classification tasks in recent years [39], [41], [40].

Fig. 1.

Residual unit: a building block.
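The residual unit of Fig. 1 can be sketched in a few lines of Python. This is a minimal illustration under simplifying assumptions: small dense weight matrices stand in for the convolutional layers, ReLU is applied after the addition, and the names `residual_unit`, `linear`, and `relu` are hypothetical.

```python
def relu(vector):
    """Element-wise ReLU."""
    return [max(0.0, v) for v in vector]

def linear(weights, vector):
    """Matrix-vector product; stands in for a convolutional layer here."""
    return [sum(w * v for w, v in zip(row, vector)) for row in weights]

def residual_unit(x, w1, w2):
    """Compute ReLU(F(x) + x), where F(x) = W2 * ReLU(W1 * x).

    The '+ x' term is the identity (shortcut) connection, so F(x) and x
    must have the same dimension.
    """
    fx = linear(w2, relu(linear(w1, x)))
    return relu([f + v for f, v in zip(fx, x)])

x = [1.0, 2.0, 3.0]
zero = [[0.0] * 3 for _ in range(3)]
identity = [[1.0 if i == j else 0.0 for j in range(3)] for i in range(3)]
half = [[0.5 if i == j else 0.0 for j in range(3)] for i in range(3)]

# With all weights at zero, F(x) = 0 and the unit passes x through unchanged.
y_identity = residual_unit(x, zero, zero)

# With F(x) = 0.5 * x, the unit outputs x + 0.5 * x.
y_scaled = residual_unit(x, identity, half)
```

The zero-weight case shows why driving the weights towards zero recovers an identity mapping: the shortcut alone carries the input to the output.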

4. Methodology

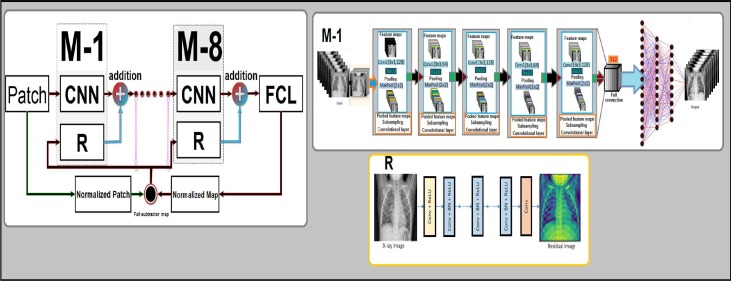

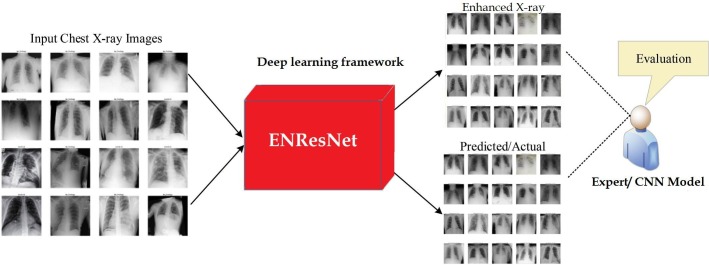

This section describes the design of the proposed modified residual network based enhancement (ENResNet) scheme, under the deep learning framework, for COVID-19 detection from chest X-ray (CXR) images. The proposed ENResNet helps to detect COVID-19 from CXR images and to differentiate COVID-19 from ordinary Pneumonia (viral or bacterial) efficiently and at low cost. It is also used to identify the area of the chest/ lung affected by the spread of the Corona Virus. Fig. 2 shows the block diagram of the designed ENResNet enhancement model. The work is divided into two phases. The first phase enhances the CXR images using ENResNet, which increases the accuracy of COVID-19 detection. The second phase detects COVID-19 from the enhanced CXR images with a simple trained CNN model. This phase handles the multi-class case: COVID-19, Pneumonia, and normal chest images.

Fig. 2.

Block diagram of the proposed enhancement model.

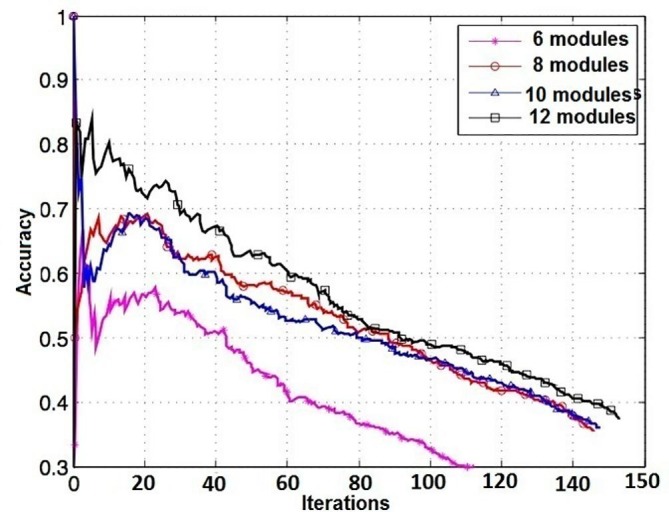

In the first phase of this work, we design a deep learning based enhancement network, ENResNet, using a modified ResNet. First, residual images are produced from the source CXR images by a simple CNN model with batch normalization. This CNN is constructed from convolutional layers with the ReLU activation function, which extract high-level features from the chest images. Second, a module/ block (M) is built that takes image patches and residual images as input and produces a feature map as output. A multi-term cost function, combining the mean square error (MSE) and an edge difference loss function (EDLF), is used in the model to reduce the over-smoothing problem in low-dose CXR images. We connect eight such modules to form the proposed ENResNet based enhancement scheme, and the output feature maps and residual images of each module are fed into the next module. The convergence curves for different numbers of modules are shown in Fig. 3. Third, the final feature maps are obtained using a fully connected layer (FCL). Finally, enhanced CXR images are obtained through the up-sampling process and training. In the second phase, we build a simple CNN based classification model for COVID-19 detection. The CNN uses the softmax function for the multi-class case and the multi-term loss function as its error term. The proposed model helps to detect COVID-19 efficiently at low computational cost. The enhancement model increases multi-class accuracy by helping to differentiate ordinary pneumonia from COVID-19. The steps of the proposed model for CXR image enhancement and COVID-19 detection are as follows:

Step 1: A residual image is created from the source CXR image using an eight-layer residual network and batch normalization.

Step 2: A module/ block (M) is constructed using a six-layer residual network architecture (Conv2D-MaxPool-ReLU), where image patches and residual images are taken as input.

Step 3: Each layer in the module produces a pooled feature map as output from its input, and the resulting feature maps are fed into the next layer as input.

Step 4: Eight such modules (M-1 to M-8) are used to obtain the final feature maps for the enhancement task.

Step 5: A novel ‘multi-term loss’, defined by the mean square error (MSE) and the edge difference loss function (EDLF), is used in each module (M).

Step 6: Enhanced CXR images, visually improved and retaining all minute details, are obtained via the up-sampling process.

Step 7: A simple CNN classification model with eleven layers is designed for COVID-19 detection, taking the enhanced CXR images as input.

Step 8: The classification model comprises both a binary classifier (COVID vs. Normal) and a multi-class classifier (COVID vs. Pneumonia vs. Normal) for COVID-19 detection.
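The data flow of the steps above can be sketched as a short Python skeleton. Only the chaining follows the text: a residual image is computed once, eight modules are applied in sequence with the residual image as a second input, and the result is up-sampled and classified. All function names and the toy stand-in operations are assumptions made for illustration and do not reproduce the actual layers.

```python
def run_pipeline(image, residual_net, modules, upsample, classifier):
    """Chain the stages: residual image -> modules M-1..M-8 -> up-sampling -> classifier."""
    residual = residual_net(image)             # Step 1
    features = image                           # stands in for the normalized patches
    for module in modules:                     # Steps 2 to 4
        features = module(features, residual)  # each output feeds the next module
    enhanced = upsample(features)              # Step 6
    return enhanced, classifier(enhanced)      # Steps 7 and 8

# --- Toy stand-ins (hypothetical, only to make the skeleton executable) ---
calls = []

def toy_residual_net(img):
    mean = sum(img) / len(img)
    return [p - mean for p in img]

def make_toy_module(index):
    def module(features, residual):
        calls.append(index)
        return [f + 0.1 * r for f, r in zip(features, residual)]
    return module

def toy_upsample(features):
    # Nearest-neighbour up-sampling by a factor of 2.
    return [v for v in features for _ in range(2)]

def toy_classifier(enhanced):
    return "label-placeholder"

toy_modules = [make_toy_module(i) for i in range(1, 9)]  # M-1 ... M-8
enhanced, label = run_pipeline([0.2, 0.4, 0.6], toy_residual_net,
                               toy_modules, toy_upsample, toy_classifier)
```

Passing the stages in as callables keeps the skeleton independent of any particular deep learning library, so each stand-in can be swapped for a real layer stack.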

Fig. 3.

Convergence curve with several numbers of module.

4.1. Design of ENResNet for image enhancement

Let $H(x)$ be an underlying mapping to be fit by stacked layers for input $x$. Assume that the input and output have the same dimensions, and let $F(x)$ be the residual function depicted in Fig. 1. Then the residual function (hypothesis) can be defined as [37], [38], [42]:

$$F(x) = H(x) - x \tag{2}$$

Therefore, each layer generates a function that includes the identity reference, and the original mapping becomes $F(x) + x$. Both forms can asymptotically approximate the desired function, but the ease of learning may differ. The training error is computed in each layer from the desired output and the hypothesis. Solving Eq. (2) relies on residual learning and identity mappings: the solver can drive the weights of the multiple nonlinear layers towards zero to approach an identity mapping. In practice, identity mappings are unlikely to be optimal in all cases, but the residual reformulation helps to precondition the problem. The outcome therefore depends on the hypothesis $F(x)$. The hypothesis is designed with a convolutional operator, the ReLU activation function, and batch normalization to obtain a residual image, as illustrated in the block diagram of Fig. 2, and it consists of eight layers as follows:

I. CONV2D Layer #1: Adapted 48 ‘Conv2D’ kernel of size with ‘ReLU’ activation function;

II. CONV2D Layer #2: Utilized 48 ‘Conv2D’ kernel of size with ‘ReLU’ activation function and batch normalization;

III. CONV2D Layer #3: Used 48 ‘Conv2D’ kernel of size with ‘ReLU’ activation function and batch normalization;

IV. CONV2D Layer #4: Adapted 48 ‘Conv2D’ kernel of size along with ‘ReLU’ activation function and batch normalization;

V. CONV2D Layer #5: Utilized 32 ‘Conv2D’ kernel of size along with ‘ReLU’ activation function and batch normalization;

VI. CONV2D Layer #6: Adapted 32 ‘Conv2D’ kernel of size with ‘ReLU’ activation function and batch normalization;

VII. CONV2D Layer #7: Used 32 ‘Conv2D’ kernel of size along with ‘ReLU’ activation function and batch normalization;

VIII. CONV2D Layer #8: Finally applied 48 ‘Conv2D’ kernel with size.

4.1.1. Module construction (M)

To design the residual network based enhancement model, we create eight modules or blocks (M-1 to M-8). Each module is constructed by residual learning with stacked layers. The detailed pictorial representation is given in the block diagram of Fig. 2. Let us define the unit with output $y$ in each module as:

$$y = F(x, \{W_i\}) + x \tag{3}$$

where $y$ and $x$ are the output and input vectors, respectively. The function $F(x, \{W_i\})$ is the residual mapping to be learned, and $W_i$ denotes the weight parameters of the network [37], [38]. The operator + performs element-wise addition with the identity. The ReLU activation function is used in this construction, and the nonlinearity is applied after the addition in each layer. The dimensions of the input vector $x$ and the residual function $F$ must be the same. The form of the residual function is flexible; here we adopt five layers consisting of ‘Conv2D’, ‘ReLU’ and ‘MaxPooling’ operations and one fully connected layer. The function $F$ is realized in the CNN architecture with six layers as:

A. Subsampling Convolutional Layer #1: Applied 128 ‘Conv2D’ kernel of size along with ‘ReLU’ activation function and ‘max_pooling’ with filter;

B. Subsampling Convolutional Layer #2: Used 64 ‘Conv2D’ kernel of size along with ‘ReLU’ activation function and ‘max_pooling’ with filter;

C. Subsampling Convolutional Layer #3: Utilized once again 128 ‘Conv2D’ kernel of size along with ‘ReLU’ activation function and ‘max_pooling’ with filter;

D. Subsampling Convolutional Layer #4: Used 64 ‘Conv2D’ kernel of size along with ‘ReLU’ activation function and ‘max_pooling’ with filter;

E. Subsampling Convolutional Layer #5: Adapted 128 ‘Conv2D’ kernel of size along with ‘ReLU’ activation function and ‘max_pooling’ with filter;

F. Dense Layer #1: 512 neurons, with dropout regularization rate of ;
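The effect of the five subsampling stages on the feature-map size can be traced with a small helper. The filter counts (128, 64, 128, 64, 128) are those listed above; the 2×2 pooling window with stride 2, the size-preserving (‘same’-padded) convolutions, and the 64×64 input patch are assumptions made only for this sketch, since the corresponding sizes are not stated here.

```python
def trace_module_shapes(height, width, filters=(128, 64, 128, 64, 128), pool=2):
    """Return the (height, width, channels) shape after each Conv2D + max-pool stage.

    Assumes each convolution preserves height and width ('same' padding) and
    each max-pool divides them by `pool` (integer division).
    """
    shapes = []
    for n_filters in filters:
        height, width = height // pool, width // pool
        shapes.append((height, width, n_filters))
    return shapes

shapes = trace_module_shapes(64, 64)   # hypothetical 64x64 input patch
last_h, last_w, last_c = shapes[-1]
flattened = last_h * last_w * last_c   # length of the vector fed to the dense layer
```

Under these assumptions each stage halves the spatial resolution, so five stages shrink a 64×64 patch to 2×2 before the dense layer. That loss of resolution is why an up-sampling step is needed to return to the image size.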

The residual function $F$ is defined in each layer and generates a new output in each layer; for example, the output of the first layer is $x_1 = x_0 + F(x_0, W_0)$. The residual function may contain multiple convolutional layers. The element-wise addition is performed on two feature maps, layer by layer. Therefore, Eq. (3) can be expressed throughout the network as:

$$x_{l+1} = x_l + F(x_l, W_l), \quad l = 0, 1, \ldots, N-1 \tag{4}$$

where $x_l$ denotes the values of the features at the $l$-th layer and $W_l$ is the weight parameter at layer $l$ of the $N$ layers in each module. For the first layer, i.e., $l = 0$, $x_0$ is the input vector and $W_0$ represents the initial parameter. The primary aim of the training is to learn the network parameters. A feature map is generated by each module and is passed to the next module as input.

To solve the Eq. (4) in optimal way during training, we can represent the Eq. (4) as ordinary differential equation [38]. Let us consider be the learning rate of the network and put in Eq. (4) then Eq. (4) reduces to

| (5) |

The Eq. (5) can be written after some simple algebraic step:

| (6) |

| (7) |

with initial value Therefore, the Eq. (7) can be solved by the Euler ODE method. Hence, we can solve the ODE system Eq. (7) with boundary value to get the result of the problem of parameters in the network. Here, Eq. (7) can be interpreted as a system of partial differential equations in this image enhancement task. In such a way we have created eight modules in this investigation. The convergent curve with different module number has been depicted in Fig. 3.
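The link between stacked residual updates and the Euler method can be illustrated numerically. The sketch below assumes a scalar state, a fixed step size `step`, and the toy dynamics $dx/dt = -x$, none of which come from the paper. It only shows that repeating $x \leftarrow x + \text{step} \cdot f(x)$ is a forward Euler integration of $dx/dt = f(x)$.

```python
import math

def residual_stack(x0, f, step, n_layers):
    """Apply n_layers residual updates x <- x + step * f(x).

    Each update is one forward Euler step of the ODE dx/dt = f(x).
    """
    x = x0
    for _ in range(n_layers):
        x = x + step * f(x)
    return x

def decay(x):
    """Toy dynamics dx/dt = -x, with exact solution x(t) = x0 * exp(-t)."""
    return -x

exact = math.exp(-1.0)  # x(1) for x0 = 1

# Many small steps: 100 "layers" of step 0.01 integrate up to t = 1.
fine = residual_stack(1.0, decay, step=0.01, n_layers=100)

# Few large steps: 8 "layers" of step 0.125 also reach t = 1, less accurately.
coarse = residual_stack(1.0, decay, step=0.125, n_layers=8)

fine_error = abs(fine - exact)
coarse_error = abs(coarse - exact)
```

Both stacks integrate over the same interval, but the deeper stack with the smaller step tracks the exact solution more closely, which is the usual behaviour of the forward Euler scheme.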

4.1.2. Loss function optimization

Most of the deep learning model uses MSE (mean square error) loss function or loss function which are calculated pixel by pixel difference in image processing task. But, sometimes MSE loss function leads to higher mean absolute error (MAE) or peak signal to noise ratio (PSNR) which are not effective in enhanced images [41]. Sometimes MSE loss function is unable to take higher information from the image since it calculates the average of the differences at the pixel level. So, it is not much effective in low-dose image enhancement tasks and over-smoothing problems occur. To overcome this issue, we have utilized the edge difference loss function (EDLF) in this investigation. The EDLF is calculated using the Laplacian operator which is a sensitive edge detector and the Laplacian operator () has been illustrated as [41]:

| (8) |

The Laplacian operator () has been applied in the convolution kernel of the last layer in each module (M). The convolution operation has been executed on the enhanced image by . Therefore, the EDLF is computed from the MSE of the two feature maps. Let be the output image of the module and be the final output of iteration then MSE loss and EDLF can be determined as [41]:

| (9) |

| (10) |

The MSE loss is the second-order (L2) norm of the difference between the resultant enhanced image and the output image of each module. Similarly, the EDLF is the second-order norm of the difference between their feature maps obtained with the Laplacian operator. Hence the total loss, i.e., the multi-loss function, can be determined using Eq. (9) and Eq. (10) as:

| (11) |

Here, the parameter , known as the super parameter, is used to tune the multi-loss function. Its value in Eq. (11) is obtained heuristically through model tuning and varies across datasets. We have set in this experiment.
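The combination of the two loss terms can be sketched as follows. The sketch assumes the common 4-neighbour Laplacian kernel and an additive weighting `pixel_loss + lam * edge_loss`; neither is confirmed by Eq. (8)-(11) as reproduced here. The value `lam = 0.2` lies in the range listed for the super parameter in Table 3, and all function names are illustrative.

```python
# Assumed 4-neighbour Laplacian kernel (its entries sum to zero).
LAPLACIAN = [[0, 1, 0],
             [1, -4, 1],
             [0, 1, 0]]


def conv2d_valid(img, kernel):
    """2-D convolution without padding (the kernel is symmetric, so no flip is needed)."""
    kh, kw = len(kernel), len(kernel[0])
    h, w = len(img), len(img[0])
    return [[sum(kernel[a][b] * img[i + a][j + b]
                 for a in range(kh) for b in range(kw))
             for j in range(w - kw + 1)]
            for i in range(h - kh + 1)]


def mse(x, y):
    """Mean of squared pixel-wise differences between two equally sized images."""
    diffs = [(p - q) ** 2 for rx, ry in zip(x, y) for p, q in zip(rx, ry)]
    return sum(diffs) / len(diffs)


def multi_term_loss(output, target, lam=0.2):
    """Return (total, pixel_loss, edge_loss) under the assumed additive weighting."""
    pixel_loss = mse(output, target)
    edge_loss = mse(conv2d_valid(output, LAPLACIAN), conv2d_valid(target, LAPLACIAN))
    return pixel_loss + lam * edge_loss, pixel_loss, edge_loss


# Toy 4x4 images: a sharp vertical edge, a brightness-shifted copy, and a blurred ramp.
target = [[0, 0, 9, 9] for _ in range(4)]
shifted = [[v + 1 for v in row] for row in target]
blurred = [[0, 3, 6, 9] for _ in range(4)]

print(multi_term_loss(shifted, target))   # edge term is zero: edges are preserved
print(multi_term_loss(blurred, target))   # edge term dominates: the edge is smoothed away
```

In this toy case a uniform brightness shift leaves the edge term at zero, while the blurred ramp is penalized mainly through the edge term, which is the behaviour the EDLF is meant to add on top of the MSE.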

To optimize the multi-term loss function during training, the cost function is defined as:

| (12) |

where is the cost function to be minimized and is the stride. handles the reconstruction error and is the bias matrix. The hyper-parameter sets the scale of the weight penalty. The error term can be replaced by the multi-loss function defined in Eq. (11). Therefore, Eq. (12) reduces to:

| (13) |

To minimize the cost function , we have used the stochastic gradient descent (SGD) method with the partial derivatives taken with respect to and in Eq. (13). Thus, the multi-term loss function produces better results at lower computational cost.
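A minimal sketch of stochastic gradient descent on a cost with a weight penalty is given below. It does not reproduce Eq. (13); it assumes a one-parameter linear model with the per-sample cost 0.5 * (w*x - y)^2 + 0.5 * beta * w^2. The names `sgd_l2`, `lr`, and `beta`, and the toy data, are hypothetical.

```python
import random


def sgd_l2(samples, lr=0.1, beta=0.01, epochs=200, seed=0):
    """SGD on the per-sample cost 0.5*(w*x - y)^2 + 0.5*beta*w^2 (toy model)."""
    rng = random.Random(seed)
    data = list(samples)
    w = 0.0
    for _ in range(epochs):
        rng.shuffle(data)  # visit samples in a new random order each epoch
        for x, y in data:
            grad = (w * x - y) * x + beta * w  # error gradient plus penalty gradient
            w -= lr * grad
    return w


# Noise-free toy data generated from y = 2x.
samples = [(x, 2.0 * x) for x in (0.2, 0.4, 0.6, 0.8, 1.0)]
w_hat = sgd_l2(samples)

# Closed-form minimizer of the averaged cost, for comparison.
w_star = sum(x * y for x, y in samples) / (sum(x * x for x, _ in samples) + len(samples) * 0.01)
print(f"SGD estimate: {w_hat:.4f}, closed form: {w_star:.4f}")
```

The penalty pulls the estimate slightly below the unpenalized value of 2, which illustrates how the hyper-parameter scales the weight penalty against the error term.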

4.2. Design of CNN for classification

To classify both binary and multi-class cases, we have designed a simple CNN model in a deep learning framework, as depicted in Fig. 9. The input of the CNN is the enhanced CXR images, which are feature vectors from the ENResNet model. The model has been built to classify three classes of enhanced lung X-ray images, namely COVID-19 affected lungs, usual pneumonia lungs, and normal lungs. We build the model for COVID-19 detection from enhanced CXR images using the following eleven-layer sequential CNN architecture:

1. Convolutional Layer #1: Apply 32 kernels (filters), with ‘ReLU’ activation function;
2. Pooling Layer #1: Perform ‘Max-pool’ with a filter and stride ;
3. Convolutional Layer #2: Apply 32 kernels, with ‘ReLU’ activation function;
4. Pooling Layer #2: Again, perform ‘Max-pool’ with a filter and stride ;
5. Convolutional Layer #3: Apply 16 kernels (filters), with ‘ReLU’ activation function;
6. Pooling Layer #3: Perform ‘Max-pool’ with a filter and stride ;
7. Convolutional Layer #4: Apply 16 kernels, with ‘ReLU’ activation function;
8. Pooling Layer #4: Again, perform ‘Max-pool’ with a filter and stride ;
9. Dense Layer #1: neurons, with dropout regularization rate of ;
10. Dense Layer #2: 512 neurons, with dropout regularization rate of ;
11. Dense Layer #3 (Logits Layer): ‘softmax’ function is used with 3 neurons, one for each target class (COVID, Pneumonia, and Normal).

Fig. 9.

Enhanced classification model under deep learning framework.

In this way, we have arranged eight layers in the convolutional stage (four ‘Conv2D’ layers with ‘ReLU’ and four ‘Max-pool’ layers) and three FC layers, a total of eleven layers, to build a simple CNN architecture for the classification task in this investigation. The hyper-parameters of the proposed model are listed in Table 3.

Table 3.

List of hyper-parameters and their ranges/values.

| Hyper-parameter | Ranges/Values | Optimizer | Accuracy |
|---|---|---|---|
| Learning rate | 0.32–0.45 | SGD | 92%-99% |
| Momentum | 0.80–0.95 | SGD | 90%-99% |
| Learning rate decay | | SGD | 90%-99% |
| Number of Epochs | 150 | SGD | 90%-99% |
| Batch size | 16, 32 | * | 95%-99% |
| Dropout rate | 0.30–0.50 | * | 90%-99% |
| Super parameter | 0.20–0.25 | Loss function | 95%-99% |

The model is an eleven-layer sequential network, starting with a convolution layer with an output shape of followed by a pooling layer. Three more pairs of convolution and pooling layers follow. The pooling strategy used is ‘Max Pooling’. The last pooling layer generates a output that is flattened, and dropout is applied after the flattened layer. Lastly, we use one dense layer with input size 6272 and output size 512, and another dense layer (the output layer) with input size 512 and output size 3. The activation function in all convolutional layers is ReLU, and the output layer uses ‘softmax’ activation to classify the images into three classes. The model has total trainable parameters and 0 non-trainable parameters.
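The parameter counts of the fully connected part follow directly from the layer sizes quoted above, as the short sketch below shows. The dense-layer sizes (6272 to 512, 512 to 3) come from the text; the convolution example uses a hypothetical 3x3 kernel and a single input channel, since the kernel size is not recoverable here. The function names are illustrative.

```python
def dense_params(n_in, n_out):
    """Trainable parameters of a fully connected layer: weights plus biases."""
    return n_in * n_out + n_out


def conv2d_params(kernel_h, kernel_w, c_in, c_out):
    """Trainable parameters of a 2-D convolution layer: one bias per filter."""
    return (kernel_h * kernel_w * c_in + 1) * c_out


fc_hidden = dense_params(6272, 512)  # flattened features -> 512 neurons
fc_output = dense_params(512, 3)     # 512 neurons -> 3 class scores
print(fc_hidden, fc_output, fc_hidden + fc_output)

# Hypothetical first convolution: 3x3 kernels, 1 input channel, 32 filters.
print(conv2d_params(3, 3, 1, 32))
```

The two dense layers alone account for about 3.2 million trainable parameters, so they are likely to dominate the total for a network of this size.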

5. Experimental results

This section presents the experiments and validation of the proposed ENResNet model for CXR image enhancement and COVID-19/Pneumonia detection. The benchmark datasets, evaluation metrics, and comparative validation are also described. The designed ENResNet algorithm has been executed using the framework with the Keras API and at the back-end, on an Intel Core CPU at 3.5 GHz with 16 GB of RAM in an Ubuntu -64 bit Linux environment.

5.1. Benchmark datasets

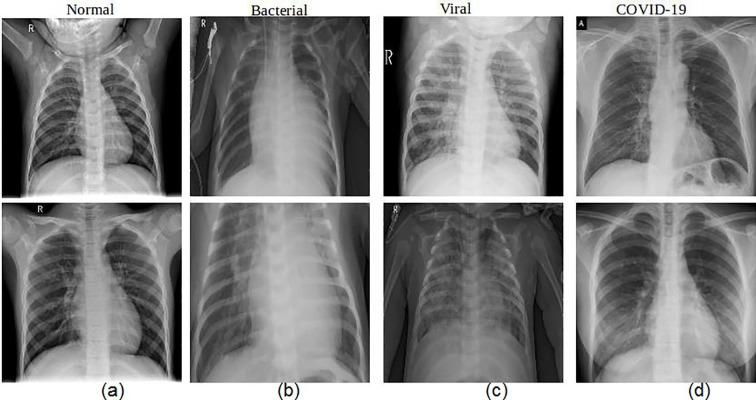

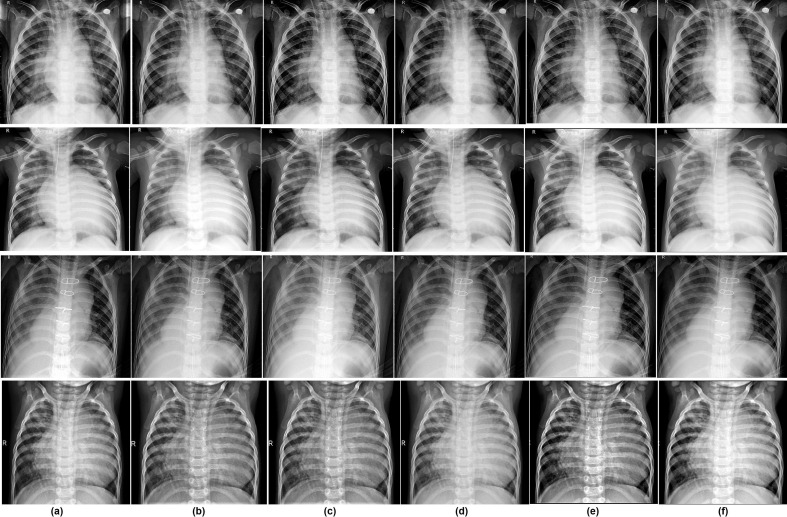

We make an empirical evaluation of the ENResNet model and compare it with other enhancement methods on the ChestXray-NIHCC and Kaggle datasets [43], [28]. The chest X-ray database consists of pneumonia, normal, and COVID-19 X-ray images taken from unique patients. The size of each image is and the images are in PNG format [43], [28], [44]. A team of researchers from Qatar University, Doha, Qatar, and the University of Dhaka, Bangladesh, along with their collaborators from Pakistan and Malaysia and in collaboration with medical doctors, has created a database of chest X-ray images for COVID-19 positive cases along with Normal and Viral Pneumonia images. This dataset of COVID-19, normal, and other lung infection images is released in stages [43], [28], [44]. Fig. 4(a-d) shows sample chest X-ray (CXR) images from the dataset.

Fig. 4.

Sample CXR dataset with COVID-19 and Pneumonia images.

5.2. Comparison methods and evaluation metrics

We have considered five deep learning based image enhancement algorithms for comparison: LLNet, an unsupervised deep autoencoder approach to image enhancement (LLANet) [17]; LightenNet, a CNN based supervised deep learning network for low illuminated image enhancement (LTNNet) [18]; a residual encoder-decoder CNN for CT image enhancement (REDCNN) [19]; a generative adversarial network based image enhancement method using an improved game adversarial loss function (GANAL) [21]; and an intuitionistic fuzzy special set with CNN based enhancement approach (IFSCNN) [20].

Another five deep learning models for COVID-19 detection have been considered for the validation of the proposed ENResNet model: COVID-Net, a deep convolutional neural network design for detection of COVID-19 cases from chest radiography images [28]; a GAN and transfer learning based COVID-19 detection method in a deep learning framework (GANTF) [32]; DarkNet, for automated detection of COVID-19 cases using deep neural networks [27]; COVID-ResNet, a deep learning framework for screening of COVID-19 [25]; and CoroNet, a deep neural network for detection and diagnosis of COVID-19 [26]. The parameters of the baseline methods are set as proposed in their original studies. In the experiments, the performance results of the different baseline methods are generated under the suggested algorithm.

We have evaluated the ENResNet model in two phases: the first phase validates the CXR image enhancement, and the second phase covers COVID-19 detection in the multi-class case. To estimate the quality and effectiveness of the suggested ENResNet model for the low-dose image enhancement task, four popular measurement metrics have been used: ‘mean absolute error’ (MAE) [45], ‘universal quality index’ (UQI) [46], ‘structural similarity’ (SSIM) [45], [46], and ‘linear fuzzy index’ (LFI) [45]. Higher values of these metrics indicate better contrast enhancement, except for MAE [45] and LFI [45], for which lower values are better. For COVID-19 detection, we have considered four performance measures: sensitivity (SEN), specificity (SPE), accuracy, and F1-score [47]. These metrics are computed from the numbers of true positives (TP), false positives (FP), true negatives (TN), and false negatives (FN) [47]. TP and TN are the numbers of correctly classified positive and negative samples. FP and FN are the numbers of samples incorrectly classified as positive and negative, respectively.
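The four detection measures can be computed from the confusion counts with their standard definitions, as in the sketch below. The counts used in the example are hypothetical and are not taken from the experiments; the function name is illustrative.

```python
def classification_metrics(tp, fp, tn, fn):
    """Sensitivity, specificity, accuracy, and F1-score from confusion counts."""
    sensitivity = tp / (tp + fn)          # true positive rate (recall)
    specificity = tn / (tn + fp)          # true negative rate
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    precision = tp / (tp + fp)
    f1 = 2 * precision * sensitivity / (precision + sensitivity)
    return {"SEN": sensitivity, "SPE": specificity, "Accuracy": accuracy, "F1": f1}


# Hypothetical counts for a binary COVID vs. normal test set of 200 images.
scores = classification_metrics(tp=90, fp=5, tn=95, fn=10)
for name, value in scores.items():
    print(f"{name}: {value:.4f}")
```

For the multi-class case, one common convention is to compute these measures per class in a one-vs-rest fashion and then average them; whether the reported averages follow that convention is not stated here.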

5.3. Enhancement result analysis

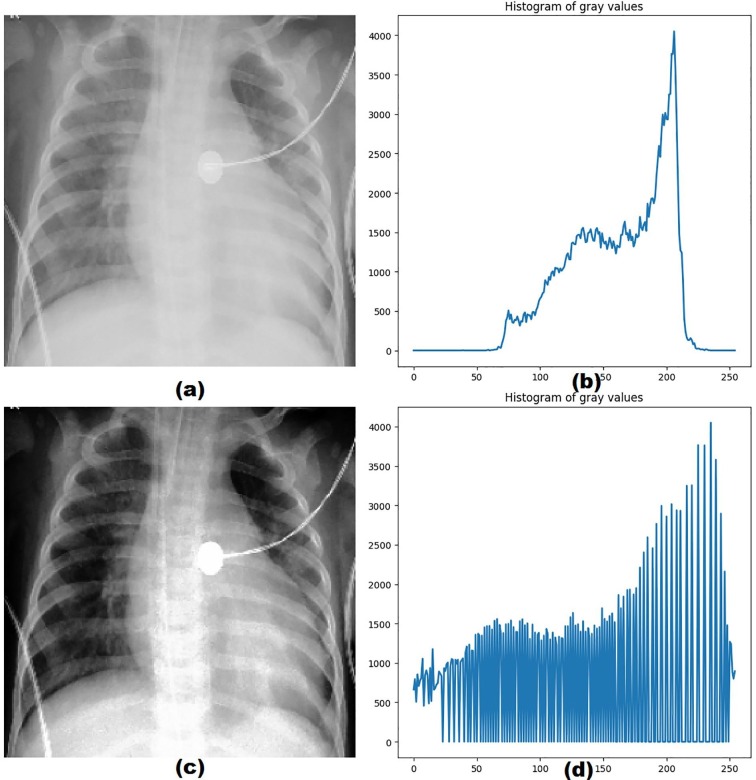

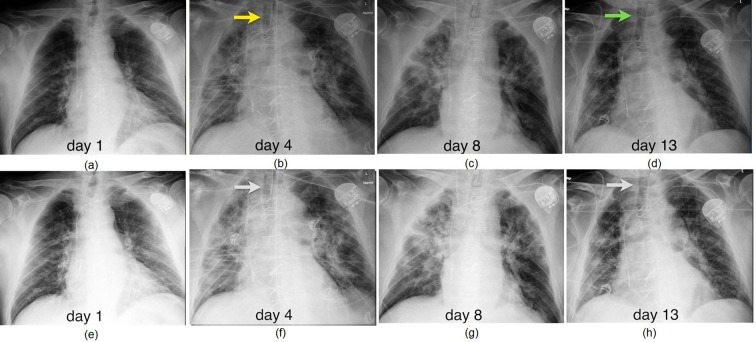

We have used several chest X-ray image datasets [43], [28], [44] to demonstrate the performance of the proposed ENResNet scheme; they cover various parts of the lungs in chest X-rays along with clinical profiles including COVID-19, Pneumonia, and normal. The results of the proposed scheme are shown in Fig. 5 for chest X-ray images of several COVID-19 patients. Fig. 4(a-d) shows original X-ray images covering normal, bacterial pneumonia, viral pneumonia, and COVID-19 cases. The enhanced images are presented in Fig. 5, where the microstructure of the lobar, bronchus, and segmental bronchus is visually improved. Fig. 8 (four rows) shows the resultant images produced by the five existing methods and the proposed ENResNet model. The earlier LLANet and LTNNet models have not enhanced the boundary regions properly in Fig. 8(a)-(b), and some blackish effect is still present in the resultant images. The unsupervised image enhancement algorithms such as REDCNN and GANAL produce more detailed object areas than the LLANet or LTNNet models, as in Fig. 8(c)-(d), but some artifacts are still present close to the lobar region and the edge of the cardiac notch. The proposed ENResNet scheme and the hybrid IFSCNN method increase the accuracy and identify the minute details in the pulmonary area, pulmonary plexus, pulmonary artery, visceral pleura, and parietal pleura, and successfully enhance the microstructural features in Fig. 8(f).

Fig. 5.

Output enhanced CXR images using the proposed model on different patients.

Fig. 8.

Enhanced CXR using different methods: (a) LLANet, (b) LTNNet, (c) REDCNN, (d) GANAL, (e) IFSCNN, and (f) Proposed ENResNet scheme.

Moreover, ENResNet discriminates low-contrast regions with high quality. A comparative view of the enhanced images produced by recently developed deep learning based contrast enhancement approaches and by the proposed ENResNet scheme is given in Fig. 8. The ENResNet network produces the best results in Fig. 8, revealing the lobar, bronchus, and segmental bronchus regions in the CXR images for COVID-19 detection. To verify the image quality after enhancement, we have plotted the histogram of a CXR image before and after enhancement in Fig. 6, which shows a good pixel distribution after enhancement. The five existing methods also increase the image quality to different extents, whereas the proposed ENResNet scheme preserves the fine details without over-exposure or over-smoothing. Fig. 7 shows the CXR images of a COVID-19 patient over several observations (first row) and after enhancement using the proposed ENResNet model (second row). The affected areas are clearly visible, and the changes in these areas are perceptible, as shown in Fig. 7(e-h). ENResNet thus achieves a satisfactory enhanced image that can help differentiate COVID-19 from usual pneumonia with all abnormalities.

Fig. 6.

COVID-19 CXR with histogram: (a) CXR before enhancement, (b) histogram of (a), (c) CXR after enhancement, (d) histogram of (c).

Fig. 7.

CXR images of a COVID-19 patient over several observations and the corresponding images enhanced by the proposed model: (a) day-1, (b) day-4, (c) day-8, (d) day-13, (e) enhanced image of day-1, (f) enhanced image of day-4, (g) enhanced image of day-8, and (h) enhanced image of day-13.

5.4. Comparative study on CXR enhancement

To quantitatively validate the suggested ENResNet scheme for COVID-19 detection, we have considered four evaluation metrics: mean absolute error (MAE), universal quality index (UQI), structural similarity index measure (SSIM), and linear fuzzy index (LFI) [45], [46], which are reported in Table 4. Higher values indicate a better algorithm and higher image quality for every metric except MAE and LFI. All metric values have been computed after enhancement with respect to the original image. Table 4 shows the average score of each metric for the proposed ENResNet model and the existing deep neural network based image enhancement approaches. It shows that the proposed ENResNet model produces the best score on all metrics in the case of noisy images. The LLANet and LTNNet models can efficiently enhance color images but are unable to enhance low-dose X-ray images properly, so their UQI and SSIM values are lower. LLANet and LTNNet produce approximately the same scores on all metrics for CXR image enhancement, as given in Table 4. REDCNN and GANAL have been designed for low-dose image enhancement, but they do not enhance the boundary regions properly and are unable to reproduce overlapped texture properly, as seen in Fig. 8. REDCNN and GANAL produce almost the same scores in Table 4. The hybrid IFSCNN has been designed for mammography images and gives good results for mammogram enhancement, but it is unable to efficiently enhance the affected areas of CXR images, as shown in Fig. 8. The metric values of the IFSCNN model are close to those of the proposed ENResNet model in Table 4. The proposed residual network based ENResNet model also converges fast, due to the creation of residual images in each module, compared with other models, as shown in Table 5.

Table 4.

Performance comparison of the various baseline methods.

| Metric | LLANet | LTNNet | REDCNN | GANAL | IFSCNN | ENResNet |
|---|---|---|---|---|---|---|
| MAE | 0.2298 | 0.2182 | 0.1846 | 0.1831 | 0.1724 | 0.1501 |
| UQI | 0.8902 | 0.8876 | 0.9038 | 0.9068 | 0.9181 | 0.9224 |
| SSIM | 0.9184 | 0.9198 | 0.9259 | 0.9287 | 0.9298 | 0.9346 |
| LFI | 0.1781 | 0.1668 | 0.1588 | 0.1473 | 0.1461 | 0.1328 |

Table 5.

Average Time Complexity analysis in training for several models with training samples.

| Methods | Patch size | Epochs | Times | Patch size | Epochs | Times |
|---|---|---|---|---|---|---|
| LLANet | | 50 | 62.71 | | 100 | 101.34 |
| LTNNet | | 50 | 64.36 | | 100 | 103.22 |
| REDCNN | | 50 | 59.28 | | 100 | 98.42 |
| GANAL | | 50 | 58.21 | | 100 | 97.78 |
| IFSCNN | | 50 | 36.76 | | 100 | 69.24 |
| ENResNet | | 50 | 45.48 | | 100 | 86.53 |

Table 4 lists the MAE, UQI, SSIM, and LFI values of the enhanced CXR images for the proposed ENResNet model and the five deep learning based image enhancement models. ENResNet produces high UQI and SSIM values, which signifies that the suggested scheme obtains the best enhancement results for COVID-19 detection. ENResNet also produces smaller MAE and LFI values in Table 4 than the existing methods. The fuzzy set based hybrid IFSCNN model sometimes obtains metric values close to those of the proposed ENResNet scheme, but ENResNet achieves good accuracy in the case of noisy images. Overall, the proposed ENResNet model outperforms the state-of-the-art methods in terms of all metrics.
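Two of the enhancement metrics can be sketched in a few lines. The sketch below uses the standard definitions of MAE and of the universal quality index, with the UQI evaluated once over the whole image rather than averaged over sliding windows; that simplification, the function names, and the toy pixel values are assumptions for illustration.

```python
def mean(values):
    return sum(values) / len(values)


def mae(x, y):
    """Mean absolute error between two flattened images."""
    return mean([abs(a - b) for a, b in zip(x, y)])


def uqi(x, y):
    """Universal quality index computed globally (single window).

    Undefined when both images are constant, because the variances are zero.
    """
    mx, my = mean(x), mean(y)
    var_x = mean([(a - mx) ** 2 for a in x])
    var_y = mean([(b - my) ** 2 for b in y])
    cov = mean([(a - mx) * (b - my) for a, b in zip(x, y)])
    return 4 * cov * mx * my / ((var_x + var_y) * (mx ** 2 + my ** 2))


# Toy flattened images with intensities in [0, 1]; the second is a brightened copy.
original = [0.1, 0.2, 0.3, 0.4]
brighter = [v + 0.25 for v in original]

print(f"MAE = {mae(original, brighter):.4f}")
print(f"UQI = {uqi(original, brighter):.4f}")
```

A UQI of 1 is reached only when the two images are identical, so the brightness shift alone lowers the index even though the structure is unchanged.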

Medical experts carrying out a clinical diagnosis based on enhanced CXR images can easily detect COVID-19, recognize the condition of usual pneumonia in a patient, and clearly characterize the precondition of the respiratory organ. The original CXR images shown in Fig. 4(a-d) are low-grade images with very poor contrast, from which it is really difficult to decide whether there is COVID-19 or pneumonia growth. Fig. 5 shows a clear improvement compared with the original CXR images. Similarly, COVID-19 is not easily recognized in Fig. 7(a)-(d), but after enhancement it can be easily detected in Fig. 7(e)-(h). Thus, the side-by-side presentation can be beneficial in judging a COVID-19 or usual pneumonia deformity from CXR images.

5.5. Enhancement scheme in COVID-19 detection

Medical imaging is challenging for anomaly detection or disease recognition, and sometimes does not produce reliable results due to hazy information in poorly illuminated medical X-ray images. After image enhancement, the contrast of the images is improved. Many features of the CXR image, such as the lobe area, pleural fluid, arterial blood gases, lung abscesses, and pneumonia factors in chest tomography, become clearly visible. In the second phase of this work, a simple CNN based classifier has been designed for COVID-19 detection, with the enhanced CXR images taken as input. The classification model is depicted in Fig. 9.

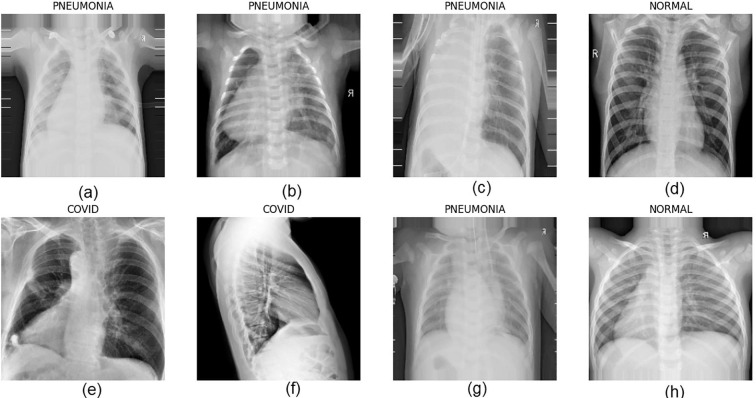

We have designed a two-way classification model for COVID-19 detection from enhanced CXR images. In the first observation, binary classification has been performed on enhanced CXR images to detect COVID-19 and normal CXRs. In the second observation, we have designed a multi-class (3-class) classification model, which predicts COVID-19, usual Pneumonia, and normal CXR images. The classification results on enhanced CXR images are shown in Fig. 10 for all three classes. We have split the dataset into as training set and as validation set. -fold cross validation has been used to train the proposed model. We have trained the ENResNet model for 50 epochs, 100 epochs, and sometimes 150 epochs, with patch sizes and respectively. After enhancement, the abstract features are clearly captured by the model for the classification task.
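The cross-validation protocol can be sketched as an index split. Since the number of folds is not recoverable here, the sketch uses a hypothetical k = 5 on ten sample indices; the function name and the seed are likewise illustrative.

```python
import random


def k_fold_splits(n_samples, k, seed=0):
    """Return k (train_indices, validation_indices) pairs covering all samples."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)          # reproducible shuffle
    folds = [indices[i::k] for i in range(k)]     # k nearly equal folds
    splits = []
    for i, validation in enumerate(folds):
        train = [j for m, fold in enumerate(folds) if m != i for j in fold]
        splits.append((train, validation))
    return splits


# Hypothetical setting: 10 images, 5 folds.
splits = k_fold_splits(n_samples=10, k=5)
for fold_id, (train, validation) in enumerate(splits, start=1):
    print(f"fold {fold_id}: {len(train)} training, {len(validation)} validation")
```

Each image is used for validation exactly once, so the reported scores can be averaged over the folds.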

Fig. 10.

Enhanced classification result: (a) Pneumonia, (b) Pneumonia, (c) Pneumonia, (d) Normal, (e) COVID19, and (f) COVID19, (g) Pneumonia, and (h) Normal.

We have considered four classification performance metrics, namely sensitivity (SEN), specificity (SPE), accuracy, and F1-score, which are computed from the confusion matrix. We have trained the model for binary classification (COVID vs. normal) and multi-class classification (COVID vs. Pneumonia vs. normal) separately. The scores of all metrics for the binary and multi-class cases are given in Table 6 and Table 7, respectively. The suggested ENResNet model achieves a classification accuracy of 0.9974 for binary classification, i.e., the COVID and normal categories (Table 6). The proposed model also obtains a classification accuracy of 0.9842 in the multi-class case, classifying COVID, Pneumonia, and normal (Table 7), where all performance metric values are listed for detailed analysis.

Table 6.

Average score of classification metrics for binary class case of ENResNet with baseline classifier.

| Classifiers | SEN | SPE | Accuracy | F1-score |
|---|---|---|---|---|
| COVID-Net | 0.9824 | 0.9784 | 0.9713 | 0.9522 |
| GANTF | 0.9861 | 0.9795 | 0.9761 | 0.9571 |
| DarkNet | 0.9914 | 0.9856 | 0.9808 | 0.9654 |
| COVID-ResNet | 0.9923 | 0.9884 | 0.9825 | 0.9701 |
| CoroNet | 0.9948 | 0.9893 | 0.9881 | 0.9736 |
| ENResNet | 0.9986 | 0.9916 | 0.9974 | 0.9822 |

Table 7.

Average score of classification metrics for multi-class case of ENResNet with baseline classifier.

| Classifiers | SEN | SPE | Accuracy | F1-score |
|---|---|---|---|---|
| COVID-Net | 0.8012 | 0.7856 | 0.7863 | 0.7614 |
| GANTF | 0.7904 | 0.7886 | 0.7881 | 0.7601 |
| DarkNet | 0.8864 | 0.8722 | 0.8651 | 0.8236 |
| COVID-ResNet | 0.8883 | 0.8784 | 0.8714 | 0.8381 |
| CoroNet | 0.8879 | 0.8801 | 0.8782 | 0.8402 |
| ENResNet | 0.9946 | 0.9827 | 0.9842 | 0.9731 |

To validate the efficiency of the proposed method, five existing deep learning models for COVID-19 detection have been considered as state-of-the-art methods. The proposed model achieves the highest F1-score, 0.9822, among all models in the binary classification problem. All other methods also perform well in terms of sensitivity, specificity, and accuracy for binary classification, as in Table 6. The suggested ENResNet model also outperforms all methods on the multi-class problem in terms of all evaluation metrics, as shown in Table 7. ENResNet achieves 0.9842 accuracy, whereas DarkNet and CoroNet obtain 0.8651 and 0.8782 accuracy, respectively, for the multi-class problem. The proposed model obtains an F1-score of 0.9731, the highest among all models. Hence, the suggested ENResNet model obtains the best result in comparison with the state-of-the-art models for both binary and multi-class problems.

Image pre-processing such as enhancement plays an essential role in the COVID-19 epidemic situation, so that radiologists can diagnose COVID-19 or usual pneumonia from CXR images and decide on the treatment and isolation stages of the disease. Traditional image enhancement algorithms are unable to reveal all the features associated with COVID-19 in the lungs. Deep learning models, in contrast, are sensitive enough to detect COVID-19 in the lungs and highlight the features that differentiate COVID-19 from usual pneumonia. The CXR images of COVID-19 patients are confirmed positive by the real-time reverse transcription-polymerase chain reaction (RT-PCR) test. After enhancement of the CXR images by the ENResNet model, the classifier can easily detect ground-glass opacities (GGO), consolidation areas, and nodular opacities, which are associated with COVID-19 patients. Radiologists can observe the bilateral, lower lobe, and peripheral involvement in the lung images of a COVID-19 patient, and ENResNet can localize such lesions efficiently in early stages. Early diagnosis can prevent the transmission of the Corona Virus, and ENResNet can recognize COVID-19 from early symptoms. After enhancement, a radiologist can predict the disease in early stages or opt for automated detection. The proposed model detects COVID-19 very fast, which reduces the waiting time for results in the health care unit, so it can be used in hospitals or diagnostic centers. ENResNet outperforms the baseline methods on both binary (COVID vs. normal) and multi-class (COVID vs. Pneumonia vs. normal) classification problems. It can reduce the confusion between usual pneumonia and COVID-19, which in turn reduces the false positive rate.

5.6. Superiority of the model

Table 5 reports the training time of the proposed image enhancement based ENResNet model for COVID-19 detection along with the state-of-the-art methods. The suggested ENResNet scheme reduces the computational cost with respect to the other existing methods, except the fuzzy based hybrid IFSCNN method. We have trained the model with different patch sizes, and , for 50 and 100 epochs respectively, as shown in Table 5. In both cases, the proposed ENResNet is faster than the existing deep learning based image enhancement models other than IFSCNN. In addition, the proposed ENResNet has produced excellent results for COVID-19 detection in both binary and multi-class classification problems. In the multi-class case, ENResNet achieves satisfactory results in terms of accuracy and F1-score in comparison with existing methods. Hence, the proposed ENResNet model maintains the accuracy needed to highlight the minute details in CXR images after enhancement, which is immensely helpful for further classification.
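The ordering of the methods by training time can be checked directly from the values in Table 5, as in the short sketch below. The time unit is not stated in the table, so only relative comparisons are made; the variable and function names are illustrative.

```python
# Average training times from Table 5: (50 epochs, 100 epochs); unit not stated.
train_time = {
    "LLANet": (62.71, 101.34),
    "LTNNet": (64.36, 103.22),
    "REDCNN": (59.28, 98.42),
    "GANAL": (58.21, 97.78),
    "IFSCNN": (36.76, 69.24),
    "ENResNet": (45.48, 86.53),
}


def rank_by_time(times, column):
    """Method names sorted from fastest to slowest for the given column."""
    return sorted(times, key=lambda name: times[name][column])


rank_50 = rank_by_time(train_time, 0)
rank_100 = rank_by_time(train_time, 1)
print("50 epochs :", rank_50)
print("100 epochs:", rank_100)

# Relative training time of ENResNet against the slowest baseline at 50 epochs.
ratio = train_time["ENResNet"][0] / train_time["LTNNet"][0]
print(f"ENResNet / LTNNet at 50 epochs: {ratio:.3f}")
```

In both settings IFSCNN is the fastest method and ENResNet the second fastest, which matches the exception noted in the text.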

5.7. Discussion

The pneumonia deformity in both COVID-19 and viral/bacterial pneumonia leads to pixel-level changes in the lung X-ray image. The proposed ENResNet model improves the visual quality of chest X-rays in such a way that physicians can accurately identify COVID-19 and pneumonia deformity in lung X-ray images to guide treatment. The outcome of the model can be summarized with the following observations:

1. Under-exposure and over-exposure problems do not appear in the enhanced image; consequently, the model produces images of natural visual quality.
2. The residual images and feature maps in each module of the proposed scheme help to quantify the imprecision of the gray levels of pixel intensities, enabling extraction of very minute changes in the affected area of the chest.
3. The proposed model helps to identify the affected area of the chest/lungs of a COVID-19 patient.
4. There are almost no artifacts in the contrast-enhanced images, and the model is computationally efficient.
5. The module based ENResNet in a deep learning framework reduces the features from the image and can deal well with noisy datasets.
6. The proposed scheme efficiently differentiates COVID-19 from usual pneumonia through the abstract features in each module.

The enhancement results of the proposed algorithm have been achieved using a large low-dose training dataset. Recent statistics show that asymptomatic patients are increasing rapidly worldwide, and they are suspected to be in serious condition with 80%-90% of chest areas affected. Some patients show COVID-19 symptoms but actually have usual Pneumonia. The proposed model helps to identify the area of the chest affected by the Corona Virus and how it differs from usual Pneumonia. The proposed algorithm reduces the time complexity of COVID-19 diagnosis at low cost.

6. Conclusion

This paper has devised a deep learning based chest X-ray tomography enhancement approach using a modified ResNet and has improved the image quality for detecting the structural areas of COVID-19 and usual pneumonia malformation in X-ray images. The proposed ENResNet method can differentiate COVID-19 from usual pneumonia (viral/bacterial) and efficiently identify the affected areas in the chest in early stages at low cost. Eight modules have been constructed in the design of ENResNet, which extracts abstract features from CXR images during training. The benefit of the suggested ENResNet model is that it increases the accuracy of COVID-19 detection through enhancement in both binary and multi-class classification stages. It also reduces the misclassification error in COVID-19 detection and increases the accuracy in multi-class classification beyond that of other existing methods. The residual images in each module support texture analysis of CXR images, which leads to effective detection of COVID-19 and usual pneumonia. The feature map of each module is used as input for the next module, which results in fast convergence and in turn reduces the time complexity for classification. Both subjective and quantitative results demonstrate COVID-19 detection in early stages, in comparison with state-of-the-art methods.

In future, we aim to propose a hybrid model that can detect changes in the affected chest according to the mutation of the Corona Virus and identify severe cases automatically. We will extend this work under a fuzzy framework for detecting severe stages of COVID-19. The authors are working in this direction.

CRediT authorship contribution statement

Swarup Kr Ghosh: Conceptualization, Methodology, Software, Writing – original draft, Data curation, Writing – review & editing. Anupam Ghosh: Validation, Visualization, Investigation, Supervision.

Declaration of Competing Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

References

- 1.H. Syrjala, M. Broas, P. Ohtonen, A. Jartti, E. Pääkkö, Chest magnetic resonance imaging for pneumonia diagnosis in outpatients with lower respiratory tract infection, Eur. Respiratory J. 49 (1). [DOI] [PubMed]

- 2.J.R. Zech, M.A. Badgeley, M. Liu, A.B. Costa, J.J. Titano, E.K. Oermann, Confounding variables can degrade generalization performance of radiological deep learning models, arXiv preprint arXiv:1807.00431.

- 3.W.H. Organization, et al., Coronavirus disease 2019 (covid-19): situation report, 72.

- 4.S.H. Kassani, P.H. Kassasni, M.J. Wesolowski, K.A. Schneider, R. Deters, Automatic detection of coronavirus disease (covid-19) in x-ray and ct images: A machine learning-based approach, arXiv preprint arXiv:2004.10641. [DOI] [PMC free article] [PubMed]

- 5.Guan W.-J., Ni Z.-Y., Hu Y., Liang W.-H., Ou C.-Q., He J.-X., Liu L., Shan H., Lei C.-L., Hui D.S., et al. Clinical characteristics of coronavirus disease 2019 in china. New Engl. J. Med. 2020;382(18):1708–1720. doi: 10.1056/NEJMoa2002032. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Musher D.M., Thorner A.R. Community-acquired pneumonia. N. Engl. J. Med. 2014;371(17):1619–1628. doi: 10.1056/NEJMra1312885. [DOI] [PubMed] [Google Scholar]

- 7.Tolksdorf K., Buda S., Schuler E., Wieler L.H., Haas W. Influenza-associated pneumonia as reference to assess seriousness of coronavirus disease (covid-19) Eurosurveillance. 2020;25(11):2000258. doi: 10.2807/1560-7917.ES.2020.25.11.2000258. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Grasselli G., Pesenti A., Cecconi M. Critical care utilization for the covid-19 outbreak in lombardy, italy: early experience and forecast during an emergency response. Jama. 2020;323(16):1545–1546. doi: 10.1001/jama.2020.4031. [DOI] [PubMed] [Google Scholar]

- 9.Huang C., Wang Y., Li X., Ren L., Zhao J., Hu Y., Zhang L., Fan G., Xu J., Gu X., et al. Clinical features of patients infected with 2019 novel coronavirus in wuhan, china. Lancet. 2020;395(10223):497–506. doi: 10.1016/S0140-6736(20)30183-5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.M.A. Shereen, S. Khan, A. Kazmi, N. Bashir, R. Siddique, Covid-19 infection: Origin, transmission, and characteristics of human coronaviruses, J. Adv. Res. [DOI] [PMC free article] [PubMed]

- 11.G. Lippi, M. Plebani, B.M. Henry, Thrombocytopenia is associated with severe coronavirus disease 2019 (covid-19) infections: a meta-analysis, Clin. Chim. Acta. [DOI] [PMC free article] [PubMed]

- 12.Huang C., Wang Y., Li X., Ren L., Zhao J., Hu Y., Zhang L., Fan G., Xu J., Gu X., et al. Clinical features of patients infected with 2019 novel coronavirus in wuhan, china. Lancet. 2020;395(10223):497–506. doi: 10.1016/S0140-6736(20)30183-5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Shi F., Wang J., Shi J., Wu Z., Wang Q., Tang Z., He K., Shi Y., Shen D. Review of artificial intelligence techniques in imaging data acquisition, segmentation, and diagnosis for covid-19. IEEE Rev. Biomed. Eng. 2020;14:4–15. doi: 10.1109/RBME.2020.2987975. [DOI] [PubMed] [Google Scholar]

- 14.Borghesi A., Maroldi R. Covid-19 outbreak in italy: experimental chest x-ray scoring system for quantifying and monitoring disease progression. La radiologia medica. 2020;1 doi: 10.1007/s11547-020-01200-3. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.W.H. Self, D.M. Courtney, C.D. McNaughton, R.G. Wunderink, J.A. Kline, High discordance of chest x-ray and computed tomography for detection of pulmonary opacities in ed patients: implications for diagnosing pneumonia, Am. J. Emerg. Med. 31(2) (2013) 401–405. [DOI] [PMC free article] [PubMed]

- 16.G.D. Rubin, C.J. Ryerson, L.B. Haramati, N. Sverzellati, J.P. Kanne, S. Raoof, N.W. Schluger, A. Volpi, J.-J. Yim, I.B. Martin, et al., The role of chest imaging in patient management during the covid-19 pandemic: a multinational consensus statement from the fleischner society, Chest. [DOI] [PMC free article] [PubMed]

- 17.Lore K.G., Akintayo A., Sarkar S. Llnet: A deep autoencoder approach to natural low-light image enhancement. Pattern Recogn. 2017;61:650–662. [Google Scholar]

- 18.Li C., Guo J., Porikli F., Pang Y. Lightennet: a convolutional neural network for weakly illuminated image enhancement. Pattern Recogn. Lett. 2018;104:15–22. [Google Scholar]

- 19.Chen H., Zhang Y., Kalra M.K., Lin F., Chen Y., Liao P., Zhou J., Wang G. Low-dose ct with a residual encoder-decoder convolutional neural network. IEEE Trans. Med. Imaging. 2017;36(12):2524–2535. doi: 10.1109/TMI.2017.2715284. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.S.K. Ghosh, B. Biswas, A. Ghosh, Development of intuitionistic fuzzy special embedded convolutional neural network for mammography enhancement, Comput. Intell.

- 21.Xu C., Cui Y., Zhang Y., Gao P., Xu J. Image enhancement algorithm based on generative adversarial network in combination of improved game adversarial loss mechanism. Multimedia Tools Appl. 2019:1–16.

- 22.S. Wang, B. Kang, J. Ma, X. Zeng, M. Xiao, J. Guo, M. Cai, J. Yang, Y. Li, X. Meng, et al., A deep learning algorithm using ct images to screen for corona virus disease (covid-19), MedRxiv.

- 23.K. Purohit, A. Kesarwani, D.R. Kisku, M. Dalui, Covid-19 detection on chest x-ray and ct scan images using multi-image augmented deep learning model, BioRxiv.

- 24.M. Karim, T. Döhmen, D. Rebholz-Schuhmann, S. Decker, M. Cochez, O. Beyan, et al., Deepcovidexplainer: Explainable covid-19 predictions based on chest x-ray images, arXiv preprint arXiv:2004.04582.

- 25.M. Farooq, A. Hafeez, Covid-resnet: A deep learning framework for screening of covid19 from radiographs, arXiv preprint arXiv:2003.14395.

- 26.Khan A.I., Shah J.L., Bhat M.M. Coronet: A deep neural network for detection and diagnosis of covid-19 from chest x-ray images. Comput. Methods Programs Biomed. 2020;105581 doi: 10.1016/j.cmpb.2020.105581.

- 27.Ozturk T., Talo M., Yildirim E.A., Baloglu U.B., Yildirim O., Acharya U.R. Automated detection of covid-19 cases using deep neural networks with x-ray images. Comput. Biol. Med. 2020;103792 doi: 10.1016/j.compbiomed.2020.103792.

- 28.Wang L., Lin Z.Q., Wong A. Covid-net: A tailored deep convolutional neural network design for detection of covid-19 cases from chest x-ray images. Scientific Rep. 2020;10(1):1–12. doi: 10.1038/s41598-020-76550-z.

- 29.Albahli S. Efficient gan-based chest radiographs (cxr) augmentation to diagnose coronavirus disease pneumonia. Int. J. Med. Sci. 2020;17(10):1439–1448. doi: 10.7150/ijms.46684.

- 30.Apostolopoulos I.D., Mpesiana T.A. Covid-19: automatic detection from x-ray images utilizing transfer learning with convolutional neural networks. Phys. Eng. Sci. Med. 2020;43(2):635–640. doi: 10.1007/s13246-020-00865-4.

- 31.Ucar F., Korkmaz D. Covidiagnosis-net: Deep bayes-squeezenet based diagnosis of the coronavirus disease 2019 (covid-19) from x-ray images. Med. Hypotheses. 2020;140 doi: 10.1016/j.mehy.2020.109761.

- 32.Loey M., Smarandache F., Khalifa N.E.M. Within the lack of chest covid-19 x-ray dataset: A novel detection model based on gan and deep transfer learning. Symmetry. 2020;12(4):651.

- 33.Keles A., Keles M.B., Keles A. Cov19-cnnet and cov19-resnet: diagnostic inference engines for early detection of covid-19. Cogn. Comput. 2021:1–11. doi: 10.1007/s12559-020-09795-5.

- 34.A. Banerjee, A. Nilhani, A residual network based deep learning model for detection of covid-19 from cough sounds, arXiv preprint arXiv:2106.02348.

- 35.Mei X., Lee H.-C., Diao K.-Y., Huang M., Lin B., Liu C., Xie Z., Ma Y., Robson P.M., Chung M., et al. Artificial intelligence–enabled rapid diagnosis of patients with covid-19. Nat. Med. 2020:1–5. doi: 10.1038/s41591-020-0931-3.

- 36.Albahli S. A deep ensemble learning method for effort-aware just-in-time defect prediction. Future Internet. 2019;11(12):246.

- 37.He K., Zhang X., Ren S., Sun J. Deep residual learning for image recognition. Proceedings of the IEEE conference on computer vision and pattern recognition. 2016; pp. 770–778.

- 38.B. Chang, L. Meng, E. Haber, L. Ruthotto, D. Begert, E. Holtham, Reversible architectures for arbitrarily deep residual neural networks, arXiv preprint arXiv:1709.03698.

- 39.Valentin M.B., Bom C.R., Coelho J.M., Correia M.D., Márcio P., Marcelo P., Faria E.L. A deep residual convolutional neural network for automatic lithological facies identification in Brazilian pre-salt oilfield wellbore image logs. J. Petrol. Sci. Eng. 2019;179:474–503.

- 40.Z. Hui, X. Gao, X. Wang, Lightweight image super-resolution with feature enhancement residual network, Neurocomputing.

- 41.Liu P., Wang G., Qi H., Zhang C., Zheng H., Yu Z. Underwater image enhancement with a deep residual framework. IEEE Access. 2019;7:94614–94629.

- 42.Wu Y., Ji X., Ji W., Tian Y., Zhou H. Casr: a context-aware residual network for single-image super-resolution. Neural Comput. Appl. 2019:1–16.

- 43.K. Data, Chest x-ray images (covid-19) (2021). URL: https://www.kaggle.com/tawsifurrahman/covid19-radiography-database.

- 44.J. Paul, M. Paul, L. Dao, K. Roth, Covid-19 image data collection: Prospective predictions are the future: preprint.

- 45.Wang Z., Bovik A.C. A universal image quality index. IEEE Signal Process. Lett. 2002;9(3):81–84.

- 46.Yang C., Zhang J.Q., Wang X.R., Liu X. A novel similarity based quality metric for image fusion. Inf. Fusion. 2008;13(4):600–612.

- 47.Ghosh S.K., Ghosh A., Chakrabarti A. Vea: Vessel extraction algorithm by active contour model and a novel wavelet analyzer for diabetic retinopathy detection. Int. J. Image Graphics. 2018;18(02):1850008.