Abstract

Background:

Inpatient falls, many resulting in injury or death, are a serious problem in hospital settings. Existing fall risk assessment tools, such as the Morse Fall Scale, give a risk score based on a set of factors but do not necessarily signal which factors are most important for predicting falls. Artificial intelligence (AI) methods provide an opportunity to improve predictive performance while also identifying the most important risk factors associated with hospital-acquired falls. We can glean insight into these risk factors by applying classification tree, bagging, random forest, and adaptive boosting methods to Electronic Health Record (EHR) data.

Objective:

The purpose of this study was to use tree-based machine learning methods to determine the most important predictors of inpatient falls, and to validate each model's predictive performance via cross-validation.

Materials and methods:

A case-control study was designed using EHR and electronic administrative data collected between January 1, 2013 and October 31, 2013 in 14 medical/surgical units. The data contained 38 predictor variables comprising patient characteristics, admission information, assessment information, clinical data, and organizational characteristics. Classification tree, bagging, random forest, and adaptive boosting methods were used to identify the most important factors of inpatient fall risk through variable importance measures. Sensitivity, specificity, and area under the ROC curve (AUROC) were computed via ten-fold cross-validation and compared via pairwise t-tests. These methods were also compared to a univariate logistic regression of the Morse Fall Scale total score.

Results:

In terms of AUROC, bagging (0.89), random forest (0.90), and boosting (0.89) all outperformed the Morse Fall Scale (0.86) and the classification tree (0.85). No significant differences were found among bagging, random forest, and adaptive boosting at a significance level of 0.05. History of falls, age, Morse Fall Scale total score, quality of gait, unit type, mental status, and number of high risk fall risk increasing drugs (FRIDs) were the most important features for predicting inpatient fall risk.

Conclusions:

Machine learning methods have the potential to identify the most relevant and novel factors for detecting hospitalized patients at risk of falling. This could improve the quality of patient care and more fully support decision-making by healthcare providers and organizational leadership. Such models could help nurses enhance their judgment when caring for patients at risk for falls. Our study may also serve as a reference for the development of AI-based prediction models of other iatrogenic conditions. To our knowledge, this is the first study to report the importance of patient, clinical, and organizational features based on the use of AI approaches.

1. BACKGROUND AND SIGNIFICANCE

Patient falls are a leading cause of injury and mortality in hospital settings internationally [1–4]. It is estimated that in the United States (US) one million falls occur in hospitals annually, with an associated direct medical cost of $50 billion [5,6]. Fifty percent of inpatient falls result in injury, ten percent result in severe injury, and one percent result in death [7,8]. Patient falls can be largely prevented if important factors associated with these adverse events are known [9].

Risk of falling is commonly measured using assessments such as the Morse Fall Scale, the St. Thomas’s risk assessment tool in falling elderly inpatients (STRATIFY), and Hendrich’s High-Risk Fall Model [10–12]. These tools require clinician time for assessment and manual data entry, contributing to the clinician documentation burden [13,14]. These instruments also have low specificity, which makes it difficult to decide where to focus fall prevention tactics in the hospital setting [15]. Furthermore, the few features captured in these assessments focus primarily on intrinsic risk factors. They are also constrained by the clinical and methodological knowledge of the 1980s and 1990s, when they were developed. Advancements in data science have strengthened investigators’ ability to use data captured from electronic health records (EHRs) and electronic administrative systems to develop robust prediction models [16].

The volume of clinical and administrative data captured in electronic hospital systems during routine patient care is growing [17]. These data offer opportunities to improve the quality of inpatient care and fall prevention practices [18]. They may also contain many features that could support a generalizable fall prediction model. Reductions in hospital fall rates have mostly been modest, so this type of data holds much promise [19,20]. However, large amounts of data pose methodologic challenges for traditional statistical approaches, which are typically applied to test the effects of a few features. When the assumptions of traditional statistics cannot be met, machine learning techniques can screen a multitude of factors from big data and can handle nonlinear interactions [21].

Advances in computing technology can increase the prospects of using EHR and electronic administrative data to identify hospitalized patients at risk of falling, without added burden on clinicians [22,23]. Machine learning methods, including random forest and adaptive boosting, have emerged as powerful techniques that can accurately predict clinical outcomes and identify important predictors [24–26]. An advantage of these tree-based methods is that they are more easily explained than other AI methods such as deep learning [27]. Robust model validation techniques such as cross-validation can help estimate the prediction error on unseen data [28]. Statistical models for fall prediction exist, but their use is limited by bias attributable to inadequate sample sizes, missing data, and failure to account for overfitting [29–36]. Automated methods such as machine learning can identify previously unknown, plausible factors that could more fully explain the mechanisms of patient falls.

The objective of this study was to apply automated machine learning methods to identify the importance of known and unknown hospital inpatient fall risk factors, while also validating the prediction performance of the models on training and testing data sets.

2. MATERIALS AND METHODS

2.1. Study Setting, Design, and Ethical Considerations

This study used EHR data from the University of Florida’s (UF) Integrated Data Repository (IDR) and administrative records from UF Health Shands (curated by QuadraMed Co., Plano, TX). We included 14 medical/surgical units of a tertiary care hospital in the southeastern US. EHR and administrative data were collected between January 1, 2013 and October 31, 2013 for patients who were at least 21 years of age on January 1, 2013. We excluded 14 patients who were hospitalized on a transition unit.

This case-control study identified risk factors for patient falls. Cases were all patients who experienced a fall event during hospitalization. Controls were patients who did not experience a fall event during their hospitalization but were at risk of falling. Each case was matched with two randomly selected controls whose hospitalizations overlapped with the case’s by at least one day. Risk of falls was measured by the Morse Fall Scale, which has been demonstrated to be reliable [38,39].
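The matching rule can be made concrete with a short sketch. This is a minimal Python illustration, not the authors’ implementation (the analyses were run in R). The `Stay` record, its field names, and the choice to sample controls without replacement across cases are all hypothetical assumptions. The text specifies only that each case received two randomly selected controls whose stays overlapped by at least one day.

```python
import random
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Stay:
    """Hypothetical hospitalization record; field names are illustrative only."""
    patient_id: str
    admit: date
    discharge: date
    fell: bool


def overlaps(a: Stay, b: Stay) -> bool:
    """True when two stays share at least one calendar day (bounds inclusive)."""
    return a.admit <= b.discharge and b.admit <= a.discharge


def match_controls(stays, n_controls=2, seed=0):
    """Map each case's patient_id to up to n_controls overlapping non-fallers.

    Assumption: a control is used for at most one case (sampling without
    replacement across cases); the source does not say how reuse was handled.
    """
    rng = random.Random(seed)
    cases = [s for s in stays if s.fell]
    pool = [s for s in stays if not s.fell]
    used = set()
    matches = {}
    for case in cases:
        eligible = [c for c in pool
                    if c.patient_id not in used and overlaps(case, c)]
        chosen = rng.sample(eligible, min(n_controls, len(eligible)))
        used.update(c.patient_id for c in chosen)
        matches[case.patient_id] = [c.patient_id for c in chosen]
    return matches


stays = [
    Stay("F1", date(2013, 3, 1), date(2013, 3, 5), True),
    Stay("N1", date(2013, 3, 4), date(2013, 3, 8), False),
    Stay("N2", date(2013, 2, 25), date(2013, 3, 1), False),  # overlaps on Mar 1 only
    Stay("N3", date(2013, 4, 1), date(2013, 4, 2), False),   # no overlap
]
print(match_controls(stays))
```

Fixing the random seed makes the control selection reproducible. A real implementation would also need a rule for cases with fewer than two eligible controls.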

This study was approved by the Institutional Review Board of the University of Florida (protocol #201600423). The original data were de-identified in compliance with the US Health Insurance Portability and Accountability Act (HIPAA) [40], using the expert determination method [41].

2.2. Model Predictors and Outcome

UF Health’s electronic incident reporting system was used to confirm which patients fell during their hospitalization. Some patients had multiple fall events, which may have occurred across multiple admissions during the study timeframe; for these patients, one event was randomly selected. Variables included patient characteristics (e.g., age and sex), admission information (e.g., hospital unit), assessment information (e.g., the Morse Fall Scale and mobility assessment), clinical data (e.g., the Charlson Index), and staffing information (e.g., registered nurse staffing). Missing values were imputed. This study extends the work of Choi et al. [37] by adding the Charlson comorbidity index, nurse skill mix, percentage of nurses certified, percentage of nurses with a bachelor’s degree or higher, weekday/weekend shift, day/night shift, middle/end of shift, and the nurse staffing ratio. The variables used in this study are listed in Table 1, and their definitions are given in Lucero [42].

Table 1.

Distribution of patient, clinical, and organizational features among fallers, non-fallers, and both fallers and non-fallers

| Item | Fallers (n = 272) | Non-Fallers (n = 542) | Total<sup>a</sup> (n = 814) |
|---|---|---|---|
| Patient Characteristics, n (%) | | | |
| Male | 139 (51%) | 258 (48%) | 397 (49%) |
| Age (Mean, SD) | 56.8 (14.9) | 58.3 (17.0) | 57.8 (16.3) |
| Medications, n (%) | | | |
| High dose of high risk FRIDs<sup>b</sup> | 121 (44%) | 138 (25%) | 259 (32%) |
| Number of high risk FRIDs<sup>b</sup> (Mean, SD) | 4.9 (4.1) | 3.4 (3.5) | 3.9 (3.8) |
| Morse Fall Scale (MFS), n (%) | | | |
| History of falls | 244 (90%) | 146 (27%) | 390 (48%) |
| Presence of a secondary diagnosis | 263 (97%) | 496 (92%) | 759 (93%) |
| Ambulatory aids | | | |
| Crutches, cane, or walker | 87 (32%) | 87 (16%) | 174 (21%) |
| Furniture | 13 (5%) | 6 (1%) | 19 (2%) |
| Use of an IV/heparin lock | 264 (97%) | 516 (95%) | 780 (96%) |
| Gait/Transferring | | | |
| Weak | 153 (56%) | 162 (30%) | 315 (39%) |
| Impaired | 59 (22%) | 55 (10%) | 114 (14%) |
| Impaired mental status | 136 (50%) | 88 (16%) | 224 (28%) |
| MFS total score (Mean, SD) | 79.3 (17.0) | 51.3 (19.7) | 60.7 (23.0) |
| Medical Conditions and Indicators of Health Status, n (%) | | | |
| Heart failure | 92 (34%) | 172 (32%) | 264 (32%) |
| Visual or language impairment | 43 (16%) | 35 (6%) | 78 (10%) |
| Hypoglycemic event | 17 (6%) | 26 (5%) | 43 (5%) |
| Uncontrolled diabetes mellitus | 38 (14%) | 42 (8%) | 80 (10%) |
| Impaired Mobility | 204 (75%) | 398 (73%) | 602 (74%) |
| Confusion | 111 (41%) | 88 (16%) | 199 (24%) |
| Alcohol withdrawal | 17 (6%) | 9 (2%) | 26 (3%) |
| Hemoglobin Level (g/dL) (Mean, SD) | 10.4 (2.2) | 11.1 (2.3) | 10.8 (2.3) |
| Orthopedic surgery | 6 (2%) | 18 (3%) | 24 (3%) |
| Hypotension | 56 (21%) | 99 (18%) | 155 (19%) |
| Physical Therapy initiation | 139 (51%) | 148 (27%) | 287 (35%) |
| Charlson Comorbidity Index<sup>c</sup> (Mean, SD) | 3.5 (3.1) | 2.6 (2.8) | 2.9 (2.9) |
| Dizziness or Vertigo | 33 (12%) | 46 (8%) | 79 (10%) |
| Hallucinations<sup>f</sup> | – | – | – |
| Visual Impairment | 5 (2%) | 16 (3%) | 21 (3%) |
| Hearing Loss | 18 (7%) | 11 (2%) | 29 (4%) |
| Language Impairment | 20 (7%) | 19 (4%) | 39 (5%) |
| Parkinson’s Disease<sup>f</sup> | – | 9 (2%) | 10 (1%) |
| Seizure Disorders | 60 (22%) | 50 (9%) | 110 (14%) |
| Organizational characteristics | | | |
| Hospital Unit Type, n (%) | | | |
| Cardiology/CV telemetry | 20 (7%) | 69 (13%) | 89 (11%) |
| Medicine 1 | 25 (9%) | 44 (8%) | 69 (8%) |
| Medicine 2 | 29 (11%) | 52 (10%) | 81 (10%) |
| Medicine 3 | 34 (13%) | 39 (7%) | 73 (9%) |
| Medicine 4 | 15 (6%) | 61 (11%) | 76 (9%) |
| Vascular/ENT/Tele medicine | 18 (7%) | 50 (9%) | 68 (8%) |
| Neurology/Burn/Plastics/GI medicine | 27 (10%) | 43 (8%) | 70 (9%) |
| Neurosurgery | 31 (11%) | 34 (6%) | 65 (8%) |
| Oncology | 11 (4%) | 20 (4%) | 31 (4%) |
| Bone marrow transplant | 12 (4%) | 14 (3%) | 26 (3%) |
| Trauma/Lung transplant | 14 (5%) | 31 (6%) | 45 (6%) |
| Orthopedics | 12 (4%) | 30 (6%) | 42 (5%) |
| General/GI surgery | 10 (4%) | 25 (5%) | 35 (4%) |
| Urology | 14 (5%) | 30 (6%) | 44 (5%) |
| Nurse Skill Mix<sup>d</sup> | 71% | 72% | 71% |
| Percent Nurses Certified | 22% | 23% | 23% |
| Percent Nurses with Bachelor of nursing | 48% | 47% | 47% |
| Weekday (Weekday vs. Weekend shift) | 205 (75%) | 417 (77%) | 622 (76%) |
| Day (Day vs. Night shift) | 138 (51%) | 304 (56%) | 442 (54%) |
| Middle of Shift (Middle vs. End of shift) | 135 (50%) | 260 (48%) | 395 (49%) |
| Staffing Ratio<sup>e</sup> | | | |
| 0.95 < Ratio < 1.05 [Baseline] | 60 (22%) | 135 (25%) | 195 (24%) |
| Ratio < 0.85 | 68 (25%) | 139 (26%) | 207 (25%) |
| 0.85 < Ratio < 0.95 | 69 (25%) | 140 (26%) | 209 (26%) |
| Ratio > 1.05 | 75 (28%) | 128 (24%) | 203 (25%) |

<sup>a</sup> Fallers and non-fallers combined.

<sup>b</sup> FRID: fall risk increasing drug.

<sup>d</sup> Calculated as the proportion of registered nurses on a shift.

<sup>e</sup> Calculated as the ratio of actual registered nurses to the recommended registered nurses on a shift.

<sup>f</sup> Cells with an en-dash indicate a count of less than 5.
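The two staffing features defined in the footnotes can be sketched as follows. This is a hypothetical Python illustration, and several details are assumptions. The function names are ours. The skill-mix denominator (all nursing staff on the shift) is assumed. Table 1 uses strict inequalities, so the handling of ratios exactly equal to 0.85, 0.95, or 1.05 is also assumed.

```python
def skill_mix(rn_count: int, nursing_staff_count: int) -> float:
    """Proportion of registered nurses on a shift.

    Assumption: the denominator is all nursing staff on the shift.
    """
    if nursing_staff_count <= 0:
        raise ValueError("nursing_staff_count must be positive")
    return rn_count / nursing_staff_count


def staffing_ratio(actual_rns: float, recommended_rns: float) -> float:
    """Ratio of actual to recommended registered nurses on a shift."""
    if recommended_rns <= 0:
        raise ValueError("recommended_rns must be positive")
    return actual_rns / recommended_rns


def staffing_category(ratio: float) -> str:
    """Bin a staffing ratio into the four Table 1 categories.

    Assumption: exact boundary values go to the higher bin, except 1.05,
    which is kept in the baseline bin.
    """
    if ratio < 0.85:
        return "Ratio < 0.85"
    if ratio < 0.95:
        return "0.85 < Ratio < 0.95"
    if ratio <= 1.05:
        return "0.95 < Ratio < 1.05 [Baseline]"
    return "Ratio > 1.05"


print(staffing_category(staffing_ratio(4, 5)))  # Ratio < 0.85
```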

In this study, we applied tree-based machine learning methods (a single classification tree, bagging, random forest, and adaptive boosting) to identify the features most predictive of patient falls [43–47]. The Gini index was used as the splitting criterion for the single classification tree to identify the hierarchical structure of important features. We also calculated variable importance values for all features used by the bagging, random forest, and boosting methods, and listed the most important features. For the bagging and random forest approaches, the permutation importance of each feature was expressed as a proportion of the largest value [45]. For adaptive boosting, the relative influence of each feature was expressed as a proportion of the largest value [48]. A description of each machine learning method and the variable importance assessment is provided in Appendix A. We compared the performance of the machine learning methods to a univariate logistic regression model of the Morse Fall Scale total score. To account for the possibility of multicollinearity among hospital units, we compared the results of a multivariate regression model of all features with those of a random effects model and a generalized estimating equation multivariate regression model.
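The sketch below makes three of these ideas concrete:

- the Gini impurity decrease used to choose a split;
- a generic permutation importance, the mean drop in a performance metric when one feature's values are shuffled;
- the rescaling of raw importances to a proportion of the largest value.

It is a minimal, standard-library Python illustration under our own assumptions, not the R code the authors used. Implementations such as R’s `randomForest` package compute permutation importance on out-of-bag samples, whereas this sketch uses whatever data set is passed in. The toy model, data, and feature names are hypothetical.

```python
import random
from collections import Counter


def gini(labels):
    """Gini impurity: 1 minus the sum of squared class proportions."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def split_gain(parent, left, right):
    """Decrease in Gini impurity from splitting `parent` into `left` and `right`."""
    n = len(parent)
    children = len(left) / n * gini(left) + len(right) / n * gini(right)
    return gini(parent) - children


def permutation_importance(predict, X, y, metric, col, n_repeats=10, seed=0):
    """Mean drop in `metric` after randomly shuffling column `col` of X."""
    rng = random.Random(seed)
    baseline = metric(y, predict(X))
    drops = []
    for _ in range(n_repeats):
        values = [row[col] for row in X]
        rng.shuffle(values)
        X_perm = [row[:col] + [v] + row[col + 1:] for row, v in zip(X, values)]
        drops.append(baseline - metric(y, predict(X_perm)))
    return sum(drops) / n_repeats


def relative_importance(raw):
    """Express each importance as a proportion of the largest one."""
    top = max(raw.values())
    if top <= 0:
        raise ValueError("largest importance must be positive")
    return {name: value / top for name, value in raw.items()}


def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def predict(rows):
    """Stand-in for a fitted model: predicts a fall whenever column 0 is 1."""
    return [row[0] for row in rows]


# Toy data: column 0 is a hypothetical "history of falls" flag, column 1 is constant.
X = [[0, 5], [1, 5], [0, 5], [1, 5], [1, 5], [0, 5]]
y = [0, 1, 0, 1, 1, 0]

raw = {f"feature_{c}": permutation_importance(predict, X, y, accuracy, c) for c in (0, 1)}
print(split_gain(y, [0, 0, 0], [1, 1, 1]))  # 0.5: a perfect split of a 50/50 node
print(raw)
```

Shuffling the constant column cannot change any prediction, so its importance is exactly zero. This is why permutation importance ranks features by how much the model relies on them.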

We produced the receiver operating characteristic (ROC) curve for each of the four tree-based models as well as for the Morse Fall Scale total score. We also calculated the sensitivity, specificity, and area under the ROC curve (AUROC), with their respective confidence intervals, using ten-fold cross-validation. Sensitivity and specificity were calculated at the cut-point of each ROC curve that maximizes the Youden index [49–51]. Pairwise t-tests were used to assess differences in sensitivity, specificity, and AUROC among the predictive models. The t-tests were corrected for the inflated Type I error that arises when they are applied to cross-validation results [52]. All comparisons were made at a significance level of 0.05.
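A minimal sketch of this evaluation step follows. It assumes a list of predicted probabilities and 0/1 fall labels, for example out-of-fold predictions pooled across the ten folds. That pooling is only one possible design; the text does not say whether statistics were pooled or averaged per fold. AUROC is computed as the Mann-Whitney probability that a randomly chosen faller scores higher than a randomly chosen non-faller. The cutoff is the observed score that maximizes Youden’s J = sensitivity + specificity - 1. Function names and toy data are illustrative.

```python
def auroc(scores, labels):
    """Probability that a random faller outscores a random non-faller (ties count 0.5)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def sens_spec(scores, labels, cutoff):
    """Sensitivity and specificity when score >= cutoff is classified as a faller."""
    tp = sum(1 for s, y in zip(scores, labels) if y == 1 and s >= cutoff)
    fn = sum(1 for s, y in zip(scores, labels) if y == 1 and s < cutoff)
    tn = sum(1 for s, y in zip(scores, labels) if y == 0 and s < cutoff)
    fp = sum(1 for s, y in zip(scores, labels) if y == 0 and s >= cutoff)
    return tp / (tp + fn), tn / (tn + fp)


def youden_cutoff(scores, labels):
    """Return (cutoff, sensitivity, specificity) maximizing J = sens + spec - 1."""
    best = None
    for cutoff in sorted(set(scores)):
        sens, spec = sens_spec(scores, labels, cutoff)
        j = sens + spec - 1
        if best is None or j > best[0]:
            best = (j, cutoff, sens, spec)
    return best[1:]


scores = [0.95, 0.90, 0.80, 0.70, 0.60, 0.55, 0.30, 0.10]
labels = [1, 1, 1, 0, 1, 1, 0, 0]
print(auroc(scores, labels))          # 13/15, about 0.867
print(youden_cutoff(scores, labels))  # cutoff 0.55: sensitivity 1.0, specificity 2/3
```

The pairwise AUROC loop is quadratic in sample size, which is fine for a sketch. Rank-based formulas are preferable for large data.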

To determine which variables were the most important features for each machine learning model, we compared the AUROC of the full model with that of the same machine learning technique using only the K variables with the highest variable importance measures. A t-test was performed to compare the AUROC measures. We reported the smallest K for which there was no significant difference in AUROC between the model containing all 38 features and the model containing only the top K features.
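The top-K search can be sketched as below. The text states only that the t-tests were corrected for cross-validation [52]. This sketch assumes the widely used Nadeau and Bengio corrected resampled t-test, which inflates the variance of the per-fold differences by the factor 1/k + n_test/n_train (1/10 + 1/9 for ten-fold cross-validation). It then compares |t| with the two-sided 5% critical value of Student’s t with 9 degrees of freedom (about 2.262). Inputs are hypothetical per-fold AUROC lists. As in the text, "no difference" here means failing to reject the null hypothesis, not demonstrated equivalence.

```python
import math
from statistics import mean, variance

# Two-sided 5% critical value of Student's t with 9 degrees of freedom;
# valid only for ten-fold cross-validation.
T_CRIT_DF9 = 2.262


def corrected_paired_t(auc_a, auc_b, test_train_ratio=1 / 9):
    """Corrected resampled t statistic for per-fold AUROCs (assumed Nadeau-Bengio form)."""
    diffs = [a - b for a, b in zip(auc_a, auc_b)]
    k = len(diffs)
    var = variance(diffs)
    if var == 0:
        return 0.0 if mean(diffs) == 0 else math.copysign(math.inf, mean(diffs))
    return mean(diffs) / math.sqrt((1 / k + test_train_ratio) * var)


def smallest_k(full_aucs, reduced_aucs_by_k, crit=T_CRIT_DF9):
    """Smallest K whose top-K model is not significantly different from the full model."""
    for k in sorted(reduced_aucs_by_k):
        if abs(corrected_paired_t(full_aucs, reduced_aucs_by_k[k])) < crit:
            return k
    return None  # no reduced model matched the full model


# Hypothetical per-fold AUROCs for the full 38-feature model and two top-K models.
full = [0.90, 0.88, 0.91, 0.89, 0.90, 0.87, 0.92, 0.89, 0.90, 0.88]
noise = [0.01, -0.01] * 5
reduced = {
    2: [f - 0.05 + e for f, e in zip(full, noise)],  # clearly worse
    4: [f + e for f, e in zip(full, noise)],         # same on average
}
print(smallest_k(full, reduced))  # 4
```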

All statistical analyses and graphs were generated using R (version 3.5.1) [53].

3. RESULTS

We identified a total of 272 patients who fell (cases) and matched them to 542 patients who did not fall (controls) during their hospitalization. A set of 38 patient, clinical, and administrative features was included in each of the machine learning methods. Hemoglobin level was the only feature with missing data, affecting 3.7% of cases and 4.2% of controls. Missing hemoglobin values were imputed with the median hemoglobin level. Overall summary statistics are provided in Table 1 and are described by unit in Appendices C1–C14 of the Supplementary Materials.
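Median imputation as described can be sketched in a few lines. Here `None` marks a missing hemoglobin value, and the function name and values are hypothetical. The text does not specify where the median comes from. One option is to compute it within each cross-validation training fold rather than on the full sample, which keeps held-out data from influencing the imputed values.

```python
from statistics import median


def impute_median(values):
    """Replace None entries with the median of the observed values; return (filled, median)."""
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("no observed values to compute a median from")
    fill = median(observed)
    return [fill if v is None else v for v in values], fill


hemoglobin = [10.4, None, 11.8, 9.6, None, 12.1, 11.0]  # g/dL, illustrative values
filled, fill_value = impute_median(hemoglobin)
print(fill_value)  # 11.0
print(filled)
```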

3.1. Analysis of important factors

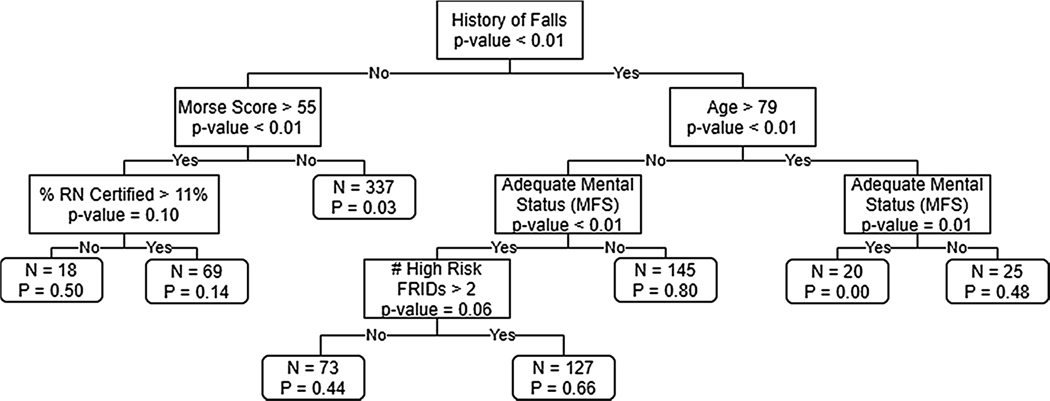

Fig. 1 depicts the results of the single classification tree analysis. Six features were automatically selected for the hierarchical structure. In descending order, these were history of falls, Morse Fall Scale total score, age, percentage of registered nurses with specialty certification, mental status, and number of high risk fall risk increasing drugs (FRIDs).

Fig. 1.

Classification tree of fall risk factors and number of patients affected at each node.

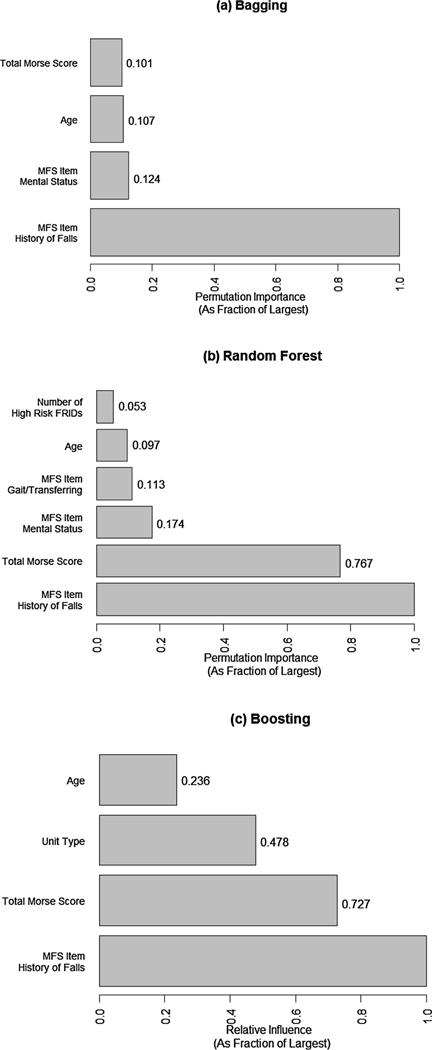

Variable importance graphs for the bagging, random forest, and adaptive boosting methods appear in Fig. 2. We used t-tests to compare AUROC between the models with all 38 variables and the models containing only the K variables with the highest variable importance measures. The smallest K with no difference in AUROC was 4 for the bagging and boosting methods and 6 for the random forest method. Among the top features, history of falls exhibited the greatest relative importance to patient falls across the three approaches. Patient age and Morse Fall Scale total score were important in all three approaches. Mental status was important in two of the models. Morse Fall Scale gait/transferring, hospital unit type, and the number of high risk FRIDs were each important in one. The variable importance measures of all 38 variables for each of the three methods are provided in Appendix B.

Fig. 2.

Variable importance graphs of the most important features for bagging, random forest, and adaptive boosting tree-based machine learning methods

3.2. Model evaluation

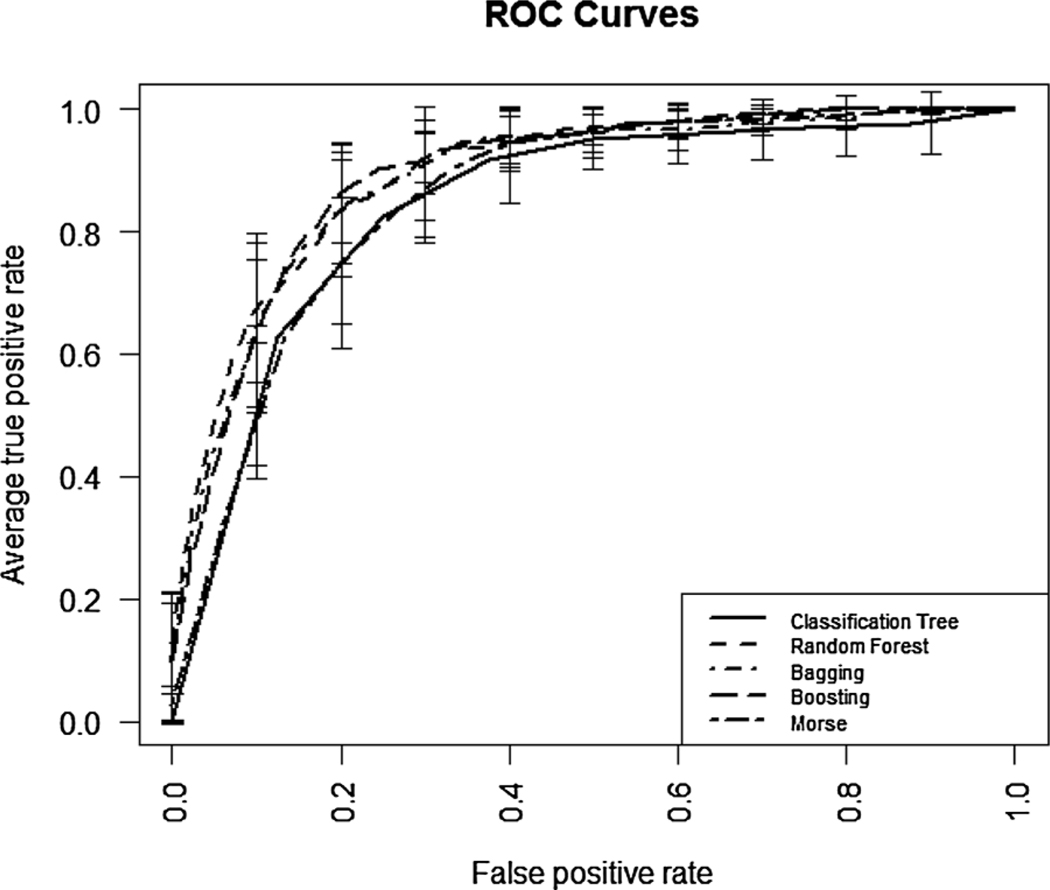

The sensitivity, specificity, AUROC, and their 95% confidence intervals for each predictive model are presented in Table 2. The bagging approach yielded the most sensitive model (0.79), while the Morse Fall Scale total score yielded the least sensitive (0.58). Conversely, the Morse Fall Scale total score produced the most specific model (0.87). It was followed by adaptive boosting, random forest, bagging, and the single classification tree (0.86, 0.86, 0.84, and 0.78, respectively). In terms of AUROC (Fig. 3), the random forest method showed the highest discriminatory ability (0.90). It was followed by adaptive boosting, bagging, the Morse Fall Scale total score, and the single classification tree (0.89, 0.89, 0.86, and 0.85, respectively). We conducted pairwise comparisons of the performance measures among the five approaches. The Morse Fall Scale total score had lower sensitivity than each of the other four methods, and lower AUROC than the three ensemble methods (bagging, random forest, and boosting). The single classification tree had lower specificity than each of the other four predictive models, and lower AUROC than the three ensemble methods. Thus, the single classification tree and the Morse Fall Scale total score had the poorest discriminatory ability, and the Morse Fall Scale total score also had the lowest sensitivity.

Table 2.

Sensitivity, Specificity, AUROC, and Calibration Cutoff Probability

| Statistic (95% Confidence Interval) | Bagging | Random Forest | Adaptive Boosting | Classification Tree | Morse Fall Scale Total Score |
|---|---|---|---|---|---|
| Sensitivity | 0.79 (0.73, 0.84) | 0.73 (0.68, 0.79) | 0.74 (0.68, 0.81) | 0.78 (0.66, 0.89) | 0.58 (0.52, 0.65) |
| Specificity | 0.84 (0.80, 0.87) | 0.86 (0.82, 0.89) | 0.86 (0.82, 0.90) | 0.78 (0.72, 0.84) | 0.87 (0.83, 0.91) |
| AUROC | 0.89 (0.85, 0.92) | 0.90 (0.87, 0.92) | 0.89 (0.87, 0.91) | 0.85 (0.81, 0.89) | 0.86 (0.83, 0.88) |
| Calibration Cutoff Probability<sup>a</sup> | 0.42 | 0.39 | 0.35 | 0.37 | 0.26 |

<sup>a</sup> Cutoff probability that maximizes Youden’s J statistic.

Fig. 3.

ROC curves for single classification tree, random forest, bagging, adaptive boosting and Morse Fall Scale total score prediction models.

There were no differences between the main effects models and the random effects and generalized estimating equation multivariate models.

4. DISCUSSION

This study investigated AI techniques to detect and rank important predictive factors for falls among hospitalized patients. Additionally, we produced cross-validated prediction models from EHR and administrative data that identify fall risk based on easily obtainable patient, clinical, and organizational factors. By building on existing organizational investments and computing technologies, these prediction models could support decision-making by healthcare providers and organizational leadership and lead to improved quality of care.

There is growing interest in using EHR data for clinical outcome prediction, although the context in which these data are generated could also influence the quality of patient care outcomes [23]. Nonetheless, EHR data are well suited to AI-based prediction of clinical outcomes. One advantage of EHR data is their size. EHR data provide opportunities to improve the quality of care by examining multiple related outcomes simultaneously, for example heart failure, 30-day readmission, stroke, and diabetes readmission [56–59]. EHR data also provide access to many predictor variables, which opens the prospect of observing changes in patients and care over time. Another key benefit is that EHR data contain many observations that could be used as validation datasets for prediction models. There are also pitfalls in using EHR data, including missing data and informative presence [60–62]. Hospital systems are increasingly storing EHR data, which can facilitate the study of rare events such as patient falls. In this study, we analyzed 30 features from EHR data and 8 features from administrative data among 814 hospitalized patients who did or did not fall during their admission. Three AI models (bagging, random forest, and adaptive boosting) exhibited satisfactory performance in predicting patient falls and warrant further testing to establish external validity.

Given the advantages of EHR data and the feasibility of AI techniques, we considered multiple interactions among features. Previous studies of patient fall prediction have primarily considered individual patient characteristics and conventional risk assessment tools, with few interaction terms [10–12]. However, researchers have documented various independent relationships between nursing care and hospital patient falls [63–67]. Several features identified as important by the prediction models, including mental status and number of high risk FRIDs, could be managed through clinical care and nursing unit management. We have shown that bagging, random forest, and boosting have a substantially higher ability to identify fallers than the Morse Fall Scale total score. Robust prediction models that identify patients at varying degrees of risk would be of great clinical significance. The older adult population is growing disproportionately: one in five Americans will be at least 65 years old by 2030, and older adults will occupy the most hospital beds on any given day [68,69]. AI risk prediction models cannot currently replace clinical judgment. Even so, these tools could provide immediate information to help avoid falls at critical stages of deterioration or during periods of increased environmental safety hazards [42,70].

To better understand patient fall risk and improve the interpretability of the prediction models, we ranked all features in this study and reported the most important according to their contribution to predicting falls. Among these features, history of falls, age, Morse Fall Scale total score, mental status, unit type, gait/transferring, and the number of high risk FRIDs were the most relevant factors across bagging, random forest, and boosting. Some of these factors have been identified as predictors of patient falls in previous studies. It remains to be established, however, whether a patient’s mental status, Morse Fall Scale score, and number of high risk FRIDs are valid predictors of falls during hospitalization on a medical or surgical nursing unit. It is worth noting that existing fall risk assessments do not contain all the items in our list of important features (such as age, unit type, FRIDs, and impaired mental status) [10–12]. Validated, accurate prediction models could combine simple and interpretable assessment tools with high-performance contemporary machine learning methods. Such models can provide valuable clinical decision support, including prioritization of fall prevention interventions and resources in medical and surgical nursing units.

Advances in computing technology and the availability of EHR and administrative data present opportunities to prevent and reduce patient falls through a learning health system [71,72]. A learning health system can deliver personalized clinical care and quality improvements by learning continuously throughout the delivery of care. For example, fall risk could be recalculated automatically whenever features change during a patient’s admission. While the idea of learning health systems has been discussed, little evidence exists on their implementation or impact [73]. Use cases include efforts to improve the quality of care for pediatric patients with Crohn’s disease and cerebral palsy, to optimize care delivery for palliative care and lung cancer patients, and to reduce missed primary care appointments [74–78]. Most healthcare systems lack the infrastructure to support these components reliably and efficiently [79]. Knowledge generation to improve the quality of care requires reliable data capture and analysis methods that can yield timely feedback of knowledge to the system [79].

Several limitations should be considered before these models are applied in clinical practice. First, the investigation was limited to data from one hospital. Cross-validation estimates can be affected by population bias, so testing our models on data from other hospitals is needed to establish external validity. Second, the Morse Fall Scale was used to measure fall risk. Not all hospitals use this assessment, which may limit generalizability to other hospital settings. Third, this study focused on all inpatients at risk for falls, not just first-time fallers; additional research could identify the risk factors associated with first-time inpatient falls. Fourth, although we identified models with relatively stable performance, the sensitivity, specificity, and AUROC estimates are subject to the case-control study design. Model performance would be best assessed in a population sample, which would reflect the true calibration and discrimination of the prediction model. It is possible to re-weight the case-control sample to match the population prevalence of falls, but this prevalence varies widely across hospital populations [80–85]. The sensitivity and specificity of the bagging, random forest, and adaptive boosting models are also subject to variability because they depend on a binary classification at a single cutoff; a probability classification scheme would be preferable [86]. In terms of AI techniques, automated feature selection in machine learning models may fail to identify the true causal variables because it cannot identify confounders [87]. A prediction model may include actionable features, but acting on them in practice (beyond prediction) may not be advisable without prospective testing. Alternatively, further causal analysis of observational data would be needed, e.g., defining a causal structure for the variables. Finally, organizational differences can also influence how healthcare providers record patient and clinical data in the EHR and administrative systems.
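The case-control re-weighting discussed in the limitations can be illustrated with the standard prior-shift (Bayes) correction. This correction multiplies a predicted probability’s odds by the ratio of the target-prevalence odds to the sample-prevalence odds. It is a hedged sketch, not an analysis performed in the study. The sample prevalence of 272/814 comes from Table 1, but the 1% target prevalence is purely hypothetical, since real prevalence varies widely across hospital populations. The correction also assumes that, given fall status, cases and controls were sampled independently of their features.

```python
def adjust_to_prevalence(p, sample_prev, target_prev):
    """Map a probability estimated in a case-control sample to a target prevalence.

    new_odds = odds(p) * [target_prev / (1 - target_prev)] / [sample_prev / (1 - sample_prev)]
    """
    for name, value in (("sample_prev", sample_prev), ("target_prev", target_prev)):
        if not 0 < value < 1:
            raise ValueError(f"{name} must be strictly between 0 and 1")
    if p in (0.0, 1.0):
        return p  # certainty is unchanged by a prior shift
    odds = p / (1 - p)
    factor = (target_prev / (1 - target_prev)) / (sample_prev / (1 - sample_prev))
    new_odds = odds * factor
    return new_odds / (1 + new_odds)


SAMPLE_PREV = 272 / 814   # share of fallers in this case-control sample (Table 1)
HYPOTHETICAL_PREV = 0.01  # illustrative population prevalence, not from the study

for p in (0.26, 0.42, 0.80):
    print(p, round(adjust_to_prevalence(p, SAMPLE_PREV, HYPOTHETICAL_PREV), 4))
```

The correction leaves the ranking of patients unchanged, so AUROC is unaffected. It does change the probability scale, and therefore the meaning of any fixed cutoff.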

In summary, this preliminary study established cross-validated prediction models based on analyses of 38 individual, clinical, and organizational features. Our findings are of great clinical and organizational importance because we identified relevant and novel factors for predicting hospital patient falls. The prediction models could support personalized care and improve the quality of patient care by complementing healthcare providers’ judgment and decision-making. Specifically, nurses could intervene directly to reduce fall risk, for example by addressing impaired mental status or managing the administration of fall risk increasing drugs. More broadly, our study may provide a reference for developing AI-based prediction models that incorporate factors modifiable by healthcare providers and leaders.

Supplementary Material

Summary Points.

What was known:

Existing fall risk prediction methods, such as the Morse Fall Scale, do not fully capture all risk factors associated with inpatient falls.

Electronic Health Record (EHR) data has the potential to be used to identify the most important factors of inpatient falls via machine learning methods.

Few existing studies have applied artificial intelligence (AI) to identify the most important factors of inpatient falls.

What we add:

Tree-based artificial intelligence methods can effectively determine the most important factors of inpatient falls via variable importance measures.

Equipped with the knowledge of these additional factors of inpatient falls not found in the existing fall risk prediction tools, nurses can better care for their patients and effectively reduce the number of falls in the hospital setting.

This study can serve as a reference in the development of AI-based prediction models of other iatrogenic conditions.

Acknowledgments

We thank members of the UF Health Shands Hospital patient falls task force for their guidance on understanding the complexity of EHR and administrative data, especially Elaine Delvo Favre and Irene Alexitis.

Funding

UFHealth Quasi Endowment Fund; National Institutes of Health, National Institute on Aging (1R21AG062884-01; Bjarnadottir RI and Lucero RJ, Multiple Principal Investigators).

Footnotes

Declaration of Competing Interest

The authors report no declarations of interest.

Appendix A. Supplementary data

Supplementary material related to this article can be found in the online version at https://doi.org/10.1016/j.ijmedinf.2020.104272.

REFERENCES

- [1].Bouldin Ed, Andresen Em, Dunton Ne, et al. , Falls among adult patients hospitalized in the United States: prevalence and trends, Journal of patient safety 9 (1) (2013) 13–17, 10.1097/PTS.0b013e3182699b64. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [2].Healey F, Scobie S, Oliver D, Pryce A, Thomson R, Glampson B, Falls in English and Welsh hospitals: a national observational study based on retrospective analysis of 12 months of patient safety incident reports, BMJ Quality & Safety 17 (6) (2008) 424–430, 10.1136/qshc.2007.024695. [DOI] [PubMed] [Google Scholar]

- [3].Rigby K, Clark RB, Runciman WB, Adverse events in health care: setting priorities based on economic evaluation, Journal of quality in clinical practice 19 (1) (1999) 7–12, 10.1046/j.1440-1762.1999.00301.x. [DOI] [PubMed] [Google Scholar]

- [4].Shaw R, Drever F, Hughes H, Osborn S, Williams S, Adverse events and near miss reporting in the NHS, BMJ Quality & Safety 14 (4) (2005) 279–283, 10.1136/qshc.2004.010553. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [5].Florence CS, Bergen G, Atherly A, Burns E, Stevens J, Drake C, Medical Costs of Fatal and Nonfatal Falls in Older Adults, Journal of the American Geriatrics Society 66 (4) (2018) 693–698, 10.1111/jgs.15304. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [6].Oliver D, Healey F, Haines TP, Preventing Falls and Fall-Related Injuries in Hospitals, Clinics in geriatric medicine 26 (4) (2010) 645–692, 10.1016/j.cger.2010.06.005. [DOI] [PubMed] [Google Scholar]

- [7] Joint Commission, Preventing falls and fall-related injuries in health care facilities, Sentinel Event Alert 55 (2015) 1–5.

- [8] Ganz D, Huang C, Saliba D, et al., Preventing Falls in Hospitals: A Toolkit for Improving Quality of Care, Agency for Healthcare Research and Quality, Rockville, MD, 2013. Available at: http://www.ahrq.gov/professionals/systems/hospital/fallpxtoolkit/index.html. Accessed January 31, 2020.

- [9] Rothschild JM, Bates DW, Leape LL, Preventable medical injuries in older patients, Archives of internal medicine 160 (18) (2000) 2717–2728, 10.1001/archinte.160.18.2717.

- [10] Morse JM, Morse RM, Tylko SJ, Development of a scale to identify the fall-prone patient, Canadian Journal on Aging 8 (4) (1989) 366–377, 10.1017/S0714980800008576.

- [11] Oliver D, Britton M, Seed P, Martin FC, Hopper AH, Development and evaluation of evidence based risk assessment tool (STRATIFY) to predict which elderly inpatients will fall: case-control and cohort studies, BMJ 315 (7115) (1997) 1049–1053, 10.1136/bmj.315.7115.1049.

- [12] Hendrich A, Nyhuis A, Kippenbrock T, Soja ME, Hospital falls: development of a predictive model for clinical practice, Applied Nursing Research 8 (3) (1995) 129–139, 10.1016/S0897-1897(95)80592-3.

- [13] Gephart S, Carrington JM, Finley B, A systematic review of nurses’ experiences with unintended consequences when using the electronic health record, Nursing administration quarterly 39 (4) (2015) 345–356, 10.1097/NAQ.0000000000000119.

- [14] Baumann LA, Baker J, Elshaug AG, The impact of electronic health record systems on clinical documentation times: A systematic review, Health Policy 122 (8) (2018) 827–836, 10.1016/j.healthpol.2018.05.014.

- [15] Aranda-Gallardo M, Morales-Asencio JM, Canca-Sanchez JC, et al., Instruments for assessing the risk of falls in acute hospitalized patients: a systematic review and meta-analysis, BMC health services research 13 (1) (2013) 122, 10.1186/1472-6963-13-122.

- [16] Shortreed SM, Cook AJ, Coley RY, Bobb JF, Nelson JC, Challenges and opportunities for using big health care data to advance medical science and public health, American journal of epidemiology 188 (5) (2019) 851–861, 10.1093/aje/kwy292.

- [17] Office of the National Coordinator for Health Information Technology, Percent of Hospitals, By Type, that Possess Certified Health IT. Health IT Quick-Stat #52, 2017. Available at: dashboard.healthit.gov/quickstats/pages/certified-electronic-health-record-technology-in-hospitals.php. Accessed March 31, 2020.

- [18] Prokosch HU, Ganslandt T, Perspectives for medical informatics. Reusing the electronic medical record for clinical research, Methods of information in medicine 48 (1) (2009) 38–44, 10.3414/ME9132.

- [19] Centers for Medicare & Medicaid Services, Hospital-Acquired Condition (HAC) Reduction Program, 2017. Available at: https://www.cms.gov/Medicare/MedicareFee-for-Service-Payment/AcuteInpatientPPS/HAC-Reduction-Program.html. Accessed March 31, 2020.

- [20] Agency for Healthcare Research and Quality, AHRQ National Scorecard on Rates of Hospital-Acquired Conditions, Agency for Healthcare Research and Quality, Rockville, MD, 2016. Available at: https://www.ahrq.gov/hai/pfp/index.html. Accessed March 31, 2020.

- [21] Efron B, Hastie T, Computer age statistical inference, Cambridge University Press, Cambridge, UK, 2016.

- [22] Goldstein BA, Navar AM, Pencina MJ, Risk Prediction With Electronic Health Records, JAMA cardiology 1 (9) (2016) 976–977, 10.1001/jamacardio.2016.3826.

- [23] Goldstein BA, Navar AM, Pencina MJ, Ioannidis JPA, Opportunities and challenges in developing risk prediction models with electronic health records data: a systematic review, Journal of the American Medical Informatics Association 24 (1) (2017) 198–208, 10.1093/jamia/ocw042.

- [24] Futoma J, Morris J, Lucas J, A comparison of models for predicting early hospital readmissions, Journal of biomedical informatics 56 (2015) 229–238, 10.1016/j.jbi.2015.05.016.

- [25] Meng YA, Yu Y, Cupples LA, Farrer LA, Lunetta KL, Performance of random forest when SNPs are in linkage disequilibrium, BMC bioinformatics 10 (1) (2009) 78, 10.1186/1471-2105-10-78.

- [26] Shi T, Horvath S, Unsupervised learning with random forest predictors, Journal of Computational and Graphical Statistics 15 (1) (2006) 118–138, 10.1198/106186006X94072.

- [27] Rudin C, Stop explaining black box machine learning models for high stakes decisions and use interpretable models instead, Nature Machine Intelligence 1 (5) (2019) 206–215, 10.1038/s42256-019-0048-x.

- [28] Markatou M, Tian H, Biswas S, Hripcsak G, Analysis of variance of cross-validation estimators of the generalization error, Journal of Machine Learning Research 6 (July) (2005) 1127–1168, 10.7916/d86d5r2x.

- [29] Beauchet O, Noublanche F, Simon R, et al., Falls risk prediction for older inpatients in acute care medical wards: Is there an interest to combine an early nurse assessment and the artificial neural network analysis? The journal of nutrition, health & aging 22 (1) (2018) 131–137, 10.1007/s12603-017-0950-z.

- [30] McClaran J, Forette F, Golmard JL, Hérvy MP, Bouchacourt P, Two faller risk functions for geriatric assessment unit patients, Age 14 (1) (1991) 5–12, 10.1007/bf02434841.

- [31] Memtsoudis SG, Dy CJ, Ma Y, Chiu YL, Della Valle AG, Mazumdar M, In-hospital patient falls after total joint arthroplasty: incidence, demographics, and risk factors in the United States, The Journal of arthroplasty 27 (6) (2012) 823–828, 10.1016/j.arth.2011.10.010.

- [32] Memtsoudis SG, Danninger T, Rasul R, et al., Inpatient Falls after Total Knee Arthroplasty: The Role of Anesthesia Type and Peripheral Nerve Blocks, Anesthesiology: The Journal of the American Society of Anesthesiologists 120 (3) (2014) 551–563, 10.1097/aln.0000000000000120.

- [33] Peel NM, Jones LV, Berg K, Gray LC, Validation of a falls risk screening tool derived from interRAI Acute Care Assessment [published online ahead of print January 22, 2018], Journal of patient safety (2018), 10.1097/pts.0000000000000462.

- [34] Than S, Crabtree A, Moran C, Examination of risk scores to better predict hospital-related harms, Internal medicine journal 49 (9) (2019) 1125–1131, 10.1111/imj.14121.

- [35] Yip WK, Mordiffi SZ, Wong HC, Ang EN, Development and validation of a simplified falls assessment tool in an acute care setting, Journal of nursing care quality 31 (4) (2016) 310–317, 10.1097/ncq.0000000000000183.

- [36] Yoo SH, Kim SR, Shin YS, A prediction model of falls for patients with neurological disorder in acute care hospital, Journal of the neurological sciences 356 (1–2) (2015) 113–117, 10.1016/j.jns.2015.06.027.

- [37] Choi Y, Staley B, Henriksen C, et al., A dynamic risk model for inpatient falls, American Journal of Health-System Pharmacy 75 (17) (2018) 1293–1303, 10.2146/ajhp180013.

- [38] Morse JM, Black C, Oberle K, Donahue P, A prospective study to identify the fall-prone patient, Social science & medicine 28 (1) (1989) 81–86, 10.1016/0277-9536(89)90309-2.

- [39] Morse JM, The safety of safety research: the case of patient fall research, Canadian Journal of Nursing Research Archive 38 (2) (2006) 73–88.

- [40] US Department of Health and Human Services, Guidance regarding methods for de-identification of protected health information in accordance with the Health Insurance Portability and Accountability Act (HIPAA) Privacy Rule, US Department of Health and Human Services, Washington, DC, 2018. Available at: https://www.hhs.gov/hipaa/for-professionals/privacy/special-topics/de-identification/index.html. Accessed January 31, 2020.

- [41] El Emam K, Abdallah K, De-identifying data in clinical trials, Applied Clinical Trials 24 (8) (2015) 40–48.

- [42] Lucero RJ, Lindberg DS, Fehlberg EA, et al., A data-driven and practice-based approach to identify risk factors associated with hospital-acquired falls: Applying manual and semi- and fully-automated methods, International journal of medical informatics 122 (2019) 63–69, 10.1016/j.ijmedinf.2018.11.006.

- [43] Hothorn T, Hornik K, Zeileis A, Unbiased Recursive Partitioning: A Conditional Inference Framework, Journal of Computational and Graphical Statistics 15 (3) (2006) 651–674, 10.1198/106186006X133933.

- [44] Hothorn T, Buehlmann P, Dudoit S, Molinaro A, Van Der Laan M, Survival Ensembles, Biostatistics 7 (3) (2006) 355–373, 10.1093/biostatistics/kxj011.

- [45] Strobl C, Boulesteix AL, Zeileis A, Hothorn T, Bias in random forest variable importance measures: Illustrations, sources and a solution, BMC bioinformatics 8 (1) (2007) 25, 10.1186/1471-2105-8-25.

- [46] Strobl C, Boulesteix AL, Kneib T, Augustin T, Zeileis A, Conditional Variable Importance for Random Forests, BMC Bioinformatics 9 (2008) 307, 10.1186/1471-2105-9-307.

- [47] Friedman J, Hastie T, Tibshirani R, Additive logistic regression: a statistical view of boosting (with discussion and a rejoinder by the authors), The annals of statistics 28 (2) (2000) 337–407, 10.1214/aos/1016120463.

- [48] Friedman JH, Greedy function approximation: a gradient boosting machine, Annals of statistics (2001) 1189–1232, 10.1214/aos/1013203451.

- [49] Youden WJ, Index for rating diagnostic tests, Cancer 3 (1) (1950) 32–35.

- [50] Perkins NJ, Schisterman EF, The Youden Index and the optimal cut-point corrected for measurement error, Biometrical Journal: Journal of Mathematical Methods in Biosciences 47 (4) (2005) 428–441, 10.1002/bimj.200410133.

- [51] Fluss R, Faraggi D, Reiser B, Estimation of the Youden Index and its associated cutoff point, Biometrical Journal: Journal of Mathematical Methods in Biosciences 47 (4) (2005) 458–472, 10.1002/bimj.200410135.

- [52] Nadeau C, Bengio Y, Inference for the generalization error, Machine Learning 52 (2003) 239–281, 10.1023/A:1024068626366.

- [53] R Core Team, R: A language and environment for statistical computing, R Foundation for Statistical Computing, Vienna, Austria, 2019. Available at: https://www.R-project.org/. Accessed January 31, 2020.

- [54] Charlson ME, Pompei P, Ales KL, et al., A new method of classifying prognostic comorbidity in longitudinal studies: development and validation, Journal of chronic diseases 40 (1987) 373–383, 10.1016/0021-9681(87)90171-8.

- [55] Quan H, Sundararajan V, Halfon P, et al., Coding algorithms for defining comorbidities in ICD-9-CM and ICD-10 administrative data, Medical care (2005) 1130–1139, 10.1097/01.mlr.0000182534.19832.83.

- [56] Wu J, Roy J, Stewart WF, Prediction modeling using EHR data: challenges, strategies, and a comparison of machine learning approaches, Medical care (2010) S106–113, 10.1097/mlr.0b013e3181de9e17.

- [57] Perkins RM, Rahman A, Bucaloiu ID, et al., Readmission after hospitalization for heart failure among patients with chronic kidney disease: a prediction model, Clinical nephrology 80 (6) (2013) 433–440, 10.5414/cn107961.

- [58] Ayyagari R, Vekeman F, Lefebvre P, et al., Pulse pressure and stroke risk: development and validation of a new stroke risk model, Current medical research and opinion 30 (12) (2014) 2453–2460, 10.1185/03007995.2014.971357.

- [59] Still CD, Wood GC, Benotti P, et al., Preoperative prediction of type 2 diabetes remission after Roux-en-Y gastric bypass surgery: a retrospective cohort study, The Lancet Diabetes & Endocrinology 2 (1) (2014) 38–45, 10.1016/S2213-8587(13)70070-6.

- [60] Kharrazi H, Wang C, Scharfstein D, Prospective EHR-based clinical trials: the challenge of missing data, Journal of General Internal Medicine 29 (7) (2014) 976–978, 10.1007/s11606-014-2883-0.

- [61] Rusanov A, Weiskopf NG, Wang S, Weng C, Hidden in plain sight: bias towards sick patients when sampling patients with sufficient electronic health record data for research, BMC medical informatics and decision making 14 (2014) 51, 10.1186/1472-6947-14-51.

- [62] Goldstein BA, Bhavsar NA, Phelan M, Pencina MJ, Controlling for informed presence bias due to the number of health encounters in an electronic health record, American journal of epidemiology 184 (11) (2016) 847–855, 10.1093/aje/kww112.

- [63] Lopez KD, Gerling GJ, Cary MP, Kanak MF, Cognitive work analysis to evaluate the problem of patient falls in an inpatient setting, Journal of the American Medical Informatics Association 17 (3) (2010) 313–321, 10.1136/jamia.2009.000422.

- [64] Dykes PC, Carroll DL, Hurley AC, Benoit A, Middleton B, Why do patients in acute care hospitals fall? Can falls be prevented? The Journal of nursing administration 39 (6) (2009) 299–304, 10.1097/NNA.0b013e3181a7788a.

- [65] Kalisch BJ, Tschannen D, Lee KH, Missed nursing care, staffing, and patient falls, Journal of nursing care quality 27 (1) (2012) 6–12, 10.1097/NCQ.0b013e318225aa23.

- [66] Patrician PA, Loan L, McCarthy M, et al., The association of shift-level nurse staffing with adverse patient events, JONA: The Journal of Nursing Administration 41 (2) (2011) 64–70, 10.1097/NNA.0b013e31820594bf.

- [67] Rush KL, Robey-Williams C, Patton LM, Chamberlain D, Bendyk H, Sparks T, Patient falls: acute care nurses’ experiences, Journal of clinical nursing 18 (3) (2009) 357–365, 10.1111/j.1365-2702.2007.02260.x.

- [68] Ortman JM, Velkoff VA, Hogan H, An Aging Nation: The Older Population in the United States, U.S. Census Bureau, Washington, D.C., 2014, p. 28. Available at: https://www.census.gov/prod/2014pubs/p25-1140.pdf. Accessed March 31, 2020.

- [69] Wier L, Pfuntner A, Maeda J, et al., HCUP Facts and Figures: Statistics on Hospital-Based Care in the United States, 2009, Agency for Healthcare Research & Quality, Rockville, MD, 2011. Available at: https://www.hcup-us.ahrq.gov/reports/factsandfigures/2009/TOC_2009.jsp. Accessed March 31, 2020.

- [70] Bjarnadottir RI, Lucero RJ, What can we learn about fall risk factors from EHR nursing notes? A text mining study, eGEMs 6 (1) (2018) 21, 10.5334/egems.237.

- [71] DeRouen TA, Promises and pitfalls in the use of “Big Data” for clinical research, Journal of dental research 94 (9 suppl) (2015) 107S–109S, 10.1177/0022034515587863.

- [72] Lee CH, Yoon HJ, Medical big data: promise and challenges, Kidney research and clinical practice 36 (1) (2017) 3–11, 10.23876/j.krcp.2017.36.1.3.

- [73] McGinnis JM, Aisner D, Olsen L, The Learning Healthcare System: Workshop Summary, The National Academies Press, Washington, DC, 2007.

- [74] Crandall WV, Margolis PA, Kappelman MD, et al., Improved outcomes in a quality improvement collaborative for pediatric inflammatory bowel disease, Pediatrics 129 (4) (2012) e1030–1041, 10.1542/peds.2011-1700.

- [75] Lowes LP, Noritz GH, Newmeyer A, et al., ‘Learn From Every Patient’: implementation and early results of a learning health system, Developmental Medicine & Child Neurology 59 (2) (2017) 183–191, 10.1111/dmcn.13227.

- [76] Kamal AH, Kirkland KB, Meier DE, et al., A person-centered, registry-based learning health system for palliative care: a path to coproducing better outcomes, experience, value, and science, Journal of palliative medicine 21 (S2) (2018) S61–S67, 10.1089/jpm.2017.0354.

- [77] Fung-Kee-Fung M, Maziak DE, Pantarotto JR, et al., Regional process redesign of lung cancer care: a learning health system pilot project, Current Oncology 25 (1) (2018) 59–66, 10.3747/co.25.3719.

- [78] Steiner JF, Shainline MR, Bishop MC, Xu S, Reducing Missed Primary Care Appointments in a Learning Health System, Medical care 54 (7) (2016) 689–696, 10.1097/mlr.0000000000000543.

- [79] McGinnis JM, Powers B, Grossmann C (Eds.), Digital infrastructure for the learning health system: the foundation for continuous improvement in health and health care: workshop series summary, National Academies Press, 2011.

- [80] Krauss MJ, Nguyen SL, Dunagan WC, et al., Circumstances of patient falls and injuries in 9 hospitals in a midwestern healthcare system, Infection Control & Hospital Epidemiology 28 (5) (2007) 544–550, 10.1086/513725.

- [81] Hitcho EB, Krauss MJ, Birge S, Dunagan WC, Fischer I, Johnson S, Nast PA, Constantinou E, Fraser VJ, Characteristics and circumstances of falls in a hospital setting: a prospective analysis, Journal of general internal medicine 19 (7) (2004) 732–739, 10.1111/j.1525-1497.2004.30387.x.

- [82] Fischer ID, Krauss MJ, Dunagan WC, Birge S, Hitcho E, Johnson S, Constantinou E, Fraser VJ, Patterns and predictors of inpatient falls and fall-related injuries in a large academic hospital, Infection Control and Hospital Epidemiology 26 (10) (2005) 822–827, 10.1086/502500.

- [83] Quigley PA, Hahm B, Collazo S, Gibson W, Janzen S, Powell-Cope G, Rice F, Sarduy I, Tyndall K, White SV, Reducing serious injury from falls in two veterans’ hospital medical-surgical units, Journal of nursing care quality 24 (1) (2009) 33–41, 10.1097/NCQ.0b013e31818f528e.

- [84] Chelly JE, Conroy L, Miller G, Elliott MN, Horne JL, Hudson ME, Risk factors and injury associated with falls in elderly hospitalized patients in a community hospital, Journal of Patient Safety 4 (3) (2008) 178–183, 10.1097/PTS.0b013e3181841802.

- [85] Staggs VS, Mion LC, Shorr RI, Consistent differences in medical unit fall rates: implications for research and practice, Journal of the American Geriatrics Society 63 (5) (2015) 983–987, 10.1111/jgs.13387.

- [86] Dankowski T, Ziegler A, Calibrating random forests for probability estimation, Statistics in medicine 35 (22) (2016) 3949–3960.

- [87] Hahn PR, Murray JS, Carvalho CM, Bayesian regression tree models for causal inference: regularization, confounding, and heterogeneous effects, Bayesian Analysis (2017). Preprint. Available at arXiv:1706.09523.
