Abstract

Context:

More than 78 countries have developed COVID contact-tracing apps to limit the spread of coronavirus. However, many experts and scientists cast doubt on the effectiveness of those apps. For each app, a large number of reviews have been entered by end-users in app stores.

Objective:

Our goal is to gain insights into the user reviews of those apps, and to find out the main problems that users have reported. Our focus is to assess the “software in society” aspects of the apps, based on user reviews.

Method:

We selected nine European national apps for our analysis and used a commercial app-review analytics tool to extract and mine the user reviews. For all the apps combined, our dataset includes 39,425 user reviews.

Results:

Results show that users are generally dissatisfied with the nine apps under study, except the Scottish (“Protect Scotland”) app. Major issues raised by users include high battery drain and doubts about whether the apps are really working.

Conclusion:

Our results show that more work is needed by the stakeholders behind the apps (e.g., app developers, decision-makers, public health experts) to improve the public adoption, software quality and public perception of these apps.

Keywords: Mobile apps, COVID, Contact-tracing, User reviews, Software engineering, Software in society, Data mining

1. Introduction

As of October 2020, more than 78 countries and regions have developed (or are developing) COVID contact-tracing apps to limit the spread of coronavirus.1 The list is growing quickly, and as of this writing, 19 of those apps are open source.

Contact-tracing apps generally use Bluetooth signals to log when smartphones, and hence their owners, are close to each other, so if someone develops COVID symptoms or tests positive, an alert can be sent to other users they may have infected. An app can be developed using two different approaches: centralized or decentralized. Under the centralized model, the data gathered is uploaded to a remote server where matches are made with other contacts should a person start to develop COVID symptoms. This is the method that countries such as the UK were initially pursuing.

By contrast, the decentralized model gives users more control over their information by keeping it on the phone. It is there that matches are made with people who may have contracted the virus. This is the model promoted by Google, Apple and an international consortium, advised in part by the MIT-led Private Automated Contact Tracing (PACT) project (pact.mit.edu) (Scudellari, 2020). Both types have their pros and cons. In early summer 2020, a split emerged between the two approaches. However, privacy and platform-support issues have since pushed countries toward the decentralized model.

The apps have been promoted as a promising tool to help bring the COVID outbreak under control. However, there are many discussions in the media, the academic (peer-reviewed) literature (Martuscelli and Heikkilä, 2020), and also the grey literature about the ‘efficacy of contact-tracing apps’ (try a Google search for the term inside quotes). A systematic review (Braithwaite et al., 2020) of 15 studies, which had studied the efficacy of contact-tracing apps, found that “there is relatively limited evidence for the impact of contact-tracing apps”. A French news article2 reported that, as of mid-August 2020, “StopCovid [the French app] had over 2.3 million downloads [of a population of 67 million people] and only 72 notifications were sent [by the app]”.
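For a sense of scale, the French figures quoted above can be turned into two rough ratios. This is only back-of-the-envelope arithmetic on the numbers reported in the news article, under the simplifying assumption that each download corresponds to one person (which ignores reinstallations and multiple devices):

```latex
\frac{\text{downloads}}{\text{population}} = \frac{2.3 \times 10^{6}}{67 \times 10^{6}} \approx 3.4\%,
\qquad
\frac{\text{notifications}}{\text{downloads}} = \frac{72}{2.3 \times 10^{6}} \approx 3.1 \times 10^{-5} \approx 0.003\%
```

In other words, roughly three notifications were sent per 100,000 downloads.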

One cannot help but wonder about the reasons behind the low efficacy and low adoption of the apps by the general public in many countries. The issue is multi-faceted, complex, and interdisciplinary, as it relates to fields such as public health, behavioral science (Anon, 2020), epidemiology, and software engineering.

The software engineering aspect of contact-tracing apps is quite diverse in itself, e.g., whether apps developed by different countries will cooperate/integrate (when people travel across countries/borders), and whether the app software works as intended (e.g., will it record the nearby phone IDs properly, and will it send the alerts to all the recorded persons?). The decentralized nature of the system makes such verification a challenging task. In a related development, a news article reported that developers worldwide have found a large number of defects in one of the contact-tracing apps (England’s open-source app).3

Another software engineering angle of the issue is the availability of a high number of user reviews in the two major app stores: the Google Play Store for Android apps and the Apple App Store for the iOS apps. A user review often contains information about the user’s experience with the app and opinion of it, feature requests, or bug reports (Genc-Nayebi and Abran, 2017). Many insights can be mined by analyzing the user reviews of these apps to figure out what end-users think of COVID contact-tracing apps, and that is what we analyze and present in this paper. Studies have shown that reviews written by the users represent a rich source of information for the app vendors and the developers, as they include information about bugs and ideas for new features (Iacob and Harrison, 2013). Mining of app store data and app reviews has become an active area of research in software engineering (Genc-Nayebi and Abran, 2017) to extract valuable insights. User ratings and reviews are user-driven feedback that may help improve software quality and address missing application features.

Among the insights that we aim to derive in this study are the ratios of users who, as per their reviews, have been happy or unhappy with the contact-tracing apps, and the main issues (problems) that most users have reported about the apps. Our analysis is “exploratory” in nature (Runeson and Höst, 2009), as we want to explore the app reviews and extract insights from them which could be useful for the different stakeholders, e.g., app developers, decision-makers, researchers, and the public, to benefit from or act upon.

Also, the focus of our paper is software engineering “in society” (Kazman and Pasquale, 2019), since contact-tracing apps are widely discussed in the public media, are used by millions of people worldwide, and have the potential to strongly influence people’s lives amid the challenges that the COVID pandemic has brought upon people all over the world. Furthermore, many resources have argued that “these apps are safety-critical”,4 since “a faulty proximity tracing app could lead to false positives, false negatives, or maybe both.” It is thus very important that these apps and their user reviews be carefully studied to ensure that upcoming updates of existing apps or new similar apps have the highest software quality.

Another motivating factor for this study is ongoing research and consulting engagement of the first author in relation to the Northern Irish contact-tracing app (called “StopCOVID NI”5 ). Since May 2020, he has been a member of an Expert Advisory Committee for the StopCOVID NI app. Some of his activities so far have included peer review and inspection of various software engineering artifacts of the app, e.g., UML design diagrams, test plans, and test suites (see page 13 of an online report by the local Health Authority6 ). In that Expert Advisory committee, the members have felt the need to review and mine insights from user reviews in app stores to be able to provide a feedback loop to the committee and the software engineering team of the app. Thus, the current study will provide benefits in that direction (to the committee), and also, by analyzing other apps from other countries, we will provide insight for other stakeholders (researchers and practitioners) elsewhere too.

The methodology applied in this paper is an “exploratory” case study focussing on user feedback (ratings and comments) for nine widely used European apps as a representative subset of the 50+ available worldwide. A series of nine research questions (Section 3.2) were created and addressed using combinations of automated sentiment analysis, numerical ratings, download figures, and manual sampling of textual reviews.

Key results include a general dissatisfaction among users with the various contact-tracing apps (with the notable exception of the NHS Scotland app) and that users are often confused by the interface and operation, i.e., the apps are overly complex. A concern raised consistently across the apps was power consumption causing battery drain, something that had been widely reported in the media for early releases. Where geographical boundaries were close and users would be expected to cross them, such as in the United Kingdom, many negative comments related to the lack of interoperability (if the user is over the border, their app won’t work to record contacts).

The remainder of this paper is structured as follows. In Section 2, as background information, we provide a review of contact-tracing apps and then a review of related works. We discuss the research approach, research design, and research questions of our study in Section 3. Section 4 presents the results of our study. In Section 5, we discuss a summary of our results and their implications for various stakeholders (app developers, decision-makers, researchers, the public, etc.). Finally, Section 6 concludes the paper and discusses our ongoing and future works.

2. Background and related work

As the background and related work, we review the following topics in the next several sub-sections:

- Usage of computing and software technologies in the COVID pandemic (Section 2.1)
- A review of contact-tracing apps and how they work (Section 2.2)
- Related work on mining of app reviews (Section 2.3)
- Closely related work: Mining of COVID app reviews (Section 2.4)

After discussing those related works, we will position this work with regard to the related work in Section 2.5. For the interested reader, we also provide a further review of related work in the appendix in the following groups:

- Grey literature on software engineering of contact-tracing apps
- Formal and grey literature on overall quality issues of contact-tracing apps
- Behavioral science, social aspects, and epidemiologic aspects of the apps

2.1. Usage of computing and software technologies in the COVID pandemic

A number of digital, computing, and software technologies have been developed and are in use in the public-health response to the COVID-19 pandemic (Budd et al., 2020). A survey paper in the journal Nature Medicine (Budd et al., 2020) reviewed the breadth of digital innovations (computing and software systems) for the public-health response to COVID-19 worldwide, their limitations, and barriers to their implementation, including legal, ethical, and privacy barriers, as well as organizational and workforce barriers. The paper argued that the future of public health is likely to become increasingly digital. We reproduce a summary table from that paper as Table 1 (Budd et al., 2020).

Table 1.

Digital technologies in the public-health response to COVID-19 pandemic (from Budd et al., 2020)

| Public-health need | Digital tool or technology | Example of use |
|---|---|---|
| Digital epidemiological surveillance | Machine learning | Web-based epidemic intelligence tools and online syndromic surveillance |
| | Survey apps and websites | Symptom reporting |
| | Data extraction and visualization | Data dashboard |
| Rapid case identification | Connected diagnostic device | Point-of-care diagnosis |
| | Sensors including wearables | Febrile symptoms checking |
| | Machine learning | Medical image analysis |
| Interruption of community transmission | Smartphone app, low-power Bluetooth technology | Digital contact tracing |
| | Mobile-phone-location data | Mobility-pattern analysis |
| Public communication | Social-media platforms | Targeted communication |
| | Online search engine | Prioritized information |
| | Chat-bot | Personalized information |
| Clinical care | Tele-conferencing | Telemedicine, referral |

As the table shows, there are various public health needs and various digital tools/technologies to address those needs. Contact-tracing mobile apps are just one of the digital tools/technologies to address one of those needs, i.e., interruption of community transmission.

In addition to contact-tracing mobile apps, other types of software systems related to the COVID pandemic have been developed and used, e.g., a system named Dot2Dot,7 which “is a software tool to help health authorities trace and isolate people carrying an infectious disease”, and a mobile app named COVIDCare NI,8 developed in Northern Ireland by the regional healthcare authority. The app provides various features to users, e.g., accessing personalized advice based on the user’s answers to a number of symptom-check questions, deciding whether the user needs clinical advice and how to access it, and easily finding links to trusted information resources on COVID-19 advice and mental health.

2.2. A review of contact-tracing apps and how they work

As discussed in Section 1, more than 78 countries and regions have developed (or are developing) COVID contact-tracing apps to limit the spread of coronavirus.9 The list is growing quickly, and as of this writing, 19 of those apps are open source.

Almost all proximity-detecting contact-tracing apps use Bluetooth signals emitted by nearby devices to record contact events (Budd et al., 2020). However, in August 2020, news10 came out that a WiFi-based contact-tracing app had been developed at the University of Massachusetts Amherst. In addition to Bluetooth and WiFi, other technologies such as GPS (Wang et al., 2020), IP addresses (Wen et al., 2020), and ultrasound (Zarandy et al., 2020) have also been suggested for use in COVID contact-tracing apps.

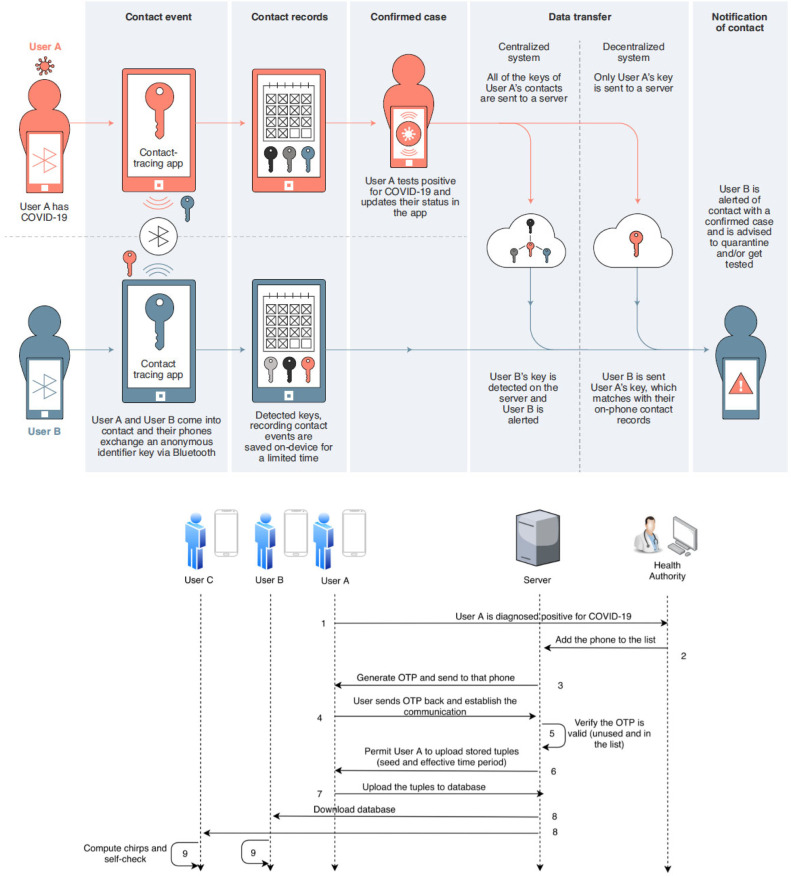

A contact-tracing app can be developed using either of two different approaches: centralized or decentralized. Centralized contact-tracing apps share information about contacts and contact events with a central server (often set up by the healthcare authority of a region or country). A centralized app uploads information when a user reports testing positive for COVID. Decentralized apps upload only an anonymous identifier of the user who reports testing positive for COVID. This identifier is then broadcast to all users of the app, which compares the identifier with on-phone contact-event records. If there is a match on the mobile app of a given user, that app gives a notification to the user. Taken from a paper in this area (Budd et al., 2020), Fig. 1 depicts the process of how these apps work. Another paper (Ahmed et al., 2020) has modeled the tracing process of a decentralized app as a UML sequence diagram (also shown in Fig. 1).

Fig. 1.

How the COVID-19 contact-tracing apps work on Bluetooth-enabled smartphones (Budd et al., 2020). Tracing process of a decentralized app.

The sequence diagram is taken from Ahmed et al. (2020).
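The decentralized flow described above (identifiers exchanged between nearby phones, anonymous identifiers published after a positive test, and matching done on each phone) can be illustrated with a minimal sketch. This is a toy model under stated assumptions, not the protocol of any real app: the `Phone` class, its method names, and the use of plain random tokens as identifiers are all hypothetical, and real frameworks derive rotating identifiers cryptographically.

```python
import secrets
from dataclasses import dataclass, field


@dataclass
class Phone:
    """Hypothetical model of one smartphone running a decentralized app."""
    name: str
    own_ids: list = field(default_factory=list)   # identifiers this phone has broadcast
    heard_ids: set = field(default_factory=set)   # identifiers received from nearby phones

    def broadcast(self) -> str:
        # A fresh random token stands in for a rotating anonymous identifier.
        token = secrets.token_hex(8)
        self.own_ids.append(token)
        return token

    def record_contact(self, token: str) -> None:
        # Contact events are stored on the phone only, never uploaded.
        self.heard_ids.add(token)

    def check_exposure(self, published_ids: set) -> bool:
        # Matching happens on the device, against the published identifiers.
        return bool(self.heard_ids & published_ids)


def simulate() -> dict:
    alice, bob, carol = Phone("alice"), Phone("bob"), Phone("carol")
    # Alice and Bob are close to each other; Carol is not near anyone.
    bob.record_contact(alice.broadcast())
    alice.record_contact(bob.broadcast())
    carol.broadcast()
    # Alice reports a positive test: only her anonymous identifiers are published.
    published = set(alice.own_ids)
    # Every other phone compares the published identifiers with its local records.
    return {p.name: p.check_exposure(published) for p in (bob, carol)}


if __name__ == "__main__":
    print(simulate())  # {'bob': True, 'carol': False}
```

In a centralized design, by contrast, the comparison in `check_exposure` would run on the health authority's server, which would therefore need to receive the contact records themselves.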

The most widely used framework for developing decentralized contact-tracing apps is the “Exposure Notification System (ENS)”11 12 framework/API, originally known as the “Privacy-Preserving Contact Tracing Project”, which is a framework and protocol specification developed by Google Inc. and Apple Inc. to facilitate digital contact-tracing during the COVID-19 pandemic. The framework/API is a decentralized reporting-based protocol built on a combination of Bluetooth Low Energy (BLE) technology (Gomez et al., 2012) and privacy-preserving cryptography. Note, however, that there have been various critiques of Google/Apple’s ENS framework (Hoepman, 2020).

In addition to Google/Apple’s ENS framework, other13 frameworks and protocols have also been proposed and used for developing contact-tracing apps, e.g., the Pan-European Privacy-Preserving Proximity Tracing (PEPP-PT) project (github.com/pepp-pt/) and BlueTrace/OpenTrace (bluetrace.io). A comprehensive survey of contact-tracing frameworks and mobile apps is presented in Martin et al. (2020).

As of May 2020, at least 22 countries had received access to the protocol. Switzerland and Austria were among the first to back the protocol.14 Shortly after, Germany announced it would back Exposure Notification, followed by Ireland and Italy.

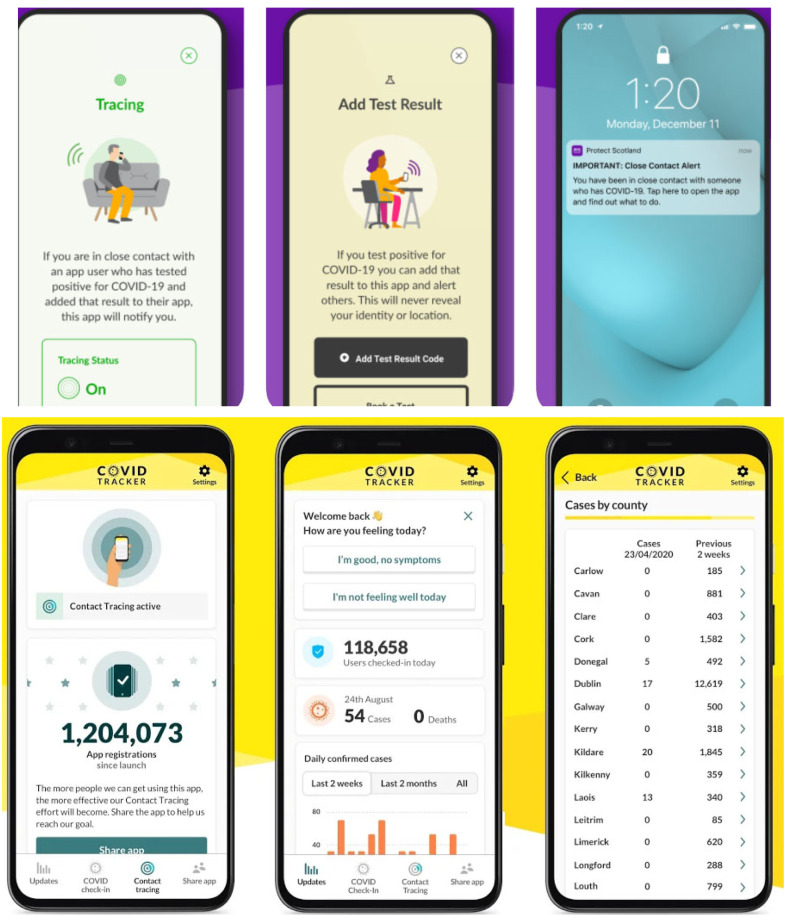

More concretely, to know what features these apps provide, we show, as examples, several screenshots from the user interface of the Protect Scotland app and the COVID Tracker Ireland app in Fig. 2. We have taken these screenshots from the apps’ pages15 in the Google Play Store. For the case of the Protect Scotland app, we can see that it only provides the “basic”/core contact-tracing features (use cases), i.e., tracing, adding test results, and sending notifications to recorded (traced) contacts. However, the COVID Tracker Ireland app provides some extra features in addition to the “core” features, e.g., showing the number of registrations since the app’s launch, COVID cases by county, etc.

Fig. 2.

Screenshots from the graphical user interface (GUI) of the Protect Scotland and COVID Tracker Ireland apps.

Since early 2020, COVID has severely impacted the work and lives of almost everyone on the planet, and contact-tracing apps have been widely discussed in online media, social media, and news outlets. As of this writing (mid-December 2020), a Google search for “contact-tracing app”16 returned 2,110,000 hits on the web, many of which are news about these apps in the media.

Also, in the relatively short timeframe since early 2020, many research papers have been published about these apps. As of this writing (mid-December 2020), a search in Google Scholar for “contact-tracing app”17 returned 1010 papers, which have been published in different research areas, e.g., public health, behavioral science (Anon, 2020), epidemiology, and software engineering. We show a short list of a few interesting papers in the following, from that large set of papers:

- Contact tracing mobile apps for COVID-19: Privacy considerations and related trade-offs (Cho et al., 2020)
- One app to trace them all? Examining app specifications for mass acceptance of contact-tracing apps (Trang et al., 2020)
- A survey of covid-19 contact tracing apps (Ahmed et al., 2020)
- COVID-19 contact tracing apps: the ’elderly paradox’ (Rizzo, 2020)
- On the accuracy of measured proximity of Bluetooth-based contact-tracing apps (Zhao et al., 2020)
- Vetting Security and Privacy of Global COVID-19 Contact Tracing Applications (Sun et al., 2020)
- COVID-19 Contact-tracing Apps: A Survey on the Global Deployment and Challenges (Li and Guo, 2020)

Also, various reports and news articles have discussed the high costs involved in the engineering (development and testing) of contact-tracing apps. For example, for the Australian app, the cost was estimated to be 70 million Australian dollars (US$49 million).18 For the UK NHS contact-tracing app, the cost was reported to be more than £35 million.19 By comparison, the development cost of the Irish app (COVID Tracker Ireland) was reported20 to be only about £773K.

2.3. Related work on mining of app reviews

User feedback has long been an important component of understanding the successes or failures of software systems, traditionally in the form of direct feedback or focus groups and more recently through social media or the distribution channels themselves, i.e., feedback in app or software stores (Morales-Ramirez et al., 2015). A systematic literature review (SLR) (Genc-Nayebi and Abran, 2017) of the approaches used to mine user opinion from app store reviews identified a number of approaches used to analyze such reviews and some interesting findings such as correlation between app rating and downloads (apps rated as high-quality gain more users), but there are significant issues identifying the overall sentiment of many reviews through automated processing. Many of their reviewed studies identified the key difference in the ease with which ratings can be used numerically compared with the difficulties in “understanding” unstructured textual commentaries, especially in different societal and linguistic settings.

With ratings being seen as key to the success of apps (Genc-Nayebi and Abran, 2017), it is important to understand the concerns and issues that lead users to most commonly complain or leave poor reviews, work which is undertaken in Khalid et al. (2014). With respect to what users complain about in mobile apps, Khalid et al. (2014) qualitatively studied 6390 low-rated user reviews for 20 free-to-download iOS apps. They uncovered 12 types of user complaints. The most frequent complaints were functional errors, feature requests, and app crashes. Of particular note in the context of this paper and COVID apps is that privacy and ethics concerns are also a common type of complaint, with an example review given of an unnamed app that it is “yet another app that thinks your contacts are fair game” (Khalid et al., 2014). Beyond the iOS focus of Khalid et al. (2014), most successful apps co-exist in at least two ecosystems (Apple and Google) and share the same brand even if they may not share the same codebase.

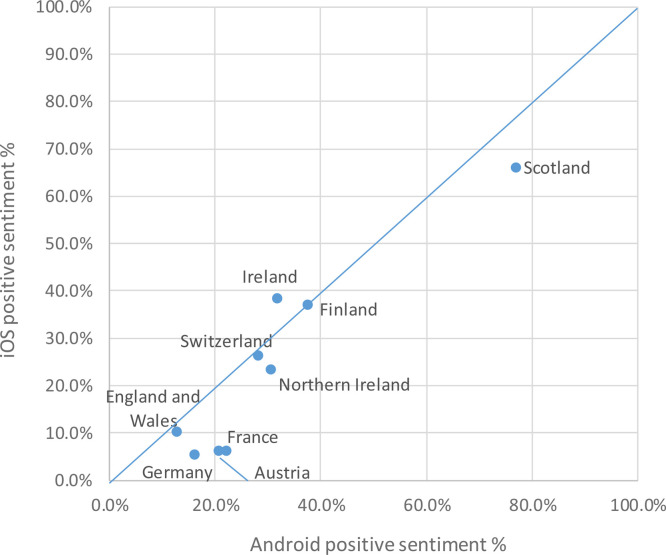

Hu et al. (2019) seek to analyze reviews of the “same” app from both Android and iOS and compare the cross-platform results, finding that nearly half (32 out of 68) of hybrid apps (where the codebase is largely shared between platforms) “receive a significantly different distribution of star ratings across both studied platforms”. The authors state that this shows a great deal of variability in how users perceive the apps even with the same fundamental features and interface depending on the users’ platform.

Country-specific differences in mobile app user behavior have also been identified when mining app store data (Lim et al., 2014). The authors collected data from more than 15 countries, including the USA, China, Japan, Germany, France, Brazil, United Kingdom, Italy, Russia, India, Canada, Spain, Australia, Mexico, and South Korea. Analysis of data provided by 4824 participants showed significant differences between app user behaviors across countries. For example, users from the USA are more likely to download medical apps, users from the United Kingdom and Canada are more likely to be influenced by price, and users from Japan and Australia are less likely to rate apps. Since we also analyze app reviews from several countries in this paper, we should be aware of such country-specific differences when analyzing the data.

How user feedback can be used to learn lessons and improve current or future apps has also been studied. Several papers have taken advantage of this data, especially where the volume of reviews and ratings may make manual analysis impractical. Scherr et al. (2019) presented a lightweight framework built on the use of emojis as representative of emotive feeling and expression about an app, building on their initial finding that large numbers of textual reviews also included emojis. Beyond general opinions, Guzman and Maalej (2014) presented an approach that looks at user sentiment in relation to specific features and uses techniques such as Natural Language Processing (NLP) to gain this insight.

Within the health domain, Stoyanov et al. (2015) defined a mobile app rating scale called “MARS” with a specific focus on descriptors aligned to health apps, including mental health ranging from UX to quality perceptions and technical considerations. This approach has the potential to be widely applied to health-related apps and used as a base of comparison between them.

Mining of app-store data, including app reviews, has become an active area of research in software engineering (Genc-Nayebi and Abran, 2017). Papers on this topic are typically published in the Mining Software Repositories (MSR) community. Authors in Genc-Nayebi and Abran (2017) provide a systematic literature review (SLR) on opinion mining studies from mobile app store user reviews. The SLR shows that mobile app ecosystems and user reviews contain a wealth of information about user experience and expectations. Furthermore, it is highlighted that developers and app store regulators can leverage the information to better understand their audience. This also holds for COVID contact-tracing apps as applied in this paper. However, the SLR also highlights that opinion spam or fake review detection is one of the largest problems in the domain. Further studies on app ratings cover topics on quality improvement through lightweight feedback analyses (Scherr et al., 2019), sentiment analysis of app reviews (Guzman and Maalej, 2014), and consistency of star ratings and reviews of popular free hybrid Android and iOS apps (Hu et al., 2019).

2.4. Closely related work: Mining of COVID app reviews

In terms of related work, three insightful blog posts under a series entitled “What went wrong with Covid-19 Contact Tracing Apps” have recently appeared in the IEEE Software blog.21 The articles reported analyses of user reviews of three such apps: Australia’s CovidSafe App, Germany’s Corona-Warn App, and the Italian app. They presented thematic findings on what went wrong with the apps, e.g., lack of citizen involvement, lack of understanding of the technological context of Australian people, ambitious technical assumptions without cultural considerations, privacy and effectiveness concerns.

2.5. Positioning this work with related work

Based on the review of each category of related work in the above sections and in the appendix, we can position this work with regard to related work as follows: This paper is closest to the studies which have mined COVID app reviews, followed by the large body of knowledge on mining of app reviews in general. Since an important aspect of our work is quality assurance of COVID apps based on user reviews, our work is also related to the formal literature and grey literature on overall quality issues of contact-tracing apps, as reviewed in the appendix. In more general terms, this work is also positioned within the area of mining reviews to infer software quality and adoption.

A recent paper (Rekanar et al., 2020) presented a sentiment analysis of user reviews of the Irish (COVID Tracker Ireland) app. While our current paper has some similarity in objectives with that paper and with the blog posts discussed in Section 2.4, we look at more apps (nine), and the nature and scale of our analyses are different (more in-depth) compared to the analyses reported in those works.

3. Research context, method, and questions

We discuss next the context of our research, the research method, and the research questions of our study. We then discuss our dataset and the tool that we have used to extract and mine the app reviews.

3.1. Context of research

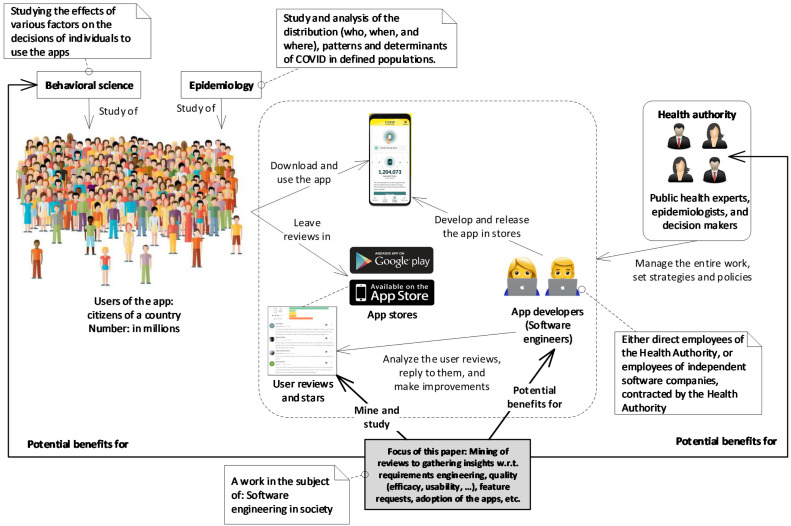

It is important to understand and clarify the research context (scope) of our work. To best present that, we have designed a context diagram as shown in Fig. 3.

Fig. 3.

Research context of this study, including the key stakeholders: app users (the public), app software engineers, public-health experts, and decision-makers.

At the center of the study are the contact-tracing apps and the reviews entered in the app stores by their users, who are citizens of a given country and often number in the millions. A team of software engineers develops and releases the app in the stores.

A team of public-health experts and decision-makers who work for a country’s Health Authority (e.g., ministry) manage the entire work (project), set strategies and policies related to the apps and their release to the public. Software engineers are either direct employees of the Health Authority or employees of an independent software company, which is contracted by the Health Authority to develop and maintain the app.

The focus of this paper is to mine the user reviews and gather insights, with the aim of providing benefits for various stakeholders: the software engineering teams of the apps, public-health experts, decision-makers, and also the research community in this area.

We should mention that the involved teams of software engineers may already read and analyze the user reviews, sometimes replying to them in the app stores, and make improvements in their apps accordingly. However, those software engineers often only focus on reviews of their own apps. Our study takes a different angle on the issue by considering several apps and analyzing the various trends in the reviews of those apps. We will discuss the apps under study and how we have selected (sampled) them from among all worldwide contact-tracing apps in Section 3.3.

While our focus in this work positions this work in the area of software and software engineering in society (Kazman and Pasquale, 2019), we also show in Fig. 3 two related fields (behavioral science and epidemiology), which we reviewed for relevant literature related to contact-tracing apps, in Section 2.5. Our analysis in this paper (Section 4) could provide potential benefits to researchers and practitioners in those fields as well.

3.2. Research method and research questions

The research method applied in this paper is an “exploratory” case study (Runeson and Höst, 2009). As defined in a widely-cited guideline paper for conducting and reporting case study research in software engineering (Runeson and Höst, 2009), the goals of exploratory studies are “finding out what is happening, seeking new insights and generating ideas and hypotheses for new research”, and those have been the goals of our study.

For data collection and measurement, we used the Goal-Question-Metric (GQM) approach (Basili, 1992). Stated using the GQM’s goal template (Basili, 1992), the goal of the exploratory case study reported in this paper is to understand and to gain insights into the user reviews (feedback) of a subset of COVID contact-tracing apps from the point of view of stakeholders of these apps (e.g., app developers, decision-makers, and public health experts).

Based on the above goal and also given the types of user review data available in app stores, we derived the following research questions (RQs):

-

•

RQ1: What ratios of users are satisfied/dissatisfied (happy/ unhappy) with the apps?

-

•

RQ2: What level of diversity/variability exists among different reviews and their informativeness?

-

•

RQ3: What are the key problems reported by users about the apps?

-

•

RQ4: By looking at the “positive” reviews, what aspects have users liked about the apps?

-

•

RQ5: What feature requests have been submitted by users in their reviews?

-

•

RQ6: When comparing the reviews of Android versus the iOS versions of a given app, what similarities and differences could be observed?

-

•

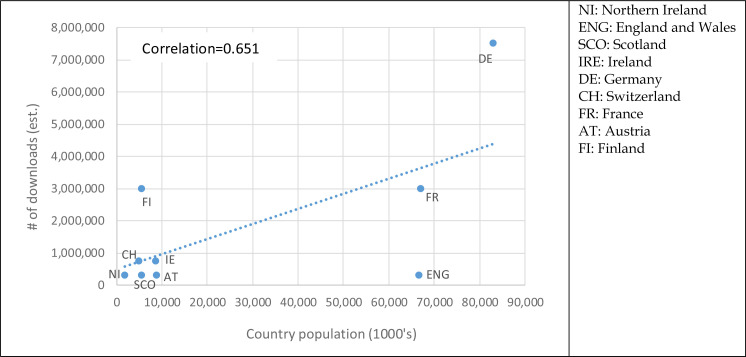

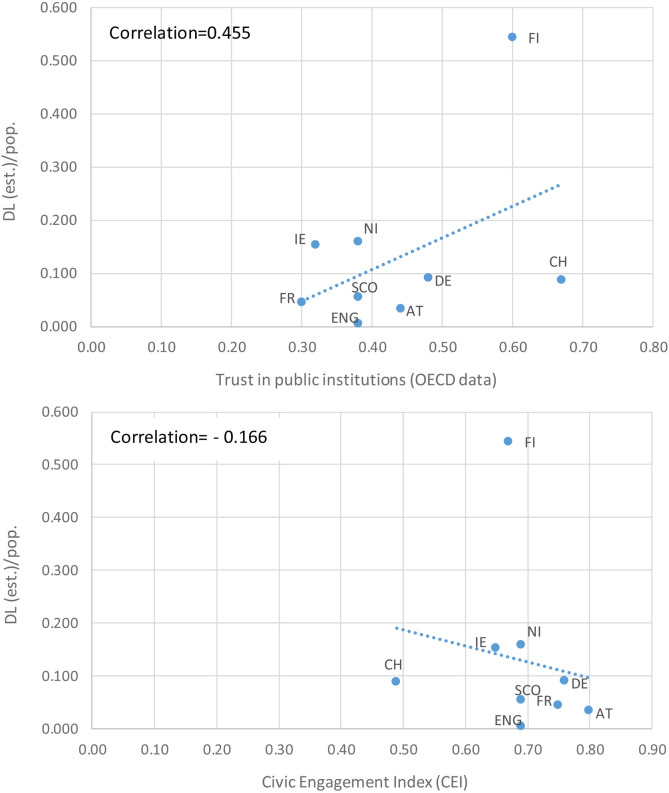

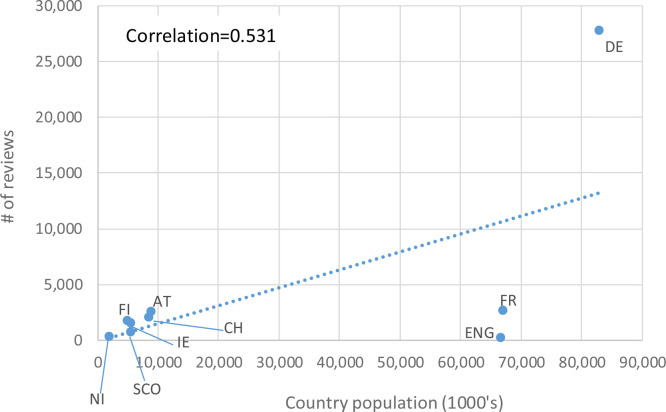

RQ7: Is there a correlation between the number of app downloads and the country’s population size?

-

•

RQ8: Are there correlations between the number of reviews and the country’s population or the number of downloads? And also, what ratio of app users has provided reviews?

-

•

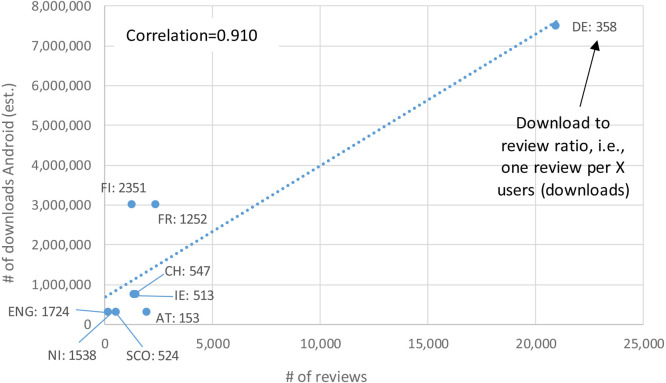

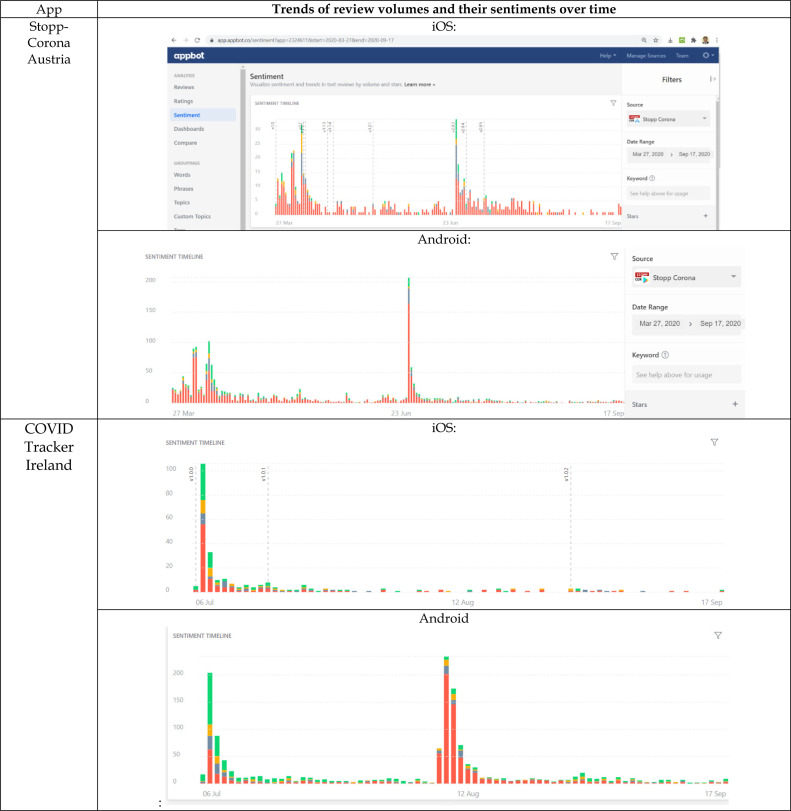

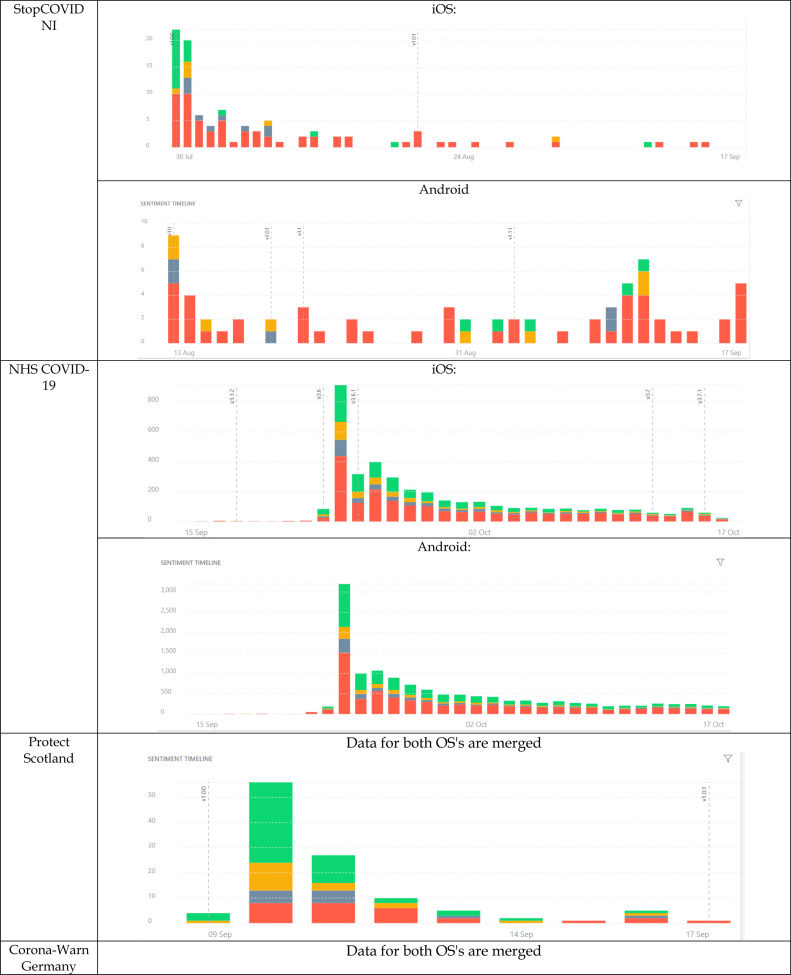

RQ9: What insights can be observed from the trends of review volumes and their sentiments over time?

An important aspect of our research method is the data analysis technique, which is mainly data mining. As we discuss in-depth in Section 3.4, we have selected and used a widely used commercial app-review data mining and analytics tool.
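To make the quantitative research questions more concrete, the following minimal sketch shows how the quantities behind RQ1 (satisfaction ratios), RQ7 and RQ8 (correlations, and the share of users who leave reviews) could be computed. It is an illustration only, not the commercial tool's method: the function names are hypothetical, and treating 4 to 5 stars as "satisfied" and 1 to 2 stars as "dissatisfied" is an assumption made here for the example.

```python
from math import sqrt


def satisfaction_ratios(stars, positive_min=4, negative_max=2):
    """Return (satisfied, dissatisfied) shares of a list of star ratings.

    The thresholds are assumptions for illustration: ratings >= positive_min
    count as satisfied, ratings <= negative_max as dissatisfied.
    """
    total = len(stars)
    satisfied = sum(1 for s in stars if s >= positive_min)
    dissatisfied = sum(1 for s in stars if s <= negative_max)
    return satisfied / total, dissatisfied / total


def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long numeric sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


def review_ratio(n_reviews, n_downloads):
    """Share of app users (approximated by downloads) who wrote a review."""
    return n_reviews / n_downloads


if __name__ == "__main__":
    # Made-up ratings, for demonstration only.
    print(satisfaction_ratios([5, 4, 3, 2, 1, 1]))
    # Made-up population and download figures (in millions), for demonstration only.
    print(pearson([5.0, 10.0, 60.0, 80.0], [1.0, 1.5, 2.0, 18.0]))
    print(review_ratio(50, 1000))
```

For RQ7, `xs` would hold the population of each country and `ys` the number of downloads of its app; for RQ8, the same function can be applied to review counts against population or downloads.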

3.3. Apps under study: Sampling a subset of all worldwide contact-tracing apps

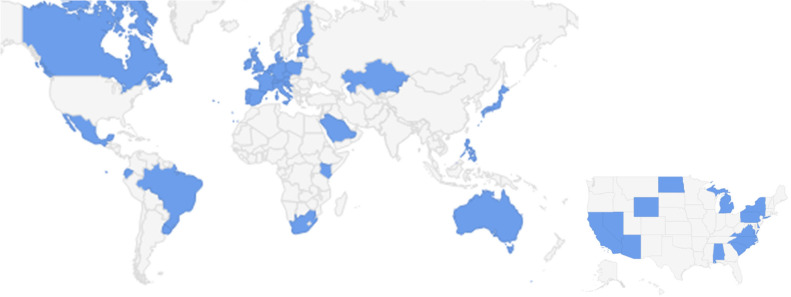

As discussed in Section 1, according to a regularly updated online article22 in the grey literature, more than 78 countries and regions have developed so far (or are developing) contact-tracing apps. At least five other open-source contact-tracing implementations have been developed, based on the Apple-Google Exposure Notification API,23 e.g., the apps by MIT and MITRE Corporation. They could be, in principle, reused and adapted by any country/region’s healthcare agency. We show in Fig. 4 the 35 countries and 15 US states that have developed and published contact-tracing apps. These data have been taken from the above online article22 (as of mid-September 2020).

Fig. 4.

The 35 countries and 15 US states which have developed contact-tracing apps.

Analyzing user reviews of “all” those 50+ apps would have been a major undertaking; instead, we decided to sample a subset of nine apps from all worldwide contact-tracing apps. Also, to make the assessments more comparable, we limited the sampling to European countries by selecting the four apps developed in the British Isles and five apps from mainland Europe. We selected the apps developed for England and Wales (parts of the UK), the Republic of Ireland, Scotland, Northern Ireland, Germany, Switzerland, France, Austria, and Finland.

Table 2 lists the names, key information (such as first release dates and versions since the first release), and descriptive statistics of both Android and iOS versions of the nine selected apps. Each app can easily be found in each of the two app stores by searching for its name. Let us note that all data used for our analysis in this paper was gathered on September 17, 2020. We discuss in the next section the tools we used to extract and mine the data in this paper (including those shown in Table 2).

Table 2.

The sampled apps and their descriptive statistics (*: As discussed in the text, all data used for our analysis in this paper were gathered on Sept. 17, 2020)

| App | OS | First release date | # of downloads | Versions since first release | # of reviews* (as of our analysis) | Avg. stars of reviews* | Ratio of Android to iOS reviews* |
|---|---|---|---|---|---|---|---|
| 1-StopCOVID NI | Android | July 28, 2020 | 100,000+ | 2 | 195 | 3 | 2.01 |
| | iOS | | – | | 97 | 2.5 | |
| 2-NHS COVID (ENG) | Android | August 13, 2020 | 100,000+ | 2 | 174 | 1.9 | 2.76 |
| | iOS | | – | | 63 | 2.3 | |
| 3-Protect Scotland (SCO) | Android | September 10, 2020 | 100,000+ | 3 | 573 | 4 | 5.21 |
| | iOS | | – | | 110 | 4 | |
| 4-COVID Tracker Ireland (IE) | Android | June 19, 2020 | 500,000+ | 3 | 1,463 | 2.9 | 5.34 |
| | iOS | | – | | 274 | 3.1 | |
| 5-Corona-Warn Germany (DE) | Android | June 25, 2020 | 5,000,000+ | 8 | 20,972 | 2.7 | 3.10 |
| | iOS | | – | | 6,772 | 2.3 | |
| 6-SwissCovid (CH) | Android | June 18, 2020 | 500,000+ | 9 | 1,370 | 3.1 | 2.10 |
| | iOS | | – | | 652 | 3.1 | |
| 7-StopCovid France (FR) | Android | June 6, 2020 | 1,000,000+ | 10 | 2,397 | 2.6 | 9.95 |
| | iOS | | – | | 241 | 2.1 | |
| 8-Stopp Corona Austria | Android | Mar 27, 2020 | 100,000+ | 10 | 1,961 | 2.4 | 3.27 |
| | iOS | | – | | 599 | 2 | |
| 9-Finland Koronavilkku (FI) | Android | August 31, 2020 | 1,000,000+ | 3 | 1,276 | 3.4 | 5.41 |
| | iOS | | – | | 236 | 3.3 | |

In terms of the number of downloads, we did not find any publicly available exact metrics in the app stores. The Google Play Store provides only approximate download counts in the form of, for example, 100,000+ (meaning 100,001–500,000). The Apple App Store provides neither exact nor estimated download counts for iOS apps.

An interesting point in Table 2 is that some apps have had many versions since their first release, while others have had only a few. Each app version has received between 63 (NHS COVID on iOS) and 20,972 reviews (Corona-Warn Germany on Android), and counting. It is interesting to see that, in all cases, the Android versions have received more reviews than the iOS versions. This seems to align with the general trend in the app industry, as reported in the grey literature: “Android users tend to participate more in reviewing their apps”23 and “Android apps get way more reviews than iOS apps”.24
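The review-count columns of Table 2 can be cross-checked with a few lines of code. The following is a minimal sketch in Python; the dictionary and function names are our own illustrative choices, while the counts are copied from Table 2.

```python
# (Android reviews, iOS reviews) per app, copied from Table 2.
REVIEWS = {
    "StopCOVID NI": (195, 97),
    "NHS COVID": (174, 63),
    "Protect Scotland": (573, 110),
    "COVID Tracker Ireland": (1463, 274),
    "Corona-Warn Germany": (20972, 6772),
    "SwissCovid": (1370, 652),
    "StopCovid France": (2397, 241),
    "Stopp Corona Austria": (1961, 599),
    "Koronavilkku Finland": (1276, 236),
}


def android_to_ios_ratio(android: int, ios: int) -> float:
    """Ratio of Android to iOS review counts, rounded to two decimals."""
    return round(android / ios, 2)


# Ratio per app (last column of Table 2) and total size of the dataset.
ratios = {app: android_to_ios_ratio(a, i) for app, (a, i) in REVIEWS.items()}
total_reviews = sum(a + i for a, i in REVIEWS.values())
```

Under these inputs, every ratio is above 1 (more Android than iOS reviews for each app), and the total equals the dataset size of 39,425 reviews reported in this paper.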

We conduct and report correlation analyses in Section 4.7 on some of the metrics shown in Table 2.

We should mention that in the writing phase of this paper (in November 2020), we learned that France had launched25 a new contact-tracing app named TousAntiCovid (literally: “All Anti Covid”) in late October 2020, which replaced the previous app, StopCovid, in the app stores. However, the review data that we had fetched using our chosen analytics tool (AppBot, as discussed in the next section) extended only to mid-September, so our analysis concerns France’s StopCovid app, and the dataset is consistent with respect to that app.

3.4. Tool used to extract and mine the app reviews, and the dataset

We wanted to use an automated approach to extract, mine, and analyze the apps’ user reviews. We came across the Google Play API,26 which provides a set of functions (web services) to get such data. At the same time, we found that there are many powerful online tools that fetch the review data from app stores and even include useful advanced features such as text mining, topic analysis, and sentiment analysis (Guzman and Maalej, 2014) on review texts. The large number of such tools indicates that an active market for app review “analytics” is emerging. There are also various research-prototype tools for mining user requirements and feedback, such as github.com/openreqeu and github.com/supersede-project. However, we found that only a handful of approaches and commercial tools work out of the box with high usability.

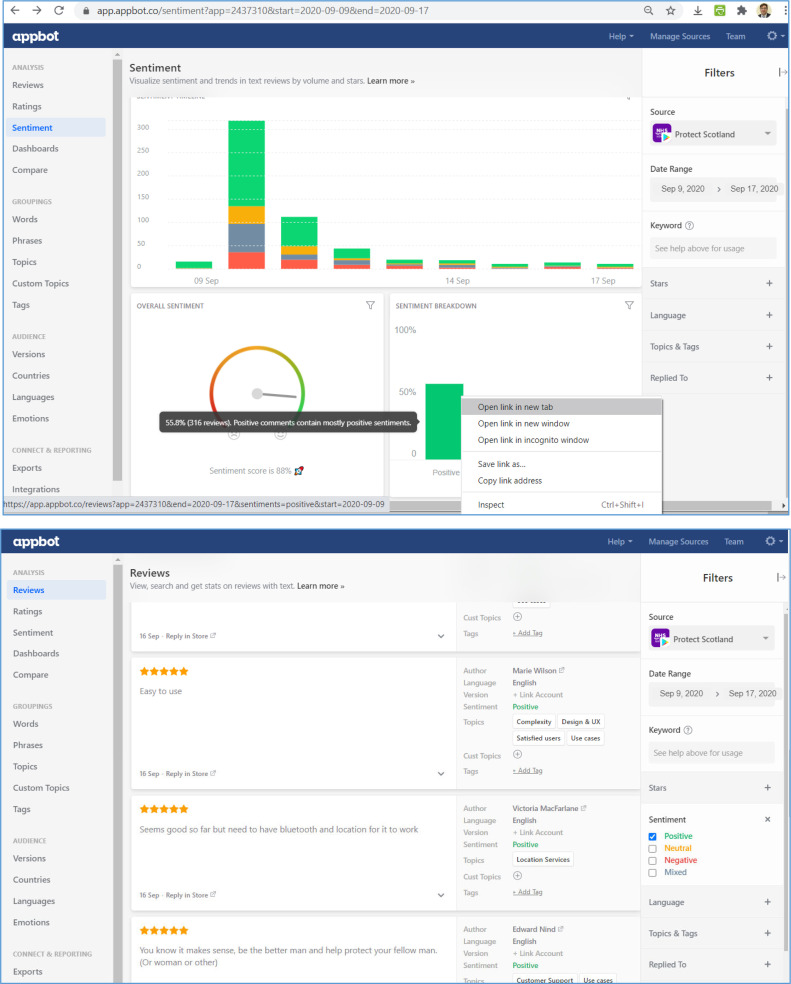

We came across a high-quality candidate tool to extract and mine the app reviews, i.e., a commercial tool named AppBot (appbot.co). The tool provides a large number of data-mining and sentiment analysis features. For example, as we will use in Section 4.1, AppBot uses an advanced method, based on AI and Natural Language Processing (NLP), to assign one of four types of sentiment to each given review: positive, neutral, mixed, and negative. Also, as we will discuss in Section 4.5, another feature of AppBot is to automatically distinguish reviews that contain “Feature requests” submitted by users among all reviews of an app.

To do the above analysis, there have been specific papers that have proposed (semi-) automated techniques, which could be somewhat seen as the competitors for commercial App-analytics tools, such as AppBot. For example, a paper by Maalej and Nabil (2015) introduced several probabilistic techniques to classify app reviews into four types: bug reports, feature requests, user experiences, and ratings. The approach uses review metadata such as the star rating and the tense, as well as text classification, NLP, and sentiment analysis techniques.

Other papers have proposed or used sentiment techniques to classify each review, e.g., into a positive or negative review, just like what the AppBot tool does. For example, the authors of Guzman and Maalej (2014) used NLP techniques to extract the user sentiments about apps’ features.

In summary, to extract and mine the app reviews, we could either use the approaches presented in the above papers or the commercial tool AppBot. To make our choice, we tried the AppBot tool on several apps in our selected pool and observed that the tool works well and its outputs are precise. Also, the fact that “24 of Fortune-100 companies” (according to the tool’s website: appbot.co), e.g., Microsoft, Twitter, BMW, LinkedIn, Expedia and New York Times, are among the users of the tool was a strong motivation for us in favor of AppBot over the techniques presented in the above papers. In addition, almost all techniques presented in the above papers had no publicly available tool support; had we chosen them, we would have had to develop new tools, which was extra work for which we saw no reason. Thus, we selected and used AppBot for all the data extraction and data mining.

However, we were still curious about the precision of the analyses (e.g., sentiment-analysis algorithm) done by AppBot. We initiated personal email communication with the co-founder of AppBot, asking about the precision of the analyses by the tool. The reply that we received was: “We [have] trained our own sentiment analysis so it worked well with app reviews. Here’s the details of our algorithm:

- Developed specifically for short forms of user feedback, like app reviews
- Understands the abbreviations, nuanced grammar and emoji
- Powered by machine learning
- Over 93% accuracy
- Trained on over 400 million records”

We thus were quite satisfied that the tool that we were going to use has high quality and high precision in the analyses and results that it produces.

From another perspective, we are followers of the “open science” philosophy and reproducible research, especially in empirical software engineering (Fernández et al., 2019), and we believe that empirical data generated and analyzed in any empirical software engineering study should be provided online (when possible) for use by other researchers, e.g., for replication and transparency. Following that principle, we provide all the data extracted and synthesized for this paper in the following online repository: www.doi.org/10.5281/zenodo.4059087. Since we acquired a paid license for the commercial tool AppBot to download and analyze the raw review data, we cannot share the raw dump of all review data for all the apps in that repository; instead, we share there the aggregated statistics that we gathered from the raw review data. Interested readers can acquire a license for the tool (AppBot) and download the raw data.

We also think that some readers may be interested in exploring the dataset and reviews on their own and possibly conducting further studies like ours. To help with that, we have recorded and provided a brief (10-minute) video of live interaction with the dataset (analyzed in this paper) using AppBot, which can be found at youtu.be/qXZ_8ZTr8cc.

3.5. Sampling method for each RQ

Given the considerable size of review datasets of the apps, for the case of certain RQs in our study (Section 3.2), we had to choose and apply suitable sampling methods to be able to systematically address each RQ (in Sections 4.1–4.8). In Table 3, we present the sampling methods that we applied to address the RQs.

When planning and applying the following sampling approaches, we benefitted from sampling guidelines in software engineering research (Baltes and Ralph, 2020) and general literature about sampling (Henry, 1990).

Table 3.

Sampling methods applied to address the research questions.

| Research Question | Sampling methods |
|---|---|
| RQ1: What ratios of users are satisfied/dissatisfied (happy/unhappy) with the apps? | All reviews were analyzed to generate sentiment charts of all reviews of each app. |
| RQ2: What level of diversity/variability exists among different reviews and their informativeness? | All reviews were analyzed to generate box plots of review text length. Then, we used “stratified” random sampling to choose a few reviews for discussion in the paper text: choosing a few “long” reviews (in terms of text length) and a few “short” reviews to discuss the issues via examples. |
| RQ3: What are the key problems reported by users about the apps? | All reviews were analyzed via word-cloud visualizations. Then, we used “stratified” random sampling to choose a few reviews for discussion: choosing a few reviews at random from each group of key topics (words) appearing the most in reviews. |
| RQ4: By looking at the “positive” reviews, what aspects have users liked about the apps? | The set of “positive” reviews for each app was derived using the AppBot tool. We also calculated the ratios of positive reviews among all reviews of an app, and report them in the paper (Section 4.4). Then, we used random sampling to choose a few reviews for discussion in the paper text. |
| RQ5: What feature requests have been submitted by users in their reviews? | The set of “feature request” reviews for each app was derived using the AppBot tool. Then, we used random sampling to choose a few reviews for discussion in the paper text. |
| RQ6: When comparing the reviews of Android versus the iOS versions of a given app, what similarities and differences could be observed? | All reviews were analyzed to generate charts. To compare the problems reported for each OS version, we used random sampling to choose a few reviews for discussion in the paper text. |
| RQ7: Is there a correlation between the number of app downloads and the country’s population size? | All reviews were analyzed to generate charts. |
| RQ8: Are there correlations between the number of reviews and the country’s population or the number of downloads? And also, what ratio of app users has provided reviews? | All reviews were analyzed to generate charts. |
| RQ9: What insights can be observed from the trends of review volumes and their sentiments over time? | All reviews were analyzed to generate charts. |

4. Results

We present the results of our analysis by answering the RQs of our study. Note that we examine the nine RQs (as raised in Section 3.2) in detail in the following sub-sections, and thus this section is quite extensive in terms of size and depth. Since most RQs are quite “independent” from each other, the reader can read each of the following result sub-sections independently and does not have to read all of them sequentially and in full.

4.1. RQ1: What ratios of users are satisfied/dissatisfied (happy/unhappy) with the apps?

Our first exploratory RQ (analysis) was to assess the ratios of users who, as per their reviews, have been happy or unhappy with the apps.

“Stars” (a value between 1 and 5) are the built-in rubric of app stores (both the Google Play Store and the Apple App Store), which lets users express their level of satisfaction or dissatisfaction with an app when they submit their review. This feature is also widely used in many other online software systems, such as online shopping (e-commerce) web applications, including Amazon. For online shopping and also paid mobile apps, the number of “stars” on a product (or app) often strongly impacts the choice of other users whether to buy the product (or app) or not (Hu et al., 2019), a relationship also seen in the levels of adoption of apps in mobile app stores (Genc-Nayebi and Abran, 2017).

A user can choose between 1 and 5 stars when s/he submits a review. Another, more sophisticated way to derive users’ satisfaction with an app is to look at the semantic tone of the review text, e.g., when a user mentions in her/his review: “I really like this app!”, that clearly indicates her/his satisfaction with the app. On the other hand, a review text like: “the app crashed on my phone several times. Thus, it is not a usable app.” implies the user’s dissatisfaction with the app. Automatic analysis of this kind has some limitations, especially on longer textual reviews, but sentiment can be successfully detected for the majority of reviews (Genc-Nayebi and Abran, 2017).

In the NLP literature, automatic identification of the semantic tone of a given text is referred to as sentiment analysis (Liu, 2012). Sentiment analysis refers to the use of NLP to systematically quantify the affective state of a given text. A given text can have four types of sentiments (Liu, 2012): positive, negative, neutral, and mixed. A positive sentiment denotes that the text has a positive tone in its message. “Neutral” sentiment implies that there is no strong sentiment in the text, e.g., “I have used this app”. A text is given the “mixed” sentiment when it has conflicting sentiments (both positive and negative).

Our chosen data-mining tool (AppBot) supports the above four types of sentiments for each given review: positive, neutral, mixed, and negative sentiment. To classify the sentiment for a given review, AppBot calculates and provides a sentiment score of each review (a value between 0%–100%).

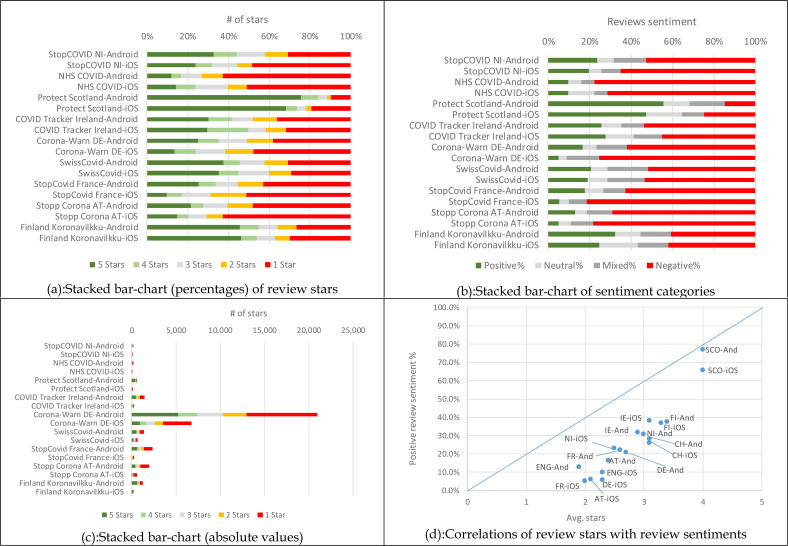

We show in Fig. 5 the distribution of stars as entered by the users in reviews and also the distribution of reviews’ sentiment categories. We show both a 100% stacked bar and a stacked bar of absolute values for the stars. As we can see, since the German Corona-Warn app has received many more reviews compared to the others in the set, it has overshadowed the others in the stacked-bar figure.

Fig. 5.

Distribution of review stars, and review sentiment categories.

We can see from these charts and also the average stars of each app (Table 2) that the users are generally dissatisfied with the apps under study, except the Scottish app. We furthermore averaged the stars from the mean score of Android and iOS versions of each app, e.g., for StopCOVID NI, this resulted in 2.75 (average of 3 and 2.5). Based on this metric, the Protect Scotland app is the highest starred (4/5), and NHS COVID is the least starred (2.1/5). The average of stars for all the other apps ranges between these two values. We should note that we have not installed nor tried any of the apps, and thus all our analyses are purely based on mining user reviews.
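The per-app averaging described above can be reproduced directly from the average-star column of Table 2. The following is a minimal sketch in Python; the variable and function names are our own illustrative choices, and the unweighted mean of the two OS versions is the metric described in the text (it does not weight by the number of reviews).

```python
# (Android avg. stars, iOS avg. stars) per app, copied from Table 2.
STARS = {
    "StopCOVID NI": (3.0, 2.5),
    "NHS COVID": (1.9, 2.3),
    "Protect Scotland": (4.0, 4.0),
    "COVID Tracker Ireland": (2.9, 3.1),
    "Corona-Warn Germany": (2.7, 2.3),
    "SwissCovid": (3.1, 3.1),
    "StopCovid France": (2.6, 2.1),
    "Stopp Corona Austria": (2.4, 2.0),
    "Koronavilkku Finland": (3.4, 3.3),
}


def mean_stars(android: float, ios: float) -> float:
    """Unweighted mean of the Android and iOS average stars."""
    return round((android + ios) / 2, 2)


app_stars = {app: mean_stars(a, i) for app, (a, i) in STARS.items()}
highest = max(app_stars, key=app_stars.get)
lowest = min(app_stars, key=app_stars.get)
```

With the Table 2 values, `highest` is the Protect Scotland app and `lowest` is NHS COVID, matching the ranking stated above.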

One very interesting consideration is what factors have led to the Scottish app being ranked the highest in terms of stars. Reviewing a subset of its reviews revealed that the app seems easy to use and is quite effective, e.g., one user said: “Brilliant app. It collects zero personal data, no sign ups, no requirement to turn on location, nothing! All you have to do is turn on Bluetooth, that’s it.”.27 Of course, a more in-depth assessment and comparison of the apps is needed.

We were expecting that stars and the reviews’ sentiments would be correlated, i.e., if a user has left 1 star for an app, s/he has most probably also left a negative (critical) comment in the review, and vice versa. We show in Fig. 5 a scatter-plot of those two metrics, in which the 18 dots correspond to the 18 app versions under study (nine apps, each with an Android and an iOS version). The Pearson correlation coefficient of the two measures is 0.93, showing a strong correlation.
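For readers who want to recompute such a coefficient on their own data, the Pearson correlation can be sketched as follows. This is a generic textbook implementation in Python, not the tooling used in this study; the function name is illustrative, and the inputs would be the 18 pairs of average stars and positive-sentiment percentages, which are not listed in this section.

```python
import math
from typing import Sequence


def pearson_r(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient of two equally long samples."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two samples of equal length >= 2")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of co-deviations and of squared deviations from the means.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return cov / math.sqrt(var_x * var_y)
```

A value close to 1 indicates that app versions with higher average stars also tend to have a higher share of positive-sentiment reviews.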

When comparing the average stars with the positive-review sentiment percentages in Fig. 5-(d), the sentiment percentages seem to be consistently more negative (have lower values on the Y-axis). By analyzing a subset of the dataset (reviews), we observed that many reviews are similar to the following phrase/tone: “I like the app, but rant rant rant”, and then the user has entered 4 stars, for example. The “rant” (complaint) part could be quite harsh, thus causing the sentiment score of the review text to drop.

It is also interesting to see in Fig. 5-(d) that, generally, the dots of the two OS versions of each app are relatively close to each other in this scatter-plot, meaning that users have independently scored both versions of each app in quite similar levels. In some cases, the iOS version of a given app has a slightly higher average star value than the Android version, and it was the other way around for the other apps. A uniform relationship could not be observed.

4.2. RQ2: A large diversity/variability in reviews and their informativeness

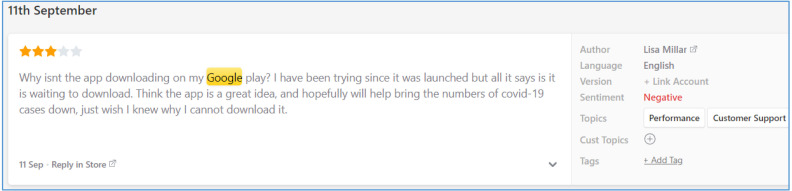

In addition to using the AppBot tool for automated text mining and sentiment analysis of the large set of reviews, it was important to read a subset of reviews, to actually get a sense of the dataset. For example, we browsed through the large list of 27,000+ reviews of the German Corona-Warn app. The Google Play Store provides a “like” button to let users express whether they found a given review ”helpful”. We found a few such reviews, such as the following28 :

“Solid user interface and good explanation of the data privacy concept. Surprisingly well done, I was expecting it to be more cumbersome. Edit: It would be good if we could see how many tokens the app has collected in the last 14 days. This would make the app more attractive to open and raise the confidence in that it actually works. Also interesting metric would be to know how many users have been warned by the app. This has been released to the public (I believe 300 notifications so far). Unfortunately, I cannot find the Android system settings which apparently shows the number of contacts collected. Either its not available easily or I just can’t find it. Anyway - I think it would be great if the app could show this information rather than asking the user to search for information in the settings”. (translated automatically from German by AppBot, which uses the Google Translate API) (see Fig. 6).

Fig. 6.

A user review and the reply by the development team for the German app.

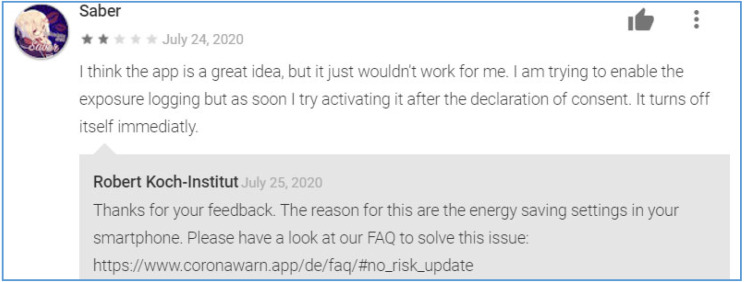

Many reviews, including the above one, were feature requests, and some of them could indeed be useful for the development team for improving the app. Many reviews also received careful replies from the development team, which was refreshing to see. For example, there was the following thread in one of the reviews29 for the German app:

From the above example review, we can see that the apps should be designed as simply as possible, since typical citizens (“laymen”) are often not “technical” people, and we cannot assume that they will review the online FAQ pages of the app to properly configure it.

Essentially, similar to any other mobile app, reviews could range from short phrases such as “Not working. Weird privacy settings”,30 which are often not useful nor insightful for any stakeholder, to detailed objective reviews (like the one discussed above), which are often useful.

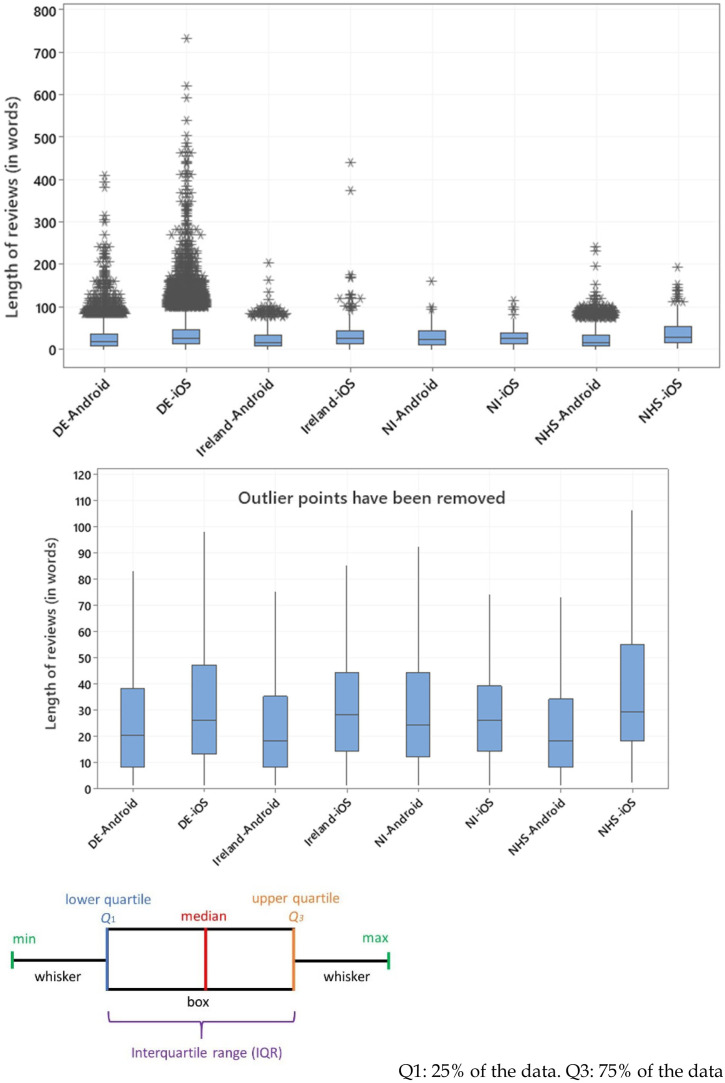

One way of analyzing the diversity/variability of reviews was to measure each review’s length in words. We gathered those data for five of the nine apps (as examples) and provided the boxplots of both OS versions of those five example apps in Fig. 7. Since we observed that there are many “outlier” data points in the box plots, we provide the plots with and without outliers. For the readers who are less familiar with boxplots, we provide a conceptual example in Fig. 7 about the meaning of the boxes in boxplots and lines in it. More details about boxplots and their terminology can be found in the statistics literature (Potter et al., 2006).

Fig. 7.

Boxplot showing the distribution of textual “length” of reviews (in words) for five example apps. With and without “outlier” data points.

As we can see in Fig. 7, for all five apps, the bulk of reviews are relatively short in length. The German app, on both Android and iOS platforms, has a more noticeable collection of longer comments, which is particularly evident for the iOS version. The slight increase of the median may be due to linguistic differences, but the relatively large number of longer (>200 word) reviews implies a great degree of user engagement in commenting on these apps, especially on iOS. It could also have cultural/social root causes, e.g., German users may often tend to provide “detailed” (extensive) feedback.
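The length-based analysis behind Fig. 7 can be approximated with standard-library code. The following is a minimal sketch in Python under two assumptions of ours: review length is counted as whitespace-separated words, and outliers are identified with the common 1.5 × IQR rule (the paper does not state which outlier rule the plots use). All names are illustrative.

```python
import statistics
from typing import Dict, List


def review_length(text: str) -> int:
    """Length of a review in whitespace-separated words (assumed definition)."""
    return len(text.split())


def boxplot_summary(lengths: List[int]) -> Dict[str, object]:
    """Quartiles, whisker limits, and outliers for a list of review lengths."""
    q1, median, q3 = statistics.quantiles(lengths, n=4, method="inclusive")
    iqr = q3 - q1
    # Assumed outlier rule: points beyond 1.5 * IQR from the box.
    low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    outliers = [x for x in lengths if x < low or x > high]
    return {"q1": q1, "median": median, "q3": q3,
            "low": low, "high": high, "outliers": outliers}
```

For example, the short review quoted earlier (“Not working. Weird privacy settings”) has a length of five words, whereas reviews of more than 200 words would typically show up among the outliers.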

As another insight, we found that a proportion of reviews included error messages or crash reports. For example, for the German app (Corona-Warn) again, a user mentioned in her/his review31: “After a few days the app stopped working. Several error messages appeared including ’cause: 3’ and ’cause: 9002’. Tried to troubleshoot it by checking the Google services version, deleting the cache, reinstalling the app etc”.

When we interpret this review, it is logical to conclude that it is quite impossible for a layperson to deal with such errors and error messages, given the nature and full public outreach of the app. Thus, we wonder whether such error messages and crashes have been one of the several reasons why the apps under study have been rated quite low in reviews overall. By reading more reviews, we observed that many users (citizens) with some technical (IT) background had taken various steps to make the apps work, e.g., reinstalling them, etc. However, we believe that, for a layperson, taking such troubleshooting steps is out of the question, and such a person would usually ignore and remove the app, and possibly would leave a harsh review for it in the app store, and submit a low score for the app.

4.3. RQ3: Problems reported by users about the apps

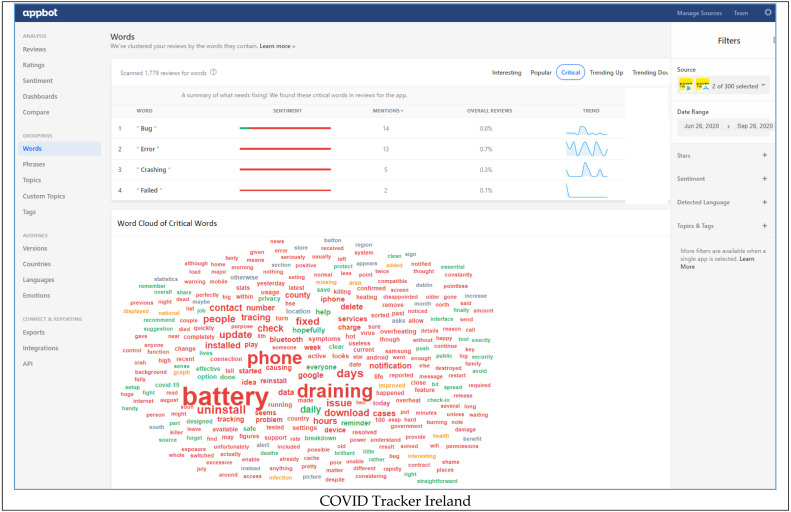

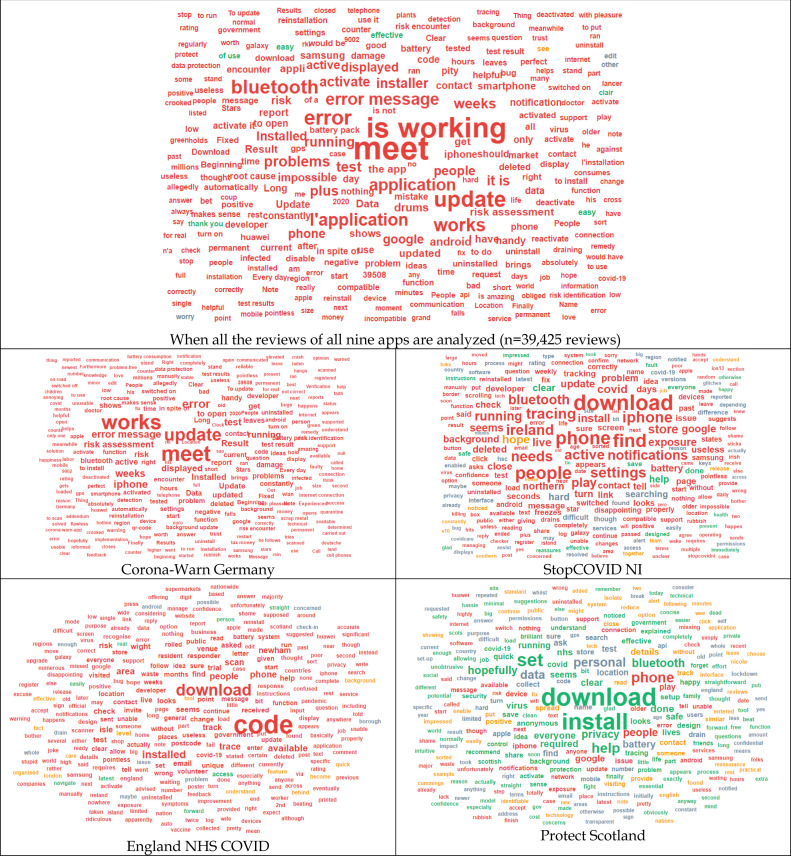

As another “core” RQ of our study, we wanted to identify the main issues (problems) that users have reported about. Having received anywhere between 63 and 20,972 reviews (and counting) as of this writing for each app, the nine apps had in total 39,425 review comments. Of course, manual analysis of such a large and diverse textual feedback was not an option. The AppBot tool provides various features such as sentiment analysis (Guzman and Maalej, 2014) and critical reviews to make sense of large review text datasets. We show the outputs of word-cloud visualization for all the nine apps in Appendix A (Fig. 33). We also include the AppBot tool’s user-interface in Appendix A, as a glimpse into how the tool works.

Fig. 33.

Word-clouds for the app reviews of all nine apps, in which phrases are color-coded based on sentiment analysis: positive (green), negative (red), neutral (grey), and mixed (orange).

For generating word clouds based on reviews, AppBot provides six options to filter review subsets: interesting reviews, popular reviews, critical reviews, trending up reviews, trending down reviews, and new reviews. AppBot has a sophisticated NLP engine to tag reviews under those six categories, for example: “The Popular tab shows you the 10 words that are most common in your reviews. This helps you to identify the most common themes in your app reviews”32 ; and “the Critical tab is a quick way to find scary stuff in your reviews. This can help isolate bugs and crashes, so you can quickly locate and fix problems in your app faster”2. Since we are interested in the problems reported by users about the apps, we filtered by “critical” reviews to generate the word-cloud visualizations shown in Appendix A.

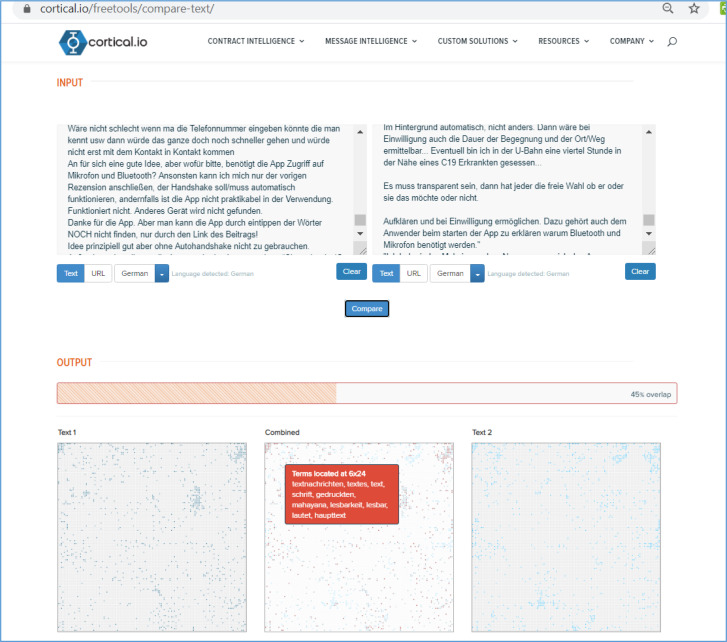

For apps of non-English-speaking nations, e.g., Germany and France, unsurprisingly, almost all reviews were in their official languages, and we used the Chrome browser’s built-in translate feature to see the review texts in English. For readers wondering about the original reviews in the original languages, we also show the word-clouds of two example apps (StopCovid France and Stopp-Corona Austria) based on their original review data.

Let us consider the COVID Tracker Ireland app as an example. As we can see in its word-cloud, “battery”, “draining”, and “uninstall” are among the most “critical” words. The fact that these apps make regular use of Bluetooth signals leads to high battery usage, and this issue has been widely discussed in many online sources.33 Furthermore, the terms “work” and “update” appear prominently with negative sentiment in the word-clouds of app reviews from Germany and Finland. By reading a subset of those reviews, we observed that it has not been obvious for many users how to use those apps properly (how to get them to “work”).

In Appendix A, words in a word cloud are colored according to their sentiments in reviews. AppBot provides four types of sentiments: positive (green labels in the word-cloud), negative (red), neutral (grey), and mixed (orange). There is another useful feature in AppBot: when we click on each word in the cloud, all the reviews containing that word are listed.

Lots of insights can be gained from the word clouds, word sentiments, and also by live interaction with the dataset in the AppBot tool (we invite the interested readers to do so). As discussed in Section 3.4, we have posted an online video of live interaction with the dataset in youtu.be/qXZ_8ZTr8cc

Of course, comparing these data and findings for two different contact-tracing apps should be done with a “grain of salt”, since their contexts (users’ demographics, software features, and requirements) are quite different. For example, England’s NHS COVID app has a feature to allow users to scan a QR code, as the government has asked shops to request shoppers to do so when entering shops. Many of the reviews for this app are about issues with that feature (see the word “code” in the word cloud), a feature that apparently does not exist in the other apps.

Among the word clouds, we can visually notice that the Protect Scotland app’s word cloud shows an overall positive picture, with lots of green (positive) sentiments. In the rest of the word clouds, red (negative) sentiments are the majority.

One word cloud in Appendix A (the second one from the top in Fig. 33) covers all data, i.e., the reviews of all apps analyzed together (n = 39,425 reviews). This word cloud shows that the reviews have a negative sentiment towards the functioning (“is working” in the word cloud) of the German app. Furthermore, there seem to be major issues with the Bluetooth handshake protocol.

In the next three sub-sections, we look at three example apps (countries) and their specific problems, as reported in user reviews. We select the two apps with the highest number of reviews: the German app (27,744 reviews, combined for both OS versions) and the French app (2,638 reviews). The case of the UK apps is also interesting, since the UK is a nation with four regions, for which three different apps have been developed: “StopCOVID NI” for Northern Ireland, the “NHS COVID-19” app for England and Wales, and “Protect Scotland” for Scotland. We therefore also analyze the case of the UK and its apps.

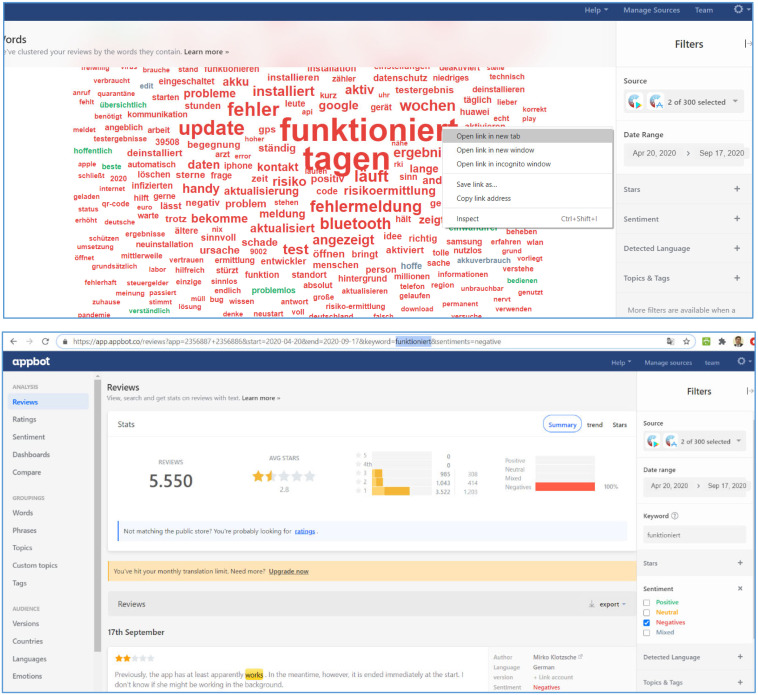

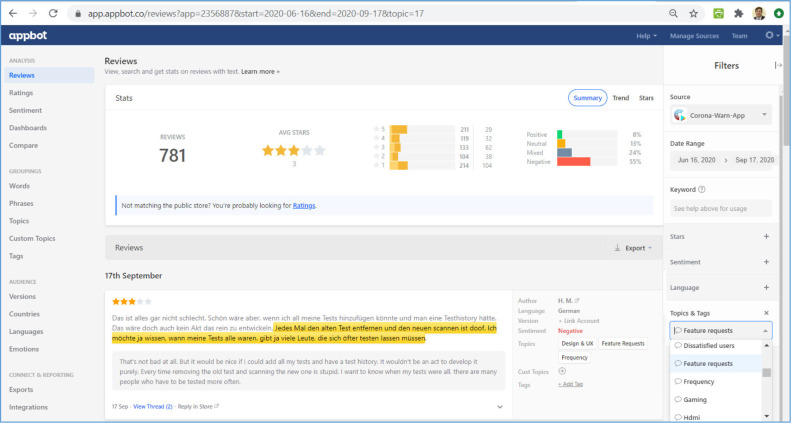

4.3.1. Problems reported about the German app

As visualized in the word-cloud in Fig. 33, one of the frequent words with negative sentiments for this app is “funktioniert” (German), meaning “works” (in English), which has appeared in 5550 negative reviews.

As discussed in Section 4.3, there is a useful feature in AppBot: when we click on each word in the cloud, all the reviews containing that word are listed (as shown in Fig. 8). To ensure reproducibility of our analysis and for the interested reader, we show in Fig. 8 the steps for retrieving the “critical” (negative) reviews in which a certain keyword (“funktioniert“ in this example) is mentioned, using the AppBot tool. As we can see in Fig. 8, the term “funktioniert” (German), “works” (English), has appeared in 5550 reviews in the time window under study (April–September 2020).

Fig. 8.

Retrieving the “critical” (negative) reviews of the German app in which a certain keyword is mentioned, using the AppBot tool.

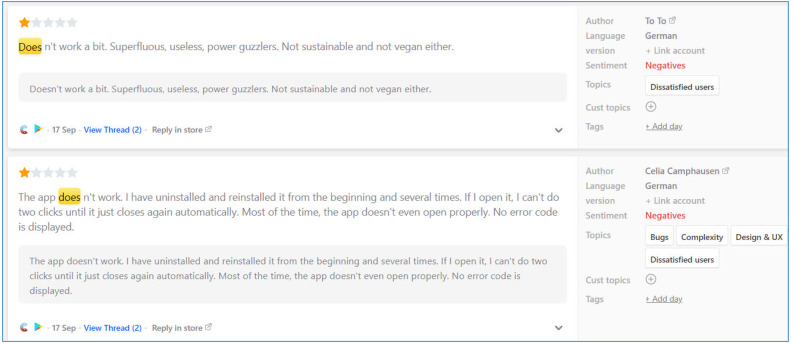

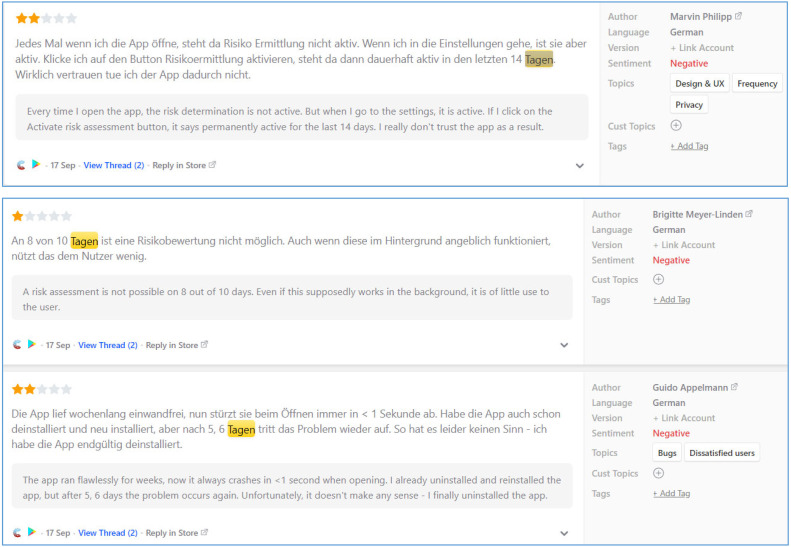

We looked at a random subset of that large review set (5550 records) which contained the keyword “works”. It turned out that most of the negative reviews with the keyword “works” were conveying the message that the app does not work and were in effect bug reports (examples are shown in Figs. 9 and 10).

Fig. 9.

Two critical (negative) reviews mentioning problems with running the German app.

Fig. 10.

A critical (negative) review mentioning sporadic (intermittent) app crashes.
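The retrieval-and-sampling step described above (filter the “critical” reviews that mention a keyword, then read a random subset) was performed interactively in AppBot. For readers working with their own export of reviews, an equivalent step could be sketched as follows in Python. The record layout (dictionaries with `text` and `sentiment` keys), the function names, and the example records are hypothetical and are not AppBot’s data format or API.

```python
import random
from typing import Dict, List

Review = Dict[str, str]  # hypothetical record: {"text": ..., "sentiment": ...}


def critical_reviews_with_keyword(reviews: List[Review], keyword: str) -> List[Review]:
    """Negative-sentiment reviews whose text contains the keyword (case-insensitive)."""
    needle = keyword.casefold()
    return [r for r in reviews
            if r["sentiment"] == "negative" and needle in r["text"].casefold()]


def sample_reviews(reviews: List[Review], k: int, seed: int = 0) -> List[Review]:
    """Reproducible random sample of up to k reviews for manual reading."""
    rng = random.Random(seed)
    return rng.sample(reviews, min(k, len(reviews)))


# Invented placeholder records, for illustration only (not real review data).
example = [
    {"text": "App funktioniert nicht", "sentiment": "negative"},
    {"text": "Funktioniert gut", "sentiment": "positive"},
    {"text": "Akku ist schnell leer", "sentiment": "negative"},
]
hits = critical_reviews_with_keyword(example, "funktioniert")
```

Fixing the random seed keeps the sampled subset stable across runs, which helps when several researchers read and discuss the same reviews.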

We also noticed that many of the reported issues were about the app not working on certain mobile devices. One example was as follows (translated from German by AppBot):

This example review, and many other reviews that we looked at, implied the occurrence of sporadic (intermittent) app crashes on specific mobile device models. Such challenges are quite common in industry and have been studied in software engineering, e.g., in Joorabchi et al. (2013). We see above that the development team has replied to this review, mentioning that they will contact the user when they have more information, but there is no newer follow-up reply about the solution. It is quite possible that the development team has fixed some of those issues in subsequent updated versions (patches).

As visualized in the word-cloud of Fig. 33, another frequent keyword with negative sentiment for the German app is “Tagen” (German), which was translated to “meet” by AppBot (it uses Google Translate). However, when we looked at the full sentences in the reviews, the correct translation was actually “days”. This keyword appeared in 4465 negative reviews, and three examples are shown in Fig. 11. Two of the example reviews indicate that a specific functionality (called “Risk assessment”) was not available for several days. The third example in Fig. 11 is a crash report.

Fig. 11.

Several critical (negative) reviews of the German app, mentioning problems with the keyword “days”.

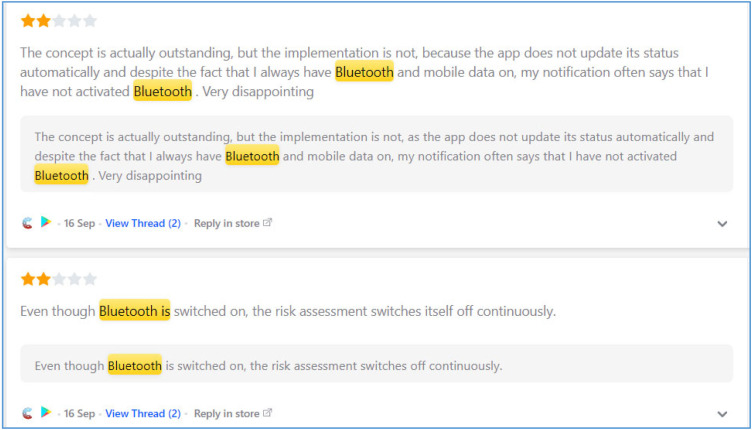

As another issue type, 2273 negative reviews mentioned the keyword “Bluetooth”. Two example reviews from that large set are shown in Fig. 12. Both of these example reviews are bug reports, but again they lack the information needed to trace and fix the bug (e.g., phone model/version and steps to reproduce the defect).

Fig. 12.

Two critical (negative) reviews of the German app, mentioning problems with the keyword “Bluetooth”.

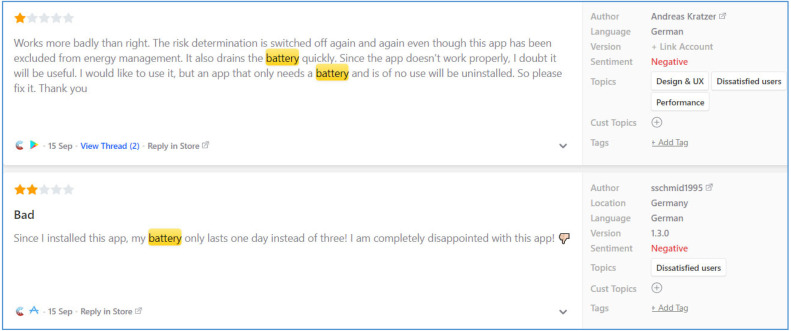

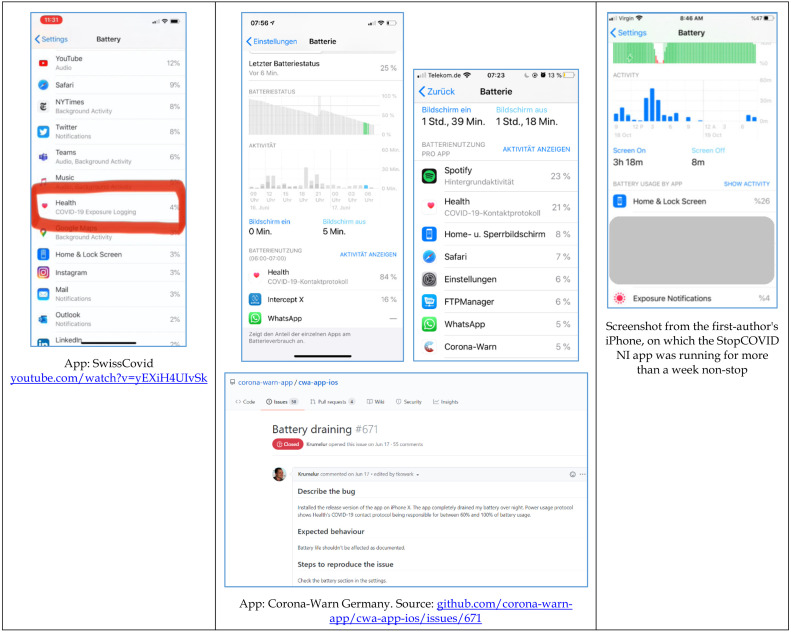

As another issue type, 1264 negative reviews mentioned the keyword “battery” (“Akku” in German). Two example reviews from that large set are shown in Fig. 13. Related to this issue, there has been a lot of discussion in the media (such as36) and on the apps’ support pages37 about high battery usage. Thus, both the public (users) and the media have complained about the issue. In response, the Android team has apparently made improvements38 to the Apple-Google Exposure Notification API, noting that “In contrast to Classic Bluetooth, Bluetooth Low Energy (BLE) is designed to provide significantly lower power consumption”.

Fig. 13.

Two critical (negative) reviews of the German app, mentioning problems with the keyword “battery”.

Many users have reported the battery usage of the apps on their phones, e.g., a YouTube video39 shows the battery usage screenshot of an iPhone on which Switzerland’s SwissCovid is running. The video showed that, over a period of 10 days, the app (“Exposure Logging” or “Exposure Notification” service on iPhone) had consumed only 4% of the battery (shown in Fig. 14). Also, many bug reports have been filed in the German app’s GitHub repository about its high battery usage, e.g.2, in which screenshots of battery usage have been submitted. We show two of those screenshots in Fig. 14 (they are in German, but the usage ratio is clearly understandable), along with a bug report in which the systematic bug report items have been provided, e.g., “Describe the bug”, “Expected behavior”, and “Steps to reproduce the issue”.

Fig. 14.

Screenshots and one bug report submitted by users about battery usage of the apps.

The first author of the paper also installed the StopCOVID NI app on his iPhone and let it run non-stop for more than a week. We provide a screenshot of the battery usage screen of his phone in Fig. 14, in which the “Exposure Notification” service has consumed only 4% of the battery, which we consider a reasonable (not high) power consumption. However, we should mention that he left his home only a few times during that week, and he barely came close to anyone. Thus, the app did not have to exchange information with other users who had the StopCOVID NI app on their phones.

We also found some discussions40 in an online forum about the UK NHS app, in which one user mentioned: “Our IT director reported massive battery drain after installing the app as in 70% to 15% on his journey home (three trains). I wonder if the drain comes from the amount of contacts you have with other people - if you are sat at home it has no contacts to ping but on trains etc. there might be hundreds”. Thus, we can observe that, as with any other mobile app, the type of usage and the movement of the user through different environments could indeed affect the battery usage of the app.

4.3.2. Problems reported about the French app

As visualized in the word-cloud of Fig. 33, two of the frequent words with negative sentiment for the French app are “application” and “l’application”, referring to “app”, which are trivial terms. When translated to English, the other frequent terms are “activate”, “install”, and “Bluetooth”. We discuss a small, randomly chosen subset of those reviews next.

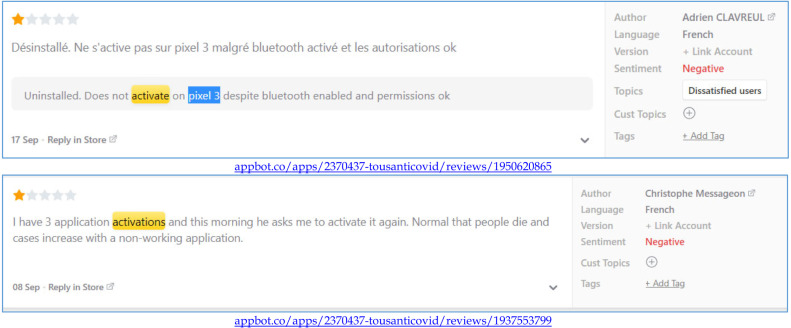

For the French app, 237 critical reviews reported problems with the keyword “activate”. Two example reviews from that set are shown in Fig. 15. We also include the permanent links to the reviews for traceability. These users have reported serious problems with activating the app, which is unfortunate.

Fig. 15.

Two critical reviews of the French app, mentioning problems with the keyword “activate”.

For the French app, 130 critical reviews reported problems with the keyword “install”. Two example reviews from that set are shown in Fig. 16.

Fig. 16.

Two critical reviews of the French app, mentioning problems with the keyword “installation”.

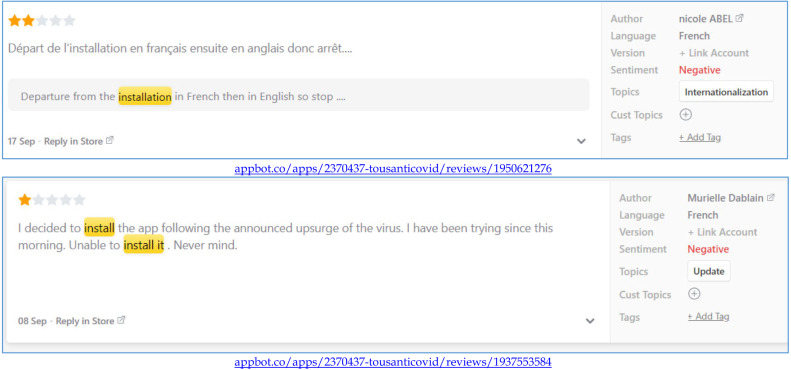

Exactly 300 critical reviews reported problems with the keyword “Bluetooth” for the French app. Two example reviews from that set are shown in Fig. 17. The first example review is about high battery drainage caused by Bluetooth, an issue also reported for the other apps in the pool.

Fig. 17.

Two critical reviews of the French app, mentioning problems with the keyword “Bluetooth”.

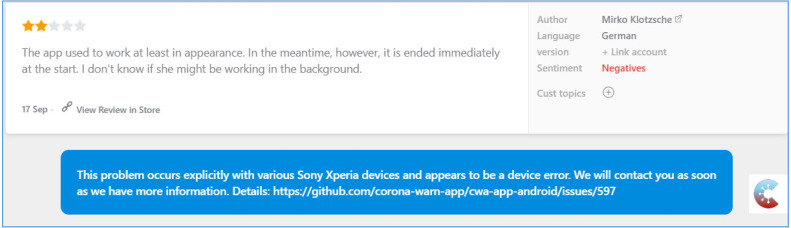

The second example review in Fig. 17 is about the incompatibility of the app with old phones. This review also raised an important issue: a large proportion of elderly people are known not to have the latest smartphones, or not to know how to install and use apps like these on their phones. In fact, a paper has been published on this very subject, entitled “COVID-19 contact tracing apps: the ’elderly paradox’” (Rizzo, 2020).

Fig. 18.

Comparing a review46 without any reply from the development team for the StopCOVID NI app and a review47 with a reply from the development team for the German app.

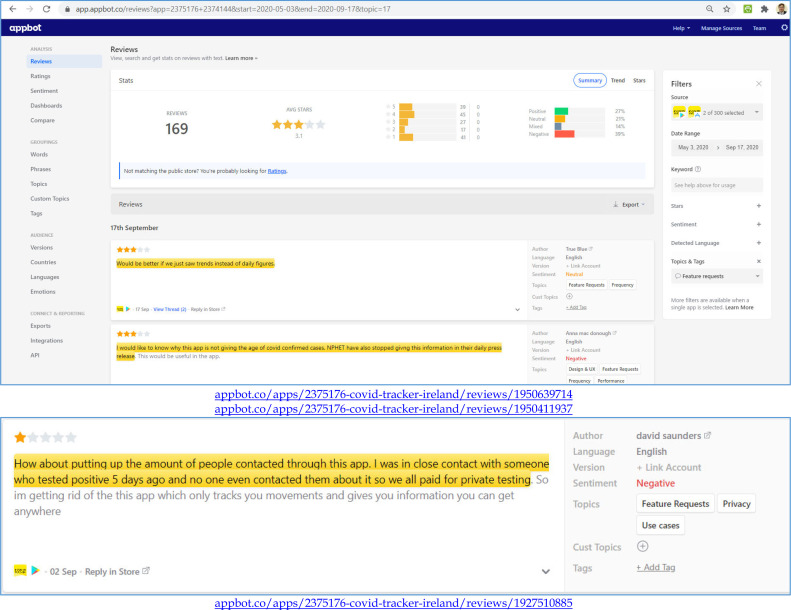

4.3.3. Problems reported about the three apps in the UK

For the four regions of the UK, three apps have been developed: NHS COVID-19 for England and Wales, StopCOVID NI for Northern Ireland, and Protect Scotland for Scotland. We review next a subset of the common problems reported for all three and then review a subset of issues reported for each of them.

Common problems reported for all three apps:

One major issue reported by users is the lack of “interoperability” between the apps, i.e., if a user from one region, using that region’s app, visits another part of the UK, the app will not record the contact IDs in the new region and, if the user enters a positive COVID result, the app will not notify those contacts. This issue has been reported in a large number of reviews, e.g.:

- “Complete and utter waste of space. Only works if I come into contact with someone else using the same backstreet application, who has managed to get tested without being turned away, and inputs a code into their app. If I bump into someone from England, Wales, Ireland, or anywhere else for that matter with COVID-19 then this app does diddly squat - What’s the point??” 41

- “it’s not linked to apps used in other parts on the UK, again a missing feature.”42

- “Live in Scotland and work in England. Only one app will work at a time. Do I choose the NHS Covid or Protect Scotland version!!” 43
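To make the interoperability gap reported in these reviews more concrete, the following toy model sketches one way such a gap can arise. It is an illustration under stated assumptions, not a description of how any of the three UK apps is actually implemented: the `KeyServer` and `Phone` classes, the key format, and the idea of “peering” two servers are all hypothetical simplifications, in which the gap is expressed as two disconnected servers for reported keys.

```python
from dataclasses import dataclass, field


@dataclass
class KeyServer:
    """Hypothetical per-app backend that stores keys of users who reported positive."""
    name: str
    diagnosis_keys: set = field(default_factory=set)
    peers: list = field(default_factory=list)  # other servers whose keys are shared

    def published_keys(self):
        # Keys offered to this app's users: own keys plus keys of peered servers.
        keys = set(self.diagnosis_keys)
        for peer in self.peers:
            keys |= peer.diagnosis_keys
        return keys


@dataclass
class Phone:
    owner: str
    server: KeyServer
    heard: set = field(default_factory=set)  # keys observed from nearby phones

    @property
    def key(self):
        # Simplification: one fixed key per phone instead of rotating identifiers.
        return f"key-{self.owner}"

    def meet(self, other):
        self.heard.add(other.key)
        other.heard.add(self.key)

    def report_positive(self):
        self.server.diagnosis_keys.add(self.key)

    def is_notified(self):
        # A notification needs an overlap between heard keys and published keys.
        return bool(self.heard & self.server.published_keys())


scotland = KeyServer("Protect Scotland")
england = KeyServer("NHS COVID-19")
alice = Phone("alice", scotland)
bob = Phone("bob", england)

alice.meet(bob)
alice.report_positive()
before = bob.is_notified()        # servers are not connected

england.peers.append(scotland)    # hypothetical key sharing between the apps
after = bob.is_notified()
print(before, after)
```

In this sketch, the contact between the two users only leads to a notification once the two servers share reported keys, which mirrors the “missing feature” that the reviewers complain about.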

Also, a number of users understandably compared the features of the three apps and complained when a given app lacked a feature provided by another UK-based app. An example review:

- “Looks great, easy to use but oh how they missed out some useful features such as a [NHS] Covid-19 [app’s] alert state notifier, scanning business QR Codes, etc. so user’s data needn’t be handed over in pubs, etc”. 44

Many users reported having problems installing the apps, e.g., 10 of the 573 reviews of the Protect Scotland Android app. Some of those installation problems were due to older phone models, but we still saw several reviews reporting that newer phone models were not able to install the apps, e.g., “Waste of time have tried to install numerous times got the very latest Samsung S20 and it doesn’t install on my phone”.45 This raises the issue of the development team not doing adequate installation testing of the app on the latest phone models. There are indeed many advanced commercial testing tools on the market, e.g., Testinium (testinium.com), to conduct such testing efficiently. As discussed in Section 2.3, the first author of the current paper served as a consultant to the development team of the StopCOVID NI app and conducted an inspection of the test plans and test cases of the app. One of his recommendations was indeed to conduct installation testing of the app on multiple phone models using such test tools.

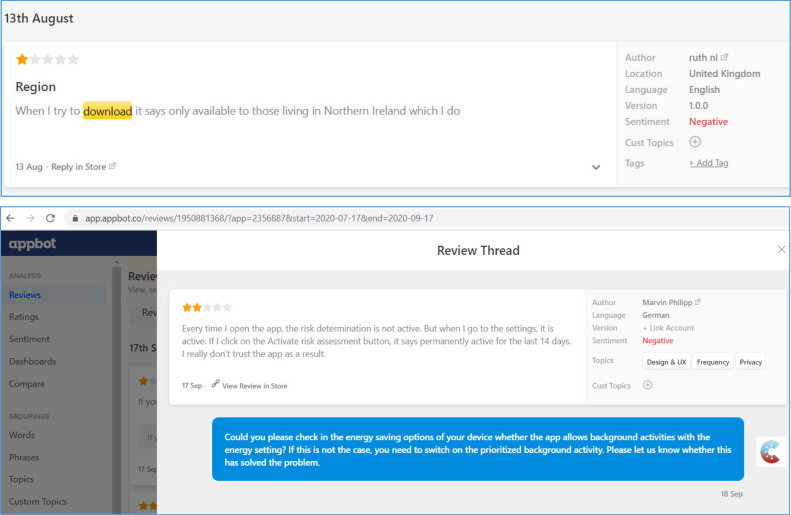

Another common issue that we noticed for the UK apps was the lack of response by the apps’ development teams to almost all reviews in the app store (only the Google Play store allows replies to reviews). This was in contrast to some other apps, e.g., the German app, whose development team has been proactive in replying to and communicating with users directly via the review threads.

StopCOVID NI app:

As visualized in the word-cloud in Fig. 33, one of the frequent words with negative sentiment for this app is “notifications”, which appeared in 12 negative reviews, e.g.:

- “Want to get people to uninstall it? Don’t produce audible notifications you haven’t been exposed this week at 6am on a Fri morning, waking people up” 48

- “I am getting a warning that exposure notifications may not work for the area I am in. As this is Northern Ireland I am unclear why it is saying this. The exposure log does not appear to have made any checks since early August. This does not give confidence that the app is working properly. I do hope the designers are reading these reviews as this appears to be a recurring issue” 49

- “I was keen to install and safe. I have iphone7 with latest update. And like so many others, I get the exposure notification error. Can’t select to turn on exposure notifications. Useless. Very disappointing” 50

- “Hopefully the app does what it says on the tin - but I get another error message that says “Exposure Notifications Region Changed”, followed by “COVID-19 Exposure Notifications may not be supported by “StopCOVID NI” in this region. You should confirm which app you are using in Settings”. I am using an iPhone 11 Pro Max running iOS 13.5.1. so I have no confidence that the app is working properly at present”. 51

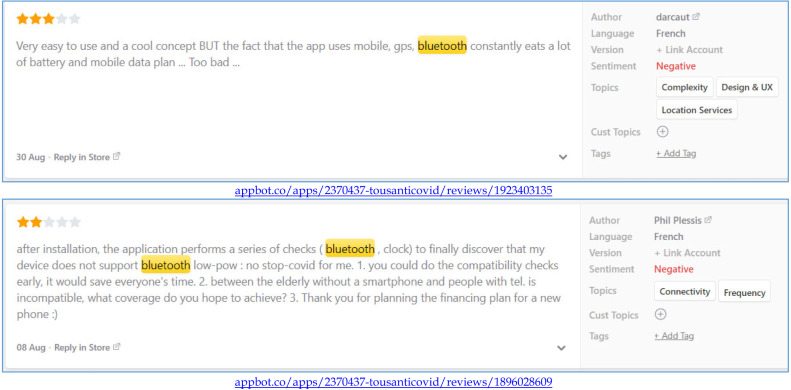

Another frequent word with negative sentiments for this app is “download”, which appeared in 16 negative reviews, e.g.:

- “When I try to download it says only available to those living in Northern Ireland which I do” 52

- “The app just tells me I need to live in NI to use it. I do. I deleted and downloaded again. Same problem” 53

- “I just downloaded this and used the ‘share this app’ function to all my contacts in Ireland and the link doesn’t work!! Not a good start for the app and doesn’t build my confidence that any other part of the app works! I am now getting multiple messages from people asking what is the link for. Very disappointing” 54

Some randomly sampled negative reviews, under the category of the “download” issue, were:

- “Tried to download on an elderly relative’s Samsung phone but the app isn’t compatible. Nowhere can I find a list of compatible devices or Android versions. Sadly the app won’t help the most vulnerable” 55

- “What is the point of urging people to install this app to stop the spread of covid 19 yet when the app is not working for some people the developers don’t even bother to fix or reply to the email that they ask people to send if there is a problem. Google tried their best to resolve the matter immediately and also notified the developer yet 5 days past and nothing” 56

- “The app does not seem to work correctly unless automatic battery optimisation is switched to manually allow app to run in background. Settings -> Battery -> App launch -> StopCOVID NI. Might also be under Applications -> StopCOVID NI -> Battery Optimisation depending on version. Once I switched this I went from 5 checks over 10 days to 8 checks in a single day” 57

- “As others have said, the app does not properly run in the background as intended - the app needs to be open and the phone unlocked. Good idea in theory, however poor execution, going forward this app will be useless without correction.” 58

NHS COVID-19 app:

As visualized in Fig. 33, one of the frequent words with negative sentiment for this app is “code”, which appeared in 153 of the 341 negative-sentiment reviews for this app. This word does not refer to source code, but to the QR code that is used in the app (see the photo of a QR code displayed at a restaurant in Fig. 19). There were a great number of criticisms, and the following are only some examples:

Fig. 19.

A QR code to be scanned with the NHS COVID-19 app before entering a venue in London, UK.

- Well, as a business we are directed to register for track and trace. Having registered for a QR code and subsequently printed said code. I thought, in good naval tradition, ’Lets give it a test before we put the poster up’. So download the app from Play Store. Scanned the code and a message pops up ’There is no app that can use this code’. Next move, open the application. What do we find!! Currently only for NHS Volunteer Responders, Isle of White and Newham residents’. What is the point of publicizing this if it does not have basic functionality? Measure twice cut once MrX Also there should be an option for no Star as it appropriate for this application!59 Poor alignment of publicity timing

- QR location doesn’t seem to work for me. Used a standard QR reader on my phone and it took me straight to venue but the QR reader in the app said QR code not recognized.60 Poor testing of that module software

-

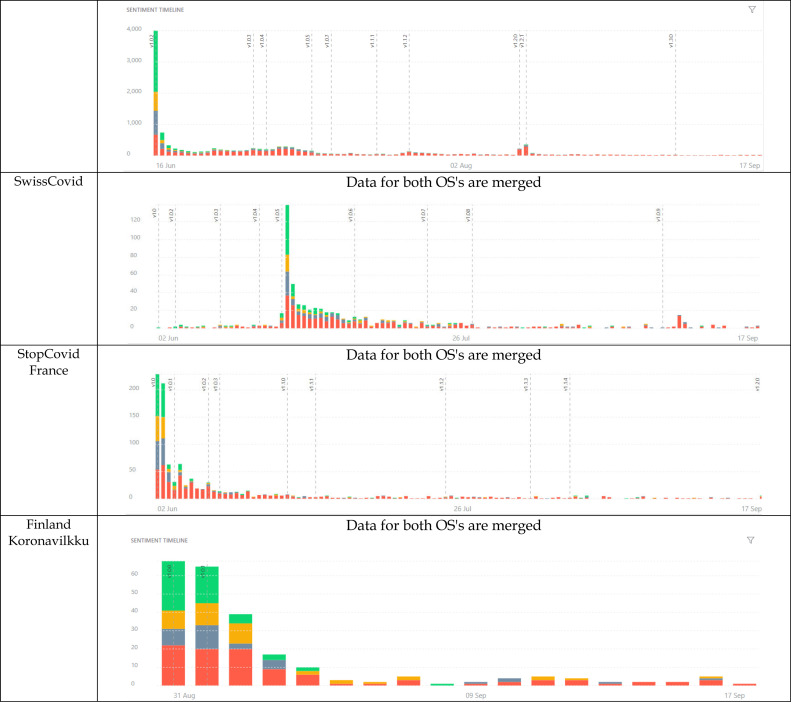

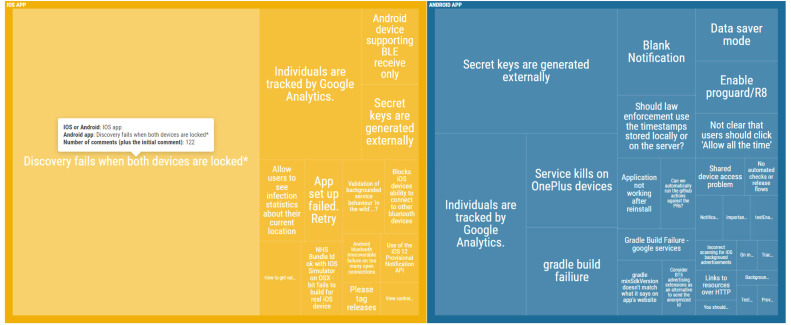

•