Key Points

Question

Could an artificial intelligence (AI)–assisted approach improve prostate cancer grading and quantification?

Findings

In this diagnostic study including 1000 whole-slide images from biopsy specimens of 589 men with prostate cancer, a deep neural network–based AI-powered platform was able to detect cancer with high accuracy and efficiency at the patch-pixel level and was significantly associated with reductions in interobserver variability in prostate cancer grading and quantification.

Meaning

The findings of this study suggest that an AI-powered platform may be able to detect, grade, and quantify prostate cancer with high accuracy and efficiency, which could potentially transform histopathological evaluation and improve risk stratification and clinical management of prostate cancer.

Abstract

Importance

The Gleason grading system has been the most reliable tool for the prognosis of prostate cancer since its development. However, its clinical application remains limited by interobserver variability in grading and quantification, which has negative consequences for risk assessment and clinical management of prostate cancer.

Objective

To examine the impact of an artificial intelligence (AI)–assisted approach to prostate cancer grading and quantification.

Design, Setting, and Participants

This diagnostic study was conducted at the University of Wisconsin–Madison from August 2, 2017, to December 30, 2019. The study chronologically selected 589 men with biopsy-confirmed prostate cancer who received care in the University of Wisconsin Health System between January 1, 2005, and February 28, 2017. A total of 1000 biopsy slides (1 or 2 slides per patient) were selected and scanned to create digital whole-slide images, which were used to develop and validate a deep convolutional neural network–based AI-powered platform. The whole-slide images were divided into a training set (n = 838) and validation set (n = 162). Three experienced academic urological pathologists (W.H., K.A.I., and R.H., hereinafter referred to as pathologists 1, 2, and 3, respectively) were involved in the validation. Data were collected between December 29, 2018, and December 20, 2019, and analyzed from January 4, 2020, to March 1, 2021.

Main Outcomes and Measures

Accuracy of prostate cancer detection by the AI-powered platform and comparison of prostate cancer grading and quantification performed by the 3 pathologists using manual vs AI-assisted methods.

Results

Among 589 men with biopsy slides, the mean (SD) age was 63.8 (8.2) years, the mean (SD) prebiopsy prostate-specific antigen level was 10.2 (16.2) ng/mL, and the mean (SD) total cancer volume was 15.4% (20.1%). The AI system was able to distinguish prostate cancer from benign prostatic epithelium and stroma with high accuracy at the patch-pixel level, with an area under the receiver operating characteristic curve of 0.92 (95% CI, 0.88-0.95). The AI system achieved almost perfect agreement with the training pathologist (pathologist 1) in detecting prostate cancer at the patch-pixel level (weighted κ = 0.97; asymptotic 95% CI, 0.96-0.98) and in grading prostate cancer at the slide level (weighted κ = 0.98; asymptotic 95% CI, 0.96-1.00). Use of the AI-assisted method was associated with significant improvements in the concordance of prostate cancer grading and quantification between the 3 pathologists (eg, pathologists 1 and 2: 90.1% agreement using AI-assisted method vs 84.0% agreement using manual method; P < .001) and significantly higher weighted κ values for all pathologists (eg, pathologists 2 and 3: weighted κ = 0.92 [asymptotic 95% CI, 0.90-0.94] for AI-assisted method vs 0.76 [asymptotic 95% CI, 0.71-0.80] for manual method; P < .001) compared with the manual method.

Conclusions and Relevance

In this diagnostic study, an AI-powered platform was able to detect, grade, and quantify prostate cancer with high accuracy and efficiency and was associated with significant reductions in interobserver variability. These results suggest that an AI-powered platform could potentially transform histopathological evaluation and improve risk stratification and clinical management of prostate cancer.

This diagnostic study examines the ability of an artificial intelligence–powered platform vs manual evaluation to accurately and efficiently detect, grade, and quantify prostate cancer and reduce interobserver variability among experienced pathologists.

Introduction

Current clinical management of prostate cancer is largely dependent on the Gleason grading system and tumor volume estimation of diagnostic prostate biopsies. The Gleason grading system is based on evaluation of morphological characteristics and was first introduced in 1966.1 It remains the most reliable tool for prostate cancer diagnosis and prognosis despite its limitations.2,3 In the past 2 decades, the Gleason grading system has undergone substantial modifications in pattern designation4,5 and score reporting for prostate biopsy and prostatectomy based on clinical outcome data.4,6 The more recently introduced Gleason grade group (in which tumor growth patterns are characterized by degree of differentiation, including Gleason pattern 3 [discrete well-formed glands], Gleason pattern 4 [cribriform, poorly formed, or fused glands], and Gleason pattern 5 [sheets of cancer, cords and/or solid nests of cells, or necrotic tissue]) and quantitative grading (based on percentage of Gleason patterns 4 and 5 detected) improved the Gleason grading system by providing a more reliable and accurate system for the diagnosis and clinical management of prostate cancer.7,8,9,10 Nonetheless, studies have reported that the system remains limited by interobserver variability in quantitative grading among general pathologists, between general pathologists and urological pathologists, and even among expert urological pathologists.11,12

Prostate cancer volume is also an important prognostic factor that is used for risk stratification and the identification of patients requiring active surveillance.13,14,15 In practice, prostate cancer volume is estimated as the percentage of core involvement and the linear extent of involvement detected on hematoxylin-eosin slides. Two different approaches are used for tumor volume measurement.16,17 These variable approaches, in addition to manual estimation of tumor volume, account for some of the interobserver variability in prostate cancer volume assessment, and they compromise the power of the Gleason grading system for prostate cancer risk estimation and stratification, thereby preventing optimal cancer management.

With the development of digital whole-slide imaging (WSI) and computational methods for pathological evaluation, the application of artificial intelligence (AI) to cancer detection, grading, and quantification using deep convolutional neural networks to evaluate tissue samples is now a realistic possibility. In recent years, studies have found that the performance of deep learning models is similar to that of human experts for diagnostic tasks such as tumor detection and grading.18,19,20,21,22,23,24,25,26,27 However, none of the AI systems and algorithms used in previous studies have been validated by independent pathologists or examined for their potential impact with regard to pathologists’ clinical practice. This diagnostic study assessed the ability of an AI-powered platform to detect and quantify prostate cancer with high accuracy and efficiency and evaluated whether this platform could significantly reduce interobserver variability.

Methods

This diagnostic study was conducted at the University of Wisconsin–Madison from August 2, 2017, to December 30, 2019. The study was approved by the institutional review board of the University of Wisconsin–Madison with a waiver of informed consent because only archival slides and tissue samples from patients were used. This study was performed in accordance with the Declaration of Helsinki28 and followed the Standards for Reporting of Diagnostic Accuracy (STARD) reporting guideline for diagnostic studies.

Participant and Slide Selections

A total of 589 men with biopsy-confirmed prostate cancer who received care in the University of Wisconsin Health System from January 1, 2005, to February 28, 2017, were selected chronologically. Patients with biopsy slides of low quality, including slides with drying effects, significant air bubbles, or broken glass, were excluded. All biopsies were performed using a systematic method. In general, 1 representative slide was selected from each patient if it contained the representative prostate cancer Gleason patterns and benign glands. For some patients, 2 slides were selected. A total of 1000 formalin-fixed, paraffin-embedded, hematoxylin-eosin–stained prostate biopsy slides were included. A high-capacity scanner (Aperio AT2 DX; Leica Biosystems) at ×40 magnification was used to create WSIs. The 1000 WSIs were split into a training set (n = 838) for algorithm and model development and a validation set (n = 162). The validation set was selected to include an adequate mixture of all Gleason patterns and prostate cancer Gleason grade groups.

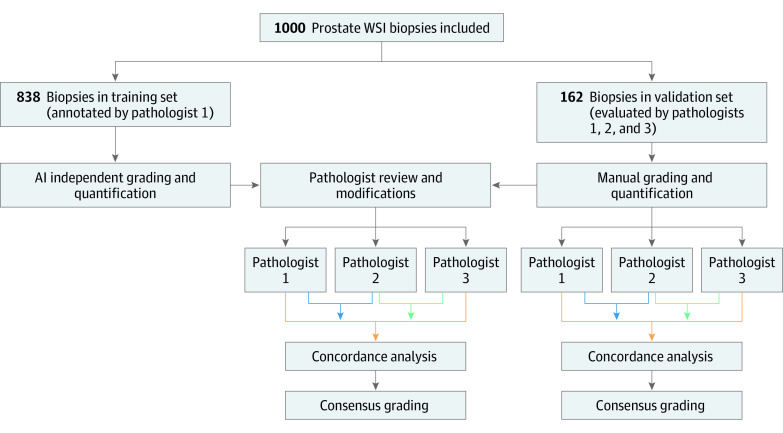

Three experienced academic urological pathologists (W.H., K.A.I., and R.H., hereinafter referred to as pathologists 1, 2, and 3, respectively) graded and quantified the biopsy slides; pathologist 1 was involved with training the algorithm. The study workflow is shown in Figure 1.

Figure 1. Study Workflow.

Pathologist 1 was W.H., pathologist 2 was K.A.I., and pathologist 3 was R.H. AI indicates artificial intelligence; WSI, whole-slide image.

Development and Training of AI System

A total of 838 slides in the training set were annotated at the patch (ie, pixel group) level using current criteria and recommendations from the International Society of Urological Pathology.29 To develop the algorithm, an experienced urological pathologist (pathologist 1) performed detailed annotation of a wide range of morphological characteristics, including Gleason pattern categories (ie, patterns 3, 4, and 5), prostate cancer, high-grade prostatic intraepithelial neoplasia, perineural invasion, vessels, and lymphocytes. Our model architecture involved 3 steps (eFigure in the Supplement). First, a gland segmenter was used to identify all epithelial regions on the tissue, including benign, low-grade cancer (Gleason pattern 3), high-grade cancer (Gleason patterns 4 and 5), and all stromal regions. The segmentation network used a 5-level-deep U-Net convolutional neural network model (University of Freiburg)30 to segment epithelium. The model used input tiles of 256 × 256 × 3 pixels at ×10 resolution and generated a 256 × 256–pixel binary mask (in which 1 represented epithelium and 0 represented stroma) at ×10 resolution.

Second, a convolutional neural network feature extractor and classifier used the epithelial patches as input to predict appropriate labels (benign, Gleason pattern 3, Gleason pattern 4, or Gleason pattern 5). A multiscale model was trained using randomly sampled tiles of 64 × 64 pixels at ×40 resolution, 256 × 256 pixels at ×40 resolution, and 1024 × 1024 pixels at ×5 resolution to capture nuclear detail and glandular context as well as tumor microenvironmental elements. Each tile was transformed to a 1024-dimensional vector representation; these vectors were concatenated and classified using the softmax function (which converts a vector of numbers into a vector of probabilities that sum to 1, in which each probability is proportional to the exponential of the corresponding value in the vector) for the target labels. Third, the area of the patches was summed across the entire slide to quantify the Gleason grade.
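The classification step described above can be illustrated with a minimal sketch. It assumes a single linear layer followed by softmax over the concatenated multiscale vectors; the source does not specify the layers of the classification head, so the function name `classify_patch`, the weight shapes, and the random toy inputs are hypothetical and serve only to show the mechanics.

```python
import math
import random

LABELS = ["benign", "Gleason pattern 3", "Gleason pattern 4", "Gleason pattern 5"]

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def classify_patch(scale_vectors, weights, biases):
    """Concatenate per-scale feature vectors and apply a linear softmax head.

    The linear head is an assumption made for illustration only.
    """
    features = [x for vec in scale_vectors for x in vec]
    logits = [
        sum(w * x for w, x in zip(row, features)) + b
        for row, b in zip(weights, biases)
    ]
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return LABELS[best], probs

# Toy usage: three random 1024-dimensional vectors stand in for the three tile scales.
rng = random.Random(0)
scale_vectors = [[rng.gauss(0, 1) for _ in range(1024)] for _ in range(3)]
weights = [[rng.gauss(0, 0.01) for _ in range(3 * 1024)] for _ in LABELS]
biases = [0.0] * len(LABELS)
label, probs = classify_patch(scale_vectors, weights, biases)
```

The returned probabilities always sum to 1, and the predicted label is the class with the largest probability.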

During the training process, data augmentation (including random flipping, rotation, and color normalization) was applied to prevent overfitting. In addition, nuclei detection was used to identify epithelial and stromal nuclei, and training patches were centered around the nuclei coordinates to generate several training examples. To balance the data set, we used a pretrained convolutional neural network feature extractor to generate high-dimensional vector representations of the various patterns. We applied k-means clustering to these vectors to generate hundreds of clusters of morphologically similar patterns in high-dimensional vector space that did not have any known pathological label but represented a specific phenotype. This clustering ensured that different subpatterns were equivalently represented, even though they may not have been present in equal amounts in the training data set or in clinical practice. This approach was particularly important for Gleason pattern 5 cancer, which appears infrequently and in small volume but has substantial consequences for treatment planning.
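The balancing idea can be sketched as follows, assuming that each training patch has already been assigned to a cluster (for example, by k-means on its feature vector). The function `balanced_sample` and its parameters are hypothetical; the sketch shows only how equal representation per cluster might be enforced when drawing training examples and is not the sampling procedure used in the study.

```python
import random
from collections import defaultdict

def balanced_sample(patch_ids, cluster_ids, per_cluster, seed=0):
    """Draw the same number of patches from every cluster.

    Clusters with fewer than `per_cluster` patches are sampled with replacement.
    """
    rng = random.Random(seed)
    by_cluster = defaultdict(list)
    for patch, cluster in zip(patch_ids, cluster_ids):
        by_cluster[cluster].append(patch)
    sample = []
    for cluster in sorted(by_cluster):
        members = by_cluster[cluster]
        if len(members) >= per_cluster:
            sample.extend(rng.sample(members, per_cluster))
        else:
            sample.extend(rng.choices(members, k=per_cluster))
    return sample

# Toy usage: cluster 2 is rare (as a Gleason pattern 5 subpattern might be)
# but contributes as many examples as the common clusters.
patch_ids = list(range(100))
cluster_ids = [0] * 60 + [1] * 37 + [2] * 3
batch = balanced_sample(patch_ids, cluster_ids, per_cluster=10)
```

In this toy example, the 3 patches in the rare cluster are oversampled so that they make up one-third of the batch, the same share as each common cluster.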

The gland segmenter had 37 million parameters and was trained using randomly sampled tiles with a size of 256 × 256 pixels. The loss function was defined as the cross-entropy between the predicted probability and the ground truth. We used stochastic gradient descent optimization to optimize the model parameters with an adaptive learning rate of 0.05, which was decreased by a factor of 2 after every 10 epochs. The fine-tuned networks were trained to converge in 500 epochs.

The convolutional neural network feature extractor had 17 million parameters and was trained using randomly sampled tiles of 64 × 64 pixels at ×40 resolution, 256 × 256 pixels at ×40 resolution, and 1024 × 1024 pixels at ×5 resolution. The loss function was defined as the cross-entropy between the predicted probability and the ground truth. We used stochastic gradient descent optimization to optimize the model parameters with an adaptive learning rate of 0.00005, which was decreased by a factor of 2 after every 100 epochs. The fine-tuned networks were trained to converge in 1000 epochs.
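The learning rate schedules described for the 2 networks amount to a step decay, which can be written out as a short sketch. The function name `step_decay` is hypothetical, and the sketch assumes that the rate is halved exactly at each multiple of the stated interval.

```python
def step_decay(initial_lr, epoch, step_epochs, factor=2.0):
    """Learning rate at `epoch` when it is divided by `factor` every `step_epochs` epochs."""
    return initial_lr / (factor ** (epoch // step_epochs))

# Gland segmenter: initial rate 0.05, halved after every 10 epochs.
segmenter_lr = [step_decay(0.05, e, 10) for e in (0, 9, 10, 25)]

# Feature extractor: initial rate 0.00005, halved after every 100 epochs.
extractor_lr = [step_decay(0.00005, e, 100) for e in (0, 99, 100, 250)]
```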

Both models were implemented in Python using the Theano deep learning framework (University of Montreal) and were trained on an Amazon Web Services g3.4xlarge instance (a cloud computing instance with Nvidia GPU acceleration that assists with mathematical calculations to efficiently learn the weights of the AI model; Amazon.com, Inc) with 1 graphics processing unit, 16 virtual central processing units, 122 gigabytes of random access memory, and 8 gigabytes of graphics processing unit random access memory.


Algorithm Validation

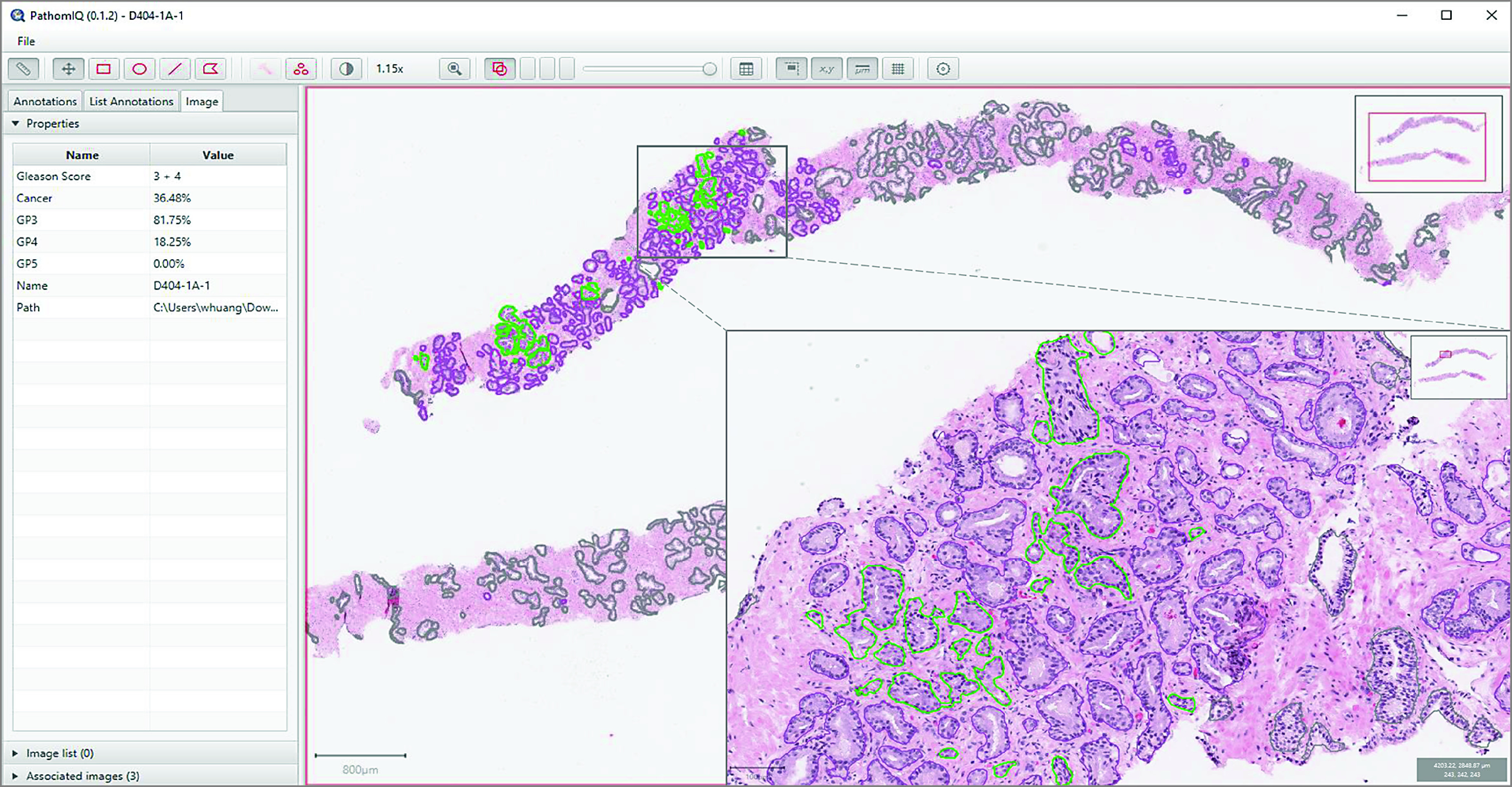

The algorithm was validated by the 3 urological pathologists independently using the validation set of WSIs. First, each pathologist was asked to download the WSI, perform manual prostate cancer grading and volume quantification, document the results, and record the time spent on this process. The pathologists were then asked to repeat the same tasks 2 months later using the AI-powered platform (Figure 1). To participate in the AI-assisted process, the pathologists were given the link to download the AI platform and install it on their computers. Next, they were asked to upload the results of the prostate cancer detection, grading, and quantification independently performed by the AI system, which included patch-level labels for Gleason patterns and benign prostatic epithelium, slide-level grading, and quantification of each WSI (Figure 2). Each pathologist reviewed the gland and patch labels generated by the AI system and changed the labels with which they disagreed using the AI platform tool; the modified results were incorporated and tabulated instantly as AI-assisted prostate cancer grading and quantification at the slide level. Because the time-tracking mechanism had not yet been implemented at the time of the study, the time spent reviewing each slide was estimated.

Figure 2. Prostate Cancer Detection, Grading, and Quantification Using AI-Powered Platform.

Screenshot of artificial intelligence (AI)–powered platform. Left panel shows results of quantitative Gleason grading and quantification of prostate cancer volume. Right panel shows biopsy cores with annotations independently made by AI (purple denotes Gleason pattern 3, light green denotes Gleason pattern 4, and dark green denotes benign acini). Inset shows a close-up view of the selected area.

The results that the AI system independently generated from the validation set were then compared with the results generated by the training pathologist (pathologist 1) to examine the AI system’s proficiency. The results of the prostate cancer grading and volume quantification generated by the 3 pathologists, both manually and using the AI-assisted method, were also compared between the pathologists to examine the AI system’s impact with regard to pathologists’ clinical practice.

Statistical Analysis

A receiver operating characteristic analysis was conducted to evaluate the extent to which the AI system was able to distinguish prostate cancer from benign prostatic epithelium and stroma using the validation set. The predictive power was quantified by calculating the area under the receiver operating characteristic curve. Linear-weighted Cohen κ values with asymptotic 95% CIs were used to measure the concordance between the AI system and the training pathologist (pathologist 1) in distinguishing prostate cancer epithelium from benign prostatic epithelium and stroma at the patch-pixel level per slide, the concordance rates between the AI system and the training pathologist in prostate cancer grading at the slide level, and the concordance between the 3 pathologists in prostate cancer grading and quantification at the slide level. A κ value of less than 0.6, representing a moderate level of agreement, was considered unacceptably low. Hence, the null hypothesis that the κ value would be no higher than 0.6 was tested against the alternative hypothesis that the κ value would be higher than 0.6. Based on the validation sample size, a κ value of 0.75 or greater could be detected with 90% power at a 1-sided significance level of α = .05. The extent of consensus grading among the 3 pathologists using manual vs AI-assisted methods was also compared using a χ2 test. A 2-sided P value of less than .05 was considered statistically significant.
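For readers unfamiliar with the linear-weighted Cohen κ, a minimal sketch of the calculation for 2 raters is given here. It is an illustration written in Python rather than the SPSS procedure used in the study; the function name `weighted_kappa` and the toy ratings are hypothetical, and the asymptotic CI is not computed.

```python
def weighted_kappa(rater_a, rater_b, categories):
    """Linear-weighted Cohen kappa for two raters over ordered categories.

    Undefined (division by zero) if both raters use a single identical category.
    """
    k = len(categories)
    index = {c: i for i, c in enumerate(categories)}
    n = len(rater_a)
    observed = [[0.0] * k for _ in range(k)]
    for a, b in zip(rater_a, rater_b):
        observed[index[a]][index[b]] += 1.0 / n
    row = [sum(observed[i]) for i in range(k)]
    col = [sum(observed[i][j] for i in range(k)) for j in range(k)]
    # Linear disagreement weight: |i - j| / (k - 1).
    disagree_obs = 0.0
    disagree_exp = 0.0
    for i in range(k):
        for j in range(k):
            w = abs(i - j) / (k - 1)
            disagree_obs += w * observed[i][j]
            disagree_exp += w * row[i] * col[j]
    return 1.0 - disagree_obs / disagree_exp

# Toy usage: 0 stands for benign tissue and 1 through 5 for Gleason grade groups.
grades = [0, 1, 2, 3, 4, 5]
pathologist_a = [1, 2, 2, 3, 5, 1, 4, 0]
pathologist_b = [1, 2, 3, 3, 5, 1, 4, 1]
kappa = weighted_kappa(pathologist_a, pathologist_b, grades)
```

With linear weights, a disagreement of 1 grade group is penalized less than a disagreement of several grade groups, which suits ordered categories such as Gleason grade groups.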

Statistical analysis was performed using IBM SPSS Statistics, version 27 (IBM Corp). Data were collected between December 29, 2018, and December 20, 2019, and analyzed from January 4, 2020, to March 1, 2021.

Results

Among 589 men with biopsy slides included in the study, the mean (SD) age was 63.8 (8.2) years, the mean (SD) prebiopsy prostate-specific antigen level was 10.2 (16.2) ng/mL, and the mean (SD) total cancer volume was 15.4% (20.1%). Additional patient demographic characteristics and clinical and slide information are available in eTable 1 in the Supplement.

Capability of AI-Powered Platform

The AI-powered platform was able to segment and label each cancer gland or epithelial patch with a corresponding Gleason pattern on the tissue, then sum those values to generate the Gleason pattern volumes, Gleason score, and prostate cancer volume. The prostate cancer volume (measured as prostate cancer pixels relative to total tissue pixels) and the percentages of Gleason patterns (measured as pixels of each Gleason pattern relative to total prostate cancer pixels) were tabulated instantly and precisely by the AI-powered platform (Figure 2). The platform was designed as an open and accessible system, allowing pathologists to make changes to any gland or epithelial patch labels with which they disagreed. The changes were reflected in the overall Gleason score and tumor volume at the slide level. The pathologists were generally able to complete this process (ie, review and complete a slide) in less than 1 minute.
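The tabulation described above can be sketched under simple assumptions. The label names (`"GP3"`, `"GP4"`, `"GP5"`, `"benign"`, `"stroma"`), the functions `quantify_slide` and `relabel`, and the pixel counts are hypothetical; the sketch shows only the 2 ratios defined in the text and how a reviewer's label change would propagate to the slide-level numbers.

```python
def quantify_slide(pixel_counts):
    """Tabulate cancer volume and Gleason pattern percentages from pixel counts.

    `pixel_counts` maps a label ("benign", "stroma", "GP3", "GP4", "GP5")
    to the number of tissue pixels carrying that label on the slide.
    """
    patterns = ("GP3", "GP4", "GP5")
    cancer = sum(pixel_counts.get(p, 0) for p in patterns)
    tissue = sum(pixel_counts.values())
    # Cancer volume: cancer pixels relative to total tissue pixels.
    volume_pct = 100.0 * cancer / tissue if tissue else 0.0
    # Pattern percentage: pixels of each pattern relative to total cancer pixels.
    pattern_pct = {
        p: (100.0 * pixel_counts.get(p, 0) / cancer if cancer else 0.0)
        for p in patterns
    }
    return volume_pct, pattern_pct

def relabel(pixel_counts, old, new, n_pixels):
    """Move `n_pixels` from label `old` to label `new`, as a reviewer's override would."""
    updated = dict(pixel_counts)
    moved = min(n_pixels, updated.get(old, 0))
    updated[old] = updated.get(old, 0) - moved
    updated[new] = updated.get(new, 0) + moved
    return updated

counts = {"stroma": 600_000, "benign": 250_000, "GP3": 90_000, "GP4": 60_000, "GP5": 0}
volume_pct, pattern_pct = quantify_slide(counts)
revised_volume, revised_pct = quantify_slide(relabel(counts, "GP4", "GP3", 30_000))
```

In this toy slide, relabeling 30 000 pixels from pattern 4 to pattern 3 leaves the cancer volume unchanged and shifts only the pattern percentages.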

Detection, Grading, and Quantification

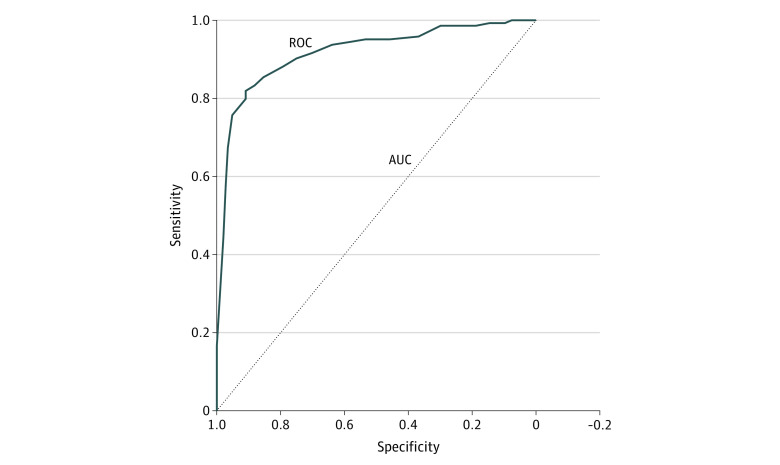

Using the validation set of WSIs, the AI system was able to detect cancer in all cores among all 162 cases at the slide level and to distinguish prostate cancer from benign prostatic epithelium and stroma with high accuracy at the patch-pixel level (area under the curve = 0.92; 95% CI, 0.88-0.95) (Figure 3). The AI system also achieved almost perfect agreement with the training pathologist for prostate cancer detection at the patch-pixel level (weighted κ = 0.97; asymptotic 95% CI, 0.96-0.98) and for prostate cancer grading at the slide level (weighted κ = 0.98; asymptotic 95% CI, 0.96-1.00) (eTable 2 in the Supplement).

Figure 3. Receiver Operating Characteristic Analysis of Prostate Cancer Detection Using AI-Powered Platform.

The area under the curve (AUC) was 0.92 (95% CI, 0.88-0.95) for the ability of the artificial intelligence (AI)–powered platform to distinguish prostate cancer epithelium from benign epithelium and stroma at the patch-pixel level using the validation data set. ROC indicates receiver operating characteristic.
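As an illustration of what the AUC measures, the following sketch computes it as the probability that a randomly chosen cancer patch receives a higher score than a randomly chosen benign patch. The function `roc_auc` and the toy scores are hypothetical and unrelated to the study data; the pairwise loop is chosen for clarity rather than speed.

```python
def roc_auc(labels, scores):
    """AUC as the probability that a random positive outscores a random negative.

    Ties count one half. Direct pairwise comparison, so the cost is quadratic.
    """
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in positives:
        for q in negatives:
            if p > q:
                wins += 1.0
            elif p == q:
                wins += 0.5
    return wins / (len(positives) * len(negatives))

# Toy usage: 1 marks a cancer patch and 0 a benign patch.
labels = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.5, 0.3, 0.2]
auc = roc_auc(labels, scores)
```

In the toy data, 8 of the 9 cancer-benign pairs are ranked correctly, so the AUC is 8/9.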

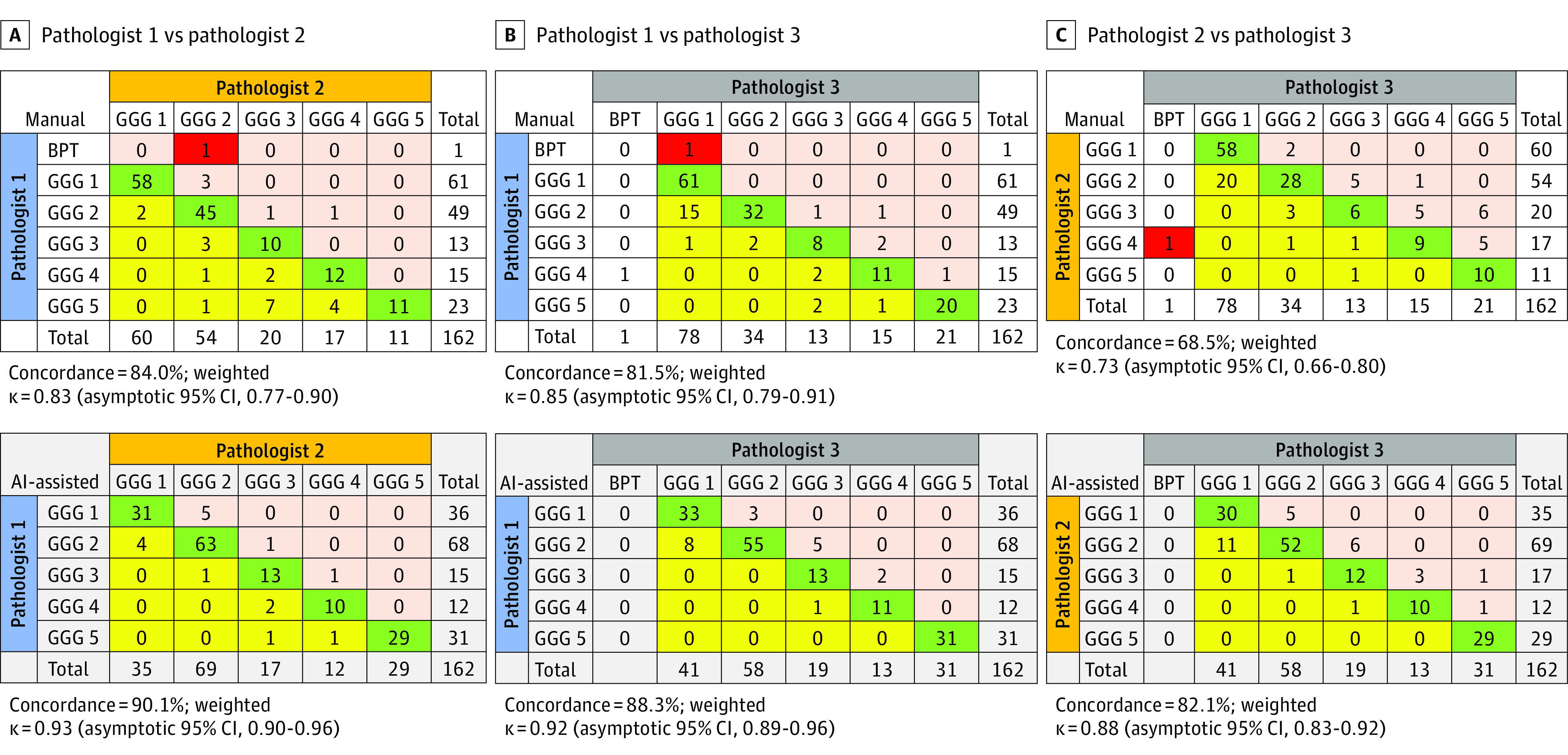

Error and Interobserver Variability

On rare occasions, a low-volume prostate cancer diagnosis was missed by pathologists during manual grading; however, no diagnoses were missed using the AI-assisted method (Figure 4). The concordance of prostate cancer grading and quantification among the 3 pathologists was significantly higher when using the AI-assisted method vs the manual method (pathologists 1 and 2: 90.1% agreement for AI-assisted method vs 84.0% agreement for manual method [P < .001]; pathologists 1 and 3: 88.3% agreement for AI-assisted method vs 81.5% agreement for manual method [P < .001]; pathologists 2 and 3: 82.1% agreement for AI-assisted method vs 68.5% agreement for manual method [P < .001]) (Figure 4), with significantly higher weighted κ values for the AI-assisted method for all 3 pathologists (pathologists 1 and 2: weighted κ = 0.90 [asymptotic 95% CI, 0.87-0.93] for AI-assisted method vs 0.45 [asymptotic 95% CI, 0.39-0.51] for manual method [P < .001]; pathologists 2 and 3: weighted κ = 0.92 [asymptotic 95% CI, 0.90-0.94] for AI-assisted method vs 0.76 [asymptotic 95% CI, 0.71-0.80] for manual method [P < .001]; pathologists 1 and 3: weighted κ = 0.92 [asymptotic 95% CI, 0.90-0.95] for AI-assisted method vs 0.41 [asymptotic 95% CI, 0.36-0.47] for manual method [P < .001]) (Table).

Figure 4. Concordance Analysis of Grading Among 3 Urological Pathologists Using Manual vs AI-Assisted Methods.

Grading cross tabulation. Green shading indicates concordant grading between pathologists. Red shading indicates that low-volume prostate cancer was missed by 1 of the pathologists. In panels A and B, pink and yellow shading indicate cases that were overgraded and undergraded, respectively, by pathologists 2 and 3 vs pathologist 1. In panel C, pink and yellow shading indicate cases that were overgraded and undergraded, respectively, by pathologist 3 vs pathologist 2. Pathologist 1 was W.H., pathologist 2 was K.A.I., and pathologist 3 was R.H. AI indicates artificial intelligence; BPT, benign prostatic tissue; and GGG, Gleason grade group.

Table. Extent of Pathologist Agreement in Cancer Volume Quantification Using Manual vs AI-Assisted Methods.

| Method | Pathologists(a) | Weighted κ (asymptotic 95% CI)(b) |
|---|---|---|
| Manual | 1 and 2 | 0.45 (0.39-0.51) |
| Manual | 2 and 3 | 0.76 (0.71-0.80) |
| Manual | 1 and 3 | 0.41 (0.36-0.47) |
| AI assisted | 1 and 2 | 0.90 (0.87-0.93) |
| AI assisted | 2 and 3 | 0.92 (0.90-0.94) |
| AI assisted | 1 and 3 | 0.92 (0.90-0.95) |

Abbreviation: AI, artificial intelligence.

(a) Pathologist 1 was W.H., pathologist 2 was K.A.I., and pathologist 3 was R.H.

(b) Linear weights were used to estimate weighted κ.

Further examination of the data revealed a significantly higher number of 100% consensus in grading (cases for which all 3 pathologists agreed) using the AI-assisted method (130 cases [80.2%]) vs the manual method (109 cases [67.3%]; P = .008). In 5 cases (3.1%), consensus grading could not be reached among the 3 pathologists using the manual method; however, there were 0 cases for which consensus grading could not be reached using the AI-assisted method (eTable 3 in the Supplement).
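The comparison of full-consensus counts can be reproduced approximately with a Pearson χ2 test on a 2 × 2 table. The sketch below assumes no continuity correction and treats the 2 sets of readings as independent samples, although the same 162 slides were read under both methods; the source states only that a χ2 test was used, so these choices and the function name `chi_square_2x2` are assumptions.

```python
import math

def chi_square_2x2(a, b, c, d):
    """Pearson chi-square (no continuity correction) for the table [[a, b], [c, d]].

    Returns the statistic and the 2-sided P value for 1 degree of freedom.
    """
    n = a + b + c + d
    chi2 = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    # Survival function of a chi-square variable with 1 degree of freedom.
    p_value = math.erfc(math.sqrt(chi2 / 2.0))
    return chi2, p_value

# Rows: AI-assisted and manual methods; columns: full consensus and no full consensus.
chi2, p_value = chi_square_2x2(130, 162 - 130, 109, 162 - 109)
```

Under these assumptions, the statistic is approximately 7.03 and the P value is approximately .008, in line with the value reported above.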

Efficiency

Using the manual method, the 3 pathologists typically spent 4 to 6 minutes per slide to complete grading and quantification and generate a diagnosis. The pathologists generally spent less than 1 minute to finish the same tasks using the AI-assisted method.

Discussion

This diagnostic study found that an AI-enabled platform based on deep convolutional neural network models and hybrid architectures could clearly distinguish prostate cancer epithelium from benign glands or epithelium and stroma and could identify different Gleason patterns with high accuracy at the patch-pixel level (area under the curve = 0.92). The volume of total prostate cancer and the percentage of Gleason patterns 3, 4, and 5 in all cores per biopsy site or slide were tabulated instantly and precisely, which was not achieved through manual analysis. Manual diagnosis, grading, and quantification of prostate cancer is subjective. Accuracy can be altered by the observer’s training, experience, and perspective. Substantial interobserver variability exists, even among practicing pathologists who are experts in prostate cancer.12,30 Major clinical management decisions, however, are often based on this subjective and error-prone process. Thus, an unmet need remains for an unbiased decision support tool that can improve the reproducibility of Gleason grading and quantification on a unified platform during routine clinical practice.22 Because the present AI-assisted method was developed on a cloud-based platform, it has the potential to be used across the world, which is especially useful for regions lacking experienced urological pathologists.

Similar to a previously published study,19 we trained our platform using annotations from a single fellowship-trained urological pathologist (pathologist 1) to avoid presenting the AI system with conflicting labels for the same morphological patterns and to achieve more consistent prostate cancer and Gleason pattern detections. This AI-assisted approach produced high accuracy in prostate cancer detection at the patch-pixel level (area under the curve = 0.92) and almost perfect agreement with the training pathologist (weighted κ = 0.97 for prostate cancer detection at the patch-pixel level and 0.98 for prostate cancer grading at the slide level).

Our AI-powered platform was designed as an open system. Although the platform was able to read the slides independently, it was accessible and interactive, which allowed users to modify the AI system’s annotations at patch-label levels and incorporate those changes into the final report. Therefore, the AI platform provided pathologists with an expert opinion of slides, a decision support tool that enabled them not only to achieve higher accuracy and efficiency in prostate cancer detection and quantification but also to markedly reduce interobserver variability. The concordance of grading between the 3 pathologists using the AI-assisted method (weighted κ ranging from 0.88 to 0.93) was substantially higher than the reported concordance rates among general practicing pathologists (κ = 0.44)31 and expert urological pathologists (κ ranging from 0.56 to 0.70)32,33 using the manual method. In a search of the PubMed database, we found that all published studies using morphologically based AI systems for prostate cancer diagnosis were limited to prostate cancer detection and grading.19,20,21,22,23,34,35

To our knowledge, this study is the first to use and validate an AI-powered platform among independent pathologists for prostate cancer grading and quantification, which suggests the platform has potential applications in clinical settings. The AI-assisted method was also associated with improvements in pathologists’ clinical practice and diagnostic outcomes, which in turn could have substantial implications for risk stratification and clinical management of patients with prostate cancer. Two recent studies36,37 reported similar improvements in interobserver variability using an AI-assisted approach for prostate cancer grading. However, their AI-assisted approach was limited to evaluating the grading overlay that allowed participating pathologists to toggle slides on and off. The participating pathologists had to estimate or measure Gleason patterns and prostate cancer volume using a digital ruler and record the values manually, whereas our AI-assisted platform allowed the pathologists to view and change the AI-generated patch-level labels with which they disagreed, then tabulate the modified results (ie, the percentages of Gleason patterns and the grading and percentage of prostate cancer) instantly and precisely.

Limitations

This study has limitations. We acquired prostate cancer volume from WSIs rather than each core. However, this function of prostate cancer quantification at the core, slide, or case level can be easily adapted by our AI platform.

Our AI-assisted method quantified prostate cancer volume using prostate cancer pixels relative to total tissue pixels, which differs from the current standard manual method of measuring the prostate cancer core length relative to the total core length per slide. We believe the pixel method is a more precise and superior approach, with a substantially lower likelihood of producing interobserver variability in prostate cancer quantification.

Although the biopsy slides used in this study were collected over a 12-year period (2005-2017), they were obtained from a single institution and were relatively homogeneous. Therefore, additional training of the algorithm may be needed using WSIs from different institutions before our AI-powered platform can be widely used.

We used self-reported time spent reviewing slides for the manual method and estimated time spent reviewing slides for the AI-assisted method, which did not provide precise measurements. However, because our AI system had high accuracy in patch classification and prostate cancer detection, when paired with instant tabulation of prostate cancer (via detection of prostate cancer Gleason patterns), benign glands, and total tissue in pixels, the process of reviewing a WSI for prostate cancer grading and quantification using the AI-assisted method required little time and effort compared with the manual method. Nonetheless, a future study with a better designed time-tracking mechanism, such as an AI-enabled timer, and real-world scenario simulation is needed to further examine the impact of an AI-assisted approach for precisely improving efficiency.

Conclusions

This diagnostic study found that an AI-powered platform used as a clinical decision support tool was able to detect, grade, and quantify prostate cancer with high accuracy and efficiency and was associated with significant reductions in interobserver variability, which could potentially transform histopathological evaluation from a traditional to a precision approach.

eTable 1. Summaries of Biopsy Cohorts

eTable 2. Cross Tabulation: AI vs Training Pathologist Grading Using AI-Assisted Method

eTable 3. Cross Tabulation: Degree of Consensus Grading Using Manual and AI-Assisted Methods

eFigure. Model Architecture of AI-Powered Platform

