Abstract

The long-awaited progress in digitalisation is generating huge amounts of medical data every day, and manual analysis and targeted, patient-oriented evaluation of these data is becoming increasingly difficult or even infeasible. This situation, together with the increasingly complex requirements of individualised precision medicine, underlines the need for modern software solutions and algorithms across the entire healthcare system. The use of state-of-the-art equipment and techniques in almost all areas of medicine over the past few years has now enabled automated processes to enter, at least in part, into routine clinical practice. Such systems use a wide variety of artificial intelligence (AI) techniques, most of which have been developed to optimise medical image reconstruction, noise reduction, quality assurance, triage, segmentation, computer-aided detection and classification and, as an emerging field of research, radiogenomics. Tasks handled by AI are completed significantly faster and more precisely, as clearly demonstrated by the annual results of the ImageNet Large Scale Visual Recognition Challenge (ILSVRC), in which error rates have been well below those of humans since 2015. This review article discusses the potential capabilities and currently available applications of AI in gynaecological-obstetric diagnostics, with a particular focus on automated techniques in prenatal sonographic diagnostics.

Key words: pregnancy, sonography, gynecology, malformation

Introduction

In 1997 the reigning world chess champion G. Kasparov was defeated by a computer (Deep Blue). Public awareness of artificial intelligence (AI), however, predates this success. In fact, the first successful AI applications were developed considerably earlier and have now become an integral and accepted (often unrecognised) feature of our daily lives 4 . In surveys, 79 percent of AI experts from large companies consider AI techniques strategically highly significant or even vital for sustainable business success. In other words, artificial intelligence has become a mainstream technology in every industry worldwide. Competencies in core AI technologies (machine learning including deep learning, natural language processing and computer vision) are nowadays indispensable for larger companies 5 .

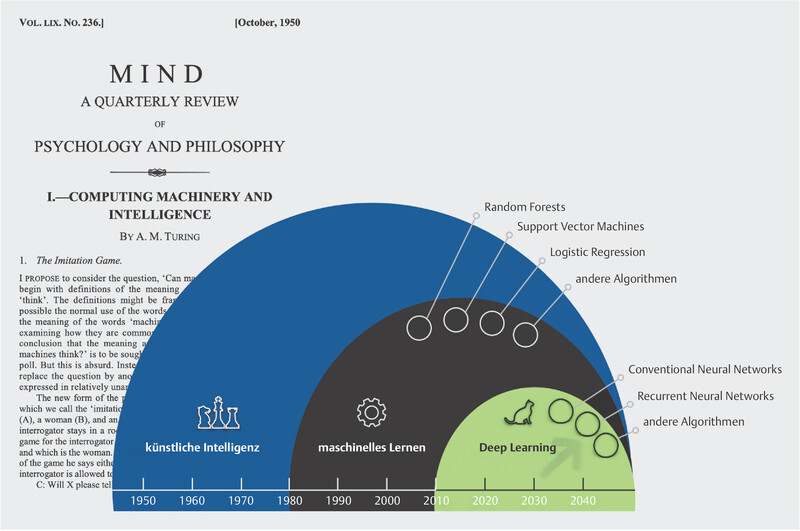

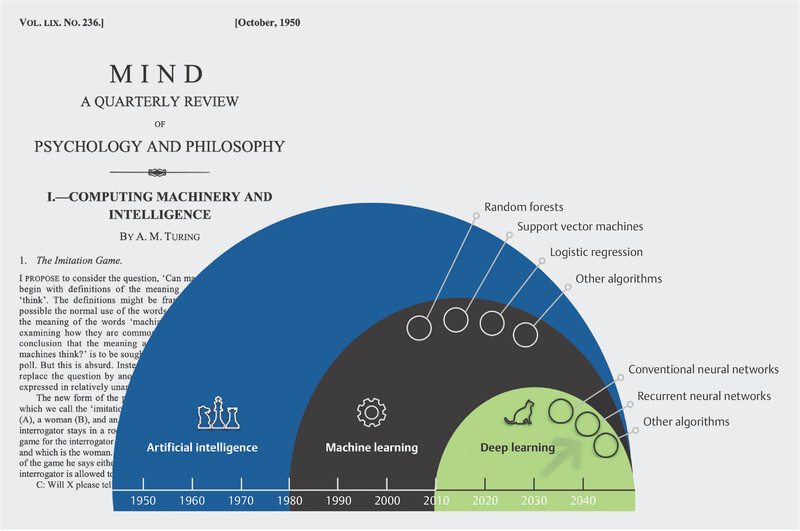

Although no single accepted definition of artificial intelligence exists, most experts would agree that AI as a technology refers to any machine or system that can perform complex tasks that would normally require human (or other biological) brain power 1 , 2 , 3 . Hence, the term artificial intelligence refers not to a single technology but to a family of AI applications in a wide range of fields ( Fig. 1 ). Machine learning (ML) is a member of this family that focuses on teaching computers to perform tasks with a predetermined goal without explicitly programming the rules for performing them. It can be regarded as a statistical method that continuously improves through exposure to increasing volumes of data. This allows such systems to progressively acquire the ability to correctly recognise objects in images, texts or acoustic data by searching for common properties and regularities from which patterns can ultimately be extracted.

Fig. 1.

Alan M. Turingʼs seminal 1950 paper on “machine” intelligence formed the conceptual basis of the “Turing test”, which is intended to ascertain whether a machine can be said to exhibit artificial intelligence. The development of artificial intelligence and its applications can also be viewed in a temporal context, and machine learning and deep learning are related not merely in name: deep learning is a modelling approach within machine learning that enables problems in modern fields such as image recognition, speech recognition and video interpretation, among others, to be solved significantly faster and with a lower error rate than would be feasible for humans alone 4 , 47 , 55 .

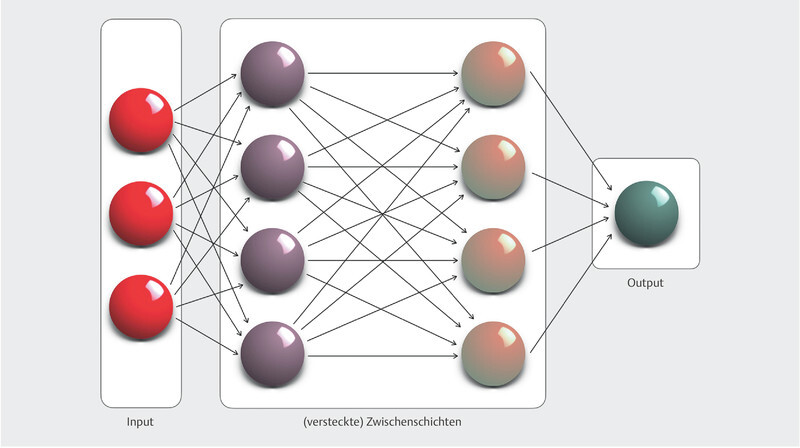

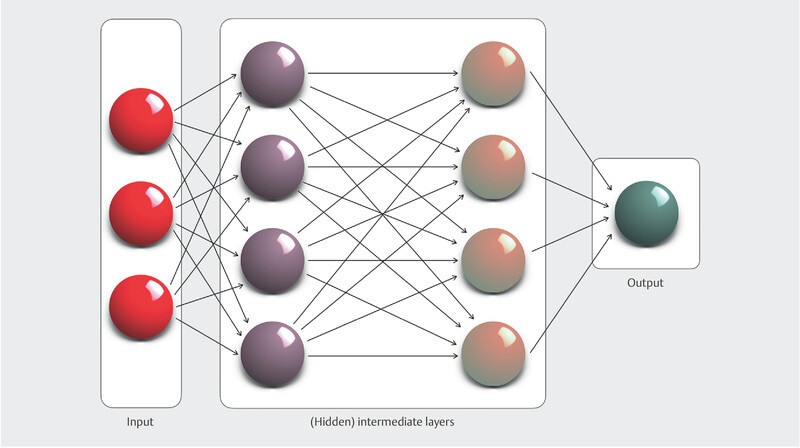

Deep learning, the flagship discipline of ML, differs from other machine learning methods in that it no longer requires direct human intervention, for example to define the relevant image features manually. Such learning is made possible by artificial neural networks (ANN), in particular convolutional neural networks (CNN), which consist of several convolutional layers, each typically followed by a pooling layer that aggregates the filter outputs and discards superfluous information ( Fig. 2 ). In this way, the level of abstraction of a CNN increases with each of these filter levels. Advances in computer-aided signal processing and the growth in computing power provided by the latest high-speed graphics processors now allow a very large number of filter layers to be created within a CNN; such networks are therefore referred to as “deep” (in contrast to conventional “shallow” neural networks, which usually consist of only one hidden layer). Learning is an adaptive process in which the weights of all interconnected neurons are adjusted so as to ultimately achieve the optimal response (output) to all input variables. Neural networks can be trained using either supervised or unsupervised approaches. In the former, ML algorithms use a labelled (annotated) data set to predict the desired outcome. In contrast, unsupervised approaches are supplied only with unlabelled input data in order to identify hidden patterns within them and, consequently, make novel predictions.
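The convolution-and-pooling principle described above can be illustrated with a minimal NumPy sketch. It is not taken from any of the cited systems: the 2×2 edge filter is set by hand purely for illustration (in a trained CNN the filter weights are learned from data), and the helper names `conv2d_valid` and `max_pool` are hypothetical.

```python
import numpy as np

def conv2d_valid(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Slide a filter over the image (no padding, stride 1), as in a convolutional layer.

    Like most deep-learning libraries, this computes cross-correlation (kernel not flipped).
    """
    kh, kw = kernel.shape
    h, w = image.shape
    out = np.empty((h - kh + 1, w - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def relu(x: np.ndarray) -> np.ndarray:
    """Non-linearity applied to the filter responses."""
    return np.maximum(x, 0.0)

def max_pool(feature_map: np.ndarray, size: int = 2) -> np.ndarray:
    """Keep only the strongest response in each non-overlapping size x size window."""
    h, w = feature_map.shape
    h, w = h - h % size, w - w % size  # drop incomplete border windows
    blocks = feature_map[:h, :w].reshape(h // size, size, w // size, size)
    return blocks.max(axis=(1, 3))

# Toy 6x6 "image" with a vertical edge between columns 2 and 3
image = np.zeros((6, 6))
image[:, 3:] = 1.0
# Hand-made vertical-edge filter (learned, not hand-set, in a real CNN)
kernel = np.array([[-1.0, 1.0], [-1.0, 1.0]])
features = relu(conv2d_valid(image, kernel))  # 5x5 feature map
pooled = max_pool(features)                   # 2x2 summary of the feature map
print(features.shape, pooled.shape)           # (5, 5) (2, 2)
```

A real network stacks many such filter/pooling stages, so that each successive feature map summarises the previous one at a coarser, more abstract level.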

Fig. 2.

Schematic design of a (feed-forward) convolutional network with two hidden layers. The source information is segmented and abstracted to achieve pattern recognition in these layers and ultimately passed on to the output layer. The capacity of such neural networks can be controlled by varying their depth (number of layers) and width (number of neurons/perceptrons per layer).
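To make the notions of depth and width in the caption concrete, the following untrained NumPy sketch builds a feed-forward network whose capacity is set by two parameters. For simplicity it uses fully connected layers rather than convolutional ones; the ReLU hidden activation, softmax output and random initialisation are illustrative assumptions, and `init_mlp`, `forward` and `n_parameters` are hypothetical helper names.

```python
import numpy as np

def init_mlp(n_inputs, n_outputs, depth=2, width=8, seed=0):
    """Random weights for a feed-forward net with `depth` hidden layers of `width` neurons."""
    rng = np.random.default_rng(seed)
    sizes = [n_inputs] + [width] * depth + [n_outputs]
    return [(rng.normal(0.0, 1.0 / np.sqrt(m), size=(m, n)), np.zeros(n))
            for m, n in zip(sizes[:-1], sizes[1:])]

def forward(params, x):
    """Propagate inputs layer by layer: ReLU in hidden layers, softmax at the output."""
    for W, b in params[:-1]:
        x = np.maximum(x @ W + b, 0.0)
    W, b = params[-1]
    logits = x @ W + b
    e = np.exp(logits - logits.max(axis=-1, keepdims=True))  # numerically stable softmax
    return e / e.sum(axis=-1, keepdims=True)

def n_parameters(params):
    """Number of trainable weights and biases, a rough measure of capacity."""
    return sum(W.size + b.size for W, b in params)

net = init_mlp(n_inputs=16, n_outputs=3, depth=2, width=8)
probs = forward(net, np.ones((4, 16)))  # 4 samples -> 4 x 3 class probabilities
print(probs.shape, n_parameters(net))
```

Increasing `depth` or `width` increases the number of trainable parameters and thus the capacity of the network; training (adjusting the weights to labelled data) is deliberately omitted here.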

Since the early 2000s, deep learning networks have been successfully employed, for example, to recognise and segment objects and image content. AI-assisted voice control and speech recognition, for instance in the Amazon Alexa, Google Home and Apple Siri voice assistants, are based on similar principles. Such technologies have a wide range of applications. Their developers claim, for example, that audio recordings (“coughing” apps) can now be used to identify vocal patterns characteristic of COVID-19 6 . Furthermore, automated evaluation of speech spectrograms can now be used to identify vocal biomarkers for a variety of diseases, such as depression 6 , 7 .
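A spectrogram, the representation on which such speech analyses operate, can be computed with a short-time Fourier transform. The NumPy sketch below uses a synthetic tone rather than real speech; the frame length, hop size and Hann window are illustrative assumptions, and no disease-specific biomarker is computed.

```python
import numpy as np

def spectrogram(signal, fs, frame_len=256, hop=128):
    """Power spectrogram via a short-time Fourier transform with a Hann window.

    Returns (frequencies in Hz, frame start times in s, power array [freq x time]).
    """
    window = np.hanning(frame_len)
    n_frames = 1 + (len(signal) - frame_len) // hop
    frames = np.stack([signal[i * hop:i * hop + frame_len] * window
                       for i in range(n_frames)])
    power = np.abs(np.fft.rfft(frames, axis=1)) ** 2  # power per frame and frequency bin
    freqs = np.fft.rfftfreq(frame_len, d=1.0 / fs)
    times = np.arange(n_frames) * hop / fs
    return freqs, times, power.T

fs = 8000                                   # sampling rate in Hz (assumed)
t = np.arange(fs) / fs                      # one second of audio
tone = np.sin(2 * np.pi * 440 * t)          # synthetic 440 Hz component
freqs, times, S = spectrogram(tone, fs)
dominant = freqs[S.mean(axis=1).argmax()]   # frequency bin with the most energy
```

In an actual voice-biomarker pipeline, features derived from such time-frequency maps (or the maps themselves) would be passed to a trained classifier.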

In healthcare, computer-assisted analysis of image data is undoubtedly one of the most significant benefits of AI. In recent years, a new and rapidly developing field of research has emerged in this context under the umbrella term “radiomics”, which uses AI to systematically analyse patientsʼ imaging data by extracting a large number of distinct quantitative image features and characterising them with regard to their correlations and clinical discriminative value. The term “radiogenomics”, in contrast, refers to a specialised application in which radiomic or other imaging features are linked to genomic profiles 8 .
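To give an impression of what such image features are, the sketch below computes a handful of first-order (histogram-based) radiomic features inside a region of interest. Real radiomics pipelines, for example those following the Image Biomarker Standardisation Initiative (IBSI), compute hundreds of shape, intensity and texture features with carefully standardised definitions; the bin count, feature selection and synthetic image here are assumptions for illustration only, and `first_order_features` is a hypothetical helper.

```python
import numpy as np

def first_order_features(image, mask, n_bins=32):
    """A few first-order (histogram-based) features of the pixels inside a binary ROI mask."""
    roi = image[mask].astype(float)
    mean, std = roi.mean(), roi.std()
    skewness = np.mean((roi - mean) ** 3) / std ** 3 if std > 0 else 0.0
    counts, _ = np.histogram(roi, bins=n_bins)
    p = counts[counts > 0] / counts.sum()   # probabilities of occupied histogram bins
    entropy = -np.sum(p * np.log2(p))       # Shannon entropy in bits
    return {"mean": mean, "std": std, "skewness": skewness,
            "entropy": entropy, "energy": float(np.sum(roi ** 2))}

# Synthetic image with a circular region of interest (e.g. a segmented lesion)
rng = np.random.default_rng(1)
img = rng.normal(100.0, 10.0, size=(64, 64))
yy, xx = np.mgrid[:64, :64]
mask = (yy - 32) ** 2 + (xx - 32) ** 2 < 15 ** 2
feats = first_order_features(img, mask)
```

In a radiomics study, such feature vectors from many patients would then be correlated with clinical end points or, in radiogenomics, with genomic profiles.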

AI and Benefits for Gynaecological-Obstetric Imaging and Diagnostics

The initial hysteria that AI technologies could replace clinical radiologists has now abated. In its place is an awareness that machine learning will enable personalised AI-based software algorithms with interactive visualisation and automated quantification that accelerate clinical decision-making and shorten analysis times. The uptake of AI in other clinical fields, however, is still rather modest or hesitant 9 , 10 , 11 . Computer-aided diagnosis (CAD) systems have in fact been in use for more than 25 years, in particular in breast diagnostics 12 , 13 . Novel deep-learning algorithms are being used to optimise diagnostic performance both in mammography and in AI-supported reporting of breast ultrasound data sets, addressing the issues that limit the use of conventional CAD systems (high development costs, poor cost and workflow efficiency, relatively high false-positive rates, restriction to certain lesions/entities) 14 . This is illustrated by a recent US-British study in which a CNN trained on 76 000 mammography scans significantly reduced false-positive and false-negative findings by 1.2 and 2.7% (UK) and by 5.7 and 9.4% (USA), respectively, compared with the initial expert findings 15 . In addition, consistent AI support can sustainably reduce workload by automatically identifying in advance normal screening results that would otherwise have required conventional assessment 16 . OʼConnell et al. have published similarly promising data on AI-assisted evaluation of breast ultrasound findings. In 300 patients, they showed that automated detection of breast lesions using a set of BI-RADS descriptors with a commercial diagnostic tool (S-Detect) agreed with the results obtained by ten radiologists with appropriate expertise (sensitivity, specificity > 0.8) 17 .
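The performance measures quoted in such studies all derive from the four cells of a 2×2 table of test result versus true status. The Python sketch below computes them; the counts are invented purely for illustration and are not taken from the cited studies, and `diagnostic_metrics` is a hypothetical helper name.

```python
def diagnostic_metrics(tp, fp, tn, fn):
    """Standard screening metrics from the counts of a 2x2 confusion matrix."""
    return {
        "sensitivity": tp / (tp + fn),          # true-positive rate
        "specificity": tn / (tn + fp),          # true-negative rate
        "false_positive_rate": fp / (fp + tn),  # 1 - specificity
        "false_negative_rate": fn / (fn + tp),  # 1 - sensitivity
        "ppv": tp / (tp + fp),                  # positive predictive value
        "npv": tn / (tn + fn),                  # negative predictive value
    }

# Hypothetical screening cohort of 10 000 women (counts invented for illustration)
reader = diagnostic_metrics(tp=80, fp=120, tn=9780, fn=20)
ai = diagnostic_metrics(tp=85, fp=90, tn=9810, fn=15)

# Absolute difference in percentage points between the two false-positive rates
fp_reduction = 100 * (reader["false_positive_rate"] - ai["false_positive_rate"])
print(f"{fp_reduction:.2f} percentage points fewer false positives")
```

The sketch reports an absolute difference; whether a particular study reports absolute or relative reductions has to be checked in the original publication.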

The advantages of deep learning algorithms have also been clearly demonstrated in other application areas in our field. Cho et al., for example, developed and validated deep learning models to automatically classify cervical neoplasms in colposcopic images. The authors fine-tuned pre-trained CNNs for two classification systems: the cervical intraepithelial neoplasia (CIN) system and the lower anogenital squamous terminology (LAST) system. The CNNs efficiently identified findings requiring biopsy (AUC 0.947) 18 . Shanthi et al. classified microscopic cervical cell smears as normal, mild, moderate, severe or carcinomatous using various CNNs trained on augmented data sets, achieving accuracies of 94.1%, 92.1% and 85.1% for the original, contour-extracted and binary image data, respectively 19 . In the view of Försch et al., one of the main general obstacles to greater integration of AI algorithms into the pathological assessment and diagnosis of histomorphological specimens is that, at present, only a fraction of histopathological data is actually available in digital form and thus accessible to automated evaluation 20 . This situation still applies to the vast majority of potential clinical AI applications 1 , 21 .
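The AUC values reported here and throughout this review have a simple interpretation: the probability that a randomly chosen positive case receives a higher model score than a randomly chosen negative case. The Python sketch below computes the AUC directly from this definition (equivalent to the Mann-Whitney U statistic); the scores are invented and `auc` is a hypothetical helper name.

```python
def auc(scores_pos, scores_neg):
    """Area under the ROC curve: probability that a random positive case scores
    higher than a random negative case (ties count one half)."""
    wins = 0.0
    for p in scores_pos:
        for n in scores_neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(scores_pos) * len(scores_neg))

# Hypothetical model outputs for lesions with and without an indication for biopsy
biopsy_needed = [0.91, 0.85, 0.78, 0.60]
no_biopsy = [0.70, 0.40, 0.35, 0.20, 0.10]
print(auc(biopsy_needed, no_biopsy))  # 0.95
```

The pairwise loop is quadratic in the number of cases; for large data sets a rank-based formulation gives the same value more efficiently.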

Comparable approaches have also been pursued over the last five years in reproductive medicine, where AI technologies have been used successfully for embryo selection, among other things. These approaches have involved training CNNs to make qualitative statements based on image data and/or morphokinetic data in order to predict implantation success 22 , 23 . A study by Bori et al. involving more than 600 patients analysed not only the morphokinetic characteristics mentioned above but also novel parameters such as the distance and speed of pronuclear migration, inner cell mass area, increase in blastocyst diameter and length of the trophectoderm cell cycle. Of the four algorithms tested, the most efficient (AUC 0.77) was the one combining conventional morphokinetic and the above-mentioned morphodynamic features; the last two parameters differed significantly between implanted and non-implanted embryos 24 .

It is beyond dispute that, to date, only relatively few AI-based ultrasound applications have progressed fully from academic concept to clinical application and commercialisation. In addition to the importance of AI in prenatal diagnostics, discussed in the following paragraphs, the advantages of AI-based automated algorithms have been demonstrated very impressively in the reporting of gynaecological abnormalities, a task that is bound to gain in importance, particularly given the limited quality of existing ultrasound training 25 , 26 , 27 . Although the first work in this field dates back more than 20 years 28 , significant pioneering work has been conducted particularly in the last decade, not least owing to the extensive studies of the IOTA working group. Model analyses of risk quantification for sonographically detected adnexal lesions have shown the extent to which, on the one hand, a standardised procedure for qualified assessment and, on the other, a multi-class risk model validated on thousands of patient histories (IOTA ADNEX, Assessment of Different NEoplasias in the adneXa) have made it possible to assess sonographic findings of adnexal masses precisely and reproducibly, thereby providing a significant boost to other study approaches (with or without AI) in this field 29 , 30 , 31 . The incorporation of the ADNEX model into a consensus guideline of the American College of Radiology (ACR) clearly supports these findings. This decision is remarkable, as US professional medical associations are traditionally considered to be sceptical of ultrasound across all disciplines 32 . In a recent study on the validity of two AI models (trained on grey-scale and power Doppler images) for determining the character (benign/malignant) of adnexal lesions, Christiansen et al. demonstrated sensitivities of 96% and 97.1% and specificities of 86.7% and 93.7%, respectively, with no significant differences from expert assessments 33 . The additional benefit of various ML classifiers, alone or in combination, has been investigated in several other studies, which likewise concluded that AI approaches will in future be able to identify more ovarian neoplasms and will be increasingly used in their (early) detection 34 , 35 , 36 , 37 , 38 . In a recently published study, Al-Karawi et al. used ML algorithms (support vector machine classification) to investigate seven established image texture parameters in ultrasound still images which, according to the authors, can provide information about the altered cellular composition that accompanies carcinogenesis. By combining the features with the best test results, the researchers achieved an accuracy of 86 – 90% 39 .
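The seven texture parameters of that study are not listed here, so the following NumPy sketch uses grey-level co-occurrence matrix (GLCM) features, a widely used family of texture descriptors, purely as an example; whether they correspond to the parameters used by Al-Karawi et al. is an assumption. The support vector machine step that would classify the resulting feature vectors is omitted, and `glcm` and `texture_features` are hypothetical helper names.

```python
import numpy as np

def glcm(image, levels=8, offset=(0, 1)):
    """Normalised, symmetric grey-level co-occurrence matrix for one
    non-negative pixel offset (dy, dx)."""
    # Quantise intensities into `levels` equally wide grey-level bins (0 .. levels-1)
    edges = np.linspace(image.min(), image.max(), levels + 1)[1:-1]
    q = np.digitize(image, edges)
    dy, dx = offset
    h, w = q.shape
    a = q[:h - dy, :w - dx]   # reference pixels
    b = q[dy:, dx:]           # neighbours at the given offset
    P = np.zeros((levels, levels))
    np.add.at(P, (a.ravel(), b.ravel()), 1)
    P = P + P.T               # count each pixel pair in both directions
    return P / P.sum()

def texture_features(P):
    """Classic Haralick-style descriptors computed from a normalised GLCM."""
    i, j = np.indices(P.shape)
    nz = P[P > 0]
    return {
        "contrast": float(np.sum(P * (i - j) ** 2)),
        "homogeneity": float(np.sum(P / (1.0 + np.abs(i - j)))),
        "energy": float(np.sum(P ** 2)),
        "entropy": float(-np.sum(nz * np.log2(nz))),
    }

rng = np.random.default_rng(0)
smooth = np.tile(np.linspace(0.0, 1.0, 64), (64, 1))  # gentle horizontal gradient
speckled = rng.uniform(0.0, 1.0, size=(64, 64))       # fine-grained random texture
f_smooth = texture_features(glcm(smooth))
f_speckled = texture_features(glcm(speckled))
```

A fine-grained, heterogeneous texture yields high contrast and low homogeneity, whereas a smooth region yields the opposite; such differences are what a downstream classifier learns to exploit.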

AI in Foetal Echocardiography

Naturally, any analysis of the value of AI raises the question of how automated approaches can benefit foetal cardiac scanning, both diagnostically and for the practitioner, as it is one of the most important but also more complex elements of prenatal sonographic examinations. It is important to recognise here that although detection rates of congenital heart defects (CHD) in national or regional screening programmes have demonstrably improved over the last decade, their sensitivity still ranges from 22.5 to 52.8% 40 . The reasons for this are complex; one of the main factors is undoubtedly that the vast majority of CHDs occur in the low-risk population, with only approximately 10% occurring in pregnant women with known risk factors. Furthermore, a Dutch study suggests that, in addition to a lack of expertise in routine clinical practice, factors such as limited adaptive visual-motor skills in acquiring the correct cardiac planes and reduced vigilance appear to play a crucial role in the failure to identify cardiac abnormalities 41 .

Experience from adult cardiology has shown, among other things, that automated systems (by no means a novel concept) are demonstrably more efficient than a conventional (manual) approach and are likely to bridge the gap between experts and less experienced practitioners while reducing inter- and intraobserver variability. Pilot studies on automated analysis of left ventricular (functional) parameters such as ventricular volume and ejection fraction from 2-D images, and studies on AI-based tracing of endocardial contours in apical two- and four-chamber views from transthoracically acquired 3-D data sets, have demonstrated accuracy comparable to manual evaluation 42 , 43 . In this context, Kusunose defined four steps that are critical for developing relevant AI models in echocardiography: ensuring adequate image quality, view classification, measurement and, finally, anomaly detection 44 . Zhang et al. investigated the validity of a fully automated AI approach to echocardiographic diagnosis in a clinical context by training deep convolutional networks on > 14 000 complete echocardiograms, enabling them to identify 23 different viewpoints across five common reference views. In up to 96% of cases, the system accurately identified the individual cardiac diagnostic views and, in addition, quantified eleven different measurement parameters with accuracy comparable to or even higher than that of manual approaches 45 . Does this mean that AI algorithms will replace echocardiographers or even cardiologists in the future? Should we be worried? Have we become members of the “useless class”, in the provocative words of Harari 46 ? The answers to these questions are unambiguous yet complex, and they apply equally to foetal echocardiography.
Although AI approaches will very soon be an integral component of routine cardiac diagnostics, examiners have a continuing or even increased responsibility to use their clinical expertise to understand, monitor and assess automated procedures and, when errors occur, to take appropriate remedial action 47 . Arnaout et al. successfully trained a model to identify diagnostic cross-sectional planes using 107 823 ultrasound images from > 1300 foetal echocardiograms 48 . In a separate modelling step, they were then able to distinguish structurally normal hearts from those with complex anomalies, with findings comparable to those of experts. Le et al. documented a slightly lower sensitivity/specificity (0.93 and 0.72, respectively, AUC 0.83) for their AI approach in nearly 4000 foetuses in 2020 49 . Dong et al. demonstrated how accurately a three-step CNN can detect different representations of four-chamber views in 2-D image files while providing feedback on the completeness of the key cardiac structures imaged 50 .
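The left ventricular parameters mentioned above, and the agreement between automated and manual contours, are commonly quantified with two simple formulas: the ejection fraction, EF = (EDV − ESV) / EDV × 100, and the Dice overlap coefficient. The Python sketch below uses hypothetical volumes and toy masks; it does not reproduce any of the cited models, and `ejection_fraction` and `dice` are hypothetical helper names.

```python
import numpy as np

def ejection_fraction(edv_ml, esv_ml):
    """Ejection fraction in percent from end-diastolic and end-systolic volumes."""
    return 100.0 * (edv_ml - esv_ml) / edv_ml

def dice(mask_a, mask_b):
    """Dice coefficient of two binary masks: 1 = identical, 0 = no overlap."""
    a = np.asarray(mask_a, dtype=bool)
    b = np.asarray(mask_b, dtype=bool)
    total = a.sum() + b.sum()
    return 1.0 if total == 0 else 2.0 * np.logical_and(a, b).sum() / total

# Hypothetical volumes (ml), e.g. from an automated contour-based measurement
ef = ejection_fraction(edv_ml=120.0, esv_ml=50.0)

# Toy comparison of a "manual" and an "automated" endocardial contour mask
manual = np.zeros((10, 10), dtype=bool)
manual[2:8, 2:8] = True          # 6 x 6 = 36 pixels
automated = np.zeros((10, 10), dtype=bool)
automated[2:8, 3:9] = True       # same shape, shifted by one pixel
overlap = dice(manual, automated)
```

A one-pixel shift of a small contour already lowers the Dice coefficient noticeably, which is why agreement studies usually report such overlap metrics alongside the derived clinical parameters.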

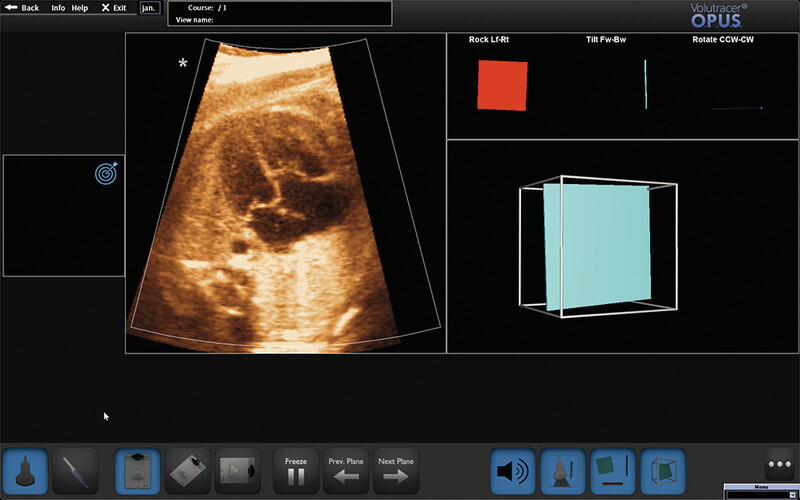

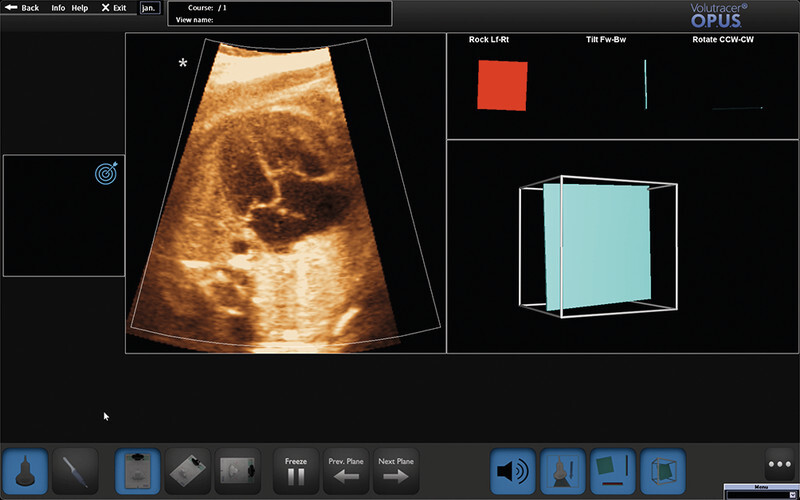

In summary, it is beyond question that the essential prerequisite for efficient cardiac diagnostics, as discussed above, is the acquisition of exact cross-sectional images during the examination. In the final analysis, this prerequisite applies to all disciplines, in particular to functional imaging diagnostics. Hinton put this pertinently: “To recognize shapes, first learn to generate images” 51 . Noteworthy here is the recent approval by the US Food and Drug Administration (FDA) of an adaptive ultrasound system (Caption AI) that supports and optimises sectional plane acquisition (and the recording of video sequences) in adult echocardiography. In the view of its developers, this demonstrates how the enormous potential of artificial intelligence and machine learning technologies can be used specifically to improve access to safe and effective cardiac diagnostics 52 . Another commercially available high-sensitivity ultrasound simulator (Volutracer O. P. U. S.) uses a comparable AI workflow. The simulator monitors and adaptively corrects the manual settings and transducer movements in real time to achieve an exact target plane in any 2-D image sequence, irrespective of the anatomical structure ( Fig. 3 ) 53 . A major advantage of these systems is undoubtedly their usefulness in training and advanced training since, among other things, the integrated self-learning mode can be used to automatically train, evaluate and certify operators without the need for experts to personally adjust settings 54 .

Fig. 3.

Representation of the optical ultrasound simulator Volutracer O. P. U. S. Any volume data set (see also Fig. 5 ) can be uploaded and adapted by post-processing, for instance for teaching, to acquire appropriate planes (so-called freestyle mode, without simulator instructions). In the upper right-hand corner of the screen, the system provides graphical feedback to guide the movements needed to reach the correct target plane. The simulation software also includes a variety of cloud-based training data sets that help teach users the correct settings using a GPS tracking system and audio simulator instructions with overlaid animations. Among other things, the system measures the position, the angle of rotation and the time needed to reach the required target plane, and compares these with an expert reference that can likewise be viewed.
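Feedback of the kind described in the caption (position and rotation relative to a target plane) can be reduced to simple geometry. The NumPy sketch below is a hypothetical helper, not the simulatorʼs actual algorithm: it represents each plane by a normal vector and a point and reports the angle between the current and target planes and the distance of the current planeʼs reference point from the target plane.

```python
import numpy as np

def plane_deviation(current_normal, current_point, target_normal, target_point):
    """Angle (degrees) between two image planes and distance (same units as the
    points) of the current plane's reference point from the target plane."""
    n1 = np.asarray(current_normal, dtype=float)
    n1 = n1 / np.linalg.norm(n1)
    n2 = np.asarray(target_normal, dtype=float)
    n2 = n2 / np.linalg.norm(n2)
    # |cos| because a plane's normal may point in either direction
    angle = np.degrees(np.arccos(np.clip(abs(n1 @ n2), 0.0, 1.0)))
    offset = np.asarray(current_point, dtype=float) - np.asarray(target_point, dtype=float)
    distance = abs(offset @ n2)
    return angle, distance

# Example: probe plane slightly tilted and displaced relative to the target plane
angle, dist = plane_deviation(current_normal=(0.0, 0.2, 1.0), current_point=(1.0, 2.0, 3.0),
                              target_normal=(0.0, 0.0, 1.0), target_point=(0.0, 0.0, 0.0))
```

A training system could, for example, report both values continuously and count the target as reached once they fall below chosen thresholds; those thresholds would be a design assumption.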

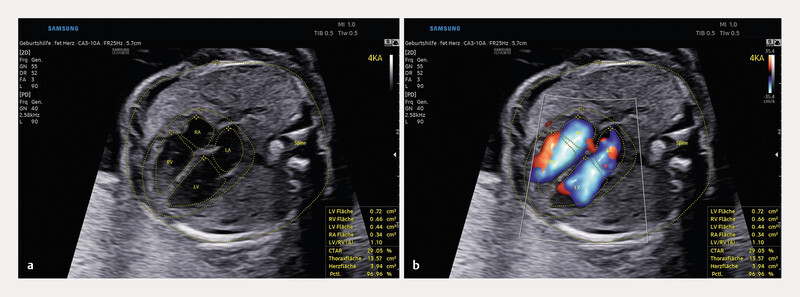

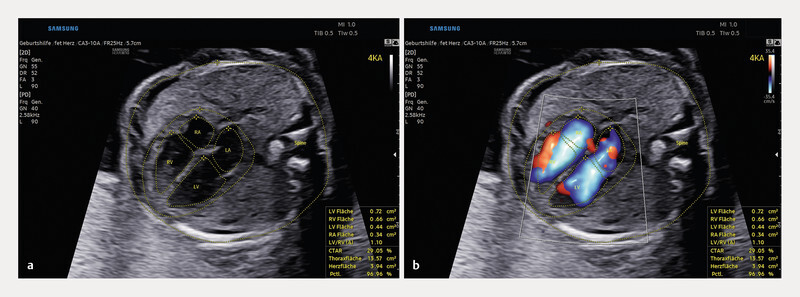

Because of its small size, the foetal heart usually occupies only a small area of the ultrasound image, which in turn requires any algorithm to learn to ignore at least part of the available image data. Another difference from postnatal echocardiography is that the orientation and position of the heart in the image, which depend on the position of the foetus in the uterus, can vary considerably, further complicating image analysis 55 , 56 . An interesting approach is HeartAssist, a deep-learning tool for automated recognition, annotation and measurement of cardiac structures that is about to be launched on the market. This intelligent software tool for foetal echocardiography can identify and evaluate target structures (axial, sagittal) in 2-D static images of the cardiac diagnostic sectional planes, acquired either directly or as single frames extracted from video sequences ( Fig. 4 ). Notably, the tool can capture even partially obscured image information and integrate it into the analysis, and image recognition succeeds even with a limited sonographic window. Like most algorithms for automating (foetal) diagnostics (e.g. BiometryAssist, Smart OB or SonoBiometry), this approach is based on segmentation (abstraction) of foetal structures. It uses a wide variety of automated segmentation techniques (pixel-, edge- and region-based as well as model- and texture-based models), which are usually combined to achieve better results 57 , 58 .
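Region-based segmentation, one of the technique families listed above, can be illustrated with classic region growing. The NumPy sketch below is a generic textbook example on a synthetic image, not the method used in HeartAssist or the other named products (which is not described here); the seed position, tolerance and image values are assumptions, and `region_grow` is a hypothetical helper.

```python
import numpy as np
from collections import deque

def region_grow(image, seed, tolerance):
    """Region-based segmentation: starting at `seed`, add 4-connected neighbours
    whose intensity differs from the seed intensity by at most `tolerance`."""
    h, w = image.shape
    seed_value = float(image[seed])
    mask = np.zeros((h, w), dtype=bool)
    mask[seed] = True
    queue = deque([seed])
    while queue:
        y, x = queue.popleft()
        for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
            if (0 <= ny < h and 0 <= nx < w and not mask[ny, nx]
                    and abs(float(image[ny, nx]) - seed_value) <= tolerance):
                mask[ny, nx] = True
                queue.append((ny, nx))
    return mask

rng = np.random.default_rng(0)
image = np.full((40, 40), 200.0) + rng.uniform(-5, 5, size=(40, 40))  # bright "myocardium"
image[10:25, 12:30] = 20.0 + rng.uniform(-5, 5, size=(15, 18))      # dark, fluid-filled "chamber"
chamber = region_grow(image, seed=(17, 20), tolerance=30.0)
print(chamber.sum())  # 270 pixels (15 x 18)
```

On real ultrasound images, speckle noise, shadowing and drop-out make such a simple intensity criterion fragile, which is one reason why several segmentation techniques are usually combined.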

Fig. 4.

Four-chamber view of a foetal heart in week 23 of pregnancy. The foetusʼ spine is located at 3 oʼclock, and the four-chamber view is seen in a partially oblique orientation. In addition to thoracic and cardiac circumference, the inner outlines of the atria and ventricles are automatically recognised, traced and quantified in the static image. Similarly, HeartAssist can annotate and measure all other cardiac diagnostic sectional planes (axial/longitudinal).

Particular significance of 3-D/4-D technology

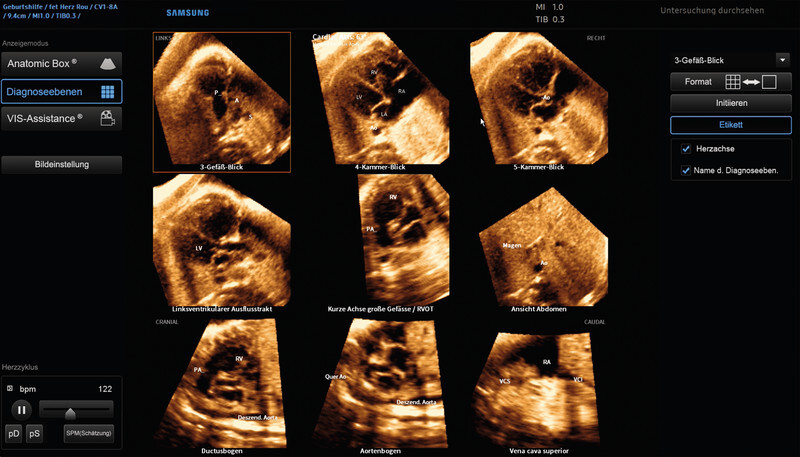

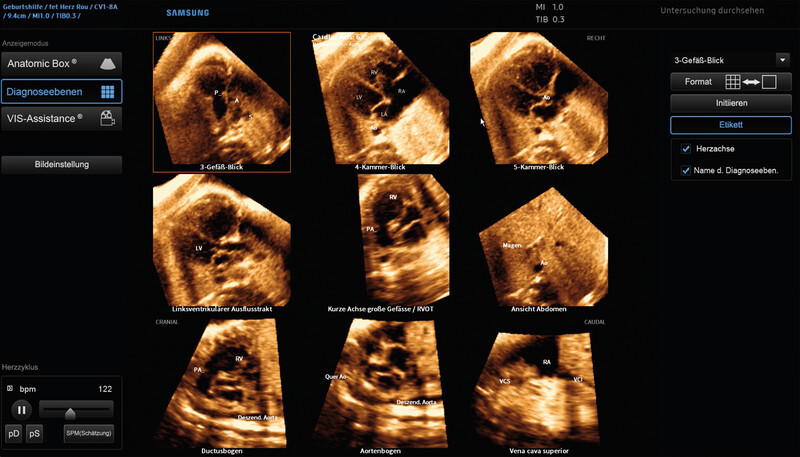

The introduction of 3-D/4-D technology, now pre-installed on most ultrasound systems, has opened up a range of display options that are increasingly being used for automated image analysis and plane reconstruction. Using this technology, several manufacturers offer commercial software tools that facilitate a volume-based approach to foetal echocardiography and its standardised interpretation (Fetal Heart Navigator, SonoVCADheart, Smart Planes FH and 5D Heart). The last of these facilitates a standardised, workflow-based 3-D/4-D evaluation of the foetal cardiac anatomy by implementing “foetal intelligent navigation echocardiography” (FINE) ( Fig. 5 ). This method analyses STIC (spatio-temporal image correlation) volumes acquired with the four-chamber view as the initial plane. In the next step, predefined anatomical target structures are marked, and the nine diagnostic planes needed for a complete foetal echocardiographic assessment are reconstructed automatically. Each plane can subsequently be evaluated independently of the others (e.g. quantitative analysis of the outflow tracts) and, if required, adjusted manually. Yeo et al. showed that 98% of cardiac abnormalities could be detected using this method 59 . The method has been shown to be easy to learn and simplifies the workflow for evaluating the foetal heart independently of expert practitioners, a feature that is particularly important for capturing congenital anomalies in detail 60 , 61 .
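The automatic reconstruction of diagnostic planes described above ultimately requires resampling arbitrary, possibly oblique, planes from a voxel volume. The NumPy sketch below shows only this generic resampling step, under simplifying assumptions (isotropic voxels, z-y-x axis order, trilinear interpolation); how commercial tools such as FINE/5D Heart locate the anatomical landmarks that define each plane is not described here and is not modelled. `trilinear` and `extract_plane` are hypothetical helper names.

```python
import numpy as np

def trilinear(volume, pts):
    """Sample a 3-D array (z, y, x) at fractional voxel coordinates `pts` (N x 3)
    by trilinear interpolation; points outside the volume return 0."""
    pts = np.asarray(pts, dtype=float)
    upper = np.array(volume.shape) - 1
    inside = np.all((pts >= 0) & (pts <= upper), axis=1)
    p = np.clip(pts, 0, upper)
    p0 = np.floor(p).astype(int)
    p1 = np.minimum(p0 + 1, upper)
    f = p - p0                          # fractional position within the voxel cell
    out = np.zeros(len(p))
    for corner in range(8):             # the 8 voxels surrounding each sample point
        bits = [(corner >> k) & 1 for k in range(3)]
        idx = [p1[:, k] if bit else p0[:, k] for k, bit in enumerate(bits)]
        weight = np.prod([f[:, k] if bit else 1 - f[:, k]
                          for k, bit in enumerate(bits)], axis=0)
        out += weight * volume[idx[0], idx[1], idx[2]]
    return np.where(inside, out, 0.0)

def extract_plane(volume, center, u, v, size=64, spacing=1.0):
    """Resample a size x size image through `center`, spanned by the in-plane
    directions u and v (voxel units, z-y-x order)."""
    u = np.asarray(u, dtype=float)
    u = u / np.linalg.norm(u)
    v = np.asarray(v, dtype=float)
    v = v - u * (v @ u)                 # Gram-Schmidt: make v orthogonal to u
    v = v / np.linalg.norm(v)
    offsets = (np.arange(size) - (size - 1) / 2) * spacing
    a, b = np.meshgrid(offsets, offsets, indexing="ij")
    pts = np.asarray(center, dtype=float) + a[..., None] * u + b[..., None] * v
    return trilinear(volume, pts.reshape(-1, 3)).reshape(size, size)

# Toy volume: a bright sphere (radius 8 voxels) centred in a 48^3 block
zz, yy, xx = np.indices((48, 48, 48))
sphere = ((zz - 24) ** 2 + (yy - 24) ** 2 + (xx - 24) ** 2 < 8 ** 2).astype(float)
oblique = extract_plane(sphere, center=(24, 24, 24), u=(1, 1, 0), v=(0, 1, -1), size=32)
```

In a full system, the plane parameters (`center`, `u`, `v`) would be derived from the marked or automatically detected anatomical landmarks.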

Fig. 5.

5DHeart (foetal intelligent navigation echocardiography, FINE) program interface with automatically reconstructed diagnostic planes of an Ebsteinʼs anomaly in a foetus in week 33 of pregnancy (STIC volume). The atrialised right ventricle is clearly visible as a lead structure in the laevorotated four-chamber view (cardiac axis > 63°). By default, the foetusʼ back is positioned at 6 oʼclock after the automated software has been applied (volume acquisition, by contrast, was performed at 7 – 8 oʼclock, see Fig. 5 ). Analysis of the corresponding planes has also revealed a tubular aortic stenosis (visualised in the three-vessel view, five-chamber view, LVOT and aortic arch planes).

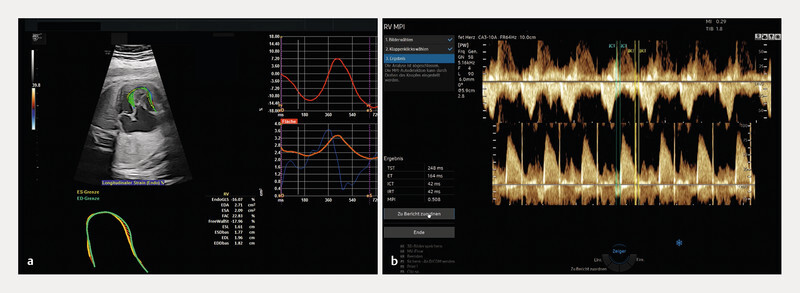

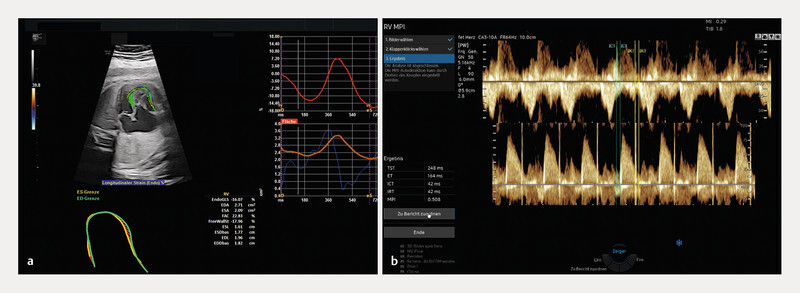

Like manual plane reconstruction, the acquisition and quantification of objective foetal cardiac functional parameters is demanding and therefore examiner-dependent. Special mention should be made here of speckle tracking echocardiography, which provides quantitative information on two-dimensional global and segmental myocardial wall motion and deformation parameters (strain/strain rate) on the basis of “speckles”, which are caused by interference of randomly scattered echoes in the ultrasound image. The introduction of semi-automatic software (fetalHQ), which uses a 2-D video clip of the heart together with manual selection of a cardiac cycle and marking of the annulus and apex, has now made it possible for less experienced practitioners to quantify the size, shape and contractility of 24 different segments of the foetal heart using AI-assisted analysis of these speckles 62 , 63 , 64 ( Fig. 6 ). In addition, AI methods for analysing Doppler-based cardiac function (modified myocardial performance index (Mod-MPI), previously termed the Tei index) have been developed in recent years and are now commercially available 65 , 66 .
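Two of the quantities mentioned above can be sketched briefly. Speckle tracking, in its simplest form, follows small blocks of the speckle pattern from frame to frame (block matching), and the change in distance between tracked points yields strain; the MPI is the ratio (ICT + IRT) / ET of the isovolumetric contraction and relaxation times to the ejection time. The NumPy sketch below is a simplified illustration on synthetic data, not the algorithm of fetalHQ or any commercial MPI tool; block size, search range, segment lengths and time intervals are assumptions, and all function names are hypothetical.

```python
import numpy as np

def track_block(frame0, frame1, center, half=3, search=4):
    """Block matching: displacement (dy, dx) of the speckle pattern around `center`
    from frame0 to frame1, minimising the sum of squared differences (SSD).
    Assumes `center` lies at least half + search pixels from the image border."""
    y, x = center
    ref = frame0[y - half:y + half + 1, x - half:x + half + 1]
    best_ssd, best = np.inf, (0, 0)
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            cand = frame1[y + dy - half:y + dy + half + 1, x + dx - half:x + dx + half + 1]
            ssd = float(np.sum((cand - ref) ** 2))
            if ssd < best_ssd:
                best_ssd, best = ssd, (dy, dx)
    return best

def lagrangian_strain(length_t, length_0):
    """Strain in percent relative to the reference (end-diastolic) segment length."""
    return 100.0 * (length_t - length_0) / length_0

def modified_mpi(ict_ms, irt_ms, et_ms):
    """Myocardial performance index: (isovolumetric contraction + relaxation time) / ejection time."""
    return (ict_ms + irt_ms) / et_ms

# Synthetic speckle pattern that moves 2 pixels down and 1 pixel left between frames
rng = np.random.default_rng(0)
frame0 = rng.random((64, 64))
frame1 = np.roll(frame0, shift=(2, -1), axis=(0, 1))
shift = track_block(frame0, frame1, center=(30, 30))      # expected (2, -1)

strain = lagrangian_strain(length_t=8.2, length_0=10.0)   # shortening -> negative strain
mpi = modified_mpi(ict_ms=30.0, irt_ms=40.0, et_ms=170.0)  # hypothetical time intervals
```

Real speckle-tracking software additionally has to cope with speckle decorrelation, out-of-plane motion and noise, typically by regularising the displacement field across the myocardium.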

Fig. 6.

Software tools for functional analysis of the foetal heart. Semi-automated approach to speckle tracking analysis using fetalHQ in the foetus examined in Figs. 3 and 5 with Ebsteinʼs anomaly ( a ). A selected cardiac cycle is analysed in the approach using automatic contouring of the endocardium for the left and/or right ventricle and subsequent quantification of functional variables such as contractility and deformation. Automated calculation of the (modified) myocardial performance index (MPI, Tei index) by spectral Doppler recording of blood flow across the tricuspid and pulmonary valves using MPI+ ( b ).

AI in standardised diagnostics of the foetal CNS

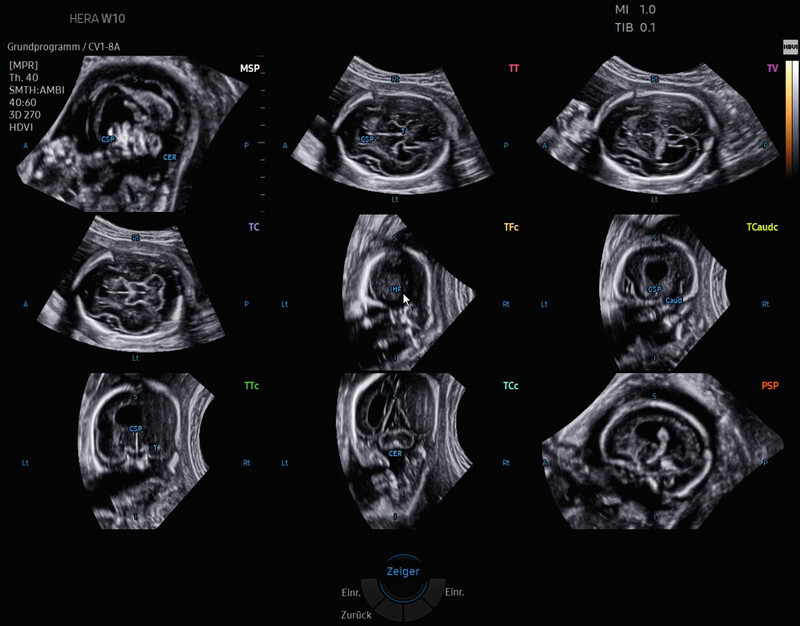

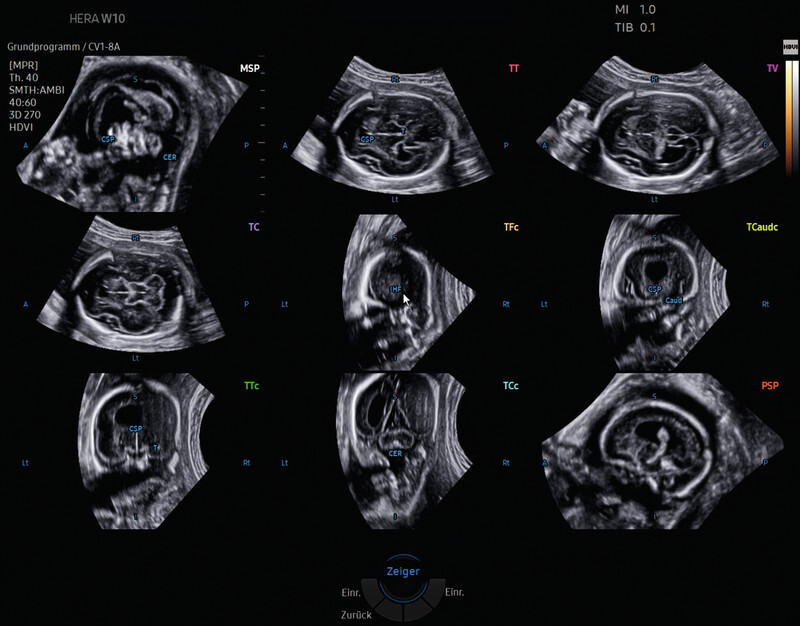

As mentioned above, the decisive advantage of automated techniques in prenatal diagnostics is that they will allow less experienced practitioners to identify highly complex anatomical structures such as the foetal heart or CNS correctly, in a standardised and examiner-independent manner. The basis for such tools is formed by transthalamic (TT) 3-D volume data sets (acquired in the sectional plane used to measure the biparietal diameter), which are then post-processed and evaluated with AI assistance. These allow a primary examination of the foetal CNS with extraction of the transventricular (TV) or transcerebellar (TC) plane from the volume block (SonoCNS, Smart Planes CNS) or even a complete neurosonogram (5DCNS+) ( Fig. 7 ). After axial alignment of the corresponding B and C planes and marking of the thalamic nuclei or the cavum septi pellucidi, the latter algorithm also automatically reconstructs the coronal and sagittal sectional planes required for a complete neurosonogram ( Fig. 7 ). The working group behind this algorithm documented successful visualisation rates of 97.7 – 99.4% for axial, 94.4 – 97.7% for sagittal and 92.2 – 97.2% for coronal planes in a prospective follow-up study using the modified 5DCNS+ algorithm 67 . A retrospective clinical validation study of more than 1100 pregnant women yielded similar results 68 . In contrast to the data of Pluym et al., the authors were also able to show that this standardised approach could be used to collect biometric parameters that were as valid and reproducible as those obtained manually 69 . Ambroise-Grandjean et al. were similarly unequivocal, showing in a feasibility study that the three primary planes, including biometric measurements, could be consistently reconstructed and quantitatively evaluated using AI (Smart Planes CNS) with high intra- and interobserver reproducibility (ICC > 0.98) 70 .
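Reproducibility figures such as the ICC > 0.98 quoted above are intraclass correlation coefficients. The cited studies may have used a different ICC form; the NumPy sketch below assumes ICC(2,1) in the Shrout and Fleiss notation (two-way random effects, absolute agreement, single measurement) and uses invented biparietal diameter values for illustration. `icc_2_1` is a hypothetical helper name.

```python
import numpy as np

def icc_2_1(ratings):
    """ICC(2,1) (Shrout & Fleiss): two-way random effects, absolute agreement,
    single measurement. `ratings` is an n_subjects x k_raters array."""
    x = np.asarray(ratings, dtype=float)
    n, k = x.shape
    grand = x.mean()
    ss_rows = k * np.sum((x.mean(axis=1) - grand) ** 2)  # between-subject variation
    ss_cols = n * np.sum((x.mean(axis=0) - grand) ** 2)  # systematic rater differences
    ss_total = np.sum((x - grand) ** 2)
    ss_err = ss_total - ss_rows - ss_cols
    msr = ss_rows / (n - 1)
    msc = ss_cols / (k - 1)
    mse = ss_err / ((n - 1) * (k - 1))
    return (msr - mse) / (msr + (k - 1) * mse + k * (msc - mse) / n)

# Hypothetical BPD measurements (mm): manual vs. automated, six foetuses
bpd = np.array([[50.1, 50.3],
                [55.3, 55.0],
                [60.2, 60.5],
                [48.7, 48.9],
                [62.9, 62.6],
                [57.4, 57.6]])
icc = icc_2_1(bpd)
```

Because the absolute-agreement form penalises systematic offsets between methods, a constant bias of the automated tool would lower this ICC even if the measurements were perfectly correlated.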

Fig. 7.

(Semi-)automatic reconstruction after application of 5DCNS+ of an axially acquired 3-D volume of the foetal CNS (biparietal plane) in a foetus with a semilobar holoprosencephaly in week 23 of pregnancy. The complete neurosonogram reconstructed from the source volume comprises the 9 required diagnostic sectional planes (3 axial, 4 coronal and 2 sagittal planes). In the axial planes, automatic biometric measurements (not shown) are taken, which can be adjusted subsequently by hand at any time.

These algorithms are already in use in clinical practice; however, they usually require intermediate steps by the practitioner. In the future, specially trained CNNs will be able to extract all sectional planes from raw volumes fully automatically. Huang et al. demonstrated that “view-based projection networks” (CNNs) applied to post-processed 3-D volumes (the axial source volume and the corresponding 90° sagittal/coronal rotations) could reliably detect and display five predefined anatomical CNS structures in parallel in three different 3-D projections, with the best detection rates once again achieved in the axial view 71 . This is due, among other things, to the gradual reduction in image quality inherent in the orthogonal B and C planes. The authors used the data sets of the INTERGROWTH-21st study group for their analysis. High B-mode image quality and accurate sectional plane imaging are indispensable prerequisites for 2-D-based AI approaches, especially for the automated detection of abnormal CNS findings, as recently published by Xie et al. 72 . In this study, CNNs were trained on 2-D and 3-D data sets of approximately 15 000 normal and 15 000 abnormal standard axial planes and assessed for segmentation efficiency, binary classification into normal and abnormal planes, and localisation of CNS lesions (sensitivity/specificity 96.9 and 95.9%, respectively; AUC 0.989). Before such AI approaches can be used where they would be of greatest benefit, namely in routine diagnostics, a number of hurdles still need to be cleared. These are primarily associated with the initial steps of imaging diagnostics (in keeping with Hintonʼs dictum that quality is based on image generation), and this ultimately also applies to other foetal target structures in prenatal diagnostics 51 .
It would be interesting here to determine, for example, to what extent such automated approaches, combining standardised plane reconstruction with DL-based classification, can accurately and reproducibly detect, annotate and quantify two- and three-dimensional measurement parameters, thereby enabling diagnostics that are significantly less dependent on the presence of an expert practitioner. Of particular interest are approaches in which specialised neural networks are used to optimise image acquisition protocols in obstetric ultrasound, thereby shortening examination times and providing comprehensive anatomical information even from partially obscured image areas. For example, Cerrolaza et al. demonstrated (analogous to deep reinforcement learning models for incomplete CT scans) that AI reconstruction of the foetal skull remained possible even when only 60% of the skull was captured in a volume data set 73 , 74 .

The potential of neural networks has also been demonstrated in recent work by Cai et al., who developed a multi-task CNN that learns to detect standard biometry planes, such as those for foetal abdominal and head circumference (transventricular plane), from the examinerʼs eye movements while viewing video sequences 75 . Baumgartner et al. showed that a specially trained convolutional network (SonoNet) can detect thirteen different standard foetal planes in real time and localise the relevant target structures 76 . Yaqub et al. took a similar approach, using a neural network both to check the completeness of a sonographic anomaly scan and to perform quality control of the acquired images (in accordance with international guidelines); no differences from manual expert assessment were found 77 , 78 . The same modelling approaches now also form the basis of the worldʼs first fully integrated AI tool for automated biometry of foetal target structures and AI-supported quality control (SonoLyst) 5 . The potential of neural networks is also apparent in recent data from a British research group on AI-based analysis of 2-D videos recording the workflow of experienced examiners. This analysis allows systems to predict which transducer movements are most likely to produce precise target planes in anomaly scans 79 . The same group also showed that their initial AI models could automatically recognise video content (sectional planes) and generate appropriate captions, and that specially trained CNNs could evaluate combined data from a motion sensor and an ultrasound probe and convert them into signals that guide correct transducer movement 80 , 81 .
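Networks such as SonoNet produce a score for each plane class in every video frame. How such frame-wise outputs might be turned into a stable on-screen label is sketched below. The exponential smoothing factor, the confidence threshold and the reduced label list are assumptions for illustration and are not taken from the SonoNet publication.

```python
from __future__ import annotations

# Hypothetical, reduced label set; a real system would use its own class list.
LABELS = ["background", "abdominal", "brain_transventricular", "femur"]


def smooth_and_label(frame_probs: list[list[float]], alpha: float = 0.5,
                     threshold: float = 0.6) -> list[str]:
    """Exponentially smooth per-frame class probabilities over time and report a
    plane label only when the smoothed confidence reaches `threshold`."""
    smoothed: list[float] | None = None
    labels: list[str] = []
    for probs in frame_probs:
        if smoothed is None:
            smoothed = list(probs)  # first frame initialises the running estimate
        else:
            smoothed = [alpha * p + (1 - alpha) * s for p, s in zip(probs, smoothed)]
        best = max(range(len(smoothed)), key=smoothed.__getitem__)
        labels.append(LABELS[best] if smoothed[best] >= threshold else "background")
    return labels
```

Smoothing trades a short delay for fewer flickering labels when the probe passes briefly through a plane; the threshold keeps uncertain frames labelled as background.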

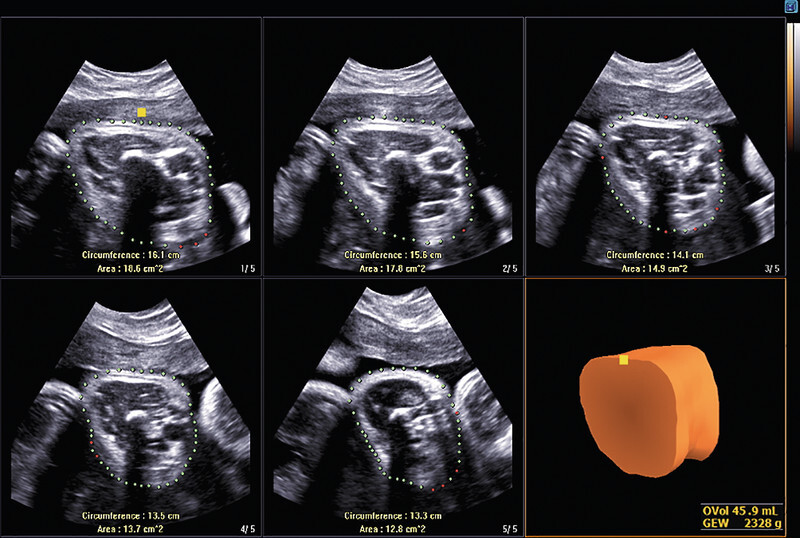

AI and Other Clinical Applications in Obstetric Monitoring

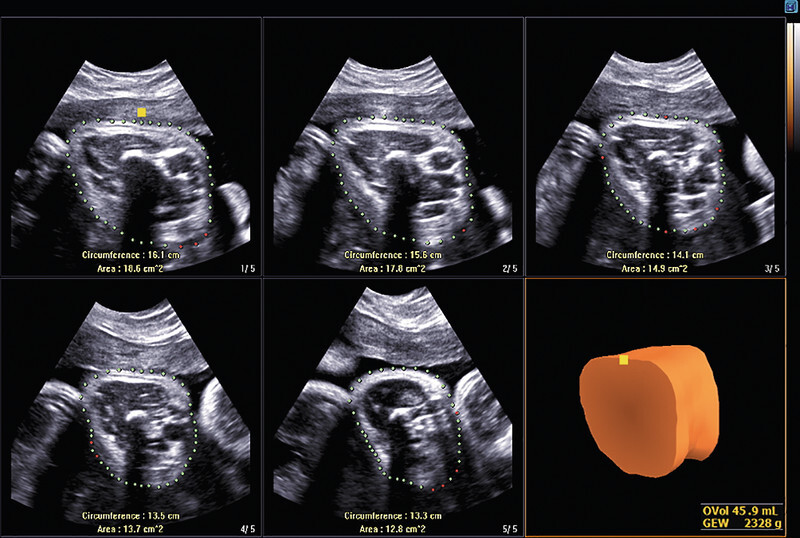

Optimising the accuracy of biometry is another area in which AI can be of direct clinical relevance. Despite the assistance systems mentioned above and the substantial improvements in ultrasound diagnostics in recent years, such optimisation remains a challenge. Most foetal weight estimation models are based on parameters measured in conventional 2-D ultrasound (head circumference, biparietal diameter, abdominal circumference, femur diaphysis length). The soft tissue of the upper and lower extremities is an established surrogate parameter for foetal nutritional status, but it has hitherto not been directly quantifiable by biometry 82 . Three-dimensional measurement of the fractional limb volume (FLV) of the upper arm and/or thigh has been shown to improve the precision of foetal weight estimation, even in multiple pregnancies 83 . Automated techniques that process 3-D volumes much faster and, above all, independently of the examiner (by efficiently recognising and tracing soft tissue boundaries) have demonstrated the clinical benefit of volumetric FLV measurement. One such tool (5DLimbVol) integrates axially acquired 3-D data sets of the upper arm or thigh into the routine workflow and incorporates the result into conventional weight estimation ( Fig. 8 ) 84 , 85 .

Fig. 8.

Automated sectional plane reconstruction of a foetal thigh at 35 weeks of gestation for foetal weight estimation (soft tissue mantle of the thigh reconstructed with 5DLimb). After transverse 3-D volume acquisition of the thigh, the soft tissue volume calculated in this way can be used to improve the accuracy of foetal weight estimation.
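The volumetric principle behind FLV can be written out as a simple calculation. As we understand the definition by Lee et al. 82 , the measurement covers the central half of the humeral or femoral diaphysis. The sketch below assumes five equidistant traced soft-tissue areas and a plain slab-sum rule, whereas commercial software may interpolate differently; the weight-estimation formulae that use FLV are deliberately not reproduced.

```python
from __future__ import annotations


def fractional_limb_volume(diaphysis_length_mm: float, slice_areas_mm2: list[float]) -> float:
    """Approximate FLV in cm^3 from soft-tissue areas traced on equidistant transverse
    slices spanning the central 50% of the diaphysis (slab-sum assumption)."""
    if not slice_areas_mm2:
        raise ValueError("at least one slice area is required")
    segment_mm = 0.5 * diaphysis_length_mm          # central half of the bone
    spacing_mm = segment_mm / len(slice_areas_mm2)   # thickness represented by each slice
    volume_mm3 = sum(area * spacing_mm for area in slice_areas_mm2)
    return volume_mm3 / 1000.0                       # 1 cm^3 = 1000 mm^3
```

For a hypothetical 70 mm femur with traced areas of 400 to 430 mm², `fractional_limb_volume(70.0, [400, 420, 430, 420, 400])` gives about 14.5 cm³. Automating the tracing step is what removes most of the examiner dependence.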

AI has also made it possible to record sonographic parameters such as the angle of progression (AoP) and head direction (HD) automatically during labour. Youssef et al. first reported in 2017 that automated measurement of the AoP is feasible and reproducible 86 . How far commercially available software solutions such as LaborAssist will improve clinical care, however, remains to be seen.
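Once the pubic symphysis and the foetal skull contour have been identified (the step that such software automates), the AoP itself is plane geometry: it is the angle at the inferior edge of the symphysis between the long axis of the symphysis and a line drawn tangentially to the skull contour. In the sketch below, the three landmark coordinates are assumed to be given; segmentation and the search for the tangent point are not shown.

```python
from __future__ import annotations

import math


def angle_of_progression(symphysis_upper: tuple[float, float],
                         symphysis_lower: tuple[float, float],
                         skull_tangent_point: tuple[float, float]) -> float:
    """Angle in degrees, with its vertex at the inferior edge of the symphysis, between
    the symphysis long axis and the line tangential to the foetal skull."""
    ax = symphysis_upper[0] - symphysis_lower[0]
    ay = symphysis_upper[1] - symphysis_lower[1]
    bx = skull_tangent_point[0] - symphysis_lower[0]
    by = skull_tangent_point[1] - symphysis_lower[1]
    cross = ax * by - ay * bx
    dot = ax * bx + ay * by
    # atan2 of |cross| and dot is numerically stabler than acos of the cosine.
    return math.degrees(math.atan2(abs(cross), dot))
```

With the upper and lower symphysis landmarks at (0, 10) and (0, 0) and a tangent point at (10, -10), the function returns 135°; a larger angle corresponds to further descent of the head.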

A heated debate, illustrating the difficulties occasionally encountered when automated techniques are employed in clinical practice, is underway regarding the potential benefits of computer-assisted assessment of the intrapartum foetal heart rate (electronic foetal heart rate monitoring). Because visual assessment of CTG abnormalities is subjective and shows marked interobserver variability, it could, at least in theory, benefit from objective automated analysis. However, prospective randomised data from the INFANT study group did not show any advantage over conventional visual assessment by the staff present during delivery, either in short-term neonatal outcomes or in outcomes at two years 87 . To what extent methodological weaknesses in the study design (e.g. a Hawthorne effect) contributed to the lack of significant differences between the study arms remains an open question 88 , 89 , especially since other computer-based approaches have yielded clearly promising data 90 .
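What objective automated CTG analysis means in practice can be illustrated with a deliberately simplified, rule-based sketch: it estimates the baseline as the median of the trace and counts accelerations using the common criterion of a rise of at least 15 bpm lasting at least 15 s. This is not the decision-support system evaluated in INFANT; the 1 Hz sampling rate and the median baseline are simplifying assumptions, and clinical systems use considerably more elaborate signal processing.

```python
from __future__ import annotations

import statistics


def crude_baseline(fhr_bpm: list[float]) -> float:
    """Very crude baseline estimate: the median FHR over the whole analysed window."""
    return statistics.median(fhr_bpm)


def count_accelerations(fhr_bpm: list[float], sample_rate_hz: float = 1.0,
                        rise_bpm: float = 15.0, min_duration_s: float = 15.0) -> int:
    """Count episodes in which FHR stays at least `rise_bpm` above the baseline
    for at least `min_duration_s` seconds."""
    threshold = crude_baseline(fhr_bpm) + rise_bpm
    min_samples = int(round(min_duration_s * sample_rate_hz))
    count, run = 0, 0
    for value in fhr_bpm:
        if value >= threshold:
            run += 1          # still inside a candidate acceleration
            continue
        if run >= min_samples:
            count += 1        # a long-enough episode has just ended
        run = 0
    if run >= min_samples:    # episode still ongoing at the end of the trace
        count += 1
    return count
```

Because the rules are explicit, two runs on the same trace always give the same result, which is precisely the reproducibility that visual CTG assessment lacks; whether that translates into better outcomes is the question the trial addressed.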

In a different application, Fung et al. used data from two large population-based cohort studies (INTERGROWTH-21st and its phase II study INTERBIO-21st) to show that machine learning applied to biometric data from an ultrasound scan between 20 and 30 weeks of gestation, together with a repeat measurement within the following ten weeks, can determine gestational age to within three days and predict the growth curve over the next six weeks individually for each foetus 91 . There is no doubt that AI will become ever more important in the future, for instance in assessing and predicting foetomaternal risk constellations such as preterm birth, gestational diabetes and hypertensive disorders of pregnancy 92 .
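The idea of combining two scans can be sketched as a regression from serial measurements to gestational age. The model used by Fung et al. is not specified in this text, so ordinary least squares serves purely as a stand-in, and the feature layout (head circumference at the first and second scan plus the interval between scans) is hypothetical.

```python
import numpy as np


def fit_linear_model(features: np.ndarray, targets: np.ndarray) -> np.ndarray:
    """Ordinary least squares with an intercept; returns [intercept, w1, ..., wk].

    Hypothetical feature rows: [hc_first_mm, hc_second_mm, days_between_scans];
    target: gestational age at the first scan in days.
    """
    design = np.column_stack([np.ones(len(features)), features])
    coef, *_ = np.linalg.lstsq(design, targets, rcond=None)
    return coef


def predict(coef: np.ndarray, features: np.ndarray) -> np.ndarray:
    """Apply the fitted linear model to new rows of features."""
    design = np.column_stack([np.ones(len(features)), features])
    return design @ coef
```

The second measurement and the interval carry information about growth velocity, which a single scan cannot provide; a clinical model would additionally need non-linear terms and proper external validation.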

Summary

In a recent web-based survey at eight university hospitals, the majority of respondents viewed AI positively and believed that the future of clinical medicine will be shaped by a combination of human and artificial intelligence and that sensible use of AI technologies will significantly improve patient care. Participants saw the greatest potential in the analysis of continuously collected sensor data in electrocardiography and electroencephalography, in the monitoring of intensive care patients, and in imaging procedures for targeted diagnostics and workflow support 93 . With regard to our own field, it should be emphasised that the continuous development of ultrasound systems and associated equipment, for instance high-resolution and matrix probes for gynaecological and obstetric diagnostics, together with the steady introduction of efficient automated segmentation techniques for two- and, in particular, three-dimensional image data, will increasingly influence and optimise the entire process chain, from image acquisition, analysis and processing through to data management.

A recent systematic review of more than 80 studies on automated image analysis found that AI achieved diagnostic performance equivalent to that of expert practitioners. However, the authors also found that in many publications the AI algorithms had not been externally validated, or only inadequately. Together with the need to intensify collaboration between AI developers and clinicians, which is already well underway in many areas, this currently hampers further implementation in relevant clinical processes 94 . The current state of AI in healthcare resembles owning a brand-new car: using it requires both petrol and roads. In other words, the algorithms need to be “fuelled” with, for example, annotated image data, but they can only realise their full potential if the appropriate infrastructure, namely efficient and scalable processes with an AI-ready workflow, is in place 21 .
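External validation means testing an algorithm on data that played no part in its development. Within a multicentre data set, one simple approximation is a leave-one-centre-out split, sketched below. The record layout with a `centre` key is hypothetical, and a truly external test set would ideally be collected independently.

```python
from __future__ import annotations

from collections import defaultdict


def leave_one_centre_out(records: list[dict]) -> list[tuple[str, list[dict], list[dict]]]:
    """Return (held-out centre, training records, test records) for every centre, so
    that no centre ever contributes to both the training and the test set."""
    by_centre: dict[str, list[dict]] = defaultdict(list)
    for record in records:
        by_centre[record["centre"]].append(record)
    splits = []
    for held_out in sorted(by_centre):
        train = [r for centre, recs in by_centre.items() if centre != held_out for r in recs]
        splits.append((held_out, train, by_centre[held_out]))
    return splits
```

A random split across all images, by contrast, lets device settings, examiners and patient populations from one centre appear on both sides, which tends to overestimate how well an algorithm will transfer to a new hospital.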

Outlook

AI systems continue to be developed and integrated into clinical processes, and with this come tremendous expectations of how they will advance healthcare. Integrating these tools is very likely to fundamentally change working and training methods in the future. They will support all healthcare professionals by rapidly and reliably providing data and facts for interpreting findings and for consultations, which, in the best case, will allow them to focus more on the uniquely human elements of their profession. Tasks that cannot be performed by a machine because they demand emotional intelligence, such as targeted patient interaction to identify more nuanced symptoms and building trust through human intuition, highlight how unique and critical the human factor will remain in deploying the clinical AI applications of the future 95 . If nothing else, this reminds us that AI is a long way from truly replacing humans. Almost 100 years ago, the visionary writings of Fritz Kahn (“The Physician of the Future”) were already foreshadowing current and future AI technologies in medicine: a highly plastic constructivism in which technological civilisation and experimental science synergistically transform the biology of the human body 96 , 97 . One thing that emerges from these advances is that, notwithstanding all technical progress, humans have not rendered themselves superfluous, nor will they ever do so. Predictions that up to 47% of all jobs would be lost to automation appear to be unfounded; in healthcare in particular, more jobs are being created than lost 46 , 98 , 99 .

Exploiting the potential of AI algorithms optimally requires interdisciplinary communication and the continuous involvement of physicians, as the primary users, in the development and operation of AI tools. Without such involvement, the medicine of tomorrow will be shaped exclusively by the vision of engineers and will be less able to meet the actual requirements of personalised (precision) medicine 47 , 100 . Table 1 summarises the most urgent research priorities for AI as formulated by the participants of the 2018 consensus workshop of radiological societies 101 , 102 . From the perspective of gynaecology and obstetrics, it should be noted that efforts to optimise AI-assisted sonographic imaging (pre- and post-processing) are continuing in both conventional 2-D imaging and 3-D/4-D volume sonography, and that, in addition to the established algorithms with an automated workflow, further AI technologies are needed that offer intuitive user guidance, ease of use and general (cross-device) availability for the efficient analysis of image and volume data. Assistance systems for real-time plane adjustment and target structure quantification should also be developed further for routine diagnostics. Of particular note is that pre-trained algorithms can now be used to analyse oneʼs own population-based data (transfer learning). This is an attractive and, above all, reliable method, since training a new neural network from scratch on a large volume of data is computationally demanding and time-consuming 103 . In this process, the existing pre-trained layers of a CNN are retained and only the output layer is adapted and retrained to recognise the object classes of the new task.
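The transfer-learning step described above, keeping pre-trained layers fixed and retraining only the output layer, can be sketched in NumPy. Assumptions: the “pre-trained” layers are simulated by a fixed random projection with tanh (a real application would load the convolutional layers of an existing network in a deep-learning framework), and the new output layer is a logistic-regression head for a two-class task trained by plain gradient descent.

```python
import numpy as np

rng = np.random.default_rng(42)

# Hypothetical stand-in for the frozen, pre-trained layers of a CNN: a fixed random
# projection followed by tanh. These weights are never updated below.
FROZEN_WEIGHTS = rng.normal(size=(2, 16))


def extract_features(x: np.ndarray) -> np.ndarray:
    """Frozen feature extractor plus a constant bias column for the output layer."""
    hidden = np.tanh(x @ FROZEN_WEIGHTS)
    return np.column_stack([hidden, np.ones(len(x))])


def sigmoid(z: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-z))


def log_loss(w: np.ndarray, feats: np.ndarray, y: np.ndarray) -> float:
    """Mean binary cross-entropy of the output layer `w` on precomputed features."""
    p = np.clip(sigmoid(feats @ w), 1e-12, 1 - 1e-12)
    return float(-np.mean(y * np.log(p) + (1 - y) * np.log(1 - p)))


def train_output_layer(x: np.ndarray, y: np.ndarray, lr: float = 0.1,
                       steps: int = 500) -> np.ndarray:
    """Retrain only the output (logistic) layer by gradient descent on the log loss."""
    feats = extract_features(x)  # computed once: the frozen layers do not change
    w = np.zeros(feats.shape[1])
    for _ in range(steps):
        grad = feats.T @ (sigmoid(feats @ w) - y) / len(y)
        w -= lr * grad
    return w
```

Only the vector returned by `train_output_layer` changes during training; `FROZEN_WEIGHTS` stays untouched, which is what makes the approach cheaper than training the whole network and allows it to work with smaller local data sets.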

Table 1 Recommendations from the 2018 consensus workshop on translational research held in Bethesda, USA on advancing and integrating artificial intelligence applications in clinical processes (adapted from Allen et al. 2019, Langlotz et al. 2019 101 , 102 ).

| Research priorities for artificial intelligence in medical imaging |
|---|
| Structured AI use cases and clinical problems that can actually be solved by AI algorithms need to be created and defined. |
| Novel image reconstruction methods should be developed to efficiently generate images from source data. |
| Automated image labelling and annotation methods need to be established that efficiently provide training data for exploring advanced ML models and enable their intensified clinical use. |
| Research is required on machine learning methods that can communicate and visualise AI-based decision aids to users more specifically. |
| Methods should be established to validate and objectively monitor the performance of AI algorithms to facilitate regulatory approval processes. |
| Standards and common data platforms need to be developed to enable AI tools to be easily integrated into existing clinical workflows. |

It is highly likely, however, that the greatest challenge facing the targeted use of AI in healthcare in general is not whether automated technologies are capable of meeting the demands placed on them but whether they can be incorporated into everyday clinical practice. To achieve this, appropriate approval procedures must be initiated, the necessary (clinical) infrastructure established, standardisation ensured and, above all, clinical staff adequately trained. These hurdles will no doubt be overcome, but this is likely to take considerably longer than the maturation of the technologies themselves. We should therefore expect only limited uptake of AI in clinical practice within the next five years, with more widespread uptake within ten years 104 .

Footnotes

Conflict of Interest: The authors (JW, MG) declare that in the last 3 years they have received speakerʼs fees from Samsung HME and GE Healthcare.

References

- 1. Drukker L, Droste R, Chatelain P. Expected-value bias in routine third-trimester growth scans. Ultrasound Obstet Gynecol. 2020;55:375–382. doi: 10.1002/uog.21929.

- 2. Deng J, Dong W, Socher R. ImageNet: A large-scale hierarchical image database. Paper presented at: 2009 IEEE Conference on Computer Vision and Pattern Recognition; 20–25 June 2009.

- 3. Russakovsky O, Deng J, Su H. ImageNet Large Scale Visual Recognition Challenge. Int J Comput Vis. 2015;115:211–252.

- 4. Turing A M. Computing Machinery and Intelligence. Mind. 1950;LIX:433–460.

- 5. Deloitte. State of AI in the enterprise, 3rd ed. Deloitte; 2020. Accessed September 30, 2021 at: http://www2.deloitte.com/content/dam/Deloitte/de/Documents/technology-media-telecommunications/DELO-6418_State of AI 2020_KS4.pdf

- 6. Anthes E. Alexa, do I have COVID-19? Nature. 2020;586:22–25. doi: 10.1038/d41586-020-02732-4.

- 7. Huang Z, Epps J, Joachim D. Investigation of Speech Landmark Patterns for Depression Detection. IEEE Transactions on Affective Computing. 2019. doi: 10.1109/TAFFC.2019.2944380.

- 8. Bodalal Z, Trebeschi S, Nguyen-Kim T DL. Radiogenomics: bridging imaging and genomics. Abdom Radiol (NY). 2019;44:1960–1984. doi: 10.1007/s00261-019-02028-w.

- 9. Allen B, Dreyer K, McGinty G B. Integrating Artificial Intelligence Into Radiologic Practice: A Look to the Future. J Am Coll Radiol. 2020;17:280–283. doi: 10.1016/j.jacr.2019.10.010.

- 10. Purohit K. Growing Interest in Radiology Despite AI Fears. Acad Radiol. 2019;26:e75. doi: 10.1016/j.acra.2018.11.024.

- 11. Richardson M L, Garwood E R, Lee Y. Noninterpretive Uses of Artificial Intelligence in Radiology. Acad Radiol. 2021;28:1225–1235. doi: 10.1016/j.acra.2020.01.012.

- 12. Bennani-Baiti B, Baltzer P AT. Künstliche Intelligenz in der Mammadiagnostik. Radiologe. 2020;60:56–63. doi: 10.1007/s00117-019-00615-y.

- 13. Chan H-P, Samala R K, Hadjiiski L M. CAD and AI for breast cancer–recent development and challenges. Br J Radiol. 2019;93:20190580. doi: 10.1259/bjr.20190580.

- 14. Fujita H. AI-based computer-aided diagnosis (AI-CAD): the latest review to read first. Radiol Phys Technol. 2020;13:6–19. doi: 10.1007/s12194-019-00552-4.

- 15. McKinney S M, Sieniek M, Godbole V. International evaluation of an AI system for breast cancer screening. Nature. 2020;577:89–94. doi: 10.1038/s41586-019-1799-6.

- 16. Rodriguez-Ruiz A, Lång K, Gubern-Merida A. Can we reduce the workload of mammographic screening by automatic identification of normal exams with artificial intelligence? A feasibility study. Eur Radiol. 2019;29:4825–4832. doi: 10.1007/s00330-019-06186-9.

- 17. OʼConnell A M, Bartolotta T V, Orlando A. Diagnostic Performance of An Artificial Intelligence System in Breast Ultrasound. J Ultrasound Med. 2021. doi: 10.1002/jum.15684.

- 18. Cho B J, Choi Y J, Lee M J. Classification of cervical neoplasms on colposcopic photography using deep learning. Sci Rep. 2020;10:13652. doi: 10.1038/s41598-020-70490-4.

- 19. Shanthi P B, Faruqi F, Hareesha K S. Deep Convolution Neural Network for Malignancy Detection and Classification in Microscopic Uterine Cervix Cell Images. Asian Pac J Cancer Prev. 2019;20:3447–3456. doi: 10.31557/APJCP.2019.20.11.3447.

- 20. Försch S, Klauschen F, Hufnagl P. Künstliche Intelligenz in der Pathologie. Dtsch Arztebl. 2021;118:199–204.

- 21. Chang P J. Moving Artificial Intelligence from Feasible to Real: Time to Drill for Gas and Build Roads. Radiology. 2020;294:432–433. doi: 10.1148/radiol.2019192527.

- 22. Tran D, Cooke S, Illingworth P J. Deep learning as a predictive tool for fetal heart pregnancy following time-lapse incubation and blastocyst transfer. Hum Reprod. 2019;34:1011–1018. doi: 10.1093/humrep/dez064.

- 23. Zaninovic N, Rosenwaks Z. Artificial intelligence in human in vitro fertilization and embryology. Fertil Steril. 2020;114:914–920. doi: 10.1016/j.fertnstert.2020.09.157.

- 24. Bori L, Paya E, Alegre L. Novel and conventional embryo parameters as input data for artificial neural networks: an artificial intelligence model applied for prediction of the implantation potential. Fertil Steril. 2020;114:1232–1241. doi: 10.1016/j.fertnstert.2020.08.023.

- 25. DEGUM press releases. DEGUM; 2017. Updated 29 November 2017. Accessed September 30, 2021 at: http://www.degum.de/aktuelles/presse-medien/pressemitteilungen/im-detail/news/zu-viele-kindliche-fehlbildungen-bleiben-unentdeckt.html

- 26. Murugesu S, Galazis N, Jones B P. Evaluating the use of telemedicine in gynaecological practice: a systematic review. BMJ Open. 2020;10:e039457. doi: 10.1136/bmjopen-2020-039457.

- 27. Benacerraf B R, Minton K K, Benson C B. Proceedings: Beyond Ultrasound First Forum on Improving the Quality of Ultrasound Imaging in Obstetrics and Gynecology. J Ultrasound Med. 2018;37:7–18. doi: 10.1002/jum.14504.

- 28. Timmerman D, Verrelst H, Bourne T H. Artificial neural network models for the preoperative discrimination between malignant and benign adnexal masses. Ultrasound Obstet Gynecol. 1999;13:17–25. doi: 10.1046/j.1469-0705.1999.13010017.x.

- 29. Froyman W, Timmerman D. Methods of Assessing Ovarian Masses: International Ovarian Tumor Analysis Approach. Obstet Gynecol Clin North Am. 2019;46:625–641. doi: 10.1016/j.ogc.2019.07.003.

- 30. Van Calster B, Van Hoorde K, Valentin L. Evaluating the risk of ovarian cancer before surgery using the ADNEX model to differentiate between benign, borderline, early and advanced stage invasive, and secondary metastatic tumours: prospective multicentre diagnostic study. BMJ. 2014;349:g5920. doi: 10.1136/bmj.g5920.

- 31. Vázquez-Manjarrez S E, Rico-Rodriguez O C, Guzman-Martinez N. Imaging and diagnostic approach of the adnexal mass: what the oncologist should know. Chin Clin Oncol. 2020;9:69. doi: 10.21037/cco-20-37.

- 32. Andreotti R F, Timmerman D, Strachowski L M. O-RADS US Risk Stratification and Management System: A Consensus Guideline from the ACR Ovarian-Adnexal Reporting and Data System Committee. Radiology. 2020;294:168–185. doi: 10.1148/radiol.2019191150.

- 33. Christiansen F, Epstein E L, Smedberg E. Ultrasound image analysis using deep neural networks for discriminating between benign and malignant ovarian tumors: comparison with expert subjective assessment. Ultrasound Obstet Gynecol. 2021;57:155–163. doi: 10.1002/uog.23530.

- 34. Acharya U R, Mookiah M R, Vinitha Sree S. Evolutionary algorithm-based classifier parameter tuning for automatic ovarian cancer tissue characterization and classification. Ultraschall Med. 2014;35:237–245. doi: 10.1055/s-0032-1330336.

- 35. Akazawa M, Hashimoto K. Artificial Intelligence in Ovarian Cancer Diagnosis. Anticancer Res. 2020;40:4795–4800. doi: 10.21873/anticanres.14482.

- 36. Aramendia-Vidaurreta V, Cabeza R, Villanueva A. Ultrasound Image Discrimination between Benign and Malignant Adnexal Masses Based on a Neural Network Approach. Ultrasound Med Biol. 2016;42:742–752. doi: 10.1016/j.ultrasmedbio.2015.11.014.

- 37. Khazendar S, Sayasneh A, Al-Assam H. Automated characterisation of ultrasound images of ovarian tumours: the diagnostic accuracy of a support vector machine and image processing with a local binary pattern operator. Facts Views Vis Obgyn. 2015;7:7–15.

- 38. Zhou J, Zeng Z Y, Li L. Progress of Artificial Intelligence in Gynecological Malignant Tumors. Cancer Manag Res. 2020;12:12823–12840. doi: 10.2147/CMAR.S279990.

- 39. Al-Karawi D, Al-Assam H, Du H. An Evaluation of the Effectiveness of Image-based Texture Features Extracted from Static B-mode Ultrasound Images in Distinguishing between Benign and Malignant Ovarian Masses. Ultrason Imaging. 2021;43:124–138. doi: 10.1177/0161734621998091.

- 40. Bakker M K, Bergman J EH, Krikov S. Prenatal diagnosis and prevalence of critical congenital heart defects: an international retrospective cohort study. BMJ Open. 2019;9:e028139. doi: 10.1136/bmjopen-2018-028139.

- 41. van Nisselrooij A EL, Teunissen A KK, Clur S A. Why are congenital heart defects being missed? Ultrasound Obstet Gynecol. 2020;55:747–757. doi: 10.1002/uog.20358.

- 42. Knackstedt C, Bekkers S C, Schummers G. Fully Automated Versus Standard Tracking of Left Ventricular Ejection Fraction and Longitudinal Strain: The FAST-EFs Multicenter Study. J Am Coll Cardiol. 2015;66:1456–1466. doi: 10.1016/j.jacc.2015.07.052.

- 43. Tsang W, Salgo I S, Medvedofsky D. Transthoracic 3D Echocardiographic Left Heart Chamber Quantification Using an Automated Adaptive Analytics Algorithm. JACC Cardiovasc Imaging. 2016;9:769–782. doi: 10.1016/j.jcmg.2015.12.020.

- 44. Kusunose K. Steps to use artificial intelligence in echocardiography. J Echocardiogr. 2021;19:21–27. doi: 10.1007/s12574-020-00496-4.

- 45. Zhang J, Gajjala S, Agrawal P. Fully Automated Echocardiogram Interpretation in Clinical Practice. Circulation. 2018;138:1623–1635. doi: 10.1161/CIRCULATIONAHA.118.034338.

- 46. Harari Y N. Homo sapiens verliert die Kontrolle. Die Große Entkopplung. 16th ed. München: C. H. Beck; 2020.

- 47. Gandhi S, Mosleh W, Shen J. Automation, machine learning, and artificial intelligence in echocardiography: A brave new world. Echocardiography. 2018;35:1402–1418. doi: 10.1111/echo.14086.

- 48. Arnaout R, Curran L, Zhao Y. Expert-level prenatal detection of complex congenital heart disease from screening ultrasound using deep learning. medRxiv. 2020. doi: 10.1101/2020.06.22.20137786.

- 49. Le T K, Truong V, Nguyen-Vo T H. Application of machine learning in screening of congenital heart diseases using fetal echocardiography. J Am Coll Cardiol. 2020;75:648. doi: 10.1007/s10554-022-02566-3.

- 50. Dong J, Liu S, Liao Y. A Generic Quality Control Framework for Fetal Ultrasound Cardiac Four-Chamber Planes. IEEE J Biomed Health Inform. 2020;24:931–942. doi: 10.1109/JBHI.2019.2948316.

- 51. Hinton G E. To recognize shapes, first learn to generate images. Prog Brain Res. 2007;165:535–547. doi: 10.1016/S0079-6123(06)65034-6.

- 52. Voelker R. Cardiac Ultrasound Uses Artificial Intelligence to Produce Images. JAMA. 2020;323:1034. doi: 10.1001/jama.2020.2547.

- 53. Yeo L, Romero R. Optical ultrasound simulation-based training in obstetric sonography. J Matern Fetal Neonatal Med. 2020. doi: 10.1080/14767058.2020.1786519.

- 54. Steinhard J, Dammeme Debbih A, Laser K T. Randomised controlled study on the use of systematic simulator-based training (OPUS Fetal Heart Trainer) for learning the standard heart planes in fetal echocardiography. Ultrasound Obstet Gynecol. 2019;54(S1):28–29.

- 55. Day T G, Kainz B, Hajnal J. Artificial intelligence, fetal echocardiography, and congenital heart disease. Prenat Diagn. 2021;41:733–742. doi: 10.1002/pd.5892.

- 56. Garcia-Canadilla P, Sanchez-Martinez S, Crispi F. Machine Learning in Fetal Cardiology: What to Expect. Fetal Diagn Ther. 2020;47:363–372. doi: 10.1159/000505021.

- 57. Meiburger K M, Acharya U R, Molinari F. Automated localization and segmentation techniques for B-mode ultrasound images: A review. Comput Biol Med. 2018;92:210–235. doi: 10.1016/j.compbiomed.2017.11.018.

- 58. Rawat V, Jain A, Shrimali V. Automated Techniques for the Interpretation of Fetal Abnormalities: A Review. Appl Bionics Biomech. 2018;2018:6452050. doi: 10.1155/2018/6452050.

- 59. Yeo L, Luewan S, Romero R. Fetal Intelligent Navigation Echocardiography (FINE) Detects 98% of Congenital Heart Disease. J Ultrasound Med. 2018;37:2577–2593. doi: 10.1002/jum.14616.

- 60. Gembicki M, Hartge D R, Dracopoulos C. Semiautomatic Fetal Intelligent Navigation Echocardiography Has the Potential to Aid Cardiac Evaluations Even in Less Experienced Hands. J Ultrasound Med. 2020;39:301–309. doi: 10.1002/jum.15105.

- 61. Weichert J, Weichert A. A “holistic” sonographic view on congenital heart disease: How automatic reconstruction using fetal intelligent navigation echocardiography eases unveiling of abnormal cardiac anatomy part II-Left heart anomalies. Echocardiography. 2021;38:777–789. doi: 10.1111/echo.15037.

- 62. DeVore G R, Klas B, Satou G. Longitudinal Annular Systolic Displacement Compared to Global Strain in Normal Fetal Hearts and Those With Cardiac Abnormalities. J Ultrasound Med. 2018;37:1159–1171. doi: 10.1002/jum.14454.

- 63. DeVore G R, Klas B, Satou G. 24-segment sphericity index: a new technique to evaluate fetal cardiac diastolic shape. Ultrasound Obstet Gynecol. 2018;51:650–658. doi: 10.1002/uog.17505.

- 64. DeVore G R, Polanco B, Satou G. Two-Dimensional Speckle Tracking of the Fetal Heart: A Practical Step-by-Step Approach for the Fetal Sonologist. J Ultrasound Med. 2016;35:1765–1781. doi: 10.7863/ultra.15.08060.

- 65. Lee M, Won H. Novel technique for measurement of fetal right myocardial performance index using synchronised images of right ventricular inflow and outflow. Ultrasound Obstet Gynecol. 2019;54(S1):178–179.

- 66. Leung V, Avnet H, Henry A. Automation of the Fetal Right Myocardial Performance Index to Optimise Repeatability. Fetal Diagn Ther. 2018;44:28–35. doi: 10.1159/000478928.

- 67.Rizzo G, Aiello E, Pietrolucci M E. The feasibility of using 5D CNS software in obtaining standard fetal head measurements from volumes acquired by three-dimensional ultrasonography: comparison with two-dimensional ultrasound. J Matern Fetal Neonatal Med. 2016;29:2217–2222. doi: 10.3109/14767058.2015.1081891. [DOI] [PubMed] [Google Scholar]

- 68.Welp A, Gembicki M, Rody A. Validation of a semiautomated volumetric approach for fetal neurosonography using 5DCNS+ in clinical data from > 1100 consecutive pregnancies. Childs Nerv Syst. 2020;36:2989–2995. doi: 10.1007/s00381-020-04607-5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 69.Pluym I D, Afshar Y, Holliman K. Accuracy of three-dimensional automated ultrasound imaging of biometric measurements of the fetal brain. Ultrasound Obstet Gynecol. 2021;57:798–803. doi: 10.1002/uog.22171. [DOI] [PubMed] [Google Scholar]

- 70.Ambroise Grandjean G, Hossu G, Bertholdt C. Artificial intelligence assistance for fetal head biometry: Assessment of automated measurement software. Diagn Interv Imaging. 2018;99:709–716. doi: 10.1016/j.diii.2018.08.001. [DOI] [PubMed] [Google Scholar]

- 71.Huang R, Xie W, Alison Noble J. VP-Nets: Efficient automatic localization of key brain structures in 3D fetal neurosonography. Med Image Anal. 2018;47:127–139. doi: 10.1016/j.media.2018.04.004. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 72.Xie H N, Wang N, He M. Using deep-learning algorithms to classify fetal brain ultrasound images as normal or abnormal. Ultrasound Obstet Gynecol. 2020;56:579–587. doi: 10.1002/uog.21967. [DOI] [PubMed] [Google Scholar]

- 73.Cerrolaza J J, Li Y, Biffi C. Fetal Skull Reconstruction via Deep Convolutional Autoencoders. Annu Int Conf IEEE Eng Med Biol Soc. 2018;2018:887–890. doi: 10.1109/EMBC.2018.8512282. [DOI] [PubMed] [Google Scholar]

- 74.Ghesu F C, Georgescu B, Grbic S. Towards intelligent robust detection of anatomical structures in incomplete volumetric data. Med Image Anal. 2018;48:203–213. doi: 10.1016/j.media.2018.06.007. [DOI] [PubMed] [Google Scholar]

- 75.Cai Y, Droste R, Sharma H. Spatio-temporal visual attention modelling of standard biometry plane-finding navigation. Medical Image Analysis. 2020;65:101762. doi: 10.1016/j.media.2020.101762. [DOI] [PubMed] [Google Scholar]

- 76.Baumgartner C F, Kamnitsas K, Matthew J. SonoNet: Real-Time Detection and Localisation of Fetal Standard Scan Planes in Freehand Ultrasound. IEEE Trans Med Imaging. 2017;36:2204–2215. doi: 10.1109/TMI.2017.2712367. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 77.Yaqub M, Kelly B, Noble J A.An AI system to support sonologists during fetal ultrasound anomaly screening Ultrasound Obstet Gynecol 201852 (S1)9–10.29974595 [Google Scholar]

- 78.Yaqub M, Sleep N, Syme S. ScanNav ® audit: an AI-powered screening assistant for fetal anatomical ultrasound Am J Obstet Gynecol 2021224(Suppl.)S312. 10.1016/j.ajog.2020.12.512 [DOI] [Google Scholar]

- 79.Sharma H, Drukker L, Chatelain P. Knowledge representation and learning of operator clinical workflow from full-length routine fetal ultrasound scan videos. Med Image Anal. 2021;69:101973. doi: 10.1016/j.media.2021.101973.

- 80.Droste R, Drukker L, Papageorghiou A T. Automatic Probe Movement Guidance for Freehand Obstetric Ultrasound. Med Image Comput Comput Assist Interv. 2020;12263:583–592. doi: 10.1007/978-3-030-59716-0_56.

- 81.Alsharid M, Sharma H, Drukker L. Cham: Springer; 2019. Captioning Ultrasound Images Automatically. (Lecture Notes in Computer Science, vol 11767).

- 82.Lee W, Deter R L, Ebersole J D. Birth weight prediction by three-dimensional ultrasonography: fractional limb volume. J Ultrasound Med. 2001;20:1283–1292. doi: 10.7863/jum.2001.20.12.1283.

- 83.Corrêa V M, Araujo Júnior E, Braga A. Prediction of birth weight in twin pregnancies using fractional limb volumes by three-dimensional ultrasonography. J Matern Fetal Neonatal Med. 2020;33:3652–3657. doi: 10.1080/14767058.2019.1582632.

- 84.Gembicki M, Offerman D R, Weichert J. Semiautomatic Assessment of Fetal Fractional Limb Volume for Weight Prediction in Clinical Praxis: How Does It Perform in Routine Use? J Ultrasound Med. 2021. doi: 10.1002/jum.15712.

- 85.Mack L M, Kim S Y, Lee S. Automated Fractional Limb Volume Measurements Improve the Precision of Birth Weight Predictions in Late Third-Trimester Fetuses. J Ultrasound Med. 2017;36:1649–1655. doi: 10.7863/ultra.16.08087.

- 86.Youssef A, Salsi G, Montaguti E. Automated Measurement of the Angle of Progression in Labor: A Feasibility and Reliability Study. Fetal Diagn Ther. 2017;41:293–299. doi: 10.1159/000448947.

- 87.Brocklehurst P, Field D, Greene K. Computerised interpretation of fetal heart rate during labour (INFANT): a randomised controlled trial. Lancet. 2017;389:1719–1729. doi: 10.1016/S0140-6736(17)30568-8.

- 88.Keith R. The INFANT study-a flawed design foreseen. Lancet. 2017;389:1697–1698. doi: 10.1016/S0140-6736(17)30714-6.

- 89.Silver R M. Computerising the intrapartum continuous cardiotocography does not add to its predictive value: FOR: Computer analysis does not add to intrapartum continuous cardiotocography predictive value. BJOG. 2019;126:1363. doi: 10.1111/1471-0528.15575.

- 90.Gyllencreutz E, Lu K, Lindecrantz K. Validation of a computerized algorithm to quantify fetal heart rate deceleration area. Acta Obstet Gynecol Scand. 2018;97:1137–1147. doi: 10.1111/aogs.13370.

- 91.International Fetal and Newborn Growth Consortium for the 21st Century (INTERGROWTH-21st). Fung R, Villar J, Dashti A. Achieving accurate estimates of fetal gestational age and personalised predictions of fetal growth based on data from an international prospective cohort study: a population-based machine learning study. Lancet Digit Health. 2020;2:e368–e375. doi: 10.1016/S2589-7500(20)30131-X.

- 92.Lee K S, Ahn K H. Application of Artificial Intelligence in Early Diagnosis of Spontaneous Preterm Labor and Birth. Diagnostics (Basel). 2020;10:733. doi: 10.3390/diagnostics10090733.

- 93.Maassen O, Fritsch S, Palm J. Future Medical Artificial Intelligence Application Requirements and Expectations of Physicians in German University Hospitals: Web-Based Survey. J Med Internet Res. 2021;23:e26646. doi: 10.2196/26646.

- 94.Littmann M, Selig K, Cohen-Lavi L. Validity of machine learning in biology and medicine increased through collaborations across fields of expertise. Nature Machine Intelligence. 2020;2:18–24.

- 95.Norgeot B, Glicksberg B S, Butte A J. A call for deep-learning healthcare. Nat Med. 2019;25:14–15. doi: 10.1038/s41591-018-0320-3.

- 96.Borck C. Communicating the Modern Body: Fritz Kahn’s Popular Images of Human Physiology as an Industrialized World. Canadian Journal of Communication. 2007;32:495–520.

- 97.Jachertz N. Populärmedizin: Der Mensch ist eine Maschine, die vom Menschen bedient wird. [Popular medicine: Man is a machine operated by man.] Dtsch Arztebl. 2010;107:A-391–393.

- 98.Frey C B, Osborne M A. The future of employment: How susceptible are jobs to computerisation? Technological Forecasting and Social Change. 2017;114:254–280.

- 99.Gartner H, Stüber H. Nürnberg: Institut für Arbeitsmarkt- und Berufsforschung; 2019. Strukturwandel am Arbeitsmarkt seit den 70er Jahren: Arbeitsplatzverluste werden durch neue Arbeitsplätze immer wieder ausgeglichen. [Structural change in the labour market since the 1970s: job losses are repeatedly offset by new jobs.] 16.7.2019.

- 100.Bartoli A, Quarello E, Voznyuk I. Intelligence artificielle et imagerie en médecine fœtale: de quoi parle-t-on? [Artificial intelligence and fetal imaging: What are we talking about?] Gynecol Obstet Fertil Senol. 2019;47:765–768. doi: 10.1016/j.gofs.2019.09.012.

- 101.Allen B Jr, Seltzer S E, Langlotz C P. A Road Map for Translational Research on Artificial Intelligence in Medical Imaging: From the 2018 National Institutes of Health/RSNA/ACR/The Academy Workshop. J Am Coll Radiol. 2019;16(9 Pt A):1179–1189. doi: 10.1016/j.jacr.2019.04.014.

- 102.Langlotz C P, Allen B, Erickson B J. A Roadmap for Foundational Research on Artificial Intelligence in Medical Imaging: From the 2018 NIH/RSNA/ACR/The Academy Workshop. Radiology. 2019;291:781–791. doi: 10.1148/radiol.2019190613.

- 103.Tolsgaard M G, Svendsen M BS, Thybo J K. Does artificial intelligence for classifying ultrasound imaging generalize between different populations and contexts? Ultrasound Obstet Gynecol. 2021;57:342–343. doi: 10.1002/uog.23546.

- 104.Davenport T, Kalakota R. The potential for artificial intelligence in healthcare. Future Healthc J. 2019;6:94–98. doi: 10.7861/futurehosp.6-2-94.