Abstract

While pharmaceutical industry involvement in producing, interpreting, and regulating medical knowledge and practice is widely accepted and believed to promote medical innovation, industry-favouring biases may result in prioritizing corporate profit above public health. Using diabetes as our example, we review successive changes over forty years in screening, diagnosis, and treatment guidelines for type 2 diabetes and prediabetes, which have dramatically expanded the population prescribed diabetes drugs, generating a billion-dollar market. We argue that these guideline recommendations have emerged under pervasive industry influence and persisted, despite weak evidence for their health benefits and indications of serious adverse effects associated with many of the drugs they recommend. We consider pharmaceutical industry conflicts of interest in some of the research and publications supporting these revisions and in related standard setting committees and oversight panels and raise concern over the long-term impact of these multifaceted involvements. Rather than accept industry conflicts of interest as normal, needing only to be monitored and managed, we suggest challenging that normalcy, and ask: what are the real costs of tolerating such industry participation? We urge the development of a broader focus to fully understand and curtail the systemic nature of industry’s influence over medical knowledge and practice.

Keywords: History of medicine; Diabetes mellitus, Type 2; Prediabetic state; Drug industry; Preventative medicine

Healthcare is one of the largest sectors of the U.S. economy, yet the influence market principles may have on the concepts and content of healthcare is not well understood. Corporations routinely and openly participate in many medical arenas, ranging from collaborating in the design, implementation, and dissemination of clinical research, to involvement in the deliberations of standard setting and oversight panels. Corporate priorities have become increasingly intertwined with the day-to-day science and practice of medicine. While many see this as promoting medical innovation and spurring progress toward addressing important public health issues (Abraham 2010; Crosswell and Porter 2016), we are concerned that under pervasive industry influence over the long term, industry-favouring biases are being built into the very fabric of healthcare, resulting in revisions to medicine which prioritize corporate profit above public health.

The breadth and depth of industry involvement in medicine are extensive and difficult to contain, and there is a lack of consensus over whether and how market forces should be curtailed and managed (Purdy et al. 2017). Consider, for example, that a 2017 special issue of JAMA on conflicts of interest (COI) included an impressive array of arenas of conflict such as academic medical centers, biomedical research, continuing medical education, medical publishing, guideline development, healthcare management, philanthropy, and professional medical associations (Bauchner and Fontanarosa 2017). Clearly, this is a complicated story with no clear villains or victims. Rather it is the manifestation of what Matheson has called “a culture of influence and accommodation that naturalizes the presence of commerce within medicine” (Matheson 2016, 32), where the goals of industry and of medicine have become intricately entangled and the collaboration between business and medicine is normalized. The impact of industry involvement on medicine is particularly evident in a notable shift in the focus of primary care medicine. Since the 1950s, the agenda has gradually moved from managing disease to managing “risk” for disease, relying on pharmaceuticals to reduce the risk that asymptomatic, essentially healthy people may develop disease in the future. Medications are widely and aggressively prescribed to lower elevated cholesterol, blood pressure, and glucose levels, not because these numbers represent an immediate health concern but because they have been statistically associated with serious conditions such as heart disease and stroke (Moynihan, Heath, and Henry 2002; Kreiner and Hunt 2014). This shift, critics have argued, has aligned the medical agenda with the ambitions of the pharmaceutical industry, tapping the almost limitless growth potential of preventive pharmacology (Greene 2007).
In effect, bodily states are transformed into categories amenable to the long-term production of capital, generating immense profits and creating sustainable markets (Conrad and Leiter 2004; Dumit 2012; Sunder Rajan 2017). Greene has raised concern that given the “porous relationship between the science and business of health care” (2007, 5), the U.S. public health agenda has become aligned with the marketing practices of industry. He describes this as:

… a new form of pharmaceutical marketing that refused to accept the incidence of disease as a fixed market. … Chronic disease such as diabetes and hypertension were growth markets that could continue to expand as long as screening and diagnosis could be pushed further outward to cover more hidden patients among the apparently healthy.

(Greene 2007, 83 – 84)

These efforts appear to have been quite effective. The pharmaceutical management of risk is now commonplace; drugs for common chronic conditions such as hypertension, hyperlipidemia, and diabetes are among the most often prescribed, accounting for a good deal of the recent growth in the highly successful pharmaceutical industry (Brown 2015; Kirzinger, Wu, and Brodie 2016; QuintilesIMS Institute 2017).

In this paper, using diabetes as our example, we review a series of revisions to clinical practice guidelines over the past forty years that have dramatically expanded diagnosis and pharmaceutical treatment of diabetes. We consider some of the ways the industry has been involved in knowledge production, interpretation, and oversight related to these changes. We argue that these changes have emerged and persisted, despite weak evidence for their health benefits and serious adverse effects associated with many recommended drugs.

A Diabetes Epidemic?

Diabetes has been recognized for thousands of years as a serious, sometimes fatal condition marked by frequent urination and sugar in the urine. Until the mid-twentieth century, it was considered a relatively rare condition, affecting less than 1 per cent of the U.S. population (CDC 2017). Today, however, diabetes is thought to be rampant, described by the Centers for Disease Control (CDC) as one of the “epidemics of our time” (CDC 2019). Diabetes rates are increasing at an alarming pace, nearly tripling globally between 2000 and 2019 (IDF 2020). This is especially pronounced in the United States, where an estimated 34.2 million Americans have diabetes and another 88 million have prediabetes. By these estimates, more than one in three American adults is classified as being affected by the condition (CDC 2020a).

As this epidemic has unfolded, the market for diabetes drugs has likewise significantly expanded, with more than fifty drugs presently approved for treating diabetes in the United States (FDA 2018; Stafford 2018), many of which are among the highest-grossing of all drugs currently on the market (Express Scripts Lab 2016; PharmaCompass 2018).

How is it that a non-communicable disease could so rapidly come to affect such large numbers of people? A widely accepted explanation is that there is more diabetes because people are living longer and are more often overweight due to rapid changes in diet and an increasingly sedentary lifestyle. However, between 1997 and 2018 in the U.S., life expectancy increased by about two years (World Bank 2020) and obesity increased by about 61 per cent (Hales et al. 2018; Hales et al. 2020; NIDDK 2020), while during the same time period the prevalence of diabetes diagnoses in the United States increased by an astounding 176 per cent (CDC 2017; 2020a). Clearly, these changes in life expectancy and obesity cannot account for the dramatic rise in the prevalence of diabetes. A more proximate explanation may be found in the systematic expansion of diabetes diagnostic and management criteria over time. Greene (2007) argues that different diagnostic classes of diabetes were developed in the 1950s as a way to market the first anti-glycemic oral medication, sulfonylurea (Orinase). Patients with severe diabetes, who required insulin to survive and showed little response to Orinase, were classified as having Insulin-Dependent Diabetes Mellitus (IDDM). The much larger group with milder insulin problems showed a better response to Orinase. These patients were classified as having Non-Insulin-Dependent Diabetes Mellitus (NIDDM), and mass screening efforts were undertaken to identify people with “hidden” diabetes who might benefit from this drug.

In 1997, these treatment-based classifications were formally changed to the aetiology-based labels “type 1 diabetes” for patients who cannot produce insulin, and “type 2 diabetes” for those producing inadequate insulin or considered insulin resistant (ADA 1997). While the type 1 diabetes population, which is treated primarily with insulin, has remained relatively stable at less than 1 per cent of the U.S. population, it is the type 2 diabetes population that has grown dramatically to affect an estimated 20 per cent of U.S. adults (CDC 2020b).

Changes in Clinical Practice Guidelines

Since the 1980s, clinical practice guidelines published by various professional associations have proliferated, translating emerging research into clinical practice recommendations, and facilitating management and oversight by large-scale payers and government providers (Weisz et al. 2007). While diabetes guidelines are produced by numerous professional organizations in the United States and around the world, they are generally quite similar to one another (Rodriguez-Gutierrez et al. 2019). We will focus our analysis on those published annually by the American Diabetes Association (ADA) in Diabetes Care, which is the highest rated (H-index) journal in diabetes treatment and prevention (SJR 2021).

Clinical practice guidelines identify specific diagnostic criteria, treatment goals, and treatment recommendations, distilling complex evidence into simplified standards of care. The extent to which clinicians follow guidelines will vary with specific patient needs and individual clinician decision-making (Timmermans and Oh 2010). Such clinical discretion, however, is limited not only because guidelines are understood to reflect the most recent advances in clinical science but also because following such standards is reinforced by various rule-based institutional oversight and quality monitoring systems (Weisz et al. 2007; Nigam 2012; Hunt et al. 2017; Norton 2017; Hunt et al. 2019).

Since the 1980s, the ADA has regularly published and updated diagnosis and treatment guidelines, providing a rich opportunity to trace how diagnostic and treatment standards have evolved. To better understand the immense growth of the diabetes market, we will review revisions to screening criteria, diagnostic procedures, target numbers, and treatment recommendations in the annually updated ADA guidelines from 1979 through the present. The major changes we have identified are summarized in Table 1.

Table 1.

Major changes in American Diabetes Association diagnostic and treatment recommendations, 1979 to 2020

| Date & Source | Classifications | Who to Screen | Recommended Tests | Dx & Tx Thresholds | Recommended Tx |
|---|---|---|---|---|---|
| (National Diabetes Data Group 1979) | NEW TERMS: • IGT • IDDM & NIDDM DISCONTINUE TERM: • DROPS: “PreDM” | • DM Sx (polyuria, polydipsia, ketonuria, rapid weight loss) • 1st degree relative with DM • Had baby >9lbs • Obese • Racial/Ethnic groups with high DM prevalence | • FPG (test twice) • GTT | • Normal: FPG <115 • DM: - FPG >140 - GTT >200 • IGT: - FPG >115 | • IDDM require insulin • NIDDM may require insulin or oral meds • IGT: Weight loss recommended (no medications) |
| (ADA 1987, 1989) | No Major Changes | ADD • High-risk racial groups are: American Indian, Hispanic, or Black • >40 yo with “risk factors” • Previous IGT | No Major Changes | No Major Changes | • MNT recommended as primary treatment approach for NIDDM |
| (ADA 1994) | No Major Changes | No Major Changes | No Major Changes | No Major Changes | • IDDM: goal near normal (FPG <115) • NIDDM: diet and exercise; meds for some • Individual Tx goals set jointly with patient |
| (ADA 1997) | DISCONTINUE TERMS: • IDDM & NIDDM ADD TERMS: • Type 1 DM & Type 2 DM • IFG & IGT • PreDM is early stage of DM | ADD: • Everyone >45 yo. • Any age with “risk factors” • >120% ideal weight or BMI >27 • Screen to identify PreDM and asymptomatic DM2 | ADD: • RPG (2nd test, different day) • GTT not recommended: costly & impractical | NEW Dx VALUES: • Normal: FPG <110 • DM: - FPG >126 - GTT >200 • PreDM, IFG & IGT: - FPG >110 - RPG >200 +Sx - GTT >140 | • Goal is tight control, for DM1 and some DM2, using diet and exercise and combinations of rapid, short and long-term insulins |
| (ADA 2002, 2003) | CHANGE TERMS: • IFG & IGT now called PreDM | CHANGE: • BMI >25 | ADD: • A1c to monitor Tx • FPG preferred for Dx | NEW Tx VALUES: - A1c >8 for Tx - A1c <7 Tx goal - A1c <6 Normal | • Weight loss more effective than meds for PreDM |
| (ADA 2004) | No Major Changes | ADD: • BMI to screen differs by ethnic group | ADD: • A1c NOT for Dx use; only to monitor Tx. | CHANGE: • Normal: FPG <100 • PreDM: - FPG >100 | • Diet and exercise recommendations expanded |
| (ADA 2007) | No Major Changes | No Major Changes | No Major Changes | No Major Changes | • ADD: Multi-Drug Algorithm for DM2 • Diet and exercise changes described as hard to sustain • MNT recommendations shortened • Drugs are NOT recommended for PreDM, due to possible side effects, and lack of evidence for efficacy in DM2 prevention |
| (ADA 2008, 2009) | No Major Changes | No Major Changes | No Major Changes | No Major Changes | • CHANGE: New DM2 Drug Algorithm (more elaborate/complex) • Use meds for DM2 to reach goal levels ASAP • Metformin recommended for PreDM to prevent developing into DM2 |
| (ADA 2010) | No Major Changes | ADD: • Screen regularly if A1c >5.1 • To identify those at future risk for DM2 and those in asymptomatic phase of DM2 | CHANGE: • A1c for Dx • 2nd test recommended optional • Recommends A1c as lab test only • POC A1c test not acceptable for Dx | NEW Dx VALUES: • DM Dx: - A1c >6.5 • PreDM Dx: - A1c 5.7–6.4 - FPG >100 | • At Dx for DM2 begin drug therapy • Consider bariatric surgery to manage DM if BMI >35 |
| (ADA 2011) | No Major Changes | No Major Changes | No Major Changes | CHANGE Tx GOALS: • A1c <6.5 if no HG • A1c <8 if severe HG | No Major Changes |
| (ADA 2014) | No Major Changes | No Major Changes | ADD: • A1c levels vary with race/ethnicity | No Major Changes | • REVISE: Drug Algorithm with new meds • Adds obesity medications chart |
| (ADA 2017) | No Major Changes | No Major Changes | No Major Changes | CHANGE Tx GOALS: • A1c <6.5 • A1c <7 if HG Sx • A1c <8 if serious HG or short life expectancy | • CHANGE: Drug Algorithm revised to stress sequential med. addition. • Revise diet and exercise recommendations • ADD Table of medication costs |
| (ADA 2018a, 2018b) | • Community screening for PreDM acceptable with referral system | No Major Changes | • A1c for Dx requires lab and 2nd test | No Major Changes | • ADD Tables with drug benefits, costs and side effects costs • Stress diet and exercise for PreDM • Metformin for PreDM if BMI >35 or <60yo |
| (ADA 2019a, 2019b) | No Major Changes | No Major Changes | CHANGE • Dx with 2nd test using same blood sample | No Major Changes | • Adjust glycemic targets, as needed for individual |
| (ADA 2020a, 2020c) | • Includes ADA/CDC PreDM Risk Test | No Major Changes | No Major Changes | No Major Changes | • CHANGE: Drug Algorithm revised to simplify cost/benefit assessment • Expansion of discussion of manage HG with glycemic control medications |

A1c, glycated hemoglobin; DM, diabetes mellitus; DM1, type 1 diabetes mellitus; DM2, type 2 diabetes mellitus; Dx, diagnosis; FPG, fasting plasma glucose; GTT, glucose tolerance test; HG, hypoglycemia; IDDM, insulin dependent diabetes mellitus; IFG, impaired fasting glucose; IGT, impaired glucose tolerance; MNT, medical nutrition therapy; NIDDM, non-insulin dependent diabetes mellitus; POC, point of care; RPG, random plasma glucose; Sx, symptoms; Tx, treatment

Building an Ever-Expanding Market

Screening Recommendations: Developing the Prediabetes Market

In the 1950s, in an effort to identify the suspected millions of “hidden” diabetic cases (Greene 2007), screening tests were simplified to a finger-prick blood test rather than urine analysis. The ADA began promoting screening of asymptomatic people, targeting those with diabetic relatives, those who are obese, and those with conditions that may affect insulin function (National Diabetes Data Group 1979). Screening criteria have been gradually expanded over time, so that today screening is recommended for everyone over forty-five years old or anyone overweight with high blood pressure, elevated cholesterol, or of non-white racial/ethnic background (ADA 1989, 1997, 2002, 2004, 2018a, 2020a).

Another important change redefined the purpose of screening: to identify not just people with diabetes but also those with “prediabetes”, that is, those considered at risk of developing diabetes. Although these efforts also began in the 1950s, the idea remained controversial, and by the late 1970s, the academic community formally rejected the notion that prediabetes likely leads to full-blown diabetes, noting that few with this diagnosis actually progress to abnormal glucose tolerance (National Diabetes Data Group 1979). But in 1997, “prediabetes” reappeared in the ADA diagnosis guidelines, described as lying on a continuum with type 2 diabetes rather than as a distinct condition (ADA 1997). This represents an important conceptual shift: type 2 diabetes was thus authoritatively reframed as a progressive disease moving from mild to more serious forms if left unaddressed, advancing the idea that the vast market of non-diabetic patients with slightly elevated glucose should be targeted for treatment.

Over time, the category “prediabetes” was systematically expanded to include people previously labelled as having “impaired glucose tolerance,” “impaired fasting glucose,” and “borderline diabetes,” resulting in a poorly defined, heterogeneous category that includes a vast population (ADA 2003, 2010). While international standards are generally consistent with the ADA guidelines, there are some important differences in the prediabetes standards. The World Health Organization and the International Diabetes Federation have both discouraged diagnosing and treating prediabetes, noting that prediabetes most often does not progress to diabetes and that using medications to reduce diabetes risk offers little benefit, while carrying its own serious hazards (Yudkin and Montori 2014). In direct contrast to this, the current ADA guidelines call for treating prediabetes with the oral medication metformin (ADA 2020c).

Diagnosing Diabetes: Simpler Tests for More People

Diagnostic standards have also been progressively revised toward increasing the ease with which a diabetes diagnosis can be reached. For nearly twenty years (1979–1997) diabetes testing required a fasting plasma glucose (FPG) test, conducted after at least eight hours without food and confirmed by a second such test conducted on a different day. Alternatively, a patient could take an oral glucose tolerance test (OGTT), where their reaction to a glucose load would be observed over several hours (National Diabetes Data Group 1979). Over time, these testing procedures have been systematically simplified. In the 1997 guidelines, clinicians were discouraged from using the OGTT in the interest of saving time and money and reducing the burden on patients (ADA 1997). By 2019, a second blood test was no longer required; instead a second assay on the same blood sample was encouraged for asymptomatic patients, further facilitating more rapid diabetes diagnosis (ADA 2019a).

Perhaps the most important change in diabetes diagnosis and management has been the acceptance of haemoglobin A1c as the preferred diagnostic test. Rather than measuring glucose in the blood directly, the A1c measures glycated haemoglobin (the amount of sugar attached to haemoglobin in red blood cells), which is believed to reflect a three-month average level of glucose and is therefore considered more revealing than direct tests of glucose levels, which fluctuate throughout the day (Barr et al. 2002). Since 2002, the ADA has recommended using A1c for monitoring treatment progress in already diagnosed patients (ADA 2002). But due to concerns about laboratory standardization and accuracy, and conflicting evidence regarding its relationship to long-term complications, A1c was not recommended for diagnosis. In 2010, however, noting the association between A1c and retinopathy, the ADA reversed its position and began recommending A1c be used to diagnose diabetes and to screen for prediabetes (ADA 2010).

A1c is now the preferred test for measuring glucose control in treatment guidelines and is widely used in the United States for diabetes diagnosis and treatment management. This marks an important step in the expansion of the diabetes market. The A1c is a simple, easily accessible, stand-alone blood test that does not require fasting, greatly facilitating mass screening for “undiagnosed cases” (Sacks 2011). Reducing diabetes assessment to a single number also provides a simple treatment target which is highly responsive to pharmaceutical intervention and is readily incorporated into standardized quality oversight schemes. The A1c has become the primary indicator of glucose control in research on diabetes management, and as a result it has been converted from a measure of treatment response to being the target of treatment itself (Yudkin, Lipska, and Montori 2011). Maintaining intensive glucose control as defined by A1c numbers is now built into practice guidelines. Medications are routinely selected and often combined in pursuit of target A1c numbers.

Revising the Numeric Targets of Care

Beyond changes in the types of tests recommended, diagnosis thresholds have been progressively lowered, resulting in increased diagnoses. Citing research identifying glucose levels associated with the development of retinopathy (McCance et al. 1994; Engelgau et al. 1997), in 1997 the long-standing diagnostic level of FPG ≥140 mg/dl was lowered to FPG ≥126 mg/dl (National Diabetes Data Group 1979; ADA 1997; Alberti and Zimmet 1998). Notably, this change resulted in an immediate 14 per cent increase in the number of diabetes cases (Welch, Schwartz, and Woloshin 2011).

The definition of “normal” glucose levels has also been revised several times, resulting in growth of the “prediabetes” diagnostic category. In the 1979 guidelines, “normal” had been defined as FPG <115 mg/dl, but in 1997 it was lowered to FPG <110 mg/dl, then lowered again to <100 mg/dl in 2004, each time greatly expanding the population designated as having impaired glucose function or some form of “prediabetes” (National Diabetes Data Group 1979; ADA 1997, 2004).
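The effect of these successive revisions on a single patient can be illustrated with a short sketch. It encodes only the FPG cut-offs cited above and in Table 1; the function name, the label "intermediate" for the impaired/prediabetes range, and the assumption that the 2004 guidelines kept the 1997 diabetes threshold of 126 mg/dl are ours, introduced for illustration only.

```python
# FPG cut-offs in mg/dl as cited in the text:
# guideline year -> (upper bound of "normal", diabetes diagnostic threshold).
# Assumption: the 2004 revision left the 1997 diabetes threshold (126) unchanged.
FPG_CUTOFFS = {
    1979: (115, 140),
    1997: (110, 126),
    2004: (100, 126),
}


def classify_fpg(fpg_mg_dl, guideline_year):
    """Return the category an FPG reading falls into under a given guideline year.

    "intermediate" is a hypothetical umbrella label for the range the
    guidelines variously called IGT, IFG, or prediabetes.
    """
    normal_below, diabetes_at = FPG_CUTOFFS[guideline_year]
    if fpg_mg_dl < normal_below:
        return "normal"
    if fpg_mg_dl >= diabetes_at:
        return "diabetes"
    return "intermediate"


if __name__ == "__main__":
    # The same two readings, reclassified as the thresholds move.
    for reading in (105, 130):
        for year in sorted(FPG_CUTOFFS):
            print(reading, year, classify_fpg(reading, year))
```

Under these assumptions, an unchanged reading of 105 mg/dl is "normal" in 1979 and 1997 but "intermediate" from 2004, and a reading of 130 mg/dl moves from "intermediate" in 1979 to "diabetes" from 1997 onward, without any change in the patient.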

Like the tests before it, the A1c has also been subject to ADA threshold revisions, again citing the retinopathy research as the rationale, despite what some have called weak evidence (International Expert Committee 2009; Kilpatrick, Bloomgarden, and Zimmet 2009). In 2002, an A1c >8 per cent indicated need for treatment, and A1c <7 per cent was the treatment goal (ADA 2002). These levels have been gradually revised, such that today pharmaceutical treatment is started at A1c >7 per cent with a treatment goal of A1c <6.5 per cent in the absence of hypoglycemia or other limiting factors (ADA 2010, 2011, 2014, 2017b, 2019b, 2020c).

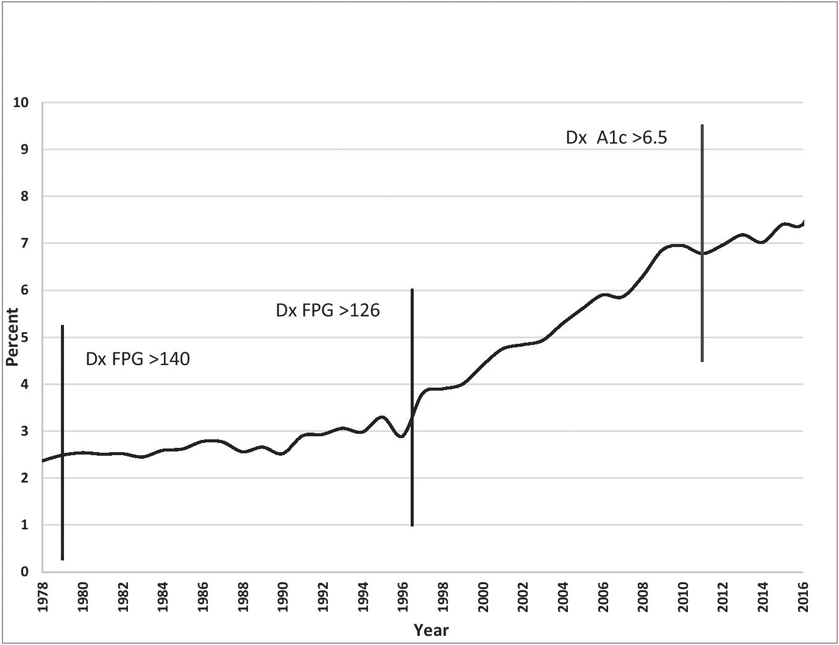

Together these changes in the clinical guideline standards of who to test, how to test them, and how to interpret those tests, coupled with lowering diagnostic and treatment goal numbers over time have coincided with a marked growth in the population of diabetes patients. While it is not possible to determine how much of that growth is attributable to changes in diagnostic procedures, as opposed to other factors such as increasing rates of obesity, the prevalence of diabetes diagnoses in the United States has climbed markedly in the years following these changes, as illustrated in Figure 1.

Fig 1.

Percentage of U.S. population with diagnosed diabetes 1979–2018 and major changes to diabetes diagnostic criteria

Revising Treatment Recommendations

Next, let us consider developments in treatment recommendations that occurred simultaneously with changes in diagnosis and screening standards, resulting in increasingly heavy reliance on a growing pharmacopeia to manage diabetes.

For decades, ADA treatment recommendations emphasized non-pharmaceutical intervention. Lifestyle changes—diet and exercise—were central to diabetes care, based on evidence that weight management could have a significant impact on blood glucose and other bioindicators. The ADA had annually published detailed recommendations for Medical Nutrition Therapy (MNT), designed to restore normal glucose and maintain reasonable weight through healthy eating and sufficient exercise (ADA 1987, 2007). Medications were primarily used only for type 1 diabetes and introduced for type 2 only in cases of advanced disease that did not show glycaemic improvement or when patients failed to follow lifestyle recommendations (ADA 1994b). However, over time, the ADA guidelines have increasingly emphasized pharmaceutical approaches above dietary changes, recommending medications at the time of diagnosis for type 2 diabetes and that medications also be used for prediabetes (ADA 2007, 2008, 2009). While lifestyle changes continue to be discussed in the standards of care, their relative importance is clear: “ … efforts should not delay needed pharmacotherapy, which can be initiated simultaneously and adjusted based on patient response to lifestyle efforts” (Garber et al. 2017, 208).

The major shift toward pharmaceuticalization of diabetes management began in earnest after a set of large-scale clinical trials were undertaken. The first of these was the Diabetes Control and Complications Trial (DCCT) (Diabetes Control and Complications Trial Research Group 1996). This large, multi-year national study, which was funded by the NIDDK (National Institute of Diabetes and Digestive and Kidney Diseases of the National Institutes of Health), found that the development of diabetes complications, such as renal and eye disease, could be greatly reduced in type 1 diabetes patients by maintaining “tight control” of glycaemic levels through aggressive use of insulin therapy.

While this presented a compelling case for close pharmaceutical management of type 1 patients, 95 per cent of diabetes diagnoses are type 2, offering a much larger potential market. A number of large studies (ADVANCE, ACCORD, and UKPDS) were subsequently undertaken, aiming to demonstrate that type 2 diabetes patients would also benefit from using medications to achieve tight glucose control (Diabetes Prevention Program Research Group 2003; NIH 2008; Heller and The ADVANCE Collaborative Group 2009; ACCORD Study Group 2011; OCDEM 2018). Reflecting a growing national trend toward industry collaboration in medical research (Ehrhardt, Appel, and Meinert 2015), all of these studies were sponsored at least in part by pharmaceutical companies involved in producing the drugs being studied.

These studies reported some short-term microvascular benefits with tight glucose control for type 2 patients but did not find long-term benefit in important outcomes (Holman et al. 2008; Heller and The ADVANCE Collaborative Group 2009; ACCORD Study Group 2011). On the other hand, research has consistently shown increased negative outcomes with intensive control of type 2 diabetes, such as cardiovascular mortality, retinopathy, severe hypoglycemia, and cognitive decline (Gerstein et al. 2008; Holman et al. 2008; Eldor and Raz 2009; Montori and Fernandez-Balsells 2009; Cooper-DeHoff et al. 2010; Zoungas et al. 2010; Boussageon et al. 2011; Finucane 2012; Gerstein et al. 2012; Lipska and Krumholz 2017; Rodriguez-Gutierrez et al. 2019). Nonetheless, maintaining tight control through aggressive use of medications has become a central tenet in current practice guidelines for type 2 diabetes.

Similarly, ADA guidelines now recommend medications for prediabetes, despite seemingly contradictory evidence. In 2003, the Diabetes Prevention Program (DPP), which was jointly funded by the NIH, the ADA, and three drug companies, published results comparing lifestyle changes to metformin for preventing the development of diabetes in overweight prediabetes patients (Diabetes Prevention Program Research Group 2003). The study found that weight loss achieved through lifestyle changes reduced diabetes incidence by 58 per cent, which was significantly more effective than the incidence reduction of 31 per cent achieved with metformin. Following this study, ADA practice guidelines for prediabetes at first recommended only lifestyle changes, explicitly stating insufficient evidence to support drug therapy for diabetes prevention (ADA 2003, 2004). Gradually, however, a sceptical tone appeared in the guideline discussions of lifestyle recommendations for prediabetes management—labelling them as difficult to sustain and not cost-efficient (ADA 2007). By 2008, metformin was recommended for diabetes prevention in prediabetes patients, especially for patients labelled “high risk” (ADA 2008).

Across time, guidelines have increasingly conveyed a sense that it is urgent to reach goal numbers as quickly as possible, as though having a test reading above the population norm is itself a dangerous pathology. By 2008, the ADA guidelines described the objective of care as: “to achieve and maintain glycemic levels as close to the nondiabetic range as possible and to change interventions at as rapid a pace as titration of medications allows” (ADA 2008, S20). Since 2007, the ADA guidelines have included treatment algorithms for type 2 diabetes, recommending very specific medications and indicating multiple drugs be combined to reach the target glycaemic level (ADA 2007). Over the years, regular revisions of the algorithm have quickly incorporated newly approved medications. Metformin is now recommended at the time of diagnosis, with additional drugs added every three months if the desired glycaemic level is not reached (ADA 2017b, 2020c). Physicians are thus advised to prescribe three anti-diabetic medications within six months of a type 2 diabetes diagnosis. The net result is that polypharmacy is now the norm in type 2 diabetes management, even for mildly elevated glucose (Hunt, Kreiner, and Brody 2012; Wexler 2020).

Not only has diabetes been redefined to be an illness of epidemic prevalence, but its clinical management has been fully transformed into a pharmacological undertaking, with “dual therapy” and “triple therapy” medications matter-of-factly recommended to force A1c to the target level as rapidly as possible; even “quadruple therapy” is now routinely recommended (ADA 2020c). This is despite a lack of clear evidence that most type 2 diabetes and prediabetes patients benefit from reaching goal numbers (Yudkin and Montori 2014; Rodriguez-Gutierrez et al. 2019).

Goal Numbers, Algorithms, and Blockbuster Drugs

In the wake of the diagnostic and practice changes we have reviewed, diabetes management has become very big business. The cost of managing the condition is estimated to approach $800 billion annually worldwide (IDF 2020). In the United States alone, the estimated medical cost of diabetes in 2017 was $237 billion, reflecting growth of more than 25 per cent since 2012, due to increases in both the prevalence of the diagnosis and the cost of treatment (Yang et al. 2018). Twenty-seven diabetes drugs were reported to have reached blockbuster¹ status that year, with many far exceeding that mark (PharmaCompass 2020). It is illuminating to consider how this burgeoning pharmaceutical market is chronologically related to specific guideline modifications.

The dramatic increase in the size of the diabetes market that resulted from the 1997 lowering of the diagnostic threshold to FPG ≥126 mg/dl coincided with the release of several very successful drugs, notably Eli Lilly’s combination insulin, insulin lispro (Humalog), which has sustained annual sales in the billions since its release in 1996. Also noteworthy is pioglitazone (Actos), which enjoyed billions in profits between its 1999 approval and being labelled with a black box² warning in 2007, due to its association with heart failure. The 2002 change recommending that A1c be used to assess glycaemic control propelled Aventis Pharmaceutical’s long-acting insulin, insulin glargine (Lantus), released in 2000, to become a top-selling diabetes drug, earning nearly $7 billion in 2015 alone (Brown 2015). There is some indication that the broad acceptance of lowering A1c as the goal of care resulted from an effective marketing effort to define treatment outcomes based on this drug’s ideal target. In trials, the strongest evidence for the value of insulin glargine was its effect on A1c. Aventis undertook a variety of tactics to promote the general acceptance of A1c as a new biomarker, to both promote the drug and grow the diabetes market (Shalo 2004; Dumit 2012). This effort was wildly successful, resulting in insulin glargine quickly generating over $1 billion in annual sales and retaining this blockbuster status for many years. Interestingly, the ORIGIN clinical trial, which Aventis sponsored with the intention of showing that A1c status makes an important difference in cardiovascular health, ended with non-significant findings (Gerstein et al. 2012).

By 2010, guideline changes for diagnostic testing, target numbers, and medication use had vastly expanded the market for anti-diabetic pharmaceuticals. This was accompanied by a heightened effort by the industry to expand product lines and a rapid increase in classes of diabetes pharmacopeia. While previous diabetes medications either replaced insulin or stimulated its production or uptake, many of these new drugs address novel mechanisms to quickly achieve goal A1c levels. They do this through a variety of pathways, such as blocking hormones associated with glucose metabolism, blocking glucose absorption, or causing the kidneys to expel glucose.

In this lively market, the object of care has been fully converted to lowering levels of a surrogate outcome, the A1c, as though a slightly elevated A1c is itself a pathology. (For further discussion of issues with relying on surrogate outcomes, see Moynihan 2011; Yudkin, Lipska, and Montori 2011; Qaseem et al. 2018.) The significance of managing diabetes in order to lower risk for developing neuropathy, heart and kidney diseases, and stroke seems all but forgotten. Consider, for example, that recent advertising for empagliflozin (Jardiance) touts its reduction in risk for heart disease as an added benefit, rather than as an outcome of controlling diabetes. At the same time, many of the new diabetes drugs, effective at lowering A1c levels, have been found to cause some of the very pathologies associated with uncontrolled diabetes, including retinopathy, congestive heart failure, kidney failure, and lower extremity amputations (FDA 2017; Feldman-Billard et al. 2018; Packer 2018).

Even so, treatment guidelines persist in recommending aggressive and combined use of these antidiabetic drugs, while the clinician is tasked with monitoring for and managing their adverse effects (ADA 2018b). Recent treatment guidelines for type 2 diabetes include recommendations for twelve different classes of medication, all of which are known to cause serious side effects. Clinicians are provided a table listing eight categories of potential health impacts, rating each of the twelve drugs for risk or benefit in each category (Garber et al. 2019; ADA 2020c). The message to clinicians is that managing the negative health consequences of diabetes medications is an unavoidable necessity in addressing the urgent matter of reaching goal A1c numbers. What seems to be lost here is a simple principle: the potential benefit of treatment may be great for people with advanced diabetes, but those with only mildly elevated glucose are unlikely to benefit; at the same time, the two groups are equally at risk for treatment harms (Brody and Light 2011; Welch, Schwartz, and Woloshin 2011).

Blurring the Boundaries between Commercial and Public Interests

Reviews have found that, like the ADA guidelines, changes to diagnosis and treatment recommendations for a variety of illnesses have evolved consistently toward expanding the population targeted for treatment and increasing the number of medications recommended (Dubois and Dean 2006; Moynihan et al. 2013). Consistent with this trend, the ADA guidelines have changed in a singular direction, toward identifying more people as needing to take more drugs. These changes have coincided with the release of several diabetes drugs that subsequently experienced tremendous market success. This leads to the question: Are there industry-favouring biases impacting the processes involved in setting these guidelines? Critics have noted that the pharmaceutical industry increasingly dominates the production of medical knowledge, and thereby exercises unprecedented control over its own regulatory environment (Brody 2007; Angell 2008; Light 2010; Abraham and Ballinger 2012). Because industry involvement is so pervasive, it is difficult to identify exactly where the boundary lies between academic and commercial means and ends. We turn now to consider pharmaceutical industry influence over the research and publications supporting the guideline revisions we have reviewed, as well as conflicts of interest in related standard-setting committees and oversight panels.

The Conflation of Science and Marketing

Evidence-based medicine (EBM), which began in the mid-1990s, uses standardized literature review methods to examine relevant research evidence in order to inform clinical decision-making. These methods are designed to produce an unbiased examination of the best scientific evidence from available medical literature (Timmermans and Berg 2003). Given that many clinicians lack the time and skill to critically assess this literature themselves, EBM has increasingly reached clinicians in the form of summary reports and as practice guidelines like those we have reviewed here (Timmermans and Oh 2010; Knaapen 2014). While EBM has been highly effective in informing standard clinical practice, serious concerns have been raised about potential industry-favouring biases that may exist in the research literature being reviewed.

Data from randomized controlled trials (RCTs) is ranked as the top tier of evidence in the grading systems used for EBM reviews. This is true for the methodologies used in systematic reviews such as the Cochrane Hierarchy of evidence, as well as less formal grading schemes, such as that used for the ADA guidelines.

RCTs use a powerful research design that minimizes bias and maximizes the reliability of findings, producing important information for assessing the efficacy and safety of new drugs. However, RCTs are also very expensive, often taking years to complete and requiring large sample sizes to achieve sufficient statistical power. As the budgets shrink for federal grant agencies such as the NIH, industry-government collaborations are an increasingly common way to fund RCTs, with the majority of studies now at least partially sponsored by the companies whose products are being tested (Angell 2008; Knaapen 2013; Ehrhardt, Appel, and Meinert 2015). Indeed, the major studies cited in the ADA guidelines to support expansion of pharmaceutical treatment for type 2 diabetes and pre-diabetes have all had significant financial or material support from diabetes drug manufacturers (see for example, Diabetes Prevention Program Research Group 2003; NIH 2008; Heller and The ADVANCE Collaborative Group 2009; Abdul-Ghani et al. 2015; OCDEM 2018; Matthews et al. 2019; Perkovic et al. 2019; Pratley et al. 2019).

Serious concerns have been raised about the potential for industry-favouring bias when research is funded by industry. Critics contend that industry-sponsored RCTs may be designed to prioritize market interests, acting as tools of marketing rather than as sources of unbiased scientific information (Lexchin et al. 2003; Klanica 2005; Brody 2007; Sismondo 2007; Matheson 2008; Dumit 2012; Healy, Mangin, and Applbaum 2014; Campbell and King 2017). Comments from pharmaceutical industry consultant Stan Bernard reinforce these concerns: “Clinical trials … have developed into powerful competitive tools designed to enhance the perception and utilization of studied brands” (Bernard 2015). He encourages incorporation of marketing objectives into every stage of research—from research design to dissemination—to highlight the product’s characteristics, differentiate it from other products, and convince payers of its cost-effectiveness.

Indeed, reviews have routinely reported a strong association between industry sponsorship of RCTs and pro-industry results (see, for example, Lexchin et al. 2003; Sismondo 2008; Lundh et al. 2017). Carefully managed publication plans may further amplify the impact of such biases by orchestrating voluminous dissemination of product-favouring findings, obscuring sponsors’ role in authorship, and downplaying or withholding unfavourable results (Healy and Cattell 2003; Lexchin et al. 2003; Brody 2007; Sismondo 2007; Fugh-Berman and Dodgson 2008; Elliott 2010; Matheson 2016, 2017).

Industry involvement in publication of RCT findings is commonplace across all of medicine, and editors of medical journals have been struggling since the early 2000s to assure transparency concerning the role of corporate sponsors in research and to manage their influence over medical literature. Despite carefully crafted reporting requirements, there is evidence that COIs may be routinely concealed, and if this is the case, the influence of the industry over RCT dissemination may be even more pronounced than previously thought (Bauchner, Fontanarosa, and Flanagin 2018).

While we are not privy to the details of publication management for specific studies, industry involvement in diabetes research is clear. Across the diabetes literature, as with most conditions, research reports uniformly include authors who are industry employees, paid consultants, or whose research is funded by pharmaceutical companies. The extent of industry involvement in diabetes publications is impressive. Reviewing 3,782 articles reporting RCTs for glucose lowering drugs between 1993 and 2013, Holleman et al. (2015) found that more than 90 per cent of the RCTs were commercially sponsored, and 48 per cent of the most prolific authors were employees of the pharmaceutical industry. Only 6 per cent of the authors for whom COI information was available were considered fully independent. Like RCTs, systematic reviews are also ranked in the top tier of evidence for EBM and in guideline development grading schemes, including the ADA’s. Unfortunately, systematic reviews have also been found to be affected by industry COIs. A recent study found systematic reviews with COI had lower methodological quality and reported more favourable results than those without conflicts (Hansen et al. 2019). This problem of biased reviews appears to be a concern in diabetes research: a key systematic literature review examining the long-term effects of tight control in type 2 diabetes management has been cited more than four hundred times (according to Google Scholar metrics), despite having been withdrawn from the prestigious Cochrane Library because the authors were found to be employees of the pharmaceutical industry (Hemmingsen et al. 2013; Hemmingsen et al. 2015).

Beyond such concerns about the independence of medical research reporting and interpretation, questions have also been raised about whether diabetes management guidelines actually follow appropriate principles of guideline development. A literature review of the evidence underlying diabetes guidelines found that they are not based on systematic reviews and have not been subjected to peer review (Vigersky 2012). Furthermore, the recommendation for tight glucose control for type 2 patients has been found to be unsupported by the literature, and the numeric goals the guidelines recommend are lower than those used in the studies cited (Vigersky 2012; Rodriguez-Gutierrez and McCoy 2019). Similarly, evidence does not support the idea that treating prediabetes will prevent development of type 2 diabetes (Yudkin and Montori 2014), and recent studies challenge the idea that untreated prediabetes will likely develop into diabetes (Rooney et al. 2021).

Conflicts of Interest at the ADA, on Guideline Panels, and at the FDA

While RCTs and systematic reviews may be considered the highest level of evidence in formal reviews, in practice, guideline recommendations are quite commonly based on low levels of evidence or expert opinion, which rely heavily on the priorities and opinions of those producing the guidelines (Dubois and Dean 2006; Knaapen 2013; Shnier et al. 2016). Setting standards is inherently a political process, reflecting negotiations over what constitutes knowledge and claims to authority, and ultimately reflecting stakeholders’ interests (Timmermans and Berg 2003; Fan and Uretsky 2017). It is therefore of particular concern when pharmaceutical companies are involved in the organizations and committees producing guidelines and overseeing the marketing of their medications. Industry influence has been shown to work in subtle ways in these contexts, through social networks and workgroup dynamics, introducing bias into the findings of these groups (Abraham and Davis 2009; Knaapen 2013; Healy, Mangin, and Applbaum 2014; Knaapen 2014; Vedel and Irwin 2017). Medical professional societies which generate and disseminate practice standards and guidelines quite commonly receive substantial financial support from pharmaceutical companies, and their governing committees are often led by individuals with deep ties to the industry (Matheson 2008). The ADA fits this profile. The organization has a long history of such industry involvement. The Eli Lilly Corporation, the patent holder for the earliest insulin used to treat diabetes, was involved in first establishing the ADA and funded its initial professional publication, Diabetes Abstracts (Striker 1947; 1956). Throughout the years, the leadership of the ADA has consistently included many individuals who are employed by, or are professional lobbyists for, pharmaceutical companies. Of note, all the manufacturers of the blockbuster diabetes drugs we have reviewed here are multi-million dollar donors to the ADA (ADA 2020b).

Quite commonly, involvement of the pharmaceutical industry with professional organizations extends to the membership of the advisory panels charged with establishing practice guidelines, bringing into question the independence of these panels. Studies of expert guideline panels for a variety of common conditions have found industry COIs are not only extremely common, but they are also frequently concealed (Dubois and Dean 2006; Neuman et al. 2011; Moynihan et al. 2013; Ioannidis 2014). This has been especially so for guideline panels focusing on type 2 diabetes. As many as 95 per cent of panel members have been found to have financial and other relationships with drug companies—often the same companies whose drugs are recommended in the guidelines (Vigersky 2012; Holmer et al. 2013; Norris et al. 2013). This is well illustrated by the recent Consensus Statement on type 2 diabetes management, where fifteen of nineteen authors report COIs due to pharmaceutical industry ties (Garber et al. 2020).

The influence of the pharmaceutical industry appears to extend to federal regulatory bodies as well, such as the Food and Drug Administration (FDA) in the United States or the Medicines and Healthcare Products Regulatory Agency (MHRA) in the United Kingdom, which are charged with overseeing safety, efficacy, and security of medical drugs. The presence of extensive COIs resulting in pro-industry bias at these agencies is well documented. Reviews of COIs on drug review panels have found members report drug industry COI on more than 70 per cent of panels and also found COIs are under-reported (Steinbrook 2005; Lurie et al. 2006; McCoy et al. 2018). Researchers have found that political pressure, career paths shifting back and forth between drug companies and regulators, workgroup dynamics, demands for speedy review, and pay-to-play institutional financing have all worked together to compromise the neutrality and objectivity of drug approval reviews (Abraham and Davis 2009; Hedgecoe 2014; Darrow, Avorn, and Kesselheim 2017; Hayes and Prasad 2018). Rather than provide reassurance that such COIs will be monitored and managed to ensure impartiality and protect the public interest over commercial interests, the FDA’s long-time director of the Center for Drug Evaluation and Research, Janet Woodcock, has explicitly embraced industry influence, stating that she strives to position the agency to act as a partner to the drug industry rather than an adversarial regulator (Kaplan 2016). The impact of this attitude is both clear and concerning. Studies have found COI enforcement to be lopsided: experts who have conducted research on the dangers of a drug are routinely removed from review panels, while those with financial COIs are routinely retained and are significantly more likely to vote in favour of their sponsors’ applications (Lurie et al. 2006; Pham-Kanter 2014; Lenzer 2016; McCoy et al. 2018).

With a few notable exceptions, it is difficult to document specific cases of product-favouring distortion in drug review and regulation. Still, many drugs have been approved based on industry-sponsored RCTs, then subsequently shown to cause serious health consequences—but not until after they have produced substantial profits (Saluja et al. 2016). The FDA appears to be reluctant to respond to mounting evidence of serious health concerns associated with some very successful diabetes medications. For example, despite clear evidence that the highly profitable thiazolidinedione drugs rosiglitazone (Avandia) and pioglitazone (Actos) cause congestive heart failure, they have been relabelled with stern warnings but have not been removed from the market (Davis and Abraham 2011). Rosiglitazone has an especially concerning history with the FDA, which approved the drug at a time when many countries were banning it. A high-level label warning regarding heart attack risk was later added then removed, apparently in an effort to save face for the agency (Burton 2013). Despite these dangers and controversies, the ADA’s 2020 pharmaceutical treatment algorithm continues to recommend thiazolidinediones (ADA 2020c).

Similarly, canagliflozin (Invokana), which lowers A1c by preventing the kidneys from reabsorbing glucose, was widely anticipated to become the next blockbuster diabetes drug. Prior to its approval in 2013, serious health concerns were already being raised, yet the FDA went forward with an expedited approval. As evidence mounted that canagliflozin can cause ketoacidosis, bone loss, kidney damage, and increased risk for lower limb amputation (Nissen 2013; Tuccori et al. 2016; FDA 2017), warnings were added to the label, including a black box warning about increased risk of leg and foot amputations, but the drug remained on the market. Then, following review of post-market data, not only was the drug’s approval extended to include use to reduce risk in advanced heart and kidney disease in type 2 diabetes patients, but the black box warning was removed (FDA 2020). A recent analysis compares the timing and strength of these warnings with those of medicine regulators in other nations and notes that these changes were instituted following extensive negotiations with the drug’s manufacturer (Bhasale, Mintzes, and Sarpatwari 2020). The ADA guideline’s pharmaceutical treatment algorithm continues to recommend canagliflozin, emphasizing its success in reducing cardiovascular events, while also noting its possible adverse effects (ADA 2020c).

Conclusion

Until the late 1990s, diabetes diagnosis required marked elevation of blood glucose on multiple tests. Treatment for type 2 diabetes consisted largely of lifestyle modifications, with medications only prescribed when glucose remained quite high over the long term. This has changed radically in recent years as screening, diagnostic, and management guidelines have been gradually revised. The population identified as requiring diabetes management has been systematically expanded, and treatment goals have been recast to require lowering even slightly elevated A1c levels as quickly as possible, using an ever-growing arsenal of medications. These changes have been closely associated with pharmaceutical development and approvals and occurred at a time of heavy industry involvement in diabetes research and publication, professional organizations, expert panels, and oversight committees.

More than real changes in public health, this massive growth in diabetes diagnosis and treatment appears rooted in what some have called a process of pharmaceuticalization, wherein essentially harmless variations in biological function have been transformed into opportunities for pharmaceutical intervention (Williams, Martin, and Gabe 2011). While our analysis has focused only on diabetes, it is important to consider that market-expanding changes such as these are appearing in standards of care across medicine. For example, clinical standards for diagnosing and managing hypertension and hyperlipidaemia have also been gradually and repeatedly broadened so that even slightly elevated test levels call for medication. By today’s standards, 36 per cent of U.S. adults should take high blood pressure medications (Muntner et al. 2018), and nearly half should be prescribed statins for high cholesterol (Salami et al. 2017), raising concerns that millions of people who are not at risk for cardiovascular events are being prescribed medications (U.S. Preventative Services Task Force 2016; Unruh et al. 2016; Carroll 2017; Crawford 2017; Bakris and Sorrentino 2018). It is noteworthy that extensive ties between the pharmaceutical industry and the guideline panels for both hypertension and hyperlipidaemia have also been documented (Ioannidis 2014).

Pharmaceutical industry COIs are as ubiquitous in medicine as they are multifaceted and complex and exist at many levels, spanning medical education, research, and publication, as well as standard and policy-setting bodies (Bauchner, Fontanarosa, and Flanagin 2018; Wiersma et al. 2020). Each of these elements may have a limited impact, but taken together over the long term they act in combination to redefine health and healthcare in industry-favouring ways, not as intentional acts of deceit but resulting from the more subtle bias constituted in the many opportunities to make choices that may prioritize the “beautification of the product” (Matheson 2008, 374).

As industry-science collaborations increase, medical standards are steadily being recast to coincide with market interests (Conrad 2007; Matheson 2008; Healy, Mangin, and Applbaum 2014). Our analysis has shown that when commercial interests are allowed to shape the production, interpretation, and application of scientific knowledge, as well as regulatory oversight processes, public health may be at risk. It is important to consider that these varied influences have an accumulating and synergistic impact. Rather than accept industry participation in medical research and oversight as a normal condition, needing only to be monitored and managed, we challenge that normalcy and ask a more basic question: under what circumstances might it be acceptable for industry to participate in the production, dissemination, interpretation, and oversight of medical research and practice? The net effect of industry involvements is clear: medical diagnosis and prescribing have continually expanded, particularly for chronic conditions such as high cholesterol, hypertension, and diabetes, to the point that today 69 per cent of U.S. adults aged 40–79 are taking at least one prescription drug, and 22 per cent are taking five or more drugs simultaneously (Hales et al. 2019). Medications for common chronic conditions such as hypertension, hyperlipidaemia, and diabetes are among the most often prescribed (Brown 2015; Kirzinger, Wu, and Brodie 2016; QuintilesIMS Institute 2017). At the same time, pharmaceutical manufacturers are accelerating drug development, with more than 300,000 clinical trials currently underway (ClinicalTrials.Gov 2020).

In light of these trends, it is increasingly urgent to understand and address the real costs of consigning so much influence over medical practice into the hands of the pharmaceutical industry. Management of COIs in research networks and in organizations such as the ADA and FDA should move beyond cataloguing and overseeing individual COIs (Jacmon 2018), to seriously examine the systemic nature of pharmaceutical industry COIs and develop measures to curtail their pervasive influence over medical knowledge and practice.

Footnotes

¹ “Blockbuster” status indicates a drug generating revenue of at least $1 billion USD annually.

² A “black box” warning is the FDA’s highest level of warning, added to a drug’s label when there is evidence of serious health hazards.

References

- Abdul-Ghani MA, Puckett C, Triplitt C, et al. 2015. Initial combination therapy with metformin, pioglitazone and exenatide is more effective than sequential add-on therapy in subjects with new-onset diabetes. Results from the efficacy and durability of initial combination therapy for type 2 diabetes (EDICT): A randomized trial. Diabetes Obesity & Metabolism 17(3): 268–275.

- Abraham J. 2010. On the prohibition of conflicts of interest in pharmaceutical regulation: Precautionary limits and permissive challenges. A commentary on Sismondo (66:9, 2008, 1909-14) and O’Donovan and Lexchin. Social Science & Medicine 70(5): 648–651.

- Abraham J, and Ballinger R. 2012. Science, politics, and health in the brave new world of pharmaceutical carcinogenic risk assessment: Technical progress or cycle of regulatory capture? Social Science & Medicine 75(8): 1433–1440.

- Abraham J, and Davis C. 2009. Drug evaluation and the permissive principle: Continuities and contradictions between standards and practices in antidepressant regulation. Social Studies of Science 39(4): 569–598.

- ACCORD Study Group. 2011. Long-term effects of intensive glucose lowering on cardiovascular outcomes. New England Journal of Medicine 364(9): 818–828.

- ADA (American Diabetes Association). 1987. Nutritional recommendations and principles for individuals with diabetes mellitus. Diabetes Care 23: S43–S46.

- ADA. 1989. Screening for diabetes. Diabetes Care 12(8): 588–590.

- ADA. 1994a. Standards of medical care for patients with diabetes mellitus. Diabetes Care 17(6): 616–623.

- ADA. 1994b. Standards of medical care for patients with diabetes mellitus. American Diabetes Association. Diabetes Care 17(6): 616–623.

- ADA. 1997. Report of the expert committee on the diagnosis and classification of diabetes mellitus. Diabetes Care 20(7): 1183–1197.

- ADA. 2002. Standards of medical care for patients with diabetes mellitus. Diabetes Care 25(1): 213–229.

- ADA. 2003. Standards of medical care for patients with diabetes mellitus. Diabetes Care 26(S1): S33–S50.

- ADA. 2004. Standards of medical care in diabetes. Diabetes Care 27(S1): S15–S35.

- ADA. 2007. Standards of medical care in diabetes—2007. Diabetes Care 30(S1): S4–S41.

- ADA. 2008. Standards of medical care in diabetes—2008. Diabetes Care 31(S1): S12–S54.

- ADA. 2009. Standards of medical care in diabetes—2009. Diabetes Care 32(S1): S13–S61.

- ADA. 2010. Standards of medical care in diabetes—2010. Diabetes Care 33: S11–S61.

- ADA. 2011. Standards of medical care in diabetes—2011. Diabetes Care 34(S1): S11–S61.

- ADA. 2014. Standards of medical care in diabetes—2014. Diabetes Care 37(S1): S14–S80.

- ADA. 2017a. Standards of medical care in diabetes—2017. The Journal of Clinical and Applied Research and Education 40(S1).

- ADA. 2017b. Standards of medical care in diabetes—2017: Summary of revisions. Diabetes Care 40(S1): S4–S5.

- ADA. 2018a. Classification and diagnosis of diabetes: Standards of medical care in diabetes—2018. Diabetes Care 41(S1): S13–S27.

- ADA. 2018b. Pharmacologic approaches to glycemic treatment: Standards of medical care in diabetes—2018. Diabetes Care 41(S1): S73–S85.

- ADA. 2019a. Classification and diagnosis of diabetes: Standards of medical care in diabetes—2019. Diabetes Care 42(S1): S13–S28.

- ADA. 2019b. Pharmacologic approaches to glycemic treatment: Standards of medical care in diabetes—2019. Diabetes Care 42(S1): S90–S102.

- ADA. 2020a. Classification and diagnosis of diabetes: Standards of medical care in diabetes—2020. Diabetes Care 43(S1): S14–S31.

- ADA. 2020b. Corporate sponsors. https://www.diabetes.org/pathway/supporters/corporate-sponsors. Accessed June 23, 2021.

- ADA. 2020c. Pharmacologic approaches to glycemic treatment: Standards of medical care in diabetes-2020. Diabetes Care 43(S1): S98–S110.

- Alberti KG, and Zimmet PZ. 1998. Definition, diagnosis and classification of diabetes mellitus and its complications. Part 1: Diagnosis and classification of diabetes mellitus provisional report of a who consultation. Diabetes Medicine 15(7):539–553. [DOI] [PubMed] [Google Scholar]

- Angell M 2008. Industry-sponsored clinical research: A broken system. JAMA 300(9): 1069–1071. [DOI] [PubMed] [Google Scholar]

- Bakris G, and Sorrentino M. 2018. Redefining hypertension—Assessing the new blood-pressure guidelines. New England Journal Medicine 378(6): 497–499. [DOI] [PubMed] [Google Scholar]

- Barr RG, Nathan DM, Meigs JB, and Singer DE. 2002. Tests of glycemia for the diagnosis of type 2 diabetes mellitus. Annals of Internal Medicine 137(4): 263–272.

- Bauchner H, and Fontanarosa P (eds.). 2017. Conflict of interest [special issue]. JAMA 317(17).

- Bauchner H, Fontanarosa PB, and Flanagin A. 2018. Conflicts of interests, authors, and journals: New challenges for a persistent problem. JAMA 320(22): 2315–2318.

- Bernard S. 2015. Competitive trial management: Winning with clinical studies. Pharmaceutical Executive 35(10).

- Bhasale A, Mintzes B, and Sarpatwari A. 2020. Communicating emerging risks of SGLT2 inhibitors-timeliness and transparency of medicine regulators. British Medical Journal 369: m1107.

- Boussageon R, Bejan-Angoulvant T, Saadatian-Elahi M, et al. 2011. Effect of intensive glucose lowering treatment on all cause mortality, cardiovascular death, and microvascular events in type 2 diabetes: Meta-analysis of randomised controlled trials. British Medical Journal 343: 1–12.

- Brody H. 2007. Hooked: Ethics, the medical profession, and the pharmaceutical industry. Vol. xii. Explorations in bioethics and the medical humanities. Lanham: Rowman & Littlefield.

- Brody H, and Light DW. 2011. The inverse benefit law: How drug marketing undermines patient safety and public health. American Journal of Public Health 101(3): 399–404.

- Brown T. 2015. The 10 most-prescribed and top-selling medications. WebMD, May 8. https://www.webmd.com/drug-medication/news/20150508/most-prescribed-top-selling-drugs. Accessed June 23, 2021.

- Burton TM. 2013. FDA removes marketing limits on diabetes drug Avandia. The Wall Street Journal, November 25. https://www.wsj.com/articles/fda-removes-marketing-limits-on-diabetes-drug-avandia-1385409636. Accessed June 23, 2021.

- Campbell J, and King NB. 2017. “Unsettling circularity”: Clinical trial enrichment and the evidentiary politics of chronic pain. Comparative European Politics 12(2): 191–216.

- Carroll A. 2017. Why new blood pressure guidelines could lead to harm. New York Times, December 18.

- CDC (Centers for Disease Control and Prevention). 2017. Long-term trends in diabetes. https://www.cdc.gov/diabetes/statistics/slides/long_term_trends.pdf. Accessed June 23, 2021.

- CDC (Centers for Disease Control and Prevention). 2020a. Diabetes data and statistics. https://www.cdc.gov/diabetes/data/index.html. Accessed October 20, 2020.

- CDC (Centers for Disease Control and Prevention). 2020b. National diabetes statistics report, 2020. https://www.cdc.gov/diabetes/pdfs/data/statistics/national-diabetes-statistics-report.pdf. Accessed June 23, 2021.

- CDC (Centers for Disease Control and Prevention). 2020c. Ending epidemics. https://www.cdc.gov/about/24-7/ending-epidemics.html. Accessed June 23, 2021.

- ClinicalTrials.Gov. 2020. Trends, charts, and maps. https://clinicaltrials.gov/ct2/resources/trends. Accessed April 12, 2020.

- Conrad P. 2007. The medicalization of society: On the transformation of human conditions into treatable disorders. Baltimore: Johns Hopkins University Press.

- Conrad P, and Leiter V. 2004. Medicalization, markets and consumers. Journal of Health and Social Behavior 45(S1): 158–176.

- Cooper-DeHoff RM, Gong Y, Handberg EM, et al. 2010. Tight blood pressure control and cardiovascular outcomes among hypertensive patients with diabetes and coronary artery disease. JAMA 304(1): 61–68.

- Crawford C. 2017. AAFP decides to not endorse AHA/ACC hypertension guideline. https://www.aafp.org/news/health-of-the-public/20171212notendorseaha-accgdlne.html. Accessed March 28, 2018.

- Crosswell L, and Porter L. 2016. Inoculating the electorate: A qualitative look at American corporatocracy and its influence on health communication. Critical Public Health 26(2): 207–220.

- Darrow JJ, Avorn J, and Kesselheim AS. 2017. Speed, safety, and industry funding—from PDUFA I to PDUFA VI. New England Journal of Medicine 377(23): 2278–2286.

- Davis C, and Abraham J. 2011. The socio-political roots of pharmaceutical uncertainty in the evaluation of “innovative” diabetes drugs in the European Union and the US. Social Science & Medicine 72(9): 1574–1581.

- Diabetes Control and Complications Trial Research Group. 1996. Lifetime benefits and costs of intensive therapy as practiced in the diabetes control and complications trial. JAMA 276(17): 1409–1415.

- Diabetes Prevention Program Research Group. 2003. Within-trial cost-effectiveness of lifestyle intervention or metformin for the primary prevention of type 2 diabetes. Diabetes Care 26(9): 2518–2523.

- Dubois RW, and Dean BB. 2006. Evolution of clinical practice guidelines: Evidence supporting expanded use of medicines. Disease Management 9(4): 210–223.

- Dumit J. 2012. Drugs for life: How pharmaceutical companies define our health. Experimental futures. Durham: Duke University Press.

- Ehrhardt S, Appel LJ, and Meinert CL. 2015. Trends in National Institutes of Health funding for clinical trials registered in ClinicalTrials.gov. JAMA 314(23): 2566–2567.

- Eldor R, and Raz I. 2009. The individualized target HbA1c: A new method for improving macrovascular risk and glycemia without hypoglycemia and weight gain. Review of Diabetic Studies 6(1): 6–12.

- Elliott C. 2010. White coat, black hat: Adventures on the dark side of medicine. Boston: Beacon Press.

- Engelgau MM, Thompson TJ, Herman WH, et al. 1997. Comparison of fasting and 2-hour glucose and HbA1c levels for diagnosing diabetes. Diagnostic criteria and performance revisited. Diabetes Care 20(5): 785–791.

- Express Scripts Lab. 2016. Express Scripts 2015 drug trend report. Accessed August 4, 2021.

- Fan E, and Uretsky E. 2017. In search of results: Anthropological interrogations of evidence-based global health. Critical Public Health 27(2): 157–162.

- FDA (Food and Drug Administration). 2017. FDA drug safety communication: FDA confirms increased risk of leg and foot amputations with the diabetes medicine canagliflozin (Invokana, Invokamet, Invokamet XR). https://www.fda.gov/Drugs/DrugSafety/ucm557507.htm. Accessed March 30, 2018.

- FDA (Food and Drug Administration). 2018. FDA approved diabetes medications.

- FDA (Food and Drug Administration). 2020. FDA removes boxed warning about risk of leg and foot amputations for the diabetes medicine canagliflozin (Invokana, Invokamet, Invokamet XR). https://www.fda.gov/drugs/drug-safety-and-availability/fda-removes-boxed-warning-about-risk-leg-and-foot-amputations-diabetes-medicine-canagliflozin. Accessed November 30, 2020.

- Feldman-Billard S, Larger E, and Massin P. 2018. Early worsening of diabetic retinopathy after rapid improvement of blood glucose control in patients with diabetes. Diabetes & Metabolism 44(1): 4–14.

- Finucane TE. 2012. “Tight control” in geriatrics: The emperor wears a thong. Journal of the American Geriatrics Society 60(8): 1571–1575.

- Fugh-Berman A, and Dodgson SJ. 2008. Ethical considerations of publication planning in the pharmaceutical industry. Open Medicine 2(4): e121–e124.

- Garber AJ, Abrahamson MJ, Barzilay JI, et al. 2017. Consensus statement by the American Association of Clinical Endocrinologists and American College of Endocrinology on the comprehensive type 2 diabetes management algorithm—2017 executive summary. Endocrine Practice 23(2): 207–238.

- Garber AJ, Abrahamson MJ, Barzilay JI, et al. 2019. Consensus statement by the American Association of Clinical Endocrinologists and American College of Endocrinology on the comprehensive type 2 diabetes management algorithm—2019 executive summary. Endocrine Practice 25(1): 69–100.

- Garber AJ, Handelsman Y, Grunberger G, et al. 2020. Consensus statement by the American Association of Clinical Endocrinologists and American College of Endocrinology on the comprehensive type 2 diabetes management algorithm—2020 executive summary. Endocrine Practice 26(1): 107–139.

- Gerstein HC, Bosch J, Dagenais GR, et al. 2012. Basal insulin and cardiovascular and other outcomes in dysglycemia. New England Journal of Medicine 367(4): 319–328.

- Gerstein HC, Miller ME, Byington RP, et al. 2008. Effects of intensive glucose lowering in type 2 diabetes. New England Journal of Medicine 358(24): 2545–2559.

- Greene JA. 2007. Prescribing by numbers: Drugs and the definition of disease. Vol. xv. Baltimore: Johns Hopkins University Press.

- Hales CM, Carroll MD, Fryar CD, and Ogden CL. 2020. Prevalence of obesity and severe obesity among adults: United States, 2017–2018. NCHS Data Brief (360): 1–8.

- Hales CM, Fryar CD, Carroll MD, Freedman DS, and Ogden CL. 2018. Trends in obesity and severe obesity prevalence in US youth and adults by sex and age, 2007–2008 to 2015–2016. JAMA 319(16): 1723–1725.

- Hales CM, Servais J, Martin CB, and Kohen D. 2019. Prescription drug use among adults aged 40–79 in the United States and Canada. NCHS Data Brief (347): 1–8.

- Hansen C, Lundh A, Rasmussen K, and Hrobjartsson A. 2019. Financial conflicts of interest in systematic reviews: Associations with results, conclusions, and methodological quality. Cochrane Database of Systematic Reviews 8: MR000047.

- Hayes MJ, and Prasad V. 2018. Financial conflicts of interest at FDA drug advisory committee meetings. Hastings Center Report 48(2): 10–13.

- Healy D, and Cattell D. 2003. Interface between authorship, industry and science in the domain of therapeutics. British Journal of Psychiatry 183: 22–27.

- Healy D, Mangin D, and Applbaum K. 2014. The shipwreck of the singular. Social Studies of Science 44(4): 518–523.

- Hedgecoe A. 2014. A deviation from standard design? Clinical trials, research ethics committees, and the regulatory co-construction of organizational deviance. Social Studies of Science 44(1): 59–81.

- Heller SR, and The ADVANCE Collaborative Group. 2009. A summary of the ADVANCE trial. Diabetes Care 32(S2): S357–S361.

- Hemmingsen B, Lund SS, Gluud C, et al. 2013. Targeting intensive glycaemic control versus targeting conventional glycaemic control for type 2 diabetes mellitus. Cochrane Database of Systematic Reviews (11): CD008143.

- Hemmingsen B, Lund SS, Gluud C, et al. 2015. Withdrawn: Targeting intensive glycaemic control versus targeting conventional glycaemic control for type 2 diabetes mellitus. Cochrane Database of Systematic Reviews (7): CD008143.

- Holleman F, Uijldert M, Donswijk LF, and Gale EA. 2015. Productivity of authors in the field of diabetes: Bibliographic analysis of trial publications. British Medical Journal 351: h2638.

- Holman RR, Paul SK, Bethel MA, Neil HA, and Matthews DR. 2008. Long-term follow-up after tight control of blood pressure in type 2 diabetes. New England Journal of Medicine 359(15): 1565–1576.

- Holmer HK, Ogden LA, Burda BU, and Norris SL. 2013. Quality of clinical practice guidelines for glycemic control in type 2 diabetes mellitus. PLoS One 8(4): e58625.

- Hunt LM, Bell HS, Baker AM, and Howard HA. 2017. Electronic health records and the disappearing patient. Medical Anthropology Quarterly 31(3): 403–421.

- Hunt LM, Bell HS, Martinez-Hume AC, Odumosu F, and Howard HA. 2019. Corporate logic in clinical care: The case of diabetes management. Medical Anthropology Quarterly 33(4): 463–482.

- Hunt LM, Kreiner M, and Brody H. 2012. The changing face of chronic illness management in primary care: A qualitative study of underlying influences and unintended outcomes. Annals of Family Medicine 10(5): 452–460.

- IDF (International Diabetes Federation). 2020. 2020 IDF diabetes atlas, 9th ed., edited by Karuranga S, Malanda B, Saeedi P, and Salpea P. International Diabetes Federation.

- International Expert Committee. 2009. International expert committee report on the role of the A1C assay in the diagnosis of diabetes. Diabetes Care 32(7): 1327–1334.

- Ioannidis JPA. 2014. More than a billion people taking statins? Potential implications of the new cardiovascular guidelines. JAMA 311(5): 463–464.

- Jacmon H. 2018. Disclosure is inadequate as a solution to managing conflicts of interest in human research. Journal of Bioethical Inquiry 15(1): 71–80.