Abstract

In broiler carcass production conveyor systems, computer vision-based inspection, monitoring, and grading of carcasses and cuts are challenging because the cuts are difficult to segment and ambient lighting varies. This study presents a depth image-based broiler carcass weight prediction system. An Active Shape Model (ASM) was developed to segment the carcass into 4 cuts (drumsticks, breasts, wings, and head and neck). Five regression models were developed on the image features for each weight estimation task (whole carcass and each cut). The Bayesian-ANN model outperformed all other regression models in whole-carcass and head-and-neck weight estimation, with R² values of 0.9981 and 0.9847, respectively. The RBF-SVR model outperformed all other models in drumstick, breast, and wing weight prediction, with R² values of 0.9129, 0.9352, and 0.9896, respectively. The proposed technique can be applied as a nondestructive, nonintrusive, and accurate on-line system for automating chicken carcass and cut weight estimation.

Key words: broiler carcasses, carcass weight, computer vision system, regression modeling, statistical modeling

INTRODUCTION

Over the years, the rising preference for white meat has increased the consumption of poultry meat and meat products, with broiler meat being the favorite (Okinda et al., 2019; Okinda et al., 2020a; Okinda et al., 2020b; Nyalala et al., 2021b). Pork and poultry are the most consumed meats globally, at 120.71 and 123.21 million metric tons, respectively (Statista, 2019), and poultry consumption was projected to exceed pork consumption by a large margin by 2021 (Statista, 2019). Additionally, there were 22.85 billion broilers worldwide in 2017, up from 14.38 billion in 2000 (Statista, 2019). Apart from food safety and quality, nonstandardized cut size and weight of chicken carcasses have become challenging factors in large-scale production line systems (Adamczak et al., 2018; Teimouri et al., 2018).

Carcass weight is essential in any slaughtering plant for production economics and for adjusting cutting equipment (Jørgensen et al., 2019). The carcass weight and size determine the appropriate cutting specifications of the cut-up station for a specific broiler carcass. If a carcass is larger than the cutting line settings, meat will either be left on the carcass or overlap into other cuts; if a carcass is smaller, bones and ribs might be cut together with the fillet (Adamczak et al., 2018). Accurate carcass weight and size measurements therefore minimize waste, improve cut quality, and optimize profits (Adamczak et al., 2018; Jørgensen et al., 2019). Furthermore, it is infeasible to manually adjust the cutting line settings for each carcass (Adamczak et al., 2018), which makes the automation of these cutting lines a fundamental factor in chicken carcass processing.

Even though current broiler processing systems are automated, on-line broiler carcass weighing is still challenging (Jørgensen et al., 2019). The conventional weighing technique is a conveyor weighing scale mounted on the processing line (Jørgensen et al., 2019). However, this technique has several shortcomings; for instance, each carcass must be transferred off the processing line onto the conveyor weighing scale and back again (Jørgensen et al., 2019). Additionally, conveyor weighing scales are often quite large, and during maintenance either the entire production line must be halted or the weighing scale must be bypassed entirely (Jørgensen et al., 2019).

Several studies have reported techniques for estimating broiler carcass weight. Oviedo-Rondon et al. (2007) and Silva et al. (2006) presented a nondestructive real-time ultrasonic device to measure the weight of broiler breast muscle. Scollan et al. (1998) introduced a nuclear magnetic resonance imaging (MRI) technique for determining the mass of the broiler pectoralis muscle. Tyasi et al. (2018), Yakubu and Idahor (2009), Hidayat and Iskandar (2018), and Raji et al. (2010) correlated body measurement traits and age with broiler carcass weight. Although these methods are nondestructive, they are invasive: they require contact with a live chicken before slaughter, and they are time-consuming. Nonintrusive and noninvasive techniques based on computer vision systems have also been introduced. Teimouri et al. (2018) developed an on-line system for sorting chicken portions (breast, leg, fillet, wing, and drumstick) based on 2D image analysis and machine learning techniques. Adamczak et al. (2018) exploited the ability of 3D scanning to estimate object volume by introducing a 3D chicken breast weight estimation system. Most recently, Jørgensen et al. (2019) presented a broiler carcass weight estimation system based on 3D prior knowledge.

Most of these studies are applied in off-line grading systems and may not be appropriate for real-time applications (Teimouri et al., 2018). Furthermore, 3D scanning requires substantial computing resources and multi-angle images for a photometric approach (Okinda et al., 2020b), which makes it challenging to apply in on-line production systems. This study presents a novel method for in-line estimation of broiler carcass and carcass part weights that can be deployed nonintrusively on the processing line using a 2D machine vision system.

The primary objective of this study was to develop a machine vision system for estimating the weight of the whole broiler carcass and of its parts (drumsticks, breasts, wings, and head and neck). The specific objectives were 1) to develop an accurate and fast depth image preprocessing algorithm, 2) to develop an efficient and robust active shape model (ASM) to detect the cutting points for segmenting the broiler parts, 3) to develop an efficient 2D image feature extraction algorithm, and 4) to develop weight prediction regression models based on the features extracted for each carcass part.

MATERIALS AND METHODS

Samples and Image Acquisition System

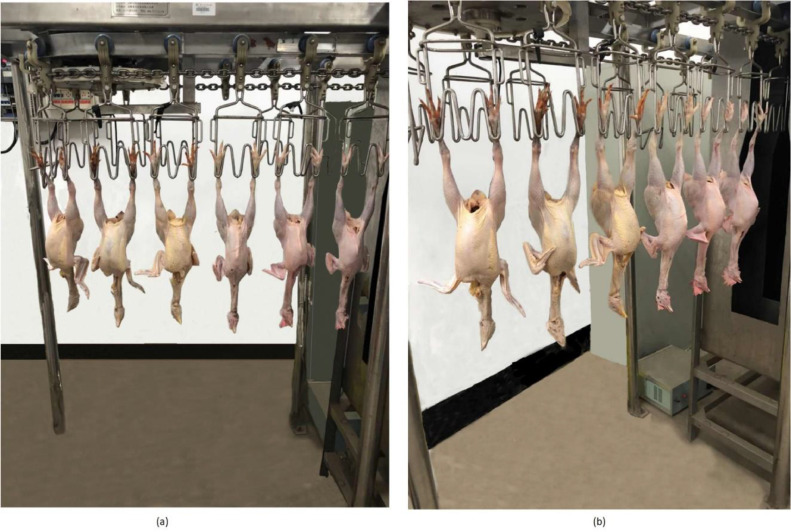

Chicken carcasses (n = 155) of different weights (ranging from 853.9 g to 1,750 g), purchased from a local chicken processing plant (Nanjing Sushi Meat Products [NSMP], Nanjing, Jiangsu, China) between January and November 2019, were used in this study. The carcasses were manually examined to ensure that they were fresh and free from visible injuries, defects, and frost. Each carcass was weighed on a calibrated electronic scale (YP10001 model, Shanghai 00000271 Instruments Company, Shanghai, China) with ±0.1 g precision and then labeled. The whole chicken carcasses were manually hung for image acquisition on an experimental on-line chicken carcass production line (processing speed of 5,000 pcs/h), as shown in Figure 1. Afterward, the carcasses were cut into portions (drumsticks, arms, head and neck, hands, and breast), and the weight of each carcass part was recorded for each sample.

Figure 1.

Chicken carcass samples hanging on the overhead processing line.

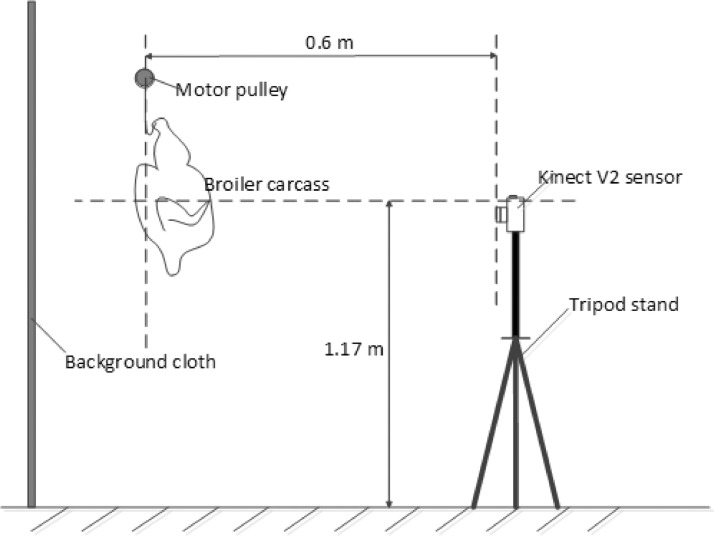

The Kinect V2 sensor (Microsoft Inc, Seattle, WA) was used to acquire images of individual carcasses hanging on the on-line processing line, as shown in Figure 2. The Kinect sensor comprises a depth/infrared (IR) channel and an RGB channel. The RGB channel (used for visualization, data inspection, and authentication) has a resolution of 1,920 × 1,080 pixels. The IR channel (used for developing and implementing the proposed system) uses the time-of-flight (ToF) technique to generate depth images at a resolution of 512 × 424 pixels. Depth images were used in this study because they allow faster, high-quality image segmentation and are invariant to ambient lighting conditions, so no additional lighting is required during image acquisition (Okinda et al., 2018b; Nyalala et al., 2019; Okinda et al., 2020b). Table 1 presents the parameters of the Kinect V2 depth sensor.

Figure 2.

Experimental setup and image acquisition system.

Table 1.

Kinect v2 parameters (Okinda et al., 2020).

| Kinect v2 camera features | Depth/infrared (IR) channel | RGB channel |
|---|---|---|
| Camera resolution | 512 × 424 × 16 bits per pixel | 1,920 × 1,080 × 16 bits per pixel |
| Field of view (h × v) | 70.5 × 60.0 degrees | 84.1 × 53.8 degrees |
| Frame rate | 30 frames per second | |
| Operative measuring range | 0.5–4.5 m | |
| Object pixel size (GSD) | Increases with range over the 0.5–4.5 m operative range | |
| Angular resolution | 0.14 degrees per pixel | - |
| Latency | ∼50 ms | |
| Dimensions (l × w × h) | 249 × 66 × 67 mm | |
| Shutter type | Global shutter | |

Where h × v denotes horizontal × vertical, and l × w × h denotes length × width × height.

The Kinect camera was mounted on top of a 1.17 m tall metal tripod stand, perpendicular to the ground and facing the on-line broiler carcass processing line at a horizontal distance of 0.6 m from the carcass hangers (so that the carcass was within the field of view). A Microsoft Windows 10 PC (Intel i7-4700HQ processor, 2.4 GHz, 16 GB memory; Intel, Santa Clara, CA) with the Kinect for Windows Software Development Kit (SDK) preinstalled was connected to the Kinect V2 sensor via a USB 3.0 port. Images were acquired from the Kinect camera using MATLAB R2019b (The MathWorks Inc., Natick, MA) with the Image Acquisition Toolbox (IAT). Ten depth images were acquired per carcass at 2 frames per second (fps). The data (n = 1,500) were transferred to a 1 TB solid-state drive (SSD) and stored for subsequent analysis.
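As a rough illustration of the spatial resolution implied by this setup, the footprint of one depth pixel on a plane at the 0.6 m working distance can be estimated from the field of view and resolution in Table 1. This is a sketch under stated assumptions: an ideal pinhole camera (no lens distortion) and a flat target exactly 0.6 m away; `pixel_footprint_mm` is a hypothetical helper, not part of any Kinect SDK.

```python
import math


def pixel_footprint_mm(distance_m: float, fov_deg: float, n_pixels: int) -> float:
    """Approximate width (mm) covered by one pixel on a plane at distance_m.

    Assumes an ideal pinhole camera: the visible extent of the plane is
    2 * d * tan(FOV / 2), shared equally among n_pixels.
    """
    extent_m = 2.0 * distance_m * math.tan(math.radians(fov_deg) / 2.0)
    return 1000.0 * extent_m / n_pixels


# Kinect v2 depth channel (Table 1): 512 x 424 pixels, 70.5 x 60.0 degree field of view
h_mm = pixel_footprint_mm(0.6, 70.5, 512)
v_mm = pixel_footprint_mm(0.6, 60.0, 424)
print(f"horizontal: {h_mm:.2f} mm/pixel, vertical: {v_mm:.2f} mm/pixel")
print(f"approximate surface area per pixel: {h_mm * v_mm:.2f} mm^2")
```

Under this model the footprint grows linearly with distance, so a pixel-count feature such as projected area scales with the inverse square of the working distance; this is one reason a fixed camera-to-hanger distance matters for 2D features.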

All experiments were conducted at Nanjing Agricultural University, Pukou Engineering College, in conformity with protocols approved by the Nanjing Agricultural University Biosafety Committee for the handling of livestock, agricultural foods, and products.

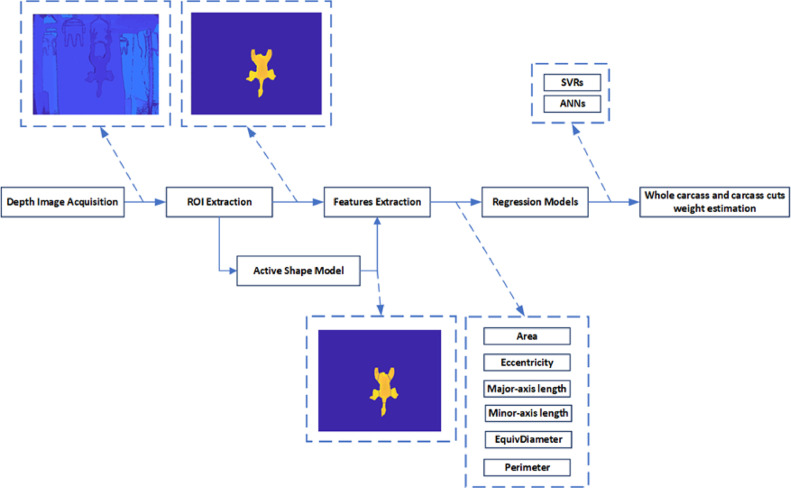

Image Processing and Carcass Parts Segmentation

This study predicts the weight of the broiler carcass and its cuts using image processing and machine learning techniques. Weight prediction models were developed to relate image features to weight for the whole carcass, drumsticks, breasts, wings, and head and neck. First, a whole-carcass model was developed to predict the carcass weight from image features extracted from the preprocessed depth images (whole chicken image). Second, an ASM-based technique (Cootes et al., 1995) was developed to segment the carcass into drumsticks, breasts, wings, and head and neck. Third, the drumstick, breast, wing, and head-and-neck models were developed from the image features of the corresponding cut. For comparative analysis, several regression models were developed to determine the best-performing regression model for each weight prediction task (each carcass cut and the whole carcass). The weight estimation models were evaluated by comparing the measured weight with the estimated weight. The carcass parts segmentation algorithm was evaluated on the number of objects after segmentation (count) against the expected number of cuts (6 cuts: 2 drumsticks, 1 breast, 2 wings, and 1 head and neck), on processing speed, and on the accuracy of the carcass cut weight estimates. Figure 3 shows the algorithm flow of the proposed system.

Figure 3.

Proposed system algorithm flow.

Image Preprocessing Algorithm

Image segmentation, or background elimination, is one of the essential preprocessing operations in any effective image processing algorithm. In this study, all raw depth images were processed using the following procedure. First, the carcass background captured during image acquisition was removed from the raw depth images by image subtraction, as presented in Equation 1 (Li et al., 2002).

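$$I_f(x, y) = \begin{cases} I(x, y), & \text{if } \left|I(x, y) - B(x, y)\right| > T \\ 0, & \text{otherwise} \end{cases} \tag{1}$$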

Where $I_f$ is the resultant image after separation from its background, $I$ is the original image, $B$ is the background image, and $T$ is the threshold. Second, after minimum and maximum thresholds were established, distance thresholding was performed on the depth image intensities to obtain the region of interest (ROI) according to Equation 2 (Jana, 2012; Okinda et al., 2018b).

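$$I_r(x, y) = \begin{cases} I_f(x, y), & \text{if } d_{\min} \le I_f(x, y) \le d_{\max} \\ 0, & \text{otherwise} \end{cases} \tag{2}$$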

Where $I_r$ is the ROI image, $I_f$ is the image after background removal, $d_{\min}$ is the minimum depth distance threshold, and $d_{\max}$ is the maximum depth distance threshold. Third, the ROI image was smoothed with a 15 × 15 pixel zero-mean Gaussian kernel filter (Equation 3), and a morphological opening with a disk-shaped structuring element of size 9 pixels was then applied to remove small noise artifacts and obtain a clean depth image (Equation 4).

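$$I_g(x, y) = \left(I_r * G\right)(x, y), \qquad G(x, y) = \frac{1}{2\pi\sigma^2}\exp\left(-\frac{x^2 + y^2}{2\sigma^2}\right) \tag{3}$$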

Where $I_g$ is the resultant filtered image, $*$ denotes convolution, and $G$ is the Gaussian filter kernel with standard deviation $\sigma$.

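$$I_o = I_g \circ S = \left(I_g \ominus S\right) \oplus S = \bigcup_{\{p \,:\, S_p \subseteq I_g\}} S_p \tag{4}$$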

Where $I_o$ is the resultant image after morphological opening, $S$ is the structuring element, $S_p$ is the translation of $S$ by a point $p$, and $\ominus$ and $\oplus$ denote erosion and dilation, respectively.
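The four preprocessing steps (Equations 1 to 4) can be sketched in NumPy as follows. This is an illustrative sketch, not the authors' MATLAB implementation: depth values are assumed to be in millimetres, the Gaussian σ (not given in the text) is an assumed 2.5 pixels, and the opening is a flat grayscale opening (minimum filter followed by maximum filter), which reduces to binary opening on 0/1 images. The demo uses a disk of radius 4 on a small synthetic frame; `disk(9)` would match the stated size if "size 9" is read as a radius, which is itself an assumption. All function names are hypothetical.

```python
import numpy as np


def remove_background(depth, background, T):
    """Eq. 1: keep pixels whose depth differs from the background image by
    more than T; every other pixel is set to 0."""
    diff = np.abs(depth.astype(np.int32) - background.astype(np.int32))
    return np.where(diff > T, depth, 0).astype(float)


def distance_threshold(img, d_min, d_max):
    """Eq. 2: keep only pixels whose depth lies in [d_min, d_max]."""
    return np.where((img >= d_min) & (img <= d_max), img, 0.0)


def gaussian_smooth(img, size=15, sigma=2.5):
    """Eq. 3: separable Gaussian smoothing with a size x size kernel.
    sigma is an assumed value; the text gives only the kernel size."""
    ax = np.arange(size) - (size - 1) / 2.0
    k = np.exp(-ax**2 / (2.0 * sigma**2))
    k /= k.sum()                                   # normalise to unit gain
    p = np.pad(img, size // 2, mode="edge")
    rows = np.apply_along_axis(np.convolve, 1, p, k, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, k, mode="valid")


def disk(radius):
    """Flat disk-shaped structuring element S."""
    yy, xx = np.mgrid[-radius:radius + 1, -radius:radius + 1]
    return xx**2 + yy**2 <= radius**2


def _flat_filter(img, se, reducer, pad_value):
    """Min (erosion) or max (dilation) of img over the offsets of a symmetric se."""
    r = se.shape[0] // 2
    p = np.pad(img, r, mode="constant", constant_values=pad_value)
    h, w = img.shape
    out = np.full(img.shape, pad_value, dtype=float)
    for dy, dx in zip(*np.nonzero(se)):
        out = reducer(out, p[dy:dy + h, dx:dx + w])
    return out


def opening(img, se):
    """Eq. 4: erosion followed by dilation with the same structuring element."""
    eroded = _flat_filter(img, se, np.minimum, np.inf)
    return _flat_filter(eroded, se, np.maximum, -np.inf)


# Synthetic 120 x 160 depth frame in millimetres (hypothetical values)
background = np.full((120, 160), 2000, dtype=np.uint16)
frame = background.copy()
frame[30:90, 60:100] = 600      # carcass surface about 0.6 m from the sensor
frame[5:7, 5:7] = 650           # small speck that passes the depth gate
fg = remove_background(frame, background, T=50)
roi = distance_threshold(fg, d_min=500, d_max=800)
clean = opening(gaussian_smooth(roi), disk(4))
```

Separable convolution keeps the 15 × 15 smoothing at two 1D passes per pixel. The shift-and-reduce loop in `_flat_filter` costs one array operation per structuring-element offset, which is simple and adequate for a 512 × 424 frame.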

Carcass Parts Segmentation

ASM was applied as the chicken body part segmentation technique: each carcass shape was fitted to a previously developed ASM on which the cutting positions had already been labeled. ASM is a nonrigid (non-template) shape-matching technique that uses a point distribution model (PDM) to describe the shape variation of the modeled structure and an intensity model to describe the image intensities in the neighborhood of each PDM point. It is therefore designed to represent complex deformation patterns of an object's shape and to locate the object in new images (Wang, 2012; Morel-Forster et al., 2018). The cutting locations (10 location points) were marked for each image. The core principle was to fit the broiler shape to the developed ASM and thereby determine the positions of the cutting locations used to segment the carcass into parts. The procedure used in this study to establish the ASM is as follows:

Step 1: Five hundred preprocessed broiler carcass images (of different weights) were selected for ASM development. For each image, 300 landmark points were extracted along the image boundary.

Step 2: Consider that each image has $n$ landmark points (on the image boundary, as depicted in Figure 4A) and that the coordinate of the $j$th point of the $i$th image is denoted by $(x_{ij}, y_{ij})$. The landmark points of the $i$th image can then be represented by a shape vector $\mathbf{x}_i$, which is a $2n \times 1$ vector (Equation 5).

$$\mathbf{x}_i = \left(x_{i1}, y_{i1}, x_{i2}, y_{i2}, \dots, x_{in}, y_{in}\right)^{\top} \tag{5}$$

Where $i = 1, 2, \dots, N$, and $N$ is the total number of images.

Step 3: All shapes were translated so that their centroids lie at the origin $(0, 0)$.

Step 4: One shape ($\mathbf{x}_0$) was manually selected as the gold standard and scaled so that $|\mathbf{x}_0| = 1$. All other shapes were then aligned to $\mathbf{x}_0$ in terms of scale ($s$, Equation 6) and rotation ($\theta$, Equation 7) for each image.

$$s = \sqrt{a^2 + b^2} \tag{6}$$

$$\theta = \tan^{-1}\left(\frac{b}{a}\right) \tag{7}$$

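Where $a = \dfrac{\mathbf{x}_i \cdot \mathbf{x}_0}{|\mathbf{x}_i|^2}$ and $b = \dfrac{\sum_{j=1}^{n}\left(x_{ij}\,y_{0j} - y_{ij}\,x_{0j}\right)}{|\mathbf{x}_i|^2}$. Hence, the aligned landmark points of the $i$th image are given by Equation 8 (Procrustes analysis), which provides $N$ sets of aligned training shapes, each described by a $2n \times 1$ vector of feature points.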

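$$\begin{pmatrix} x'_{ij} \\ y'_{ij} \end{pmatrix} = s \begin{pmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{pmatrix} \begin{pmatrix} x_{ij} \\ y_{ij} \end{pmatrix}, \qquad j = 1, \dots, n \tag{8}$$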

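Step 5: The dimensionality of the aligned shapes was reduced from $2n$ to $K$ ($K \ll 2n$) by principal component analysis (PCA) (Ringnér, 2008), such that any shape can be approximated by $\mathbf{x} \approx \bar{\mathbf{x}} + \mathbf{P}\mathbf{b}$, where $\mathbf{x}$ and $\bar{\mathbf{x}}$ (the mean of the shapes) are $2n \times 1$ vectors, $\mathbf{P}$ is a $2n \times K$ matrix of the first $K$ principal components, and $\mathbf{b}$ is a $K \times 1$ vector of shape parameters.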

Step 6: The ASM was fitted to new data by determining the best translation $(X_t, Y_t)$, rotation $\theta$, scale $s$, and shape parameters $\mathbf{b}$ according to Equation 9, which is a minimization problem.

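$$\min_{X_t,\,Y_t,\,s,\,\theta,\,\mathbf{b}} \left\lVert \mathbf{Y} - T_{X_t, Y_t, s, \theta}\left(\bar{\mathbf{x}} + \mathbf{P}\mathbf{b}\right) \right\rVert^2 \tag{9}$$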

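Where $\mathbf{Y}$ is the vector of landmark points in the new data, and $T_{X_t, Y_t, s, \theta}$ describes the similarity transformation (translation $(X_t, Y_t)$, scaling $s$, and rotation $\theta$) applied to each point, as given in Equation 10.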

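$$T_{X_t, Y_t, s, \theta}\begin{pmatrix} x \\ y \end{pmatrix} = \begin{pmatrix} X_t \\ Y_t \end{pmatrix} + \begin{pmatrix} s\cos\theta & -s\sin\theta \\ s\sin\theta & s\cos\theta \end{pmatrix}\begin{pmatrix} x \\ y \end{pmatrix} \tag{10}$$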
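Steps 3 to 5 above (centering, alignment to the gold-standard shape, and PCA) can be sketched in NumPy as follows. This is a hedged illustration on synthetic ellipse-like shapes with 30 landmarks and 50 training shapes rather than the study's 300 landmarks and 500 images, and all helper names are hypothetical. `align_to` uses the standard closed-form least-squares alignment, with $a = \mathbf{x}_i \cdot \mathbf{x}_0 / |\mathbf{x}_i|^2$, $b = \sum_j (x_{ij} y_{0j} - y_{ij} x_{0j}) / |\mathbf{x}_i|^2$, $s = \sqrt{a^2 + b^2}$, and $\theta = \tan^{-1}(b/a)$.

```python
import numpy as np


def to_xy(shape_vec):
    """Shape vector (x1, y1, ..., xn, yn) -> n x 2 array of landmarks."""
    return np.asarray(shape_vec, dtype=float).reshape(-1, 2)


def center(shape_vec):
    """Step 3: translate a shape so that its centroid is at (0, 0)."""
    xy = to_xy(shape_vec)
    return (xy - xy.mean(axis=0)).ravel()


def align_to(x, x0):
    """Step 4: align the centred shape x to the centred reference x0.
    Returns the aligned shape, the scale s, and the rotation theta."""
    xy, ref = to_xy(x), to_xy(x0)
    norm2 = np.sum(xy**2)
    a = np.sum(xy * ref) / norm2
    b = np.sum(xy[:, 0] * ref[:, 1] - xy[:, 1] * ref[:, 0]) / norm2
    s, theta = np.hypot(a, b), np.arctan2(b, a)
    c, sn = s * np.cos(theta), s * np.sin(theta)
    aligned = np.column_stack([c * xy[:, 0] - sn * xy[:, 1],
                               sn * xy[:, 0] + c * xy[:, 1]])
    return aligned.ravel(), s, theta


def build_shape_model(X, K):
    """Step 5: PCA on an N x 2n matrix of aligned shapes; keep K leading modes."""
    mean = X.mean(axis=0)
    evals, evecs = np.linalg.eigh(np.cov(X - mean, rowvar=False))
    order = np.argsort(evals)[::-1][:K]            # largest variance first
    return mean, evecs[:, order], evals[order]


rng = np.random.default_rng(0)
n = 30                                             # landmarks per shape (synthetic)
t = np.linspace(0, 2 * np.pi, n, endpoint=False)
base = np.column_stack([40 * np.cos(t), 60 * np.sin(t)]).ravel()
x0 = center(base)
x0 /= np.linalg.norm(x0)                           # gold standard with |x0| = 1

shapes = []
for _ in range(50):
    raw = base + rng.normal(scale=1.0, size=base.size)      # shape variation
    ang, k = rng.uniform(-0.5, 0.5), rng.uniform(0.5, 2.0)   # random pose
    R = np.array([[np.cos(ang), -np.sin(ang)], [np.sin(ang), np.cos(ang)]])
    posed = k * to_xy(raw) @ R.T + rng.uniform(-50, 50, size=2)
    shapes.append(align_to(center(posed.ravel()), x0)[0])
X = np.array(shapes)

mean, P, evals = build_shape_model(X, K=5)
b = P.T @ (X[0] - mean)                            # shape parameters of shape 0
approx = mean + P @ b                              # x ~ mean + P b
```

The text does not state how $K$ was chosen; a common convention is to keep enough modes to explain a fixed fraction of the total variance, which would be an assumption here.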

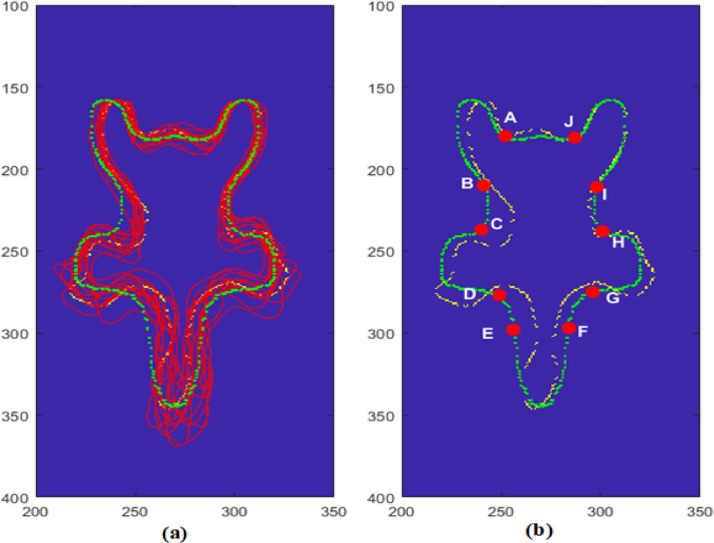

Figure 4.

(A) Landmark points on the carcass image boundary; (B) ASM with the chicken parts cutting positions.

The summary of matching the ASM model to a targeted image is given in Algorithm 1 below:

Algorithm 1.

The active shape model fitting

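A common form of the ASM fitting iteration (Cootes et al., 1995), offered here as a hedged sketch rather than a reproduction of Algorithm 1, alternates between fitting the pose (Equation 10) to the current model shape and updating the shape parameters $\mathbf{b}$, clamping each $b_k$ to $\pm 3\sqrt{\lambda_k}$. The sketch assumes that the target landmark positions $\mathbf{Y}$ in the new image are already known; in a full ASM they come from searching along each landmark's profile with the intensity model, a step omitted here. The model in the demo (mean shape, modes, eigenvalues) is synthetic and hypothetical.

```python
import numpy as np


def to_xy(v):
    """Shape vector (x1, y1, ..., xn, yn) -> n x 2 array."""
    return np.asarray(v, dtype=float).reshape(-1, 2)


def fit_pose(x, Y):
    """Least-squares similarity transform (Eq. 10) mapping points x onto Y.
    Returns the 2 x 2 matrix s*R(theta) and the translation (X_t, Y_t)."""
    xy, tgt = to_xy(x), to_xy(Y)
    cx, cy = xy.mean(axis=0), tgt.mean(axis=0)
    u, v = xy - cx, tgt - cy
    norm2 = np.sum(u**2)
    a = np.sum(u * v) / norm2
    b = np.sum(u[:, 0] * v[:, 1] - u[:, 1] * v[:, 0]) / norm2
    A = np.array([[a, -b], [b, a]])
    return A, cy - A @ cx


def apply_pose(A, t, x):
    """T(x): scale/rotate every landmark by A, then translate by t."""
    return (to_xy(x) @ A.T + t).ravel()


def fit_asm(Y, mean, P, evals, n_iter=100, tol=1e-10):
    """Approximately minimise |Y - T(mean + P b)|^2 (Eq. 9) by alternating updates."""
    b = np.zeros(P.shape[1])
    limit = 3.0 * np.sqrt(evals)
    for _ in range(n_iter):
        A, t = fit_pose(mean + P @ b, Y)                       # pose for current shape
        y = ((to_xy(Y) - t) @ np.linalg.inv(A).T).ravel()     # T^-1(Y), model frame
        b_new = np.clip(P.T @ (y - mean), -limit, limit)       # shape update + limits
        converged = np.max(np.abs(b_new - b)) < tol
        b = b_new
        if converged:
            break
    A, t = fit_pose(mean + P @ b, Y)
    return b, A, t


# Synthetic model: elliptical mean shape and 3 deformation modes that are
# orthogonal to translation, scaling, and rotation of the mean shape.
n = 40
t_ang = np.linspace(0, 2 * np.pi, n, endpoint=False)
mean = np.column_stack([np.cos(t_ang), 1.5 * np.sin(t_ang)]).ravel()
rot90 = np.column_stack([-to_xy(mean)[:, 1], to_xy(mean)[:, 0]]).ravel()
tx, ty = np.tile([1.0, 0.0], n), np.tile([0.0, 1.0], n)
Q, _ = np.linalg.qr(np.column_stack([tx, ty, mean, rot90]))
rng = np.random.default_rng(1)
R = rng.normal(size=(2 * n, 3))
P, _ = np.linalg.qr(R - Q @ (Q.T @ R))
evals = np.array([0.04, 0.01, 0.0025])

b_true = 0.5 * np.sqrt(evals)
A_true = 3.0 * np.array([[np.cos(0.4), -np.sin(0.4)], [np.sin(0.4), np.cos(0.4)]])
Y = apply_pose(A_true, np.array([200.0, 150.0]), mean + P @ b_true)

b, A, t = fit_asm(Y, mean, P, evals)
```

Once the fit converges, any landmark labeled on the model (such as a cutting point) can be read off the fitted shape $T(\bar{\mathbf{x}} + \mathbf{P}\mathbf{b})$ at the same index.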

The cutting locations on the developed ASM were labeled by a professional butcher (PB) from NSMP. When a new image was matched to the ASM, the five cutting locations were extracted. Figure 4B shows the ASM with the chicken parts cutting positions and the five corresponding segmentation lines. In summary, the segmentation principle is as follows: an ASM is developed, a PB labels the segmentation points on the shape model (Figure 4B), and when a new shape is fitted to the model, the cutting points are transferred from the model to the new image.
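Because the cutting points are labeled once on the model, transferring them to a new carcass amounts to reading the corresponding landmarks off the fitted shape. The sketch below assumes each cutting line is stored as a pair of landmark indices (the index values in `CUT_LINES` are hypothetical) and, as a simplification, splits a binary carcass mask by the infinite line through the two points; a production implementation would more likely clip each part with a line segment or polygon.

```python
import numpy as np

# Hypothetical model annotation: cutting line name -> (landmark i, landmark j)
CUT_LINES = {"left_wing": (40, 75), "right_wing": (225, 260)}


def transfer_cut_points(fitted_shape, cut_lines=CUT_LINES):
    """Read the labelled cutting points off a fitted shape (x1, y1, ..., xn, yn)."""
    xy = np.asarray(fitted_shape, dtype=float).reshape(-1, 2)
    return {name: (xy[i], xy[j]) for name, (i, j) in cut_lines.items()}


def split_mask(mask, p, q):
    """Split a binary mask into the pixels on either side of the line through p and q.

    p and q are (x, y) points in pixel coordinates (x = column, y = row).
    The sign of the 2D cross product decides the side of each pixel.
    """
    h, w = mask.shape
    yy, xx = np.mgrid[0:h, 0:w]
    cross = (q[0] - p[0]) * (yy - p[1]) - (q[1] - p[1]) * (xx - p[0])
    return mask & (cross > 0), mask & (cross <= 0)


shape = np.arange(600, dtype=float)            # 300 dummy landmarks
points = transfer_cut_points(shape)
mask = np.ones((10, 10), dtype=bool)
side_a, side_b = split_mask(mask, (4.5, 0.0), (4.5, 9.0))
```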

Weight Estimation Algorithm

To estimate the weight of the chicken carcass and its parts, 5 regression models were developed: support vector regression (SVR) with linear, quadratic, cubic, and radial basis function (RBF) kernels, and a Bayesian artificial neural network (Bayesian-ANN). For each weight estimation task, the data were randomly divided into training (70%) and testing (30%) datasets, as presented in Table 2. Each model was trained independently; therefore, separate models were developed for the whole carcass, drumsticks, breasts, wings, and head and neck. Because the carcasses move along the production line, only images in which the chicken carcass did not touch the image edge were selected for feature extraction. Using the extracted features, the models were developed by a 10-fold cross-validation parameter search on the training dataset; in addition, a validation sample (15% of the training set) was used to quantify network generalization and to stop training once generalization stopped improving.

Table 2.

Details of the datasets used in regression models development.

| Models | Training | Test | Total |
|---|---|---|---|
| Whole carcass | 1,050 | 450 | 1,500 |
| Drumsticks | 2,100 | 900 | 3,000 |
| Breasts | 1,050 | 450 | 1,500 |
| Wings | 2,100 | 900 | 3,000 |
| Head and neck | 1,050 | 450 | 1,500 |
| Total dataset | 7,350 | 3,150 | 10,500 |
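The 70/30 random split and the 10-fold cross-validation loop described above can be sketched with the Python standard library alone. The sketch is illustrative: the seed, the fold assignment by striding, and the function names are assumptions, and the inner loop only marks where a hyperparameter candidate would be trained and scored.

```python
import random


def train_test_split(ids, train_frac=0.7, seed=0):
    """Randomly split sample ids into training and testing lists."""
    ids = list(ids)
    random.Random(seed).shuffle(ids)
    n_train = round(train_frac * len(ids))
    return ids[:n_train], ids[n_train:]


def k_fold(ids, k=10):
    """Yield (fit, validation) id lists for k-fold cross-validation."""
    folds = [ids[i::k] for i in range(k)]
    for i in range(k):
        fit = [x for j, fold in enumerate(folds) if j != i for x in fold]
        yield fit, folds[i]


# Whole-carcass dataset: 1,500 depth images (Table 2)
train, test = train_test_split(range(1500))
print(len(train), len(test))  # 1050 450

for fit, val in k_fold(train):
    # each candidate hyperparameter setting would be trained on `fit`
    # and scored on `val`
    pass
```

One design choice worth noting: each carcass contributes 10 images, so a split by image can place images of the same carcass in both sets. Splitting by carcass ID instead (105 and 45 carcasses × 10 images) yields the same 1,050/450 counts while avoiding that overlap; the text does not state which approach was used.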

Feature Extraction

The performance of any machine learning model depends heavily on the feature variables used in model training. No feature selection technique was applied in this study; all extracted features were included in model training and validation. Additionally, only 2D geometric image features were used, because a full 3D broiler carcass model was not feasible with a statically positioned camera. Six features were extracted from each image: projected area ($A$), perimeter ($P$), major-axis length ($L_{maj}$), minor-axis length ($L_{min}$), eccentricity ($E$), and EquivDiameter ($D_{eq}$). The extracted features are summarized in Table 3.

Table 3.

The extracted feature variables.

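| Extracted feature | Defining equation | Equation |
|---|---|---|
| Area | $A = \sum_{x}\sum_{y} f(x, y)$ | (11) |
| Perimeter | $P = \lvert C \rvert$, the number of pixels on the closed contour $C$ | (12) |
| Major-axis length | $L_{maj} = \sqrt{(x_2 - x_1)^2 + (y_2 - y_1)^2}$ | (13) |
| Minor-axis length | $L_{min} = \sqrt{(x_4 - x_3)^2 + (y_4 - y_3)^2}$ | (14) |
| Eccentricity | $E = \sqrt{1 - \lambda_2 / \lambda_1} = \sqrt{1 - (L_{min} / L_{maj})^2}$ | (15) |
| EquivDiameter | $D_{eq} = \sqrt{4A / \pi}$ | (16) |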

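Consider that the contour of a 2D object is defined by $C = \{(x_i, y_i) \mid i = 1, \dots, m\}$, that $f(x, y) = 1$ for object pixels and $0$ otherwise, and that the object is fitted with an ellipse whose major-axis endpoints are $(x_1, y_1)$ and $(x_2, y_2)$ and whose minor-axis endpoints are $(x_3, y_3)$ and $(x_4, y_4)$.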

Area $A$ (Equation 11) is the number of pixels that the broiler carcass occupies when projected onto the image plane. It has been used extensively as a weight estimation feature for livestock and agricultural products (Okinda et al., 2018a; Nyalala et al., 2019; Okinda et al., 2020b). Perimeter $P$ (Equation 12) is the length of the object's boundary, calculated by counting the number of pixels on the closed contour (De Wet et al., 2003; Nyalala et al., 2019; Okinda et al., 2020b). Major-axis length $L_{maj}$ (Equation 13) and minor-axis length $L_{min}$ (Equation 14) are the pixel distances between the major-axis and minor-axis endpoints, respectively, of the ellipse that has the same normalized second central moments as the region. Eccentricity $E$ (Equation 15) is derived from the ratio of the eigenvalues $\lambda_1$ and $\lambda_2$ corresponding to the ellipse's major and minor axes, respectively. EquivDiameter $D_{eq}$ (Equation 16) is the diameter of a circle having the same area as the region.
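The six features can be sketched from a binary carcass mask in NumPy as follows. This is a moment-based approximation in the spirit of MATLAB's `regionprops` properties of the same names, not a reproduction of it: `regionprops` computes perimeter from distances between adjoining boundary pixels and applies a small pixel-size correction to the moments, whereas this sketch counts boundary pixels, as the text describes. The function name and the synthetic ellipse are hypothetical.

```python
import numpy as np


def shape_features(mask):
    """Six 2D geometric features of a binary object mask (Eqs. 11-16).

    Axis lengths use the equal-second-moment ellipse: for a filled ellipse,
    the variance along an axis of half-length r is r**2 / 4, so the full
    axis length is 4 * sqrt(eigenvalue).
    """
    mask = np.asarray(mask, dtype=bool)
    ys, xs = np.nonzero(mask)
    area = xs.size                                              # Eq. 11
    p = np.pad(mask, 1, constant_values=False)
    interior = p[:-2, 1:-1] & p[2:, 1:-1] & p[1:-1, :-2] & p[1:-1, 2:]
    perimeter = int(np.count_nonzero(mask & ~interior))         # Eq. 12
    cov = np.cov(np.vstack([xs, ys]).astype(float), bias=True)
    lam2, lam1 = np.linalg.eigvalsh(cov)                        # lam1 >= lam2
    return {
        "area": area,
        "perimeter": perimeter,
        "major_axis": 4.0 * np.sqrt(lam1),                      # Eq. 13
        "minor_axis": 4.0 * np.sqrt(lam2),                      # Eq. 14
        "eccentricity": np.sqrt(1.0 - lam2 / lam1),             # Eq. 15
        "equiv_diameter": np.sqrt(4.0 * area / np.pi),          # Eq. 16
    }


# Synthetic ellipse with semi-axes 40 and 20 pixels
yy, xx = np.mgrid[0:201, 0:201]
ellipse = ((xx - 100) / 40.0) ** 2 + ((yy - 100) / 20.0) ** 2 <= 1.0
feats = shape_features(ellipse)
```

For this ellipse the sketch should return axis lengths close to 80 and 40 pixels and an eccentricity close to √0.75 ≈ 0.866, which is a quick sanity check on the moment-based definitions.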

Regression Models

ANN Model Development

Given its ability to generalize and respond to unexpected inputs, the ANN is widely used for classification, pattern recognition, and clustering. Neural networks have several advantages: they require less formal statistical training, they can implicitly detect complex nonlinear relationships between dependent and independent variables, they can detect all possible interactions between predictor variables, and multiple training algorithms are available (Tu, 1996). In classification, an ANN assigns inputs to a set of target categories; in this study, it is used for regression. An ANN receives information from input vectors in the input layer, transmits this information through the neurons, and finally produces a specific output value at the output layer (Nyalala et al., 2021a). In this research, 3-layer feed-forward networks with 6 input neurons (corresponding to the extracted feature variables), 12 hidden neurons, and 1 output neuron were implemented using the Neural Network Toolbox 11.1 in MATLAB R2019b (The MathWorks Inc.). The Bayesian regularization training algorithm was applied. The Bayesian ANN is a probabilistic method that returns the posterior probability of the weight given the features, while using a regression function of a similar form to the traditional ANN (Mortensen et al., 2016).
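A minimal NumPy sketch of a 6-12-1 network and of the objective that Bayesian regularization minimizes, $F = \beta E_D + \alpha E_W$ (sum of squared errors plus sum of squared weights), follows. It is a simplification, not MATLAB's `trainbr`: `trainbr` updates weights with Levenberg-Marquardt optimization and re-estimates α and β during training (in MacKay's formulation, α = γ/(2E_W) and β = (N − γ)/(2E_D), where γ is the effective number of parameters), whereas this sketch holds α and β fixed and uses plain gradient descent. The data are synthetic, and the function names, initialization, and learning rate are assumptions.

```python
import numpy as np


def init_net(n_in=6, n_hidden=12, seed=0):
    """6-12-1 feed-forward network: tanh hidden layer, one linear output neuron."""
    rng = np.random.default_rng(seed)
    return {"W1": rng.normal(0.0, 0.5, (n_hidden, n_in)), "b1": np.zeros(n_hidden),
            "W2": rng.normal(0.0, 0.5, n_hidden), "b2": np.zeros(1)}


def forward(net, X):
    """Return hidden activations H (N x 12) and predictions (N,)."""
    H = np.tanh(X @ net["W1"].T + net["b1"])
    return H, H @ net["W2"] + net["b2"]


def objective(net, X, y, alpha, beta):
    """F = beta * E_D + alpha * E_W: squared errors plus squared weights."""
    _, yhat = forward(net, X)
    E_D = np.sum((y - yhat) ** 2)
    E_W = sum(np.sum(v ** 2) for v in net.values())
    return beta * E_D + alpha * E_W


def gradient_step(net, X, y, alpha, beta, lr):
    """One gradient-descent step on F (alpha and beta held fixed)."""
    H, yhat = forward(net, X)
    g = 2.0 * beta * (yhat - y)                      # dF/dyhat, shape (N,)
    dZ = np.outer(g, net["W2"]) * (1.0 - H ** 2)     # back-propagate through tanh
    grads = {"W2": H.T @ g, "b2": np.array([g.sum()]),
             "W1": dZ.T @ X, "b1": dZ.sum(axis=0)}
    return {k: net[k] - lr * (grads[k] + 2.0 * alpha * net[k]) for k in net}


# Synthetic standardized features and target (not the study's data)
rng = np.random.default_rng(1)
X = rng.normal(size=(200, 6))
y = X @ np.array([0.8, 0.3, 0.2, 0.1, -0.1, 0.05]) + 0.05 * rng.normal(size=200)

net, alpha, beta = init_net(), 0.01, 1.0
before = objective(net, X, y, alpha, beta)
for _ in range(500):
    net = gradient_step(net, X, y, alpha, beta, lr=2e-4)
after = objective(net, X, y, alpha, beta)
print(f"F before: {before:.2f}, after: {after:.2f}")
```

The α term penalizes large weights, which is what gives Bayesian regularization its smoothing effect; the ratio α/β controls how strongly that penalty trades off against fitting error.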

SVR Model Development

SVR, an extension of support vector machines (SVMs), solves regression problems in high-dimensional spaces. SVR is considered a nonparametric statistical learning algorithm based on kernel functions (Vapnik et al., 1997). Its advantages include relatively easy training, good accuracy, explicit control over model complexity, a convex training problem without spurious local optima, elegant mathematical tractability, reduced tendency to overfit without requiring a very large training set, and a direct geometric interpretation (Burges, 1998; Schölkopf et al., 1999; Nyalala et al., 2019). In an SVR model, the kernel function maps the input data into the required feature space, and the choice of kernel function greatly affects model performance (Nyalala et al., 2021a). This study applied the linear (Equation 17), polynomial (Equation 18), and RBF (Equation 19) kernel functions.

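$$K(\mathbf{x}_i, \mathbf{x}_j) = \mathbf{x}_i^{\top}\mathbf{x}_j \tag{17}$$

$$K(\mathbf{x}_i, \mathbf{x}_j) = \left(1 + \mathbf{x}_i^{\top}\mathbf{x}_j\right)^{d} \tag{18}$$

$$K(\mathbf{x}_i, \mathbf{x}_j) = \exp\left(-\gamma \left\lVert \mathbf{x}_i - \mathbf{x}_j \right\rVert^{2}\right) \tag{19}$$

Where $\mathbf{x}_i$ and $\mathbf{x}_j$ are feature vectors, $d$ is the polynomial degree (2 for the quadratic and 3 for the cubic SVR; Table 5), and $\gamma > 0$ is the RBF kernel width parameter.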
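The three kernel functions can be written as Gram-matrix computations in NumPy, as sketched below. The constant 1 in the polynomial kernel and the γ parametrization of the RBF kernel are assumptions. Table 5 reports a "scale" for each kernel; one common convention, used by the `KernelScale` option of MATLAB's `fitrsvm`, is to divide the predictors by the scale before the kernel is evaluated, which the hypothetical `scaled` helper illustrates. Whether the reported values were applied exactly this way is not stated in the text.

```python
import numpy as np


def linear_kernel(A, B):
    """Eq. 17: K(x_i, x_j) = x_i . x_j for every row pair of A and B."""
    return A @ B.T


def polynomial_kernel(A, B, degree=2):
    """Eq. 18: K(x_i, x_j) = (1 + x_i . x_j) ** degree (2 = quadratic, 3 = cubic)."""
    return (1.0 + A @ B.T) ** degree


def rbf_kernel(A, B, gamma=1.0):
    """Eq. 19: K(x_i, x_j) = exp(-gamma * ||x_i - x_j||^2)."""
    sq = np.sum(A**2, axis=1)[:, None] + np.sum(B**2, axis=1)[None, :] - 2.0 * A @ B.T
    return np.exp(-gamma * np.maximum(sq, 0.0))     # clamp tiny negative round-off


def scaled(X, kernel_scale):
    """Divide predictors by a kernel scale before evaluating a kernel."""
    return np.asarray(X, dtype=float) / kernel_scale


X = np.array([[1.0, 2.0], [3.0, 4.0]])
print(linear_kernel(X, X))
print(polynomial_kernel(X, X, 2))
K = rbf_kernel(scaled(X, 0.5), scaled(X, 0.5))
```

In a trained SVR, predictions take the form $f(\mathbf{x}) = \sum_i (\alpha_i - \alpha_i^{*}) K(\mathbf{x}_i, \mathbf{x}) + b$ over the support vectors, so the kernel choice directly shapes the regression function.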

Evaluation Metric

The estimated weight for each model category (whole carcass, drumsticks, breasts, wings, and head and neck) was compared with the measured weight in terms of RMSE, R-squared (R²), and relative error. In addition, an independent t test was conducted to analyze statistical differences between the measured and estimated weights for each model category. The independent t test, or Student's t test, is an inferential statistical test that determines whether there is a statistically significant difference between the means of 2 unrelated groups on the same continuous dependent variable (Rochon et al., 2012). After the skewness values and their standard errors were evaluated, no data transformation was performed. All statistical analyses were conducted using SPSS version 25.0 (SPSS Inc., Chicago, IL).
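The evaluation metrics can be sketched with the Python standard library as follows. Assumptions to note: R² is computed here as $1 - SS_{res}/SS_{tot}$, one common definition; an R² taken from a fitted regression line of measured against estimated weight (as plotted in Figure 8) equals the squared Pearson correlation and can differ. The t statistic uses pooled variance and is computed from summary statistics; the group sizes of 450 (whole carcass) and 900 (wings) are assumed from the test counts in Table 7, and the critical value of about 1.96 is the large-sample two-sided 5% value, not a threshold stated in this section.

```python
import math
import statistics


def rmse(measured, estimated):
    """Root-mean-square error between measured and estimated weights."""
    return math.sqrt(statistics.fmean([(m - e) ** 2 for m, e in zip(measured, estimated)]))


def r_squared(measured, estimated):
    """Coefficient of determination, 1 - SS_res / SS_tot."""
    mean_m = statistics.fmean(measured)
    ss_res = sum((m - e) ** 2 for m, e in zip(measured, estimated))
    ss_tot = sum((m - mean_m) ** 2 for m in measured)
    return 1.0 - ss_res / ss_tot


def relative_errors(measured, estimated):
    """Per-sample relative error in percent: 100 * |estimated - measured| / measured."""
    return [100.0 * abs(e - m) / m for m, e in zip(measured, estimated)]


def pooled_t(mean1, sd1, n1, mean2, sd2, n2):
    """Independent (Student's) t statistic with pooled variance, from summary statistics."""
    sp2 = ((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / (n1 + n2 - 2)
    t = (mean1 - mean2) / math.sqrt(sp2 * (1.0 / n1 + 1.0 / n2))
    return t, n1 + n2 - 2


# Hypothetical measured/estimated weights (g), for illustration only
measured = [1200.0, 1310.5, 1455.2, 990.8]
estimated = [1203.1, 1306.0, 1459.9, 993.0]
print(rmse(measured, estimated), r_squared(measured, estimated))
print(max(relative_errors(measured, estimated)))

# Table 8 summary statistics, assuming n = 450 (whole carcass) and n = 900 (wings)
t_whole, df = pooled_t(1312.536, 152.796, 450, 1319.963, 161.809, 450)
t_wing, _ = pooled_t(35.536, 4.984, 900, 29.157, 5.260, 900)
print(f"whole carcass t = {t_whole:.2f} (df = {df}); wings t = {t_wing:.2f}")
```

Under these assumptions, the whole-carcass statistic falls well inside ±1.96 and the wing statistic far outside it, which agrees in direction with the significance pattern reported in Table 8.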

RESULTS

Dataset Statistical Description

In this study, 5 datasets (model categories) were used, as presented in Table 2. Descriptive statistics of the measured weights for all datasets are given in Table 4 in terms of measures of central tendency and variability. For the 150 broiler carcasses, the mean whole-carcass weight was 1,289.648 g, with a median of 1,283.700 g, ranging from 853.900 g (minimum) to 1,750.000 g (maximum); the skewness was 0.217, and the standard error of the mean was 15.148 g. For the carcass cuts, the mean drumstick weight was 80.556 g, with a median of 79.950 g, ranging from 53.300 g (minimum) to 101.500 g (maximum), with a skewness of −0.317 and a standard error of 0.940 g. The mean breast weight was 879.285 g, with a median of 885.000 g, ranging from 566.400 g to 1,219.500 g, with a skewness of 0.317 and a standard error of 10.982 g. The mean wing weight was 35.726 g, with a median of 34.800 g, ranging from 23.900 g to 54.450 g, with a skewness of 0.860 and a standard error of 0.476 g. Lastly, the mean head-and-neck weight was 111.918 g, with a median of 111.100 g, ranging from 86.500 g to 143.500 g, with a skewness of 0.251 and a standard error of 1.266 g.

Table 4.

The descriptive statistics of the measured weight of broiler carcass and carcass parts (n = 150).

| Descriptive statistics | Whole carcass | Drumsticks | Breasts | Wings | Head and neck |
|---|---|---|---|---|---|
| Mean (g) | 1,289.648 | 80.556 | 879.285 | 35.726 | 111.918 |
| Standard error (g) | 15.148 | 0.940 | 10.982 | 0.476 | 1.266 |
| Median (g) | 1,283.700 | 79.950 | 885.000 | 34.800 | 111.100 |
| Standard deviation (g) | 185.524 | 11.510 | 134.505 | 5.836 | 15.504 |
| Variance (g²) | 34,418.970 | 132.475 | 18,091.552 | 34.055 | 240.380 |
| Kurtosis | 0.750 | −0.111 | 1.032 | 2.219 | −0.631 |
| Skewness | 0.217 | −0.317 | 0.317 | 0.860 | 0.251 |
| Range (g) | 896.100 | 48.200 | 653.100 | 30.550 | 57.000 |
| Minimum (g) | 853.900 | 53.300 | 566.400 | 23.900 | 86.500 |
| Maximum (g) | 1,750.000 | 101.500 | 1,219.500 | 54.450 | 143.500 |
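The "Standard error" row of Table 4 is consistent with the standard error of the mean, SD/√n with n = 150, rather than with the standard error of the skewness. This short check recomputes it from the reported standard deviations; the remaining differences of at most 0.001 g come from rounding of the tabulated values.

```python
import math

N_CARCASSES = 150
# Table 4: column -> (standard deviation, reported standard error), in g
TABLE4 = {
    "whole carcass": (185.524, 15.148),
    "drumsticks": (11.510, 0.940),
    "breasts": (134.505, 10.982),
    "wings": (5.836, 0.476),
    "head and neck": (15.504, 1.266),
}

for name, (sd, se_reported) in TABLE4.items():
    se = sd / math.sqrt(N_CARCASSES)          # standard error of the mean
    print(f"{name:14s} SD/sqrt(n) = {se:7.3f} g   reported SE = {se_reported:7.3f} g")
```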

Carcass Parts Segmentation Algorithm Evaluation

The performance of the carcass parts segmentation algorithm was assessed in terms of segmentation accuracy (image object count) and the comparison between the measured and estimated weights of the carcass parts. Because a professional butcher performed the carcass cutting, this study compares the weights of manually cut carcass parts with the weights estimated by the automatic, machine vision-based part segmentation. The proposed carcass segmentation technique successfully segmented all carcass cuts in the examined images; that is, the number of image objects after part segmentation was equal to 6, as shown in Figure 5.

Figure 5.

ASM-based segmentation after fitting the cutting points on a carcass shape: (A) left wing, (B) left thigh, (C) right thigh, (D) right wing, and (E) head and neck; (F–I) carcass parts segmentation of different carcasses.

Additionally, to determine the effect of carcass size on the proposed technique, the average relative error of the drumstick, breast, wing, and head-and-neck weight estimates was evaluated for each carcass weight, as presented in Figure 6. The relative error showed no systematic dependence on carcass weight. For drumsticks, the highest and lowest average relative errors were 3.112% and 2.104%, for the 1,174.600 g and 1,712.200 g carcasses, respectively. For breasts, they were 3.170% and 2.312%, for the 1,750.000 g and 1,094.800 g carcasses, respectively. For wings, they were 3.601% and 2.158%, for the 963.400 g and 1,529.100 g carcasses, respectively. Lastly, for the head and neck, they were 3.177% and 2.172%, for the 1,750.000 g and 1,094.800 g carcasses, respectively.

Figure 6.

Average relative error of the drumstick, breast, wing, and head-and-neck weight estimates per carcass weight.

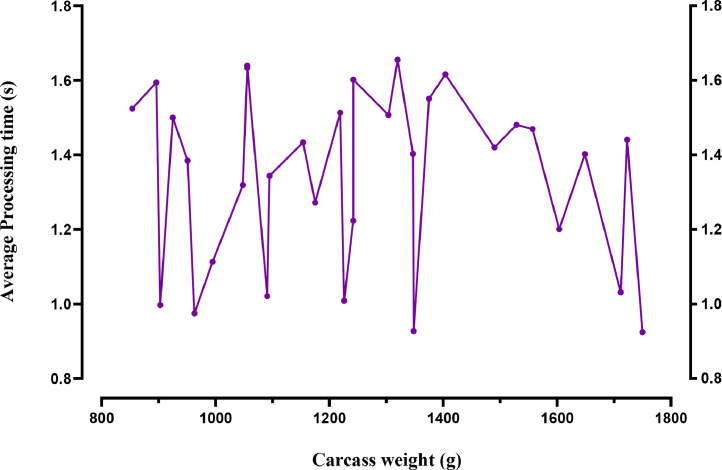

Furthermore, the proposed technique was evaluated in terms of processing speed, as presented in Figure 7. The processing time also showed no systematic dependence on carcass weight: the shortest processing time (0.924 s) was observed for the 1,750.000 g carcass, and the longest (1.656 s) for the 1,320.000 g carcass. Thus, the segmentation speed was not affected by carcass size.

Figure 7.

Evaluation of the processing speed in weight estimation based on the carcass weight.

Weight Estimation Model Evaluation

Table 5 presents the parameters of all the regression models used in this study. Model parameters are critical factors affecting any model's performance. During 10-fold cross-validation, the SVR kernel function parameters were computed iteratively; the selected kernel parameters gave the highest validation accuracy while avoiding underfitting and overfitting, providing good generalization ability. Similarly, after the percent error during the model validation phase and the computation time of the Bayesian-ANN were assessed, the number of hidden neurons was set to 12: as the number of neurons increases, the percent error declines while the computational time increases (Nyalala et al., 2019). The developed models were applied to the testing datasets, and the results are presented in Table 6 in terms of R² and RMSE.

Table 5.

Parameters of the developed regression models.

| SVR kernel parameters | Whole carcass | Drumsticks | Breasts | Wings | Head and neck |
|---|---|---|---|---|---|
| Linear (scale) | 0.440 | 0.678 | 0.667 | 0.484 | 0.122 |
| Quadratic (scale; degree 2) | 0.441 | 0.688 | 0.580 | 0.508 | 0.123 |
| Cubic (scale; degree 3) | 0.503 | 0.669 | 0.632 | 0.695 | 0.112 |
| RBF (scale) | 0.510 | 0.619 | 0.647 | 0.791 | 0.610 |
| Bayesian-ANN topology | 6-12-1 | 6-12-1 | 6-12-1 | 6-12-1 | 6-12-1 |

Table 6.

Performance of the regression models on the testing dataset (R² and RMSE in g).

| Regression model | Whole carcass R² | Whole carcass RMSE (g) | Drumsticks R² | Drumsticks RMSE (g) | Breasts R² | Breasts RMSE (g) | Wings R² | Wings RMSE (g) | Head and neck R² | Head and neck RMSE (g) |
|---|---|---|---|---|---|---|---|---|---|---|
| Linear SVR | 0.9032 | 3.84 | 0.8008 | 7.83 | 0.8593 | 5.84 | 0.9271 | 3.22 | 0.9163 | 3.46 |
| Quadratic SVR | 0.8946 | 3.96 | 0.8695 | 6.96 | 0.8393 | 5.99 | 0.8963 | 4.76 | 0.8699 | 4.09 |
| Cubic SVR | 0.8615 | 4.18 | 0.8846 | 6.79 | 0.8168 | 6.28 | 0.8385 | 4.81 | 0.8976 | 3.86 |
| RBF-SVR | 0.9890 | 2.97 | **0.9129** | **4.38** | **0.9352** | **3.84** | **0.9896** | **2.58** | 0.9485 | 2.64 |
| Bayesian-ANN | **0.9981** | **2.76** | 0.9052 | 5.17 | 0.8918 | 4.95 | 0.9634 | 2.79 | **0.9847** | **2.47** |

The boldface shows the highest accuracy for each model category.

The results in Table 6 show that the best accuracy alternates between the Bayesian-ANN and the RBF-SVR. The Bayesian-ANN gave the best whole-carcass results, with an R² of 0.9981 at an RMSE of 2.76 g.

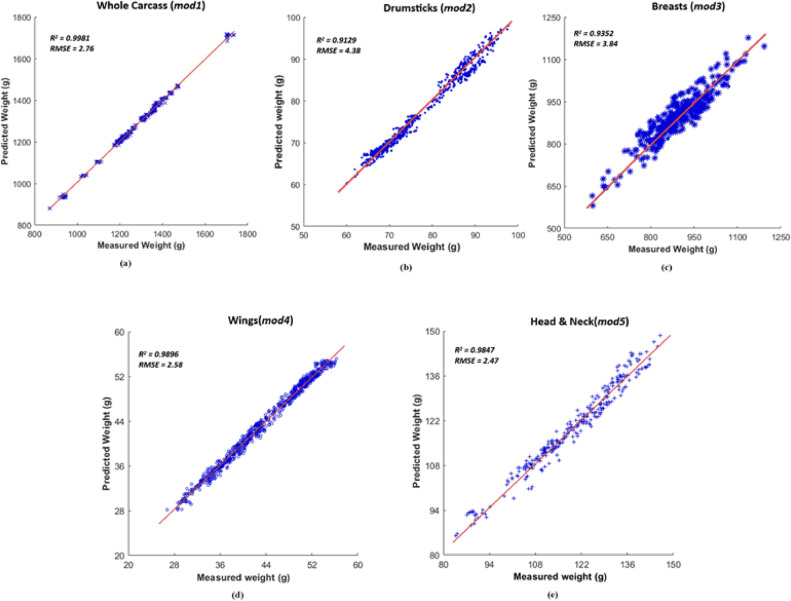

Among the carcass cut models, the highest R² was obtained for the wings and the lowest RMSE for the head and neck. For the head and neck, the Bayesian-ANN model gave the best results, with an R² of 0.9847 at an RMSE of 2.47 g. For the wings, breasts, and drumsticks, the RBF-SVR performed better than all other models, with an R² of 0.9896 at an RMSE of 2.58 g, 0.9352 at 3.84 g, and 0.9129 at 4.38 g, respectively. Linear regression plots comparing the measured and estimated weights for each weight prediction model (best-performing models) are presented in Figure 8. For the whole carcass, the average relative error was 2.198%, with a maximum of 3.796% and a minimum of 0.097%, as shown in Table 7. In addition, a statistical analysis was performed to identify differences between the measured and estimated whole-carcass weights: an independent t test showed no statistically significant difference, as shown in Table 8.

Figure 8.

Linear regression plots comparing the measured and estimated weights for (A) the whole carcass, (B) drumsticks, (C) breasts, (D) wings, and (E) head and neck.

Table 7.

Results of the carcass and cut weight estimation for the different model categories.

| Model category | R² | RMSE (g) | Relative error, minimum (%) | Relative error, maximum (%) | Relative error, average (%) | n |
|---|---|---|---|---|---|---|
| Whole carcass | 0.9981 | 2.76 | 0.097 | 3.796 | 2.198 | 450 |
| Drumsticks | 0.9129 | 4.38 | 0.260 | 6.458 | 5.186 | 900 |
| Breasts | 0.9352 | 3.84 | 0.817 | 7.692 | 6.871 | 450 |
| Wings | 0.9896 | 2.58 | 0.447 | 9.270 | 5.973 | 900 |
| Head and neck | 0.9847 | 2.47 | 0.514 | 7.361 | 3.537 | 450 |

Table 8.

Measured and estimated weights (g), expressed as mean ± standard deviation.

| Model category | Measured weight (g) | Estimated weight (g) |
|---|---|---|
| Whole carcass | 1,312.536 ± 152.796ᵃ | 1,319.963 ± 161.809ᵃ |
| Drumsticks | 80.184 ± 10.000ᵃ | 76.963 ± 10.642ᵃ |
| Breasts | 880.783 ± 117.896ᵃ | 876.274 ± 128.365ᵃ |
| Wings | 35.536 ± 4.984ᵃ | 29.157 ± 5.260ᵇ |
| Head and neck | 111.099 ± 14.209ᵃ | 108.062 ± 14.937ᵃ |

Means ± SD within a row with no superscript in common differ significantly.

Carcass Parts Weight Estimation Model Evaluation

On the testing dataset, the drumstick weight was estimated with an R² of 0.9129 and an RMSE of 4.38 g. The average relative error was 6.871%, with a minimum of 0.817% and a maximum of 7.692%, as presented in Table 7 and Figure 8. An independent t test showed no statistically significant difference between the measured and estimated drumstick weights, as shown in Table 8.

For the entire dataset, the breast weight was estimated with an R2 of 0.9352 and an RMSE of 3.84 g. The average relative error was 5.186%, with a minimum of 0.260% and a maximum of 6.458%, as presented in Table 7 and Figure 8. An independent t test showed no statistically significant difference between the measured and estimated breast weights, as shown in Table 8.

For the entire dataset, the wing weight was estimated with an R2 of 0.9896 and an RMSE of 2.58 g. The average relative error was 5.973%, with a minimum of 0.447% and a maximum of 9.270%, as presented in Table 7 and Figure 8. An independent t test showed a statistically significant difference between the measured and estimated wing weights, as shown in Table 8.

For the entire dataset, the head and neck weight was estimated with an R2 of 0.9847 and an RMSE of 2.47 g. The average relative error was 3.537%, with a minimum of 0.514% and a maximum of 7.361%, as presented in Table 7 and Figure 8. An independent t test showed no statistically significant difference between the measured and estimated head and neck weights, as shown in Table 8.

DISCUSSION

This study proposes a computer vision-based system for automatic estimation of carcass and carcass cut weights in a production line setting. The initial step was region of interest (ROI) extraction by background removal. A segmentation algorithm based on the ASM technique was then introduced to separate the carcass into parts. ASM has been applied in several image processing tasks, such as segmentation (Van Ginneken et al., 2002), classification (Søgaard, 2005), and facial recognition (Wan et al., 2005; Wang, 2012). This study applied ASM to fit a carcass to a previously developed shape model in order to detect the segmentation points. A similar technique was applied by Jørgensen et al. (2019), who used a statistical shape model (SSM) to capture the underlying physical characteristics of broiler carcasses for weight estimation using 3D prior knowledge.
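With a depth camera in a fixed position, one simple way to remove the background is to keep only the pixels whose depth falls in the band occupied by the hanging carcass and then crop to their bounding box. The sketch below assumes depth values in millimetres, a hypothetical near/far band, and that a reading of 0 marks a missing depth value; it illustrates the idea of depth-based ROI extraction and is not the authors' pipeline.

```python
def depth_roi_mask(depth, near_mm, far_mm):
    """Binary mask: 1 where near_mm <= depth <= far_mm, 0 elsewhere.

    A depth of 0 is treated as a missing reading (assumption) and excluded.
    """
    return [[1 if d and near_mm <= d <= far_mm else 0 for d in row] for row in depth]


def bounding_box(mask):
    """Return (row_min, row_max, col_min, col_max) of foreground pixels, or None."""
    rows = [i for i, row in enumerate(mask) if any(row)]
    if not rows:
        return None
    cols = [j for j in range(len(mask[0])) if any(row[j] for row in mask)]
    return rows[0], rows[-1], cols[0], cols[-1]


# Hypothetical 4x4 depth frame (mm): carcass at ~1.2 m, back wall at 2.5 m.
depth = [
    [0, 2500, 2500, 2500],
    [2500, 1200, 1180, 2500],
    [2500, 1210, 1190, 2500],
    [2500, 2500, 2500, 0],
]
mask = depth_roi_mask(depth, near_mm=1000, far_mm=1500)
box = bounding_box(mask)
```

Thresholding on depth rather than on color makes the foreground separation largely insensitive to the ambient light conditions that complicate RGB-based segmentation on a conveyor line.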

In this study, all carcasses were hung by the legs with the breast facing the camera, which reduced the degrees of freedom in ASM fitting and hence the processing time. Figure 4A presents the mean shape of the ASM (green), while Figure 4B presents the fitting process, in which a new 2D shape (yellow) is iteratively fitted to the model (green) to locate the cut segmentation points. Figure 5 presents the ASM-based segmentation results after the cutting points were fitted on different carcass shape samples. The carcass parts were successfully segmented with the developed ASM. However, the main limitation observed was that the model failed on some images, mainly due to broken wing bones; these images were therefore excluded from this study.
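ASM fitting in the sense of Cootes et al. (1995) alternates two operations: aligning the candidate landmark shape with the model frame by a similarity transform, and constraining the shape parameters so that the fitted shape stays plausible. The sketch below shows both building blocks in plain Python, assuming 2D landmarks given as (x, y) tuples and the commonly used limit of ±3√λᵢ per shape parameter. The study's landmark set, PCA shape model, and iteration loop are not reproduced here.

```python
def align_similarity(shape, target):
    """Least-squares similarity alignment (scale, rotation, translation) of shape onto target.

    Landmarks are (x, y) tuples. Treating them as complex numbers turns the
    2D similarity transform into a single complex multiply plus an offset.
    Returns the aligned landmarks and the recovered scale factor.
    """
    z = [complex(x, y) for x, y in shape]
    w = [complex(x, y) for x, y in target]
    n = len(z)
    z_mean, w_mean = sum(z) / n, sum(w) / n
    zc = [p - z_mean for p in z]        # centre both shapes
    wc = [q - w_mean for q in w]
    # a = s * exp(i * theta) minimising sum |a * zc - wc|^2
    a = sum(p.conjugate() * q for p, q in zip(zc, wc)) / sum(abs(p) ** 2 for p in zc)
    aligned = [a * p + w_mean for p in zc]
    return [(p.real, p.imag) for p in aligned], abs(a)


def clamp_shape_params(b, eigenvalues, k=3.0):
    """Limit each shape parameter b_i to [-k * sqrt(lambda_i), k * sqrt(lambda_i)]."""
    return [max(-k * lam ** 0.5, min(k * lam ** 0.5, bi)) for bi, lam in zip(b, eigenvalues)]


mean_shape = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
# Candidate: mean shape rotated 90 degrees, scaled by 2, shifted by (5, 5).
candidate = [(5.0 - 2.0 * y, 5.0 + 2.0 * x) for x, y in mean_shape]
aligned, scale = align_similarity(candidate, mean_shape)
```

Fixing the carcass pose (hung by the legs, breast toward the camera) keeps the rotation recovered by this alignment small, which is consistent with the reduced degrees of freedom noted above.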

Both 2D and 3D image features have been applied in chicken weight estimation systems, as already mentioned. Because the Kinect camera was fixed in a static position, it was not possible to generate a full 3D model of the carcass; hence, the acquired depth data were used only to improve the segmentation process (Okinda et al., 2020b). Therefore, carcass image features were described in this study by six simple shape descriptors. A comparison of the explored regression models in Table 6 shows that the Bayesian-ANN and RBF-SVR outperformed the other models. The Bayesian-ANN can handle outliers during model development (Mortensen et al., 2016), whereas the RBF-SVR has strong generalization capability and robustness on the testing dataset (Okinda et al., 2019). This study indicates that broiler carcass and cut weights can be estimated using 2D image features. The results for whole-carcass weight estimation concur with those of Jørgensen et al. (2019), who reported a mean absolute percentage error of 3.47% using 2D and 3D image features. Additionally, the results for breast weight estimation concur with those of Adamczak et al. (2018), who used a 3D scanning technique to determine breast weight with an estimation error of 7.6%. Furthermore, Scollan et al. (1998) applied nuclear magnetic resonance imaging to measure the mass of chicken pectoralis muscles in vivo. Using regression analysis, the authors reported fit statistics of 0.92 for the relationship between body weight and pectoralis muscle weight and 0.99 for the relationship between pectoralis muscle weight and muscle volume. Moreover, Raji et al. (2010) developed regression models for estimating broiler breast, thigh, and fat weight and yield from non-invasive body measurements, and established statistically significant correlations for breast, thigh, and fat weight.
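To make "simple shape descriptors" concrete, the sketch below computes a few standard 2D descriptors from a closed contour: area (shoelace formula), perimeter, bounding-box width and height, and circularity. These particular descriptors are illustrative assumptions and are not necessarily the six used in this study. Values are in pixel units; converting them to physical units would require camera calibration.

```python
import math


def contour_descriptors(contour):
    """Illustrative 2D shape descriptors of a closed polygon contour [(x, y), ...]."""
    n = len(contour)
    twice_area = 0.0
    perimeter = 0.0
    for i in range(n):
        x0, y0 = contour[i]
        x1, y1 = contour[(i + 1) % n]         # wrap around to close the polygon
        twice_area += x0 * y1 - x1 * y0       # shoelace term
        perimeter += math.hypot(x1 - x0, y1 - y0)
    area = abs(twice_area) / 2.0
    xs = [x for x, _ in contour]
    ys = [y for _, y in contour]
    return {
        "area": area,
        "perimeter": perimeter,
        "width": max(xs) - min(xs),
        "height": max(ys) - min(ys),
        # 1.0 for a circle, smaller for elongated or irregular outlines
        "circularity": 4.0 * math.pi * area / perimeter ** 2,
    }


# Hypothetical 4 x 2 pixel rectangle standing in for a segmented cut outline.
features = contour_descriptors([(0, 0), (4, 0), (4, 2), (0, 2)])
```

A feature vector of this kind, computed per cut after ASM segmentation, is the sort of input the regression models (Bayesian-ANN, RBF-SVR) map to a weight estimate.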

In any machine vision-based weight estimation system, performance accuracy determines the effectiveness, efficiency, and robustness of the underlying technology. Figure 6 shows that the performance of the introduced system is independent of carcass weight and size. However, the relative errors for the wings were the highest, which can be attributed to the wing position (closed or open, due to storage) during image acquisition. This is also reflected in Table 8: there was a statistically significant difference between the measured and estimated wing weights, with the mean estimated weight 6.379 g lower than the mean measured weight. Nevertheless, with average relative errors ranging from 2.198 to 6.871%, the proposed system can accurately estimate the weight of chicken carcasses and carcass cuts.

The computational time of the proposed technique was observed to be invariant to carcass size (Figure 7). This robustness can be attributed to the ASM modeling phase, in which carcasses of different weights and sizes were used for ASM development. In an industrial production line, chicken carcasses move at a rate of 5,000 birds per hour, that is, 3,600 s / 5,000 = 0.72 s of available processing time per carcass. This study reported average processing times ranging from 0.924 to 1.656 s per image frame for whole-carcass and carcass cut weight estimation, at a capture time of 0.2 s per frame. Although these times exceed the 0.72 s budget, the system could be applied in an industrial production system if its computational speed is improved, for example by installing superior computing resources.
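The line-speed argument reduces to simple arithmetic. The sketch below derives the per-carcass time budget from the line rate, compares the reported average processing times against it, and computes the highest line rate each processing time could sustain. It assumes the reported per-frame times are the full per-carcass cost, with no overlap (pipelining) between image capture and processing.

```python
SECONDS_PER_HOUR = 3600.0


def per_item_budget_s(items_per_hour):
    """Time available per item at a given line rate."""
    return SECONDS_PER_HOUR / items_per_hour


def max_items_per_hour(seconds_per_item):
    """Highest line rate a given per-item processing time can sustain."""
    return SECONDS_PER_HOUR / seconds_per_item


budget = per_item_budget_s(5000)                 # 0.72 s per carcass at 5,000 birds/h
reported = {"fastest": 0.924, "slowest": 1.656}  # average processing times reported above (s)
for label, t in reported.items():
    print(f"{label}: {t:.3f} s, {t / budget:.2f}x budget, "
          f"sustains ~{max_items_per_hour(t):.0f} birds/h")
```

Under this assumption, meeting 5,000 birds per hour would require roughly a 1.3 to 2.3 times speed-up, or processing several carcasses in parallel.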

CONCLUSIONS

As outlined in the introduction, the primary aim of this research was to establish a weight prediction system for broiler carcasses and carcass cuts based on machine vision and machine learning algorithms. We conclude that the Bayesian-ANN and RBF-SVR can be used to model and predict carcass and cut weights. The proposed system can be applied for carcass weight prediction in a poultry production line environment. Several limitations of this study must be acknowledged. The system was based solely on 2D image features and was applied only to broiler carcasses; therefore, the approach needs to be verified and evaluated on other types of chicken carcasses. In addition, more on-line validation data are needed for the system.

Notwithstanding these shortcomings, the proposed system accomplished this study's objective of measuring the weight of broiler carcasses and carcass cuts. Developing accurate, reliable, and efficient sorting and grading systems incorporated into in-line processing systems to minimize production time, expenses, and labor is of great importance. The proposed system is applicable at any location along the production line. Moreover, it can be integrated with other functions of chicken meat grading and sorting systems, such as quality inspection, identification and classification, and contamination, safety, and disease detection. In addition, the system can be implemented non-intrusively on the production line using 2D computer vision systems. The results presented are significant and could help researchers apply machine learning techniques for accurate carcass weight estimation in other livestock.

ACKNOWLEDGMENTS

This project was supported by the National Science and Technology Support Program (No: 2015BAD19806), provided by the College of Engineering, Nanjing Agricultural University, China.

DISCLOSURES

The authors declare that they have no known competing financial interests or personal relationships that could have influenced the work reported in this paper.

REFERENCES

- Adamczak L., Chmiel M., Florowski T., Pietrzak D., Witkowski M., Barczak T. The use of 3D scanning to determine the weight of the chicken breast. Comput. Electron. Agric. 2018;155:394–399.

- Burges C.J.C. A tutorial on support vector machines for pattern recognition. Data Min. Knowl. Discov. 1998;2:121–167.

- Cootes T.F., Taylor C.J., Cooper D.H., Graham J. Active shape models-their training and application. Comput. Vis. Image Underst. 1995;61:38–59.

- De Wet L., Vranken E., Chedad A., Aerts J.M., Ceunen J., Berckmans D. Computer-assisted image analysis to quantify daily growth rates of broiler chickens. Br. Poult. Sci. 2003;44:524–532. doi: 10.1080/00071660310001616192.

- Hidayat C., Iskandar S. Weight estimation of empty carcass and carcass cuts weight of female SenSi-1 Agrinak chicken. JITV. 2018:24–29.

- Jana A. Packt Pub; Mumbai, India: 2012. Kinect for Windows SDK Programming Guide.

- Jørgensen A., Dueholm J.V., Fagertun J., Moeslund T.B. Weight estimation of broilers in images using 3D prior knowledge. Proc. Scandinavian Conference on Image Analysis; Norrköping, Sweden: 2019.

- Li Q., Wang M., Gu W. Computer vision based system for apple surface defect detection. Comput. Electron. Agric. 2002;36:215–223.

- Morel-Forster A., Gerig T., Lüthi M., Vetter T. Springer; Basel, Switzerland: 2018. Probabilistic Fitting of Active Shape Models.

- Mortensen A.K., Lisouski P., Ahrendt P. Weight prediction of broiler chickens using 3D computer vision. Comput. Electron. Agric. 2016;123:319–326.

- Nyalala I., Okinda C., Chao Q., Mecha P., Korohou T., Yi Z., Nyalala S., Jiayu Z., Chao L., Kunjie C. Weight and volume estimation of single and occluded tomatoes using machine vision. Int. J. Food Prop. 2021;24:818–832.

- Nyalala I., Okinda C., Kunjie C., Korohou T., Nyalala L., Chao Q. Weight and volume estimation of poultry and products based on computer vision systems: a review. Poult. Sci. 2021;100. doi: 10.1016/j.psj.2021.101072.

- Nyalala I., Okinda C., Nyalala L., Makange N., Chao Q., Chao L., Yousaf K., Chen K. Tomato volume and mass estimation using computer vision and machine learning algorithms: cherry tomato model. J. Food Eng. 2019;263:288–298.

- Okinda C., Liu L., Zhang G., Shen M. Swine live weight estimation by adaptive neuro-fuzzy inference system. Indian J. Anim. Res. 2018;52:923–928.

- Okinda C., Lu M., Liu L., Nyalala I., Muneri C., Wang J., Zhang H., Shen M. A machine vision system for early detection and prediction of sick birds: a broiler chicken model. Biosyst. Eng. 2019;188:229–242.

- Okinda C., Lu M., Nyalala I., Li J., Shen M. Asphyxia occurrence detection in sows during the farrowing phase by inter-birth interval evaluation. Comput. Electron. Agric. 2018;152:221–232.

- Okinda C., Nyalala I., Korohou T., Okinda C., Wang J., Achieng T., Wamalwa P., Mang T., Shen M. A review on computer vision systems in monitoring of poultry: a welfare perspective. Artif. Intell. Agric. 2020:184–208.

- Okinda C., Sun Y., Nyalala I., Korohou T., Opiyo S., Wang J., Shen M. Egg volume estimation based on image processing and computer vision. J. Food Eng. 2020;283.

- Oviedo-Rondon E.O., Parker J., Clemente-Hernandez S. Application of real-time ultrasound technology to estimate in vivo breast muscle weight of broiler chickens. Br. Poult. Sci. 2007;48:154–161. doi: 10.1080/00071660701247822.

- Raji A.O., Igwebuike J.U., Kwari I.D. Regression models for estimating breast, thigh and fat weight and yield of broilers from non invasive body measurements. Agric. Biol. J. N. Am. 2010;1:469–475.

- Ringnér M. What is principal component analysis? Nat. Biotechnol. 2008;26:303. doi: 10.1038/nbt0308-303.

- Rochon J., Gondan M., Kieser M. To test or not to test: preliminary assessment of normality when comparing two independent samples. BMC Med. Res. Methodol. 2012;12:81. doi: 10.1186/1471-2288-12-81.

- Schölkopf B., Burges C.J.C., Smola A.J. MIT Press; Cambridge, MA: 1999. Advances in Kernel Methods: Support Vector Learning.

- Scollan N.D., Caston L.J., Liu Z., Zubair A.K., Leeson S., McBride B.W. Nuclear magnetic resonance imaging as a tool to estimate the mass of the pectoralis muscle of chickens in vivo. Br. Poult. Sci. 1998;39:221–224. doi: 10.1080/00071669889150.

- Silva S.R., Pinheiro V.M., Guedes C.M., Mourao J.L. Prediction of carcase and breast weights and yields in broiler chickens using breast volume determined in vivo by real-time ultrasonic measurement. Br. Poult. Sci. 2006;47:694–699. doi: 10.1080/00071660601038776.

- Søgaard H.T. Weed classification by active shape models. Biosyst. Eng. 2005;91:271–281.

- Statista. Projected poultry meat consumption worldwide from 2015-2027. 2019. Accessed July 2021. https://www.statista.com/statistics/739951/poultry-meat-consumption-worldwide/.

- Teimouri N., Omid M., Mollazade K., Mousazadeh H., Alimardani R., Karstoft H. On-line separation and sorting of chicken portions using a robust vision-based intelligent modelling approach. Biosyst. Eng. 2018;167:8–20.

- Tu J.V. Advantages and disadvantages of using artificial neural networks versus logistic regression for predicting medical outcomes. J. Clin. Epidemiol. 1996;49:1225–1231. doi: 10.1016/s0895-4356(96)00002-9.

- Tyasi T.L., Qin N., Niu X., Sun X., Chen X., Zhu H., Zhang F., Xu R. Prediction of carcass weight from body measurement traits of Chinese indigenous Dagu male chickens using path coefficient analysis. Indian J. Anim. Sci. 2018;88:744–748.

- Van Ginneken B., Frangi A.F., Staal J.J., ter Haar Romeny B.M., Viergever M.A. Active shape model segmentation with optimal features. IEEE Trans. Med. Imaging. 2002;21:924–933. doi: 10.1109/TMI.2002.803121.

- Vapnik V., Golowich S.E., Smola A.J. Support vector method for function approximation, regression estimation and signal processing. Advances in Neural Information Processing Systems; Denver, CO: 1997. pp. 281–287.

- Wan K.-W., Lam K.-M., Ng K.-C. An accurate active shape model for facial feature extraction. Pattern Recognit. Lett. 2005;26:2409–2423.

- Wang Q. Kernel principal component analysis and its applications in face recognition and active shape models. arXiv preprint arXiv:1207.3538. 2012:1–9.

- Yakubu A., Idahor K.O. Using factor scores in multiple linear regression model for predicting the carcass weight of broiler chickens using body measurements. Rev. Cient. UDO Agr. 2009;9:963–967.