Abstract

Background:

Skin cancer remains the most commonly diagnosed cancer in the US, with more than 1 million new cases each year. Melanomas account for about 1% of all skin cancers but most skin cancer deaths. Multiethnic individuals whose skin is pigmented underestimate their risk for skin cancers and melanomas and may delay seeking a diagnosis. The use of artificial intelligence (AI) may help improve the diagnostic precision of dermatologists and other physicians in identifying malignant lesions.

Methods:

To validate our AI’s efficiency in distinguishing between images, we utilized 50 images obtained from our ISIC dataset (n=25) and pathologically confirmed lesions (n=25). We compared the ability of our AI to visually diagnose these 50 skin cancer lesions with a panel of three dermatologists.

Results:

The AI model better differentiated between melanoma vs non-melanoma, with an AUC of 0.948. Two of the three panel dermatologists correctly diagnosed 35 of the 50 images each, similar to the AI program (n=34). Fleiss’ Kappa (κ) for the raters and AI indicated fair agreement (κ=0.247). However, when the dermatologist panel and the AI assessments were combined, every image in the test data set was correctly identified by at least one reader.

Conclusions:

Our AI platform was able to use de-identified visual images to discriminate melanoma from non-melanoma. The combined results of the AI and the dermatologists support the use of AI as an efficient lesion assessment strategy that may reduce the time and expense of diagnosis and thereby reduce delays in treatment.

Keywords: melanoma, public health, skin cancer, ethnic skin, artificial intelligence

Introduction

Background

Skin cancer remains the most commonly diagnosed cancer in the United States (US), with more than 1 million new cases diagnosed each year [1,2]. The majority of skin cancers are basal and squamous cell cancers; however, melanomas are a more serious type of skin cancer that occurs in the skin cells that provide pigment [3]. Although this type accounts for only about 1% of all skin cancers, the vast majority of skin cancer deaths are attributed to melanomas [4]. According to the American Cancer Society, an estimated 96,480 new cases of melanoma were diagnosed in the US in 2019, along with an estimated 7,230 deaths [1,3]. Early detection, monitoring, and screening are often associated with limiting the risk of death from skin cancer. Early diagnosis is crucial because melanoma has nearly a 95% cure rate if diagnosed and treated in the early stages, and early detection can reduce costs, morbidity, and mortality [5].

Regardless of skin complexion, everyone is at risk for skin cancer, contrary to the belief that light skin is more susceptible [6]. Dark skin may not burn as quickly as fairer skin from sun exposure; however, ultraviolet radiation (UVR) from the sun and other sources can cause damage, making anyone susceptible [6]. Multiethnic individuals whose skin normally tans or is pigmented often underestimate their risk for cancer, and in doing so may delay a skin cancer diagnosis [7,8]. Although ethnic groups with darker skin have lower incidence rates, ethnicity is a poor proxy for risk because melanomas are more prevalent and frequently fatal in ethnic minority groups [7]. Hawai‘i’s multiethnic, multi-complexion populations experience year-round UVR exposure and therefore are at higher risk for melanoma. In Hawai‘i, about 400 new cases of melanoma are diagnosed each year, with 30 people dying from the disease. Recent reports on trends for melanoma of the skin in Hawai‘i indicate a 1% per year increase in incidence rates between 2013 and 2017 [9,10]. Skin cancer prevention strategies used in Hawai‘i provide a unique opportunity to identify the best ways to reduce the burden of this disease.

More than 90% of melanomas can be recognized with the naked eye and can be visually diagnosed by dermatologists [11]. However, melanoma remains a troublesome diagnosis for these experts, as there is no gold standard biomarker, meaning the current diagnostic criteria for skin lesions remains subjective and imprecise [12]. Improving the accuracy of melanoma detection is important and crucial for saving lives. Additionally, visually identifying lesions for early detection by primary care providers or through self-examination has not been shown to reduce mortality from the disease. Variability in the subjective assessment of melanocytic lesions undermines the potential utility of this screening method to reduce death [11,12]. Each year in the U.S., poor diagnostic precision adds 673 million dollars more to the nearly 932 million dollars spent in therapeutic costs for melanomas [12].

To aid diagnosis, dermoscopy allows visualization of features that cannot be seen with the naked eye [12]. Dermoscopy magnifies and illuminates the skin image, which allows for improved melanoma diagnoses [13]. Trained dermatologists utilizing dermoscopic tools and methods may improve diagnostic sensitivity by 10-30 percent [12]. Nevertheless, recognition of melanoma from dermoscopic images remains a challenging task even with enhanced tools and techniques [12,13]. The use of artificial intelligence (AI) to help discriminate high-risk lesion types from images may offer time and monetary savings for dermatologists, who are typically overloaded with diagnosing melanomas and non-melanomas. Additionally, the use of AI may support strategies for primary care providers to accurately triage suspicious lesions for follow-up or treatment by dermatologists [12].

Employing artificial intelligence holds promise for more standardized levels of diagnostic accuracy, with the added benefit of better utilization of dermatologists’ time and financial resources [14]. Many computer vision (CV) algorithms perform well in classifying skin lesions, although they are not yet used in clinical practice. The use of AI also provides more access to reliable diagnostic assessment for people regardless of proximity to healthcare facilities and dermatologists [14]. Deep Learning Computer Vision (DLCV) techniques can be added to existing tele-dermatology platforms, including the use of cell phone-based images with or without a dermoscopic attachment (cost ~$15), to provide a patient-centered, public health approach to the early detection of melanomas [15,16]. DLCV can be included with patients’ skin self-examination strategies, where pictures of suspicious lesions can be sent and triaged by DLCV for subsequent follow-up by a dermatologist [15,16].

Integrating human experts with an AI or DLCV approach to skin cancer diagnostics is essential to reduce the margin of potential error and improve patient outcomes, while simultaneously providing a way for individuals to access diagnostic capabilities independent of the location of a dermatologist [17]. Utilizing AI to better screen and diagnose melanomas does not replace dermatologists, but improves the management of suspected cases. Our study presents the creation and use of an AI to distinguish between benign and melanoma images. The AI’s efficiency was then validated against a panel of dermatologists to determine whether the AI could make accurate diagnoses when presented with de-identified images that were also assessed by three local dermatologists. For this study, titled the Systematic Melanoma Assessment and Risk Triaging (SMART) Study, the University of Hawai‘i Cancer Center collaborated with Hawai‘i Pathologists’ Laboratory and the Hawai‘i Dermatological Society to establish a DLCV method to triage lesions appropriate for biopsy, while providing a platform for increased vigilance of benign lesions. Our first specific aim was to identify and label a set of de-identified images of pigmented skin lesions, clinically diagnosed as melanoma or non-melanoma, to train the UH Cancer Center’s AI platform to visually diagnose melanomas. Our second aim was to compare the AI platform’s ability to distinguish melanoma from non-melanoma skin lesions with the ability of dermatologists to classify these lesions. Our hypotheses were 1) that a specific image format will be superior for use by the AI platform in discriminating melanomas from non-melanoma lesions and 2) that the AI platform will be as accurate and efficient as dermatologists in distinguishing lesions suitable for biopsy.
In this study, we addressed the hypotheses by obtaining de-identified images from local dermatologists and from an online publicly available dataset to test our AI in distinguishing lesions, and by testing our AI’s diagnostic capabilities against three dermatologists (readers) in distinguishing between 50 de-identified images of lesions.

Methods

To begin training our AI, we first obtained de-identified images, histology, and diagnoses from the medical records of dermatology patients through our partnership with the Hawai‘i Pathologists’ Laboratory. However, because of time constraints and the number of images needed to train our DLCV, we used publicly available image datasets to train the AI platform. Available datasets included the International Skin Imaging Collaboration (ISIC) Archive, MED-NODE, PH, DermNet, Asan, and Hallym datasets. For this study, we used the ISIC Archive because it contains nearly 24,000 high-resolution clinical and dermatoscopic images with metadata that include lesion type and biopsy confirmation (see Table 1) [18]. Therefore, the ISIC Archive was used for all training and validation datasets in this paper. Images were separated and stored in a secured Microsoft Access database according to diagnosis type to ensure proper classification. These images were then further de-identified by creating copies with the diagnosis removed, so that no classification type was attached to them. Images were then cropped with a CROPGUI program to eliminate background interference such as skin, hair, and other markings, which provided the best available images for our AI and enhanced the quality of the dataset [19]. Cropping focused each image on the lesion’s region of interest, and cropped images exhibited superior performance compared to uncropped images.

Table 1.

| Malignancy | Lesion Type | Count |
|---|---|---|
| Benign | Keratosis (actinic, lichenoid, seborrheic) | 447 |
| Benign | Angiofibroma or Fibrous Papule Angioma, Dermatofibroma, Scar, Basal Cell Carcinoma | 5 |
| Benign | Atypical Melanocytic Proliferation | 15 |
| Benign | Lentigo (NOS, Simplex, Solar) | 135 |
| Benign | Nevus | 12405 |
| Benign | Other | 10 |
| Benign | Unknown | 202 |
| Malignant | Basal Cell Carcinoma | 64 |
| Malignant | Melanoma | 1189 |
| Malignant | Seborrheic Keratosis | 1 |
| Malignant | Squamous Cell Carcinoma | 22 |
| Malignant | Unknown | 27 |

SMART Hawaii-Based Dermatologist Image Dataset Breakdown for Training Model

| Lesion Type | Count | Percent |
|---|---|---|
| Blue Nevi | 12 | 2.2 |
| Intradermal Melanocytic Nevus | 1 | 0.2 |
| Lentiginous Junctional Melanocytic Nevus | 309 | 57.3 |
| Malignant Melanoma | 32 | 5.9 |
| Melanoma | 6 | 1.1 |
| Mildly Atypical Melanocytic Nevus | 3 | 0.6 |
| Moderate Atypical Melanocytic Nevus | 3 | 0.6 |
| Pigmented Actinic Keratosis | 5 | 0.9 |
| Pigmented Basal Cell Carcinoma | 71 | 13.2 |
| Pigmented Seborrheic Keratosis | 96 | 17.8 |
| Severely Atypical Melanocytic Nevus | 1 | 0.2 |
| Sex | | |
| Female | 270 | 50.1 |
| Male | 269 | 49.9 |
| Age Groups (mean age 57.3) | | |
| 18-24 | 14 | 2.6 |
| 25-34 | 48 | 8.9 |
| 35-44 | 69 | 12.8 |
| 45-54 | 81 | 15.0 |
| 55-64 | 119 | 22.1 |
| 64-74 | 110 | 20.4 |
| 75+ | 92 | 17.1 |
| N=539 | | |

From Cummings & Reiter [18].

The International Skin Imaging Collaboration provides nearly 24,000 digital skin images to help reduce melanoma mortality; these images were used to train the AI.

Deep Learning Computer Vision Platform

Similar to the approach used by Esteva et al. (2017) and Haenssle et al. (2018), we implemented Google’s InceptionV3 network as our feature extractor (base model) [20,21]. This architecture was chosen as the framework of our convolutional neural network (CNN) because of its ability to capture spatial patterns across a range of receptive fields. We removed the standard 1000-class classifier and instead flattened the 8×8×2048 feature tensor to a vector of size 131,072 and fed it into a multilayer perceptron. This process produced probability estimates over the classes in each of the two classification cases considered: Benign vs Malignant and Melanoma vs Non-Melanoma.
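The flatten-then-classify step can be illustrated with a minimal, standard-library Python sketch. Only the 8×8×2048 feature shape and the two-class output come from the text; the ReLU activation, the hidden-layer width, the random weights, and the tiny 2×2×3 toy tensor are assumptions made purely for illustration and are not the study's actual configuration.

```python
import math
import random

FEATURE_SHAPE = (8, 8, 2048)  # feature tensor shape stated in the text


def flattened_size(shape):
    """Number of values obtained when a tensor of this shape is flattened."""
    size = 1
    for dim in shape:
        size *= dim
    return size


def flatten(tensor):
    """Flatten a nested list of shape (H, W, C) into one flat list."""
    return [value for row in tensor for pixel in row for value in pixel]


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def mlp_head(features, w_hidden, b_hidden, w_out, b_out):
    """Two-layer perceptron: assumed ReLU hidden layer, then a 2-class softmax."""
    hidden = [max(0.0, sum(w * x for w, x in zip(row, features)) + b)
              for row, b in zip(w_hidden, b_hidden)]
    logits = [sum(w * h for w, h in zip(row, hidden)) + b
              for row, b in zip(w_out, b_out)]
    return softmax(logits)


# Toy demonstration on a tiny 2x2x3 tensor (hypothetical sizes and weights).
rng = random.Random(0)
toy = [[[rng.random() for _ in range(3)] for _ in range(2)] for _ in range(2)]
x = flatten(toy)
hidden_units = 4  # hypothetical hidden-layer width
w_hidden = [[rng.uniform(-1, 1) for _ in x] for _ in range(hidden_units)]
b_hidden = [0.0] * hidden_units
w_out = [[rng.uniform(-1, 1) for _ in range(hidden_units)] for _ in range(2)]
b_out = [0.0, 0.0]
probs = mlp_head(x, w_hidden, b_hidden, w_out, b_out)
```

Calling `flattened_size(FEATURE_SHAPE)` reproduces the vector length of 131,072 quoted above, and `probs` holds one probability per class.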

Training Regime

The ADAM algorithm, a method for efficient stochastic optimization that requires only first-order gradients and little memory, was used as the gradient descent optimizer for training our model [22]. Two performance baselines were determined: one by training the standard Inception network from scratch and another by fine-tuning the network previously trained on the ImageNet dataset, a technique sometimes referred to as transfer learning [23]. Grid search was performed on these networks separately to determine the optimal hyper-parameter combination. For the ADAM optimizer, we decided on a learning rate of 10⁻³, β1 of 0.91, β2 of 0.999, and learning-rate decay of 10⁻³. Random image augmentation in the form of shear, zoom, rotation, horizontal flip, and vertical flip was applied to the input images in real time during training to increase the robustness of extracted features (i.e., to favor features that are invariant to scaling, rotation, and warping).
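To make the optimizer settings concrete, the following is a minimal sketch of the ADAM update rule using the hyper-parameters reported above. The time-based form of the learning-rate decay, the epsilon value, and the toy quadratic objective are assumptions for illustration; the text does not specify the decay schedule, and this is not the training code used in the study.

```python
import math


def adam_minimize(grad_fn, theta, steps, lr=1e-3, beta1=0.91, beta2=0.999,
                  decay=1e-3, eps=1e-8):
    """Run `steps` ADAM updates on the parameter list `theta`."""
    theta = list(theta)
    m = [0.0] * len(theta)  # first-moment (mean) estimates
    v = [0.0] * len(theta)  # second-moment (uncentered variance) estimates
    for t in range(1, steps + 1):
        # Assumed time-based decay: lr / (1 + decay * completed_iterations).
        lr_t = lr / (1.0 + decay * (t - 1))
        grads = grad_fn(theta)
        for i, g in enumerate(grads):
            m[i] = beta1 * m[i] + (1.0 - beta1) * g
            v[i] = beta2 * v[i] + (1.0 - beta2) * g * g
            m_hat = m[i] / (1.0 - beta1 ** t)  # bias-corrected first moment
            v_hat = v[i] / (1.0 - beta2 ** t)  # bias-corrected second moment
            theta[i] -= lr_t * m_hat / (math.sqrt(v_hat) + eps)
    return theta


def quadratic_grad(theta):
    """Gradient of the toy objective f(x) = (x - 3)^2, minimized at x = 3."""
    return [2.0 * (x - 3.0) for x in theta]


one_step = adam_minimize(quadratic_grad, [0.0], steps=1)
many_steps = adam_minimize(quadratic_grad, [0.0], steps=2000)
```

On the first update the bias-corrected ratio has magnitude close to 1, so the parameter moves by roughly the learning rate regardless of the gradient's scale, which is one reason ADAM is relatively insensitive to gradient magnitude.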

We encountered several challenges during the various stages of model development and training. In the early stages of model development, we used the raw uncropped images from the ISIC archive and achieved good training accuracy and loss metrics. However, when we proceeded to test it on a holdout validation dataset, the model performed worse than a fair coin flip, leading us to believe that our model exhibited signs of overfitting since our model’s loss and accuracy plots converged early in the training regime with >85% accuracy (see Fig. 1). Through investigation of the dataset, we discovered a correlation between certain image artifacts (mm ruler, skin markers, timestamps, objects, etc.) and a lesion type (melanoma, benign, keratosis, etc.). To overcome this problem, we augmented our dataset by cropping the image to isolate the lesion and its immediate surrounding tissue.

Figure 1.

ROC Melanoma vs Non-Melanoma 70% Trained, 20% Validate, 10% Test

Dermatologist Panel

To validate our AI’s efficiency in distinguishing between benign and malignant images, we used 50 images: 25 from our ISIC dataset (see Table 1) and 25 from a set of de-identified, pathologically confirmed lesions obtained from Hawai‘i Pathology Laboratories (see Table 1). The set consisted of 25 benign and 25 malignant skin images. Table 1 also provides the lesion type, sex, and age of the individual at the time the lesion image was taken for the images from Hawai‘i Pathology Laboratories, which were collected from different practices within Hawai‘i through a secured web-based patient interface. These additional images were included to validate the ability of the AI platform to prospectively distinguish lesions and to reduce the potential bias resulting from the use of lesions previously classified by the AI platform. The images used for this reader study were randomly selected from both the ISIC and the Hawai‘i Pathology Laboratories datasets using a random number generator and were de-identified so that neither the diagnosis nor the source dataset was attached to the images. These dermoscopic images were then modified to reduce background skin, other lesions, hair, and any other potential hindrances to image quality. Through our partnership with the Hawai‘i Pathologists’ Laboratory, three local dermatologists were identified and invited to visually diagnose the images using Google Forms. These participants were provided the 50 images in the same random order, with possible response categories of either benign or malignant. Our AI was also provided these same images in the same order to distinguish between benign and malignant. To assign the AI’s diagnosis, we took the class (benign or malignant) with the highest predicted probability, that is, the class whose probability exceeded 50%, and applied no other cutoffs. This ensured that the dermatologists’ diagnoses could be fairly compared with those of the AI.
This study served as a baseline reader study; our intention is to conduct a larger future study with a more representative and expansive sample drawn from the thousands of available images. The decision to use 50 images was made to respect the time of the panel of dermatologists, whose participation in the imaging diagnosis test was voluntary, while still providing a large enough sample for comparison with the AI platform.
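The decision rule described above (take the class with the highest probability, with no additional cutoff) can be sketched as follows. The function name and the tie-breaking choice are hypothetical; the example probabilities are the six per-image values reported in Table 2.

```python
def classify(p_benign, p_malignant):
    """Return the class with the higher predicted probability.

    With two classes whose probabilities sum to 1, this is equivalent to a
    0.5 threshold. Ties fall back to "benign" (an arbitrary assumption).
    """
    return "malignant" if p_malignant > p_benign else "benign"


# (benign, malignant) probabilities for Images 1-6 as listed in Table 2.
table2_probabilities = [
    (0.999, 0.001),
    (0.035, 0.965),
    (0.035, 0.965),
    (0.968, 0.032),
    (0.035, 0.965),
    (0.052, 0.948),
]
labels = [classify(b, m) for b, m in table2_probabilities]
```

Applied to these six images, the rule reproduces the predictions listed in the "Prediction" row of Table 2.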

Data Analysis

This study used the Statistical Package for the Social Sciences (SPSS) to calculate Fleiss’ Kappa (κ) scores measuring agreement among the three dermatologists and the AI in distinguishing between the images. Fleiss’ Kappa is a statistical measure of agreement between raters beyond that expected by chance; using this measure allowed us to evaluate inter-rater consensus [24]. In this case, the raters are the three local dermatologists and our AI model, and the ratings are whether each de-identified lesion image was diagnosed correctly or incorrectly.
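For readers who want to see the statistic itself, the following is a minimal sketch of the standard Fleiss' Kappa formula in plain Python. The study computed κ in SPSS; this function and the toy ratings below are illustrative assumptions, not the study's data or code.

```python
def fleiss_kappa(counts):
    """Fleiss' Kappa for a table of rating counts.

    counts[i][j] is the number of raters who assigned item i to category j.
    Every item must be rated by the same number of raters.
    """
    n_items = len(counts)
    n_raters = sum(counts[0])
    if any(sum(row) != n_raters for row in counts):
        raise ValueError("each item must have the same number of ratings")
    n_categories = len(counts[0])

    # Proportion of all ratings that fall in each category.
    p_j = [sum(row[j] for row in counts) / (n_items * n_raters)
           for j in range(n_categories)]
    # Observed agreement for each item (share of agreeing rater pairs).
    p_i = [(sum(c * c for c in row) - n_raters) / (n_raters * (n_raters - 1))
           for row in counts]

    p_bar = sum(p_i) / n_items          # mean observed agreement
    p_e = sum(p * p for p in p_j)       # agreement expected by chance
    if p_e == 1.0:
        raise ValueError("kappa is undefined when all ratings share one category")
    return (p_bar - p_e) / (1.0 - p_e)


# Hypothetical toy data: 4 raters, 5 images, columns = [correct, incorrect].
toy_counts = [[4, 0], [3, 1], [2, 2], [4, 0], [1, 3]]
toy_kappa = fleiss_kappa(toy_counts)
```

A value of 1 indicates perfect agreement, 0 indicates agreement no better than chance, and negative values indicate agreement worse than chance.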

Results

Model Results

We found that dermatoscopic images offered better discriminative performance than clinical non-dermatoscopic images in the training data set (see Fig. 2). We trained and evaluated our model on two fundamentally different classification tasks: Benign vs Malignant and Melanoma vs Non-Melanoma. With our two-layer multilayer perceptron classifier, we obtained an area under the curve (AUC) of 0.948 for each classification task on a test set consisting of valid skin lesion images. Initially, we focused on the AI classification of benign vs malignant skin lesions; however, after reviewing the test dataset, our industry expert noted that the model was in fact differentiating between melanoma and non-melanoma skin lesions. Therefore, for the purpose of this study, our AI algorithm classified images from the ISIC dataset as melanoma or non-melanoma. Images cropped of excess hair, other lesions, and other distractions provided the best input for our AI model; however, the ISIC images were found to lack diversity in skin complexion, particularly the darker skin types (Fitzpatrick levels 4-6).
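As a reminder of what the AUC measures, the sketch below computes it as the probability that a randomly chosen positive case (e.g., melanoma) receives a higher score than a randomly chosen negative case, counting ties as one half. The function name and the four-sample example are illustrative assumptions, not study data.

```python
def roc_auc(labels, scores):
    """Area under the ROC curve via pairwise comparison of scores.

    labels: 1 for the positive class, 0 for the negative class.
    scores: predicted probability (or any ranking score) of the positive class.
    """
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("both classes must be present to compute AUC")

    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0   # positive ranked above negative
            elif p == n:
                wins += 0.5   # ties count as half
    return wins / (len(positives) * len(negatives))


# Hypothetical scores for two negative and two positive images.
example_auc = roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8])  # 3 of 4 pairs ranked correctly
```

An AUC of 0.5 corresponds to random ranking and 1.0 to perfect separation, so the reported 0.948 means the model ranked a positive image above a negative one in roughly 95% of such pairs.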

Figure 2.

Benign vs Malignant Dermatologist-AI Comparative Screening

Panel Results

Findings from the dermatologist and AI comparative image testing indicated similar results between the readers (see Table 2). Two dermatologists had the same number of correct diagnoses (35), which is similar to the 34 images the AI successfully diagnosed; the third dermatologist correctly diagnosed 27 images. Out of the 50 images, none was incorrectly diagnosed by all three dermatologists and the AI, meaning that every image was correctly diagnosed by at least one reader. Table 2 presents the dermatologists’ results in comparison to the AI model. All three dermatologists incorrectly diagnosed the same five images, which the AI correctly diagnosed (see Fig. 3), while the AI incorrectly diagnosed five images that all three dermatologists successfully diagnosed (see Fig. 4 and Table 2). Figure 2 provides the receiver operating characteristic (ROC) curve for the readers and our AI model for benign and malignant classifications. Each reader is plotted as a participant on the ROC, along with the AI potential curve, which represents the optimal performance possible on the 50 screening images with further tuning of the threshold (see Fig. 2). Findings from this reader study indicate similar diagnoses from our panel of dermatologists and our AI model in distinguishing de-identified images as either melanoma or non-melanoma.
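The combined-reader result can be checked directly from the concordance counts in Table 2. The short sketch below tallies those rows; the variable names are hypothetical, and the counts are copied from the table.

```python
# Rows from the Table 2 concordance section:
# (AI correct?, number of dermatologists correct, number of images)
CONCORDANCE = [
    (False, 0, 0),
    (False, 1, 3),
    (False, 2, 8),
    (False, 3, 5),
    (True, 0, 5),
    (True, 1, 4),
    (True, 2, 10),
    (True, 3, 15),
]

total_images = sum(n for _, _, n in CONCORDANCE)
ai_correct = sum(n for ai_ok, _, n in CONCORDANCE if ai_ok)
# Images that neither the AI nor any dermatologist diagnosed correctly.
missed_by_all = sum(n for ai_ok, derms_ok, n in CONCORDANCE
                    if not ai_ok and derms_ok == 0)
combined_coverage = (total_images - missed_by_all) / total_images
```

The rows sum to the 50 study images, the AI-correct rows sum to 34, and because no image was missed by every reader, the combined coverage is 100%.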

Table 2.

Dermatologist and AI Visual Diagnosis Test Panel Results and Classification Predictionsᵃ

Total Images N=50

| READER | # IMAGES RIGHT N | PERCENT CORRECT |
|---|---|---|
| DERM 1 | 35 | 70% |
| DERM 2 | 35 | 70% |
| DERM 3 | 27 | 54% |
| AI | 34 | 68% |

AI and Dermatologists’ Visual Diagnosis Prediction Concordance

| AI PREDICTION | DERMATOLOGISTS PREDICTIONᵇ | # OF IMAGES Nᶜ | PERCENT |
|---|---|---|---|
| Incorrect | 3 Derms Incorrect | 0 | 0% |
| Incorrect | 1 Derm Correct | 3 | 6% |
| Incorrect | 2 Derms Correct | 8 | 16% |
| Incorrect | 3 Derms Correct | 5 | 10% |
| Correct | 3 Derms Incorrect | 5 | 10% |
| Correct | 1 Derm Correct | 4 | 8% |
| Correct | 2 Derms Correct | 10 | 20% |
| Correct | 3 Derms Correct | 15 | 30% |

Fleiss Kappa Results

| | FLEISS KAPPA SCORE | N | CONFIDENCE INTERVAL |
|---|---|---|---|
| RATERS AND AI | 0.247 | 5 | 95% CI: 0.108-0.387 |
| RATERS ONLY | 0.327 | 3 | 95% CI: 0.143-0.512 |
| AI ONLY | 0.622 | 2 | 95% CI: 0.399-0.845 |

AI Classification Predictions by Imageᵈ

| | Image 1 | Image 2 | Image 3 | Image 4 | Image 5 | Image 6 |
|---|---|---|---|---|---|---|
| Prediction | Benign | Malignant | Malignant | Benign | Malignant | Malignant |
| Benign | 99.9% | 3.5% | 3.5% | 96.8% | 3.5% | 5.2% |
| Malignant | 0.1% | 96.5% | 96.5% | 3.2% | 96.5% | 94.8% |

ᵃ Individual and combined results from the three dermatologists and AI image test.

ᵇ Corresponds to the number of dermatologists who made the corresponding prediction of correct or incorrect, out of a possible three dermatologists.

ᶜ Indicates the number of images out of a possible 50 corresponding to each row.

ᵈ Indicates the probabilities of predictions made by the AI.

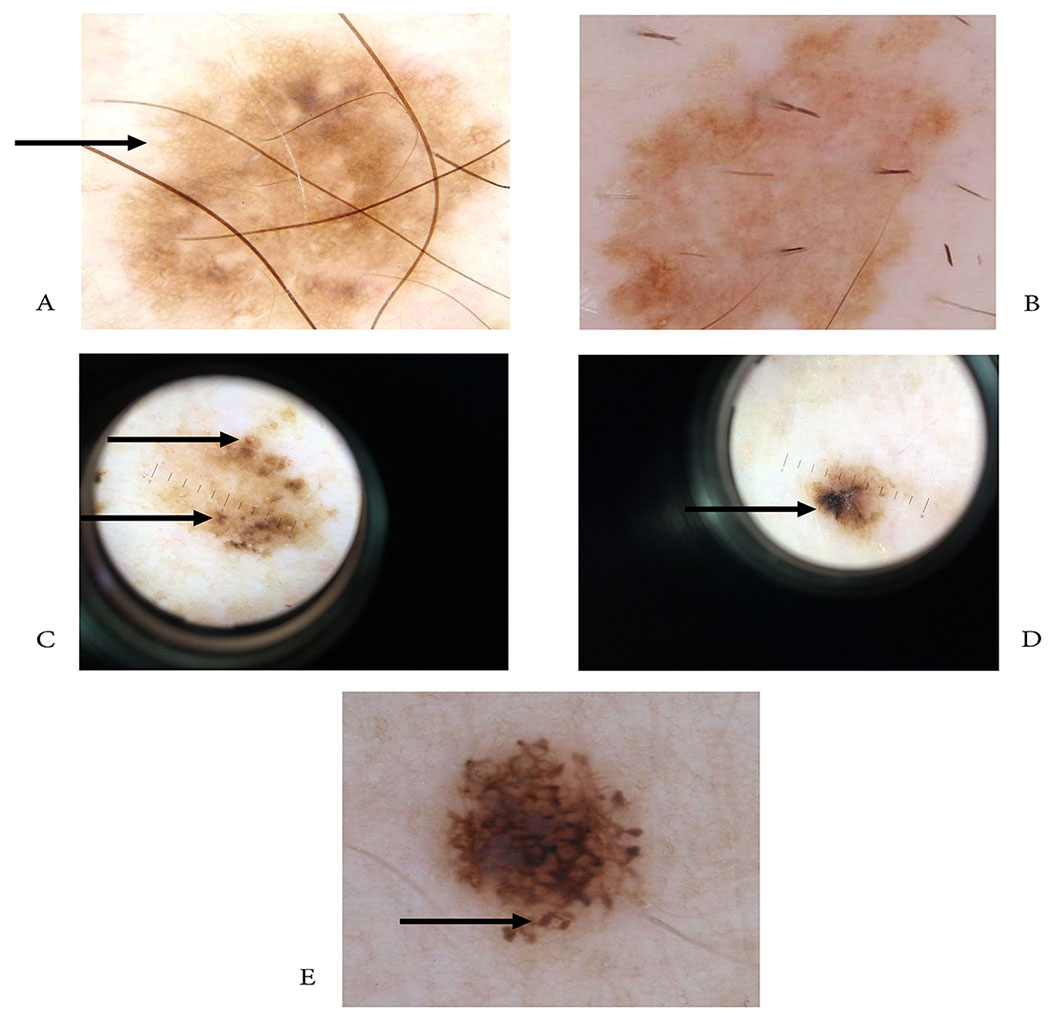

Figure 3.

Dermoscopic images that the AI correctly diagnosed and the dermatologists incorrectly diagnosed. (A,B) Two skin lesions with regular pigmented network and honeycomb pattern; (C,D) skin lesions with irregular asymmetric blotches; (E) lesion showing peripheral rim of globules.

Figure 4.

Dermoscopic images that the dermatologists correctly diagnosed and the AI incorrectly diagnosed. (A,B) Asymmetric multicomponent pattern with irregular globules and blue-white veil; (C) atypical network; (D) milia-like cysts; (E) diffuse reticular pattern.

We evaluated the performance of each dermatologist by their sensitivity and specificity in the binary classification of an image as benign vs malignant. Our previously trained DL model was tested on the same batch of 50 images, and its performance is summarized in the ROC curve in Figure 2. The performance of each dermatologist, given by their sensitivity against their false positive rate (1 - specificity), is plotted as a point within Figure 2.
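The sketch below shows how a single reader's binary answers translate into sensitivity, specificity, and a point on the ROC plane. The function name and the seven toy diagnoses are hypothetical and do not represent any reader's actual responses in this study.

```python
def sensitivity_specificity(truth, predicted, positive="malignant"):
    """Return (sensitivity, specificity) for one reader's binary diagnoses."""
    tp = fn = tn = fp = 0
    for actual, guess in zip(truth, predicted):
        if actual == positive:
            if guess == positive:
                tp += 1   # malignant lesion called malignant
            else:
                fn += 1   # malignant lesion missed
        else:
            if guess == positive:
                fp += 1   # benign lesion called malignant
            else:
                tn += 1   # benign lesion called benign
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    return sensitivity, specificity


# Hypothetical ground truth and reader answers for seven images.
truth = ["malignant", "malignant", "malignant",
         "benign", "benign", "benign", "benign"]
reader = ["malignant", "malignant", "benign",
          "benign", "benign", "benign", "malignant"]

sens, spec = sensitivity_specificity(truth, reader)
roc_point = (1.0 - spec, sens)  # (false positive rate, true positive rate)
```

Because a human reader gives one fixed answer per image rather than a tunable probability, each reader contributes a single point to the ROC plot, whereas a model with probability outputs traces a full curve as the threshold varies.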

Agreement between Raters Results

Table 2 also presents the Fleiss’ Kappa (κ) scores from the dermatologist panel and our AI model. The κ score for the independent raters and AI indicated fair agreement among raters (κ=0.247; 95% CI: 0.108-0.387). This score indicates fair agreement over and above the agreement expected between raters by chance; however, there is no rule of thumb for assessing κ values, only agreed-upon classifications of the strength of agreement, under which this value represents fair agreement between readers [24].
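One widely used set of agreed-upon classifications is the Landis and Koch scale. Assuming that scale is the one intended here (the text does not name it explicitly), a κ value can be mapped to a verbal label as in this small sketch; the function name is hypothetical.

```python
def agreement_label(kappa):
    """Map a kappa value to a Landis and Koch style strength-of-agreement label."""
    if kappa < 0.0:
        return "poor"
    if kappa <= 0.20:
        return "slight"
    if kappa <= 0.40:
        return "fair"
    if kappa <= 0.60:
        return "moderate"
    if kappa <= 0.80:
        return "substantial"
    return "almost perfect"


# Kappa values reported in Table 2.
raters_and_ai = agreement_label(0.247)
raters_only = agreement_label(0.327)
```

Under this assumed scale, both the raters-and-AI value (0.247) and the raters-only value (0.327) fall in the "fair" band, consistent with the interpretation given above.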

Discussion

In this study, we trained our AI platform to use visual images to discriminate melanomas from non-melanomas, used the trained algorithm to distinguish between de-identified melanoma and non-melanoma images, and compared its predictions with those of three dermatologists. This training required a tremendous amount of visual data, including over 20,000 pre-classified images. Despite this large amount of publicly available data, we encountered challenges related to image quality and artifacts that hindered the ability of the AI platform to classify lesions. This factor has relevance when considering scalable public health-based approaches to image capture and classification using non-standardized techniques, including smartphone cameras and software applications [15,16]. As reported in a recent review by Freeman et al. (2020), these approaches do not provide the quality of evaluable images needed to support the accuracy of the AI algorithms used in existing application software [25]. Our study identified that cropped and scaled dermatoscopic images were far superior for use by the AI platform to accurately classify images for diagnoses. All images from both the ISIC and the Hawai‘i Pathology Laboratories datasets were cropped to reduce background and to isolate the lesion and its immediate surrounding tissue. This aided the accuracy of our AI model; however, it also served as a limitation of this study, because cropping these images was subjective and subject to human bias. We aim to curtail this limitation in future studies by using digital dermatoscopes to aid prospective image capture by local dermatologists. These digital dermatoscopes give dermatologists improved observation of skin lesions during patient examinations and are equipped with an integrated camera to capture high-quality images.
A future study will supply a small number of dermatologists with these devices to capture lesion images to train our AI model and reduce the need for image cropping, because these devices reduce background noise and provide consistent background illumination [26].

All three dermatologists incorrectly diagnosed the same five images with the AI correctly diagnosing those images (see Fig. 3), while the AI incorrectly diagnosed five images that all three dermatologists successfully diagnosed (see Fig. 4) (see Table 2). These lesions in the Google survey were clinically challenging. Two lesions showed dermoscopic characteristics of a benign lesion with a regular pigmented network (reticulation) and honeycomb pattern represented by pigmented lines and hypopigmentation holes (see Fig. 3 A,B) usually associated with melanocytic nevi. The final histologic diagnosis for the lesions was malignant. The other three lesions showed dermoscopic characteristics of a malignant lesion. Two of them showed an asymmetric, irregular blotch, with irregular contours and off center (see Fig. 3 C,D). This finding is typically associated with melanoma. The third lesion showed globules at the periphery, some of them not connected to the main lesion (see Fig. 3 E). This finding can be seen in dysplastic nevus. The final histologic diagnosis for these lesions was benign. In summary, we can hypothesize that the five clinically challenging cases for the dermatologists were due to equivocal dermoscopic findings showing the importance of histologic diagnosis as a gold standard in the classification of melanocytic lesions (see Fig. 3).

As for Figure 4, our AI incorrectly diagnosed these five images. The AI incorrectly classified three lesions as benign (Figure 4 A–C) and two lesions as malignant (Figure 4 D,E). Dermatologists assigned a correct malignant diagnosis in the cases shown in A–C. Figure 4 (A,B) demonstrates an asymmetric multicomponent pattern with irregular globules, atypical network, and blue-white veil. Lesion C shows an atypical network with peripheral brown structureless areas. These findings are usually associated with melanoma. Likewise, dermatologists assigned a correct benign diagnosis for the cases shown in Figure 4 (D,E). Lesion D shows dermoscopic features of seborrheic keratosis, with milia-like cysts and network-like structures. Figure 4 (E) shows dermoscopic features of a typical melanocytic nevus with a diffuse reticular pattern. As for the reasons behind the AI’s incorrect choices where the dermatologists made the correct diagnosis (see Fig. 4 A–E), it is difficult to determine which features drove the algorithm’s classification of these lesions. Our CNN was trained using dermoscopic images from the ISIC archive, most of which were cropped to eliminate artifacts that could potentially bias the model. A complete understanding of how the algorithm correctly or incorrectly classifies a lesion needs further investigation. We cannot identify the specific causes of false classifications because of the lack of interpretability of current deep learning algorithms. Because of this lack of interpretability, it is difficult to make improvements, and the same mistakes may be repeated in the future. Future studies and enhanced interpretability of the CNN’s classifications are needed to take this research to the next level.
Ideally, our AI algorithm would either determine the decision tree features used by the dermatologists so that they could review results from the AI (show saliency information for each image to show what pixels were most influential for any given scoring), and/or investigate the characteristics of these images that may be associated with a false negative/ false positive. However, we are unable to do any of these things with the limited dataset we have.

Another relevant finding was that the publicly available ISIC dataset, although extensive, did not have the heterogeneity in skin complexion types needed to generalize the discrimination capabilities of our AI platform to the population level. There remains a real need to build training datasets for AI platforms that include a broad range of complexion types, including Fitzpatrick levels 4-6, that represent populations at high risk of morbidity and mortality from melanomas [27,28]. Those with darker skin complexions often do not label the sun reaction on their skin as burning and underestimate skin damage or reaction from the sun, because Fitzpatrick skin types 4-6 rarely burn and frequently tan [28]. There remains a need for lesion images of Fitzpatrick levels 4-6, which are regularly found in Hawai‘i and which local providers might be able to supply, to aid in triaging images for all skin types.

There are multitudes of AI applications that attempt to predict melanocytic malignancy using dermoscopy; our AI model produced results similar to those of many of these studies. Our study supports the ability of an AI platform to support the visual diagnosis of raw images. More importantly, it highlights the synergistic effects of combining both AI and dermatologist readers to improve accuracy. This finding is congruent with recent studies in which dermatologists were used in collaboration with AI platforms to improve the discriminating capabilities of both. A recent study by Hekler et al. (2019) used methods similar to those used in this pilot study to extend the classification capabilities of a combined AI and dermatologist assessment, achieving a sensitivity of 89% versus 86% for the AI platform alone [29]. Improving the overall sensitivity of the diagnostic analyses, and thereby reducing the number of missed cancer cases, is paramount when considering combining AI and dermatological assessments of lesion images [29]. Esteva et al. (2017) achieved an AUC of 0.96 for the classification of malignant melanomas vs. benign seborrheic keratosis on their dataset of 129,450 skin lesions [20]. Our study was not able to establish as large a dataset; however, with the data available to us, our model achieved an AUC of 0.948, which is comparable to their AUC of 0.96 with respect to melanoma detection [20]. In their work, Han et al. (2018) concluded that model classification varied depending on the lesion type when utilizing twelve skin diseases [30]. The authors’ AUC values ranged from 0.82 to 0.97, whereas ours varied more widely, between 0.420 and 0.956 [30]. The authors also found that the tested algorithm was comparable to sixteen dermatologists when provided a random sample of 480 images from their dataset [30]. Our study demonstrated similar findings for a scaled-down reader study.
Finally, Li & Shen (2018) utilized the ISIC 2017 dataset to propose two deep learning methods to address skin lesion image processing [13]. The authors provided a similar AUC of 0.912 for lesion segmentation and classification when compared to our AUC of 0.948 of melanoma classification [13].
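To make the sensitivity argument concrete, the following is a minimal sketch of one simple way to combine two readers: a lesion is flagged when either the AI or the dermatologist panel flags it. This "either reader" rule, the function names, and the toy labels are assumptions made for illustration only; they are not study data and are not necessarily the fusion method used in [29] or in this study.

```python
from typing import List, Sequence


def sensitivity(predicted: Sequence[bool], truth: Sequence[bool]) -> float:
    """Fraction of truly malignant lesions that were flagged as malignant."""
    calls_on_malignant = [p for p, t in zip(predicted, truth) if t]
    if not calls_on_malignant:
        raise ValueError("truth contains no malignant cases")
    return sum(calls_on_malignant) / len(calls_on_malignant)


def combine_either(ai: Sequence[bool], panel: Sequence[bool]) -> List[bool]:
    """Hypothetical fusion rule: flag a lesion if either reader flags it."""
    return [a or d for a, d in zip(ai, panel)]


# Toy labels invented for illustration (True = melanoma); NOT study data.
truth = [True, True, True, True, False, False]
ai = [True, True, False, True, False, True]
panel = [True, False, True, True, False, False]

combined = combine_either(ai, panel)

print(sensitivity(ai, truth))        # AI alone
print(sensitivity(panel, truth))     # panel alone
print(sensitivity(combined, truth))  # either reader flags the lesion
```

Under such a rule the combined sensitivity can never be lower than that of either reader alone, but every false positive from either reader is also carried over (the last toy lesion above), so specificity would need to be tracked alongside sensitivity.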

Clinical review by a dermatologist will always be needed to provide the context needed for an accurate diagnosis. Dermatologists incorporate more information than that available using mere images, such as palpation, to better assess the affected areas or other surrounding lesions [29]. Clinical strategies to efficiently combine the use of AI and dermatologists may help improve patient outcomes, including timely care. Our AI platform provides a probability assessment for each image’s diagnostic classification that can help support a triaging strategy for general practitioners, particularly those in rural areas where access to dermatologists may be limited (see Table 2). This triaging strategy can be used to determine the best clinical follow-up procedures needed for patients based upon these classification probabilities. Examples include the use of text messaging reminders to send revised images and ecological momentary assessment-based interventions to remind clients to protect their skin when outdoors or during high exposure periods during the day (see Fig. 5) [31, 32]. Additionally, this use of image classification and triaging can support the enhanced training of the AI platform in building accuracy in the overall prediction model.

Figure 5.

SMART decision tree/triaging strategy to be used by general practitioners/primary care providers
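As a rough illustration of how a per-image classification probability could drive such a triaging strategy, the sketch below maps a melanoma probability to a follow-up tier. The thresholds (0.7 and 0.3), the tier labels, and the function name are hypothetical placeholders chosen for this example; they are not taken from Fig. 5 and would need to be set and validated clinically.

```python
def triage(p_melanoma: float, urgent: float = 0.7, review: float = 0.3) -> str:
    """Map a melanoma probability to a follow-up tier.

    The cut-offs `urgent` and `review` are hypothetical values, not
    thresholds reported in this study.
    """
    if not 0.0 <= p_melanoma <= 1.0:
        raise ValueError("probability must lie in [0, 1]")
    if p_melanoma >= urgent:
        return "refer to dermatologist"
    if p_melanoma >= review:
        return "request revised image and follow up"
    return "routine monitoring and sun-protection reminders"


# Example probabilities invented for illustration only.
for p in (0.92, 0.45, 0.05):
    print(p, "->", triage(p))
```

Keeping the cut-offs as parameters rather than constants would let a practice tune them to local referral capacity once validated thresholds are available.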

When diagnosing melanoma versus non-melanoma, biopsy versus non-biopsy may not be a clear decision or prediction, as all cancers should be biopsied and treated accordingly. This AI model provided a means for distinguishing between skin cancer and non-skin cancer (melanoma versus non-melanoma), and this reader study was designed to create a potential platform for general practitioners to focus on melanoma, which can be life-threatening. This study is important to Hawai‘i because of the rurality of many communities and minority populations, as well as the rurality of some general practitioners. The intent of this study and AI creation is for a future classification and triaging application to aid rural communities with limited access to general practitioners in providing skin lesion images to an AI to determine the urgency in seeking treatment. Our future study intends to link rural communities and rural general practitioners with dermatologists using digital dermatoscopes to aid in skin lesion diagnosis, as well as in the creation of an AI triaging model to better assist their patients. Our next steps include a larger study to improve the accuracy of our AI model in distinguishing melanoma from non-melanoma to provide the best means possible of triaging for remote communities and improved telehealth capabilities where possible.

The synergistic benefits of combining the use of AI platforms with input from physicians illustrate a need to conduct implementation studies to obtain direct input from physicians on ways to incorporate AI into clinical practice. One strategy would be to utilize qualitative research methods such as focus groups or interviews with dermatologists who currently utilize imaging approaches in their practices to determine the best ways to reduce and streamline their workload using AI-based imaging assessments. Another strategy would be to determine the needs and capabilities of general practitioners to use standardized imaging methods to assess suspicious dermatological lesions during their routine patient care encounters. The engagement of physicians is a necessary step in the evolution and future use of AI in clinical practice [32].

Conclusions

We found support for our hypotheses that 1) images cropped to eliminate background interference such as skin, hair, and other markings were superior for use by the AI platform in discriminating benign from malignant lesions, and 2) the AI platform was as accurate and efficient as dermatologists in distinguishing between benign and malignant lesions. These findings illustrate that clinical strategies combining the use of AI and dermatologists are needed to improve patient outcomes, including timely care. Our AI platform provides support for a triaging strategy (see Fig. 5) to be used by general practitioners, particularly those in rural areas where access to dermatologists may be limited, to aid in technology-based triage decisions and to reduce dermatologists’ time and monetary expenses involved in lesion diagnoses.

Acknowledgments

Funding support for this project was provided through a SEED grant from the National Institutes of Health, University of Hawai‘i Cancer Center Support Grant, UH CCSG SMART Study: P30 2P30CA071789-18.

References

- 1. Cancer Facts & Figures 2019. Atlanta: American Cancer Society; 2019.

- 2. Clayman GL, Lee JJ, Holsinger FC, et al. Mortality risk from squamous cell skin cancer. J Clin Oncol. 2005;23(4):759–765.

- 3. National Cancer Institute. SEER Stat Fact Sheets: Melanoma of the Skin. http://seer.cancer.gov/statfacts/html/melan.html. Published 2020. Accessed September, 2020.

- 4. Sheha MA, Mabrouk MS, Sharawy A. Automatic Detection of Melanoma Skin Cancer using Texture Analysis. International Journal of Computer Applications. 2012;42(20):22–26.

- 5. Preston DS, Stern RS. Nonmelanoma Cancers of the Skin. New England Journal of Medicine. 1992;327(23):1649–1662.

- 6. National Cancer Institute. Anyone Can Get Skin Cancer. National Cancer Institute. http://www.cancer.gov/cancertopics/prevention/skin/anyone-can-get-skin-cancer. Published 2011. Accessed April 7, 2014.

- 7. Jackson BA. Nonmelanoma skin cancer in persons of color. Semin Cutan Med Surg. 2009;28(2):93–95.

- 8. Wheat CM, Wesley NO, Jackson BA. Recognition of skin cancer and sun protective behaviors in skin of color. J Drugs Dermatol. 2013;12(9):1029–1032.

- 9. National Cancer Institute. 5-Year Rate Changes-Incidence. State Cancer Profiles Website. https://statecancerprofiles.cancer.gov/recenttrend/index.php?0&2115&0&9599&001&999&00&0&0&0&1#results. Published 2020. Accessed December 17, 2020.

- 10. National Cancer Institute. 5-Year Rate Changes-Mortality. State Cancer Profiles Website. https://statecancerprofiles.cancer.gov/recenttrend/index.php?0&2115&0&9599&001&999&00&0&0&0&2#results. Published 2020. Accessed December 17, 2020.

- 11. Farmer ER, Gonin R, Hanna MP. Discordance in the histopathologic diagnosis of melanoma and melanocytic nevi between expert pathologists. Hum Pathol. 1996;27(6):528–531.

- 12. Bhattacharya A, Young A, Wong A, Stalling S, Wei M, Hadley D. Precision Diagnosis Of Melanoma And Other Skin Lesions From Digital Images. AMIA Jt Summits Transl Sci Proc. 2017;2017:220–226.

- 13. Li Y, Shen L. Skin Lesion Analysis towards Melanoma Detection Using Deep Learning Network. Sensors (Basel). 2018;18(2).

- 14. Mar VJ, Soyer HP. Artificial intelligence for melanoma diagnosis: how can we deliver on the promise? Ann Oncol. 2018;29(8):1625–1628.

- 15. Lamel S, Chambers CJ, Ratnarathorn M, Armstrong AW. Impact of live interactive teledermatology on diagnosis, disease management, and clinical outcomes. Arch Dermatol. 2012;148(1):61–65.

- 16. Lamel SA, Haldeman KM, Ely H, Kovarik CL, Pak H, Armstrong AW. Application of mobile teledermatology for skin cancer screening. J Am Acad Dermatol. 2012;67(4):576–581.

- 17. Miller DD, Brown EW. Artificial Intelligence in Medical Practice: The Question to the Answer? Am J Med. 2018;131(2):129–133.

- 18. Cummings A, Reiter O. ISIC Archive: 2019. https://www.isicarchive.com/#!/topWithHeader/wideContentTop/main. Published 2019. Accessed January 8, 2019.

- 19. Takahashi R, Matsubara T, Uehara K. RICAP: Random Image Cropping and Patching Data Augmentation for Deep CNNs. Proceedings of Machine Learning Research. 2018;95:786–798.

- 20. Esteva A, Kuprel B, Novoa RA, et al. Corrigendum: Dermatologist-level classification of skin cancer with deep neural networks. Nature. 2017;546(7660):686.

- 21. Haenssle HA, Fink C, Schneiderbauer R, et al. Man against machine: diagnostic performance of a deep learning convolutional neural network for dermoscopic melanoma recognition in comparison to 58 dermatologists. Ann Oncol. 2018;29(8):1836–1842.

- 22. Kingma DP, Ba JL. ADAM: A Method For Stochastic Optimization. ICLR 2015; 2014.

- 23. Deng J, Dong W, Socher R, Li L-J, Li K, Fei-Fei L. ImageNet: A Large-Scale Hierarchical Image Database. In: CVPR09; 2009.

- 24. Nichols TR, Wisner PM, Cripe G, Gulabchand L. Putting the Kappa Statistic to Use. Qual Assur J. 2010;13:57–61.

- 25. Freeman K, Dinnes J, Chuchu N, et al. Algorithm based smartphone apps to assess risk of skin cancer in adults: systematic review of diagnostic accuracy studies. BMJ. 2020;368:m127.

- 26. Glaister J, Wong A, Clausi DA. Segmentation of Skin Lesions From Digital Images Using Joint Statistical Texture Distinctiveness. IEEE Trans Biomed Eng. 2014;61(4):1220–1230.

- 27. Fors M, Gonzalez P, Viada C, Falcon K, Palacios S. Validity of the Fitzpatrick Skin Phototype Classification in Ecuador. Adv Skin Wound Care. 2020;33(12):1–5.

- 28. Pichon LC, Landrine H, Corral I, Hao Y, Mayer JA, Hoerster KD. Measuring skin cancer risk in African Americans: is the Fitzpatrick Skin Type Classification Scale culturally sensitive? Ethn Dis. 2010;20(2):174–179.

- 29. Hekler A, Utikal JS, Enk AH, et al. Superior skin cancer classification by the combination of human and artificial intelligence. Eur J Cancer. 2019;120:114–121.

- 30. Han SS, Kim MS, Lim W, Park GH, Park I, Chang SE. Classification of the clinical images for benign and malignant cutaneous tumors using a deep learning algorithm. J Invest Dermatol. 2018;138(7):1529–1538.

- 31. Doherty K, Balaskas A, G. D. The Design of Ecological Momentary Assessment Technologies. Interacting with Computers. 2020;32(3):257–278.

- 32. Zakhem GA, Motosko CC, Ho RS. How Should Artificial Intelligence Screen for Skin Cancer and Deliver Diagnostic Predictions to Patients? JAMA Dermatol. 2018;154(12):1383–1384.