Abstract

Background

Identifying the implant model from a primary knee arthroplasty during preoperative planning of revision surgery is a challenging task that adds delay. Failure to identify the implant in time directly increases the complexity of surgery. Deep learning for medical diagnosis has shown promising results that continue to improve. This study aims to identify the make and model of knee arthroplasty prostheses using automated deep learning models.

Methods

Deep learning algorithms were used to classify knee arthroplasty implant models. The training, validation, and test sets comprised 1078 radiographs covering 6 knee arthroplasty implant models in anterior–posterior (AP) and lateral views. Model performance was measured using accuracy, sensitivity, and area under the receiver-operating characteristic curve (AUC) and compared across multiple trained models, with saliency maps used for visualization.

Results

After training for 30 epochs on all 6 implant models, the best-performing model obtained an accuracy of 96.38%, a sensitivity of 97.2%, and an AUC of 0.985 on an external testing dataset of 162 radiographs. The best-performing model correctly and uniquely identified the implants, which could be visualized using saliency maps.

Conclusion

Deep learning models can be used to differentiate between 6 knee arthroplasty implant models. Saliency maps give us a better understanding of which regions the model is focusing on while predicting the results.

Keywords: Knee implant, Revision arthroplasty, Implant identification, Deep learning, Image processing

Introduction

By 2030, the demand for primary total knee arthroplasties is estimated to grow by 673% to 3.48 million procedures per annum, and the demand for primary total hip arthroplasties by 174% to 572,000 procedures. Although the clinical success rate at 10 years exceeds 90%, late failure remains a problem that can result in revision surgery [1]. The demand for hip revision procedures is projected to double by 2026, while the demand for knee revisions is expected to increase by 332% by 2030 [2]. If a patient presents with acute prosthetic joint infection, surgeons must change the modular components, such as the liner, but parts from one manufacturer can only be replaced by identical ones, so rapid and accurate identification of the implant model before revision surgery is critical to a successful outcome. Implants are misidentified or not identified before surgery for several reasons: (1) patient notes are not available, as 30% of revisions are undertaken at a different hospital from the original surgery, rising to 40% more than 3 years after the primary procedure; notes may also be on paper, hard to obtain quickly, or unavailable because the implant was inserted abroad; (2) implant failures occur 5–10 years after insertion, so legacy models are regularly encountered; (3) surgeons are familiar with only the small number of implants that they regularly use, while procedures require many available options to accommodate anatomic variability. Wilson et al. conducted a detailed study showing that the number of implants that cannot be identified in preoperative and intraoperative settings because of osteolysis, subsidence, and loosening is rising every year, from 12,421 misclassifications in 2011 to 28,079 in 2020, and is predicted to reach 54,000 by 2030 in the USA alone [3]. Identifying the make and model of knee implants before revision surgery is therefore a challenging task.
For identifying implants, patient X-rays, followed by the hospital operative record, official record, surgeon's operative dictation, and hospital implant sheet/labels, were the top five methods reported. Incomplete device documentation was indicated as a barrier to identifying components of the failed implant, which resulted in more components being replaced, increased blood loss, increased bone loss, and increased recovery time. It also raised implant costs, resulting in procedural charges for revision arthroplasty that were 76.0% higher than for primary total joint replacements. From an economic point of view, a prolonged operative time means higher financial expense. Besides, the mean hospital stay was 4 days longer in our study cohort for revision arthroplasty compared to matched primary cases. This demonstrates the difficulty associated with the routine identification of implants in revision arthroplasty. To minimize the expected increasing burden of revision surgeries, particularly in cases of early failure, a comprehensive understanding of the relevant risk factors is crucial. Standard radiography remains the basic imaging method for preoperative detection of implants because of its low cost and wide availability, especially for revision arthroplasty. Over the last decade, the increasing application of deep learning to medical prognosis has shown promising results that continue to improve. As the number of manufacturer models grows, distinguishing implant models from one another is becoming steadily more difficult. We can leverage deep learning to automate this process and reduce the time needed to accurately identify these implants.
We use this potential to identify the implant manufacturer with a dataset containing both anterior–posterior (AP) and lateral views, so that the model learns from both perspectives, generalizes better, and has a lower error rate.

Related Works

Kang et al. developed a machine learning recognition system to identify the make and model of hip implants. The authors collected postoperative anteroposterior X-rays of hip arthroplasty and recognized 29 implant models [4].

Paul et al. used deep learning approaches to differentiate TKA and UKA and to identify Smith and Nephew, Zimmer, and NexGen models. They focused on specific features, such as the femoral component, to recognize and differentiate models in AP knee radiographs. Radiograph images were augmented to increase the image quantity so that the deep CNN architecture could perform efficiently. Transfer learning with ResNet was adopted to train on the data, and only two models of knee implants were differentiated, with good accuracy [5].

Borjali et al. [1] developed a system to identify hip replacement implant models with a convolutional neural network. The system used transfer learning to identify three hip implant models, namely Accolade, Corail, and S-ROM. The DenseNet architecture was used to classify models from AP-view radiographs only. Data augmentation was applied to avoid overfitting.

Bredow et al. [2] adopted template matching. 2D templates were generated from 3D models of the knee prosthesis and matched against the radiographs, and the similarities between the images were validated. The accuracy obtained was around 90%. Template matching could help the surgeon in the challenging postoperative setting.

Howard et al. [6] designed a system in 2019 to identify 45 different models of cardiac rhythm devices (pacemakers) from chest radiographs using convolutional neural networks. Five CNN architectures were evaluated, namely DenseNet, Inception V3, VGGNet, ResNet, and Xception. Loss and accuracy were recorded for the five architectures, and Xception showed the highest accuracy of 91%.

Karnuta et al. [7] developed a knee implant identification system in 2020 that covered 9 implant models using only anterior–posterior (AP) radiographs, 682 images in total. They used InceptionV3 as their base model and trained for 1000 epochs with a variable learning rate decay algorithm. An accuracy of 99%, an AUC of 0.992, and a specificity of 95% were obtained, and class activation maps were used to visualize the discriminative regions used by the model to identify the implant.

Lu et al. [8] developed a reinforcement learning technique in 2019 that helps medical practitioners keep track of the details of knee replacement surgery, which is a continuous course of treatment lasting 5–6 months. A Markov process and an unsupervised approach are adopted to learn from patient records and improve clinical decisions.

Urban et al. [9] developed a deep learning model and compared its performance to alternative classifiers, such as random forests and gradient boosting. With a data set containing X-ray images of shoulder implants from 4 manufacturers and 16 different models, deep learning identified the correct manufacturer with an accuracy of approximately 80% in tenfold cross-validation, while the other classifiers achieved an accuracy of 56% or less.

Dataset Description

The dataset used in this study consists of knee implant radiographs comprising 6 classes: Optetrack Logic, Maxx Freedom Knee, Smith and Nephew Legion, Stryker NRG, Zimmer LPS, and Zimmer Persona, as shown in Fig. 1. These data were obtained from two major hospitals: Army Hospital (Research and Referral), New Delhi, India, and SRM Institute of Medical Sciences Hospital, Chennai, India. To maintain the privacy of the individual patients, all radiographs were anonymized during data collection. This being a retrospective study, all obtained radiographs were meticulously labeled by experienced orthopedic surgeons.

Fig. 1.

Sample radiographs from the dataset corresponding to the 6 classes: a Smith and Nephew Legion, b Maxx Freedom, c Zimmer Persona, d Stryker NRG, e Optetrack Logic, f Zimmer LPS

The original knee implant samples were collected from different hospitals; their sample sizes are given in Table 1.

Table 1.

Detailed dataset description

| Hospital name | Doctor's name | Implant name | No. of images collected |
|---|---|---|---|
| Army Research and Referral, New Delhi | Dr. (Col) Barun Datta | Maxx Freedom | 127 |
| Army Research and Referral, New Delhi | Dr. (Col) Barun Datta | Zimmer LPS | 182 |
| Sunshine Hospitals, Hyderabad | Dr. A.B. Suhas Masilamani | Smith and Nephew Legion | 139 |
| Sunshine Hospitals, Hyderabad | Dr. Gurava Reddy | Stryker NRG | 180 |
| Stanford University Medical Center, US | Dr. Derek F. Amanatullah | Exactech Opetrak | 323 |
| Kasturba Medical College, Manipal | Dr. Sandeep Vijayan | Zimmer Persona | 127 |

The original samples comprise 1078 images in total across 6 knee implant models. The data samples were extended using an auto data augmentation technique in the proposed CNN model to avoid overfitting.

After the dataset was carefully cleaned of radiographs that contained unknown artifacts or failed the image quality tests performed with the Blind/Referenceless Image Spatial Quality Evaluator (BRISQUE) [10], a total of 1078 images remained. The 6 implant models in this dataset are Exactech Opetrak with 323 images, Maxx Freedom Knee with 127 images, Smith and Nephew Legion with 139 images, Stryker NRG with 180 images, Zimmer LPS with 182 images, and Zimmer Persona with 127 images. All implant models had both anterior–posterior (AP) and lateral views so that our model can generalize and identify them from both views. To check the robustness of the model, an external real-time dataset was also used to test its performance.

Proposed Methodology

Preprocessing

The dataset was split into three subsets for training, testing, and validation with a split ratio of 75–15–10, so that 75% of the images are used for training, 15% for testing, and 10% for validation. Only the training set images were augmented, to compensate for the relatively small dataset size, help the architecture generalize better, and reduce the error rate.
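The 75–15–10 split can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `split_indices`, the fixed seed, and the plain (non-stratified) shuffle are hypothetical choices, since the text does not say whether the split was stratified by implant class.

```python
import random


def split_indices(n_images, train_frac=0.75, test_frac=0.15, seed=0):
    """Shuffle image indices and cut them into train / test / validation lists.

    Hypothetical helper: the validation set receives whatever remains
    after the training and test fractions have been taken (about 10%).
    """
    indices = list(range(n_images))
    random.Random(seed).shuffle(indices)  # seeded so the split is reproducible
    n_train = round(n_images * train_frac)
    n_test = round(n_images * test_frac)
    train = indices[:n_train]
    test = indices[n_train:n_train + n_test]
    val = indices[n_train + n_test:]
    return train, test, val


# 1078 is the dataset size reported in the text.
train_idx, test_idx, val_idx = split_indices(1078)
print(len(train_idx), len(test_idx), len(val_idx))
```

Augmentation would then be applied to the images indexed by `train_idx` only, leaving the test and validation images untouched.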

Architectural Description

This study used a type of deep convolutional neural network called the Densely Connected Convolutional Network, commonly known as DenseNet [11]. DenseNet addresses the connectivity problem in deep convolutional neural networks and maximizes the flow of information by connecting each layer directly to all of its preceding layers. This greatly reduces the number of trainable parameters required, and hence the complexity and size of the model, because only the essential feature maps are used; this technique had not previously been seen in deep convolutional neural networks (DCNNs).

The amount of information that has to flow through this type of architecture is far smaller, since each layer passes on only the gradients obtained from the loss function.

Also, unlike architectures such as Residual Neural Networks [12], which were once the state of the art among deep convolutional neural networks and combine the identity from previous layers by pure summation through skip connections, DenseNets concatenate the feature maps passed on by the previous layers, as shown in Fig. 2. The proposed model controls the rate of information flow using the growth rate $k$: the number of feature maps entering layer $i$ is $k_i = k_0 + k \times (i - 1)$, where $k_0$ is the number of feature maps in the input layer and $i$ denotes the layer index.
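As a small worked example of the growth-rate relation, the sketch below evaluates k0 + k × (i − 1) for the first few layers of a dense block. The values k0 = 64 and k = 32 are illustrative assumptions, not figures taken from this study, and `input_feature_maps` is a hypothetical helper name.

```python
def input_feature_maps(k0, k, i):
    """Feature maps entering layer i (1-indexed) of a dense block: k0 + k*(i-1)."""
    if i < 1:
        raise ValueError("layer index i must be >= 1")
    return k0 + k * (i - 1)


# Illustrative values only (assumed, not reported in the text).
k0, k = 64, 32
for i in range(1, 7):
    print(f"layer {i}: {input_feature_maps(k0, k, i)} input feature maps")
```

Each layer adds k new feature maps to the concatenated stack, so the input width grows linearly with depth inside a block.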

Fig. 2.

Customized DenseNet architecture

Compared with architectures such as Visual Geometry Group (VGG) [13] or Residual Networks (ResNet), which use 138 million and 23 million parameters respectively, DenseNet benefits from using only the trainable parameters it needs (8 million), since there is no need to relearn redundant feature maps. Because DenseNet uses concatenation instead of summation, the width of the network increases by the growth rate with every dense layer. To tackle this problem, transition blocks were introduced to reduce the number of feature maps passed on, thereby halving the volume of the architecture. Besides DenseNet, which makes such judicious use of parameters, MobileNets [14] are also known for using only 3.5 million parameters, but at some cost in accuracy, since, as the name suggests, they were developed for mobile devices with limited computational power. For comparison, all the above-mentioned models were trained on the dataset in exactly the same manner.

We use fine-tuning, a transfer learning method in which we employ models that have been pretrained on ImageNet [15], an image database with 1000 classes that is used to benchmark such models. These pretrained weights serve as a good starting point since the model has already learned rich discriminative filters. We attach our own new fully connected layers on top of this base model. The newly attached layers consist of a Global Average Pooling 2D layer, which averages each feature map of the previous layer and so converts the 7 × 7 × 1280 feature maps into a vector of 1280 values; a dense layer with a variance scaling kernel initializer, which lets the layer adapt its scale to the shape of the weights tensor; and a final dense layer, also with a variance scaling initializer and an added bias vector, which predicts the probabilities of our 6 classes. Adding any further layers would be redundant and would increase complexity and reduce accuracy compared with our results, as shown in Fig. 3. Figure 4 shows the trainable features extracted in different layers by the DenseNet CNN model for knee implant model classification. The proposed model avoids extracting non-trainable parameters.
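The interplay between concatenation and transition blocks can be traced numerically. The sketch below assumes the standard DenseNet-201 configuration from the DenseNet paper (dense blocks of 6, 12, 48, and 32 layers, growth rate 32, 64 initial feature maps, compression 0.5); the customized architecture used in this study may differ, and `trace_channels` is a hypothetical helper.

```python
def trace_channels(block_layers, growth_rate, init_channels, compression=0.5):
    """Track the feature-map count through dense blocks and transition blocks.

    Each dense layer concatenates `growth_rate` new maps onto its input;
    each transition block (placed between dense blocks) scales the count
    by `compression`, i.e. halves it for compression=0.5.
    """
    channels = init_channels
    trace = []
    for idx, n_layers in enumerate(block_layers):
        channels += n_layers * growth_rate
        trace.append(("dense_block", idx + 1, channels))
        if idx < len(block_layers) - 1:  # no transition after the last block
            channels = int(channels * compression)
            trace.append(("transition", idx + 1, channels))
    return trace


# Assumed standard DenseNet-201 settings, not confirmed by the text.
for stage, number, channels in trace_channels((6, 12, 48, 32), 32, 64):
    print(f"{stage} {number}: {channels} feature maps")
```

Without the transition blocks the count would only ever grow, which is the volume problem the paragraph above describes.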
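To make the attached classification head concrete, here is a dependency-free sketch of its forward pass: global average pooling collapses an H × W × C stack of feature maps into C values, and a dense layer followed by softmax turns them into class probabilities. The tiny 7 × 7 × 4 input and the hand-written weights are illustrative assumptions; weight initialization (variance scaling) and training are not shown, and a real implementation would use a deep learning framework.

```python
import math


def global_average_pool(feature_maps):
    """Average each channel over all spatial positions (H x W x C -> C)."""
    height, width = len(feature_maps), len(feature_maps[0])
    n_channels = len(feature_maps[0][0])
    totals = [0.0] * n_channels
    for row in feature_maps:
        for pixel in row:
            for c, value in enumerate(pixel):
                totals[c] += value
    return [t / (height * width) for t in totals]


def dense(inputs, weights, bias):
    """Fully connected layer: one weight row and one bias term per output unit."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, bias)]


def softmax(logits):
    """Convert logits to probabilities (shifted by the max for stability)."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Toy 7 x 7 x 4 feature maps: channel c holds the constant value c + 1.
toy_maps = [[[float(c + 1) for c in range(4)] for _ in range(7)] for _ in range(7)]
pooled = global_average_pool(toy_maps)

# Hypothetical weights for a 6-class output layer (6 rows of 4 weights).
weights = [[0.1 * (j + 1) * (c + 1) for c in range(4)] for j in range(6)]
bias = [0.0] * 6
probs = softmax(dense(pooled, weights, bias))
print(pooled, probs)
```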

Fig. 3.

Proposed CNN architecture for Knee Implant Model Classification

Fig. 4.

Generated feature map using DenseNet CNN model

Training

All models were trained on a Titan XP GPU with 24 GB of memory. Each model was trained for 30 epochs with an optimized learning rate. The learning rate plays an essential role in training because it decides how fast or slow the model learns. If it is too large, the weights are updated too quickly, which results in oscillating performance over the training epochs. If it is too small, training may never converge and may remain stuck in a suboptimal local minimum. Finding an optimal learning rate is therefore very important. The optimal learning rate for our architecture on our dataset was found by setting a starting learning rate and an ending learning rate and computing the losses while iterating over this range.

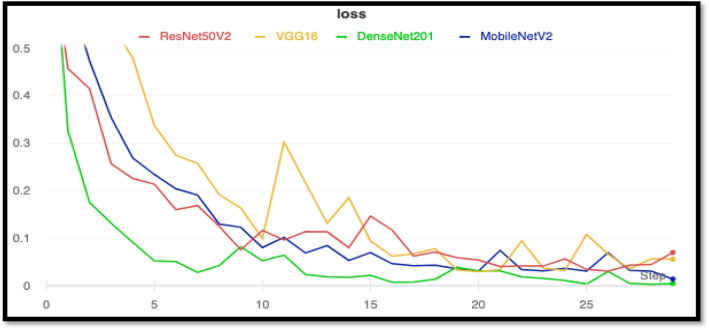

The learning rate that produced the least loss was chosen for training. The minimum numerical gradient for DenseNet201 was found to be 5.75E−04 (Fig. 5), with a minimum loss of 1.58E−03 (Fig. 6). The training losses for each model were plotted for comparison. After training for 30 epochs, the training loss of DenseNet201 was the smallest, with MobileNetV2 close behind.
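The learning rate search described above can be sketched as a range test. The example below is a toy under stated assumptions: it sweeps the rate geometrically from a start value to an end value, takes one gradient step per rate on a simple quadratic loss (a stand-in for the network), records the loss seen at each rate, and picks the rate at which the recorded loss was lowest. The function name, the toy loss, and the sweep bounds are all hypothetical; the exact procedure and range used in the study are not given in the text.

```python
def lr_range_test(loss_fn, grad_fn, w0, lr_start, lr_end, n_steps):
    """Sweep the learning rate geometrically and record (lr, loss) pairs.

    The loss is recorded just before the update that uses that rate,
    then one gradient-descent step is taken and the rate is increased.
    """
    ratio = (lr_end / lr_start) ** (1.0 / (n_steps - 1))
    w, lr = w0, lr_start
    history = []
    for _ in range(n_steps):
        history.append((lr, loss_fn(w)))
        w -= lr * grad_fn(w)
        lr *= ratio
    return history


# Toy stand-in for a network: loss(w) = (w - 3)^2, gradient 2 * (w - 3).
def toy_loss(w):
    return (w - 3.0) ** 2


def toy_grad(w):
    return 2.0 * (w - 3.0)


history = lr_range_test(toy_loss, toy_grad, w0=0.0,
                        lr_start=1e-4, lr_end=10.0, n_steps=50)
best_lr, best_loss = min(history, key=lambda pair: pair[1])
print(f"lowest recorded loss {best_loss:.3e} at learning rate {best_lr:.3e}")
```

In the toy run the loss falls while the rate is small, then blows up once the rate is too large, which mirrors the oscillation and divergence behaviour described in the text.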

Fig. 5.

DenseNet learning rate hyperparameter tuning

Fig. 6.

Combined training loss curves for all the models

Results and Discussion

After an in-depth analysis of all 4 models, namely ResNet50V2, VGG16, MobileNetV2, and DenseNet201, a few observations were made. First, ResNet50V2 went through exploding gradients even though its training loss was low, as indicated by the dark red line. A similar trend was seen in VGG16: it overtook MobileNetV2 in validation accuracy but lost substantial accuracy on the test set, since it started overfitting and became biased towards one of the classes, which is where MobileNetV2 overtook VGG16 in performance.

DenseNet201 is in a league of its own, producing the best results with an accuracy of 95.1% on the validation set and 96.38% on the test set, as shown in Table 2. The remaining errors of DenseNet201 were due to some poor-quality images in the Zimmer LPS and Smith and Nephew Legion classes, which introduced extra noise and resulted in a few misclassifications. Since we are dealing with medical data, we need to consider further metrics to evaluate the models: sensitivity, measured as the recall score, which can be thought of as a model's ability to find all the data points of interest in a dataset; and area under the curve (AUC), which summarizes the overall performance of a classification model as the area under the receiver operating characteristic curve. The threshold for calculating these values was kept at 0.5, which gave the best overall accuracy for all our models (Fig. 7).

Table 2.

Comparative analysis of deep learning models

| Model | Test accuracy (%) | Sensitivity | AUC |
|---|---|---|---|
| ResNet50V2 | 84.57 | 0.813 | 0.9658 |
| VGG16 | 87.71 | 0.861 | 0.9709 |
| MobileNetV2 | 88.86 | 0.877 | 0.9812 |
| **DenseNet201** | **96.38** | **0.972** | **0.9857** |

DenseNet201 has achieved the highest accuracy (bold)
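For readers who want to see how the two extra metrics are computed, the sketch below evaluates sensitivity (recall) at a 0.5 threshold and AUC for a single class treated one-vs-rest. The labels and scores are made-up toy values, not data from this study, and the pairwise AUC formula is one standard way to compute the area under the ROC curve; how the per-class values were averaged in the study is not stated.

```python
def recall(labels, predictions, positive=1):
    """Sensitivity: fraction of true positives among all actual positives."""
    tp = sum(1 for y, p in zip(labels, predictions) if y == positive and p == positive)
    fn = sum(1 for y, p in zip(labels, predictions) if y == positive and p != positive)
    return tp / (tp + fn)


def binary_auc(labels, scores):
    """AUC as the probability that a positive outscores a negative (ties count 0.5)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Toy one-vs-rest example (hypothetical values).
labels = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.6, 0.3, 0.1]
predictions = [1 if s >= 0.5 else 0 for s in scores]  # threshold of 0.5

sensitivity = recall(labels, predictions)
auc = binary_auc(labels, scores)
print(sensitivity, auc)
```

Note that AUC depends only on the ranking of the scores, whereas sensitivity changes with the chosen threshold.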

Fig. 7.

Combined validation loss curves for all the models

To analyze the misclassifications made by each model, confusion matrices were plotted; those of the two top-performing models are shown in Figs. 8 and 9. These confusion matrices give good insight into which classes are being predicted wrongly. MobileNetV2 misclassified a total of 44 images from a set of 268 unseen images, whereas DenseNet201 misclassified only 12 images on the same test set.

Fig. 8.

Confusion matrix for MobileNetV2

Fig. 9.

Confusion matrix for DenseNet201
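The quantities read off a confusion matrix can be computed directly. The sketch below uses a small hypothetical 3-class matrix (rows are true classes, columns are predicted classes); it does not reproduce the matrices in Figs. 8 and 9, and `summarize_confusion` is an illustrative helper name.

```python
def summarize_confusion(matrix):
    """Return totals, accuracy, and per-class recall for a square confusion matrix."""
    n_classes = len(matrix)
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(n_classes))  # diagonal entries
    per_class_recall = [matrix[i][i] / sum(matrix[i]) for i in range(n_classes)]
    return {
        "total": total,
        "misclassified": total - correct,
        "accuracy": correct / total,
        "recall": per_class_recall,
    }


# Hypothetical counts for illustration only.
toy_matrix = [
    [50, 2, 1],
    [3, 40, 0],
    [0, 1, 45],
]
summary = summarize_confusion(toy_matrix)
print(summary)
```

Off-diagonal entries show which pairs of classes are confused, which is how a bias towards a single class would become visible.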

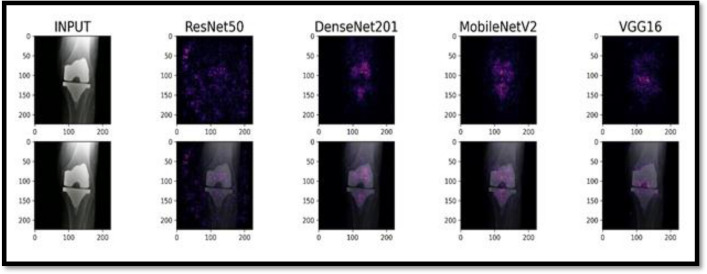

To further understand what the models were looking at while predicting, we used saliency maps, which aid model interpretability using integrated gradients. They visualize the relationship between input features and model predictions. Whereas Grad-CAM only helps us visualize and generalize where the model might be looking while running inference, saliency maps prove more useful since they accurately represent where the model is focusing while making predictions.

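These integrated gradients can be calculated using Eq. (1), where $i$ is the feature, $x$ is the input, $x'$ is the baseline, $\alpha$ is the interpolation constant, and $F$ is the model's prediction function:

$$\mathrm{IG}_i(x) = (x_i - x'_i) \int_{0}^{1} \frac{\partial F\big(x' + \alpha\,(x - x')\big)}{\partial x_i}\, d\alpha \tag{1}$$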

Since the definite integral in Eq. (1) has no closed form for a deep network and is computationally costly to evaluate exactly, we take the numerical approximation in Eq. (2), where $m$ is the number of interpolation steps between the baseline and the input and $F$ is the model's prediction function as in Eq. (1):

$$\mathrm{IG}_i^{\mathrm{approx}}(x) = (x_i - x'_i) \sum_{k=1}^{m} \frac{\partial F\big(x' + \tfrac{k}{m}\,(x - x')\big)}{\partial x_i} \cdot \frac{1}{m} \tag{2}$$

The computed gradients after the set of forward passes were approximated using trapezoidal rule. The approximated gradients can be used to generate the corresponding attribution masks (Fig. 10).

Fig. 10.

Saliency map by combining baseline image with original radiograph image and overlaying attribution image
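The trapezoidal approximation of integrated gradients can be illustrated on a toy function whose gradient is known in closed form. In the sketch below the "model" is F(x) = Σ xᵢ² with gradient 2x, standing in for a network's prediction and its backpropagated gradients; the function names, the all-zero baseline, and the step count are assumptions for illustration only.

```python
def integrated_gradients(grad_fn, x, baseline, m_steps=50):
    """Approximate integrated gradients with the trapezoidal rule.

    Gradients are evaluated at m_steps + 1 points on the straight line
    from `baseline` to `x`; neighbouring gradients are averaged and summed,
    and the result is scaled by (x_i - baseline_i).
    """
    n_features = len(x)
    grads = []
    for step in range(m_steps + 1):
        alpha = step / m_steps
        point = [b + alpha * (xi - b) for xi, b in zip(x, baseline)]
        grads.append(grad_fn(point))
    avg_grad = [0.0] * n_features
    for step in range(m_steps):
        for i in range(n_features):
            avg_grad[i] += 0.5 * (grads[step][i] + grads[step + 1][i]) / m_steps
    return [(xi - b) * g for xi, b, g in zip(x, baseline, avg_grad)]


# Toy model: F(x) = sum of squares, so dF/dx_i = 2 * x_i.
def toy_model(x):
    return sum(v * v for v in x)


def toy_grad(x):
    return [2.0 * v for v in x]


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
attributions = integrated_gradients(toy_grad, x, baseline)
print(attributions, sum(attributions), toy_model(x) - toy_model(baseline))
```

A useful sanity check is completeness: the attributions should sum to the difference between the model output at the input and at the baseline, which the printed values let you compare. For an image, the per-pixel attributions form the mask that is overlaid on the radiograph.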

Saliency maps sometimes find interesting patterns which even a very experienced surgeon might not look at while analyzing these images. For our use case, we plot saliency maps produced by all models on the same set of images to understand the difference between the prediction strategies of each model (Fig. 11).

Fig. 11.

Saliency map for NRG implant model

The brightest regions are those that most influence the prediction. Models such as DenseNet201 and MobileNetV2 focus mostly on the implant region, and more specifically on the unique points of the implant that distinguish it from other implant models, which makes it clearer why they predict so well on totally unseen data. By contrast, ResNet50V2 is mainly confused by external noise, and VGG16 shifts its focus towards the tibia rather than the implant itself. This gives us better insight into why DenseNet outperformed the other models and why it would be the best choice for the current use case (Fig. 12).

Fig. 12.

Saliency map for LPS implant model

In this work, we proposed a deep CNN model capable of categorizing different knee implant models using the regions of interest extracted by different convolution operations and the creative points found with the saliency feature extraction technique. Our model utilizes both AP and lateral views of knee implants to extract high-level features and improve TKR model prediction accuracy. The main advantage of our work over similar work lies in the feature extraction process. Our model is trained to identify the implant model in both AP-view and lateral-view X-rays, and the algorithm is devised to adapt to both kinds of input. Saliency feature extraction and identification from both views make our model more efficient and accurate than existing CNN models.

Conclusion

This study has shown that correctly implemented deep learning techniques can accurately classify and automate total knee replacement implant identification. Transfer learning serves as a good starting point for training our deep learning model, and retraining the network results in a very confident model. For identification in medical images, dense convolutional neural networks perform well and achieved the best accuracy of 95.1% on the validation set and 96.38% on the testing set. This indicates the potential of deep neural networks, which could identify these TKR implants in a matter of seconds and so make orthopedic surgeons more confident of the implant type before surgery, more productive, and able to proceed with higher exactness knowing what type of implant is present. Eliminating the delay in identifying an implant will give clinics more time to obtain the correct replacement parts (such as plastic inserts) and allow clinicians to operate sooner, reducing patient discomfort and hospital stay and alleviating the need for further interventions caused by relapse and subsequent failure. We show what factors affect the decision-making strategy of a deep neural network using saliency maps and focus on creative points that can be used for classification. The biggest limitation of the study is the dataset size; a bigger dataset would help these models generalize much better and reduce the loss further. We aim to further develop an optimized model for other use cases, such as identifying implants of other joint replacements and identifying images with no implants.

Author contributions

VB and SV drafted the project; SS and MP performed the experiments; CM and MG evaluated the research experiments; ABSM, DFA, GR, SV and BD shared the implant data; MK performed the data consolidation, manipulation and labeling; SK and DFA shared their knowledge about implants and revision surgeries; ABSM and GR reviewed the article.

Funding

No funds were received in support of this study.

Declarations

Conflict of interest

The authors declare no conflict of interest.

Ethical standard statement

This article does not contain any studies with human or animal subjects performed by any of the authors.

Informed consent

For this type of study informed consent is not required.

Footnotes

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Sukkrit Sharma, Email: sukkritsharma@gmail.com.

Vineet Batta, Email: Vineet.Batta@ldh.nhs.uk.

Malathy Chidambaranathan, Email: malathyc@srmist.edu.in.

Prabhakaran Mathialagan, Email: prabhakaran_mathialagan@srmuniv.edu.in.

Gayathri Mani, Email: gayathrm2@srmist.edu.in.

M. Kiruthika, Email: kiruthi21m@gmail.com

Barun Datta, Email: barun.datta@gmail.com.

Srinath Kamineni, Email: skamineni@hotmail.com.

Guruva Reddy, Email: guravareddy@gmail.com.

Suhas Masilamani, Email: drsuhas09@gmail.com.

Sandeep Vijayan, Email: sandeep.vijayan@manipal.edu.in.

Derek F. Amanatullah, Email: dfa@stanford.edu

References

- 1. Borjali A, Chen AF, Muratoglu OK, Morid MA, Varadarajan KM. Detecting total hip replacement prosthesis design on plain radiographs using deep convolutional neural network. Journal of Orthopaedic Research. 2020. doi: 10.1002/jor.24617.

- 2. Bredow J, Wenk B, Westphal R, Wahl F, Budde S, Eysel P, et al. Software-based matching of X-ray images and 3D models of knee prostheses. Technology and Health Care. 2014. doi: 10.3233/THC-140858.

- 3. Wilson NA, Jehn M, York S, Davis CM. Revision total hip and knee arthroplasty implant identification: Implications for use of unique device identification 2012 AAHKS member survey results. Journal of Arthroplasty. 2014. doi: 10.1016/j.arth.2013.06.027.

- 4. Huang G, Liu Z, Van Der Maaten L, Weinberger KQ. Densely connected convolutional networks. In Proceedings, 30th IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2017. 2017. doi: 10.1109/CVPR.2017.243.

- 5. Wilson N, Broatch J, Jehn M, Davis C. National projections of time, cost and failure in implantable device identification: Consideration of unique device identification use. Healthcare. 2015. doi: 10.1016/j.hjdsi.2015.04.003.

- 6. He K, Zhang X, Ren S, Sun J. Deep residual learning for image recognition. Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition. 2016. doi: 10.1109/CVPR.2016.90.

- 7. Kang YJ, Yoo JI, Cha YH, Park CH, Kim JT. Machine learning-based identification of hip arthroplasty designs. Journal of Orthopaedic Translation. 2020. doi: 10.1016/j.jot.2019.11.004.

- 8. Karnuta JM, Luu BC, Roth AL, Haeberle HS, Chen AF, Iorio R, et al. Artificial intelligence to identify arthroplasty implants from radiographs of the knee. Journal of Arthroplasty. 2020. doi: 10.1016/j.arth.2020.10.021.

- 9. Urban G, Porhemmat S, Stark M, Feeley B, Okada K, Baldi P. Classifying shoulder implants in X-ray images using deep learning. Computational and Structural Biotechnology Journal. 2020;18:967–972. doi: 10.1016/j.csbj.2020.04.005.

- 10. Chow LS, Rajagopal H. Modified-BRISQUE as no reference image quality assessment for structural MR images. Magnetic Resonance Imaging. 2017. doi: 10.1016/j.mri.2017.07.016.

- 11. Howard JP, Fisher L, Shun-Shin MJ, Keene D, Arnold AD, Ahmad Y, et al. Cardiac rhythm device identification using neural networks. JACC Clinical Electrophysiology. 2019. doi: 10.1016/j.jacep.2019.02.003.

- 12. Dy CJ, Bozic KJ, Padgett DE, Pan TJ, Marx RG, Lyman S. Is changing hospitals for revision total joint arthroplasty associated with more complications? Clinical Orthopaedics and Related Research. 2014. doi: 10.1007/s11999-014-3515-z.

- 13. Sandler M, Howard A, Zhu M, Zhmoginov A, Chen LC. MobileNetV2: Inverted residuals and linear bottlenecks. Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition. 2018. doi: 10.1109/CVPR.2018.00474.

- 14. Lu H, Wang M. RL4health: Crowdsourcing reinforcement learning for knee replacement pathway optimization. ArXiv. 2019.

- 15. Deng J, Dong W, Socher R, Li LJ, Li K, Fei-Fei L. ImageNet: A large-scale hierarchical image database. 2010. doi: 10.1109/cvpr.2009.5206848.