Abstract

With the advent of the Fourth Industrial Revolution, the economic, social, and technological demand for pipe maintenance is increasing as the infrastructure built during industrial development and urban expansion ages. To address this, an automatic pipe damage detection system was built using ultrasonic wave propagation imaging (UWPI) data from a laser-scanned pipe and a convolutional neural network (CNN)-based object detection algorithm. The algorithm used in this study was EfficientDet-d0, a CNN-based object detection algorithm, trained with the transfer learning method. The resulting mean average precision (mAP) was 0.39, which is higher than the mAP of the COCO-pretrained EfficientDet-d0. The system is expected to enable the efficient maintenance of piping used in construction and many other industries.

Keywords: plumbing maintenance, deep learning, ultrasonic wave propagation imaging, CNN, external damage

1. Introduction

Piping is widely used as an important material not only in construction but also in many industrial fields such as aviation and machinery. As pipes age, the economic, social, and technical demands for their maintenance are increasing, and many industrial fields require inspection technology that can detect pipe damage at an early stage [1].

Previous laser-scanning-based studies confirmed the applicability of the technique to steel structures and bolt loosening [2,3,4,5,6,7]. Building on this, the current work aims to detect damage to a pipe with ultrasonic wave propagation imaging (UWPI).

UWPI is a signal processing method in which a test object is excited with a Q-switched pulse laser system, the resulting wave propagation is measured with an acoustic emission (AE) sensor, and the waveform data are displayed as an image [8].

Many academic research activities on piping are being carried out. The investigated techniques include intensity-based optical systems [9,10], microwave nondestructive testing [11], pipe NDT inspection using automated robots [12,13,14], and eddy-current-based crack recognition [15]. However, most damage detection techniques depend on the empirical and subjective judgment of experienced experts. To overcome this problem, much research based on computer vision technology using machine learning [16] is being conducted in the fields of structural health monitoring (SHM) and nondestructive evaluation (NDE) [17].

Recently, deep learning has been actively studied among the various machine learning technologies. In particular, image classification using CNNs outperforms earlier image classification algorithms and continues to be researched and developed [18]. Detecting pipe damage through CNN-based object detection requires a large amount of data; such data are difficult to obtain, and training takes a long time. We therefore use the transfer learning [19] technique, which enables efficient learning from a small amount of data. Specifically, we propose to detect damage in piping from laser-scanned UWPI data using the EfficientDet-d0 model pretrained on COCO 2017.

The study has two main objectives. The first is to confirm the possibility of establishing a damage detection system by training a CNN on the ultrasonic wave propagation images obtained from the laser scanning of a pipe. The second is to check, by applying the transfer learning technique, whether damage can be detected efficiently with only a small amount of UWPI training data.

The structure of this paper is as follows. Section 2 describes the UWPI system, which uses laser scanning technology to create training data, and its theory. Section 3 describes the CNN algorithm and the EfficientDet-d0 model used to detect pipe damage. Section 4 presents the experiments and their results, and Section 5 presents the conclusions of the study.

2. Ultrasonic Wave Generation Mechanism Using Pulsed Laser

2.1. Ultrasonic Wave Mode Generation Theory

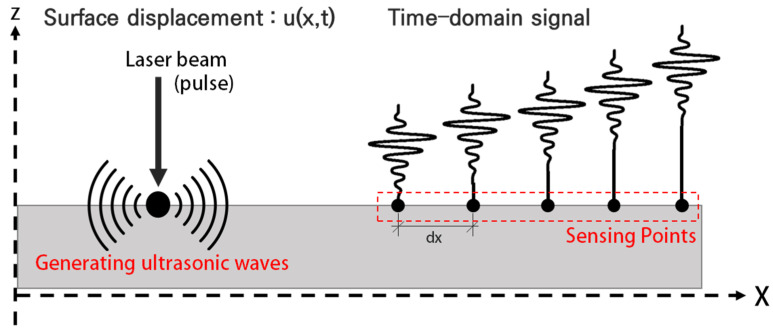

The generation of ultrasonic waves by a pulsed laser and the sensing of the generated waves take place as shown in Figure 1 [20]. A source of impulsive pressure is applied to the surface, and the resulting time records are tracked at different locations on the surface. When a pulsed laser beam strikes a target structure, various physical phenomena can occur. The basic problem of thermoelastic ultrasound generation can be divided into three sub-problems: moderate absorption of electromagnetic energy, reflection, and transmission of the laser radiation. As a result of these processes, the absorbed laser energy heats the area locally, which leads to a thermoelastic expansion of the material and the generation of ultrasonic waves [20,21,22].

Figure 1.

Ultrasonic wave mode generation in a plate [20].

2.2. Ultrasonic Wave Propagation Imaging System Configuration

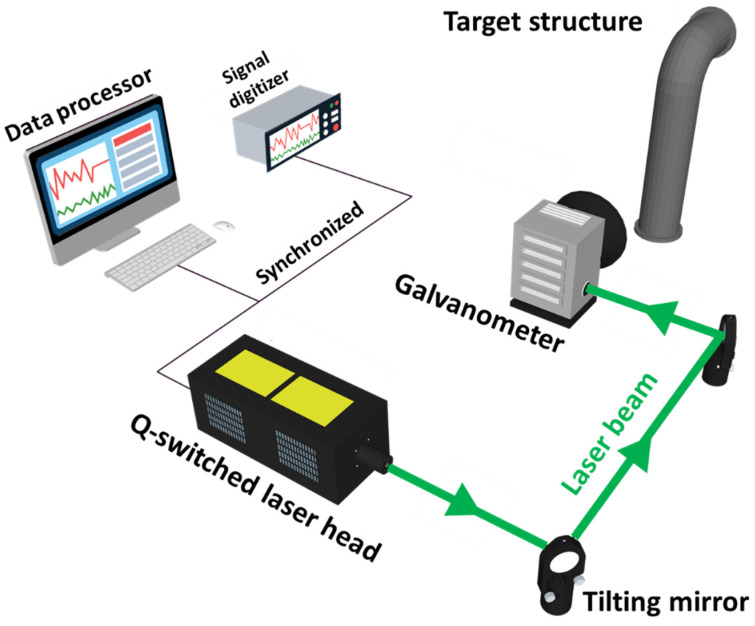

The UWPI system consists of a Q-switched laser system, a galvanometer (laser mirror scanner), an AE sensor (ultrasonic sensor), a digitizer, and an image processor, as shown in Figure 2. All devices are synchronized, and the ultrasonic response signal measured by the AE sensor is acquired by the digitizer at the same time.

Figure 2.

Conceptual diagram of an UWPI system.

The galvanometer (laser mirror scanner) is used to aim the laser at the target structure and to set the point at which each laser pulse is incident. The laser mirror scanner is driven by two tilting mirrors and is designed to operate at a wavelength of 1064 nm. The maximum angular velocity of the galvanometer is 100 rad/s within the range of ±0.35 rad (±20.05°). The rotation axes of the two tilting mirrors are perpendicular to each other, which allows the laser mirror scanner to cover the 2D scan area at high speed. The scanning takes place as follows: the laser mirror scanner first performs an upward scan along the vertical axis, then steps along the horizontal axis and performs a downward scan. Through this scanning process, the ultrasonic excitation points are arranged on the target structure in the form of a grid. The details of the laser system are specified in Table 1.

Table 1.

Specifications of the laser system.

| Laser Head: Brilliant Ultra GRM100 | Galvanometer: Scancube 10 |
|---|---|
| Wavelength: 1064 nm | Wavelength: 1064 nm |
| Energy per pulse: 100 mJ | Tracking error: 0.16 ms |
| Pulse repetition rate: 20 Hz | Positioning speed: 10 m/s |
| Pulse duration: 6.5 ns | Max. angular velocity: 100 rad/s (within ±0.35 rad) |
| Beam diameter: 3 mm | |
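The scan order described in this section (an upward pass along the vertical axis, a step along the horizontal axis, then a downward pass) can be illustrated with a short sketch. The function name `serpentine_scan` and the small grid size are illustrative assumptions and are not taken from the control software used in the study.

```python
def serpentine_scan(n_cols, n_rows):
    """Return (col, row) grid indices in serpentine order.

    Even columns are scanned upward (row 0 -> n_rows - 1) and odd columns
    downward, mimicking the alternating passes of the laser mirror scanner.
    """
    path = []
    for col in range(n_cols):
        if col % 2 == 0:
            rows = range(n_rows)               # upward pass
        else:
            rows = range(n_rows - 1, -1, -1)   # downward pass
        for row in rows:
            path.append((col, row))
    return path


# Hypothetical 3 x 4 grid, only to show the visiting order.
path = serpentine_scan(n_cols=3, n_rows=4)
print(path)
```

Every grid point is visited exactly once, and consecutive points are always neighbors, which is why this ordering suits a mirror scanner with a limited angular velocity.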

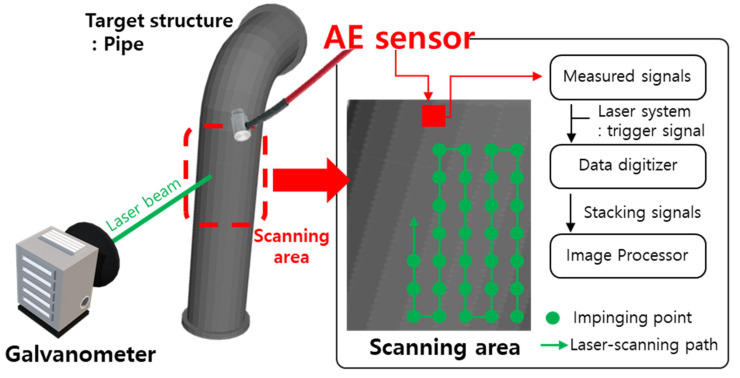

To drive the UWPI system and acquire the data required for ultrasound images from the acoustic emission sensor (AE sensor) with a built-in amplifier, the UWPI control system was configured as shown in Figure 3 using LabVIEW. The software program consists of a scanning grid configuration for a test object, a parameter setting part (sampling, frequency, number of measured samples, trigger signal level, etc.) necessary for a digitizer, a laser system and laser mirror scanner communication parameter setting part and an ultrasound imaging part.

Figure 3.

Laser-induced UWPI system.

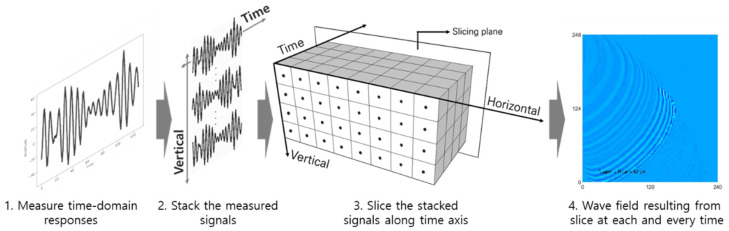

2.3. Ultrasonic Wave Propagation Imaging Algorithm

An ultrasonic wave image is generated from the time-domain ultrasonic signals measured by the UWPI system in three steps, as shown in Figure 4. First, the measured time-domain signals are arranged on a vertical plane, with each signal positioned at its laser beam incident point, to construct 3D data along the horizontal axis, the vertical axis, and the time axis. Next, the plane is cut at a specific time instant, so that the value at each excitation point is the ultrasonic amplitude at that instant. Finally, such images are reproduced along the time axis and played in quick succession, which yields an ultrasonic wave propagation movie [5].

Figure 4.

Process of ultrasonic wave propagation imaging (UWPI) system [5].
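As a minimal sketch of this arrangement, the following code places toy time signals at their excitation points and reads out one time slice. The function name `build_wavefield`, the dictionary layout, and the numeric values are hypothetical; the study itself produced the images with MATLAB.

```python
def build_wavefield(signals, n_x, n_y):
    """Arrange 1D time signals into a cube indexed as cube[t][y][x].

    `signals` maps each excitation point (x, y) to its list of samples;
    all signals are assumed to have the same length.
    """
    n_t = len(next(iter(signals.values())))
    cube = [[[0.0] * n_x for _ in range(n_y)] for _ in range(n_t)]
    for (x, y), samples in signals.items():
        for t, amplitude in enumerate(samples):
            cube[t][y][x] = amplitude
    return cube


# Toy data: a 3 x 2 grid with 4 time samples per point (made-up values).
signals = {(x, y): [float(x + 10 * y + 100 * t) for t in range(4)]
           for x in range(3) for y in range(2)}
cube = build_wavefield(signals, n_x=3, n_y=2)

# Each cube[t] is one frame; playing the frames in order gives the movie.
frame_at_t2 = cube[2]
print(frame_at_t2)
```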

3. Deep Learning-CNN

Deep learning refers to machine learning techniques that construct a model with a large number of neural layers for pattern recognition problems or feature point learning [23]. Since the publication of the deep belief network paper by Hinton at the University of Toronto in Canada in 2009 [24], deep learning has been developed along with various algorithms in many industries [25]. The neural network structures to which deep learning technology is applied include auto-encoders, restricted Boltzmann machines (RBMs), convolutional neural networks (CNNs), and recurrent neural networks (RNNs) [26,27,28,29]. In this study, we intend to utilize a CNN, which has been in the spotlight in image recognition and classification fields, to determine the presence or absence of damage to piping structures through image learning.

3.1. CNN

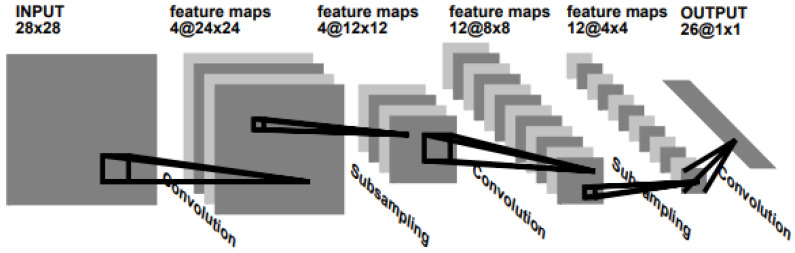

The convolutional neural network (CNN), a type of deep learning model, was devised by LeCun of New York University, USA. It is the most popular algorithm in the field of image recognition and classification [30]. CNNs have made great strides in image recognition and classification and have shown tremendous performance in computer vision [31].

The basic structure of the CNN is shown in Figure 5. The image is passed repeatedly through the filters of the convolution layers and through the pooling layers, and is then classified by a fully connected network (multilayer perceptron) with a softmax function.

Figure 5.

Convolutional neural network (CNN) for image processing [25].
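The pipeline of convolution, pooling, fully connected layer, and softmax can be traced on a tiny example. The sketch below uses plain Python lists, a hand-picked edge filter, and made-up fully connected weights; it is only an illustration of the data flow, omits activation functions and training, and is not the network used in this study.

```python
import math


def conv2d(image, kernel):
    """Valid 2D cross-correlation of a 2D list with a square kernel."""
    k = len(kernel)
    out_h, out_w = len(image) - k + 1, len(image[0]) - k + 1
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(k) for b in range(k))
             for j in range(out_w)] for i in range(out_h)]


def max_pool2x2(fmap):
    """Non-overlapping 2 x 2 max pooling (odd trailing rows/cols dropped)."""
    return [[max(fmap[i][j], fmap[i][j + 1],
                 fmap[i + 1][j], fmap[i + 1][j + 1])
             for j in range(0, len(fmap[0]) - 1, 2)]
            for i in range(0, len(fmap) - 1, 2)]


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# 5 x 5 toy image with a vertical edge between columns 2 and 3.
image = [[1 if j >= 3 else 0 for j in range(5)] for _ in range(5)]
kernel = [[-1, 1], [-1, 1]]            # simple vertical-edge filter

features = conv2d(image, kernel)       # 4 x 4 feature map
pooled = max_pool2x2(features)         # 2 x 2 after pooling
flat = [v for row in pooled for v in row]

# Hypothetical fully connected weights for two classes.
weights = [[0.5, 0.5, 0.5, 0.5],
           [-0.5, -0.5, -0.5, -0.5]]
scores = [sum(w * v for w, v in zip(ws, flat)) for ws in weights]
probs = softmax(scores)
print(pooled, probs)
```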

With TensorFlow [32] and Keras [33], open source software provided by Google, even people who are not software developers can apply deep learning and CNNs to image recognition and classification.

3.2. Object Detection

Object detection (OD) is an important computer vision task in digital image processing that detects instances of visual objects of a specific class (human, animal, car, etc.) [34]. It is generally divided into general object detection and detection applications. Detection applications are applied detection technologies, such as COVID-19 mask detection and automatic vehicle license plate recognition systems, that are commonly encountered in daily life. In this study, we train on laser scanning images of the pipe and detect the damage using application-specific detection.

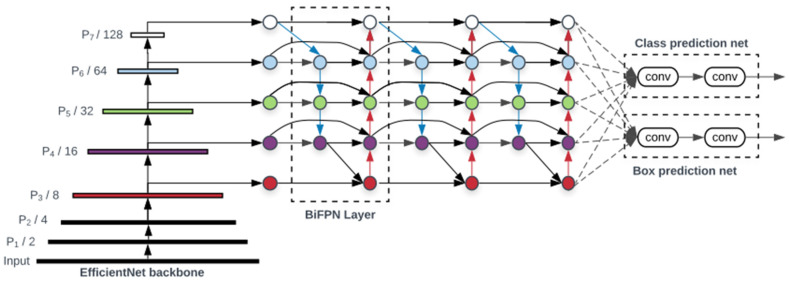

3.3. EfficientDet

EfficientDet, used in this study, ranked first among the models evaluated without extra training data in the 2019 object detection competition on the COCO minival dataset, and was found to be an efficient network that combines a low amount of computation (FLOPs) with good accuracy [35]. It achieved the highest mAP in single-model, single-scale performance comparisons and updated the SOTA (state of the art, the current highest level of results). EfficientDet differs from existing models in two respects. First, existing models developed cross-scale feature fusion network structures, but EfficientDet pointed out that input features of different resolutions should contribute differently to the output feature. To address this, a weighted bidirectional FPN (BiFPN) [35] structure was proposed, as shown in Figure 6. EfficientDet employs EfficientNet [36] as the backbone network, BiFPN as the feature network, and a shared class/box prediction network. Second, existing models depended on huge backbone networks and large input image sizes for accuracy, whereas EfficientDet uses compound scaling, which simultaneously increases the input resolution, depth, and width, the factors that determine the size and computational cost of the model.

Figure 6.

EfficientDet architecture [35].
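The idea that input features should contribute unequally to the fused output can be sketched with the fast normalized fusion described in the EfficientDet paper [35], in which each input is scaled by its non-negative weight divided by the sum of all weights plus a small constant. The function name, the toy feature vectors, and the weight values below are illustrative assumptions; a real BiFPN layer also resizes its inputs to a common resolution and applies a convolution after fusion.

```python
def fast_normalized_fusion(features, weights, eps=1e-4):
    """Fuse same-length feature vectors with non-negative weights.

    out = sum_i (w_i / (eps + sum_j w_j)) * feature_i, where each w_i is
    clipped at zero (ReLU) so every normalized weight lies in [0, 1].
    """
    w = [max(0.0, wi) for wi in weights]
    denom = eps + sum(w)
    length = len(features[0])
    return [sum(wi * f[k] for wi, f in zip(w, features)) / denom
            for k in range(length)]


# Two toy feature vectors standing in for two pyramid levels.
p_low = [1.0, 1.0, 1.0]
p_high = [3.0, 3.0, 3.0]

equal = fast_normalized_fusion([p_low, p_high], weights=[1.0, 1.0])
skewed = fast_normalized_fusion([p_low, p_high], weights=[1.0, 3.0])
print(equal, skewed)
```

With equal weights the output sits midway between the inputs; raising the weight of one input pulls the fused feature toward it, which is the behavior the weighted fusion is meant to learn.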

4. UWPI-System-Based Pipe Damage Detection Experiment and CNN Learning

4.1. Detecting External Damage to Pipe Bends Using UWPI System

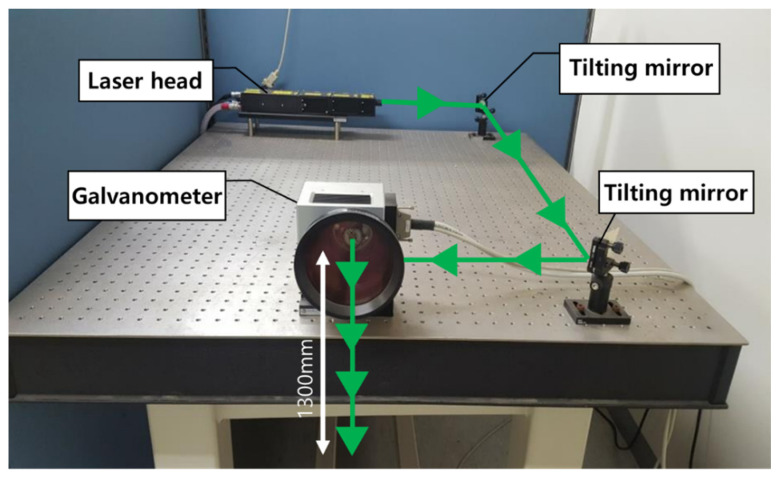

To obtain an image of pipe damage to be used in this study, a Nd:YAG pulse laser was used to generate Lamb waves, and an AE sensor was used to measure the waveform. The laser system used in the experiment is shown in Figure 7.

Figure 7.

A noncontact laser ultrasonic scanning system composed of a Q-switched Nd:YAG pulsed laser with a galvanometer for ultrasonic excitation scanning [5].

The Q-switched Nd:YAG pulse laser emits a laser beam through a galvanometer after a trigger signal is delivered [5]. Using the mirror inside the galvanometer, the laser beam is emitted to the target point along the scan path, and the measured data are sent to the digitizer through the acoustic emission (AE) sensor. Then, the digitized signal reaches the image processor, where the UWPI process occurs [6].

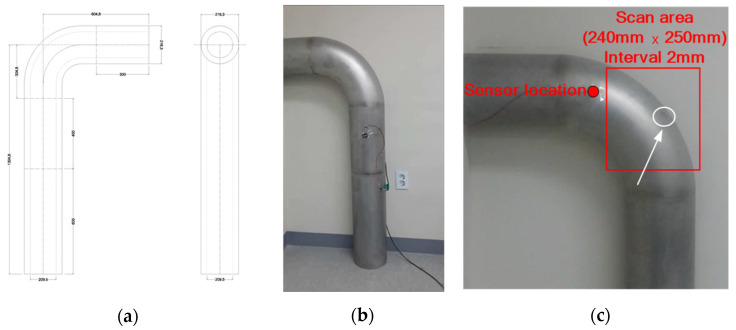

The test pipe used in this study was a stainless steel 304 specimen. Artificial damage 1 mm deep and 30 mm in diameter was introduced on the curved surface of the pipe, as shown in Figure 8.

Figure 8.

Stainless steel pipe damage diagnosis test specimens. (a) Stainless steel 304 specimen drawing; (b) stainless steel pipe specimen; (c) artificial damage carved on the specimen.

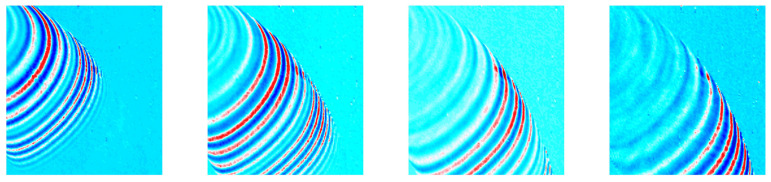

The laser scanning area is 240 mm wide and 250 mm high, and the laser excitation interval is 2 mm. The number of laser excitation points is 15,125, and the scanning time is 12.6 min. The result of the UWPI after scanning the pipe bend is shown in Figure 9.

Figure 9.

UWPI unfiltered video data to be used for deep learning.
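The reported number of excitation points and the scanning time can be cross-checked against the scan area, the 2 mm excitation interval, and the 20 Hz pulse repetition rate listed in Table 1. The grid convention below (end points counted along the width but not along the height) is an assumption chosen because it reproduces the reported 15,125 points; the convention actually used is not stated.

```python
width_mm, height_mm, step_mm = 240, 250, 2
pulse_rate_hz = 20  # pulse repetition rate from Table 1

# Assumed convention: 121 columns (both ends counted) and 125 rows.
n_cols = width_mm // step_mm + 1
n_rows = height_mm // step_mm
n_points = n_cols * n_rows

# One laser pulse per excitation point.
scan_time_min = n_points / pulse_rate_hz / 60
print(n_points, round(scan_time_min, 1))  # 15125 12.6
```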

4.2. CNN Learning Using Damage Data

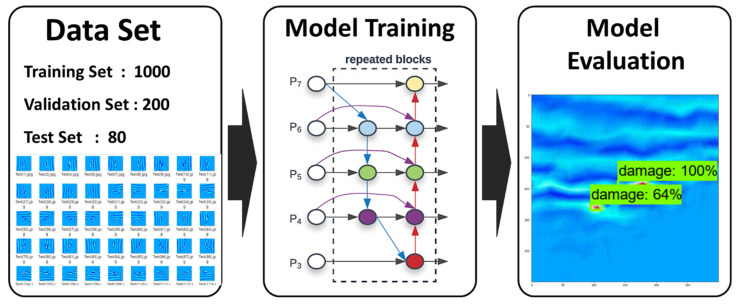

In this study, to find out the applicability of the pipe damage detection model using the laser scanning data of the curved pipe part, dataset construction, data learning and detection, and evaluation were performed in three steps as shown in Figure 10.

Figure 10.

Experimental procedure of the CNN training.

In the first step, an ultrasound image of the pipe was acquired using a laser scanning technique, and an image dataset was constructed using it. In the second step, the CNN (EfficientDet) model was trained using the image dataset. Finally, the learned model was evaluated using the test set.

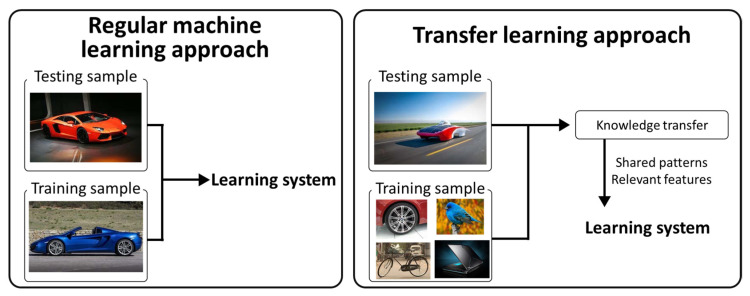

4.2.1. Transfer Learning

The dataset used in this study comprises about 1280 images, which makes it difficult to train and evaluate a model with a general learning method. For this reason, a transfer-learning-based EfficientDet model was applied, pretrained on the COCO dataset [37], which contains about 330,000 images and 80 categories. The structure of a deep learning network is very complex, so when the amount of training data is small, problems such as overfitting occur and the learning performance deteriorates. As the amount of training data increases, the deep learning network performance improves [38]. In the field of image object detection, when specific data such as the UWPI images used in this study are difficult to collect, transfer learning, in which a model pretrained on a large amount of data learns the new data, is widely used in various deep learning applications [19,39]. The difference between the existing learning method and transfer learning is shown in Figure 11. In this study, the pretrained EfficientDet model is trained and evaluated on pipe bend damage detection using transfer learning.

Figure 11.

Basic frameworks of traditional machine learning approaches and knowledge transfer approaches [19].

4.2.2. Train Dataset

In general, open image datasets that are freely available on the Internet, such as ImageNet and COCO [37], are widely used when developing deep learning algorithms. In such open image data, the image is object-centered, and the background of the object is often simple. However, no freely available open image dataset contained UWPI images of the kind used in this study. Therefore, the images used in this study were acquired using the laser scanning technology detailed in Section 2 of this paper.

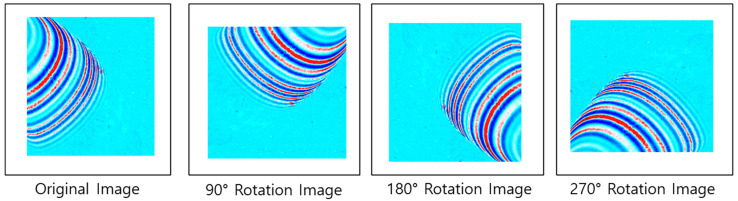

The damage on the curved part of the pipe was scanned, and the scan data were converted into UWPI image data using MATLAB software. A total of 500 images of size 1024 × 1024 were produced. Of these 500 images, 320 in which the damage information can be identified were extracted. To build a dataset large enough for deep learning, the images were rotated in 90-degree steps, as shown in Figure 12, which gave a total of 1280 images.

Figure 12.

Laser scanning image dataset configuration.
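The count of 1280 images is consistent with keeping four orientations (0, 90, 180, and 270 degrees) of each of the 320 selected images; that reading is an assumption, since the text only states that the images were rotated by 90 degrees. A minimal sketch of such an augmentation on a toy 2 × 2 image, with hypothetical helper names, is given below.

```python
def rotate90(image):
    """Rotate a 2D list 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def augment_with_rotations(image):
    """Return the image rotated by 0, 90, 180, and 270 degrees."""
    variants = [image]
    for _ in range(3):
        variants.append(rotate90(variants[-1]))
    return variants


tile = [[1, 2],
        [3, 4]]
variants = augment_with_rotations(tile)

n_selected = 320                      # images kept from the 500 scans
n_total = n_selected * len(variants)  # 320 * 4
print(variants, n_total)
```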

To increase resolution consistency and learning precision, the 1280 images were resized to 512 × 512. The image data were divided into 1000, 200, and 80 images for the training, validation, and test sets, respectively. After the split, the bounding box (the area of the actual damage location) was labeled on each image using LabelImg software. Because damage was determined from the laser scanning image of the pipe, no separate class names were designated, and a single collective label, “damage”, was used.

4.2.3. Training Dataset

The hardware used in this study was an Intel Xeon® Silver 4210 CPU, an Nvidia GeForce RTX 3060 GPU, and 32 GB of RAM. The main software environment consisted of Anaconda, Python 3.8, TensorFlow 2.5.0, CUDA 11.2, and cuDNN 8.1.1. The CNN-based pipe bend damage model was trained using the EfficientDet-d0 model [35].

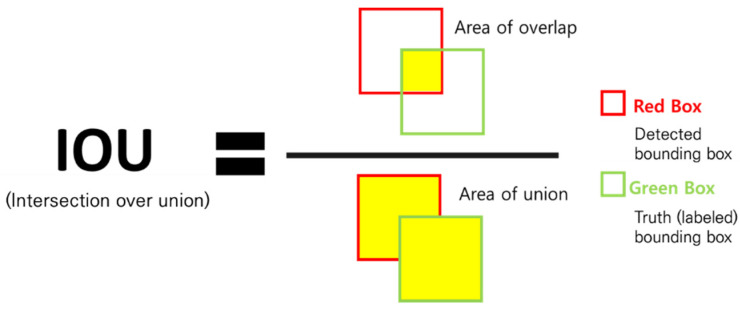

The model was evaluated using intersection over union (IOU) and mean average precision (mAP), which are evaluation indicators that are often used in object detection. Unlike the existing object classification evaluation method, object detection requires both the evaluation of the class classification and the bounding box to find the position. In this study, since there is only one class (damage), the bounding box was evaluated.

The calculation of IOU is shown in Figure 13; it indicates how well the bounding box is predicted. IOU is the ratio of the intersection of the predicted bounding box and the bounding box labeled during dataset construction to their union. In general, a detection is judged to be correct if its IOU value exceeds 0.5 [40].

Figure 13.

Intersection over union (IOU).
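The IOU computation can be written in a few lines. In the sketch below, boxes are assumed to be given as (x_min, y_min, x_max, y_max) in pixels, and the two example boxes are made-up values rather than labels from the dataset.

```python
def iou(box_a, box_b):
    """IOU of two boxes given as (x_min, y_min, x_max, y_max)."""
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])
    # Width and height of the overlap are zero when the boxes are disjoint.
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


ground_truth = (100, 100, 200, 200)   # hypothetical labeled box
prediction = (110, 110, 210, 210)     # hypothetical predicted box
score = iou(ground_truth, prediction)
is_correct = score > 0.5
print(round(score, 3), is_correct)
```

Here the overlap is 90 × 90 pixels and the union is 11,900 pixels, so the IOU is about 0.68 and the prediction counts as correct under the 0.5 threshold.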

Finally, mAP is an index used as an evaluation criterion in PASCAL VOC; it expresses the performance of an object detection algorithm as the mean of the average precision (AP) over all classes [41]. Precision and recall are commonly used to evaluate the performance of detection models. Precision is the ratio of correct detections to all detections, as in Equation (1), and recall is the ratio of correct detections to all actual (ground-truth) objects, as in Equation (2). Since the two indicators trade off against each other, AP, the area under the precision–recall curve, is used. The closer the AP value is to 1, the higher the performance of the object detection algorithm.

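$$\text{Precision} = \frac{TP}{TP + FP} \tag{1}$$

$$\text{Recall} = \frac{TP}{TP + FN} \tag{2}$$

Here TP, FP, and FN denote the numbers of true positives, false positives, and false negatives, respectively.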
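How precision, recall, and AP relate can be shown on a handful of detections. The sketch below ranks detections by confidence, accumulates precision and recall, and integrates the precision–recall curve with all-point interpolation. The detection list is made up, and the interpolation scheme is one common choice; the exact procedure of the evaluation tool used in this study is not specified here.

```python
def precision_recall_curve(detections, n_ground_truth):
    """Cumulative precision and recall over detections sorted by confidence.

    `detections` is a list of (confidence, is_true_positive) pairs.
    """
    ordered = sorted(detections, key=lambda d: d[0], reverse=True)
    tp = fp = 0
    precisions, recalls = [], []
    for _, is_tp in ordered:
        if is_tp:
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))
        recalls.append(tp / n_ground_truth)
    return precisions, recalls


def average_precision(precisions, recalls):
    """Area under the precision-recall curve (all-point interpolation)."""
    # Make precision non-increasing as recall grows.
    interp = precisions[:]
    for i in range(len(interp) - 2, -1, -1):
        interp[i] = max(interp[i], interp[i + 1])
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(interp, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


# Hypothetical detections: (confidence, matched a ground-truth box?).
detections = [(0.9, True), (0.8, False), (0.7, True), (0.6, True)]
precisions, recalls = precision_recall_curve(detections, n_ground_truth=4)
ap = average_precision(precisions, recalls)
print(precisions, recalls, ap)
```

With a single class, as in this study, the mAP equals the AP of that class.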

4.2.4. UWPI Data Deep Learning Result

Prior to conducting this study, a transfer learning technique using a pretrained model used in object detection was applied to compensate for the lack of training data. Through the learning process, it was possible to know whether the used model was learning the image data well, by looking at the predicted values and the actual values. Learning was carried out in three stages as shown in Table 2. The same hardware specifications as well as the same batch size were applied for accurate comparison. For the batch size, step, and epoch values applied to training, Equation (3), which is widely used in the field of object detection, was used.

$$\text{Epochs} = \frac{\text{Batch size} \times \text{Steps}}{\text{No. of samples}} \tag{3}$$

Table 2.

Pipe damage detection CNN training configuration information.

| Batch Size | Steps | Epochs | No. of Samples |

|---|---|---|---|

| 8 | 10,000 | 80 | 1000 |

| 8 | 30,000 | 240 | 1000 |

| 8 | 50,000 | 400 | 1000 |
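The epoch values in Table 2 follow from the batch size, the number of steps, and the number of training samples: each step processes one batch, so the number of passes over the training set is the batch size times the number of steps divided by the number of samples. The helper name below is illustrative.

```python
def epochs_from_steps(batch_size, steps, n_samples):
    """Number of passes over the training set after `steps` updates."""
    return batch_size * steps / n_samples


for steps in (10_000, 30_000, 50_000):
    epochs = epochs_from_steps(batch_size=8, steps=steps, n_samples=1000)
    print(steps, epochs)
```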

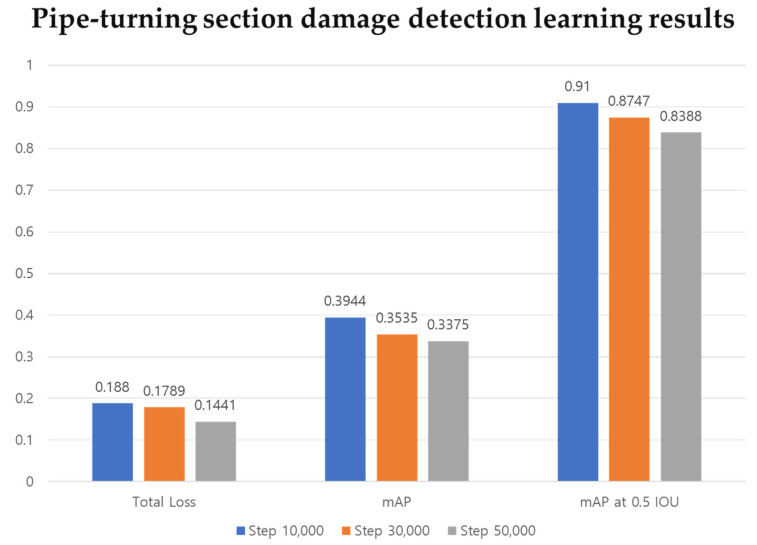

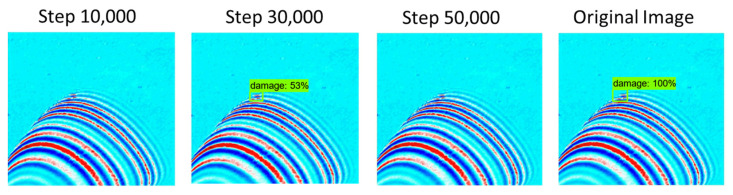

Figure 14 shows the learning results after 10,000, 30,000, and 50,000 steps. The total loss is the sum of the damage detection loss and the bounding box regression loss at each step. In all three learning stages, the total loss was less than 0.2. Comparing the results after 10,000 and 50,000 steps, the loss decreased from 0.188 to 0.1441 as training was repeated, which indicates that the learning progressed normally.

Figure 14.

Comparison of deep learning results according to steps (Total loss, mAP, mAP at 0.5 IOU).

In the performance evaluation of the trained model, the average mAP values for the pipe damage data were 0.3944, 0.3535, and 0.3375 (as shown in Figure 14), and the average mAP values at 0.5 IOU were 0.91, 0.8747, and 0.8388, after 10,000, 30,000, and 50,000 steps, respectively. Given that the average mAP of the COCO 2017 pretrained CNN (EfficientDet-d0) algorithm used in this study is 0.336 [35], it can be deduced that the learning proceeded normally. The evaluation used a test image set that was separated before training. Evaluating all 80 test images gave the results shown in Table 3.

Table 3.

Damage detection rate of test images for each step.

| Test Image | Step 10,000 | Step 30,000 | Step 50,000 |

|---|---|---|---|

| 1 | 79% | 89% | 97% |

| 2 | 79% | 88% | 96% |

| 3 | 79% | 90% | 97% |

| 4 | 78% | 91% | 96% |

| 5 | 77% | 91% | 98% |

| 6 | 72% | 90% | 98% |

| 7 | 73% | 90% | 94% |

| 8 | 77% | 89% | 94% |

| 9 | 77% | 89% | 94% |

| 10 | 77% | 90% | 92% |

| 11 | 78% | 87% | 84% |

| 12 | 77% | 87% | 92% |

| 13 | 82% | 83% | 86% |

| 14 | 85% | 88% | 90% |

| 15 | 86% | 85% | 89% |

| 16 | 85% | 81% | 89% |

| 17 | 85% | 80% | 91% |

| 18 | 82% | 75% | 88% |

| 19 | 76% | 76% | 90% |

| 20 | 66% | 76% | 94% |

| 21 | 67% | 81% | 91% |

| 22 | 66% | 83% | 90% |

| 23 | 62% | 78% | 95% |

| 24 | 59% | 78% | 93% |

| 25 | 58% | 73% | 89% |

| 26 | 67% | 77% | 88% |

| 27 | 68% | 72% | 85% |

| 28 | 68% | 70% | 81% |

| 29 | 58% | 72% | 87% |

| 30 | 67% | 74% | 83% |

| 31 | 71% | 80% | 75% |

| 32 | 73% | 83% | 68% |

| 33 | 77% | 90% | 85% |

| 34 | 80% | 92% | 94% |

| 35 | 82% | 92% | 94% |

| 36 | 82% | 92% | 94% |

| 37 | 83% | 91% | 94% |

| 38 | 84% | 93% | 94% |

| 39 | 86% | 93% | 96% |

| 40 | 87% | 94% | 99% |

| 41 | 87% | 94% | 98% |

| 42 | 87% | 92% | 98% |

| 43 | 88% | 93% | 97% |

| 44 | 88% | 95% | 98% |

| 45 | 88% | 94% | 98% |

| 46 | 88% | 94% | 97% |

| 47 | 88% | 95% | 98% |

| 48 | 89% | 96% | 98% |

| 49 | 86% | 95% | 95% |

| 50 | 85% | 95% | 96% |

| 51 | 82% | 93% | 96% |

| 52 | 85% | 93% | 96% |

| 53 | 87% | 93% | 96% |

| 54 | 88% | 93% | 98% |

| 55 | 89% | 93% | 98% |

| 56 | 88% | 94% | 97% |

| 57 | 85% | 92% | 96% |

| 58 | 82% | 93% | 94% |

| 59 | 83% | 93% | 94% |

| 60 | 84% | 90% | 92% |

| 61 | 84% | 92% | 96% |

| 62 | 83% | 91% | 96% |

| 63 | 83% | 94% | 93% |

| 64 | 83% | 92% | 92% |

| 65 | 80% | 92% | 94% |

| 66 | 82% | 92% | 94% |

| 67 | 82% | 89% | 96% |

| 68 | 81% | 87% | 94% |

| 69 | 77% | 89% | 97% |

| 70 | 77% | 90% | 97% |

| 71 | 77% | 87% | 92% |

| 72 | 63% | 68% | 64% |

| 73 | 51% | 57% | 0% |

| 74 | 0% | 66% | 52% |

| 75 | 58% | 69% | 0% |

| 76 | 50% | 70% | 53% |

| 77 | 0% | 53% | 0% |

| 78 | 0% | 56% | 58% |

| 79 | 67% | 76% | 50% |

| 80 | 87% | 93% | 98% |

| Average detection rate | 75% | 86% | 88% |

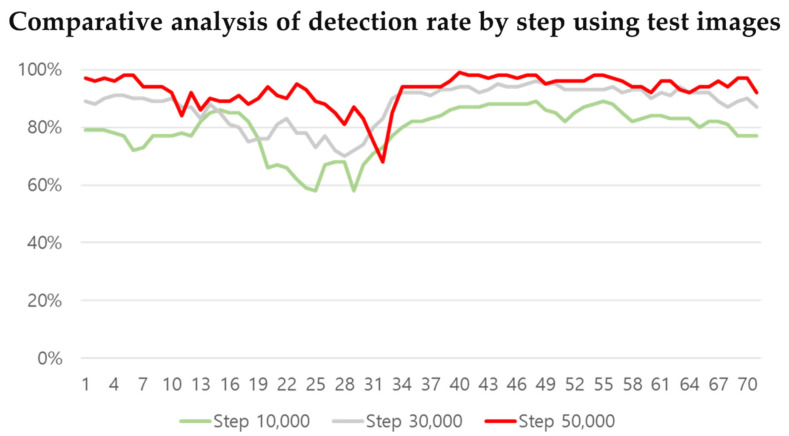

In the evaluation at steps 10,000, 30,000, and 50,000, average detection rates of 75%, 86%, and 88%, respectively, were confirmed. In the performance evaluation of the learning model, the average mAP was lower at steps 30,000 and 50,000 than at step 10,000. In the direct evaluation on the test images, however, the damage detection rate was highest at step 50,000. Excluding non-detections, the detection rate ranged from 50% to 89% at step 10,000, from 53% to 96% at step 30,000, and from 50% to 99% at step 50,000. To show the overall trend of learning, the undetected data are excluded from the graph in Figure 15. The graph shows that the most accurate result was obtained after 50,000 steps.

Figure 15.

Comparative analysis of detection rate by step using test images.
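The effect of excluding undetected images from a summary can be seen on a small excerpt of Table 3. The sketch below uses the step 10,000 detection rates of test images 72 to 80 only, so its averages describe that excerpt and are not the averages reported for the full test set.

```python
from statistics import mean

# Detection rates (%) of test images 72-80 at step 10,000, from Table 3.
rates = [63, 51, 0, 58, 50, 0, 0, 67, 87]

# A rate of 0% marks an image on which the damage was not detected.
detected = [r for r in rates if r > 0]
n_missed = len(rates) - len(detected)

mean_all = mean(rates)           # non-detections counted as 0%
mean_detected = mean(detected)   # non-detections excluded
print(n_missed, round(mean_all, 1), round(mean_detected, 1))
```

Counting the three non-detections as 0% pulls the mean of this excerpt well below the mean over the detected images, which is why it matters whether a plot or an average includes them.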

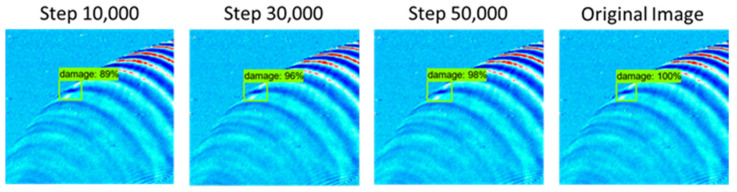

Figure 16 shows the test results with the highest detection rates alongside the original image data; an average detection rate of 89% or more was achieved for these images. Over the whole test, no erroneous detection occurred. However, at steps 10,000 and 50,000, three non-detections occurred at each step, as shown in Figure 17.

Figure 16.

Test result of damage detection with excellent accuracy.

Figure 17.

Test result of damage not detected and low accuracy.

In general, the main cause of non-detection in learning results is that there is no difference in color or contrast between an object and the background. This problem is due to a shape that appears depending on the background and physical environment such as color or lighting of the object [42].

The test results showed no erroneous detection in this study, while three cases of non-detection occurred at each of steps 10,000 and 50,000. Two reasons are considered likely. First, in the undetected images there is little contrast between the background color of the image and the color of the damaged part, which is believed to have affected the learning results. Second, the COCO 2017 dataset used for pretraining contains no UWPI images of the kind used in this study, which presumably contributed to some non-detections. It can therefore be expected that detection will improve if many more pipe UWPI images are acquired and used for deep learning.

5. Conclusions

In this study, we proposed an automatic damage detection system for pipe bends using a CNN object detection algorithm with laser scanning data to efficiently extend the safety management of pipes used in the construction industry and many industries. Using a Q-switched Nd:YAG pulse laser and an acoustic emission (AE) sensor, UWPI image data were produced for the detection of damage introduced artificially to the pipe bend. A damage detection system was constructed using a total of 1280 training images obtained through post-processing of the UWPI data. Since 1280 images are insufficient to proceed with deep learning, a transfer learning technique using the pretrained COCO 2017 EfficientDet-d0 algorithm was applied.

Examining the model trained on the pipe damage data confirmed that the detection performance index, mAP, was higher than the value of 0.336 from the COCO 2017 EfficientDet-d0 model. This indicates that the model training was successful, and that transfer learning with 1280 images showed no performance difference from existing learning methods that use a large amount of data. In the CNN learning results on the pipe damage data, three cases were not detected after 10,000 steps and after 50,000 steps. It was deduced that this small number of non-detections was due to the insufficient quality and quantity of the images. To address the non-detection problem, we intend to carry out the following additional research.

Through additional experiments and research, we intend to secure UWPI data according to the damage size using laser scanning techniques for the components (curved part, curved pipe part, bolted joint part, welding, etc.) of pipes.

This study confirmed the possibility of detecting damage to pipes based on laser scanning through the transfer learning technique, and based on this, we intend to propose a better detection technique using new algorithms and large amounts of data.

To acquire ultrasonic signals in the laser scanning system, this study used an AE sensor installed directly on the pipe. We therefore intend to develop a noncontact nondestructive system for efficient pipe damage detection by using a laser Doppler vibrometer (LDV) instead of an AE sensor.

In this study, using the UWPI system and CNN, an automatic pipe bend damage detection system was proposed. Therefore, it is expected that efficient maintenance will be possible for piping used in construction and many industries.

Author Contributions

Conceptualization, C.L., K.D.T. and B.Y.; methodology, C.L., K.D.T. and B.Y; formal analysis, B.Y.; software, B.Y.; investigation, B.Y.; resources, S.P.; writing—original draft preparation, B.Y.; writing—review and editing, B.Y.; visualization, K.D.T.; supervision, C.L.; project administration, B.Y.; funding acquisition, S.P. All authors have read and agreed to the published version of the manuscript.

Funding

This work was financially supported by the National Research Foundation of Korea (NRF) grant funded by the Korean government (MSIP) (No. NRF-2017R1A2B3007607) (NRF-2021R1A4A3033128).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1.Gunarathna R.N.P., Sivahar V. Challenges in Monitoring Metallic Pipeline Corrosion Using Ultrasonic Waves—A Review Article. Eng. J. Inst. Eng. 2021;54:67–75. [Google Scholar]

- 2.Lee C., Kang D., Park S. Visualization of Fatigue Cracks at Structural Members Using a Pulsed Laser Scanning System. Res. Nondestruct. Eval. 2014;26:123–132. doi: 10.1080/09349847.2014.985349. [DOI] [Google Scholar]

- 3.Lee C., Park S. Flaw Imaging Technique for Plate-Like Structures Using Scanning Laser Source Actuation. Shock. Vib. 2014;2014:1–14. doi: 10.1155/2014/725030. [DOI] [Google Scholar]

- 4.Lee C., Park S. Damage visualization of pipeline structures using laser-induced ultrasonic waves. Struct. Heal. Monit. 2015;14:475–488. doi: 10.1177/1475921715596220. [DOI] [Google Scholar]

- 5.Tran D.Q., Kim J.-W., Tola K.D., Kim W., Park S. Artificial Intelligence-Based Bolt Loosening Diagnosis Using Deep Learning Algorithms for Laser Ultrasonic Wave Propagation Data. Sensors. 2020;20:5329. doi: 10.3390/s20185329. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Tola K.D., Tran D.Q., Yu B., Park S. Determination of Plate Corrosion Dimension Using Nd:YAG Pulsed Laser-generated Wavefield and Experimental Dispersion Curves. Materials. 2020;13:1436. doi: 10.3390/ma13061436. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Lee C., Zhang A., Yu B., Park S. Comparison Study between RMS and Edge Detection Image Processing Algorithms for a Pulsed Laser UWPI (Ultrasonic Wave Propagation Imaging)-Based NDT Technique. Sensors. 2017;17:1224. doi: 10.3390/s17061224. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Michaels J.E. Ultrasonic wavefield imaging: Research tool or emerging NDE method? AIP Conf. Proc. 2017;1806:020001. doi: 10.1063/1.4974542. [DOI] [Google Scholar]

- 9. Carmen V., David S.M. Intensity-Based Optical Systems for Fluid Level Detection. Recent Pat. Electr. Electron. Eng. 2012;5:85–95.

- 10. Safizadeh M., Azizzadeh T. Corrosion detection of internal pipeline using NDT optical inspection system. NDT E Int. 2012;52:144–148. doi: 10.1016/j.ndteint.2012.07.008.

- 11. Chen G., Katagiri T., Song H., Yusa N., Hashizume H. Investigation of the effect of a bend on pipe inspection using microwave NDT. NDT E Int. 2020;110:102208. doi: 10.1016/j.ndteint.2019.102208.

- 12. Miro J.V., Hunt D., Ulapane N., Behrens M. Field and Service Robotics. Volume 5. Springer; Manhattan, NY, USA: 2017. Towards Automatic Robotic NDT Dense Mapping for Pipeline Integrity Inspection; pp. 319–333.

- 13. Kim S., Kim C.H., Bae Y.-G., Na H., Jung S. NDT inspection mobile robot with spiral driven mechanism in pipes; Proceedings of the IEEE ISR 2013; Seoul, Korea. 24–26 October 2013; pp. 1–2.

- 14. Krys D., Najjaran H. Development of Visual Simultaneous Localization and Mapping (VSLAM) for a Pipe Inspection Robot; Proceedings of the 2007 International Symposium on Computational Intelligence in Robotics and Automation; Jacksonville, FL, USA. 20–23 June 2007; pp. 344–349.

- 15. Dai L., Feng H., Wang T., Xuan W., Liang Z., Yang X. Pipe Crack Recognition Based on Eddy Current NDT and 2D Impedance Characteristics. Appl. Sci. 2019;9:689. doi: 10.3390/app9040689.

- 16. Cha Y.-J., Choi W., Büyüköztürk O. Deep Learning-Based Crack Damage Detection Using Convolutional Neural Networks. Comput. Civ. Infrastruct. Eng. 2017;32:361–378. doi: 10.1111/mice.12263.

- 17. Azimi M., Eslamlou A.D., Pekcan G. Data-Driven Structural Health Monitoring and Damage Detection through Deep Learning: State-of-the-Art Review. Sensors. 2020;20:2778. doi: 10.3390/s20102778.

- 18. Zhu Y., Newsam S. DenseNet for dense flow; Proceedings of the 2017 IEEE International Conference on Image Processing (ICIP); Beijing, China. 17–20 September 2017; pp. 790–794.

- 19. Shao L., Zhu F., Li X. Transfer Learning for Visual Categorization: A Survey. IEEE Trans. Neural Netw. Learn. Syst. 2015;26:1019–1034. doi: 10.1109/TNNLS.2014.2330900.

- 20. Hayward G., Hyslop J. Determination of lamb wave dispersion data in lossy anisotropic plates using time domain finite element analysis. Part I: Theory and experimental verification. IEEE Trans. 2006;53:443–448. doi: 10.1109/tuffc.2006.1593383.

- 21. Drain L.E. Laser Ultrasonics Techniques and Applications. Routledge; Abingdon, UK: 2019.

- 22. White R.M. Generation of Elastic Waves by Transient Surface Heating. J. Appl. Phys. 1963;34:3559–3567. doi: 10.1063/1.1729258.

- 23. Zhu H., Ge W., Liu Z. Deep Learning-Based Classification of Weld Surface Defects. Appl. Sci. 2019;9:3312. doi: 10.3390/app9163312.

- 24. Hinton G.E. Deep belief networks. Scholarpedia. 2009;4:5947. doi: 10.4249/scholarpedia.5947.

- 25. LeCun Y., Bengio Y. The Handbook of Brain Theory and Neural Networks. MIT Press; Cambridge, MA, USA: 1997. Convolutional networks for images, speech, and time series; pp. 255–258.

- 26. Vincent P., Larochelle H., Lajoie I., Bengio Y., Manzagol P.-A. Stacked Denoising Autoencoders: Learning Useful Representations in a Deep Network with a Local Denoising Criterion. J. Mach. Learn. Res. 2010;11:3371–3408.

- 27. Hinton G.E., Osindero S., Teh Y.-W. A Fast Learning Algorithm for Deep Belief Nets. Neural Comput. 2006;18:1527–1554. doi: 10.1162/neco.2006.18.7.1527.

- 28. LeCun Y., Bottou L., Bengio Y., Haffner P. Gradient-based learning applied to document recognition. Proc. IEEE. 1998;86:2278–2324. doi: 10.1109/5.726791.

- 29. Jozefowicz R., Vinyals O., Schuster M., Shazeer N., Wu Y. Exploring the limits of language modeling; Proceedings of the 2011 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP); Prague, Czech Republic. 22–27 May 2011; pp. 5528–5531.

- 30. LeCun Y., Bengio Y., Hinton G. Deep learning. Nature. 2015;521:436–444. doi: 10.1038/nature14539.

- 31. Lee H.S. Master’s Thesis. Graduate School of Chung-Ang University; Seoul, Korea: 2018. A Structure of Convolutional Neural Networks for Image Contents Search.

- 32. TensorFlow. Available online: https://www.tensorflow.org/ (accessed on 9 November 2015).

- 33. Keras. Available online: https://github.com/keras-team/keras (accessed on 14 June 2015).

- 34. Abadi M., Barham P., Chen J., Chen Z., Davis A., Dean J., Devin M., Ghemawat S., Irving G., Isard M., et al. TensorFlow: A system for large-scale machine learning; Proceedings of the 12th USENIX Symposium on Operating Systems Design and Implementation; Savannah, GA, USA. 2–4 November 2016; pp. 256–283.

- 35. Tan M., Pang R., Le Q.V. EfficientDet: Scalable and Efficient Object Detection; Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR); Seattle, WA, USA. 13–19 June 2020; pp. 10778–10787.

- 36. Tan M., Le Q.V. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks; Proceedings of the 36th International Conference on Machine Learning (ICML 2019); Long Beach, CA, USA. 28 May 2019; pp. 6105–6114.

- 37. Lin T.Y., Maire M., Belongie S., Hays J., Perona P., Ramanan D. Microsoft COCO: Common objects in context. Comput. Vis. ECCV. 2014;8693:740–755.

- 38. Liu B., Wei Y., Zhang Y., Yang Q. Deep Neural Networks for High Dimension, Low Sample Size Data; Proceedings of the Twenty-Sixth International Joint Conference on Artificial Intelligence (IJCAI-17); Melbourne, Australia. 19–25 August 2017; pp. 2287–2293.

- 39. Pan S.J., Yang Q. A Survey on Transfer Learning. IEEE Trans. Knowl. Data Eng. 2010;22:1345–1359. doi: 10.1109/TKDE.2009.191.

- 40. Rezatofighi H., Tsoi N., Gwak J., Sadeghian A., Reid I., Savarese S. Generalized Intersection Over Union: A Metric and a Loss for Bounding Box Regression; Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR); Long Beach, CA, USA. 15–20 June 2019; pp. 658–666.

- 41. Everingham M., Van Gool L., Williams C.K.I., Winn J., Zisserman A. The Pascal Visual Object Classes (VOC) Challenge. Int. J. Comput. Vis. 2010;88:303–338. doi: 10.1007/s11263-009-0275-4.

- 42. Kim M., Shin S., Suh Y. Application of Deep Learning Algorithm for Detecting Construction Workers Wearing Safety Helmet Using Computer Vision. J. Korean Soc. Saf. 2019;34:29–37. doi: 10.14346/JKOSOS.2019.34.6.29.