Abstract

The emergence of pose estimation algorithms represents a potential paradigm shift in the study and assessment of human movement. Human pose estimation algorithms leverage advances in computer vision to track human movement automatically from simple videos recorded using common household devices with relatively low-cost cameras (e.g., smartphones, tablets, laptop computers). In our view, these technologies offer clear and exciting potential to make measurement of human movement substantially more accessible; for example, a clinician could perform a quantitative motor assessment directly in a patient’s home, a researcher without access to expensive motion capture equipment could analyze movement kinematics using a smartphone video, and a coach could evaluate player performance with video recordings directly from the field. In this review, we combine expertise and perspectives from physical therapy, speech-language pathology, movement science, and engineering to provide insight into applications of pose estimation in human health and performance. We focus specifically on applications in areas of human development, performance optimization, injury prevention, and motor assessment of persons with neurologic damage or disease. We review relevant literature, share interdisciplinary viewpoints on future applications of these technologies to improve human health and performance, and discuss perceived limitations.

Keywords: pose estimation, movement tracking, computer vision, artificial intelligence, markerless motion capture, assessment, kinematics, development, machine learning

1. Introduction

Humans have long been interested in quantitative measurement of our movements [1,2]. This is evident in many aspects of life: an Olympic judge scrutinizes and scores a figure skater’s performance; a physical therapist measures a patient’s walking speed to assess mobility; a running coach inspects and adjusts a distance runner’s foot-strike pattern to prevent injury. We also interpret the movements of others to communicate (e.g., sign language) or make inferences about emotional state (i.e., “reading body language”; [3,4,5]).

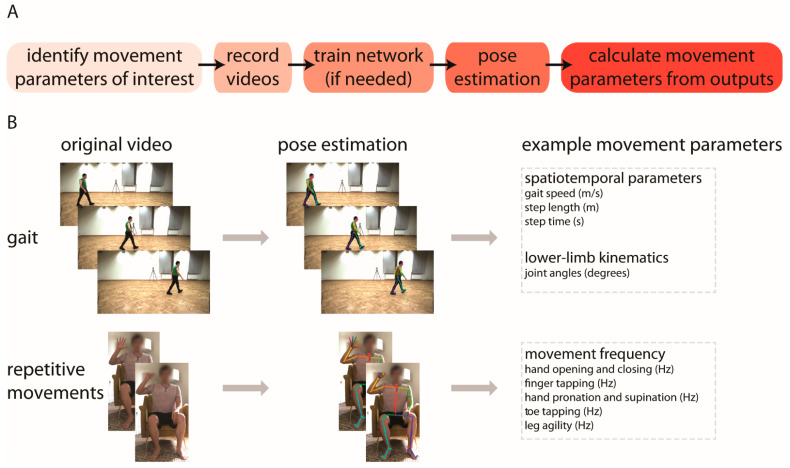

In this review, we focus on applications of human pose estimation, an emerging technology for quantitative measurement of human movement kinematics [6,7,8,9,10,11,12,13]. Pose estimation algorithms use computer vision to identify key landmarks on the body (e.g., fingertip, elbow, knee) from simple digital videos that can be recorded using common household devices (example workflow and applications are shown in Figure 1A,B, respectively). This simplicity offers exciting potential for measuring whole-body kinematics in nearly any setting, with minimal costs of money, time, and effort. We also see significant opportunities for the ongoing maturation and validation of these approaches to offer robust supplements or alternatives to subjective visual motor assessments and to improve accessibility to measurement of movement kinematics by removing long-standing barriers. The ability to capture quantitative, whole-body kinematics using a household device could substantially reduce reliance on traditional methods that are inaccessible or data-limited, such as expensive research-grade motion capture systems or wearable devices.

Figure 1.

(A) Basic workflow for using pose estimation to measure movement kinematics from video; (B) Example applications of using pose estimation to quantify spatiotemporal and kinematic gait parameters (top) and frequencies of repetitive upper and lower extremity movements (bottom). These applications are described in greater detail in [14,15]. The gait images shown in (B) are taken from the GPJATK dataset [16].

We focus specifically on applications of human pose estimation for improving human health and performance. We note that pose estimation algorithms are used for many other applications (e.g., intelligent video surveillance [17], activity recognition [18], sign language translation [19]), and prior reviews have discussed technical aspects of various algorithms and their perceived advantages and disadvantages [20,21,22]. Here, we focus less on the technical aspects of pose estimation and instead discuss applications of these algorithms, both in terms of current applications and those that we perceive may be possible in the future. We cover areas of application across the human lifespan, including human development, human performance optimization, musculoskeletal injury prevention, and motor assessment of persons with neurologic damage or disease.

We also integrate the clinical perspective on pose estimation applications. Much prior work on human pose estimation (including our own) has suggested promise for clinical application. However, in our view, the clinician’s (i.e., end user) viewpoint on these potential applications has not received adequate consideration or representation, and applications of pose estimation have not been contextualized within current models of clinical care. We aim to address these issues by providing an interdisciplinary perspective that integrates views from physical therapy, speech-language pathology, movement science, and engineering.

2. What Is Pose Estimation?

Markerless human pose estimation relies on recent advances in computer vision to automatically track anatomical landmarks—so-called keypoints—of the human body from digital videos. Examples of possible tracked keypoints include the ankle, knee, hip, wrist, elbow, shoulder, foot (e.g., heel, big toe, and small toe), hand (e.g., tip and three joints of every finger), and face (e.g., ears, eyes, nose, and mouth). Current state-of-the-art algorithms used to track human poses have been trained on large datasets of digital images and/or videos of human movement in which keypoints have been manually annotated [23,24]. The trained algorithms can then track new, unlabeled videos of humans. This enables automated, video-based human movement tracking, with the greatest accuracy achieved for movements similar to those in the training dataset.

The primary output from pose estimation is a series of two-dimensional pixel coordinates of the tracked keypoints, as they appear projected onto the image sensor of the camera. Approaches to analyzing and processing these coordinates fall into three broad categories. First, some studies use the output to represent planar two-dimensional kinematics of human movement, from which specific metrics of interest can be calculated [15,25,26,27,28]. For example, this approach may be appropriate when recording a sagittal-view video of human locomotion and subsequently calculating sagittal gait kinematics (e.g., lower limb joint angles). Second, it is possible to reconstruct three-dimensional kinematics of human movement by capturing videos from multiple viewpoints using at least two cameras [29,30,31]. This approach offers significant advantages over a single camera view, in part because occlusions occur and out-of-plane motions are not well captured by a single camera; however, it also has potential drawbacks associated with setup and computational complexity. Last, it is also possible to use the pose estimation output as an input for further processing by neural networks designed to predict specific metrics of interest [32,33,34]. Subsequent processing by neural networks may be appropriate when predicting a scalar value such as peak knee flexion during walking or clinical ratings, but this approach may be less accurate when predicting frame-by-frame time-series data. This inaccuracy commonly arises because most algorithms do not aim to minimize frame-to-frame variation when performing pose estimation with video data.
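To make the first category concrete, the following is a minimal sketch of how a planar joint angle might be computed from two-dimensional keypoints. It is an illustration under stated assumptions rather than the method of any cited study: the function name `joint_angle_deg`, the array layout (one row of x, y pixel coordinates per frame), and the example coordinates are all hypothetical, and the calculation assumes a camera view that is approximately perpendicular to the plane of motion.

```python
import numpy as np

def joint_angle_deg(proximal, joint, distal):
    """Included angle (degrees) at `joint` between the segments
    joint->proximal and joint->distal, computed frame by frame.

    Each input is an array of shape (n_frames, 2) holding x, y pixel
    coordinates of one keypoint (hypothetical layout for this sketch).
    """
    proximal, joint, distal = (
        np.asarray(a, dtype=float) for a in (proximal, joint, distal)
    )
    u = proximal - joint  # e.g., thigh segment pointing from knee to hip
    v = distal - joint    # e.g., shank segment pointing from knee to ankle
    cross = u[:, 0] * v[:, 1] - u[:, 1] * v[:, 0]
    dot = np.sum(u * v, axis=1)
    # arctan2 of |cross| and dot gives an angle in [0, 180] degrees and is
    # numerically stable near fully extended (180 degree) postures.
    return np.degrees(np.arctan2(np.abs(cross), dot))

# Made-up pixel coordinates for two video frames (illustration only).
hip = np.array([[100.0, 100.0], [100.0, 100.0]])
knee = np.array([[100.0, 200.0], [100.0, 200.0]])
ankle = np.array([[100.0, 300.0], [200.0, 200.0]])

included_angle = joint_angle_deg(hip, knee, ankle)
# One common convention: flexion is 0 degrees when the limb is straight.
knee_flexion = 180.0 - included_angle
print(knee_flexion)
```

Because the angle is computed from the ratio of a cross product to a dot product, it does not depend on pixel scale, which is one reason joint angles are often easier to obtain from uncalibrated video than distances such as step length.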

Together, these approaches to analyzing pose estimation data make it possible to obtain many parameters associated with human movement. For example, pose estimation has been used to study human locomotion [15,34,35] and provide kinematic measures such as lower limb joint angles; spatiotemporal measures such as gait speed, step length, and step time; and clinical ratings such as the Gait Deviation Index in patients with cerebral palsy or MDS–UPDRS gait scores for persons with Parkinson’s disease. Other studies have used pose estimation to assess neuromotor risk and development in human infants [36,37]. These areas of application are introduced briefly here and are covered in greater detail in later sections of this manuscript.

3. What Tools Are Available?

Several different algorithms for pose estimation have been published over the past decade (e.g., OpenPose [13], DeepLabCut [12], DeepPose [10], DeeperCut [8], AlphaPose [38], ArtTrack [7]). Using these algorithms, it is possible to take advantage of pretrained networks that are freely available, or train new networks customized for various research or clinical needs. For example, a commonly used pretrained network is the human pretrained demo of OpenPose that includes keypoints of the body, feet, hands, and face [13,39] and has been used in several recent studies for quantitative analysis of human movement [15,26,29,31,34,40].

The computations needed for training a new network and tracking new videos often require intensive computing capabilities. Therefore, the computing power of a graphics processing unit (GPU) may be necessary in order for processing times to reach acceptable limits (many algorithms provide documentation with hardware recommendations, as in [11]). If a user does not have their own GPU, some computing environments (e.g., Google Colaboratory) provide GPU access for faster processing; however, these may not be suitable for applications involving protected health information because the processing occurs externally. Processing without a GPU is slower but may be sufficient depending on the user’s time constraints and processing needs (e.g., length of videos, number of people tracked, number of keypoints tracked). Furthermore, it is also possible to use pose estimation for real-time movement tracking (as is available with OpenPose, for example [39]). This capability may be particularly useful to some users, as it could be implemented to provide real-time biofeedback for various applications. Beyond these increasingly popular deep learning approaches, other approaches also use optimization [41,42,43] and filtering [44,45] techniques to perform pose estimation.

4. How Can These Tools Be Used to Improve Human Health and Performance?

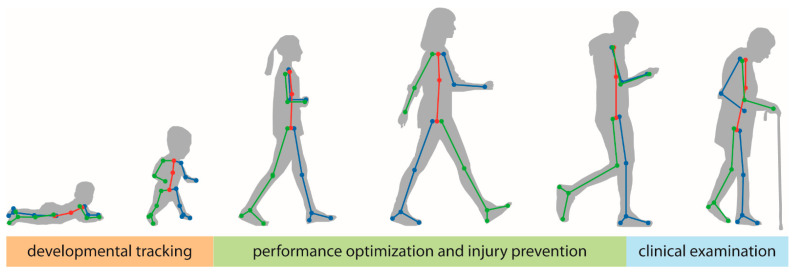

In the following subsections, we will focus on three specific areas of application across the human lifespan: (1) human development, (2) performance optimization and injury prevention, and (3) motor assessment of persons with neurologic damage or disease (Figure 2). Certainly, many additional areas of application exist beyond the scope of this review. We focus on these applications due to the emerging nature of the relevant literature and the expertise of the authors. We expect that many of the principles discussed below are likely to generalize to other applications and/or populations of interest.

Figure 2.

In this manuscript, we focus on three general areas of applications of pose estimation in human health and performance across the lifespan: tracking of motor and non-motor development in young children (orange), performance optimization and injury prevention in athletes and other populations that are primarily young or middle-aged adults (green), and clinical examinations of persons with neurologic damage or disease who are primarily older adults (blue).

4.1. Tracking General Motor Development

Developmental scientists study the emergence of specific behaviors from infancy to adolescence in many different settings, including the laboratory, home environment, clinic, and classroom. Accordingly, video recordings are an integral component of most, if not all, developmental research programs. Video-based approaches have been used to study multiple domains of development, including gross and fine motor development as well as social, language, and play development [46,47,48,49]. One major limitation of current video-based approaches is the time-intensive but necessary process of manually coding child behaviors of interest by clinicians and researchers. Pose estimation technologies offer a much-needed opportunity to accelerate video coding to capture specific behaviors of interest in such developmental investigations. Due to the extensive manual video coding that has been done in the field over decades, there are large existing video databases that have already undergone human coding/reliability checks and can provide a valuable source of ground truth data for training and validation of machine learning models of development (e.g., [50]). Such approaches could further help decrease reliance on assessment tools that require the expertise and time of trained clinicians for interpretation and, in turn, offer cost-effective and scalable alternatives to more subjective measures of typical and atypical development.

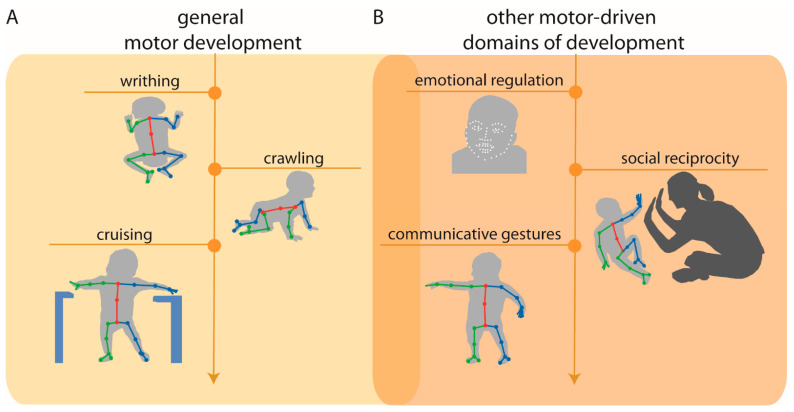

Although still in its early stages, pose estimation is beginning to be applied to the study of general motor development [36,51] (Figure 3A). For example, pose estimation has been used to detect normal writhing movements (i.e., typical spontaneous movements produced by newborns) vs. abnormal movements from video recordings of newborns in their first days of life [51]. Preliminary findings are promising and suggest that normal vs. abnormal writhing movements can be automatically classified with 80% accuracy, a percentage comparable to expert human classification.

Figure 3.

(A) Example applications of pose estimation to quantify early motor developmental milestones (left), including writhing movements (e.g., [51]), crawling, and cruising (e.g., [36]); and (B) other motor-driven domains of development, including emotional regulation, social reciprocity, and communicative gestures. Overlap between (A,B) denotes that these areas of development are intimately linked with one another. Arrow indicates that application of pose estimation is not restricted to these examples and can be applied to quantify later motor and motor-driven developmental milestones.

As infants progress in their gross motor development, the onsets of crawling and walking—gross motor advances that allow infants to explore and learn from their environment—have been found to be intimately linked with growth in other developmental domains [52,53]. Indeed, findings from developmental science literature suggest that delays in the onset of walking may result in limited opportunities for exploration and input from caregivers and family members, leading to subsequent delays in language and social communication development [48,54,55]. As a result, it is critical to improve the early detection of delays in locomotor development in order to intervene prior to any cascading effects on other domains of development.

Researchers have begun to implement pose estimation as a useful tool for quantitative tracking of infant locomotor development. For example, Ossmy and Adolph [36] used a combination of pose estimation, machine learning, and time-series analyses to examine the role of experience in infant acquisition of interlimb coordination based on video recordings of the infants “cruising” (i.e., side-stepping with support of the upper extremities)—which is the transitional behavior between crawling and walking—at 11 months of age. More specifically, the authors used pose estimation to track frame-by-frame body movements and subsequently calculated the distance between the limbs (i.e., the distance between the hands and the distance between the feet) for each tracked video frame to extract the coordination pattern for cruising. The results of this study provided insight into the mechanisms by which infants learn to optimally cruise and, as a result, may hold implications for future work aiming to investigate early detection and intervention for delays in locomotor development.
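The distance calculation described above can be sketched in a few lines. This is only an illustration of the per-frame distance step, not a reproduction of the pipeline in [36]; the function name `interlimb_distance`, the array layout, and the coordinates are assumptions made for the example.

```python
import numpy as np

def interlimb_distance(left_xy, right_xy):
    """Euclidean distance (pixels) between a left and a right keypoint
    in every frame. Inputs have shape (n_frames, 2)."""
    left_xy = np.asarray(left_xy, dtype=float)
    right_xy = np.asarray(right_xy, dtype=float)
    if left_xy.shape != right_xy.shape:
        raise ValueError("left and right trajectories must have the same shape")
    return np.linalg.norm(left_xy - right_xy, axis=1)

# Made-up keypoint trajectories for three frames (illustration only).
left_hand = np.array([[0.0, 0.0], [10.0, 0.0], [20.0, 0.0]])
right_hand = np.array([[3.0, 4.0], [16.0, 8.0], [20.0, 5.0]])
left_foot = np.array([[0.0, 50.0], [5.0, 50.0], [5.0, 50.0]])
right_foot = np.array([[12.0, 50.0], [12.0, 50.0], [20.0, 50.0]])

hand_distance = interlimb_distance(left_hand, right_hand)
foot_distance = interlimb_distance(left_foot, right_foot)
print(hand_distance, foot_distance)
```

The resulting hand and foot distance time series could then be passed to whatever time-series analysis is appropriate for the question at hand; note that distances in pixels depend on camera placement and would need scaling before comparison across recordings.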

4.2. Clinical Use in Pediatric Populations

Early detection of atypical development is critical for the diagnosis of congenital movement-based disorders (e.g., cerebral palsy) and neurodevelopmental disorders (e.g., autism spectrum disorder) to ensure timely access to early intervention services to improve motor outcomes (e.g., coordination, postural support) and other domains of development (e.g., social, language). Advances in pose estimation approaches and the emergence of novel machine learning-based models offer exciting potential for the assessment of movement-based predictors of clinical disorders. For example, pose estimation is beginning to be applied to measure not only predictors of later motor-based disorders but also predictors of other motor-driven domains of development (e.g., social communication; Figure 3B). In this subsection, we provide examples of these advances.

Cerebral palsy (CP) is the most common movement disorder in childhood, caused by abnormal neural development or injury that impairs the ability to control movement and posture [56]. Diagnosis of CP using conventional assessments typically occurs between age 12 and 24 months; however, using a combination of standardized assessments and neonatal magnetic resonance imaging (MRI), CP can be accurately predicted before 6 months corrected age [57]. Yet, there remain significant drawbacks to this approach: standardized assessments are based on subjective human observation that requires substantial training and clinical expertise, and neonatal MRI is expensive and often inaccessible in low-resource areas [58].

Recent research efforts have attempted to address these shortcomings by using video recordings to implement low-cost, automatic, objective alternatives for the detection of CP risk. Such investigations have succeeded in predicting CP based on automatic movement assessment from infant video recordings with performance comparable to standardized CP risk measures [59,60,61]. For example, in a multi-site cohort investigation, an automated, objective movement assessment of infant video recordings was compared to standard risk assessment measures (i.e., the General Movement Assessment and neonatal neuroimaging) at 9–15 weeks corrected age to predict CP status and motor function at approximately 3.7 years of age. This investigation found that the automated, video-based approach exhibited sensitivity and specificity comparable to standard measures used to predict CP [61].
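For readers less familiar with these metrics, sensitivity is the proportion of true cases that a screening method flags, and specificity is the proportion of non-cases that it correctly clears. The sketch below shows the arithmetic only; the function name `sensitivity_specificity` and the labels are invented for illustration and do not correspond to data from any cited study.

```python
def sensitivity_specificity(y_true, y_pred):
    """Return (sensitivity, specificity) for binary labels, where 1 marks
    a positive case (e.g., a later diagnosis) and 0 marks a negative case."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("need at least one positive and one negative case")
    return tp / (tp + fn), tn / (tn + fp)

# Invented outcomes and automated predictions for eight infants.
y_true = [1, 1, 1, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 0, 0, 0, 0, 1]

sensitivity, specificity = sensitivity_specificity(y_true, y_pred)
print(f"sensitivity={sensitivity:.2f}, specificity={specificity:.2f}")
```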

There are also clear applications for pose estimation to potentially improve the early identification of neurodevelopmental disorders, such as the early detection of autism spectrum disorder (ASD). Although parents often report first concerns about ASD when their child is between 12 and 14 months of age [62,63] and reliable ASD diagnosis is possible by age 2, the majority of children with ASD remain undiagnosed until 4 years of age [64]. Shortages of ASD expert clinicians and limited capacities at autism tertiary diagnostic centers contribute to the long wait times for families [65]. Families living in rural and low-resource communities are often required to travel long distances to receive diagnostic services, placing them at an even greater disadvantage in accessing services. Indeed, a recent report indicates that approximately 84% (2635/3142) of U.S. counties do not have the necessary ASD diagnostic resources [66]. Given these barriers to a timely diagnosis, a significant portion of children with ASD are missing a critical window for early intervention services, as evidence shows that intervention before the age of 2 significantly improves behavioral and developmental outcomes for children with ASD [67,68,69]. The detrimental impact of diagnostic delays has resulted in federal prioritization of early identification of ASD and an urgency to develop accessible and accurate early screening methods [64].

Leveraging advances in machine learning, efforts have been made to develop scalable, video-based ASD screeners to improve access to diagnostic and early intervention services. For example, Crippa et al. developed an algorithm to examine the predictive value of motor behavioral biomarker measures in ASD to discriminate preschool children with ASD from children with typical development using a simple upper-limb reach-to-drop task [70]. The resulting model showed an accuracy rate of 96.7%, suggesting that video-based approaches combined with machine learning can be a useful method of classification and discrimination in the diagnostic process [70].

The emerging evidence supporting the application of automated, video-based assessments to monitor general gross motor development and promote early detection of both motor-based and neurodevelopmental disorders is promising. In order to establish the clinical utility of pose estimation, future work is needed to examine the feasibility and acceptability of clinician use of such techniques.

4.3. Human Performance Optimization, Injury Prevention, and Safety

Numerous applications of pose estimation exist within optimization of human performance and safety, spanning injury risk assessment, rehabilitation, and enhancement of human performance. This application space commonly consists of some type of instructor, such as a coach, trainer, or clinician, attempting to assess an individual’s movement patterns to determine whether the individual is at an increased risk for injury, is moving differently from a healthy, uninjured individual, or is moving with some level of inefficiency that can be modified to improve performance. Within injury assessment, common applications of pose estimation have been to evaluate an individual’s risk for specific musculoskeletal injuries and to perform a post-hoc analysis following the occurrence of an injury. For example, two-dimensional pose estimation techniques have been applied to develop proof-of-concept screening technologies that detect abnormal gait patterns during walking and running [71,72,73,74,75], falls [76,77,78], abnormal movements that are indicative of injury risk in manual labor work environments [79,80,81], and risk of sports-related injury, such as anterior cruciate ligament rupture [82,83,84]. Post-hoc analysis following an injury has primarily been targeted towards sports performance applications and focused on understanding mechanisms of injury, with the ultimate goal of developing techniques to mitigate injury risk [85,86].

Applications of pose estimation to rehabilitation following injury or surgery typically focus on using these techniques to monitor an individual’s return to normal movement patterns and to guide the motion of rehabilitation technology that is designed to interface with a patient. Pose estimation techniques have been used to measure a patient’s range of motion and movement during functional exercises and assess their progression towards a healthy range of motion [87,88,89]. In particular, there has been an emphasis on the use of pose estimation to monitor rehabilitation progress outside of the clinic, such as in the home or on an athletic field [90,91,92,93]. Additionally, many technologies have been designed to actively interface with an individual to either support their movement during rehabilitation or help provide a mechanical stimulus to enhance rehabilitation. These technologies are commonly referred to as rehabilitation robotics, and techniques have been developed that leverage pose estimation to inform the movement of these systems [94,95,96,97].

The use of pose estimation for enhancing human performance remains challenging, given the large range of joint articulation, out-of-plane motion, risk of occlusion, and fast movements that can be difficult to capture with the relatively slow sampling rates of common video recording devices [98,99]. However, a number of proof-of-concept systems have been developed to inform the pose of an athlete during training, particularly for sports in which success for the athlete is directly linked to pose (e.g., gymnastics and skiing) [100,101,102]. Development of new pose estimation techniques for human performance applications has focused on achieving high accuracy with ‘in the wild’ pose estimation, given the importance of performing these measurements outside of the lab in these applications [11,103,104]. While this previous research has demonstrated applications that may be made possible with pose estimation, very few of these proof-of-concept technologies have made the transition to regular use in clinical, athletic, or other relevant environments. This likely derives from the fact that many unique requirements arise when attempting to apply these techniques to human performance applications outside of the laboratory.

For pose estimation to influence the broader human performance community, including non-clinical populations, research must drive towards robust ‘in the wild’ pose estimation encompassing a range of environments and populations. To this end, we will define desirable components of an ideal dataset for pose estimation algorithm development, training, and validation. Future studies should focus on capturing and making available these datasets to expand the application space of pose estimation or define functional limitations of the current hardware or software technology.

Many injury and performance evaluations are based on highly dynamic motion analysis [85,86,105], so pose estimation validation datasets should include accurate ground truth measurements of human joint kinematics for as many degrees of freedom as feasible. Ideally, this will include kinematics of complex joints and motions, such as the ankle, wrists, intervertebral joints, and scapular motion, all of which play a key role in many injuries and are not estimated in most existing pose estimation techniques. Linear kinematics of the various body components should also be reported, especially in relation to conditions that result from impact injuries (e.g., traumatic brain injury, chronic traumatic encephalopathy) [106]. Optical motion tracking is currently the gold standard for such ground truth measurements, but further accuracy (and cost) improvements are desirable due to artifacts arising from relative marker motion with respect to the underlying bony anatomy [107]. Therefore, researchers should aim to account for these artifacts within the pose estimation process.

Validation datasets should be captured outside of laboratory environments and include complexities such as partial occlusion (self-occlusion, inter-subject occlusion, environmental occlusion), various illuminations, loose-fitting clothing, and multiple camera standoffs or viewing angles. Recent examples of pose estimation outside of the lab are primarily based on monocular RGB images [108,109,110,111]. However, these techniques are generally less accurate—especially in three dimensions—when compared to laboratory pose estimation. The fusion of other pose estimation modalities, including inertial measurement units and infrared imaging, with single or multi-view RGB images is a promising direction for improved pose estimation [112], and should be included in validation datasets, such as those provided by Malleson et al. [113].

As new pose estimation algorithms are developed for human performance applications, special consideration should be given to the evaluation metrics reported. Motion type classification is of limited usefulness for in-depth biomechanical analysis and, instead, joint kinematic errors should be reported for each degree of freedom. Furthermore, estimation accuracies should be reported under varying conditions, including differences between lab-based and outdoor estimations. Finally, the computational cost per frame of pose estimation should be reported to understand applicability to real-time, highly dynamic application spaces [113].
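As one illustration of reporting kinematic error separately for each degree of freedom, the sketch below computes a root-mean-square error (RMSE) per joint-angle channel against a reference measurement. RMSE is one of several reasonable error metrics and is an assumption of this example, as are the function name `rmse_per_dof`, the array layout (frames by degrees of freedom), and the numbers; the sketch also assumes the two systems are already time-synchronized and expressed in the same angle convention.

```python
import numpy as np

def rmse_per_dof(estimated, reference):
    """Root-mean-square error for each degree of freedom.

    Both inputs have shape (n_frames, n_dof), in degrees. Frames where
    either system has a missing value (NaN) are ignored for that DOF.
    """
    estimated = np.asarray(estimated, dtype=float)
    reference = np.asarray(reference, dtype=float)
    if estimated.shape != reference.shape:
        raise ValueError("estimated and reference must have the same shape")
    squared_error = (estimated - reference) ** 2
    return np.sqrt(np.nanmean(squared_error, axis=0))

# Made-up angles for two frames and two DOFs (e.g., knee and hip flexion).
pose_estimate = np.array([[10.0, 20.0], [12.0, 24.0]])
motion_capture = np.array([[10.0, 23.0], [10.0, 20.0]])

errors = rmse_per_dof(pose_estimate, motion_capture)
print(dict(zip(["knee_flexion", "hip_flexion"], errors.round(2))))
```

The same function could be applied separately to recordings made under different conditions (e.g., laboratory versus outdoor) to report how accuracy varies across them.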

4.4. Clinical Motor Assessment in Adult Neurologic Conditions

Clinical assessments and the resulting outcome measures are critical to motor rehabilitation in adults with neurologic conditions. These clinical assessments are typically administered either to capture a patient’s status at a specific point in time or to track their motor function longitudinally. When administered at a single time point, assessments are used to classify the severity of an individual’s deficits. When administered longitudinally, assessments are commonly used to track disease progression/regression, measure recovery, or evaluate the effectiveness of an intervention.

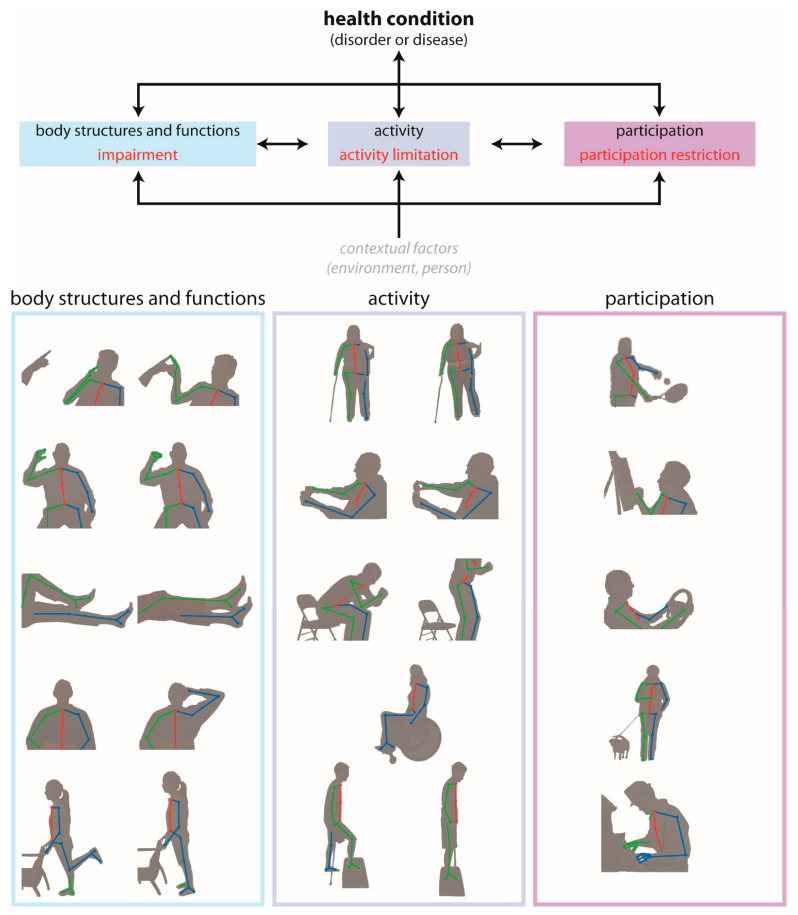

The International Classification of Functioning, Disability and Health (ICF) is a common, widely accepted framework developed by the World Health Organization for describing health and disability at individual and population levels [114]. It provides standard language and has a wide range of uses across different sectors by identifying three primary levels of human functioning:

Body structures and functions are anatomical parts of the body and physiological functions of the body systems, respectively. The term impairment refers to problems in body structure or function.

Activity is the execution of a task or action by an individual. The term activity limitation describes difficulties with completion of an activity.

Participation is involvement in a life situation. Participation restrictions are problems that an individual encounters during participation in real-world situations.

To provide a concrete example of how this framework is used, consider a person who has experienced a stroke. This person might experience changes in all three levels of human functioning: the impairment of left-sided hemiparesis (body structures and functions level), the activity limitation of difficulty walking (activity level), and the participation restriction of inability to attend their desired religious activities (participation level). One can quickly observe that, while the three levels may be related to one another, there are independent needs for quantitative measurement within each level. In other words, there are needs for quantitative measurement of the hemiparesis, daily walking activity, and the inability to attend religious activities in this particular example.

Clinical outcome measures for each level of the ICF are administered as a part of routine clinical practice. Current measures of impairment involve a skilled clinician observing a patient as they perform a series of movements designed to expose deficits in body structure and function. For instance, one item on the Fugl–Meyer Assessment, a widely used quantitative measure of motor impairment after stroke, involves asking the patient to move their hand from the contralateral knee to the ipsilateral ear while individual elements (e.g., shoulder retraction, shoulder elevation, elbow flexion, forearm supination) of this movement are scored subjectively from 0 to 2 [115]. Measures of activity limitations involve the patient performing one or more tasks that simulate activities encountered in daily life. An example of an ecologically valid task is the water pouring item of the Action Research Arm Test, an extensively used activity level measure for people with stroke [116], in which the person pours water from one glass to another. Finally, measures of participation restrictions are often self-reported measures of the person’s perceptions of their movement abilities and the resulting impact on their quality of life and daily participation (e.g., the Stroke Impact Scale [117], a self-report questionnaire that evaluates disability and health-related quality of life after stroke). The data gathered from existing outcome measures are valuable for diagnosing movement disorders, establishing rehabilitation goals, and tracking changes in patient status.

Pose estimation tools have the potential to address two important challenges that exist within current clinical assessments spanning all three levels of the ICF (Figure 4). First, they can increase the accuracy, precision, and frequency with which movement kinematics are measured and assessed. Presently, body structure/function and activity level assessments rely primarily on visual observation of movement or task performance, and many are scored on ordinal scales that require a clinic visit or other similarly time-consuming interaction for both patients and their providers. Pose estimation could provide precise, quantitative, and continuous data about single-joint or whole-body movements from short videos that could be recorded in virtually any setting and at much higher frequency. Such frequent, quantitative motor assessments could significantly enhance the ability of clinicians to detect and track impairments and activity limitations in their patients longitudinally. Second, current assessments of participation restrictions are almost exclusively self-reported. The self-report format has been necessary because of the difficulty of measuring movement kinematics in the home, but many self-report measures lack reliability and often do not correlate with clinically administered motor assessments. The spread of telerehabilitation and pose estimation tools could make a substantial impact in this area by making it far easier for clinicians and researchers to obtain quantitative data about how people move and participate in their home and community environments.
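As a rough illustration of what "quantitative data about single joint movements" can mean in practice, the sketch below computes a two-dimensional knee flexion angle from three keypoints in a single video frame. The function name and the pixel coordinates are hypothetical, and the calculation assumes a sagittal-plane view in which the hip, knee, and ankle keypoints have already been returned by some pose estimation algorithm; it is not the method of any particular study cited here.

```python
import math


def joint_angle_deg(proximal, joint, distal):
    """Included angle (degrees) at `joint` between the segments
    joint->proximal and joint->distal, from 2D (x, y) keypoints."""
    v1 = (proximal[0] - joint[0], proximal[1] - joint[1])
    v2 = (distal[0] - joint[0], distal[1] - joint[1])
    dot = v1[0] * v2[0] + v1[1] * v2[1]
    cross = v1[0] * v2[1] - v1[1] * v2[0]
    # atan2 of |cross| and dot is numerically stable near 0 and 180 degrees
    return math.degrees(math.atan2(abs(cross), dot))


# Hypothetical pixel coordinates for one frame (image y axis points down)
hip, knee, ankle = (320.0, 200.0), (320.0, 300.0), (380.0, 380.0)

included_angle = joint_angle_deg(hip, knee, ankle)
# Assumed convention: 0 degrees of flexion when the leg is fully straight
knee_flexion = 180.0 - included_angle
print(f"knee flexion: {knee_flexion:.1f} deg")
```

Repeating this calculation for every frame would yield a continuous joint angle trajectory, which is the kind of output that ordinal clinical scales cannot provide.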

Figure 4.

Depiction of potential applications of pose estimation for movement tracking during clinical assessments across the domains of the International Classification of Functioning, Disability and Health (ICF) model. Examples include finger–nose coordination testing in the body structures and functions domain (left), walking assessment in the activity domain (middle), and playing tennis in the participation domain (right).

The uses of pose estimation in clinical populations are expanding but remain in the beginning stages. At the body structure/function level, early work has involved detecting hallmark motor signs in persons with Parkinson’s disease (PD). For instance, dyskinesia is an involuntary movement of the head, arm, leg, or entire body. Dyskinesia is commonly seen in persons with PD, often as a side effect of long-term levodopa treatment. A number of recent studies have used pose estimation to assess dyskinesias in persons with PD and found performance similar or superior to that of standard clinical assessments [118,119,120]. Bradykinesia, or slowness of movement, is another cardinal motor sign of PD. Liu et al. report that their computer vision-based method was 89.7% accurate in quantifying bradykinesia severity in people with PD as they performed repetitive movements including finger tapping, hand clasping, and alternating hand pronation/supination movements [121].

A number of studies have also begun to use pose estimation to measure activity-level behaviors. Gait assessment, in particular, has been an early clinical target for these evolving tools. Video-based tools have been used to successfully capture gait parameters such as step length, step width, step time, stride length, gait velocity, and cadence in people with stroke [122], PD [25,123], or dementia [124]. Beyond gait, the timed up and go is a widely accepted assessment of functional mobility in patients with a range of neurological disorders or diseases. Li et al. recently validated and used video-based activity classification to automate timed-up-and-go sub-task segmentation (sit-to-stand, walk, turn, walk-back, sit-back) in people with PD [125].
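To make the gait parameters above concrete, the following minimal sketch derives step time, cadence, gait velocity, and mean step length from a list of heel-strike events. It assumes that heel-strike times have already been detected from the keypoint trajectories and that the distance walked between the first and last heel strike is known in metres (e.g., from a pixel-to-metre calibration); both the function name and the example values are hypothetical.

```python
def spatiotemporal_gait(heel_strike_times_s, distance_m):
    """Summarize gait from successive heel-strike times (seconds, sorted,
    alternating left/right feet) and the distance covered between the
    first and last heel strike (metres)."""
    if len(heel_strike_times_s) < 2:
        raise ValueError("need at least two heel strikes")
    # One step = interval between consecutive heel strikes of opposite feet
    step_times = [b - a for a, b in zip(heel_strike_times_s,
                                        heel_strike_times_s[1:])]
    mean_step_time = sum(step_times) / len(step_times)
    duration = heel_strike_times_s[-1] - heel_strike_times_s[0]
    return {
        "mean_step_time_s": mean_step_time,
        "cadence_steps_per_min": 60.0 / mean_step_time,
        "gait_velocity_m_per_s": distance_m / duration,
        "mean_step_length_m": distance_m / len(step_times),
    }


# Hypothetical example: five heel strikes over 2.4 m of walking
params = spatiotemporal_gait([0.0, 0.5, 1.0, 1.5, 2.0], distance_m=2.4)
for name, value in params.items():
    print(f"{name}: {value:.2f}")
```

Stride length and step width would additionally require the positions of each foot at heel strike, which are omitted here for brevity.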

Future work should focus on further validation of pose estimation against gold-standard kinematic tools and on interpretability alongside standard clinical assessments. Additional patient populations with a wide range of different movement patterns should be included in these investigations in order to develop algorithms that are broadly applicable. Realizing the potential of video-based analysis and pose estimation to quantitatively measure participation-level data in the home and the community should also be a top priority. Precise data captured in the real world will not only provide clinicians with important information on which to base clinical decisions but may also facilitate early diagnosis of movement disorders and the ability to track movement patterns throughout a disease course.

We summarize many of the applications discussed in Section 4 in Table 1 below.

Table 1.

Summary of example applications of pose estimation in human health and performance across the lifespan.

| Domain | Behavior/Movement Pattern Tracked | References |
|---|---|---|
| Motor and non-motor development | Infant cruising (early locomotion) | [36] |
| | Infant play/general movement | [37] |
| | Infant writhing | [51] |
| Human performance optimization, injury prevention, and safety | Healthy repetitive movements | [14] |
| | Healthy gait | [15,26,29,30,31,35,40] |
| | Sign language | [19] |
| | Healthy running | [27,35] |
| | Bilateral squat | [28] |
| | Healthy gait/jumping/throwing | [29] |
| | Lifting | [79,84] |
| | Various unsafe working behaviors | [80,81] |
| | ACL injury risk | [82,85,86] |
| | Handcart pushing and pulling | [83] |
| | Ergonomic postural assessment | [87] |
| | Remotely-delivered rehabilitation | [88,91,92,93] |
| | Healthy finger movements | [90] |
| | Rehabilitation robotics | [94,95,96,97] |
| | Athletic training | [100,101] |
| | Swimming | [102] |
| Clinical motor assessment | Gait in Parkinson’s disease | [25,33,123] |
| | Knee kinetics in osteoarthritis | [32] |
| | Gait in cerebral palsy | [34] |
| | Simulated abnormal gait | [72,74] |
| | Gait in older adults | [73] |
| | Fall detection | [76,77,78] |
| | Dyskinesias in Parkinson’s disease | [118,119,120] |
| | Gait in older adults with dementia | [124] |
| | Timed up-and-go in Parkinson’s disease | [125] |

5. What Are the Limitations of Pose Estimation?

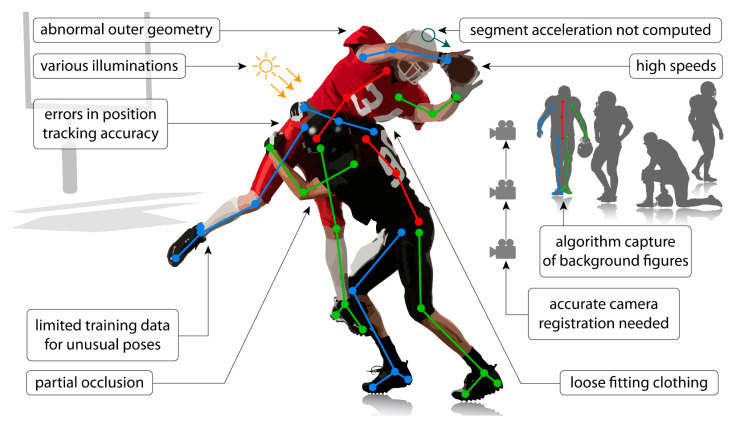

While many of our perspectives on the limitations of human pose estimation algorithms with regard to applications in human health and performance are embedded within the sections above, we considered that it may be helpful to include a condensed summary section here. As mentioned previously, technical limitations have been discussed extensively in prior reviews [20,21]. Here, we list perceived limitations in two general areas: application limitations and barriers to implementation. We consider application limitations to be those associated with obtaining high quality, usable data from video recordings via pose estimation (some are also discussed in [21]) and barriers to implementation to be limitations associated with the uptake and implementation of pose estimation approaches for common use among clinicians and researchers (with an emphasis on implementation in clinical settings).

5.1. Application Limitations

Occlusions: these occur when one or more of the anatomical locations desired to be tracked are not visible. This may be due to occlusion by other body segments, by other people in the frame, or by inanimate objects (e.g., assistive devices—canes, walkers, crutches, orthoses, robotics; clinical objects—beds, hospital gowns, medical devices; sporting equipment—helmets, balls, bats, sticks).

Limited training data: networks that are trained on sets of images that lack diversity (e.g., clothing, poses, illuminations, viewpoints, unusual postures associated with clinical conditions) may not perform well in applications where the videos are quite different from those included in the training set. Applications of current techniques that require a training dataset may require creation of a new training dataset if movements/images of a patient population are substantially different from those included in the existing training dataset (e.g., abnormal hand postures after stroke). This is particularly important given that most training datasets are biased toward healthy movement patterns.

Capture errors: pose estimation algorithms may identify and track unwanted human or human-like figures in the field of view (e.g., people in the background, images on posters or artwork).

Positional errors: tracking may be difficult when conditions introduce uncertainty into the positions of anatomical locations within the image (e.g., wearing a dress, hospital gown, athletic uniform or padding). This may also occur when attempting to track a movement from a suboptimal viewpoint (e.g., measuring knee flexion from a frontal view).

Limitations of recording devices: devices with low sampling rates (e.g., the sampling rate of common video recording devices is often approximately 30 Hz) may be unable to capture accurate kinematics of movements that occur at high speeds or high frequencies. The aperture and shutter speed of recording devices can also affect image quality and introduce blurring, which can degrade the quality of the tracking achieved through pose estimation.
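The recording-device limitation can be made concrete with some simple arithmetic. The sketch below is an illustration under idealized assumptions (a purely sinusoidal movement, constant keypoint speed during the exposure), and all function names and example values are hypothetical. It computes the highest movement frequency a given frame rate can represent (the Nyquist frequency), the frequency at which a faster movement would falsely appear, the number of frames available per movement cycle, and a first-order estimate of motion blur.

```python
def nyquist_hz(frame_rate_hz):
    """Highest movement frequency representable without aliasing."""
    return frame_rate_hz / 2.0


def aliased_frequency_hz(movement_hz, frame_rate_hz):
    """Apparent frequency of a sinusoidal movement after sampling."""
    nearest_multiple = round(movement_hz / frame_rate_hz) * frame_rate_hz
    return abs(movement_hz - nearest_multiple)


def frames_per_cycle(frame_rate_hz, movement_hz):
    """Number of video frames that span one cycle of the movement."""
    return frame_rate_hz / movement_hz


def motion_blur_px(speed_px_per_s, exposure_s):
    """First-order blur length: distance travelled while the shutter is open."""
    return speed_px_per_s * exposure_s


frame_rate = 30.0  # Hz, typical of common video recording devices
print("Nyquist frequency (Hz):", nyquist_hz(frame_rate))
print("Frames per cycle of a 5 Hz movement:", frames_per_cycle(frame_rate, 5.0))
print("A 20 Hz movement appears at (Hz):", aliased_frequency_hz(20.0, frame_rate))
print("Blur (px) at 2000 px/s with 1/60 s exposure:",
      round(motion_blur_px(2000.0, 1.0 / 60.0), 1))
```

Even below the Nyquist frequency, only a handful of frames per cycle are available for fast movements, so peak positions and velocities may still be underestimated.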

Examples of application limitations are depicted in Figure 5.

Figure 5.

Common application limitations with current pose estimation algorithms and challenges with using these algorithms outside of the laboratory. These applications commonly require three-dimensional kinematics of multiple people moving at relatively high speeds to be tracked in environments with background figures (e.g., irrelevant people and objects shaped similarly to people). This leads to challenges with segment occlusion, unintentional capture of background figures, and registration of multiple cameras. Additionally, using current algorithms for scenarios different than the training dataset (e.g., different movements, different types of clothing or equipment being worn, different lighting) may lead to reduced accuracy in the predicted kinematics or, potentially, failure of the algorithm. Finally, most algorithms do not predict kinematic metrics that are required for some applications (e.g., head acceleration to assess concussion risk), and limitations with using current algorithms on time-series data make it challenging to accurately derive these metrics.

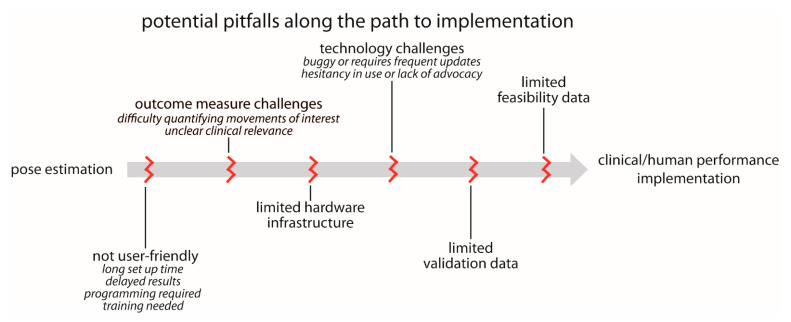

5.2. Barriers to Implementation

User-friendliness: we currently lack plug-and-play options for pose estimation. While we certainly understand and acknowledge the many reasons for this, pose estimation is unlikely to be used widely in clinical settings in particular until user-friendliness improves. We outline several relevant components to user-friendliness below:

- Set up time: in our experience, many users want point-and-click capability. They want to be able to carry a recording device in their pocket, use it to record a quick video of their patient or research participant when needed, and ultimately obtain meaningful information about movement kinematics. Alternatively, they want a reserved space where a recording device could be permanently mounted and easily started and stopped (e.g., a tablet mounted to a wall). Any configuration that requires multi-camera calibration or prolonged set up time is unlikely to be adopted for widespread clinical use.

- Delayed results: many users want results in near real-time. There is a need for fast, automated approaches that immediately process the pose estimation outputs, calculate relevant movement parameters, and return interpretable data.

- Programming and training requirements: some existing pose estimation options are very easy to download, install, and use for users with basic technical expertise. However, even these can remain prohibitively daunting for clinicians and researchers without technical backgrounds. Technologies that require any amount of programming or significant training are unlikely to reach widespread use in clinical settings.

- Outcome measure challenges: in some cases, users want to use movement data to improve clinical or performance-related decision-making, but it is not immediately clear what parameters of the movement will lead to improved outcomes (e.g., a user may express interest in measuring “walking” but is not sure which specific gait parameters are most relevant to their research study or clinical intervention). Therefore, there is a desire to collect kinematic data, but how these data should be used is not well-defined. Similarly, in the case of clinical assessments, there needs to be a clear link to relevant clinical and translational outcomes; the users should have input as to what output metrics are important.

Limited hardware infrastructure: as described above, some applications of pose estimation for human movement tracking require significant computational power. Some clinical and research settings are unlikely to have access to the hardware (e.g., GPUs) needed to execute their desired applications in a timely manner.

Technology challenges: many technologies that promise potential for clinical or human performance impact are made available before they are fully developed. This can lead to buggy software and frequent updating, which harms trust and credibility among users. This can, in turn, exacerbate the hesitancy in adopting new technologies present in some clinical and research communities, especially in artificial intelligence technologies (such as pose estimation) that are purported to supplement or even replace expert human assessment.

Lack of validation and feasibility data: there is a need for large-scale studies to validate pose estimation outputs against ground truth measures in a wide range of different populations. This may be accomplished in a variety of ways, including (but not limited to) comparisons with three-dimensional motion capture, wearable devices with proven accuracy, expert clinical ratings and/or assessments, or even possibly other pose estimation algorithms. The error (relative to the ground truth measurement) that is deemed acceptable is likely to depend on the use case and the metrics being used. In our experience, users who study very specific movements of joints or other anatomical landmarks (e.g., biomechanics or motor control researchers) are likely to seek greater accuracy than, for example, a clinician who may wish to incorporate a video-based assessment of walking speed as part of a larger clinical examination. It may be desirable to begin to develop field-specific accuracy standards for some applications.
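One way to quantify the error relative to a ground truth measurement is sketched below. This is only an illustrative example: the function name and the paired walking speed values are hypothetical, and the choice of summary statistics (bias, mean absolute error, root-mean-square error, and Bland–Altman-style 95% limits of agreement) is an assumption rather than a standard prescribed here.

```python
import math
import statistics


def agreement_summary(pose_values, reference_values):
    """Compare paired measurements from pose estimation and a reference
    system (e.g., three-dimensional motion capture)."""
    if len(pose_values) != len(reference_values):
        raise ValueError("measurements must be paired")
    diffs = [p - r for p, r in zip(pose_values, reference_values)]
    bias = statistics.mean(diffs)        # systematic over/underestimation
    sd = statistics.stdev(diffs)         # spread of the differences
    return {
        "bias": bias,
        "mean_absolute_error": statistics.mean([abs(d) for d in diffs]),
        "rmse": math.sqrt(statistics.mean([d * d for d in diffs])),
        # 95% limits of agreement: bias +/- 1.96 SD of the differences
        "limits_of_agreement": (bias - 1.96 * sd, bias + 1.96 * sd),
    }


# Hypothetical walking speeds (m/s) from video and from motion capture
video_speed = [1.10, 0.95, 1.30, 1.20]
mocap_speed = [1.00, 1.00, 1.25, 1.25]
summary = agreement_summary(video_speed, mocap_speed)
for name, value in summary.items():
    print(name, value)
```

Whether a given bias or limit of agreement is acceptable would then be judged against the accuracy needed for the specific use case, as discussed above.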

There is also a need for testing of sensitivity, specificity, feasibility, and reliability. When a new clinical outcome measure is developed, a first step should be to establish criterion validity or construct validity between the pose-estimated measures and age-concurrent, clinician-coded, gold-standard clinical measures. Next, using receiver operating characteristic (ROC) analysis, sensitivity and specificity should be compared to assess the ability of the new pose-estimated measure to predict dichotomous outcomes (e.g., motor impaired vs. motor unimpaired). Area under the curve (AUC) should further be computed as a measure of the ability to distinguish between groups. Finally, it is important to evaluate the feasibility and acceptability of the new pose estimation protocol. One way to assess feasibility is to compute the percentage of expected videos that patients complete and submit in usable form (i.e., the total number of videos submitted divided by the number expected, multiplied by 100). One way to assess acceptability is through satisfaction questionnaires/surveys. For example, after video submission, patients, families of patients (if patients are children), and clinicians can complete a brief satisfaction questionnaire/survey regarding their experience using the pose estimation protocol.
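The quantities named in this paragraph can be computed with a few lines of code. The sketch below is a minimal illustration, assuming a hypothetical pose-derived impairment score in which higher values indicate greater impairment and a clinician-coded label of 1 (impaired) or 0 (unimpaired); the scores, labels, threshold, and video counts are invented for the example. AUC is computed through its equivalence with the probability that a randomly chosen impaired case scores higher than a randomly chosen unimpaired case.

```python
def sensitivity_specificity(scores, labels, threshold):
    """Classify as impaired when score >= threshold; labels are 1 or 0."""
    tp = sum(1 for s, y in zip(scores, labels) if y == 1 and s >= threshold)
    fn = sum(1 for s, y in zip(scores, labels) if y == 1 and s < threshold)
    tn = sum(1 for s, y in zip(scores, labels) if y == 0 and s < threshold)
    fp = sum(1 for s, y in zip(scores, labels) if y == 0 and s >= threshold)
    return tp / (tp + fn), tn / (tn + fp)


def auc(scores, labels):
    """Area under the ROC curve via pairwise comparison (ties count 0.5)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def feasibility_percent(usable_videos_submitted, videos_expected):
    """Videos submitted divided by the number expected, multiplied by 100."""
    return 100.0 * usable_videos_submitted / videos_expected


# Hypothetical data: three impaired and three unimpaired participants
scores = [0.9, 0.8, 0.4, 0.7, 0.3, 0.2]
labels = [1, 1, 1, 0, 0, 0]

sens, spec = sensitivity_specificity(scores, labels, threshold=0.5)
print(f"sensitivity={sens:.2f}, specificity={spec:.2f}")
print(f"AUC={auc(scores, labels):.2f}")
print(f"feasibility={feasibility_percent(18, 20):.0f}%")
```

Sweeping the threshold across all observed scores and plotting sensitivity against one minus specificity would trace the full ROC curve.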

These potential pitfalls along the path to implementation are shown in Figure 6.

Figure 6.

Common pitfalls that must be avoided on the path to widespread implementation of pose estimation applications for human health and performance. These are covered in greater detail in the “What Are the Limitations of Pose Estimation?” section of the manuscript.

6. Conclusions

The emergence and continued development of human pose estimation approaches offer exciting potential for making quantitative assessments of human movement kinematics significantly more accessible. Pose estimation algorithms directly address an important and widespread need for low cost, easy to use, accessible technologies that enable human movement tracking in virtually any environment, including the home, clinic, classroom, playing field, and other ‘in the wild’ settings. Applications in health and human performance have begun to emerge in the literature, but we perceive that these technologies are still in their relative infancy with regard to the potential for research and clinical implementation. Many limitations persist, and it is important that users are aware of these and adjust expectations accordingly. However, we anticipate that applications of pose estimation in human health and performance will continue to expand in coming years, and these technologies will provide powerful tools for capturing meaningful aspects of human movement that have been difficult to capture with conventional techniques.

Acknowledgments

We would like to thank the Janney Program within the Johns Hopkins University Applied Physics Laboratory, which nurtures a culture of discovery, embraces risk, and welcomes being at the center of a vibrant innovation ecosystem, for providing partial funding for this work.

Author Contributions

Conceptualization: J.S., K.M.C.-A., C.O.P., R.D.R., M.F.V. and R.T.R.; writing—original draft preparation: J.S., K.M.C.-A., C.O.P., R.D.R., M.F.V. and R.T.R.; writing—review and editing: J.S., K.M.C.-A., C.O.P., R.D.R., M.F.V. and R.T.R.; funding acquisition: C.O.P., R.D.R., M.F.V. and R.T.R. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by a Kennedy Krieger Institute Goldstein Innovation Grant to R.D.R. and NIH grant R21 AG059184 to R.T.R.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript, or in the decision to publish the results.

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Mündermann L., Corazza S., Andriacchi T.P. The Evolution of Methods for the Capture of Human Movement Leading to Markerless Motion Capture for Biomechanical Applications. J. NeuroEng. Rehabil. 2006;3:6. doi: 10.1186/1743-0003-3-6.

- 2. Baker R. The History of Gait Analysis before the Advent of Modern Computers. Gait Posture. 2007;26:23–28. doi: 10.1016/j.gaitpost.2006.10.014.

- 3. Roether C.L., Omlor L., Christensen A., Giese M.A. Critical Features for the Perception of Emotion from Gait. J. Vis. 2009;9:1–32. doi: 10.1167/9.6.15.

- 4. Michalak J., Troje N.F., Fischer J., Vollmar P., Heidenreich T., Schulte D. Embodiment of Sadness and Depression-Gait Patterns Associated with Dysphoric Mood. Psychosom. Med. 2009;71:580–587. doi: 10.1097/PSY.0b013e3181a2515c.

- 5. Kendon A. Movement Coordination in Social Interaction: Some Examples Described. Acta Psychol. 1970;32:101–125. doi: 10.1016/0001-6918(70)90094-6.

- 6. Martinez G.H., Raaj Y., Idrees H., Xiang D., Joo H., Simon T., Sheikh Y. Single-Network Whole-Body Pose Estimation; Proceedings of the IEEE International Conference on Computer Vision; Seoul, Korea. 27 October–2 November 2019; pp. 6982–6991.

- 7. Insafutdinov E., Andriluka M., Pishchulin L., Tang S., Levinkov E., Andres B., Schiele B. ArtTrack: Articulated Multi-Person Tracking in the Wild; Proceedings of the 30th IEEE Conference on Computer Vision and Pattern Recognition; Honolulu, HI, USA. 21–26 July 2017; pp. 6457–6465.

- 8. Insafutdinov E., Pishchulin L., Andres B., Andriluka M., Schiele B. Computer Vision—ECCV 2016. Springer International Publishing; Cham, Switzerland: 2016. Deepercut: A Deeper, Stronger, and Faster Multi-Person Pose Estimation Model; pp. 34–50. (Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics)).

- 9. Pishchulin L., Insafutdinov E., Tang S., Andres B., Andriluka M., Gehler P., Schiele B. DeepCut: Joint Subset Partition and Labeling for Multi Person Pose Estimation; Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition; Las Vegas, NV, USA. 27–30 June 2016; pp. 4929–4937.

- 10. Toshev A., Szegedy C. DeepPose: Human Pose Estimation via Deep Neural Networks; Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition; Columbus, OH, USA. 23–28 June 2014; pp. 1653–1660.

- 11. Nath T., Mathis A., Chen A.C., Patel A., Bethge M., Mathis M.W. Using DeepLabCut for 3D Markerless Pose Estimation across Species and Behaviors. Nat. Protoc. 2019;14:2152–2176. doi: 10.1038/s41596-019-0176-0.

- 12. Mathis A., Mamidanna P., Cury K.M., Abe T., Murthy V.N., Mathis M.W., Bethge M. DeepLabCut: Markerless Pose Estimation of User-Defined Body Parts with Deep Learning. Nat. Neurosci. 2018;21:1281–1289. doi: 10.1038/s41593-018-0209-y.

- 13. Cao Z., Simon T., Wei S.E., Sheikh Y. Realtime Multi-Person 2D Pose Estimation Using Part Affinity Fields; Proceedings of the 30th IEEE Conference on Computer Vision and Pattern Recognition; Honolulu, HI, USA. 21–26 July 2017; pp. 1302–1310.

- 14. Cornman H.L., Stenum J., Roemmich R.T. Video-Based Quantification of Human Movement Frequency Using Pose Estimation. bioRxiv. 2021. doi: 10.1101/2021.02.01.429161.

- 15. Stenum J., Rossi C., Roemmich R.T. Two-Dimensional Video-Based Analysis of Human Gait Using Pose Estimation. PLoS Comput. Biol. 2021;17:e1008935. doi: 10.1371/journal.pcbi.1008935.

- 16. Kwolek B., Michalczuk A., Krzeszowski T., Switonski A., Josinski H., Wojciechowski K. Calibrated and Synchronized Multi-View Video and Motion Capture Dataset for Evaluation of Gait Recognition. Multimed. Tools Appl. 2019;78:32437–32465. doi: 10.1007/s11042-019-07945-y.

- 17. Wang L., Tan T., Ning H., Hu W. Silhouette Analysis-Based Gait Recognition for Human Identification. IEEE Trans. Pattern Anal. Mach. Intell. 2003;25:1505–1518. doi: 10.1109/TPAMI.2003.1251144.

- 18. Holte M.B., Cuong T., Trivedi M.M., Moeslund T.B. Human Pose Estimation and Activity Recognition from Multi-View Videos: Comparative Explorations of Recent Developments. IEEE J. Sel. Top. Signal Process. 2012;6:538–552. doi: 10.1109/JSTSP.2012.2196975.

- 19. Isaacs J., Foo S. Hand Pose Estimation for American Sign Language Recognition; Proceedings of the Thirty-Sixth Southeastern Symposium on System Theory; Atlanta, GA, USA. 16 March 2004; pp. 132–136.

- 20. Cronin N.J. Using Deep Neural Networks for Kinematic Analysis: Challenges and Opportunities. J. Biomech. 2021;123:110460. doi: 10.1016/j.jbiomech.2021.110460.

- 21. Seethapathi N., Wang S., Saluja R., Blohm G., Kording K.P. Movement Science Needs Different Pose Tracking Algorithms. arXiv. 2019. arXiv:1907.10226.

- 22. Arac A. Machine Learning for 3D Kinematic Analysis of Movements in Neurorehabilitation. Curr. Neurol. Neurosci. Rep. 2020;20:29. doi: 10.1007/s11910-020-01049-z.

- 23. Andriluka M., Pishchulin L., Gehler P., Schiele B. 2D Human Pose Estimation: New Benchmark and State of the Art Analysis; Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition; Columbus, OH, USA. 23–28 June 2014; pp. 3683–3693.

- 24. Lin T.Y., Maire M., Belongie S., Hays J., Perona P., Ramanan D., Dollár P., Zitnick C.L. Computer Vision—ECCV 2014. Springer International Publishing; Cham, Switzerland: 2014. Microsoft COCO: Common Objects in Context; pp. 740–755. (Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics)).

- 25. Sato K., Nagashima Y., Mano T., Iwata A., Toda T. Quantifying Normal and Parkinsonian Gait Features from Home Movies: Practical Application of a Deep Learning–Based 2D Pose Estimator. PLoS ONE. 2019;14:e0223549. doi: 10.1371/journal.pone.0223549.

- 26. Chambers C., Kong G., Wei K., Kording K. Pose Estimates from Online Videos Show That Side-by-Side Walkers Synchronize Movement under Naturalistic Conditions. PLoS ONE. 2019;14:e0217861. doi: 10.1371/journal.pone.0217861.

- 27. Cronin N.J., Rantalainen T., Ahtiainen J.P., Hynynen E., Waller B. Markerless 2D Kinematic Analysis of Underwater Running: A Deep Learning Approach. J. Biomech. 2019;87:75–82. doi: 10.1016/j.jbiomech.2019.02.021.

- 28. Ota M., Tateuchi H., Hashiguchi T., Kato T., Ogino Y., Yamagata M., Ichihashi N. Verification of Reliability and Validity of Motion Analysis Systems during Bilateral Squat Using Human Pose Tracking Algorithm. Gait Posture. 2020;80:62–67. doi: 10.1016/j.gaitpost.2020.05.027.

- 29. Nakano N., Sakura T., Ueda K., Omura L., Kimura A., Iino Y., Fukashiro S., Yoshioka S. Evaluation of 3D Markerless Motion Capture Accuracy Using OpenPose With Multiple Video Cameras. Front. Sports Act. Living. 2020;2:50. doi: 10.3389/fspor.2020.00050.

- 30. Zago M., Luzzago M., Marangoni T., De Cecco M., Tarabini M., Galli M. 3D Tracking of Human Motion Using Visual Skeletonization and Stereoscopic Vision. Front. Bioeng. Biotechnol. 2020;8:181. doi: 10.3389/fbioe.2020.00181.

- 31. D’Antonio E., Taborri J., Palermo E., Rossi S., Patanè F. A Markerless System for Gait Analysis Based on OpenPose Library; Proceedings of the 2020 IEEE International Instrumentation and Measurement Technology Conference (I2MTC); Dubrovnik, Croatia. 25–28 May 2020; pp. 1–6.

- 32. Boswell M.A., Uhlrich S.D., Kidziński Ł., Thomas K., Kolesar J.A., Gold G.E., Beaupre G.S., Delp S.L. A Neural Network to Predict the Knee Adduction Moment in Patients with Osteoarthritis Using Anatomical Landmarks Obtainable from 2D Video Analysis. Osteoarthr. Cartil. 2021;29:346–356. doi: 10.1016/j.joca.2020.12.017.

- 33. Lu M., Poston K., Pfefferbaum A., Sullivan E.V., Fei-Fei L., Pohl K.M., Niebles J.C., Adeli E. Medical Image Computing and Computer Assisted Intervention—MICCAI 2020. Volume 12263. Springer International Publishing; Cham, Switzerland: 2020. Vision-Based Estimation of MDS-UPDRS Gait Scores for Assessing Parkinson’s Disease Motor Severity; pp. 637–647.

- 34. Kidziński Ł., Yang B., Hicks J.L., Rajagopal A., Delp S.L., Schwartz M.H. Deep Neural Networks Enable Quantitative Movement Analysis Using Single-Camera Videos. Nat. Commun. 2020;11:4054. doi: 10.1038/s41467-020-17807-z.

- 35. Ota M., Tateuchi H., Hashiguchi T., Ichihashi N. Verification of Validity of Gait Analysis Systems during Treadmill Walking and Running Using Human Pose Tracking Algorithm. Gait Posture. 2021;85:290–297. doi: 10.1016/j.gaitpost.2021.02.006.

- 36. Ossmy O., Adolph K.E. Real-Time Assembly of Coordination Patterns in Human Infants. Curr. Biol. 2020;30:4553–4562. doi: 10.1016/j.cub.2020.08.073.

- 37. Chambers C., Seethapathi N., Saluja R., Loeb H., Pierce S.R., Bogen D.K., Prosser L., Johnson M.J., Kording K.P. Computer Vision to Automatically Assess Infant Neuromotor Risk. IEEE Trans. Neural Syst. Rehabil. Eng. 2020;28:2431–2442. doi: 10.1109/TNSRE.2020.3029121.

- 38. Fang H., Xie S., Lu C. RMPE: Regional Multi-Person Pose Estimation. arXiv. 2018. arXiv:1612.001737.

- 39. Cao Z., Hidalgo Martinez G., Simon T., Wei S.-E., Sheikh Y.A. OpenPose: Realtime Multi-Person 2D Pose Estimation Using Part Affinity Fields. IEEE Trans. Pattern Anal. Mach. Intell. 2021;43:172–186. doi: 10.1109/TPAMI.2019.2929257.

- 40. Viswakumar A., Rajagopalan V., Ray T., Parimi C. Human Gait Analysis Using OpenPose; Proceedings of the IEEE International Conference Image Information Processing; Shimla, India. 15–17 November 2019; pp. 310–314.

- 41. Ye Q., Yuan S., Kim T.-K. Spatial Attention Deep Net with Partial PSO for Hierarchical Hybrid Hand Pose Estimation. arXiv. 2016. arXiv:1604.03334.

- 42. Ivekovic S., Trucco E. Human Body Pose Estimation with PSO; Proceedings of the 2006 IEEE International Conference on Evolutionary Computation; Vancouver, BC, Canada. 16–21 July 2006; pp. 1256–1263.

- 43. Lee K.-Z., Liu T.-W., Ho S.-Y. Intelligent Data Engineering and Automated Learning. Springer; Berlin/Heidelberg, Germany: 2003. Model-Based Pose Estimation of Human Motion Using Orthogonal Simulated Annealing.

- 44. Halvorsen K., Söderström T., Stokes V., Lanshammar H. Using an Extended Kalman Filter for Rigid Body Pose Estimation. J. Biomech. Eng. 2004;127:475–483. doi: 10.1115/1.1894371.

- 45. Janabi-Sharifi F., Marey M. A Kalman-Filter-Based Method for Pose Estimation in Visual Servoing. IEEE Trans. Robot. 2010;26:939–947. doi: 10.1109/TRO.2010.2061290.

- 46. Fanning P.A.J., Sparaci L., Dissanayake C., Hocking D.R., Vivanti G. Functional Play in Young Children with Autism and Williams Syndrome: A Cross-Syndrome Comparison. Child Neuropsychol. J. Norm. Abnorm. Dev. Child. Adolesc. 2021;27:125–149. doi: 10.1080/09297049.2020.1804846.

- 47. Kretch K.S., Franchak J.M., Adolph K.E. Crawling and Walking Infants See the World Differently. Child Dev. 2014;85:1503–1518. doi: 10.1111/cdev.12206.

- 48. LeBarton E.S., Iverson J.M. Fine Motor Skill Predicts Expressive Language in Infant Siblings of Children with Autism. Dev. Sci. 2013;16:815–827. doi: 10.1111/desc.12069.

- 49. Masek L.R., Paterson S.J., Golinkoff R.M., Bakeman R., Adamson L.B., Owen M.T., Pace A., Hirsh-Pasek K. Beyond Talk: Contributions of Quantity and Quality of Communication to Language Success across Socioeconomic Strata. Infancy Off. J. Int. Soc. Infant Stud. 2021;26:123–147. doi: 10.1111/infa.12378.

- 50. Le H., Hoch J.E., Ossmy O., Adolph K.E., Fern X., Fern A. Modeling Infant Free Play Using Hidden Markov Models; Proceedings of the 2021 IEEE International Conference on Development and Learning (ICDL); Beijing, China. 23–26 August 2021; pp. 1–6.

- 51.Doroniewicz I., Ledwoń D.J., Affanasowicz A., Kieszczyńska K., Latos D., Matyja M., Mitas A.W., Myśliwiec A. Writhing Movement Detection in Newborns on the Second and Third Day of Life Using Pose-Based Feature Machine Learning Classification. Sensors. 2020;20:5986. doi: 10.3390/s20215986. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 52.Iverson J.M., Shic F., Wall C.A., Chawarska K., Curtin S., Estes A., Gardner J.M., Hutman T., Landa R.J., Levin A.R. Early Motor Abilities in Infants at Heightened versus Low Risk for ASD: A Baby Siblings Research Consortium (BSRC) Study. J. Abnorm. Psychol. 2019;128:69. doi: 10.1037/abn0000390. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 53.Iverson J.M. Developing Language in a Developing Body: The Relationship between Motor Development and Language Development. J. Child Lang. 2010;37:229–261. doi: 10.1017/S0305000909990432. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 54.Alcock K.J., Krawczyk K. Individual Differences in Language Development: Relationship with Motor Skill at 21 Months. Dev. Sci. 2010;13:677–691. doi: 10.1111/j.1467-7687.2009.00924.x. [DOI] [PubMed] [Google Scholar]

- 55.Adolph K.E., Hoch J.E. Motor Development: Embodied, Embedded, Enculturated, and Enabling. Annu. Rev. Psychol. 2019;70:141–164. doi: 10.1146/annurev-psych-010418-102836. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 56.Rosenbaum P., Paneth N., Leviton A., Goldstein M., Bax M., Damiano D., Dan B., Jacobsson B. A Report: The Definition and Classification of Cerebral Palsy April 2006. Dev. Med. Child Neurol. Suppl. 2007;109:8–14. [PubMed] [Google Scholar]

- 57.Novak I., Morgan C., Adde L., Blackman J., Boyd R.N., Brunstrom-Hernandez J., Cioni G., Damiano D., Darrah J., Eliasson A.-C. Early, Accurate Diagnosis and Early Intervention in Cerebral Palsy: Advances in Diagnosis and Treatment. JAMA Pediatr. 2017;171:897–907. doi: 10.1001/jamapediatrics.2017.1689. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 58.Geethanath S., Vaughan J.T.J. Accessible Magnetic Resonance Imaging: A Review. J. Magn. Reson. Imaging JMRI. 2019;49:e65–e77. doi: 10.1002/jmri.26638. [DOI] [PubMed] [Google Scholar]

- 59.Adde L., Helbostad J.L., Jensenius A.R., Taraldsen G., Grunewaldt K.H., Støen R. Early Prediction of Cerebral Palsy by Computer-based Video Analysis of General Movements: A Feasibility Study. Dev. Med. Child Neurol. 2010;52:773–778. doi: 10.1111/j.1469-8749.2010.03629.x. [DOI] [PubMed] [Google Scholar]

- 60.Rahmati H., Aamo O.M., Stavdahl Ø., Dragon R., Adde L. Video-Based Early Cerebral Palsy Prediction Using Motion Segmentation. Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. 2014:3779–3783. doi: 10.1109/EMBC.2014.6944446. [DOI] [PubMed] [Google Scholar]

- 61.Ihlen E.A., Støen R., Boswell L., de Regnier R.-A., Fjørtoft T., Gaebler-Spira D., Labori C., Loennecken M.C., Msall M.E., Möinichen U.I. Machine Learning of Infant Spontaneous Movements for the Early Prediction of Cerebral Palsy: A Multi-Site Cohort Study. J. Clin. Med. 2020;9:5. doi: 10.3390/jcm9010005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 62.Chawarska K., Klin A., Paul R., Volkmar F. Autism Spectrum Disorder in the Second Year: Stability and Change in Syndrome Expression. J. Child Psychol. Psychiatry. 2007;48:128–138. doi: 10.1111/j.1469-7610.2006.01685.x. [DOI] [PubMed] [Google Scholar]

- 63.Landa R., Garrett-Mayer E. Development in Infants with Autism Spectrum Disorders: A Prospective Study. J. Child Psychol. Psychiatry. 2006;47:629–638. doi: 10.1111/j.1469-7610.2006.01531.x. [DOI] [PubMed] [Google Scholar]

- 64.Maenner M.J., Shaw K.A., Baio J. Prevalence of Autism Spectrum Disorder among Children Aged 8 Years—Autism and Developmental Disabilities Monitoring Network, 11 Sites, United States, 2016. MMWR Surveill. Summ. 2020;69:1–12. doi: 10.15585/mmwr.ss6904a1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 65.Gordon-Lipkin E., Foster J., Peacock G. Whittling down the Wait Time: Exploring Models to Minimize the Delay from Initial Concern to Diagnosis and Treatment of Autism Spectrum Disorder. Pediatr. Clin. 2016;63:851–859. doi: 10.1016/j.pcl.2016.06.007. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 66.Ning M., Daniels J., Schwartz J., Dunlap K., Washington P., Kalantarian H., Du M., Wall D.P. Identification and Quantification of Gaps in Access to Autism Resources in the United States: An Infodemiological Study. J. Med. Internet Res. 2019;21:e13094. doi: 10.2196/13094. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 67.Brian J.A., Smith I.M., Zwaigenbaum L., Bryson S.E. Cross-site Randomized Control Trial of the Social ABCs Caregiver-mediated Intervention for Toddlers with Autism Spectrum Disorder. Autism Res. 2017;10:1700–1711. doi: 10.1002/aur.1818. [DOI] [PubMed] [Google Scholar]

- 68.Dawson G., Rogers S., Munson J., Smith M., Winter J., Greenson J., Donaldson A., Varley J. Randomized, Controlled Trial of an Intervention for Toddlers with Autism: The Early Start Denver Model. Pediatrics. 2010;125:e17–e23. doi: 10.1542/peds.2009-0958. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 69.Landa R.J., Holman K.C., O’Neill A.H., Stuart E.A. Intervention Targeting Development of Socially Synchronous Engagement in Toddlers with Autism Spectrum Disorder: A Randomized Controlled Trial. J. Child Psychol. Psychiatry. 2011;52:13–21. doi: 10.1111/j.1469-7610.2010.02288.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 70.Crippa A., Salvatore C., Perego P., Forti S., Nobile M., Molteni M., Castiglioni I. Use of Machine Learning to Identify Children with Autism and Their Motor Abnormalities. J. Autism Dev. Disord. 2015;45:2146–2156. doi: 10.1007/s10803-015-2379-8. [DOI] [PubMed] [Google Scholar]

- 71.Karatsidis A., Richards R.E., Konrath J.M., van den Noort J.C., Schepers H.M., Bellusci G., Harlaar J., Veltink P.H. Validation of Wearable Visual Feedback for Retraining Foot Progression Angle Using Inertial Sensors and an Augmented Reality Headset. J. NeuroEng. Rehabil. 2018;15:78. doi: 10.1186/s12984-018-0419-2. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 72.Guo Y., Deligianni F., Gu X., Yang G.-Z. 3-D Canonical Pose Estimation and Abnormal Gait Recognition with a Single RGB-D Camera. IEEE Robot. Autom. Lett. 2019;4:3617–3624. doi: 10.1109/LRA.2019.2928775. [DOI] [Google Scholar]

- 73.Kondragunta J., Hirtz G. Gait Parameter Estimation of Elderly People Using 3D Human Pose Estimation in Early Detection of Dementia. Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. 2020:5798–5801. doi: 10.1109/EMBC44109.2020.9175766. [DOI] [PubMed] [Google Scholar]

- 74.Chaaraoui A.A., Padilla-López J.R., Flórez-Revuelta F. Abnormal Gait Detection with RGB-D Devices Using Joint Motion History Features; Proceedings of the 2015 11th IEEE International Conference and Workshops on Automatic Face and Gesture Recognition (FG); Ljubljana, Slovenia. 4–8 May 2015; pp. 1–6. [DOI] [Google Scholar]

- 75.Li G., Liu T., Yi J. Wearable Sensor System for Detecting Gait Parameters of Abnormal Gaits: A Feasibility Study. IEEE Sens. J. 2018;18:4234–4241. doi: 10.1109/JSEN.2018.2814994. [DOI] [Google Scholar]

- 76.Chen Y., Du R., Luo K., Xiao Y. Fall Detection System Based on Real-Time Pose Estimation and SVM; Proceedings of the 2021 IEEE 2nd International Conference on Big Data, Artificial Intelligence and Internet of Things Engineering (ICBAIE); Nanchang, China. 26–28 March 2021; pp. 990–993. [DOI] [Google Scholar]

- 77.Bian Z., Hou J., Chau L., Magnenat-Thalmann N. Fall Detection Based on Body Part Tracking Using a Depth Camera. IEEE J. Biomed. Health Inform. 2015;19:430–439. doi: 10.1109/JBHI.2014.2319372. [DOI] [PubMed] [Google Scholar]

- 78.Huang Z., Liu Y., Fang Y., Horn B.K.P. Video-Based Fall Detection for Seniors with Human Pose Estimation; Proceedings of the 2018 4th International Conference on Universal Village (UV); Boston, MA, USA. 21–24 October 2018; pp. 1–4. [DOI] [Google Scholar]

- 79.Mehrizi R., Peng X., Tang Z., Xu X., Metaxas D., Li K. Toward Marker-Free 3D Pose Estimation in Lifting: A Deep Multi-View Solution. arXiv. 2018. arXiv:1802.01741. [Google Scholar]

- 80.Han S., Lee S. A Vision-Based Motion Capture and Recognition Framework for Behavior-Based Safety Management. Autom. Constr. 2013;35:131–141. doi: 10.1016/j.autcon.2013.05.001. [DOI] [Google Scholar]

- 81.Han S., Achar M., Lee S., Peña-Mora F. Empirical Assessment of a RGB-D Sensor on Motion Capture and Action Recognition for Construction Worker Monitoring. Vis. Eng. 2013;1:6. doi: 10.1186/2213-7459-1-6. [DOI] [Google Scholar]

- 82.Blanchard N., Skinner K., Kemp A., Scheirer W., Flynn P. “Keep Me In, Coach!”: A Computer Vision Perspective on Assessing ACL Injury Risk in Female Athletes; Proceedings of the 2019 IEEE Winter Conference on Applications of Computer Vision (WACV); Waikoloa, HI, USA. 7–11 January 2019; pp. 1366–1374. [DOI] [Google Scholar]

- 83.Vukicevic A.M., Macuzic I., Mijailovic N., Peulic A., Radovic M. Assessment of the Handcart Pushing and Pulling Safety by Using Deep Learning 3D Pose Estimation and IoT Force Sensors. Expert Syst. Appl. 2021;183:115371. doi: 10.1016/j.eswa.2021.115371. [DOI] [Google Scholar]

- 84.Mehrizi R., Peng X., Metaxas D.N., Xu X., Zhang S., Li K. Predicting 3-D Lower Back Joint Load in Lifting: A Deep Pose Estimation Approach. IEEE Trans. Hum. Mach. Syst. 2019;49:85–94. doi: 10.1109/THMS.2018.2884811. [DOI] [Google Scholar]

- 85.Krosshaug T., Nakamae A., Boden B.P., Engebretsen L., Smith G., Slauterbeck J.R., Hewett T.E., Bahr R. Mechanisms of Anterior Cruciate Ligament Injury in Basketball: Video Analysis of 39 Cases. Am. J. Sports Med. 2007;35:359–367. doi: 10.1177/0363546506293899. [DOI] [PubMed] [Google Scholar]

- 86.Olsen O.-E., Myklebust G., Engebretsen L., Bahr R. Injury Mechanisms for Anterior Cruciate Ligament Injuries in Team Handball: A Systematic Video Analysis. Am. J. Sports Med. 2004;32:1002–1012. doi: 10.1177/0363546503261724. [DOI] [PubMed] [Google Scholar]

- 87.Kim W., Sung J., Saakes D., Huang C., Xiong S. Ergonomic Postural Assessment Using a New Open-Source Human Pose Estimation Technology (OpenPose). Int. J. Ind. Ergon. 2021;84:103164. doi: 10.1016/j.ergon.2021.103164. [DOI] [Google Scholar]

- 88.Li Y., Wang C., Cao Y., Liu B., Tan J., Luo Y. Human Pose Estimation Based In-Home Lower Body Rehabilitation System; Proceedings of the 2020 International Joint Conference on Neural Networks (IJCNN); Glasgow, UK. 19–24 July 2020; pp. 1–8. [DOI] [Google Scholar]

- 89.Cordella F., Di Corato F., Zollo L., Siciliano B. New Trends in Image Analysis and Processing—ICIAP 2013. Volume 8158. Springer; Berlin/Heidelberg, Germany: 2013. A Robust Hand Pose Estimation Algorithm for Hand Rehabilitation; pp. 1–10. [DOI] [Google Scholar]

- 90.Zhu Y., Lu W., Gan W., Hou W. A Contactless Method to Measure Real-Time Finger Motion Using Depth-Based Pose Estimation. Comput. Biol. Med. 2021;131:104282. doi: 10.1016/j.compbiomed.2021.104282. [DOI] [PubMed] [Google Scholar]

- 91.Milosevic B., Leardini A., Farella E. Kinect and Wearable Inertial Sensors for Motor Rehabilitation Programs at Home: State of the Art and an Experimental Comparison. Biomed. Eng. Online. 2020;19:25. doi: 10.1186/s12938-020-00762-7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 92.Tao Y., Hu H., Zhou H. Integration of Vision and Inertial Sensors for 3D Arm Motion Tracking in Home-Based Rehabilitation. Int. J. Robot. Res. 2007;26:607–624. doi: 10.1177/0278364907079278. [DOI] [Google Scholar]

- 93.Ranasinghe I., Dantu R., Albert M.V., Watts S., Ocana R. Cyber-Physiotherapy: Rehabilitation to Training; Proceedings of the 2021 IFIP/IEEE International Symposium on Integrated Network Management (IM); Bordeaux, France. 17–21 May 2021; pp. 1054–1057. [Google Scholar]

- 94.Tao T., Yang X., Xu J., Wang W., Zhang S., Li M., Xu G. Trajectory Planning of Upper Limb Rehabilitation Robot Based on Human Pose Estimation; Proceedings of the 2020 17th International Conference on Ubiquitous Robots (UR); Kyoto, Japan. 22–26 June 2020; pp. 333–338. [DOI] [Google Scholar]

- 95.Palermo M., Moccia S., Migliorelli L., Frontoni E., Santos C.P. Real-Time Human Pose Estimation on a Smart Walker Using Convolutional Neural Networks. Expert Syst. Appl. 2021;184:115498. doi: 10.1016/j.eswa.2021.115498. [DOI] [Google Scholar]

- 96.Airò Farulla G., Pianu D., Cempini M., Cortese M., Russo L.O., Indaco M., Nerino R., Chimienti A., Oddo C.M., Vitiello N. Vision-Based Pose Estimation for Robot-Mediated Hand Telerehabilitation. Sensors. 2016;16:208. doi: 10.3390/s16020208. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 97.Sarsfield J., Brown D., Sherkat N., Langensiepen C., Lewis J., Taheri M., McCollin C., Barnett C., Selwood L., Standen P., et al. Clinical Assessment of Depth Sensor Based Pose Estimation Algorithms for Technology Supervised Rehabilitation Applications. Int. J. Med. Inf. 2019;121:30–38. doi: 10.1016/j.ijmedinf.2018.11.001. [DOI] [PubMed] [Google Scholar]

- 98.Xu W., Chatterjee A., Zollhoefer M., Rhodin H., Mehta D., Seidel H.-P., Theobalt C. MonoPerfCap: Human Performance Capture from Monocular Video. arXiv. 2018. arXiv:1708.02136. [Google Scholar]

- 99.Habermann M., Xu W., Zollhoefer M., Pons-Moll G., Theobalt C. LiveCap: Real-Time Human Performance Capture from Monocular Video. arXiv. 2019. arXiv:1810.02648. [Google Scholar]

- 100.Wang J., Qiu K., Peng H., Fu J., Zhu J. AI Coach: Deep Human Pose Estimation and Analysis for Personalized Athletic Training Assistance; Proceedings of the 27th ACM International Conference on Multimedia; Nice, France. 21–25 October 2019; pp. 374–382. [DOI] [Google Scholar]

- 101.Einfalt M., Dampeyrou C., Zecha D., Lienhart R. Frame-Level Event Detection in Athletics Videos with Pose-Based Convolutional Sequence Networks; Proceedings of the 27th ACM International Conference on Multimedia; Nice, France. 21–25 October 2019; pp. 42–50. [DOI] [Google Scholar]

- 102.Einfalt M., Zecha D., Lienhart R. Activity-Conditioned Continuous Human Pose Estimation for Performance Analysis of Athletes Using the Example of Swimming; Proceedings of the 2018 IEEE Winter Conference on Applications of Computer Vision (WACV); Lake Tahoe, NV, USA. 12–15 March 2018; pp. 446–455. [DOI] [Google Scholar]

- 103.Güler R.A., Neverova N., Kokkinos I. DensePose: Dense Human Pose Estimation in the Wild. arXiv. 2018. arXiv:1802.00434. [Google Scholar]

- 104.Patacchiola M., Cangelosi A. Head Pose Estimation in the Wild Using Convolutional Neural Networks and Adaptive Gradient Methods. Pattern Recognit. 2017;71:132–143. doi: 10.1016/j.patcog.2017.06.009. [DOI] [Google Scholar]