Abstract

Purpose

We propose a deep learning–based image reconstruction algorithm to produce high-resolution optical coherence tomographic angiograms (OCTA) of the intermediate capillary plexus (ICP) and deep capillary plexus (DCP).

Methods

In this study, 6-mm × 6-mm macular scans with a 400 × 400 A-line sampling density and 3-mm × 3-mm scans with a 304 × 304 A-line sampling density were acquired on one or both eyes of 180 participants (including 230 eyes with diabetic retinopathy and 44 healthy controls) using a 70-kHz commercial OCT system (RTVue-XR; Optovue, Inc., Fremont, California, USA). A projection-resolved OCTA algorithm removed projection artifacts at the voxel level. ICP and DCP angiograms were generated by maximum projection of the OCTA signal within the respective plexus. We proposed a deep learning–based method that takes 6-mm × 6-mm ICP and DCP angiograms as input and uses registered 3-mm × 3-mm ICP and DCP angiograms with proper sampling density as the ground truth reference to reconstruct high-resolution 6-mm × 6-mm ICP and DCP en face OCTA. We applied the same network to 3-mm × 3-mm angiograms to enhance these images further. We evaluated the reconstructed 3-mm × 3-mm and 6-mm × 6-mm angiograms based on vascular connectivity, Weber contrast, false flow signal (flow signal erroneously generated from background), and the noise intensity in the foveal avascular zone.

Results

Compared to the originals, the Deep Capillary Angiogram Reconstruction Network (DCARnet)–enhanced 6-mm × 6-mm angiograms had significantly reduced noise intensity (ICP, 7.38 ± 25.22, P < 0.001; DCP, 11.20 ± 22.52, P < 0.001), improved vascular connectivity (ICP, 0.95 ± 0.01, P < 0.001; DCP, 0.96 ± 0.01, P < 0.001), and enhanced Weber contrast (ICP, 4.25 ± 0.10, P < 0.001; DCP, 3.84 ± 0.84, P < 0.001), without generating false flow signal when noise intensity was lower than 650. DCARnet also reduced noise, improved connectivity, and enhanced Weber contrast in 3-mm × 3-mm ICP and DCP angiograms from 101 eyes. In addition, DCARnet preserved the appearance of the dilated vessels in the reconstructed angiograms in diabetic eyes.

Conclusions

DCARnet can enhance 3-mm × 3-mm and 6-mm × 6-mm ICP and DCP angiogram image quality without introducing artifacts.

Translational Relevance

The enhanced 6-mm × 6-mm angiograms may be easier for clinicians to interpret qualitatively.

Keywords: reconstruction, optical coherence tomographic angiograms, intermediate capillary plexus, deep capillary plexus, deep learning

Introduction

Optical coherence tomographic angiography (OCTA) is a noninvasive imaging technique that can provide angiograms at specific depths within the retina and visualize microvasculature in vivo.1,2 Numerous studies have demonstrated its utility in the diagnosis of retinal pathology.3–5 However, current OCTA technology has some significant limitations. One of these limitations is the presence of artifacts. While many artifacts, such as projection artifacts and bulk motion artifacts, can be removed by postprocessing algorithms,6–8 images may still have strong background noise, and vessels may become fragmented due to image processing and display strategies. Another important limitation in current OCTA technology is a relatively small field of view. Contemporary research is moving toward wider fields of view.9,10 At a fixed device speed, capturing a larger field of view without increasing the duration of the imaging procedure requires reducing sampling density, which both lowers image resolution and exacerbates the effects of artifacts.11 We previously reported on a deep learning–based method that effectively enhances en face images of the superficial vascular complex (SVC).12 Plexus-specific pathology of the intermediate capillary plexus (ICP) and deep capillary plexus (DCP), however, can provide novel diagnostic and prognostic indicators.5 For example, dilated capillaries in the ICP and DCP are associated with more severe diabetic retinopathy (DR)13 and may be associated with increased treatment requirements in branch retinal vein occlusion.14 However, optimal imaging of the ICP and DCP is more difficult due to their posterior location and the increased prevalence of artifacts.

Several groups have proposed algorithms to improve OCTA image quality. However, most previous work has focused on traditional image-processing algorithms that must be handcrafted for specific contexts. This is in contrast to cutting-edge deep learning–based approaches, which usually generalize well.15–20 State-of-the-art deep learning–based methods are currently applied to several different kinds of clinical image-processing tasks,21 such as image classification,22,23 semantic segmentation,24–31 and enhancement.32,33 In this study, we propose and evaluate a Deep Capillary Angiogram Reconstruction Network (DCARnet), a deep learning–based method to enhance 6-mm × 6-mm and 3-mm × 3-mm ICP and DCP en face OCTA images. We have released the Python source code at https://github.com/octangio/DCARnet.

Methods

Data Acquisition

The study was approved by the Institutional Review Board/Ethics Committee of Oregon Health & Science University, and informed consent was collected from all participants, in compliance with the Declaration of Helsinki. In total, 274 eyes from 180 participants were scanned. We acquired 3-mm × 3-mm and 6-mm × 6-mm OCTA macular scans from the same eye using a 70-kHz commercial OCTA system (RTVue-XR version 2017.1.0.151; Optovue, Inc., Fremont, California, USA). Each raster position takes two repeated B-scans to produce motion contrast and construct an OCTA data volume using the efficient split-spectrum amplitude-decorrelation algorithm.1 Each 3-mm × 3-mm B-scan consists of 304 A-lines, or 101 A-lines/mm. There were 400 A-lines in each 6-mm × 6-mm B-scan, which yields a lower sampling density of 67 A-lines/mm. Thirty-eight 6-mm × 6-mm OCTA macular scans from a commercial 120-kHz spectral domain OCT system (Solix; Optovue, Inc.) with 15-µm lateral resolution and 5-µm axial resolution were acquired.

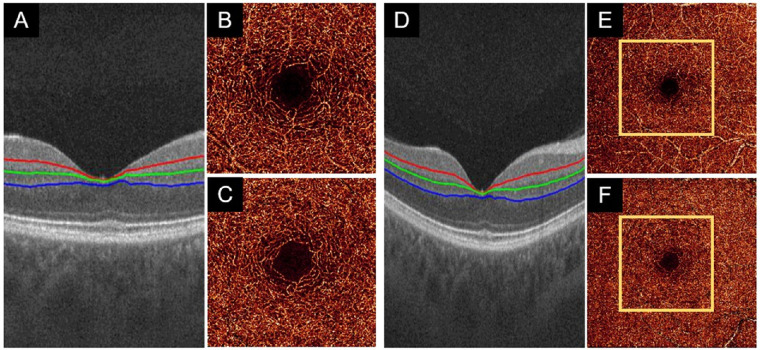

All en face OCTA images require retinal layer segmentation in order to determine the regions over which flow signal should be projected and to isolate separate plexuses. We used a guided bidirectional graph search to perform this segmentation34 (Figs. 1A, 1D). A projection-resolved (PR) OCTA algorithm was used to suppress the projection artifacts in retinal capillary plexuses.7 The 3-mm × 3-mm and 6-mm × 6-mm angiograms of the ICP (Figs. 1B, 1E) were generated by maximum projection of the OCTA signal in a slab including the inner plexiform layer (IPL) and inner nuclear layer (INL).35 The 3-mm × 3-mm and 6-mm × 6-mm angiograms of the DCP (Figs. 1C, 1F) were projected in a slab including the INL and outer plexiform layer.
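The slab projection described above can be illustrated with a minimal sketch. It assumes the volume is stored as nested lists indexed `[y][x][depth]` and that segmentation supplies per-A-line depth indices; the function name `max_projection` and the toy boundary values are hypothetical and are not taken from the released code.

```python
def max_projection(volume, top, bottom):
    """Project an OCTA volume to an en face image by taking, for each A-line,
    the maximum flow value between two segmented boundaries.

    volume[y][x] is the list of flow values along depth (one A-line);
    top[y][x] and bottom[y][x] are depth indices (top inclusive, bottom exclusive).
    """
    en_face = []
    for y, row in enumerate(volume):
        out_row = []
        for x, a_line in enumerate(row):
            slab = a_line[top[y][x]:bottom[y][x]]
            # An empty slab (coinciding boundaries) contributes no flow signal.
            out_row.append(max(slab) if slab else 0.0)
        en_face.append(out_row)
    return en_face


# Toy 1 x 2 volume with 6 depth samples per A-line.
volume = [[[0.1, 0.9, 0.2, 0.4, 0.8, 0.3],
           [0.5, 0.1, 0.7, 0.2, 0.6, 0.0]]]
icp_top, icp_bottom = [[1, 1]], [[3, 3]]   # hypothetical ICP slab limits
dcp_top, dcp_bottom = [[3, 3]], [[5, 5]]   # hypothetical DCP slab limits

icp = max_projection(volume, icp_top, icp_bottom)
dcp = max_projection(volume, dcp_top, dcp_bottom)
```

Because each plexus is projected from its own slab, the same volume yields a separate ICP and DCP angiogram once the layer boundaries are known.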

Figure 1.

Examples of data acquisition in a healthy eye. (A) ICP (outer 20% of the ganglion cell complex and inner 50% of the INL) slab and DCP (outer 50% of the INL and outer plexiform layer) segmentation boundaries on a 3-mm × 3-mm scan. (B, C) The 3-mm × 3-mm angiograms of the ICP and DCP generated by maximum projection of the OCTA signal in the slabs delineated in (A). (D–F) Equivalent images for 6-mm × 6-mm angiograms from the same eye are of lower quality than 3-mm × 3-mm angiograms.

Convolutional Neural Network Architecture

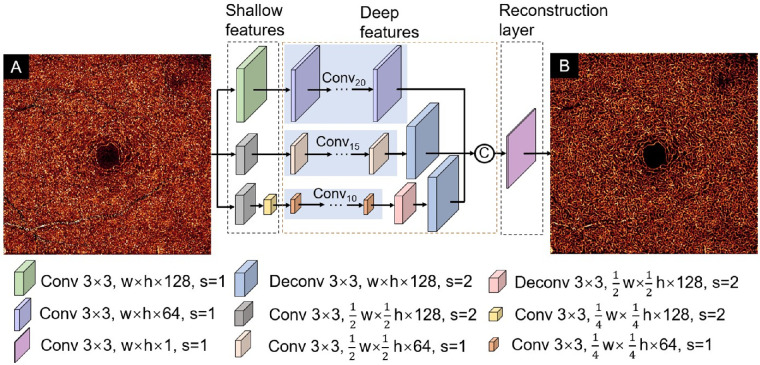

DCARnet takes individual en face angiograms as input (Fig. 2A). DCARnet comprises shallow feature extraction layers, deep feature extraction layers, and a reconstruction layer. The image features at different scales are extracted by three bypasses. The first bypass includes 21 convolutional layers. The first convolutional layer with 128 channels operates on the input image without changing the resolution of the image to extract shallow features, and the remaining 20 convolutional layers with 64 feature maps are used to extract deeper features. The second bypass includes 16 convolutional layers and a deconvolutional layer. The stride of the first convolutional layer with 128 channels is 2 in order to down-sample the image and extract low-level features. The remaining 15 convolutional layers with 64 channels generate deeper features, and a deconvolutional layer with 128 channels is used to up-sample the image to achieve the same scale as the input image. The strides of the deconvolutional layer and remaining convolutional layers are 2 and 1, respectively. The third bypass includes 12 convolutional layers and 2 deconvolutional layers. The strides of the first and second convolutional layers with 128 channels are set to 2 to reduce image resolution and extract shallow features. The remaining 10 convolutional layers with 64 feature maps are applied to generate high-level features. The stride of both deconvolutional layers is set to 2 to achieve the same scale as the input image. Then, all of the deep features extracted by the three bypasses are fused by concatenation. The fused hierarchical features are sent to a reconstruction layer that has one feature map to produce the final reconstructed image (Fig. 2B). In all layers, the kernel size is 3 × 3 pixels. The feature extraction bypasses used in DCARnet can extract features at different scales. In the first bypass, all feature maps were kept at the same resolution as the original input. The other two bypasses have down-sampling layers that reduced the size of the feature maps by factors of 2 and 4, respectively. These two bypasses reduced the number of parameters to accelerate the training process and enhanced the tolerance of DCARnet to disturbances like noise and flow signal strength changes. The number of convolutional layers in the three bypasses decreases successively, making the network pay more attention to the feature extraction from the original input image.
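To make the multiscale layout concrete, the following sketch traces the spatial size and channel count through each bypass. It is not the network implementation: it only encodes the layer counts, channel widths, and strides stated above. Two points are assumptions: "same" padding for all layers, and 128 channels for the deconvolutional layers of the third bypass, which the text does not specify.

```python
import math

def conv(size, stride):
    # 3 x 3 convolution; "same" padding is an assumption of this sketch.
    return math.ceil(size / stride)

def deconv(size, stride):
    # Transposed convolution that up-samples by the stride.
    return size * stride

# Each entry: (kind, channels, stride, number of repeated layers).
BYPASSES = {
    "bypass1": [("conv", 128, 1, 1), ("conv", 64, 1, 20)],
    "bypass2": [("conv", 128, 2, 1), ("conv", 64, 1, 15), ("deconv", 128, 2, 1)],
    # Deconvolution width of 128 in bypass 3 is assumed, not stated in the text.
    "bypass3": [("conv", 128, 2, 2), ("conv", 64, 1, 10), ("deconv", 128, 2, 2)],
}

def trace(layers, size):
    """Return (output size, output channels, smallest size inside the bypass)."""
    smallest, channels = size, 1
    for kind, ch, stride, repeat in layers:
        for _ in range(repeat):
            size = conv(size, stride) if kind == "conv" else deconv(size, stride)
            smallest = min(smallest, size)
            channels = ch
    return size, channels, smallest

patch = 76  # training patch width in pixels
results = {name: trace(layers, patch) for name, layers in BYPASSES.items()}
# Concatenation stacks the channels of the three bypass outputs.
fused_channels = sum(ch for _, ch, _ in results.values())
```

For a 76-pixel patch, the second and third bypasses reach minimum widths of 38 and 19 pixels (factors of 2 and 4) before returning to 76, so the three outputs can be concatenated. Under these assumptions, input sizes divisible by 4 are restored exactly.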

Figure 2.

DCARnet structure. Input to the network consists of a single OCTA en face image (A). The network is composed of a shallow feature extraction module and a deep feature extraction module, as well as a reconstruction module. The different-colored cubes represent convolutional layers or deconvolutional layers with different parameters. The kernel size in all layers is 3 × 3. The width and height of the input image are represented by w and h, respectively. The stride is represented by s. C represents concatenation operation. The output reconstructed angiogram shows higher contrast between vessels and background (B).

Training

Data Preprocessing

To acquire the input and the ground truth for training DCARnet, we up-sampled the 6-mm × 6-mm SVC (Fig. 3B), ICP, and DCP angiograms (Fig. 3D) to achieve the same scale as a 3-mm × 3-mm scan (Figs. 3A, 3C). We then registered the original 3-mm × 3-mm scan to the up-sampled 6-mm × 6-mm angiograms using the relatively noise-free and vasculature-rich SVC slabs12; the same transformations were applied to the deeper ICP and DCP slabs. Next, we cropped the maximum-area inscribed rectangle from registered 3-mm × 3-mm and 6-mm × 6-mm angiograms (Fig. 3E). The cropped 3-mm × 3-mm angiograms serve as the ground truth (Fig. 3F), and the cropped central 6-mm × 6-mm angiograms (Fig. 3G) are the inputs used for training DCARnet.
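The scale matching in this step can be sketched as follows, under simplifying assumptions. Nearest-neighbour resampling stands in for whatever interpolation was actually used (not stated here), registration is omitted, and a plain central crop replaces the maximum-area inscribed rectangle. All function names are hypothetical.

```python
def upsampled_size(n_wide, fov_wide_mm, n_ref, fov_ref_mm):
    """Pixels the wide scan needs to match the reference sampling density.

    n_wide is unused in the calculation; it is kept to document the source size.
    """
    density_ref = n_ref / fov_ref_mm          # A-lines per mm in the reference scan
    return round(density_ref * fov_wide_mm)

def resize_nearest(image, new_size):
    """Nearest-neighbour resampling of a square image given as a list of rows."""
    old = len(image)
    idx = [min(old - 1, int(i * old / new_size)) for i in range(new_size)]
    return [[image[r][c] for c in idx] for r in idx]

def central_crop(image, crop):
    """Cut a crop x crop window from the centre of a square image."""
    start = (len(image) - crop) // 2
    return [row[start:start + crop] for row in image[start:start + crop]]

# A 6-mm scan (400 A-lines) brought to the scale of a 3-mm scan (304 A-lines).
size = upsampled_size(400, 6.0, 304, 3.0)
scan6 = [[r * 400 + c for c in range(400)] for r in range(400)]  # toy pixel IDs
up = resize_nearest(scan6, size)
center = central_crop(up, 304)
```

With these numbers the 6-mm scan is resampled to 608 pixels per side, and its central 304 × 304 window covers the same 3-mm field as the reference scan, starting at original pixel 100 of 400.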

Figure 3.

Data preprocessing flowchart. (A) The original 3-mm × 3-mm SVC angiogram. (B) Up-sampled 6-mm × 6-mm SVC angiogram. (C) The original 3-mm × 3-mm DCP angiogram. (D) Up-sampled 6-mm × 6-mm DCP angiogram. (E) Registered DCP angiograms. The yellow box is the largest inscribed rectangle. (F) Cropped original 3-mm × 3-mm DCP angiogram. (G) Cropped central 3-mm × 3-mm section from the 6-mm × 6-mm DCP angiogram. Preprocessing for ICP angiograms follows the same pattern.

Loss Function

The loss function in this work consisted of the mean square error36 (MSE):

$$\mathrm{MSE} = \frac{1}{wh}\sum_{i=1}^{w}\sum_{j=1}^{h}\left[D_T(i,j) - D_P(i,j)\right]^2 \qquad (1)$$

the structural similarity index (SSIM),37

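$$\mathrm{SSIM} = \frac{\left(2\mu_{D_T}\mu_{D_P} + C_1\right)\left(2\sigma_{D_T D_P} + C_2\right)}{\left(\mu_{D_T}^2 + \mu_{D_P}^2 + C_1\right)\left(\sigma_{D_T}^2 + \sigma_{D_P}^2 + C_2\right)} \qquad (2)$$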

and root mean square (RMS) contrast loss index38 (Closs)

| (3) |

where w, h refer to the width and height of the image, respectively; $D_T(i, j)$ and $D_P(i, j)$ are the pixel values at position (i, j); $\mu_{D_T}$ and $\mu_{D_P}$ are the mean pixel values; $\sigma_{D_T}$ and $\sigma_{D_P}$ are the standard deviations; and $\sigma_{D_T D_P}$ is the covariance of the ground truth image $D_T$ and predicted image $D_P$. The values of the constants C1 = 0.01 and C2 = 0.03 were taken from the literature.37 The full loss function is then a linear combination of these components:

| (4) |

Each loss term provides complementary information during training. The MSE measures the pixel-wise difference between the ground truth and the output image; SSIM is based on three comparison measurements (reflectance amplitude, contrast, and structure); and Closs is used to further enhance the contrast of the image. Although MSE can suppress large errors during training, it does not consider the relationship between adjacent pixels and often produces excessively smooth textures. Using MSE alone, it is almost impossible to capture high-frequency texture details to generate satisfactory perceptual results.39 SSIM considers the similarity in three prominent perceptual categories in human vision: brightness, contrast, and shape. Even though SSIM considers the contrast of the overall structure, Closs is used to further enhance the contrast so that the produced image will not be overly smooth; results using a contrast loss calculation preserve more details. The combination of these loss functions makes up for the shortcomings of any single loss.
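The quantities behind the three loss terms can be illustrated with a small sketch. It is not the training loss itself: the exact form of Closs and the weights of the linear combination are not reproduced here. SSIM is computed from whole-image statistics, following the symbol definitions above, whereas SSIM is commonly evaluated over local windows. The function names are hypothetical.

```python
import math

def _flatten(img):
    return [p for row in img for p in row]

def mse(d_t, d_p):
    """Mean square error between ground truth d_t and prediction d_p."""
    t, p = _flatten(d_t), _flatten(d_p)
    return sum((a - b) ** 2 for a, b in zip(t, p)) / len(t)

def ssim_global(d_t, d_p, c1=0.01, c2=0.03):
    """SSIM from whole-image means, variances, and covariance (no sliding window)."""
    t, p = _flatten(d_t), _flatten(d_p)
    n = len(t)
    mu_t, mu_p = sum(t) / n, sum(p) / n
    var_t = sum((a - mu_t) ** 2 for a in t) / n
    var_p = sum((b - mu_p) ** 2 for b in p) / n
    cov = sum((a - mu_t) * (b - mu_p) for a, b in zip(t, p)) / n
    return ((2 * mu_t * mu_p + c1) * (2 * cov + c2)) / (
        (mu_t ** 2 + mu_p ** 2 + c1) * (var_t + var_p + c2))

def rms_contrast(img):
    """Root mean square contrast: standard deviation of the pixel values."""
    x = _flatten(img)
    mu = sum(x) / len(x)
    return math.sqrt(sum((v - mu) ** 2 for v in x) / len(x))

truth = [[0.0, 1.0], [1.0, 0.0]]        # sharp toy pattern
blurred = [[0.25, 0.75], [0.75, 0.25]]  # same pattern, smoothed
```

In this toy case the smoothed image keeps the same structure but has half the RMS contrast of the sharp one (0.25 versus 0.5), which is the kind of over-smoothing a contrast term is meant to penalize.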

Participants

In this study, the whole data set consists of 274 eyes scanned from 180 participants. Each eye was scanned once using a 3-mm × 3-mm and once using a 6-mm × 6-mm scan pattern. The training data set includes 140 paired 3-mm × 3-mm and 6-mm × 6-mm ICP and DCP angiograms. The validation data set includes 33 paired 3-mm × 3-mm and 6-mm × 6-mm ICP and DCP scans. The remaining 101 paired 3-mm × 3-mm and 6-mm × 6-mm ICP and DCP scans were reserved for testing. The training data includes eyes with DR (n = 125) and healthy eyes (n = 15). The validation data set consists of 30 eyes with DR and 3 healthy eyes. The performance of this network on test data was separately evaluated on eyes with DR (n = 75) and healthy controls (n = 26). Fifteen healthy eyes from the test set were used to verify whether our algorithm generates false flow signal. In addition, to compare the performance of eight repeated en face averaging with our algorithm, we used four healthy eyes from the test set.

Training Parameters

The training data set was expanded by several data augmentation methods, including horizontal flipping, vertical flipping, transposition, and 90-degree rotation. To reduce computation cost, 76 × 76-pixel patches were used for training. Thus, after data augmentation, the training data set included 1400 images that were further divided into 34,955 patches extracted by cropping the ICP and DCP angiograms with a stride of 38. The validation data set of 330 images was also decomposed into 9900 patches by randomly cropping each image into 30 patches. As DCARnet is a fully convolutional neural network, images of arbitrary size can be input into DCARnet during testing. Thus, we input the entire image into the model for testing. We used an Adam optimizer with a learning rate of 0.01 to train DCARnet. The training batch size was 128. We performed 10 epochs of training to get an optimal model. DCARnet was implemented in Python 3.6 with Keras (TensorFlow backend) on a PC with 32 GB of RAM and an Intel i9 CPU, as well as two GeForce GTX 1080 Ti graphics cards (NVIDIA, Santa Clara, California, USA).
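The data set sizes quoted above can be checked with a short sketch of the augmentation and patch extraction. It assumes that each angiogram contributes the original plus four augmented copies, which is inferred from 140 eyes × 2 plexuses × 5 = 1400 images, and that the rotation is clockwise; neither detail is stated explicitly. The function names are hypothetical.

```python
def augment(image):
    """Return the original image plus four augmented copies."""
    hflip = [row[::-1] for row in image]
    vflip = image[::-1]
    transposed = [list(col) for col in zip(*image)]
    rot90 = [list(col) for col in zip(*image[::-1])]  # 90 degrees clockwise (assumed)
    return [image, hflip, vflip, transposed, rot90]

def patch_origins(height, width, patch=76, stride=38):
    """Top-left corners of all full patches taken at a regular stride."""
    rows = range(0, height - patch + 1, stride)
    cols = range(0, width - patch + 1, stride)
    return [(r, c) for r in rows for c in cols]

variants = augment([[1, 2], [3, 4]])

n_train_images = 140 * 2 * len(variants)      # eyes x plexuses x variants
n_val_images = 33 * 2 * len(variants)
n_val_patches = n_val_images * 30             # 30 random crops per image
n_full_frame = len(patch_origins(304, 304))   # patches in an uncropped 304 x 304 image
```

A stride of 38 gives 50% overlap between neighbouring 76-pixel patches. An uncropped 304 × 304 image would yield 49 patches; the reported average of about 25 patches per training image is consistent with the registered and cropped angiograms being smaller than the full frame.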

Evaluation of Noise Intensity, Vascular Connectivity, and Contrast

We evaluated DCARnet's performance by measuring noise intensity:

| (5) |

where D(i, j) is the pixel value at position (i, j) of the angiograms and R is a manually delineated circle inscribing the foveal avascular zone (FAZ). We also examined vascular connectivity.12 The angiograms were binarized using a global adaptive threshold method, then skeletonized. Connected flow pixels were defined as any contiguous flow region with a length of at least 5 (including diagonal connections), and the vascular connectivity was defined as the ratio of the number of connected flow pixels to the total number of pixels on a vascular skeleton map. We also evaluated reconstructed angiograms using Weber contrast, a metric used to evaluate a small fraction of features (capillaries) within a uniform background (speckled background). The foreground and background pixel positions were obtained from the binarized angiograms. Symbolically, Weber contrast is

$$\text{Weber contrast} = \frac{D_f - D_b}{D_b} \qquad (6)$$

where Df is the averaged luminance of foreground image and Db is the averaged luminance of background image.
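A minimal sketch of the connectivity and Weber contrast metrics follows. It takes an already binarized mask and an already skeletonized map as input, so thresholding and skeletonization are outside its scope, and it interprets "length of at least 5" as a connected segment containing at least 5 skeleton pixels, which is an assumption. The function names are hypothetical.

```python
from collections import deque

def weber_contrast(image, mask):
    """(Df - Db) / Db, with foreground and background selected by a binary mask."""
    fg = [p for row, mrow in zip(image, mask) for p, m in zip(row, mrow) if m]
    bg = [p for row, mrow in zip(image, mask) for p, m in zip(row, mrow) if not m]
    d_f, d_b = sum(fg) / len(fg), sum(bg) / len(bg)
    return (d_f - d_b) / d_b

def vascular_connectivity(skeleton, min_length=5):
    """Fraction of skeleton pixels lying in 8-connected segments of >= min_length pixels."""
    h, w = len(skeleton), len(skeleton[0])
    seen = [[False] * w for _ in range(h)]
    total = connected = 0
    for r in range(h):
        for c in range(w):
            if not skeleton[r][c] or seen[r][c]:
                continue
            # Breadth-first search over the 8-connected neighbourhood.
            seen[r][c] = True
            queue, size = deque([(r, c)]), 0
            while queue:
                y, x = queue.popleft()
                size += 1
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        ny, nx = y + dy, x + dx
                        if (0 <= ny < h and 0 <= nx < w
                                and skeleton[ny][nx] and not seen[ny][nx]):
                            seen[ny][nx] = True
                            queue.append((ny, nx))
            total += size
            if size >= min_length:
                connected += size
    return connected / total if total else 0.0

skeleton = [
    [1, 1, 1, 1, 1, 0, 0],   # one 5-pixel segment (counts as connected)
    [0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 1, 1],   # 2-pixel fragment
    [1, 0, 0, 0, 0, 0, 0],   # isolated pixel
]
connectivity = vascular_connectivity(skeleton)
contrast = weber_contrast([[10, 50], [10, 50]], [[0, 1], [0, 1]])
```

In the toy skeleton, 5 of the 8 flow pixels belong to a segment of sufficient length, so the connectivity is 0.625; fragmented vessels lower this ratio.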

Results

Assessment of False Flow Signal

Due to their depth within the retina, ICP and DCP angiograms include relatively strong noise that may mislead image enhancement algorithms into generating a false flow signal. To determine whether this is an issue with DCARnet, we examined 3-mm × 3-mm high-quality ICP and DCP angiograms from 15 healthy eyes. These angiograms were denoised using simple Gabor and median filters (Fig. 4A1). We then added simulated Gaussian noise with different parameters (μ, σ) to these denoised angiograms, where μ and σ are the mean value and the standard deviation of the Gaussian distribution, respectively. In all, we obtained 6000 3-mm × 3-mm ICP and DCP angiograms (Figs. 4B1–D1) with different simulated noise intensities (0–2100; equation (5)) by changing μ and σ separately in increments of 0.005 from 0.001 to 1 and from 0.001 to 0.05. Finally, the denoised angiograms and angiograms with simulated noise were input to DCARnet (Figs. 4A2–D2). To determine if the reconstructed angiograms produced false flow, we calculated

| (7) |

Figure 4.

False flow signal characterized with simulated noise. (A1–D1) DCP angiograms with different simulated noise intensities. (A2–D2) DCARnet output for angiograms (A1–D1). Only at the highest simulated noise intensity is false flow generated.

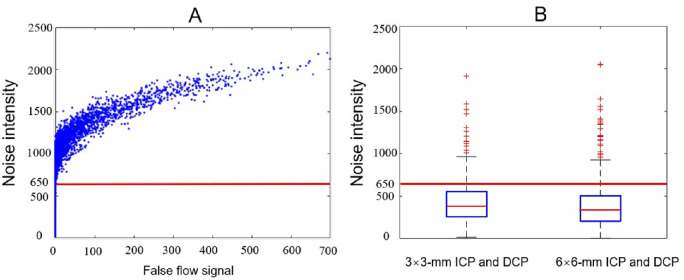

Because the FAZ is avascular, anatomically the value of IFalse should vanish. The results show that DCARnet did not produce false flow signal when noise intensity was under 650, which is above the noise intensity measured in the original angiograms (3 × 3 mm, 427.35 ± 246.62; 6 × 6 mm, 401.63 ± 304.49; Fig. 5).

Figure 5.

False flow signal and noise intensity. (A) The relationship between false flow signal and noise intensity. The blue points indicate six thousand 3-mm × 3-mm ICP and DCP angiograms with different simulated noise intensities. The red line represents a cutoff value (INoise = 650) under which no false flow signal was generated. (B) Boxplots of the noise intensity measured in original 3-mm × 3-mm and 6-mm × 6-mm ICP and DCP angiograms from all data sets. The average noise intensities of original 3-mm × 3-mm and 6-mm × 6-mm angiograms are below 650.

Performance on DR Angiograms

Studies have shown that OCTA can detect pathology related to DR in the deep capillary plexus.40 Microaneurysms and dilated capillaries are closely associated with DR progression.13,41,42 Thus, a basic requirement for image reconstruction is that pathologies such as these can be preserved. Visual inspection of DCARnet's output from eyes with severe DR reveals these pathologic vascular abnormalities in both ICP (Fig. 6) and DCP (Fig. 7) angiograms. We also compared the noise intensity, connectivity, and Weber contrast of angiograms from eyes with DR and healthy eyes. As with healthy eyes, DCARnet significantly reduced noise intensity in eyes with DR (Fig. 8A); the connectivity of the reconstructed angiograms was also greatly improved (Fig. 8B), and their contrast was significantly enhanced (Fig. 8C).

Figure 6.

Qualitative performance on representative ICP scans. Shown are (A) a healthy eye, (B) an eye diagnosed with mild nonproliferative diabetic retinopathy (NPDR), (C) an eye diagnosed with moderate NPDR, and (D) an eye diagnosed with severe proliferative diabetic retinopathy (PDR). Compared to original, low-resolution 6-mm × 6-mm ICP angiograms (row 1), clearer capillary details and suppressed background can be perceived in DCARnet output (row 2). Dilated capillaries (green arrows) are visible in the reconstructed and original angiograms, demonstrating that DCARnet preserves this vascular pathology.

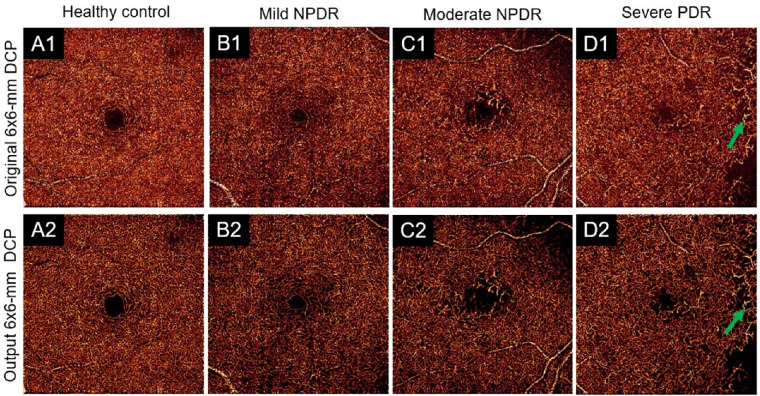

Figure 7.

Qualitative performance on representative DCP scans. Shown are (A) a healthy eye, (B) an eye diagnosed with mild nonproliferative diabetic retinopathy (NPDR), (C) an eye diagnosed with moderate NPDR, and (D) an eye diagnosed with severe proliferative diabetic retinopathy (PDR). Compared to original, low-resolution 6-mm × 6-mm DCP angiograms (row 1), clearer capillary details and suppressed background can be perceived in DCARnet output (row 2). Dilated capillaries (green arrows) are visible in the reconstructed and original angiograms, demonstrating that DCARnet preserves this vascular pathology.

Figure 8.

Quantitative evaluation in healthy eyes and eyes with DR. (A) Noise intensity, (B) connectivity, and (C) contrast in the original scans and DCARnet output. Significant improvement from the original images was achieved by all metrics, as evaluated by paired t-test with Holm–Bonferroni correction.

Comparison to Other Methods

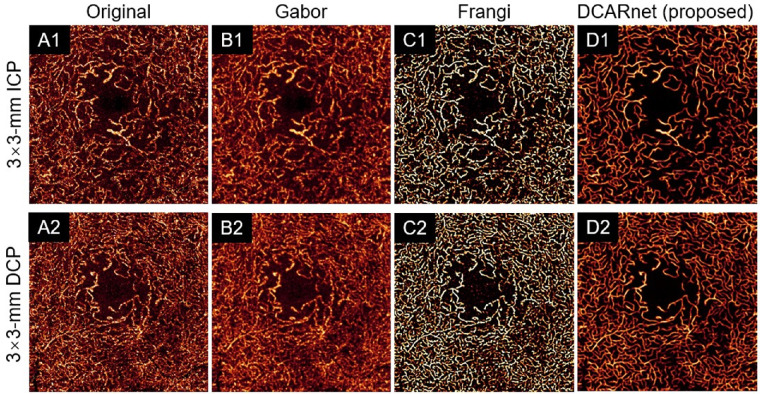

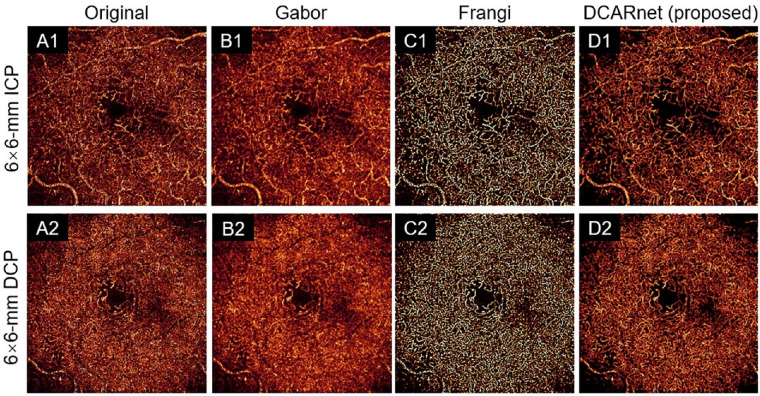

The quality of OCT angiograms can be improved by many image-filtering methods, including Frangi19 and Gabor20 filters. We applied these methods to the original 3-mm × 3-mm (Fig. 9) and 6-mm × 6-mm (Fig. 10) ICP and DCP angiograms. All these methods are based on different filters and require parameter tuning. We selected these parameters by searching for the values that yielded the best results for the performance metrics (noise intensity and connectivity) discussed above. Compared to the original angiograms, the Gabor filter significantly reduced noise intensity (Fig. 11A) and improved connectivity (Fig. 11B) but greatly reduced the contrast in both 3-mm × 3-mm and 6-mm × 6-mm ICP and DCP angiograms (Fig. 11C). The Frangi filter greatly improved connectivity (Fig. 11B) and enhanced contrast (Fig. 11C) but did not significantly decrease noise intensity in 6-mm × 6-mm ICP and DCP angiograms (Fig. 11A). The Frangi filter may also produce false flow signal. Compared to the original angiograms, DCARnet significantly reduced noise intensity, improved connectivity, and enhanced contrast in both 3-mm × 3-mm and 6-mm × 6-mm ICP and DCP angiograms. Compared to other methods, the angiograms reconstructed by DCARnet showed the lowest noise intensity and best connectivity in both 3-mm × 3-mm and 6-mm × 6-mm ICP and DCP angiograms.

Figure 9.

The 3-mm × 3-mm angiogram enhancement of the ICP (top row, A1–D1) and DCP (bottom row, A2–D2) from an eye with DR using various methods. The original images (A), Gabor filtered images (B), Frangi filtered images (C), and DCARnet reconstructed images (D) are demonstrated. Compared to the original and Gabor filtered images, DCARnet produces images with visibly lower background levels. While the Frangi filtered images also achieve high contrast, note the artifactual signal generated in the FAZ, which is absent in DCARnet's output.

Figure 10.

The 6-mm × 6-mm angiogram enhancement of the ICP (top row, A1–D1) and DCP (bottom row, A2–D2) from an eye with DR using various methods. The original images (A), Gabor filtered images (B), Frangi filtered images (C), and DCARnet reconstructed images (D) are demonstrated. Vascular detail is visibly most clear in the DCARnet output.

Figure 11.

Quantitative evaluation of different methods. (A) Noise intensity, (B) connectivity, and (C) contrast compared between original scans and scans processed by different methods. A significant difference between the original and reconstructed images was achieved by all metrics, evaluated by paired t-test with Holm–Bonferroni correction. DCARnet achieves superior performance in noise intensity and connectivity.

Comparison to Multiple En Face Image Averaging

Multiple B-scan averaging is widely used to enhance the image quality of OCT angiograms. This technique also can reduce noise and improve the continuity of blood vessel segments or fragments.17,43 In addition to the filters discussed above, we also compared the performance of the averaged en face images with that of the original images reconstructed by DCARnet.

We evaluated the performance of 8-scan averaged en face images and the images reconstructed by DCARnet. SVC angiograms (Figs. 12A1–A8) were first registered, and the registration information of SVC was transferred to ICP or DCP angiograms (Figs. 12B1–B8). These registered angiograms were then averaged to generate the 8-scan averaged en face images (Fig. 12B). As a comparison, each of the original angiograms was reconstructed by DCARnet (Figs. 12C1–C8). We found 8-scan averaging was able to reduce noise intensity and increase connectivity but reduced contrast, whereas the results from our algorithm show low noise intensity, good connectivity, and strong contrast. More important, DCARnet just needs a single image to reconstruct a high-definition image, which dramatically reduces the time constraint on data collection.

Figure 12.

Comparison of 8-scan averaged en face image and angiograms reconstructed by DCARnet. (A1–A8) The 3-mm × 3-mm angiogram of the SVC, (B1–B8) DCP, and (C1–C8) reconstructed DCP from a healthy eye. Scans were registered using the SVC to produce 8-scan averaged composite images. (A) The 8-scan averaged SVC en face images. (B) The 8-scan averaged DCP en face images. DCARnet's output does not include the blurring effect generated by the averaging approach.

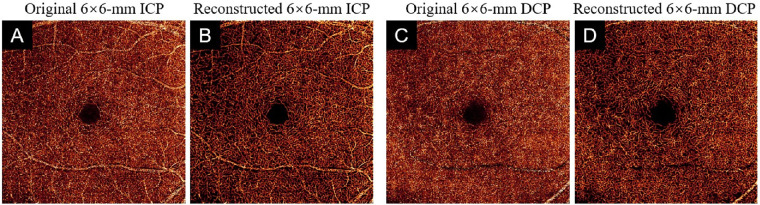

Performance on Scans from Different Devices

We also tested the robustness and generalization of our algorithm on 38 independent scans from a different device. We input 6-mm × 6-mm ICP and DCP angiograms from a commercial 120-kHz spectral domain OCT system (Solix; Optovue, Inc.) with a 15-µm lateral resolution and a 5-µm axial resolution to DCARnet (Fig. 13). The reconstructed scans from the Solix device by DCARnet also showed low noise intensity, good connectivity, and strong Weber contrast.

Figure 13.

The output of DCARnet on 6-mm × 6-mm ICP and DCP angiograms from a Solix device. (A) Original 6-mm × 6-mm ICP angiogram. (B) Reconstructed 6-mm × 6-mm ICP angiogram. (C, D) Equivalent image from a 6-mm × 6-mm DCP angiogram. Vascular detail is more apparent in images reconstructed from data from the Solix instrument.

Discussion

Assessment of plexus-specific pathology in the ICP and DCP using projection-resolved OCTA is helpful in assessing DR44,45 and other retinal vascular diseases.4 However, OCTA data from the ICP and DCP are especially susceptible to image artifacts due to the attenuated flow signal.46–48 Strong background noise and vessel fragments are common in ICP and DCP angiograms, affecting the quantitative and qualitative assessment of OCTA. Lower sampling densities used in wider-field-of-view OCTA further exacerbate these problems. Researchers have proposed many methods to enhance image quality. Traditional approaches with filters are less effective for wide-field-of-view OCTA with lower sampling density,49 and averaging multiple en face images requires long acquisition time, increasing the probability of image artifacts caused by eye movements. However, the lack of accessible algorithms to enhance ICP and DCP OCTA en face images renders any possible advantage to be gained from high-resolution OCT angiograms inaccessible; therefore, we provide an open-source platform (https://github.com/octangio/DCARnet) that we hope will be of use to other researchers who may benefit from such enhancement. This study shows that a deep learning–based image enhancement approach, whether applied to eyes with DR or healthy eyes, achieves lower noise intensity, better connectivity, and stronger contrast than these alternative approaches without extending scan acquisition time.

Researchers have used very deep and complicated network structures to improve performance in natural image super-resolution reconstruction.39,50,51 However, with increased depth, networks can encounter more problems during training, such as gradient explosion/vanishing and overfitting.52,53 Furthermore, as the number of network layers increases, the features in the input image may be lost. Our proposed solution, DCARnet, is a simple, efficient, and easy-to-train network that aims to learn an end-to-end mapping function between the undersampled 6-mm × 6-mm angiograms and 3-mm × 3-mm angiograms with proper sampling density. Feature maps at the original resolution extracted more detailed information, such as vascular morphology. Down-sampled feature maps enhanced the network's denoising ability. DCARnet fused multiscale features to enhance representational ability, preserve details, and improve tolerance to artifacts, low signal quality, and other disturbances. We used the validation data set to select the best model trained on the training data set. A test data set, completely independent of the training and validation data sets, was then used to evaluate our algorithm. Even though DCARnet is not a very deep or complicated network, it performed well.

A significant concern in image reconstruction is the introduction of structure produced from noise that may mimic a true vascular signal. This is called a false signal and should be considered in image reconstruction projects. As noted, this is an issue for handcrafted algorithms such as a Frangi filter.19 The angiograms reconstructed by DCARnet did not produce false flow signal in angiograms with normal noise intensities. However, for those angiograms with noise intensity higher than 650, which is above the average noise intensity of this data set, DCARnet may produce some artifacts. As the performance of OCT systems improves, the signal-to-noise ratios in OCTA images will grow. This issue will therefore be less of a concern in future OCTA devices.

DCARnet was designed to remove background noise and reconstruct high-resolution capillary details. Our algorithm therefore does not suppress other artifacts due to projection or motion. For this reason, DCARnet is best used in conjunction with other artifact removal algorithms such as the reflectance-based algorithm for projection-resolved OCTA7 and the regression-based algorithm for bulk motion subtraction in OCTA.8 DCARnet also does not compensate for shadow artifacts to recover capillaries in the regions severely affected by shadows. If no capillary signal is detected by OCTA, DCARnet has no way to recover it. We also tested and demonstrated the strong generalization of this network on independent scans with similar sampling density acquired by a different device than the one used to acquire our training data.

We previously reported on High-resolution Angiogram Reconstruction Network (HARNet), which enhances SVC image resolution, and we now propose DCARnet to enhance ICP and DCP angiograms. Although a single, unified network theoretically could provide denoising simultaneously in the SVC, ICP, and DCP, anatomic differences between the layers make this difficult. Compared to SVC angiograms, ICP and DCP angiograms have denser capillaries and stronger background noise. If SVC, ICP, and DCP angiograms are trained together, the features specific to ICP and DCP angiograms may be introduced into SVC angiograms and vice versa. In addition, smaller blood vessels in the SVC may be lost due to excessive denoising. Conversely, noise will be misjudged as capillaries in ICP and DCP angiograms, because the noise intensity in the ICP and DCP is much higher than that in the SVC.

Conclusion

In summary, we developed a high-resolution reconstruction network to enhance ICP and DCP angiograms. DCARnet significantly reduced noise intensity and improved connectivity in 3-mm × 3-mm and 6-mm × 6-mm ICP and DCP angiograms without producing false flow signal. The enhanced 3-mm × 3-mm or 6-mm × 6-mm angiograms may improve qualitative and quantitative analysis of these angiograms.

Acknowledgments

Supported by grants from the National Institutes of Health (R01 EY027833, R01 EY024544, R01 EY031394, P30 EY010572, T32 EY023211), an unrestricted departmental funding grant and the William & Mary Greve Special Scholar Award from Research to Prevent Blindness (New York, NY), and the BrightFocus Foundation (G2020168).

Disclosure: M. Gao, None; T.T. Hormel, None; J. Wang, None; Y. Guo, None; S.T. Bailey, None; T.S. Hwang, None; Y. Jia, Optovue (F, P), Optos (P)

References

- 1. Jia Y, Tan O, Tokayer J, et al. Split-spectrum amplitude-decorrelation angiography with optical coherence tomography. Opt Express. 2012; 20(4): 4710–4725.

- 2. Jia Y, Bailey ST, Hwang TS, et al. Quantitative optical coherence tomography angiography of vascular abnormalities in the living human eye. Proc Natl Acad Sci U S A. 2015; 112(18): E2395–E2402.

- 3. Gao SS, Jia Y, Zhang M, et al. Optical coherence tomography angiography. Invest Ophthalmol Vis Sci. 2016; 57(9): OCT27–OCT36.

- 4. Patel RC, Wang J, Hwang TS, et al. Plexus-specific detection of retinal vascular pathologic conditions with projection-resolved OCT angiography. Ophthalmol Retin. 2018; 2(8): 816–826.

- 5. Hormel TT, Jia Y, Jian Y, et al. Plexus-specific retinal vascular anatomy and pathologies as seen by projection-resolved optical coherence tomographic angiography. Prog Retin Eye Res. 2020; 80: 100878.

- 6. Zhang M, Hwang TS, Campbell JP, et al. Projection-resolved optical coherence tomographic angiography. Biomed Opt Express. 2016; 7(3): 816–828.

- 7. Wang J, Zhang M, Hwang TS, et al. Reflectance-based projection-resolved optical coherence tomography angiography. Biomed Opt Express. 2017; 8(3): 1536–1548.

- 8. Camino A, Jia Y, Liu G, Wang J, Huang D. Regression-based algorithm for bulk motion subtraction in optical coherence tomography angiography. Biomed Opt Express. 2017; 8(6): 3053–3066.

- 9. You QS, Guo Y, Wang J, et al. Detection of clinically unsuspected retinal neovascularization with wide-field optical coherence tomography angiography. Retina. 2020; 40(5): 891–897.

- 10. Wei X, Hormel TT, Guo Y, Hwang TS, Jia Y. High-resolution wide-field OCT angiography with a self-navigation method to correct microsaccades and blinks. Biomed Opt Express. 2020; 11(6): 3234–3245.

- 11. Hormel TT, Huang D, Jia Y. Artifacts and artifact removal in optical coherence tomographic angiography. Quant Imaging Med Surg. 2021; 11(3): 1120–1133.

- 12. Gao M, Guo Y, Hormel TT, Sun J, Hwang TS, Jia Y. Reconstruction of high-resolution 6×6-mm OCT angiograms using deep learning. Biomed Opt Express. 2020; 11(7): 3585–3600.

- 13. Hwang TS, Miao Z, Bhavsar K, et al. Visualization of 3 distinct retinal plexuses by projection-resolved optical coherence tomography angiography in diabetic retinopathy. JAMA Ophthalmol. 2016; 134(12): 1411–1419.

- 14. Tsuboi K, Guo Y, Wang J, Kamei M, et al. Association of dilated capillary area and anti-vascular endothelial growth factor treatment requirement for macular edema in branch retinal vein occlusion. Invest Ophthalmol Vis Sci. 2020; 61(9): PB003.

- 15. Tan B, Wong A, Bizheva K. Enhancement of morphological and vascular features in OCT images using a modified Bayesian residual transform. Biomed Opt Express. 2018; 9(5): 2394–2406.

- 16. Uji A, Balasubramanian S, Lei J, Baghdasaryan E, Al-Sheikh M, Sadda SVR. Impact of multiple en face image averaging on quantitative assessment from optical coherence tomography angiography images. Ophthalmology. 2017; 124(7): 944–952.

- 17. Uji A, Balasubramanian S, Lei J, et al. Multiple enface image averaging for enhanced optical coherence tomography angiography imaging. Acta Ophthalmol. 2018; 96(7): E820–E827.

- 18. Camino A, Zhang M, Dongye C, et al. Automated registration and enhanced processing of clinical optical coherence tomography angiography. Quant Imaging Med Surg. 2016; 6(4): 391–401.

- 19. Frangi AF, Niessen WJ, Vincken KL, Viergever MA. Multiscale vessel enhancement filtering. Lect Notes Comput Sci. 1998; 1496: 130–137.

- 20. Soares JVB, Leandro JJG, Cesar RM, Jelinek HF, Cree MJ. Retinal vessel segmentation using the 2-D Gabor wavelet and supervised classification. IEEE Trans Med Imaging. 2006; 25(9): 1214–1222.

- 21. Hormel TT, Hwang TS, Bailey ST, et al. Artificial intelligence in OCT angiography [published online March 22, 2021]. Prog Retin Eye Res.

- 22. Kumar A, Kim J, Lyndon D, Fulham M, Feng D. An ensemble of fine-tuned convolutional neural networks for medical image classification. IEEE J Biomed Heal Informatics. 2017; 21(1): 31–40.

- 23. Wan S, Liang Y, Zhang Y. Deep convolutional neural networks for diabetic retinopathy detection by image classification. Comput Electr Eng. 2018; 72: 274–282.

- 24. Ronneberger O, Fischer P, Brox T. U-net: convolutional networks for biomedical image segmentation. Lect Notes Comput Sci. 2015; 9351: 234–241.

- 25. Guo Y, Camino A, Wang J, Huang D, Hwang TS, Jia Y. MEDnet, a neural network for automated detection of avascular area in OCT angiography. Biomed Opt Express. 2018; 9(11): 5147–5158.

- 26. Guo Y, Hormel TT, Xiong H, et al. Development and validation of a deep learning algorithm for distinguishing the nonperfusion area from signal reduction artifacts on OCT angiography. Biomed Opt Express. 2019; 10(7): 3257–3268.

- 27. Zang P, Wang J, Hormel TT, Liu L, Huang D, Jia Y. Automated segmentation of peripapillary retinal boundaries in OCT combining a convolutional neural network and a multi-weights graph search. Biomed Opt Express. 2019; 10(8): 4340–4352.

- 28. Wang J, Hormel TT, Gao L, et al. Automated diagnosis and segmentation of choroidal neovascularization in OCT angiography using deep learning. Biomed Opt Express. 2020; 11(2): 927.

- 29. Wang J, Hormel TT, You Q, et al. Robust non-perfusion area detection in three retinal plexuses using convolutional neural network in OCT angiography. Biomed Opt Express. 2020; 11(1): 330–345.

- 30. Guo Y, Hormel TT, Xiong H, Wang J, Hwang TS, Jia Y. Automated segmentation of retinal fluid volumes from structural and angiographic optical coherence tomography using deep learning. Transl Vis Sci Technol. 2020; 9(2): 54.

- 31. Guo Y, Hormel TT, Gao L, et al. Quantification of nonperfusion area in montaged widefield OCT angiography using deep learning in diabetic retinopathy. Ophthalmol Sci. 2021; 1(2): 100027.

- 32. Li M, Shen S, Gao W, Hsu W, Cong J. Computed tomography image enhancement using 3D convolutional neural network. Lect Notes Comput Sci. 2018; 11045 LNCS: 291–299.

- 33. Jiang Z, Huang Z, Qiu B, et al. Comparative study of deep learning models for optical coherence tomography angiography. Biomed Opt Express. 2020; 11(3): 1580–1597.

- 34. Guo Y, Camino A, Zhang M, et al. Automated segmentation of retinal layer boundaries and capillary plexuses in wide-field optical coherence tomographic angiography. Biomed Opt Express. 2018; 9(9): 4429–4442.

- 35. Hormel TT, Wang J, Bailey ST, Hwang TS, Huang D, Jia Y. Maximum value projection produces better en face OCT angiograms than mean value projection. Biomed Opt Express. 2018; 9(12): 6412–6424.

- 36. Horé A, Ziou D. Image quality metrics: PSNR vs. SSIM. In: Proceedings of the International Conference on Pattern Recognition, IEEE:Istanbul, Turkey, 2010: 2366–2369.

- 37. Wang Z, Bovik AC, Sheikh HR, Simoncelli EP. Image quality assessment: from error visibility to structural similarity. IEEE Trans Image Process. 2004; 13(4): 600–612.

- 38. Peli E. Contrast in complex images. J Opt Soc Am. 1990; 7(10): 2032–2040.

- 39. Ledig C, Theis L, Huszár F, et al. Photo-realistic single image super-resolution using a generative adversarial network. In: Proceedings of the 30th IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2017, IEEE:Hawaii, USA, 2017: 105–114.

- 40. Couturier A, Mané V, Bonnin S, et al. Capillary plexus anomalies in diabetic retinopathy on optical coherence tomography angiography. Retina. 2015; 35(11): 2384–2391.

- 41. Klein R, Meuer SM, Moss SE, Klein BEK. Retinal microaneurysm counts and 10-year progression of diabetic retinopathy. Arch Ophthalmol. 1995; 113(11): 1386–1391.

- 42. Sjølie AK, Klein R, Porta M, et al. Retinal microaneurysm count predicts progression and regression of diabetic retinopathy: post-hoc results from the DIRECT Programme. Diabet Med. 2010; 28(3): 345–351.

- 43. Mo S, Phillips E, Krawitz BD, et al. Visualization of radial peripapillary capillaries using optical coherence tomography angiography: the effect of image averaging. PLoS One. 2017; 12(1): e0169385.

- 44. Hwang TS, Jia Y, Gao SS, et al. Optical coherence tomography angiography features of diabetic retinopathy. Retina. 2015; 35(11): 2371–2376.

- 45. Hwang TS, Gao SS, Liu L, et al. Automated quantification of capillary nonperfusion using optical coherence tomography angiography in diabetic retinopathy. JAMA Ophthalmol. 2016; 134(4): 367–373.

- 46. Spaide RF, Fujimoto JG, Waheed NK. Image artifacts in optical coherence tomography angiography. Retina. 2015; 35(11): 2163–2180.

- 47. Say EAT, Ferenczy S, Magrath GN, Samara WA, Khoo CTL, Shields CL. Image quality and artifacts on optical coherence tomography angiography. Retina. 2017; 37(9): 1660–1673.

- 48. Ghasemi Falavarjani K, Al-Sheikh M, Akil H, Sadda SR. Image artefacts in swept-source optical coherence tomography angiography. Br J Ophthalmol. 2017; 101(5): 564–568.

- 49. Chlebiej M, Gorczynska I, Rutkowski A, et al. Quality improvement of OCT angiograms with elliptical directional filtering. Biomed Opt Express. 2019; 10(2): 1013–1031.

- 50. He K, Zhang X, Ren S, Sun J. Deep residual learning for image recognition. In: 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), IEEE:Las Vegas, USA, 2016: 770–778.

- 51. Tong T, Li G, Liu X, Gao Q. Image super-resolution using dense skip connections. In: Proceedings of the IEEE International Conference on Computer Vision, IEEE:Venice, Italy, 2017: 4799–4807.

- 52. Cogswell M, Ahmed F, Girshick R, Zitnick L, Batra D. Reducing overfitting in deep networks by decorrelating representations. arXiv preprint 2015, arXiv:1511.06068.

- 53. Hanin B. Which neural net architectures give rise to exploding and vanishing gradients? Adv Neural Inf Process Syst. 2018; 2018(iii): 582–591.