Abstract

Background

The whole brain is often included in the field of view of [18F]Fluorodeoxyglucose positron emission tomography ([18F]FDG-PET) scans of oncology patients, but in clinical practice the covered brain is typically screened for abnormalities by visual interpretation alone, without quantitative analysis. In this study, we aimed to develop a fully automated pipeline for quantitative interpretation of the brain volume in oncologic PET images.

Method

We retrospectively collected 500 oncologic [18F]FDG-PET scans for training and validation of the automated brain extractor. We trained the model to extract the brain volume using two manually drawn bounding boxes on maximal intensity projection images. ResNet-50, a 2-D convolutional neural network (CNN), was used for model training. The brain volume was automatically extracted using the CNN model and spatially normalized. To validate the trained model and demonstrate an application of this automated analytic method, we enrolled 24 subjects with small cell lung cancer (SCLC) and performed a voxel-wise two-sample T test for automatic detection of metastatic lesions.

Result

The deep learning-based brain extractor identified whether the whole brain was included with an accuracy of 98% in the validation set. The brain extraction performance, measured by the intersection-over-union of 3-D bounding boxes, was 72.9 ± 12.5% in the validation set. As an example application to automatically identify brain abnormalities, this approach identified the metastatic lesions in three of the four SCLC patients with brain metastasis.

Conclusion

Using the deep learning-based model, the brain volume was successfully extracted from whole-body PET. We suggest that this fully automated approach could be used for quantitative analysis of brain metabolic patterns to identify abnormalities during clinical interpretation of oncologic PET studies.

Keywords: Brain segmentation, Quantitative PET analysis, Deep learning, Convolutional neural network, FDG-PET, Brain FDG-PET

Background

[18F]fluorodeoxyglucose positron emission tomography ([18F]FDG-PET) plays a crucial role in tumor imaging [1, 2]. Its clinical applications include diagnosis of unknown tumors, staging, monitoring for recurrence, and assessment of therapy response [3]. In most PET centers, the routine protocol for oncologic [18F]FDG-PET is so-called torso imaging, covering the skull base to the mid-thigh [4]. However, in practice, covering the entire skull is often clinically useful, for example, to assess brain metastasis in tumor types with a high prevalence of brain metastasis, such as lung cancer [5–7]. Because FDG-PET is less sensitive than magnetic resonance (MR) imaging for metastatic lesions, the covered brain volume is typically assessed only by visual inspection in clinical practice. Thus, the role of oncologic [18F]FDG-PET in detecting brain lesions is underestimated in clinical practice [8]. Nevertheless, incidentally detected brain metastases are important for clinical decisions. Furthermore, brain metabolism, which reflects functional activity, is affected by chemotherapy as well as by the tumors themselves (e.g., paraneoplastic encephalitis), and these changes could affect outcome [9, 10]. Because functional imaging assesses the metabolism of the whole body as well as of the tumors, quantitative information on the brain covered in an oncologic FDG-PET study could be used to identify brain abnormalities as well as unexpected metastases. More specifically, with the aid of automated analytic methods such as statistical parametric mapping (SPM) [11, 12], brain PET data might improve the sensitivity for detecting incidental brain disorders by measuring regional metabolic abnormalities [13], such as those of focal-onset seizures [14] or Alzheimer’s disease (AD) [15], as well as brain metastasis [16].

Some studies have implemented deep learning-based approaches for automatic analysis of brain images, especially MR images. Because MR images typically cover only the head, these studies target segmentation of anatomic structures [17] or anatomically apparent lesions [18, 19]. Studies based on PET images have likewise targeted segmentation of metabolically active tumor lesions [20, 21]. However, to our knowledge, no study has targeted extracting the brain itself from images covering the whole body.

To achieve this goal, we implemented a deep learning model based on a convolutional neural network (CNN). CNNs have been successful in solving a variety of image-processing problems, including image classification, object detection, and segmentation [22, 23]. Much of this success has been in medical image processing [24, 25], including anatomical segmentation of the brain [26] and detection of tumorous lesions [27] in MR images.

In this study, we aimed to develop a fully automated quantitative analysis pipeline for the brain volume in a given oncologic PET image. To this end, a deep learning model was used to detect the location of the brain and to determine whether a given PET study included the whole brain. The detected brain was cropped and spatially normalized to a template brain. The automatically extracted and normalized brain volume could then be analyzed statistically, for example with SPM. As an example, we applied this pipeline to identify brain metastasis on whole-body FDG-PET.

Methods

Subjects

For the training and validation data of the automatic brain extractor, 500 whole-body [18F]FDG-PET scans were retrospectively collected. These PET scans were performed from June to July 2020 at a single center (age: 66.7 ± 3.4 years; M:F = 194:306). Scans for which the oncologic clinicians explicitly requested brain coverage were excluded from analysis to avoid bias in evaluating the accuracy of detecting whether the brain was included. Among the 500 cases, the primary site of malignancy was breast (19.8%), lung (18.6%), hematologic (14.2%), colorectal (9.4%), biliary (6.8%), ovary (5.4%), pancreas (5.2%), liver (5.0%), stomach (4.2%), thymus (3.6%), urinary tract (3.4%), soft tissue (3.0%), thyroid (0.6%), and unknown (0.8%).

As an independent test of the trained model and of the quantitative assessment of the extracted brain, FDG-PET images of patients with small-cell lung cancer (SCLC) were retrospectively collected. The scans were acquired from January 2014 to December 2017 at the same institution. To test whether our automated brain analysis pipeline identifies brain metastasis in SCLC patients, groups were defined according to the presence of brain metastasis. Four patients had brain metastasis confirmed by brain MRI at baseline and follow-up (age: 66.8 ± 6.5 years; M:F = 4:0). Twenty PET scans of patients without brain metastasis on baseline brain MRI served as controls (age: 71.2 ± 6.1 years; M:F = 17:3).

Image acquisition

As a routine protocol of FDG-PET, patients fasted for more than 4 h and were then intravenously injected with 5.18 MBq/kg of FDG. PET images were acquired 1 h after injection from the skull base to the proximal thigh using dedicated PET/CT scanners (Biograph mCT 40 or mCT 64, Siemens, Erlangen, Germany) for 1 min per bed position. Images were reconstructed using an ordered-subset expectation maximization algorithm (2 iterations and 21 subsets), and a Gaussian filter (FWHM 5 mm) was applied to reduce noise.

Deep learning model and training data for the brain extraction

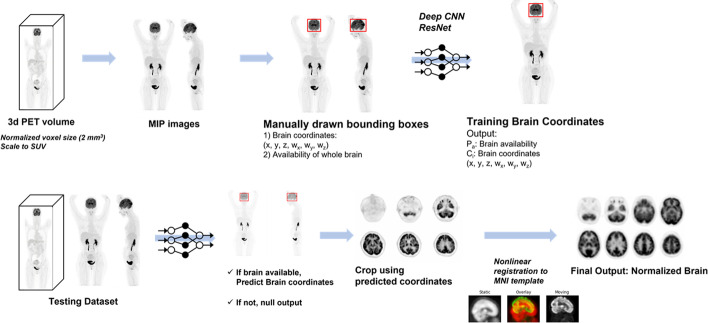

We designed the automatic brain extractor around two objectives: (1) determining whether a scan included the entire brain and (2) estimating a 3-D bounding box enclosing the brain volume. A brief outline of the study is shown in Fig. 1.

Fig. 1.

Brief outline of the automatic brain extraction. We trained the model with two manually drawn bounding boxes on maximal intensity projection (MIP) images. ResNet-50, a convolutional neural network (CNN), was used as the learning model. Internal validation of the model was performed. Finally, the brain volume was extracted and spatially normalized to the template space

To train the model, a maximum intensity projection (MIP) image was generated for each PET scan. For each of the 500 MIP images, 2-D bounding boxes were manually drawn on the anterior and lateral views. We used VGG Image Annotator (VIA) [28] to create the bounding boxes on the MIP images and obtain their coordinates. The coordinates of the two bounding boxes were merged to obtain the coordinates of a 3-D bounding box for each PET image. Images that did not contain the full extent of the brain were labeled separately as “not containing entire brain”.
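The projection and box-merging steps can be sketched as follows. This is a minimal illustration, not the authors' code: it assumes the PET volume is a NumPy array indexed as (left-right, anterior-posterior, inferior-superior), that each manually drawn box has already been converted from VIA's rectangle attributes (x, y, width, height) to corner form, and that the superior-inferior extent shared by both views is taken as the union of the two boxes. The text does not say how that overlap was resolved, and `make_mips` and `merge_boxes` are hypothetical names.

```python
import numpy as np


def make_mips(volume):
    """Anterior and lateral maximum intensity projections of a PET volume.

    Assumes `volume` is indexed as (x: left-right, y: anterior-posterior,
    z: inferior-superior).
    """
    anterior = volume.max(axis=1)  # collapse the AP axis -> (x, z) image
    lateral = volume.max(axis=0)   # collapse the LR axis -> (y, z) image
    return anterior, lateral


def merge_boxes(anterior_box, lateral_box):
    """Combine two 2-D boxes into one 3-D box (x0, y0, z0, x1, y1, z1).

    anterior_box = (x0, z0, x1, z1) drawn on the anterior MIP;
    lateral_box  = (y0, z0, y1, z1) drawn on the lateral MIP.
    Both views contain the z axis; this sketch takes the union of the two
    z ranges (an assumption; averaging them would be an equally valid choice).
    """
    ax0, az0, ax1, az1 = anterior_box
    ly0, lz0, ly1, lz1 = lateral_box
    return (ax0, ly0, min(az0, lz0), ax1, ly1, max(az1, lz1))
```

Taking the union errs on the side of keeping brain tissue inside the box when the two annotations disagree slightly about the vertex or skull base.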

The anterior and lateral MIP images were converted to square matrices by zero-padding and resized to 224 × 224 using bilinear interpolation. Pixel values represented the standardized uptake value (SUV). To prepare the CNN inputs, pixel values were divided by 30, because most voxels in a PET volume have values below SUV 30 (urine excepted), and then multiplied by 255, giving a range of approximately 0 to 255.
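A sketch of this input preparation, assuming each MIP is a 2-D NumPy array of SUV values. Centering the image within the zero-padding and using align-corners bilinear sampling are our assumptions (the text specifies only zero-padding to a square and bilinear interpolation to 224 × 224), and `pad_to_square`, `resize_bilinear`, and `preprocess_mip` are hypothetical names.

```python
import numpy as np

SUV_MAX = 30.0  # from the text: most voxels are below SUV 30 (urine excepted)
TARGET = 224    # ResNet-50 input size


def pad_to_square(img):
    """Zero-pad a 2-D array so both sides equal the longer side (centered)."""
    h, w = img.shape
    n = max(h, w)
    top, left = (n - h) // 2, (n - w) // 2
    out = np.zeros((n, n), dtype=np.float64)
    out[top:top + h, left:left + w] = img
    return out


def resize_bilinear(img, size=TARGET):
    """Bilinear resize of a square 2-D array to size x size (align-corners grid)."""
    n = img.shape[0]
    coords = np.linspace(0, n - 1, size)  # sample positions in source pixels
    i0 = np.floor(coords).astype(int)
    i1 = np.minimum(i0 + 1, n - 1)
    f = coords - i0                       # fractional offset of each sample
    # Interpolate along rows first, then along columns.
    rows = img[i0, :] * (1 - f)[:, None] + img[i1, :] * f[:, None]
    return rows[:, i0] * (1 - f)[None, :] + rows[:, i1] * f[None, :]


def preprocess_mip(mip_suv):
    """Pad, resize, and rescale an SUV-valued MIP to roughly 0-255."""
    resized = resize_bilinear(pad_to_square(mip_suv))
    return resized / SUV_MAX * 255.0
```

Values above SUV 30 (for example, in the bladder) are not clipped here, consistent with the text's description of an approximate rather than exact 0 to 255 range.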

We used ResNet-50 [29, 30], a 2-D CNN pre-trained on images from the ImageNet database [31], as the learning model. The model was built with the Python front end of the open-source library TensorFlow [32] and run on a graphics processing unit (GPU; NVIDIA GeForce RTX 2080 Ti). ResNet-50 was used to process the input data and predict the coordinates of the 3-D bounding boxes from the MIP images. The pre-trained ResNet-50 extracted a feature vector from each of the two MIP views, and the two feature vectors were concatenated. An additional fully connected layer with 4096 dimensions was attached to the concatenated feature vector and then connected to two outputs. One output was a 6-D vector representing the bounding box of the brain (the coordinates along the three axes and the width, length, and depth of the box). The other, a 1-D output, represented whether a given PET volume included the entire brain. Image augmentation was applied to the training dataset: MIP images were randomly augmented by multiplying pixel values, changing contrast, scaling, and translation. The model was trained for 150 epochs with a batch size of 8 using the Adam optimizer [33] with a learning rate of 0.00001.
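The two-view architecture can be sketched in TensorFlow/Keras as below. This is a hedged reconstruction, not the published model: whether the two views share one ResNet-50 backbone, how the single-channel MIPs are mapped to the three-channel ImageNet input, the global-average pooling, and the ReLU activation of the 4096-unit layer are all assumptions, and `build_brain_extractor` and `compile_brain_extractor` are hypothetical names. The mean-subtraction layer reproduces the ImageNet preprocessing used by Keras' ResNet-50; the usual RGB-to-BGR channel flip is omitted because the three channels are identical.

```python
import tensorflow as tf

IMAGENET_BGR_MEAN = [103.939, 116.779, 123.68]  # per-channel means of Keras ResNet-50 preprocessing


def build_brain_extractor(weights="imagenet"):
    """Two MIP views -> shared ResNet-50 features -> 4096-unit layer -> two heads."""
    # Assumption: a single backbone is shared by the anterior and lateral views.
    backbone = tf.keras.applications.ResNet50(
        include_top=False, weights=weights, input_shape=(224, 224, 3), pooling="avg")
    to_rgb = tf.keras.layers.Concatenate(axis=-1)
    subtract_mean = tf.keras.layers.Normalization(
        axis=-1, mean=IMAGENET_BGR_MEAN, variance=[1.0, 1.0, 1.0])

    inputs, features = [], []
    for name in ("anterior_mip", "lateral_mip"):
        inp = tf.keras.Input(shape=(224, 224, 1), name=name)  # scaled MIP, ~0-255
        rgb = to_rgb([inp, inp, inp])                          # grey -> 3 identical channels
        features.append(backbone(subtract_mean(rgb)))          # (batch, 2048) per view
        inputs.append(inp)

    merged = tf.keras.layers.Concatenate()(features)           # (batch, 4096)
    hidden = tf.keras.layers.Dense(4096, activation="relu")(merged)
    box = tf.keras.layers.Dense(6, name="box")(hidden)         # 3 coordinates + 3 extents
    exist = tf.keras.layers.Dense(1, activation="sigmoid", name="exist")(hidden)  # whole brain present?
    return tf.keras.Model(inputs=inputs, outputs=[box, exist])


def compile_brain_extractor(model, alpha=10.0, beta=1.0):
    """Adam (learning rate 1e-5), alpha-weighted cross-entropy, beta-weighted MSE."""
    model.compile(
        optimizer=tf.keras.optimizers.Adam(learning_rate=1e-5),
        loss=["mse", "binary_crossentropy"],  # same order as the outputs [box, exist]
        loss_weights=[beta, alpha])
    return model
```

Training would then call `model.fit` with a batch size of 8 for 150 epochs, as stated above; passing `weights=None` builds the same graph without downloading the ImageNet weights, which is convenient for testing.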

We performed internal validation by randomly selecting 10% of the data (n = 50) as a validation set. The loss function was defined as the weighted sum of two terms:

$$L = \alpha\left[-y\log\hat{y} - (1 - y)\log\left(1 - \hat{y}\right)\right] + \beta\,\frac{1}{6}\sum_{k=1}^{6}\left(\hat{b}_k - b_k\right)^2$$

where $y$ indicates the true existence of the brain (1 when the whole brain is included, 0 when not), $\hat{y}$ denotes the predicted existence, and the vectors $\hat{b}$ and $b$ denote the predicted and true coordinates of the bounding box, respectively. The two terms of the loss function thus represent (1) the binary cross-entropy of the output indicating whether a given PET volume included the entire brain and (2) the mean squared error of the 6-D vector representing the bounding box coordinates (Fig. 1). The weights were determined empirically; we set α = 10 and β = 1. We measured the intersection-over-union (IOU) between the predicted and labeled bounding boxes. Using the predicted bounding box coordinates, we extracted brain images from the whole-body PET scans and spatially normalized them to the template space, as described below.
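For concreteness, the weighted loss (binary cross-entropy for brain presence plus mean squared error over the six box values, with α = 10 and β = 1) can be written out for a single scan in plain Python. This is a sketch: we assume α weights the cross-entropy term and β the box term, and the clipping constant `eps` is our addition to keep the logarithm finite. `brain_extractor_loss` is a hypothetical name; in a Keras model, the built-in `binary_crossentropy` and `mse` losses combined through `loss_weights` play the same role.

```python
import math

ALPHA = 10.0  # weight on the brain-presence term (our reading of which term alpha scales)
BETA = 1.0    # weight on the bounding-box term


def brain_extractor_loss(y_true, y_pred, box_true, box_pred,
                         alpha=ALPHA, beta=BETA, eps=1e-7):
    """alpha * BCE(brain present?) + beta * MSE(6-D bounding-box vector)."""
    p = min(max(y_pred, eps), 1.0 - eps)  # clip so log() stays finite
    bce = -(y_true * math.log(p) + (1.0 - y_true) * math.log(1.0 - p))
    mse = sum((bp - bt) ** 2 for bp, bt in zip(box_pred, box_true)) / len(box_true)
    return alpha * bce + beta * mse


# A perfect box with an undecided presence score (0.5) for a scan that does
# contain the whole brain still costs 10 * ln 2, about 6.93.
example = brain_extractor_loss(1.0, 0.5, [0.0] * 6, [0.0] * 6)
```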

Processing of the extracted brain

The trained model was applied to whole-body PET images, and the brain was extracted if the model predicted that the image contained the whole brain. FDG-PET volumes were resliced to a voxel size of 2 × 2 × 2 mm3. We cropped the brain using the coordinates of the 3-D bounding box predicted by the model, with a padding of 10 voxels added along each axis. The extracted brain volumes were spatially normalized to the Montreal Neurological Institute (McGill University, Montreal, Quebec, Canada) standard templates. Spatial normalization was performed by symmetric normalization (SyN) with the cross-correlation loss function implemented in the DIPY package [34]. More specifically, each extracted brain volume was first linearly registered to the template PET image with an affine transform and then warped with the symmetric diffeomorphic registration algorithm. The spatially normalized PET volume was saved for further quantitative analysis.
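The cropping and registration steps might look like the following sketch. It assumes the predicted box has already been mapped to voxel indices of the 2-mm resliced volume as (x0, y0, z0, width, height, depth) and that a 4 × 4 voxel-to-world affine is available. These assumptions, the function names, and the DIPY settings (a mutual-information metric for the affine stage, the iteration counts, and the smoothing schedule) are illustrative rather than the authors' parameters. DIPY is imported inside `normalize_to_template` so that the cropping helper can be used without it.

```python
import numpy as np

PAD = 10  # voxels of padding added on each side, from the text


def crop_brain(volume, affine, box, pad=PAD):
    """Crop a resliced PET volume to a 3-D box plus padding.

    box = (x0, y0, z0, w, h, d) in voxel indices (assumed corner-plus-size form).
    Padding is clipped at the volume borders. Returns the cropped array and an
    updated voxel-to-world affine so the crop keeps its world coordinates.
    """
    x0, y0, z0, w, h, d = (int(round(v)) for v in box)
    starts, sizes = (x0, y0, z0), (w, h, d)
    lo = [max(s - pad, 0) for s in starts]
    hi = [min(s + n + pad, dim) for s, n, dim in zip(starts, sizes, volume.shape)]
    cropped = volume[lo[0]:hi[0], lo[1]:hi[1], lo[2]:hi[2]]
    shift = np.eye(4)
    shift[:3, 3] = lo  # voxel offset of the crop within the original grid
    return cropped, affine @ shift


def normalize_to_template(brain, brain_affine, template, template_affine):
    """Affine pre-alignment, then SyN with a cross-correlation metric (DIPY)."""
    from dipy.align.imaffine import (AffineRegistration, MutualInformationMetric,
                                     transform_centers_of_mass)
    from dipy.align.transforms import AffineTransform3D
    from dipy.align.imwarp import SymmetricDiffeomorphicRegistration
    from dipy.align.metrics import CCMetric

    # Start from a center-of-mass alignment, then optimize a full affine.
    com = transform_centers_of_mass(template, template_affine, brain, brain_affine)
    affreg = AffineRegistration(metric=MutualInformationMetric(32, None),
                                level_iters=[1000, 100, 10],
                                sigmas=[3.0, 1.0, 0.0], factors=[4, 2, 1])
    affine_map = affreg.optimize(template, brain, AffineTransform3D(), None,
                                 template_affine, brain_affine,
                                 starting_affine=com.affine)
    # Nonlinear SyN warp using the affine result as pre-alignment.
    sdr = SymmetricDiffeomorphicRegistration(CCMetric(3), level_iters=[10, 10, 5])
    mapping = sdr.optimize(template, brain, template_affine, brain_affine,
                           affine_map.affine)
    return mapping.transform(brain)  # brain resampled onto the template grid
```

Updating the affine when cropping keeps the crop in the same world coordinates, which gives the center-of-mass initialization a sensible starting point. In practice, translation and rigid stages are often run before the full affine stage for extra robustness.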

Quantitative analysis of the extracted brain

The extracted and spatially normalized brain volumes were analyzed with SPM12 (Institute of Neurology, University College London, London, UK) implemented in MATLAB 2019b (The MathWorks, Inc., Natick, MA, USA). The normalized brain images were smoothed by convolution with an isotropic Gaussian kernel of 10 mm full width at half maximum (FWHM) to increase the signal-to-noise ratio.
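SPM12 specifies the smoothing kernel as an FWHM in millimeters, whereas many other tools expect a standard deviation in voxels. The conversion is σ = FWHM / (2√(2 ln 2)) ≈ FWHM / 2.355. A small helper, assuming the normalized images keep a 2-mm voxel size (the voxel size of the template grid is not stated in the text); `fwhm_to_sigma_voxels` is a hypothetical name:

```python
import math

# sigma = FWHM / (2 * sqrt(2 * ln 2)), i.e. about 0.4247 * FWHM
FWHM_TO_SIGMA = 1.0 / (2.0 * math.sqrt(2.0 * math.log(2.0)))


def fwhm_to_sigma_voxels(fwhm_mm, voxel_mm):
    """Gaussian standard deviation, in voxels, for a kernel with the given FWHM in mm."""
    return fwhm_mm * FWHM_TO_SIGMA / voxel_mm


# 10 mm FWHM on an assumed 2-mm grid -> about 2.12 voxels
sigma = fwhm_to_sigma_voxels(10.0, 2.0)
```

The resulting σ could be passed, for example, to `scipy.ndimage.gaussian_filter`; SPM12 performs the equivalent step internally when given the FWHM.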

For the 24 subjects with SCLC, we performed a voxel-wise two-sample T test comparing each of the four normalized brain volumes with metastatic lesions against the images of all 20 control subjects. An uncorrected threshold of P < 0.001 was applied to identify metabolically abnormal regions in each patient.

For each of the four comparisons, we also constructed a T-statistic map and extracted the peak T values. As a proof-of-concept study, we investigated whether the statistical analysis revealed the metastatic lesions previously confirmed by brain MRI.
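The single-patient comparison can be sketched with NumPy. With one scan in the patient group, the pooled variance of a two-sample T test reduces to the control variance, so for the contrast "controls > patient" t = (mean_c - x) / (s_c √(1 + 1/n)) with n - 1 = 19 degrees of freedom for 20 controls; the one-sided critical value for P < 0.001 at 19 degrees of freedom is about 3.579. This sketch assumes the volumes are already spatially normalized, smoothed, and intensity-normalized, and it omits any covariates or global scaling an SPM12 design may include; `hypometabolism_t` and `detect_hypometabolism` are hypothetical names.

```python
import numpy as np

T_CRIT_DF19 = 3.579  # one-sided t threshold for P < 0.001 with 19 degrees of freedom


def hypometabolism_t(patient, controls):
    """Voxel-wise T for the contrast 'controls > patient' (1 patient vs n controls).

    patient: array (X, Y, Z); controls: array (n, X, Y, Z).
    """
    controls = np.asarray(controls, dtype=float)
    n = controls.shape[0]
    mean_c = controls.mean(axis=0)
    sd_c = controls.std(axis=0, ddof=1)    # pooled SD when the patient group has size 1
    se = sd_c * np.sqrt(1.0 + 1.0 / n)     # standard error of (mean_c - x)
    with np.errstate(divide="ignore", invalid="ignore"):
        t = (mean_c - np.asarray(patient, dtype=float)) / se
    # Voxels with zero control variance (e.g. outside the brain) are set to 0.
    return np.where(se > 0, t, 0.0)


def detect_hypometabolism(patient, controls, t_crit=T_CRIT_DF19):
    """Return the T map, the uncorrected suprathreshold mask, and the peak T."""
    t = hypometabolism_t(patient, controls)
    return t, t > t_crit, float(t.max())
```

Positive T values here mark voxels where the patient's uptake is lower than that of the controls, matching the hypometabolic findings reported for the metastatic cases.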

Results

Extraction of the brain volume

The deep learning-based brain extractor identified whether the whole brain was included with an accuracy of 98% in the internal validation set. The brain extraction performance, measured by the IOU of 3-D bounding boxes, was 72.9 ± 12.5% in the validation set. Using the predicted coordinates, all brains were successfully cropped and automatically normalized to the template space.
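For reference, the IOU of two axis-aligned 3-D boxes can be computed as below. The sketch assumes boxes are given as (x0, y0, z0, width, height, depth), one reading of the 6-D bounding-box output described in the Methods; `box_volume` and `iou_3d` are hypothetical names.

```python
def box_volume(box):
    """Volume of a box given as (x0, y0, z0, w, h, d); negative extents count as 0."""
    _, _, _, w, h, d = box
    return max(w, 0) * max(h, 0) * max(d, 0)


def iou_3d(box_a, box_b):
    """Intersection-over-union of two axis-aligned boxes (x0, y0, z0, w, h, d)."""
    overlap = 1.0
    for axis in range(3):
        lo = max(box_a[axis], box_b[axis])
        hi = min(box_a[axis] + box_a[axis + 3], box_b[axis] + box_b[axis + 3])
        overlap *= max(hi - lo, 0.0)  # zero if the boxes do not overlap on this axis
    union = box_volume(box_a) + box_volume(box_b) - overlap
    return overlap / union if union > 0 else 0.0
```

IOU is a strict measure in 3-D: shifting a 10-voxel cube by one voxel along every axis already reduces it to 729 / 1271 ≈ 0.57, which is useful context for the reported values.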

Representative images from the internal validation of the model are shown in Fig. 2. In both “torso” PET covering up to the mid-thigh and “total-body” PET covering the whole height of the body, the extractor successfully located the brain (Fig. 2a, b). The extractor also identified the brain when an artifact caused by the radiopharmaceutical injection was projected onto the brain in the MIP image (Fig. 2c). When the brain volume was not fully included, the extractor classified the image as “not containing entire brain” (Fig. 2d).

Fig. 2.

Representative results of the automatic brain extractor. a, b In both “torso” PET covering up to the mid-thigh and “total-body” PET covering the whole height of the body, the extractor successfully located the brain. c The extractor also identified the brain when an artifact caused by radiopharmaceutical injection was projected onto the brain in the MIP image. d When the brain volume was not fully included, the extractor classified the image as “not containing entire brain”

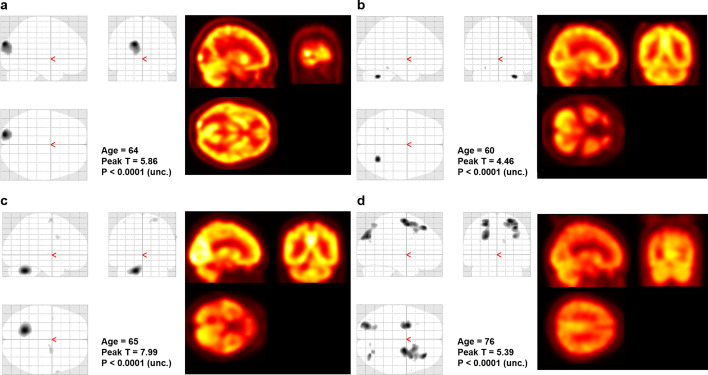

Identification of brain metastasis from whole-body FDG-PET using the fully automated brain analysis pipeline

The fully automated brain extraction and quantitative analysis were applied to the patients with SCLC as a proof-of-concept study. In the 24 whole-body PET images, the automatic brain extractor identified whether the whole brain was included with an accuracy of 100%, and the IOU of the 3-D bounding boxes was 75.8 ± 7.2%. The voxel-wise T test identified the metastatic lesions in the brain in three of the four subjects in the case group (uncorrected P < 0.001). In all three successful cases, the analysis revealed hypometabolic regions due to edematous change around the lesion (Fig. 3a–c). In the unsuccessful case, the statistical analysis showed diffuse hypometabolism in the frontoparietal lobe instead of a focal metabolic defect at the metastatic site (Fig. 3d).

Fig. 3.

Quantitative analysis of the extracted brain. The voxel-wise T test identified the metastatic lesions in the brain in three of the four subjects in the case group (uncorrected P < 0.001). The graphics on the left show brain regions with hypometabolism compared with the control group. The images on the right show the corresponding FDG-PET images. a, b, c In all three successful cases, the analysis revealed hypometabolic regions due to edematous change around the lesion. d In the unsuccessful case, the statistical analysis showed diffuse hypometabolism in the frontoparietal lobe instead of a focal metabolic defect at the metastatic site

Discussion

With the increasing use of FDG-PET in neurologic disorders, many studies have proposed methods for quantitative analysis of brain FDG-PET images [12, 35, 36]. However, most patients undergoing FDG-PET, especially oncologic patients, do not benefit from this progress because of the lack of a convenient, automated quantification method. This work takes the first step toward such automation through deep learning-based extraction of the brain volume from oncologic PET scans, followed by an exploratory quantitative analysis of the extracted brains. Fully automated brain extraction and quantification in oncologic PET could be integrated into a system that warns of metastatic lesions or major brain diseases that may be overlooked on visual reading.

The key step of automated quantitative brain analysis of oncologic PET images was the extraction of brain volumes. In most scans in the internal validation set, the automatic brain extractor based on ResNet-50 correctly determined whether the whole brain was covered in the whole-body scan and located and extracted the brain volume, even when an artifact was projected onto the MIP image.

The extracted brain PET volume can be analyzed with many conventional quantitative approaches. In this study, to keep the process fully automated, we employed spatial normalization based on the SyN algorithm implemented in the DIPY package; notably, the spatial normalization following brain extraction required no manual intervention. The spatially normalized brain can be further analyzed by quantitative software, including SPM and 3-D stereotactic surface projection (3-D SSP) [37]. In this work, as a proof-of-concept study, we used SPM to identify metastatic lesions. This demonstrates a potential application of automatic brain extraction: aiding the identification of unexpected metastases during visual interpretation of oncologic PET studies. Moreover, this method could be used to identify overlooked brain abnormalities such as dementia, as well as tumorous lesions in the brain.

Because this was a proof-of-concept study, the metastatic subjects and the control group were not age-matched in the quantitative analysis, which yielded rather non-specific decreased metabolism along the cerebral cortex in an elderly subject. This might have resulted from the physiologic decrease in gray matter volume that accompanies normal aging [38, 39]. Adjustment for patient factors (e.g., age and underlying disease) would be crucial to detect localized metabolic abnormalities apart from diffuse metabolic changes resulting from systemic conditions. In addition, although the brain extraction model showed good results, there is room for optimization, such as hyperparameter tuning and revision of the model architecture. Nonetheless, the ultimate purpose of the model was a spatially normalized brain, which can be obtained from the extracted brain even with small errors in the bounding box coordinates. As a proof-of-concept study, our model demonstrated the feasibility of identifying brain abnormalities from the automatically spatially normalized brain.

Conclusions

Using a deep learning-based model, we developed a fully automated brain analysis method for oncologic FDG-PET. The model could identify whether the brain volume was included, locate the brain in the PET image, and enable spatial normalization to the template. The quantitative analysis showed the feasibility of identifying metastatic brain lesions. We suggest that the model could support FDG-PET interpretation and analysis by detecting unexpected brain abnormalities, including metastases as well as other brain disorders.

Acknowledgements

We thank our colleagues from Seoul National University Hospital, who provided insight and expertise that greatly assisted the research.

Abbreviations

- FDG

Fluorodeoxyglucose

- PET

Positron emission tomography

- MIP

Maximal intensity projection

- CNN

Convolutional neural network

- SCLC

Small-cell lung cancer

- IOU

Intersection-over-union

- MR

Magnetic resonance

- SPM

Statistical parametric mapping

- AD

Alzheimer’s disease

- SUV

Standardized uptake value

- SyN

Symmetric normalization

- SSP

Stereotactic surface projection

Authors' contributions

HC designed this study. WW performed data collection, preparation, and statistical analysis. HC created software used in the work. JCP, GJC, KWK, and DSL supported the result interpretation and discussion. All authors interpreted data results, drafted, and edited the manuscript. All authors read and approved the final manuscript.

Funding

This research was supported by the National Research Foundation of Korea (NRF-2019R1F1A1061412 and NRF-2019K1A3A1A14065446). This work was supported by the Korea Medical Device Development Fund grant funded by the Korea government (the Ministry of Science and ICT, the Ministry of Trade, Industry and Energy, the Ministry of Health & Welfare, the Ministry of Food and Drug Safety) (Project Number: 202011A06) and Seoul R&BD Program (BT200151).

Availability of data and materials

Not applicable.

Declarations

Ethics approval and consent to participate

All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional and/or national research committee and with the 1964 Helsinki declaration and its later amendments or comparable ethical standards (SNUH IRB No. 2104-046-1209).

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Footnotes

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Almuhaideb A, Papathanasiou N, Bomanji J. 18F-FDG PET/CT imaging in oncology. Ann Saudi Med. 2011;31(1):3–13. doi: 10.4103/0256-4947.75771.

- 2. Zhu A, Lee D, Shim H, editors. Metabolic positron emission tomography imaging in cancer detection and therapy response. Seminars in oncology. Amsterdam: Elsevier; 2011.

- 3. Fletcher JW, Djulbegovic B, Soares HP, Siegel BA, Lowe VJ, Lyman GH, et al. Recommendations on the use of 18F-FDG PET in oncology. J Nucl Med. 2008;49(3):480–508. doi: 10.2967/jnumed.107.047787.

- 4. von Schulthess GK, Steinert HC, Hany TF. Integrated PET/CT: current applications and future directions. Radiology. 2006;238(2):405–422. doi: 10.1148/radiol.2382041977.

- 5. Kamel EM, Zwahlen D, Wyss MT, Stumpe KD, von Schulthess GK, Steinert HC. Whole-body 18F-FDG PET improves the management of patients with small cell lung cancer. J Nucl Med. 2003;44(12):1911–1917.

- 6. Lee H-Y, Chung J-K, Jeong JM, Lee DS, Kim DG, Jung HW, et al. Comparison of FDG-PET findings of brain metastasis from non-small-cell lung cancer and small-cell lung cancer. Ann Nucl Med. 2008;22(4):281. doi: 10.1007/s12149-007-0104-1.

- 7. Tasdemir B, Urakci Z, Dostbil Z, Unal K, Simsek FS, Teke F, et al. Effectiveness of the addition of the brain region to the FDG-PET/CT imaging area in patients with suspected or diagnosed lung cancer. Radiol Med (Torino) 2016;121(3):218–224. doi: 10.1007/s11547-015-0597-y.

- 8. Krüger S, Mottaghy MF, Buck KA, Maschke S, Kley H, Frechen D, et al. Brain metastasis in lung cancer. Nuklearmedizin. 2011;50(03):101–106. doi: 10.3413/Nukmed-0338-10-07.

- 9. Silverman DH, Dy CJ, Castellon SA, Lai J, Pio BS, Abraham L, et al. Altered frontocortical, cerebellar, and basal ganglia activity in adjuvant-treated breast cancer survivors 5–10 years after chemotherapy. Breast Cancer Res Treat. 2007;103(3):303–311. doi: 10.1007/s10549-006-9380-z.

- 10. Younes-Mhenni S, Janier M, Cinotti L, Antoine J, Tronc F, Cottin V, et al. FDG-PET improves tumour detection in patients with paraneoplastic neurological syndromes. Brain. 2004;127(10):2331–2338. doi: 10.1093/brain/awh247.

- 11. Muzik O, Chugani DC, Juhász C, Shen C, Chugani HT. Statistical parametric mapping: assessment of application in children. Neuroimage. 2000;12(5):538–549. doi: 10.1006/nimg.2000.0651.

- 12. Penny WD, Friston KJ, Ashburner JT, Kiebel SJ, Nichols TE. Statistical parametric mapping: the analysis of functional brain images. Amsterdam: Elsevier; 2011.

- 13. Signorini M, Paulesu E, Friston K, Perani D, Colleluori A, Lucignani G, et al. Rapid assessment of regional cerebral metabolic abnormalities in single subjects with quantitative and nonquantitative [18F] FDG PET: a clinical validation of statistical parametric mapping. Neuroimage. 1999;9(1):63–80. doi: 10.1006/nimg.1998.0381.

- 14. Kim YK, Lee DS, Lee SK, Chung CK, Chung J-K, Lee MC. 18F-FDG PET in localization of frontal lobe epilepsy: comparison of visual and SPM analysis. J Nucl Med. 2002;43(9):1167–1174.

- 15. Ishii K, Willoch F, Minoshima S, Drzezga A, Ficaro EP, Cross DJ, et al. Statistical brain mapping of 18F-FDG PET in Alzheimer’s disease: validation of anatomic standardization for atrophied brains. J Nucl Med. 2001;42(4):548–557.

- 16. Shofty B, Artzi M, Shtrozberg S, Fanizzi C, DiMeco F, Haim O, et al. Virtual biopsy using MRi radiomics for prediction of BRAf status in melanoma brain metastasis. Sci Rep. 2020;10(1):1–7. doi: 10.1038/s41598-020-63821-y.

- 17. Akkus Z, Galimzianova A, Hoogi A, Rubin DL, Erickson BJ. Deep learning for brain MRI segmentation: state of the art and future directions. J Digit Imaging. 2017;30(4):449–459. doi: 10.1007/s10278-017-9983-4.

- 18. Işın A, Direkoğlu C, Şah M. Review of MRI-based brain tumor image segmentation using deep learning methods. Proc Comput Sci. 2016;102:317–324. doi: 10.1016/j.procs.2016.09.407.

- 19. Sarraf S, Tofighi G. Classification of alzheimer's disease using fmri data and deep learning convolutional neural networks. arXiv preprint arXiv: 08631. 2016.

- 20. Zhu W, Jiang T, editors. Automation segmentation of PET image for brain tumors. In: 2003 IEEE nuclear science symposium conference record (IEEE Cat No 03CH37515); 2003. IEEE.

- 21. Stefano A, Comelli A, Bravatà V, Barone S, Daskalovski I, Savoca G, et al. A preliminary PET radiomics study of brain metastases using a fully automatic segmentation method. BMC Bioinform. 2020;21(8):1–14. doi: 10.1186/s12859-020-03647-7.

- 22. Dhillon A, Verma GK. Convolutional neural network: a review of models, methodologies and applications to object detection. Progress Artif Intell. 2020;9(2):85–112. doi: 10.1007/s13748-019-00203-0.

- 23. Li Q, Cai W, Wang X, Zhou Y, Feng DD, Chen M, editors. Medical image classification with convolutional neural network. In: 2014 13th International conference on control automation robotics & vision (ICARCV); 2014. IEEE.

- 24. Milletari F, Navab N, Ahmadi S-A, editors. V-net: fully convolutional neural networks for volumetric medical image segmentation. In: 2016 Fourth international conference on 3D vision (3DV); 2016. IEEE.

- 25. Winkler JK, Sies K, Fink C, Toberer F, Enk A, Deinlein T, et al. Melanoma recognition by a deep learning convolutional neural network—performance in different melanoma subtypes and localisations. Eur J Cancer. 2020;127:21–29. doi: 10.1016/j.ejca.2019.11.020.

- 26. Chen H, Dou Q, Yu L, Heng P-A. Voxresnet: Deep voxelwise residual networks for volumetric brain segmentation. 2016.

- 27. Saouli R, Akil M, Kachouri R. Fully automatic brain tumor segmentation using end-to-end incremental deep neural networks in MRI images. Comput Methods Programs Biomed. 2018;166:39–49. doi: 10.1016/j.cmpb.2018.09.007.

- 28. Dutta A, Zisserman A, editors. The VIA annotation software for images, audio and video. In: Proceedings of the 27th ACM international conference on multimedia; 2019.

- 29. ResNet-50. Available from: https://arxiv.org/abs/1512.03385.

- 30. He K, Zhang X, Ren S, Sun J. Deep residual learning for image recognition. arXiv 2015. arXiv preprint arXiv: 03385. 2015.

- 31. ImageNet. Available from: https://ieeexplore.ieee.org/document/5206848.

- 32. Girija SSJ. Tensorflow: large-scale machine learning on heterogeneous distributed systems. 2016;39(9).

- 33. Kingma DP, Ba JA. Adam: a method for stochastic optimization. arXiv preprint arXiv:1412.6980. 2014.

- 34. Garyfallidis E, Brett M, Amirbekian B, Rokem A, Van Der Walt S, Descoteaux M, et al. Dipy, a library for the analysis of diffusion MRI data. Front Neuroinform. 2014;8:8. doi: 10.3389/fninf.2014.00008.

- 35. Alf MF, Wyss MT, Buck A, Weber B, Schibli R, Krämer SD. Quantification of brain glucose metabolism by 18F-FDG PET with real-time arterial and image-derived input function in mice. J Nucl Med. 2013;54(1):132–138. doi: 10.2967/jnumed.112.107474.

- 36. Kang KW, Lee DS, Cho JH, Lee JS, Yeo JS, Lee SK, et al. Quantification of F-18 FDG PET images in temporal lobe epilepsy patients using probabilistic brain atlas. Neuroimage. 2001;14(1):1–6. doi: 10.1006/nimg.2001.0783.

- 37. Minoshima S, Frey KA, Koeppe RA, Foster NL, Kuhl DE. A diagnostic approach in Alzheimer's disease using three-dimensional stereotactic surface projections of fluorine-18-FDG PET. J Nucl Med. 1995;36(7):1238–1248.

- 38. Kalpouzos G, Chételat G, Baron J-C, Landeau B, Mevel K, Godeau C, et al. Voxel-based mapping of brain gray matter volume and glucose metabolism profiles in normal aging. Neurobiol Aging. 2009;30(1):112–124. doi: 10.1016/j.neurobiolaging.2007.05.019.

- 39. Yanase D, Matsunari I, Yajima K, Chen W, Fujikawa A, Nishimura S, et al. Brain FDG PET study of normal aging in Japanese: effect of atrophy correction. Eur J Nucl Med Mol Imaging. 2005;32(7):794–805. doi: 10.1007/s00259-005-1767-2.
