In September 2008 ClinicalTrials.gov implemented a results database in response to the Food and Drug Administration Amendments Act (FDAAA).1 The results database consists of structured tables of summary results information without discussion or conclusions. FDAAA, its implementing regulations (42 CFR Part 11),2,3 and several policies require results reporting to ClinicalTrials.gov to address the non-publication of clinical trial results and incomplete outcome and adverse event reporting.4 These requirements have necessitated process changes for sponsors and investigators both in industry and at academic medical centers (AMCs).5 We previously estimated that the regulations and the National Institutes of Health (NIH) trial reporting policy6 would affect over half of registered trials conducted at U.S. AMCs.7 The scope and significance of these requirements demand that we monitor and evaluate the impact of this evolving results reporting mechanism on the clinical trials enterprise. In 2011 we characterized early experience with nearly 2,200 posted results.4 A decade after launch, the results database contains over 36,000 results (as of May 2019). In this paper we describe the current requirements, the state of results reporting on ClinicalTrials.gov, and challenges and opportunities for further advancement.

U.S. Clinical Trial Results Reporting Requirements

Laws, Regulations, and Policies

FDAAA, its regulations, and several U.S. policies require or encourage results reporting on ClinicalTrials.gov (Table 1). The regulations, an important milestone in implementing FDAAA, clarified key definitions and the information to be reported, including the additional requirement to submit full protocol documents with results information for studies completed on or after January 18, 2017.3 Under the regulations, the party responsible for reporting is generally the “sponsor,” defined as either the holder of the FDA investigational product application or, if there is none, the initiator of the trial, such as a grantee institution. Sponsors may designate qualified principal investigators to meet the requirements; we refer to this entity or individual as the “sponsor or investigator.” Both the regulations and the NIH trial reporting policy, which follows the regulatory reporting framework, require sponsors or investigators to submit study results information within one year of the trial’s primary completion date (generally defined as the final collection of data for the primary outcome measure), with delayed results submission permitted in certain situations. Registration and results information may also be submitted to ClinicalTrials.gov on an optional basis for clinical studies to which the law, regulations, or policies do not apply; such submissions must follow the established content requirements and quality control review procedures. Although this paper focuses on the U.S. landscape, we note the international scope of results reporting requirements, including the European Union’s Clinical Trials Regulation.8
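The one-year deadline described above is simple date arithmetic. The following Python sketch computes the standard due date from a primary completion date. `results_due_date` and `is_overdue` are hypothetical helpers, not part of any ClinicalTrials.gov tool; the handling of a February 29 completion date is our assumption, and the delayed-submission situations the regulations permit are deliberately left out.

```python
from datetime import date
from typing import Optional


def results_due_date(primary_completion: date) -> date:
    """Hypothetical helper: standard due date one year after primary completion.

    Assumption: "one year" is read as the same month and day of the following
    year, with a February 29 completion date mapped to February 28.
    Delayed-submission situations permitted by the regulations are not modeled.
    """
    try:
        return primary_completion.replace(year=primary_completion.year + 1)
    except ValueError:
        # February 29 has no counterpart in a non-leap year.
        return date(primary_completion.year + 1, 2, 28)


def is_overdue(primary_completion: date, as_of: date,
               submitted: Optional[date] = None) -> bool:
    """True if results were submitted after the due date or, if not yet
    submitted, the due date has passed as of `as_of`."""
    due = results_due_date(primary_completion)
    if submitted is not None:
        return submitted > due
    return as_of > due


print(results_due_date(date(2017, 3, 15)))  # 2018-03-15
```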

Table 1.

Summary of Key U.S. Laws, Regulations, and Policies Related to ClinicalTrials.gov Results Submission

| Name | General Scope | Information Submission Type and Submission Timeline | Relevant Dates | Possible Penalty for Non-Compliance |
|---|---|---|---|---|
| Food and Drug Administration Amendments Act (FDAAA)1 (U.S. Federal law) and associated regulations, 42 CFR Part 11,2 (U.S. Federal regulations) | | | | |
| Dissemination of National Institutes of Health (NIH)-funded clinical trial information6 (NIH Policy) | | | | Suspension or termination of NIH grant or contract funding and consideration in future funding decisions |
| Submission of results of valid analyses by sex/gender and race/ethnicity9,10 (U.S. Federal law & NIH Policy) | | | NIH-defined Phase III ACTs under Federal regulations supported by all new, competing grants and cooperative agreements awarded on or after December 13, 2017 | Consideration in future funding decisions |
| International Committee of Medical Journal Editors (ICMJE) Clinical Trial Registration Policy11 | | | Effective Dates: | Refusal by editor to publish manuscript |
| Department of Veterans Affairs Office of Research and Development (VA ORD)12 | | | Effective Dates: | Condition of funding |
| Patient-Centered Outcomes Research Institute (PCORI)13 | | | Policy Adoption: February 24, 2015 | Condition of funding |

Content of Required Results Information

Each ClinicalTrials.gov study record represents one trial with information submitted by the sponsor or investigator. The registration section, which is generally provided at trial initiation, summarizes key protocol details and other information to support enrollment and tracking of the trial’s progress. After trial completion, results information can be added to the record using required and optional data elements organized into the following scientific modules: Participant Flow, Baseline Characteristics, Outcome Measures and Statistical Analyses, Adverse Events Information, and Study Documents (protocol and statistical analysis plan)3 (Table 2).

Table 2.

ClinicalTrials.gov Results Information by Module for Studies Completed on or After January 18, 2017

| Results Module Name and Brief Description | Specific Items to Report (Italics indicate optional items) |
|---|---|
| Participant flow: A tabular summary of participants’ progress through the study by assignment group (similar to a CONSORT flow diagram) | |
| Demographic and baseline characteristics: A tabular summary of collected demographic and baseline data by analysis group and overall (similar to “Table 1” in a publication) | |
| Outcomes and statistical analyses: A tabular summary of aggregate results data for each outcome measure by analysis group, and a tabular summary of statistical tests of significance or other parameters estimated from the outcome measure data | |
| Adverse event information: A tabular display of adverse events, independent of attribution and whether anticipated, by analysis group | |
| Study documents: Study documents submitted in Portable Document Format Archival (PDF/A) format | |
| Other information: Administrative and other relevant information about the study | |

Quality Control Review Criteria and Process

Information submitted to ClinicalTrials.gov undergoes quality control review, consisting of automated validation followed by manual review by National Library of Medicine (NLM) staff. The goal of quality control review is to ensure that all required information is complete and meaningful by identifying apparent errors, deficiencies, or inconsistencies.14 Review criteria were developed based on established scientific reporting principles15 and informed by our experience. Requirements for each data element are explained and reinforced by the system’s tabular structure (e.g., a measure type of “mean” must be accompanied by a measure of dispersion).16 Quality control review criteria are described in review criteria documents,14 and, where possible, the system displays automated messages before submission. During quality control review, NLM staff apply the review criteria and provide data submitters with comments noting “major issues,” which must be corrected or addressed, and “advisory issues,” which are suggestions for improving clarity. The quality control review process ends when all noted major issues have been addressed in a subsequent submission. Common types of major issues include invalid or inconsistent units of measure (e.g., “time to myocardial infarction” as the measure title, with “number of participants” as the unit of measure), listing of a scale without the required information about its domain or directionality (e.g., the minimum and maximum scores), and inconsistencies within the record (e.g., the number of participants analyzed for an outcome measure exceeding the number who started the study) (see Table S1 in the Supplementary Appendix).17
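To make the automated part of quality control review more concrete, here is a toy Python sketch of record-level checks that mirror the examples above: a mean without a measure of dispersion, a unit inconsistent with a time-to-event measure, a scale without range or direction, and more participants analyzed than started. The data classes and string heuristics are hypothetical simplifications; they do not reproduce NLM’s actual validation rules or the ClinicalTrials.gov data model.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class OutcomeMeasure:
    # Hypothetical, simplified fields; not the actual ClinicalTrials.gov schema.
    title: str
    measure_type: str                 # e.g. "Mean", "Median", "Count of Participants"
    unit_of_measure: str
    dispersion: Optional[str]         # e.g. "Standard Deviation"; None if missing
    number_analyzed: int
    scale_info: Optional[str] = None  # range and direction, if a scale is used


@dataclass
class ResultsRecord:
    number_started: int
    outcomes: List[OutcomeMeasure]


def find_major_issues(record: ResultsRecord) -> List[str]:
    """Return toy 'major issue' messages for a results record."""
    issues: List[str] = []
    for om in record.outcomes:
        # A mean must be accompanied by a measure of dispersion.
        if om.measure_type.lower() == "mean" and not om.dispersion:
            issues.append(f"{om.title}: mean reported without a measure of dispersion")
        # A time-to-event title paired with a participant-count unit is inconsistent.
        if om.title.lower().startswith("time to") and "participants" in om.unit_of_measure.lower():
            issues.append(f"{om.title}: unit of measure inconsistent with a time-to-event measure")
        # A scale needs its range and directionality described.
        if "scale" in om.title.lower() and not om.scale_info:
            issues.append(f"{om.title}: scale listed without range or directionality")
        # Internal consistency: cannot analyze more participants than started.
        if om.number_analyzed > record.number_started:
            issues.append(
                f"{om.title}: number analyzed ({om.number_analyzed}) exceeds "
                f"number started ({record.number_started})"
            )
    return issues


record = ResultsRecord(
    number_started=100,
    outcomes=[OutcomeMeasure("Time to myocardial infarction", "Mean",
                             "number of participants", None, 120)],
)
for message in find_major_issues(record):
    print(message)
```

The example record triggers three of the four checks; a real review would, of course, rely on the published review criteria rather than keyword matching.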

Description of Results Database Experience

We characterized results posted on ClinicalTrials.gov as of January 1, 2019, at which time over 3,300 sponsors or investigators had posted over 34,000 records with results. As of May 2019, approximately 120 new results are posted to the site each week, and an additional 128 previously posted records with results are updated each week. Most posted results are for clinical trials; 6% (n=1,973) are for observational studies. Table S2 shows the characteristics of posted trials with results. Over 1,500 of the posted results include protocol and statistical analysis plan documents. The median (IQR) time from primary completion date to posting by NLM was 2.0 years (1.3 to 3.8). This interval includes the time from the final collection of data for the primary outcome measure until the summary results information was submitted to NLM, the time for NLM quality control review, and the time for sponsors or investigators to address quality control issues.
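A median (IQR) time to posting like the one above can be computed from pairs of primary completion and posting dates. This Python sketch assumes 365.25 days per year and uses the `inclusive` quartile method of the standard library’s `statistics.quantiles`; the paper does not state which quartile convention was used, and different conventions can shift the IQR slightly.

```python
from datetime import date
from statistics import median, quantiles
from typing import Iterable, Tuple

DAYS_PER_YEAR = 365.25  # assumption: average year length including leap years


def years_between(start: date, end: date) -> float:
    """Elapsed time in (average-length) years between two dates."""
    return (end - start).days / DAYS_PER_YEAR


def time_to_posting_summary(
    pairs: Iterable[Tuple[date, date]],
) -> Tuple[float, float, float]:
    """Median, Q1, and Q3 in years from (primary completion, results posted) pairs."""
    durations = [years_between(completed, posted) for completed, posted in pairs]
    # "inclusive" treats the data as the whole population; other quartile
    # conventions can shift Q1 and Q3 slightly.
    q1, _, q3 = quantiles(durations, n=4, method="inclusive")
    return median(durations), q1, q3
```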

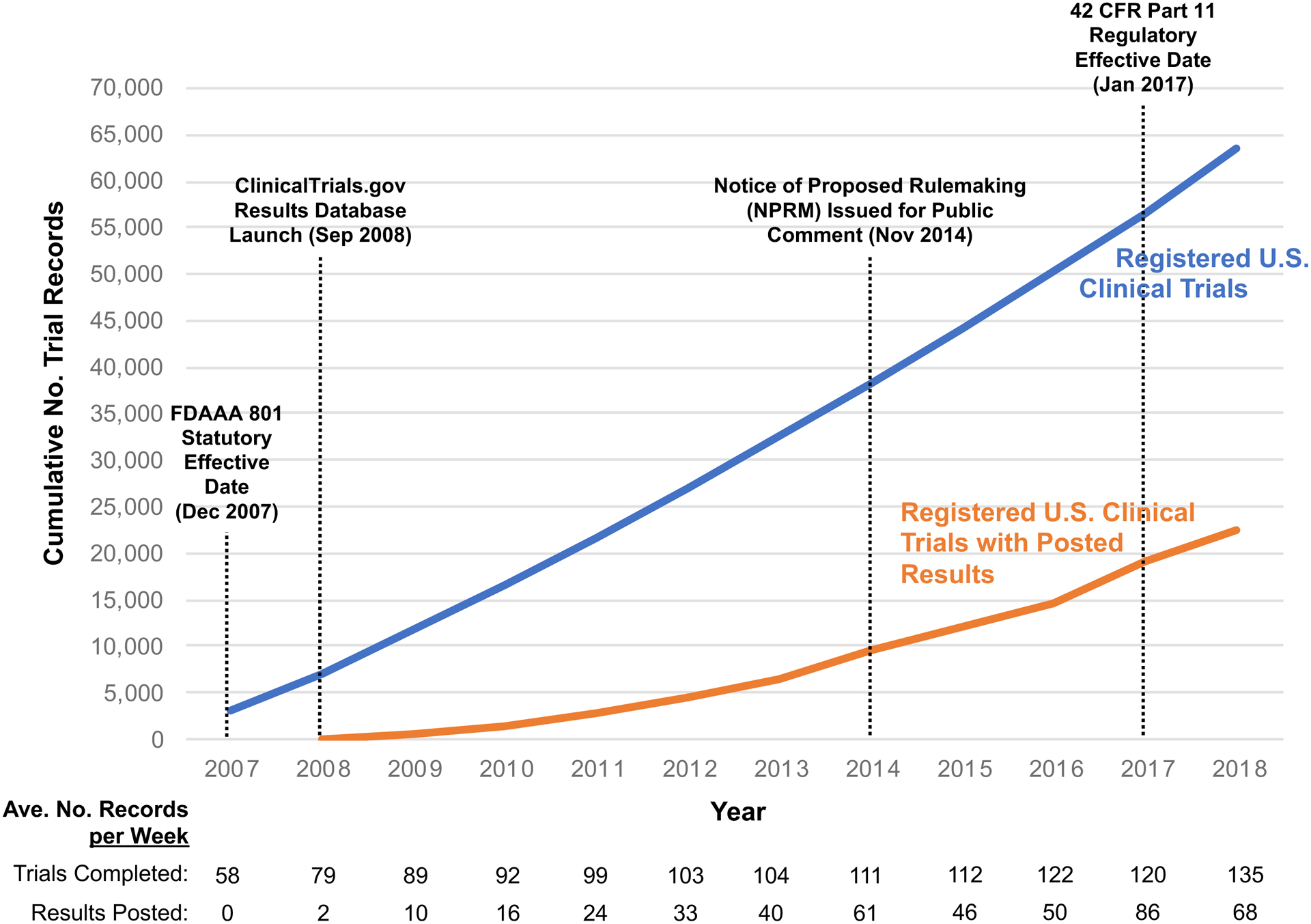

After the regulations became effective in January 2017 (Table 1), the rate of results posting for completed U.S. clinical trials increased from an average of 50 new results posted per week in 2016 to 86 per week in 2017 (Figure). Although assessing adherence to the FDAAA results reporting requirements may require non-public data (including information not required or collected until after the Final Rule effective date) and further evaluation of study-specific considerations, others have used public ClinicalTrials.gov data to approximate the frequency of results reporting under components of FDAAA or other metrics (e.g., any results reported in publications or on ClinicalTrials.gov within two years). In these heterogeneous analyses, the reported percentages of completed trials with results on ClinicalTrials.gov range from 22% of relevant trials completed in 200918 to 66% as of May 2019.19 Efforts by various groups to highlight reporting rates, including naming specific sponsors, have coincided with improvements in reporting both overall and among the named sponsors. For example, an updated 2018 STAT analysis documented the greatest improvement among sponsors previously named in a 2015 STAT analysis.20,21

Figure.

Cumulative Numbers of U.S. Clinical Trials Registered on ClinicalTrials.gov by Primary Completion Date (Blue Line) and Registered U.S. Trials with Posted Results by the Results First Posted Date (Orange Line) with Key Events. The blue line shows the cumulative number of clinical trials with at least one facility site in the United States (“U.S. clinical trials”) registered on ClinicalTrials.gov with a primary completion date between 2007 and 2018 as of May 8, 2019. The orange line shows the cumulative number of registered U.S. clinical trials with results first posted on ClinicalTrials.gov between 2008 and 2018.

Key Issues in Meeting Results Reporting Requirements

To explore the degree to which sponsors and investigators are meeting the quality control review criteria, on October 31, 2018 we identified all trial records with required or optional results first submitted on or after May 1, 2017 that had undergone quality control review at least once by September 30, 2018. All results submissions (whether required or optional) are subject to the same review criteria. A submission was considered to have met the quality control review criteria (“success”) for a review cycle if no major issues were identified (see Supplementary Appendix). The success rate during the first review cycle was 31% (862/2,780) for industry records and 17% (582/3,486) for non-industry records. Cumulative success rates after the second review cycle increased to 77.2% (1,653/2,140) for industry records and 63.2% (1,492/2,359) for non-industry records.
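Cumulative success rates across review cycles depend on how records still awaiting a later cycle are counted. The following Python sketch uses one plausible convention, stated in the docstring, which is our assumption rather than the method used in this analysis; `ReviewHistory` is a hypothetical record type.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass
class ReviewHistory:
    cycles_reviewed: int          # review cycles completed by the cutoff date
    success_cycle: Optional[int]  # first cycle with no major issues, or None


def cumulative_success_rate(records: Iterable[ReviewHistory], k: int) -> float:
    """Share of records that met quality control criteria within k cycles.

    Assumed denominator: records that either succeeded within k cycles or
    were reviewed at least k times; records still awaiting cycle k are excluded.
    """
    succeeded = eligible = 0
    for r in records:
        ok = r.success_cycle is not None and r.success_cycle <= k
        if ok or r.cycles_reviewed >= k:
            eligible += 1
            succeeded += ok  # bool counts as 0 or 1
    return succeeded / eligible if eligible else float("nan")


history = [
    ReviewHistory(cycles_reviewed=1, success_cycle=1),
    ReviewHistory(cycles_reviewed=2, success_cycle=2),
    ReviewHistory(cycles_reviewed=2, success_cycle=None),
    ReviewHistory(cycles_reviewed=1, success_cycle=None),  # awaiting cycle 2
]
print(cumulative_success_rate(history, 1))  # 0.25
print(cumulative_success_rate(history, 2))  # 2 of 3 eligible records
```

Excluding records that have not yet reached cycle k is one reason the denominators for the first and second cycles can differ.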

In our analysis of high-volume sponsors (those that submitted ≥20 results during the sample period), cycle 1 success rates were heterogeneous: high-volume industry sponsors ranged from 16.4% to 77.1%, while non-industry sponsors ranged from 5% to 44.4% (see Figure S1 in the Supplementary Appendix). The fact that some high-volume industry sponsors achieved cycle 1 success rates of over 70% indicates that the reporting requirements can be understood and followed appropriately. Although the median cycle 1 success rate is relatively low, 62% achieved success after two review cycles. We believe that achieving success within two cycles is a reasonable goal, analogous to making changes in response to editorial comments before journal publication (see Figure S2 in the Supplementary Appendix). We have observed that industry sponsors tend to have a centralized, well-staffed process for supporting results submission, whereas non-industry sponsors tend to rely on individual investigators with minimal centralized support. A 2017 survey found that AMCs had a median of 0.08 full-time equivalent staff (3.2 hours/week), with varying levels of education, dedicated to supporting registration and results submission.5 In addition to limited support, some sponsors have described challenges in providing structured information in a system with unfamiliar format and terminology.12 NLM recognizes these challenges and has made system improvements over time; we continue to invest in evaluating and improving the system, including providing more just-in-time automated user support before submission. We also continue to conduct training workshops, add and improve resource materials (e.g., templates, checklists, and tutorials), and provide one-on-one assistance when needed.

Effect of Results Database on Evidence Base

The effect of the ClinicalTrials.gov registry was reviewed in a previous publication,22 and sample evidence for the effect of the results database is provided in Table 3. Additionally, we conducted two analyses to evaluate the relationship between the results database and the published literature (see Supplementary Appendix).

Table 3.

Potential Benefits and Uses of ClinicalTrials.gov Results Database Entries with Examples of Evidence of Benefit

| Contribution to Clinical Trial Enterprise | Sample Evidence |
|---|---|
| Evaluate consistency of reporting | |
| Augment evidence base with results for unpublished trials | |
| Provide more complete results for published trials | |
| Augment evidence base to mitigate publication bias | Results entries available only in ClinicalTrials.gov sometimes provide evidence relevant to reviews, although no study has shown that such entries affect the conclusions of systematic reviews32,33 |
| Monitor other effects of results reporting requirements | Implementation of FDAAA has been associated with the following: |
| Other uses | Compared discontinuation rates and reasons across trials for pain conditions using results entries36 |

Relationship to Published Literature

To investigate the broad impact of ClinicalTrials.gov on the public availability of trial results, we compared the timing of initial results availability in the results database with that of corresponding journal publications (when available). On March 1, 2018, we identified 1,902 registered trials with required or optional results first posted on ClinicalTrials.gov between April 1, 2017 and June 30, 2017. We then drew a 20% random sample of 380 records with results, manually identified corresponding publications using previously described methods,37 and compared the date that results were first posted on ClinicalTrials.gov with the publication date. Results posting and publication dates within one month of each other were categorized as “simultaneous,” while others were designated as published “before” or “after” posting. Relative to the time of posting on ClinicalTrials.gov, 31% (117/380) of trials had a results publication before posting, 2% (7/380) were published simultaneously, 9% (36/380) were published after posting, and 58% (220/380) did not have any publication by the end of the follow-up period on July 15, 2018. Twenty-four months after the trial primary completion date, 41% (156/380) of trials had posted results available on ClinicalTrials.gov, and 27% (101/380) had publications (see Table S2 in the Supplementary Appendix). These findings are consistent with prior analyses showing that substantial numbers of trials have no publications 2–4 years after completion,37 with ClinicalTrials.gov providing the only public representation of results for many trials.4,28
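The timing categories above can be expressed as a small classifier. This Python sketch approximates “within one month” as 30 days on either side of the posting date, which is an assumption, and tallies the categories with `collections.Counter`; the sample dates are invented for illustration.

```python
from collections import Counter
from datetime import date
from typing import Optional

WINDOW_DAYS = 30  # assumption: "within one month" approximated as 30 days


def classify_timing(posted: date, published: Optional[date]) -> str:
    """Publication timing relative to first results posting on ClinicalTrials.gov."""
    if published is None:
        return "no publication"
    delta = (published - posted).days
    if abs(delta) <= WINDOW_DAYS:
        return "simultaneous"
    return "before" if delta < 0 else "after"


# Illustrative (posted, published) pairs, not data from the analysis.
sample = [
    (date(2017, 5, 1), date(2016, 11, 3)),
    (date(2017, 5, 1), date(2017, 5, 20)),
    (date(2017, 6, 2), date(2018, 1, 15)),
    (date(2017, 6, 2), None),
]
print(Counter(classify_timing(posted, pub) for posted, pub in sample))
```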

Completeness of Results Reporting

Researchers have previously shown inadequacies in the reporting of clinical trial information in ClinicalTrials.gov and corresponding publications, including for all-cause mortality, which is critical and unambiguous information.23,38 To improve reporting in ClinicalTrials.gov, an all-cause mortality table is now required for trials completed on or after January 18, 2017. Of the 160 trials in our sample with a results publication, we identified 47 that included the all-cause mortality table in ClinicalTrials.gov. Of these, 26 had zero deaths reported in ClinicalTrials.gov and 21 had at least one death reported, for a total of 995 reported deaths; the associated publications reported 964 deaths (see Table S3 in the Supplementary Appendix). For trials with zero deaths in ClinicalTrials.gov, 3.8% (1/26) of publications stated that there were no deaths and 96.2% (25/26) did not specifically mention deaths. For trials with at least one death in ClinicalTrials.gov, 61.9% (13/21) of publications were concordant, 14.3% (3/21) reported fewer deaths, 9.5% (2/21) reported the same total number of deaths but described their distribution across arms discordantly, and 14.3% (3/21) were ambiguous about the total number of deaths. No publication reported more deaths than the corresponding ClinicalTrials.gov record.
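The comparison of deaths between the two sources can be sketched as a function over per-arm counts. This Python version is a hypothetical encoding: it assumes arms appear in the same order in both sources and represents a publication that does not state, or ambiguously states, the number of deaths as `None`. Unlike the analysis above, it does not distinguish “did not mention deaths” from “ambiguous.”

```python
from typing import Optional, Sequence


def compare_deaths(ctgov_by_arm: Sequence[int],
                   pub_by_arm: Optional[Sequence[int]]) -> str:
    """Compare all-cause deaths per arm between a ClinicalTrials.gov record
    and its publication.

    Assumptions (hypothetical encoding): arms are listed in the same order in
    both sources, and pub_by_arm is None when the publication does not state
    a death count or states it ambiguously.
    """
    if pub_by_arm is None:
        return "not stated or ambiguous"
    ct_total, pub_total = sum(ctgov_by_arm), sum(pub_by_arm)
    if pub_total < ct_total:
        return "fewer in publication"
    if pub_total > ct_total:
        return "more in publication"
    if list(ctgov_by_arm) != list(pub_by_arm):
        return "same total, discordant arms"
    return "concordant"


print(compare_deaths([4, 6], [4, 3]))  # fewer in publication
```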

Although discrepancies between two or more sources generally raise questions about which is accurate, it is unlikely that sponsors or investigators would report more deaths than actually occurred, especially because the focus on “all-cause mortality” should remove any subjectivity. While not specifically evaluated in this analysis, differences in the timing of disclosure of trial results may explain some discrepancies. For example, a publication appearing before trial completion would include only deaths occurring up to that time, whereas the ClinicalTrials.gov results entry would include all additional deaths observed until trial completion, thereby serving as a key source of final results for such trials.

Discussion

We previously described the mandates to report results to ClinicalTrials.gov as an experiment in addressing non-publication and incomplete reporting of clinical trial results.4 A decade after launch, the results database is the only publicly accessible source of results information for thousands of trials, supports complete reporting, and serves as a tool for the timely dissemination of trial results that complements existing publications. ClinicalTrials.gov study records provide an informational scaffold through which information about a trial can be discovered, including individual participant data (IPD) sharing statements and, in some cases, links to where IPD are deposited.39,40 This scaffolding function is facilitated when documents about a clinical trial (e.g., publications, IPD repositories, press releases, and news articles) cite the ClinicalTrials.gov unique identifier (NCT number) assigned to each registered study. The recent addition of protocol documents, statistical analysis plans, and informed consent forms further informs users about a study’s design, and we encourage their use in meta-research and quality improvement efforts.41

Efforts to improve the quality of reporting need to consider the full study life cycle. For example, both trial registration and summary results reporting had been premised on the assumption that the required information would flow directly from the trial protocol, the statistical analysis plan, and the data analysis itself. From our experience operating ClinicalTrials.gov, however, the degree to which the necessary information is specified or available varies considerably. We therefore support recent efforts to strengthen this early stage of the clinical research life cycle with structured, electronic protocol development tools42–44 and with standardized, well-specified outcome measures45 that are consistent with scientific principles and harmonized with ClinicalTrials.gov reporting. For such broad efforts and more targeted quality efforts to take root, leadership in the clinical research community is needed to champion their value and provide resources and incentives. In parallel, as the database operators, we continue to evaluate users’ needs and to ensure that reporting requirements are known and understood by those involved throughout the clinical research life cycle, with the aim of improving the submission process and reporting quality.

We also believe that the full value of the trial reporting system will emerge when various parties recognize and leverage the significant effort invested in this curated, structured system for summary results reporting. For example, providing appropriate academic credit for results posting on ClinicalTrials.gov (as a complement to credit for publications) would incentivize more timely and careful entries by investigators. Additionally, results database tables can be reused in manuscripts and during editorial and peer review to ensure consistency across sources. Publications can also refer to the full set of results in ClinicalTrials.gov while focusing on a subset of interest (e.g.,30,31). Just as the results database supports systematic reviews, we see opportunities for those who oversee research, including funders, ethics committees, and sponsoring organizations, to conduct landscape analyses before approving the initiation of new clinical trials and to monitor a field of research over time. To support these activities, we aim to further optimize search strategies and to enhance the viewing and visualization of search results. For instance, NLM recently updated the ClinicalTrials.gov application programming interface to support better-targeted queries and more expansive content availability for third parties, and also changed the search features of the main website.
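As an illustration of the kind of targeted query that supports landscape analyses, the following Python sketch only builds a search URL for a condition; it makes no network call. It assumes the version 2 REST API at `https://clinicaltrials.gov/api/v2/studies`, which postdates this paper, and the parameter names (`query.cond`, `pageSize`, `format`) should be checked against the official API documentation before use. `landscape_query_url` is a hypothetical helper.

```python
from urllib.parse import urlencode

# Assumption: the ClinicalTrials.gov REST API version 2, which postdates this
# paper; verify the endpoint and parameter names against the official docs.
BASE_URL = "https://clinicaltrials.gov/api/v2/studies"


def landscape_query_url(condition: str, page_size: int = 100) -> str:
    """Build a study-search URL for one condition (no network call is made)."""
    params = {
        "query.cond": condition,  # condition/disease search field
        "pageSize": page_size,    # number of studies per page
        "format": "json",
    }
    return f"{BASE_URL}?{urlencode(params)}"


print(landscape_query_url("severe asthma", page_size=50))
# https://clinicaltrials.gov/api/v2/studies?query.cond=severe+asthma&pageSize=50&format=json
```

Keeping URL construction separate from fetching makes it easy to log and reproduce the exact queries behind a landscape analysis.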

While the results database has evolved considerably in the past decade, efforts to strengthen the culture and practice of systematic reporting must continue. We previously outlined steps that various stakeholders could take to enhance the trial reporting system.22 These actions can be grouped into two broad themes: (1) facilitating high-quality reporting while reducing burden and (2) modifying incentives to encourage reporting and embracing its value as part of the scientific process. As such, we endeavor to support researchers and institutions in maximizing the value of their efforts and those of the research participants, as well as the overall value of the ClinicalTrials.gov results database to the scientific enterprise.


Funding:

All authors were supported by the Intramural Research Program of the National Library of Medicine, National Institutes of Health.

Footnotes

Disclaimer: The views expressed in this article are those of the authors and do not necessarily reflect the positions of the National Institutes of Health.

References

1. Food and Drug Administration Amendments Act of 2007. Public Law 110–85. September 27, 2007. (http://www.gpo.gov/fdsys/pkg/PLAW-110publ85/pdf/PLAW-110publ85.pdf).

2. Final rule: clinical trials registration and results information submission. Fed Regist 2016;81:64981–65157. (https://www.federalregister.gov/documents/2016/09/21/2016-22129/clinical-trials-registration-and-results-information-submission).

3. Zarin DA, Tse T, Williams RJ, Carr S. Trial Reporting in ClinicalTrials.gov - The Final Rule. N Engl J Med 2016;375:1998–2004.

4. Zarin DA, Tse T, Williams RJ, Califf RM, Ide NC. The ClinicalTrials.gov results database - update and key issues. N Engl J Med 2011;364:852–60.

5. Mayo-Wilson E, Heyward J, Keyes A, et al. Clinical trial registration and reporting: a survey of academic organizations in the United States. BMC Med 2018;16:60.

6. National Institutes of Health, Department of Health and Human Services. NIH policy on the dissemination of NIH-funded clinical trial information. Fed Regist 2016;81:64922–64928. September 21, 2016. (https://www.federalregister.gov/documents/2016/09/21/2016-22379/nih-policy-on-the-dissemination-of-nih-funded-clinical-trial-information).

7. Zarin DA, Tse T, Ross JS. Trial-results reporting and academic medical centers. N Engl J Med 2015;372:2371–2.

8. Regulation (EU) No 536/2014 of the European Parliament and of the Council of 16 April 2014 on clinical trials on medicinal products for human use, and repealing Directive 2001/20/EC. Official Journal of the European Union. May 27, 2014;L 158:1–76. (https://ec.europa.eu/health//sites/health/files/files/eudralex/vol-1/reg_2014_536/reg_2014_536_en.pdf).

9. Amendment: NIH Policy and Guidelines on the Inclusion of Women and Minorities as Subjects in Clinical Research. NIH Guide. November 28, 2017. NOT-OD-18-014. (https://grants.nih.gov/grants/guide/notice-files/NOT-OD-18-014.html).

10. 21st Century Cures Act. Public Law 114–255. December 13, 2016. Sec. 2053. (https://www.govinfo.gov/content/pkg/PLAW-114publ255/pdf/PLAW-114publ255.pdf).

11. International Committee of Medical Journal Editors. Clinical Trial Registration. In: Recommendations for the Conduct, Reporting, Editing, and Publication of Scholarly Work in Medical Journals. December 2018. (http://icmje.org/recommendations/browse/publishing-and-editorial-issues/clinical-trial-registration.html#one).

12. Huang GD, Altemose JK, O’Leary TJ. Public access to clinical trials: Lessons from an organizational implementation of policy. Contemp Clin Trials 2017;57:87–9.

13. Patient-Centered Outcomes Research Institute. PCORI’s Process for Peer Review of Primary Research and Public Release of Research Findings. Available at http://www.pcori.org/sites/default/files/PCORI-Peer-Review-and-Release-of-Findings-Process.pdf.

14. ClinicalTrials.gov Review Criteria and Other Support Materials. Available at https://ClinicalTrials.gov/ct2/manage-recs/resources#ReviewCriteria.

15. Schulz KF, Altman DG, Moher D, CONSORT Group. CONSORT 2010 statement: updated guidelines for reporting parallel group randomised trials. BMJ 2010;340:c332.

16. ClinicalTrials.gov Data Element Definitions. Available at https://ClinicalTrials.gov/ct2/manage-recs/resources#DataElement.

17. Dobbins HD, Cox C, Tse T, Williams RJ, Zarin DA. Characterizing Major Issues in ClinicalTrials.gov Results Submissions. Presented at: Eighth International Congress on Peer Review and Scientific Publication; September 2017; Chicago, IL. Abstract available at https://peerreviewcongress.org/prc17-0383.

18. Prayle AP, Hurley MN, Smyth AR. Compliance with mandatory reporting of clinical trial results on ClinicalTrials.gov: cross sectional study. BMJ 2012;344:d7373.

19. FDAAA TrialsTracker (http://fdaaa.trialstracker.net/).

20. Piller C, Bronshtein T. Faced with public pressure, research institutions step up reporting of clinical trial results. STAT. January 9, 2018. Available at https://www.statnews.com/2018/01/09/clinical-trials-reporting-nih/.

21. Piller C. Failure to report: A STAT investigation of clinical trials reporting. STAT. December 13, 2015. Available at https://www.statnews.com/2015/12/13/clinical-trials-investigation/.

22. Zarin DA, Tse T, Williams RJ, Rajakannan T. Update on Trial Registration 11 Years after the ICMJE Policy Was Established. N Engl J Med 2017;376:383–91.

23. Hartung DM, Zarin DA, Guise JM, McDonagh M, Paynter R, Helfand M. Reporting discrepancies between the ClinicalTrials.gov results database and peer-reviewed publications. Ann Intern Med 2014;160:477–83.

24. Becker JE, Krumholz HM, Ben-Josef G, Ross JS. Reporting of results in ClinicalTrials.gov and high-impact journals. JAMA 2014;311:1063–5.

25. Becker JE, Ross JS. Reporting discrepancies between the ClinicalTrials.gov results database and peer-reviewed publications. Ann Intern Med 2014;161:760.

26. Schwartz LM, Woloshin S, Zheng E, Tse T, Zarin DA. ClinicalTrials.gov and Drugs@FDA: A Comparison of Results Reporting for New Drug Approval Trials. Ann Intern Med 2016.

27. Williams RJ, Tse T, DiPiazza K, Zarin DA. Terminated Trials in the ClinicalTrials.gov Results Database: Evaluation of Availability of Primary Outcome Data and Reasons for Termination. PLoS One 2015;10:e0127242.

28. Fain KM, Rajakannan T, Tse T, Williams RJ, Zarin DA. Results Reporting for Trials With the Same Sponsor, Drug, and Condition in ClinicalTrials.gov and Peer-Reviewed Publications. JAMA Intern Med 2018;178:990–2.

29. Riveros C, Dechartres A, Perrodeau E, Haneef R, Boutron I, Ravaud P. Timing and completeness of trial results posted at ClinicalTrials.gov and published in journals. PLoS Med 2013;10:e1001566.

30. Wedzicha JA, Banerji D, Chapman KR, et al. Indacaterol-Glycopyrronium versus Salmeterol-Fluticasone for COPD. N Engl J Med 2016;374:2222–34.

31. Cahill KN, Katz HR, Cui J, et al. KIT Inhibition by Imatinib in Patients with Severe Refractory Asthma. N Engl J Med 2017;376:1911–20.

32. Adam GP, Springs S, Trikalinos T, et al. Does information from ClinicalTrials.gov increase transparency and reduce bias? Results from a five-report case series. Syst Rev 2018;7:59.

33. Baudard M, Yavchitz A, Ravaud P, Perrodeau E, Boutron I. Impact of searching clinical trial registries in systematic reviews of pharmaceutical treatments: methodological systematic review and reanalysis of meta-analyses. BMJ 2017;356:j448.

34. Phillips AT, Desai NR, Krumholz HM, Zou CX, Miller JE, Ross JS. Association of the FDA Amendment Act with trial registration, publication, and outcome reporting. Trials 2017;18:333.

35. Zou CX, Becker JE, Phillips AT, et al. Registration, results reporting, and publication bias of clinical trials supporting FDA approval of neuropsychiatric drugs before and after FDAAA: a retrospective cohort study. Trials 2018;19:581.

36. Cepeda MS, Lobanov V, Berlin JA. Use of ClinicalTrials.gov to estimate condition-specific nocebo effects and other factors affecting outcomes of analgesic trials. J Pain 2013;14:405–11.

37. Ross JS, Tse T, Zarin DA, Xu H, Zhou L, Krumholz HM. Publication of NIH funded trials registered in ClinicalTrials.gov: cross sectional analysis. BMJ 2012;344:d7292.

38. Earley A, Lau J, Uhlig K. Haphazard reporting of deaths in clinical trials: a review of cases of ClinicalTrials.gov records and matched publications - a cross-sectional study. BMJ Open 2013;3.

39. Zarin DA, Tse T. Sharing Individual Participant Data (IPD) within the Context of the Trial Reporting System (TRS). PLoS Med 2016;13:e1001946.

40. Taichman DB, Sahni P, Pinborg A, et al. Data Sharing Statements for Clinical Trials - A Requirement of the International Committee of Medical Journal Editors. N Engl J Med 2017;376:2277–9.

41. Lynch HF, Largent EA, Zarin DA. Reaping the Bounty of Publicly Available Clinical Trial Consent Forms. IRB 2017;39:10–5.

42. Chan AW, Tetzlaff JM, Altman DG, et al. SPIRIT 2013 statement: defining standard protocol items for clinical trials. Ann Intern Med 2013;158:200–7.

43. The SEPTRE (SPIRIT Electronic Protocol Tool & Resource) home page (http://www.spirit-statement.org/implementation-tools/).

44. The National Institutes of Health e-Protocol Writing Tool home page (https://e-protocol.od.nih.gov/).

45. NIH Common Data Element (CDE) Resource Portal. Available at https://www.nlm.nih.gov/cde/index.html.
