Abstract

Recent advances in photoacoustic (PA) imaging have enabled detailed images of microvascular structure and quantitative measurement of blood oxygenation or perfusion. Standard reconstruction methods for PA imaging are based on solving an inverse problem using appropriate signal and system models. For handheld scanners, however, the ill-posed conditions of limited detection view and bandwidth yield low image contrast and severe structure loss in most instances. In this paper, we propose a practical reconstruction method based on a deep convolutional neural network (CNN) to overcome those problems. It is designed for real-time clinical applications and trained by large-scale synthetic data mimicking typical microvessel networks. Experimental results using synthetic and real datasets confirm that the deep-learning approach provides superior reconstructions compared to conventional methods.

Keywords: Photoacoustic imaging, deep learning, reconstruction, convolutional neural network

I. Introduction

Real-time integrated photoacoustic and ultrasound (PAUS) imaging is a promising approach to bring the molecular sensitivity of optical contrast mechanisms into clinical ultrasound (US) systems. Laser pulses transmitted into tissue induce light absorption from endogenous chromophores or exogenous contrast agents, which in turn launch acoustic waves according to the photoacoustic (PA) effect that can be used for imaging. We have recently developed a customized system for simultaneous PA and US imaging using interleaved techniques at fast scan rates [1]. One of the potential clinical applications is real-time, quantitative monitoring of blood oxygenation in the microvasculature [2], [3]. It requires not only multiple measurements at different optical wavelengths, but also high image quality to preserve microvascular topology.

Similar to US beamforming, PA reconstruction widely uses a traditional delay-and-sum (DAS) algorithm [4] for simplicity. However, the limited view and relatively narrow bandwidth of clinical US arrays greatly degrade image quality due to the ultra-broad bandwidth nature of PA signals [5]. This ill-posed problem causes structure loss, low contrast, and diverse artifacts making image interpretation difficult.

To address these challenges, many groups have adapted reconstruction techniques from US or radar imaging to PA imaging. In particular, adaptive approaches such as the Minimum Variance (MV) method were developed to reduce off-axis signal and sidelobe artifacts by assigning apodization weights based on data statistics [6], [7]. Reconstruction using Delay Multiply and Sum (DMAS) methods can achieve higher image contrast by enhancing signal coherence nonlinearly [8], [9]. Also, iterative techniques for the inverse reconstruction problem have been consistently developed for PA imaging using signal sparsity and low-rankness [10], [11]. All these methods may improve image quality by adopting sophisticated models based on system physics, data statistics, and underlying object properties. However, the main drawbacks are high computational complexity and the requirement of carefully selecting a handful of parameters.

Currently, a new category of reconstruction methods has been inspired by the field of machine learning (ML). Due to the great success of deep convolutional neural networks (CNN) in computer vision, reconstruction using supervised learning is an emerging research area in medical imaging [12]. The network extracts best features via learning weights/filters to mitigate ill-posedness in inverse problems. The most popular ML framework uses learning in the image-domain, where training inputs are corrupted images processed by a standard reconstruction method under ill-posed conditions [13]-[17]. Using this approach, the network avoids trying to capture detailed reconstruction operations and concentrates on filtering artifacts and noise. However, since the details of actual detector data are lost after image reconstruction, ML applied to reconstructed images often cannot recover weak signals and fine structures can be lost.

Some frameworks are based on iterative schemes to train regularizations in Compressed Sensing (CS), but their extensive computations restrict real-time clinical applications [18], [19]. Zhu et al. proposed a framework starting with acquired data without prior knowledge of physics, but a fully connected layer requires a large number of weighting parameters for large data sets [20]. Allman et al. employed PA raw data, but the application was limited to the classification of point-like targets from artifacts [21].

Here we explore practical PA image reconstruction based on a deep-learning technique suitable for real-time PAUS imaging. We first examine the link between model-based methods and basic neural network layers to help design and interpret the learning structure. As discussed below, this study led us to modify 2-D raw data (with time and detector dimensions) into a 3-D array (with two spatial dimensions and a channel dimension), where a channel packet corresponds to the propagation delay profile for one spatial point, as an input to the neural network. The delay operation simplifies the learning process and the extension to channel dimension retains more information and increases learning accuracy.

Our subsequent architecture is based on U-net [22], where dyadic scale decomposition can access data in multi-resolution support. The structure can extract comprehensive features from the transformed 3-D array, replacing hand-crafted functions and generalizing standard filtering techniques. For training, we restrict the scope of absorber types to microvessels and create synthetic datasets using simulation. Operators transforming ground-truth to radio frequency (RF) array data are based on our current fast-swept PAUS system [1], i.e., they take into account the spectral bandwidth and geometry of a real imaging probe. However, the approach is not limited to one imaging system and can be applied to any PA system with known geometry and characteristics.

To demonstrate the performance of the CNN-based method, we first compare it to standard methods using synthetic data. Then, we perform phantom experiments using the fast-swept PAUS system, and finally image a human finger in vivo.

II. Signal and System Model

A. PA Forward Operation

The spatiotemporal pressure change p(t, r) from short laser pulse excitation is captured in the photoacoustic equation [23],

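$$\left(\nabla^2 - \frac{1}{v_s^2}\frac{\partial^2}{\partial t^2}\right) p(t,\mathbf{r}) = -\frac{\beta}{C_p}\frac{\partial H(t,\mathbf{r})}{\partial t} \tag{1}$$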

where vs is the sound speed, β is the thermal coefficient of volume expansion, and Cp is the specific heat capacity at constant pressure. H denotes the heating function given as H = μaΦ where μa is the optical absorption coefficient and Φ is the fluence rate in a scattering medium. The heating function can be approximately described as H(t, r) = s(r)δ(t), where s(r) denotes the spatial absorption function. The forward solution using the Green’s function can be expressed as [24]

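$$p(t,\mathbf{r}') = \frac{\Gamma}{4\pi v_s^2}\,\frac{\partial}{\partial t}\int d\mathbf{r}\,\frac{s(\mathbf{r})}{|\mathbf{r}'-\mathbf{r}|}\,\delta\!\left(t-\frac{|\mathbf{r}'-\mathbf{r}|}{v_s}\right) \tag{2}$$

where $\Gamma = \beta v_s^2 / C_p$ is defined as the Grüneisen parameter and r′ is the detection position. Assume a transducer contains J detection elements. Then, measurements recorded by the jth element can be expressed as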

| (3) |

where h(t) and denote the system impulse response and system noise, respectively, and * represents the temporal convolution operator. denotes the function containing the directivity pattern in the integration in Eq. 2.

B. Limitations of Handheld Linear Array System

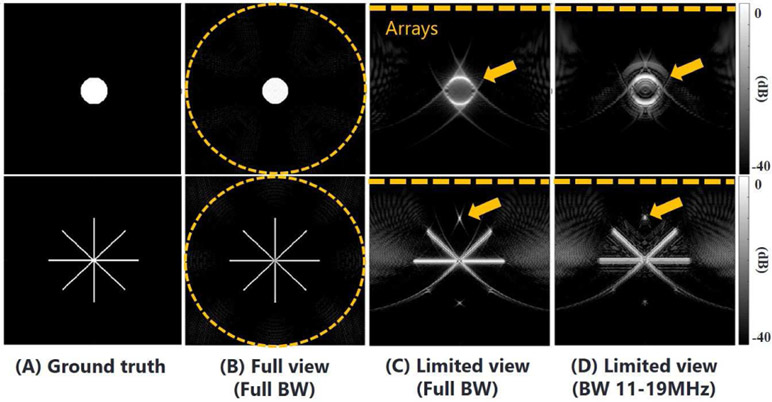

Reconstruction methods have been developed based on the measurement geometry. Filtered back-projection (FBP) is derived from the inverse of the PA forward operation in the spatiotemporal domain or k-space (frequency) domain [25]. Exact FBP formulas were demonstrated for a 3-D absorber distribution where the detection geometry is spherical, planar or cylindrical [26]. Imaging for a 2D spatial plane (slice) was adopted in standard tomographic scans assuming the detector (transducer) is focused in the plane [27], [28]. A circular transducer for a 2D source distribution produces an accurate reconstruction if the number of detector elements provides enough spatial sampling density, as shown in Fig. 1 (B) [15], [29]-[31], and their bandwidth is not limited. In contrast, a linear sensor geometry (r′ = x) (as for a conventional US transducer) greatly limits the view, and also the bandwidth. Both limitations degrade image quality, as shown in Fig. 1 (C) and (D).

Fig. 1.

Simulation results using standard filtered back-projection reconstruction. (A) presents two example object shapes. (B-D) show reconstructions when the measurement conditions are (B) circular array with full bandwidth, (C) linear array with full bandwidth, and (D) linear array with limited bandwidth (11-19 MHz). Arrows indicate structural losses and artifacts. All images are visualized on a log-scale colormap (40 dB range).

This ill-posed problem for a finite bandwidth linear array is more understandable in the frequency domain. For simplicity, assume that the directivity function is constant and the noise power is zero. Then, the 2-D Fourier transform of Eq. 3 for this geometry can be represented as [32]

| (4) |

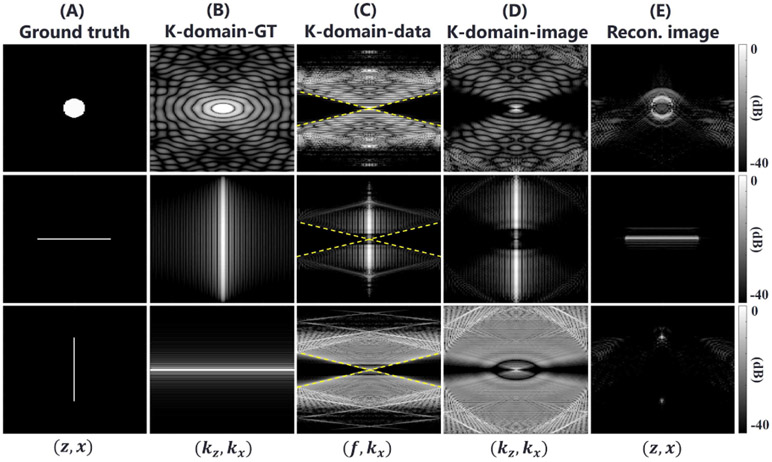

where kz is defined by the mapping $f = c\sqrt{k_x^2 + k_z^2}$. As illustrated in Fig. 2, k-domain-data Y(f, kx) (Fig. 2 (C)) are highly associated with the k-domain-image S(kz, kx) (Fig. 2 (D)) in spite of the nonlinear mapping. In addition to losses from evanescent waves, the narrow frequency bandwidth weakens low-frequency components of the object. As shown in Fig. 2 (E) (first row), reconstruction of a continuous absorbing medium is problematic for the linear array geometry.

Fig. 2.

Reconstruction simulations in k-space using one circular object and two simple linear objects rotated by 90 degrees. All images visualize absolute pixel values on a log-scale colormap (40 dB range). The maximum value in each image is represented as pure white. (A) Ground-truth images. (B) K-domain-GT obtained by 2-D Fourier transforming the ground truth. (C) K-domain-data obtained by 2-D Fourier transforming raw data. Raw x-t data were obtained with the forward model. The dotted lines indicate f = ckx. The empty region (f < ckx) corresponds to evanescent waves. (D) K-domain-image obtained by nonlinear mapping of K-domain-data. (E) Reconstructed image obtained by 2-D inverse Fourier transforming K-domain-image.

A special case of a vertical line source (bottom line in Fig. 2) exaggerates the problem. Its k-space spectrum is almost totally filtered, and only low amplitude spectral sidelobes invisible in the ground truth image survive. Thus, only top and bottom source points are visualized in the reconstruction.

In this paper, the target objects of interest are microvessels, where the shape can be represented as a sum of straight and curvy lines. Since the signal components of the typical vascular structure are widely distributed in the k-domain, and a sufficient fraction are maintained even after limited view/bandwidth induced filtration, there is the possibility to reconstruct the entire object shape. However, note that it will be very challenging to recover vertical portions, as shown in Fig. 1 and Fig. 2.
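The k-space argument in this section can be checked numerically. The sketch below is illustrative only: it assumes the mapping f = c·sqrt(kx² + kz²) with non-angular spatial frequencies (cycles per metre) and a sound speed of 1540 m/s, neither of which is stated explicitly in the text, and the function names are hypothetical. It marks which (f, kx) samples are propagating and which fall in the evanescent region f < c·kx.

```python
import numpy as np

def kz_from_f_kx(f, kx, c=1540.0):
    """Axial spatial frequency from f = c*sqrt(kx^2 + kz^2).

    f in Hz, kx in cycles/m, c in m/s (1540 m/s is an assumed value).
    Returns NaN where f < c*|kx|, i.e. in the evanescent region.
    """
    arg = (np.asarray(f, dtype=float) / c) ** 2 - np.asarray(kx, dtype=float) ** 2
    return np.where(arg >= 0.0, np.sqrt(np.maximum(arg, 0.0)), np.nan)

def propagating_fraction(f_lo, f_hi, pitch, n_elem, c=1540.0, n_f=64):
    """Fraction of (f, kx) samples that map to a real kz for a linear array."""
    f = np.linspace(f_lo, f_hi, n_f)
    kx = np.fft.fftfreq(n_elem, d=pitch)      # lateral frequencies sampled by the array
    F, KX = np.meshgrid(f, kx, indexing="ij")
    return float(np.mean(~np.isnan(kz_from_f_kx(F, KX, c))))
```

For example, `propagating_fraction(11e6, 19e6, 1e-4, 128)` evaluates the 11-19 MHz band of Fig. 1 (D) for a 128-element array with 0.1 mm pitch, and can be compared against a lower band such as 1-5 MHz.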

III. Conventional Methods in PA Image Reconstruction

A. Delay and Sum (DAS)

Most commercial US systems use DAS beamforming [4] for real-time image reconstruction. This procedure applies a delay due to the propagation distances between an observation point in the image and each transducer element prior to summation of signals across all array elements. Likewise, since the PA signal is based on one-way acoustic propagation, the DAS method can be applied as

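$$\hat{s}(\mathbf{r}) = \sum_{j=1}^{J} w(\mathbf{r},\mathbf{r}'_j)\; y\!\left(t=\frac{|\mathbf{r}-\mathbf{r}'_j|}{v_s},\ \mathbf{r}'_j\right) \tag{5}$$

where $\hat{s}(\mathbf{r})$ is the reconstructed image and w(r, r′j) denotes apodization weights. The framework of FBP is identical to DAS because DAS is associated with the adjoint of the forward operation (See Appendix I). Typically, standard DAS imaging applies a Hilbert transform after summation [4]. The expression can simply be represented by transforming data y to f as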

| (6) |

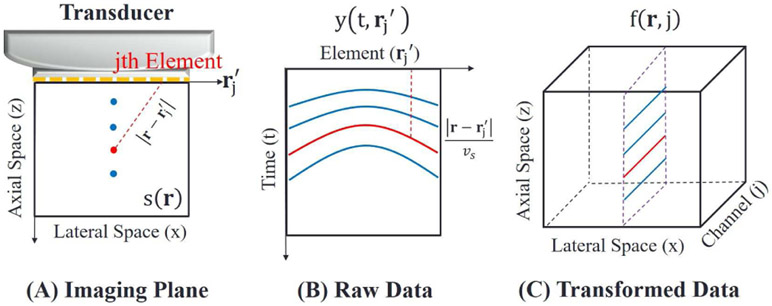

where . Fig. 3 illustrates the (delay) transformation provided that a detector is a linear-array transducer and the imaging plane is 2-D (r = (z, x)).

Fig. 3.

(A) Measurement geometry. A 2-D image plane with respect to a linear array transducer is defined as the z-x plane. (B) 2-D measurement data. The curved lines indicate propagation delay profiles of particular image points at different depths. (C) 3-D transformed data. Channel packets correspond to the delay profiles indicated by straight lines.
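The delay transformation of Fig. 3 and the DAS sum can be sketched as follows. This is a minimal illustration, not the system implementation: it assumes nearest-sample delays, uniform apodization, image columns aligned with the element positions, and a sound speed of 1540 m/s; the function names are hypothetical.

```python
import numpy as np

def delay_transform(y, fs, pitch, dz, nz, c=1540.0):
    """Map raw data y[t, j] to the delayed 3-D array f[z, x, j].

    f[iz, ix, j] is the sample of element j at the one-way propagation
    time from pixel (z, x) to that element (nearest-sample lookup).
    """
    n_t, n_elem = y.shape
    x = np.arange(n_elem) * pitch              # element and pixel lateral positions
    z = (np.arange(nz) + 1) * dz               # pixel depths, starting one step below the array
    # Distance from every pixel to every element, shape (nz, n_elem, n_elem).
    dist = np.sqrt(z[:, None, None] ** 2
                   + (x[None, :, None] - x[None, None, :]) ** 2)
    idx = np.rint(dist / c * fs).astype(int)   # delay expressed in samples
    valid = idx < n_t                          # delays beyond the record are zeroed
    idx = np.minimum(idx, n_t - 1)
    f = y[idx, np.arange(n_elem)[None, None, :]]
    return np.where(valid, f, 0.0)

def das(f, w=None):
    """Weighted sum over the channel axis (uniform weights by default)."""
    if w is None:
        w = np.ones(f.shape[-1])
    return f @ w
```

Because the index array depends only on geometry, it can be computed once and reused for every frame, which is the role a lookup table plays in a real-time setting.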

B. Minimum Variance (MV)

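MV is also based on Eq. 6 but the weighting is adaptive [6]. For a position ri, the vector form of Eq. 6 can be written as $\hat{s}(\mathbf{r}_i) = \mathbf{w}_i^T \mathbf{f}_i$, where T denotes transpose and vectors are in $\mathbb{R}^J$. The weights are determined by minimizing the variance of $\hat{s}(\mathbf{r}_i)$ as

$$\min_{\mathbf{w}_i}\ E\!\left[\left|\mathbf{w}_i^T \mathbf{f}_i\right|^2\right] = \min_{\mathbf{w}_i}\ \mathbf{w}_i^T \mathbf{R}_i \mathbf{w}_i \quad \text{subject to} \quad \mathbf{w}_i^T \mathbf{a} = 1 \tag{7}$$

where E[·] denotes the expectation operator and the constraint forces unity gain at the focal point. The solution of the optimization problem is given as

$$\mathbf{w}_i = \frac{\mathbf{R}_i^{-1}\mathbf{a}}{\mathbf{a}^T \mathbf{R}_i^{-1}\mathbf{a}} \tag{8}$$

where $\mathbf{R}_i = E\!\left[\mathbf{f}_i \mathbf{f}_i^T\right]$ and $\mathbf{a}$ is the steering vector, which is a vector of ones because the data are already delayed. The covariance matrix Ri can be estimated as

$$\hat{\mathbf{R}}_i = \frac{1}{(2N+1)(J-L+1)} \sum_{n=-N}^{N}\ \sum_{l=1}^{J-L+1} \bar{\mathbf{f}}_{i,n}^{\,l} \left(\bar{\mathbf{f}}_{i,n}^{\,l}\right)^T \tag{9}$$

where $\bar{\mathbf{f}}_{i,n}^{\,l} \in \mathbb{R}^L$ is the lth subarray for the position ri at axial offset n, L is the subarray length, and 2N + 1 is the number of averages over axial samples near the position ri. Details can be found in [6].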
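The MV steps above can be sketched for a single pixel as follows. This is a hedged illustration rather than the implementation of [6]: it assumes a steering vector of ones (the data are already delayed), adds a small diagonal loading term so the covariance estimate is invertible (the loading factor is an assumption, not a value from the text), and averages the weighted subarray outputs at the centre sample. The function name is hypothetical.

```python
import numpy as np

def mv_pixel(f_block, L, loading=1e-2):
    """Minimum-variance estimate for one pixel.

    f_block : (2N+1, J) delayed channel samples at the pixel and its
              axial neighbours; the centre row is the pixel itself.
    L       : subarray length.
    loading : diagonal loading factor (assumed, for numerical stability).
    Returns (weights, pixel value).
    """
    n_ax, J = f_block.shape
    n_sub = J - L + 1
    # Covariance estimate averaged over subarrays and axial samples.
    R = np.zeros((L, L))
    for row in f_block:
        for l in range(n_sub):
            sub = row[l:l + L]
            R += np.outer(sub, sub)
    R /= n_ax * n_sub
    R += (loading * np.trace(R) / L + 1e-12) * np.eye(L)
    # w = R^{-1} a / (a^T R^{-1} a) with a = vector of ones.
    a = np.ones(L)
    r_inv_a = np.linalg.solve(R, a)
    w = r_inv_a / (a @ r_inv_a)
    # Apply the weights to every subarray of the centre sample and average.
    centre = f_block[n_ax // 2]
    out = np.mean([w @ centre[l:l + L] for l in range(n_sub)])
    return w, float(out)
```

With the Table II settings this would be called with `L=32` and a five-row block. The nested loops make the per-pixel cost explicit, which is the computational burden noted for MV.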

C. Delay Multiply and Sum (DMAS)

The multi-channel array f(r, j) is the starting point of the DMAS algorithm, as illustrated in Fig. 4 (B). Before summation, signal samples over channels at each position ri are combinatorially coupled as

$$\hat{s}_{\mathrm{DMAS}}(\mathbf{r}_i) = \sum_{j=1}^{J-1}\ \sum_{k=j+1}^{J} \hat{f}_{jk}(\mathbf{r}_i) \tag{10}$$

where $\hat{f}_{jk}(\mathbf{r}_i) = \mathrm{sign}\!\left(f(\mathbf{r}_i, j)\, f(\mathbf{r}_i, k)\right)\sqrt{\left|f(\mathbf{r}_i, j)\, f(\mathbf{r}_i, k)\right|}$. This nonlinear computation acts as a spatial cross-correlation, enhancing coherent signals while suppressing off-axis interference. The operations, sign and square root, are normalization steps to conserve signal power. The signal has modulated components near zero-frequency and harmonic components due to the coupling operation [9]. Therefore, bandpass/highpass filtering is required in post-processing to suppress the components near zero-frequency.
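The pairwise coupling in DMAS can be sketched compactly. The example below assumes the usual signed-square-root form, sign(f_j f_k)·sqrt(|f_j f_k|) summed over all channel pairs j < k, and uses the identity that the pairwise sum equals ((Σg)² − Σg²)/2 for g_j = sign(f_j)·sqrt(|f_j|), which avoids the explicit double loop. The function name is hypothetical, and the bandpass/highpass post-filter mentioned above is not included.

```python
import numpy as np

def dmas(f):
    """DMAS coupling and summation along the last (channel) axis.

    f may be a single channel vector of length J or the full delayed
    array f[z, x, j]; the result drops the channel axis.
    """
    g = np.sign(f) * np.sqrt(np.abs(f))        # signed square root of each sample
    # sum_{j<k} g_j g_k = ((sum_j g_j)^2 - sum_j g_j^2) / 2
    return 0.5 * (np.sum(g, axis=-1) ** 2 - np.sum(g ** 2, axis=-1))
```

For a channel vector `[1, 4, 9]` the signed square roots are `[1, 2, 3]`, and the pairwise sum is 1·2 + 1·3 + 2·3 = 11.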

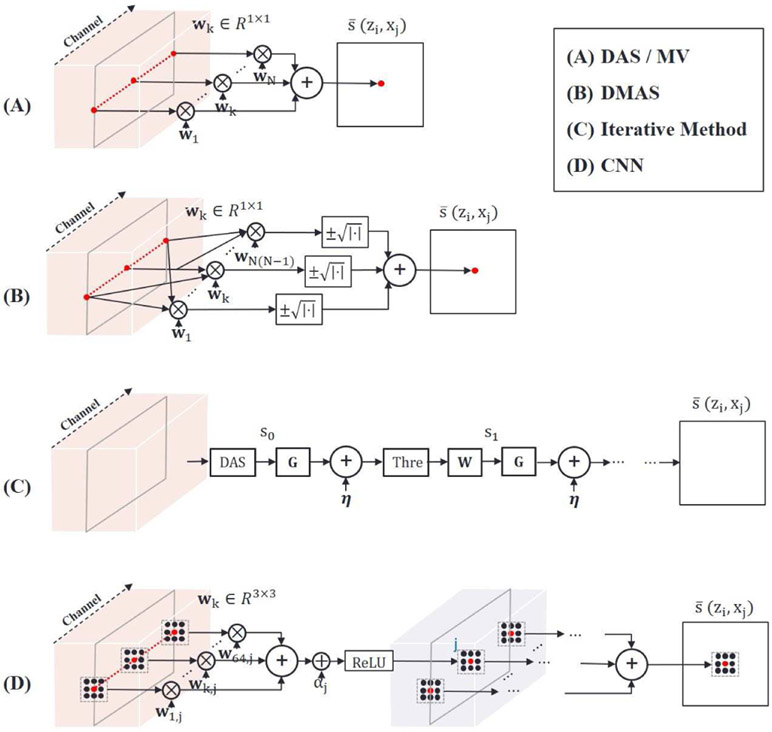

Fig. 4.

Schematic diagram illustrating reconstruction methods. 2-D measurement data are transformed into a 3-D array as shown in Fig. 3 followed by reconstruction. (A) DAS / MV methods. An image pixel is determined by weighting and summing channel samples at a corresponding pixel position. Weights vary with position. Unlike DAS, MV adaptively assigns weights depending on data statistics. (B) DMAS method. Channel samples are coupled and multiplied before summation. This additional nonlinear operation is required to prevent a dimensional problem. (C) Iterative method. This is based on the L1 minimization problem in compressed sensing (CS). The initial solution is ordinarily obtained by DAS. The solution is updated by matrix multiplications, matrix additions, and threshold operations. (D) Basic structure of CNN. It applies convolution with a 3 × 3 kernel to multi-channel inputs, and returns multi-channel outputs. The full network consists of multiple layers, where each layer contains the convolution operation, bias addition and Rectified Linear Unit (ReLU) operation to enhance expressive power.

D. Iterative Method with Compressed Sensing (CS)

Iterative methods attempt to solve the inverse problem by adding regularization to overcome the ill-posed condition [10], [11]. The matrix form of Eq. 3 can be expressed as y = Hs + n, where the matrix H is involved with the forward operation, directivity and system impulse response. The standard form of the inverse problem is given as

$$\hat{s} = \arg\min_{s}\ \frac{1}{2}\left\|y - Hs\right\|_2^2 + \lambda\left\|W^T s\right\|_1 \tag{11}$$

where W is the transform for sparsity, $a = W^T s$ is the corresponding coefficient, and λ is the regularization parameter. Since the non-linear l1 term does not allow a closed form solution, it is solved by iterative methods such as iterative shrinkage-thresholding algorithms (ISTA) and the alternating direction method of multipliers (ADMM) [33]-[35]. For example, ISTA solves the optimization problem as

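$$a^{(k+1)} = \Theta_{\lambda\tau}\!\left(a^{(k)} + \tau\, W^T H^T\!\left(y - HWa^{(k)}\right)\right) \tag{12}$$

where k is the iteration index, Θα is the soft-thresholding operator with value α and 1/τ is the Lipschitz constant. The solution is updated by repetitive operations including matrix multiplication, matrix addition and thresholding.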
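The ISTA update can be sketched in a few lines. This is a generic illustration under stated assumptions, not the configuration used in the comparisons: the cost is taken as ½‖y − HWa‖² + λ‖a‖₁ with an orthonormal W (identity by default), the step τ is set from the spectral norm of HW, and a fixed iteration count replaces the gradient-norm stopping rule. Function names are hypothetical.

```python
import numpy as np

def soft_threshold(x, alpha):
    """Soft-thresholding operator: shrink magnitudes by alpha, clip at zero."""
    return np.sign(x) * np.maximum(np.abs(x) - alpha, 0.0)

def ista(H, y, lam, n_iter=200, W=None):
    """Iterative shrinkage-thresholding for 0.5*||y - H W a||^2 + lam*||a||_1.

    H   : (m, n) forward matrix.
    W   : (n, n) orthonormal sparsifying transform (identity if None, an assumption).
    Returns the image estimate s = W a.
    """
    n = H.shape[1]
    if W is None:
        W = np.eye(n)
    A = H @ W
    tau = 1.0 / np.linalg.norm(A, 2) ** 2      # 1/tau is the Lipschitz constant of the gradient
    a = np.zeros(n)
    for _ in range(n_iter):
        # Gradient step on the data term, then shrinkage on the coefficients.
        a = soft_threshold(a + tau * A.T @ (y - A @ a), lam * tau)
    return W @ a
```

Each iteration costs two matrix-vector products with H, which is why iterative methods are difficult to run at real-time frame rates for large images.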

The main disadvantage of MV, DMAS and CS is the high computational complexity for real-time imaging even though modifications have been proposed to reduce the burden. The selection of statistical operators or feature bases is a crucial step in model-based schemes. If the selection does not agree with the inherent properties of PA data, imaging is inaccurate. As an alternative, deep-learning approaches can build optimal feature maps through training and provide a practical reconstruction framework due to fast computation. In addition, they are well-known for strong generalization over parameters such as SNR [36].

IV. Deep-learning reconstruction

Recently, researchers have begun to find connections between conventional model-based approaches and deep convolutional neural networks (CNN) for inverse problems [13], [33]. We have also explored these links to build an efficient CNN for PA imaging using limited view/bandwidth arrays so that the network takes full advantage of signal characteristics such as low-rank, coherence and sparsity during learning.

A. Preprocessing

It is not clear that a CNN can reconstruct absorption structures directly from PA data. Given the data dimension, learning would be extremely complex since the network architecture must encode the underlying PA forward operation. A popular approach to reduce this burden is preprocessing raw data with simple DAS reconstruction and using the resultant rough images as training input [14], [15]. The CNN can then focus on learning the characteristics of artifacts in input images. However, since these images can lose detailed information on object structure, the CNN output would not be perfect [20].

Our strategy is to use the transformed 3-D data f(ri, j) illustrated in Fig. 3 as the network input. As shown in the previous section, this operation is the first step for most reconstruction methods since it is based on the simple physics of wave propagation. The array represents delayed signals, where the delay is the propagation time from position ri to element j. Delayed data have several advantages: 1) detailed information embedded in raw data y(t, r) is not lost; and 2) it accelerates learning efficiency because channel samples at ri focus on waves coming from position ri.

This preprocessing is associated with low rank and sparsity in CS. The spatial domains combining axial and lateral dimensions {ri = (zi, xi)} naturally reduce the number of bases, either patch-based or non-local, required to capture the essential features of microvessels. In addition, data extension to the channel axis can increase coefficient sparsity. That is, this representation can potentially reduce the rank of the problem and help discard off-target signals that introduce clutter.

Note that MV and DMAS methods access the coherence using channel-sample correlation to indirectly enhance low-rank (high-coherence) signals while suppressing high-rank (low-coherence) artifacts and noise. The success of these methods suggests that preprocessing would help the CNN to find optimal filter bases (weights) for regression using a realistic number of examples in the training set.

B. Structure

1). Operation and notation:

$a = v(A) \in \mathbb{R}^{nm \times 1}$ denotes vectorization by stacking the columns of the matrix $A \in \mathbb{R}^{n \times m}$. Inversely, $A = v^{-1}(a)$ denotes the matrix formed from the vector. η(·) denotes the rectified linear unit function. $1_{n,m} \in \mathbb{R}^{n \times m}$ denotes the matrix with every entry equal to one.

2). Encoder-decoder:

A standard encoder structure in CNN can be represented as

| (13) |

where Fi ∈ Rn1×n2 is the ith channel of the input, Ψi,j ∈ Rd1×d2 are the learning weights for the ith channel of the input and jth channel of the output, ⊛ is the 2-D convolution operation, αj is the bias for the jth channel of the output, ΦT ∈ Rm1m2×n1n2 is the pooling matrix, and Cj ∈ Rm1×m2 is the jth channel of the encoding output. A corresponding decoder can be expressed as

| (14) |

where is the ith channel of the input, Ψi,j ∈ Rd1×d2 are the learning weights for the ith channel of the input and jth channel of the output, βj is the bias for the jth channel of the output, is the unpooling matrix, and Zj ∈ Rn1×n2 is the jth channel of the decoding output.

The encoder-decoder convolution layer is similar to the standard reconstruction methods shown in Fig. 4. The common structure is weighting (filtering) channel samples at a location ri or its neighborhood for an image pixel at ri, whereas the scope of data locations (called the effective size or receptive field) contributing to a pixel varies with method. The methods based on DAS can assign different weights for every pixel.

Although a CNN layer shares identical weights over space due to the convolution operation, the framework of multi-channel weights per layer and multi-layers increases the expressive power. The iterative method consists of matrix multiplications with no compacting support. While a CNN uses fixed filter size (usually 3 × 3), pooling operations enlarge the effective filter size in the middle layers.

Currently, deep learning approaches have been investigated to understand the mathematical framework needed to solve inverse problems. Yin et al. proposed the low-rank Hankel matrix approach using a combination of nonlocal basis and local basis for sparse representation of signals [37]. The framelet method has been successfully applied to image processing tasks since matrix decomposition reflects both local and nonlocal behavior of the signal. Ye et al. discovered that the encoder-decoder framework of CNN generalizes the framelet representation [38]. In particular, the neural network can decompose the Hankel matrix of 3-D input data and shrink its rank to achieve a rank-deficient ground-truth (See Appendix II).

3). Implementation Details:

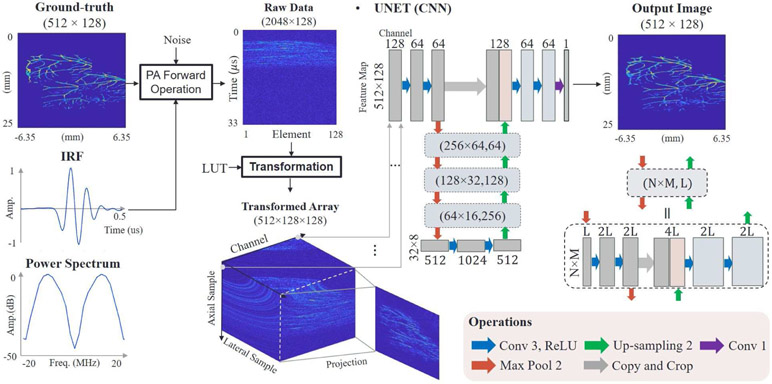

Our network is based on U-net. Fig. 5 presents the architecture. The left and right sides of the U-shape network correspond to successive encoders and decoders, respectively. Following a 3 × 3 convolutional layer and a ReLU layer, a batch normalization layer is used to improve learning speed and stability. The layers are repeated twice, either before 2 × 2 max-pooling or after 2 × 2 up-convolution (unpooling). The pooling and unpooling operations allow multi-scale decomposition, where the sizes of the feature maps are 512 × 128, 256 × 64, 128 × 32, 64 × 16 and 32 × 8. The total number of trainable parameters for all layers is 31,078,657. For fast preprocessing, we generated a sparse matrix (lookup table) mapping a 2-D raw data array $Y \in \mathbb{R}^{2048 \times 128}$ into a 3-D delayed array $F \in \mathbb{R}^{512 \times 128 \times 128}$.

Fig. 5.

Deep-learning architecture for PA image reconstruction. Raw data are converted into a 3-D array by a lookup table (LUT). The array is used as a multi-channel input to the network (first box). Each box represents a multi-channel feature map. The number of channels is denoted on the top or bottom of the box. Feature map sizes decrease and increase via max-pooling and upsampling, respectively. All convolutional layers consist of 3 × 3 kernels except the last layer. The network is trained by minimizing the mean squared error between output images and ground-truth images.

C. Network Training

Training was performed by minimizing a loss function as

$$\min_{\Upsilon}\ \frac{1}{K}\sum_{k=1}^{K}\left\| s^{(k)} - \Upsilon\!\left(F^{(k)}\right) \right\|_F^2 \tag{15}$$

where F(k) and s(k) denote the kth input and kth ground-truth, respectively, K denotes the number of training samples, ϒ denotes the trainable network structure, and ∥·∥F denotes the Frobenius norm. s(k) was normalized as $s^{(k)} / \mathrm{STD}(s^{(k)})$, where STD(·) denotes the standard deviation. We used stochastic gradient descent (SGD) as the optimization method to minimize the loss function. The learning rate for SGD was set to 0.005 and the batch size was 8. Among a total of 16,000 samples, 80% were used for training and the rest were used for validation during the iterative learning process. The network was trained for a total of 150 epochs without over-fitting or under-fitting. To track the loss convergence of validation samples, we defined a fractional error for the jth epoch as ϵj = ∣ej − ej−1∣/ej, where ej and ej−1 are the mean squared error (MSE) values at the jth and (j−1)th epoch, respectively. Fractional errors at the last 10 epochs were under 0.005. The MSE value at the final epoch for the validation samples was 0.23. Table I summarizes the parameters.
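The convergence check described above can be written out directly. The sketch below assumes a list of per-epoch validation MSE values; the function names and the way the check is packaged are illustrative, while the window of 10 epochs and the 0.005 threshold are the values quoted in the text.

```python
def fractional_errors(mse):
    """Fractional error eps_j = |e_j - e_{j-1}| / e_j for j = 1, 2, ..."""
    return [abs(mse[j] - mse[j - 1]) / mse[j] for j in range(1, len(mse))]

def has_converged(mse, window=10, tol=0.005):
    """True if the last `window` fractional errors are all below `tol`."""
    eps = fractional_errors(mse)
    return len(eps) >= window and all(e < tol for e in eps[-window:])
```

For instance, an MSE history of `[1.0, 0.5, 0.25]` gives fractional errors `[1.0, 1.0]`, so the check fails until the curve flattens.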

TABLE I.

Parameters for training network

| Category/Function | Parameter | Value/Range |
|---|---|---|
| Raw data | Temporal samples | 2048 |
| | Temporal sampling rate | 62.5 MHz |
| | Transducer aperture size | 12.8 mm |
| | Transducer element pitch | 0.1 mm |
| | Transducer element numbers | 128 |
| | Transducer center frequency | 15.63 MHz |
| | Ultrasonic wavelength | 0.1 mm |
| Training image | Image numbers | 16,000 |
| | Signal dynamic range | 20 dB |
| | Ratio (max signal/noise std) | 10-35 dB |
| | Vascular diameter | 0.05-0.3 mm |
| | Mean power | 1 |
| | Axial samples | 512 (25.6 mm) |
| | Lateral samples | 128 (12.7 mm) |
| Training | Batch size | 8 |
| | Epochs | 150 |
| | Learning rate | 0.005 |
| | Trainable parameter numbers | 31,078,657 |

D. Training Data

The supervised learning framework requires data at a large scale. However, it is mostly impractical to obtain clinical raw data accompanied by real ground-truth vascular maps. Therefore, we trained the network by creating synthetic data mimicking typical microvessel networks, as shown in Fig. 5.

The simulation transforming ground-truth to RF array data is based completely on our PAUS system. The impulse response function h(t) in Eq. 3 was measured by the system with a point source target. Fig. 5 shows the response function and its power spectrum. The directivity is modeled as

| (16) |

where l is the transducer element pitch, λ is the ultrasonic wavelength and θj is the incident angle of a wave propagating from position r = (z, x) to the jth transducer element r′j = x′j [39].

Reference vascular images were obtained from the DRIVE fundus image database [40]. The database contains color retina images captured by camera that can be used for vessel extraction. We used only binary images (manually extracted images), where white pixels denote the segmentation of blood vessels. These images were randomly partitioned, re-sized, rotated and combined with each other to augment the number of training samples. Next, every binary image was converted to a gray-scale image where the dynamic range of the vessel signal intensity is 20 dB. Lastly, every image was amplified with different values to obtain measurements covering a wide range of SNR. Table I summarizes all parameters.

V. Simulation Results

We tested the reconstruction methods using both simulation and experimental data. We compared CNN-based approaches with other common reconstruction methods including DAS, DMAS and/or CS. Here, we call a network using DAS results (without Hilbert transform) a single-channel input ‘UNET’ and a network using 3-D transformed arrays a multi-channel input ‘upgUNET’. As shown in Fig. 5, UNET learns in the image-domain (2D projection) while upgUNET learns in the data-domain (3D array). DAS employs a rectangular apodization function where the activated aperture size is determined by f-number (=0.5). For image display, it uses the Hilbert transform following summation. The iterative method exploits total-variation (TV) regularization and wavelet transforms for sparsity dictionaries. We used the general criteria that the iteration stops when the norm of the gradient is less than a threshold value. We checked that more iterations barely change image quality or metric values. We empirically selected all parameter values minimizing the error between ground-truth and reconstruction results. Table II summarizes all parameters for the reconstructions.

TABLE II.

Parameters for reconstruction methods

| Category | Parameter | Value/Type |
|---|---|---|
| DAS | f-number | 0.1 or 0.5 |
| MV | Element number (J) | 128 |
| | Subarray length (L) | 32 |
| | Axial average number (2N+1) | 5 |
| DMAS | Filter cutoff | 6 MHz |
| | Highpass filter type | 6th-order Butterworth |
| CS | TV regularization (λ1) | 0.02 |
| | Wavelet type (W) | Daubechies 4 |
| | Wavelet regularization (λ2) | 0.005 |

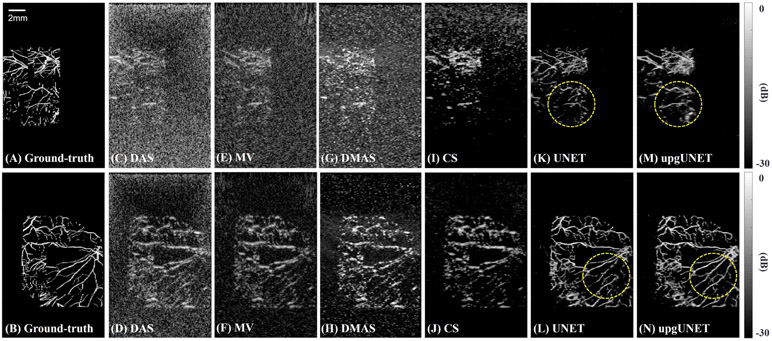

The same procedure was used to generate testing and training datasets. However, the two sets were generated from independent objects for independent verification. Fig. 6 shows imaging results using the selected reconstruction methods from two particular examples where object shapes and data SNR are totally different. As expected, standard DAS (f-number=0.5) followed by Hilbert transformation provides low-contrast, poor-resolution images. While MV, DMAS and CS improve contrast in general, they often suppress weak signals. CNN-based methods restore most of the vasculature with stronger contrast and higher resolution. For upgUNET processing, fine vessels are more clearly visible, as shown in the circled areas of Figs. 6 (M) and (N). Lost structures are mostly vertically-extended vessels because their signal power is extremely low.

Fig. 6.

Reconstruction results using synthetic data. Two particular objects are tested and all images are displayed using a log-scale colormap. (A,B) Ground-truth images. (C,D) Delay-and-sum results. Hilbert transform is applied as post-processing. (E,F) Minimum variance results. (G,H) Delay-multiply-and-sum results. (I,J) Iterative CS method results. Wavelet dictionaries and total-variation regularization are used for compressed sensing. (K,L) Deep-learning results. An input is a 2-D array using DAS. (M,N) Deep-learning results. An input is a 3-D multi-channel array.

In addition to these qualitative comparisons, we quantified performance differences employing the peak-signal-to-noise ratio (PSNR) and structure similarity (SSIM) metrics. The PSNR is defined via the MSE as

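$$\mathrm{PSNR} = 10\log_{10}\!\left(\frac{n_1 n_2\, I_{\max}^2}{\left\| s - \hat{s} \right\|_F^2}\right) \tag{17}$$

where s and $\hat{s}$ denote the ground-truth and reconstructed images, ∥·∥F denotes the Frobenius norm, n1 × n2 denotes the image size, and Imax is the dynamic range (this value is 1 in our experiments). The SSIM is given as [41]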

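$$\mathrm{SSIM} = \frac{\left(2\mu_s\mu_{\hat{s}} + c_1\right)\left(2\sigma_{s\hat{s}} + c_2\right)}{\left(\mu_s^2 + \mu_{\hat{s}}^2 + c_1\right)\left(\sigma_s^2 + \sigma_{\hat{s}}^2 + c_2\right)} \tag{18}$$

where $\mu_s$, $\mu_{\hat{s}}$, $\sigma_s$ and $\sigma_{\hat{s}}$ denote the averages and standard deviations (i.e., square root of the variances) for s and $\hat{s}$. $\sigma_{s\hat{s}}$ denotes the covariance of s and $\hat{s}$. Two variables, $c_1 = 0.01^2$ and $c_2 = 0.03^2$, were used to stabilize the metric when either $\mu_s^2 + \mu_{\hat{s}}^2$ or $\sigma_s^2 + \sigma_{\hat{s}}^2$ is very close to zero. Since the resultant images have enough signal strength and deviation, the small variables are rarely influential.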
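Both metrics are straightforward to compute. The sketch below is illustrative and makes two assumptions: PSNR is evaluated from the per-pixel MSE, and SSIM is evaluated in its single-window (global) form over the whole image, whereas [41] also describes a locally windowed variant; which variant was used here is not stated. Function names are hypothetical.

```python
import numpy as np

def psnr(s, s_hat, i_max=1.0):
    """Peak signal-to-noise ratio in dB from the per-pixel mean squared error."""
    mse = np.mean((s - s_hat) ** 2)            # equals ||s - s_hat||_F^2 / (n1 * n2)
    return 10.0 * np.log10(i_max ** 2 / mse)

def ssim_global(s, s_hat, c1=0.01 ** 2, c2=0.03 ** 2):
    """Single-window SSIM over the full image (global statistics, an assumption)."""
    mu_s, mu_h = s.mean(), s_hat.mean()
    var_s, var_h = s.var(), s_hat.var()
    cov = np.mean((s - mu_s) * (s_hat - mu_h))
    num = (2.0 * mu_s * mu_h + c1) * (2.0 * cov + c2)
    den = (mu_s ** 2 + mu_h ** 2 + c1) * (var_s + var_h + c2)
    return num / den
```

An image compared with itself gives an SSIM of 1, and a uniform error of 0.1 with a dynamic range of 1 gives a PSNR of 20 dB.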

Table III presents average PSNR and SSIM values computed from 1,000 samples where every vascular shape is different. The numbers in parentheses are the standard deviations. Deep-learning approaches offer significant gain over standard methods, strongly suggesting that the network provides quantitatively better image quality. Note that the upgUNET produces the best values, in agreement with visual inspection.

TABLE III.

Quantitative comparison of different methods

| Metric | DAS | MV | DMAS | CS | UNET | upgUNET |
|---|---|---|---|---|---|---|
| PSNR | 20.97 | 22.18 | 22.32 | 22.34 | 26.71 | 27.73 |
| (std.) | (5.92) | (4.72) | (5.07) | (4.70) | (5.03) | (5.21) |
| SSIM | 0.208 | 0.210 | 0.260 | 0.283 | 0.745 | 0.754 |
| (std.) | (0.12) | (0.11) | (0.11) | (0.14) | (0.19) | (0.20) |

VI. Experimental Results

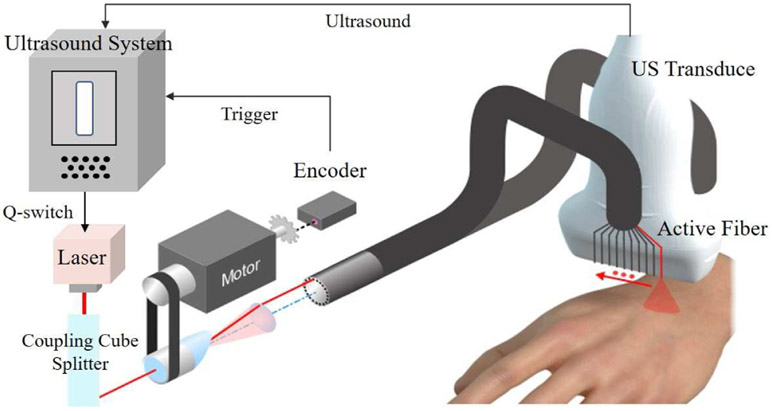

A. PAUS System

Our customized system for spectroscopic PA imaging is illustrated in Fig. 7. The scanner (Vantage, Verasonics, WA, USA) is programmed to record RF data at different wavelengths and fiber positions. A compact diode-pumped laser (TiSon GSA, Laser-export, Russia) transmits a light pulse at an arbitrary wavelength ranging from 700 to 900 nm at a 1 kHz rate. Transmitted pulses are sequentially delivered to 20 fiber terminals mounted around the top and bottom surfaces of a linear array transducer (LA 15/128-1633, Vermon S.A., France). The transducer center frequency is 15 MHz and the 3 dB bandwidth is around 8 MHz. US firings are interspersed with laser firings such that a full PA/US image frame at a fixed optical wavelength is recorded every 20 ms, producing a 50 Hz display rate for integrated US and PA images. System details are described in [1].

Fig. 7.

Our customized PAUS system. A US scanner triggers a compact diode-pumped laser such that it emits pulses (around 1 mJ energy) at a 1 kHz rate with a switchable wavelength ranging from 700 nm to 900 nm. The laser light is delivered to integrated fibers arranged on the two sides of a linear array transducer. A motor controlled by the scanner allows laser pulses to couple with different fibers sequentially. The scanner records PA signals that originate from light propagation into tissue.

Here, for the purpose of reconstruction tests, we acquired data at a single wavelength of 795 nm. The sampling rate for acoustic array data ts is 62.5 MHz. The transducer contains 128 elements linearly arranged along the x-axis with a pitch of 0.1 mm. One PA data frame contains 2048 samples × 128 elements × 20 fibers. We averaged every data frame over fibers to enhance the signal-to-noise ratio. The resultant data can be written as a 2048 × 128 matrix (samples × elements). We reconstructed a 2-D image from each data frame. The image matrix is sampled with axial and lateral resolutions of zr = 0.05 mm and xr = 0.1 mm, respectively.
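The acquisition numbers above can be checked with a short sketch. It averages a synthetic noise-only frame over the fiber axis and converts the record length to a one-way depth. The sound speed of 1540 m/s is an assumed soft-tissue value that is not stated in this section, and `average_over_fibers` is a hypothetical helper name.

```python
import numpy as np

FS_HZ = 62.5e6                      # sampling rate from the text
N_SAMPLES, N_ELEMENTS, N_FIBERS = 2048, 128, 20
SOUND_SPEED_M_S = 1540.0            # assumption, not given in this section

def average_over_fibers(frame):
    """Collapse a (samples, elements, fibers) frame to (samples, elements)."""
    return frame.mean(axis=2)

# Unit-variance noise stands in for a recorded frame
rng = np.random.default_rng(0)
noise = rng.standard_normal((N_SAMPLES, N_ELEMENTS, N_FIBERS))
averaged = average_over_fibers(noise)

# PA waves travel one way (absorber to array), so depth = c * t
record_depth_mm = N_SAMPLES / FS_HZ * SOUND_SPEED_M_S * 1e3

print(averaged.shape, averaged.std(), record_depth_mm)
```

For uncorrelated noise, averaging 20 fiber acquisitions lowers the noise standard deviation by roughly √20, which is the SNR gain the averaging step aims for. The signal itself differs between fibers because each fiber illuminates the tissue differently, so the gain on real data is not exactly this value.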

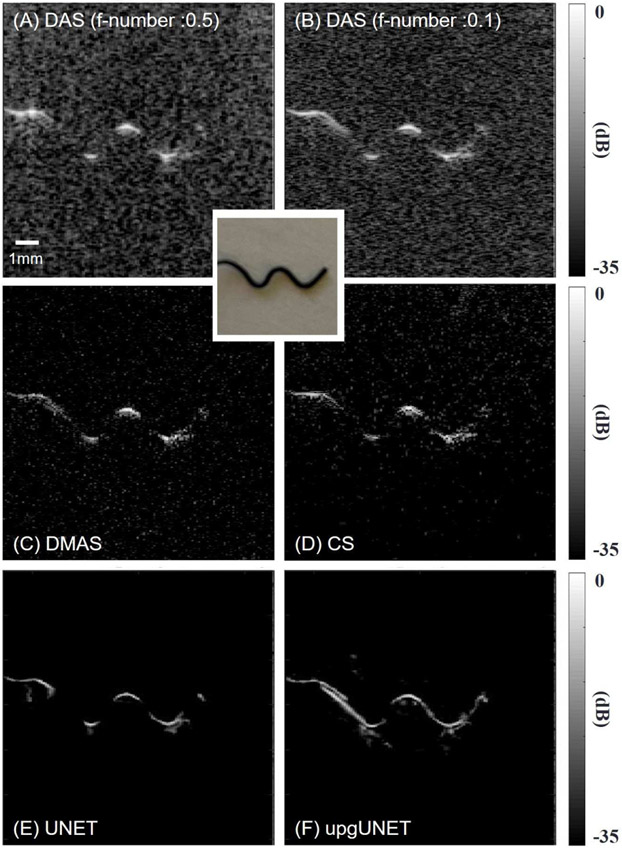

B. Phantom Study

We constructed a phantom containing a metal wire acting as an optical absorption target. As shown in Fig. 8, the wire shape approximates the letter ‘W’. It was suspended from a cubical container such that it appears as a ‘W’ shape in the z-x imaging plane. The container was filled with an intralipid solution (Fresenius Kabi, Deerfield, USA) acting as a scattering medium. The concentration of the intralipid is around 2% and the effective attenuation coefficient is around 0.1 mm⁻¹. Channel data were recorded with our customized system. Reconstruction results are shown in Fig. 8, which compares deep-learning methods with standard methods. For DAS, we tested a small f-number (0.1) in addition to the default value (0.5). The lower number corresponds to a larger aperture, which can access some information from diagonal lines at the expense of SNR over the entire field. In agreement with simulation results, DMAS and CS improve contrast but suppress weak structures.
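To make the f-number trade-off concrete, the sketch below counts how many array elements fall inside the receive aperture at a given depth. It assumes the common definition f-number = depth / aperture width and ignores the aperture truncation that occurs for pixels near the array edges; `active_elements` is a hypothetical helper, and the exact apodization used for Fig. 8 is not specified here.

```python
PITCH_MM = 0.1      # element pitch from the text
N_ELEMENTS = 128    # array size from the text

def active_elements(depth_mm, f_number):
    """Elements inside an aperture of width depth / f_number, capped by the array."""
    aperture_mm = depth_mm / f_number
    n = int(round(aperture_mm / PITCH_MM))
    return max(1, min(N_ELEMENTS, n))

for depth in (1.0, 2.0, 5.0):
    print(depth, active_elements(depth, 0.5), active_elements(depth, 0.1))
```

With an f-number of 0.1 the aperture reaches the full 12.8 mm array at very shallow depths, so nearly every element contributes to every pixel. That widens the accepted angular range but also sums more off-axis noise.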

Fig. 8.

Reconstruction results. All images are displayed using a log-scale colormap (35 dB range). A ‘W’-shaped wire is scanned by the PAUS system. (A) Delay-and-sum result. The f-number is 0.5. (B) Delay-and-sum result. The f-number is 0.1. (C) Delay-multiply-and-sum result. (D) Iterative CS method result. (E) UNET deep-learning result. The input is a 2-D array using DAS. (F) upgUNET deep-learning result. The input is a 3-D multi-channel tensor.

In the deep-learning imaging results, object shapes are more distinct with higher resolution. In particular, the preferred upgUNET method restores most wire structure. One flaw in the deep-learning approaches is that the networks sometimes produce artifacts near objects, as shown in Figs. 8 (E) and (F). We believe they arise from low-level reverberations not modeled in synthetic training data.

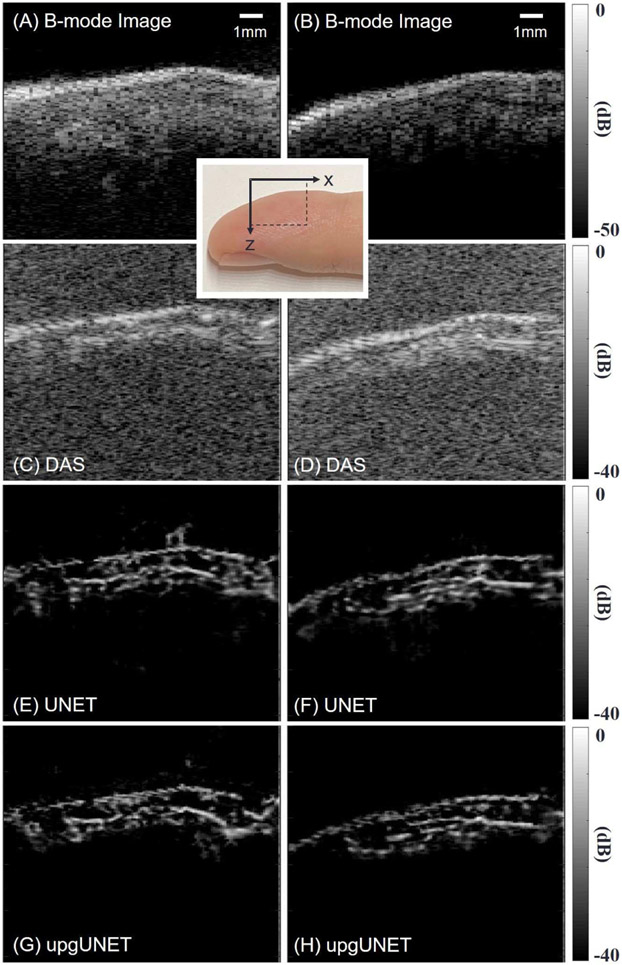

C. In-vivo Test

Lastly, we scanned and imaged a human finger to study the feasibility of our suggested method for in-vivo vascular imaging in real-time. These studies were approved by the Institutional Review Board (IRB) of the University of Washington (Study# 00009196) and used both optical and acoustic energies well within ANSI (optical) and FDA (US) guidelines. The PAUS system recorded US and PA measurements using an interleaved pulse sequence for 50 Hz frame-rate imaging.

Figs. 9 (A) and (B) show two longitudinal cross-sections of the finger as US B-mode images. We applied DAS, UNET and upgUNET to PA reconstructions for visual comparison (see Fig. 9 (C-H)). In this limited test, we found little difference between UNET and upgUNET reconstructions. We presume that upgUNET presents more realistic microvascular structures than B-mode images. However, the deep-learning images clearly provide markedly better contrast and resolution than equivalent images reconstructed with DAS.

Fig. 9.

In vivo reconstruction results. A human finger is scanned by the PAUS system. Two sagittal planes are tested. (A, B) US B-mode image. (C, D) Delay-and-sum result. The f-number is 0.5. (E, F) UNET deep-learning result. The input is a 2-D array using DAS. (G, H) upgUNET deep-learning result. The input is a 3-D multi-channel tensor. All images are displayed using a log-scale colormap. Mapping ranges for US and PA images are 50 dB and 40 dB, respectively.
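The log-scale display with a fixed mapping range can be sketched as follows. The example assumes amplitude images (hence 20·log10) normalized to their own peak and a non-zero image; `log_compress` is a hypothetical name, and the display code used for the figures may differ.

```python
import numpy as np

def log_compress(image, dynamic_range_db):
    """Return dB values relative to the image peak, clipped to [-range, 0]."""
    mag = np.abs(image)
    peak = mag.max()  # assumed non-zero
    floor = peak * 10.0 ** (-dynamic_range_db / 20.0)
    return 20.0 * np.log10(np.maximum(mag, floor) / peak)

# Synthetic amplitudes: the weakest value falls below a 40 dB range
demo = np.array([[1.0, 0.1], [0.01, 1e-6]])
print(log_compress(demo, 40.0))
```

A narrower range (for example 35 dB) hides weak background and artifacts, while a wider one (50 dB) shows more low-level structure, so the same reconstruction can look quite different depending on this setting.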

VII. Discussion

As discussed in the introduction, the inverse problem of PA imaging can be solved exactly only when the detection surface represents a whole sphere, cylinder or infinite plane. These conditions can be obtained for small animal imaging [42] but are difficult to achieve in a clinical environment.

Hand-held US probes with relatively narrow bandwidth yield serious image quality losses for PA images, and such techniques alone are unlikely to be accepted for medical use [43].

However, interleaved spectroscopic PAUS has recently been shown to dramatically improve the capabilities of diagnostic US in monitoring interventional procedures even under limited view and bandwidth conditions [3]. Very recently, a fast-sweep PAUS approach has been developed to operate at clinically acceptable frame rates for both PA and US modalities [1].

The goal of this paper was to investigate whether deep-learning algorithms can improve the quality of images obtained with a hand-held US probe. We note that large-scale absorbing heterogeneities are unlikely to be fully corrected with the proposed upgUNET algorithm because the low frequencies associated with these objects are not preserved within the limited bandwidth of the detector. In our opinion, however, microvascular networks can be improved with advanced reconstruction algorithms based on upgUNET. Although not fully validated over a wide range of experimental conditions, the simulations, phantom measurements and in vivo results presented here strongly support this statement.

We have explored several different signal processing methods beyond traditional DAS reconstruction and found that deep learning has the potential for enhanced image quality and real-time implementation if input data are structured to match the network architecture of a reasonably sized net. Here we reformatted elemental signals from a US transducer array into multi-channel 3D data (tensor) using prior knowledge of propagation delays related to simple wave physics. Effective decomposition of this tensor by the neural network can capture latent structures and features.
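The delay-based reformatting can be pictured as the "delay" half of delay-and-sum with the summation left out. The sketch below is only an illustration under stated assumptions: one-way time of flight, nearest-sample lookup, a sound speed of 1540 m/s, and one output channel per array element. The function `delay_tensor` and its arguments are hypothetical, and the transformation used for upgUNET may group or weight channels differently.

```python
import numpy as np

def delay_tensor(rf, fs_hz, pitch_m, z_m, x_m, c_m_s=1540.0):
    """Map RF data (K samples, J elements) to a (len(z), len(x), J) tensor.

    Entry [i1, i2, j] is the sample of element j at the one-way time of
    flight from pixel (z[i1], x[i2]) to that element.
    """
    n_samples, n_elem = rf.shape
    elem_x = (np.arange(n_elem) - (n_elem - 1) / 2) * pitch_m
    zz, xx, ex = np.meshgrid(z_m, x_m, elem_x, indexing="ij")
    dist = np.sqrt(zz ** 2 + (xx - ex) ** 2)
    idx = np.rint(dist / c_m_s * fs_hz).astype(int)
    valid = idx < n_samples                      # delays beyond the record
    idx = np.clip(idx, 0, n_samples - 1)
    chan = np.broadcast_to(np.arange(n_elem), idx.shape)
    return np.where(valid, rf[idx, chan], 0.0)

# Tiny demo on random data: 32 elements, 200 x 32 pixel grid
rng = np.random.default_rng(0)
rf_demo = rng.standard_normal((2048, 32))
z_demo = np.arange(1, 201) * 0.05e-3
x_demo = (np.arange(32) - 15.5) * 0.1e-3
tensor_demo = delay_tensor(rf_demo, 62.5e6, 0.1e-3, z_demo, x_demo)
das_demo = tensor_demo.sum(axis=2)   # summing channels gives unapodized DAS
print(tensor_demo.shape, das_demo.shape)
```

Keeping the channel axis lets a network learn its own combination of the delayed signals, whereas DAS fixes that combination to a plain sum.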

Both experiments and simulations have demonstrated how the proposed neural network improves image quality for PA reconstruction. It produces images with stronger contrast, higher spatial resolution compared to DAS, and few structure losses even given the limited spatial and temporal bandwidth of the real-time system used for PA data acquisition. Additional image quality improvements were demonstrated using multi-channel data as the network input (upgUNET). The final advantage of this approach is that the network effectively learns filtering weights from training data while standard methods must explicitly impose parameter values, filtering dictionaries, or regularizers.

Again, the novelty of our architecture is leveraging image reconstruction in the data domain. It is less computationally complex than end-to-end fully-connected networks that learn directly from raw data. In addition, it preserves information better than image-to-image networks. Our architecture is based on U-net to efficiently cope with the non-stationary nature of off-axis signals, artifacts and noise, as well as to restore vascular structures. Compared to DL-based iterative schemes, it is much better suited to real-time imaging because of its lower computational cost.

The computational cost of the matrix multiplication mapping a 2D data array ($K \times J$) to a 3D array ($I_1 \times I_2 \times J$) is $O(K J^2 I_1 I_2)$. However, the operation matrix is mostly sparse, so the cost can be reduced to $O(\Gamma I_1 I_2 J)$, where $\Gamma$ is the number of non-zeros per column. Convolution operations dominate computations in a CNN. The cost for an $N \times N \times R_1$ input, $N \times N \times R_2$ output, $K \times K$ filters per layer and $L$ layers is $O(N^2 K^2 R_1 R_2 L)$. Since the operation is simple, it is ideal for parallel processing to reduce computational time. We employed TensorFlow with Keras to construct the network shown in Fig. 5. We implemented code for training and testing in Python and ran it on a computer with an Intel i7 CPU and an NVIDIA 1080Ti GPU. The code will be available on the website (https://github.com/bugee1727/PARecon) upon publication.
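The operation counts above can be compared numerically. The sketch below simply evaluates the three expressions; the sizes are hypothetical values chosen for illustration and are not the dimensions used in the paper, and the filter size is written `k` to keep it distinct from the number of samples `K`.

```python
def dense_mapping_cost(K, J, I1, I2):
    """Multiply-adds for a dense (I1*I2*J) x (K*J) operator: K * J^2 * I1 * I2."""
    return (I1 * I2 * J) * (K * J)

def sparse_mapping_cost(gamma, J, I1, I2):
    """Multiply-adds for the sparse operator, following the gamma * I1 * I2 * J count."""
    return gamma * I1 * I2 * J

def conv_cost(N, k, R1, R2, L):
    """Multiply-adds for L layers of k x k convolutions on N x N maps, R1 -> R2 channels."""
    return N ** 2 * k ** 2 * R1 * R2 * L

# Hypothetical sizes for illustration only
K, J, I1, I2, GAMMA = 2048, 128, 256, 128, 2
dense = dense_mapping_cost(K, J, I1, I2)
sparse = sparse_mapping_cost(GAMMA, J, I1, I2)
print(f"dense / sparse = {dense / sparse:.0f}")
print(f"one 3x3 layer, 32 -> 32 channels on 128x128 maps: {conv_cost(128, 3, 32, 32, 1)}")
```

The ratio between the dense and sparse counts is KJ/Γ, which shows why exploiting sparsity makes the preprocessing step negligible next to the convolutions.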

The average computation time for image reconstruction from raw data is 40 ms, corresponding to a 25 Hz frame rate. Our current system functions at 50 Hz and higher frame rates, so this computation time must be reduced by at least a factor of two for true real-time implementation. Given a hardware architecture optimized for our specific reconstruction approach, a real-time frame rate of 50 Hz or higher is realistic in the short term for the deep learning algorithm presented here for PA image reconstruction.

The architecture combining a trainable neural network with a non-trainable preprocessor operating on raw data can be effective for diverse medical imaging fields and remote sensing applications. For example, some groups have recently investigated trainable apodization methods to replace slow MV beamforming for US B-mode imaging [45], [46]. They showed that a simple network enables fast imaging computation without reducing MV image quality. In addition, one group has applied a similar architecture to a semi-cylindrical PA tomographic system [47].

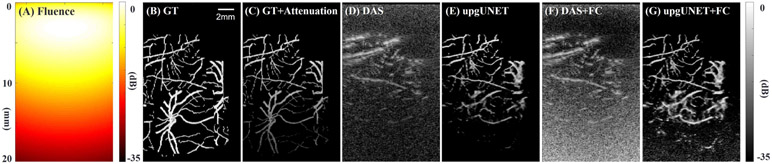

As noted in Sections IV and V, the deep learning approach presented here was trained over a wide range of SNR and demonstrated superior results compared to conventional DAS at all tested levels of SNR. This characteristic is especially attractive for PAUS imaging in vivo using a handheld probe with limited spatial and temporal bandwidth where depth-dependent light attenuation limits the penetration depth of PA images. To demonstrate potential gains in penetration depth with DL-based reconstruction, a test case (Fig. 10) was analyzed where a simple model of fluence variation with depth was applied to ground truth data.

Fig. 10.

Reconstruction results using synthetic data to explore penetration depth when the PA signal attenuates with depth. One particular absorption object is tested and all images are displayed using a log-scale colormap. (A) Sum of light fluences from 20 fibers in a scattering medium. MCX Studio is used for the Monte Carlo simulation [44]. The details of the transducer geometry can be found in Ref. [1]. The effective attenuation coefficient of the medium is 1.73 cm⁻¹. (B) Ground-truth object. (C) Attenuated object due to the heterogeneous fluence. Measurements are obtained from the object using Eq. 3. (D-G) Reconstruction results using (D) DAS, (E) deep-learning, (F) DAS with fluence compensation, and (G) deep-learning with fluence compensation.

The sum of fluences from 20 fibers is presented in Fig. 10 (A) for a scattering medium with an effective attenuation coefficient of 1.73 cm−1. MCX Studio was used for the Monte Carlo simulation [44] producing this distribution. The PA signal for this case (Fig. 10 (C)) depends not only on the absorption of the vascular object (Fig. 10 (B)) but also on the depth-dependent fluence. Figs. 10 (D) and (E) compare conventional DAS and DL-based reconstructions with noise added. The average photoacoustic SNR for this example is about 25 dB at a depth of 5 mm and about 5 dB at a depth of 15 mm.

Figs. 10 (F) and (G) compare DAS and DL-based reconstruction after fluence compensation has been applied. The compensation approach is similar to that applied in many PA systems: the reconstructed images in Figs. 10 (D) and (E) are multiplied by a depth-dependent gain factor, similar to the time-gain control (TGC) function in a real-time US scanner, based on the expected fluence attenuation function. Clearly, DL-based reconstruction not only improves overall image quality as noted above, but also increases PA image penetration by about 50% for this particular case. In general, enhanced penetration for real-time, handheld PAUS imaging systems is another attractive feature of DL-based reconstruction.
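A depth-dependent gain of this kind can be sketched as below. The example assumes a purely exponential fluence decay exp(−μ_eff z) with the effective attenuation coefficient quoted for Fig. 10, which is a simplification of the Monte Carlo fluence map actually used; `depth_gain`, `compensate`, and the gain cap are hypothetical choices for illustration.

```python
import numpy as np

MU_EFF_PER_CM = 1.73   # effective attenuation coefficient quoted in the text

def depth_gain(depth_mm, mu_eff_per_cm=MU_EFF_PER_CM, max_gain_db=40.0):
    """TGC-like gain inverting an assumed exp(-mu_eff * z) fluence decay."""
    gain = np.exp(mu_eff_per_cm * np.asarray(depth_mm) / 10.0)
    return np.minimum(gain, 10.0 ** (max_gain_db / 20.0))  # cap noise amplification

def compensate(image, depth_mm):
    """Scale each image row (axial index first) by the gain at its depth."""
    return image * depth_gain(depth_mm)[:, None]

# Uniform absorber attenuated by the assumed fluence, then compensated
depths = np.arange(0.0, 15.0, 0.05)              # mm, 0.05 mm axial step
fluence = np.exp(-MU_EFF_PER_CM * depths / 10.0)
attenuated = fluence[:, None] * np.ones((depths.size, 8))
restored = compensate(attenuated, depths)
print(restored.min(), restored.max())
```

The gain amplifies noise together with signal, so compensation by itself does not extend penetration; any gain in depth comes from how well the reconstruction suppresses noise before the gain is applied.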

One limitation of the CNN identified in these studies is poor image quality for structures aligned almost vertically (i.e., nearly parallel to the normal to the 1D transducer array). As expected, restoration is challenging for this case since most of the PA signal spatial frequencies are not captured by the transducer array. As shown in the top images of Fig. 6, this loss is more serious if the PA signal power of the object is weak compared with the noise power. Part of our future work will explore alternative approaches leveraging additional information from ultrasound imaging, or better system conditions such as acquiring one additional view at a significant angle with respect to the array normal at the first position.

The second limitation of the method is the presence of some unexpected image artifacts for real data tests, which can reduce specificity. Obtaining real ground-truth maps is impracticable at large scale. Thus, reducing discrepancies between synthetic and real data is needed to guarantee that a trained CNN works best for real data.

One of the main difficulties in reconstruction arises from the object dimension. Although a transducer lens focuses to a 2D plane (imaging plane), it cannot fully limit the sensitivity to a selected plane in an object. In other words, signals still come from points outside the imaging plane. Thus, generating training data based on 3D structures is required for the next step. Experiments are ongoing to map the full 3D PSF of our PA system and to include these details into the forward model for synthetic data generation. We expect that new synthetic data accompanied with an extended detection view will further improve image quality.

There certainly are alternative learning frameworks. Our architecture is limited to supervised learning, which requires ground truth paired with input data. Some architectures such as Generative Adversarial Networks (GANs) do not need pairs [48], [49]. They can provide realistic fake images from measurements using real vascular image datasets obtained by other medical imaging modalities. Since only real data and real images are required for training, they can prevent unexpected artifacts. Also, different learning frameworks can bring additional information from US images to bear on PA image reconstruction.

Overall, our future studies will address these two limitations and develop specific deep learning architectures for real-time implementation. The goal is to create a CNN tuned to the problem of PA image reconstruction using limited spatial and temporal bandwidth data for robust, high-quality spectroscopic PAUS imaging at frame rates of 50 Hz and higher.

VIII. Conclusions

In this paper, we described a deep convolutional network for real-time PAUS imaging. The PAUS platform has the potential for real-time clinical implementation but the limited view/bandwidth of clinical US arrays degrades PA image quality. Our target of interest is microvessels, which can be almost entirely restored by advanced reconstruction methods, in contrast to large homogeneous objects. We developed a deep learning network for this application trained with realistic simulation data synthesized from ground-truth vascular image sets using a deterministic PA forward operator and the measured impulse response of a real transducer. Reformatting raw channel data into a multi-channel array as a pre-processing step increased learning efficiency with respect to network complexity and imaging performance. The neural network is based on U-net, decomposing signals via multi-scale feature maps. Coupling the trainable network with the transformation method, we imaged structures mimicking vascular networks both in simulation and experiment. Overall, this approach reconstructs PA data with much higher image quality than conventional methods but loses some portions of complex absorber geometries and generates minor artifacts.

Acknowledgments

This work was supported in part by NIH grants (HL-125339 and EY-026532).

Appendix A

Adjoint of operator F

The solution of the forward model in Eq. 2 can simply be written as

| (19) |

where $r \in \Omega$ and $(t, r') \in (\mathbb{R} \times \Xi)$. The operator $F(\cdot)$ maps $L^2(\Omega) \to L^2(\mathbb{R} \times \Xi)$. Now, introduce $q(t, r')$ to define the adjoint of the operator $F$. The inner product between $F(s(r))$ and $q(t, r')$ can be expressed as

| (20) |

where and . FT denotes the adjoint of the operator F. In general, DAS reconstruction ignores the derivative operation and applies a Hilbert transform after summation.

Appendix B

Framelet Expansion

Preprocessed data can be represented as a third-order tensor $F \in \mathbb{R}^{N \times M \times J}$, where $N$, $M$ and $J$ denote the numbers of axial samples, lateral samples and channels, respectively. $F_j = [f_{1,j}, \ldots, f_{m,j}, \ldots, f_{M,j}] \in \mathbb{R}^{N \times M}$ denotes the matrix of the $j$th channel, where $f_{m,j}$ denotes the $m$th column vector of the matrix. The block Hankel matrix for the periodic tensor is defined as

| (21) |

where $H_{d_1,d_2}(F) \in \mathbb{R}^{NM \times d_1 d_2 J}$ and $H_{d_1,d_2}(F_j) \in \mathbb{R}^{NM \times d_1 d_2}$. The block matrix $H_{d_1}(f_{m,j})$ is given as

| (22) |

where $f_{m,j}[l]$ denotes the $l$th element of the vector. The Hankel matrix approach seeks a solution that minimizes both the deviation from $F^*$, the ground truth, and the rank of the Hankel matrix. To address this minimization, a framelet approach handles the matrix decomposition using local and global bases as

| (23) |

where $\tilde{\Phi}, \Phi \in \mathbb{R}^{NM \times p}$ denote non-local basis pairs, $\tilde{\Psi}, \Psi \in \mathbb{R}^{d_1 d_2 J \times q}$ denote local basis pairs, and $C$ denotes the framelet coefficients. This expression can be equivalently represented by an encoder-decoder structure if the ReLU and bias are ignored for simplification. The non-local basis pairs correspond to pooling and unpooling operations. Thus, a CNN can be interpreted as finding the best non-local bases and manipulating coefficients to reach the minimization solution.
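To make the matrix dimensions in this appendix concrete, the sketch below builds wrap-around (periodic) Hankel matrices with the shapes stated above. It follows the usual periodic Hankel convention, in which row l holds d1 consecutive entries starting at index l with indices taken modulo N; the exact layout of Eqs. 21 and 22 is an assumption, and the function names are hypothetical.

```python
import numpy as np

def hankel_wrap(f, d1):
    """Wrap-around Hankel matrix of a length-N vector, shape (N, d1)."""
    n = len(f)
    idx = (np.arange(n)[:, None] + np.arange(d1)[None, :]) % n
    return f[idx]

def block_hankel(Fj, d1, d2):
    """Block Hankel matrix of one (N, M) channel, shape (N*M, d1*d2)."""
    n, m = Fj.shape
    rows = []
    for r in range(m):
        # block row r holds the Hankel matrices of columns r, r+1, ... (mod M)
        blocks = [hankel_wrap(Fj[:, (r + c) % m], d1) for c in range(d2)]
        rows.append(np.hstack(blocks))
    return np.vstack(rows)

def tensor_hankel(F, d1, d2):
    """Concatenate channel-wise block Hankel matrices, shape (N*M, d1*d2*J)."""
    return np.hstack([block_hankel(F[:, :, j], d1, d2) for j in range(F.shape[2])])

# Small example: N = 6 axial samples, M = 5 lateral samples, J = 3 channels
F = np.arange(6 * 5 * 3, dtype=float).reshape(6, 5, 3)
H = tensor_hankel(F, d1=3, d2=2)
print(H.shape)
```

Multiplying such a Hankel matrix by a short filter amounts to a periodic convolution-type operation on the signal, which is the link between the low-rank Hankel formulation and convolutional layers.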

Contributor Information

MinWoo Kim, uWAMIT Center in the Department of Bioengineering at the University of Washington, Seattle, WA, 98105 USA.

Geng-Shi Jeng, Department of Electronics Engineering at National Chiao Tung University, Hsinchu, Taiwan.

Ivan Pelivanov, uWAMIT Center in the Department of Bioengineering at the University of Washington, Seattle, WA, 98105 USA.

Matthew O’Donnell, uWAMIT Center in the Department of Bioengineering at the University of Washington, Seattle, WA, 98105 USA.

References

- [1] Jeng G-S, Li M-L, Kim M, Yoon SJ, Pitre JJ, Li DS, Pelivanov I, and O’Donnell M, “Real-time spectroscopic photoacoustic/ultrasound (PAUS) scanning with simultaneous fluence compensation and motion correction for quantitative molecular imaging,” bioRxiv, 2019.

- [2] Attia ABE, Balasundaram G, Moothanchery M, Dinish U, Bi R, Ntziachristos V, and Olivo M, “A review of clinical photoacoustic imaging: Current and future trends,” Photoacoustics, p. 100144, 2019.

- [3] Schellenberg MW and Hunt HK, “Hand-held optoacoustic imaging: A review,” Photoacoustics, vol. 11, pp. 14–27, 2018.

- [4] Szabo TL, Diagnostic ultrasound imaging: inside out. Academic Press, 2004.

- [5] Han SH, “Review of photoacoustic imaging for imaging-guided spinal surgery,” Neurospine, vol. 15, no. 4, p. 306, 2018.

- [6] Park S, Karpiouk AB, Aglyamov SR, and Emelianov SY, “Adaptive beamforming for photoacoustic imaging,” Optics letters, vol. 33, no. 12, pp. 1291–1293, 2008.

- [7] Synnevag J-F, Austeng A, and Holm S, “Benefits of minimum-variance beamforming in medical ultrasound imaging,” IEEE transactions on ultrasonics, ferroelectrics, and frequency control, vol. 56, no. 9, pp. 1868–1879, 2009.

- [8] Kirchner T, Sattler F, Gröhl J, and Maier-Hein L, “Signed real-time delay multiply and sum beamforming for multispectral photoacoustic imaging,” Journal of Imaging, vol. 4, no. 10, p. 121, 2018.

- [9] Matrone G, Savoia AS, Caliano G, and Magenes G, “The delay multiply and sum beamforming algorithm in ultrasound b-mode medical imaging,” IEEE transactions on medical imaging, vol. 34, no. 4, pp. 940–949, 2014.

- [10] Guo Z, Li C, Song L, and Wang LV, “Compressed sensing in photoacoustic tomography in vivo,” Journal of biomedical optics, vol. 15, no. 2, p. 021311, 2010.

- [11] Lin X, Feng N, Qu Y, Chen D, Shen Y, and Sun M, “Compressed sensing in synthetic aperture photoacoustic tomography based on a linear-array ultrasound transducer,” Chinese Optics Letters, vol. 15, no. 10, p. 101102, 2017.

- [12] Lee J-G, Jun S, Cho Y-W, Lee H, Kim GB, Seo JB, and Kim N, “Deep learning in medical imaging: general overview,” Korean journal of radiology, vol. 18, no. 4, pp. 570–584, 2017.

- [13] McCann MT, Jin KH, and Unser M, “A review of convolutional neural networks for inverse problems in imaging,” arXiv preprint arXiv:1710.04011, 2017.

- [14] Cai C, Deng K, Ma C, and Luo J, “End-to-end deep neural network for optical inversion in quantitative photoacoustic imaging,” Optics letters, vol. 43, no. 12, pp. 2752–2755, 2018.

- [15] Antholzer S, Haltmeier M, and Schwab J, “Deep learning for photoacoustic tomography from sparse data,” Inverse problems in science and engineering, vol. 27, no. 7, pp. 987–1005, 2019.

- [16] Davoudi N, Deán-Ben XL, and Razansky D, “Deep learning optoacoustic tomography with sparse data,” Nature Machine Intelligence, vol. 1, no. 10, pp. 453–460, 2019.

- [17] Awasthi N, Prabhakar KR, Kalva SK, Pramanik M, Babu RV, and Yalavarthy PK, “PA-fuse: deep supervised approach for the fusion of photoacoustic images with distinct reconstruction characteristics,” Biomedical optics express, vol. 10, no. 5, pp. 2227–2243, 2019.

- [18] Boink YE, Manohar S, and Brune C, “A partially learned algorithm for joint photoacoustic reconstruction and segmentation,” arXiv preprint arXiv:1906.07499, 2019.

- [19] Aggarwal HK, Mani MP, and Jacob M, “Modl: Model-based deep learning architecture for inverse problems,” IEEE transactions on medical imaging, vol. 38, no. 2, pp. 394–405, 2018.

- [20] Zhu B, Liu JZ, Cauley SF, Rosen BR, and Rosen MS, “Image reconstruction by domain-transform manifold learning,” Nature, vol. 555, no. 7697, p. 487, 2018.

- [21] Allman D, Reiter A, and Bell MAL, “Photoacoustic source detection and reflection artifact removal enabled by deep learning,” IEEE transactions on medical imaging, vol. 37, no. 6, pp. 1464–1477, 2018.

- [22] Ronneberger O, Fischer P, and Brox T, “U-net: Convolutional networks for biomedical image segmentation,” in International Conference on Medical image computing and computer-assisted intervention. Springer, 2015, pp. 234–241.

- [23] Wang LV and Wu H.-i., Biomedical optics: principles and imaging. John Wiley & Sons, 2012.

- [24] Xia J, Yao J, and Wang LV, “Photoacoustic tomography: principles and advances,” Electromagnetic waves (Cambridge, Mass.), vol. 147, p. 1, 2014.

- [25] Xu M and Wang LV, “Photoacoustic imaging in biomedicine,” Review of scientific instruments, vol. 77, no. 4, p. 041101, 2006.

- [26] Xu M and Wang LV, “Universal back-projection algorithm for photoacoustic tomography,” in Photoacoustic Imaging and Spectroscopy. CRC Press, 2017, pp. 37–46.

- [27] Wang B, Xiong W, Su T, Xiao J, and Peng K, “Finite-element reconstruction of 2D circular scanning photoacoustic tomography with detectors in far-field condition,” Applied optics, vol. 57, no. 30, pp. 9123–9128, 2018.

- [28] Wang B, Su T, Pang W, Wei N, Xiao J, and Peng K, “Back-projection algorithm in generalized form for circular-scanning-based photoacoustic tomography with improved tangential resolution,” Quantitative imaging in medicine and surgery, vol. 9, no. 3, p. 491, 2019.

- [29] Xu M and Wang LV, “Time-domain reconstruction for thermoacoustic tomography in a spherical geometry,” IEEE transactions on medical imaging, vol. 21, no. 7, pp. 814–822, 2002.

- [30] Haltmeier M, “Inversion of circular means and the wave equation on convex planar domains,” Computers & Mathematics with Applications, vol. 65, no. 7, pp. 1025–1036, 2013.

- [31] Rosenthal A, Razansky D, and Ntziachristos V, “Fast semi-analytical model-based acoustic inversion for quantitative optoacoustic tomography,” IEEE transactions on medical imaging, vol. 29, no. 6, pp. 1275–1285, 2010.

- [32] Xu Y, Feng D, and Wang LV, “Exact frequency-domain reconstruction for thermoacoustic tomography. i. planar geometry,” IEEE transactions on medical imaging, vol. 21, no. 7, pp. 823–828, 2002.

- [33] Jin KH, McCann MT, Froustey E, and Unser M, “Deep convolutional neural network for inverse problems in imaging,” IEEE Transactions on Image Processing, vol. 26, no. 9, pp. 4509–4522, 2017.

- [34] Gregor K and LeCun Y, “Learning fast approximations of sparse coding,” in Proceedings of the 27th International Conference on International Conference on Machine Learning, 2010, pp. 399–406.

- [35] Boyd S, Parikh N, Chu E, Peleato B, Eckstein J et al., “Distributed optimization and statistical learning via the alternating direction method of multipliers,” Foundations and Trends® in Machine learning, vol. 3, no. 1, pp. 1–122, 2011.

- [36] Kawaguchi K, Kaelbling LP, and Bengio Y, “Generalization in deep learning,” arXiv preprint arXiv:1710.05468, 2017.

- [37] Yin R, Gao T, Lu YM, and Daubechies I, “A tale of two bases: Local-nonlocal regularization on image patches with convolution framelets,” SIAM Journal on Imaging Sciences, vol. 10, no. 2, pp. 711–750, 2017.

- [38] Ye JC, Han Y, and Cha E, “Deep convolutional framelets: A general deep learning framework for inverse problems,” SIAM Journal on Imaging Sciences, vol. 11, no. 2, pp. 991–1048, 2018.

- [39] Hasegawa H and Kanai H, “Effect of element directivity on adaptive beamforming applied to high-frame-rate ultrasound,” IEEE transactions on ultrasonics, ferroelectrics, and frequency control, vol. 62, no. 3, pp. 511–523, 2015.

- [40] Staal J, Abràmoff MD, Niemeijer M, Viergever MA, and Van Ginneken B, “Ridge-based vessel segmentation in color images of the retina,” IEEE transactions on medical imaging, vol. 23, no. 4, pp. 501–509, 2004.

- [41] Wang Z, Bovik AC, Sheikh HR, Simoncelli EP et al., “Image quality assessment: from error visibility to structural similarity,” IEEE transactions on image processing, vol. 13, no. 4, pp. 600–612, 2004.

- [42] Xia J and Wang LV, “Small-animal whole-body photoacoustic tomography: a review,” IEEE Transactions on Biomedical Engineering, vol. 61, no. 5, pp. 1380–1389, 2013.

- [43] Steinberg I, Huland DM, Vermesh O, Frostig HE, Tummers WS, and Gambhir SS, “Photoacoustic clinical imaging,” Photoacoustics, 2019.

- [44] Fang Q and Boas DA, “Monte carlo simulation of photon migration in 3d turbid media accelerated by graphics processing units,” Optics express, vol. 17, no. 22, pp. 20178–20190, 2009.

- [45] Luijten B, Cohen R, de Bruijn FJ, Schmeitz HA, Mischi M, Eldar YC, and van Sloun RJ, “Adaptive ultrasound beamforming using deep learning,” arXiv preprint arXiv:1909.10342, 2019.

- [46] Khan S, Huh J, and Ye JC, “Adaptive and compressive beamforming using deep learning for medical ultrasound,” arXiv preprint arXiv:1907.10257, 2019.

- [47] Schwab J, Antholzer S, Nuster R, and Haltmeier M, “Real-time photoacoustic projection imaging using deep learning,” arXiv preprint arXiv:1801.06693, 2018.

- [48] Goodfellow I, Pouget-Abadie J, Mirza M, Xu B, Warde-Farley D, Ozair S, Courville A, and Bengio Y, “Generative adversarial nets,” in Advances in neural information processing systems, 2014, pp. 2672–2680.

- [49] Yi X, Walia E, and Babyn P, “Generative adversarial network in medical imaging: A review,” Medical image analysis, p. 101552, 2019.