Abstract

BACKGROUND:

The COMprehensive Post-Acute Stroke Services (COMPASS) model, a transitional care intervention for stroke patients discharged home, was tested against status quo post-acute stroke care in a cluster-randomized trial in 40 hospitals in North Carolina. This study examined the hospital-level costs associated with implementing and sustaining COMPASS.

METHODS:

Using an activity-based costing survey, we estimated hospital-level resource costs spent on COMPASS-related activities over approximately 1 year. We identified hospitals that were actively engaged in COMPASS during the year prior to the survey and collected resource cost estimates from 22 hospitals. We used median wage data from the Bureau of Labor Statistics and COMPASS enrollment data to estimate the hospital-level costs per COMPASS enrollee.

RESULTS:

Between November 2017 and March 2019, 1,582 patients received the COMPASS intervention across the 22 hospitals included in this analysis. Average annual hospital-level COMPASS costs were $2,861 per patient (25th percentile: $735; 75th percentile: $3,475). Having 10 percent higher stroke patient volume was associated with 5.1 percent lower COMPASS costs per patient (P=.016). About half (N=10) of hospitals reported post-acute clinic visits as their highest-cost activity, while a third (N=7) reported case ascertainment (i.e., identifying eligible patients) as their highest-cost activity.

CONCLUSIONS:

We found that the costs of implementing COMPASS varied across hospitals. On average, hospitals with higher stroke volume and higher enrollment had lower costs per patient. Based on the average costs of COMPASS and of readmissions for stroke patients, COMPASS could lower net costs if the model prevents about 6 readmissions per hospital per year.

Keywords: stroke, cost analysis, costs, patient centered care, continuity of care

I. Introduction

Stroke is a leading cause of death and long-term disability in the United States. Stroke survivors discharged home often experience multiple co-morbidities, poor knowledge and management of risk factors, residual disability, and high rates of hospital readmission.1,2 For these patients, decisions about post-acute care upon hospital discharge can have long-term health consequences.2,3 Despite this, there is currently no standard for post-acute stroke care in the United States and nearly half of individuals hospitalized for stroke receive no post-acute care.2

Promoting improvement in transitional care for stroke and preventing stroke-related hospital readmissions are priorities in the United States.4,5 In recent years, the Centers for Medicare and Medicaid Services (CMS) has implemented new reimbursement policies to incentivize better care coordination and adoption of transitional care services for chronic diseases including stroke.6 However, adoption of transitional stroke care models remains low and evidence of their effectiveness is limited.7-10

The COMprehensive Post-Acute Stroke Services (COMPASS) model is a transitional stroke care intervention that was recently implemented as part of a pragmatic cluster-randomized trial in 40 hospitals across North Carolina. COMPASS builds on evidence from previous transitional care models and is consistent with CMS requirements for Transitional Care Management billing.1,2 Results from the COMPASS trial suggest that comprehensive transitional care significantly improves patient-reported outcomes (e.g., functional status, disability, depression, falls, and mortality).11 Key elements of the COMPASS intervention included: 1) identifying and enrolling eligible patients who experienced a stroke or transient ischemic attack (TIA), 2) providing educational materials to patients at discharge, 3) communicating with patients within 2 days post-discharge, 4) conducting an outpatient clinic visit to develop an individualized electronic care plan for each patient, and 5) connecting patients with relevant community resources (e.g., home health providers, pharmacy services, local support groups).2

Understanding the hospital-level costs of implementing the COMPASS model is important for assessing the potential adoption and sustainability of the model. While transitional care models for conditions other than stroke have been widely tested in the United States, evidence about the cost-effectiveness of these models is limited.2,12 Using an activity-based costing method, this study estimated the hospital-level resource costs of implementing COMPASS. The results of this study provide a first step in evaluating the model’s budget impact for hospitals.

II. Methods

COMPASS Trial Design

COMPASS was tested in a cluster-randomized pragmatic trial implemented between July 2016 and March 2019. The intervention included telephone follow-up by a post-acute care nurse within 2 days of hospital discharge and a clinic visit with a provider within 30 days post-discharge, during which an individualized electronic care plan was generated for each patient.2 Patients who received this clinic visit and care plan were considered to have received the intervention (i.e., the patient received the intervention “per protocol”). During implementation, COMPASS research staff conducted audits to track the number of patients enrolled into COMPASS at each hospital and the number who received the “per protocol” intervention.

COMPASS had a two-phase, waitlist design. Forty hospitals agreed to participate in the trial; half of the hospitals were randomized to implement COMPASS in the first phase (July 2016 to March 2018) while the other half were randomized to implement COMPASS in the second phase (November 2017 to March 2019). Hospitals that were randomized to implement in the first phase had the opportunity to continue sustaining COMPASS with their own resources during the second phase of the trial.

Data collection

To estimate the hospital-level resource costs associated with implementing COMPASS, we developed a self-administered, web-based survey using an activity-based costing approach. This approach requires collecting data on the value of all resources used for each main COMPASS activity, including staff time, hospital office space, and other materials.13-15 To ensure consistent data collection across hospitals, we worked with COMPASS leadership and staff to define activity categories that were common across sites and around which program work was organized.14 Additionally, we conducted pilot surveys and follow-up interviews with respondents at 2 COMPASS hospitals to ensure the survey instrument was clear, usable, and captured program activities as intended. Our final set of COMPASS activities included hiring and retraining staff, case ascertainment and enrollment, follow-up phone calls with enrollees, clinic visits, and engagement with community resources.

The web-based survey was administered to COMPASS sites in March and April 2019. The instrument was designed to capture each hospital’s total COMPASS-related costs incurred in the past year. Based on the timing of the survey and because COMPASS was implemented across hospitals in 2 phases, we surveyed both crossover sites (i.e., sites that provided usual care during Phase 1 and began implementing COMPASS in Phase 2) and sustaining sites (i.e., sites that had begun implementing COMPASS during Phase 1 and were sustaining the model in Phase 2). The survey instrument was modified for the sustaining hospitals to ensure that the survey captured costs related to sustaining COMPASS during the past year.

A survey link was emailed to each hospital’s primary COMPASS contact, typically the post-acute stroke care coordinator. We asked respondents to provide estimates for time that staff spent on each COMPASS activity at start-up and/or in an average week during the past year, as well as quantity and cost estimates of other resources used for COMPASS (e.g., hospital office space, software, mailing materials) over the same time period. We focused only on costs incurred over the past year in order to limit concerns about recall bias. Respondents were encouraged to consult with co-workers, if necessary, to obtain accurate time and resource estimates for COMPASS activities. To ensure completeness of data, we required respondents to provide responses for all questions. We also asked respondents to rate, from 0 to 100, their level of confidence in their resource estimates.

To estimate the costs of staff time, we collected regionally adjusted median wage data from the Bureau of Labor Statistics (BLS).12,16 We also received hospital-level data from the COMPASS study team, including data on hospital characteristics, number of weeks spent actively implementing COMPASS, the number of patients that each hospital enrolled, and the number that received the per-protocol intervention (i.e., had a clinic visit within 30 days post-discharge and received an electronic care plan) during Phase 2 of the COMPASS trial.

We intended to capture the resource costs for hospitals that had been actively implementing or sustaining COMPASS during the second phase (i.e., November 2017 to March 2019). Of the 40 hospitals originally participating in COMPASS, 18 were excluded from this analysis: 15 because of inactivity (i.e., no patients receiving the per-protocol intervention) and 3 because of survey non-response (response rate among the 25 active hospitals: 88%). Our final sample included 22 hospitals: 8 sustaining sites and 14 crossover sites.

Data analysis

Survey data on COMPASS staff time and other resource estimates were linked with COMPASS total enrollment data. We calculated the cost of staff time for each activity by multiplying the estimated annual hours that each staff type (e.g., registered nurse, physician, administrative assistant) spent on the activity by the median wage for that staff type and summing across staff types. Costs for other resources, such as hospital office space and training materials, were also included. Hospital office space cost was based on an estimate from Hebert et al. (2008),17 and other material costs were estimated by survey respondents.
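The staff-cost step described above reduces to multiplying hours by a wage for each staff type and adding any non-staff resources. The Python sketch below illustrates that arithmetic under stated assumptions: the wage values, staff categories, activity names, and hours are hypothetical placeholders, not the BLS wages or survey responses used in this study, and the same arithmetic applies whether hours are entered per week or per year.

```python
# Minimal activity-based costing sketch: staff cost = hours x median hourly wage,
# summed over staff types, plus non-staff resources (e.g., office space, materials).

# Hypothetical hourly wages in USD (placeholders, NOT the BLS values used in the study).
HOURLY_WAGE = {
    "registered_nurse": 35.0,
    "nurse_practitioner": 52.0,
    "physician": 100.0,
    "admin_assistant": 18.0,
}


def staff_cost(hours_by_staff: dict[str, float], wages: dict[str, float]) -> float:
    """Cost of staff time for one activity: sum of hours x wage per staff type."""
    return sum(hours * wages[staff] for staff, hours in hours_by_staff.items())


def activity_costs(
    hours: dict[str, dict[str, float]],
    other: dict[str, float],
    wages: dict[str, float],
) -> dict[str, float]:
    """Total cost per activity: staff time plus any reported non-staff costs."""
    names = set(hours) | set(other)
    return {a: staff_cost(hours.get(a, {}), wages) + other.get(a, 0.0) for a in names}


# Illustrative weekly inputs for one hypothetical hospital.
weekly_hours = {
    "case_ascertainment": {"registered_nurse": 4.0},
    "clinic_visits": {"nurse_practitioner": 3.0, "registered_nurse": 2.0},
}
weekly_other = {"clinic_visits": 20.0}  # hypothetical share of office space cost

costs = activity_costs(weekly_hours, weekly_other, HOURLY_WAGE)
print(costs["case_ascertainment"])  # 140.0
print(costs["clinic_visits"])       # 246.0
```

Keeping costs broken out by activity, rather than summing immediately, is what allows per-activity comparisons such as those reported in Table 2.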

The number of weeks spent actively implementing COMPASS varied by hospital, ranging from 33 to 71 weeks. In order to standardize comparisons between hospitals, we annualized cost and enrollment numbers for each hospital. We calculated an annualized total COMPASS cost estimate for each hospital by summing the average weekly cost of each activity and multiplying by 52. We calculated annualized enrollment numbers by dividing total enrollment by number of active weeks and multiplying by 52. Finally, we calculated average annual cost per patient by dividing the annualized total cost by the annualized number of patients who received the per-protocol intervention.
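The annualization and per-patient steps can be written out as a short Python sketch. The hospital below (39 active weeks, 30 per-protocol patients, and the weekly activity costs) is hypothetical; only the scaling to 52 weeks and the division by annualized per-protocol enrollment follow the description above.

```python
# Sketch of the annualization step: costs and per-protocol enrollment are scaled
# to 52 weeks so hospitals active for different numbers of weeks can be compared.
WEEKS_PER_YEAR = 52


def annualized_cost(avg_weekly_cost_by_activity: dict[str, float]) -> float:
    """Sum the average weekly cost of each activity, then scale to a full year."""
    return sum(avg_weekly_cost_by_activity.values()) * WEEKS_PER_YEAR


def annualized_count(total: int, active_weeks: float) -> float:
    """Convert a count observed over `active_weeks` into a 52-week equivalent."""
    return total * WEEKS_PER_YEAR / active_weeks


def annual_cost_per_patient(
    avg_weekly_cost_by_activity: dict[str, float],
    per_protocol_total: int,
    active_weeks: float,
) -> float:
    """Annualized total cost divided by annualized per-protocol enrollment."""
    patients = annualized_count(per_protocol_total, active_weeks)
    if patients == 0:
        raise ValueError("no per-protocol patients; cost per patient is undefined")
    return annualized_cost(avg_weekly_cost_by_activity) / patients


# Hypothetical hospital: active for 39 weeks, 30 per-protocol patients.
weekly = {"case_ascertainment": 140.0, "clinic_visits": 246.0}
print(annualized_cost(weekly))                            # 20072.0
print(annualized_count(30, 39))                           # 40.0
print(round(annual_cost_per_patient(weekly, 30, 39), 2))  # 501.8
```

Because the factor of 52 appears in both the numerator and the denominator, it cancels in the per-patient ratio: the result equals average weekly cost times active weeks divided by per-protocol patients (386 × 39 / 30 = 501.8 here). Annualizing matters mainly for comparing total costs and enrollment across hospitals.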

We conducted bivariate statistical tests to examine variation in costs by selected hospital characteristics, including stroke patient volume, primary stroke center (PSC) status, metropolitan status, crossover vs. sustaining site, and mean National Institutes of Health Stroke Scale (NIHSS) score among COMPASS patients. The NIHSS is a common measure of stroke severity, with higher scores indicating greater impairment.18 We used bivariate log-log regressions to estimate the elasticity of cost per patient with respect to per-protocol enrollment and with respect to stroke patient volume. We also examined average costs for key COMPASS activities. Cost estimates are summarized using means, standard deviations, medians, and interquartile ranges. All costs are presented in 2018 dollars.
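In a log-log regression, the slope of log(cost per patient) on log(enrollment or volume) is the elasticity. The Python sketch below fits that slope by ordinary least squares on synthetic, noise-free data generated from an assumed power law (not the study data) and shows two common ways to convert a slope into the effect of a 10 percent increase: the approximation slope × 10%, and the exact value 1.1^slope − 1. Which conversion underlies Table 3 is not stated in the text, so both are shown.

```python
import math


def loglog_elasticity(x: list[float], y: list[float]) -> float:
    """OLS slope of log(y) on log(x): the elasticity of y with respect to x."""
    lx = [math.log(v) for v in x]
    ly = [math.log(v) for v in y]
    mx = sum(lx) / len(lx)
    my = sum(ly) / len(ly)
    sxy = sum((a - mx) * (b - my) for a, b in zip(lx, ly))
    sxx = sum((a - mx) ** 2 for a in lx)
    return sxy / sxx


def pct_change_for_increase(beta: float, increase: float = 0.10) -> tuple[float, float]:
    """Return (approximate, exact) proportional change in y for a given increase in x."""
    approx = beta * increase             # first-order approximation
    exact = (1 + increase) ** beta - 1   # exact under the fitted log-log model
    return approx, exact


# Synthetic data from an ASSUMED model: cost = 20000 * n ** -0.7 (illustrative only).
enrollment = [12, 18, 36, 60, 90, 150]
cost_per_patient = [20000 * n ** -0.7 for n in enrollment]

beta = loglog_elasticity(enrollment, cost_per_patient)
approx, exact = pct_change_for_increase(beta)
print(round(beta, 3))          # -0.7
print(round(100 * approx, 1))  # -7.0
print(round(100 * exact, 1))   # -6.5
```

For a slope near −0.7 the two conversions differ by about half a percentage point, so the choice affects reporting precision more than interpretation. A negative elasticity is what the economies-of-scale interpretation in the Discussion relies on.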

III. Results

From November 2017 to March 2019, 4,067 stroke patients discharged home from the 22 hospitals were eligible for COMPASS, of whom 1,582 (39%) received the intervention. Hospital-level descriptive statistics are summarized in Table 1. About 64 percent of included hospitals were primary stroke centers, 59 percent were in metropolitan areas, and about 64 percent were crossover sites in the implementation phase. For comparison, we also present descriptive statistics for the 18 hospitals that were excluded from the cost analysis because they did not complete the survey or because of inactivity during the study period. Compared to the hospitals included in our analyses, excluded hospitals on average had lower stroke patient volumes, were less likely to be classified as primary stroke centers, and were more likely to be in rural areas and in the sustaining phase (i.e., in their second year of implementing COMPASS).

Table 1.

Descriptive Statistics

| | COMPASS hospitals included in analysis (n=22) | COMPASS hospitals excluded from analysis (n=18) | p-value |
|---|---|---|---|
| Hospital stroke patient volume | | | |
| Mean (SD) | 359 (411) | 335 (388) | 0.848 |
| Median (interquartile range) | 165 (121–382) | 141 (50–694) | |
| Primary Stroke Center (%) | 63.6% | 38.9% | 0.119 |
| Rural/Urban Classification | | | 0.391 |
| Metro (%) | 59.1% | 44.4% | |
| Micro (%) | 36.3% | 38.9% | |
| Rural (%) | 4.6% | 16.7% | |
| Phase | | | 0.091 |
| Implementation (%) | 63.6% | 33.3% | |
| Sustaining (%) | 36.4% | 66.7% | |

Total annual COMPASS costs

Data on COMPASS costs and enrollment are summarized in Table 2. Average annual hospital-level costs of implementing COMPASS were $74,975, and median annual costs were $59,276 (interquartile range: $46,240 to $87,285). The primary cost inputs of COMPASS consisted of time spent by registered nurses, nurse practitioners, and physicians. The overall relationship between total annual COMPASS costs and annual per-protocol enrollment was weakly positive but not statistically significant (Appendix Figure 1). Non-PSC hospitals had both lower total costs ($36,648 vs. $96,875, P=.010) and lower annual per-protocol patient enrollment (18 vs. 94 patients, P=.029), suggesting relatively low levels of engagement with COMPASS compared to hospitals with stroke certification. There were no statistically significant differences in average total costs by stroke volume, metropolitan versus non-metropolitan location, or sustaining versus crossover sites.

Table 2.

Cost and Enrollment Estimates

| COMPASS hospitals included in analysis (n=22) | Mean | (SD) | 25th Percentile | 50th Percentile | 75th Percentile |
|---|---|---|---|---|---|
| Annual Total COMPASS Cost | $74,975 | ($55,162) | $46,240 | $59,276 | $87,285 |
| Annual COMPASS Cost Per Patient | | | | | |
| Total Costs | $2,861 | ($3,120) | $735 | $1,447 | $3,475 |
| By Activity | | | | | |
| Hiring/Retraining Staff | $54 | ($121) | $3 | $6 | $72 |
| Case Ascertainment | $586 | ($725) | $136 | $360 | $660 |
| Enrollment | $362 | ($421) | $78 | $194 | $424 |
| Follow-up Calls | $342 | ($349) | $54 | $165 | $682 |
| Clinic Visits | $638 | ($708) | $196 | $456 | $652 |
| Engagement with Community Resources | $561 | ($1,137) | $22 | $102 | $229 |
| Other Costs | $317 | ($572) | $33 | $138 | $267 |
| Per-protocol Enrollment (patients) | 67 | (81) | 18 | 36 | 90 |

Per-patient annual COMPASS costs

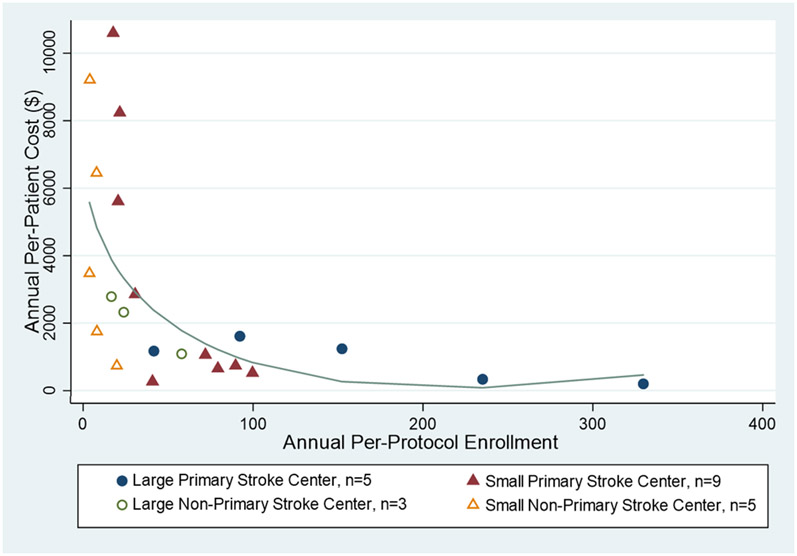

We found that the average annual cost per patient was $2,861, with a median annual cost per patient of $1,426 (interquartile range: $735 to $3,475). Figure 1 depicts per-protocol enrollment and annual cost per patient for each hospital. Across all sites, 10 percent higher per-protocol enrollment was associated with about 7.1 percent lower average cost per patient (P<.001) (Table 3). Higher stroke patient volume was also associated with lower average cost per patient: a 10 percent increase in stroke patient volume was associated with 5.1 percent lower per-patient COMPASS costs (P=.016).

Figure 1. Annual COMPASS Cost Per Enrollee and Per-Protocol Enrollment, by Site.

This scatter plot shows the annual per-protocol enrollment and per-enrollee cost in dollars for each hospital included in the analysis. Hospitals are stratified into four categories: 1) large (≥400 annual stroke patients) primary stroke centers (PSCs); 2) small (<400 annual stroke patients) PSCs; 3) large (≥165 annual stroke patients) non-PSCs; and 4) small (<165 annual stroke patients) non-PSCs. A fractional-polynomial line was fit to the scatter plot to show the relationship between enrollment and per-enrollee costs.

Table 3.

Bivariate associations between hospital characteristics and annual cost per patient, and percent change in annual cost per patient

| Variableᵃ | Coefficient | Standard Error | P-value |
|---|---|---|---|
| Annual cost per patient ($) | | | |
| Large vs. small hospitalsᵇ | −2382 | 1313 | 0.031 |
| PSC vs. non-PSC | −970 | 1400 | 0.479 |
| Non-metro vs. metro | −181 | 1386 | 0.896 |
| Sustaining phase vs. implementation phase | −1341 | 1385 | 0.266 |
| Mean NIHSS score | −1153 | 586 | 0.061 |
| % change in annual cost per patientᶜ | | | |
| 10% increase in per-protocol enrollment | −7.1% | 1.1% | <0.001 |
| 10% increase in annual stroke volume | −5.1% | 1.9% | 0.016 |

NIHSS = National Institutes of Health Stroke Scale; PSC = primary stroke center.

ᵃ We ran a separate bivariate model for each predictor variable; therefore, each coefficient represents the bivariate association between that predictor and the outcome.

ᵇ Based on the definition used in the COMPASS trial, large hospitals are those with at least 400 annual stroke patients (PSC hospitals) or at least 165 annual stroke patients (non-PSC hospitals).

ᶜ Estimated using log-log bivariate regression.

Comparisons of per-patient annual costs by observed hospital characteristics are shown in Figure 2. Median per-patient costs were lower for large (at least 400 annual stroke patients for PSC hospitals and at least 165 annual stroke patients for non-PSC hospitals), non-metropolitan, and PSC hospitals than for small, metropolitan, and non-PSC hospitals, respectively. Compared with hospitals in the sustaining phase, crossover sites in the implementation phase had similar median costs per patient but higher average costs. Costs also varied considerably more among implementation-phase hospitals than among sustaining-phase hospitals.

Figure 2. Per-patient Annual Costs, by Hospital Characteristic.

Box-and-whisker plots show the median, interquartile range, and range of per-patient annual costs in dollars by four observed hospital characteristics: 1) phase of COMPASS implementation; 2) hospital size (small or large), defined by annual stroke patient volume; 3) primary stroke center status; and 4) metropolitan vs. non-metropolitan status. Outliers (i.e., values more than 1.5 times the interquartile range below the 25th percentile or above the 75th percentile) are depicted as dots.
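The outlier rule in the Figure 2 caption is the conventional Tukey fence. The Python sketch below flags values outside the fences; the cost values are hypothetical, and `statistics.quantiles` (default "exclusive" method) is only one of several quartile conventions, so the plotting software used for Figure 2 may place the fences slightly differently.

```python
import statistics


def tukey_outliers(values: list[float], k: float = 1.5) -> list[float]:
    """Return values below Q1 - k*IQR or above Q3 + k*IQR (Tukey fences)."""
    q1, _, q3 = statistics.quantiles(values, n=4)  # quartiles, "exclusive" method
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]


# Hypothetical per-patient annual costs (USD) for eight sites, for illustration only.
per_patient_costs = [700, 900, 1200, 1400, 1500, 2000, 3000, 12000]
print(tukey_outliers(per_patient_costs))  # [12000]
```

With right-skewed cost data like these, a single high-cost site can sit far beyond the upper fence, which is one reason the text reports medians alongside means.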

COMPASS Costs by Activity

Table 2 summarizes the average annual per-patient costs for each core COMPASS activity. On average, post-acute clinic visits, which involved direct patient care, were the costliest element of the COMPASS model with an average annual cost of $638 per patient. The next largest cost drivers were case ascertainment (i.e., identifying eligible patients) and engaging with community resources, with average annual per-patient costs of $586 and $561, respectively. Of the 22 hospitals, 10 reported post-acute clinic visits as their highest-cost activity, 7 reported case ascertainment, 3 reported follow-up calls (i.e., 2- and 30-day calls), and 1 each reported community resources and enrollment as their highest-cost activity.

Most respondents reported a reasonable level of confidence in their estimates; the median confidence rating was 72.5, and only 3 of the 22 respondents reported a confidence level below 50 (on a scale of 0 to 100). Sensitivity analyses excluding these 3 hospitals did not materially change the results.

IV. Discussion

Understanding the hospital-level costs of implementing care models like the COMPASS model is essential for evaluating the model’s potential long-term sustainability and budget impact. The resource costs for implementing COMPASS consist almost entirely of staff time – particularly registered nurse time and time spent by Advanced Practice Providers (APPs) and physicians on post-acute clinic visits. Case ascertainment was a major cost-driver for many sites; automation of the case ascertainment process could significantly reduce these costs in future implementation. For context, average costs per patient for COMPASS ($2,861) were about one fifth of the average cost of an admission for stroke.19 We are not aware of any published implementation costs for similar transitional care interventions.

While this analysis was based on a relatively small sample, which limited statistical power, bivariate analyses revealed substantial heterogeneity in implementation costs across sites. Higher stroke patient volume and higher per-protocol enrollment were significantly associated with lower costs per patient, which is consistent with economies of scale: the average cost per patient declines as the number of patients receiving the intervention increases. Therefore, the COMPASS model may be more feasible in hospitals that treat a relatively high number of stroke patients.

We found that hospitals designated as primary stroke centers were more engaged with COMPASS, both spending more resources and achieving higher per-protocol enrollment compared to non-PSC hospitals. Compared to hospitals in the sustaining phase, sites in the implementation phase had similar median costs per patient but higher average costs and higher variability across sites; this may be due to hospitals that experienced relatively high COMPASS costs choosing to drop out after the first year of implementation. While descriptive statistics suggest differences in COMPASS costs across phase, PSC status, and metropolitan status, bivariate regression analyses did not reveal any statistically significant differences (Table 3), possibly because these analyses lacked statistical power due to small sample size. Additional research could further identify hospital-level factors that are associated with higher engagement and more efficient implementation of care models like COMPASS.

While the results of this analysis suggest that COMPASS could be a feasible post-acute care model, future research will need to determine whether COMPASS is effective in improving patient outcomes and/or reducing care costs for stroke patients post-discharge. Based on an average annual cost of $74,975 for implementing COMPASS in this study, and assuming an average 30-day hospital readmission cost of $14,200 for patients discharged with acute ischemic stroke,19 COMPASS could lower net costs if the model prevents about 6 readmissions per hospital per year ($74,975 / $14,200 ≈ 5.3).
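The break-even calculation above can be sketched in a few lines of Python. The two inputs are the figures stated in the text; the simplifying assumption, which is ours and not the study's, is that each avoided readmission saves its full average cost to whoever funds COMPASS, with no other savings, revenue, or payment-model effects.

```python
import math


def breakeven_readmissions(
    annual_program_cost: float, cost_per_readmission: float
) -> tuple[float, int]:
    """Readmissions a hospital would need to avoid per year to offset program cost.

    ASSUMPTION: each avoided readmission saves its full average cost; no other
    savings or revenue are counted. Returns the exact ratio and the smallest
    whole number of readmissions whose avoided cost covers the program cost.
    """
    ratio = annual_program_cost / cost_per_readmission
    return ratio, math.ceil(ratio)


# Inputs from the text: mean annual hospital-level COMPASS cost and the
# assumed average 30-day readmission cost for acute ischemic stroke.
ratio, whole = breakeven_readmissions(74_975, 14_200)
print(f"{ratio:.2f}")  # 5.28
print(whole)           # 6
```

Substituting the median annual cost ($59,276) instead of the mean gives a ratio of about 4.2, or 5 whole readmissions, which illustrates how sensitive the break-even point is to the skewed cost distribution.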

Limitations

This study has several limitations. First, we were only able to include a subset of COMPASS hospitals in our analysis. Because our data collection was aimed at collecting resource cost estimates during the second phase, most hospitals that discontinued COMPASS after the first phase of implementation were excluded. Of the 40 hospitals originally involved in the COMPASS trial, only 25 were actively implementing or sustaining COMPASS during the second phase. Therefore, bias from sample selection is a concern. For example, some hospitals may have elected to stop implementing the COMPASS model after their first year because of high costs; such hospitals would be excluded from our analysis, potentially biasing downward our cost estimates for hospitals in their second year of implementation (i.e., the sustaining phase). While we found no statistically significant differences in costs between the implementation and sustaining phase hospitals, this may simply have been due to lack of statistical power.

Second, this study relies on a single, retrospective survey conducted near the end of the COMPASS trial. Because of the timing of the study, we did not have the opportunity to validate the survey results by repeating the survey at different time points during the intervention. Although we made efforts to minimize recall bias, the risk of this bias remains. For example, smaller sites may have tended to overestimate their COMPASS activities (or vice versa), which is a potential alternative explanation for why higher enrollment was associated with lower per-patient costs. Future economic evaluations of COMPASS and similar models should seek to estimate costs at multiple time points for validation.

Finally, non-response may affect this study. While the response rate to our survey among active COMPASS hospitals was relatively high (88%), it is possible that non-response was associated with having relatively high costs or low engagement.

Conclusion

We found that implementing COMPASS appears financially feasible for most hospitals in our sample, but that implementation costs vary widely across hospitals. Hospitals interested in implementing COMPASS will want to know whether the costs of implementation will be justified by a positive budget impact through the reduction of downstream patient costs such as 30-day readmissions. Research is currently under way to determine whether the COMPASS model is likely to have a positive budget impact for hospitals, especially under new alternative payment models.

Supplementary Material

Funding:

This research was supported by the Agency for Healthcare Research and Quality (AHRQ R01HS025723; Co-PIs: Bushnell and Trogdon).

Footnotes

Conflicts of Interest: Pamela Duncan and Cheryl Bushnell: Ownership in Care Directions, LLC

References

1. Condon C, Lycan S, Duncan P, Bushnell C. Reducing Readmissions After Stroke With a Structured Nurse Practitioner/Registered Nurse Transitional Stroke Program. Stroke. 2016;47(6):1599–1604. doi: 10.1161/STROKEAHA.115.012524

2. Bushnell CD, Duncan PW, Lycan SL, et al. A Person-Centered Approach to Poststroke Care: The COMprehensive Post-Acute Stroke Services Model. J Am Geriatr Soc. 2018;66(5):1025–1030. doi: 10.1111/jgs.15322

3. Prvu Bettger J, Alexander KP, Dolor RJ, et al. Transitional care after hospitalization for acute stroke or myocardial infarction: a systematic review. Ann Intern Med. 2012;157(6):407–416. doi: 10.7326/0003-4819-157-6-201209180-00004

4. Broderick JP, Abir M. Transitions of Care for Stroke Patients. Circ Cardiovasc Qual Outcomes. 2015;8(6 Suppl 3):S190–S192. doi: 10.1161/CIRCOUTCOMES.115.002288

5. Ovbiagele B, Goldstein LB, Higashida RT, et al. Forecasting the future of stroke in the United States: a policy statement from the American Heart Association and American Stroke Association. Stroke. 2013;44(8):2361–2375. doi: 10.1161/STR.0b013e31829734f2

6. Centers for Medicare and Medicaid Services. Transitional Care Management Services. January 2019. https://www.cms.gov/Outreach-and-Education/Medicare-Learning-Network-MLN/MLNProducts/MLN-Publications-Items/ICN908628. Accessed March 6, 2020.

7. Bindman AB, Cox DF. Changes in Health Care Costs and Mortality Associated With Transitional Care Management Services After a Discharge Among Medicare Beneficiaries. JAMA Intern Med. 2018;178(9):1165–1171. doi: 10.1001/jamainternmed.2018.2572

8. Olson DM, Bettger JP, Alexander KP, et al. Transition of care for acute stroke and myocardial infarction patients: from hospitalization to rehabilitation, recovery, and secondary prevention. Evid Rep Technol Assess. 2011;(202):1–197.

9. Puhr MI, Thompson HJ. The Use of Transitional Care Models in Patients With Stroke. J Neurosci Nurs. 2015;47(4):223–234. doi: 10.1097/JNN.0000000000000143

10. Wang Y, Yang F, Shi H, Yang C, Hu H. What Type of Transitional Care Effectively Reduced Mortality and Improved ADL of Stroke Patients? A Meta-Analysis. Int J Environ Res Public Health. 2017;14(5). doi: 10.3390/ijerph14050510

11. Duncan PW, Bushnell CD, Jones SB, et al. A Randomized Pragmatic Trial of Stroke Transitional Care: The COMPASS Study. Circ Cardiovasc Qual Outcomes. 2020. In press.

12. Liu SW, Obermeyer Z, Chang Y, Shankar KN. Frequency of ED revisits and death among older adults after a fall. Am J Emerg Med. 2015;33(8):1012–1018. doi: 10.1016/j.ajem.2015.04.023

13. Keel G, Savage C, Rafiq M, Mazzocato P. Time-driven activity-based costing in health care: A systematic review of the literature. Health Policy. 2017;121(7):755–763. doi: 10.1016/j.healthpol.2017.04.013

14. Khavjou OA, Honeycutt AA, Hoerger TJ, Trogdon JG, Cash AJ. Collecting Costs of Community Prevention Programs: Communities Putting Prevention to Work Initiative. Am J Prev Med. 2014;47(2):160–165. doi: 10.1016/j.amepre.2014.02.014

15. Subramanian S, Ekwueme DU, Gardner JG, Trogdon J. Developing and Testing a Cost-Assessment Tool for Cancer Screening Programs. Am J Prev Med. 2009;37(3):242–247. doi: 10.1016/j.amepre.2009.06.002

16. Bureau of Labor Statistics. Overview of BLS Wage Data by Area and Occupation. https://www.bls.gov/bls/blswage.htm. Accessed July 23, 2019.

17. Hebert PL, Sisk JE, Wang JJ, et al. Cost-effectiveness of nurse-led disease management for heart failure in an ethnically diverse urban community. Ann Intern Med. 2008;149(8):540–548. doi: 10.7326/0003-4819-149-8-200810210-00006

18. Lyden P, Brott T, Tilley B, et al. Improved reliability of the NIH Stroke Scale using video training. NINDS TPA Stroke Study Group. Stroke. 1994;25(11):2220–2226. doi: 10.1161/01.STR.25.11.2220

19. Bambhroliya AB, Donnelly JP, Thomas EJ, et al. Estimates and Temporal Trend for US Nationwide 30-Day Hospital Readmission Among Patients With Ischemic and Hemorrhagic Stroke. JAMA Netw Open. 2018;1(4):e181190. doi: 10.1001/jamanetworkopen.2018.1190
