Abstract

The positive-negative axis of emotional valence has long been recognized as fundamental to adaptive behavior, but its origin and underlying function have largely eluded formal theorizing and computational modeling. Using deep active inference, a hierarchical inference scheme that rests on inverting a model of how sensory data are generated, we develop a principled Bayesian model of emotional valence. This formulation asserts that agents infer their valence state based on the expected precision of their action model—an internal estimate of overall model fitness (“subjective fitness”). This index of subjective fitness can be estimated within any environment and exploits the domain generality of second-order beliefs (beliefs about beliefs). We show how maintaining internal valence representations allows the ensuing affective agent to optimize confidence in action selection preemptively. Valence representations can in turn be optimized by leveraging the (Bayes-optimal) updating term for subjective fitness, which we label affective charge (AC). AC tracks changes in fitness estimates and lends a sign to otherwise unsigned divergences between predictions and outcomes. We simulate the resulting affective inference by subjecting an in silico affective agent to a T-maze paradigm requiring context learning, followed by context reversal. This formulation of affective inference offers a principled account of the link between affect, (mental) action, and implicit metacognition. It characterizes how a deep biological system can infer its affective state and reduce uncertainty about such inferences through internal action (i.e., top-down modulation of priors that underwrite confidence). Thus, we demonstrate the potential of active inference to provide a formal and computationally tractable account of affect. Our demonstration of the face validity and potential utility of this formulation represents the first step within a larger research program. 
Next, this model can be leveraged to test the hypothesized role of valence by fitting the model to behavioral and neuronal responses.

1. Introduction

We naturally aspire to attain and maintain aspects of our lives that make us feel “good.” On the flip side, we strive to avoid environmental exchanges that make us feel “bad.” Feeling good or bad—emotional valence—is a crucial component of affect and plays a critical role in the struggle for existence in a world that is ever-changing yet also substantially predictable (Johnston, 2003). Across all domains of our lives, affective responses emerge in context-dependent yet systematic ways to ensure survival and procreation (i.e., to maximize fitness).

In healthy individuals, positive affect tends to signal prospects of increased fitness, such as the satisfaction and anticipatory excitement of eating. In contrast, negative affect tends to signal prospects of decreased fitness—such as the pain and anticipatory anxiety associated with physical harm. Such valenced states can be induced by any sensory modality, and even by simply remembering or imagining scenarios unrelated to one's current situation, allowing for a domain-general adaptive function. However, that very same domain-generality has posed difficulties when attempting to capture such good and bad feelings in formal or normative treatments. This kind of formal treatment is necessary to render valence quantifiable, via mathematical or numerical analysis (i.e., computational modeling). In this letter, we propose a computational model of valence to help meet this need.

In formulating our model, we build on both classic and contemporary work on understanding emotional valence at psychological, neuronal, behavioral, and computational levels of description. At the psychological level, a classic perspective has been that valence represents a single dimension (from negative to positive) within a two-dimensional space of “core affect” (Russell, 1980; Barrett & Russell, 1999), with the other dimension being physiological arousal (or subjective intensity); further dimensions beyond these two have also been considered (e.g., control, predictability; Fontaine, Scherer, Roesch, & Ellsworth, 2007). Alternatively, others have suggested that valence is itself a two-dimensional construct (Cacioppo & Berntson, 1994; Briesemeister, Kuchinke, & Jacobs, 2012), with the intensity of negative and positive valence each represented by its own axis (i.e., where high negative and positive valence can coexist to some extent during ambivalence).

At a neurobiological level, there have been partially corresponding results and proposals regarding the dimensionality of valence. Some brain regions (e.g., ventromedial prefrontal (VMPFC) regions) show activation patterns consistent with a one-dimensional view (reviewed in Lindquist, Satpute, Wager, Weber, & Barrett, 2016). In contrast, single neurons have been found that respond preferentially to positive or negative stimuli (Paton, Belova, Morrison, & Salzman, 2006; Morrison & Salzman, 2009), and separable brain systems for behavioral activation and inhibition (often linked to positive and negative valence, respectively) have been proposed (Gray, 1994), based on work highlighting brain regions that show stronger associations with reward and/or approach behavior (e.g., nucleus accumbens, left frontal cortex, dopamine systems; Rutledge, Skandali, Dayan, & Dolan, 2015) or punishment and/or avoidance behavior (e.g., amygdala, right frontal cortex; Davidson, 2004). However, large meta-analyses (e.g., Lindquist et al., 2016) have not found strong support for these views (with the exception of one-dimensional activation in VMPFC), instead finding that the majority of brain regions are activated by increases in both negative and positive valence, suggesting a more integrative, domain-general use of valence information, which has been labeled an “affective workspace” model (Lindquist et al., 2016). Note that the associated domain-general (“constructivist”) account of emotions (Barrett, 2017)—as opposed to just valence—contrasts with older views suggesting domain-specific subcortical neuronal circuits and associated “affect programs” for different emotion categories (e.g., distinct circuits for generating the feelings and visceral/behavioral expressions of anger, fear, or happiness; Ekman, 1992; Panksepp, Lane, Solms, & Smith, 2017). However, this debate between constructivist and “basic emotions” views goes beyond the scope of our proposal. 
Questions about the underlying basis of valence treated here are much narrower than (and partially orthogonal to) debates about the nature of specific emotions, which further encompasses appraisal processes, facial expression patterns, visceral control, cognitive biases, and conceptualization processes, among others (Smith & Lane, 2015; Smith, Killgore, Alkozei, & Lane, 2018; Smith, Killgore, & Lane, 2020).

At a computational level of description, prior work related to valence has primarily arisen out of reinforcement learning (RL) models—with formal models of links between reward/punishment (with close ties to positive/negative valence), learning, and action selection (Sutton & Barto, 2018). More recently, models of related emotional phenomena (mood) have arisen as extensions of RL (Eldar, Rutledge, Dolan, & Niv, 2016; Eldar & Niv, 2015). These models operationalize mood as reflecting a recent history in unexpected rewards or punishments (positive or negative reward prediction errors (RPEs)), where many recent better-than-expected outcomes lead to positive mood and repeated worse-than-expected outcomes lead to negative mood. The formal mood parameter in these models functions to bias the perception of subsequent rewards and punishments with the subjective perception of rewards and punishments being amplified by positive and negative mood, respectively. Interestingly, in the extreme, this can lead to instabilities (reminiscent of bipolar or cyclothymic dynamics) in the context of stable reward values. However, these modeling efforts have had a somewhat targeted scope and have not aimed to account for the broader domain-general role of valence associated with findings supporting the affective workspace view mentioned above.

In this letter, we demonstrate that hierarchical (i.e., deep) Bayesian networks, solved using active inference (Friston, Parr, & de Vries, 2018), afford a principled formulation of emotional valence, building on both the work mentioned above and prior work on other emotional phenomena within the active inference framework (Smith, Parr, & Friston, 2019; Smith, Lane, Parr, & Friston, 2019; Smith, Lane, Nadel, & Moutoussis, 2020; Joffily & Coricelli, 2013; Clark, Watson, & Friston, 2016; Seth & Friston, 2016). Our hypothesis is that emotional valence can be formalized as a state of self that is inferred on the basis of fluctuations in the estimated confidence (or precision) an agent has in her generative model of the world that informs her decisions. This is implemented as a hierarchically superordinate state representation that takes the aforementioned confidence estimates at the lower level as data for further self-related inference. After motivating our approach on theoretical and observational grounds, we demonstrate affective inference by simulating a synthetic animal that “feels” its way forward during successive explorations of a T-maze. We use unexpected context changes to elicit affective responses, motivated in part by the fact that affective disorders are associated with deficiencies in performing this kind of task (Adlerman et al., 2011; Dickstein et al., 2010).

2. A Bayesian View on Life: Survival of the Fittest Model

Every living thing from bachelors to bacteria seeks glucose proactively, and does so long before internal stocks run out. As adaptive creatures, we seek outcomes that tend to promote our long-term functional and structural integrity (i.e., the well-bounded set of states that characterize our phenotypes). That adaptive and anticipatory nature of biological life is the focus of the formal Bayesian framework called active inference. This framework revolves around the notion that all living systems embody statistical models of their worlds (Friston, 2010; Gallagher & Allen, 2018). In this way, beliefs about the consequences of different possible actions can be evaluated against preferred (typically phenotype-congruent) consequences to inform action selection. In active inference, every organism enacts an implicit phenotype-congruent model of its embodied existence (Ramstead, Kirchhoff, Constant, & Friston, 2019; Hesp et al., 2019), which has been referred to as self-evidencing (Hohwy, 2016). Active inference has been used to develop neural process theories and explain the acquisition of epistemic habits (Friston, FitzGerald et al., 2016; Friston, FitzGerald, Rigoli, Schwartenbeck, & Pezzulo, 2017). This framework provides a formal account of the balance between seeking informative outcomes (that optimize future expectations) versus preferred outcomes (based on current expectations; Schwartenbeck, FitzGerald, Mathys, Dolan, & Friston, 2015).

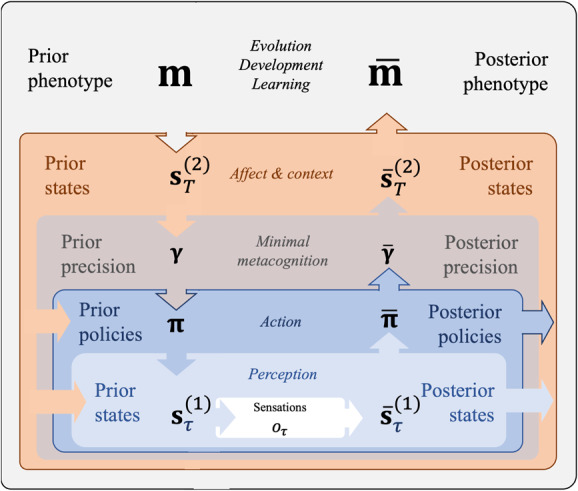

Active inference formalizes our survival and procreation in terms of a single imperative: to minimize the divergence between observed outcomes and phenotypically expected (i.e., preferred) outcomes under a (generative) model that is fine-tuned over phylogeny and ontogeny (Badcock, 2012; Badcock, Davey, Whittle, Allen, & Friston, 2017; Badcock, Friston, & Ramstead, 2019). This discrepancy can be quantified using an information-theoretic quantity called variational free energy (denoted F; see appendix A1; Friston, 2010). To minimize free energy is mathematically equivalent to maximizing (a lower bound on) Bayesian model evidence, which quantifies model fit or subjective fitness; this contrasts with biological fitness, which is defined as actual reproductive success (Constant, Ramstead, Veissière, Campbell, & Friston, 2018). Subjective fitness more specifically pertains to the perceived (i.e., internally estimated) efficacy of an organism's action model in realizing phenotype-congruent (i.e., preferred) outcomes. Through natural selection, organisms that can realize phenotype-congruent outcomes more efficiently than their conspecifics will (on average) tend to experience a fitness benefit. This type of natural (model) selection will favor a strong correspondence between subjective fitness and biological fitness by selecting for phenotype-congruent preferences and the means of achieving them. This Bayesian perspective casts groups of organisms and entire species as families of viable models that vary in their fit to a particular niche. On this higher level of description, evolution can be cast as a process of Bayesian model selection (Campbell, 2016; Constant et al., 2018; Hesp et al., 2019), in which biological fitness now becomes the evidence (also known as marginal likelihood) that drives model (i.e., natural) selection across generations. 
In the balance of this letter, we exploit the correspondence between subjective fitness and model evidence to characterize affective valence. Section 3 begins by reviewing the formalism that underlies active inference. In brief, active inference offers a generic approach to planning as inference (Attias, 2003; Botvinick & Toussaint, 2012; Kaplan & Friston, 2018) under the free energy principle (Friston, 2010). It provides an account of belief updating and behavior as the inversion of a generative model. In this section we emphasize the hierarchical and nested nature of generative models and describe the successive steps of increasing model complexity that enable an agent to navigate increasingly complicated environments. Of the lowest complexity is a simple, single-time-point model of perception. Somewhat more complex perceptual models can include anticipation of future observations. Complexity increases when a model incorporates action selection and must therefore anticipate the observed consequences of different possible plans or policies. As we explain, one key aspect of adaptive planning is the need to afford the right level of precision or confidence in one's own action model. This constitutes an even higher level of model complexity, which can be regarded as an implicit (i.e., subpersonal) form of metacognition—a (typically) unconscious process estimating the reliability of one's own model. This section concludes by describing the setup we use to illustrate affective inference and the key role of an update term within our model that we refer to as “affective charge.”

In section 3, we also introduce the highest level of model complexity we consider, which affords a model the ability to perform affective inference. In brief, we add a representation of confidence, in terms of “good” and “bad” (i.e., valenced) states that endow our affective agent with explicit (i.e., potentially self-reportable) beliefs about valence and enable her to optimize her confidence in expected (epistemic and pragmatic) consequences.

Having defined a deep generative model (with two hierarchical levels of state representation) that is apt for representing and leveraging valence representations, section 4 uses numerical analyses (i.e., simulations) to illustrate the associated belief updating and behavior. We conclude in section 5 with a discussion of the implications of this work, such as the relationship between implicit metacognition and affect, connections to reinforcement learning, and future empirical directions.

3. Methods

3.1. An Incremental Primer on Active Inference

At the core of active inference lie generative models that operate with (and only with) local information (i.e., without external supervision, which maintains biological plausibility). We focus on partially observable Markov decision processes (MDPs), a common generative model for Bayesian inference over discretized states, where beliefs take the form of categorical probability distributions. MDPs can be used to update beliefs about hidden states of the world “out there” (denoted $s$), based on sensory inputs (referred to as outcomes or observations, denoted $o$). Given the importance of the temporally deep and hierarchical structure afforded by MDPs in our formulation, we introduce several steps of increasing model complexity on which our formulation will build, following the sequence in Figure 1.

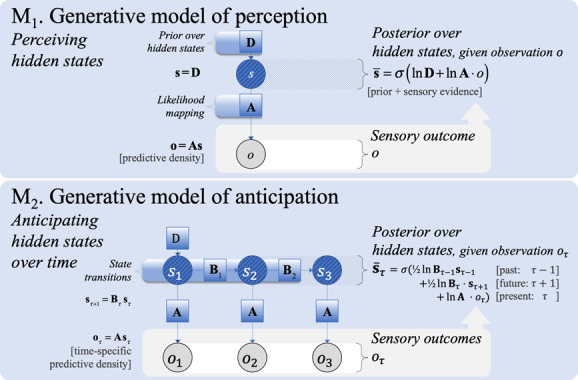

Figure 1: The first ($M_1$, top panel) and second ($M_2$, bottom panel) steps of a generative model of increasing complexity. $M_1$: A minimal generative model of perception can infer hidden states $s$ from an observation $o$, based on prior beliefs ($D$) and a likelihood mapping ($A$). $M_2$: A generative model of anticipation extends perception (as in $M_1$) forward into the future (and backward into the past) using a transition matrix ($B$) for hidden states.

3.1.1. Step 1: Perception

At the lowest complexity, we consider a generative model of perception (see Table 1) at a single point in time: $M_1$ in Figure 1 (top panel). It entails prior beliefs about hidden states (prior expectation $D$), as well as beliefs about how hidden states generate sensory outcomes (via a likelihood mapping $A$). Perception here corresponds to a process of inferring which hidden states (posterior expectations) provide the best explanation for observed outcomes (see also appendix A2). However, this model of perception is too simple for modeling most agents, because it fails to account for the transitions between hidden states over time that lend dynamics or narratives to the world and to subsequent inference. This takes us to the next level of model complexity.

Table 1: A Generative Model of Perception.

| Prior Beliefs (Generative Model) (P) | Approximate Posterior Beliefs (Q) |
|---|---|

Notes: The generative model is defined in terms of prior beliefs about hidden states, $P(s) = \mathrm{Cat}(D)$ (where $D$ is a vector encoding the prior probability of each state), and a likelihood mapping, $P(o \mid s) = \mathrm{Cat}(A)$ (where $A$ is a matrix encoding the probability of each outcome given a particular state). $\mathrm{Cat}$ denotes a categorical probability distribution (see also the supplementary information in appendix A3). Through variational inference, the beliefs about hidden states are updated given an observed sensory outcome $o$, thus arriving at an approximate posterior $Q(s) = \mathrm{Cat}(\bar{s})$ (see also supplementary information in appendix A1), where $\bar{s} = \sigma(\ln D + \ln A \cdot o)$ and $\sigma$ is the softmax (normalized exponential) function. Here, the dot notation indicates backward matrix multiplication (in the case of a normalized set of probabilities and a likelihood mapping): for a given outcome, $A \cdot o$ returns the (renormalized) probability or likelihood of each hidden state $s$ (see also the supplementary information in appendix A2).
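The single-time-point update described in these notes can be illustrated with a short sketch. It assumes the posterior takes the standard form of a softmax over the sum of the log prior and the log likelihood of the observed outcome; the two-state, two-outcome numbers and all function names are hypothetical and serve only as illustration.

```python
import math


def softmax(log_values):
    """Normalized exponential of a list of log-probabilities."""
    peak = max(log_values)  # subtract the maximum for numerical stability
    weights = [math.exp(v - peak) for v in log_values]
    total = sum(weights)
    return [w / total for w in weights]


def infer_states(D, A, outcome):
    """Posterior over hidden states after observing one outcome index.

    D[s] is the prior probability of state s; A[o][s] is P(o | s).
    """
    eps = 1e-16  # avoids log(0); an implementation detail, not from the text
    log_posterior = [
        math.log(D[s] + eps) + math.log(A[outcome][s] + eps)
        for s in range(len(D))
    ]
    return softmax(log_posterior)


# Hypothetical example: flat prior, outcome 0 is more likely under state 0.
D = [0.5, 0.5]
A = [[0.9, 0.2],   # P(o=0 | s=0), P(o=0 | s=1)
     [0.1, 0.8]]   # P(o=1 | s=0), P(o=1 | s=1)
posterior = infer_states(D, A, outcome=0)
print(posterior)
```

With a flat prior, the posterior is simply the renormalized column of likelihoods for the observed outcome, which is what the dot notation in the notes describes.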

3.1.2. Step 2: Anticipation

The next increase in complexity involves a generative model that specifies how hidden states evolve from one point in time to the next (according to state transition probabilities $B$). As shown in Table 2 ($M_2$ in Figure 1, bottom panel), updating posterior beliefs about hidden states ($\bar{s}_{\tau}$) now involves the integration of beliefs about past states ($\bar{s}_{\tau-1}$), sensory evidence ($o_{\tau}$), and beliefs about future states ($\bar{s}_{\tau+1}$). From here, the natural third step is to consider how dynamics depend on the choices of the creature in question.

Table 2: A Generative Model of Anticipation ($M_2$ in Figure 1, Bottom Panel).

| Generative Model (P) | Approximate Posterior Beliefs (Q) |
|---|---|

Notes: The generative model is defined in terms of prior beliefs about initial hidden states ($D$), hidden state transitions ($B$), and a likelihood mapping ($A$). Note that the factor of $\tfrac{1}{2}$ in posterior state beliefs results from the marginal message-passing approximation introduced by Parr et al. (2019).
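To make the integration of past, present, and future concrete, the following sketch updates beliefs about a single intermediate time step. It assumes one common way of writing the marginal message-passing update: a softmax over half the sum of a forward message (from the previous state) and a backward message (from the next state), plus the log likelihood of the current outcome. The use of a plain transpose for the backward message, the function names, and the numbers are simplifying assumptions for illustration only.

```python
import math


def softmax(log_values):
    """Normalized exponential of a list of log-probabilities."""
    peak = max(log_values)
    weights = [math.exp(v - peak) for v in log_values]
    total = sum(weights)
    return [w / total for w in weights]


def update_middle_state(A, B, outcome, s_prev, s_next):
    """Beliefs about the current state from past, present, and future.

    B[i][j] is P(next state = i | current state = j); A[o][s] is P(o | s).
    """
    eps = 1e-16  # avoids log(0)
    n = len(s_prev)
    # Forward message: where the previous beliefs predict we are now.
    forward = [sum(B[i][j] * s_prev[j] for j in range(n)) for i in range(n)]
    # Backward message: which current states would explain the next beliefs.
    backward = [sum(B[i][j] * s_next[i] for i in range(n)) for j in range(n)]
    log_posterior = [
        0.5 * (math.log(forward[s] + eps) + math.log(backward[s] + eps))
        + math.log(A[outcome][s] + eps)
        for s in range(n)
    ]
    return softmax(log_posterior)


# Hypothetical example: "sticky" transitions and an outcome favoring state 1.
A = [[0.9, 0.2],
     [0.1, 0.8]]
B = [[0.9, 0.1],
     [0.1, 0.9]]
flat = [0.5, 0.5]
no_context = update_middle_state(A, B, 1, s_prev=flat, s_next=flat)
with_context = update_middle_state(A, B, 1, s_prev=[0.9, 0.1], s_next=flat)
print(no_context, with_context)
```

In this example, a strong prior belief that the agent was previously in state 0 pulls the posterior toward state 0, even though the current outcome favors state 1.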

3.1.3. Step 3: Action

The temporally extended generative model already discussed can be extended to model planning ($M_3$ in Figure 2; see Table 3) by conditioning transition probabilities ($B$) on action. Policy selection (i.e., planning) can now be cast as a form of Bayesian model selection, in which each policy (a sequence of $B$-matrices, subscripted by $\pi$ for policy) represents a possible version of the future. A priori, the agent's beliefs about policies ($\pi_0$) depend on a baseline prior expectation about the most likely policies (which can often be thought of as habits, denoted $E$) and an estimate of the negative log evidence it expects to obtain for each policy: the expected free energy (denoted $G$). The latter is biased toward phenotype-congruence in the sense that any given behavioral phenotype is associated with a range of species-typical (i.e., preferred) sensory outcomes. For example, within their respective ecological niches, different creatures will be more or less likely to sense different temperatures through their thermoreceptors (i.e., those consistent with their survival). These phenotypic priors (“prior preferences”) are cast in terms of a probability over observed future outcomes. Together, the baseline and action model priors ($E$ and $G$) are supplemented by the evidence that each new observation provides for a particular policy, leading to a posterior distribution over policies ($\bar{\pi}$).

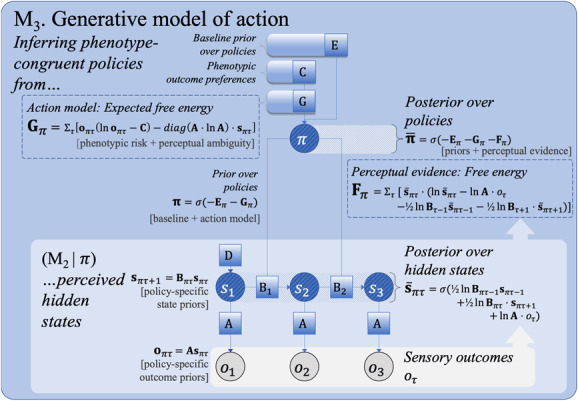

Figure 2: The third step ($M_3$) of an incremental summary of active inference. In a generative model of action, state transitions are conditioned on policies $\pi$. Prior policy beliefs are informed by the baseline prior over policies (“model free,” denoted $E$) and the expected free energy ($G$), which evaluates each policy-specific perception model (as in $M_2$) in terms of the expected risk and ambiguity. Risk biases the action model toward phenotype-congruent preferences ($C$). Posterior policy beliefs are informed by the fit between anticipated (policy-specific) and preferred outcomes, while at the same time minimizing their ambiguity.

Table 3: A Generative Model of Action ($M_3$ in Figure 2).

| Prior Beliefs (Generative Model) (P) | Posterior Beliefs (Q) and Expectations |
|---|---|

Note: Posterior policies ($\bar{\pi}$) are inferred from (policy-specific) posterior beliefs about hidden states, based on (policy-specific) state transitions ($B$), the baseline policy prior ($E$), the expected free energy ($G$; the action model), and prior preferences over outcomes ($C$).

Expected free energy can be decomposed into two terms, referred to as the risk and ambiguity for each policy. The risk of a policy is the expected divergence between anticipated and preferred outcomes (denoted by $C$), where the latter is a prior that encodes phenotype-congruent outcomes (e.g., reward or reinforcement in behavioral paradigms). Risk can therefore be thought of as similar to a reward probability estimate for each policy. The ambiguity of a policy corresponds to the perceptual uncertainty associated with different states (e.g., searching under a streetlight versus searching in the dark). Policies with lower ambiguity (i.e., those expected to provide the most informative observations) will have a higher probability, providing the agent with an information-seeking drive. The resulting generative model provides a principled account of the subjective relevance of behavioral policies and their expected outcomes, in which an agent trades off between seeking reward and seeking new information (Friston, FitzGerald, Rigoli, Schwartenbeck, & Pezzulo, 2017). Furthermore, it generalizes many established formulations of optimal behavior (Itti & Baldi, 2009; Schmidhuber, 2010; Mirza, Adams, Mathys, & Friston, 2016; Veale, Hafed, & Yoshida, 2017) and provides a formal description of the motivated and self-preserving behavior of living systems (Friston, Levin, Sengupta, & Pezzulo, 2015).
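The decomposition into risk and ambiguity can be sketched numerically. The code below assumes a single future time step, takes risk to be the Kullback-Leibler divergence between predicted and preferred outcomes, takes ambiguity to be the expected entropy of the likelihood mapping, and forms the policy prior as a softmax of the log baseline prior minus the precision-weighted expected free energy. The precision weight `gamma` anticipates the expected precision term of the next step (setting it to 1 leaves it out); the policy names, numbers, and function names are hypothetical.

```python
import math

EPS = 1e-16  # avoids log(0); an implementation detail


def softmax(log_values):
    """Normalized exponential of a list of log-probabilities."""
    peak = max(log_values)
    weights = [math.exp(v - peak) for v in log_values]
    total = sum(weights)
    return [w / total for w in weights]


def expected_free_energy(A, C, predicted_states):
    """Risk plus ambiguity for one policy, one step ahead.

    A[o][s] is P(o | s); C[o] is the preferred probability of outcome o;
    predicted_states[s] is the policy-specific belief about the next state.
    """
    n_outcomes, n_states = len(A), len(predicted_states)
    predicted_outcomes = [
        sum(A[o][s] * predicted_states[s] for s in range(n_states))
        for o in range(n_outcomes)
    ]
    # Risk: divergence of anticipated outcomes from preferred outcomes.
    risk = sum(
        q * (math.log(q + EPS) - math.log(C[o] + EPS))
        for o, q in enumerate(predicted_outcomes)
    )
    # Ambiguity: expected entropy of outcomes given each predicted state.
    ambiguity = sum(
        predicted_states[s]
        * -sum(A[o][s] * math.log(A[o][s] + EPS) for o in range(n_outcomes))
        for s in range(n_states)
    )
    return risk + ambiguity


def policy_prior(E, G, gamma=1.0):
    """Prior over policies from baseline prior E and expected free energy G."""
    return softmax([math.log(e + EPS) - gamma * g for e, g in zip(E, G)])


# Hypothetical example: outcome 0 is preferred; "approach" leads to state 0.
A = [[0.9, 0.1],
     [0.1, 0.9]]
C = [0.9, 0.1]
predicted = {"approach": [1.0, 0.0], "avoid": [0.0, 1.0]}
G = [expected_free_energy(A, C, s) for s in predicted.values()]
E = [0.5, 0.5]
pi_low = policy_prior(E, G, gamma=0.5)
pi_high = policy_prior(E, G, gamma=4.0)
print(G, pi_low, pi_high)
```

Both policies are equally ambiguous here, so the difference in expected free energy comes entirely from risk, and a higher precision weight makes the prior depend more sharply on that difference.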

3.1.4. Step 4: Implicit Metacognition

The three steps of increasing complexity are jointly sufficient for the vast majority of (current) active inference applications. However, a fourth level is required to enable an agent to estimate its own success, which could be thought of as a minimal form of (implicit, non-reportable) metacognition ($M_4$ in Figure 3; see Table 4). Estimation of an agent's own success specifically depends on an expected precision term (denoted $\gamma$) that reflects prior confidence in the expected free energy over policies ($G$). This expected precision term modulates the influence of expected free energy on policy selection, relative to the fixed-form policy prior ($E$): higher $\gamma$ values afford a greater influence of the expected free energies of each policy entailed by one's current action model. Formulated in this way, we can think of $\gamma$ as an internal estimate of model fitness (subjective fitness), because it represents an estimate of confidence ($M_4$) in a phenotype-congruent model of actions ($M_3$), given inferred hidden states of the environment ($M_1$ and $M_2$).

Figure 3: The fourth step ($M_4$) of our incremental description of active inference in terms of the nested processes of perception ($M_1$ and $M_2$ in Figure 1), action ($M_3$ in Figure 2), and implicit metacognition ($M_4$ in this figure), emphasizing the inherently hierarchical, recurrent nature of these generative models. This generative model infers confidence in its own action model in terms of the expected precision ($\gamma$), which modulates reliance on $G$ for policy selection (as in $M_3$), based on perceptual inferences (as in $M_1$ and $M_2$). Expected precision ($\gamma$) changes when inferred policies differ from expected policies. This term increases when posterior (policy-averaged) expected free energy is lower than when averaged under the policy prior ($(\bar{\pi} - \pi_0) \cdot G < 0$), and decreases when it is higher ($(\bar{\pi} - \pi_0) \cdot G > 0$).

Table 4: A Generative Model of Minimal (Implicit) Metacognition ($M_4$ in Figure 3): Inferring Expected Precision $\gamma$ from Posterior Policies $\bar{\pi}$, Based on a Gamma Distribution with Temperature $\beta$.

| Prior Beliefs (Generative Model) (P) | Posterior Beliefs (Q) and Expectations |
|---|---|

Note: Bayes-optimal updates of $\beta$ differ only in sign from the term we label affective charge (AC; see also $M_4$ in Figure 3).

In turn, estimates for this precision term ($\gamma$) are informed by a (gamma) prior that is usually parameterized by a rate parameter $\beta$, with which it has an inverse relation. When expected model evidence is greater under posterior beliefs compared to prior beliefs (i.e., when $(\bar{\pi} - \pi_0) \cdot G < 0$), $\gamma$ values increase. That is, confidence in the success of one's model rises. In the opposite case (when $(\bar{\pi} - \pi_0) \cdot G > 0$), $\gamma$ values decrease. That is, confidence in the success of one's model falls. Note that while related, $\gamma$ values are not redundant with the precision of the distribution over policies ($\pi$). High values of the latter (which correspond to high confidence in the best policy or action) need not always correspond to high confidence in the success of one's model (high $\gamma$). To emphasize its relation to valence in our formulation, going forward we refer to $\beta$ updates using the term affective charge (AC):

| (3.1) |

This shows that the timescale over which beliefs about policies are updated sets the relevant timescale for AC, such that valence is linked inextricably to action. AC can only be nonzero when inferred policies differ from expected policies ($\bar{\pi} \neq \pi_0$). It is positive when perceptual evidence favors an agent's action model and negative otherwise. In other words, positive and negative AC correspond, respectively, to increased and decreased confidence in one's action model. Accordingly, because $G$ is a function of achieving preferred outcomes, AC can be construed as a reward prediction error, where reward is inversely proportional to $G$ (Friston et al., 2014). For example, a predator may be confidently pleased with itself after spotting a prey (positive AC) and frustrated when it escapes (negative AC). However, having precise beliefs about policies should not be confused with having confidence in one's action model. For instance, consider prey animals that are nibbling happily on food and suddenly find themselves being pursued by a voracious predator. While fleeing was initially an unlikely policy, this dramatically changes upon encountering the predator. Now these animals have a very precise belief that they should flee, but this dramatic change in their expected course of action suggests that their action model has become unreliable. Thus, while they have precise beliefs about action, AC would be highly negative (i.e., a case of negative valence but confident action selection).
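The sign behavior described here can be checked with a small numerical sketch. Because equation 3.1 is not legible in this copy, the code assumes a form that is consistent with the surrounding description: AC is the difference between the expected free energy averaged under prior policy beliefs and under posterior policy beliefs, the posterior rate parameter is the prior rate parameter minus AC, and expected precision is the inverse of the rate parameter. The function names, the positivity check, and the numbers are hypothetical.

```python
def affective_charge(pi_prior, pi_posterior, G):
    """Assumed form of AC: prior-averaged minus posterior-averaged G."""
    return sum((p0 - p) * g for p0, p, g in zip(pi_prior, pi_posterior, G))


def updated_precision(beta, ac):
    """Posterior expected precision, assuming beta_posterior = beta - AC."""
    beta_posterior = beta - ac
    if beta_posterior <= 0:
        # Guard added for this sketch only; not taken from the text.
        raise ValueError("rate parameter must stay positive")
    return 1.0 / beta_posterior


# Hypothetical example: policy 0 has low, policy 1 high expected free energy.
G = [0.3, 2.1]
pi_prior = [0.5, 0.5]
beta = 1.0  # prior expected precision is 1 / beta = 1

# Evidence shifts beliefs toward the low-G policy: the action model is confirmed.
ac_confirmed = affective_charge(pi_prior, [0.9, 0.1], G)
# Evidence shifts beliefs toward the high-G policy: the action model is violated.
ac_violated = affective_charge(pi_prior, [0.1, 0.9], G)
# No change in policy beliefs: no affective charge.
ac_unchanged = affective_charge(pi_prior, pi_prior, G)

gamma_confirmed = updated_precision(beta, ac_confirmed)
gamma_violated = updated_precision(beta, ac_violated)
print(ac_confirmed, ac_violated, gamma_confirmed, gamma_violated)
```

The violated case mirrors the prey example: the posterior over policies can be very precise (here 0.9 on a single policy) while AC is negative and expected precision falls.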

This completes our formal description of active inference under Markov decision process models. This description emphasizes the recursive and hierarchical composition of such models that equip a simple likelihood mapping between unobservable (hidden) states and observable outcomes with dynamics. These dynamics (i.e., state transitions) are then cast in terms of policies, where the policies themselves have to be inferred. Finally, the ensuing planning as inference is augmented with metacognitive beliefs in order to optimize the reliance on expected free energy (i.e., based on one's current model) during policy selection. This model calls for Bayesian belief updating that can be framed in terms of affective charge (AC).

AC is formally related to reward prediction error within reinforcement learning models (Friston et al., 2014; Schultz, Dayan, & Montague, 1997; Stauffer, Lak, & Schultz, 2014). Accordingly, it may be reported or encoded by neuromodulators like dopamine in the brain (Friston, Rigoli et al., 2015; Schwartenbeck et al., 2015), a view that has been empirically supported using functional magnetic resonance imaging of decision making under uncertainty (Schwartenbeck et al., 2015). The formal relationship between AC (across each time step) and the neuronal dynamics that may optimize it within each time step can be obtained (in the usual way) through a gradient descent on free energy (as derived in Friston, FitzGerald et al., 2017). Through substitution of AC, we find that posterior beliefs about expected precision ($\bar{\gamma}$) satisfy the following equality:

| (3.2) |

where denotes the passage of time within a trial time step and thus sets the timescale of convergence (here the bar notation indicates posterior beliefs; dot notation indicates rate of change). The corresponding analytical solution shows that the magnitude of fluctuations in expected precision is proportional to AC:

| (3.3) |

We discuss the potential neural basis of AC further below. In the next section, we describe the simulation setup that we will use to quantitatively illustrate the proposed role of AC in affective behavior.
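The within-time-step dynamics referred to around equations 3.2 and 3.3 can be illustrated with a simple relaxation. Those equations are not legible in this copy, so the sketch below is only an assumed stand-in: it holds policy beliefs (and therefore AC) fixed and performs a discretized gradient descent that moves the posterior rate parameter toward the prior rate parameter minus AC. The step size, iteration count, and names are hypothetical.

```python
def relax_beta(beta_prior, ac, n_iterations=16, step=0.5):
    """Iteratively move the posterior rate parameter toward beta_prior - AC.

    Returns the whole trajectory so the convergence can be inspected.
    """
    beta_bar = beta_prior
    trajectory = [beta_bar]
    for _ in range(n_iterations):
        # Assumed gradient: zero exactly when beta_bar == beta_prior - ac.
        gradient = beta_bar - beta_prior + ac
        beta_bar -= step * gradient
        trajectory.append(beta_bar)
    return trajectory


# Positive AC lowers the rate parameter, so expected precision rises.
rising = [1.0 / b for b in relax_beta(beta_prior=1.0, ac=0.5)]
# Negative AC raises the rate parameter, so expected precision falls.
falling = [1.0 / b for b in relax_beta(beta_prior=1.0, ac=-0.5)]
print(rising[-1], falling[-1])
```

Under these assumptions, the total shift in the rate parameter equals AC in magnitude, so larger affective charge produces larger fluctuations in expected precision.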

3.1.5. The T-Maze Paradigm

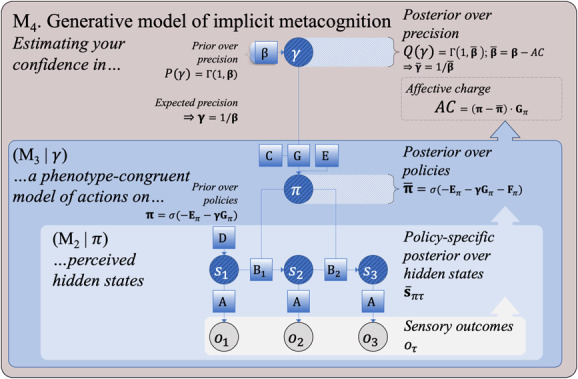

The generative model we have described has been formulated in a generic way (reflecting the domain-generality of our formulation). The particular implementation of active (affective) inference we use in this letter is based on a T-maze paradigm (see Figure 4), for which an active-inference MDP has been validated previously (Pezzulo, Rigoli, & Friston, 2015). Here we describe this implementation and subsequently use it to show simulations demonstrating affective inference in a synthetic animal. Simulated behavior in this paradigm is consistent with that observed in real rats within such contexts.

Figure 4: The setup of the T-maze task (top panel) and its typical solution (bottom panel). The synthetic agent (here, a rat) starts in the middle of the T-maze. If it moves up, it will encounter two one-way doors, left and right, which lead to either a rewarding food source or a painful shock (high versus low pragmatic value, respectively). If it moves downward, it will encounter an informative cue (high epistemic value) that indicates whether the food is in the left or right arm.

For the sake of simplicity, the agent is equipped with (previously gathered) prior knowledge about the workings of the T-maze in her generative model. Starting near the central intersection, the agent can either stay put or move in three different directions: left, right, or down in the T-maze. She knows that a tasty reward is located in either the left or right arm of the T-maze, and a painful shock is in the opposite arm. She is also aware that the left and right arms are one-way streets (i.e., absorbing states): once entered, she must remain there until the end of the trial. She knows that an informative cue at the downward location provides reliable contextual information about whether the reward is located in the left or right arm in the current trial. The key probability distributions for the generative model are provided in Figure 5.

Figure 5:

A generative model for the T-maze setup of Figure 4, with the priors (top-left panel) as in Figure 3, now specified as vectors or matrices. Here, the probabilities reflect a set of simple assumptions embedded in the agent's generative model, each of which could itself be optimized by fitting to empirical data. Middle-left panel: Prior expectations for initial states are defined as uniform, given the rat has been trained in a series of random left and right trials. Middle panel: The vector encoding preferences is defined such that reward outcomes are strongly preferred (green circles): odds e:1 compared to outcomes labeled “none” (gray crosses), and punishments are extremely nonpreferred (red): odds e:1 compared to outcomes labeled “none.” Bottom-left panel: The matrix for the likelihood mapping reflects two assumptions about the agent's beliefs given each particular context (which could be trained through prior trials). First, the location-reward mappings always have some minimal amount of uncertainty (.02 probability). Second, the cue is a completely reliable context indicator. Top-right panel: The matrix for the state transitions reflects the fact that changing location is either very easy (100% efficacious) or impossible when stuck in one of the one-way arms. Bottom-right panel: The vector for the policies reflects possible combinations of actions over the two time steps and the associated baseline prior over policies, which starts at an initial, uniformly distributed level of evidence for each policy. This can be seen as reflecting an initial period of free exploration of the maze structure (here the value of 2.3 regulates the impact of subsequently observed policies, where the value for each policy increments by 1 each time it is subsequently chosen).

Although this generative model is relatively simple, it has most of the ingredients needed to illustrate fairly sophisticated behavior. Because actions can lead to epistemic or informative outcomes, which change beliefs, it naturally accommodates situations or paradigms that involve both exploration and exploitation under uncertainty. Our primary focus here is on the expected precision term and its updates (i.e., AC), which we have already described.
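To make the assumptions summarized in Figure 5 concrete, the following minimal sketch encodes them in plain Python. It is an illustration under stated assumptions rather than the implementation used for the simulations: the state and outcome labels, the function names, the choice of four policies, and the normalization of policy counts into a baseline prior are all hypothetical.

```python
# Illustrative sketch only: labels, function names, the number of policies,
# and the normalization of policy counts are assumptions.

CONTEXTS = ("reward_left", "reward_right")
P_UNEXPECTED = 0.02  # minimal uncertainty in the location-reward mapping

# Uniform prior over the initial context (middle-left panel of Figure 5).
D_CONTEXT = {c: 1.0 / len(CONTEXTS) for c in CONTEXTS}


def outcome_likelihood(location, context):
    """P(outcome | location, context) over 'none', 'reward', and 'shock'."""
    if location in ("center", "cue"):
        return {"none": 1.0, "reward": 0.0, "shock": 0.0}
    rewarded_arm = "left" if context == "reward_left" else "right"
    p_reward = 1.0 - P_UNEXPECTED if location == rewarded_arm else P_UNEXPECTED
    return {"none": 0.0, "reward": p_reward, "shock": 1.0 - p_reward}


def next_location(location, action):
    """Moves always succeed, except that the two arms are absorbing."""
    if location in ("left", "right"):
        return location
    return action  # each action is named after its target location


def baseline_policy_prior(counts):
    """Normalize policy counts (assumed Dirichlet-style) into a prior."""
    total = sum(counts)
    return [c / total for c in counts]


# Every policy starts with the same count (2.3); choosing a policy adds 1.
counts = [2.3, 2.3, 2.3, 2.3]  # four policies, purely for illustration
counts[0] += 1.0
prior = baseline_policy_prior(counts)
```

With these illustrative numbers, the chosen policy ends up with a somewhat larger baseline prior than the others, while the large initial count keeps a single choice from dominating.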

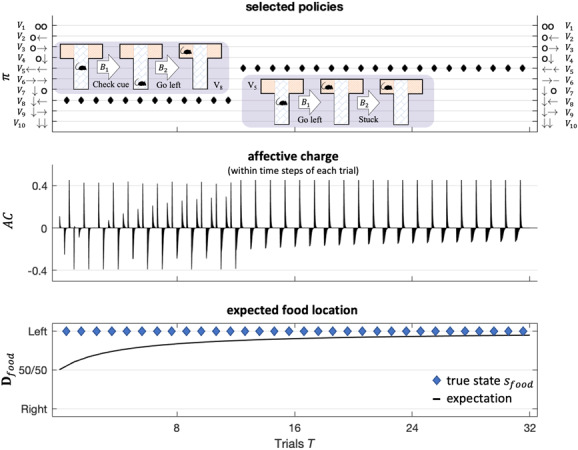

Figure 6 illustrates typical behavior under this particular generative model. These results were modeled after Friston, FitzGerald et al. (2017) and show a characteristic transition from exploratory behavior to exploitative behavior as the rat becomes more confident about the context in which she is operating—here, learning that the reward is always on the left. This increase in confidence is mediated by changes in prior beliefs about the context state (the location of the reward) that are accumulated by repeated exposure to the paradigm over 32 trials (this accumulation is here modeled using a Dirichlet parameterization of posterior beliefs about initial states). These changes mean that the rat becomes increasingly confident about what she will do, with concomitant increases or updates to the expected precision term. These increases are reflected by fluctuations in affective charge (middle panel). We will use this kind of paradigm later to see what happens when the reward contingencies reverse.
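The accumulation of beliefs about the initial state can be illustrated with a few lines of Python. This is a minimal sketch, assuming an initial Dirichlet concentration of 1 per context (a value not given in the text) and one count added per observed trial; the function name is hypothetical.

```python
def dirichlet_expectation(counts):
    """Expected probabilities under a Dirichlet with the given counts."""
    total = sum(counts)
    return [c / total for c in counts]


# Counts for (reward on left, reward on right); the initial value of 1.0
# per context is an assumption made for illustration.
counts = [1.0, 1.0]
p_left_history = []
for trial in range(32):
    counts[0] += 1.0  # the reward is found on the left on every trial
    p_left_history.append(dirichlet_expectation(counts)[0])
```

Under these assumptions the expected probability of "reward on the left" rises monotonically toward 1 over the 32 trials, which is the kind of growing prior confidence that eventually makes checking the cue unnecessary.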

Figure 6:

Simulated responses over 32 trials with food located on the left side of the T-maze. This figure reports the behavioral and (simulated) affective charge responses during successive trials. The top panel shows, for each trial, the selected policy (in image format) over the policies considered (arrows indicate moving to each respective arm; circles indicate staying in, or returning to, the center position). The policy selected in the first 12 trials corresponds to an exploratory policy, which involves examining the cue in the lower arm and then going to the left or right arm to secure the reward (i.e., depending on the cue, which here always indicates that reward is on the left). After the agent becomes sufficiently confident that the context does not change (after trial 12), she indulges in pragmatic behavior, moving immediately to the reward without checking the cue. The middle panel shows the associated fluctuations in affective charge. The bottom panel shows the accumulated posterior beliefs about the initial state.

3.2 . Affective Valence as an Estimate of Model Fitness in Deep Temporal Models

Within various modeling paradigms, a few researchers have recognized and aimed to formalize the relation between subjective fitness and valence. For example, Phaf and Rotteveel (2012) used a connectionist approach to argue that valence corresponds broadly to match-mismatch processes in neural networks, thus monitoring the fit between a neural architecture and its input. As another example, Joffily and Coricelli (2013) proposed an interpretation of emotional valence in terms of rates of change in variational free energy. However, this proposal did not include a formal connection to action.

The notion of affective charge that we describe might be seen as building on such previous work by linking changes in free energy (and the corresponding match-mismatch between a model and sensory input) to an explicit model of action selection. In this case, an agent can gauge subjective fitness by evaluating its phenotype-congruent action model against perceptual evidence deduced from actual outcomes. Such a comparison, and a metric for its computation, is exactly what is provided by affective charge, which specifies changes in the expected precision of (i.e., confidence in) one's action model (see M in Figure 3). Along these lines, various researchers have developed conceptual models of valence based on the expected precision of beliefs about behavior (Seth & Friston, 2016; Badcock et al., 2017; Clark et al., 2018). Crucially, negatively valenced states lead to behavior suggesting a reduced reliance on prior expectations (Bodenhausen, Sheppard, & Kramer, 1994; Gasper & Clore, 2002), while positively valenced states appear to increase reliance on prior expectations (Bodenhausen, Kramer, & Süsser, 1994; Park & Banaji, 2000). Both findings are consistent with the idea that valence relates to confidence in one's internal model of the world.

One might correspondingly ask whether an agent should rely to a greater or lesser extent on the expected free energy of policies when deciding how to act. In effect, the highest level of the generative model shown in Figure 3 (M, also outlined in Table 4) provides an uninformative prior over expected precision that may or may not be apt in a given world. If the environment is sufficiently predictable to support a highly reliable model of the world, then high confidence should be afforded to expected free energy in forming (posterior) plans. In economic terms, this would correspond to increasing risk sensitivity, where risk-minimizing policies are selected. Conversely, in an unpredictable environment, it may be impossible to predict risk, and expected precision should, a priori, be attenuated, thereby paying more attention to sensory evidence.
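The role of expected precision in weighting expected free energy can be sketched as follows. The sketch assumes the softmax form commonly used in active inference, in which prior beliefs about policies are proportional to exp(ln E − γG), with E the baseline prior over policies, G the expected free energy of each policy, and γ the expected precision. The symbols, the function names, and the numerical values are assumptions for illustration; the expression used in the simulations may contain further terms.

```python
import math


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def policy_prior(expected_free_energy, gamma, baseline=None):
    """Prior over policies, assumed proportional to exp(ln E - gamma * G)."""
    if baseline is None:
        baseline = [1.0] * len(expected_free_energy)  # flat baseline prior
    return softmax([math.log(e) - gamma * g
                    for e, g in zip(baseline, expected_free_energy)])


G = [1.0, 2.0, 3.0]                   # hypothetical expected free energies
cautious = policy_prior(G, gamma=0.5)  # low precision: flatter beliefs
confident = policy_prior(G, gamma=2.0)  # high precision: sharper beliefs
habitual = policy_prior(G, gamma=0.0)   # zero precision: baseline prior only
```

With higher γ, probability mass concentrates on the policy with the lowest expected free energy; as γ approaches zero, policy beliefs revert to the baseline prior.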

This suggests that in a capricious environment, behavior would benefit from prior beliefs about expected precision that reflect the prevailing environmental volatility—in other words, beliefs that reflect how well a model of that environment can account for patterns in its own action-dependent observations. In what follows, we equip the generative model with an additional (hierarchically and temporally deeper) level of state representation that allows an agent to represent and accumulate evidence for such beliefs, and we show how this leads naturally to a computational account of valence from first principles.

Deep temporal models of this kind (with two levels of state representation) have been used in previous research on active inference (Friston, Rosch, Parr, Price, & Bowman, 2017). In these models, posterior state representations at the lower level are treated as observations at the higher level. State representations at the higher level in turn provide prior expectations over subsequent states at the lower level (see section 3.3). This means that higher-level state representations evolve more slowly, as they must accumulate evidence from sequences of state inferences at the lower level. Previous research has shown, for example, how this type of deep hierarchical structure can allow an agent to hold information in working memory (Parr & Friston, 2017) and to infer the meaning of sentences based on recognizing a sequence of words (Friston, Rosch et al., 2017).

Here we extend this previous work by allowing an agent to infer higher-level states not just from lower-level states, but also from changes in lower-level expected precision (AC). This entails a novel form of parametric depth, in which higher-level states are now informed by lower-level model parameter estimates. As we will show, this then allows for explicit higher-level state representations of valence (i.e., more slowly evolving estimates of model fitness), based on the integration of patterns in affective charge over time. In anthropomorphic terms, the agent is now equipped to explicitly represent whether her model is doing “good” or “bad” at a timescale that spans many decisions and observed outcomes. Hence, something with properties similar to those of valence (i.e., with intrinsically good/bad qualities) emerges naturally out of a deep temporal model that tracks its own success to inform future action. Note that “good” and “bad” are inherently domain-general here, and yet, as we will now show, they can provide empirical priors on specific courses of action.

3.3 . Affective Inference

This letter characterizes the valence component of affective processing with respect to inference about domain-general valence states, that is, those inferred from patterns in expected precision updates over time. In particular, we focus on how valence emerges from an internal monitoring of subjective fitness by an agent. To do so, we specify how affective states participate in the generative model and what kind of outcomes they generate. Since deep models involve the use of empirical priors (from higher levels of state representation) to predict representations at subordinate levels (Friston, Parr, & Zeidman, 2018), we can apply such top-down predictions to supply an empirical prior for expected precision (γ). Formally, we associate alternative discrete outcomes from a higher-level model with different values of the rate parameter (β) for the gamma prior on expected precision.

Note that we are not associating the affective charge term with emotional valence directly. The affective charge term tracks fluctuations in subjective fitness. To model emotional valence, we introduce a new layer of state inference that takes fluctuations in expected precision (i.e., AC-driven updates) over a slower timescale as evidence favoring one valence state versus another.

By implementing this hierarchical step in an MDP scheme, we effectively formulate affective inference as a parametrically deep form of active inference. Parametric depth means that higher-order affective processes generate priors that parameterize lower-order (context-specific) inferences, which in turn provide evidence for those higher-order affective states.

3.3.1 . Simulating the Affective Ups and Downs of a Synthetic Rat

As a concrete example, we implement a minimal model of valence in which a synthetic rat infers whether her own affective state is positive or negative within the T-maze paradigm. Our hierarchical model of the T-maze task comprises a lower-level MDP for context-specific active inference (M in Figure 3) and a higher-level MDP for affective inference (see Figure 7). Note, however, that this is simply an example; the lower-level model in principle could generalize to any other type of task that is relevant to the agent in question. The hidden states at the higher level provide empirical priors over any variable at the lower level that does not change over the timescale associated with that level. These variables include the initial state, priors over expected precision, fixed priors over policies, and so on (see the MDP model descriptions in section 3.1). Here, we consider higher-level priors on the initial state and the rate parameter of the priors over expected precision. By construction, state transitions at the higher (affective) level are over trials, endowing the model with a deep temporal structure. This enables it to keep track of slow changes over multiple trials, such as the location of the reward. In other words, belief updating at the second level from trial to trial enables the agent to accumulate evidence and remember contingencies that are conserved over trials.

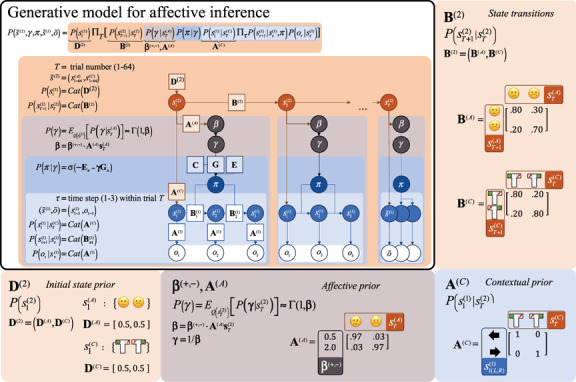

Figure 7:

A generative model for affective inference in terms of its key equations and probabilistic graphical model (top left panel) and the associated matrices, again reflecting a number of relatively minimal assumptions about the agent's beliefs concerning the experimental setup (each of these parameters could itself be optimized by fitting to empirical data). Bottom left: Prior expectations for initial states at the second level are distributed uniformly. Bottom middle: The likelihood matrix reflects some degree of uncertainty in the affective predictions (.03), which, when multiplied by the rate parameter, sets the lower-level prior on expected precision, allowing it to vary between 0.5 and 2.0. Bottom right: The matrix for the likelihood mapping from context states to the lower level reflects that the agent is always certain which context she observed after each trial is over. Top right: The matrix for the state transitions at the second level reflects two assumptions for cross-trial changes: (1) Both affective and contextual states vary strongly but have some stability across trials (.2–.3 probability of changing) and (2) the agent has a positivity bias in the sense that she is more likely to switch from a negative to a positive state than vice versa (.3 versus .2 probability). The lower-level model is the same as in Figure 5.

In our example, we use two distinct sets of hidden states (i.e., hidden state factors) at the second level, each with two states. The first state factor corresponds to the location of the reward (food on the left or right), and the second state factor corresponds to valence (positive or negative). We will refer to these as Contexts and Affective states, respectively. This means the rat can contextualize her behavior in terms of a prior over second-level states and their state transitions from trial to trial, in terms of both where she believes the reward is most likely to be (Context) and how confident she should be in her action model (Valence).

In short, our synthetic subject was armed with high-level beliefs about context and affective states that fluctuate slowly over trials. In what follows, we consider the belief updating in terms of messages that descend from the affective level to the lower level and ascend from the lower level to the affective level. Descending messages provide empirical priors that optimize policy selection. This optimization can be regarded as a form of covert action or attention that allows the impact of one's generative model on action selection to vary in a state-dependent manner. Ascending messages can be interpreted as mediating belief updates about the current context and affective state: affective inference reflecting belief updates about model fitness.

3.3.2 . Descending Messages: Contextual and Affective Priors

On each trial, discrete prior beliefs about the reward being on the left are encoded in empirical priors or posterior beliefs at the second level, which inherit from the previous posterior and enable belief updating from trial to trial. Similarly, beliefs over discrete valence states are equipped with an initial prior at the affective level and are updated from trial to trial based on a second-level probability transition matrix. From the perspective of the generative model, the initial context states at the lower level are conditioned on the context states at the higher level, while the rate parameter β (which constitutes prior beliefs about expected precision) is conditioned on affective states.

Because affective states are discrete and the rate parameter is continuous, message passing between these random variables calls for the mixed or hybrid scheme (described in Friston, Parr, & de Vries, 2018). In these simulations, the affective states (i.e., valence) were associated with two values of the rate parameter β, where the corresponding precisions provide evidence for positive valence and negative valence, respectively. Effectively, these two values set upper and lower bounds on the expected precision under the two levels of the affective state. The descending messages correspond to Bayesian model averages, a mixture of the priors under each level of the context and affective states.

In short, the empirical priors over the initial state at the lower level (and expected precision) now depend on hidden (valence) states at the second level.
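As a minimal sketch of such a Bayesian model average, the following Python snippet mixes two candidate values of the rate parameter according to current beliefs about the affective state. The specific values (0.5 and 2.0) are borrowed from the range quoted in the Figure 7 caption, and their assignment to positive and negative valence, like the function names, is an assumption made for illustration: here a lower rate is taken to mean higher expected precision and hence positive valence.

```python
# Assumed rate parameters: a lower rate implies higher expected precision.
BETA_POSITIVE = 0.5
BETA_NEGATIVE = 2.0


def bayesian_model_average(beliefs, values):
    """Mixture of candidate values, weighted by beliefs over discrete states."""
    return sum(p * v for p, v in zip(beliefs, values))


def descending_rate(p_positive):
    """Empirical prior on the rate parameter given P(positive valence)."""
    beliefs = [p_positive, 1.0 - p_positive]
    return bayesian_model_average(beliefs, [BETA_POSITIVE, BETA_NEGATIVE])


undecided = descending_rate(0.5)   # halfway between the two candidate rates
optimistic = descending_rate(0.9)  # closer to the positive-valence rate
```

The same weighting applies to the initial-state prior: the lower-level prior over contexts is a mixture of the priors prescribed by each higher-level context state, weighted by how probable each of those states is believed to be.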

3.3.3 . Ascending Messages: Contextual and Affective Evidence

During each trial, exogenous (reward location) and endogenous (affective charge) signals induce belief updating at the second level of hidden states. They do so in such a way that fluctuations in context and affective beliefs (across trials) are slower than fluctuations in lower-level beliefs concerning states, policies, and expected precision. These belief updates following each trial are mediated by ascending messages that are gathered from posterior beliefs about the initial food location at the end of each trial, which serve as Bayesian model evidence for the appropriate context state.

As with inference at the first level, the resulting second-level expectation comprises empirical priors from the previous trial and evidence based on the posterior expectation of the initial (context) state at the lower level.
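The structure of this update (an empirical prior propagated from the previous trial, combined with lower-level evidence) can be sketched in a few lines of Python. This is an illustration only: the switching probability of 0.2 is taken from the range quoted in the Figure 7 caption, while the evidence vector and the function name are hypothetical.

```python
def update_context(previous, evidence, p_switch=0.2):
    """One cross-trial update of beliefs over (reward left, reward right).

    previous: posterior over contexts after the preceding trial
    evidence: lower-level posterior over the initial state on this trial
    p_switch: assumed probability that the context changes between trials
    """
    stay = 1.0 - p_switch
    # Empirical prior: propagate the previous posterior through the transitions.
    prior = [stay * previous[0] + p_switch * previous[1],
             p_switch * previous[0] + stay * previous[1]]
    # Combine prior and evidence, then normalize.
    weights = [p * e for p, e in zip(prior, evidence)]
    total = sum(weights)
    return [w / total for w in weights]


first = update_context([0.5, 0.5], evidence=[0.9, 0.1])
second = update_context(first, evidence=[0.9, 0.1])
```

Because the transition step always leaks some probability to the other context, old evidence is gradually discounted, which is what allows beliefs to shift when the context reverses.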

For the ascending messages from the (continuous) expected precision to the (discrete) affective states, we use Bayesian model reduction (for the derivation, see Friston, Parr, and Zeidman, 2018) to evaluate the marginal likelihood under the priors associated with each affective state.

Again, the resulting expectation contains empirical priors based on previous affective expectations and evidence for changes in affective state based on affective charge, evaluated at the end of each trial time step. Notice that when the affective charge is zero, the affective expectations on the current trial are determined completely by the expectations at the previous trial (as the logarithm of one is zero). See Figure 7 for a graphical description of this deep generative model.
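One way to see how affective charge can serve as evidence for a valence state is the following sketch of Bayesian model reduction for a single rate parameter. It rests on assumptions that are stated here rather than taken from the text: the prior on expected precision is a gamma density with shape 1 and rate β, the posterior has rate β − AC (so that positive AC raises expected precision), and each affective state i prescribes a reduced prior with rate β_i. Under those assumptions the evidence ratio is (β_i / β) · (β − AC) / (β_i − AC), which equals one when AC is zero. The candidate rates of 0.5 and 2.0 and the function names are likewise illustrative.

```python
import math

BETAS = (0.5, 2.0)  # assumed rates for (positive, negative) valence
# TRANSITION[i][j] = P(state i on this trial | state j on the previous trial);
# the .3 versus .2 switching probabilities follow the Figure 7 caption.
TRANSITION = ((0.8, 0.3),
              (0.2, 0.7))


def log_evidence(beta_i, beta, ac):
    """Log evidence ratio for a reduced prior with rate beta_i.

    Assumes a gamma (shape 1) prior with rate beta and a posterior with
    rate beta - ac, so that positive ac corresponds to higher precision.
    """
    if beta - ac <= 0 or beta_i - ac <= 0:
        raise ValueError("rates must stay positive")
    return math.log(beta_i * (beta - ac)) - math.log(beta * (beta_i - ac))


def update_affect(previous, beta, ac):
    """Posterior over (positive, negative) valence after one trial."""
    prior = [sum(TRANSITION[i][j] * previous[j] for j in range(2))
             for i in range(2)]
    weights = [prior[i] * math.exp(log_evidence(BETAS[i], beta, ac))
               for i in range(2)]
    total = sum(weights)
    return [w / total for w in weights]


neutral = update_affect([0.5, 0.5], beta=1.25, ac=0.0)
uplifted = update_affect([0.5, 0.5], beta=1.25, ac=0.2)
deflated = update_affect([0.5, 0.5], beta=1.25, ac=-0.2)
```

In this sketch, zero affective charge leaves the propagated prior untouched, positive charge shifts belief toward the positive state, and negative charge shifts it toward the negative state.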

We used this generative model to simulate affective inference of a synthetic rat that experiences 64 T-maze trials, in which the food location switches after 32 trials from the left arm to the right arm. When our synthetic subject becomes more confident that her actions will realize preferred outcomes, increased (subpersonal) confidence in her action model should provide evidence for a positively valenced state (through AC). Conversely, when she is less confident about whether her actions will realize preferred outcomes, there will be evidence for a negatively valenced state. In that case, our affective agent will fall back on her baseline prior over policies, a quick and dirty heuristic that tends to be useful in situations that require urgent action to survive (i.e., in the absence of opportunity to resolve uncertainty via epistemic foraging).

In this setting, our synthetic subject can receive either a tasty reward or a painful shock, based on whether she chooses left or right. Of course, she has a high degree of control over the outcome, provided she forages for context information and then chooses left or right, accordingly. However, her generative model includes a small amount of uncertainty about these divergent outcomes, which corresponds to a negatively valenced (anxious) affective state at the initial time point. Starting from that negative state, we expected that our synthetic rat would become more confident over time, as she grew to rely increasingly on her context beliefs about the reward location. We hoped to show that at some point, our rat would infer a state of positive valence and be sufficiently confident to take her reward directly. Skipping the information-foraging step would allow her to enjoy more of the reward before the end of each trial (comprising two moves). The second set of 32 trials involved a somewhat cruel twist (introduced by Friston, FitzGerald et al., 2016): we reversed the context by placing the reward on the opposite (right) arm. This type of context reversal betrays our agent's newly found confidence that T-mazes contain their prize on the left. Given enough trials with a consistent reward location, our synthetic rat should ultimately be able to regain her confidence.

4 . Results

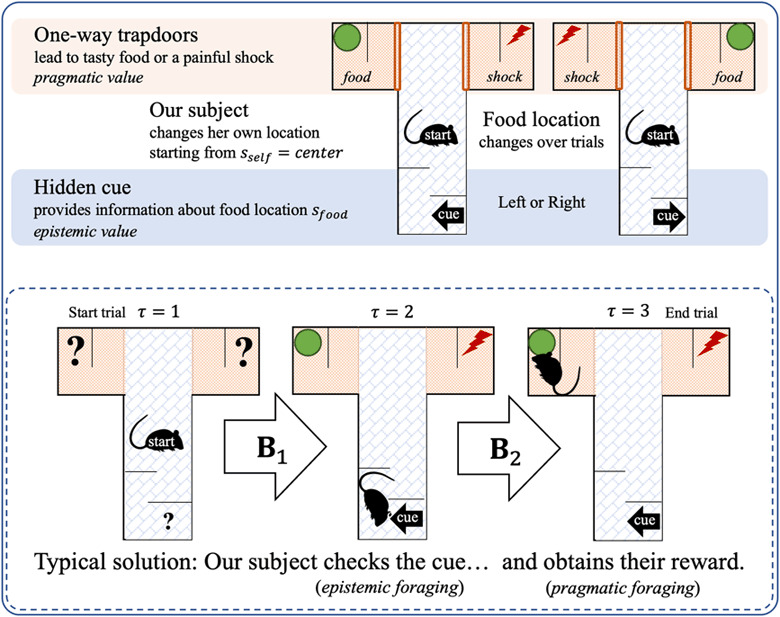

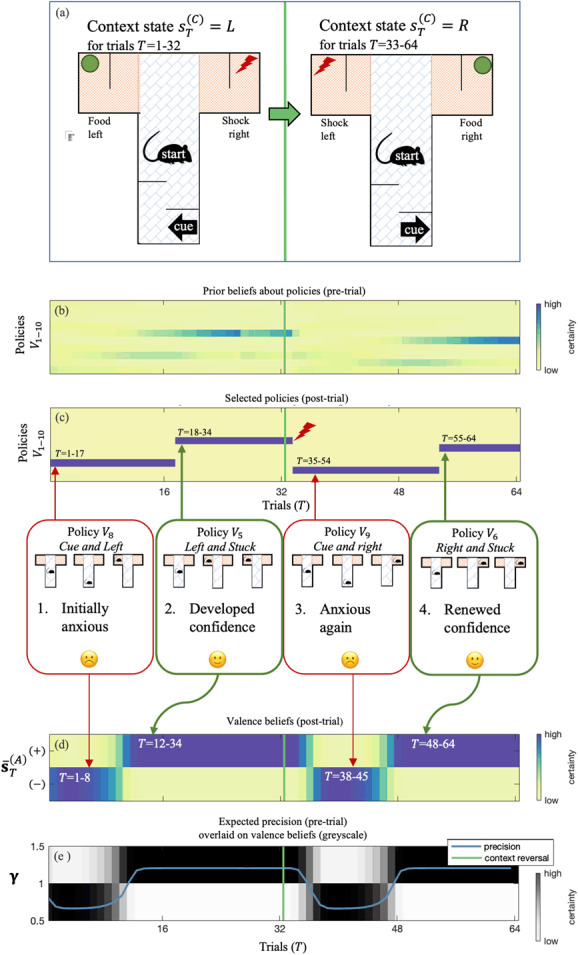

Figure 8 shows the simulation outcomes for the setup we have described. The dynamics of this simulation can be roughly divided into four quarters: two periods within each block of 32 trials, before and after the context reversal. These periods show an initial phase of negative valence (quarters 1 and 3), followed by a phase of purposeful confidence (positive valence; quarters 2 and 4). As stipulated in terms of priors, our subject started in a negative (anxious) state. Because it takes time to accumulate evidence, her affective beliefs lagged somewhat behind the affective evidence at hand (patterns in affective charge). As our rat kept finding food on the left, her expected precision increased until she entered a robustly positive state around trial 12. Later, around trial 16, she became sufficiently confident to take the shortcut to the food without checking the informative cue. After we reversed the context at trial 33, our rat realized that her approach had ceased to bear fruit. Unsure of what to do, she lapsed into an affective state of negative valence and returned to her information-foraging strategy. More slowly than before (about 15 trials after the context reversal, as opposed to 12 trials after the first trial), our subject returned to her positive feeling state as she figured out the new contingency: food is now always on the right. It took her about 22 trials following context reversal to gather enough courage (i.e., confidence) to take the shortcut to the food source on the right. The fact that it took more trials (22 instead of 16) before taking the shortcut suggests that she had become more skeptical about consistent contingencies in her environment (and rightly so).

Figure 8:

A summary of belief updating and behavior of our simulated affective agent over 64 trials. Probabilistic beliefs are plotted using a blue-yellow gradient (corresponding with high-low certainty). As shown in the graphic that connects panels c and d, the dynamics of this simulation can be divided into four quarters: two periods within each block of 32 trials, before and after the context reversal, each comprising an initial phase of negative valence (anxiety; quarters 1 and 3), followed by a phase of positive valence (confidence; quarters 2 and 4). (a) The context changed midway through the experiment (indicated in all panels with a vertical green line): food was on the left for the first 32 trials (L) and on the right for the subsequent 32 trials (R). (b, c) These density plots show the subject's beliefs about the best course of action, both before (panel b) and after the trial (panel c). Prior beliefs were based purely on baseline priors and her action model, which entailed high ambiguity (yellow) during quarters 1 and 3 of the trial series (corresponding with cue-checking policies) and high certainty (blue) during quarters 2 and 4 (corresponding to shortcut policies). After perceptual evidence was accumulated (after the trial), posterior beliefs about policies always converged to the best policy, except in the first trial after context reversal (trial 33, when the rat receives a highly unexpected shock), which explains her initial confusion. Whenever prior certainty about policies was high, expectations agreed with posterior beliefs about policies (again, except for trial 33). (d) This density plot illustrates affective inference in terms of beliefs about her valence state (confident positive or anxious negative states). Roughly speaking, our rat experienced a negatively valenced state during quarters 1 and 3 and a positively valenced state during quarters 2 and 4. (e) We plot lower-level expected precision (γ), overlaid on a density plot of valence beliefs (grayscale version of panel d).

Roughly speaking, our agent experienced (i.e., inferred) a negatively valenced state during quarters 1 and 3 and a positively valenced state during quarters 2 and 4 of the 64 trials. A closer look at these temporal dynamics reveals a dissociation between positive valence and confident risky behaviors: a robust positive state (Figure 8d) preceded the agent's pragmatic choice of taking the shortcut to the food (Figure 8b).

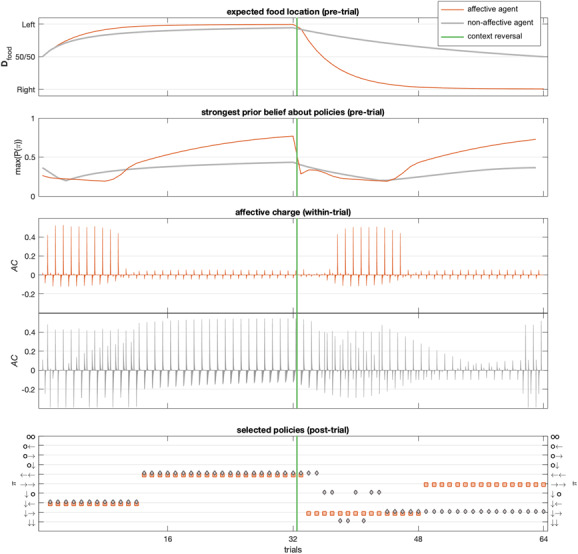

To illustrate the importance of higher-level beliefs in this kind of setting, we repeated the simulations in the absence of higher-level contextual and affective states. After removing the higher level, the resulting (less sophisticated) agent, which could be thought of as an agent with a “lesion” to higher levels of neural processing, updated expectations about food location by simply accumulating evidence in terms of the number of times a particular outcome was encountered. Figure 9 provides a summary of differences in belief updating and behavior between this simpler model and an affective inference model. In the top panel of Figure 9, we see that higher-level context states can quickly adjust lower-level expectations based on recent observations (recency effects), while the less sophisticated rat is unable to forget about past observations (after observing the food 32 times on the left and 32 times on the right, its expected food location is again 50/50). The effect of removing affective states is subtler. This effect becomes apparent when we inspect the difference between the strongest prior beliefs about policies with and without affective states in play (second panel). As expected, we see that affective states and associated fluctuations in expected precision (as in Figures 8d and 8e) are associated with much larger variation in the strength of prior beliefs about policies at the start of the trial (when our rat is still in the center of the maze). Furthermore, a comparison in terms of the AC elicited within trials (third versus fourth panel of Figure 9) demonstrates how higher-level modulation of expected precision tends to attenuate the generation of AC within trials. Conversely, the simpler agent cannot habituate to its own successes and failures: after every trial, expected precision is reset and AC is elicited again and again. Finally, the combined effects of lesioning the higher level neatly explain the observed behavioral outcomes (bottom panel of Figure 9). Before context reversal, both agents end up selecting the same policies. The absence of the higher-level affective state beliefs particularly disrupts the capacity to deal with the change in context. First, the less sophisticated rat persisted in pragmatic foraging for three trials despite receiving several painful shocks, as opposed to the affective inference rat, which switched after a single unexpected observation. Second, the affective inference rat switched back to her default strategy right away (checking the cue, then getting the food), but the less sophisticated rat (with a “lesion” to the higher-level model) started avoiding both left and right arms altogether. For eight consecutive trials, she checked the informative cue but either stayed with the cue or returned to the center. Only after she had gathered enough evidence about the reliability of the new food location did she dare to move to the right arm (reminiscent of drift diffusion models of decision making). She kept using that strategy until the end of the experiment, while our affective inference rat moved directly to the right arm for the last quarter of the series of trials.
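The contrast between pure evidence counting and belief updating with cross-trial transitions can be reproduced qualitatively with a short sketch. It is not the simulation code: the initial count of 1 per location, the switching probability of 0.2, the assumption that the context is known with certainty once a trial is over, and the function names are all choices made for illustration.

```python
def counting_belief(observations, initial_count=1.0):
    """P(food left) for an agent that only counts past food locations."""
    counts = {"left": initial_count, "right": initial_count}
    for obs in observations:
        counts[obs] += 1.0
    return counts["left"] / (counts["left"] + counts["right"])


def recency_belief(observations, p_switch=0.2):
    """Pretrial P(food left) when beliefs pass through a transition model.

    After each trial the context is assumed to be known with certainty;
    before the next trial it may have switched with probability p_switch.
    """
    p_left = 0.5
    for obs in observations:
        was_left = 1.0 if obs == "left" else 0.0
        p_left = was_left * (1.0 - p_switch) + (1.0 - was_left) * p_switch
    return p_left


before = ["left"] * 32
after_one = before + ["right"]
after_all = before + ["right"] * 32
```

After a single reversed trial, the counting agent still expects food on the left with high probability, whereas the agent with cross-trial transitions already favors the right; the counting agent returns to even odds only once 32 trials on the right have balanced the 32 on the left.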

Figure 9:

A comparison of belief updating (four top rows) and behavior (bottom row) over 64 trials in our affective agent (plotted in orange) and an agent without higher-level contextual and affective states (plotted in gray). Context was changed midway through (vertical green line): food was on the left for the first 32 trials and on the right for the subsequent 32 trials. (First panel) The top panel shows differences in temporal dynamics of food location expectations. Thanks to her higher-level context states (which decayed over time due to uncertainty about cross-trial state transitions as defined in Figure 7), our affective agent (orange) weighed recent evidence more heavily, allowing her to shift context beliefs. In contrast, the agent without the higher affective level (gray) only counted events over time. While her expectations developed similarly to the affective agent for the first 32 trials, she was much slower in adjusting to the change in context (her beliefs return to 50/50 only after observing 32 trials for both left and right). (Second panel) This panel displays the strongest prior belief about policies for each agent (pretrial), tracking the product of the expected precision and the maximum of model evidence (negative free energy). The affective agent varied (pretrial) her expected precision dynamically with context reliability. The nonaffective agent instead obtained (initial) certainty about the best course of action much more slowly, only as a function of her action model (as initial expected precision was constant). (Third and fourth panels) A comparison of within-trial AC responses (fluctuations in expected precision) between the affective agent (third panel, orange) and the nonaffective agent (fourth panel, gray). Our affective agent exhibited large fluctuations in expected precision within trials only when she was switching between affective states: she attenuated AC responses by integrating them across trials, adjusting expected precision preemptively. In contrast, the nonaffective agent exhibited large fluctuations throughout the series of trials, being surprised repeatedly because she was unable to integrate affective charge. (Fifth panel) The bottom panel shows the behavioral outcomes for both agents. Before context reversal, their behaviors were indistinguishable. After context reversal, the nonaffective agent only foraged for information and exhibited avoidance behaviors, either staying down (policy 10) or moving back to the center (policy 7).

Clearly, one can imagine many other variants of the generative model we used to illustrate affective inference; we will explore these in future work. For example, it is not necessary to have separate contextual and affective states on the higher level. One set of higher-level states could stand in for both, providing empirical priors for beliefs about contingencies between particular contexts and valence states. Nevertheless, our simulations provide a sufficient vehicle to discuss a number of key insights offered by affective inference.

5 . Discussion

In this letter, we have constructed and simulated a formal model of emotional valence using deep active inference. We provided a computational proof of principle of affective inference in which a synthetic rat was able to infer not only the states of the world but also her own affective (valence) states. Crucially, her generative model inferred valence based on patterns in the expected precision of her phenotype-congruent action model. To be clear, we do not equate this notion of expected precision (or confidence) with valence directly; rather, we suggest that AC signals (updates in expected precision) are an important source of evidence for valence states. Aside from AC, valence estimates could also be informed by other types of evidence (e.g., exteroceptive affective cues). Our formulation thus provides a way to characterize valenced signals across domains of experience. We showed the face validity of this formulation of a simple form of affect, in that sudden changes in environmental contingencies resulted in negative valence and low confidence in one's action model.

Extending nested active inference models of perception, action, and implicit metacognition (M; see Figure 3), our deep formulation of affective inference can be seen as a logical next step. It required us to specify mutual (i.e., top-down and bottom-up) constraints between higher-level contextual and affective inferences (across contexts) and lower-level inferences (within contexts) about states, policies, and expected precision. In Figure 10, we emphasize the inherent hierarchical and nested structure of the computational architecture of our affective agent. It evinces a metacognitive (i.e., implicitly self-reflective) capacity, where creatures hold alternative hypotheses about their own affective state, reflecting internal estimates of model fitness. This affords a type of mental action (Limanowski & Friston, 2018; Metzinger, 2017) in the sense that the precision ascribed to low-level policies is influenced by higher levels in the hierarchy. Concurrently, at each level (top-down constrained), prior beliefs follow a gradient ascent on a lower bound on model evidence (i.e., negative free energy), thus providing mutual constraints between levels in forming posterior beliefs.

Figure 10:

A schematic breakdown of the nested processes of Bayesian inference in terms of the affective agent presented in this letter. At each level, top-down prior beliefs change along a gradient ascent on bottom-up model evidence (negative free energy), moving the entire hierarchy toward mutually constrained posteriors. Perception (light blue; M in Figure 1 and Table 2) provides evidence for beliefs over policies (blue; M in Figure 2 and Table 3) and higher-level contextual states. Action outcomes inform subjective fitness estimates through affective charge (brown; M in Figure 3 and Table 4), which provides evidence to inform valence beliefs (orange). These nested processes of inference unfold continuously in each individual phenotype throughout development and learning (e.g., neural Darwinism, natural selection; see Campbell, 2016; Constant et al., 2018). In turn, the reproductive success of each phenotype provides model evidence that shapes the evolution of a species.

5.1 . Implicit Metacognition and Affect: “I Think, Therefore I Feel.”

Our affective agent evinces a type of implicit metacognitive capacity that is more sophisticated than that of the generative model presented in our primer on active inference (M in Figures 1–3). Beliefs about her own affective state are informed by signals conveying the phenotype congruence of what she did or is going to do; put another way, they are informed by the degree to which actions did, or are expected to, bring about preferred outcomes. This echoes other work on Bayesian approaches to metacognition (Stephan et al., 2016). The emergence of this metacognitive capacity rests on having a parametrically deep generative model, which can incorporate other types of signals from within and from without. Beyond internal fluctuations in subjective fitness (AC, as in our formulation), affective inference is also plausibly informed by exteroceptive cues as well as interoceptive signals (e.g., heart rate variability; Allen, Levy, Parr, & Friston, 2019; Smith, Thayer, Khalsa, & Lane, 2017). The link to exogenous signals or stimuli is crucial: equipped with affective inference, our affective agent can associate affective states with particular contexts (through and ). Such associations can be used to inform decisions on how to respond in a given context (given a higher-level set of policies ) or how to forage for information within a given niche (via ). If our synthetic subject can forage efficiently for affective information, she will be able to modulate her confidence in a context-sensitive manner, as a form of mental action. Furthermore, levels deeper in the cortical hierarchy (e.g., in prefrontal cortex) might regulate such affective responses by inferring or enacting the policies that would produce observations leading to positive AC. 
Such processes could correspond to several widely studied automatic and voluntary emotion regulation mechanisms (Buhle et al., 2014; Phillips, Ladouceur, & Drevets, 2008; Gyurak, Gross, & Etkin, 2011; Smith, Alkozei, Lane, & Killgore, 2016; Smith, Alkozei, Bao, & Killgore, 2018), as well as capacities for emotional awareness (Smith, Steklis, Steklis, Weihs, & Lane, 2020; Smith, Bajaj et al., 2018; Smith, Weihs, Alkozei, Killgore, & Lane, 2019; Smith, Killgore, & Lane, 2020), each of them central to current evidence-based psychotherapies (Barlow, Allen, & Choate, 2016; Hayes, 2016).

5.2 . Reinforcement Learning and the Bayesian Brain

It is useful to contrast the view of motivated behavior on offer here with existing normative models of behavior and associated neural theories. In studies on reinforcement learning (De Loof et al., 2018; Sutton & Barto, 2018), signed reward prediction error (RPE) has been introduced as a measure of the difference between expected and obtained reward, which is used to update beliefs about the values of actions. Positive versus negative RPEs are often also (at least implicitly) assumed to correspond to unexpected pleasant and unpleasant experiences, respectively. Note, however, that reinforcement learning can occur in the absence of changes in conscious affect, and pleasant or unpleasant experiences need not always be surprising (Smith & Lane, 2016; Smith, Kaszniak et al., 2019; Panksepp et al., 2017; Winkielman, Berridge, & Wilbarger, 2005; Pessiglione et al., 2008; Lane, Weihs, Herring, Hishaw, & Smith, 2015; Lane, Solms, Weihs, Hishaw, & Smith, 2020). The term we have labeled affective charge can similarly attain both positive and negative values that are of affective significance. However, unlike reinforcement learning, our formulation focuses on positively and negatively valenced states and the role of AC in updating beliefs about these affective states (i.e., as opposed to directly mediating reward learning). While similar in spirit to RPE, the concept of AC has a principled definition and a well-defined role in terms of belief updating, and it is consistent with the neuronal process theories that accompany active inference.

Specifically, affective charge scores differences between expected and obtained results as the agent strives to minimize risk and ambiguity (i.e., expected free energy; see Table 3). In cases where expected ambiguity is negligible, AC becomes equivalent to RPE, as both score differences in utility between expected and obtained outcomes (see Rao, 2010; Colombo, 2014; FitzGerald, Dolan, & Friston, 2015). However, expected ambiguity becomes important when one's generative model entails uncertainty (e.g., driving exploratory behaviors such as those typical of young children). This component of affective inference allows us to link valenced states to ambiguity reduction, while also accounting for the delicate balance between exploitation and exploration.
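To make the risk-plus-ambiguity decomposition concrete, the following is a minimal sketch in Python, not the simulation code used in this letter. It assumes the standard active inference decomposition of expected free energy into risk (the divergence between predicted and preferred outcomes) and ambiguity (the expected entropy of the likelihood mapping). It also assumes a sign convention in which AC is positive when posterior policy beliefs shift toward policies with lower expected free energy. All function names, the toy likelihoods, and the evidence values are hypothetical choices made for illustration.

```python
import math

def softmax(xs):
    """Normalized exponential, shifted by the maximum for numerical stability."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    z = sum(exps)
    return [e / z for e in exps]

def kl(q, p):
    """KL divergence D[q || p] between two discrete distributions."""
    return sum(qi * math.log(qi / pi) for qi, pi in zip(q, p) if qi > 0)

def entropy(p):
    """Shannon entropy of a discrete distribution (in nats)."""
    return -sum(pi * math.log(pi) for pi in p if pi > 0)

def expected_free_energy(state_beliefs, likelihood, preferences):
    """Return (G, risk, ambiguity) for one policy.

    state_beliefs: predicted state distribution under the policy.
    likelihood[s]: outcome distribution P(o | s) for state s.
    preferences:   preferred outcome distribution P(o).
    """
    n_states = len(state_beliefs)
    predicted = [
        sum(state_beliefs[s] * likelihood[s][o] for s in range(n_states))
        for o in range(len(preferences))
    ]
    risk = kl(predicted, preferences)
    ambiguity = sum(qs * entropy(likelihood[s]) for s, qs in enumerate(state_beliefs))
    return risk + ambiguity, risk, ambiguity

def affective_charge(prior_pi, posterior_pi, G):
    """Signed update term: positive when beliefs move toward low-G policies.

    The sign convention is an assumption of this sketch.
    """
    return sum((q - p) * (-g) for p, q, g in zip(prior_pi, posterior_pi, G))

# Toy setting: two outcomes (reward, no reward) and two hidden states.
preferences = [0.9, 0.1]
precise_map = [[1.0, 0.0], [0.0, 1.0]]   # unambiguous likelihood
noisy_map = [[0.7, 0.3], [0.3, 0.7]]     # ambiguous likelihood

G_a, risk_a, ambiguity_a = expected_free_energy([0.8, 0.2], precise_map, preferences)
G_b, risk_b, ambiguity_b = expected_free_energy([0.2, 0.8], precise_map, preferences)
_, _, ambiguity_noisy = expected_free_energy([0.8, 0.2], noisy_map, preferences)

G = [G_a, G_b]
prior_pi = softmax([-g for g in G])

# Hypothetical evidence terms (lower = better supported by observations).
evidence_good = [0.1, 2.0]   # observations favor the low-G policy
evidence_bad = [2.0, 0.1]    # observations favor the high-G policy
posterior_good = softmax([-f - g for f, g in zip(evidence_good, G)])
posterior_bad = softmax([-f - g for f, g in zip(evidence_bad, G)])

ac_good = affective_charge(prior_pi, posterior_good, G)
ac_bad = affective_charge(prior_pi, posterior_bad, G)
```

With the unambiguous likelihood, the ambiguity term vanishes and expected free energy reduces to risk, which is the regime in which AC is said above to behave like an RPE. Under the assumed sign convention, `ac_good` is positive and `ac_bad` is negative, illustrating how AC lends a sign to the divergence between prior and posterior policy beliefs.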

In traditional RL models (as described by Sutton & Barto, 2018), the primary candidates for valence appear to be reward and punishment or approach and avoidance tendencies. In contrast to our model, RL models tend to be task specific and do not traditionally involve any internal representation of valence (e.g., reward is simply defined as an input signal that modifies the probability of future actions). More recent models have suggested that mood reflects the recent history of reward prediction errors, which serves the function of biasing perception of future reward (Eldar et al., 2016; Eldar & Niv, 2015). This contrasts with our approach, which identifies valence with a domain-general signal that emerges naturally within a Bayesian model of decision making. This signal can be used to inform representations of valence that track the success of one's internal model and adaptively modify behavior in a manner that could not be accomplished without hierarchical depth. Presumably this type of explicit valence representation is also a necessary condition for self-reportable experience of valence. The adaptive benefits of this type of representation are illustrated in Figure 9. Only with this higher-order valence representation was the agent able to arbitrate the balance between behavior driven by expected free energy (i.e., explicit goals and beliefs) and behavior driven by a baseline prior over policies (i.e., habits). More generally, the agent endowed with the capacity for affective inference could more flexibly adapt to a changing situation than an agent without the capacity for valence representation, since it was able to evaluate how well it was doing and modulate reliance on its action model accordingly. 
Thus, unlike other modeling approaches, valence is here related to, but distinct from, both reward and punishment and approach and avoidance behavior (i.e., consistent with empirically observed dissociations between self-reported valence and these other constructs; see Smith & Lane, 2016; Panksepp et al., 2017; Winkielman et al., 2005) and serves a unique and adaptive domain-general function.
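The arbitration between goal-directed and habitual behavior described above can be illustrated with a minimal sketch. It assumes the form of the policy prior commonly used in active inference, a softmax of the log baseline prior minus precision-weighted expected free energy. The variable names and numerical values are hypothetical and are not taken from our simulations.

```python
import math

def softmax(xs):
    """Normalized exponential, shifted by the maximum for numerical stability."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    z = sum(exps)
    return [e / z for e in exps]

def policy_prior(habit_prior, expected_free_energy, gamma):
    """Prior over policies: softmax(ln E - gamma * G).

    habit_prior (E):          baseline prior over policies (habits).
    expected_free_energy (G): one value per policy (lower is better).
    gamma:                    expected precision of the action model.
    """
    return softmax([
        math.log(e) - gamma * g
        for e, g in zip(habit_prior, expected_free_energy)
    ])

# Hypothetical example: the habitual favorite (policy 0) has the highest G,
# whereas policy 1 has the lowest G.
E = [0.7, 0.2, 0.1]
G = [3.0, 1.0, 2.0]

confident = policy_prior(E, G, gamma=4.0)   # high confidence in the action model
unsure = policy_prior(E, G, gamma=0.1)      # low confidence in the action model

best_when_confident = confident.index(max(confident))
best_when_unsure = unsure.index(max(unsure))
```

When expected precision is high, the policy with the lowest expected free energy dominates (policy 1 here); when it is low, the baseline prior dominates and the habitual policy (policy 0) is favored. In this sketch, lowering `gamma` plays the role that negative valence plays for the affective agent, reducing reliance on the action model.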

Prior work has suggested that expected precision updates (i.e., AC) may be encoded by phasic dopamine responses (e.g., see Schwartenbeck et al., 2015). If so, our model would suggest a link between dopamine and valence. When considering this biological interpretation, however, it is important to contrast and dissociate AC from a number of related constructs. These include the notion of RPEs discussed above, as well as salience, wanting, pleasure, and motivation, each of which has been related to dopamine in previous literature and appears distinct from AC (Berridge & Robinson, 2016). In reward learning tasks, phasic dopamine responses have been linked to RPEs, which play a central role in learning within several RL algorithms (Sutton & Barto, 2018); however, dopamine activity also increases in response to salient events independent of reward (Berridge & Robinson, 2016). Further, there are contexts in which dopamine appears to motivate energetic approach behaviors aimed at “wanting” something, which can be dissociated from the hedonic pleasure upon receiving it (e.g., amphetamine addicts gaining no pleasure from drug use despite continued drives to use; Berridge & Robinson, 2016). Thus, if AC is linked to valence, it is not obvious a priori that its tentative link to dopamine is consistent with, or can account for, these previous findings.

While these considerations may point to the need for future extensions of our model, many can be partially addressed. First, there are alternative interpretations of the role of dopamine proposed within the active inference field (FitzGerald et al., 2015; Friston et al., 2014): namely, that it encodes expected precision as opposed to RPEs. Mathematically, it can be demonstrated that changes in the expected precision term (gamma) will always look like RPEs in the context of reward tasks (i.e., because reward cues update beliefs about future action and relate closely to changes in expected free energy). However, since salient (but nonrewarding) cues also carry action-relevant information (i.e., they change confidence in policy selection), gamma also changes in response to salient events. Thus, this alternative interpretation can actually account for both salience and RPE aspects of dopaminergic responses. Furthermore, reward learning is not in fact compromised by attenuated dopamine responses, which suggests that dopamine does not play a necessary role in this process (FitzGerald et al., 2015). The active inference interpretation can thus explain dissociations between learning and apparent RPEs.

Arguably, the strongest and most important challenge for claiming a relation between dopamine, AC, and valence arises from previous studies linking dopamine more closely to “wanting” than to pleasure (which is closely related to positive valence; Berridge & Robinson, 2016). On the one hand, some studies have linked dopamine to the magnitude of “liking” in response to reward (Rutledge et al., 2015), and some effective antidepressants are dopaminergic agonists (Pytka et al., 2016); thus, there is evidence supporting an (at least indirect) link to pleasure. On the other hand, pleasure is also associated with other neural signals (e.g., within the opioid system). A limitation of our model is that it does not currently have the resources to account for these other valence-related signals. It is also worth considering that because only one study to date has directly tested and found support for a link between AC and dopamine (Schwartenbeck et al., 2015), future research will be necessary to establish whether AC might better correspond to other nondopaminergic signals. We point out, however, that our model only entails that AC provides one source of evidence for higher-level valence representations and that pleasure is only one source of positive valence. Thus, it does not rule out the additional influence of other signals on valence, which would allow the possibility that AC contributes to, but is also dissociable from, hedonic pleasure (for additional considerations of functional neuroanatomy in relation to affective inference, see appendix A4).

5.3 . Affective Charge Lies in the Mind of the Beholder