Abstract

Background

Technology can benefit older adults in many ways, including by facilitating remote access to services, communication, and socialization for convenience or out of necessity when individuals are homebound. As people, especially older adults, self-quarantined and sheltered in place during the COVID-19 pandemic, the importance of usability-in-place became clear. To understand the remote use of technology in an ecologically valid manner, researchers and others must be able to test usability remotely.

Objective

Our objective was to review practical approaches for and findings about remote usability testing, particularly remote usability testing with older adults.

Methods

We performed a rapid review of the literature and reported on available methods, their advantages and disadvantages, and practical recommendations. This review also reported recommendations for usability testing with older adults from the literature.

Results

Critically, we identified a gap in the literature—a lack of remote usability testing methods, tools, and strategies for older adults, despite this population’s increased remote technology use and needs (eg, due to disability or technology experience). We summarized existing remote usability methods that were found in the literature as well as guidelines that are available for conducting in-person usability testing with older adults.

Conclusions

We call on the human factors research and practice community to address this gap to better support older adults and other homebound or mobility-restricted individuals.

Keywords: mobile usability testing, usability inspection, methods, aging, literature synthesis, usability study, mobile usability, elderly, older adults, remote usability, mobility restriction

Introduction

The Need for Remote Operations

Technology can support access to services, communication, and socialization for older adults and others whose mobility is restricted due to health-related risks such as susceptibility to disease (eg, COVID-19), disability, and a lack of resources (eg, transportation). However, the delivery, support, and evaluation of technologies that are used by homebound or mobility-restricted individuals require remote operations, including remote usability testing. Herein, we review the unique needs and technology opportunities of homebound older adults and the literature on remote usability testing methods. Based on our findings, we identified a gap in guidance for remote usability testing with older adults. Therefore, we call on relevant research and practice communities to address this gap.

Supporting Homebound Individuals With Technology

Over 2 million Americans are homebound due to an array of social, functional, and health-related causes, and this number is projected to grow as the size of the older population increases [1]. Situational factors such as inclement weather and, on a larger scale, pandemics or national disasters can also temporarily render individuals homebound. For example, in March 2020, the US Centers for Disease Control and Prevention [2] warned older adults to remain at home due to the disproportionate COVID-19–related health risks that they face. Prior to the pandemic, an estimated 1 in 4 older US adults were already socially isolated, and this rate has likely increased [3].

People who shelter in place or stay home for other reasons may turn to technology to access remote services, including remote banking, grocery shopping, and medical care services. The prevalence of these physically distant interactions is reportedly on the rise [4], especially for certain services. A prominent example is the increased frequency of patients’ telemedicine visits with health care professionals—a form of telehealth that has been long available but whose usage has increased dramatically in the United States, as the COVID-19 pandemic resulted in changes to federal reimbursement policies in March 2020 [5].

Testing Technology With Homebound Technology Users

When technology users are homebound, researchers and care practitioners who intend to test a technology’s usability in an ecologically valid manner must either travel to the user’s home or conduct remote testing. Travel is not always an option. Safety, health, or personal reasons may prevent researchers from entering a home or community. Travel may be too costly or otherwise impractical, or participants may live in an area that is inaccessible to the project team. During the COVID-19 pandemic, for example, academic and practice-based project teams have anecdotally reported barriers to in-person visits, including the need to distance themselves from infected and at-risk individuals, members of project teams working from home, and the need to reduce travel expenses due to economic pressures. Even when in-person visits are possible, remote testing can be more convenient and cost-efficient for all parties involved.

Methods

We performed a rapid review of studies involving remote usability testing methods for all users and those specifically for older adults and summarized their findings. Rapid reviews are an accepted knowledge synthesis approach that has become popular for understanding the most salient points on emerging or timely topics [6]. Rapid reviews typically do not include an exhaustive set of studies, do not involve formal analyses of study quality, and report findings from prior studies via narrative synthesis [7]. The primary goal of this review was to identify methods for performing remote usability assessments with older adults (if any existed). Secondarily, we wished to summarize the literature on existing remote usability methodologies for any population and existing guidelines on performing in-person usability testing with older adults. Sources for the second goal were largely retrieved while searching for sources to support the primary goal and via a secondary search within Google Scholar.

Our rapid review began with a keyword search on the Google Scholar and Science Direct scholarly databases. This was followed by a supplementary keyword search in top human factors journals and proceedings. Both searches are summarized in Table 1.

Table 1. Keywords that were searched for the rapid review.

| Search type and sources | Keywords |
| --- | --- |
| Primary search | |
| Google Scholar (database) | elderly remote usability, senior remote usability, and older adult remote usability |
| Science Direct (database) | elderly remote usability, senior remote usability, and older adult remote usability |
| Secondary search | |
| Ergonomics | elderly remote usability, senior remote usability, and older adult remote usability |
| Human Factors | elderly remote usability, senior remote usability, and older adult remote usability |
| Applied Ergonomics | elderly remote usability, senior remote usability, and older adult remote usability |
| Human Factors and Ergonomics Society Conference Proceedings | elderly remote usability, senior remote usability, and older adult remote usability |
| International Journal of Human-Computer Interaction | elderly remote usability, senior remote usability, and older adult remote usability |
| International Journal of Human-Computer Studies | elderly remote usability, senior remote usability, and older adult remote usability |
| Gerontechnology | elderly remote usability, senior remote usability, and older adult remote usability |
| Google Scholar (database) | usability older adults, elderly usability, senior usability, and remote usability |

We began with Google Scholar to take advantage of its relevance-based sorting feature and broader inclusion of diverse disciplines, academic and practice-based publications, and grey literature [8]. However, we conducted further searches because of the known limitations of Google Scholar, such as its lack of transparency and lack of specialization [9].

In the interest of establishing a starting point for understanding remote usability testing with older adults, we had broad inclusion criteria and did not restrict studies based on their date of publication or an analysis of their quality or peer-review status. We also defined remote usability broadly as usability assessments of participants (users) who were in separate locations from the researchers or practitioners. Duplicate studies, as well as studies in which usability was assessed by an expert (eg, heuristic analysis of a website) on behalf of older adults instead of through direct participant feedback, were excluded.

Two authors (JRH and JCB) performed the search in Google Scholar, while one author (JCB) performed the search in Science Direct and the human factors sources. Both authors took notes in a shared cloud-based document. We chose a stopping rule based on the assumption that a narrative synthesis of literature is a form of qualitative content analysis [10]. Therefore, we concluded our search when we reached theoretical saturation [11], a stopping rule from qualitative analysis under which searching continues until results begin to repeat and additional searching yields negligible new categories of information.
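The saturation stopping rule can be illustrated with a short sketch. The Python code below is a hypothetical illustration and not the procedure that was actually run for this review: it assumes that each screened source has been hand-coded with a set of category labels and declares saturation once a run of consecutive sources adds no new category. The function name `find_saturation_point`, the `patience` parameter, and the example labels are all assumptions made for illustration.

```python
from typing import Iterable, Optional, Set


def find_saturation_point(
    coded_sources: Iterable[Set[str]],
    patience: int = 3,
) -> Optional[int]:
    """Return the 1-based index of the source at which saturation is declared.

    Saturation is declared once `patience` consecutive sources contribute
    no category that has not already been seen. Returns None if the
    sources run out first.
    """
    seen: Set[str] = set()
    streak = 0  # consecutive sources that added nothing new
    for index, categories in enumerate(coded_sources, start=1):
        new_categories = categories - seen
        seen |= categories
        streak = 0 if new_categories else streak + 1
        if streak >= patience:
            return index
    return None


# Hypothetical category labels assigned while screening sources in order.
example = [
    {"synchronous", "questionnaire"},
    {"diary", "synchronous"},
    {"log analysis"},
    {"questionnaire"},
    {"synchronous", "diary"},
    {"log analysis", "questionnaire"},
    {"interview"},
]
print(find_saturation_point(example, patience=3))  # prints 6
```

In this made-up example, the search would stop at the sixth source even though the seventh would have added a category, which shows that saturation is a heuristic whose strictness depends on the chosen threshold.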

Results and Discussion

Summary of the Search Results

Of all of the sources found, 33 were screened in-depth (18 on remote usability methods and 15 on usability testing with older adults), and 21 were included in this review (16 on various remote usability methods and 5 on usability testing with older adults).

Importantly, sources that provided guidance or information on remote usability testing with older adults (the primary goal of this review) were not found. Therefore, we organized the results according to our secondary goals—summarizing existing methods for remote usability testing and outlining existing guidelines for in-person usability testing with older adults. In this Results and Discussion section, we combined the results with our interpretations and discussion to adhere to conventions for narrative reviews. We also present our overall conclusions in the Conclusions section.

Usability-In-Place: The State of the Practice of Remote Usability Testing

Studies on remote usability testing date back to the 1990s [12,13]. Since then, most traditional in-person usability evaluation methods have been attempted remotely. Remote moderated testing has been supported by advances in internet-based software, such as WebEx and NetMeeting, which permit simultaneous video and audio transmissions, screen sharing, and remote control [14,15]. Studies have also used novel methods, such as using virtual reality to simulate laboratory usability testing environments [16] and remotely capturing eye-tracking data [17]. Technologies for unmoderated testing have also evolved, as described elsewhere [18].

Asynchronous methods have long been used to overcome the barriers of time and space. Such methods include conducting self-administered survey questionnaires, using user diaries and incident reports, and obtaining voluntary feedback [19]. Studies have also used activity logging to passively collect use data for analyzing usability [20].
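As a rough illustration of how passively logged activity could be summarized for usability analysis, the sketch below computes the task duration and counts click and error events for each participant and task. The log format (participant, task, timestamp in seconds, and event name) and the event names are hypothetical; they are not taken from the cited studies.

```python
from collections import defaultdict
from typing import Dict, List, NamedTuple, Tuple


class LogEvent(NamedTuple):
    participant: str
    task: str
    timestamp: float  # seconds since session start (assumed format)
    event: str        # e.g. "task_start", "click", "error", "task_end"


def summarize(events: List[LogEvent]) -> Dict[Tuple[str, str], Dict[str, float]]:
    """Compute duration, click count, and error count per (participant, task)."""
    grouped = defaultdict(list)
    for e in events:
        grouped[(e.participant, e.task)].append(e)

    summary = {}
    for key, items in grouped.items():
        times = [e.timestamp for e in items]
        summary[key] = {
            "duration_s": max(times) - min(times),
            "clicks": sum(e.event == "click" for e in items),
            "errors": sum(e.event == "error" for e in items),
        }
    return summary


# Hypothetical log for one participant completing one task.
log = [
    LogEvent("P01", "login", 0.0, "task_start"),
    LogEvent("P01", "login", 4.5, "click"),
    LogEvent("P01", "login", 9.0, "error"),
    LogEvent("P01", "login", 15.5, "click"),
    LogEvent("P01", "login", 21.0, "task_end"),
]
print(summarize(log))
```

Summaries of this kind describe what happened during a session but not why it happened, so they are usually paired with another method, such as a questionnaire or an interview.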

The major remote usability testing methods are described in Table 2 along with key findings from the literature. An important replicated finding was that the results from remote and in-person usability testing were generally similar, although significant differences may have appeared under extenuating circumstances, such as poor product usability or the cognitive difficulty of the usability testing tasks [21].

Table 2. Remote usability testing methods and key findings.

| Remote usability testing method | Description | Key findings |
| --- | --- | --- |
| Synchronous remote testing [14,15,20-23] | In-person testing is simulated by using video and audio transmissions and remote desktop access. | |
| Web-based questionnaires or surveys [14,20,21] | Users fill out web-based questionnaires as they complete tasks or after the completion of tasks. | |
| Postuse interview [24] | Users are interviewed over the phone about the usability of a design (qualitative and quantitative data are collected) after they have completed tasks. | |
| User-reported critical incidents or diaries [12,13,19,20] | Users fill out a diary and take notes during a period of use or fill out an incident form when they identify a critical problem with an interface. | |
| User-provided feedback [25] | While completing timed tasks, users provide comments or feedback in a separate browser window. Once a task is complete, the user rates the difficulty of the completed task. | |
| Log analysis [20] | The actions taken by the user (eg, clicks) are captured for future analysis. | |

a Conflicting evidence has been found to support both the statement and its opposite in the literature.

The following general benefits of remote usability testing methods were identified:

- Remote testing does not require a facility, which reduces the time required of participants and evaluators and lowers costs [20].

- Participants can be recruited from a broader geographic area, which allows evaluators to collect results from a larger and more diverse group of people (including those living in other countries or rural areas or those who are otherwise isolated) [14,23].

- Participants can test technologies in a more realistic environment. For example, Petrie et al [24] had people with disabilities perform remote usability testing from the comfort of their own homes, an environment that is almost impossible to replicate perfectly in a laboratory.

Several drawbacks were also described, as follows:

- There is general agreement that collecting data remotely results in the loss of some of the contextual information and nonverbal cues that are captured during in-person evaluations [15,20,22,24,25].

- Remote usability methods (especially asynchronous methods) appear to identify fewer usability problems, lead users to make more errors during testing, and take users more time [14,15]. However, test participants identified about as many usability issues as the evaluators did, although the participants’ categorization of the identified usability problems was deemed not useful. In contrast, Tullis et al [25] did not find this when they compared lab-based usability testing against remote usability testing.

- Dray and Siegel [20] also listed validity problems with self-report methodologies, the inability of log files to distinguish the cause of navigation errors, and management challenges related to troubleshooting network issues and ensuring system compatibility as other drawbacks of remote usability testing.

Many of the factors that may affect the validity, reliability, or efficiency of remote usability testing have not been scientifically studied [26]. These include factors such as the characteristics of users (eg, age and literacy), the effect of slow or unstable internet, the type of devices being used, and testing tactics (eg, verbal, printed, or on-screen instructions).

No matter the method, remote usability testing also involves challenges to implementing the methods in natural contexts, namely in home and community settings [27,28]. These challenges include recruiting a representative sample, especially among populations that may be less comfortable with using certain technology, have lower literacy, or are mistrustful of research [26]. McLaughlin and colleagues [26] proposed strategies such as providing access to phone support prior to the start of any web-based testing.

Remote Usability Testing With Older Adults

Prior work on remote usability testing has been performed with convenience samples of college students [13,14] or healthier and younger adults recruited from workplaces [22,23,25]. We found no published instance of fully remote usability testing with older adults. Diamantidis et al [29] conducted a test of a mobile health system with older individuals with chronic kidney disease. Participants received an in-person tutorial of the system; they used the system at home, received physical materials by mail, and completed a paper diary. Afterward, they returned to complete an in-person satisfaction survey. Petrie et al [24] reported 2 case studies of remote usability testing—one with blind younger adults (n=8) and another with a more heterogeneous group of individuals with disabilities (n=51). They demonstrated the feasibility of remote testing and showed comparable results between in-person and remote testing, although in-person participants in the second study reported more usability problems with the tested website.

Others have described ways to improve in-person usability testing with older adults that may be transferable to remote methods. For example, touch screen devices and hardware that is selected for simplicity may produce better usability testing results with older adults [30-32] and can therefore reduce barriers to remote usability testing. Additionally, the use of large closed captions during a remote testing session has been recommended for older users with visual or hearing impairments. Holden [33] published a Simplified System Usability Scale that was modified for and tested with older adults and those with cognitive disabilities but did not demonstrate its use in remote testing.
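For readers who are unfamiliar with how System Usability Scale responses become a single score, the sketch below implements the standard scoring rule for the original 10-item scale (responses on a 5-point scale, with odd-numbered items worded positively and even-numbered items worded negatively). Whether the Simplified System Usability Scale [33] uses exactly this scoring is an assumption here and should be checked against that publication; the function name and example responses are illustrative only.

```python
from typing import Sequence


def sus_score(responses: Sequence[int]) -> float:
    """Score a standard 10-item System Usability Scale questionnaire.

    `responses` holds the 1-5 ratings for items 1..10 in order.
    Odd-numbered items contribute (rating - 1); even-numbered items
    contribute (5 - rating). The sum is scaled by 2.5 to give 0-100.
    """
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 responses")
    if any(r < 1 or r > 5 for r in responses):
        raise ValueError("each response must be between 1 and 5")

    total = 0
    for item_number, rating in enumerate(responses, start=1):
        if item_number % 2 == 1:
            total += rating - 1
        else:
            total += 5 - rating
    return total * 2.5


# Hypothetical responses from one participant.
print(sus_score([4, 2, 5, 1, 4, 2, 5, 1, 4, 2]))  # prints 85.0
```

Because the calculation needs only the 10 ratings, responses could in principle be collected by phone, mail, or a web form and scored afterward.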

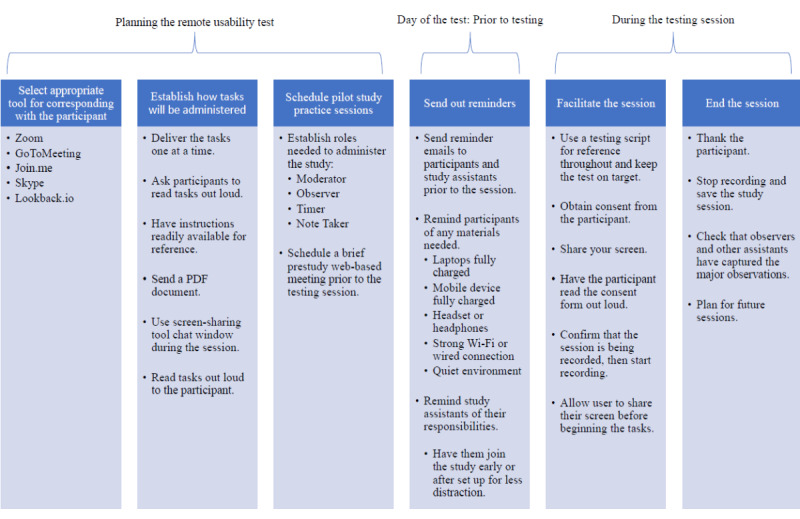

Older adults in remote usability tests may also benefit from non–age-specific strategies for optimizing remote usability testing [34]. These recommendations, which are summarized in Figure 1, include mailing a written copy of instructions, conducting web-based training prior to testing sessions, and sending reminders.

Figure 1.

General guidelines for conducting moderated remote usability testing (adapted from the Nielsen Norman Group [34]).

Conclusions

Our rapid review and synthesis of the literature revealed that remote usability testing still appears to be an emerging field [26] whose great potential is accentuated during major events, such as the COVID-19 pandemic. The decision to pursue the further development of and research on remote usability testing is straightforward, given the apparent advantages, validity, and feasibility of remote usability testing and the need for the method.

The method, however, must be adapted to and tested with older adults. The use of technology for remote services among older adults in the United States has been increasing [35,36], as has older adults’ proficiency with internet-based technology [37]. A Pew Research Center national survey reported increases in internet use (from 12% to 67%) and the adoption of home broadband (from 0% to 51%) from 2000 to 2016, as well as increases in smartphone (from 11% to 42%) and tablet (from 1% to 32%) ownership from 2011 to 2016 [4]. However, the older adult population is diverse and has different needs compared to those of other groups when it comes to technology and the usability testing of technology. US adults aged 65 years or older are more likely than their younger counterparts to experience difficulties with physical or cognitive function, including reduced memory capacity, stiff joints or arthritis, and vision or hearing disability [38,39]. These factors, together with discomfort with or reduced motivation to use technology, elevate the importance of usability testing [40] but, ironically, may increase the difficulty of conducting remote usability testing. Additional recommendations and best practices will thus be needed to ensure effective and efficient remote usability testing with older adults.

We call on human factors, human-computer interaction, and digital health communities to further develop, describe, and test remote usability testing approaches that will be suitable across diverse populations, including older adults, those with lower literacy or health literacy, and individuals with cognitive or physical disabilities. Progress toward this goal will not only better support homebound or mobility-restricted individuals but may also improve the efficiency, ecological validity, and effectiveness of usability testing in general.

Acknowledgments

We thank Dr Anne McLaughlin and the members of the Brain Safety Lab for their input. The authors of this paper were supported by grant R01 AG056926 from the National Institute on Aging of the National Institutes of Health. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health. We thank the three anonymous reviewers.

Footnotes

Authors' Contributions: JRH and RJH conceived this paper. All authors wrote and edited this paper and approved the final version.

Conflicts of Interest: None declared.

References

- 1. Ornstein KA, Leff B, Covinsky KE, Ritchie CS, Federman AD, Roberts L, Kelley AS, Siu AL, Szanton SL. Epidemiology of the homebound population in the United States. JAMA Intern Med. 2015 Jul;175(7):1180–1186. doi: 10.1001/jamainternmed.2015.1849. http://europepmc.org/abstract/MED/26010119

- 2. COVID-19 risks and vaccine information for older adults. Centers for Disease Control and Prevention. [2020-08-27]. https://www.cdc.gov/coronavirus/2019-ncov/need-extra-precautions/older-adults.html#

- 3. Cudjoe TKM, Kotwal AA. "Social Distancing" amid a crisis in social isolation and loneliness. J Am Geriatr Soc. 2020 Jun;68(6):E27–E29. doi: 10.1111/jgs.16527. http://europepmc.org/abstract/MED/32359072

- 4. Anderson M, Perrin A. Technology use among seniors. Pew Research Center. 2017. May 17, [2020-11-24]. https://www.pewresearch.org/internet/2017/05/17/technology-use-among-seniors/

- 5. Wosik J, Fudim M, Cameron B, Gellad ZF, Cho A, Phinney D, Curtis S, Roman M, Poon EG, Ferranti J, Katz JN, Tcheng J. Telehealth transformation: COVID-19 and the rise of virtual care. J Am Med Inform Assoc. 2020 Jun 01;27(6):957–962. doi: 10.1093/jamia/ocaa067. http://europepmc.org/abstract/MED/32311034

- 6. Tricco AC, Antony J, Zarin W, Strifler L, Ghassemi M, Ivory J, Perrier L, Hutton B, Moher D, Straus SE. A scoping review of rapid review methods. BMC Med. 2015 Sep 16;13:224. doi: 10.1186/s12916-015-0465-6. https://bmcmedicine.biomedcentral.com/articles/10.1186/s12916-015-0465-6

- 7. Grant M, Booth A. A typology of reviews: an analysis of 14 review types and associated methodologies. Health Info Libr J. 2009 Jun;26(2):91–108. doi: 10.1111/j.1471-1842.2009.00848.x.

- 8. Yasin A, Fatima R, Wen L, Afzal W, Azhar M, Torkar R. On using grey literature and Google Scholar in systematic literature reviews in software engineering. IEEE Access. 2020;8:36226–36243. doi: 10.1109/access.2020.2971712. https://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=8984351

- 9. Shultz M. Comparing test searches in PubMed and Google Scholar. J Med Libr Assoc. 2007 Oct;95(4):442–445. doi: 10.3163/1536-5050.95.4.442. http://europepmc.org/abstract/MED/17971893

- 10. Greenhalgh T, Robert G, Macfarlane F, Bate P, Kyriakidou O, Peacock R. Storylines of research in diffusion of innovation: a meta-narrative approach to systematic review. Soc Sci Med. 2005 Jul;61(2):417–430. doi: 10.1016/j.socscimed.2004.12.001.

- 11. Strauss A, Corbin J. Basics of Qualitative Research: Techniques and Procedures for Developing Grounded Theory, 2nd ed. Thousand Oaks, California: Sage; 1998.

- 12. Castillo JC, Hartson HR, Hix D. Remote usability evaluation: Can users report their own critical incidents? CHI98: ACM Conference on Human Factors and Computing Systems; April 18-23, 1998; Los Angeles, California, USA. 1998. Apr, pp. 253–254.

- 13. Hartson HR, Castillo JC. Remote evaluation for post-deployment usability improvement. AVI98: Advanced Visual Interface '98; May 24-27, 1998; L'Aquila, Italy. 1998. May, pp. 22–29.

- 14. Andreasen M, Nielsen H, Schrøder S, Stage J. What happened to remote usability testing? An empirical study of three methods. CHI '07: SIGCHI Conference on Human Factors in Computing Systems; April 28 to May 3, 2007; San Jose, California, USA. 2007. pp. 1405–1414.

- 15. Thompson KE, Rozanski EP, Haake AR. Here, there, anywhere: Remote usability testing that works. SIGITE04: ACM Special Interest Group for Information Technology Education Conference 2004; October 28-30, 2004; Salt Lake City, Utah, USA. 2004. Oct, pp. 132–137.

- 16. Madathil KC, Greenstein JS. Synchronous remote usability testing: a new approach facilitated by virtual worlds. CHI '11: SIGCHI Conference on Human Factors in Computing Systems; May 7-12, 2011; Vancouver, British Columbia, Canada. 2011.

- 17. Chynał P, Szymański JM. Remote usability testing using eyetracking. IFIP Conference on Human-Computer Interaction – INTERACT 2011; September 5-9, 2011; Lisbon, Portugal. 2011. pp. 356–361.

- 18. Whitenton K. Tools for unmoderated usability testing. Nielsen Norman Group. 2019. Sep 22, [2020-11-24]. https://www.nngroup.com/articles/unmoderated-user-testing-tools/

- 19. Bruun A, Gull P, Hofmeister L, Stage J. Let your users do the testing: a comparison of three remote asynchronous usability testing methods. CHI '09: CHI Conference on Human Factors in Computing Systems; April 4-9, 2009; Boston, Massachusetts, USA. 2009. Apr, pp. 1619–1628.

- 20. Dray S, Siegel D. Remote possibilities?: international usability testing at a distance. Interactions (NY) 2004;11(2):10–17. doi: 10.1145/971258.971264.

- 21. Sauer J, Sonderegger A, Heyden K, Biller J, Klotz J, Uebelbacher A. Extra-laboratorial usability tests: An empirical comparison of remote and classical field testing with lab testing. Appl Ergon. 2019 Jan;74:85–96. doi: 10.1016/j.apergo.2018.08.011.

- 22. Bernheim Brush AJ, Ames M, Davis J. A comparison of synchronous remote and local usability studies for an expert interface. CHI04: CHI 2004 Conference on Human Factors in Computing Systems; April 24-29, 2004; Vienna, Austria. 2004.

- 23. Hammontree M, Weiler P, Nayak N. Remote usability testing. Interactions (NY) 1994 Jul;1(3):21–25. doi: 10.1145/182966.182969.

- 24. Petrie H, Hamilton F, King N, Pavan P. Remote usability evaluations with disabled people. CHI '06: SIGCHI Conference on Human Factors in Computing Systems; April 22-27, 2006; Montréal, Québec, Canada. 2006. pp. 1133–1141.

- 25. Tullis T, Fleischman S, Mcnulty M, Cianchette C, Bergel M. An empirical comparison of lab and remote usability testing of Web sites. Usability Professionals’ Association 2002 Conference Proceedings; 2002; Bloomingdale, Illinois, USA. 2002.

- 26. McLaughlin AC, DeLucia PR, Drews FA, Vaughn-Cooke M, Kumar A, Nesbitt RR, Cluff K. Evaluating medical devices remotely: Current methods and potential innovations. Hum Factors. 2020 Nov;62(7):1041–1060. doi: 10.1177/0018720820953644. https://journals.sagepub.com/doi/10.1177/0018720820953644?url_ver=Z39.88-2003&rfr_id=ori:rid:crossref.org&rfr_dat=cr_pub%3dpubmed

- 27. Holden RJ, Scott AMM, Hoonakker PLT, Hundt AS, Carayon P. Data collection challenges in community settings: insights from two field studies of patients with chronic disease. Qual Life Res. 2015 May;24(5):1043–1055. doi: 10.1007/s11136-014-0780-y. http://europepmc.org/abstract/MED/25154464

- 28. Valdez RS, Holden RJ. Health care human factors/ergonomics fieldwork in home and community settings. Ergon Des. 2016 Oct;24(4):4–9. doi: 10.1177/1064804615622111. http://europepmc.org/abstract/MED/28781512

- 29. Diamantidis CJ, Ginsberg JS, Yoffe M, Lucas L, Prakash D, Aggarwal S, Fink W, Becker S, Fink JC. Remote usability testing and satisfaction with a mobile health medication inquiry system in CKD. Clin J Am Soc Nephrol. 2015 Aug 07;10(8):1364–1370. doi: 10.2215/CJN.12591214. https://cjasn.asnjournals.org/cgi/pmidlookup?view=long&pmid=26220816

- 30. Murata A, Iwase H. Usability of touch-panel interfaces for older adults. Hum Factors. 2005;47(4):767–776. doi: 10.1518/001872005775570952.

- 31. Page T. Touchscreen mobile devices and older adults: a usability study. Int J Hum Factors Ergon. 2014;3(1):65. doi: 10.1504/IJHFE.2014.062550.

- 32. Ziefle M, Bay S. How older adults meet complexity: Aging effects on the usability of different mobile phones. Behav Inf Technol. 2005 Sep;24(5):375–389. doi: 10.1080/0144929042000320009.

- 33. Holden RJ. A Simplified System Usability Scale (SUS) for Cognitively Impaired and Older Adults. Proceedings of the International Symposium on Human Factors and Ergonomics in Health Care; 2020 International Symposium on Human Factors and Ergonomics in Health Ca; March 8-11, 2020; Toronto, Ontario. 2020. Sep 16, pp. 180–182.

- 34. Moran K, Pernice K. Remote moderated usability tests: How to do them. Nielsen Norman Group. 2020. Apr 26, [2020-11-24]. https://www.nngroup.com/articles/moderated-remote-usability-test/

- 35. Baker S, Warburton J, Waycott J, Batchelor F, Hoang T, Dow B, Ozanne E, Vetere F. Combatting social isolation and increasing social participation of older adults through the use of technology: A systematic review of existing evidence. Australas J Ageing. 2018 Sep;37(3):184–193. doi: 10.1111/ajag.12572.

- 36. Lai HJ. Investigating older adults’ decisions to use mobile devices for learning, based on the unified theory of acceptance and use of technology. Interactive Learning Environments. 2018 Nov 21;28(7):890–901. doi: 10.1080/10494820.2018.1546748.

- 37. Morrow-Howell N, Galucia N, Swinford E. Recovering from the COVID-19 pandemic: A focus on older adults. J Aging Soc Policy. 2020;32(4-5):526–535. doi: 10.1080/08959420.2020.1759758.

- 38. Barnard Y, Bradley MD, Hodgson F, Lloyd AD. Learning to use new technologies by older adults: Perceived difficulties, experimentation behaviour and usability. Comput Human Behav. 2013 Jul;29(4):1715–1724. doi: 10.1016/j.chb.2013.02.006.

- 39. Wildenbos GA, Peute L, Jaspers M. Aging barriers influencing mobile health usability for older adults: A literature based framework (MOLD-US). Int J Med Inform. 2018 Jun;114:66–75. doi: 10.1016/j.ijmedinf.2018.03.012.

- 40. Brown J, Kim HN. Validating the usability of an Alzheimer’s caregiver mobile app prototype. 2020 IISE Annual Conference; May 30 to June 2, 2020; New Orleans, Louisiana, USA. 2020.