Abstract

Sharing emotional experiences impacts how we perceive and interact with the world, but the neural mechanisms that support this sharing are not well characterized. In this study, participants (N = 52) watched videos in an MRI scanner in the presence of an unfamiliar peer. Videos varied in valence and social context (i.e., participants believed their partner was viewing either the same video [joint condition] or a different video [solo condition]). Reported togetherness increased during positive videos regardless of social condition, indicating that positive contexts may lessen the experience of being alone. Two analysis approaches were used to examine both sustained neural activity averaged over time and dynamic synchrony throughout the videos. Both approaches revealed clusters in the medial prefrontal cortex that were more responsive to the joint condition. We observed a time‐averaged social‐emotion interaction in the ventromedial prefrontal cortex, although this region did not demonstrate synchrony effects. In contrast, social‐emotion interactions in the amygdala and superior temporal sulcus reflected greater neural synchrony in the joint than in the solo condition during positive videos, but the opposite pattern during negative videos. These findings suggest that positive stimuli may be more salient when experienced together, pointing to a possible mechanism for forming social bonds.

Keywords: affect, social attention, social context, social neuroscience

Participants watched emotional videos in an MRI scanner either with a partner or alone. Social‐emotion interactions in the amygdala and superior temporal sulcus showed greater neural synchrony in the joint condition during positive videos, alongside reports of greater feelings of togetherness. These findings suggest that positive stimuli may be more salient when experienced together, pointing to a possible mechanism for forming social bonds.

1. INTRODUCTION

Social experiences shape human behavior. Sharing the world with others is integral to how we perceive and learn about our surroundings, as well as to how we form social bonds (Shteynberg, Hirsh, Bentley, & Garthoff, 2020; Wolf, Launay, & Dunbar, 2016; Wolf & Tomasello, 2020). The knowledge that we are attending to the same thing as another person (i.e., shared attention) can change our perception of that experience and can affect cognitive processes such as memory, motivation, and judgments (Shteynberg, 2015; Stephenson, Edwards, & Bayliss, 2021). This shared attention often includes verbal or non‐verbal communication between partners, but it does not necessarily require eye contact or even shared physical presence. Despite the importance of this phenomenon, open questions remain regarding how shared experiences affect the brain, individual attention, and behavior. The current study addresses these gaps by examining the neural, eye gaze, and behavioral responses to shared and non‐shared contexts during naturalistic emotional video events.

1.1. Social sharing

1.1.1. Neural bases of sharing events

Past work on the neuroscience of sharing experiences has primarily used a joint attention framework. Joint attention is the coordination of attention between two people onto a third, separate thing (for example, another person, an object, or an idea), at which point the social partners achieve a state of shared attention awareness (Redcay & Saxe, 2013). This body of literature has pinpointed regions in the mentalizing network of the brain, including the posterior superior temporal sulcus and temporoparietal junction (pSTS/TPJ), dorsomedial prefrontal cortex (dmPFC), and posterior cingulate cortex (PCC), as critical for joint attention engagement, even in non‐interactive settings (Mundy, 2018; Redcay & Saxe, 2013). Real‐life joint attention also involves complex, real‐time interaction with social partners, including receiving communicative feedback. Neuroimaging studies that have extended classic joint attention paradigms through interactive designs, in which participants believe they are responding to a partner in real time, show more extensive engagement of both the medial and lateral regions of the mentalizing network, as well as some engagement of additional neural systems such as the reward network (Bristow, Rees, & Frith, 2007; Redcay et al., 2010; Schilbach et al., 2010). However, the psychological phenomenon of shared attention itself is difficult to isolate in interactive joint attention paradigms. Direct joint attention interactions with a partner include receiving gaze or pointing cues, which conflates the effects of visual and social attention cues with the perceptual experience of sharing attention. Additionally, sharing attention in interactive contexts may be confounded with direct positive feedback. Therefore, studying the neural bases of shared attention requires balancing the benefits of simulating realistic social experiences against the confounds that such simulations introduce, all within the constraints of the MRI scanner.

1.1.2. Co‐viewing paradigms with naturalistic stimuli

Employing naturalistic stimuli in MRI designs, in particular dynamic viewing events over longer timescales, increases both ecological and predictive validity (Finn & Rosenberg, 2021; Redcay & Moraczewski, 2019; Zaki & Ochsner, 2009). While there is increasing concern about generally low test–retest reliability in fMRI (Elliot et al., 2020; Milham, Vogelstein, & Xu, 2021; Zuo, Xu, & Milham, 2019), naturalistic viewing has been suggested to be more reliable both within and between subjects (Hasson, Malach, & Heeger, 2010), and it outperforms resting state when predicting real‐world behavior (Finn & Bandettini, 2021). Movie clips that simulate what the brain perceives in the real world engage a broad network of regions, are effective in inducing emotional states, and allow for social and non‐social viewing designs without direct communication (Bartels & Zeki, 2003; Fernández‐Aguilar, Navarro‐Bravo, Ricarte, Ros, & Latorre, 2019; Golland, Levit‐Binnun, Hendler, & Lerner, 2017; Haxby, Gobbini, & Nastase, 2020). Furthermore, using co‐viewing to examine how the brain supports sharing stimuli without direct communication can isolate feelings or awareness of social sharing while controlling for the other perceptual, affective, and cognitive processes that arise during a direct social interaction. A large body of behavioral literature using co‐viewing paradigms with naturalistic stimuli demonstrates mere social presence effects, briefly highlighted in the following section (Bruder et al., 2012; Fridlund, 1991; Golland, Mevorach, & Levit‐Binnun, 2019; Hess, Banse, & Kappas, 1995). We can draw upon these designs, adapted to the MRI environment, to examine the neural processes that support cognitive and behavioral effects of social context.
Studies that place one person in an MRI scanner and one person outside have demonstrated changes in the neural response to stimuli simply through the perception of simultaneous viewing (Golland et al., 2017; Wagner et al., 2015). These tasks are well‐suited to examine interactive effects of social context and other stimulus effects, such as emotions, which have not typically been studied in a joint attention framework.

1.2. Interactions between shared events and emotions

Prior studies of joint attention in the brain have mostly used neutral or meaningless stimuli so that the phenomenon was not confounded by other stimulus effects. However, emotion and social context are tightly linked in the real world. There is also evidence for bidirectional interactions of emotional valence and social context, which are discussed below.

1.2.1. Social context effects on positive emotions

Evidence suggests that social context facilitates the expression of positive emotions. The mere presence of another person increases individual smiling (Bruder et al., 2012; Fridlund, 1991; Hess et al., 1995) and dyadic smiling synchrony between partners (Golland et al., 2019). Individual displays of amusement also increase with partner familiarity (e.g., friends vs. strangers) and communication ability (e.g., whether the partner is visible and whether they directly interact), indicating that greater sociality may lead to greater changes in positive emotion (Bruder et al., 2012). However, co‐viewing paradigms have not consistently demonstrated associated changes in self‐reported feelings of positive emotion, with studies showing either no effects (Fridlund, 1991; Hess et al., 1995; Jakobs et al., 1999; Jolly, Tamir, Burum, & Mitchell, 2019) or greater facilitation (Wagner et al., 2015). Facilitation and convergence of positive emotions in a social context have been linked to physiological and neural changes, with reports of increased dyadic facial synchrony (Golland et al., 2019) as well as changes in reward areas of the brain (Wagner et al., 2015).

1.2.2. Social context effects on negative emotions

Social presence effects on negative emotions may differ from those on positive emotions. Effects on facial displays of fear or sadness in the presence of others are more mixed, with some studies finding facilitation effects similar to those for positive emotions (Bruder et al., 2012) and some finding less facilitation (Jakobs, Manstead, & Fischer, 2001). As with positive feelings, however, self‐reported negative emotions are not consistently facilitated or transferred (Bruder et al., 2012). In fact, social presence may instead lessen the negative effects of aversive stimuli (Mawson, 2005; Qi et al., 2020; Wingenbach, Ribeiro, Nakao, Gruber, & Boggio, 2019). Affiliative social behavior has been linked to a reduction in negative outcomes (such as stress responses) across a number of species including humans, a phenomenon known as social buffering (Kikusui, Winslow, & Mori, 2006; Kiyokawa & Hennessy, 2018). Social baseline theory hypothesizes that because the human brain evolved within a highly social environment, its default state is to be surrounded by others (Beckes & Coan, 2011). Effects supporting this theory include lower BOLD activity in response to stressful stimuli when participants are in a social context compared to when they are alone (Coan, Schaefer, & Davidson, 2006; Eisenberger, Taylor, Gable, Hilmert, & Lieberman, 2007). Additionally, social deprivation or isolation causes abnormal stress levels, while social support acts to reduce or normalize stress responses (Bugajski, 1999; Gunnar & Hostinar, 2015; Koss, Hostinar, Donzella, & Gunnar, 2014; Thomas et al., 2018). Thus, social contexts may increase positive emotions and decrease negative emotions; however, the mixed results for reported emotion changes in social contexts suggest a need to examine the underlying neural responses more thoroughly.

1.2.3. Emotion effects on social affiliation

Relatedly, emotional content has the potential to affect feelings of social affiliation during shared events, and such effects have been found independent of emotional valence. Facial synchrony between partners during both positive and negative events predicts feelings of affiliation (Golland et al., 2019). Larger collective gatherings in which participants perceive that they are in emotional synchrony with the group lead to stronger feelings of sociality and more intense emotions (Páez, Rimé, Basabe, Wlodarczyk, & Zumeta, 2015; Shteynberg et al., 2014), and threat manipulations have been shown to increase both emotional contagion and partner affiliation (Gump & Kulik, 1997). Recent work examining movie viewing in a variety of shared emotional contexts found that, rather than increased enjoyment or amplified emotions, participants reported greater motivation to share experiences due to a desire for social connection (Jolly et al., 2019). There is also some evidence that the neural mechanisms supporting social processing may be affected by emotional content, both in and out of a social context. Neural responses in the mentalizing network during solo emotional video viewing track self‐reported feelings of negative valence, which suggests a mechanism for promoting emotional sharing (Nummenmaa et al., 2012). Furthermore, emotional intensity feedback from a partner during movie viewing increases group neural alignment in the medial prefrontal cortex as well as in regions of the emotional processing network, and individual differences in these neural responses are associated with feelings of togetherness (Golland et al., 2017).

To summarize, an extensive body of literature indicates that social and emotional processing are intertwined, but the relationship is context‐dependent, and therefore difficult to disentangle. A general picture has emerged of potential facilitation of positive feelings and increased social affiliation during positive shared experiences, and no facilitation or a buffering effect of negative feelings during negative shared experiences. However, these interactions have not typically been examined within the framework of the neuroscience of shared attention. Some evidence suggests that sharing emotional stimuli may cause greater engagement or synchrony of regions within the mentalizing, emotional, and reward networks of the brain, although the question of how sharing dynamic positive and negative events without direct partner feedback impacts activity in any or all of these regions remains unresolved.

1.3. Dynamic and sustained processes during shared attention

The presence of another person during real‐world shared events is a sustained state, yet events themselves can unfold dynamically, with specific details causing fluctuations in social–emotional processing and engagement. Certain moments may be more emotional than others, and certain moments may cause a person to think more or less about their partner. Thus, identifying both sustained and dynamic effects in the brain requires different analysis methods. Inter‐subject correlation (ISC) involves correlating time‐locked, stimulus‐driven activity across participants and can be used to identify common patterns of neural processing irrespective of the direction or magnitude of the underlying BOLD signal (Hasson, Nir, Levy, Fuhrmann, & Malach, 2004; Nastase, Gazzola, Hasson, & Keysers, 2019). In other words, higher ISC reflects greater stimulus‐evoked synchrony of brain activity across subjects. In this way, ISC is well suited to complex and dynamic stimuli that are idiosyncratic between trials but consistent across people (Ben‐Yakov, Honey, Lerner, & Hasson, 2012; Golland et al., 2007; Wilson, Molnar‐Szakacs, & Iacoboni, 2008). On the other hand, traditional approaches such as event‐related averaging through general linear models (GLMs) can provide important information about neural processing that is not captured by ISC. This technique is well suited to data that evoke similar sustained neural patterns on each trial within a condition, and collapsing across time yields a more robust signal. To get a more complete picture of how the brain behaves in real‐world contexts, it is important to compare time‐averaged GLM data, which capture sustained response differences, with time‐dependent ISC data, which capture dynamic or fluctuating responses.
In this study, we analyzed the same data in two ways (i.e., time‐averaged neural engagement through GLM and time‐dependent neural synchrony through ISC) to compare sustained and fluctuating neural responses across six (two social × three emotion) contexts.
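The difference between the two approaches can be illustrated with a toy simulation. The sketch below is entirely synthetic: the signal model, noise level, and function names are illustrative assumptions, not the study's pipeline. Two conditions have the same expected mean amplitude, so a time‐averaged summary (a crude stand‐in for the amplitude a GLM would estimate) barely separates them, but in one condition every subject's signal follows a shared stimulus‐driven time course, which leave‐one‐out ISC detects.

```python
import numpy as np

rng = np.random.default_rng(0)
N_SUBJ, N_TR = 20, 200

# Hypothetical stimulus-driven time course, demeaned so it adds no mean offset.
stimulus = rng.standard_normal(N_TR)
stimulus -= stimulus.mean()

def simulate(shared_weight):
    """Subjects x TRs: baseline + shared_weight * stimulus + independent noise."""
    noise = rng.standard_normal((N_SUBJ, N_TR))
    return 1.0 + shared_weight * stimulus + noise

def mean_amplitude(data):
    """Time-averaged response per subject (a crude proxy for a GLM amplitude)."""
    return data.mean(axis=1)

def loo_isc(data):
    """Leave-one-out ISC: correlate each subject with the mean of all other subjects."""
    out = np.empty(data.shape[0])
    for i in range(data.shape[0]):
        others = np.delete(data, i, axis=0).mean(axis=0)
        out[i] = np.corrcoef(data[i], others)[0, 1]
    return out

synced = simulate(1.0)    # shared time course present
unsynced = simulate(0.0)  # noise only

print("mean amplitude:", mean_amplitude(synced).mean(), mean_amplitude(unsynced).mean())
print("mean ISC:", loo_isc(synced).mean(), loo_isc(unsynced).mean())
```

Because the shared time course is constructed to have zero mean, it adds variance but no mean offset, which is why only ISC separates the two simulated conditions here. The reverse case (a sustained amplitude difference with no shared temporal structure) would be visible to the GLM but not to ISC.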

The current study examined both neural and behavioral effects of sharing attention with a partner while viewing videos of varying emotional content. To examine neural effects, we collected functional MRI data during positive, neutral, and negative video events that participants watched either at the same time as a partner or alone. The partner was a lab confederate seated outside the scanner, so the target neural process of interest was the individual perception of co‐viewing these emotional events compared to watching alone. To examine behavioral effects associated with these social and emotional contexts, we collected eye‐tracking data and self‐reported emotional and social feelings (i.e., perceived togetherness) about the viewing experience. We expected that perceived togetherness would increase during the joint condition compared to solo, and that a corresponding difference in neural activity would be found within the mentalizing and reward networks of the brain. We expected that perceived affect would reflect the emotional content of the videos and hypothesized that emotional videos would recruit regions of the reward and emotional salience networks. We also expected to observe social facilitation effects for positive emotions and social buffering effects for negative emotions, demonstrated by a boost in reported positive affect during the joint compared to solo conditions. We expected that any observed emotion‐by‐social context interactions in neural engagement or synchrony would occur within the aforementioned mentalizing, emotion, and reward networks.

2. METHODS

The hypotheses and analysis plan for the data collected in this study were pre‐registered on the Open Science Framework: https://osf.io/muzc3. Changes or amendments made to the planned methods and analyses are explained in each section.

2.1. Participants

Fifty‐two participants (mean age: 20.6; age range: 18–34; F = 29, M = 23; race/ethnicity: 43% Asian, 16% Black/African American, 6% Hispanic/Latinx, 27% White, and 8% Multiracial) were recruited from the undergraduate population at the University of Maryland. Our target sample was 50 participants; our stopping rule was to continue collecting usable data until all already‐scheduled sessions were complete, which yielded two additional participants. Data from three additional subjects were collected but excluded from analysis because they reported that they did not believe their confederate partner was a true participant. All participants were right‐handed English speakers with normal or corrected‐to‐normal vision. All participants signed an informed consent form in accordance with the Declaration of Helsinki and the Institutional Review Board at the University of Maryland and were compensated for their time with money or course credit.

2.2. Behavioral session

In the first session, participants got to know a peer through (1) an unstructured 5‐min conversation and (2) a 15‐min activity that they completed together, in which they came up with a common list of favorite things (either books, movies, TV shows, or music). This session aimed to induce familiarity between peers, as evidence suggests closeness increases effects of shared attention (Bruder et al., 2012; Hess et al., 1995; Shteynberg et al., 2014), and past studies have examined shared attention in friend pairs (Wagner et al., 2015). The peer was not another participant but an undergraduate research assistant serving as a confederate. We employed a total of six confederates throughout data collection and gender‐matched confederates to participants. In this session, participants also completed surveys related to their social behavior and answered questions about their partner and the interaction they had.

2.3. Imaging session

Participants returned for a second session with their peer confederate at the Maryland Neuroimaging Center. Participants believed that they had signed up specifically for the MRI version of the experiment, while their partner (i.e., the confederate) had signed up for a behavioral version. They completed a brief practice session with their partner, in which they watched either the same video or different videos on laptops. Both the practice and the experimental videos were presented in PsychoPy2 version 1.83.04 (Peirce et al., 2019). They were then brought into the scanner room, where their partner was directed to sit in front of a computer with a video camera trained on the partner's profile, and the participant was then positioned in the scanner.

During the session, participants viewed brief videos of positive, negative, and neutral affect content. These videos showed various scenarios involving humans, animals and the environment, lasting either 20 or 30 s. In half of the trials, participants believed they were watching the video at the same time as their partner, whereas in the other half of the trials they believed their partner was watching a different video. This resulted in a 2 × 3 design with six conditions in total: three emotions in the joint context, and three emotions in the solo context. A total of 12 videos were included in each condition (one 20 s and one 30 s video per run, across six runs) for a total of 300 s of video stimulation per condition. One limitation of using these types of naturalistic events is that there could be some effects of video content unrelated to emotional feelings induced. To control for some of these potential effects, a larger set of 95 videos was viewed and rated by a group of pilot subjects (N = 20) prior to use in the full study. The final set was selected to meet the following conditions: novelty (less than ¼ of the subjects had seen each video), luminance (roughly equivalent brightness for visual similarity), arousal (emotion videos were significantly more arousing than neutral on a scale of 1–9 at p < .001), and affect (the emotion conditions were significantly different from each other on a scale of 1–9 in the expected direction at p < .001 with no outliers). Additionally, we ensured that the positive and negative emotion conditions contained the same number of visible human faces. To further control for potential confounds related to video content across social conditions, videos were counterbalanced so half of the subjects received a set of videos in the joint condition, and half of the subjects received the same video set in the solo condition.
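The design arithmetic in the paragraph above can be checked directly. In this sketch, only the run count, video durations, and condition labels come from the text; the counterbalancing helper and its set labels ("A", "B") are hypothetical and serve only to show that the same video set is assigned to opposite social conditions in the two subject groups.

```python
# Arithmetic check of the 2 (social) x 3 (emotion) video design described above.
RUNS = 6
DURATIONS_S = (20, 30)  # one 20 s and one 30 s video per condition per run
SOCIAL = ("joint", "solo")
EMOTION = ("positive", "neutral", "negative")

conditions = [(s, e) for s in SOCIAL for e in EMOTION]
videos_per_condition = RUNS * len(DURATIONS_S)       # 6 runs x 2 videos
seconds_per_condition = RUNS * sum(DURATIONS_S)      # 6 runs x 50 s
video_seconds_per_run = len(conditions) * sum(DURATIONS_S)  # excludes rating periods
total_videos = len(conditions) * videos_per_condition
total_video_seconds = len(conditions) * seconds_per_condition

def social_condition(group, video_set):
    """Hypothetical counterbalancing labels: set "A" is joint for group 1 and
    solo for group 2; set "B" is the reverse."""
    joint_set = "A" if group == 1 else "B"
    return "joint" if video_set == joint_set else "solo"

print(len(conditions), videos_per_condition, seconds_per_condition)
print(video_seconds_per_run, total_videos, total_video_seconds)
```

Under these numbers, each run contains 300 s of video (six conditions × 50 s), and the full session contains 72 videos totaling 1,800 s, not counting rating periods.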

In the scanner, participants rated their subjective feelings of aloneness vs. togetherness (“How alone or together do you feel?” on a scale of 1 = “Very Alone” to 9 = “Very Together”) and positive vs. negative affect (“How Negative or Positive do you feel?” on a scale of 1 = “Very Negative” to 9 = “Very Positive”) immediately after viewing. They were then shown what they believed to be their partner's affect rating, labeled with their partner's name (in the joint condition), or the average affect rating from a separate pilot group, labeled “Average Rating” (in the solo condition). These ratings were presented to further the illusion that participants were co‐viewing movies in the joint condition, although the ratings themselves did not differ across conditions. The numbers shown were taken from the average affect ratings for each video from the pilot group of subjects. The true averages ranged from 2.6 to 7.6 and were rescaled to fit the 1–9 scale and rounded to the nearest whole number. During each trial, participants also saw a smaller “live” video feed of their partner's profile next to the primary video, randomly presented on either the left or the right side of the screen. These videos were pre‐recorded and showed the confederates actually watching and reacting to the same videos that were used in the task (although they showed only the partner's face in profile and not the videos). In the joint condition, each video of the partner matched the main video shown to the participant, and the partner's face was oriented toward the screen. In the solo condition, the partner video was randomly selected from the pool of videos within the same emotional condition, meaning it showed the partner watching a different video, and the partner's face was oriented away from the screen.
We included this design to give the participant a clear cue as to whether they were in a joint or a solo trial, and to further induce the perception that they were watching joint videos with the partner and solo videos alone. All of the partner videos used in the joint and solo conditions were identical over the course of the session to control for any condition‐wide differential effects of facial expression or other visual content. See Figure S1 for an example of this setup. Eye movements were tracked in the scanner with the EyeLink 1000 system (SR Research; https://www.sr-research.com/). Data were sampled at 1,000 Hz from the right eye.
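The text states that pilot averages (range 2.6 to 7.6) were mapped onto the 1–9 scale and rounded, but does not give the exact mapping. The sketch below assumes a linear min‐max rescaling with round‐half‐up; both choices, and the function name, are assumptions rather than the authors' documented procedure.

```python
import math

PILOT_MIN, PILOT_MAX = 2.6, 7.6  # observed range of pilot mean ratings (from the text)
SCALE_MIN, SCALE_MAX = 1, 9      # response scale shown to participants

def displayed_rating(pilot_mean: float) -> int:
    """Linearly rescale a pilot mean onto 1-9, then round half up (assumed mapping)."""
    frac = (pilot_mean - PILOT_MIN) / (PILOT_MAX - PILOT_MIN)
    rescaled = SCALE_MIN + frac * (SCALE_MAX - SCALE_MIN)
    # Python's round() uses banker's rounding, so round half up explicitly.
    return int(math.floor(rescaled + 0.5))

for pilot in (2.6, 5.1, 7.6):
    print(pilot, displayed_rating(pilot))
```

Under this assumption, the lowest and highest pilot averages map to the scale endpoints (1 and 9), and the midpoint of the pilot range (5.1) maps to the scale midpoint (5).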

2.4. Functional MRI acquisition, preprocessing, and analysis

Functional MRI data were collected at the Maryland Neuroimaging Center on a 3.0 Tesla scanner with a 32‐channel head coil (MAGNETOM Trio Tim System, Siemens Medical Solutions). Visual stimuli were presented on a rear‐projection screen and viewed by participants via a mirror mounted on the head coil.

A single T1‐weighted image was acquired using a three‐dimensional magnetization‐prepared rapid acquisition gradient‐echo (MPRAGE) pulse sequence (192 contiguous sagittal slices, voxel size = 0.45 × 0.45 × 0.90 mm, TR = 1,900 ms, TE = 2.32 ms, flip angle = 9°, pixel matrix = 512 × 512), along with opposite phase‐encoding fieldmap scans (66 interleaved axial slices, voxel size = 2.19 × 2.19 × 2.20 mm, TR = 7,930 ms, TE = 73 ms, flip angle = 90°, pixel matrix = 96 × 96). Six runs of blood oxygenation level dependent (BOLD) task data were acquired using multiband‐accelerated echo‐planar imaging (66 interleaved axial slices, multiband factor = 6, voxel size = 2.19 × 2.19 × 2.20 mm, TR = 1,250 ms, TE = 39.4 ms, flip angle = 90°, pixel matrix = 96 × 96). Two further runs of BOLD localizer data were acquired with the same parameters; these data were not used in the analyses described in this study.

We used a publicly available, standardized preprocessing pipeline (fMRIPrep 1.4.1 [Esteban et al., 2019], available at 10.5281/zenodo.852659). Any differences in preprocessing steps from the preregistered analysis plan were due to this standardization of methods, which allows for more generalizable and potentially reproducible results. The following steps were used to prepare data for analysis: the T1‐weighted image was corrected for intensity nonuniformity, skull‐stripped, and spatially normalized to standard space with volume‐based nonlinear registration. The BOLD runs were co‐registered to the T1w reference, slice‐time corrected, and resampled into standard (MNI) space. Automatic removal of motion artifacts using independent component analysis (ICA‐AROMA: Pruim, Mennes, Buitelaar, & Beckmann, 2015) was performed on the spatially smoothed, preprocessed BOLD runs, and corresponding “non‐aggressively” denoised runs were produced. A confounding time series of framewise displacement (FD) was calculated from the preprocessed BOLD data. Full details of the parameters and programs used are provided in the supplementary methods.
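Mean FD later enters the group models as a covariate. The sketch below computes FD using the common Power‐style definition: the sum of absolute frame‐to‐frame changes in the six realignment parameters, with rotations converted to millimeters on a 50 mm sphere. It is not fMRIPrep's code; the column order (three translations in mm, then three rotations in radians) and the toy motion values are assumptions.

```python
import numpy as np

def framewise_displacement(motion, radius_mm=50.0):
    """Power-style FD from a (T, 6) array of realignment parameters.

    Assumed column order: 3 translations (mm), then 3 rotations (radians).
    Rotations are converted to arc length on a sphere of `radius_mm`.
    The first volume has no predecessor, so its FD is NaN.
    """
    motion = np.asarray(motion, dtype=float)
    deltas = np.abs(np.diff(motion, axis=0))  # (T-1, 6) frame-to-frame changes
    deltas[:, 3:] *= radius_mm                # radians -> mm
    return np.concatenate([[np.nan], deltas.sum(axis=1)])

def mean_fd(motion):
    """Run-level summary usable as a nuisance covariate (skips the NaN first volume)."""
    return float(np.nanmean(framewise_displacement(motion)))

# Toy example: a 0.5 mm x-shift at volume 1, which returns to 0 at volume 2
# while a 0.01 rad rotation appears (0.01 rad x 50 mm = 0.5 mm).
motion = np.zeros((3, 6))
motion[1, 0] = 0.5
motion[2, 3] = 0.01
print(framewise_displacement(motion), mean_fd(motion))
```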

2.4.1. General linear model

A general linear model was fit using AFNI's 3dREMLfit program, with each of the six conditions of interest (joint positive, joint neutral, joint negative, solo positive, solo neutral, solo negative) and the 4‐s post‐video rating period (collapsed across conditions) included as regressors. These first‐level results were then entered into a group‐level mixed‐effects multilevel model using AFNI's 3dMVM. Planned analyses included a 2 × 2 model to examine main effects (joint vs. solo, emotion vs. neutral) and a social‐by‐emotion (i.e., positive + negative) interaction, as well as post‐tests to examine individual contrast differences for each condition. We also conducted an exploratory 2 × 3 ANOVA to examine main effect differences among the three emotion conditions and social‐by‐emotional‐valence interaction effects. The between‐subject covariates included in these models were age, mean FD, gender‐matched confederate, and counterbalanced group. To correct for multiple comparisons, we estimated the spatial autocorrelation of the noise with AFNI's autocorrelation function (“acf”) model and used cluster simulations to determine, for each test separately, the minimum cluster size needed to keep the probability of a noise‐only false‐positive cluster below α = 0.05. All significant results are presented at a voxel threshold of p < .001 (bi‐sided; NN = 1) with the following minimum cluster sizes from these simulations: GLM, mentalizing mask = 12 voxels, emotion mask = 9 voxels, reward mask = 15 voxels; ISC, mentalizing mask = 12 voxels, emotion mask = 10 voxels, reward mask = 16 voxels.
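A first‐level model of this kind can be sketched as boxcar regressors for each condition (plus the rating period), convolved with a hemodynamic response function and fit voxel by voxel. This is a simplified illustration under stated assumptions: it uses an SPM‐style canonical double‐gamma HRF (the basis function actually used is not stated here) and ordinary least squares, whereas 3dREMLfit additionally models serial correlation with an ARMA(1,1) noise model. Event timings and names are hypothetical.

```python
import math
import numpy as np

TR = 1.25  # seconds, from the acquisition parameters

def double_gamma_hrf(tr, length_s=32.0):
    """SPM-style canonical HRF (peak ~5 s, undershoot ~15 s), sampled every `tr` s."""
    t = np.arange(0, length_s, tr)
    peak = t**5 * np.exp(-t) / math.gamma(6)
    undershoot = t**15 * np.exp(-t) / math.gamma(16)
    hrf = peak - undershoot / 6.0
    return hrf / hrf.sum()

def design_matrix(events, n_vols, tr=TR):
    """events: dict name -> list of (onset_s, duration_s). Intercept is the last column."""
    hrf = double_gamma_hrf(tr)
    names = list(events)
    cols = []
    for name in names:
        box = np.zeros(n_vols)
        for onset, dur in events[name]:
            box[int(round(onset / tr)):int(round((onset + dur) / tr))] = 1.0
        cols.append(np.convolve(box, hrf)[:n_vols])  # predicted BOLD response
    cols.append(np.ones(n_vols))
    return np.column_stack(cols), names + ["intercept"]

def fit_ols(X, y):
    """Ordinary least squares betas (no autocorrelation model, unlike 3dREMLfit)."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return beta

# Hypothetical run: two video conditions and two 4 s rating periods.
events = {
    "joint_positive": [(10.0, 20.0)],
    "solo_negative": [(50.0, 30.0)],
    "rating": [(30.0, 4.0), (80.0, 4.0)],
}
X, names = design_matrix(events, n_vols=100)
true_beta = np.array([2.0, 0.5, 1.0, 3.0])
y = X @ true_beta  # noiseless synthetic voxel
beta = fit_ols(X, y)
print(dict(zip(names, np.round(beta, 3))))
```

With noiseless synthetic data the fitted betas recover the generating values; in real data, the condition betas would be carried to the group model.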

2.4.2. Intersubject correlation

After each run was preprocessed through the fMRIPrep pipeline, a general linear model was used to regress out the BOLD response to the rating period after each video. The residuals from this analysis were then separated by individual video, with the onset and offset shifted by six volumes to accommodate the hemodynamic lag. These video responses were then re‐concatenated into a consistent video order for every subject, separated by the six social × valence conditions (joint positive, joint neutral, joint negative, solo positive, solo neutral, solo negative). Because videos were counterbalanced across social conditions between subjects, the group‐average ISC was computed separately within each of the two counterbalancing groups, so that each comparison involved identical videos for each condition. Each subject's reordered time series was correlated voxel‐wise with the average time series of the remaining subjects in their group, and the resulting r values were converted to subject‐specific z maps.
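The leave‐one‐out procedure above can be sketched as follows. Array shapes and function names are hypothetical, and the Fisher r‐to‐z transform (arctanh) is assumed as the conversion to z. The six‐volume shift corresponds to 6 × 1.25 s = 7.5 s at the reported TR. The input is one counterbalancing group, already concatenated in a common video order.

```python
import numpy as np

SHIFT_VOLS = 6  # hemodynamic lag: 6 volumes x 1.25 s TR = 7.5 s

def extract_video(residuals, onset_vol, offset_vol, shift=SHIFT_VOLS):
    """Slice one video's response from a (T, V) residual array, shifted for HRF lag."""
    return residuals[onset_vol + shift: offset_vol + shift]

def zscore_time(x):
    """Z-score each voxel's time course (population SD)."""
    return (x - x.mean(axis=0)) / x.std(axis=0)

def loo_isc_z(group):
    """group: (S, T, V) array for one counterbalancing group, concatenated in the
    same video order for every subject. Returns (S, V) Fisher-z ISC maps."""
    n_subj = group.shape[0]
    total = group.sum(axis=0)
    z_maps = np.empty((n_subj, group.shape[2]))
    for i in range(n_subj):
        others = (total - group[i]) / (n_subj - 1)  # mean of the remaining subjects
        # The mean product of z-scored series equals Pearson r, voxel by voxel.
        r = (zscore_time(group[i]) * zscore_time(others)).mean(axis=0)
        z_maps[i] = np.arctanh(np.clip(r, -0.999999, 0.999999))
    return z_maps

# Hypothetical usage: two videos from one run, concatenated in a fixed order.
run = np.random.default_rng(0).standard_normal((200, 3))  # (T, V) residuals
subject_series = np.concatenate(
    [extract_video(run, 10, 26), extract_video(run, 40, 64)], axis=0
)
print(subject_series.shape)  # 16 + 24 volumes, 3 voxels
```

The group‐level models then take these subject‐specific z maps as input, separately for each social × valence condition.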

These subject‐level results were then entered into the same full multilevel model group analyses as the GLM to examine the (counterbalanced group‐independent) effects of social and emotional condition. These models included two within‐subject variables of interest (social condition and emotional valence), as well as a number of nuisance variables: two categorical between‐subject variables (group, confederate), and two quantitative between‐subject variables (age, mean FD). Correction for multiple comparisons was again conducted through calculating cluster size thresholds with AFNI's noise volume estimation and cluster simulation function.

We aimed to replicate these group‐average ISC results using a crossed random‐effects analysis of pairwise ISC values (Chen, Taylor, Shin, Reynolds, & Cox, 2017; Moraczewski, Chen, & Redcay, 2018; Moraczewski, Nketia, & Redcay, 2020). This approach accounts for the non‐independence introduced by each subject's data being represented N − 1 times in the model and has been shown to control the false positive rate (FPR) better than prior methods (Chen et al., 2017). However, it has previously been applied only to linear mixed‐effects models with simpler A–B contrasts, rather than to data with six conditions (a 2 × 3 design). Due to the complexity of our model, we instead used this framework to test the individual contrasts that were significant in the group‐average ISC approach, confirming their effects with this more conservative method. Previous studies using this method have reported a similar pattern of results, but at a more lenient threshold of p < .01 (Moraczewski et al., 2020). Similar to prior work, we found weaker effects in the same regions as the group‐average ISC results. These results are presented in the Supplementary Material.
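The non‐independence that motivates the crossed random‐effects model is visible in the structure of pairwise ISC data. The sketch below (hypothetical names, one voxel, synthetic data) computes ISC for all N(N − 1)/2 subject pairs and counts how often each subject appears; it does not fit the crossed random‐effects model itself.

```python
import itertools
import numpy as np

def pairwise_isc(data):
    """data: (S, T) time series for one voxel. Returns (pairs, r) for all S*(S-1)/2 pairs."""
    pairs = list(itertools.combinations(range(data.shape[0]), 2))
    r = np.array([np.corrcoef(data[i], data[j])[0, 1] for i, j in pairs])
    return pairs, r

def appearances(pairs, n_subj):
    """How many pairwise ISC values each subject contributes to."""
    counts = np.zeros(n_subj, dtype=int)
    for i, j in pairs:
        counts[i] += 1
        counts[j] += 1
    return counts

data = np.random.default_rng(2).standard_normal((5, 40))  # 5 synthetic subjects
pairs, r = pairwise_isc(data)
print(len(pairs), appearances(pairs, 5))
```

With five subjects there are ten pairwise values, and every subject contributes to four of them (N − 1), so the pairwise values cannot be treated as independent observations; the crossed random‐effects model assigns a random effect to each member of a pair to account for this.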

2.4.3. Networks of interest

To test whether effects were found within hypothesized brain networks, we generated and downloaded network association maps from Neurosynth (Yarkoni, Poldrack, Nichols, Van Essen, & Wager, 2012) using the search terms “mentalizing,” “emotion,” and “reward” (thresholded at Z = 4, or p < .0001, two‐tailed; Figure S2). We used the Neurosynth mentalizing map instead of our own theory of mind functional localizer (as described in the preregistration) to increase generalizability across populations and to ensure the maximum number of subjects were usable, as some did not have high‐quality localizer data. All 2 × 2 and 2 × 3 group‐level analyses as well as significant cluster‐size simulations were conducted within binarized masks generated from these three network maps. Some of these maps had regions that overlapped with each other, and therefore were used to constrain hypotheses rather than to make specific cognitive inferences about brain activity within one network compared to another. Future work could combine these masks into one, or could look more closely at the overlapping regions themselves.
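The Z = 4 threshold and the binarization step can be sketched as below. The helpers are hypothetical; in particular, keeping only positive associations (Z ≥ 4) is an assumption about how the masks were binarized. The p‐value check confirms that Z = 4 corresponds to a two‐tailed p of about 6.3 × 10⁻⁵, consistent with p < .0001.

```python
import math
import numpy as np

def two_tailed_p(z):
    """Two-tailed p-value for a standard-normal Z score: erfc(|z| / sqrt(2))."""
    return math.erfc(abs(z) / math.sqrt(2.0))

def binarize(z_map, z_thresh=4.0):
    """Binary network mask: 1 where the association Z meets the threshold.
    Assumes only positive associations are retained."""
    return (np.asarray(z_map) >= z_thresh).astype(np.uint8)

def overlap_fraction(mask_a, mask_b):
    """Share of mask_a voxels that also fall in mask_b (network masks can overlap)."""
    a, b = np.asarray(mask_a).astype(bool), np.asarray(mask_b).astype(bool)
    return float((a & b).sum() / a.sum())

print(two_tailed_p(4.0))  # about 6.3e-05, i.e., below .0001
print(binarize([3.9, 4.0, 5.2]))
```

The overlap helper illustrates why overlapping masks constrain hypotheses rather than license network‐specific inferences: a voxel inside two masks counts toward both.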

2.4.4. Significance testing

F‐test maps were thresholded at a voxel level of p < .001 and a cluster level of α < 0.05, and surviving clusters were considered significant for main effects and interactions within each network of interest. The social main effect was tested within the mentalizing network, the emotion main effect within the emotion and reward networks, and the interaction within all three networks. To probe interaction effects, post‐test contrasts were conducted for each social effect within emotion conditions (joint emotions > solo emotions; joint neutral > solo neutral; joint positive > solo positive; joint negative > solo negative) and for each emotion effect within social conditions (joint emotions > joint neutral; solo emotions > solo neutral; joint positive > joint neutral; joint negative > joint neutral; joint positive > joint negative; solo positive > solo neutral; solo negative > solo neutral; solo positive > solo negative). T‐test maps for these contrasts were thresholded in the same way (voxel p < .001, cluster α < 0.05). Contrast clusters that overlapped with interaction clusters were considered significant post‐tests of those interactions and are listed in the overlapping contrast column of the results tables.
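The two‐step thresholding and the overlap rule can be illustrated with a small sketch, assuming a 3D voxelwise p‐value map and face‐connected (6‐neighbor) clusters. The minimum cluster size `k_min = 20` is a made‐up placeholder: in the study this value would come from the cluster‐size simulations run within each network mask. All function names are hypothetical, and the connected‐component labeling is written out by hand only to keep the example dependency‐free.

```python
from collections import deque

import numpy as np

# Face-connected neighbourhood (6 neighbours in 3D); an assumed connectivity rule.
OFFSETS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]


def label_clusters(mask: np.ndarray) -> tuple[np.ndarray, int]:
    """Label face-connected clusters in a 3D boolean mask with a breadth-first search."""
    labels = np.zeros(mask.shape, dtype=int)
    n = 0
    for start in zip(*np.nonzero(mask)):
        if labels[start]:
            continue  # voxel already assigned to a cluster
        n += 1
        labels[start] = n
        queue = deque([start])
        while queue:
            x, y, z = queue.popleft()
            for dx, dy, dz in OFFSETS:
                nb = (x + dx, y + dy, z + dz)
                inside = all(0 <= c < s for c, s in zip(nb, mask.shape))
                if inside and mask[nb] and not labels[nb]:
                    labels[nb] = n
                    queue.append(nb)
    return labels, n


def cluster_threshold(p_map: np.ndarray, p_voxel: float = 0.001, k_min: int = 20) -> np.ndarray:
    """Keep voxels with p < p_voxel that belong to clusters of at least k_min voxels."""
    labels, n = label_clusters(p_map < p_voxel)
    keep = np.zeros(p_map.shape, dtype=bool)
    for lab in range(1, n + 1):
        cluster = labels == lab
        if cluster.sum() >= k_min:
            keep |= cluster
    return keep


def overlaps(contrast_mask: np.ndarray, interaction_mask: np.ndarray) -> bool:
    """True if a post-test contrast cluster shares any voxel with an interaction cluster."""
    return bool(np.any(contrast_mask & interaction_mask))


p_map = np.ones((12, 12, 12))
p_map[2:5, 2:5, 2:5] = 1e-5  # 27-voxel cluster
p_map[9, 9, 9:11] = 1e-5     # 2-voxel cluster, too small to survive
sig = cluster_threshold(p_map, k_min=20)
print(int(sig.sum()))  # 27

contrast = np.zeros_like(sig)
contrast[4:7, 4:7, 4:7] = True
print(overlaps(contrast, sig))  # True
```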

3. RESULTS

3.1. Affect and togetherness ratings

Affect ratings. As expected, participants reported feeling more negative while watching negative videos and more positive while watching positive videos (F[2,51] = 472.8, p < .0001; Figure 1a). These results are consistent with a recent meta‐analysis showing that movie clips are effective ways to induce positive and negative emotional states (Fernández‐Aguilar et al., 2019). Contrary to our hypothesis, although consistent with some work (e.g., Fridlund, 1991; Hess et al., 1995; Jolly et al., 2019), participants did not report feeling more emotional during the joint condition compared to solo (F[1,51] = 0.005, p = .95).

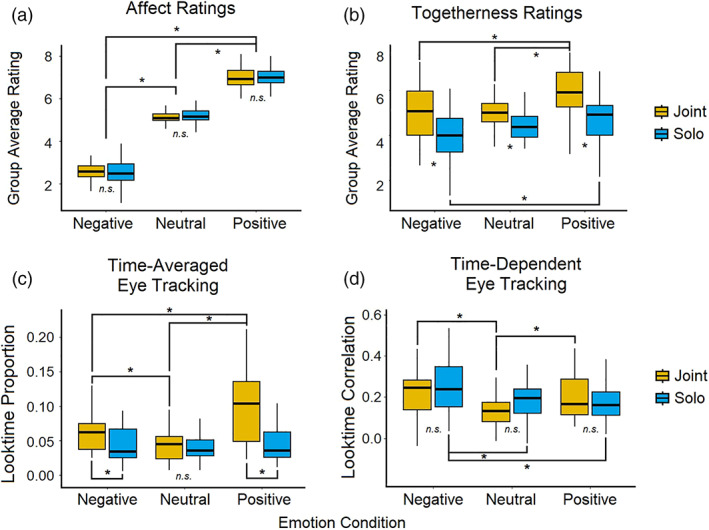

FIGURE 1.

Behavioral results. (a) Group average (N = 52) in‐scanner ratings of how emotional participants felt after watching each video on a scale of 0 (very negative) to 9 (very positive). (b) Group average (N = 38) in‐scanner ratings of how alone or together participants felt with their partner after watching each video on a scale of 0 (very alone) to 9 (very together). Fewer subjects are included in these data because the togetherness question was added after 14 scans had already been completed. (c) Group average (N = 27) of the proportion of time spent looking at the partner video feed compared to the main video feed. (d) Group average (N = 27) of the correlation between each individual's and the group's (N – subject) time spent looking at the confederate across the duration of each condition

Togetherness ratings. As expected, there was an effect of social condition, with participants reporting feeling more together with their partner during the joint condition than during the solo condition (F[1,37] = 21.2, p < .0001; Figure 1b). There was also a main effect of emotion (F[2, 37] = 18.3, p < .0001) and a social‐emotion interaction (F[1,37] = 3.61, p < .05) in togetherness ratings. Participants felt less alone during positive videos than during either negative or neutral videos; feelings of togetherness were strongest in the positive joint condition, and feelings of aloneness were strongest in the negative solo condition.

3.2. Eye tracking

We also examined eye‐tracking data collected during the scan. Twenty‐seven of the 52 fMRI subjects had usable data for analysis. To distinguish individual visual attention behaviors from synchronous visual attention behaviors, we examined the data in two ways: time‐averaged and time‐dependent. This also allows a more direct comparison between these behavioral data and the fMRI results. Time‐averaged visual attention (analogous to the GLM fMRI method) was calculated as the proportion of time spent looking at the partner feed relative to the main video across each entire condition. Time‐dependent visual attention, in contrast, is analogous to the ISC fMRI method. Each subject's time spent looking at the partner video (sampled in 1,250‐ms bins, equivalent to 1 TR) was concatenated across all videos within each condition. We then calculated the correlation between each subject and the group average excluding that subject (leave‐one‐out) and entered these correlations into a full group model.
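Both eye‐tracking measures can be sketched on per‐TR looking times, assuming each subject's gaze has already been binned into seconds spent on the partner feed per 1.25‐s TR. Two further assumptions are labeled in the code: that all remaining time in a TR is spent on the main video, and that "proportion" means partner time divided by partner plus main time. The function names and simulated data are hypothetical.

```python
import numpy as np

TR = 1.25  # seconds; one eye-tracking bin per TR, as described in the text


def proportion_partner(partner_time: np.ndarray, main_time: np.ndarray) -> float:
    """Time-averaged measure: share of looking time directed at the partner feed.

    Assumes proportion = partner / (partner + main); the exact ratio is an assumption.
    """
    return float(partner_time.sum() / (partner_time.sum() + main_time.sum()))


def leave_one_out_corr(series: np.ndarray) -> np.ndarray:
    """Time-dependent measure: correlate each subject with the mean of all other subjects.

    series: shape (n_subjects, n_TRs), with videos already concatenated within a condition.
    """
    n = series.shape[0]
    total = series.sum(axis=0)
    out = np.empty(n)
    for s in range(n):
        others = (total - series[s]) / (n - 1)  # group average without subject s
        out[s] = np.corrcoef(series[s], others)[0, 1]
    return out


rng = np.random.default_rng(2)
# Two toy videos of 40 TRs each for 5 subjects: seconds per TR spent on the partner feed.
videos = [rng.uniform(0, TR, size=(5, 40)) for _ in range(2)]
series = np.concatenate(videos, axis=1)  # concatenate videos within one condition
main = TR - series  # assumption: the rest of each TR is spent on the main video

print(series.shape)  # (5, 80)
print(proportion_partner(series[0], main[0]))
print(leave_one_out_corr(series))
```

The per‐subject correlations returned by `leave_one_out_corr` are the kind of values that would then be entered into a group model, one per subject and condition.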

Time‐averaged. Overall, people looked more at their partner during the joint condition than during the solo condition (F[1,25] = 22.12, p < .001; Figure 1c), and there was an emotion‐by‐social interaction such that emotional conditions showed a greater proportion of partner looking than neutral (F[1,25] = 11.8, p < .001). Post‐tests revealed more partner looking during joint positive than joint negative (t = 3.4, p < .001) and during joint positive than solo positive (t = 5.687, p < .0001), indicating a bias to look toward one's partner when positive emotional content is shared.

Time‐dependent. In contrast, there was no social effect in the time‐dependent results, but there was a significant main effect of emotion (F[1,25] = 5.1, p < .05; Figure 1d). Follow‐up t‐tests showed that emotional videos elicited more consistency in when participants looked at their partner than neutral videos did (t = 3.7, p = .001). This effect was driven particularly by negative videos, which elicited more consistency than either neutral (t = 4.15, p < .001) or positive videos (t = 2.24, p < .05).

Taken together, the time‐averaged and time‐dependent eye‐tracking results suggest that emotional valence is an important factor in social sharing: people may more often seek out or orient toward a social partner during positive emotional moments, but there is little consistency in when this happens during the content. In contrast, negative videos seem to elicit more content‐dependent gaze shifts between videos, as no social effects were found in the consistency‐over‐time measure. Post‐tests showed that solo negative videos elicited more consistency than either neutral or positive videos, whereas both joint emotional conditions (positive and negative) exceeded joint neutral and did not differ significantly from each other.

3.3. Functional MRI

We conducted several multivariate model analyses to test both confirmatory and exploratory questions. Confirmatory analyses were done through 2 × 2 group‐level tests within hypothesized brain networks to examine the main effects of social context, valence‐collapsed emotion (vs. neutral) content, and the interaction between these. We further explored whether there were differential interactions due to valence by conducting 2 × 3 tests (emotion conditions = positive, neutral, and negative). All tests were run on both time‐averaged (GLM) and time‐dependent (ISC) data.
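For intuition about the confirmatory 2 × 2 tests, note that in a fully within‐subject 2 × 2 design each one‐degree‐of‐freedom effect reduces to a per‐subject contrast. For the social × emotion interaction, that contrast is the difference of differences d = (joint emotion − solo emotion) − (joint neutral − solo neutral), and the repeated‐measures F(1, N − 1) equals the square of a one‐sample t on d. The sketch below checks this identity on simulated per‐subject values. It is a didactic illustration with hypothetical names and made‐up effect sizes, not the voxelwise software used in the study, and it does not cover the two‐degree‐of‐freedom effects of the 2 × 3 model.

```python
import numpy as np


def interaction_contrast(je, se, jn, sn):
    """Per-subject difference of differences: (joint - solo) for emotion minus (joint - solo) for neutral."""
    return (je - se) - (jn - sn)


def one_sample_t(d: np.ndarray) -> float:
    """One-sample t statistic of d against zero (df = N - 1)."""
    return float(d.mean() / (d.std(ddof=1) / np.sqrt(d.size)))


def rm_anova_interaction_F(je, se, jn, sn) -> float:
    """Interaction F(1, N-1) from classic 2x2 repeated-measures ANOVA sums of squares."""
    y = np.empty((je.size, 2, 2))  # axes: subject, social (joint, solo), emotion (emotion, neutral)
    y[:, 0, 0], y[:, 1, 0], y[:, 0, 1], y[:, 1, 1] = je, se, jn, sn
    n = y.shape[0]
    m_ab = y.mean(axis=0)         # cell means, shape (2, 2)
    m_a = y.mean(axis=(0, 2))     # social marginal means, shape (2,)
    m_b = y.mean(axis=(0, 1))     # emotion marginal means, shape (2,)
    g = y.mean()                  # grand mean
    ss_ab = n * np.sum((m_ab - m_a[:, None] - m_b[None, :] + g) ** 2)
    y_ia = y.mean(axis=2)         # subject x social means, shape (n, 2)
    y_ib = y.mean(axis=1)         # subject x emotion means, shape (n, 2)
    y_i = y.mean(axis=(1, 2))     # subject means, shape (n,)
    # Subject x social x emotion residual: the error term for the interaction.
    resid = (y - y_ia[:, :, None] - y_ib[:, None, :] - m_ab[None]
             + y_i[:, None, None] + m_a[None, :, None] + m_b[None, None, :] - g)
    ss_err = np.sum(resid ** 2)
    return float(ss_ab / (ss_err / (n - 1)))


rng = np.random.default_rng(3)
n = 52
je, se, jn, sn = (rng.normal(loc, 1.0, n) for loc in (0.6, 0.2, 0.3, 0.25))
t = one_sample_t(interaction_contrast(je, se, jn, sn))
F = rm_anova_interaction_F(je, se, jn, sn)
print(np.isclose(F, t ** 2))  # True
```

Because the error term removes each subject's overall level, adding a subject‐specific constant to all four conditions leaves the interaction F unchanged.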

3.3.1. Time‐averaged

To test whether time‐averaged effects were present in the hypothesized mentalizing, emotion, and reward brain networks, we used three separate Neurosynth‐generated masks to restrict our analyses.

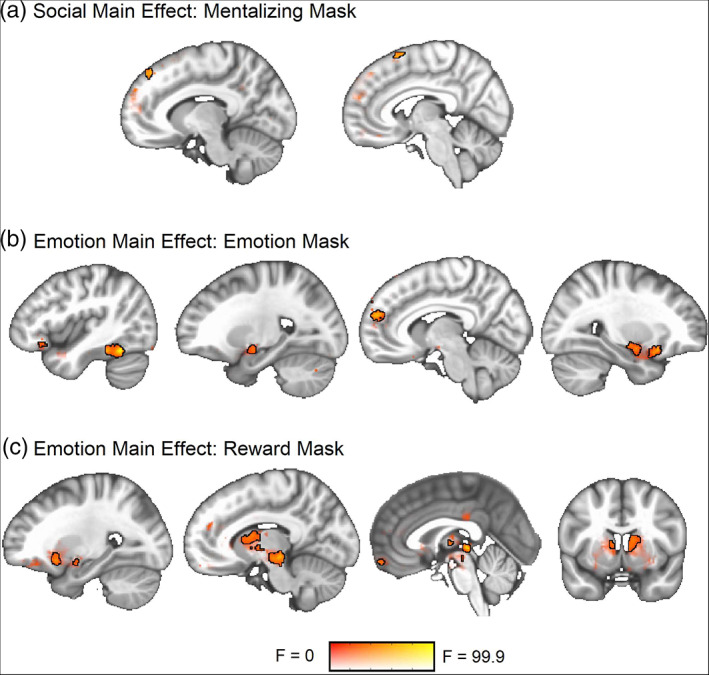

Social. As expected, the GLM analysis revealed a main effect of social condition (joint > solo) in the mentalizing network, specifically the dorsomedial prefrontal cortex (dmPFC) (Figure 2a; Table 1).

FIGURE 2.

ANOVA main effect result maps from the time‐averaged (GLM) data. Significant clusters are outlined in black at a voxel threshold of p < .001 (cluster α = 0.05). Sub‐threshold effects (i.e., F > 0, p > .001) are displayed with transparent fade and not outlined. (a) Social main effect within the mentalizing network. (b) Emotion main effect within the emotion network. (c) Emotion main effect within the reward network

TABLE 1.

Time‐averaged social main effect

| Region | X | Y | Z | Cluster size | Peak F | Contrast | t‐value |
|---|---|---|---|---|---|---|---|
| L dorsomedial prefrontal cortex | −10 | 42 | 48 | 12 | 23.47 | Joint > solo | 4.84 |
| R superior frontal gyrus | 6 | 16 | 64 | 21 | 21.19 | Joint > solo | 4.33 |

Emotion. A main effect of emotion content (emotions > neutral) was observed in both the emotion and reward networks (Figure 2b,c; Table 2). There was a large overlap in main effect activity patterns between the valence‐collapsed 2 × 2 (emotions, neutral) and valence‐separated 2 × 3 (positive, neutral, negative) models (see Table S1 for 2 × 3 results maps).

TABLE 2.

Time‐averaged emotion main effect (2 × 2)

| Mask | Region | X | Y | Z | Cluster size | Peak F | Contrast | t‐value |
|---|---|---|---|---|---|---|---|---|
| Emotion | R amygdala | 22 | −6 | −10 | 186 | 41.03 | Emotion > neutral | 5.93 |
| | R hippocampus | 28 | 18 | −14 | 162 | 47.54 | Emotion > neutral | 6.9 |
| | L temporal fusiform cortex | −42 | −54 | −18 | 116 | 70.59 | Emotion > neutral | 8.4 |
| | L amygdala | −20 | −8 | −12 | 93 | 35.11 | Emotion > neutral | 5.93 |
| | Brain stem | 4 | −30 | −2 | 68 | 58.24 | Emotion > neutral | 7.63 |
| | R medial prefrontal cortex | 8 | 56 | 20 | 62 | 45.09 | Emotion > neutral | 6.72 |
| | R medial prefrontal cortex | 4 | 56 | 34 | 49 | 40.5 | Emotion > neutral | 6.36 |
| | L temporal pole | −36 | 14 | −26 | 17 | 28.65 | Emotion > neutral | 5.35 |
| | L ventrolateral prefrontal cortex | −34 | 24 | −16 | 13 | 22.35 | Emotion > neutral | 4.73 |
| | L ventrolateral prefrontal cortex | −42 | 26 | −12 | 12 | 19.09 | Emotion > neutral | 4.37 |
| | L medial prefrontal cortex | −8 | 52 | 18 | 10 | 20.29 | Emotion > neutral | 4.5 |
| | R ventrolateral prefrontal cortex | 54 | 32 | −8 | 9 | 40.06 | Emotion > neutral | 6.33 |
| | R medial prefrontal cortex | 18 | 58 | 18 | 9 | 17.45 | Emotion > neutral | 4.18 |
| Reward | Brain stem | 4 | −30 | −2 | 601 | 58.2 | Emotion > neutral | 7.63 |
| | R thalamus | 8 | −2 | 8 | 175 | 28.11 | Emotion > neutral | 5.3 |
| | L ventrolateral prefrontal cortex | −26 | 14 | −12 | 137 | 31.29 | Emotion > neutral | 5.59 |
| | L caudate | −8 | 4 | 12 | 86 | 30.24 | Emotion > neutral | 5.5 |
| | L amygdala | −20 | −4 | −12 | 30 | 23.97 | Emotion > neutral | 4.9 |
| | Ventromedial prefrontal cortex | −2 | 58 | −18 | 27 | 31.78 | Emotion > neutral | 5.6 |

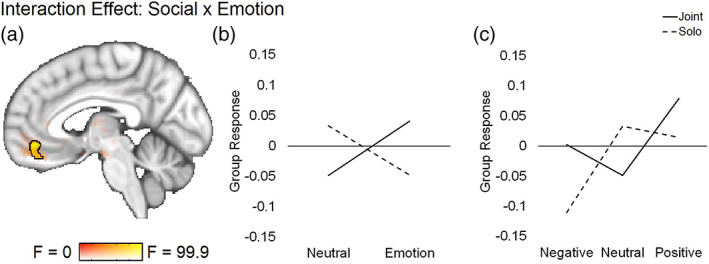

Interaction. An interaction between social and emotion conditions was observed in a ventromedial prefrontal cortex cluster that was present in both the mentalizing and reward network masks (Figure 3a; Table 3). This region was significant in both the valence‐collapsed and valence‐separated models (Table 3). Overlapping post‐test contrasts showed that the valence‐collapsed cluster was more responsive to joint emotion than to solo emotion or joint neutral videos, indicating a sensitivity to the social sharing of emotions in particular (Figure 3b; Table 3). The valence‐separated model indicated that this joint vs. solo emotion effect was driven particularly by negative emotions, as the region was more responsive to joint negative than solo negative videos only. This model also indicated greater responsivity to both solo positive and solo neutral videos than to solo negative videos, showing a sensitivity to differential emotions even in the absence of social sharing (Figure 3c; Table 3).

FIGURE 3.

ANOVA social‐emotion interaction result maps from the time‐averaged (GLM) data. Significant cluster is outlined in black at a voxel threshold of p < .001 (cluster α = 0.05). Sub‐threshold effects (i.e., F > 0, p > .001) are displayed with transparent fade and not outlined. Line graphs plot extracted values from each relevant condition to illustrate direction of significant effects. (a) The same vmPFC region located within both mentalizing and reward masks was found to be significant in the 2 × 2 and 2 × 3 models, although the cluster size and peak voxel location differed by model (2 × 2 reward mask results shown; Table 3 shows all cluster information). (b) Extracted values from the 2 × 2 (emotion, neutral) model in the reward mask. (c) Extracted values from the 2 × 3 (positive, neutral, negative) model in the reward mask

TABLE 3.

Time‐averaged interaction effects

| Mask | Region | X | Y | Z | Cluster size | Peak F | Overlapping contrast | t‐value |
|---|---|---|---|---|---|---|---|---|
| Interaction: 2 × 2 | | | | | | | | |
| Reward | Ventromedial prefrontal cortex | −4 | 46 | −10 | 57 | 22.81 | Emotions: Joint > solo | 4.53 |
| | | | | | | | Joint: Emotion > neutral | 3.63 |
| Mentalizing | Ventromedial prefrontal cortex | −2 | 44 | −12 | 15 | 15.26 | Emotions: Joint > solo | 4.48 |
| Interaction: 2 × 3 | | | | | | | | |
| Reward | Ventromedial prefrontal cortex | −4 | 46 | −10 | 48 | 15.23 | Negative: Joint > solo | 4.86 |
| | | | | | | | Solo: Neutral > negative | 4.53 |
| | | | | | | | Solo: Positive > negative | 4.89 |
| Mentalizing | Ventromedial prefrontal cortex | −2 | 44 | −12 | 17 | 9.8 | | |

3.3.2. Time‐dependent

To test whether time‐dependent effects were present in the hypothesized mentalizing, emotion, and reward brain networks, we used the same masks to restrict our ISC analyses.

Social. The time‐dependent (ISC) analysis revealed a main effect of social condition (joint > solo) in the dmPFC (Figure 4a; Table 4).

FIGURE 4.

ANOVA main effect result maps from the time‐dependent (ISC) data. Significant clusters are outlined in black at a voxel threshold of p < .001 (cluster α = 0.05). Sub‐threshold effects (i.e., F > 0, p > .001) are displayed with transparent fade and not outlined. (a) Main social effect within the mentalizing network. (b) Main 2 × 3 emotion (positive, neutral, negative) effect within the emotion network. (c) Main 2 × 3 emotion (positive, neutral, negative) effect within the reward network

TABLE 4.

Time‐dependent social main effect

| Region | X | Y | Z | Cluster size | Peak F | Contrast | t‐value |
|---|---|---|---|---|---|---|---|
| Medial prefrontal cortex | −6 | 56 | 22 | 85 | 22.31 | Joint > solo | 4.83 |

Emotion. The valence‐collapsed model did not show any significant main effect of emotion within either the emotion or reward networks. However, small clusters were observed in the valence‐separated model in both of these networks (Figure 4b,c; Table 5), indicating that positive and negative videos are tracked differently over time within these networks, even though the time‐averaged results show that similar regions process both positive and negative emotions overall.

TABLE 5.

Time‐dependent emotion main effect (2 × 3)

| Mask | Region | X | Y | Z | Cluster size | Peak F | Overlapping contrast | t‐value |
|---|---|---|---|---|---|---|---|---|
| Emotion | Brain stem | 2 | −30 | 2 | 26 | 15.83 | Negative > neutral | 5.83 |
| | Medial prefrontal cortex | 20 | −10 | −12 | 10 | 12.05 | | |
| Reward | L putamen | −14 | 2 | −8 | 36 | 17.28 | Neutral > positive | 3.99 |
| | | | | | | | Negative > positive | 5.54 |
| | R caudate | 18 | 22 | −6 | 23 | 12.26 | Negative > positive | 4.09 |
| | Brain stem | 6 | −26 | 4 | 18 | 15.1 | Negative > neutral | 3.79 |
| | | | | | | | Negative > positive | 5.74 |

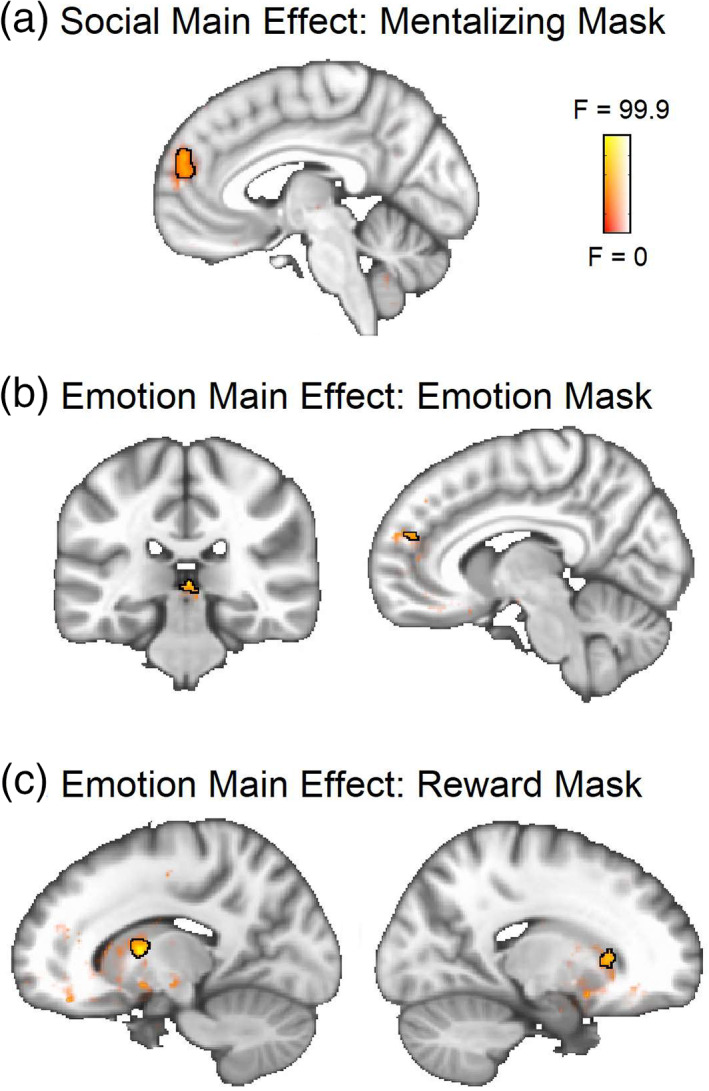

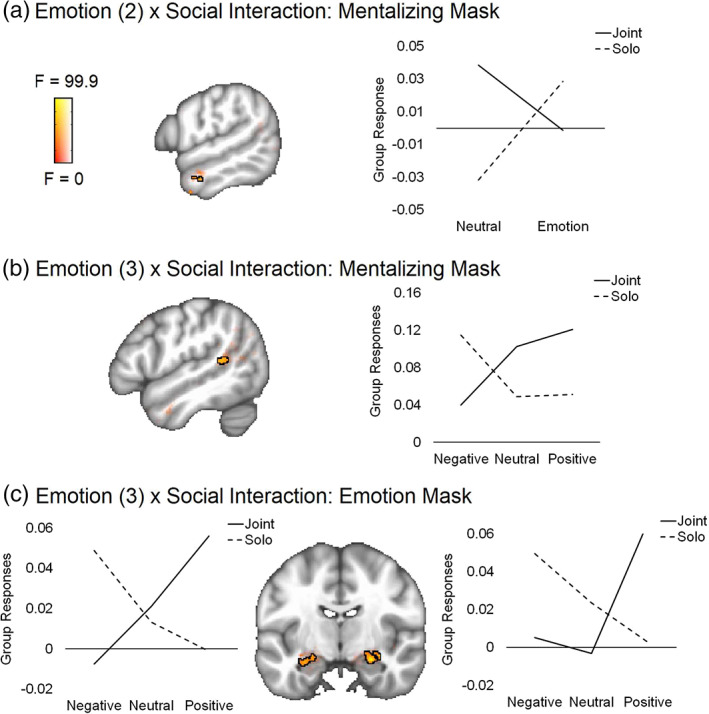

Interaction. Social‐emotion interaction clusters were found in the mentalizing network in both models (left anterior temporal lobe [ATL]: Figure 5a; left STS: Figure 5b; Table 6) and in the emotion network in the valence‐separated model only (bilateral amygdalae: Figure 5c; Table 6). Overlapping post‐test contrasts in the bilateral amygdalae showed greater synchrony for joint positive than solo positive events, and a trend (p < .005) toward the opposite pattern for negative events, with solo greater than joint. The individual contrasts also showed greater synchrony for joint positive than joint negative events. Post‐tests in the left ATL indicated that the interaction in this region was driven by the neutral condition (joint > solo), and post‐tests in the left STS indicated that the interaction in this region was driven by negative events (negative > neutral in the solo condition only).

FIGURE 5.

ANOVA social‐emotion interaction result maps from the time‐dependent (ISC) data. All group tests were conducted within three separate masks generated from Neurosynth and reflecting different brain networks: mentalizing, emotion, and reward. Significant clusters are outlined in black at a voxel threshold of p < .001 (cluster α = 0.05). Sub‐threshold effects (i.e., F > 0, p > .001) are displayed with transparent fade and not outlined. Line graphs plot extracted values from each relevant condition to illustrate direction of significant effects. (a) Interaction from the 2 × 2 valence‐collapsed (emotion, neutral) model found in the mentalizing network. (b) Interaction from the 2 × 3 valence‐separated (positive, neutral, negative) model found in the mentalizing network. (c) Interaction from the 2 × 3 valence‐separated model found in the reward network

TABLE 6.

Time‐dependent interaction effects

| Region | X | Y | Z | Cluster size | Peak F | Overlapping contrast | t‐value |
|---|---|---|---|---|---|---|---|
| Interaction: 2 × 2 | | | | | | | |
| L anterior temporal lobe | −56 | −6 | −24 | 15 | 26.67 | Neutral: Joint > solo | 4.54 |
| Interaction: 2 × 3 | | | | | | | |
| L posterior superior temporal sulcus | −50 | −44 | 12 | 20 | 12.8 | Solo: Negative > neutral | 3.63 |
| R amygdala | 26 | −10 | −16 | 60 | 21.41 | Positive: Joint > solo | 3.92 |
| | | | | | | Joint: Positive > neutral | 3.89 |
| | | | | | | Joint: Positive > negative | 4.84 |
| L amygdala | −28 | −10 | −18 | 41 | 13.72 | Positive: Joint > solo | 3.07 |
| | | | | | | Joint: Positive > negative | 4.51 |

3.3.3. Exploratory analyses

Additional analyses were conducted to confirm our results and to generate hypotheses for further study. We examined whether brain regions outside the three hypothesized networks supported dynamic social‐emotion processing using whole‐brain (non‐mask‐restricted) analyses. Many of the regions found for the main effects were the same as in the network‐restricted analyses, although the main emotion effect in the time‐dependent analysis engaged a much broader extent of the cortex (Figure S7B–C). There were no additional social main effects in the time‐averaged analysis, although a cluster in the cerebellum was observed in the time‐dependent analysis (Figure S7A). Both the time‐averaged and time‐dependent analyses also yielded several additional significant interaction clusters in visual and fronto‐parietal regions (Figures S6 and S8), suggesting differential effects of attention and eye gaze. Within these clusters, neural synchrony was increased for solo emotional content and shared neutral content, indicating that attentional shifts are more similar across participants when stimuli have a single salient dimension (either emotional content or social context) rather than both or neither. Full details are included in the Supplementary Text.

4. DISCUSSION

This study examined the neural and behavioral effects of social and emotional context through naturalistic viewing of emotional videos both with a partner and alone. We found evidence that the medial prefrontal cortex (mPFC) supports social sharing through both sustained and temporally fluctuating activity. We also found that the ventromedial prefrontal cortex (vmPFC) is sensitive only to sustained states of shared attention, whereas the amygdala and areas of the left superior temporal sulcus track responses across the duration of the shared emotional events. Within these time‐dependent results, we observed a valence dissociation between positive content with a partner and negative content when alone, indicating that the brain takes social context into account when viewing different types of emotion‐eliciting events, and that the salience of these events may be affected by the presence or absence of another person.

4.1. Social sharing

We found converging evidence from two analysis methods that clusters within the mPFC are engaged in social event sharing, irrespective of the emotional content of the experience, on both a time‐averaged scale (i.e., sustained responses over the duration of video events) and a time‐dependent scale (i.e., allowing for dynamic shifts during the video events). The mPFC has been found to be involved in responding to joint attention without interaction (i.e., recorded gaze cues) (Williams, Waiter, Perra, Perrett, & Whiten, 2005) as well as in both initiating and responding to joint attention cues from a computer avatar (Caruana, McArthur, Woolgar, & Brock, 2015; Schilbach et al., 2010) and a live partner (Redcay, Kleiner, & Saxe, 2012). The mPFC activity found in this study could be linked to similar gaze‐directed behavior, as participants also looked toward the video of their partner more on average during shared events. Although studies have associated the mPFC with both initiating and responding to joint attention (Redcay et al., 2012; Schilbach et al., 2010), it has been suggested to be a particularly important part of the goal‐directed attention system (in contrast to the orienting and perceptual attention system), and therefore to support related higher‐order processes such as the intent to communicate with a partner (Mundy & Newell, 2007; Pfeiffer et al., 2014; Redcay et al., 2012). In our study the partners were not looking back (and were not expected to), so the effect was not directly interactive, but it may still indicate a desire to share with a partner prior to interaction. However, partner referencing is not sufficient to explain all mPFC activity, in particular the time‐dependent social effect, as we did not observe corresponding time‐dependent social effects in the eye‐tracking data. This suggests that certain events in the videos elicited partner‐related processing without causing gaze shifts.
The mPFC has been suggested to play a role in person‐specific mentalizing as well as in self‐referential and introspective thought more generally (Schilbach et al., 2012; Welborn & Lieberman, 2014), and therefore likely supports making connections between these processes as well as reasoning about triadic associations (e.g., me, you, and other) (Saxe, 2006). The main joint vs. solo effect found here shows that this region responds to shared attention events even in the absence of passive or interactive cues, suggesting that its activity is driven by the self‐generated phenomenon of a shared experience rather than by any external social input from a partner.

4.2. Interactions between shared events and emotions

4.2.1. Time‐averaged social‐emotion interactions in vmPFC

We also observed that the medial and dorsomedial areas of the PFC that were engaged in social sharing overall are not sensitive to interactions with emotion; instead, it is the ventromedial region of the PFC that responds differently to these conditions. In particular, the vmPFC shows a greater response for social–emotional events compared to non‐social, non‐emotional (i.e., neutral alone condition) events. We also observed that it is sensitive to emotional differences within social conditions. This region was identified within both mentalizing and reward network analyses, which is consistent with its placement in an extended socio‐affective‐default network characterized through conjunction and connectivity meta‐analyses (Amft et al., 2015; Schilbach et al., 2012). As it is densely connected to the ventral striatum, it is thought to support processes of reward‐based learning and feedback in both social and non‐social contexts (Bzdok et al., 2013; Daniel & Pollman, 2014; Diekhof, Kaps, Falkai, & Gruber, 2012), as well as motivation and cognitive modulation of affect (Amft et al., 2015). The time‐averaged results presented here converge with several decades of research showing a dissociation between dorsomedial and ventromedial areas of the PFC along these lines, with ventral regions showing greater responses to emotions (Amodio & Frith, 2006; Bzdok et al., 2013; Olsson & Ochsner, 2008; Van Overwalle, 2009). However, we found that the vmPFC does not dynamically track these different conditions, as this region did not show a significant interaction in the ISC results. The vmPFC is theorized to represent information related to incoming stimuli, but it is not directly activated by such exogenous information, and thus may not be temporally synced across participants (Chang et al., 2021; Roy, Shohamy, & Wager, 2012). 
In line with this, the vmPFC has been shown to have greater variability across subjects than other regions (Bhandari, Gagne, & Badre, 2018; Gordon et al., 2016; Mueller et al., 2013). Our finding is also consistent with prior work associating this region with periods of self‐generated thought (Konu et al., 2020), which are more heterogeneous across people and therefore unlikely to produce synchronous fluctuations in neural activity across participants. Our results support the idea that this region is influenced by both social and emotional contexts, but that these influences are temporally idiosyncratic across individuals.

4.2.2. Time‐dependent social‐emotion interactions in amygdala and left temporal lobe

In contrast, we found a time‐dependent interaction in the bilateral amygdala, as well as smaller clusters in the left temporal lobe (pSTS and anterior temporal lobe). The amygdala in particular showed more synchronous activity to joint positive than to joint neutral, joint negative, and solo positive events, as well as a trend toward the opposite effect for negative events (solo negative > joint negative). The amygdala has been demonstrated to be sensitive to personally relevant stimuli, including both social and emotional content (Adolphs, 2009; Phelps & LeDoux, 2005). These interaction results suggest that negative events may be less salient when shared (or else are unaffected by social context), whereas positive events are more salient when shared with a social partner. This is consistent with the social buffering hypothesis, which suggests that social presence may mitigate the response to negative events (Kikusui et al., 2006; Kiyokawa & Hennessy, 2018). On the other hand, the greater salience of shared positive events could be a specific driver of social behavior, as detailed in the following section.

4.3. Positive emotion content increases social affiliation

Indeed, our behavioral data in the form of eye tracking and togetherness ratings also indicate a particular relevance of positive content to social feelings during shared events. We observed relatively greater reports of togetherness with a partner during the positive condition compared to neutral or negative, and participants also spent a proportionally greater amount of time looking at their partner during the positive condition compared to neutral and negative. Even in the absence of the social partner, positive videos increased ratings of togetherness, which suggests that the experience of being alone is less salient during these times, in contrast to neutral trials (which may be boring) or negative trials (which may be upsetting). Within the positive condition itself, we observed greater increases in look time and togetherness during the joint compared to solo trials, even though these conditions contained identical videos counterbalanced across participants. This shows that our results cannot simply be explained by video content differences between positive and neutral or negative conditions, and suggests that positive content does not just yield the absence of feelings of aloneness, but may induce a drive or desire to share positive events with others. This fits with behavioral co‐viewing studies that show facilitation or partner convergence of socially communicative displays (e.g., smiling) during positive events that are not necessarily accompanied by an increase in positive emotional feelings (Fridlund, 1991; Golland et al., 2019), but which are associated with increased social motivation and partner bonding (Jolly et al., 2019; Pearce, Launay, & Dunbar, 2015; Tarr, Launay, & Dunbar, 2015; Wolf & Tomasello, 2020). Evidence also links positive emotions to greater well‐being (Fredrickson & Joiner, 2002) and suggests that sharing positive experiences with others leads to greater resilience (Arewasikporn, Sturgeon, & Zautra, 2019).
This combination of positive emotions and sociality may therefore be a mechanism for forming and maintaining social bonds, which is beneficial on an individual as well as a dyadic relational level.

4.4. Limitations and future directions

This study had several limitations that leave room for future investigation. Our use of lab confederates as partners allowed us to examine social effects on the neural responses of individual participants, but did not allow for direct examination of dyadic measures among pairs. Hyperscanning methods that collect neural or behavioral data from both participants can help to elucidate interpersonal dynamics such as interaction responses or partner‐specific synchrony during shared events (Babiloni & Astolfi, 2014; Golland et al., 2019). Such methods could determine, for example, whether the degree of dyadic neural synchrony in response to shared emotional events is associated with feelings of togetherness or social motivation. Additionally, other individual differences could be examined for potential links to neural responses, such as self‐reports of how close participants felt to their partner or how willing they would be to continue a friendship. A further limitation is that the emotional valence of the videos was defined by block, rather than dynamically throughout the videos. Examining brain responses during peak moments of emotional intensity, for instance by using real‐time subject‐reported emotional responses (e.g., Golland et al., 2017; Nummenmaa et al., 2012), could lead to more robust effects. Finally, the eye‐tracking data were difficult to collect in the scanner, which resulted in a smaller subset of participants with usable data, so direct brain–eye‐tracking comparisons were not possible. Future investigations of gaze behavior related to shared attention could implement newer calibration methods to ensure that high‐quality eye movement data are collected.

In conclusion, this study examined sustained and dynamic neural processing of naturalistic social–emotional scenes with a partner and alone. We showed context‐dependent differences between positive and negative content that were accompanied by differential attentional allocation and feelings of sociality. We observed greater feelings of togetherness during positive shared videos, and correspondingly higher neural synchrony in the amygdala, whereas synchrony was lower in negative shared conditions. This indicates that emotional content of differing valence is processed differently in salience regions depending on social context; in particular, there is a coupling of positive and shared events alongside increased social feelings, consistent with the literature on social facilitation of positive events. In contrast, shared negative videos yielded less synchrony in this region, suggesting that these events may be less salient when shared, consistent with literature on social buffering of negative events. We also observed that the dmPFC is engaged during shared events independent of emotional content, with different clusters showing sustained engagement and dynamic synchrony across people. The vmPFC shows sustained increases in neural response due to both social and emotional content, but activity in this region does not fluctuate synchronously across the group. These results help shed light on the complex interactions between social and emotional events that arise both neurally and behaviorally in realistic social situations.

CONFLICT OF INTEREST

The authors declare that they have no competing interests, financial or otherwise.

Supporting information

Appendix S1: Supplementary Information

ACKNOWLEDGMENTS

Research reported in this publication was supported by the National Institute of Mental Health of the National Institutes of Health under Award Number R01MH112517 (awarded to ER). The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

Dziura, S. L., Merchant, J. S., Alkire, D., Rashid, A., Shariq, D., Moraczewski, D., & Redcay, E. (2021). Effects of social and emotional context on neural activation and synchrony during movie viewing. Human Brain Mapping, 42(18), 6053–6069. 10.1002/hbm.25669

Funding information: National Institute of Mental Health, Grant/Award Number: R01MH112517

DATA AVAILABILITY STATEMENT

The data from consenting participants that support the findings of this study have been uploaded to the National Institute of Mental Health Data Archive (NDA) under collection #2661 and are available upon request at https://nda.nih.gov.

REFERENCES

- Adolphs, R. (2009). The social brain: Neural basis of social knowledge. Annual Review of Psychology, 60, 693–716. 10.1146/annurev.psych.60.110707.163514

- Amft, M., Bzdok, D., Laird, A. R., Fox, P. T., Schilbach, L., & Eickhoff, S. B. (2015). Definition and characterization of an extended social‐affective default network. Brain Structure and Function, 220, 1031–1049. 10.1007/s00429-013-0698-0

- Amodio, D. M., & Frith, C. D. (2006). Meeting of minds: The medial frontal cortex and social cognition. Nature Reviews Neuroscience, 7, 268–277. 10.1038/nrn1884

- Arewasikporn, A., Sturgeon, J. A., & Zautra, A. J. (2019). Sharing positive experiences boosts resilient thinking: Everyday benefits of social connection and positive emotion in a community sample. American Journal of Community Psychology, 63, 110–121. 10.1002/ajcp.12279

- Babiloni, F., & Astolfi, L. (2014). Social neuroscience and hyperscanning techniques: Past, present and future. Neuroscience and Biobehavioral Reviews, 44, 76–93. 10.1016/j.neubiorev.2012.07.006

- Bartels, A., & Zeki, S. (2003). The neural correlates of maternal and romantic love. NeuroImage, 21, 1155–1166. 10.1016/j.neuroimage.2003.11.003

- Beckes, L., & Coan, J. A. (2011). Social baseline theory: The role of social proximity in emotion and economy of action. Social and Personality Psychology Compass, 5, 976–988. 10.1111/j.1751-9004.2011.00400.x

- Ben‐Yakov, A., Honey, C. J., Lerner, Y., & Hasson, U. (2012). Loss of reliable temporal structure in event‐related averaging of naturalistic stimuli. NeuroImage, 63, 501–506. 10.1016/j.neuroimage.2012.07.008

- Bhandari, A., Gagne, C., & Badre, D. (2018). Just above chance: Is it harder to decode information from prefrontal cortex hemodynamic activity patterns? Journal of Cognitive Neuroscience, 30, 1473–1498. 10.1162/jocn_a_01291

- Bristow, D., Rees, G., & Frith, C. D. (2007). Social interaction modifies neural response to gaze shifts. Social Cognitive and Affective Neuroscience, 2, 52–61. 10.1093/scan/nsl036

- Bruder, M., Dosmukhambetova, D., Nerb, J., & Manstead, A. S. R. (2012). Emotional signals in nonverbal interaction: Dyadic facilitation and convergence in expressions, appraisals, and feelings. Cognition and Emotion, 26, 480–502. 10.1080/02699931.2011.645280

- Bugajski, J. (1999). Social stress adapts signaling pathways involved in stimulation of the hypothalamic‐pituitary‐adrenal axis. Journal of Physiology and Pharmacology, 50, 367–379.

- Bzdok, D., Langner, R., Schilbach, L., Engemann, D. A., Laird, A. R., Fox, P. T., & Eickhoff, S. B. (2013). Segregation of the human medial prefrontal cortex in social cognition. Frontiers in Human Neuroscience, 7, 232. 10.3389/fnhum.2013.00232

- Caruana, N., McArthur, G., Woolgar, A., & Brock, J. (2015). Simulating social interactions for the experimental investigation of joint attention. Neuroscience and Biobehavioral Reviews, 74, 115–125. 10.1016/j.neubiorev.2016.12.022

- Chang, L. J., Jolly, E., Cheong, J. H., Rapuano, K. M., Greenstein, N., Chen, P.‐H. A., & Manning, J. R. (2021). Endogenous variation in ventromedial prefrontal cortex state dynamics during naturalistic viewing reflects affective experience. Science Advances, 7, eabf7129. 10.1126/sciadv.abf7129

- Chen, G., Taylor, P. A., Shin, Y.‐W., Reynolds, R. C., & Cox, R. W. (2017). Untangling the relatedness among correlations, part II: Inter‐subject correlation group analysis through linear mixed‐effects modeling. NeuroImage, 147, 825–840. 10.1016/j.neuroimage.2016.08.029

- Coan, J. A., Schaefer, H. S., & Davidson, R. J. (2006). Lending a hand: Social regulation of the neural response to threat. Psychological Science, 17, 1032–1039. 10.1111/j.1467-9280.2006.01832.x

- Daniel, R., & Pollmann, S. (2014). A universal role of the ventral striatum in reward‐based learning: Evidence from human studies. Neurobiology of Learning and Memory, 114, 90–100. 10.1016/j.nlm.2014.05.002

- Diekhof, E. K., Kaps, L., Falkai, P., & Gruber, O. (2012). The role of the human ventral striatum and the medial orbitofrontal cortex in the representation of reward magnitude – An activation likelihood estimation meta‐analysis of neuroimaging studies of passive reward expectancy and outcome processing. Neuropsychologia, 50, 1252–1266. 10.1016/j.neuropsychologia.2012.02.007

- Eisenberger, N. I., Taylor, S. E., Gable, S. L., Hilmert, C. J., & Lieberman, M. D. (2007). Neural pathways link social support to attenuated neuroendocrine stress responses. NeuroImage, 35, 1601–1612. 10.1016/j.neuroimage.2007.01.038

- Elliott, M. L., Knodt, A. R., Ireland, D., Morris, M. L., Poulton, R., Ramrakha, S., … Hariri, A. R. (2020). What is the test‐retest reliability of common task‐functional MRI measures? New empirical evidence and a meta‐analysis. Psychological Science, 31, 792–806. 10.1177/0956797620916786

- Esteban, O., Markiewicz, C. J., Blair, R. W., Moodie, C. A., Isik, A. I., Erramuzpe, A., … Gorgolewski, K. J. (2019). fMRIPrep: A robust preprocessing pipeline for functional MRI. Nature Methods, 16(1), 111–116. 10.1038/s41592-018-0235-4

- Fernández‐Aguilar, L., Navarro‐Bravo, B., Ricarte, J., Ros, L., & Latorre, J. M. (2019). How effective are films in inducing positive and negative emotional states? A meta‐analysis. PLoS One, 14, e0225040. 10.1371/journal.pone.0225040

- Finn, E. S., & Bandettini, P. A. (2021). Movie‐watching outperforms rest for functional connectivity‐based prediction of behavior. NeuroImage, 235, 117963. 10.1016/j.neuroimage.2021.117963

- Finn, E. S., & Rosenberg, M. D. (2021). Beyond fingerprinting: Choosing predictive connectomes over reliable connectomes. NeuroImage, 239, 118254. 10.1016/j.neuroimage.2021.118254

- Fredrickson, B. L., & Joiner, T. (2002). Positive emotions trigger upward spirals toward emotional well‐being. Psychological Science, 13, 172–175. 10.1111/1467-9280.00431

- Fridlund, A. J. (1991). Sociality of solitary smiling: Potentiation by an implicit audience. Journal of Personality and Social Psychology, 60(2), 229–240. 10.1037/0022-3514.60.2.229

- Golland, Y., Bentin, S., Gelbard, H., Benjamini, Y., Heller, R., Nir, Y., … Malach, R. (2007). Extrinsic and intrinsic systems in the posterior cortex of the human brain revealed during natural sensory stimulation. Cerebral Cortex, 17, 766–777. 10.1093/cercor/bhk030

- Golland, Y., Levit‐Binnun, N., Hendler, T., & Lerner, Y. (2017). Neural dynamics underlying emotional transmissions between individuals. Social Cognitive and Affective Neuroscience, 12, 1249–1260. 10.1093/scan/nsx049

- Golland, Y., Mevorach, D., & Levit‐Binnun, N. (2019). Affiliative zygomatic synchrony in co‐present strangers. Scientific Reports, 9, 1–10.

- Gordon, E. M., Laumann, T. O., Adeyemo, B., Huckins, J. F., Kelley, W. M., & Petersen, S. E. (2016). Generation and evaluation of a cortical area parcellation from resting‐state correlations. Cerebral Cortex, 26, 288–303. 10.1093/cercor/bhu239

- Gump, B. B., & Kulik, J. A. (1997). Stress, affiliation, and emotional contagion. Journal of Personality and Social Psychology, 72, 305–319. 10.1037/0022-3514.72.2.305

- Gunnar, M. R., & Hostinar, C. E. (2015). The social buffering of the hypothalamic‐pituitary‐adrenocortical axis in humans: Developmental and experiential determinants. Social Neuroscience, 10, 479–488. 10.1080/17470919.2015.1070747

- Hasson, U., Malach, R., & Heeger, D. J. (2010). Reliability of cortical activity during natural stimulation. Trends in Cognitive Sciences, 14, 40–48. 10.1016/j.tics.2009.10.011

- Hasson, U., Nir, Y., Levy, I., Fuhrmann, G., & Malach, R. (2004). Intersubject synchronization of cortical activity during natural vision. Science, 303, 1634–1640. 10.1126/science.1089506

- Haxby, J. V., Gobbini, M. I., & Nastase, S. A. (2020). Naturalistic stimuli reveal a dominant role for agentic action in visual representation. NeuroImage, 216, 116561. 10.1016/j.neuroimage.2020.116561

- Hess, U., Banse, R., & Kappas, A. (1995). The intensity of facial expression is determined by underlying affective state and social situation. Journal of Personality and Social Psychology, 69(2), 280–288. 10.1037/0022-3514.69.2.280

- Jakobs, E., Manstead, A. S. R., & Fischer, A. H. (1999). Social motives and emotional feelings as determinants of facial displays: The case of smiling. Personality and Social Psychology Bulletin, 25, 424–435. 10.1177/0146167299025004003

- Jakobs, E., Manstead, A. S. R., & Fischer, A. H. (2001). Social context effects on facial activity in a negative emotional setting. Emotion, 1, 51–69. 10.1037/1528-3542.1.1.51

- Jolly, E., Tamir, D. I., Burum, B., & Mitchell, J. P. (2019). Wanting without enjoying: The social value of sharing experiences. PLoS One, 14, e0215318. 10.1371/journal.pone.0215318

- Kikusui, T., Winslow, J. T., & Mori, Y. (2006). Social buffering: Relief from stress and anxiety. Philosophical Transactions of the Royal Society B: Biological Sciences, 361, 2215–2228. 10.1098/rstb.2006.1941

- Kiyokawa, Y., & Hennessy, M. B. (2018). Comparative studies of social buffering: A consideration of approaches, terminology, and pitfalls. Neuroscience and Biobehavioral Reviews, 86, 131–141. 10.1016/j.neubiorev.2017.12.005

- Konu, D., Turnbull, A., Karapanagiotidis, T., Wang, H.‐T., Brown, L. R., Jefferies, E., & Smallwood, J. (2020). A role for the ventromedial prefrontal cortex in self‐generated episodic social cognition. NeuroImage, 218, 116977. 10.1016/j.neuroimage.2020.116977

- Koss, K. J., Hostinar, C. E., Donzella, B., & Gunnar, M. R. (2014). Social deprivation and the HPA axis in early development. Psychoneuroendocrinology, 50, 1–13. 10.1016/j.psyneuen.2014.07.028

- Mawson, A. R. (2005). Understanding mass panic and other collective responses to threat and disaster. Psychiatry, 68, 95–113. 10.1521/psyc.2005.68.2.95

- Milham, M. P., Vogelstein, J., & Xu, T. (2021). Removing the reliability bottleneck in functional magnetic resonance imaging research to achieve clinical utility. JAMA Psychiatry, 78, 587–588. 10.1001/jamapsychiatry.2020.4272

- Moraczewski, D., Chen, G., & Redcay, E. (2018). Inter‐subject synchrony as an index of functional specialization in early childhood. Scientific Reports, 8, 2252. 10.1038/s41598-018-20600-0

- Moraczewski, D., Nketia, J., & Redcay, E. (2020). Cortical temporal hierarchy is immature in middle childhood. NeuroImage, 216, 116616. 10.1016/j.neuroimage.2020.116616

- Mueller, S., Wang, D., Fox, M. D., Yeo, B. T. T., Sepulcre, J., Shafee, R., … Liu, H. (2013). Individual variability in functional connectivity architecture of the human brain. Neuron, 77, 586–595. 10.1016/j.neuron.2012.12.028

- Mundy, P. (2018). A review of joint attention and social‐cognitive brain systems in typical development and autism spectrum disorder. European Journal of Neuroscience, 47, 497–514. 10.1111/ejn.13720

- Mundy, P., & Newell, L. (2007). Attention, joint attention, and social cognition. Current Directions in Psychological Science, 16, 269–274. 10.1111/j.1467-8721.2007.00518.x

- Nastase, S. A., Gazzola, V., Hasson, U., & Keysers, C. (2019). Measuring shared responses across subjects using intersubject correlation. Social Cognitive and Affective Neuroscience, 14, 667–685. 10.1093/scan/nsz037