Abstract

Purpose:

Glaucoma, which is typically associated with elevated intraocular pressure, is one of the leading causes of irreversible visual disability and blindness worldwide. Accurate and timely diagnosis is essential for preventing visual disability. Manual detection of glaucoma is a challenging task that requires expertise and years of experience.

Methods:

In this paper, we propose an accurate algorithm based on a convolutional neural network (CNN) for the automatic diagnosis of glaucoma. A total of 1113 fundus images (660 normal and 453 glaucomatous) from four databases were used. A 13-layer CNN is trained on this dataset to extract discriminative features, and these features are classified as either glaucomatous or normal during testing. The proposed algorithm is implemented in Google Colab, which avoids the hours otherwise spent installing the environment and supporting libraries. To evaluate the effectiveness of our algorithm, the dataset is divided into 70% for training, 20% for validation, and the remaining 10% for testing. The training images are augmented to 12012 fundus images.

Results:

Our model with SoftMax classifier achieved an accuracy of 93.86%, sensitivity of 85.42%, specificity of 100%, and precision of 100%. In contrast, the model with the SVM classifier achieved accuracy, sensitivity, specificity, and precision of 95.61, 89.58, 100, and 100%, respectively.

Conclusion:

These results demonstrate the ability of the deep learning model to identify glaucoma from fundus images and suggest that the proposed system can help ophthalmologists in a fast, accurate, and reliable diagnosis of glaucoma.

Keywords: Artificial intelligence, convolutional neural networks, deep learning, glaucoma, support-vector machine

Glaucoma is a group of eye diseases that damage the optic nerve; elevated intraocular pressure destroys the nerve fibers that carry visual information to the brain. Glaucoma causes permanent blindness, and early detection is challenging. Most cases of severe vision impairment and blindness are caused by four ocular pathologies: cataracts, diabetic retinopathy, age-related macular degeneration, and glaucoma.[1] Glaucoma shows no symptoms in its initial stage, but it can lead to peripheral vision loss. Early diagnosis is essential for effective treatment; otherwise, the disease progresses to total blindness. The only way to halt the progression of this disease is early detection and treatment. The diagnosis rate of glaucoma is extremely low, less than 50% even in developed countries. Consequently, glaucoma remains a significant public health concern. By 2040, around 116 million people worldwide are expected to be affected by this disease.[2]

Manual assessment poses a challenge in developing nations, where trained experts are scarce. To date, glaucoma is clinically diagnosed by an ophthalmologist using slit-lamp biomicroscopy. This strategy is manual and relies heavily on clinical expertise, which can lead to a high rate of interexaminer variability, misdiagnosis, and waste of clinical information. Thus, novel methods for the early diagnosis of glaucoma are needed to address these constraints.[3] Such methods have a positive impact on both people and the economy. Current research indicates that glaucoma screening can be cost-effective in at-risk populations (family history, black ethnicity, age) and may be improved by a preliminary automated classification followed by expert appraisal by a specialist. Artificial Intelligence (AI) has had an impressive effect on ophthalmology, mostly through accurate and capable image interpretation.[4]

AI refers to the field of computer science that imitates human intellectual capacity. Most current AI techniques are built on machine learning (ML) models, which let a computer learn on its own without being explicitly programmed and which could drive the future of technology. Deep Learning (DL), a branch of ML, now provides successful results in health care and is gradually spreading into areas such as ocular imaging.[5] DL uses many layers of nonlinear information-processing modules for supervised or unsupervised feature extraction from a set of training data and for making predictions. The subsets of AI and the structure of a Convolutional Neural Network (CNN) are shown in Fig. 1. [Fig. 1a, 1b]

Figure 1.

(a) Artificial Intelligence subsets and (b) structure of CNN

At present, DL plays a central role in a variety of tasks: it is used by Facebook for facial recognition, by Google for image search, and by IBM Watson for Oncology for diagnostic support. Typical DL algorithms are the Deep Belief Network, CNN, and Recurrent Neural Network. The CNN is the algorithm most commonly applied in image recognition, and it performs considerably better in visual image classification than comparable schemes.

Computational models of neural networks were first proposed by McCulloch and Pitts in 1943. Yann LeCun in 1988 suggested a specialized type of artificial neural network model called the CNN. The architecture of a CNN resembles the connectivity pattern of neurons in the human brain and was inspired by the organization of the visual cortex. Individual neurons respond to stimuli only in a restricted area of the visual field known as the receptive field. A collection of such fields overlaps to cover the full visual area. The network consists of four kinds of layers: the convolution layer, the ReLU layer, the subsampling (pooling) layer, and the output or fully connected layer. In general, images are represented as a matrix of pixels, and these pixel values are fed to the input layer, where they are combined with weights and biases.

The convolution layer is the first layer of a CNN and performs the convolution operation, a mathematical operation that takes an image matrix and a filter (kernel) as inputs. The layer consists of a group of filters whose values are learned during training. The filters are smaller than the input data, and the multiplication applied between a filter-sized patch of the input and the filter is a dot product. Each filter is convolved with the input data to compute an activation map made of neurons. These filters are local in input space and are thus well suited to exploiting the strong spatially local correlation present in images. The two-dimensional output array of the convolution process is called an activation map or feature map. The output volume of the convolutional layer is obtained by stacking the activation maps of all filters along the depth dimension. The ReLU layer introduces nonlinearity into the network: it performs an element-wise operation on the activation map, setting all negative values to zero, and its output is a rectified feature map. The main benefits of ReLU are sparsity, which makes the model more compact and improves its predictive power, and a reduced likelihood of vanishing gradients. The pooling layer of a CNN reduces the number of parameters and the amount of computation by downsampling the feature maps. The two common pooling methods are max pooling and average pooling. Max pooling selects the maximum element from each region of the feature map and acts as a sample-based discretization. The feature map of the last convolution or pooling layer is flattened into a one-dimensional vector and connected to one or more fully connected layers, also called dense layers, to classify the image. The last fully connected layer has the same number of output nodes as the number of classes. Each neuron in a dense layer applies weights that prioritize the most appropriate label. Finally, the neurons vote on each of the labels, and the winner of that vote is the classification decision. A softmax or sigmoid activation function is applied to the outputs to assign them to one of the two classes.
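As a minimal sketch of the layer operations described above, the following pure-Python example applies one convolution filter, ReLU, and max pooling to a toy 5 × 5 input. The image values, the 2 × 2 kernel, the pooling window, and the function names are illustrative assumptions and are not taken from the proposed model.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1) and take the
    dot product between the kernel and each kernel-sized patch."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def relu(feature_map):
    """Element-wise rectification: negative activations become zero."""
    return [[max(0, v) for v in row] for row in feature_map]


def max_pool(feature_map, size=2, stride=2):
    """Keep the largest activation in each size x size window."""
    out_h = (len(feature_map) - size) // stride + 1
    out_w = (len(feature_map[0]) - size) // stride + 1
    return [
        [
            max(feature_map[r * stride + i][c * stride + j]
                for i in range(size) for j in range(size))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


# Toy 5 x 5 "image" and a 2 x 2 filter (both hypothetical).
image = [
    [1, 2, 0, 1, 3],
    [0, 1, 2, 3, 1],
    [2, 0, 1, 0, 2],
    [1, 3, 0, 2, 1],
    [0, 1, 2, 1, 0],
]
kernel = [[1, -1],
          [1, -1]]

feature_map = conv2d(image, kernel)   # 4 x 4 activation map
rectified = relu(feature_map)         # rectified feature map
pooled = max_pool(rectified)          # 2 x 2 downsampled map
print(feature_map)
print(rectified)
print(pooled)
```

In a trained CNN the kernel values are learned rather than fixed by hand, and many such filters are stacked along the depth dimension.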

Prior work on automated glaucoma detection from fundus images can be broadly classified into two categories: 1) methods based on traditional ML classifiers and 2) methods based on DL algorithms. In the first category, fundus images are first preprocessed and segmented, and vital features are then extracted for glaucoma classification. The second category uses either custom CNNs or pretrained models for glaucoma detection. We have reviewed the existing DL-based schemes for glaucoma detection that are most relevant to our research. The reviewed studies are as follows.

Chen et al.[6] implemented a six-layer CNN architecture for glaucoma screening. The experiments were performed on the ORIGA and SCES datasets and achieved AUCs of 0.831 and 0.887, respectively. Ahn et al.[7] proposed an approach that uses a CNN with three convolutional layers, with max pooling applied at each layer, followed by two fully connected layers to recognize glaucoma in retinal color fundus images. The performance of the model was evaluated on a private dataset of 1542 images and reached a detection accuracy of 87.9% and an AUC of 0.94 on test data. Another example is the work of Raghavendra et al.,[16] which uses a CNN with four convolutional layers, with batch normalization, one ReLU, and one max pooling applied at each layer, followed by fully connected and softmax layers. The authors attained an accuracy of 98.13% on a private dataset. Baida Al Bander et al.[13] developed a model that uses a pretrained AlexNet model consisting of 23 layers and an SVM classifier to categorize the images as either normal or glaucomatous. The performance of the model was evaluated on the RIMONE database, consisting of 255 normal and 200 glaucomatous images. The system achieved a specificity of 90.8%, a sensitivity of 85%, and an accuracy of 88.2%.

Most of the previous work mentioned here used either a single dataset or separate datasets for training and testing the models; in contrast, this work trains the models on four different datasets to create a generalized model for glaucoma detection. This work aims to exploit the effectiveness of CNNs for the diagnosis of glaucoma.

The rest of the article is organized as follows. Section 2 presents the methodology, followed by the results in Section 3. Section 4 deals with the experimental procedure in detail. Finally, Section 5 deals with the experimental outcomes obtained using the CNN.

Methods

In this section, we present the methodology used in this research work to identify glaucoma from fundus images. The pipeline of the proposed CNN-based method for glaucoma detection is shown in Fig. 2. The diagram is divided into a training phase and a testing phase. In the training phase, a model is developed using the training dataset; the steps include preprocessing the data, extracting features with the CNN model, and developing the predictive model. The testing phase consists of two steps: the test data are applied to the trained model, and the performance of the model is evaluated [Fig. 2]. Ethics committee approval was obtained from the IEC of LF Hospital (13th Feb 2020).

Figure 2.

Pipeline of the proposed model for identification of glaucoma from fundus images

The dataset used for this study was assembled from three public datasets (HRF, Origa, and Drishti) together with fundus images collected at Little Flower Hospital and Research Centre; its particulars are shown in Table 1.

Table 1.

Particulars of Images in the Dataset

| Dataset | Normal | Glaucomatous | Resolution | Total |
|---|---|---|---|---|
| HRF | 15 | 15 | 3504×2336 JPG | 30 |
| Origa | 482 | 168 | 3072×2048 JPG | 650 |
| Drishti_GS1 | 31 | 70 | 2996×1944 PNG | 101 |
| Images from LF | 132 | 200 | 3965×3000 JPG | 332 |
| Total | 660 | 453 | | 1113 |

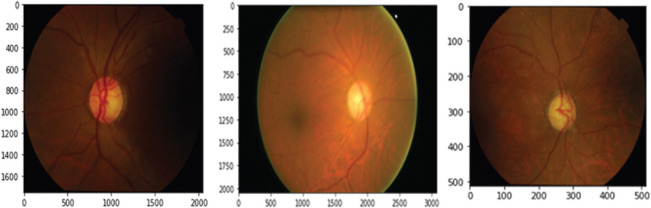

The dataset contains 1113 images of various sizes, acquired from patients of varying ethnicity and age groups under varying levels of illumination; in this raw form, the images are not suitable for glaucoma detection. Therefore, we brought them into a common format and size. We first resized all images to 3000 × 2000 pixels and applied color and brightness normalization using the OpenCV package (https://opencv.org/). The images were then downsized to 512 × 512 pixels, which retains all the features we wished to identify and makes the system computationally efficient [Fig. 3].
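The preprocessing steps above could be sketched roughly as follows. This pure-Python stand-in applies min-max brightness scaling and nearest-neighbor resizing to a toy grayscale grid. The paper does not state which normalization or interpolation method was used with OpenCV, so both choices, as well as the function names and the toy values, are assumptions made only for illustration.

```python
def normalize_brightness(image):
    """Min-max scale pixel values to the range [0, 1] (assumed normalization)."""
    flat = [v for row in image for v in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:  # constant image: avoid division by zero
        return [[0.0 for _ in row] for row in image]
    return [[(v - lo) / (hi - lo) for v in row] for row in image]


def resize_nearest(image, out_h, out_w):
    """Nearest-neighbor resize (assumed interpolation) to out_h x out_w."""
    in_h, in_w = len(image), len(image[0])
    return [
        [image[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
        for r in range(out_h)
    ]


# Toy 4 x 4 grayscale image standing in for a full-resolution fundus image.
raw = [
    [10, 20, 30, 40],
    [50, 60, 70, 80],
    [90, 100, 110, 120],
    [130, 140, 150, 160],
]

normalized = normalize_brightness(raw)
small = resize_nearest(normalized, 2, 2)  # stands in for the 512 x 512 downsizing
print(small)
```

With real images, the same two steps would typically be carried out per color channel by an image library rather than with nested lists.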

Figure 3.

Preprocessed dataset sample image

In this study, we randomly split the dataset into three parts: 70% (462 normal and 317 glaucomatous) for training, 20% (132 normal and 88 glaucomatous) for validation, and the remaining 10% (66 normal and 48 glaucomatous) for testing. The training images were used to extract and learn the features; the validation images provided a fair assessment of the model fit on the training dataset while the model hyperparameters were tuned; and the test images were used to calculate the accuracy of the proposed method.
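As a quick arithmetic check of the split described above, the short sketch below sums the reported per-class counts and computes the share of each subset. The variable names are hypothetical; the counts are the ones reported in the text.

```python
# Per-class image counts for each subset, as reported in the text.
split_counts = {
    "training": {"normal": 462, "glaucomatous": 317},
    "validation": {"normal": 132, "glaucomatous": 88},
    "testing": {"normal": 66, "glaucomatous": 48},
}

subset_totals = {name: sum(c.values()) for name, c in split_counts.items()}
total_images = sum(subset_totals.values())
class_totals = {
    label: sum(c[label] for c in split_counts.values())
    for label in ("normal", "glaucomatous")
}
fractions = {name: n / total_images for name, n in subset_totals.items()}

print(subset_totals)   # images per subset
print(class_totals)    # images per class over all subsets
print({name: round(f, 3) for name, f in fractions.items()})
```

The subset sizes add up to the 1113 images of Table 1, and the shares are close to, though not exactly, 70%, 20%, and 10%.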

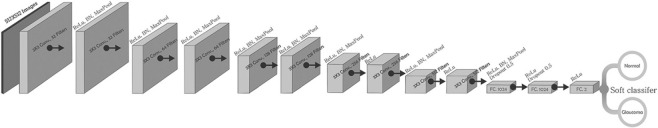

The architecture of the CNN model used for this study is illustrated in Fig. 4. This type of model is known to learn local features automatically and to generate a classification model. It comprises 10 convolutional layers followed by three fully connected layers. The model takes normalized images as input, which are processed by the subsequent layers of the CNN. Batch normalization standardizes the inputs to a layer for each mini-batch, thus stabilizing the learning process. This accelerates learning and considerably lowers the number of training epochs required to train deep networks. A two-dimensional convolutional layer creates a convolution kernel that is convolved with the layer input to produce a tensor of outputs. Max pooling downsamples its input to reduce the spatial dimensions and allows assumptions to be made about the features contained in the binned subregions. The resulting feature map is pooled and then flattened into a single column vector, which is further processed by the fully connected layers. The first two fully connected layers are each followed by a dropout layer with a probability of 0.5. Dropout layers help to prevent overfitting and to accelerate the training process. The last fully connected layer, with a SoftMax activation function, evaluates the probability of each class and classifies the target images [Fig. 4].

Figure 4.

Architecture of the convolutional neural network of the proposed model

In these experiments, the ReLU activation is applied to all convolutional and fully connected layers except the last layer. Its purpose is to aid convergence during learning and to introduce nonlinearity into the proposed system. After the ReLU activations, we apply max pooling with a 3 × 3 filter and a stride of 2 to reduce the spatial dimensions. The model is trained by feeding it the training dataset and is expected to predict whether an image belongs to the glaucomatous or the normal class.
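To illustrate how a 3 × 3 pooling window with a stride of 2 shrinks a 512 × 512 input, the sketch below applies the standard output-size formula for convolution and pooling windows. The number of pooling stages and the padding of the convolutions are not specified in the text, so the values used here are assumptions for illustration only.

```python
def output_size(input_size, kernel, stride=1, padding=0):
    """Spatial size after a convolution or pooling window (floor division)."""
    return (input_size + 2 * padding - kernel) // stride + 1


size = 512  # side length of the preprocessed input images

# A 3 x 3 convolution with padding 1 and stride 1 (assumed) keeps the size.
conv_out = output_size(size, kernel=3, stride=1, padding=1)

# Repeated 3 x 3 max pooling with stride 2 and no padding.
sizes_after_pooling = []
for _ in range(5):  # hypothetical number of pooling stages
    size = output_size(size, kernel=3, stride=2)
    sizes_after_pooling.append(size)

print(conv_out)
print(sizes_after_pooling)
```

Each pooling stage roughly halves the side length, which is what keeps the flattened vector passed to the fully connected layers manageable.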

To develop efficient and successful DL models, a large annotated dataset is required for the optimization of parameters during training, which is not always feasible, especially in the case of medical data. Regularization strategies such as data balancing and augmentation are applied to the training dataset to avoid bias during the training process. Our training dataset is unbalanced, with 462 normal and 317 glaucomatous images. Balancing the training dataset means generating an equal number of fundus images for both classes. We therefore applied the Synthetic Minority Oversampling Technique (SMOTE), a popular data balancing method that generates synthetic samples, to obtain a balanced dataset containing 462 normal and 462 glaucomatous images.[9] The balanced training dataset was then augmented by brightness adjustment, gamma correction, and horizontal and vertical flipping, so that the amount of training data increased 13-fold. In total, 12012 fundus images were generated to train our proposed model. We trained our model using the Adam optimizer with the default learning rate of 0.001 and a batch size of 32. Categorical cross-entropy was used as the loss function.
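The balancing step and the resulting image counts can be sketched as follows. This is a minimal pure-Python illustration of the core SMOTE idea, in which a synthetic sample is placed on the line segment between a minority sample and one of its nearest minority neighbors. The text does not say whether SMOTE was applied to raw pixels or to extracted features, so the 2-D toy feature vectors, the neighbor count `k`, and the function name are assumptions.

```python
import math
import random


def smote(minority, n_synthetic, k=5, seed=0):
    """Create n_synthetic points by interpolating between a randomly chosen
    minority sample and one of its k nearest minority neighbors."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_synthetic):
        base = rng.choice(minority)
        neighbours = sorted(
            (p for p in minority if p is not base),
            key=lambda p: math.dist(base, p),
        )[:k]
        neighbour = rng.choice(neighbours)
        gap = rng.random()  # position along the segment, in [0, 1)
        synthetic.append([b + gap * (n - b) for b, n in zip(base, neighbour)])
    return synthetic


# Toy stand-in for the 317 glaucomatous training samples (2-D feature vectors).
data_rng = random.Random(42)
minority = [[data_rng.random(), data_rng.random()] for _ in range(317)]

n_normal = 462
synthetic = smote(minority, n_synthetic=n_normal - len(minority))

balanced_total = n_normal + len(minority) + len(synthetic)
augmented_total = balanced_total * 13  # 13-fold increase reported in the text

print(len(synthetic), balanced_total, augmented_total)
```

The counts reproduce the figures in the text: 145 synthetic glaucomatous samples give 924 balanced images, and the 13-fold augmentation gives 12012 training images.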

Results

Our experiments were carried out on the fundus images listed in Table 1. We used the Keras deep learning library with TensorFlow as the backend. All experiments related to this study were conducted on Colab (Google Colaboratory), a product from Google Research that provides a free GPU service for education and research purposes. The GPUs available in Colab often include Nvidia K80, T4, P4, and P100 models.

The parameters used for the performance evaluation of the model were specificity, sensitivity, and accuracy. Sensitivity measures how likely the test is to be positive for someone who has glaucoma, and specificity measures how likely the test is to be negative for someone who does not have glaucoma. Accuracy represents the overall classification result.

To obtain better performance, we tuned the hyperparameters of our model during training. The proposed CNN topology was trained for 50 epochs with batch sizes of 8, 16, 32, 64, and 128. The batch size strongly affects the learned parameters, which in turn influence the accuracy of the model. The best accuracy obtained was 99.26%, with a batch size of 32. The model accuracy and loss curves obtained while training the model for 50 epochs are shown in Fig. 5 [Fig. 5]. These curves suggest that the proposed model does not overfit, which means that it should maintain the same prediction performance on new test data. Table 2 lists the training loss and accuracy and the validation loss and accuracy of the model at different epochs.

Figure 5.

(a) Learning curve of model accuracy on the train and test datasets over each training epoch (b) Learning curve of model loss on train and test dataset over each training epoch

Table 2.

The training performance of the model on different epochs

| EPOCH | Training Loss | Training Accuracy | Validation Loss | Validation Accuracy |
|---|---|---|---|---|
| 1/50 | 1.0626 | 0.5400 | 1.1431 | 0.3421 |
| 10/50 | 0.4664 | 0.8400 | 0.5690 | 0.7895 |
| 20/50 | 0.2629 | 0.9833 | 0.3247 | 0.9737 |
| 30/50 | 0.1648 | 1.0000 | 0.1871 | 1.0000 |
| 40/50 | 0.1164 | 1.0000 | 0.0984 | 1.0000 |
| 50/50 | 0.0850 | 1.0000 | 0.0796 | 1.0000 |

After training and building the model, the weights are saved for the prediction of the new image. The trained model is then used for the classification of the test image as glaucomatous or normal based on the probabilistic predicted value [Table 2].
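The final decision step, in which the predicted class probabilities determine the label, can be sketched as below. The softmax function is standard; the class order, the example scores, and the `classify` helper are hypothetical and only illustrate how a two-class probabilistic output could be turned into a label.

```python
import math

CLASS_NAMES = ("normal", "glaucomatous")  # class order is an assumption


def softmax(scores):
    """Convert raw output scores into probabilities that sum to 1."""
    shift = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def classify(scores):
    """Return the most probable class name and its probability."""
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return CLASS_NAMES[best], probs[best]


# Hypothetical raw outputs of the last fully connected layer for one image.
label, probability = classify([0.2, 2.1])
print(label, round(probability, 3))
```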

One of the tools for evaluating the performance of a classification model is the confusion matrix, a table that summarizes correct and incorrect predictions in terms of true positives, false positives, true negatives, and false negatives. Fig. 6 displays the confusion matrices of the CNN with a SoftMax classifier and with an SVM classifier [Fig. 6]. The standard performance measures of sensitivity, specificity, and accuracy can be calculated from the confusion matrix.

Figure 6.

Screenshots of the confusion matrix of softmax classifier and an SVM classifier for glaucoma prediction

We tested our model on a previously unseen dataset of 114 fundus images that contains 66 normal and 48 glaucomatous images. The test result showed that CNN with an SVM classifier outperforms CNN with a SoftMax classifier. Table 3 presents evaluation metrics of the proposed model with both SoftMax and SVM classifiers [Table 3].

Table 3.

Performance comparison of model CNN-Softmax and CNN-SVM

| Metrics | CNN-SoftMax | CNN-SVM |
|---|---|---|
| True Positive (TP) | 41 | 43 |
| False Positive (FP) | 0 | 0 |
| True Negative (TN) | 66 | 66 |
| False Negative (FN) | 7 | 5 |
| Accuracy = (TP + TN)/(TP + FP + FN + TN) | 93.86% | 95.61% |
| Precision = TP/(TP + FP) | 100% | 100% |
| Sensitivity = TP/(TP + FN) | 85.42% | 89.58% |
| Specificity = TN/(TN + FP) | 100% | 100% |
| AUC = (Sensitivity + Specificity)/2 | 92.71% | 94.79% |
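The metrics in Table 3 follow directly from the confusion-matrix counts, as the short sketch below shows. The function and variable names are hypothetical; the formulas are the ones given in the table. The last quantity, the mean of sensitivity and specificity at a single operating point, is what the table reports as AUC and is elsewhere often called balanced accuracy.

```python
def metrics(tp, fp, tn, fn):
    """Compute the Table 3 metrics from confusion-matrix counts."""
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    return {
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        "precision": tp / (tp + fp),
        "sensitivity": sensitivity,
        "specificity": specificity,
        "mean_sens_spec": (sensitivity + specificity) / 2,
    }


softmax_metrics = metrics(tp=41, fp=0, tn=66, fn=7)
svm_metrics = metrics(tp=43, fp=0, tn=66, fn=5)

for name, result in (("CNN-SoftMax", softmax_metrics), ("CNN-SVM", svm_metrics)):
    print(name, {key: f"{value:.2%}" for key, value in result.items()})
```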

Discussion

Retinal photography provides an inexpensive, simple, and convenient method by which non-clinical professionals can screen for glaucoma among underprivileged people, making diagnosis more people oriented. In this section, we compare the performance of existing systems with that of our proposed model. Figs. 7 and 8 show the features extracted by the first and third convolutional layers [Figs. 7 and 8]. It is evident from the feature maps that the first convolutional layers extract basic features, whereas deeper layers pick out more distinctive features that enable accurate image classification. The different performance metrics are tabulated in Table 4 and depicted in Fig. 9 [Table 4] [Fig. 9].

Figure 7.

Visualization of the first 32 features learned by the first convolutional layer

Figure 8.

Visualization of the 64 features learned by the third convolutional layer

Table 4.

Comparison analysis of existing with proposed method

| References | Method | Accuracy | Specificity | Sensitivity |
|---|---|---|---|---|
| Blagus R et al (2013) [9] | Multi branch neural network | 91.51% | 90.90% | 92.33% |
| Mojab N et al (2019) [10] | Deep CNN | 79.04% | 88.95% | 79.04% |
| Bajwa M N et al (2019) [11] | Two-stage framework RCNN | 95.45% | 93.45% | 96.43% |
| Burlina P et al (2016) [12] | SVM Classifier | 95.00% | 95.60% | 96.40% |
| Tan J H et al (2018) [19] | Deep CNN | 93.45% | 96.43% | 79.04% |
| Chai et al (2018) [18] | RCNN | 91.51% | 90.90% | 92.33% |
| Our proposed model | CNN with SoftMax | 93.86% | 100.00% | 85.42% |
| | CNN with SVM | 95.61% | 100.00% | 89.58% |

Figure 9.

Performance comparison of the proposed model with the existing systems in references [10,11,12]

The proposed model used multiple datasets comprising 1113 retinal images. About 70% of the images were used for training and, after augmentation, yielded 12012 training images. Twenty percent of the images were used for validation. We trained our model in Keras for 50 epochs. Table 2 shows that as the epoch number rises from 1 to 50, the training loss falls from 1.0626 to 0.0850 and the validation loss falls from 1.1431 to 0.0796. Likewise, the training accuracy and validation accuracy rise from 0.5400 and 0.3421, respectively, to the maximum value of 1.0000 by epoch 30.

In total, 114 images (the 10% unseen sample) were used for testing. Of these 114 images, 48 were glaucomatous and 66 were images of normal eyes. The CNN with the SoftMax classifier identified 41 glaucomatous images correctly and misclassified seven as normal. Consequently, the CNN with the SoftMax classifier attained an accuracy of 93.86%, specificity of 100%, precision of 100%, and sensitivity of 85.42%. The same images were tested on the CNN with an SVM classifier, which detected 43 of the 48 glaucomatous retinal images as positive cases and misclassified five as normal, attaining an accuracy of 95.61%, sensitivity of 89.58%, specificity of 100%, and precision of 100%.

As the technology advances, several methods employing DL approaches have been developed for the identification of glaucoma from retinal images. Chen et al.[14] proposed a six-layer DL model for glaucoma diagnosis, and the AUC was 83% and 88% on two different datasets. Using the Inception-V3[15] architecture, Li et al.[16] applied a DL method to a large dataset of approximately 40,000 fundus images and achieved an AUC of approximately 99%. Christopher et al.[17] developed several DL methods using a dataset of 14,000 fundus images and achieved an AUC of 91%. The present study achieved a diagnostic AUC of 92.71% and 94.79% with the SoftMax and SVM classifiers, respectively (Table 3), on multiple datasets comprising 1113 images.

Another DL model, composed of 18 layers, was trained and tested by Raghavendra et al.[8] on roughly 1500 fundus images and attained an accuracy of 98% in detecting glaucoma. In 2018, Chai et al.[18] presented a multibranch neural network that uses the Faster RCNN DL model to obtain features. The performance of this system was evaluated on a private dataset of 2554 images, achieving 91.51% accuracy, 92.33% sensitivity, and 90.90% specificity. The DL technique for glaucoma detection proposed by Bajwa et al.[11] is also used for comparison with the proposed algorithm. They developed a two-stage framework in which an RCNN is used for automatic optic disc (OD) detection, followed by glaucoma classification with deep networks. This method yielded an accuracy of 87.40%, specificity of 85%, and sensitivity of 71.17% on fundus and OCT images. Burlina et al.[12] used deep features with an SVM classifier to achieve an accuracy of 95%, specificity of 95.6%, and sensitivity of 96.4%. Another deep CNN scheme, used by Tan et al.,[19] achieved an accuracy of 93.45%, specificity of 96.43%, and sensitivity of 79.04% (Table 4).

When the results of previous studies are compared with those of the present study, our model attained 100% specificity and precision with both the SoftMax and SVM classifiers, identifying every fundus image of a nonglaucomatous eye as normal. Both methods correctly detected all 66 normal eye images among the 114 test images. None of the other studies reviewed attained a specificity this high.

In terms of accuracy, the present study achieved 93.86% and 95.61% with the SoftMax and SVM classifiers, respectively. With both classifiers, this accuracy is higher than that of the studies in [18,19], and with the SVM classifier it is also higher than that of [12].

The major achievement and strength of this study is that the algorithm correctly detected all normal eyes. This means that our algorithm can reliably predict negative results for people who do not have glaucoma. With both the SoftMax and SVM classifiers, specificity and precision reached their maximum value of 1 (100%): all 66 normal images were classified as nonglaucomatous, and no normal image was classified as glaucomatous. With the SVM classifier, 89.58% of the positive cases (glaucomatous images) were correctly classified by our algorithm.

The limitation of this study is the limited amount of labeled data available for training the model. DL models rely on large datasets to learn the features needed for accurate predictions, and the annotation of retinal images is expensive and time consuming. Sharing resources between ophthalmologists and ML experts will help to overcome this problem to some extent. Another challenge is the acceptance of the black-box approach of DL techniques by medical experts.

Nowadays, DL-based algorithms have a substantial role in the field of medical imaging.[20] The purpose of this study is to examine the role of CNNs, a highly efficient form of DL, in diagnosing glaucoma from fundus images. First, labeled images were fed to the CNN after resizing and normalization. The dataset was then split into 70% for training and the remainder for validation and testing. The CNN automatically extracted features from the input images, and these features were used to train the SoftMax and SVM classifiers. The same procedure was used to extract image features from the test set. We used accuracy, sensitivity, specificity, and precision to evaluate the performance of the model. Our model with the SoftMax classifier attained an accuracy of 93.86%, specificity of 100%, precision of 100%, and sensitivity of 85.42%, with a diagnostic AUC of 92.71%. The accuracy, specificity, precision, and sensitivity of the model with the SVM classifier were 95.61%, 100%, 100%, and 89.58%, respectively, with a diagnostic AUC of 94.79%. According to these results, the SVM classifier has higher accuracy, sensitivity, and diagnostic AUC. Because the results were reproduced consistently across repeated runs, we consider our algorithm reliable.

There is potential for improving the performance of the model when more labeled data become available. The main finding of this experiment is that all the normal images of the test data were classified correctly by both classifiers. The developed algorithms can detect glaucoma from fundus images at a level of accuracy exceeding that of practicing ophthalmologists.

However, AI systems still cannot replace ophthalmologists in the diagnosis of retinal disease, because AI systems are developed for specific tasks, whereas ophthalmologists are trained to diagnose a wide spectrum of eye diseases.[21] It is better for ophthalmologists to have a working knowledge of DL so that they can provide more attention and care to a large number of patients. In future studies, we plan to focus on improving sensitivity and accuracy by adopting more advanced transfer learning techniques for better classification results.

Conclusion

The results of our study demonstrate the ability of the deep learning model to identify glaucoma from fundus images. The proposed system may help ophthalmologists in the fast, accurate, and reliable diagnosis of glaucoma.

Financial support and sponsorship

Nil.

Conflicts of interest

There are no conflicts of interest.

References

- 1. Flaxman SR, Bourne RRA, Resnikoff S, Ackland P, Braithwaite T, Cicinelli MV. Global causes of blindness and distance vision impairment 1990-2020: A systematic review and meta-analysis. Lancet Glob Health. 2017;5:1221–34. doi: 10.1016/S2214-109X(17)30393-5.

- 2. World report on vision. [Last accessed on 2021 Jan 09]. Available from: https://www.who.int/publications-detail-redirect/world-report-on-vision.

- 3. Lu W, Tong Y, Yu Y, Xing Y, Chen C, Shen Y. Applications of artificial intelligence in ophthalmology: General overview. J Ophthalmol. 2018;2018. Article ID: 5278196. doi: 10.1155/2018/5278196.

- 4. Akkara JD, Kuriakose A. Role of artificial intelligence and machine learning in ophthalmology. Kerala J Ophthalmol. 2019;31:150–60. doi: 10.4103/ijo.IJO_622_19.

- 5. Rahimy E. Deep learning applications in ophthalmology. Curr Opin Ophthalmol. 2018;29:254–60. doi: 10.1097/ICU.0000000000000470.

- 6. Haloi M. Improved microaneurysm detection using deep neural networks. ArXiv150504424 Cs. 2016. [Last accessed on 2021 Jan 09]. Available from: http://arxiv.org/abs/1505.04424.

- 7. van Grinsven MJJP, van Ginneken B, Hoyng CB, Theelen T, Sanchez CI. Fast convolutional neural network training using selective data sampling: Application to hemorrhage detection in color fundus images. IEEE Trans Med Imaging. 2016;35:1273–84. doi: 10.1109/TMI.2016.2526689.

- 8. Raghavendra U, Fujita H, Bhandary SV, Gudigar A, Tan JH, Acharya UR. Deep convolution neural network for accurate diagnosis of glaucoma using digital fundus images. Inf Sci. 2018;441:41–9.

- 9. Blagus R, Lusa L. SMOTE for high-dimensional class-imbalanced data. BMC Bioinformatics. 2013;14:106. doi: 10.1186/1471-2105-14-106.

- 10. Mojab N, Noroozi V, Yu PS, Hallak JA. Deep multi-task learning for interpretable glaucoma detection. 2019 IEEE 20th International Conference on Information Reuse and Integration for Data Science (IRI) 2019:167–74.

- 11. Bajwa MN, Malik MI, Siddiqui SA, Dengel A, Shafait F, Neumeier W. Two-stage framework for optic disc localization and glaucoma classification in retinal fundus images using deep learning. BMC Med Inform Decis Mak. 2019;19:136. doi: 10.1186/s12911-019-0842-8.

- 12. Burlina P, Freund DE, Joshi N, Wolfson Y, Bressler NM. Detection of age-related macular degeneration via deep learning. 2016 IEEE International Symposium on Biomedical Imaging: From Nano to Macro, ISBI 2016 - Proceeding. IEEE Computer Society. 2016. [Last accessed 2021 Jan 09]. pp. 184–8. Available from: https://jhu.pure.elsevier.com/en/publications/detection-of-age-related-macular-degeneration-via-deep-learning.

- 13. Horta A, Joshi N, Pekala M, Pacheco KD, Kong J, Bressler N, et al. A hybrid approach for incorporating deep visual features and side channel information with applications to AMD detection. 2017 16th IEEE International Conference on Machine Learning and Applications (ICMLA) 2017:716–20.

- 14. Chen X, Xu Y, Wong DWK, Wong TY, Liu J. Glaucoma detection based on deep convolutional neural network. Annu Int Conf IEEE Eng Med Biol Soc. 2015;2015:715–8. doi: 10.1109/EMBC.2015.7318462.

- 15. Szegedy C, Vanhoucke V, Ioffe S, Shlens J, Wojna Z. Rethinking the inception architecture for computer vision. arXiv [cs.CV]. 2015. Available from: http://arxiv.org/abs/1512.00567.

- 16. Li Z, He Y, Keel S, Meng W, Chang RT, He M. Efficacy of a deep learning system for detecting glaucomatous optic neuropathy based on color fundus photographs. Ophthalmology. 2018;125:1199–206. doi: 10.1016/j.ophtha.2018.01.023.

- 17. Christopher M, Belghith A, Bowd C, Proudfoot JA, Golbaum MH, Weinreb RN, et al. Performance of deep learning architectures and transfer learning for detecting glaucomatous optic neuropathy in fundus photographs. Sci Rep. 2018;8:16685. doi: 10.1038/s41598-018-35044-9.

- 18. Chai Y, Liu H, Xu J. Glaucoma diagnosis based on both hidden features and domain knowledge through deep learning models. Knowl Based Syst. 2018;161:147–56.

- 19. Tan JH, Bhandary SV, Sivaprasad S, Hagiwara Y, Bagchi A, Raghavendra U. Age-related macular degeneration detection using deep convolutional neural network. Future Gener Comput Syst. 2018;87:127–35.

- 20. Akkara JD, Kuriakose A. Commentary: Rise of machine learning and artificial intelligence in ophthalmology. Indian J Ophthalmol. 2019;67:1009–10. doi: 10.4103/ijo.IJO_622_19.

- 21. Akkara JD, Kuriakose A. Commentary: Artificial intelligence for everything: Can we trust it? Indian J Ophthalmol. 2020;68:1346–7. doi: 10.4103/ijo.IJO_216_20.