Abstract

In response to crises, people sometimes prioritize fewer specific identifiable victims over many unspecified statistical victims. How other factors can explain this bias remains unclear. So two experiments investigated how complying with public health recommendations during the COVID19 pandemic depended on victim portrayal, reflection, and philosophical beliefs (Total N = 998). Only one experiment found that messaging about individual victims increased compliance compared to messaging about statistical victims—i.e., “flatten the curve” graphs—an effect that was undetected after controlling for other factors. However, messaging about flu (vs. COVID19) indirectly reduced compliance by reducing perceived threat of the pandemic. Nevertheless, moral beliefs predicted compliance better than messaging and reflection in both experiments. The second experiment's additional measures revealed that religiosity, political preferences, and beliefs about science also predicted compliance. This suggests that flouting public health recommendations may be less about ineffective messaging or reasoning than philosophical differences.

Keywords: COVID19, Public health, Cognitive psychology, Social psychology, Experimental philosophy, Moral psychology, Cognitive reflection test, Effective altruism, Numeracy, Political psychology, Religiosity, Science communication, Identifiable victim effect

“…if it is in our power to prevent something bad from happening, without thereby sacrificing anything of comparable moral importance, we ought, morally, to do it.”

Peter Singer (1972)

“We appropriately honor the one, Rosa Parks, but by turning away from the crisis in Darfur we are, implicitly, placing almost no value on the lives of millions there.”

Paul Slovic (2007)

1. Introduction

The genocide in Darfur is said to have killed up to 400,000 people (Slovic, 2007). The Rwandan genocide wiped out between 500,000 and 700,000 people (Verpoorten, 2005). Estimated death tolls for other genocides are in the millions (Scherrer, 1999). Suppose that we could prevent mass casualties of this scale by adopting a few quick, easy, do-it-yourself practices (Morens & Fauci, 2007). Many people think that we should (Singer, 2016). However, when public health officials recommended low risk, straightforward methods for preventing millions of deaths during the COVID19 pandemic (Walker et al., 2020, p. 12), some leaders and individuals were fatally resistant (Canning, Karra, Dayalu, Guo, & Bloom, 2020; Pei, Kandula, & Shaman, 2020). Why?

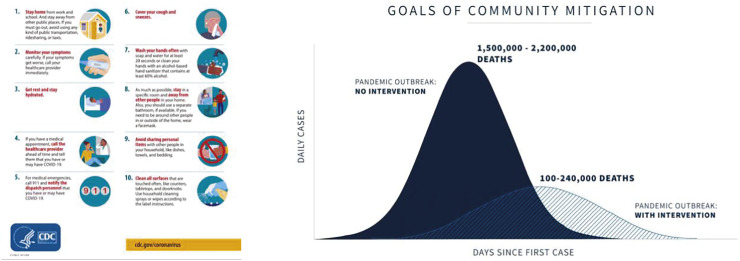

Philosophers and social scientists have been diagnosing our alarmingly ineffective responses to global crises for years. Peter Singer argues that many people wrongly prioritize nearby, less needy people over far more needy people abroad (Singer, 1972, Singer, 2015). Paul Slovic argues that we can mitigate unfortunate tendencies like this by depicting crisis victims as individuals rather than statistics—a.k.a., the identifiable victim effect (Slovic, 2007). So while public health officials recommend simple and relatively convenient practices to limit preventable suffering and death (Fig. 1a), their messages to “flatten the curve” that depict victims statistically rather than individually (Fig. 1b) may be less than optimally motivating. Indeed, as the pandemic death toll rose, some have wondered whether focusing on individual victims could help people better appreciate the threat of the COVID19 pandemic and comply with public health recommendations (Beard, 2020; Graham, 2020; Lewis, 2020).

Fig. 1.

(a) The CDC's recommended measures to prevent the spread of COVID19 and (b) the “flatten the curve” graph shared by the White House in March 2020.

Also, some leaders compared COVID19 to seasonal flus in 2020 despite their public health officials explaining that COVID19 is caused by a different virus, spreads more easily, has a longer period of contagion, causes more serious illness, and is not yet preventable via vaccination (CDC, 2020; Piroth et al., 2020). Some worry that such comparisons to the flu decreased public health compliance and thereby increased COVID19 deaths (Brooks, 2020).

Of course, individual responses to public health officials' recommendations were mixed. While many people complied with the recommendations, conspiracy theories and fake news about COVID19 may have reduced the perceived threat of the pandemic, especially among those who were less reflective (Alper, Bayrak, & Yilmaz, 2020; Greene & Murphy, 2020). Also, some political blocs protested governments' attempts to stop the spread of COVID19 (Fig. 2) in the United Kingdom (Picheta, 2020) and the United States (Gillespie & Hudak, 2020; Miolene, 2020). Further, some religious groups in Germany, South Korea, and the United States continued to hold crowded services despite contrary guidance (Boston, 2020; Newberry, 2020; Rashid, 2020).

Fig. 2.

Public protests in response to government restrictions in Hartford, USA (left; Miolene, 2020) and London, UK (right; Picheta, 2020).

Why might otherwise life-affirming people resist easy-to-adopt and expert-recommended practices when so many lives have been lost and so many more lives are at stake? This introduction suggests that at least three factors are relevant: the content of messaging (e.g., individual vs. statistical victims), the receivers' reasoning (e.g., more or less reflective), and the receivers' prior philosophical beliefs (e.g., about politics). The current research investigated the impact of these factors in two pre-registered experiments.

2. The current research

Prior evidence suggests that we would find an identifiable victim effect (e.g., Jenni & Loewenstein, 1997) on compliance with public health recommendations: compliance with public health recommendations would be lower in response to messaging about statistical pandemic victims of COVID19—e.g., flatten the curve graphs—than messaging about identifiable pandemic victims—e.g., an image of someone in the hospital on a respirator. However, some have suggested that comparing COVID19 to the common flu may have reduced perceived threat of the pandemic and, therefore, compliance with public health recommendations (Brooks, 2020). Moreover, prior research found that message effectiveness depended on people's reasoning (e.g., Friedrich & McGuire, 2010; Lammers, Crusius, & Gast, 2020) and people's philosophical beliefs (e.g., Conway, Woodard, Zubrod, & Chan, 2020). So we controlled for the use of ‘Flu’ as well as individual differences in both reasoning and philosophical beliefs while testing for an identifiable victim effect.

We paired common public health messaging with an image of either individual or statistical victims. Both messages had two variants: a message about a flu pandemic or a message about a COVID19 pandemic. After random assignment to those four conditions, participants completed measures of intentions to comply with public health officials' recommendations (Everett, Colombatto, Chituc, Brady, & Crockett, 2020), perceived threat of COVID19 (Kachanoff, Bigman, Kapsaskis, & Gray, 2020), reasoning (Byrd, 2021a; Byrd, Gongora, Joseph, & Sirota, 2021), philosophical beliefs (Bourget & Chalmers, 2014; Byrd, 2021b; Yaden, 2020), and demographics. Only one experiment found that identifiable victims slightly improved compliance. Messages about flu (vs. COVID19) indirectly reduced compliance by reducing perceived threat of the pandemic. However, both experiments found that philosophical tendencies were far more predictive of compliance. We report all manipulations, measures, and exclusions. Our pre-registration, data, analysis code, and this manuscript are on the Open Science Framework: osf.io/5ux6f/. All experiments and analyses followed APA and IRB ethical guidelines.

3. Experiment 1

Experiment 1 primarily attempted to test the effects of individual (vs. statistical) victims and Flu (vs. COVID19) pandemic messaging on two outcomes: compliance with public health recommendations and the perceived threat of pandemics. The secondary goal of Experiment 1 was to test whether such manipulations would explain variance in compliance and perceived threat when controlling for reasoning test performance and prior philosophical commitments.

3.1. Methods

3.1.1. Participants

Prior to data collection from April 15–20, G*Power suggested that 235 participants would confer 80% power to detect a typical effect size of d = 0.408 (Gignac & Szodorai, 2016). To ensure sufficient power after exclusions and stable correlations (Schönbrodt & Perugini, 2013), we recruited 263 English-fluent adults (Białek, Muda, Stewart, Niszczota, & Pieńkosz, 2020) from the United States with at least some high school education from a single call for participants on CloudResearch (Chandler, Rozenweig, Moss, Robinson, & Litman, 2019). To ensure data quality, only participants who passed CloudResearch's SENTRY pre-study assessments were allowed to take the survey (CloudResearch, 2020). We agreed in advance to exclude those who failed an in-survey attention check (N = 17), leaving a final sample of 246 participants (165 women, mean age = 45.54, SD = 13.62, age range 19–66, 205 identified as White, 20 Black, 4 Hispanic or Latino, 8 Pacific Islander, 1 American Indian or Native American, and 25 as another ethnicity).

3.1.2. Materials

3.1.2.1. Manipulation

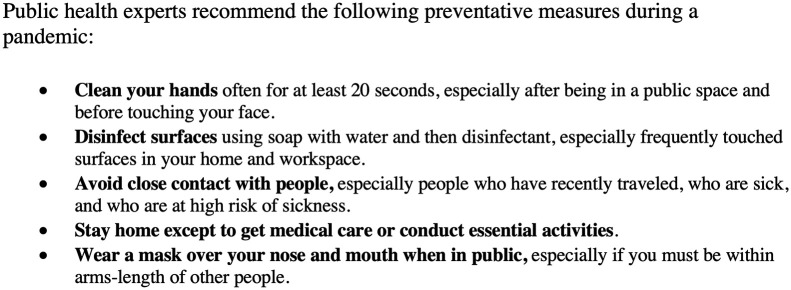

After reading five public health recommendations (Fig. 3), participants were randomly assigned to one of four conditions in a 2 (type of information: statistical or individual) × 2 (type of disease: Flu or COVID19) between-subject design (Fig. 4).

Fig. 3.

Public health recommendations from Experiments 1 and 2.

Fig. 4.

Four public health messages used in Experiments 1 and 2.

3.1.2.2. Compliance

Self-reported intentions to comply with public health recommendations were assessed with five items from a validated measure of pandemic behavioral intentions (Everett et al., 2020). Evidence suggests that scales like these predict actual social distancing behaviors (Gollwitzer, Martel, Marshall, Höhs, & Bargh, 2020). Participants rated the likelihood that they would comply with public health recommendations about hand washing, surface cleaning, physical distancing, sheltering in place, and mask wearing using a sliding scale from “extremely unlikely” to “extremely likely” with 10,000 invisible increments (0.00–100.00). The scale had excellent reliability (α = 0.88), so a single Compliance variable was computed by averaging participants' five responses to the compliance items.
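The reliability check and the averaging step described above can be sketched as follows. This is a minimal illustration, assuming the standard formula for Cronbach's α; the function names and the ratings are hypothetical and are not taken from the analysis code on the Open Science Framework.

```python
import numpy as np

def cronbach_alpha(items):
    """Cronbach's alpha for a (participants x items) array of ratings."""
    items = np.asarray(items, dtype=float)
    k = items.shape[1]                               # number of items
    item_variances = items.var(axis=0, ddof=1)       # variance of each item
    total_variance = items.sum(axis=1).var(ddof=1)   # variance of summed scores
    return k / (k - 1) * (1 - item_variances.sum() / total_variance)

def composite_compliance(items):
    """Average each participant's item ratings into one Compliance score."""
    return np.asarray(items, dtype=float).mean(axis=1)

# Hypothetical 0-100 slider ratings: four participants, five compliance items.
ratings = np.array([
    [95, 90, 100, 92, 85],
    [60, 55, 70, 65, 40],
    [100, 100, 100, 98, 97],
    [80, 70, 85, 75, 60],
])
alpha = cronbach_alpha(ratings)
compliance = composite_compliance(ratings)
```

Averaging rather than summing keeps the composite on the same 0–100 scale as the individual sliders, which makes it directly comparable to the single-item threat rating.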

3.1.2.3. Threat

Research suggests that those who violate public health recommendations see COVID19 as less threatening (De Neys et al., 2020; Hornik et al., 2020). So the perceived threat of the pandemic was also assessed with a single item: “After reading this message, how likely are you to consider COVID-19/Flu a serious threat to society?” The sliding scale for the compliance items was used for perceived threat as well.

3.1.2.4. Reflection

The cognitive reflection test comprised one logical, one verbal, and one arithmetic reflection test question (Byrd & Conway, 2019). All three items are designed to lure participants toward a particular response that, upon reflection, they can realize is incorrect (Byrd, 2021a). One example is, “If the concentration of the coffee doubles every minute and it takes four minutes for the coffee to be ready, how long would it take for the coffee to reach half of the final concentration?” (à la Frederick, 2005). Response options included correct (e.g., 3 min), lured (e.g., 2 min), and other incorrect responses in a validated multiple choice format (Sirota & Juanchich, 2018). Lured responses were summed to produce an unreflective factor (α = 0.35) and correct responses were summed to produce a reflective factor (α = 0.48). To dissociate unreflective and reflective factors, all other incorrect responses were ignored (Byrd, 2021a).
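The scoring rule described above (sum correct answers, sum lured answers, ignore other incorrect answers) can be sketched as follows. The function name, the item labels, and every answer option other than the coffee item's "3 min" and "2 min" are hypothetical placeholders.

```python
def score_reflection(responses, answer_key):
    """Count correct and lured answers; other incorrect answers are ignored."""
    correct = 0
    lured = 0
    for item, response in responses.items():
        key = answer_key[item]
        if response == key["correct"]:
            correct += 1
        elif response == key["lured"]:
            lured += 1
        # Any other response adds to neither factor.
    return {"reflective": correct, "unreflective": lured}

# Hypothetical answer key; only the coffee item's options come from the text.
answer_key = {
    "coffee": {"correct": "3 min", "lured": "2 min"},
    "item_b": {"correct": "B", "lured": "A"},
    "item_c": {"correct": "C", "lured": "A"},
}
responses = {"coffee": "2 min", "item_b": "B", "item_c": "D"}
scores = score_reflection(responses, answer_key)
```

Because other incorrect answers count toward neither sum, the two factors are not simple complements of one another, which is what allows them to be entered as separate predictors.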

3.1.2.5. Attention check

Included in the randomized order of reflection test items was an attention check that was designed to mimic a widely used reflection test question: “A bat and a ball cost $1.10 in total. If you are still reading this, do not answer this question. How much does the ball cost?” (see Frederick, 2005). The response format was identical to that of the other reflection test items.

3.1.2.6. Effective altruism

Participants also rated their agreement with the statement, “If we can prevent great harm without incurring great harm, then we should”. Responses were recorded using the aforementioned slider, except that this slider ranged from “Strongly disagree” to “Strongly agree” with “undecided” at the midpoint. This principle is adapted from a famous premise in an argument for effective altruism (Singer, 1972; see also epigraph). As a strong prescriptive norm motivated by a calculation of harmful consequences, this principle is more in line with the strict prescriptive consequentialist duties that motivate effective altruism than the imperfect prescriptive duties that motivate deontological duties (Baron & Goodwin, 2020, Section 2; Byrd & Conway, 2019, Section 4.2). For example, consider norms that favor giving to charity. Deontologists reject “culpability” for “failure to fulfill” this kind of prescription (Kant, 1797, p. 390/194), but effective altruists like Peter Singer think that we are “wrong not to do” what is prescribed by this norm (Singer, 1972, p. 235)—a difference that has been found among laypeople as well (Janoff-Bulman, Sheikh, & Hepp, 2009). So effective altruists have not only endorsed this principle when arguing for effective altruism but have done so in ways that other moral theorists would resist. Hence, the label ‘effective altruism’. Nonetheless, some notions of effective altruism seem to align with the strict consequentialist duty that seeks maximal improvement while other forms of effective altruism—like the one in this paper—align better with the scalar consequentialism that seeks net improvement (MacAskill, 2016; Norcross, 2006; Sinhababu, 2018). Of course, the history of ideas is long and diverse enough to find thinkers who do not know about or identify as effective altruists but will endorse this statement.

3.2. Results

3.2.1. Univariate analysis

To assist related research and meta-analyses, correlations between all measures across all conditions in Experiment 1 are reported in Table 1. As expected, intentions to comply with specific public health recommendations were intercorrelated and, replicating related research, average compliance with public health recommendations increased with the perceived threat of the pandemic (Jordan, Yoeli, & Rand, 2020). Further replicating other findings, compliance was higher among both women (Clark, Davila, Regis, & Kraus, 2020) and older people (Everett et al., 2020), there were ceiling effects in compliance (ibid.), and reflection test performance did not correlate with compliance (Díaz & Cova, 2020).

Table 1.

Means (M), standard deviations (SD), and correlations [with 95% confidence intervals] from Experiment 1 (N = 246).

| Variable | M | SD | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1. Average compliance | 87.52 | 17.24 | | | | | | | | | | | |
| 2. Hand washing | 91.41 | 17.32 | 0.86⁎⁎ [0.83, 0.89] | | | | | | | | | | |
| 3. Surface cleaning | 85.49 | 22.44 | 0.83⁎⁎ [0.79, 0.87] | 0.70⁎⁎ [0.63, 0.76] | | | | | | | | | |
| 4. Physical distancing | 91.11 | 17.83 | 0.85⁎⁎ [0.81, 0.88] | 0.76⁎⁎ [0.70, 0.81] | 0.65⁎⁎ [0.57, 0.72] | | | | | | | | |
| 5. Sheltering in place | 88.41 | 19.32 | 0.87⁎⁎ [0.84, 0.90] | 0.76⁎⁎ [0.70, 0.81] | 0.60⁎⁎ [0.52, 0.68] | 0.73⁎⁎ [0.66, 0.78] | | | | | | | |
| 6. Mask wearing | 81.17 | 26.14 | 0.79⁎⁎ [0.74, 0.83] | 0.50⁎⁎ [0.40, 0.59] | 0.53⁎⁎ [0.44, 0.62] | 0.52⁎⁎ [0.42, 0.60] | 0.62⁎⁎ [0.53, 0.69] | | | | | | |
| 7. Perceived societal threat of pandemic | 82.79 | 23.67 | 0.65⁎⁎ [0.58, 0.72] | 0.54⁎⁎ [0.44, 0.62] | 0.53⁎⁎ [0.43, 0.61] | 0.56⁎⁎ [0.47, 0.64] | 0.57⁎⁎ [0.48, 0.65] | 0.54⁎⁎ [0.44, 0.62] | | | | | |
| 8. Lured reflection test answers (0–3) | 1.96 | 0.93 | 0.11 [−0.01, 0.23] | 0.10 [−0.03, 0.22] | 0.11 [−0.02, 0.23] | 0.10 [−0.03, 0.22] | 0.06 [−0.07, 0.18] | 0.11 [−0.02, 0.23] | 0.07 [−0.06, 0.19] | | | | |
| 9. Correct reflection test answers (0–3) | 0.82 | 0.91 | −0.02 [−0.14, 0.11] | 0.04 [−0.09, 0.16] | −0.05 [−0.18, 0.07] | 0.00 [−0.12, 0.13] | 0.02 [−0.11, 0.14] | −0.05 [−0.18, 0.07] | −0.01 [−0.13, 0.12] | −0.88⁎⁎ [−0.91, −0.85] | | | |
| 10. Agreement with effective altruism principle | 88.53 | 18.40 | 0.55⁎⁎ [0.46, 0.64] | 0.54⁎⁎ [0.44, 0.62] | 0.42⁎⁎ [0.31, 0.52] | 0.53⁎⁎ [0.43, 0.61] | 0.52⁎⁎ [0.42, 0.60] | 0.37⁎⁎ [0.26, 0.47] | 0.47⁎⁎ [0.37, 0.56] | 0.11 [−0.02, 0.23] | 0.08 [−0.05, 0.20] | | |
| 11. Age | 44.89 | 13.59 | 0.20⁎⁎ [0.08, 0.32] | 0.19⁎⁎ [0.07, 0.31] | 0.12 [−0.01, 0.24] | 0.15⁎ [0.03, 0.27] | 0.21⁎⁎ [0.09, 0.32] | 0.17⁎⁎ [0.05, 0.29] | 0.11 [−0.02, 0.23] | 0.25⁎⁎ [0.13, 0.37] | −0.19⁎⁎ [−0.31, −0.07] | 0.20⁎⁎ [0.07, 0.31] | |
| 12. Gender (W) | 0.63 | 0.48 | 0.20⁎⁎ [0.08, 0.32] | 0.19⁎⁎ [0.07, 0.31] | 0.17⁎⁎ [0.05, 0.29] | 0.20⁎⁎ [0.07, 0.31] | 0.15⁎ [0.02, 0.27] | 0.15⁎ [0.03, 0.27] | 0.21⁎⁎ [0.09, 0.33] | 0.03 [−0.10, 0.15] | −0.01 [−0.13, 0.12] | 0.12 [−0.01, 0.24] | 0.11 [−0.01, 0.23] |

⁎ Indicates p < 0.05.

⁎⁎ Indicates p < 0.01.

3.2.2. Multi-variate analysis

Hierarchical regression tested the effects of statistical (vs. individual) victim and Flu (vs. COVID19) messaging (and their interaction) on compliance and perceived threat both with and without reasoning and philosophy control variables (Table 2). The two message groups were contrast coded (−0.5, 0.5) rather than dummy coded (0, 1), so that we could report main effects rather than only simple effects (Brehm & Alday, 2020). The two statistical victim messages were positive, and the two identifiable victim messages were negative. Similarly, the two Flu messages were positive and the two COVID19 messages were negative. Both sets of message codes were also mean-centered to test interactions (Schielzeth, 2010).
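The difference between the two coding schemes can be illustrated with a small sketch. It fits the saturated 2 × 2 model to four hypothetical cell means (invented for illustration, not taken from the data) and assumes equal cell sizes, in which case the (−0.5, 0.5) codes are already mean-centered. The function name `fit_2x2` is also hypothetical.

```python
import numpy as np

def fit_2x2(cell_means, low, high):
    """Solve the saturated 2x2 model for the cell means under a given coding."""
    rows, y = [], []
    for (victim, disease), mean in cell_means.items():
        v = high if victim == "statistical" else low   # statistical = high code
        d = high if disease == "flu" else low           # flu = high code
        rows.append([1.0, v, d, v * d])                 # intercept, two effects, interaction
        y.append(mean)
    coefs = np.linalg.solve(np.array(rows), np.array(y))
    return dict(zip(["intercept", "victim", "disease", "interaction"], coefs))

# Hypothetical mean compliance in each of the four conditions.
cell_means = {
    ("individual", "covid19"): 90.0,
    ("statistical", "covid19"): 86.0,
    ("individual", "flu"): 88.0,
    ("statistical", "flu"): 80.0,
}
contrast = fit_2x2(cell_means, -0.5, 0.5)  # contrast coding
dummy = fit_2x2(cell_means, 0.0, 1.0)      # dummy coding
```

With these numbers, the contrast-coded victim coefficient is −6, the difference between the statistical and individual marginal means (83 vs. 89), and the intercept is the grand mean of 86. The dummy-coded victim coefficient is −4, the statistical-minus-individual difference within the COVID19 messages only. The interaction coefficient is −4 under both codings.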

Table 2.

Hierarchical regression models predicting public health recommendation compliance and perceived threat of pandemic in Experiment 1 (N = 246).

| Predictor | Messaging-only: Compliance | Messaging-only: Threat | Messaging + Reasoning: Compliance | Messaging + Reasoning: Threat | Messaging + Reasoning + Philosophy: Compliance | Messaging + Reasoning + Philosophy: Threat |
|---|---|---|---|---|---|---|
| Stat. (vs. Ind.) Victim | −0.11† | −0.13⁎ | −0.12† | −0.14⁎ | −0.04 | −0.08 |
| Flu (vs. COVID19) Pandemic | −0.02 | −0.20⁎⁎ | −0.04 | −0.21⁎⁎⁎ | −0.02 | −0.20⁎⁎⁎ |
| Interaction | 0.06 | 0.02 | 0.04 | 0.01 | 0.05 | 0.02 |
| Lured Refl. Test Answers | | | 0.45⁎⁎ | 0.34⁎ | 0.00 | −0.04 |
| Correct Refl. Test Answers | | | 0.39⁎⁎ | 0.31⁎ | −0.05 | −0.07 |
| Effective Altruism | | | | | 0.55⁎⁎⁎ | 0.47⁎⁎⁎ |
| Combined Multiple-R² | 0.02 | 0.06⁎⁎ | 0.06⁎ | 0.08⁎⁎⁎ | 0.32⁎⁎⁎ | 0.27⁎⁎⁎ |
| Increase in Multiple-R² | | | ⁎⁎ | ⁎ | ⁎⁎⁎ | ⁎⁎⁎ |

Standardized regression coefficients.

† p < 0.1.

⁎ p < 0.05.

⁎⁎ p < 0.01.

⁎⁎⁎ p < 0.001.

3.2.2.1. Messaging

The messaging-only model of compliance did not detect reliable messaging effects, although compliance was marginally lower for the statistical victim messages than for the identifiable victim messages, β = −0.11, F(1,242) = 3.13, p = 0.07. The messaging-only model of perceived threat found that perceived threat was slightly lower for messages about statistical victims than for messages about individual victims (β = −0.13, F(1, 242) = 4.67, p = 0.03) and for the flu pandemic messages than for the COVID19 pandemic messages (β = −0.20, F(1, 242) = 10.27, p = 0.002).

3.2.2.2. Messaging and reasoning

The second models predicted significantly more variance, but did not detect effects of messaging on compliance. Nonetheless, perceived threat was slightly lower for messages about statistical (vs. identifiable) victims, β = −0.14, F(1,240) = 5.05, p = 0.03, and for messages about Flu (vs. COVID19), β = −0.21, F(1,240) = 11.94, p < 0.001. These messaging and reasoning models also found that both correct and lured reflection test answers predicted higher compliance and threat (0.31 ≤ β ≤ 0.45, 0.001 < p < 0.02). This suggests that the relationship between reasoning and pandemic responses may be explained by another variable. A caveat is that the reasoning tests had very low reliability, so these results should be interpreted with caution.

3.2.2.3. Messaging, reasoning, and philosophy

The third models confirmed that correlations between reflection test performance and pandemic attitudes were no longer detected after endorsement of the effective altruist principle was included. In fact, effective altruism was the strongest predictor of compliance with public health recommendations (β = 0.55, F(1, 239) = 89.64, p < 0.001) and perceived threat (β = 0.47, F(1,239) = 61.14, p < 0.001). Moreover, adding the effective altruism variable to this full model allowed it to predict significantly more variance than the prior model. After controlling for effective altruism, messaging about Flu still reduced perceived threat compared to messaging about COVID19 (β = −0.19, F(1,239) = 12.36, p < 0.001), but the identifiable victim effect was no longer detected (p > 0.17).
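The claim that a later model predicts significantly more variance than the prior model rests on an F test of the increase in R² between nested models. The sketch below assumes the standard formula for that test and plugs in the rounded R² values from Table 2. The function names are hypothetical, and the predictor counts (five in the messaging and reasoning model, six in the full model) are inferred from the rows of Table 2.

```python
import numpy as np

def r_squared(X, y):
    """R^2 of an ordinary least squares fit of y on X (intercept added here)."""
    X = np.column_stack([np.ones(len(y)), X])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    residuals = y - X @ beta
    ss_res = residuals @ residuals
    ss_tot = ((y - y.mean()) ** 2).sum()
    return 1 - ss_res / ss_tot

def r2_change_f(r2_reduced, r2_full, n, k_reduced, k_full):
    """F statistic and degrees of freedom for an R^2 increase between nested models."""
    df1 = k_full - k_reduced   # number of predictors added
    df2 = n - k_full - 1       # residual degrees of freedom of the fuller model
    f = ((r2_full - r2_reduced) / df1) / ((1 - r2_full) / df2)
    return f, df1, df2

# Rounded R^2 values from Table 2; N = 246.
f_compliance, df1, df2 = r2_change_f(0.06, 0.32, n=246, k_reduced=5, k_full=6)
f_threat, _, _ = r2_change_f(0.08, 0.27, n=246, k_reduced=5, k_full=6)
```

Under these assumptions the residual degrees of freedom come out to 239, matching the F(1, 239) tests reported above. The resulting F values (roughly 91 for compliance and 62 for threat) are close to the reported 89.64 and 61.14; small gaps are expected because the R² inputs are rounded to two decimals.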

3.3. Discussion

Experiment 1 did not find strong support for the identifiable victim effect: participants who received messages about individual victims were not reliably more likely to comply with public health recommendations than participants who received messages about statistical victims. Rather, we found that public health compliance and perceived threat of pandemics were better explained by prior philosophical beliefs than by messaging or reasoning. However, the low reliability of reflection test responses could have obscured the actual role of reasoning in health recommendation compliance. So another pre-registered experiment attempted to replicate and clarify these results.

4. Experiment 2

The second experiment was designed to replicate and clarify the findings of Experiment 1. By tripling the sample size, we obtained enough statistical power to include additional measures that had the potential to clarify the role of reasoning and prior philosophical beliefs in people's attitudes and intentions about pandemics. This higher powered experiment was also able to test the replicability of the marginal findings from Experiment 1 and identify potential mediators.

4.1. Methods

4.1.1. Participants

Prior to data collection from May 7–11, G*Power suggested that 741 participants would confer 95% power to detect a typical effect size of d = 0.408 when employing hierarchical regression with 19 predictors (Gignac & Szodorai, 2016). To ensure sufficient statistical power after excluding participants who failed an attention check, we recruited 820 English-fluent adults from the United States with at least some high school education in a single call for participants using the CloudResearch pre-study assessment. We agreed in advance to exclude those who failed an in-survey attention check (N = 68), leaving a final sample of 752 participants (474 women, mean age = 41.72, SD = 12.55, age range 18–66, 597 identified as White, 42 Hispanic or Latino, 40 Black, 11 Pacific Islander, 10 American Indian or Native American, and 50 as another ethnicity).

4.1.2. Materials

4.1.2.1. Experiment 1 materials

The conditions, compliance items (α = 0.91), threat question, and endorsement of the effective altruism principle from Experiment 1 were reused in Experiment 2.

4.1.2.2. Philosophy

Experiment 2 asked participants about their stances on various philosophical issues in random order. Responses were recorded on the sliding scales used in Experiment 1. These items are labeled ‘philosophical’ because they concern topics that are often considered philosophical, broadly: morality, politics, metaphysics, and epistemology.

4.1.2.2.1. Morality

In addition to the effective altruist principle from Experiment 1, participants also reported their endorsement of instrumental harm (Kahane et al., 2018) by answering another moral question, “Can it be acceptable to cause some harm to one person in order to prevent greater harm to more people?” on a scale from “Never” to “Always” with “Sometimes” at the midpoint.

4.1.2.2.2. Politics

Participants reported both their social and economic political orientations by answering, “How liberal (left-wing) or conservative (right-wing) are you on social/economic issues?” on a scale from “Very liberal” to “Very conservative” with “Neither” at the midpoint. Participants also indicated their preferences for libertarianism or egalitarianism by answering, “What is more important in a good society: equality or liberty?” (à la Bourget & Chalmers, 2014) on a sliding scale from “Equality” to “Liberty”.

4.1.2.2.3. Metaphysics & epistemology

Questions about metaphysics and epistemology were also adapted (à la Bourget & Chalmers, 2014). Agreement with scientific realism was measured by asking for participants' agreement with, “The best scientific theories are probably true” on a scale from “Strongly disagree” to “Strongly agree” with “Undecided” at the midpoint. Scientific anti-realism was gauged by asking for agreement with, “The methods of science cannot accurately reveal reality” on the aforementioned scale. Participants' endorsement of naturalism was determined by their agreement with, “There are no supernatural forces, causes, or beings” on the same scale. The same scale was used to determine participants' endorsement of theism (i.e., that “A God exists”). Participants also reported their view of free will's relationship to determinism by answering, “If every event in the universe is determined, then how likely is it that people have free will?” (ibid.) on a sliding scale from “Not at all” to “Totally” with “Somewhat” at the midpoint.

4.1.2.2.4. Religiosity

Participants were asked to report their religiosity in response to the following question (Levine et al., 2019): “If religiosity is defined as participating with an organized religion, then to what degree do you consider yourself religious?” using the same sliding scale.

4.1.2.3. Reflection

To improve upon the reliability of the reflection test in the first experiment, we reused only one reflection test item and used five other reflection test questions (Byrd & Conway, 2019), doubling the number of reflection items in the second experiment: two logical, two verbal, and two arithmetic reflection test items. An example of one question is, “If it takes 3 nurses 3 minutes to test 3 patients for the flu, how long would it take 90 nurses to test 90 patients for the flu?” Response options included correct (e.g., 3 minutes), lured (e.g., 90 minutes), and other incorrect responses in a validated multiple choice format (Sirota & Juanchich, 2018). As in Experiment 1, the reflective factor was computed by summing correct responses (α = 0.3) and the unreflective factor was computed by summing lured responses (α = 0.21). To dissociate reflective from unreflective factors, all other incorrect responses were ignored. Contrary to our expectations, the reliability of the expanded reflection test answers was lower than in Experiment 1.

4.1.2.4. Numeracy

Participants also completed the four-item Berlin Numeracy Test (Cokely, Galesic, Schulz, Ghazal, & Garcia-Retamero, 2012). An example of one item is as follows: “Imagine we are throwing a five-sided die 50 times. On average, out of these 50 throws how many times would this five-sided die show an odd number (1, 3 or 5)?” Numeracy was computed by summing correct responses (α = 0.22). The reliability of the numeracy test responses was also remarkably low.

4.2. Results

4.2.1. Univariate analysis

To further assist related investigations, correlations between all measures from Experiment 2 are reported in Table 3. Replicating Experiment 1, intentions to comply with specific public health recommendations were highly intercorrelated, average compliance with public health recommendations increased with the perceived threat of the pandemic, and reflection test performance did not correlate with compliance. Consistent with Experiment 1 and replicating past work, age correlated with public health recommendation compliance and there were ceiling effects in compliance (Everett et al., 2020). However, in contrast with that work, neither gender nor ethnicity correlated with compliance.

Table 3.

Means (M), standard deviations (SD), and correlations between all measures in Experiment 2 (N = 752).

| Variable | M | SD | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 | 16 | 17 | 18 | 19 | 20 | 21 | 22 | 23 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1. Average compliance | 85.22 | 19.01 | | | | | | | | | | | | | | | | | | | | | | | |
| 2. Hand washing | 89.34 | 18.20 | 0.85⁎⁎ | | | | | | | | | | | | | | | | | | | | | | |
| 3. Surface cleaning | 83.73 | 22.64 | 0.87⁎⁎ | 0.76⁎⁎ | | | | | | | | | | | | | | | | | | | | | |
| 4. Physical distancing | 86.94 | 21.39 | 0.85⁎⁎ | 0.73⁎⁎ | 0.70⁎⁎ | | | | | | | | | | | | | | | | | | | | |
| 5. Sheltering in place | 83.82 | 22.80 | 0.88⁎⁎ | 0.66⁎⁎ | 0.65⁎⁎ | 0.65⁎⁎ | | | | | | | | | | | | | | | | | | | |
| 6. Mask wearing | 82.26 | 25.61 | 0.85⁎⁎ | 0.57⁎⁎ | 0.63⁎⁎ | 0.60⁎⁎ | 0.78⁎⁎ | | | | | | | | | | | | | | | | | | |
| 7. Perceived societal threat of pandemic | 81.15 | 24.68 | 0.77⁎⁎ | 0.57⁎⁎ | 0.63⁎⁎ | 0.59⁎⁎ | 0.74⁎⁎ | 0.73⁎⁎ | | | | | | | | | | | | | | | | | |
| 8. Lured Reflection Test Answers | 3.38 | 1.30 | 0.02 | 0.03 | 0.06 | 0.01 | 0.01 | −0.01 | −0.01 | | | | | | | | | | | | | | | | |
| 9. Correct Reflection Test Answers | 1.87 | 1.24 | −0.01 | 0.01 | −0.05 | 0.01 | −0.01 | 0.01 | 0.00 | −0.79⁎⁎ | | | | | | | | | | | | | | | |
| 10. Numeracy | 1.21 | 0.96 | 0.04 | 0.06 | 0.00 | 0.04 | 0.03 | 0.05 | 0.01 | −0.16⁎⁎ | 0.21⁎⁎ | | | | | | | | | | | | | | |
| 11. Effective Altruism | 84.82 | 19.93 | 0.51⁎⁎ | 0.48⁎⁎ | 0.43⁎⁎ | 0.40⁎⁎ | 0.47⁎⁎ | 0.41⁎⁎ | 0.43⁎⁎ | −0.08⁎ | 0.10⁎⁎ | 0.03 | | | | | | | | | | | | | |
| 12. Instrumental Harm | 48.99 | 27.29 | 0.00 | −0.04 | −0.01 | 0.02 | −0.01 | 0.04 | 0.05 | −0.03 | 0.02 | 0.02 | 0.01 | | | | | | | | | | | | |
| 13. Liberty (vs. Equality) | 51.70 | 30.98 | −0.20⁎⁎ | −0.13⁎⁎ | −0.17⁎⁎ | −0.15⁎⁎ | −0.20⁎⁎ | −0.21⁎⁎ | −0.18⁎⁎ | 0.02 | −0.05 | −0.05 | −0.14⁎⁎ | 0.18⁎⁎ | | | | | | | | | | | |
| 14. Social Conservatism | 52.64 | 31.38 | −0.13⁎⁎ | −0.10⁎⁎ | −0.09⁎ | −0.07 | −0.14⁎⁎ | −0.14⁎⁎ | −0.11⁎⁎ | 0.10⁎⁎ | −0.13⁎⁎ | −0.11⁎⁎ | −0.16⁎⁎ | 0.09⁎ | 0.34⁎⁎ | | | | | | | | | | |
| 15. Economic Conservatism | 54.68 | 30.38 | −0.14⁎⁎ | −0.11⁎⁎ | −0.11⁎⁎ | −0.06 | −0.17⁎⁎ | −0.16⁎⁎ | −0.13⁎⁎ | 0.07⁎ | −0.09⁎⁎ | −0.05 | −0.13⁎⁎ | 0.12⁎⁎ | 0.33⁎⁎ | 0.83⁎⁎ | | | | | | | | | |
| 16. Naturalism | 44.29 | 34.95 | 0.08⁎ | 0.01 | 0.05 | 0.05 | 0.09⁎⁎ | 0.10⁎⁎ | 0.11⁎⁎ | 0.02 | −0.04 | −0.02 | 0.08⁎ | 0.15⁎⁎ | −0.01 | −0.01 | 0.01 | | | | | | | | |
| 17. Scientific Realism | 69.94 | 24.79 | 0.40⁎⁎ | 0.29⁎⁎ | 0.35⁎⁎ | 0.31⁎⁎ | 0.36⁎⁎ | 0.40⁎⁎ | 0.40⁎⁎ | −0.08⁎ | 0.08⁎ | 0.05 | 0.37⁎⁎ | 0.12⁎⁎ | −0.16⁎⁎ | −0.14⁎⁎ | −0.15⁎⁎ | 0.27⁎⁎ | | | | | | | |
| 18. Scientific Anti-realism | 49.06 | 30.93 | −0.06 | −0.09⁎ | −0.03 | −0.08⁎ | −0.05 | −0.03 | −0.02 | 0.14⁎⁎ | −0.13⁎⁎ | −0.05 | −0.01 | 0.19⁎⁎ | 0.19⁎⁎ | 0.29⁎⁎ | 0.28⁎⁎ | 0.15⁎⁎ | −0.06 | | | | | | |
| 19. Theism | 74.82 | 33.45 | 0.13⁎⁎ | 0.11⁎⁎ | 0.12⁎⁎ | 0.10⁎⁎ | 0.13⁎⁎ | 0.10⁎⁎ | 0.07⁎ | 0.15⁎⁎ | −0.16⁎⁎ | −0.12⁎⁎ | 0.08⁎ | 0.05 | 0.13⁎⁎ | 0.33⁎⁎ | 0.28⁎⁎ | −0.14⁎⁎ | −0.08⁎ | 0.33⁎⁎ | | | | | |
| 20. Compatibilism | 59.44 | 31.09 | 0.10⁎⁎ | 0.07 | 0.14⁎⁎ | 0.07 | 0.07 | 0.09⁎ | 0.11⁎⁎ | 0.09⁎ | −0.12⁎⁎ | −0.01 | −0.00 | 0.13⁎⁎ | 0.05 | 0.25⁎⁎ | 0.24⁎⁎ | 0.05 | 0.07⁎ | 0.23⁎⁎ | 0.28⁎⁎ | | | | |
| 21. Religiosity | 52.29 | 35.64 | 0.13⁎⁎ | 0.07 | 0.14⁎⁎ | 0.08⁎ | 0.13⁎⁎ | 0.13⁎⁎ | 0.15⁎⁎ | 0.14⁎⁎ | −0.15⁎⁎ | −0.07 | 0.01 | 0.20⁎⁎ | 0.13⁎⁎ | 0.37⁎⁎ | 0.31⁎⁎ | 0.03 | −0.02 | 0.32⁎⁎ | 0.67⁎⁎ | 0.34⁎⁎ | | | |
| 22. Age | 41.72 | 12.55 | 0.09⁎⁎ | 0.10⁎⁎ | 0.08⁎ | 0.14⁎⁎ | 0.07 | 0.03 | 0.08⁎ | 0.02 | −0.04 | −0.09⁎ | 0.10⁎⁎ | −0.13⁎⁎ | 0.17⁎⁎ | 0.15⁎⁎ | 0.19⁎⁎ | −0.01 | −0.01 | 0.04 | 0.10⁎⁎ | 0.09⁎⁎ | 0.02 | | |
| 23. Gender (W) | 0.63 | 0.48 | −0.00 | 0.04 | 0.00 | 0.01 | −0.01 | −0.04 | 0.01 | −0.01 | −0.03 | −0.04 | 0.03 | −0.20⁎⁎ | −0.06 | −0.09⁎ | −0.08⁎ | −0.14⁎⁎ | −0.15⁎⁎ | −0.08⁎ | −0.04 | −0.12⁎⁎ | −0.18⁎⁎ | 0.05 | |
| 24. White Ethnicity | 0.79 | 0.40 | 0.02 | 0.02 | 0.05 | 0.04 | −0.02 | 0.00 | 0.03 | 0.02 | 0.00 | 0.00 | 0.01 | 0.03 | 0.07⁎ | 0.10⁎⁎ | 0.10⁎⁎ | −0.05 | 0.00 | −0.01 | 0.03 | −0.02 | 0.04 | 0.20⁎⁎ | −0.04 |

Univariate analysis also found common correlations. Reflection test performance correlated with numeracy (Attali & Bar-Hillel, 2020; Białek, Bergelt, Majima, & Koehler, 2019; Białek & Sawicki, 2018; Erceg, Galic, & Ružojčić, 2020; Patel, 2017; Szaszi, Szollosi, Palfi, & Aczel, 2017), morality (Byrd & Conway, 2019; Patil et al., 2018; Reynolds, Byrd, & Conway, 2020), political orientation (Deppe et al., 2015; Yilmaz, Saribay, & Iyer, 2019), and theism (Gervais et al., 2018; Pennycook, Ross, Koehler, & Fugelsang, 2016). Further, older people were more conservative (Cornelis, Hiel, Roets, & Kossowska, 2009; Luberti, Blake, & Brooks, 2020) and women were less likely to endorse instrumental harm (Białek, Paruzel-Czachura, & Gawronski, 2019; Friesdorf, Conway, & Gawronski, 2015; Muda, Niszczota, Białek, & Conway, 2018). These correlations can serve as a robustness check of our dataset.

Besides the perceived threat of the pandemic, the strongest and most reliable predictors of compliance with public health recommendations were endorsement of the effective altruism principle and scientific realism. Theists were also modestly more likely to comply. Non-compliance was best predicted by endorsing liberty (relative to equality). The other moral, political, metaphysical, and religious measures did not reliably correlate with compliance.

4.2.2. Multivariate analysis

Hierarchical regression examined the effects of statistical (vs. individual) victim and Flu (vs. COVID19) information while controlling for correlations with the second experiment's reasoning and philosophical measures (Table 4). Message coding was identical to Experiment 1.

Table 4.

Hierarchical regression models predicting public health recommendation compliance and perceived threat of pandemic in Experiment 2 (N = 752).

| | Messaging-only | | Messaging + Reasoning | | Messaging + Reasoning + Philosophy | |

|---|---|---|---|---|---|---|

| | Compliance | Threat | Compliance | Threat | Compliance | Threat |

| Stat. (vs. Ind.) Victim | 0.04 | 0.04 | 0.04 | 0.04 | 0.02 | −0.02 |

| Flu (vs. COVID19) Pandemic | −0.01 | −0.13⁎⁎⁎ | −0.01 | −0.13⁎⁎⁎ | 0.00 | −0.12⁎⁎⁎ |

| Interaction | 0.04 | 0.07† | 0.04 | 0.07⁎ | 0.01 | 0.05 |

| Lured Refl. Test Answers | | | 0.04 | −0.00 | 0.04 | 0.00 |

| Correct Refl. Test Answers | | | 0.02 | 0.00 | −0.03 | −0.03 |

| Numeracy | | | 0.04 | 0.01 | 0.04 | −0.00 |

| Effective Altruism | | | | | 0.39⁎⁎⁎ | 0.31⁎⁎⁎ |

| Instrumental Harm | | | | | −0.02 | −0.00 |

| Liberty (vs. Equality) | | | | | −0.09⁎⁎ | −0.09⁎⁎ |

| Social Conservatism | | | | | −0.01 | 0.00 |

| Economic Conservatism | | | | | −0.07 | −0.09 |

| Naturalism | | | | | 0.00 | 0.00 |

| Scientific Realism | | | | | 0.24⁎⁎⁎ | 0.25⁎⁎⁎ |

| Scientific Anti-realism | | | | | −0.08⁎ | −0.03 |

| Theism | | | | | 0.07† | −0.04 |

| Compatibilism | | | | | 0.06⁎ | 0.06† |

| Religiosity | | | | | 0.12⁎⁎ | 0.20⁎⁎⁎ |

| Combined Multiple-R2 | 0.00 | 0.03*** | 0.01 | 0.03** | 0.36*** | 0.32*** |

| Increase in Multiple-R2 | – | – | *** | *** | ||

Standardized regression coefficients.

† p < 0.1.

⁎ p < 0.05.

⁎⁎ p < 0.01.

⁎⁎⁎ p < 0.001.

4.2.2.1. Messaging

The messaging-only models did not detect effects of messaging on compliance or of individual (vs. statistical) victim information on perceived threat. However, one messaging-only model did find that perceived threat of pandemic was slightly lower for the messages about Flu than the messages about COVID19 (β = −0.13, F(1, 748) = 13.97, p < 0.001). The same model also found a marginal interaction effect of Flu (vs. COVID19) and statistical (vs. individual) victims on perceived threat (β = 0.07, F(1, 748) = 3.94, p = 0.047).

4.2.2.2. Messaging and reasoning

The second set of models, which added the cognition measures, found the same effects of messaging as the messaging-only models while controlling for reflection and numeracy test performance. Neither reflection nor numeracy test performance was related to compliance or threat in these models. The low replicability of the effects of reasoning may be attributable to the surprisingly low reliability of its measurement.

4.2.2.3. Messaging, reasoning, and philosophy

The third models added the battery of philosophical questions. Replicating Experiment 1, endorsement of effective altruism was the strongest predictor of compliance with public health recommendations (β = 0.39, F(1, 734) = 141.34, p < 0.001) and perceived threat (β = 0.31, F(1, 734) = 80.68, p < 0.001). In order of strength, the remaining philosophical predictors of both compliance and threat were scientific realism (β = 0.24–0.25, F(1, 734) = 49.17–52.46, p < 0.001) and religiosity (β = 0.12–0.20, F(1, 734) = 8.12–19.97, p < 0.005). The only predictor of both non-compliance and reduced threat was relative preference for liberty over equality (β = −0.09, F(1, 734) = 6.69–7.61, p = 0.006–0.01). Compliance with public health recommendations decreased slightly as endorsement of scientific anti-realism increased (β = −0.08, F(1, 734) = 6.19, p = 0.01), but increased marginally as compatibilism about free will increased (β = 0.06, F(1, 734) = 3.96, p = 0.046). After controlling for correlations with philosophy and reasoning, the only detected effect of messaging was a reduction of the perceived threat by messages about Flu relative to messages about COVID19 (β = −0.17, F(1, 734) = 14.61, p < 0.001). The interaction effect on threat from the prior models was not detected in the third models.
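As a rough illustration of how the step from the second to the third model can be evaluated, the sketch below computes the standard F statistic for an increase in R² between nested regression models. It uses the rounded compliance R² values from Table 4 (0.01 and 0.36), N = 752, and the predictor counts implied by the table (3 messaging, 3 reasoning, and 11 philosophy terms). The function name `r2_change_f` is hypothetical, and because the inputs are rounded, the result only approximates whatever the authors' software reported.

```python
def r2_change_f(r2_reduced, r2_full, n_obs, k_full, k_added):
    """F statistic for the R^2 increase when k_added predictors join a nested model.

    k_full is the number of predictors in the larger model (intercept excluded).
    """
    df_resid = n_obs - k_full - 1
    f_stat = ((r2_full - r2_reduced) / k_added) / ((1.0 - r2_full) / df_resid)
    return f_stat, k_added, df_resid


# Compliance models in Table 4: model 2 (6 predictors) vs. model 3 (17 predictors).
f_stat, df1, df2 = r2_change_f(
    r2_reduced=0.01, r2_full=0.36, n_obs=752, k_full=17, k_added=11
)
print(f"F({df1}, {df2}) = {f_stat:.2f}")
```

The residual degrees of freedom that fall out of this calculation (734) match the F(1, 734) tests reported for the third models, which suggests the predictor count assumed here is consistent with the text.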

4.2.2.4. Mediation analysis

To clarify our conclusions, we tested whether and how the control variables in the multivariate analyses were causally affected by our treatments (Angrist & Pischke, 2008) and whether the outcome variables were colliders (Pearl, 2009), impacted not just by direct effects of our treatments, but by indirect effects through our control variables. To do this, we used a GLM mediation model with 10,000 bootstrap repetitions in Jamovi (Sahin & Aybek, 2019) according to the recommendations of Preacher and Hayes (2004).
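The general idea of a bootstrapped indirect effect can be sketched in a few lines of standard-library Python. This is not the Jamovi procedure itself: it is a minimal single-mediator version on synthetic data, with hypothetical function names (`indirect_effect`, `bootstrap_ci`), made-up path strengths, and fewer resamples than the 10,000 used in the paper. The indirect effect is the product of the treatment-to-mediator slope and the mediator-to-outcome slope (holding treatment fixed), and its confidence interval comes from percentiles of the resampled products.

```python
import random


def _cov(u, v):
    """Sample covariance of two equal-length sequences."""
    mu, mv = sum(u) / len(u), sum(v) / len(v)
    return sum((a - mu) * (b - mv) for a, b in zip(u, v)) / (len(u) - 1)


def indirect_effect(x, m, y):
    """Product-of-coefficients estimate a*b for the path x -> m -> y."""
    sxx, smm, sxm = _cov(x, x), _cov(m, m), _cov(x, m)
    sxy, smy = _cov(x, y), _cov(m, y)
    a = sxm / sxx  # slope of mediator on treatment
    b = (smy * sxx - sxy * sxm) / (smm * sxx - sxm ** 2)  # slope of y on m, x held fixed
    return a * b


def bootstrap_ci(x, m, y, reps=1000, level=0.95, seed=0):
    """Percentile bootstrap confidence interval for the indirect effect."""
    rng = random.Random(seed)
    n = len(x)
    draws = []
    for _ in range(reps):
        idx = [rng.randrange(n) for _ in range(n)]  # resample cases with replacement
        draws.append(indirect_effect([x[i] for i in idx],
                                     [m[i] for i in idx],
                                     [y[i] for i in idx]))
    draws.sort()
    lo = draws[int((1 - level) / 2 * reps)]
    hi = draws[int((1 + level) / 2 * reps) - 1]
    return lo, hi


# Synthetic illustration only: a binary treatment lowers a mediator,
# and the mediator raises the outcome (all values are invented).
rng = random.Random(1)
x = [i % 2 for i in range(400)]
m = [-1.0 * xi + rng.gauss(0, 1) for xi in x]
y = [0.5 * xi + 0.7 * mi + rng.gauss(0, 1) for xi, mi in zip(x, m)]

est = indirect_effect(x, m, y)
lo, hi = bootstrap_ci(x, m, y)
print(f"indirect effect = {est:.3f}, 95% CI [{lo:.3f}, {hi:.3f}]")
```

In this toy setup the treatment has a positive direct effect and a negative indirect effect, which is the same qualitative pattern the mediation analysis reports for Flu (vs. COVID19) messaging.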

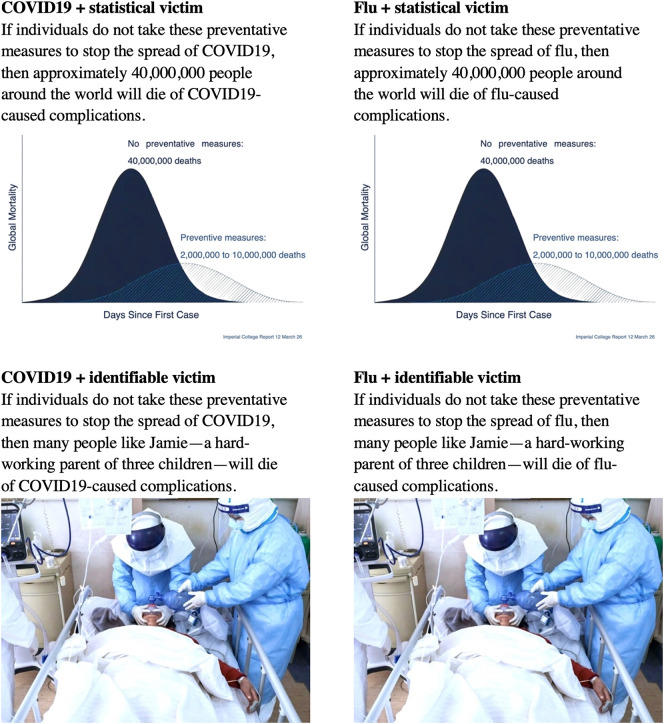

No effects of statistical (vs. identifiable) victims on any control or outcome variables were detected. However, direct and indirect effects of Flu (vs. COVID19) messaging on compliance were detected. Flu messaging directly increased compliance relative to COVID19 messaging, β = 0.09, p < 0.001, but indirectly reduced compliance by reducing perceived threat, β = −0.10, p < 0.001. No other direct or indirect effects on compliance were detected.

Nonetheless, component relationships of the mediation paths were detected. Messaging about Flu (vs. COVID19) had a small negative effect on instrumental harm, β = −0.08, p < 0.05. No other component effects of the treatments were detected. However, most of the component relationships from the hierarchical regression analyses were detected in the mediation analysis: compliance was predicted by effective altruism (β = 0.21, p < 0.00001), scientific realism (β = 0.08, p < 0.01), scientific anti-realism (β = −0.07, p < 0.01), and theism (β = 0.11, p < 0.00001).
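The way the component paths combine into the indirect and total effects can be checked with simple arithmetic on the completely standardized coefficients in Table A1. The sketch below is only a consistency check on those published, rounded values; the variable names are hypothetical. It multiplies the two component paths through perceived threat and then adds the direct effect and the remaining (undetected) indirect effects for Flu (vs. COVID19) messaging.

```python
# Completely standardized coefficients copied from Table A1 (Flu vs. COVID19 rows).
a_flu_to_threat = -0.134          # component: Flu => perceived threat
b_threat_to_compliance = 0.714    # component: perceived threat => compliance
direct_flu_to_compliance = 0.091  # direct: Flu => compliance

# Indirect effect through perceived threat is the product of its two components.
indirect_via_threat = a_flu_to_threat * b_threat_to_compliance

# Remaining Flu indirect effects, in table order from Reflection to Religiosity.
other_indirect = [0.000, 0.001, 0.000, -0.009, 0.002, 0.002, 0.001,
                  0.001, 0.000, -0.002, 0.004, -0.002, -0.003, 0.000]

total = direct_flu_to_compliance + indirect_via_threat + sum(other_indirect)

print(f"indirect via threat = {indirect_via_threat:.3f}")  # table reports -0.096
print(f"approximate total   = {total:.3f}")                # table reports -0.009
```

The opposing signs of the direct and indirect paths explain why the total effect of Flu messaging on compliance is close to zero even though both component effects were detected.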

So, as Fig. 5 shows, compliance was not a collider and our control variables were not substantially impacted by our treatments. The full mediation model can be found in Table A1.

Fig. 5.

Direct effects (wider lines) and indirect effects (thinner lines) of the two manipulations on compliance with public health recommendations in Experiment 2 (N = 750). Solid lines represent an indirect effect. Dashed lines indicate undetected indirect effects. Dark lines and borders represent relationships with p-values less than 0.05 and light lines represent all other p-values. Line values are completely standardized effect sizes. ⁎p < 0.05, ⁎⁎p < 0.01, ⁎⁎⁎p < 0.001, ⁎⁎⁎⁎p < 0.0001, ⁎⁎⁎⁎⁎p < 0.00001.

5. General discussion

During the COVID19 pandemic, we investigated the predictors of compliance with public health recommendations. We considered both message and receiver, testing messages' depiction of victims (individuals vs. statistics) and mention of disease (flu vs. COVID19) as well as receivers' reasoning and philosophical beliefs. Messaging that mentioned Flu (rather than COVID19) indirectly reduced compliance by reducing the perceived threat of the pandemic. However, messaging about identifiable victims (rather than statistical victims) only marginally increased compliance in just one experiment, an effect that was no longer detected after introducing a more potent predictor of compliance: beliefs about whether we ought to prevent harm if we can do so without incurring as much harm.

Both experiments found the best predictors of complying with public health recommendations and the perceived threat of pandemics were prior philosophical beliefs about morality and science. Moreover, preferring liberty to equality was the best direct predictor of non-compliance: the more that individuals valued liberty over equality, the more they reported being likely to flout public health recommendations. Nonetheless, aligning with findings from other countries (De Neys et al., 2020; Kittel, Kalleitner, & Schiestl, 2021), perceived threat of the pandemic was also predictive of complying with public health recommendations. So more important than strategic public health messaging may be the public's prior philosophical beliefs and perceptions.

5.1. Identifiable victim effect

One may wonder why we did not reliably detect identifiable victim effects in the present experiments given the history and recency of finding such effects (Bergh & Reinstein, 2020). We offer two responses to this curiosity: equivalence testing and alternative explanations.

5.1.1. Equivalence testing

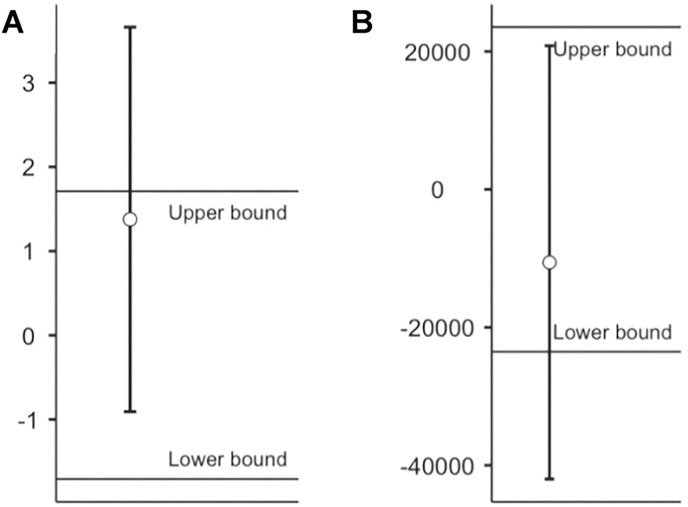

A reviewer asked for equivalence tests of a real zero effect. Rather than compare the observed effect to zero, equivalence tests examine whether observed effects are statistically smaller than the smallest effect size of interest. If they are, then the investigated effect is considered so small that it is practically equivalent to zero (Seaman & Serlin, 1998). Lee and Feeley (2016) found a meta-analytic identifiable victim effect size (d) 95% confidence interval of 0.09 to 0.011 from 41 experiments. So we employed equivalence tests on the higher-powered experiment (2) in Jamovi using that meta-analytic smallest effect size of interest: a lower bound of d = −0.09 and an upper bound of d = 0.09 (Lakens, 2017).

As in prior analyses, we did not detect a main identifiable victim effect, t(747) = 0.992, p = 0.321. Nonetheless, the observed effect was larger than the lower bound, t(747) = 2.26, p = 0.013. However, the effect is not considered equivalent to zero because it was not smaller than the upper bound, t(747) = −0.241, p = 0.405. Further, the direction of this effect was the opposite of what was expected: compliance was higher in the group who viewed the flatten the curve graph (M = 85.95) than the group who viewed the photo of an identifiable respiratory virus victim (M = 84.58), suggesting either a “null” or “small” reverse identifiable victim effect, t (746.75) = 2.23, p = 0.01, depending on labeling (e.g., Cohen, 1988, 1.4, 2.2.3, and 11.1; Funder & Ozer, 2019). Fig. 6A illustrates this equivalence test.
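The logic of the two one-sided tests can be sketched by shifting the ordinary t statistic by each equivalence bound expressed in t units. The sketch below is a simplification under stated assumptions: it takes the reported main-effect statistic (t = 0.992), assumes 375 participants per group (the paper says "about 375"), and uses a normal approximation to the t distribution, which is reasonable with roughly 747 degrees of freedom. The function name `tost_from_t` is hypothetical, and Jamovi's own computation may differ in detail.

```python
from math import sqrt
from statistics import NormalDist


def tost_from_t(t_obs, n1, n2, d_low, d_high):
    """Two one-sided tests against equivalence bounds given in Cohen's d.

    Uses a normal approximation, so p-values are approximate for small samples.
    """
    scale = sqrt(n1 * n2 / (n1 + n2))  # converts a d bound into t units
    t_lower = t_obs - d_low * scale    # tests H0: effect <= d_low
    t_upper = t_obs - d_high * scale   # tests H0: effect >= d_high
    z = NormalDist()
    p_lower = 1 - z.cdf(t_lower)
    p_upper = z.cdf(t_upper)
    return t_lower, p_lower, t_upper, p_upper


# Assumed group sizes of 375 each; bounds of d = -0.09 and d = 0.09 from the text.
t_lower, p_lower, t_upper, p_upper = tost_from_t(0.992, 375, 375, -0.09, 0.09)
print(f"lower bound: t = {t_lower:.2f}, p = {p_lower:.3f}")
print(f"upper bound: t = {t_upper:.2f}, p = {p_upper:.3f}")

# Equivalence is claimed only if BOTH one-sided tests reject.
equivalent = p_lower < 0.05 and p_upper < 0.05
print("equivalent to zero within bounds:", equivalent)
```

Under these assumptions the sketch lands close to the reported pattern: the effect is detectably above the lower bound but not detectably below the upper bound, so equivalence to zero cannot be claimed.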

Fig. 6.

Equivalence tests of the effect of statistical (vs. identifiable) victims on (A) compliance and (B) randomly generated 5-digit numbers in Experiment 2 (N = 752) with 90% confidence intervals. Lower bound is d = −0.09. Upper bound is d = 0.09.

One limitation of this analysis is that the meta-analytic smallest effect size of interest is already “null” or “small”, depending on labeling (ibid.). So it is extremely difficult to test whether an effect is even smaller. Using our scale, the confidence interval of the difference between statistical and identifiable victim groups would have to be smaller than +/− 1.71 points on a 100-point scale. Our relatively large dataset for Experiment 2 (about 375 observations per group) is less than half of the 1713 observations per group recommended for 80% power to test a slightly larger equivalence bound of d = 0.1 when α = 0.05 (Lakens, 2017, Table 1).
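The sample-size figure above can be approximated with the usual normal-approximation formula for an equivalence test when the true effect is assumed to be zero: n per group = 2(z₁₋α + z₁₋β/₂)² / d². The sketch below is illustrative rather than a reproduction of Lakens' (2017) procedure, and the function name `tost_n_per_group` is hypothetical. The pooled standard deviation of 19 points is also an assumption, chosen because it is what the ±1.71 figure implies (1.71 / 0.09).

```python
from math import ceil
from statistics import NormalDist


def tost_n_per_group(d_bound, alpha=0.05, power=0.80):
    """Approximate per-group n for a TOST with symmetric bounds of +/- d_bound.

    Assumes the true effect is exactly zero and uses normal quantiles.
    """
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha)
    z_beta = z.inv_cdf(1 - (1 - power) / 2)
    return ceil(2 * (z_alpha + z_beta) ** 2 / d_bound ** 2)


n_for_d010 = tost_n_per_group(0.10)  # bound discussed in the text
n_for_d009 = tost_n_per_group(0.09)  # bound actually used in the equivalence tests
print(n_for_d010, n_for_d009)

# Converting the d bound to raw scale points, assuming a pooled SD of 19.
assumed_pooled_sd = 19.0
raw_bound = 0.09 * assumed_pooled_sd
print(f"raw equivalence bound = +/- {raw_bound:.2f} points")
```

Because the required n grows with the inverse square of the bound, the stricter bound of d = 0.09 demands even more observations per group than the 1713 cited for d = 0.1.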

To elaborate, consider a meaningless identifiable victim effect: the effect of identifiable victims on participants' computer-generated random 5-digit payment codes. As expected, a main effect was undetected, t (748.38) = −0.56, p = 0.579, as was an effect above the lower bound, t (748.38) = −0.679, p = 0.249. However, Fig. 6B shows that an effect below the upper bound was detected, t (748.38) = −1.78, p = 0.037. In other words, equivalence testing could not reject a meaningless identifiable victim effect as small as Lee and Feeley's (2016) smallest meta-analytic effect in our data. So those who demand conventional levels of power to infer a true zero effect will not be satisfied to infer a true zero identifiable victim effect from these equivalence tests.

5.1.2. Alternative explanations

One potential explanation of the failure to reliably detect expected identifiable victim effects involves not only messaging, but also the effect of general level of threat. For example, in line with the current findings, some people perceive the pandemic as less threatening than others (Dryhurst et al., 2020) and seem to be less likely to comply with government public health recommendations (De Neys et al., 2020; Glöckner, Dorrough, Wingen, & Dohle, 2020). So if perceived threat and compliance are already high, then identifiable victims may not increase them further. Indeed, the present findings replicated ceiling effects in both compliance (e.g., Bavel et al., 2020; Everett et al., 2020) and perceived threat (Dryhurst et al., 2020). So perhaps individual victim effects will be more difficult to detect when perceived threats and likelihood of responding are already very high (e.g., during pandemics).

Similarly, one may wonder whether additional differences between pandemics and other crises can explain the differences between our null results and prior identifiable victim effects. For example, one may be able to guarantee that a life will be saved by giving food to a starving person, but one may not be as certain that a life will be saved by wearing a mask or complying with other public health recommendations. So responses to public health crises may be less sensitive to identifiable victim effects than other crises due to differences in opportunities to respond and differences in certainty about the outcomes of responding. Further research may find that identifiable victim effects depend on these or other variables (e.g., Kogut, Ritov, Rubaltelli, & Liberman, 2018; Lee & Feeley, 2016).

Another explanation may be that our stimuli did not successfully identify a victim because the patient's face was somewhat occluded by a respirator in the photograph used in these experiments. Analyzing the impact of subtle differences between images of individuals may be a worthwhile project. Until that research program is mature, we point readers to prior images used in a seminal paper purporting to present an identifiable victim (e.g., Small, Loewenstein, & Slovic, 2007). Due to image quality, the victim is less than fully identifiable (Fig. 7), suggesting that optimal identifiability has not been considered necessary for existing research in this arena.

Fig. 7.

Stimuli about “identifiable” victim from Small et al. (2007).

Another explanation of the null identifiable victim effect may have to do with the source of the public health recommendations. All of the present conditions included, “Public health experts recommend…”. Some find that people align their attitudes about the COVID19 pandemic with public health experts regardless of other factors such as political affiliation (Vlasceanu & Coman, 2020). So identifiable victim effects may be detected only when recommendations come from less trustworthy sources.

5.2. Messaging

Another limitation of the paper is that the 40 million deaths projected by some researchers (Walker et al., 2020) is more believable for the COVID19 messages than the Flu messages and this could have contributed to our results. This projection was included to ensure a severe test of the identifiable victim effect (Mayo, 2018): if the power of identifiable victims is more potent than statistical victims, then identifiable victims should be compared to the most alarming statistics. Also, stipulating a similar death toll for COVID19 and Flu pandemics was required by the logic of one of our hypotheses: one projection had to be included in both COVID19 and Flu messages that contained statistical victims in order to maintain similarity between conditions and, therefore, the ability to draw inferences about the difference between ‘flu’ and ‘COVID19’ rather than a difference in projected deaths. Sure enough, the Flu messaging indirectly decreased compliance relative to the COVID19 messaging—with or without the death statistic—by reducing perceived threat of the pandemic. If the “40 million” statistic had appeared only in the COVID19 condition, then this effect would have confounded the indirect compliance-reducing effect of a Flu message with a lower projected death toll of Flu.

Another limitation of these experiments is their reliance on one kind of statistical visualization: a flatten the curve graph. Other visualizations may have had different impacts on the likelihood of complying with public health recommendations (Petersen et al., 2021). However, existing evidence suggests that people report a high willingness to comply with these recommendations regardless of whether and how the COVID-19 pandemic is visualized (Thorpe et al., 2021).

5.3. Cognition

The reliability and predictive power of the current reflection and numeracy tests were weak. This may be related to recent findings that a substantial proportion of correct answers on reflection tests may not actually involve reflection (e.g., Bago & De Neys, 2017, 2019; Byrd et al., 2021). Of course, the weak reliability of the reflection test parameters did not seem to invalidate the entire dataset, given that well-replicated correlates of reflection were observed. Nonetheless, the relatively low reliability of the cognitive tests raises questions about cognition during a pandemic.

5.3.1. Cognitive test robustness

The present experiments added more null findings of a relationship between reflection test performance and compliance with public health recommendations during the first wave of the COVID19 pandemic (Erceg, Ružojčić, & Galic, 2020; Pennycook, McPhetres, Bago, & Rand, 2020; cf., Stanley, Barr, Peters, & Seli, 2020; Teovanović et al., 2020). One may be surprised by these null results given that correlates of reflection such as individual differences in working memory and self-reported need for cognition (De Neys, 2006; Pennycook, Cheyne, Koehler, & Fugelsang, 2015) have predicted compliance (Swami & Barron, 2020; Xie, Campbell, & Zhang, 2020). One way toward compatibility between these null and positive results would be evidence that reflection test performance has been impacted by the kind of economic insecurity produced by the COVID19 pandemic (Mani, Mullainathan, Shafir, & Zhao, 2013). Indeed, some have found abnormal reflection test performance during the COVID19 pandemic (Arechar & Rand, 2021; Bogliacino et al., 2021). So one may think that the abnormally low reliability of the current reflection tests (0.21 < α < 0.48) compared to pre-pandemic research (e.g., 0.53 < α < 0.76 in Bialek & Pennycook, 2018) may be a result of the pandemic (e.g., Karwowski, Groyecka-Bernard, Kowal, & Sorokowski, 2020). Nevertheless, other mid-pandemic reflection tests of participants recruited from other crowd work platforms achieved typical reliability (0.57 < α < 0.76 in , Study 2 and 3). So our reflection test results may indicate that test performance differs between crowd work platforms, that the present test performance was unreliable, or that test performance is not closely related to compliance with public health recommendations. Higher powered, cross-sectional research could arbitrate between these and other explanations.

5.3.2. Cognition and understanding of COVID19

The null relationships between reasoning tests and compliance with public health recommendations might also be explained by a third factor: appreciation of science. After all, reflection test performance has predicted appreciation of scientific knowledge about COVID19 (Glöckner et al., 2020). So despite our null reflection-compliance finding, recent COVID19 social science aligns with the current findings insofar as reflective people appreciate science and science appreciation predicted compliance. Such an indirect relationship could be a reason that reflection test performance has been a less potent predictor of attitudes about COVID19 prevention than beliefs about science (Čavojová, Šrol, & Ballová Mikušková, 2020).

5.4. Philosophy

Prior philosophical beliefs were more potent predictors of attitudes and intentions about pandemics than either messaging or cognition. Examining these and other results will produce hypotheses for future research.

5.4.1. Liberty vs. public health

Political preferences like social and economic conservatism have not been potent predictors of pandemic attitudes and intentions in our research or others' (Díaz & Cova, 2020; cf. Calvillo, Ross, Garcia, Smelter, & Rutchick, 2020). We found that only preferences for liberty over equality reliably predicted non-compliance with public health recommendations and lower perceived threat of pandemics (Experiment 2). Importantly, libertarian preferences negatively correlated with the perceived duty to effectively prevent harm (Experiment 2). And—aligning with earlier work (e.g., Díaz & Cova, 2020; Navajas et al., 2020)—caring about preventing harm was a standout positive predictor of compliance with public health recommendations and perceived threat of pandemic in both of the present experiments. Thus, libertarian and effective altruist tendencies not only anti-correlate, but they differentially predict public health attitudes and intentions. So there may be a real trade-off between acting according to the value of liberty and acting according to one's duties to others' health. Of course, people may value both liberty and others' health even when certain dilemmas pit one value against the other (e.g., Codagnone et al., 2020; Palma, Huseynov, & Jr, 2020).

5.4.2. Scientific realism vs. trust in science

Pandemic attitudes and intentions were reliably predicted by positive endorsement of scientific realism, but not naturalism and anti-realism about science. This pattern may be partly explained by the fact that the scientific anti-realism measure unexpectedly failed to negatively correlate with the scientific realism measure. Recent research found that trust in science was a more potent predictor of complying with public health recommendations than hypothesized variables such as political orientation (Koetke, Schumann, & Porter, 2020; Ruisch et al., 2021; van Mulukom, 2020). Together these points suggest that the predictive power of the scientific realism measure may have less to do with realism about science than trust in science (Bicchieri et al., 2020). Future research could profitably dissociate trust in science from metaphysical beliefs about science.

5.4.3. Metaphysical beliefs vs. community involvement

Although theism was a more reliable predictor of perceiving and responding to the threat of pandemics in univariate and mediation analyses, religiosity was the stronger predictor in the hierarchical regression analysis (Experiment 2). Our measure of religiosity asked about participation in certain organizations rather than endorsement of certain beliefs. This raises the question of whether community involvement may predict compliance with public health recommendations differently than the beliefs associated with those communities (e.g., Perry, Whitehead, & Grubbs, 2020). Further research could profitably dissociate the roles of social and philosophical factors in public health compliance and outcomes.

6. Conclusion

While public health officials may find “flattening the curve” messaging compelling among themselves, it is not clear that it will be more effective than messaging about identifiable victims during a pandemic. However, we did find evidence suggesting that messages comparing COVID19 to the common flu may have reduced compliance with public health recommendations. Moreover, while encouraging the public to appreciate the threat of pandemics, we may encounter more resistance from some groups than others. This may not be a result of the quality of the message or the competence of the receiver, but rather individual differences in philosophical beliefs. Some may believe that liberty matters more than their duties to others or their appreciation of science. Of course, subsequent research may reveal more about the relationship between public health messaging and compliance therewith. However, until we have tested all common public health messages and confounding factors, the present research suggests that effective public health messaging may require appealing to particular people's prior philosophical beliefs.

CRediT author statement

Nick Byrd: Funding acquisition, Project administration, Conceptualization, Methodology, Investigation, Data curation, Formal analysis, Writing- Original draft preparation, Writing - Review & Editing, Visualization. Michał Bialek: Conceptualization, Methodology, Data curation, Formal analysis, Writing- Original draft preparation, Writing - Review & Editing.

Funding

Funding was provided by the Adelaide Wilson Fellowship from The Graduate School at Florida State University. None of the parties responsible for disbursing that funding were aware of, involved in, or otherwise influential in any part of this research prior to publication.

Appendix A

Table A1.

Effect sizes and confidence intervals from mediation analysis of Experiment 2 (N = 752).

| Type | Effect |

95% C.I. (non-std. est.) |

β | p | |

|---|---|---|---|---|---|

| Lower | Upper | ||||

| Indirect | Stat (v. Ident) Victim ⇒ Public health threat ⇒ Compliance | −0.697 | 2.788 | 0.030 | 0.239 |

| Stat (v. Ident) Victim⇒ Reflection ⇒ Compliance | −0.035 | 0.047 | 0.000 | 0.762 | |

| Stat (v. Ident) Victim⇒ Lure ⇒ Compliance | −0.083 | 0.142 | 0.001 | 0.606 | |

| Stat (v. Ident) Victim⇒ Numeracy ⇒ Compliance | −0.070 | 0.139 | 0.001 | 0.514 | |

| Stat (v. Ident) Victim⇒ Effective Altruism ⇒ Compliance | −0.428 | 0.604 | 0.003 | 0.738 | |

| Stat (v. Ident) Victim⇒ Instrumental Harm ⇒ Compliance | −0.102 | 0.051 | 0.000 | 0.518 | |

| Stat (v. Ident) Victim⇒ Libertarianism ⇒ Compliance | −0.098 | 0.082 | 0.000 | 0.860 | |

| Stat (v. Ident) Victim⇒ Economic Conservatism ⇒ Compliance | −0.063 | 0.108 | 0.001 | 0.611 | |

| Stat (v. Ident) Victim⇒ Social Conservatism ⇒ Compliance | −0.032 | 0.036 | 0.000 | 0.916 | |

| Stat (v. Ident) Victim⇒ Naturalism ⇒ Compliance | −0.048 | 0.052 | 0.000 | 0.928 | |

| Stat (v. Ident) Victim⇒ Scientific Realism ⇒ Compliance | −0.133 | 0.269 | 0.002 | 0.506 | |

| Stat (v. Ident) Victim⇒ Scientific Anti-realism ⇒ Compliance | −0.129 | 0.208 | 0.001 | 0.645 | |

| Stat (v. Ident) Victim⇒ Compatibilism ⇒ Compliance | −0.161 | 0.057 | −0.002 | 0.352 | |

| Stat (v. Ident) Victim⇒ Theism ⇒ Compliance | −0.404 | 0.147 | −0.004 | 0.362 | |

| Stat (v. Ident) Victim⇒ Religiosity ⇒ Compliance | −0.030 | 0.027 | 0.000 | 0.894 | |

| Flu (v. COVID-19) ⇒ Public health threat ⇒ Compliance | −5.059 | −1.550 | −0.096⁎⁎⁎ | 0.0002 | |

| Flu (v. COVID) ⇒ Reflection ⇒ Compliance | −0.023 | 0.020 | 0.000 | 0.906 | |

| Flu (v. COVID) ⇒ Lure ⇒ Compliance | −0.072 | 0.164 | 0.001 | 0.448 | |

| Flu (v. COVID) ⇒ Numeracy ⇒ Compliance | −0.089 | 0.103 | 0.000 | 0.887 | |

| Flu (v. COVID) ⇒ Effective Altruism ⇒ Compliance | −0.834 | 0.207 | −0.009 | 0.238 | |

| Flu (v. COVID) ⇒ Instrumental Harm ⇒ Compliance | −0.075 | 0.204 | 0.002 | 0.363 | |

| Flu (v. COVID) ⇒ Libertarianism ⇒ Compliance | −0.056 | 0.212 | 0.002 | 0.256 | |

| Flu (v. COVID) ⇒ Economic Conservatism ⇒ Compliance | −0.057 | 0.097 | 0.001 | 0.617 | |

| Flu (v. COVID) ⇒ Social Conservatism ⇒ Compliance | −0.078 | 0.138 | 0.001 | 0.586 | |

| Flu (v. COVID) ⇒ Naturalism ⇒ Compliance | −0.012 | 0.013 | 0.000 | 0.935 | |

| Flu (v. COVID) ⇒ Scientific Realism ⇒ Compliance | −0.275 | 0.128 | −0.002 | 0.475 | |

| Flu (v. COVID) ⇒ Scientific Anti-realism ⇒ Compliance | −0.050 | 0.337 | 0.004 | 0.146 | |

| Flu (v. COVID) ⇒ Compatibilism ⇒ Compliance | −0.213 | 0.061 | −0.002 | 0.275 | |

| Flu (v. COVID) ⇒ Theism ⇒ Compliance | −0.373 | 0.174 | −0.003 | 0.477 | |

| Flu (v. COVID) ⇒ Religiosity ⇒ Compliance | −0.027 | 0.029 | 0.000 | 0.939 | |

| Component | Stat (v. Ident) Victim ⇒ Public health threat | −1.396 | 5.593 | 0.043 | 0.239 |

| Stat (v. Ident) Victim⇒ Reflection | −0.231 | 0.122 | −0.022 | 0.546 | |

| Stat (v. Ident) Victim⇒ Lure | −0.134 | 0.236 | 0.020 | 0.591 | |

| Stat (v. Ident) Victim⇒ Numeracy | −0.087 | 0.186 | 0.026 | 0.476 | |

| Stat (v. Ident) Victim⇒ Effective Altruism | −2.340 | 3.304 | 0.012 | 0.738 | |

| Stat (v. Ident) Victim⇒ Instrumental Harm | −2.206 | 5.564 | 0.031 | 0.397 | |

| Stat (v. Ident) Victim⇒ Libertarianism | −4.020 | 4.821 | 0.006 | 0.859 | |

| Stat (v. Ident) Victim⇒ Economic Conservatism | −7.326 | 1.342 | −0.049 | 0.176 | |

| Stat (v. Ident) Victim⇒ Social Conservatism | −4.716 | 4.228 | −0.004 | 0.915 | |

| Stat (v. Ident) Victim⇒ Naturalism | −7.155 | 2.828 | −0.031 | 0.396 | |

| Stat (v. Ident) Victim⇒ Scientific Realism | −2.315 | 4.763 | 0.025 | 0.498 | |

| Stat (v. Ident) Victim⇒ Scientific Anti-realism | −5.464 | 3.360 | −0.017 | 0.640 | |

| Stat (v. Ident) Victim⇒ Compatibilism | −7.379 | 1.468 | −0.048 | 0.190 | |

| Stat (v. Ident) Victim⇒ Theism | −7.045 | 2.513 | −0.034 | 0.353 | |

| Stat (v. Ident) Victim⇒ Religiosity | −4.731 | 5.456 | 0.005 | 0.889 | |

| Flu (v. COVID-19) ⇒ Public health threat perception | −10.126 | −3.137 | −0.134⁎⁎⁎ | 0.0002 | |

| Flu (v. COVID) ⇒ Reflection | −0.165 | 0.188 | 0.005 | 0.901 | |

| Flu (v. COVID) ⇒ Lure | −0.106 | 0.263 | 0.030 | 0.404 | |

| Flu (v. COVID) ⇒ Numeracy | −0.126 | 0.146 | 0.005 | 0.887 | |

| Flu (v. COVID) ⇒ Effective Altruism | −4.536 | 1.109 | −0.043 | 0.234 | |

| Flu (v. COVID) ⇒ Instrumental Harm | −8.171 | −0.402 | −0.079⁎ | 0.031 | |

| Flu (v. COVID) ⇒ Libertarianism | −8.269 | 0.572 | −0.062† | 0.088 | |

| Flu (v. COVID) ⇒ Economic Conservatism | −6.980 | 1.688 | −0.044 | 0.231 | |

| Flu (v. COVID) ⇒ Social Conservatism | −8.483 | 0.460 | −0.064† | 0.079 | |

| Flu (v. COVID) ⇒ Naturalism | −5.472 | 4.511 | −0.007 | 0.850 | |

| Flu (v. COVID) ⇒ Scientific Realism | −4.858 | 2.220 | −0.027 | 0.465 | |

| Flu (v. COVID) ⇒ Scientific Anti-realism | −8.227 | 0.596 | −0.062† | 0.090 | |

| Flu (v. COVID) ⇒ Compatibilism | −8.776 | 0.072 | −0.070 | 0.054 | |

| Flu (v. COVID) ⇒ Theism | −6.535 | 3.023 | −0.026 | 0.471 | |

| Flu (v. COVID) ⇒ Religiosity | −5.295 | 4.893 | −0.003 | 0.938 | |

| Public health threat perception ⇒ Compliance | 0.465 | 0.531 | 0.714⁎⁎⁎⁎⁎ | < 0.00001 | |

| Reflection ⇒ Compliance | −0.767 | 0.534 | −0.008 | 0.726 | |

| Lure ⇒ Compliance | −0.039 | 1.203 | 0.044† | 0.066 | |

| Numeracy ⇒ Compliance | −0.141 | 1.543 | 0.039 | 0.103 | |

| Effective Altruism ⇒ Compliance | 0.142 | 0.223 | 0.210⁎⁎⁎⁎⁎ | < 0.00001 | |

| Instrumental Harm ⇒ Compliance | −0.045 | 0.014 | −0.024 | 0.316 | |

| Libertarianism ⇒ Compliance | −0.046 | 0.006 | −0.036 | 0.127 | |

| Economic Conservatism ⇒ Compliance | −0.034 | 0.019 | −0.013 | 0.583 | |

| Social Conservatism ⇒ Compliance | −0.033 | 0.018 | −0.014 | 0.567 | |

| Naturalism ⇒ Compliance | −0.024 | 0.022 | −0.002 | 0.928 | |

| Scientific Realism ⇒ Compliance | 0.023 | 0.088 | 0.080⁎⁎ | 0.001 | |

| Scientific Anti-realism ⇒ Compliance | −0.064 | −0.012 | −0.068⁎⁎ | 0.005 | |

| Compatibilism ⇒ Compliance | −0.008 | 0.043 | 0.032 | 0.185 | |

| Theism ⇒ Compliance | 0.032 | 0.081 | 0.110⁎⁎⁎⁎⁎ | < 0.00001 | |

| Religiosity ⇒ Compliance | −0.028 | 0.017 | −0.011 | 0.641 | |

| Direct | Stat (v. Ident) Victim ⇒ Compliance | −1.359 | 1.870 | 0.007 | 0.757 |

| Flu (v. COVID) ⇒ Compliance | 1.486 | 4.770 | 0.091⁎⁎⁎ | 0.00019 | |

| Total | Stat (v. Ident) Victim ⇒ Compliance | −1.340 | 4.096 | 0.036 | 0.320 |

| Flu (v. COVID) ⇒ Compliance | −3.066 | 2.370 | −0.009 | 0.802 | |

Note. Betas are completely standardized effect sizes.

†p < 0.10, ⁎p < 0.05, ⁎⁎p < 0.01, ⁎⁎⁎p < 0.001, ⁎⁎⁎⁎p < 0.0001, ⁎⁎⁎⁎⁎p < 0.00001.
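The indirect, direct, and total rows for the flu (vs. COVID) condition can be related with a short arithmetic check. The sketch below is illustrative only. It assumes the standard product-of-coefficients rule for linear path models (indirect effect = condition ⇒ mediator beta × mediator ⇒ compliance beta; total effect = direct effect + the sum of indirect effects) and treats every variable between condition and compliance as a mediator. The function names are hypothetical, and the software used for the reported model may compute these quantities differently.

```python
# Standardized betas copied from the table rows above (flu vs. COVID condition).
# A_PATHS: condition => mediator. B_PATHS: mediator => compliance.
A_PATHS = {
    "Public health threat perception": -0.134,
    "Reflection": 0.005,
    "Lure": 0.030,
    "Numeracy": 0.005,
    "Effective Altruism": -0.043,
    "Instrumental Harm": -0.079,
    "Libertarianism": -0.062,
    "Economic Conservatism": -0.044,
    "Social Conservatism": -0.064,
    "Naturalism": -0.007,
    "Scientific Realism": -0.027,
    "Scientific Anti-realism": -0.062,
    "Compatibilism": -0.070,
    "Theism": -0.026,
    "Religiosity": -0.003,
}
B_PATHS = {
    "Public health threat perception": 0.714,
    "Reflection": -0.008,
    "Lure": 0.044,
    "Numeracy": 0.039,
    "Effective Altruism": 0.210,
    "Instrumental Harm": -0.024,
    "Libertarianism": -0.036,
    "Economic Conservatism": -0.013,
    "Social Conservatism": -0.014,
    "Naturalism": -0.002,
    "Scientific Realism": 0.080,
    "Scientific Anti-realism": -0.068,
    "Compatibilism": 0.032,
    "Theism": 0.110,
    "Religiosity": -0.011,
}
DIRECT_FLU = 0.091  # Direct row: Flu (v. COVID) => Compliance


def indirect_effects(a_paths, b_paths):
    """Product of coefficients for each mediator (assumed decomposition)."""
    return {name: a_paths[name] * b_paths[name] for name in a_paths}


def total_effect(direct, a_paths, b_paths):
    """Direct effect plus the sum of all indirect effects."""
    return direct + sum(indirect_effects(a_paths, b_paths).values())


if __name__ == "__main__":
    effects = indirect_effects(A_PATHS, B_PATHS)
    threat = effects["Public health threat perception"]
    print(f"Indirect effect via threat perception: {threat:.3f}")
    print(f"Reconstructed total effect: {total_effect(DIRECT_FLU, A_PATHS, B_PATHS):.3f}")
```

Under these assumptions, the negative indirect path through threat perception (about −0.096) roughly cancels the positive direct effect (0.091), so the reconstructed total lands near the reported total of −0.009. Small discrepancies are expected because the inputs are rounded to three decimals.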

References

- Alper S., Bayrak F., Yilmaz O. 2020. Psychological correlates of COVID-19 conspiracy beliefs and preventive measures: Evidence from Turkey.

- Angrist J.D., Pischke J.-S. Princeton University Press; 2008. Mostly harmless econometrics: An empiricist’s companion.

- Arechar A.A., Rand D. Turking in the time of COVID. 2021 doi: 10.31234/osf.io/vktqu.

- Attali Y., Bar-Hillel M. The false allure of fast lures. Judgment and Decision Making. 2020;15(1):93–111. http://journal.sjdm.org/19/191217/jdm191217.html

- Bago B., De Neys W. Fast logic?: Examining the time course assumption of dual process theory. Cognition. 2017;158:90–109. doi: 10.1016/j.cognition.2016.10.014.

- Bago B., De Neys W. The smart system 1: Evidence for the intuitive nature of correct responding on the bat-and-ball problem. Thinking & Reasoning. 2019;25(3):257–299. doi: 10.1080/13546783.2018.1507949.

- Baron J., Goodwin G. Consequences, norms, and inaction: A critical analysis. Judgment and Decision Making. 2020;15(3) (journal.sjdm.org/19/190430a/jdm190430a.html)

- Bavel J.J.V., Cichocka A., Capraro V., Sjåstad H., Nezlek J.B., Alfano M.…Palomäki J. National identity predicts public health support during a global pandemic: Results from 67 nations. PsyArXiv. 2020 doi: 10.31234/osf.io/ydt95.

- Beard D. Photo of COVID-19 victim in Indonesia sparks fascination—And denial. National Geographic. 2020, July 21 https://www.nationalgeographic.com/photography/2020/07/covid-victim-photograph-sparks-fascination-and-denial-indonesia/

- Bergh R., Reinstein D. Empathic and numerate giving: The joint effects of victim images and charity evaluations. Social Psychological and Personality Science. 2020;1948550619893968 doi: 10.1177/1948550619893968.

- Białek M., Bergelt M., Majima Y., Koehler D.J. Cognitive reflection but not reinforcement sensitivity is consistently associated with delay discounting of gains and losses. Journal of Neuroscience, Psychology, and Economics. 2019;12(3–4):169–183. doi: 10.1037/npe0000111.

- Białek M., Muda R., Stewart K., Niszczota P., Pieńkosz D. Thinking in a foreign language distorts allocation of cognitive effort: Evidence from reasoning. Cognition. 2020;205:104420. doi: 10.1016/j.cognition.2020.104420.

- Białek M., Paruzel-Czachura M., Gawronski B. Foreign language effects on moral dilemma judgments: An analysis using the CNI model. Journal of Experimental Social Psychology. 2019;85:103855. doi: 10.1016/j.jesp.2019.103855.

- Bialek M., Pennycook G. The cognitive reflection test is robust to multiple exposures. Behavior Research Methods. 2018;50(5):1953–1959. doi: 10.3758/s13428-017-0963-x.

- Białek M., Sawicki P. Cognitive reflection effects on time discounting. Journal of Individual Differences. 2018;39(2):99–106. doi: 10.1027/1614-0001/a000254.

- Bicchieri C., Fatas E., Aldama A., Casas A., Deshpande I., Lauro M.…Wen R. 2020. In science we (should) trust: Expectations and compliance during the COVID-19 pandemic.

- Bogliacino F., Codagnone C., Montealegre F., Folkvord F., Gómez C., Charris R.…Veltri G.A. Negative shocks predict change in cognitive function and preferences: Assessing the negative affect and stress hypothesis. Scientific Reports. 2021;11(1):3546. doi: 10.1038/s41598-021-83089-0.

- Boston W. More than 100 in Germany found to be infected with coronavirus after Church’s services. Wall Street Journal. 2020, May 24 https://www.wsj.com/articles/more-than-100-in-germany-found-to-be-infected-with-coronavirus-after-a-churchs-services-11590340102

- Bourget D., Chalmers D. What do philosophers believe? Philosophical Studies. 2014;170(3):465–500. doi: 10.1007/s11098-013-0259-7.

- Brehm L., Alday P.M. The 26th architectures and mechanisms for language processing conference. AMLap 2020. 2020. A decade of mixed models: It’s past time to set your contrasts. https://mediaup.uni-potsdam.de/Play/Chapter/223

- Brooks B. Like the flu? Trump's coronavirus messaging confuses public, pandemic researchers say. 2020, March 14. https://www.reuters.com/article/us-health-coronavirus-mixed-messages-idUSKBN2102GY Reuters.

- Byrd N. Under review; 2021. All measures are not created equal: Reflection test, think aloud, and process dissociation protocols.

- Byrd N. 2021. Great minds do not think alike: Individual differences in philosophers’ trait reflection, beliefs, and education [In preparation]

- Byrd N., Conway P. Not all who ponder count costs: Arithmetic reflection predicts utilitarian tendencies, but logical reflection predicts both deontological and utilitarian tendencies. Cognition. 2019;192:103995. doi: 10.1016/j.cognition.2019.06.007.

- Byrd N., Gongora G., Joseph B., Sirota M. 2021. Tell us what you really think: A think aloud protocol analysis of the verbal cognitive reflection test [In preparation]

- Calvillo D.P., Ross B.J., Garcia R.J.B., Smelter T.J., Rutchick A.M. Political ideology predicts perceptions of the threat of COVID-19 (and susceptibility to fake news about it). Social Psychological and Personality Science. 2020 doi: 10.1177/1948550620940539. 1948550620940539.

- Canning D., Karra M., Dayalu R., Guo M., Bloom D.E. The association between age, COVID-19 symptoms, and social distancing behavior in the United States. MedRxiv. 2020 doi: 10.1101/2020.04.19.20065219. 2020.04.19.20065219.

- Čavojová V., Šrol J., Ballová Mikušková E. How scientific reasoning correlates with health-related beliefs and behaviors during the COVID-19 pandemic? Journal of Health Psychology. 2020 doi: 10.1177/1359105320962266. 1359105320962266.

- CDC Similarities and differences between flu and COVID-19. 2020, July 10. https://www.cdc.gov/flu/symptoms/flu-vs-covid19.htm Centers for Disease Control and Prevention.

- Chandler L., Rozenweig C., Moss A.J., Robinson J., Litman L. Online panels in social science research: Expanding sampling methods beyond Mechanical Turk. Behavior Research Methods. 2019;50(2022) doi: 10.3758/s13428-019-01273-7.

- Clark C., Davila A., Regis M., Kraus S. Predictors of COVID-19 voluntary compliance behaviors: An international investigation. Global Transitions. 2020 doi: 10.1016/j.glt.2020.06.003.

- CloudResearch SENTRY | Data Quality Validation for Online Research. 2020. https://www.cloudresearch.com/products/sentry-data-quality-validation/

- Codagnone C., Bogliacino F., Gómez C., Charris R., Montealegre F., Liva G.…Veltri G.A. Assessing concerns for the economic consequence of the COVID-19 response and mental health problems associated with economic vulnerability and negative economic shock in Italy, Spain, and the United Kingdom. PLoS One. 2020;15(10) doi: 10.1371/journal.pone.0240876.

- Cohen J. Lawrence Erlbaum Associates; 1988. Statistical power analysis for the behavioral sciences.

- Cokely E.T., Galesic M., Schulz E., Ghazal S., Garcia-Retamero R. Measuring risk literacy: The Berlin numeracy test. Judgment and Decision Making. 2012;7(1):25. http://search.proquest.com/docview/1011295450/abstract/88072502EA77452DPQ/1

- Conway L.G., Woodard S.R., Zubrod A., Chan L. 2020. Why are conservatives less concerned about the coronavirus (COVID-19) than liberals? Testing experiential versus political explanations.

- Cornelis I., Hiel A.V., Roets A., Kossowska M. Age differences in conservatism: Evidence on the mediating effects of personality and cognitive style. Journal of Personality. 2009;77(1):51–88. doi: 10.1111/j.1467-6494.2008.00538.x.

- De Neys W. Dual processing in reasoning: Two systems but one reasoner. Psychological Science. 2006;17(5):428–433. doi: 10.1111/j.1467-9280.2006.01723.x.

- De Neys W., Raoelison M., Boissin E., Voudouri A., Bago B., Białek M. 2020. Moral outrage and social distancing: Bad or badly informed citizens?

- Deppe K.D., Gonzalez F.J., Neiman J.L., Jacobs C., Pahlke J., Smith K.B., Hibbing J.R. Reflective liberals and intuitive conservatives: A look at the cognitive reflection test and ideology. Judgment and Decision Making. 2015;10(4):314–331. (sas.upenn.edu/~baron/journal/15/15311/jdm15311.html)

- Díaz R., Cova F. 2020. Moral values and trait pathogen disgust predict compliance with official recommendations regarding COVID-19 pandemic in US samples.

- Dryhurst S., Schneider C.R., Kerr J., Freeman A.L.J., Recchia G., van der Bles A.M.…van der Linden S. Risk perceptions of COVID-19 around the world. Journal of Risk Research. 2020;0(0):1–13. doi: 10.1080/13669877.2020.1758193.

- Erceg N., Galic Z., Ružojčić M. A reflection on cognitive reflection – Testing convergent validity of two versions of the cognitive reflection test. Judgment and Decision Making. 2020;15(5):741–755. doi: 10.31234/osf.io/ewrtq.

- Erceg N., Ružojčić M., Galic Z. 2020. Misbehaving in the Corona Crisis: The role of anxiety and unfounded beliefs.

- Everett J.A., Colombatto C., Chituc V., Brady W.J., Crockett M. 2020. The effectiveness of moral messages on public health behavioral intentions during the COVID-19 pandemic.

- Frederick S. Cognitive reflection and decision making. Journal of Economic Perspectives. 2005;19(4):25–42. doi: 10.1257/089533005775196732.

- Friedrich J., McGuire A. Individual differences in reasoning style as a moderator of the identifiable victim effect. Social Influence. 2010;5(3):182–201. doi: 10.1080/15534511003707352.

- Friesdorf R., Conway P., Gawronski B. Gender differences in responses to moral dilemmas: A process dissociation analysis. Personality and Social Psychology Bulletin. 2015;41(5):696–713. doi: 10.1177/0146167215575731.

- Funder D.C., Ozer D.J. Evaluating effect size in psychological research: Sense and nonsense. Advances in Methods and Practices in Psychological Science. 2019;2(2):156–168. doi: 10.1177/2515245919847202.

- Gervais W.M., van Elk M., Xygalatas D., McKay R.T., Aveyard M., Buchtel E.E.…Bulbulia J. Analytic atheism: A cross-culturally weak and fickle phenomenon? Judgment and Decision Making. 2018;13(3):268–274. https://econpapers.repec.org/article/jdmjournl/v_3a13_3ay_3a2018_3ai_3a3_3ap_3a268-274.htm

- Gignac G.E., Szodorai E.T. Effect size guidelines for individual differences researchers. Personality and Individual Differences. 2016;102:74–78. doi: 10.1016/j.paid.2016.06.069.

- Gillespie R., Hudak S. Protesters call for reopening, but Demings says decision to be based on science—Orlando sentinel. 2020, April 17. https://www.orlandosentinel.com/coronavirus/os-ne-coronavirus-orange-update-friday-20200417-667p2w4jjnajbcslxq7fbxtujq-story.html Orlando Sentinel.

- Glöckner A., Dorrough A.R., Wingen T., Dohle S. 2020. The perception of infection risks during the early and later outbreak of COVID-19 in Germany: Consequences and recommendations.

- Gollwitzer A., Martel C., Marshall J., Höhs J.M., Bargh J.A. Connecting self-reported social distancing to real-world behavior at the individual and U.S. state level. PsyArXiv. 2020 doi: 10.31234/osf.io/kvnwp.

- Graham R. Remembering lives lost to coronavirus, one tweet at a time—The Boston globe. Boston Globe. 2020, May 12 https://www.bostonglobe.com/2020/05/12/opinion/remembering-lives-lost-coronavirus-one-tweet-time/

- Greene C., Murphy G. 2020. Individual differences in susceptibility to false memories for COVID-19 fake news.