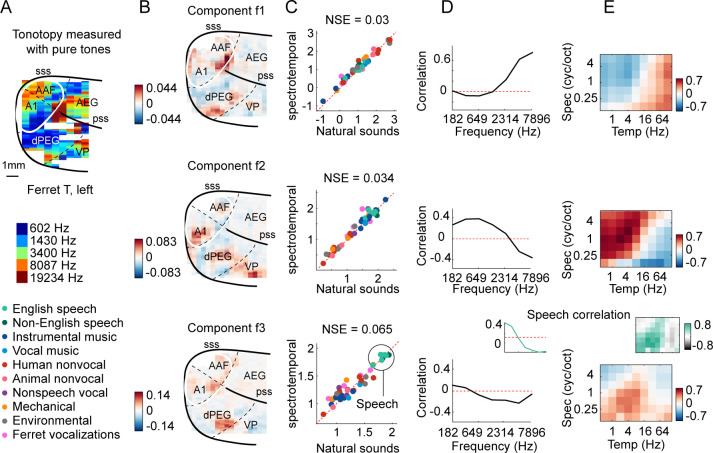

Figure 3. Organization of frequency and modulation tuning in ferret auditory cortex, as revealed by component analysis.

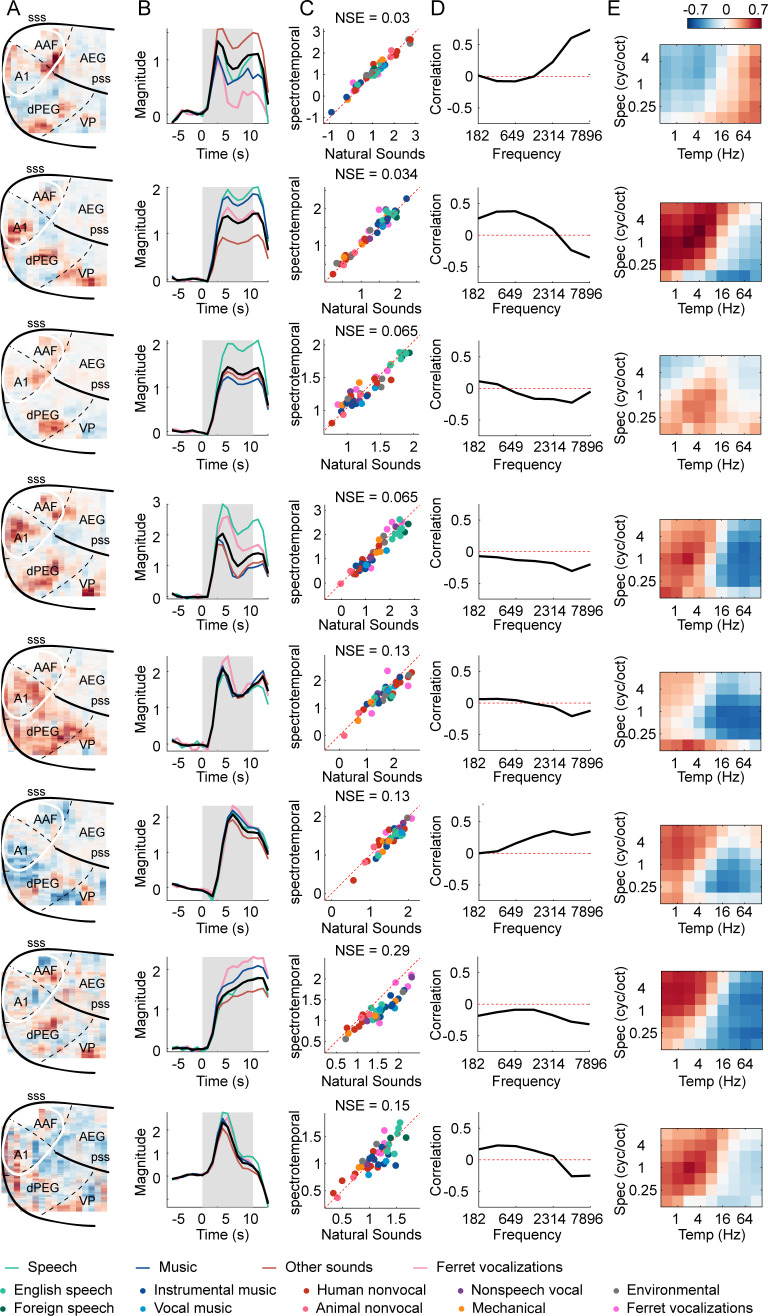

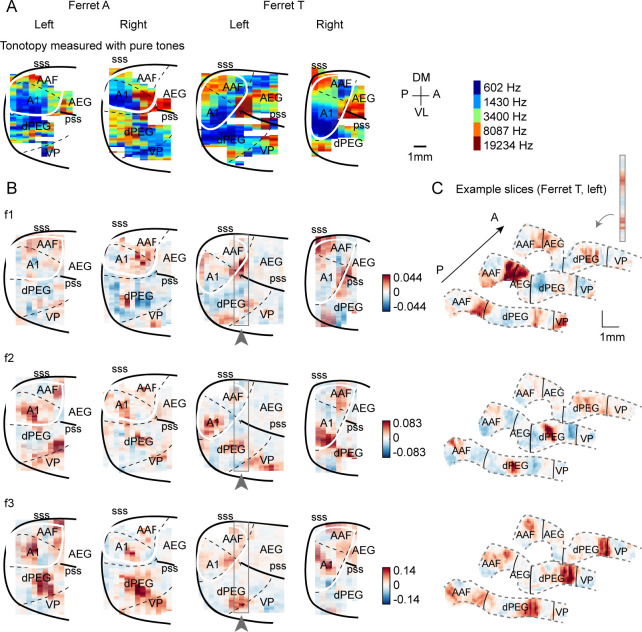

(A) For reference with the weight maps in panel (B), a tonotopic map is shown, measured using pure tones. The map is from one hemisphere of one animal (ferret T, left). (B) Voxel weight maps from three components, inferred using responses to natural and synthetic sounds (see Figure 3—figure supplement 1 for all eight components and Figure 3—figure supplement 2 for all hemispheres). The maps for components f1 and f2 closely mirrored the high- and low-frequency tonotopic gradients, respectively. (C) Component responses to natural and spectrotemporally matched synthetic sounds, colored by category label (labels shown at the bottom left of the figure). Component f3 responded preferentially to speech sounds. (D) Correlation of component responses with energy at different audio frequencies, measured from a cochleagram. Inset for f3 shows the correlation pattern that would be expected from a response that was perfectly speech selective (i.e., 1 for speech, 0 for all other sounds). (E) Correlations with modulation energy at different temporal and spectral rates. Inset shows the correlation pattern that would be expected for a speech-selective response. These results suggest that f3 responds to particular frequency and modulation statistics that happen to differ between speech and other sounds.
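
The correlation analysis described for panels (D) and (E) can be illustrated with a minimal sketch. It is not the authors' pipeline: it assumes that each sound has already been summarized as a vector of acoustic features (for example, time-averaged cochleagram energy per frequency band), and the function names `correlate_with_features` and `speech_selective_reference` are hypothetical. The reference pattern shown in the insets corresponds to replacing the measured component response with a binary indicator (1 for speech, 0 for all other sounds).

```python
import numpy as np


def correlate_with_features(response, features):
    """Pearson correlation between one component response and each feature.

    response: shape (n_sounds,), the component's response to each sound.
    features: shape (n_sounds, n_features), e.g. energy per audio-frequency
              band for each sound (assumed to be precomputed).
    Returns an array of shape (n_features,). Entries are NaN where the
    response or a feature has zero variance across sounds.
    """
    response = np.asarray(response, dtype=float)
    features = np.asarray(features, dtype=float)
    r = response - response.mean()
    f = features - features.mean(axis=0)
    denom = np.sqrt((r ** 2).sum() * (f ** 2).sum(axis=0))
    with np.errstate(invalid="ignore", divide="ignore"):
        return (r @ f) / denom


def speech_selective_reference(is_speech, features):
    """Correlation pattern expected from a perfectly speech-selective
    response, modeled as 1 for speech sounds and 0 for all other sounds."""
    indicator = np.asarray(is_speech, dtype=float)
    return correlate_with_features(indicator, features)


if __name__ == "__main__":
    # Synthetic placeholder data, for illustration only.
    rng = np.random.default_rng(0)
    n_sounds, n_bands = 36, 8
    energy = rng.random((n_sounds, n_bands))
    is_speech = np.arange(n_sounds) < 6
    component = rng.random(n_sounds)

    measured = correlate_with_features(component, energy)
    reference = speech_selective_reference(is_speech, energy)
    print(measured.shape, reference.shape)
```

Comparing the measured pattern with the reference pattern is one way to ask whether a component's apparent speech preference can be accounted for by acoustic statistics alone; the same functions apply to modulation-energy features if `features` holds energy per temporal and spectral rate instead.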