Abstract

Purpose

The challenges associated with cochlear implant (CI)–mediated listening are well documented; however, they can be mitigated through the provision of aided acoustic hearing in the contralateral ear—a configuration termed bimodal hearing. This study extends previous literature to examine the effect of acoustic bandwidth in the non-CI ear for music perception. The primary aim was to determine the minimum and optimum acoustic bandwidth necessary to obtain bimodal benefit for music perception and speech perception.

Method

Participants included 12 adult bimodal listeners and 12 adult control listeners with normal hearing. Music perception was assessed via measures of timbre perception and subjective sound quality of real-world music samples. Speech perception was assessed via monosyllabic word recognition in quiet. Acoustic stimuli were presented to the non-CI ear in the following filter conditions: < 125, < 250, < 500, and < 750 Hz, and wideband (full bandwidth).

Results

Generally, performance for all stimuli improved with increasing acoustic bandwidth; however, the bandwidth that is both minimally and optimally beneficial may be dependent upon stimulus type. On average, music sound quality required wideband amplification, whereas speech recognition with a male talker in quiet required a narrower acoustic bandwidth (< 250 Hz) for significant benefit. Still, average speech recognition performance continued to improve with increasing bandwidth.

Conclusion

Further research is warranted to examine optimal acoustic bandwidth for additional stimulus types; however, these findings indicate that wideband amplification is most appropriate for speech and music perception in individuals with bimodal hearing.

Cochlear implants (CIs) have been remarkably successful in improving quality of life and enabling high levels of speech perception. However, signal processing and design limitations continue to make the perception of complex stimuli challenging. This is particularly true for inputs that demand faithful representation of pitch (e.g., music; Chatterjee et al., 2017; Chatterjee & Peng, 2008; Hsiao & Gfeller, 2012; Luo et al., 2007). As such, music perception tends to be poorer for CI users as compared to listeners with normal hearing (NH; e.g., D'Onofrio et al., 2020; Gfeller et al., 2007; Kang et al., 2009; Kong et al., 2004).

The challenges associated with CI listening are well documented; however, they can be mitigated through the provision of aided acoustic hearing in the contralateral ear, a configuration commonly termed bimodal hearing. Indeed, current estimates indicate that approximately 60%–72% of adult unilateral CI patients have some degree of usable residual hearing in the contralateral ear (Dorman & Gifford, 2010; Holder et al., 2018). Bimodal benefit for music listening (i.e., the benefit obtained by adding acoustic hearing via a hearing aid in the non-CI ear) has been demonstrated in myriad ways, including tasks of melody perception (Dorman et al., 2008; El Fata et al., 2009; Kong et al., 2005; Sucher & McDermott, 2009), pitch perception (Cheng et al., 2018; Crew et al., 2015; Cullington & Zeng, 2011), timbre perception (Kong et al., 2012), musical sound quality (El Fata et al., 2009; Plant & Babic, 2016; Sucher & McDermott, 2009), and musical emotion perception (D'Onofrio et al., 2020; Giannantonio et al., 2015; Shirvani et al., 2016). For many listeners, the benefit of contralateral acoustic hearing can be substantial; however, there is considerable intersubject and intrasubject variability across test measures. Furthermore, there is no standard hearing aid fitting procedure for bimodal stimulation. As the number of patients with residual hearing continues to increase, establishing data-driven fitting guidelines for the bimodal population is increasingly important.

Effect of Acoustic Bandwidth on Bimodal Listening

A number of studies have investigated hearing aid parameters with respect to the speech domain, most commonly by varying low-frequency acoustic bandwidth in the non-CI ear. Zhang et al. (2010) examined bimodal benefit with acoustic stimuli presented to the non-CI ear either low-pass filtered at 125, 250, 500, and 750 Hz or unfiltered (wideband). Significant improvement was reported for all conditions, including the lowest frequency passband, which encompassed 64–256 Hz. Furthermore, speech understanding in noise improved systematically as the acoustic bandwidth increased up to the wideband condition, suggesting that amplification should be provided at all aidable frequencies for maximum benefit. Consistent with Zhang et al. (2010), Sheffield and Gifford (2014) reported that the minimum acoustic bandwidth necessary for significant bimodal benefit was < 125 Hz for male talkers in noise and < 250 Hz for female talkers in noise and male talkers in quiet. Additionally, bimodal benefit increased significantly with increasing acoustic bandwidth up to < 500 Hz for male talkers in noise and up to < 750 Hz for female talkers in noise and male talkers in quiet. Performance also increased beyond 750 Hz; however, this improvement failed to reach statistical significance. More recent data from Neuman et al. (2019) provided further support for the finding that the broadest acoustic bandwidth yields maximum bimodal benefit. Thus, for speech perception, converging evidence suggests that traditional, wideband amplification should be recommended for patients with bimodal hearing.

In contrast, Messersmith et al. (2015) demonstrated that, for select individuals, amplification over a restricted bandwidth (< 2000 Hz) resulted in optimal performance. These results were based on a small, highly selective sample (n = 6) of participants who were chosen because of their poor performance with wideband amplification. In fact, three of the six participants had thresholds greater than 110 dB HL above 2000 Hz, and thus, cochlear dead regions (DRs; regions of the cochlea with minimal to no functional inner hair cells and/or neurons) were presumed to be present. Similarly, data from Zhang et al. (2014) suggest that a restricted bandwidth yielded perceptual improvement when high-frequency DRs were present. Specifically, individuals with confirmed cochlear DRs demonstrated significant improvement in speech recognition in quiet and in noise, as well as improved subjective speech sound quality, with a restricted hearing aid (HA) bandwidth. In contrast, individuals without cochlear DRs demonstrated best performance with a wide HA bandwidth (Zhang et al., 2014). Other studies have likewise shown that select bimodal listeners exhibit significant improvements in speech recognition in quiet and in noise with a restricted HA bandwidth, though this was not the norm (Neuman & Svirsky, 2013; Neuman et al., 2019).

Taken together, the existing literature suggests that, for most patients, amplifying the broadest range possible generally yields optimal speech perception performance. However, for some, there can be diminishing returns with increased bandwidth, or even a decrement in performance for which a restricted acoustic bandwidth would be recommended (e.g., Davidson et al., 2015; Messersmith et al., 2015; Zhang et al., 2014).

To our knowledge, there has been no systematic evaluation of the effect of acoustic bandwidth in the music domain for bimodal listeners; however, both Zhang et al. (2014) and El Fata et al. (2009) shed light on this issue indirectly. In the study by Zhang et al. (2014), music sound quality was judged to be optimal when a restricted bandwidth was used for listeners with DRs, a finding consistent with results in the speech domain. El Fata et al. (2009) investigated the effect of degree of hearing loss in the nonimplanted ear on bimodal benefit for song identification, dividing their participants into two groups according to degree of hearing loss. Although DRs were not explicitly evaluated, their findings indicate that the degree of residual acoustic hearing may affect bimodal benefit and, further, may influence the optimal acoustic bandwidth. Further investigation into the effect of acoustic bandwidth is needed in order to establish evidence-based clinical guidelines for optimal music perception in a bimodal configuration.

With respect to the earlier speech perception literature, it should be noted that all 12 participants in the Sheffield and Gifford (2014) study had audiometric thresholds better than or equal to 80 dB HL for frequencies 125–500 Hz. Similarly, in the study by Neuman et al. (2019), nearly all (20 out of 23 participants) had thresholds better than or equal to 80 dB HL at or below 500 Hz. In contrast, this was true for only five of the 14 total participants in the study by El Fata et al. (2009). Because of the reduced peripheral auditory fidelity often associated with severe-to-profound thresholds, it is possible that, for the other nine listeners, a narrower bandwidth may actually yield improved performance, as suggested by Zhang et al. (2014) and Messersmith et al. (2015).

Key Structural Features of Music

While it is clear that CI performance tends to improve with access to acoustic hearing, we must further explore how this benefit relates to specific musical features that differ from those of speech. Music has five key structural features: rhythm (a regular, repeated pattern of sounds), pitch (the perceptual correlate of frequency), melody (pitches played sequentially), harmony (pitches played concurrently), and timbre (the perceptual attribute used to differentiate sounds of the same pitch, loudness, and duration). With respect to rhythm perception, CI listeners generally perform comparably to NH listeners on basic rhythmic tasks (Gfeller et al., 1997; Hsiao & Gfeller, 2012; Kong et al., 2004), though performance may diminish with increased task complexity (Jiam & Limb, 2019; Kong et al., 2004; Phillips-Silver et al., 2015; Reynolds & Gifford, 2019). Further research is warranted to determine how much benefit, if any, CI listeners may receive from residual acoustic hearing on more complex rhythm perception tasks.

In contrast to rhythm, perception of pitch, melody, and harmony often improves significantly with the addition of acoustic hearing (Brockmeier et al., 2010; Cheng et al., 2018; Crew et al., 2015; Cullington & Zeng, 2011; Dorman et al., 2008; El Fata et al., 2009; Gantz, 2005; Gfeller et al., 2006, 2007, 2008, 2012; Kong et al., 2005; Parkinson et al., 2019; Sucher & McDermott, 2009). Similarly, timbre perception tends to improve with the addition of acoustic hearing, albeit to a lesser degree (Kong et al., 2012; Parkinson et al., 2019; Yüksel et al., 2019). In an investigation of children with bimodal hearing, Yüksel et al. (2019) found timbre perception performance to be about 14 percentage points better than that reported in a previous study of children using CI-only listening (48% vs. 34%, from Jung et al., 2012). A notable limitation of that study is that it lacked a within-subject analysis (e.g., CI-alone vs. bimodal performance); thus, it is possible, albeit unlikely, that the difference in scores was simply due to differences between samples. Still, any improvement with residual hearing could be expected to correlate with the strength of temporal fine structure (TFS) representation in the non-CI ear. To that end, Kong et al. (2012) aimed to determine the relative contributions of spectral and temporal envelope cues to timbre perception in a group of bimodal and bilateral CI recipients. The authors reported that three of the seven bimodal participants demonstrated increased reliance on the spectral envelope cue, compared with only one bilateral CI participant, which suggests a possible advantage of bimodal hearing over electric-only hearing on this task.

We would be short-sighted, however, if our assessments were limited solely to those features of music in isolation. In practice, several structural features are combined and integrated in meaningful and strategic ways to shape sound quality, musical emotion perception, and ultimately overall music appreciation. A number of studies have demonstrated poorer musical emotion perception among CI listeners when compared to those with residual acoustic hearing (Caldwell et al., 2015; D'Onofrio et al., 2020; Giannantonio et al., 2015). Furthermore, CI recipients' reports of dissatisfaction with music listening or unpleasant sound quality underscore the shortcomings of current CI processing for music appreciation (Lassaletta et al., 2007; Mirza et al., 2003). For the reasons discussed above, residual acoustic hearing can improve musical emotion perception, sound quality, and overall music appreciation, as listeners are able to make greater use of spectral information from the acoustic hearing ear, namely, greater access to fundamental frequency (F0) and TFS (D'Onofrio et al., 2020; Giannantonio et al., 2015; Plant & Babic, 2016; Shirvani et al., 2016).

This Study

In order to provide evidence-based services to bimodal patients, we must systematically investigate the acoustic information that is both minimally and optimally beneficial for bimodal listening. Because music is a spectrally and temporally complex signal, it provides a uniquely useful stimulus for examining fitting optimization, particularly for complex signal processing. The current study extends earlier work by Sheffield and Gifford (2014) to examine the effect of low-frequency acoustic bandwidth on music perception. The primary aim of the study is to determine the minimum and optimum acoustic bandwidth necessary to obtain bimodal benefit for timbre perception, musical sound quality, and speech recognition. Our primary hypothesis is that bimodal benefit will increase with increasing audible acoustic bandwidth in the nonimplanted ear.

Method

Participants

Participants included 12 adult bimodal listeners (seven men, five women) and 12 NH adult controls (two men, 10 women). An NH control group was added, particularly for the music assessments, as the sound quality measure utilized here was novel and had not yet been validated or normed for a group of NH listeners. The Ollen Musical Sophistication Index (Ollen, 2006) was completed by all participants to quantify individual musical training and aptitude. The Ollen Musical Sophistication Index consists of 10 items and indicates the probability that a music expert would consider the individual as “more” or “less musically sophisticated.” Scores over 500 are considered “more musically sophisticated”; scores less than 500 are considered “less musically sophisticated.” Participants from both groups were largely considered “less musically sophisticated,” and an independent-samples t test confirmed that there was no significant difference in musical background between groups, t(22) = –0.809, p = .43. Tables 1 and 2 include additional demographic information for the NH and bimodal participants, respectively. Serial audiograms leading up to implantation were not available for most participants, and thus, duration of deafness is an approximation based on patient report, ranging from 5 months to 50 years.

Table 1.

NH participant demographic information.

| Participant | Age (years) | OMSI |
|---|---|---|
| 1 | 24 | 299 |
| 2 | 24 | 127 |
| 3 | 28 | 139 |
| 4 | 23 | 323 |
| 5 | 23 | 97 |
| 6 | 56 | 998 |
| 7 | 62 | 208 |
| 8 | 56 | 165 |
| 9 | 63 | 953 |
| 10 | 22 | 99 |
| 11 | 25 | 432 |
| 12 | 22 | 46 |
| M | 36 | 323.83 |
| SD | 17.60 | 323.81 |

Note. NH = normal-hearing; OMSI = Ollen Musical Sophistication Index.

Table 2.

Bimodal participant demographic information.

| Participant | Age (yrs) | Manufacturer | Internal | Implant ear | Etiology | Strategy | OMSI |
|---|---|---|---|---|---|---|---|
| 1 | 52 | Cochlear | CI512 | R | Mumps | ACE | 105 |
| 2 | 80 | Cochlear | CI512 | L | Sudden SNHL | ACE | 400 |
| 3 | 65 | AB | Mid-Scala | R | Meniere's disease | Optima-S | 16 |
| 4 | 80 | Cochlear | CI24RE (CA) | L | Unknown | ACE | 300 |
| 5 | 36 | AB | Mid-Scala | L | Unknown | Optima-S | 184 |
| 6 | 70 | AB | Mid-Scala | R | Unknown | Optima-S | 110 |
| 7 | 49 | AB | SlimJ | L | Meniere's disease | Optima-S | 988 |
| 8 | 57 | AB | Mid-Scala | L | Unknown | Optima-S | 250 |
| 9 | 59 | AB | Mid-Scala | R | Meniere's disease | Optima-S | 21 |
| 10 | 26 | AB | Mid-Scala | L | Unknown | Optima-S | 61 |
| 11 | 80 | AB | Mid-Scala | L | Unknown | Optima-S | 169 |
| 12 | 44 | AB | Mid-Scala | L | Unknown | Optima-P | 108 |
| M | 58 | | | | | | 226 |
| SD | 18 | | | | | | 266 |

Note. yrs = years; OMSI = Ollen Musical Sophistication Index; R = right; ACE = Advanced Combination Encoder; L = left; AB = Advanced Bionics; SNHL = sensorineural hearing loss.
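The group comparison of musical background reported in the Participants section can be checked directly from the OMSI columns of Tables 1 and 2. The sketch below assumes a Student's (pooled-variance) independent-samples t test, which is consistent with the reported 22 degrees of freedom; the function name is illustrative, not the authors' analysis code.

```python
import math
import statistics

# OMSI scores transcribed from Table 1 (NH) and Table 2 (bimodal).
NH_OMSI = [299, 127, 139, 323, 97, 998, 208, 165, 953, 99, 432, 46]
BIMODAL_OMSI = [105, 400, 16, 300, 184, 110, 988, 250, 21, 61, 169, 108]


def pooled_t_test(a, b):
    """Student's independent-samples t test (equal variances assumed).

    Returns (t, df) for the difference mean(a) - mean(b).
    """
    n_a, n_b = len(a), len(b)
    df = n_a + n_b - 2
    # Pooled variance weights each group's sample variance by its degrees of freedom.
    pooled_var = ((n_a - 1) * statistics.variance(a)
                  + (n_b - 1) * statistics.variance(b)) / df
    se = math.sqrt(pooled_var * (1 / n_a + 1 / n_b))
    t = (statistics.mean(a) - statistics.mean(b)) / se
    return t, df


t, df = pooled_t_test(BIMODAL_OMSI, NH_OMSI)
print(f"t({df}) = {t:.3f}")  # t(22) = -0.809
```

The sign of t reflects the order of subtraction (bimodal minus NH); reversing the arguments gives the same magnitude with a positive sign.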

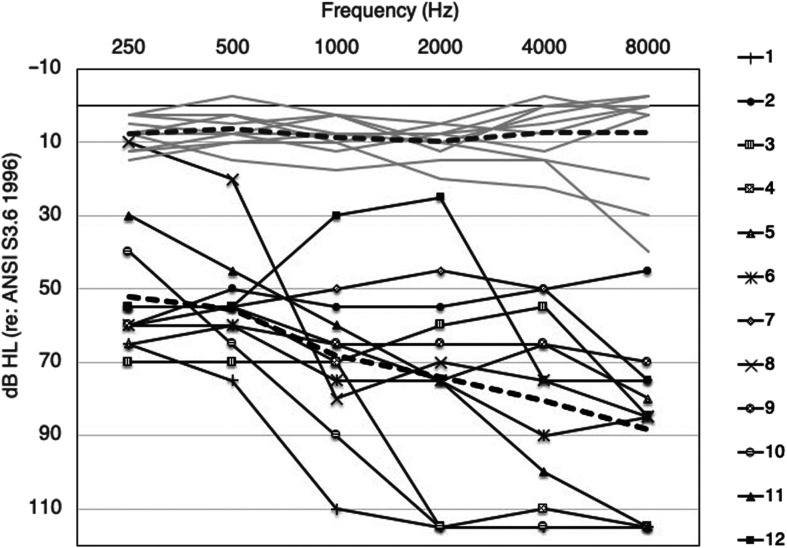

NH was defined as audiometric thresholds ≤ 25 dB HL between 250 and 4000 Hz, bilaterally. A Grason-Stadler GSI 61 audiometer was used for all hearing evaluations. ER-3A insert earphones were used for all acoustic ear testing. CI-aided thresholds were tested in the sound field, and thresholds were between 20 and 30 dB HL from 250 to 6000 Hz for all qualifying participants. Audiometric thresholds for both the NH and bimodal groups are shown in Figure 1. For the NH group, the right and left ears were averaged together, and for the bimodal group, thresholds are shown for the nonimplanted ear only.

Figure 1.

Audiometric thresholds for NH (right and left ears averaged; solid light gray lines) and bimodal listeners (nonimplanted ear only; solid dark gray lines with symbols). Group means for NH and bimodal listeners are shown in light and dark gray, respectively. *NH defined as thresholds ≤ 25 dB HL through 4 kHz. NH = normal hearing.

Low-frequency pure-tone average (LFPTA), pure-tone average (PTA), and high-frequency PTA (HFPTA) for individual bimodal participants are shown in Table 3. LFPTA is defined as the average of thresholds at 125, 250, and 500 Hz. PTA is the average of 500, 1000, and 2000 Hz, and HFPTA is the average of 4000, 6000, and 8000 Hz.

Table 3.

LFPTA, PTA, and HFPTA for individual bimodal participants.

| Participant | LFPTA | PTA | HFPTA |
|---|---|---|---|
| 1 | 67 | 100 | 115 |
| 2 | 57 | 53 | 48 |
| 3 | 70 | 67 | 70 |
| 4 | 53 | 80 | 113 |
| 5 | 63 | 67 | 72 |
| 6 | 55 | 70 | 87 |
| 7 | 55 | 50 | 63 |
| 8 | 15 | 57 | 83 |
| 9 | 57 | 62 | 70 |
| 10 | 45 | 90 | 115 |
| 11 | 37 | 60 | 110 |
| 12 | 53 | 37 | 80 |
| M | 52 | 66 | 86 |
| SD | 15 | 18 | 23 |

Note. All values are represented in dB HL. LFPTA = low-frequency pure-tone average; PTA = pure-tone average; HFPTA = high-frequency PTA.
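The three averages in Table 3 follow the frequency definitions given above. As a minimal sketch, the function below computes them from a single audiogram stored as a frequency-to-threshold mapping; the audiogram in the example is hypothetical and does not belong to any study participant.

```python
from statistics import mean

# Frequencies (Hz) averaged for each measure, as defined in the text.
PTA_DEFINITIONS = {
    "LFPTA": (125, 250, 500),
    "PTA": (500, 1000, 2000),
    "HFPTA": (4000, 6000, 8000),
}


def pure_tone_averages(thresholds_db_hl):
    """Return LFPTA, PTA, and HFPTA (dB HL) from a {frequency_hz: threshold} audiogram."""
    required = {f for freqs in PTA_DEFINITIONS.values() for f in freqs}
    missing = required - set(thresholds_db_hl)
    if missing:
        raise ValueError(f"missing thresholds at {sorted(missing)} Hz")
    return {name: mean(thresholds_db_hl[f] for f in freqs)
            for name, freqs in PTA_DEFINITIONS.items()}


# Hypothetical audiogram (dB HL), for illustration only.
example = {125: 40, 250: 50, 500: 60, 1000: 70, 2000: 80,
           4000: 90, 6000: 100, 8000: 110}
print(pure_tone_averages(example))
```

Note that 500 Hz contributes to both LFPTA and PTA, so the two averages are not independent.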

All testing was completed with each participant's CI programmed to their everyday user settings. When the CI ear was tested alone, the contralateral ear was plugged with a 3M Classic foam earplug to prevent the non-CI ear from contributing. In addition, DRs in the non-CI ear were assessed using the Threshold Equalizing Noise (TEN) test (Moore, 2010) and were characterized using a shift criterion of ≥ 10 dB. Testing determined that two participants had a DR at 750 Hz (Participants 1 and 8), two at 1500 Hz (Participants 4 and 10), and one at 4000 Hz (Participant 11).
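The text states only a ≥ 10-dB shift criterion. The sketch below assumes the two-part rule commonly used with the TEN(HL) test: a dead region is flagged at a frequency when the masked threshold is at least 10 dB above both the absolute (unmasked) threshold and the TEN level. The function name and all measurements in the example are hypothetical.

```python
def ten_hl_dead_region(absolute_db_hl, masked_db_hl, ten_db_hl, criterion_db=10.0):
    """Return True if one test frequency meets the assumed TEN(HL) dead-region rule.

    Assumed rule: the masked threshold is at least `criterion_db` above BOTH the
    absolute (unmasked) threshold and the TEN level.
    """
    shift = masked_db_hl - absolute_db_hl   # threshold shift produced by the TEN
    above_noise = masked_db_hl - ten_db_hl  # masked threshold relative to TEN level
    return shift >= criterion_db and above_noise >= criterion_db


# Hypothetical measurements (dB HL) per frequency (Hz):
# (absolute threshold, masked threshold in TEN, TEN level)
measurements = {
    500: (55, 72, 70),
    750: (60, 84, 70),
    1000: (65, 76, 70),
}
dead_regions = [f for f, (abs_thr, masked, ten) in measurements.items()
                if ten_hl_dead_region(abs_thr, masked, ten)]
print(dead_regions)  # [750]
```

At 500 Hz the masked threshold shifts by 17 dB but sits only 2 dB above the noise, so that frequency is not flagged; requiring both conditions is what separates a dead region from ordinary masking.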

All test procedures were explained to the participants, and written informed consent was obtained in accordance with the Vanderbilt Institutional Review Board. At the conclusion of the study, participants were compensated for their time.

Test Environment

All testing was completed in a single-walled sound-attenuation chamber.

Test Procedure

Music perception was assessed via measures of timbre perception and subjective sound quality of real-world music samples. Speech perception was assessed via monosyllabic word recognition in quiet. For listeners with NH, all stimuli were presented both binaurally and monaurally (via insert earphones). For monaural presentation, the test ear chosen was counterbalanced across participants and remained the same for a given individual throughout testing. For the bimodal group, all stimuli were presented CI alone (via direct audio input [DAI]) and bimodally (via DAI to the CI and via insert earphone to the non-CI ear).

For presentation to the non-CI ear, and in accordance with methods described by Sheffield and Gifford (2014), all stimuli were presented in the following filter conditions: < 125, < 250, < 500, and < 750 Hz, and wideband (WB; full, nonfiltered bandwidth). Thus, for each stimulus, there were six listening conditions in total (CI-alone, CI + 125, CI + 250, CI + 500, CI + 750, and CI + WB). Filtering was implemented in MATLAB using finite impulse response (FIR) filters of order 256, 512, or 1,024, chosen to achieve a 90-dB/octave roll-off in each filter condition. All stimuli were processed through each filter condition. To account for each participant's hearing loss, individual frequency shaping was applied according to the NAL-NL2 hearing aid prescriptive formula for a 65-dB SPL input level.
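The low-pass conditions can be illustrated with one common FIR design method, a windowed sinc with a Hamming window. This is a sketch only: the text does not state the design method, the sampling rate, or which filter order was paired with which cutoff, so the 44.1-kHz rate and the use of order 1,024 for every cutoff are assumptions, and this design is not claimed to reproduce the 90-dB/octave roll-off.

```python
import math

FS_HZ = 44_100                     # assumed sampling rate (not stated in the text)
CUTOFFS_HZ = (125, 250, 500, 750)  # low-pass filter conditions from the text


def lowpass_fir(cutoff_hz, fs_hz, order):
    """Windowed-sinc low-pass FIR (Hamming window); returns order + 1 taps."""
    if order % 2:
        raise ValueError("use an even order so the filter has a center tap")
    fc = cutoff_hz / fs_hz  # cutoff in cycles per sample
    mid = order // 2
    taps = []
    for n in range(order + 1):
        k = n - mid
        # Ideal low-pass impulse response, sampled and centered on the middle tap.
        ideal = 2 * fc if k == 0 else math.sin(2 * math.pi * fc * k) / (math.pi * k)
        hamming = 0.54 - 0.46 * math.cos(2 * math.pi * n / order)
        taps.append(ideal * hamming)
    dc_gain = sum(taps)
    return [h / dc_gain for h in taps]  # scale to exactly 0 dB at 0 Hz


def magnitude_response(taps, freq_hz, fs_hz):
    """Linear magnitude of the filter's frequency response at freq_hz."""
    w = 2 * math.pi * freq_hz / fs_hz
    re = sum(h * math.cos(w * n) for n, h in enumerate(taps))
    im = sum(h * math.sin(w * n) for n, h in enumerate(taps))
    return math.hypot(re, im)


def apply_fir(taps, signal):
    """Causal direct-form convolution; output has the same length as the input."""
    return [sum(h * signal[i - n] for n, h in enumerate(taps) if i - n >= 0)
            for i in range(len(signal))]


filters = {fc: lowpass_fir(fc, FS_HZ, order=1024) for fc in CUTOFFS_HZ}
for fc, taps in filters.items():
    gain_db = 20 * math.log10(magnitude_response(taps, 2 * fc, FS_HZ))
    print(f"< {fc} Hz: {gain_db:.1f} dB one octave above cutoff")
```

Because the taps are symmetric, the filter has linear phase and delays every frequency by order/2 samples (about 11.6 ms at the assumed rate). The direct convolution is written for clarity rather than speed; in practice a library FIR routine would be used on full-length stimuli.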

For presentation to the CI ear, unfiltered stimuli were presented via DAI. All Advanced Bionics participants used a Naida processor in daily life; however, a Harmony processor was used for laboratory testing to allow a DAI connection via the Direct Connect earhook. All Cochlear recipients were tested using N6 (CP910) processors equipped with a DAI port. The environmental microphone input was disabled during testing by using an AUX-only microphone setting.

All stimuli were delivered at the participants' “loud, but comfortable” level. For each stimulus, participants listened to a sample trial in one ear (the CI ear for bimodal participants; the right or left ear for NH participants) and were asked to rate perceived loudness on a categorical scale with the following descriptors: “barely audible,” “soft,” “comfortable,” “loud, but comfortable,” and “too loud.” The stimulus was then played to the opposite ear, and participants were asked to match its loudness. For the bimodal group, stimuli to the nonimplanted ear were always frequency-shaped according to NAL-NL2, as previously described. Once perceived loudness was matched, the experimenter played the sample to both ears simultaneously, and participants were asked whether the stimulus sounded balanced. In the bimodal group, loudness matching was always completed using the wideband condition in the nonimplanted ear, and the resulting level was used for all remaining filter conditions. We acknowledge that this may have caused the filtered conditions to be perceived as softer. However, two factors precluded loudness matching for each filtered condition: (a) for the narrowest conditions, the required gain would likely exceed the limits of the equipment, and (b) the output at specific frequencies would vary substantially across the filtered conditions, thereby confounding the question of interest. Absolute presentation levels ranged from 70 to 85 dB SPL for NH listeners and from 85 to 105 dB SPL for bimodal listeners.

Measure of Timbre Perception

Timbre perception was measured using the Timbre Identification subtest of the University of Washington Clinical Assessment of Music Perception test (UW-CAMP; Kang et al., 2009). Each stimulus was the same five-note sequence (C4–A4–F4–G4–C5) played at 82 bpm and recorded at a mezzo forte dynamic. The participant's task was to identify the instrument from a closed set of eight instruments, yielding an overall percent correct score. The eight instruments were cello, clarinet, flute, guitar, piano, saxophone, trumpet, and violin. Prior to testing, each instrument was played for the participant three times, with the name of the instrument displayed for familiarization. Each test trial consisted of 24 questions, with one trial per listening condition. For the NH group, listening conditions consisted of binaural and monaural presentations. For the bimodal group, listening conditions included CI-alone, CI + 125, CI + 250, CI + 500, CI + 750, and CI + WB.

Measures of Sound Quality

Two tasks were used to assess subjective sound quality of real-world music. The first consisted of experimenter-chosen music selections. Song samples were chosen from a preselected library of songs consisting of various genres categorized in accordance with the record label's description. The genres for selection included alternative, blues, disco/electronic, hip-hop/rap, jazz, pop, rhythm & blues, and rock. Two songs were chosen from each genre for task presentation, resulting in a total of 16 songs. The clips were 20 s in duration, and each participant was instructed to rate sound quality directly following stimulus presentation on an 11-point scale ranging from 0 to 10, with the following descriptive anchors: 1 = very bad, 3 = rather bad, 5 = midway (neutral), 7 = rather good, and 9 = very good. Each test trial consisted of 16 questions, with one trial per listening condition. For the NH group, listening conditions consisted of binaural and monaural presentations. For the bimodal group, listening conditions included CI-alone, CI + 125, CI + 250, CI + 500, CI + 750, and CI + WB. The mean overall rating, per trial, was used for subsequent analyses.

The second assessment included participant-chosen music selections. Participants were asked to choose two of their favorite songs and provide the title and artist to the experimenter prior to the test session. The participant-chosen music task was added in an effort to test listeners on music representative of what they listen to in the real world. Because music sound quality ratings can be influenced by individual factors (e.g., musical training, familiarity with musical styles and/or genres, demographic variables, and personality characteristics; Ginocchio, 2009; LeBlanc et al., 1999; Nater et al., 2006; Robinson et al., 1996), participant-chosen songs were also included to control for any bias that might otherwise arise when participants judge the sound quality of songs they do not enjoy or are unfamiliar with. The test procedure for the participant-chosen selections was identical to that previously described for the experimenter-chosen selections.

Measure of Speech Recognition

In an attempt to replicate a portion of the study by Sheffield and Gifford (2014), consonant–nucleus–consonant (CNC) monosyllabic words (Peterson & Lehiste, 1962) were used to assess speech recognition in quiet for all listening conditions. This measure also served as a within-subject comparison of the cues contributing to bimodal benefit for speech recognition and music perception. The CNC test battery includes 10 phonemically balanced lists, each containing 50 words. The words are spoken by a male talker with a mean F0 around 123 Hz and an SD of 17 Hz, as reported by Zhang et al. (2010). Testing consisted of one trial (50-word list) per listening condition. For the bimodal group, listening conditions included CI-alone, CI + 125, CI + 250, CI + 500, CI + 750, and CI + WB. NH listeners were not tested on the speech recognition task. A summary of experimental tasks is shown in Table 4.

Table 4.

Experimental tasks.

| Tasks | | | Listening conditions | |
|---|---|---|---|---|
| Timbre perception | Sound quality | Word recognition | Bimodal | NH |
| UW-CAMP Timbre Identification subtest | Experimenter-chosen music; participant-chosen music | CNC monosyllabic words | CI-alone (DAI); CI + 125 Hz; CI + 250 Hz; CI + 500 Hz; CI + 750 Hz; CI + WB | Binaural; monaural |

Note. NH = normal hearing; UW-CAMP = University of Washington Clinical Assessment of Music Perception test; CNC = consonant–nucleus–consonant; CI = cochlear implant; DAI = direct audio input; WB = wideband.

Data Analysis

Statistical Tests

A power analysis was completed to justify the sample size. The response variable (task performance) was continuous and was measured in matched pairs (CI-alone and bimodal conditions within each participant), with acoustic bandwidth, itself a continuous variable, classified into discrete filter conditions. Our pilot data indicated that the difference in the response between conditions was normally distributed with an SD of 10.9. If the true difference in the mean response of matched pairs was 12.5, as observed in our pilot data, eight participants (each tested in the CI-alone and bimodal conditions) would be needed to reject the null hypothesis that this response difference is zero with a probability (power) of 0.8. The Type I error probability associated with the test of this null hypothesis is 0.05. To account for potential attrition, we increased enrollment by 50%, to 12 participants. An equal number of participants was included in the NH control group.
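The sample-size statement can be sanity-checked by simulation. The text does not name the power software used; power programs typically compute this exactly from the noncentral t distribution, whereas the sketch below substitutes a Monte Carlo estimate for a two-sided paired t test under the stated pilot assumptions (mean difference 12.5, SD of differences 10.9, α = .05). The critical value 2.3646 is the standard two-sided .05 value for df = 7; the seed and number of simulations are arbitrary choices.

```python
import math
import random

MEAN_DIFF = 12.5  # pilot-data mean difference between matched conditions
SD_DIFF = 10.9    # pilot-data SD of the paired differences
N_PARTICIPANTS = 8
T_CRIT_DF7 = 2.3646  # two-sided alpha = .05, df = 8 - 1 = 7 (standard t table)


def simulated_paired_t_power(mean_diff, sd_diff, n, t_crit, n_sims=10_000, seed=2024):
    """Monte Carlo power of a two-sided paired t test (one-sample t on differences)."""
    rng = random.Random(seed)
    rejections = 0
    for _ in range(n_sims):
        diffs = [rng.gauss(mean_diff, sd_diff) for _ in range(n)]
        m = sum(diffs) / n
        sd = math.sqrt(sum((d - m) ** 2 for d in diffs) / (n - 1))
        t_stat = m / (sd / math.sqrt(n))
        if abs(t_stat) > t_crit:
            rejections += 1
    return rejections / n_sims


power = simulated_paired_t_power(MEAN_DIFF, SD_DIFF, N_PARTICIPANTS, T_CRIT_DF7)
print(f"Simulated power with {N_PARTICIPANTS} participants: {power:.2f}")
```

The estimate should land close to the 0.8 target. Rerunning with n = 12 and the df = 11 critical value (2.201) shows the additional margin provided by the enlarged enrollment, assuming no attrition.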

The IBM SPSS Statistics Version 25 and GraphPad Prism 7.0 software programs were utilized for statistical analyses. Data analysis focused on within-subject and between-group performance and rating differences. Analyses were completed using mixed-model and repeated-measures analyses of variance (ANOVAs) and paired comparisons based on a priori hypotheses. Among bimodal listeners, within-subject conditions of comparison included CI-alone, CI + 125, CI + 250, CI + 500, CI + 750, and CI + WB. An additional condition, termed CI + Best, was included for DR analyses. CI + Best was defined as the filter condition for which the participant obtained the highest performance. For example, if Participant 1's scores on a given measure were the following: CI-alone = 20%, CI + 125 = 25%, CI + 250 = 30%, CI + 500 = 35%, CI + 750 = 55%, and CI + WB = 45%, the CI + 750 condition (55%) would be considered this individual's CI + Best condition for that particular stimulus. Additional analyses focused on correlations between bimodal benefit and pure-tone thresholds. The strength of the correlations was described according to Cohen's (1988) conventions quantifying effect size. In all cases, bimodal benefit was defined as the difference between scores in the bimodal condition and scores in the CI-alone condition.
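The CI + Best and bimodal benefit definitions above can be expressed compactly. In this sketch, the scores are the hypothetical Participant 1 values from the worked example in the text; how ties between filter conditions are resolved is not specified in the source, so resolving them toward the narrower bandwidth is an assumption. Cohen's (1988) benchmarks for correlations (.10 small, .30 medium, .50 large) are included for labeling effect sizes.

```python
CI_ALONE = "CI-alone"
FILTER_CONDITIONS = ("CI + 125", "CI + 250", "CI + 500", "CI + 750", "CI + WB")


def ci_best(scores):
    """Return (condition, score) for the best-performing bimodal filter condition.

    Ties resolve to the narrower bandwidth (an assumption; the text does not say).
    """
    best = max(FILTER_CONDITIONS, key=lambda condition: scores[condition])
    return best, scores[best]


def bimodal_benefit(scores, condition):
    """Bimodal benefit = score in a bimodal condition minus the CI-alone score."""
    return scores[condition] - scores[CI_ALONE]


def cohen_r_label(r):
    """Label |r| with Cohen's (1988) benchmarks: .10 small, .30 medium, .50 large."""
    magnitude = abs(r)
    if magnitude >= 0.5:
        return "large"
    if magnitude >= 0.3:
        return "medium"
    if magnitude >= 0.1:
        return "small"
    return "below small"


# Hypothetical Participant 1 scores (percent correct) from the worked example.
p1 = {"CI-alone": 20, "CI + 125": 25, "CI + 250": 30,
      "CI + 500": 35, "CI + 750": 55, "CI + WB": 45}
condition, score = ci_best(p1)
print(condition, score, bimodal_benefit(p1, condition))  # CI + 750 55 35
```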

Results

Measure of Timbre Perception

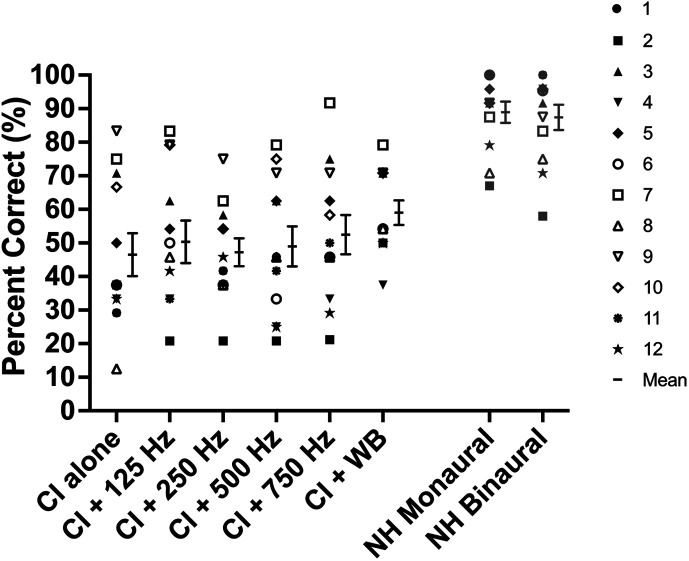

Figure 2 shows results from the timbre perception task. For the NH listeners, a paired-samples t test revealed no significant difference in performance between the binaural and monaural conditions, t(11) = 1.123, p = .29.

Figure 2.

Mean and individual timbre identification scores in percent correct. Bimodal and NH groups are shown in black and gray, respectively. Error bars represent ± 1 SEM. CI = cochlear implant; NH = normal hearing.

Best performance among bimodal listeners was achieved in the CI + WB condition, though this was still 28 and 30 percentage points poorer than NH performance in the monaural and binaural conditions, respectively. Mean performance differences across the six listening conditions (CI-alone, CI + 125, CI + 250, CI + 500, CI + 750, and CI + WB) were assessed using a repeated-measures ANOVA, which revealed a significant effect of listening condition, F(5, 55) = 3.401, p < .01, ηp² = .24. Follow-up pairwise comparisons using Bonferroni adjustment for multiple comparisons revealed a significant difference between the CI + 250 and CI + WB conditions (p < .02). All other comparisons failed to reach statistical significance. Taken together, there was a trend toward improved performance with increasing acoustic bandwidth, and significant improvement was observed for the CI + WB condition, but only when compared to the CI + 250 condition. Mean timbre perception scores for the NH and bimodal listeners are displayed in Tables 5 and 6, respectively.
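With six listening conditions there are 15 possible pairwise comparisons. Assuming all 15 pairs were tested (the text says only that pairwise comparisons were Bonferroni-adjusted), the adjustment multiplies each raw p value by 15, capped at 1, which is equivalent to testing each raw p value against .05/15. The p values in the test are hypothetical.

```python
from itertools import combinations

CONDITIONS = ("CI-alone", "CI + 125", "CI + 250", "CI + 500", "CI + 750", "CI + WB")
PAIRS = list(combinations(CONDITIONS, 2))  # all pairwise comparisons


def bonferroni_adjust(raw_p_values):
    """Bonferroni: multiply each raw p value by the number of tests, capped at 1."""
    m = len(raw_p_values)
    return [min(1.0, p * m) for p in raw_p_values]


alpha_per_test = 0.05 / len(PAIRS)  # equivalent threshold on the raw p values
print(len(PAIRS), round(alpha_per_test, 4))  # 15 0.0033
```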

Table 5.

Mean timbre perception scores and sound quality ratings for the normal-hearing group.

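| Task | Listening condition | M |
|---|---|---|
| Timbre | Binaural | 89% |
| | Monaural | 87% |
| Sound quality (E) | Binaural | 6.8 |
| | Monaural | 6.5 |
| Sound quality (P) | Binaural | 7.9 |
| | Monaural | 7.5 |

Note. E = experimenter-chosen songs; P = participant-chosen songs. Timbre scores are percent correct; sound quality ratings are on the 0–10 scale.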

Table 6.

Mean timbre perception scores, sound quality ratings, and speech recognition scores for the bimodal group.

| Task | Listening condition | M |
|---|---|---|
| Timbre | CI-alone | 47% |
| | CI + 125 | 50% |
| | CI + 250 | 47% |
| | CI + 500 | 49% |
| | CI + 750 | 53% |
| | CI + WB | 59% |
| Sound quality (E) | CI-alone | 4.8 |
| | CI + 125 | 5.3 |
| | CI + 250 | 5.6 |
| | CI + 500 | 5.9 |
| | CI + 750 | 6.2 |
| | CI + WB | 6.9 |
| Sound quality (P) | CI-alone | 5.7 |
| | CI + 125 | 6.2 |
| | CI + 250 | 6.7 |
| | CI + 500 | 7.3 |
| | CI + 750 | 7.5 |
| | CI + WB | 8.6 |
| Word recognition | CI-alone | 63% |
| | CI + 125 | 69% |
| | CI + 250 | 72% |
| | CI + 500 | 74% |
| | CI + 750 | 79% |
| | CI + WB | 85% |

Note. Timbre and word recognition scores are in percent correct. E = experimenter-chosen songs; P = participant-chosen songs; CI = cochlear implant; WB = wideband.

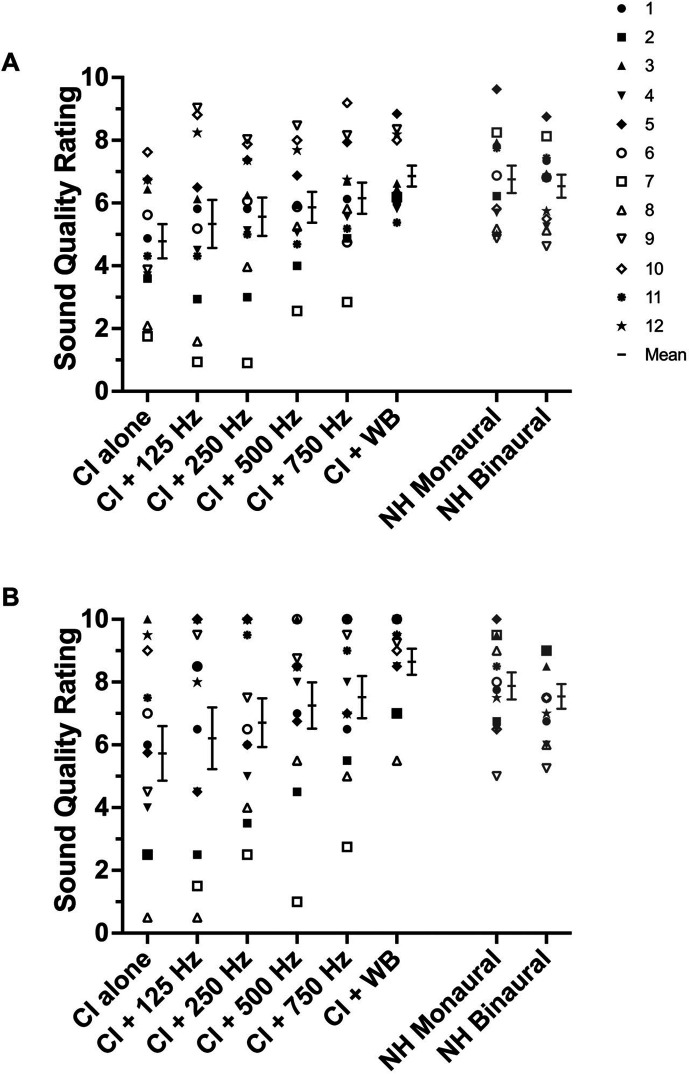

Measures of Sound Quality

Figures 3A and 3B show results from the sound quality task. Among NH listeners, sound quality ratings were examined using a two-way repeated-measures ANOVA with the two factors being listening condition (binaural, monaural) and stimulus type (experimenter-chosen, participant-chosen). Analysis revealed a significant main effect of stimulus type, F(1, 11) = 25.691, p < .01, ηp² = .70, but no significant effect of listening condition, F(1, 11) = 1.536, p = .24, ηp² = .12, and no significant interaction effect, F(1, 11) = 0.118, p = .74, ηp² = .01. Thus, listeners with NH rated participant-chosen songs significantly higher than experimenter-chosen songs, for both binaural and monaural presentations.

Figure 3.

(A) Mean and individual sound quality ratings for experimenter-chosen songs. (B) Mean and individual sound quality ratings for participant-chosen songs. Bimodal and NH groups are shown in black and gray, respectively. Error bars represent ± 1 SEM. CI = cochlear implant; NH = normal hearing.

With respect to the bimodal group, ratings improved steadily with the addition of more acoustic information, reaching a level comparable to NH ratings for experimenter-chosen selections and exceeding NH ratings for participant-chosen selections. Independent-samples t tests comparing ratings in the CI + WB condition to NH ratings in the binaural condition revealed no significant difference between groups for either the experimenter-chosen songs, t(22) = 0.194, p = .85, or the participant-chosen songs, t(22) = 1.282, p = .21. Thus, NH and bimodal listeners in the CI + WB condition did not differ significantly in their sound quality ratings. A two-way repeated-measures ANOVA was used to examine mean sound quality ratings in the bimodal group with the two factors being listening condition (CI-alone, CI + 125, CI + 250, CI + 500, CI + 750, and CI + WB) and stimulus type (experimenter-chosen, participant-chosen). A Greenhouse–Geisser correction was applied to the interaction effect to correct for departures from sphericity. Analysis revealed significant main effects of stimulus type, F(1, 11) = 7.684, p < .02, ηp² = .41, and listening condition, F(5, 55) = 8.418, p < .01, ηp² = .43. There was no significant interaction effect, F(2.470, 27.168) = 1.262, p = .30, ηp² = .10. Follow-up pairwise comparisons on the main effect of listening condition were completed with Bonferroni correction. A significant difference was found between the CI-alone and CI + WB conditions (p < .01); no other significant differences were observed. Thus, these results indicate that, for musical sound quality, WB amplification was required for significant bimodal benefit. Additionally, consistent with the NH results, participant-chosen songs were rated significantly higher than experimenter-chosen songs across listening conditions. Mean sound quality ratings for the NH and bimodal listeners are displayed in Tables 5 and 6, respectively.
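With six listening conditions and 12 participants, the uncorrected degrees of freedom for the listening-condition effect are k − 1 = 5 and (k − 1)(n − 1) = 55. The 6 × 2 interaction has the same df, because (6 − 1)(2 − 1) = 5. The Greenhouse–Geisser correction multiplies both values by the estimated ε, so the ε implied by a reported pair of corrected df can be recovered by division. The sketch below is a back-calculation under that assumption. It does not estimate ε from raw data, and the helper names are our own.

```python
def uncorrected_df(k_levels: int, n_subjects: int) -> tuple[int, int]:
    """Effect and error df for a k-level within-subject effect.

    For a k x 2 within-subject interaction, (k - 1) * (2 - 1) = k - 1,
    so the same values apply.
    """
    df_effect = k_levels - 1
    df_error = df_effect * (n_subjects - 1)
    return df_effect, df_error


def gg_corrected_df(epsilon: float, df_effect: int, df_error: int) -> tuple[float, float]:
    """Greenhouse-Geisser correction: scale both df by epsilon."""
    return epsilon * df_effect, epsilon * df_error


df1, df2 = uncorrected_df(k_levels=6, n_subjects=12)  # (5, 55)

# Back-calculate epsilon from the reported corrected effect df, F(2.470, 27.168).
epsilon = 2.470 / df1
lower_bound = 1 / df1  # smallest possible epsilon for k = 6 levels

print(f"implied epsilon = {epsilon:.3f} (lower bound {lower_bound:.2f})")
print("corrected df = ({:.3f}, {:.3f})".format(*gg_corrected_df(epsilon, df1, df2)))
```

The recomputed error df (27.170) agrees with the reported 27.168 to rounding. This suggests an ε of roughly .49, well above the lower bound of .20.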

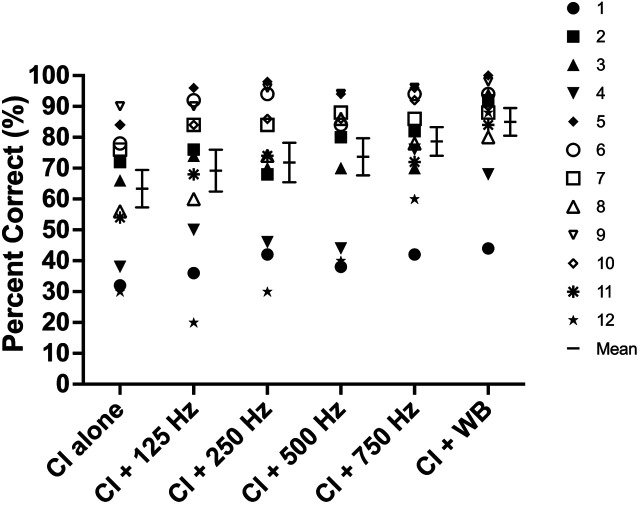

Measure of Speech Recognition

Figure 4 shows results for CNC word recognition. Performance among bimodal listeners improved steadily with the addition of more acoustic information. A repeated-measures ANOVA was used to examine mean CNC word scores in the bimodal group across the six listening conditions (CI-alone, CI + 125, CI + 250, CI + 500, CI + 750, and CI + WB). Analysis revealed a significant effect of listening condition, F(2.208, 24.287) = 10.127, p < .01, ηp² = .48. Follow-up pairwise comparisons with Bonferroni correction revealed significant improvement over CI-alone for the CI + 250 Hz (p < .04), CI + 500 Hz (p < .03), CI + 750 Hz (p < .01), and CI + WB (p < .01) conditions. Importantly, all other paired comparisons failed to reach significance. Thus, these results indicate that, for speech recognition in quiet, < 250 Hz is minimally required for bimodal benefit, though a trend toward further improvement with increasing acoustic bandwidth was also observed. Mean speech recognition scores for the bimodal listeners are displayed in Table 6.
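Six listening conditions yield 6 × 5 / 2 = 15 pairwise comparisons. A Bonferroni adjustment can be applied in either of two equivalent ways. One option is to multiply each raw p value by the number of comparisons, capping the result at 1. The other is to compare each raw p value to α divided by that number. The sketch below illustrates the arithmetic with a hypothetical raw p value; it is not output from the study's SPSS analysis.

```python
from itertools import combinations

CONDITIONS = ["CI-alone", "CI + 125", "CI + 250", "CI + 500", "CI + 750", "CI + WB"]
PAIRS = list(combinations(CONDITIONS, 2))  # every unordered pair of conditions


def bonferroni_adjust(p_raw: float, n_comparisons: int) -> float:
    """Bonferroni-adjusted p value, capped at 1."""
    return min(1.0, p_raw * n_comparisons)


alpha = 0.05
m = len(PAIRS)
print(m)  # 15
print(f"per-comparison alpha = {alpha / m:.4f}")

# Hypothetical raw p value, for illustration only.
p_raw = 0.002
print(f"adjusted p = {bonferroni_adjust(p_raw, m):.3f}")
```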

Figure 4.

Mean and individual consonant–nucleus–consonant word scores, in percent correct, for the bimodal group. Error bars represent ± 1 SEM. CI = cochlear implant.

DRs

DRs were present in five of the 12 participants. For timbre perception, the condition yielding optimal performance was CI + WB for four of those five participants. For sound quality, the optimal condition was CI + WB for those same four participants. For word recognition, the optimal condition was CI + WB for only two of the five participants with DRs. Future research with a larger sample size is needed to determine whether cochlear DRs may have a greater impact on optimal acoustic bandwidth for speech recognition than for music.

Bimodal Benefit and Pure-Tone Thresholds

Further analyses were completed to examine the relationship between bimodal benefit and pure-tone thresholds. Analyses were conducted with each participant's bimodal benefit score for the CI + WB condition. In all cases, partial correlations were computed while controlling for CI-alone (baseline) performance. LFPTA ranged from 15 to 70 dB HL. The partial correlations between LFPTA and bimodal benefit for CI + WB were nonsignificant for timbre perception (moderate; r = −.464, p = .15), sound quality (small; r = .276, p = .41), and word recognition (negligible; r = −.081, p = .81).
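Each of the partial correlations reported here controls for a single covariate (CI-alone performance). A first-order partial correlation can be computed from the three pairwise Pearson correlations. Its significance can then be tested with a t statistic on n − 3 degrees of freedom, which is 9 here if all 12 bimodal participants contributed. The sketch below uses hypothetical variable names: x for the threshold average, y for bimodal benefit, and z for the CI-alone score. It implements the textbook formulas and is not the SPSS procedure itself.

```python
import math


def partial_corr(r_xy: float, r_xz: float, r_yz: float) -> float:
    """First-order partial correlation of x and y, controlling for z."""
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz**2) * (1 - r_yz**2))


def partial_corr_t(r: float, n: int, n_covariates: int = 1) -> tuple[float, int]:
    """t statistic and df for testing a partial correlation against zero."""
    df = n - 2 - n_covariates
    t = r * math.sqrt(df / (1 - r**2))
    return t, df


# Reported partial r between PTA and bimodal benefit (word recognition), n = 12.
t, df = partial_corr_t(-0.691, n=12)
# Compare |t| with the two-tailed critical value t(9) = 2.262 at alpha = .05.
print(f"t({df}) = {t:.2f}")
```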

Because several participants had usable hearing above 500 Hz, traditional PTA and an HFPTA were also examined. The partial correlation between PTA and bimodal benefit for CI + WB was nonsignificant for timbre perception (small; r = −.168, p = .62) and for sound quality (moderate; r = −.330, p = .32). However, the relationship between PTA and bimodal benefit for CI + WB for word recognition was strong and significant (r = −.691, p < .02). The partial correlation between HFPTA and bimodal benefit for CI + WB was small and nonsignificant for timbre perception (r = −.288, p = .39) and moderate but nonsignificant for word recognition (r = −.489, p = .13). However, the relationship between HFPTA and bimodal benefit for CI + WB was strong and significant for sound quality (r = −.650, p < .03).
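Cohen's (1988) benchmarks for correlation coefficients are |r| = .10 (small), .30 (medium), and .50 (large). The sketch below applies those cutoffs to the coefficients in Table 7. Two choices in it are our own assumptions. First, each benchmark is treated as the lower edge of its category, although Cohen offered the values as rough guides rather than strict boundaries. Second, |r| < .10 is labeled "negligible", a category Cohen did not define.

```python
def cohen_r_label(r: float) -> str:
    """Classify |r| using Cohen's (1988) small/medium/large benchmarks."""
    size = abs(r)
    if size >= 0.50:
        return "large"
    if size >= 0.30:
        return "medium"
    if size >= 0.10:
        return "small"
    return "negligible"  # label for |r| < .10 is our own choice


# Partial correlations with bimodal benefit for CI + WB (Table 7).
table7 = {
    ("LFPTA", "Timbre"): -0.464,
    ("LFPTA", "Sound quality"): 0.276,
    ("LFPTA", "Word recognition"): -0.081,
    ("PTA", "Timbre"): -0.168,
    ("PTA", "Sound quality"): -0.330,
    ("PTA", "Word recognition"): -0.691,
    ("HFPTA", "Timbre"): -0.288,
    ("HFPTA", "Sound quality"): -0.650,
    ("HFPTA", "Word recognition"): -0.489,
}

for (average, task), r in table7.items():
    print(f"{average:>5} | {task:<16} r = {r:+.3f} -> {cohen_r_label(r)}")
```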

Thus, low-frequency audiometric thresholds in the nonimplanted ear were not significantly related to bimodal benefit for timbre perception, sound quality ratings, or word recognition. Midfrequency audiometric thresholds were significantly related to bimodal benefit for word recognition but not for timbre perception or sound quality ratings. High-frequency audiometric thresholds were significantly related to bimodal benefit for sound quality ratings but not for timbre perception or word recognition. All correlation results are summarized in Table 7.

Table 7.

Summary of correlation results.

| Task | Correlation | r or ρ | p |
|---|---|---|---|
| Timbre | LFPTA vs. bimodal benefit for CI + WB | −0.464 | ns |
| Sound quality | LFPTA vs. bimodal benefit for CI + WB | 0.276 | ns |
| Word recognition | LFPTA vs. bimodal benefit for CI + WB | −0.081 | ns |
| Timbre | PTA vs. bimodal benefit for CI + WB | −0.168 | ns |
| Sound quality | PTA vs. bimodal benefit for CI + WB | −0.330 | ns |
| Word recognition | PTA vs. bimodal benefit for CI + WB | −0.691 | < .02 |
| Timbre | HFPTA vs. bimodal benefit for CI + WB | −0.288 | ns |
| Sound quality | HFPTA vs. bimodal benefit for CI + WB | −0.650 | < .03 |
| Word recognition | HFPTA vs. bimodal benefit for CI + WB | −0.489 | ns |

Note. “ns” indicates a p value that is greater than .05 and was not statistically significant. LFPTA = low-frequency pure-tone average; CI = cochlear implant; WB = wideband; PTA = pure-tone average; HFPTA = high-frequency PTA.

Discussion

CI recipients may receive substantial perceptual benefit via the contribution of residual acoustic hearing; however, the effect of acoustic bandwidth for music listening has not been previously evaluated. To examine this issue, the current study presented stimuli to the non-CI ear in the following filter conditions: < 125, < 250, < 500, and < 750 Hz, and wideband (full bandwidth), with the primary aim being to determine the minimum and optimum acoustic bandwidth necessary to obtain bimodal benefit for timbre perception, musical sound quality, and speech recognition.

Timbre Perception

For NH listeners, no differences were noted between monaural and binaural listening. For bimodal listeners, mean timbre perception performance generally increased with increasing acoustic information; however, performance remained poorer than the NH group for all conditions tested.

The timbre perception task used in this study has previously been validated in NH and CI listeners, and our results were comparable to those of prior studies. Here, listeners with NH scored 87% and 89% in the monaural and binaural conditions, respectively, as compared to 88% reported previously by Kang et al. (2009). For CI-alone testing, listeners scored 45.5%, as compared to 45.0% and 43.2% reported by Kang et al. (2009) and Drennan et al. (2015), respectively. Importantly, scores for CI-alone listening were well above chance performance (12.5%), a result likely due to the salience of temporal envelope cues via CI processing. Indeed, timbre perception via CI-mediated listening tends to be better than performance on other measures of music perception (e.g., melody perception; Jung et al., 2012; Kang et al., 2009), an advantage that has largely been attributed to the contribution of temporal envelope cues associated with timbre.

We hypothesized that, with the addition of acoustic hearing, listeners would benefit from greater access to important timbral cues not otherwise available via the CI (e.g., TFS; Limb & Roy, 2014; Moore, Glasberg, Flanagan, et al., 2006; Moore, Glasberg, & Hopkins, 2006). Consistent with our hypothesis, performance improved with increasing acoustic bandwidth; specifically, we noted significant improvement with use of wideband amplification as compared to the < 250-Hz low-pass filter condition. These results are consistent with previous work using the same measure of timbre perception. Yüksel et al. (2019) demonstrated a 14-percentage-point improvement among children with low-frequency contralateral acoustic hearing when compared to previous reports in children with CI-only listening (48% vs. 34%, from Jung et al., 2012). Other measures have been used with bimodal listeners in an effort to examine the contribution of specific timbral properties. Kong et al. (2012) hypothesized that, with the addition of acoustic hearing, bimodal listeners would make greater use of spectral cues. Although their hypothesis was not statistically supported, results trended toward increased use of the spectral envelope cue. Our results were similar, albeit with a different test measure.

Importantly, we predicted that the presence of DRs may influence each individual's CI + Best condition, such that optimal benefit would be achieved with a reduced bandwidth for individuals with DRs. Our data (albeit a limited sample) did not support this premise, as four of the five participants with DRs performed optimally with WB amplification.

Musical Sound Quality

Sound quality was measured using two stimulus types: experimenter-chosen and participant-chosen. The use of two stimulus types was implemented in an effort to control for any inherent bias in ratings due to stimulus familiarity or stimulus dislike. Results indicate that both NH and bimodal listeners rated their own song selections significantly higher than the experimenter-chosen selections, thereby demonstrating a response bias toward better sound quality ratings when songs were familiar and liked. This is consistent with literature in the music psychology domain, which has demonstrated that music sound quality ratings can be influenced by demographics, personality traits, musical training, and familiarity with certain musical styles (Ginocchio, 2009; LeBlanc et al., 1999; Nater et al., 2006; Robinson et al., 1996). This is an important consideration for future investigations, as both NH and bimodal participants were unable to disentangle the ratings of sound quality from song familiarity and enjoyment, despite explicit instructions to the contrary. Interestingly, our results are in contrast to Roy et al. (2012), who did not find a difference in sound quality ratings for familiar versus unfamiliar songs. Listeners in their study were CI-only participants and did not have residual hearing. It is possible that residual acoustic hearing is an important factor that warrants further study.

Similar to the results for timbre perception, no differences were noted between monaural and binaural listening in the NH group. For bimodal listeners, a systematic improvement in sound quality ratings was observed with increasing acoustic bandwidth; however, the improvement over the CI-alone condition only reached significance for the CI + WB condition. Thus, CI + WB was minimally required for a significant improvement in sound quality. Consistent with the results for timbre perception, the presence of DRs does not appear to inform the CI + Best condition, as four of the five participants with DRs performed optimally in the WB condition.

Speech Recognition

In an effort to replicate a portion of Sheffield and Gifford (2014), speech recognition in quiet was examined using CNC words. Additionally, this measure allowed across-stimulus analysis in this study and provided a within-subject comparison of the cues contributing to bimodal benefit for speech recognition and music perception. In this study, CI + 250 Hz was minimally required for significant bimodal benefit, in agreement with Sheffield and Gifford (2014), who likewise found that the minimum acoustic bandwidth required for significant benefit for CNC words was CI + 250 Hz. The significant increase in performance with acoustic information < 250 Hz is consistent with previous studies examining the weighting of low-frequency information for speech recognition among CI users. Bosen and Chatterjee (2016) reported that individuals with CIs tend to rely more heavily on low frequencies (~100–300 Hz) for speech recognition than individuals with NH. Thus, access to acoustic hearing afforded by the CI + 250 Hz condition may have increased the salience of speech cues in this frequency range for these listeners. By contrast, frequency information < 125 Hz was not sufficient, as performance in the CI + 125 Hz condition did not show significant improvement over the CI-alone condition. The CNC words used for testing are spoken by a male talker with a mean F0 around 123 Hz and an SD of 17 Hz. Thus, the F0 of the talker is encompassed within the < 250-Hz low-pass filter, though it may not have been fully encompassed within the < 125-Hz low-pass filter. Traunmüller and Eriksson (1995) summarize several original reports on average voice F0 and the F0 variation in standard deviation. For a male talker with F0 around 125 Hz, 1 SD can be as much as 30–40 Hz; thus, it is likely that the < 125-Hz condition was too restrictive, as it would not have encompassed the natural F0 perturbations present in everyday speech.
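One rough way to see why the < 125-Hz filter may have been too restrictive is to ask what share of the talker's F0 values would fall below each cutoff. The sketch below rests on several illustrative assumptions. It treats F0 as normally distributed with the reported mean (123 Hz), using either the reported SD (17 Hz) or 35 Hz, the middle of the 30–40 Hz range cited above. It also treats each filter as an ideal brick-wall cutoff, although real low-pass filters roll off gradually. Finally, it ignores the harmonics of F0, so the resulting numbers are only indicative.

```python
import math


def normal_cdf(x: float, mean: float, sd: float) -> float:
    """P(X <= x) for a normal distribution, via the error function."""
    return 0.5 * (1.0 + math.erf((x - mean) / (sd * math.sqrt(2.0))))


MEAN_F0_HZ = 123.0           # reported mean F0 of the CNC talker
CUTOFFS_HZ = (125.0, 250.0)  # low-pass cutoffs, treated as ideal (assumption)
SDS_HZ = (17.0, 35.0)        # reported SD, and an assumed larger natural variation

for sd in SDS_HZ:
    for cutoff in CUTOFFS_HZ:
        share = normal_cdf(cutoff, MEAN_F0_HZ, sd)
        print(f"SD {sd:.0f} Hz, low-pass < {cutoff:.0f} Hz: "
              f"{share:.1%} of F0 values below cutoff")
```

Under these assumptions, only about 55% of F0 values would lie below 125 Hz, or roughly 52% with the larger SD. By contrast, essentially all F0 values would lie below 250 Hz.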

An additional observation from our data is that mean word recognition improved steadily with increasing bandwidth, although further improvements failed to reach statistical significance when compared to smaller acoustic bandwidth conditions. That is, despite trends toward further improvement with increasing acoustic bandwidth, our data suggest that CI + 250 Hz is both minimally required and sufficient for optimal speech recognition performance with a male talker in quiet. This is in partial contrast with Sheffield and Gifford (2014), who found CI + WB performance to be significantly better than performance in the CI + 125, CI + 250, and CI + 500 Hz conditions. There are two possible reasons for this discrepancy. First, performance in the CI + 125, CI + 250, and CI + 500 Hz conditions was lower in the Sheffield and Gifford study, although CI + WB performance was similar across studies. Thus, the absolute magnitude of improvement between these three filter conditions and CI + WB was greater in Sheffield and Gifford. Second, while the hearing configuration of the participants in both studies was similar, the participants in Sheffield and Gifford had poorer higher frequency thresholds (2000 Hz and above). It is possible that differences in hearing loss severity contributed to the slight discrepancy in findings. Importantly, however, the overall magnitude of improvement between the CI-alone and CI + WB conditions was equivalent across this study and the Sheffield and Gifford study, at approximately 22 percentage points.

With respect to DRs, only two of the five participants with DRs performed optimally with the WB condition. For the three who performed best with a reduced bandwidth, the amplification cutoff frequency was always lower than the DR cutoff frequency (Participant 4: DR at 1500 Hz, optimal condition was CI + 750 Hz; Participant 8: DR at 750 Hz, optimal condition was CI + 500 Hz; Participant 10: DR at 1500 Hz, optimal condition was CI + 750 Hz). Thus, it is possible that DRs have a greater impact for speech stimuli as previously demonstrated (e.g., Messersmith et al., 2015; Zhang et al., 2014); however, future study with a larger sample size is warranted.

Clinical Implications

The benefit of amplification for individuals with aidable residual hearing in the non-CI ear can be substantial for both speech and music stimuli; however, the acoustic bandwidth that is both minimally and optimally beneficial appears dependent upon stimulus type. On average, significant benefit for speech recognition was obtained with a narrow, low-frequency acoustic bandwidth of < 250 Hz, whereas WB amplification was needed for significant benefit for sound quality. Still, it is important to note that, despite failing to reach statistical significance, average speech recognition also continued to improve out to the WB condition. Thus, our findings do not suggest that amplification should be limited to 250 Hz and below; rather, they illustrate that relatively little acoustic information may be required for some stimuli compared with others.

Limitations

The timbre identification task used here poses a potential methodological issue, in that it requires participants to have audibility through at least 523 Hz (C4). While all of our participants had usable thresholds in that range, higher harmonic information may not have been audible. Future tasks that intend to isolate timbre alone, in the absence of a potential audibility confound, may use stimuli in a lower register or stimuli that share a similar starting frequency but vary over a more restricted spectral range.

Additionally, an independent-samples t test revealed a significant difference in mean age between the NH and bimodal groups, t(22) = −3.118, p < .01. This presents a potential confound, particularly with respect to generational music preferences. To control for individual variability in musical preference, the experimenter-chosen selections spanned a number of genres, time periods, and artists, and participant-chosen music selections were also included in the test battery. The influence of age on our findings is therefore likely to be minimal.

Finally, our test protocol did not include an HA-alone condition. Without explicit examination of HA-alone performance, it is impossible to determine whether improvements in performance were truly the product of bimodal listening or whether performance may have been solely influenced by the bandwidth available in the acoustic ear alone. In the current study, it was our goal to assess the benefit of the bimodal configuration—that is, acoustic hearing in conjunction with electric hearing—as this represents the most likely listening configuration for these participants. Future research is warranted to further parse out the independent contribution of the acoustic hearing ear.

Conclusions

Substantial bimodal benefit for individuals with residual hearing in the non-CI ear can be obtained for both speech and music stimuli. In this study, we observed a trend toward improved performance for all stimuli with increasing acoustic bandwidth; however, the acoustic bandwidth that is both minimally and optimally beneficial appears dependent upon stimulus type. On average, music sound quality required WB amplification, whereas speech recognition with a male talker in quiet required a smaller acoustic bandwidth (< 250 Hz) for significant benefit. Still, it is important to note that average speech recognition performance continued to improve with increasing acoustic bandwidth. Thus, our findings suggest that, for both music and speech stimuli, wideband amplification ought to be the standard of care for individuals with bimodal hearing.

Acknowledgments

This work was supported by National Institutes of Health Grant R01 DC009404 (PI: Gifford) as well as the Vanderbilt Institute for Clinical and Translational Research (National Institutes of Health Grant UL1 TR000445). We also sincerely thank Linsey Sunderhaus and Courtney Kolberg for their assistance with participant recruitment and data collection; Leonid Litvak, Senior Director of Research and Technology at Advanced Bionics, and Chen Chen, Senior Research Scientist at Advanced Bionics, for their assistance with software programming/coding; Daniel Ashmead for consultation on statistical analysis; and Spencer Smith and Reyna Gordon for their helpful comments on earlier versions of this article.

References

- Bosen, A. K., & Chatterjee, M. (2016). Band importance functions of listeners with cochlear implants using clinical maps. The Journal of the Acoustical Society of America, 140(5), 3718–3727. https://doi.org/10.1121/1.4967298

- Brockmeier, S. J., Peterreins, M., Lorens, A., Vermeire, K., Helbig, S., Anderson, I., Skarzynski, H., Van de Heyning, P., Gstoettner, W., & Kiefer, J. (2010). Music perception in electric acoustic stimulation users as assessed by the Mu.S.I.C. test. In P. Van de Heyning & A. Kleine Punte (Eds.), Cochlear implants and hearing preservation (Vol. 67, pp. 70–80). Karger Publishers. https://doi.org/10.1159/000262598

- Caldwell, M., Rankin, S. K., Jiradejvong, P., Carver, C., & Limb, C. J. (2015). Cochlear implant users rely on tempo rather than on pitch information during perception of musical emotion. Cochlear Implants International, 16(Suppl. 3), S114–S120. https://doi.org/10.1179/1467010015Z.000000000265

- Chatterjee, M., Deroche, M. L., Peng, S. C., Lu, H. P., Lu, N., Lin, Y. S., & Limb, C. J. (2017). Processing of fundamental frequency changes, emotional prosody and lexical tones by pediatric CI recipients. In Proceedings of the International Symposium on Auditory and Audiological Research (Vol. 6, pp. 117–125).

- Chatterjee, M., & Peng, S. C. (2008). Processing F0 with cochlear implants: Modulation frequency discrimination and speech intonation recognition. Hearing Research, 235(1), 143–156. https://doi.org/10.1016/j.heares.2007.11.004

- Cheng, X., Liu, Y., Wang, B., Yuan, Y., Galvin, J. J., Fu, Q. J., Shu, T., & Chen, B. (2018). The benefits of residual hair cell function for speech and music perception in pediatric bimodal cochlear implant listeners. Neural Plasticity, 2018, 4610592. https://doi.org/10.1155/2018/4610592

- Cohen, J. (1988). Statistical power analysis for the behavioral sciences. Routledge.

- Crew, J. D., Galvin, J. J., III, Landsberger, D. M., & Fu, Q. J. (2015). Contributions of electric and acoustic hearing to bimodal speech and music perception. PLOS ONE, 10(3), e0120279. https://doi.org/10.1371/journal.pone.0120279

- Cullington, H. E., & Zeng, F. G. (2011). Comparison of bimodal and bilateral cochlear implant users on speech recognition with competing talker, music perception, affective prosody discrimination and talker identification. Ear and Hearing, 32(1), 16–30. https://doi.org/10.1097/AUD.0b013e3181edfbd2

- Davidson, L. S., Firszt, J. B., Brenner, C., & Cadieux, J. H. (2015). Evaluation of hearing aid frequency response fittings in pediatric and young adult bimodal recipients. Journal of the American Academy of Audiology, 26(4), 393–407. https://doi.org/10.3766/jaaa.26.4.7

- D'Onofrio, K. L., Caldwell, M. T., Limb, C. J., Smith, S., Kessler, D. M., & Gifford, R. H. (2020). Musical emotion perception in bimodal patients: Relative weighting of musical mode and tempo cues. Frontiers in Neuroscience, 14, 114. https://doi.org/10.3389/fnins.2020.00114

- Dorman, M. F., & Gifford, R. H. (2010). Combining acoustic and electric stimulation in the service of speech recognition. International Journal of Audiology, 49(12), 912–919. https://doi.org/10.3109/14992027.2010.509113

- Dorman, M. F., Gifford, R. H., Spahr, A. J., & McKarns, S. A. (2008). The benefits of combining acoustic and electric stimulation for the recognition of speech, voice and melodies. Audiology and Neurotology, 13(2), 105–112.

- Drennan, W. R., Oleson, J. J., Gfeller, K., Crosson, J., Driscoll, V. D., Won, J. H., Anderson, E. S., & Rubinstein, J. T. (2015). Clinical evaluation of music perception, appraisal and experience in cochlear implant users. International Journal of Audiology, 54(2), 114–123. https://doi.org/10.3109/14992027.2014.948219

- El Fata, F., James, C. J., Laborde, M. L., & Fraysse, B. (2009). How much residual hearing is ‘useful’ for music perception with cochlear implants? Audiology and Neurotology, 14(Suppl. 1), 14–21. https://doi.org/10.1159/000206491

- Gantz, B. J., Turner, C., Gfeller, K. E., & Lowder, M. W. (2005). Preservation of hearing in cochlear implant surgery: Advantages of combined electrical and acoustical speech processing. The Laryngoscope, 115(5), 796–802. https://doi.org/10.1097/01.MLG.0000157695.07536.D2

- Gfeller, K., Jiang, D., Oleson, J. J., Driscoll, V., Olszewski, C., Knutson, J. F., Turner, C., & Gantz, B. (2012). The effects of musical and linguistic components in recognition of real-world musical excerpts by cochlear implant recipients and normal-hearing adults. Journal of Music Therapy, 49(1), 68–101. https://doi.org/10.1093/jmt/49.1.68

- Gfeller, K., Oleson, J., Knutson, J. F., Breheny, P., Driscoll, V., & Olszewski, C. (2008). Multivariate predictors of music perception and appraisal by adult cochlear implant users. Journal of the American Academy of Audiology, 19(2), 120–134. https://doi.org/10.3766/jaaa.19.2.3

- Gfeller, K. E., Olszewski, C., Turner, C., Gantz, B., & Oleson, J. (2006). Music perception with cochlear implants and residual hearing. Audiology and Neurotology, 11(Suppl. 1), 12–15. https://doi.org/10.1159/000095608

- Gfeller, K., Turner, C., Oleson, J., Zhang, X., Gantz, B., Froman, R., & Olszewski, C. (2007). Accuracy of cochlear implant recipients on pitch perception, melody recognition, and speech reception in noise. Ear and Hearing, 28(3), 412–423. https://doi.org/10.1097/AUD.0b013e3180479318

- Gfeller, K., Woodworth, G., Robin, D. A., Witt, S., & Knutson, J. F. (1997). Perception of rhythmic and sequential pitch patterns by normal hearing adults and adult cochlear implant users. Ear and Hearing, 18(3), 252–260. https://doi.org/10.1097/00003446-199706000-00008

- Giannantonio, S., Polonenko, M. J., Papsin, B. C., Paludetti, G., & Gordon, K. A. (2015). Experience changes how emotion in music is judged: Evidence from children listening with bilateral cochlear implants, bimodal devices, and normal hearing. PLOS ONE, 10(8), e0136685. https://doi.org/10.1371/journal.pone.0136685

- Ginocchio, J. (2009). The effects of different amounts and types of music training on music style preference. Bulletin of the Council for Research in Music Education, 182, 7–17.

- Holder, J. T., Reynolds, S. M., Sunderhaus, L. W., & Gifford, R. H. (2018). Current profile of adults presenting for preoperative cochlear implant evaluation. Trends in Hearing, 22, 1–6. https://doi.org/10.1177/2331216518755288

- Hsiao, F., & Gfeller, K. (2012). Music perception of cochlear implant recipients with implications for music instruction: A review of literature. Update (Music Educators National Conference [U.S.]), 30(2), 5–10. https://doi.org/10.1177/8755123312437050

- Jiam, N. T., & Limb, C. J. (2019). Rhythm processing in cochlear implant-mediated music perception. Annals of the New York Academy of Sciences, 1453(1), 22–28.

- Jung, K. H. , Won, J. H. , Drennan, W. R. , Jameyson, E. , Miyasaki, G. , Norton, S. J. , & Rubinstein, J. T. (2012). Psychoacoustic performance and music and speech perception in prelingually deafened children with cochlear implants. Audiology and Neurotology, 17(3), 189–197. https://doi.org/10.1159/000336407 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kang, R. , Nimmons, G. L. , Drennan, W. , Longnion, J. , Ruffin, C. , Nie, K. , Won, J. H. , Worman, T. , Yueh, B. , & Rubinstein, J. (2009). Development and validation of the University of Washington Clinical Assessment of Music Perception test. Ear and Hearing, 30(4), 411–418. https://doi.org/10.1097/AUD.0b013e3181a61bc0 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kong, Y. Y. , Cruz, R. , Jones, J. A. , & Zeng, F. G. (2004). Music perception with temporal cues in acoustic and electric hearing. Ear and Hearing, 25(2), 173–185. https://doi.org/10.1097/01.AUD.0000120365.97792.2F [DOI] [PubMed] [Google Scholar]

- Kong, Y. Y. , Mullangi, A. , & Marozeau, J. (2012). Timbre and speech perception in bimodal and bilateral cochlear-implant listeners. Ear and Hearing, 33(5), 645–659. https://doi.org/10.1097/AUD.0b013e318252caae [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kong, Y. Y. , Stickney, G. S. , & Zeng, F. G. (2005). Speech and melody recognition in binaurally combined acoustic and electric hearing. The Journal of the Acoustical Society of America, 117(3), 1351–1361. https://doi.org/10.1121/1.1857526 [DOI] [PubMed] [Google Scholar]

- Lassaletta, L., Castro, A., Bastarrica, M., Pérez-Mora, R., Madero, R., De Sarriá, J., & Gavilán, J. (2007). Does music perception have an impact on quality of life following cochlear implantation? Acta Oto-Laryngologica, 127(7), 682–686. https://doi.org/10.1080/00016480601002112

- LeBlanc, A., Jin, Y. C., Stamou, L., & McCrary, J. (1999). Effect of age, country, and gender on music listening preferences. Bulletin of the Council for Research in Music Education, (141), 72–76. http://www.jstor.org/stable/40318987

- Limb, C. J., & Roy, A. T. (2014). Technological, biological, and acoustical constraints to music perception in cochlear implant users. Hearing Research, 308, 13–26. https://doi.org/10.1016/j.heares.2013.04.009

- Luo, X., Fu, Q.-J., & Galvin, J. (2007). Vocal emotion recognition by normal-hearing listeners and cochlear-implant users. Trends in Amplification, 11, 301–315. https://doi.org/10.1177/1084713807305301

- Messersmith, J. J., Jorgensen, L. E., & Hagg, J. A. (2015). Reduction in high-frequency hearing aid gain can improve performance in patients with contralateral cochlear implant: A pilot study. American Journal of Audiology, 24(4), 462–468. https://doi.org/10.1044/2015_AJA-15-0045

- Mirza, S., Douglas, S. A., Lindsey, P., Hildreth, T., & Hawthorne, M. (2003). Appreciation of music in adult patients with cochlear implants: A patient questionnaire. Cochlear Implants International, 4(2), 85–89. https://doi.org/10.1179/cim.2003.4.2.85

- Moore, B. C. (2010). Testing for cochlear dead regions: Audiometer implementation of the TEN (HL) test. The Hearing Review, 17, 10–16.

- Moore, B. C., Glasberg, B. R., Flanagan, H. J., & Adams, J. (2006). Frequency discrimination of complex tones: Assessing the role of component resolvability and temporal fine structure. The Journal of the Acoustical Society of America, 119(1), 480–490. https://doi.org/10.1121/1.2139070

- Moore, B. C., Glasberg, B. R., & Hopkins, K. (2006). Frequency discrimination of complex tones by hearing-impaired subjects: Evidence for loss of ability to use temporal fine structure. Hearing Research, 222(1–2), 16–27. https://doi.org/10.1016/j.heares.2006.08.007

- Nater, U. M., Abbruzzese, E., Krebs, M., & Ehlert, U. (2006). Sex differences in emotional and psychophysiological responses to musical stimuli. International Journal of Psychophysiology, 62(2), 300–308. https://doi.org/10.1016/j.ijpsycho.2006.05.011

- Neuman, A. C., & Svirsky, M. A. (2013). The effect of hearing aid bandwidth on speech recognition performance of listeners using a cochlear implant and contralateral hearing aid (bimodal hearing). Ear and Hearing, 34(5), 553–561. https://doi.org/10.1097/AUD.0b013e31828e86e8

- Neuman, A. C., Zeman, A., Neukam, J., Wang, B., & Svirsky, M. A. (2019). The effect of hearing aid bandwidth and configuration of hearing loss on bimodal speech recognition in cochlear implant users. Ear and Hearing, 40(3), 621–635. https://doi.org/10.1097/AUD.0000000000000638

- Ollen, J. E. (2006). A criterion-related validity test of selected indicators of musical sophistication using expert ratings [Doctoral dissertation, The Ohio State University]. https://etd.ohiolink.edu/apexprod/rws_olink/r/1501/10?p10_etd_subid=65208&clear=10

- Parkinson, A. J., Rubinstein, J. T., Drennan, W. R., Dodson, C., & Nie, K. (2019). Hybrid music perception outcomes: Implications for melody and timbre recognition in cochlear implant recipients. Otology & Neurotology, 40(3), e283–e289. https://doi.org/10.1097/MAO.0000000000002126

- Peterson, G. E., & Lehiste, I. (1962). Revised CNC lists for auditory tests. Journal of Speech and Hearing Disorders, 27(1), 62–70. https://doi.org/10.1044/jshd.2701.62

- Phillips-Silver, J., Toiviainen, P., Gosselin, N., Turgeon, C. L., & Peretz, I. (2015). Cochlear implant users move in time to the beat of drum music. Hearing Research, 321, 25–34. https://doi.org/10.1016/j.heares.2014.12.007

- Plant, K., & Babic, L. (2016). Utility of bilateral acoustic hearing in combination with electrical stimulation provided by the cochlear implant. International Journal of Audiology, 55(Suppl. 2), S31–S38. https://doi.org/10.3109/14992027.2016.1150609

- Reynolds, S. M., & Gifford, R. H. (2019). Effect of signal processing strategy and stimulation type on speech and auditory perception in adult cochlear implant users. International Journal of Audiology, 58(6), 363–372. https://doi.org/10.1080/14992027.2019.1580390

- Robinson, T. O., Weaver, J. B., & Zillmann, D. (1996). Exploring the relation between personality and the appreciation of rock music. Psychological Reports, 78(1), 259–269. https://doi.org/10.2466/pr0.1996.78.1.259

- Roy, A. T., Jiradejvong, P., Carver, C., & Limb, C. J. (2012). Musical sound quality impairments in cochlear implant (CI) users as a function of limited high-frequency perception. Trends in Amplification, 16(4), 191–200. https://doi.org/10.1177/1084713812465493

- Sheffield, S. W., & Gifford, R. H. (2014). The benefits of bimodal hearing: Effect of frequency region and acoustic bandwidth. Audiology and Neurotology, 19(3), 151–163. https://doi.org/10.1159/000357588

- Shirvani, S., Jafari, Z., Motasaddi Zarandi, M., Jalaie, S., Mohagheghi, H., & Tale, M. R. (2016). Emotional perception of music in children with bimodal fitting and unilateral cochlear implant. Annals of Otology, Rhinology & Laryngology, 125(6), 470–477. https://doi.org/10.1177/0003489415619943

- Sucher, C. M., & McDermott, H. J. (2009). Bimodal stimulation: Benefits for music perception and sound quality. Cochlear Implants International, 10(S1), 96–99.

- Traunmüller, H., & Eriksson, A. (1995). The frequency range of the voice fundamental in the speech of male and female adults. Unpublished manuscript.

- Yüksel, M., Meredith, M. A., & Rubinstein, J. T. (2019). Effects of low frequency residual hearing on music perception and psychoacoustic abilities in pediatric cochlear implant recipients. Frontiers in Neuroscience, 13, 924. https://doi.org/10.3389/fnins.2019.00924

- Zhang, T., Dorman, M. F., Gifford, R., & Moore, B. C. (2014). Cochlear dead regions constrain the benefit of combining acoustic stimulation with electric stimulation. Ear and Hearing, 35(4), 410–417. https://doi.org/10.1097/AUD.0000000000000032

- Zhang, T., Dorman, M. F., & Spahr, A. J. (2010). Information from the voice fundamental frequency (F0) region accounts for the majority of the benefit when acoustic stimulation is added to electric stimulation. Ear and Hearing, 31(1), 63–69. https://doi.org/10.1097/AUD.0b013e3181b7190c