Abstract

Purpose

Of the three currently recognized variants of primary progressive aphasia, behavioral differentiation between the nonfluent/agrammatic (nfvPPA) and logopenic (lvPPA) variants is particularly difficult. The challenge includes uncertainty regarding diagnosis of apraxia of speech, which is subsumed within criteria for variant classification. The purpose of this study was to determine the extent to which a variety of speech articulation and prosody metrics for apraxia of speech differentiate between nfvPPA and lvPPA across diverse speech samples.

Method

The study involved 25 participants with progressive aphasia (10 with nfvPPA, 10 with lvPPA, and five with the semantic variant). Speech samples included a word repetition task, a picture description task, and a story narrative task. We completed acoustic analyses of temporal prosody and quantitative perceptual analyses based on narrow phonetic transcription and then evaluated the degree of differentiation between nfvPPA and lvPPA participants (with the semantic variant serving as a reference point for minimal speech production impairment).

Results

Most, but not all, articulatory and prosodic metrics differentiated statistically between the nfvPPA and lvPPA groups. Measures of distortion frequency, syllable duration, syllable scanning, and—to a limited extent—syllable stress and phonemic accuracy showed greater impairment in the nfvPPA group. Contrary to expectations, classification was most accurate in connected speech samples. A customized connected speech metric—the narrative syllable duration—yielded excellent to perfect classification accuracy.

Discussion

Measures of average syllable duration in multisyllabic utterances are useful diagnostic tools for differentiating between nfvPPA and lvPPA, particularly when based on connected speech samples. As such, they are suitable candidates for automatization, large-scale study, and application to clinical practice. The observation that both speech rate and distortion frequency differentiated more effectively in connected speech than on a motor speech examination suggests that it will be important to evaluate interactions between speech and discourse production in future research.

Primary progressive aphasia (PPA) is a clinical syndrome that is marked by gradual loss of language while nonlinguistic cognition remains relatively preserved (Mesulam, 1982). International consensus classification criteria provide guidelines for how to differentiate its diverse speech and language profiles into three clinical variants with relatively distinct behavioral profiles, affected brain regions, and pathophysiology (Gorno-Tempini et al., 2011). Behaviorally, a diagnosis of the nonfluent variant (nfvPPA) requires the presence of either the motor programming disorder apraxia of speech (AOS) or syntactic impairment in the form of expressive agrammatism. In contrast, a diagnosis of the logopenic variant (lvPPA) involves documented absence of at least one of these features. The AOS diagnosis is thus integral to contemporary variant classification. This importance is magnified by the additional complication that AOS sometimes is the first or only presentation in people who have no clear evidence of aphasia. Because the latter onset profile appears to suggest distinct pathophysiology and prognostic and therapeutic implications, the term primary progressive AOS (PPAOS) has been advocated (Duffy, 2006).

Despite its central role in differentiating among nfvPPA, lvPPA, and PPAOS, there are limited guidelines for how to diagnose AOS in progressive disease. In many studies, AOS has been treated as an unequivocal feature that the diagnostician readily recognizes as either present or absent, when in reality it is a complex and multidimensional syndrome that is vulnerable to interpretation differences (Haley et al., 2012; Molloy & Jagoe, 2019). As a syndrome within a syndrome, detailed quantification and systematic interpretation of AOS are warranted. The Apraxia of Speech Rating Scale (Strand et al., 2014) has addressed this need via a structured rating format, where diagnosticians indicate the degree to which they observe features of AOS in connected speech and in word and sentence repetition tasks. Iterations of the scale have been used successfully in recent studies to diagnose AOS in progressive disease (e.g., Botha et al., 2018; Utianski, Whitwell, et al., 2018). The main limitations of the Apraxia of Speech Rating Scale are that validity and reliability are contingent upon perceptual training and calibration with experienced raters (Wambaugh et al., 2019) and that the measurement level is ordinal. Our alternative approach, based on a research program focused on AOS in stroke, is to define key features in sufficiently concrete terms that they can be measured objectively on interval or ratio scales, with strong agreement among diagnosticians who work in different laboratories and clinics and have varied experiences and expectations.

Three primary features of AOS are considered most important for diagnosis. They include (a) perceptually evident phonetic distortions of consonant and vowel segments, (b) slow speaking rate in multisyllabic utterances, and (c) abnormal prosody in terms of stress patterns and pausing (Duffy, 2020; McNeil et al., 2009; Strand et al., 2014). Clinical diagnosis based on these criteria requires that each is defined in observable terms and demonstrated reliably in speech samples. In this study, we evaluate the extent to which quantitative metrics that correspond to AOS criteria differentiate between nfvPPA and lvPPA.

Defining Phonetic Distortion of Consonants and Vowels

Consonant and vowel errors occur in both nfvPPA and lvPPA, but the underlying processes that generate them are presumed to be different. Sound errors are common in nfvPPA and considered products of faulty motor programming or at least strongly affected by such impairment. It is also expected that articulatory accuracy may be diminished further by coexisting dysarthria that reduces articulation strength, speed, and coordination (Ogar et al., 2007). In contrast, sound errors are not universally present in lvPPA and—when they are found—they are explained as disruptions to lexical or phonological retrieval and assembly processes. Because these presumed motor, phonological, and lexical mechanisms are not directly available for inspection, diagnosticians must rely on the qualities they observe to draw inferences about underlying mechanisms.

It is possible, even straightforward, to define and measure many acoustic and perceptual features of the speech signal, but the relationship between such measures and underlying brain processes is complex. For example, it is sometimes assumed that productions that listeners perceive as phonemic substitutions are also caused by impaired phonological processing at a neural level. However, this inference is not necessarily justified. Instead, the perception may be a natural consequence of the phonemic perception bias that is fundamental to human speech perception (Fowler et al., 2016). Because of this bias, even the most extensively trained listeners are predisposed to classify sound errors phonemically. Though clinicians and other listeners hear substitutions, omissions, and additions of phonemes in the output of speakers with nfvPPA as well as speakers with lvPPA, these errors may originate from either impaired motor programming or phonological processing or both. In any case, the source of the errors is opaque to the listener.

On the other hand, when consonant and vowel errors are due to faulty programming, execution or coordination of speech movements, it is reasonable to expect additional evidence of temporal and spatial motor imprecision in the form of phonetic distortion. To some extent, listeners can learn to detect discrete distortion errors as deviations in consonant and vowel quality, characterize this quality, and link it temporally to individual consonant and vowel segments via narrow phonetic transcription. There is now strong auditory perceptual evidence that stroke survivors with aphasia and AOS, consistent with prediction, produce a higher frequency of phonetically transcribable vowel and consonant distortion errors than do speakers who have only aphasia (Basilakos et al., 2017; Bislick et al., 2017; Cunningham et al., 2016; Haley et al., 2017).

Despite their importance to the AOS diagnosis, phonetic distortion errors are insufficiently defined in the progressive aphasia literature. They have been characterized as misarticulations that do not involve phonemic substitutions (Wilson et al., 2010) and speech sounds that are not part of the speakers’ native phonological system (Ash et al., 2010). Because many phonetic variations could fit these descriptions, there is risk that diagnosticians interpret them subjectively and with limited precision. In lieu of operational definitions, most studies have assumed that listeners intuitively recognize abnormal phonetic form and can identify when it occurs in the speech output. For this reason, it is understandable that estimates of distortion error frequency have varied extensively across studies (Ash et al., 2010; Croot et al., 2012; Graham et al., 2016; Wilson et al., 2010). In this study, we use diacritic marks from a narrow phonetic transcription system we developed for stroke AOS research (Haley et al., 2017, 2019) to define the precise quality of each consonant and vowel segment that phonetically trained listeners perceive as distorted. The obvious advantages of operational definitions are that they allow frequency counts and permit other researchers to replicate results independently. Additionally, the use of a comprehensive transcription system helps to characterize the overall distortion error profile and, therefore, to understand the underlying movement patterns that produce it.

Defining Slow Speaking Rate

Reduced speaking rate is a common sign of motor impairment and a diagnostic criterion for AOS. Characteristic of AOS, many sounds and syllables are prolonged, and pauses appear where they normally would not. After stroke, these features are most readily observed in multisyllabic words and word sequences (Haley & Overton, 2001; Strand & McNeil, 1996). Because AOS after stroke is often accompanied by marked difficulties with grammatical organization and lexical retrieval, analyses are most practical when based on word repetition tasks rather than connected speech. For the past 8 years, our group has relied on a simple metric that expresses the average syllable duration in single words with three or more syllables—the word syllable duration (WSD; Haley et al., 2012). This study is our team’s first application of WSD to study AOS in progressive disease. Of interest, Duffy et al. (2017) used a similar metric (syllables per second, calculated as number of syllables divided by duration in seconds) to differentiate repetition rate for a single multisyllabic word in a group of 21 speakers with PPAOS from that of 20 speakers with lvPPA. Assuming the nfvPPA presentation includes AOS for most speakers, WSD should, similarly, differentiate between nfvPPA and lvPPA profiles. We hypothesized that a meaningful WSD difference may also be present in the multisyllabic words people with progressive aphasia produce during connected speech.
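A minimal sketch of the WSD arithmetic follows. It assumes each multisyllabic word is represented by its measured duration in seconds and its syllable count (a hypothetical data format). WSD is the word duration divided by the number of syllables, and the syllables-per-second rate used by Duffy et al. (2017) is its reciprocal. Averaging per-word values, rather than pooling total duration across words, is an assumption here; the function names are illustrative only.

```python
from statistics import mean


def word_syllable_duration(word_duration_s: float, n_syllables: int) -> float:
    """Average syllable duration (s) of one word with three or more syllables."""
    if n_syllables < 3:
        raise ValueError("WSD uses words with three or more syllables")
    return word_duration_s / n_syllables


def mean_wsd(words: list[tuple[float, int]]) -> float:
    """Mean of per-word WSD values; each item is (duration_s, syllable_count)."""
    return mean(word_syllable_duration(d, n) for d, n in words)


def syllables_per_second(word_duration_s: float, n_syllables: int) -> float:
    """Rate metric in the style of Duffy et al. (2017): the reciprocal of WSD."""
    return n_syllables / word_duration_s


# Hypothetical measurements: a 4-syllable word lasting 1.2 s and a 3-syllable word lasting 0.9 s
print(mean_wsd([(1.2, 4), (0.9, 3)]))
```

Longer WSD values (or, equivalently, fewer syllables per second) indicate slower multisyllabic production.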

In contrast to the straightforward WSD metric, interpretation problems arise for speaking rate metrics in connected speech. On the one hand, most rate and duration metrics are sensitive for screening purposes in the sense that they differentiate impaired speech production from normal speech production (Nevler et al., 2019; Thompson et al., 2012; Wilson et al., 2010). On the other hand, diagnostic specificity is limited among qualitatively different communication disorders. Besides motor programming or execution impairment, challenges with memory, attention, executive functions, and linguistic formulation all influence how many syllables or words speakers generate per unit time. Returning to the consensus criteria for variant classification (Gorno-Tempini et al., 2011), we infer that the ideal rate metric for differentiating between nfvPPA and lvPPA in connected speech would be specific to impaired speech programming and grammatical formulation but minimally affected by other cognitive and lexical challenges. Conveniently, there is evidence that “relatively short” connected speech pauses indicate problems with speech articulation, fluency, and syntax, whereas “relatively long” pauses reflect response preparation and word retrieval hesitation (Hird & Kirsner, 2010). The application problem lies in determining what is to be considered a relatively short pause and what is to be considered a relatively long pause.

Researchers have used varied strategies for eliminating longer pauses in speech samples from people with PPA in order to more accurately evaluate motor performance. For example, Wilson et al. (2010) measured maximum speech rate during stretches where there were minimal interruptions and hesitations, whereas Cordella et al. (2017, 2019) calculated articulation rate based on eliminating pauses longer than 100 ms. Differentiation among PPA variants was more complete with these adjusted rate metrics compared to unadjusted speaking rate measures. However, if valuable information is conveyed through moderately long pauses, there is risk that overly aggressive pause trimming may reduce diagnostic precision. Indeed, syllable segmentation and intersyllabic pause prolongation do include pauses longer than 100 ms, and these features have long been considered characteristic of AOS (Kent & Rosenbek, 1982).
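The difference between an unadjusted speaking rate and an articulation rate that drops pauses above a fixed threshold, such as the 100 ms criterion mentioned above, can be sketched as follows. The interval format (a list of ("syl", seconds) and ("pause", seconds) tuples) and the function name are hypothetical. The sketch is not a reproduction of Cordella et al.'s procedure.

```python
def speech_rates(intervals, pause_threshold_s=0.100):
    """Return (speaking_rate, articulation_rate) in syllables per second.

    intervals: hypothetical list of ("syl", seconds) or ("pause", seconds) tuples.
    Speaking rate divides by the total duration. Articulation rate removes only
    pauses longer than pause_threshold_s and keeps shorter ones.
    """
    n_syllables = sum(1 for kind, _ in intervals if kind == "syl")
    total_s = sum(dur for _, dur in intervals)
    long_pause_s = sum(dur for kind, dur in intervals
                       if kind == "pause" and dur > pause_threshold_s)
    return n_syllables / total_s, n_syllables / (total_s - long_pause_s)


sample = [("syl", 0.2), ("pause", 0.05), ("syl", 0.2), ("pause", 0.5), ("syl", 0.2)]
speaking, articulation = speech_rates(sample)
print(f"speaking {speaking:.2f} syl/s, articulation {articulation:.2f} syl/s")
```

With a fixed threshold, a 0.5 s intersyllabic pause is discarded just like a 2 s word-finding pause. This illustrates the risk of overly aggressive pause trimming noted above.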

Of both practical and theoretical significance, there is empirical support that “relatively short” and “relatively long” pauses operate somewhat independently of each other. Log-transformed pause durations have been found to distribute bimodally in speakers with and without communication disorders, with short pauses clustering around a distinctly different mode than long pauses (Hird & Kirsner, 2010; Rosen et al., 2010). Importantly, the locations of these modes vary across speakers and speech samples, and it is not possible to identify a single cutoff that would be functionally meaningful for a clinical population. Hypothesizing that precise diagnostic differentiation in progressive disease would require the boundary between short and long pauses to be customized for each speaker and speech sample, we obtained rate and prosody measures that ignored only the longest pauses produced by a given speaker in a given speech sample.

Defining Abnormal Prosody

Slow speaking rate and abnormal prosody are strongly interconnected in AOS. Prosodic impairment primarily affects timing, disrupting the rhythm and forward flow of speech and resulting in a slower than normal speaking rate. Equalized syllable nucleus durations, along with intersyllabic pauses, often result in the perceptual impression of “excess and equal stress” or “syllable segmentation,” in which all syllables seem approximately equal in length or are separated by pauses, giving the impression that they are produced one at a time (Darley et al., 1975; Duffy, 2020). The degree to which syllables are equalized in duration can be expressed with a scanning index (SI; Ackermann & Hertrich, 1993). Ackermann and Hertrich developed this metric to evaluate prosody in connected speech produced by people with Friedreich’s ataxia, and our team recently applied it to illustrate a 2-year recovery period for a person with isolated AOS after focal traumatic brain injury (Haley et al., 2016). The SI is calculated based on the combined duration of pauses and consonant and vowel segments within syllables. It ranges from 0 to 1, with 1 indicating that all syllables have the same duration. Accordingly, we expect a higher SI for people with nfvPPA (with AOS) than for speakers with lvPPA.

Another application that has received considerable attention in AOS is the pairwise variability index (PVI). The metric was originally developed to compare rhythm in accents and dialects during connected speech (Ling et al., 2000). It is calculated as the normalized durational difference across adjacent syllable pairs. Basilakos et al. (2017) used PVI calculated from syllable nucleus durations to study connected speech in a stroke sample and found significantly lower PVI for participants with aphasia and AOS than for participants with aphasia alone. Most other applications have used a word-level variation of the metric where duration differences are compared between adjacent pairs of unstressed and stressed syllable nuclei. Word-level PVI has been found to be lower for stroke survivors with AOS and aphasia than for stroke survivors with aphasia alone (Haley & Jacks, 2019; Vergis et al., 2014) and for individuals with nfvPPA compared to individuals with lvPPA (Ballard et al., 2014; Duffy et al., 2017, 2015). These differences have been interpreted as reduced precision of lexical stress production in people with AOS.
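The normalized PVI for a sequence of durations is commonly written as 100 times the mean of |d_k − d_{k+1}| / ((d_k + d_{k+1}) / 2) over all adjacent pairs. The sketch below implements that form, plus a signed two-syllable variant for weak–strong words. The sign convention (positive when the stressed syllable is longer) and the function names are assumptions, and published AOS studies differ in such details.

```python
def npvi(durations):
    """Normalized pairwise variability index over adjacent durations (seconds)."""
    if len(durations) < 2:
        raise ValueError("need at least two durations")
    pairs = list(zip(durations, durations[1:]))
    total = sum(abs(a - b) / ((a + b) / 2) for a, b in pairs)
    return 100 * total / len(pairs)


def weak_strong_pvi(weak_s, strong_s):
    """Signed PVI for one weak-strong syllable pair.

    ASSUMED sign convention: positive when the stressed syllable is longer,
    near 0 when the two syllables are equalized.
    """
    return 100 * (strong_s - weak_s) / ((strong_s + weak_s) / 2)
```

Equal durations give a PVI of 0. More equalized syllables, as in “excess and equal stress,” therefore push both indices toward 0, which is consistent with the lower PVI values reported for speakers with AOS.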

Selecting a Speech Sample

The task that is used to collect the speech samples matters, as do the speakers’ interpretations of the task purpose. During free expression or semispontaneous speech, such as describing a picture or telling a story that is familiar to the conversation partner, speakers can choose their own words, consciously or subconsciously avoiding those they find challenging and relying instead on accessible and phonetically simple expressions. Conversely, when lexical retrieval, phonological preparation, or grammatical formulation is severely impaired, speakers may have limited ability to generate meaningful discourse on their own and consequently produce too few exemplars for a proper evaluation of their true motor abilities. Because of the risk that connected speech will overestimate speech abilities in mild-to-moderate AOS/aphasia and underestimate them in severe aphasia, experienced clinicians routinely supplement interviews and semistructured connected speech samples with a motor speech examination (Duffy, 2020; Wertz et al., 1984). The examination is repetition-based to reduce lexical and syntactic complications and includes words with both low and high phonetic complexity to ensure appropriate challenge across severity levels. With this focus, the clinician has a uniform foundation for identifying articulatory, prosodic, and rate features that are indicative of AOS and dysarthria. Because the diagnosis of AOS is integral to the differentiation between nfvPPA and lvPPA, a motor speech examination should highlight critical differences, and with proper definitions, these differences should be measurable. In comparison, a connected speech sample may or may not preserve specificity to AOS features and may, therefore, be less useful for differentiating between nfvPPA and lvPPA based on speech production alone.

Purpose of This Study

We conducted this study to determine to what extent metrics of sound production and temporal prosody differentiate between nfvPPA and lvPPA in word repetition and connected speech samples. We hypothesized that we would be able to replicate diagnostic group differences for stroke AOS at the word level. Specifically, we expected that distortion errors would be more frequent and syllable durations longer and more equalized in nfvPPA than in lvPPA. We were unsure to what extent similar distinctions would be present in connected speech.

Method

Participants and Speech Samples

The study was conducted retrospectively. We analyzed speech samples produced by 20 native English speakers with PPA, 10 of whom were diagnosed with nfvPPA and 10 of whom were diagnosed with lvPPA. Participants were selected from a larger cohort based on the availability of three targeted speech tasks, described below. For descriptive comparison, we also present data for five participants with the semantic variant (svPPA). These five participants were not included in statistical comparisons, because the study purpose was to identify differences between the variants with the most similar speech output. Our expectation was simply that the svPPA speakers would serve as a supplemental reference point for low frequencies of sound errors and relatively normal speaking rate.

Data were stored and processed according to procedures approved by the human research ethics institutional review boards for the collaborating universities. Other than progressive neurologic disease, participants had no history of stroke or other neurologic condition. PPA variant classification was made by an interdisciplinary team evaluation based on a battery of neurological, language, speech, and neuropsychological testing, which has been detailed elsewhere (Gorno-Tempini et al., 2004). Demographics and clinical test results are presented in Table 1. Speech and language scores diverged as expected based on the variant classification. Though all participants scored 12 or greater on the Mini-Mental State Examination (MMSE), the MMSE scores (which reflected all intelligible responses given by participants) were significantly higher for the nfvPPA group (Mdn = 28) than for the lvPPA group (Mdn = 21, p = .002). There were no group differences in age, years of education, time post onset, or aphasia severity as estimated by the Western Aphasia Battery–Revised (WAB-R; Kertesz, 2006).

Table 1.

Participant demographics and clinical test results (median and range).

| Variable | nfvPPA (n = 10) | lvPPA (n = 10) | svPPA (n = 5) |
|---|---|---|---|
| Age (years) | 68.5 (57.0–79.0) | 68.0 (58.0–77.0) | 71.0 (64.0–74.0) |
| Sex | 5 female, 5 male | 3 female, 7 male | 3 female, 2 male |
| Handedness | 10 right | 10 right, 0 left | 4 right, 1 ambidext. |
| Time since symptom onset (months) | 48.0 (24.0–72.0) | 48.0 (12.0–132.0) | 30.0 (24.0–72.0) |
| Education (years) | 16.0 (12.0–18.0) | 16.0 (12.0–21.0) | 21.0 (16.0–21.0) |
| MMSE | 28.0 (24.0–30.0) | 21.0 (16.0–27.0) | 26.0 (11.0–27.0) |
| CVLT-SFᵃ Trials 1–4 total | 25.0 (18.0–31.0) | 11.5 (7.0–28.0) | 19.5 (1.0–21.0) |
| CVLT-SFᵃ 30 s free recall | 6.5 (4.0–9.0) | 3.0 (0.0–6.0) | 5.0 (0.0–6.0) |
| CVLT-SFᵃ 10 min free recall | 6.5 (5.0–9.0) | 2.5 (1.0–5.0) | 3.5 (0.0–5.0) |
| Complex figure copyᵃ | 14.0 (9.0–17.0) | 16.0 (13.0–17.0) | 16.0 (5.0–17.0) |
| Complex figure recall (10 min)ᵃ | 11.5 (5.0–15.0) | 8.0 (2.0–10.0) | 5.5 (5.0–11.0) |
| Digit span (forward) | 5.0 (4.0–8.0) | 4.5 (3.0–6.0) | 6.0 (4.0–8.0) |
| Digit span (backward) | 3.0 (2.0–5.0) | 3.0 (2.0–4.0) | 4.0 (3.0–4.0) |
| WAB-R Aphasia Quotient | 84.5 (76.2–96.9) | 84.8 (68.1–91.0) | 77.6 (57.6–93.6) |
| WAB-R Repetition | 87.5 (69.0–96.0) | 74.0 (62.0–94.0) | 80.0 (63.0–100.0) |
| WAB-R Sequential Commands | 80.0 (58.0–80.0) | 71.5 (36.0–80.0) | 72.0 (38.0–80.0) |
| BNT | 55.0 (50.0–58.0) | 44.5 (2.0–51.0) | 15.0 (1.0–34.0) |
| PPT | 14.0 (13.0–14.0) | 14.0 (13.0–14.0) | 14.0 (8.0–14.0) |
| PPVTᵃ | 16.0 (11.0–16.0) | 15.0 (10.0–16.0) | 13.5 (7.0–16.0) |
| AOS severity | 3.0 (2.0–6.0) | 0.0 (0.0–0.0) | 0.0 (0.0–0.0) |
| Dysarthria severity | 2.5 (0.0–5.0) | 0.0 (0.0–0.0) | 0.0 (0.0–0.0) |

Note. nfvPPA = nonfluent variant of primary progressive aphasia; lvPPA = logopenic variant of primary progressive aphasia; svPPA = semantic variant of primary progressive aphasia; ambidext. = ambidextrous; MMSE = Mini-Mental State Examination (/30); CVLT-SF = California Verbal Learning Test, Short Version (/9 per trial; not available for two participants in the nfvPPA group, one in the lvPPA group, and one in the svPPA group); Complex figure copy and recall = /17, not available for one in the svPPA group; WAB-R = Western Aphasia Battery–Revised; WAB-R Aphasia Quotient = /100, not available for two participants in the lvPPA group; WAB-R Repetition = /100; WAB-R Sequential Commands = /80; BNT = Boston Naming Test (/60; not available for one participant in the nfvPPA group); PPT = Pyramids and Palm Trees Test, Short Version (/14); PPVT = Peabody Picture Vocabulary Test, Short Version (/16; not available for one participant in the nfvPPA group, two in the lvPPA group, and one in the svPPA group); AOS = apraxia of speech (rating: eight levels, 0 = no AOS, 7 = severe AOS); Dysarthria = rating: eight levels (0 = no dysarthria, 7 = severe dysarthria).

ᵃ From the neuropsychological battery described in Kramer et al. (2003).

The speech assessment included a repetition-based motor speech examination (Wertz et al., 1984) and the spontaneous speech tasks from the WAB-R (Kertesz, 2006). Based on these tasks, AOS and dysarthria were diagnosed by clinical impression, using a severity rating (0 = none present, 1 = minimal, 2 = mild, 3 = mild to moderate, 4 = moderate, 5 = moderately severe, 6 = severe, 7 = profound). All speakers in the nfvPPA group were diagnosed with AOS, with severity ranging from mild to severe. None of the other speakers were diagnosed with AOS. Additionally, eight of 10 speakers with nfvPPA were diagnosed with minimal to moderate dysarthria, whereas all other speakers were judged to be free from dysarthria. Dysarthria was diagnosed based on perceptual characteristics alone. Though subtyping was not conducted specifically, the impressions were consistent with spastic, hypokinetic, or mixed spastic–hypokinetic dysarthria. The AOS and dysarthria proportions are similar to previously published samples from the University of California San Francisco and the University of Texas at Austin (e.g., Henry et al., 2018; Santos-Santos et al., 2016). Nine participants in the nfvPPA group showed evidence of both agrammatism and AOS, but one presented with AOS and dysarthria without agrammatism. This participant scored above the aphasia cutoff on the WAB-R and had mild-to-moderate AOS and moderate dysarthria. Other research teams may have used a diagnosis of PPAOS for this specific case. However, because we followed the international consensus criteria, under which either agrammatism or AOS is sufficient for diagnosis and both are not required (Gorno-Tempini et al., 2011), we included him in the nfvPPA group. Six of the 10 participants in the lvPPA group were observed to produce salient phonological errors in confrontation naming or connected speech during the clinical evaluation.

Each participant produced three speech samples for video recording. The audio signal was extracted from the video for this study. The first speech sample was the motor speech examination. It was elicited with a published protocol that consisted of 36 words (19 monosyllabic, five disyllabic, and 12 multisyllabic), organized in sections of single words, consecutive word repetitions, and words of increasing length (Wertz et al., 1984). Participants were asked to repeat these words, in a fixed order, after experimenter modeling. The second sample was a connected speech monologue in the form of the picnic scene picture description task from the WAB-R. Participants were asked to tell the examiner what they saw in the picture and to try to talk in sentences. As a third speech sample, we used the Cinderella story narrative task. Participants were given a wordless picture book of Cinderella to review; the experimenter then removed the picture book and asked them to tell the story in their own words. The Cinderella speech sample was available only for a subset of study participants (eight nfvPPA, eight lvPPA, and three svPPA).

Sound Error Coding

Three phonetically trained and experienced transcribers—blinded to participant diagnosis—completed narrow phonetic transcription of all three speech samples. They used headphones and supplemented their auditory perception with waveform/spectrographic displays in Praat (Boersma & Weenink, 2017). Each transcriber had completed one course in phonetics under the direction of the first author, followed by specialized training in narrow transcription that lasted an additional university semester and exclusively involved transcription of speech samples from people with stroke-induced aphasia, dysarthria, and AOS. Aside from clinical observations, the current study was the transcribers’ first exposure to speech in PPA.

The coding was completed in Excel spreadsheets, using computer-readable phonetic characters (Vitevitch & Luce, 2004) to mark English phonemes and numerical codes to mark 11 distortion categories. The distortion categories were identified across three successive studies of left-hemisphere stroke survivors with aphasia and AOS. In the first study (Cunningham et al., 2016), our team transcribed speech samples with a set of 35 distortion marks from a clinical phonetics resource (Shriberg & Kent, 2003). Next, we reduced the set gradually by eliminating categories that were observed infrequently and combining others that were redundant for our purposes (Haley et al., 2017, 2019). Definitions for the remaining 11 distortion categories are provided in Appendix A. The transcribers were instructed to reserve distortion codes for abnormal or unusual productions and not to use them for normal allophonic variation.

The transcription team used a consensus method to maximize accuracy. First, two transcribers reviewed the audio-recorded speech samples independently. If there were any differences in the exact transcription for a word, the third coder transcribed the word without knowledge of the other two transcriptions. Exact agreement with one of the other coders was used as the consensus transcription. If the third coder did not fully agree with one of the other coders, all three coders listened to the word, discussed their impressions, and generated a narrow phonetic transcription that all three endorsed.
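The consensus rule described above can be expressed as a small decision function. This sketch assumes that transcriptions are compared as exact strings of computer-readable symbols. The function name is hypothetical, and the final group-discussion step is represented only by returning None.

```python
from typing import Callable, Optional


def consensus_transcription(first: str, second: str,
                            third_coder: Callable[[], str]) -> Optional[str]:
    """Apply the consensus rule to one word.

    first, second: independent transcriptions by two coders.
    third_coder: called only when they differ; returns a blind third transcription.
    Returns the consensus transcription, or None when all three coders must
    listen again and agree on a transcription together.
    """
    if first == second:
        return first
    third = third_coder()
    if third in (first, second):  # exact agreement with either earlier coder
        return third
    return None
```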

All 36 target words in the motor speech examination were transcribed phonetically. Per the protocol, six of the multisyllabic words were repeated five times consecutively. For these targets, we transcribed only the first production. If it did not contain the correct number of syllables, the team transcribed the first production whose syllable count was closest to the target. This happened rarely, for example, when a vowel was added in a consonant cluster or deleted in an unstressed syllable. The same strategy of transcribing the first valid response was used when productions were self-corrected. These decision rules are consistent with our previous research (e.g., Haley et al., 2020, 2017, 2019), and their purpose was to ensure assessment fidelity.
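The syllable-count rule for repeated productions amounts to choosing the first production whose syllable count is closest to the target. The (transcription, syllable count) tuple format and the function name below are hypothetical, and self-corrections are not modeled.

```python
def select_production(productions, target_syllables):
    """Return the first (transcription, syllable_count) item whose syllable
    count is closest to target_syllables; min() keeps the first of any ties."""
    if not productions:
        raise ValueError("no productions to choose from")
    return min(productions, key=lambda p: abs(p[1] - target_syllables))
```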

The transcription process was adjusted slightly for the connected speech samples. Fully correct words were simply indicated orthographically, but the perception of any sound error prompted narrow phonetic transcription of the entire word. The first transcriber generated an orthographic gloss and identified words that were to be evaluated. Prepositions, pronouns, determiners, conjunctions, auxiliary verbs, and interjections or fillers (e.g., “wow,” “oh,” and “um”) were not transcribed because they are often brief in connected speech and difficult to code with narrow phonetic transcription. As in the motor speech examination, the transcribers analyzed only the first production when a word was produced more than once sequentially. No partial word revisions were transcribed.

Phonemic errors were operationally defined as those involving the substitution, omission, addition, or transposition of phoneme symbols; distortion errors were operationally defined as the addition of one or more distortion marks to a transcribed phoneme. The three dependent variables included the percentage of evaluated words that were transcribed with at least one of the following: (a) a phonemic error, (b) a distortion error, or (c) a phonemic error that was simultaneously coded with a distortion error. Henceforth, we will refer to the latter error category as “distorted substitutions.” Distorted substitution errors were a subset of both phonemic errors and distortion errors, which meant that words with distorted substitution errors were also coded as words with phonemic errors and words with distortion errors. By expressing error frequency as the proportion of affected words rather than affected phonemes, we were able to use comparable metrics across samples without having to generate a target transcription for all content words in the connected speech samples. Definitions of the dependent variables are provided in Appendix B, and illustrations of transcription coding are provided in Appendix C.
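Because the dependent variables are word-level percentages, they can be computed from segment-level codes once each evaluated word is represented as a list of segments flagged for phonemic error and for distortion. The Segment class and function below are a hypothetical data model, not the study's spreadsheet format. The sketch also assumes that a distorted substitution means a phonemic error and a distortion mark on the same segment; as described above, such a word is counted in all three percentages.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    phonemic: bool = False   # substituted, omitted, added, or transposed
    distorted: bool = False  # carries at least one distortion mark


def word_error_percentages(words: list[list[Segment]]) -> dict[str, float]:
    """Percentage of evaluated words containing each error type."""
    n = len(words)
    phonemic = sum(any(s.phonemic for s in w) for w in words)
    distortion = sum(any(s.distorted for s in w) for w in words)
    # ASSUMPTION: a distorted substitution is a phonemic error and a distortion
    # mark coded on the same segment.
    distorted_sub = sum(any(s.phonemic and s.distorted for s in w) for w in words)
    return {
        "phonemic": 100 * phonemic / n,
        "distortion": 100 * distortion / n,
        "distorted_substitution": 100 * distorted_sub / n,
    }
```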

For the motor speech examination sample where the target word was known and consistent across speakers, we were also able to calculate the percentage of consonant/vowel segments with a phonetic distortion mark and the percentage of consonant/vowel segments that were phonemically incorrect (derived from the phonemic edit distance; Smith et al., 2019). The purpose of these latter analyses was to interpret the error frequencies we observed relative to stroke data our team collected previously, using similar transcription methods.
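The segment-level phonemic percentage can be illustrated with a standard Levenshtein edit distance computed over phoneme symbols. This is a sketch under two assumptions: the percentage is taken as the edit distance divided by the number of target segments, and insertions, deletions, and substitutions each cost 1. The phonemic edit distance procedure of Smith et al. (2019) may differ in detail. Note also that plain Levenshtein distance counts a transposition as two edits. The function names are hypothetical.

```python
def phonemic_edit_distance(target, produced):
    """Levenshtein distance between two phoneme sequences (unit costs)."""
    previous = list(range(len(produced) + 1))
    for i, t in enumerate(target, start=1):
        current = [i]
        for j, p in enumerate(produced, start=1):
            current.append(min(
                previous[j] + 1,             # target phoneme omitted
                current[j - 1] + 1,          # extra phoneme added
                previous[j - 1] + (t != p),  # substitution (free if matching)
            ))
        previous = current
    return previous[-1]


def percent_segments_incorrect(target, produced):
    """Edit distance as a percentage of target segments (assumed definition)."""
    if not target:
        raise ValueError("target must contain at least one phoneme")
    return 100 * phonemic_edit_distance(target, produced) / len(target)


# Usage with ASCII stand-ins for IPA symbols: /k ae t s/ produced as [t ae t].
print(phonemic_edit_distance(["k", "ae", "t", "s"], ["t", "ae", "t"]))      # 2
print(percent_segments_incorrect(["k", "ae", "t", "s"], ["t", "ae", "t"]))  # 50.0
```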

Rate and Prosody Coding

Six student research assistants completed the rate and prosody analyses. Two of them also participated in the narrow phonetic transcription coding, whereas four worked exclusively on rate and prosody coding. The coders completed their measurements independently while blinded to participant diagnosis. The primary task was to mark the onset and offset of syllables and vowel nuclei. This segmentation was based on visual inspection of waveform, spectrogram, and intensity analyses in the Praat software, in combination with auditory judgment. Each coder had completed a semester-long course in acoustic analysis and received individualized instruction on how to perform the task. Boundary marks were extracted from TextGrids with custom scripts that imported duration values into Excel for final calculations.
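The custom extraction scripts are not described here. As a hypothetical stand-in, the following Python sketch reads interval durations from one tier of a Praat TextGrid saved in the long ("ooTextFile") text format. It assumes a tier named `syllables` whose non-empty intervals mark syllables. The sketch uses regular expressions rather than a full parser, so it does not handle short-format files or labels containing escaped (doubled) quotation marks.

```python
import re

# One interval entry in a long-format TextGrid: xmin, xmax, and quoted label.
INTERVAL_RE = re.compile(
    r'intervals \[\d+\]:\s*xmin = ([-\d.eE+]+)\s*xmax = ([-\d.eE+]+)\s*text = "(.*?)"',
    re.DOTALL,
)


def labeled_durations(textgrid_text, tier_name):
    """Return (label, duration in s) for each non-empty interval on one tier."""
    # Split the file into tier blocks at each "item [n]:" header; the first
    # chunk is the file header and is skipped.
    blocks = re.split(r'\n\s*item \[\d+\]:', textgrid_text)
    for block in blocks[1:]:
        if re.search(r'name = "' + re.escape(tier_name) + '"', block):
            return [
                (label, float(xmax) - float(xmin))
                for xmin, xmax, label in INTERVAL_RE.findall(block)
                if label.strip()  # unlabeled intervals (e.g., pauses) are skipped
            ]
    raise KeyError(f"tier {tier_name!r} not found")


SAMPLE = '''File type = "ooTextFile"
Object class = "TextGrid"

xmin = 0
xmax = 1
tiers? <exists>
size = 1
item []:
    item [1]:
        class = "IntervalTier"
        name = "syllables"
        xmin = 0
        xmax = 1
        intervals: size = 3
        intervals [1]:
            xmin = 0
            xmax = 0.25
            text = "ka"
        intervals [2]:
            xmin = 0.25
            xmax = 0.5
            text = ""
        intervals [3]:
            xmin = 0.5
            xmax = 1
            text = "ta"
'''

print(labeled_durations(SAMPLE, "syllables"))  # [('ka', 0.25), ('ta', 0.5)]
```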

For the motor speech examination, we obtained acoustic measures of prosody from the multisyllabic words. WSD was measured for all 12 multisyllabic words; SI was computed for words with four or more syllables. In addition, PVI was calculated for four multisyllabic words that had a weak–strong stress pattern (“catastrophe” comparing Syllables 1 and 2, “microscopic” and “segregation” comparing Syllables 2 and 3, and “impossibility” comparing Syllables 3 and 4). Detailed definitions are provided in Appendix B.
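The word-level measures can be sketched as follows. Appendix B is not reproduced here, so the sketch makes two illustrative assumptions. First, WSD is taken as the mean syllable duration within a multisyllabic word. Second, the PVI for each weak–strong word is taken as the normalized pairwise variability index, 100 × |d1 − d2| divided by the mean of d1 and d2, applied to the two syllables named in the text. Whether the study's PVI is signed, or is computed on syllable rather than vowel durations, should be checked against Appendix B.

```python
def word_syllable_duration(syllable_durations_ms):
    """Mean syllable duration (ms) within one multisyllabic word (assumed WSD)."""
    if len(syllable_durations_ms) < 2:
        raise ValueError("WSD applies to multisyllabic words only")
    return sum(syllable_durations_ms) / len(syllable_durations_ms)


def pair_pvi(d1, d2):
    """Normalized PVI for one syllable pair: 100 * |d1 - d2| / mean(d1, d2)."""
    return 100 * abs(d1 - d2) / ((d1 + d2) / 2)


# Weak-strong syllable pairs compared for each word (1-based positions),
# as listed in the text.
WEAK_STRONG_PAIRS = {
    "catastrophe": (1, 2),
    "microscopic": (2, 3),
    "segregation": (2, 3),
    "impossibility": (3, 4),
}


def word_pvi(word, syllable_durations_ms):
    """PVI for the weak-strong pair of one of the four target words."""
    first, second = WEAK_STRONG_PAIRS[word]
    return pair_pvi(syllable_durations_ms[first - 1], syllable_durations_ms[second - 1])


# Usage with made-up durations (ms) for the four syllables of "catastrophe".
durations = [150, 250, 200, 180]
print(word_syllable_duration(durations))   # 195.0
print(word_pvi("catastrophe", durations))  # 50.0
```

A value near 0 means the two syllables have similar durations, which is the stress-equalization pattern the measure is intended to capture.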

For the connected speech samples, the first step was to segment the samples into analysis groups in such a way that particularly “long” pauses were eliminated. The end of an analysis group was defined by (a) speech disfluency, filler words, laughing, humming, or other nonspeech sounds or (b) the presence of a “long” pause. As planned, we customized the criterion for a long pause to each participant and each connected speech sample. Specifically, we used the median absolute deviation (MAD; Leys et al., 2013) to establish cutoff values. The steps for finding the MAD were as follows: (1) determine the median duration of all intersyllable intervals (i.e., pauses), (2) find the absolute deviation of each intersyllable interval from the median in Step 1, (3) obtain the median of the series of absolute deviations in Step 2, and (4) multiply the median absolute deviation in Step 3 by a constant b that is equal to 1 divided by the 75th percentile of the distribution in Step 3. Prosodic measures cannot be obtained from analysis groups with fewer than four syllables, so any analysis groups that were this short were excluded. Average cutoffs and analysis group data are provided in Appendix D. Note, for example, that the cutoff for a “long” pause was about twice as long in the WAB-R Picture Description task (nfvPPA, M = 1.12 s; lvPPA, M = 0.96 s) as in the Cinderella story task (nfvPPA, M = 0.56 s; lvPPA, M = 0.51 s). Once the analysis groups were prepared, we derived the following metrics according to defined formulas for connected speech: narrative syllable duration (NSD), PVI, and SI. Definitions are provided in Appendix B.
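The pause-based segmentation can be sketched in Python as below, with Steps 1–4 mapped onto `scaled_mad`. Two details are assumptions not stated in the text. The constant b is set to 1.4826, the conventional value under a normality assumption discussed by Leys et al. (2013). The long-pause cutoff is taken as the median plus k × MAD, with a hypothetical k = 2.5. Breaks triggered by disfluencies or nonspeech sounds (criterion a) are not modeled.

```python
from statistics import median

B_NORMAL = 1.4826  # assumed constant b, the usual normal-consistency value


def scaled_mad(pauses_s, b=B_NORMAL):
    """Median absolute deviation of intersyllable intervals, scaled by b."""
    center = median(pauses_s)                         # Step 1
    deviations = [abs(p - center) for p in pauses_s]  # Step 2
    return b * median(deviations)                     # Steps 3 and 4


def long_pause_cutoff(pauses_s, k=2.5):
    """Hypothetical cutoff rule: median + k * MAD (k is an assumption)."""
    return median(pauses_s) + k * scaled_mad(pauses_s)


def analysis_groups(syllables, pauses_s, cutoff, min_syllables=4):
    """Split syllables at pauses longer than cutoff; drop groups that are too short.

    pauses_s[i] is the interval between syllables[i] and syllables[i + 1].
    """
    if not syllables or len(pauses_s) != len(syllables) - 1:
        raise ValueError("need one pause between each pair of adjacent syllables")
    groups, current = [], [syllables[0]]
    for syllable, pause in zip(syllables[1:], pauses_s):
        if pause > cutoff:  # a "long" pause ends the current group
            groups.append(current)
            current = []
        current.append(syllable)
    groups.append(current)
    return [g for g in groups if len(g) >= min_syllables]


# Usage with made-up values: ten syllables and nine intersyllable intervals.
syllables = list("abcdefghij")
pauses = [0.05, 0.10, 0.15, 0.20, 0.10, 1.00, 0.05, 0.15, 0.10]
cutoff = long_pause_cutoff(pauses)  # about 0.285 s with these values
print(analysis_groups(syllables, pauses, cutoff))
```

Because the cutoff is recomputed from each speaker's own pause distribution, the same absolute pause can end a group for one participant but not for another. This matches the per-participant, per-sample customization described above.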

To estimate interobserver reliability, a second coder repeated the analysis group segmentation and duration measurement independently for 25% of the samples. Reliability was expressed with intraclass correlations (ICCs), using a single-rater, absolute-agreement, two-way random-effects model (R package irr v 0.84.1; Gamer et al., 2019) and interpretation guidelines provided by Koo and Li (2016). Measurements were compared by individual words for the motor speech examination and by analysis groups for the connected speech samples. Based on the primary coder’s analysis group boundaries, average measures of WSD, NSD, PVI, and SI were calculated from raw duration calculations reported by the secondary coder and then compared to results from the primary coder.
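The single-rater, absolute-agreement, two-way random-effects ICC can be computed from two-way ANOVA mean squares: ICC = (MSR − MSE) / (MSR + (k − 1)MSE + (k/n)(MSC − MSE)). Here n is the number of rated items (rows), k is the number of raters (columns), and MSR, MSC, and MSE are the row, column, and residual mean squares. The sketch below is a plain-Python version of that formula, not the irr package code, and it omits confidence intervals. The helper applies the Koo and Li (2016) bands to a single value, such as a point estimate or a confidence bound; how exact boundary values are assigned is our assumption.

```python
def icc_a1(ratings):
    """Single-rater, absolute-agreement, two-way random-effects ICC.

    ratings: list of rows, one per rated item, each with one value per rater.
    """
    n, k = len(ratings), len(ratings[0])
    if n < 2 or k < 2 or any(len(row) != k for row in ratings):
        raise ValueError("need a complete table with >= 2 items and >= 2 raters")
    grand = sum(map(sum, ratings)) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]

    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + (k / n) * (ms_cols - ms_error)
    )


def koo_li_label(value):
    """Koo and Li (2016) bands; assignment of exact boundary values is assumed."""
    if value < 0.5:
        return "poor"
    if value < 0.75:
        return "moderate"
    if value <= 0.9:
        return "good"
    return "excellent"


# Usage: the second coder's durations (ms) run 10 ms longer than the first's.
pairs = [[180, 190], [220, 230], [260, 270], [300, 310]]
print(round(icc_a1(pairs), 3))  # 0.982
```

In the usage example, the two coders rank the items identically, yet the constant 10-ms offset lowers the ICC below 1. This is the behavior that distinguishes an absolute-agreement model from a consistency model.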

ICCs indicated excellent reliability for all raw measurements of syllable duration (ICC > .930) and for the derived average syllable duration metrics (WSD and NSD ICCs ≥ .924; see Table 2). In previous work, we have observed that reliability for derived measures, such as the PVI, can be markedly worse than for the raw measures used to calculate them (Haley & Jacks, 2019). There was a similar relationship in this study. Starting with the motor speech examination word repetition task, interobserver reliability was excellent for the individual syllable durations on which the word-level PVI and SI metrics were based (ICC = .976, CI [.958, .986]). However, as shown in Table 2, reliability was only moderate to good for the PVI and SI values that were derived from these measurements (ICC = .844, CI [.570, .944] for PVI; ICC = .817, CI [.713, .885] for SI).

Table 2.

Reliability for rate and prosody variables.

| Variable | ICC | 95% Confidence interval |
|---|---|---|
| Motor speech examination | | |
| Word syllable duration (ms) | .991 | [.964, .997] |
| Pairwise variability index | .844 | [.570, .944] |
| Scanning index | .817 | [.713, .885] |
| Western Aphasia Battery Picture Description | | |
| Word syllable duration (ms) | .981 | [.961, .991] |
| Narrative syllable duration (ms) | .924 | [.872, .952] |
| Pairwise variability index | .600 | [.477, .701] |
| Scanning index | .890 | [.847, .921] |
| Cinderella story telling | | |
| Word syllable duration (ms) | .957 | [.929, .974] |
| Narrative syllable duration (ms) | .939 | [.919, .954] |
| Pairwise variability index | .363 | [.085, .559] |
| Scanning index | .702 | [.597, .778] |

Note. ICC = intraclass correlation, calculated with a single-rater, absolute-agreement, two-way random-effects model.

Interobserver reliability in the connected speech samples showed a similar pattern. Whereas the ICCs were excellent for the raw syllable duration measures that entered the equations (ICC = .937, CI [.912, .955] for the picture description; ICC = .937, CI [.892, .960] for the Cinderella story), they were poor to moderate for the derived connected speech PVI (ICC = .600, CI [.477, .700] for the picture description; ICC = .363, CI [.085, .559] for the Cinderella story) and moderate to good for the derived connected speech SI (ICC = .890, CI [.847, .921] for the picture description; ICC = .702, CI [.597, .778] for the Cinderella story).

Analysis Plan

The reference standard for classification was the interdisciplinary team’s diagnosis of PPA variant, which for this sample corresponded exactly with a clinical diagnosis of AOS. Inferential statistics were restricted to the nfvPPA and lvPPA speakers and completed in R. Due to nonnormal distributions and the small sample size, we used the Mann–Whitney U statistic for group comparisons. In the event of a tie between two rank-ordered values, the normal approximation was used to estimate the p value. We report differences significant at p < .05. To index the strength of classification, we conducted receiver operating characteristic analyses, using the R package pROC (Robin et al., 2011), and calculated the area under the curve (AUC), which we interpreted qualitatively according to common criteria (e.g., Carter et al., 2016). Finally, we calculated the Spearman correlation to evaluate stability across speech samples for the measures that best distinguished between the two variants.
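The link between the Mann–Whitney U statistic and the AUC can be shown in a few lines. The AUC equals U divided by n1 × n2, that is, the proportion of nfvPPA–lvPPA pairs in which the nfvPPA value is higher, with ties counted as one half. The sketch below computes U, the AUC, Spearman's ρ from average ranks, and a qualitative label. The label uses bands commonly cited for AUC (.90–1.00 excellent, .80–.90 good, .70–.80 fair, .60–.70 poor), which are assumed here to match the criteria cited above. This is a plain-Python illustration, not the pROC implementation, and it omits p values and confidence intervals.

```python
def mann_whitney_u(x, y):
    """U for x versus y: pairs with x > y, counting ties as one half."""
    return sum(1.0 if a > b else 0.5 if a == b else 0.0 for a in x for b in y)


def auc(x, y):
    """Empirical AUC when higher values indicate membership in group x."""
    return mann_whitney_u(x, y) / (len(x) * len(y))


def auc_label(value):
    """Commonly used qualitative bands for the AUC (boundary handling assumed)."""
    for threshold, label in ((0.9, "excellent"), (0.8, "good"), (0.7, "fair"), (0.6, "poor")):
        if value >= threshold:
            return label
    return "fail"


def average_ranks(values):
    """1-based ranks, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        for pos in range(start, end + 1):
            ranks[order[pos]] = (start + end) / 2 + 1
        start = end + 1
    return ranks


def spearman_rho(x, y):
    """Spearman correlation: Pearson correlation of the average ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sum((a - mx) ** 2 for a in rx) ** 0.5
    sy = sum((b - my) ** 2 for b in ry) ** 0.5
    return cov / (sx * sy)  # undefined (ZeroDivisionError) if all values tie


# Usage with made-up syllable durations (ms): higher values expected for nfvPPA.
nfv = [430, 390, 455, 410]
lv = [250, 270, 240, 400]
print(auc(nfv, lv), auc_label(auc(nfv, lv)))  # 0.9375 excellent
```

For measures where nfvPPA values are expected to be lower, such as the PVI, the arguments to `auc` would be swapped so that the AUC still reflects separation in the expected direction.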

Results

Sound Errors

The presentation of results will focus on the nfvPPA and lvPPA groups. Descriptive results for the svPPA participants were similar to those for the lvPPA participants (see Figure 1 and Appendix E). There were no statistically significant differences between the nfvPPA and lvPPA groups in terms of the number of analyzed words for any of the samples. Participants in both groups produced most of the 36 target words in the motor speech examination sample (nfvPPA Mdn = 35.5, lvPPA Mdn = 33.0). In comparison, speech samples from the WAB-R Picnic Scene Picture Description task generated a higher frequency of words that were not prepositions, pronouns, determiners, conjunctions, auxiliary verbs, interjections, or fillers and thus eligible for transcription (nfvPPA Mdn = 45.5, lvPPA Mdn = 57.5), and the frequency of transcribed words was even greater for the Cinderella story narrative task (nfvPPA Mdn = 87.0, lvPPA Mdn = 89.0). The Cinderella task generated story telling that continued for a longer time than the WAB-R Picture Description; however, the number of evaluated words per minute was comparable (nfvPPA Mdn = 16.7, lvPPA Mdn = 22.2 for the WAB-R and nfvPPA Mdn = 15.8, lvPPA Mdn = 18.1 for the Cinderella story).

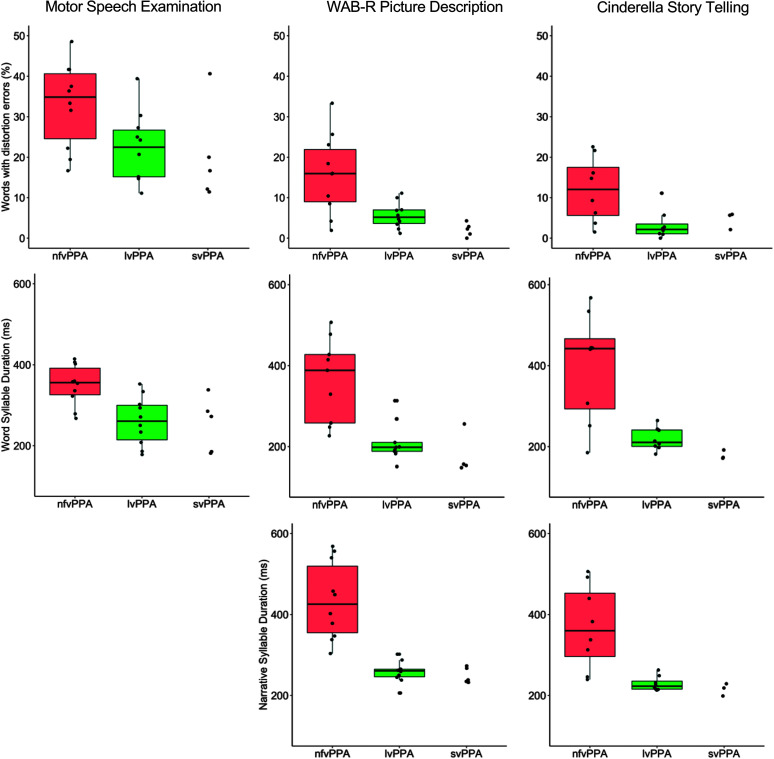

Figure 1.

Percent words produced with distortion errors (top), duration of word syllables (middle), and narrative syllable duration (bottom) across the three speech samples. WAB-R = Western Aphasia Battery–Revised; nfvPPA = nonfluent variant of primary progressive aphasia; lvPPA = logopenic variant of primary progressive aphasia; svPPA = semantic variant of primary progressive aphasia.

Table 3 shows the results of the group comparisons for the dependent variables that were derived from the phonetic transcription analysis. The percentage of words with one or more distortion errors was significantly greater in the nfvPPA group than in the lvPPA group for all three speech samples. In particular, the lvPPA speakers rarely produced words with distortion errors during the connected speech samples (Mdn = 5.2% of words for the WAB-R Picture Description and 2.2% of words for the Cinderella story), and the proportion of distortion errors was considerably greater in these samples for the nfvPPA participants (Mdn = 16.0% of words for the WAB-R Picture Description and 12.0% of words for the Cinderella story). The receiver operating characteristic analysis showed that discrimination was fair for the motor speech examination sample (AUC = .790) and good for the connected speech samples (AUC = .820 and .859). The improved differentiation from word repetition to connected speech is illustrated in Figure 1 (top row), which also shows that the distortion error frequencies were considerably greater for both speaker groups in the motor speech examination word repetition samples than in the two connected speech samples.

Table 3.

Median values of sound error measures across the three speech samples and statistical group comparisons.

| Variable | nfvPPA | lvPPA | p | AUC |
|---|---|---|---|---|
| Motor speech examination | | | | |
| Words with phonemic errors (%) | 23.6 | 12.9 | .256 | .655 |
| Words with distortion errors (%) | 34.8 | 22.5 | .031* | .790 |
| Words with distorted substitution errors (%) | 2.9 | 0.0 | .439 | .600 |
| Western Aphasia Battery Picture Description | | | | |
| Words with phonemic errors (%) | 15.6 | 3.1 | .009** | .850 |
| Words with distortion errors (%) | 16.0 | 5.2 | .015* | .820 |
| Words with distorted substitution errors (%) | 0.0 | 0.0 | NA | NA |
| Cinderella story telling | | | | |
| Words with phonemic errors (%) | 11.6 | 3.1 | .083 | .766 |
| Words with distortion errors (%) | 12.0 | 2.2 | .015* | .859 |
| Words with distorted substitution errors (%) | 0.0 | 0.0 | .566 | .578 |

Note. Group comparisons were completed using the Mann–Whitney U statistic. AUC indicates area under the receiver operating curve for differentiating nfvPPA from lvPPA with clinical diagnosis as the reference standard. nfvPPA = nonfluent variant of primary progressive aphasia; lvPPA = logopenic variant of primary progressive aphasia; svPPA = semantic variant of primary progressive aphasia; NA = not applicable.

\*p < .05.

\*\*p < .01.

Although the nfvPPA group produced numerically greater percentages of words with phonemic errors than the lvPPA group did, the difference reached statistical significance only in the WAB-R Picture Description task (see Table 3; nfvPPA Mdn = 15.6%, lvPPA Mdn = 3.1%). On the motor speech examination, very few (Mdn = 2.9%) of the words produced by the nfvPPA participants included distorted substitution errors (consonant or vowel segments that were simultaneously coded with phonemic and distortion errors). None of the single words produced by the lvPPA participants and none of the words in the connected speech samples for either group included measurable frequencies of distorted substitution errors.

Recall that, to obtain a more fine-grained analysis and facilitate descriptive comparison with our previously reported stroke data, we also calculated the proportion of phoneme segments that were produced with phonemic or distortion errors, but we did this only for the motor speech examination sample. Like percent words with phonemic errors, percent segments with phonemic errors did not differ between groups (nfvPPA group Mdn = 27.2%, M = 28.8%; lvPPA group Mdn = 20.5%, M = 20.5%). In contrast to the word-level result, percent segments with distortion errors did not differ significantly between groups at this level of analysis (nfvPPA Mdn = 7.8%, M = 6.7%; lvPPA Mdn = 3.8%, M = 4.5%).

Across all three speech samples, our transcriber team coded a total of 282 distortion errors for the nfvPPA speakers and 139 distortion errors for the lvPPA speakers. Table 4 shows the proportion of these combined distortion errors that were coded with each of the 11 diacritic marks. Although there were twice as many distortion errors in the nfvPPA group, the distortion qualities were similar for both groups. Voicing ambiguity was most common, followed by sound lengthening and raised tongue body.

Table 4.

Distribution across the 11 coded distortion types and expressed as a proportion of all distortion errors in each participant group (282 for the nonfluent variant of primary progressive aphasia [nfvPPA], 139 for the logopenic variant of primary progressive aphasia [lvPPA]).

| Type of distortion errors coded | nfvPPA (%) | lvPPA (%) |
|---|---|---|
| Voicing ambiguity | 34.4 | 27.3 |
| Nasal ambiguity | 6.7 | 6.5 |
| Rhotic ambiguity | 2.5 | 1.4 |
| Frictionalized or weakened | 6.4 | 9.4 |
| Lengthened | 12.1 | 20.1 |
| Shortened | 1.8 | 3.6 |
| Centralized tongue body | 8.2 | 3.6 |
| Retracted tongue body | 6.7 | 5.8 |
| Advanced tongue body | 4.6 | 7.2 |
| Raised tongue body | 12.4 | 13.7 |
| Lowered tongue body | 4.3 | 1.4 |

Note. The distribution of distortions is presented within each speaker group and across all three speech samples.

Rate and Prosody

Rate and prosody measures were calculated for syllable sequences in multisyllabic words and connected speech. The frequency of multisyllabic words did not differ between the nfvPPA and lvPPA groups in any of the samples. However, there were large and expected differences among the three speech samples. For both speaker groups, almost one third of the produced word repetitions on the motor speech examination were multisyllabic (nfvPPA Mdn = 32.4%, lvPPA Mdn = 27.3%). In contrast, few words on the WAB-R Picture Description were multisyllabic (nfvPPA Mdn = 4.2%, lvPPA Mdn = 3.4%), with two participants (one in the nfvPPA group, one in the lvPPA group) producing no multisyllabic words at all on this task. The proportion of multisyllabic words for the Cinderella story-telling task was intermediate (nfvPPA Mdn = 12.0%, lvPPA Mdn = 12.7%).

Results of the rate and prosody comparisons are presented in Table 5. As predicted, WSD during the motor speech examination was longer for nfvPPA speakers (Mdn = 356.1 ms) than for lvPPA speakers (Mdn = 260.7 ms, p = .003), and the discrimination between these diagnostic groups was good (AUC = .880). Classification accuracy was good to excellent for multisyllabic words extracted from the two connected speech samples (p = .001, AUC = .926 for the WAB-R Picture Description sample and p = .010, AUC = .875 for the Cinderella story-telling sample). Figure 1 (middle row) illustrates how the connected speech samples magnified the WSD difference through diverging distributions and reduced variability for the lvPPA participants. The relative advantage of WSD in the connected speech samples is tempered by a modest correlation between the picture description and story-telling samples (ρ = .585), likely due to the very low number of multisyllabic words produced during the picture description task. Pairwise Spearman correlations across all WSD and NSD metrics are provided in Appendix F.

Table 5.

Median values of rate and prosody measures across the three speech samples and statistical group comparisons.

| Variable | nfvPPA | lvPPA | p | AUC |
|---|---|---|---|---|
| Motor speech examination | | | | |
| Word syllable duration (ms) | 356.1 | 260.7 | .003** | .880 |
| Pairwise variability index | 6.2 | 23.2 | .243 | .667 |
| Scanning index | .919 | .893 | .035* | .780 |
| Western Aphasia Battery Picture Description | | | | |
| Word syllable duration (ms) | 388.4 | 198.3 | .001** | .926 |
| Narrative syllable duration (ms) | 425.7 | 260.9 | < .001*** | 1.000 |
| Pairwise variability index | 50.8 | 53.1 | .353 | .630 |
| Scanning index | .652 | .468 | .043* | .770 |
| Cinderella story telling | | | | |
| Word syllable duration (ms) | 442.1 | 210.4 | .010* | .875 |
| Narrative syllable duration (ms) | 360.1 | 223.0 | .002** | .938 |
| Pairwise variability index | 59.1 | 70.1 | .010* | .875 |
| Scanning index | .799 | .650 | .010* | .875 |

Note. Group comparisons were completed using the Mann–Whitney U statistic. AUC indicates area under the receiver operating curve for differentiating nfvPPA from lvPPA with clinical diagnosis as the reference standard. nfvPPA = nonfluent variant of primary progressive aphasia; lvPPA = logopenic variant of primary progressive aphasia.

\*p < .05.

\*\*p < .01.

\*\*\*p < .001.

Variant classification was excellent to perfect for the NSD (AUC = 1.000 for the WAB-R Picture Description samples and .938 for the Cinderella samples). The classification utility of the NSD is further supported by a strong correlation for the NSD between the connected speech samples (ρ = .953; see Appendix F). When defining individualized MAD thresholds between longer and shorter pauses, we observed that these thresholds were almost twice as long in the WAB-R samples as in the Cinderella story (see Appendix D). Similarly, inspection of Table 5 and Figure 1 shows that the NSD was numerically longer for the WAB-R samples (nfvPPA Mdn = 425.7 ms, lvPPA Mdn = 260.9 ms) than for the Cinderella samples (nfvPPA Mdn = 360.1 ms, lvPPA Mdn = 223.0 ms).

In contrast to the robust effects for the syllable durations, the syllable-based PVI showed no group difference for any of the speech samples. Recall that there was excellent reliability for raw syllable duration measurements but limited reliability for the derived PVI metric, which likely contributed to the lack of a group effect. By comparison, the SI, which exhibited moderate to good interobserver reliability for the derived values, yielded statistically significant group differences and fair-to-good discrimination for all three speech samples (AUC = .780 for the motor speech examination; AUC = .770 for the WAB-R Picture Description; AUC = .875 for the Cinderella story telling).

Discussion

The purpose of this study was to determine to what extent quantitative correlates of AOS differentiate between the two recognized PPA variants that involve speech sound errors and reduced speaking rate. To answer the question, we relied on an interdisciplinary team diagnosis as the reference standard for PPA classification and obtained operationalized phonetic measures of articulation, rate, and prosody. The quantification strategy was based on our previous work with AOS in stroke and focal traumatic brain injury in the context of a standard motor speech examination. We expected similar results in this study, given that all participants with nfvPPA and no participants with lvPPA were diagnosed with AOS. While results confirmed the prediction and most measures did differentiate between nfvPPA and lvPPA, the distinction was most complete for connected speech samples. Results indicate clinical value and a need for further research to address interactions among motor, cognitive, and linguistic factors during connected speech production.

Distortion Error Frequency Differentiates Between nfvPPA and lvPPA

The first AOS feature we examined was consonant/vowel distortions, which are usually defined as errors involving relatively subtle phonetic differences. Because phonetic distortions can manifest diversely in AOS, our quantification approach was based on a set of 11 distortion categories that were previously observed in stroke AOS (Haley et al., 2019). The categories were coded through a team-based narrow phonetic transcription procedure. As with the other diagnostic features we evaluated, the primary question was whether distortion errors were more common in nfvPPA than in lvPPA and, if so, how completely these errors differentiated between diagnostic groups. Results confirmed our prediction and add to a growing literature that has used a variety of perceptual methods to demonstrate greater frequencies of distortion errors in nfvPPA than in lvPPA (Croot et al., 2012; Wilson et al., 2010).

In our sample, diagnostic discrimination based solely on sound distortions was fair when coded within a motor speech examination (AUC = .790). The relatively modest differentiation indicates, similar to recent single-word repetition studies of stroke aphasia (Bislick et al., 2017; Haley et al., 2017), that perceptible distortion errors are not unique to AOS but are also found, to a lesser extent, in people who have aphasia without AOS. At first glance, this finding may seem unexpected, since it is often assumed that distortion errors should not be present in the speech of people with aphasia and no AOS. However, acoustic studies of aphasia after stroke have repeatedly observed that people with conduction and Wernicke’s aphasia produce distortion errors when asked to repeat words or short phrases (Baum et al., 1990; Blumstein et al., 1980; Haley, 2002, 2004; Kurowski & Blumstein, 2016; Kurowski et al., 2007; Seddoh et al., 1996; Tuller, 1984; Verhaegen et al., 2020; Vijayan & Gandour, 1995; Ziegler & Hoole, 1989). To our knowledge, studies of this type have not yet been conducted with progressive aphasia, but it would be logical to expect similar results. As with perceptually detected distortion errors, the frequency of acoustically determined consonant and vowel distortions has consistently been lower for participants with fluent aphasia and no AOS than for participants with AOS and Broca’s aphasia. These distortions have been considered “subclinical” (Blumstein, 1998) because they are not immediately evident to listeners. The results of this study suggest that such subtle distortion qualities are, in fact, perceptible to phonetically trained listeners.
Qualitative similarities between the errors our transcribers observed most often and the previously documented acoustic distortion errors (ambiguous voicing, prolongation, and tongue body modifications) support this interpretation and suggest a path for future research to pursue acoustic–perceptual links. It is also worth mentioning that evidence of acoustically defined consonant and vowel distortion errors is not necessarily indicative of pathology, as such errors can also be induced in neurologically healthy speakers (Frisch & Wright, 2002; Goldrick et al., 2011; Goldrick & Blumstein, 2006).

Distortion errors were far less common in connected speech, and the most interesting finding was that the differentiation between nfvPPA and lvPPA on this variable was better in both the WAB-R Picture Description and the Cinderella story-telling samples (AUC = .820 and .859, respectively) than in the motor speech examination. This difference between speech samples was also observed in some speakers with svPPA. Our study is certainly not the first to report higher error rates on repetition tasks than in connected speech. In fact, the task difference is considered typical of classic stroke-induced conduction aphasia (Goodglass, 1993) as well as lvPPA (Gorno-Tempini et al., 2011). Recent studies have reported dissimilarities between single-word production and connected speech for people with lvPPA in terms of both distortion errors and phonemic errors (Croot et al., 2012; Petroi et al., 2014; Sajjadi, Patterson, Tomek, & Nestor, 2012; Wilson et al., 2010). The results of this study add to these observations and indicate a need to evaluate how impairment profiles and speaking conditions interact in generating speech errors.

To understand why so many more phonemic and distortion errors were observed in the motor speech examination than in connected speech and why this difference was particularly prominent for the lvPPA speakers, we must consider both speaker and listener factors. On the speaker side, a potentially important distinction is that the motor speech examination, by design, included a high proportion of multisyllabic and other phonetically complex words, whereas the connected speech samples did not. A comprehensive evaluation of phonetic complexity was beyond the scope of this study, but the greater incidence of sound errors on the motor speech examination may simply reflect that phonetically complex targets are necessary to reveal milder degrees of speech production impairment. Presumably, the effect would be evident for both distortion and phonemic errors and regardless of the underlying nature of the disorder. Moreover, there is no reason to assume that the brain processes that orchestrate speech production are easily dichotomized as distinct modules of phonetic versus phonemic networks (Guenther et al., 2006; Hickok, 2014). In future research, it will be important to evaluate the relationship between phonetic complexity and distortion frequency, as it will have implications for treatment planning. Additionally, if complexity does mediate both phonemic and distortion error frequency in lvPPA and svPPA, it would be necessary to revisit the recommendation that “increasing distortion errors with increasing utterance length and phonetic complexity” should be considered a primary distinguishing feature of AOS (Strand et al., 2014).

When speaking freely, it would appear strategically advantageous to avoid words on which one is likely to make errors. Task-related flexibility in how to approach a semispontaneous connected speech task is presumably the same for people with nfvPPA and lvPPA; however, differences in language access may affect the ability to take advantage of this freedom. One hypothesis is that the lvPPA participants were more inclined to produce phonetically simple output, either due to particularly ineffective lexical access or to greater flexibility in using circumlocution or alternative words to express themselves. Alternative explanations are that the cognitive demands of the repetition task align with a specific impairment (e.g., in phonologic working memory) to reveal otherwise masked performance challenges or simply that milder problems surface under the greater time pressure and anxiety inherent in a confrontation task.

On the listener side, distortion errors are likely easier to detect in a word repetition task where the target is known, speaking rate is slower, coarticulation is limited to individual words, and there are limited distractions in the form of discourse content and extralinguistic communication. Consequently, listeners are able to focus their full attention on the phonetic detail that may be overlooked in a linguistically and communicatively richer discourse context. This focus may be particularly important in disorders like AOS and aphasia, where sound production errors are often both intermittent and inconsistent (Haley et al., 2020).

Over time, speech production worsens in progressive disease and improves after stroke. To integrate results across the diverse samples that are represented in the literature, it is important to consider severity of impairment. In our stroke research, we have found that key variables considered diagnostic of AOS are strongly affected by severity (Haley et al., 2017, 2020), and there is every reason to expect the same to be true in progressive disease. To facilitate interpretation of this study relative to the broader literature, we reported the percentage of phonemes produced with distortion and phonemic errors on the motor speech examination, which corresponds to several recent studies that have used a similar transcription protocol in stroke AOS and aphasia. The mean percentage of consonant/vowel segment distortions in the nfvPPA group (6.7%) is somewhat lower than corresponding levels for stroke participants with AOS (M = 11.6%–17.2%; Haley et al., 2017, 2019, 2020). However, the percentage of segments with phonemic errors for the nfvPPA participants (Mdn = 27.2%, M = 28.8%) was similar (M = 25.4%–30.5%; Haley et al., 2019, 2020), giving no reason to suspect meaningful differences in speech production severity. A more likely explanation for the lower frequencies of distortion errors in this study is that the team transcription process resulted in more conservative assignment of distortion marks compared to the transcription data for our stroke research, which were based on a single primary transcriber. We caution that these comparisons are only approximations, since the studies were methodologically different.

Given the dynamic nature of PPA, stage of progression should always be taken into consideration when evaluating the presentation profile. In the sample we examined, time postonset and aphasia severity did not differ significantly between the nfvPPA and lvPPA participant groups. However, the nfvPPA group scored significantly higher than the lvPPA group on the MMSE. It is possible that this severity difference contributed to study results, given that speech sound errors presumably become more frequent with increasing disease severity. Had our groups been more closely matched for severity, the nfvPPA and lvPPA groups might have differed more in the frequency of phonemic and distortion errors. In future research, it will be important to evaluate severity effects in larger participant samples and determine how the measures we presented in this study respond to disease progression.

A secondary purpose for the distortion analysis was to characterize the type of distortion errors diagnosticians should be listening for, given that previous studies had not defined their quality. The profile that emerged involved primarily voicing ambiguity, lengthening, and tongue body modification and was similar to previous observations in stroke (Haley et al., 2017, 2019). As discussed, these distortion types are also consistent with distortion qualities that have been documented with acoustic methods. The similarity will be a practical advantage for future measurement development and an eventual transition to acoustic documentation in both research and clinical settings. Because narrow phonetic transcription is too time- and resource-intensive for routine use, the quantification we used in this study is only a necessary first step on a path to identify distortion correlates that are suitable for automated quantification. With the larger sample sizes afforded by automated methods, it will also be possible to evaluate interactions between target and distortion qualities, such as the extent to which phonetic complexity might predict distortion type.

Distortion errors can also result from weakness or dyscoordination of the muscle groups that control speech production. Dysarthria is an additional layer of impairment for many people with AOS. In progressive disease, dysarthria typically accompanies nfvPPA and PPAOS rather than lvPPA or svPPA (Duffy, 2006; Ogar et al., 2007). Accordingly, most of the nfvPPA participants in our study and none of the lvPPA or svPPA participants were diagnosed with dysarthria. It is unclear how and to what extent AOS and dysarthria influence the speech output differently when they coexist. Wilson et al. (2010) addressed this discrimination problem by defining distortion errors in their participants with nfvPPA and dysarthria as those that were judged to occur above and beyond an estimated baseline of each participant’s most accurate speech. We have found it challenging to make such judgments in a reliable and transparent way and conclude instead that we are unable to determine the origin of most consonant and vowel distortion errors. That said, we purposefully did not code abnormal phonatory or resonatory qualities, such as strained quality, reduced loudness, and hypernasality, which are recognized features of dysarthria. Similarly, because a moderately slow speaking rate can be another feature of dysarthria or simply characterize dialect or idiolect, our transcribers were instructed to code sound prolongation only when a consonant or vowel was unquestionably long relative to the local phonetic context. Had these phonatory, resonatory, and rate-related features been coded as distortion errors, we would likely have detected far greater frequencies of distortion errors in the nfvPPA group and a greater group difference, since dysarthria, as is typical, was present in this group but not in the lvPPA group.

The frequency of distortion errors in our study was fundamentally determined by our decision to restrict the definition of distortion errors to the articulatory domain. Definitions may have been very different in other studies. As noted in the Introduction, a lack of transparency about what exactly does and does not constitute a distortion error makes it impossible to compare error frequencies across studies. The fact that reported frequencies have varied extensively and have indicated vastly different ratios of phonemic to distortion errors (e.g., Ash et al., 2010; Croot et al., 2012; Wilson et al., 2010) indicates a need for greater methodological transparency and documentation.

Operational definitions are also necessary for distorted substitution errors. Our group’s previous experience in stroke and now in progressive disease is that phonetic transcribers rarely assign discrete phonemic and distortion errors to the same consonant or vowel segment (Haley et al., 2017, 2019). There may be some degree of specificity for AOS in that distorted substitution errors were never observed in the lvPPA or svPPA participants. However, sensitivity was woefully inadequate, with a median occurrence of less than 3% in the motor speech examination for the nfvPPA participants. It is essential to clarify the exact nature of distorted substitution errors if this error category is to be considered a distinguishing diagnostic feature for AOS (Strand et al., 2014; Utianski, Duffy, Clark, Strand, Botha, et al., 2018).

Most Rate and Prosody Metrics Differentiate Between nfvPPA and lvPPA

The two AOS features we examined were slow speaking rate and abnormal temporal prosody in the form of syllable segmentation and stress equalization. Our primary measurement technique was based on the duration of syllables within multisyllabic words or connected speech. These syllables were defined to include the nucleus, any onset and coda, and short pauses between syllables. Syllable durations were also used to calculate two ratios: the PVI and the SI. We elected not to pursue analyses of nucleus or pause durations within syllables, largely because of insufficient reliability between our coders. This does not mean that finer segmentation is unwarranted, but rather that further stimulus restrictions and coder training are needed. Given our robust results for the other metrics, such follow-up will be most important for the PVI.

Our first measure in the rate and prosody category was WSD, which is calculated as the mean syllable duration in multisyllabic words. Our confidence in this measure as a feature of AOS was based on our group’s extensive use of the metric in previous stroke research (e.g., Cunningham et al., 2016; Haley et al., 2012, 2017, 2020) and on the simplicity of the technique. When applied to the motor speech examination, classification accuracy was good (AUC = .880). As in our stroke research, the best cutoff value for longer than normal WSD lies somewhere between 300 and 350 ms.
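A minimal Python sketch of the WSD computation follows. It assumes that each produced word has already been segmented into a total duration in milliseconds and a syllable count, that WSD is the average of per-word mean syllable durations (rather than total duration divided by total syllables), and that "multisyllabic" means three or more syllables, as the word examples given below for the Cinderella story suggest. The 325 ms default cutoff is a hypothetical midpoint of the 300 to 350 ms range, not a validated threshold, and the word measurements are made up.

```python
from statistics import mean


def word_syllable_duration(words, min_syllables=3):
    """Mean syllable duration (ms) across multisyllabic words.

    words: iterable of (word_duration_ms, n_syllables) pairs.
    min_syllables: ASSUMED lower bound for a "multisyllabic" word.
    """
    # Per-word mean syllable duration, restricted to sufficiently long words.
    per_word = [dur / n for dur, n in words if n >= min_syllables]
    if not per_word:
        raise ValueError("sample contains no multisyllabic words")
    return mean(per_word)


def slow_wsd(wsd_ms, cutoff_ms=325.0):
    """Flag a WSD as longer than normal (HYPOTHETICAL cutoff)."""
    return wsd_ms > cutoff_ms


# Made-up word measurements: (duration in ms, number of syllables).
sample = [(1050, 3), (700, 2), (250, 1), (1300, 4)]
wsd = word_syllable_duration(sample)
print(round(wsd, 1), slow_wsd(wsd))  # prints: 337.5 True
```

The one- and two-syllable words in the sample are ignored, so only the 350 ms and 325 ms per-syllable values contribute to the mean.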

The discrimination was preserved and even enhanced when we applied WSD to connected speech. Based on visual inspection (see Figure 1), there was a tendency for participants in the nfvPPA group to produce multisyllabic words more slowly and more variably, and for the lvPPA and svPPA groups to produce them faster and less variably, when the words were embedded in connected speech compared to when they were produced in isolation. This may be interpreted as an extension of similar effects that were previously demonstrated for vowel duration (Strand & McNeil, 1996). Any clinical use of WSD quantification in connected speech must take into account the lexical constraints of the speaking task. The picnic scene picture in the WAB-R can be described thoroughly without using any multisyllabic words, since depicted noun and action words consist of one or two syllables (e.g., tree, dog, house, blanket, pouring, kids, sand, flag, kite, flying). This was reflected in the low multisyllabic word frequencies we observed on this task (nfvPPA Mdn = 4.2%, lvPPA Mdn = 3.4%). In contrast, numerous multisyllabic words help tell the Cinderella story (e.g., enchanted, animals, stepsisters, invitation, announcement, godmother, beautiful), with correspondingly higher proportions of multisyllabic words on this task (nfvPPA Mdn = 12.0%, lvPPA Mdn = 12.7%). If some minimal number of multisyllabic words can be ensured through strategically selected lexical content, connected speech WSD may be practical in clinical settings, given that it is easy to define and measure.

We thought a metric for connected speech that was conceptually equivalent to the WSD but independent of multisyllabic word production would be preferable, and for this reason, we added the NSD. It is defined as the mean duration of syllable strings that are not interrupted by “long” pauses or nonspeaking behavior. The results showed that the NSD measure alone differentiated nfvPPA from lvPPA perfectly or nearly perfectly (AUC = 1.000 for the WAB-R Picture Description, AUC = .938 for the Cinderella story telling). As such, the metric is certainly worthy of future study. There is a need to understand the principles governing individual pause duration distributions and how best to interpret them in disordered speech. We customized the boundaries between “short” and “long” pauses by identifying outliers in each speech sample. It is possible that other methods are equally or more effective. Conceptually, a dynamic definition of pause categories is analogous to research aiming to identify distinctions between phonemic categories based on individual performance distributions rather than group averages (Haley, 2002; Haley et al., 2010). We suspect that quantitative speech assessment may benefit more broadly from a focus on individual performance distributions rather than isolated production exemplars.
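The NSD logic can be sketched in Python as follows. Two details are assumptions rather than the study's documented procedure: long pauses are identified with Tukey's outlier fence (above the third quartile plus 1.5 times the interquartile range of that sample's pause durations), and NSD is computed as total run time (syllables plus the short pauses between them) divided by the number of syllables. Nonspeaking behavior is not modeled; in a fuller version it would presumably also end a run. The event sequence is made up.

```python
from statistics import quantiles


def long_pause_threshold(pauses_ms):
    """Tukey upper fence for one sample's pauses (ASSUMED outlier rule)."""
    q1, _, q3 = quantiles(pauses_ms, n=4)
    return q3 + 1.5 * (q3 - q1)


def narrative_syllable_duration(events, threshold_ms=None):
    """Mean syllable duration (ms) within runs not broken by long pauses.

    events: temporally ordered ("syl", ms) or ("pause", ms) tuples.
    Short pauses between syllables of a run count toward the run.
    """
    if threshold_ms is None:
        pauses = [d for kind, d in events if kind == "pause"]
        threshold_ms = (long_pause_threshold(pauses)
                        if len(pauses) >= 2 else float("inf"))
    total_ms, n_syl = 0.0, 0
    in_run, pending_ms = False, 0.0
    for kind, d in events:
        if kind == "syl":
            # Add any short pause accumulated since the previous syllable.
            total_ms += pending_ms + d
            pending_ms = 0.0
            n_syl += 1
            in_run = True
        elif d > threshold_ms:
            # Long pause: end the run and drop any trailing short pauses.
            in_run, pending_ms = False, 0.0
        elif in_run:
            pending_ms += d
    if n_syl == 0:
        raise ValueError("no syllables in sample")
    return total_ms / n_syl


# Made-up sample: two runs separated by a 1500 ms pause.
events = [("syl", 200), ("pause", 40), ("syl", 220), ("pause", 50),
          ("syl", 240), ("pause", 60), ("syl", 210), ("pause", 1500),
          ("syl", 230), ("pause", 50), ("syl", 250), ("pause", 60),
          ("syl", 190), ("pause", 70)]
print(round(narrative_syllable_duration(events), 1))  # prints: 257.1
```

Because the threshold is derived from each sample's own pause distribution, the same absolute pause can count as short for one speaker and long for another, which is the sense in which the boundary is individualized.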

Although the inclusion of short pauses made the NSD, by definition, sensitive to the pause prolongation that is thought to characterize AOS (Duffy, 2020; McNeil et al., 2009), it may also have captured grammatical formulation hesitations. This may explain why NSD was so effective in differentiating nfvPPA from lvPPA, since both AOS and agrammatism are diagnostic criteria for the former but not the latter variant. Only one participant was diagnosed with AOS without agrammatism. His NSD was 347.1 ms for the WAB-R Picture Description and 312.8 ms for the Cinderella story telling, values at the shorter end of the nfvPPA range. Larger and more diverse samples should help differentiate the relative contributions of motor programming and grammatical formulation impairments as this research moves forward. Future studies may also seek to elucidate whether factors beyond motoric or linguistic impairments, such as compensatory behaviors enacted to minimize errorful production, contribute to slowed speech rate in nfvPPA.

In addition to slow syllable production, speech rhythm is often affected in AOS, resulting in abnormal prosodic features that listeners perceive as syllable segmentation and equalized stress (Croot et al., 2012; Darley et al., 1975). We used the syllable SI to evaluate the degree of equalization acoustically. Results indicated fair to good classification accuracy for nfvPPA versus lvPPA in connected speech (AUC = .770 and .875). Recently, we applied the same metric in a case study that spanned a 2-year period of recovery from isolated AOS after focal traumatic brain injury. When examined in connected speech monologues, the SI normalized steadily throughout the 2 years, long after the frequency of segmental speech errors had stabilized at a functionally negligible level (Haley et al., 2016). These results, like those of this study, support the utility of the original SI (Ackermann & Hertrich, 1993) for people who are able to produce connected speech with some grammatical structure. Because the extension of the SI to multisyllabic words on the motor speech examination showed fair classification accuracy (AUC = .780), its application is also reasonable for people with more significant agrammatism.
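The AUC values reported here and for the other metrics summarize how well a single measure separates two groups. A minimal Python sketch of the standard rank-based (Mann-Whitney) formulation follows, together with a simple scan for the cutoff that maximizes Youden's J (sensitivity + specificity - 1). Choosing cutoffs by Youden's J is an assumption for illustration; the study's own cutoff procedure is not described in this section, and the scores below are made up.

```python
def auc(impaired, reference):
    """Probability that a random impaired-group score exceeds a random
    reference-group score (ties count one half)."""
    wins = 0.0
    for a in impaired:
        for b in reference:
            if a > b:
                wins += 1.0
            elif a == b:
                wins += 0.5
    return wins / (len(impaired) * len(reference))


def best_cutoff(impaired, reference):
    """Cutoff maximizing Youden's J (ASSUMED criterion).

    Scores >= cutoff are classified as impaired.
    """
    best_j, best_c = -1.0, None
    for c in sorted(set(impaired) | set(reference)):
        sensitivity = sum(a >= c for a in impaired) / len(impaired)
        specificity = sum(b < c for b in reference) / len(reference)
        j = sensitivity + specificity - 1.0
        if j > best_j:
            best_j, best_c = j, c
    return best_c, best_j


# Made-up scores for illustration only (higher = more impaired).
group_a = [0.62, 0.71, 0.58, 0.80]
group_b = [0.50, 0.55, 0.60, 0.52]
print(auc(group_a, group_b), best_cutoff(group_a, group_b))
# prints: 0.9375 (0.58, 0.75)
```

An AUC of 1.0 means every score in one group exceeds every score in the other, so some cutoff classifies all participants correctly; 0.5 corresponds to chance separation.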

The PVI was the only rate or prosodic metric that did not discriminate between the nfvPPA and the lvPPA groups. Syllable nucleus durations are the intended raw measures for this index. Largely because we did not obtain satisfactory reliability for the necessary segmentation, we based the calculations instead on entire syllable durations, which were measured with excellent reliability. The lack of classification accuracy suggests that the comparison should be replicated with a PVI based on vowel nucleus durations. We advise caution, however, regarding potential error propagation. In a recent study on lexical prosody, our team demonstrated a discrepancy between reliable measurement of nucleus durations and unreliable PVIs derived from those same measures (Haley & Jacks, 2019). We observed a similar deterioration of reliability in this study, with ICCs of .937 for raw syllable duration in both of the connected speech samples, but only .600 and .363 for the derived PVI. More broadly, as speech quantification applications continue to evolve for speech disorders, researchers are cautioned to report measurement reliability at the level of the data that are to be analyzed.
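To make the error-propagation concern concrete, here is a small Python sketch of a pairwise variability index. It assumes the normalized PVI (nPVI) form, 100 times the mean over adjacent pairs of |d[k] - d[k+1]| divided by the pair's mean duration; the study may have used the raw (rPVI) form or another normalization. The durations are made up.

```python
def npvi(durations_ms):
    """Normalized pairwise variability index (ASSUMED nPVI form)."""
    if len(durations_ms) < 2:
        raise ValueError("need at least two durations")
    # Absolute difference of each adjacent pair, scaled by the pair's mean.
    terms = [
        abs(a - b) / ((a + b) / 2)
        for a, b in zip(durations_ms, durations_ms[1:])
    ]
    return 100 * sum(terms) / len(terms)


# Made-up syllable durations (ms), and the same string after shifting
# each value by 5 ms (about 2.5% of each measurement).
measured = [200, 210, 200, 210]
remeasured = [205, 205, 205, 205]
print(round(npvi(measured), 2), npvi(remeasured))  # prints: 4.88 0.0
```

A 5 ms shift in each raw duration moves the index from about 4.9 to 0, illustrating how a derived ratio built from small differences between adjacent durations can amplify disagreements between coders that barely register in the durations themselves.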

Syndrome Profiles Are Multidimensional and Heterogeneous

Variants of PPA are multidimensional syndromes. Metrics that have excellent diagnostic accuracy relative to a gold standard reference, as the NSD appears to have, could potentially serve as diagnostic screening tools. However, additional evidence is, by definition, necessary to define the full syndromes. Much of this evidence will continue to come from clinical assessment. Additionally, there is increasing promise that connected speech tasks can be analyzed in complementary and multidimensional ways that are sensitive to feature combinations that define PPA variants (Fraser et al., 2014; Knibb et al., 2009; Thompson et al., 2012).

It is often forgotten that AOS is also a multidimensional syndrome. Though experienced clinicians may recognize the syndrome by familiarity in its most prototypical form, their classifications of cases encountered in clinical practice do not necessarily agree with those of their colleagues in other clinics or laboratories (Haley et al., 2012). Subjective diagnosis based on pattern recognition may or may not be accurate, and because of the associated lack of documentation, it is unfortunately impossible to know. For these reasons, the field must move beyond treating AOS as a simple checkbox in an aphasia battery and, by extension, a unitary diagnostic criterion for nfvPPA. Performance on dimensions of articulation, speaking rate, and prosody should instead be evaluated quantitatively, with a requirement that diagnostic assignment be supported by data. In response to the lack of validity and reliability of current practices, it has been suggested that the use of AOS as a diagnostic feature of nfvPPA should be abandoned until appropriate quantitative measures are available (Sajjadi, Patterson, Arnold, et al., 2012). Our position is that the research community already has such tools at its disposal and that some methods are also feasible for immediate clinical application.

Better quantification leads to increased recognition that performance profiles are heterogeneous. Questions arise about disorder subtypes and alternative classification strategies (Graham et al., 2016; Harris et al., 2013; Josephs et al., 2013; Sajjadi, Patterson, Arnold, et al., 2012; Utianski, Duffy, Clark, Strand, Botha, et al., 2018; Wicklund et al., 2014). A quantitative approach to classification is of particular value if it contributes to clinical management or theory building. Proper documentation in stroke and progressive disorders will ensure that discussions and scientific inquiry remain productive, even when there are differences in classification preferences or hypotheses turn out to be incorrect. Finally, in addition to advancing our appreciation for differences among individuals, it is important to continue to pursue a better understanding of disease progression (Ash et al., 2019; Utianski, Duffy, Clark, Strand, Boland, et al., 2018) and therapeutic response. It is our hope that the set of metrics we evaluated in this study may contribute to these essential purposes.

Author Contributions