Abstract

Modern conformal beam delivery techniques require image guidance to ensure that the prescribed dose is delivered as planned. Recent advances in artificial intelligence (AI) have greatly augmented our ability to accurately localize the treatment target while sparing normal tissues. In this paper, we review the applications of AI-based algorithms in image-guided radiotherapy (IGRT) and discuss the implications of these applications for the future clinical practice of radiotherapy. The benefits, limitations, and some important trends in the research and development of AI-based IGRT techniques are also discussed. AI-based IGRT techniques have the potential to monitor tumor motion, reduce treatment uncertainty, and improve treatment precision. In particular, these techniques allow more healthy tissue to be spared while keeping tumor coverage the same or even better.

Keywords: Artificial intelligence (AI), convolutional neural network, deep learning, image-guided radiotherapy (IGRT), machine learning, target positioning

Introduction

Over the past decades, radiotherapy has become increasingly conformal with the development of advanced beam delivery techniques such as intensity modulated radiotherapy (IMRT) (1), volumetric modulated arc therapy (VMAT) (2-4), and stereotactic ablative radiotherapy (SABR) (5-7). These techniques have substantially augmented routine practice by achieving a highly conformal dose distribution that is tightly shaped to the tumor volume, so that less normal tissue is irradiated. Routine radiotherapy is based on the assumption that the anatomy is aligned consistently between the images used to design the computer-generated treatment plan and the actual anatomy at the time of beam delivery (i.e., radiation treatment). However, this assumption is difficult to satisfy in practice, and margins are therefore used to account for its violation. The margins limit the degree of conformity of radiotherapy and the benefits of technical advances in beam delivery. To tackle this limitation, imaging systems on radiotherapy machines are employed to guide the treatment on a daily basis, which is referred to as image-guided radiotherapy (IGRT) (8-11), resulting in increased accuracy and precision in dose placement to the target volumes and the surrounding normal tissues. The introduction of IGRT has enabled knowledge of the location of the irradiation target and management of organ motion during treatment. It ensures that high-precision radiation machines deliver the radiation dose as planned, and is therefore a crucial requirement for modern radiotherapy.

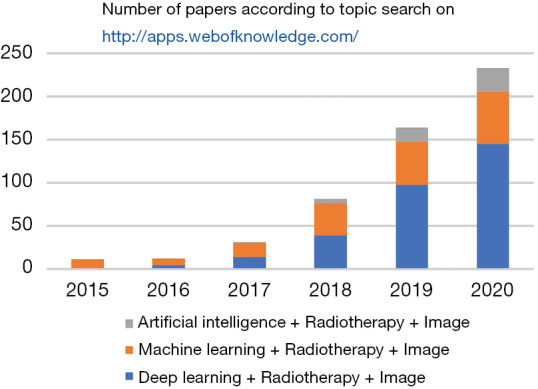

Recent developments in computer science, especially in machine learning and deep learning, have greatly augmented the potential to reshape the workflow of radiotherapy using advanced delivery techniques (12-14). These developments constitute the field of artificial intelligence (AI)-based IGRT, which can make it possible to rapidly provide high-quality, personalized conformal treatment for cancer patients. As shown in Figure 1, the applications of AI in IGRT have increased exponentially over the past five years, with a major contribution from deep learning approaches. Deep learning applications in medical physics appeared at the 2016 Annual Meeting of the AAPM. Since the pioneering applications of deep learning in radiation therapy in 2016 (15,16) and 2017 (17-20), in which Xing and colleagues used deep learning techniques for inverse treatment planning and organ segmentation, there has been growing interest in the applications of AI in IGRT. Specifically, these developments have mainly focused on AI-based approaches for better target definition, for multi-modality image registration, and for target localization based on 2D and 3D imaging (21-23). All these developments aim to achieve better knowledge of the tumor position and better motion management of organs-at-risk (OARs) during treatment with a simplified radiotherapy workflow. Here, we provide a concise introduction to IGRT and AI, followed by an overview of the applications of AI in IGRT. We also discuss the associated clinical benefits and the potential limitations of AI-based IGRT approaches.

Figure 1.

Number of papers returned by a topic search on “Web of Knowledge” using the keywords “Artificial intelligence”, “Machine learning”, “Deep learning”, “Radiotherapy”, and “Image”.

Rationale for image guidance and clinical IGRT technologies

IGRT offers both treatment verification and treatment guidance, each of which enables more accurate target positioning, ensuring that tumor coverage stays the same or improves while more healthy tissue is spared (11). IGRT imaging systems can perform verification imaging before, during, and/or after beam delivery to record a patient’s position throughout the treatment. In current clinical practice, the verification is typically not used to guide the radiation beam but to reposition the patient if a detected misalignment exceeds a predefined threshold [such as 3 mm (24)]. Imaging before treatment can reduce setup errors and geometric uncertainties. Imaging during treatment can reveal the range of organ motion and the changes in tumor size and shape that take place during treatment. It can therefore increase confidence in the treatment and raise awareness of mistargeting. Treatment verification can be used as a form of quality assurance (QA) to ensure that the beams are delivered to the target as planned, even in the presence of organ motion.
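The repositioning rule described above can be illustrated with a minimal sketch. It assumes that the detected misalignment is available as a three-component shift in millimeters and that the tolerance is applied to the vector magnitude. The function name `needs_repositioning`, the example shifts, and the magnitude convention are assumptions made for illustration; a clinical protocol may instead apply the threshold per axis.

```python
import math

def needs_repositioning(shift_mm, threshold_mm=3.0):
    """Return (magnitude, flag) for a detected 3D setup shift in millimeters.

    The flag is True when the shift magnitude exceeds the threshold.
    """
    magnitude = math.sqrt(sum(component ** 2 for component in shift_mm))
    return magnitude, magnitude > threshold_mm

# Hypothetical shifts (left-right, anterior-posterior, superior-inferior).
for shift in [(2.0, 2.0, 2.0), (1.0, 1.0, 1.0)]:
    magnitude, flag = needs_repositioning(shift)
    print(f"shift={shift} magnitude={magnitude:.2f} mm reposition={flag}")
```

The default of 3 mm mirrors the example threshold quoted in the text; it is passed as a parameter so that a site-specific tolerance can be substituted.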

Advanced radiotherapy techniques, including VMAT, IMRT, and SABR, are associated with high doses and steep dose gradients at the target boundary, such that the high dose conforms tightly to the target and healthy tissues are largely spared. These advanced conformal techniques also facilitate the implementation of hypofractionated radiotherapy regimens, which can shorten radiotherapy schedules and save costs. However, these steep dose distributions impose stringent requirements on target positioning.

To tackle this challenge, two-dimensional (2D) imaging and three-dimensional (3D) volumetric imaging can be used to guide the radiation beam to the tumor at the time of treatment. For example, instead of irradiating the whole volume in which the tumor can be located during respiration, radiation delivery can be gated to a specific phase of respiration (e.g., exhale), or the beam can follow the moving target during breathing using 2D or 3D imaging (25-27). With these real-time image guidance techniques, the safety margin in the planning target volume (PTV) that accounts for target motion can be significantly reduced, and the irradiated volume of healthy tissue can therefore also be reduced (28). In addition, real-time volumetric and temporal imaging can promptly reveal changes in the target or healthy tissue that take place during a course of treatment, and allow the treatment beam to adapt to these changes. These interventions can further improve the clinical outcome and the therapeutic ratio.
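As a rough illustration of respiratory gating, the sketch below enables the beam only while a breathing signal stays inside a window near end-exhale and reports the resulting duty cycle. The sinusoidal trace, the 0 to 2 mm window, and the helper names `gating_mask` and `duty_cycle` are assumptions chosen for the example. The sketch gates on amplitude rather than on a computed respiratory phase, which is a simplification.

```python
import math

def gating_mask(trace_mm, window_mm):
    """Beam-on flags: True where the displacement lies within [low, high]."""
    low, high = window_mm
    return [low <= displacement <= high for displacement in trace_mm]

def duty_cycle(mask):
    """Fraction of samples during which the beam is enabled."""
    return sum(mask) / len(mask)

# Synthetic trace: 4 s period, 10 mm peak-to-peak, sampled at 10 Hz,
# with 0 mm corresponding to end-exhale.
trace = [5.0 * (1.0 - math.cos(2.0 * math.pi * t / 40.0)) for t in range(400)]
mask = gating_mask(trace, (0.0, 2.0))
print(f"beam-on fraction: {duty_cycle(mask):.3f}")
```

A narrower window reduces the residual motion that the margin must cover, at the cost of a lower duty cycle and therefore a longer delivery time.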

Several radiation treatment machines capable of image guidance are routinely used in clinical practice (29-31). These systems either generate oblique radiographic images of fiducials that are analyzed to perform the image-guidance corrections [such as the real-time tumor-tracking radiation therapy (RTRT) system and the CyberKnife® (Accuray Inc., Sunnyvale, USA)] (28), or generate volumetric cone-beam CT (CBCT) images using an on-board flat-panel detector (e.g., Varian TrueBeam) (31). In addition, treatment machines that integrate treatment and imaging systems are becoming popular options for next-generation radiotherapy [such as TomoTherapy (32-34), ViewRay MRIdian (35), and United Imaging uRT-Linac (36)].

Artificial intelligence

AI denotes intelligence demonstrated by machines. It describes techniques that mimic cognitive functions of the human mind, such as learning. Recent advances in deep learning (37), especially the convolutional neural network (CNN), make it possible to learn semantic features and capture complex relationships from data. This has greatly expanded the applications of AI in imaging-related fields, including IGRT. Of note, all the main IGRT components (contouring, registration, planning, QA, and beam delivery) may benefit from advanced AI-based algorithms (14). Furthermore, the complex workflow of routine IGRT could be simplified via AI modeling (e.g., machine QA and patient-specific QA) (38-43). AI can also build powerful motion management models that account for motion variability, which encompasses magnitude, amplitude, frequency, and other characteristics. These models can take data acquired from external surrogate markers as inputs to predict respiratory motion (44-46).
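To make the idea of a surrogate-driven motion model concrete, the following sketch fits a straight line that maps an external marker displacement to an internal target displacement and then uses it for prediction. An ordinary least-squares line is used purely as a stand-in for the learned models cited above; the function names and the paired training samples are hypothetical.

```python
def fit_surrogate_model(external_mm, internal_mm):
    """Least-squares fit of internal = slope * external + intercept."""
    n = len(external_mm)
    mean_x = sum(external_mm) / n
    mean_y = sum(internal_mm) / n
    sxx = sum((x - mean_x) ** 2 for x in external_mm)
    sxy = sum((x - mean_x) * (y - mean_y)
              for x, y in zip(external_mm, internal_mm))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def predict_internal(external_value_mm, slope, intercept):
    """Predict the internal target displacement from a new surrogate reading."""
    return slope * external_value_mm + intercept

# Hypothetical paired samples: chest-marker vs. tumor displacement (mm).
external = [0.0, 1.0, 2.0, 3.0, 4.0]
internal = [0.2, 2.1, 4.0, 6.2, 7.9]
slope, intercept = fit_surrogate_model(external, internal)
print(f"predicted tumor displacement at 2.5 mm: "
      f"{predict_internal(2.5, slope, intercept):.2f} mm")
```

In practice the relationship between surrogate and target can drift or show hysteresis, which is one motivation for the more expressive models discussed in the text.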

Several standard routine practices, for example linear accelerator (linac) commissioning and QA, are labor-intensive and time-consuming (40). The data acquired during commissioning are the input to the treatment planning software (TPS). The quality of these beam data is therefore of utmost importance for high-performance radiotherapy. Machine learning, which constructs an automatic prediction model in a data-driven or experience-driven fashion, can be applied to reduce the workload of linac commissioning (47). Specifically, using previously acquired beam data, one can train a machine learning algorithm to model the inherent correlation of beam data under different configurations, and the trained model is then able to generate accurate and reliable beam data for linac commissioning in routine radiotherapy (47). In this way, the machine learning-based method can simplify the linac commissioning procedure and save time and labor.

As a subset of AI, machine learning uses human-engineered features to build a prediction model that makes informed decisions (48). These features depend on the problem being investigated. For the aforementioned beam data modeling problem, the factors that matter for decision-making include beam energy, field size, and other linac parameters, and the features used for model training need to be extracted manually. Unlike classical machine learning, deep learning uses a deep neural network to progressively learn high-level features for constructing a prediction model. No human-engineered features are required, and feature extraction is completed automatically (37,48). Since images are heavily involved in the IGRT workflow, a specific class of deep neural networks, the convolutional neural network (CNN), which is particularly suitable for image analysis tasks, has been extensively studied for many aspects of IGRT.

In the following sections, we review and discuss the applications of AI in IGRT, with a focus on CNNs for treatment verification and guidance. Specifically, we describe how deep neural networks can be used for pretreatment setup and real-time volumetric imaging, and how they can improve the clinical gain and therapeutic ratio.

Image registration

In standard clinical radiotherapy practice, treatment planning usually employs a CT examination performed with the patient in the treatment position; this CT is referred to as the planning CT. In addition to the CT images, other imaging modalities, including positron emission tomography (PET), magnetic resonance imaging (MRI), and single-photon emission computed tomography (SPECT), can provide complementary anatomical and/or functional information, especially about the tumor target, and are employed to enhance target definition. For example, PET images can show the heterogeneity of the tumor and thus define a biological target volume to improve the therapeutic ratio (49-56).

To take advantage of this complementary information for target definition, image registration between different modalities is required, because images from different modalities are generally not acquired at the same time. Deep learning-based image registration methods have been extensively investigated for treatment verification (57-61). Yu et al. proposed a two-stage 3D non-rigid image registration method for PET and CT scans. The method comprises a 3D CNN that predicts a voxel-wise displacement field between the PET and CT images, and a 3D spatial transformer and resampler that warp the PET images using the predicted displacement field. The method was evaluated using abdominopelvic PET/CT images and showed potential to improve the accuracy of target definition (59). Fan et al. used a generative adversarial network (GAN)-type framework to perform MR-CT image registration (60). Their framework first uses a deformable registration network to predict image deformations, which are then fed into a deformable transformation layer (60). A discriminator network then judges whether the deformed image is well aligned with the image of the other modality, and the registration network is trained adversarially against this discriminator. The framework was evaluated using MR-CT image registration for prostate cancer patients. The registered images can take advantage of both MR and CT to redefine the irradiation target. Of note, many deep learning-based registration methods have not been developed specifically in the context of IGRT, but these methods could potentially be employed for IGRT treatment verification (62,63).
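The resampling step that follows a predicted displacement field can be sketched in a few lines. The example below works in 2D with bilinear interpolation and border clamping, whereas the methods described above operate on 3D volumes inside a neural network. The function names `bilinear` and `warp` and the toy image are assumptions for illustration, and the displacement fields here are supplied by hand rather than predicted by a CNN.

```python
def bilinear(image, r, c):
    """Sample image at fractional (row, col), clamping to the image border."""
    rows, cols = len(image), len(image[0])
    r = min(max(r, 0.0), rows - 1.0)
    c = min(max(c, 0.0), cols - 1.0)
    r0, c0 = int(r), int(c)
    r1, c1 = min(r0 + 1, rows - 1), min(c0 + 1, cols - 1)
    fr, fc = r - r0, c - c0
    top = image[r0][c0] * (1 - fc) + image[r0][c1] * fc
    bottom = image[r1][c0] * (1 - fc) + image[r1][c1] * fc
    return top * (1 - fr) + bottom * fr

def warp(moving, disp_r, disp_c):
    """Warp `moving`: output(r, c) = moving(r + disp_r[r][c], c + disp_c[r][c])."""
    rows, cols = len(moving), len(moving[0])
    return [
        [bilinear(moving, r + disp_r[r][c], c + disp_c[r][c])
         for c in range(cols)]
        for r in range(rows)
    ]

# Toy 3 x 3 "moving" image and a uniform half-pixel shift along columns.
moving = [[0, 1, 2], [3, 4, 5], [6, 7, 8]]
zeros = [[0.0] * 3 for _ in range(3)]
halves = [[0.5] * 3 for _ in range(3)]
warped = warp(moving, zeros, halves)
print(warped[0])
```

Because the interpolation is differentiable with respect to the displacement values, the same operation can sit inside a network and be trained end to end, which is the role of the spatial transformer in the cited work.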

In addition to registration between different imaging modalities, deep learning-based image registration has also been extensively used within the same imaging modality (64,65). In radiotherapy, image registration between CT images acquired at different treatment fractions provides better tumor definition and also supports correction of patient set-up (i.e., offline set-up). Across fractions, image registration makes it possible to monitor the position of the patient and to adapt the safety margins and/or treatment plan accordingly, which plays an important role in adaptive radiotherapy (ART) (6).

Although deep learning-based image registration methods have shown promising results for mono-modality and multi-modality imaging, these methods still have limitations. One of the major limitations is the absence of thorough validation. This is partially due to the lack of ground truth transformation between image pairs or the unavailability of well-aligned image pairs, especially for multi-modality images.

Two-dimensional imaging-based localization

Target localization during treatment delivery can be performed using 2D radiographs. Initially, megavoltage (MV) radiography was used to provide localization data by comparing the 2D verification image with radiographs derived from the planning CT images. However, MV images usually rely on high-density skeletal anatomy for target verification. Compared with MV radiographs, kV images have the potential to yield higher contrast with a lower radiation dose (66-68). However, due to tissue overlap in the 2D radiograph, low-contrast tumors are usually invisible. To address this problem, high-density fiducials (such as gold fiducials) can be implanted in or near the tumor to aid target localization (69-71). For example, gold fiducials have been used for prostate (70), lung (28), liver, and pancreatic cancers (69). Using implanted fiducials, Shirato and colleagues developed the RTRT system to treat moving tumors using room-mounted kV fluoroscopy (28). Instead of irradiating the whole volume in which the tumor can be located during respiration, the approach uses radio-opaque fiducials to trigger beam delivery (72,73). Specifically, radiation is delivered only when the fiducials are located within a predefined volume, thereby sparing normal tissues (28).
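The fiducial-triggered delivery described above amounts to a geometric test on each tracked position. The sketch below assumes the predefined volume is an axis-aligned box centered on the planned fiducial position; the box shape, the 2 mm half-widths, the sample positions, and the name `beam_enabled` are all assumptions for illustration rather than details of the RTRT system.

```python
def beam_enabled(fiducial_mm, planned_mm, tolerance_mm):
    """True when the fiducial lies inside an axis-aligned box around its planned position."""
    return all(
        abs(measured - planned) <= half_width
        for measured, planned, half_width in zip(fiducial_mm, planned_mm, tolerance_mm)
    )

planned = (0.0, 0.0, 0.0)
tolerance = (2.0, 2.0, 2.0)  # hypothetical half-widths of the gating volume (mm)
track = [(0.5, -1.0, 1.5), (0.5, -1.0, 3.2), (1.9, 0.0, 0.0)]
flags = [beam_enabled(position, planned, tolerance) for position in track]
print(flags)
```

With several fiducials, the same test would be applied to each one, and the beam would be enabled only if all of them pass.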

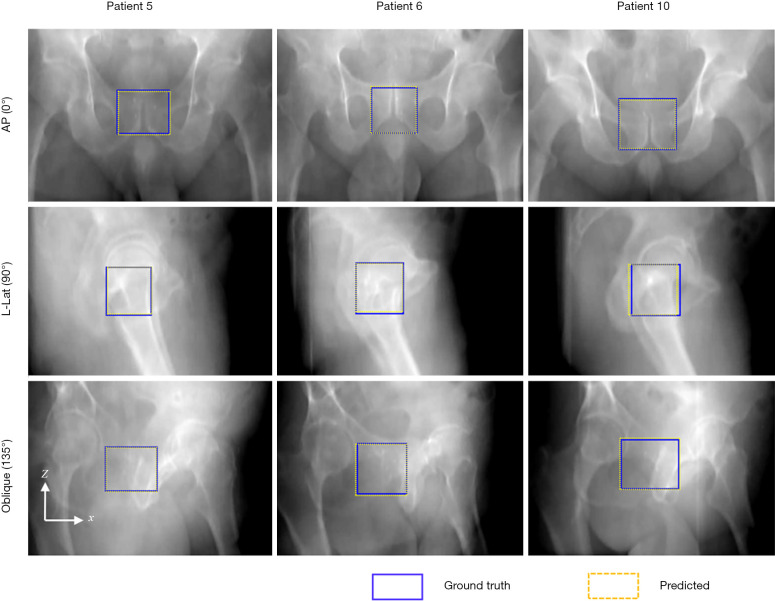

The fiducial-based RTRT approach is inherently invasive and delays the start of treatment. Moreover, the presence of fiducials may cause bleeding, infection, and discomfort to the patient. Hence, non-invasive or markerless image guidance is of great clinical relevance. Zhao et al. proposed a deep learning-based approach for markerless tumor target positioning using 2D kV X-ray images acquired from the on-board imager (OBI) system (22,74). The deep learning model comprises a region proposal network (RPN) and a target detection network, where the latter takes the output of the RPN (i.e., region proposals) as input. To train the network, the planning CT images were deformed to mimic different anatomical scenarios at the time of treatment, and the deformed CT images were used to generate digitally reconstructed radiographs (DRRs) annotated with the target location. The trained deep learning models have been successfully applied to challenging prostate cancer cases (as shown in Figure 2) and pancreatic cancer cases, which are characterized by low contrast resolution and respiratory motion, respectively. The markerless approach is able to localize the tumor target before treatment for patient set-up and during treatment for motion management. Moreover, depending on the target volume used for DRR labeling, the approach can be employed to track the PTV, the clinical target volume (CTV), or the gross tumor volume (GTV). It is therefore able to further reduce the safety margin and thus provide better healthy tissue sparing.
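The way a labeled DRR can be derived from a CT volume and a target mask is sketched below. The sketch assumes a parallel-beam geometry and a volume already converted to linear attenuation coefficients, whereas a real OBI uses a divergent cone beam; the toy volume, its attenuation values, and the function names are hypothetical. Each ray's transmitted intensity follows the Beer-Lambert law, and the training label is the bounding box of the projected target.

```python
import math

def drr_parallel(mu, voxel_mm):
    """Transmitted intensity for rays travelling along the y axis of mu[z][y][x] (1/mm)."""
    return [
        [math.exp(-voxel_mm * sum(plane[y][x] for y in range(len(plane))))
         for x in range(len(plane[0]))]
        for plane in mu
    ]

def projected_bbox(mask):
    """Bounding box (z_min, z_max, x_min, x_max) of a 3D target mask projected along y."""
    hits = [(z, x)
            for z, plane in enumerate(mask)
            for row in plane
            for x, inside in enumerate(row) if inside]
    zs = [z for z, _ in hits]
    xs = [x for _, x in hits]
    return min(zs), max(zs), min(xs), max(xs)

# Toy 2 x 3 x 2 volume: uniform soft tissue with one denser "target" voxel.
mu = [[[0.02, 0.02] for _ in range(3)] for _ in range(2)]
mu[1][2][0] = 0.05
mask = [[[0, 0] for _ in range(3)] for _ in range(2)]
mask[1][2][0] = 1

drr = drr_parallel(mu, voxel_mm=1.0)
label = projected_bbox(mask)
print(drr, label)
```

Repeating this projection for many deformed copies of the planning CT yields image and label pairs without manual annotation, which is the idea behind the training strategy described in the text.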

Figure 2.

Results of target localization for pretreatment setup and real-time tracking of prostate cancer patients using a deep learning approach. The target positions predicted by the deep learning model are shown in yellow, and the corresponding ground truth is shown in blue. AP, anteroposterior; L-Lat, left-lateral. Adapted with permission from reference (22). Copyright 2019 Elsevier.

To address the morphological difference between real kV projection images and DRRs, Dhont et al. (75) proposed a GAN-type framework, rather than classical image processing algorithms, to synthesize a DRR from the input kV image acquired by the OBI. This synthetic DRR can further improve the accuracy of the aforementioned markerless target positioning method (75). Deep learning-based markerless IGRT methods can be applied not only to the OBI for image guidance, but also to orthogonal live kV images acquired during stereotactic radiosurgery for real-time image guidance (21).

For spine and lung tumors, which have better contrast resolution, 2D/3D registration methods can be applied for patient setup and target monitoring (76,77). Recent deep learning-based 2D/3D image registration approaches have also augmented our ability to perform treatment verification and image guidance using 2D kV radiographs (78-80). Foote et al. developed a CNN model and a patient-specific motion subspace to predict anatomical positions in real time from a single 2D fluoroscopic projection (79). The CNN model recovers, from the 2D radiograph, the subspace coordinates that define the patient-specific deformation field between the patient’s treatment position and planning position.
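The role of the subspace coordinates can be illustrated with a small sketch. It assumes that the patient-specific motion subspace is linear, so that a deformation field is the mean field plus a weighted sum of basis fields; the flattened four-element fields, the two basis vectors, and the "predicted" coordinates are hypothetical stand-ins for quantities that would come from the patient's 4D data and from the CNN.

```python
def reconstruct_dvf(mean_dvf, components, coordinates):
    """Flattened deformation field = mean + sum_k coordinates[k] * components[k]."""
    return [
        mean_value + sum(weight * component[i]
                         for weight, component in zip(coordinates, components))
        for i, mean_value in enumerate(mean_dvf)
    ]

mean_dvf = [0.0, 1.0, 0.0, 1.0]
components = [[1.0, 0.0, 1.0, 0.0], [0.0, 2.0, 0.0, -2.0]]
predicted = [0.5, -0.25]  # stand-in for the network output
dvf = reconstruct_dvf(mean_dvf, components, predicted)
print(dvf)
```

Because the network only has to regress a handful of coordinates rather than a dense field, inference can be fast enough for real-time use, which is the appeal of the subspace formulation.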

Volumetric imaging-based localization

Volumetric imaging using various modalities has been a standard procedure for treatment planning for decades. In contrast, anatomical position verification using in-room volumetric imaging before and during beam delivery has become popular only in recent years, mainly owing to the availability of integrated linear accelerator-CT scanner systems. These systems include CT-on-rails systems and other integrated systems that allow diagnostic-quality CT acquisition in the treatment room (e.g., Primatom, Siemens; ExaCT, Varian Medical Systems; uRT-linac, United Imaging). Although the imaging and treatment isocenters of an integrated system are not coincident, the CT imaging is usually performed in close proximity to the treatment position. The high-quality CT scans can reflect volumetric changes in individual patients during radiotherapy. In addition to high-quality diagnostic CT scans, the flat-panel detector of the OBI system can be used to acquire 3D volumetric CBCT images before treatment. Unlike in-room diagnostic CT acquisition, the CBCT gantry rotation and the treatment beam share the same isocenter.

Registration of the CBCT images to the planning CT images indicates whether the patient is positioned correctly and provides feedback for any needed adjustment. Deep learning-based CBCT/CT registration methods have recently been developed to aid fast patient set-up and pretreatment positioning (63,81-83). For example, Liao et al. used reinforcement learning (RL) to perform rigid image registration between CT and CBCT images (63). Because of the long acquisition time (a CBCT acquisition usually takes 30 seconds to 2 minutes, whereas planning CT usually takes less than a few hundred milliseconds per gantry rotation), the large cone angle, and the limited detector dynamic range, CBCT images contain substantial artifacts and their low-contrast resolution is substantially inferior to that of planning CT images. Hence, CBCT/CT registration usually relies on high-contrast bony structures or implanted fiducials. To mitigate this limitation, synthetic CT images can be generated from daily CBCT images using deep learning-based approaches (81,84,85). The HU values of the synthetic CT images are usually comparable in accuracy to those of the planning CT image, as shown in Figure 3. Synthetic CT images therefore have the potential to be used for dose calculation and treatment replanning, making CBCT-based adaptive radiotherapy possible.
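One simple way to quantify HU accuracy is the mean absolute error between the synthetic CT and a reference CT inside the patient outline. The sketch below assumes the two images are already aligned and flattened into lists of HU values; the choice of metric, the function name, and the sample values are assumptions for illustration and are not taken from the cited studies.

```python
def mean_absolute_error_hu(synthetic, reference, body_mask):
    """Mean absolute HU difference over voxels flagged as inside the body."""
    differences = [
        abs(s - r)
        for s, r, inside in zip(synthetic, reference, body_mask)
        if inside
    ]
    return sum(differences) / len(differences)

# Hypothetical aligned voxel values (HU); the first and last voxels are air.
planning_ct = [-1000, 40, 60, 300, -1000]
synthetic_ct = [-990, 45, 50, 320, -800]
body = [0, 1, 1, 1, 0]
mae = mean_absolute_error_hu(synthetic_ct, planning_ct, body)
print(f"MAE inside body: {mae:.1f} HU")
```

Restricting the comparison to the body mask keeps large differences in the surrounding air, which do not affect dose calculation, from dominating the result.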

Figure 3.

Results of synthetic CT images from daily cone-beam CT images using a deep learning approach. Evaluation studies using prostate cancer patients show the deep learning approach can synthesize CT-quality images with accurate CT numbers from CBCT images. The first, second, and third rows are the daily CBCT, the predicted synthetic CT, and the deformed planning CT images, respectively. sCT, synthesized CT; dpCT, deformed planning CT. Adapted with permission from reference (85). Copyright 2019 John Wiley and Sons.

In addition to synthesizing CT images from CBCT images, deep learning algorithms can also propagate contours from the planning CT to the daily CBCT. The planning CT image is of high quality, and the target volumes and OARs are delineated on it. In contrast, CBCT images have low image quality and it is usually impossible to delineate target volumes on them directly, but to some extent they still provide anatomical information about the patient at the time of treatment. To take advantage of daily CBCT images for adaptive radiotherapy, Elmahdy et al. used deep learning to develop a registration pipeline for automatic contour propagation in intensity-modulated proton therapy of prostate cancer (86). The propagated contours obtained from the pipeline can be used to generate reasonable treatment plans adapted to the daily anatomy. Liang et al. proposed a regional deformable model-based unsupervised learning framework to automatically propagate the delineated prostate contours from the planning CT to the CBCT. The results showed that the deep learning-based method could provide accurate contour propagation for daily CBCT-guided adaptive radiotherapy (87).

Instead of deforming contours from the planning CT to the CBCT as described above, delineation can be performed directly on the daily CBCT using deep learning approaches to minimize treatment uncertainties. Several synthetic MRI methods have been developed to delineate OARs for prostate and head-and-neck radiotherapy (88,89). In these methods, a synthetic MRI is first generated from the CBCT images using a CycleGAN, and delineation is then performed on the synthetic MRI images.

Typically, to perform CBCT imaging using the OBI system, hundreds of radiographic projections need to be acquired. It is therefore impossible to provide real-time volumetric images at the time of beam delivery. To monitor the intrafractional motion, Shen et al. showed that single-view tomographic imaging can be achieved by developing a deep learning model and integrating patient-specific prior knowledge in a data-driven image reconstruction process (90). This study was the first to push sparse sampling for CT imaging to the limit of a single projection view. It has the potential to generate real-time volumetric tomographic X-ray images by using the kV projection acquired from the OBI during the beam delivery, which can be used for motion management, image guidance and real-time adaptive radiotherapy.

Recently, integrated MR-linac systems that allow real-time imaging during treatment have become available for clinical use (91,92), and AI-based approaches have also been employed to aid tumor tracking and online adaptive radiotherapy. Cerviño et al. were the first to use an artificial neural network (ANN) model for tracking lung tumor motion at the time of treatment. Specifically, a volume that encompasses the tumor locations during treatment was selected for principal component analysis (PCA). The output of the PCA was fed into the ANN model to predict the tumor position during treatment delivery (93). To achieve real-time adaptation in MRI-guided radiotherapy, target motion needs to be monitored with low latency so that the radiation beam can be adapted to the current anatomy. Proton MRI is an imaging technique that can help quantify lung function using existing MRI systems without contrast material. To take advantage of this feature, Capaldi et al. (94) generated pulmonary ventilation maps with free-breathing proton MRI and a deep CNN, laying a solid foundation for future functional image-guided lung radiotherapy. To reduce the data acquisition time, deep learning has been employed to accelerate MR acquisition by enabling higher undersampling factors (95-97).
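The notion of an undersampling factor can be made concrete with a one-dimensional toy example. The sketch below keeps every fourth k-space line plus a small fully sampled low-frequency block, then applies a naive zero-filled inverse transform. The sampling pattern, the signal, and the function names are assumptions for illustration; in the approaches cited above, a trained network would take the place of the zero-filled reconstruction, which by itself produces aliasing.

```python
import cmath

def dft(signal, inverse=False):
    """Naive discrete Fourier transform (O(n^2)); the inverse is scaled by 1/n."""
    n = len(signal)
    sign = 1 if inverse else -1
    out = [
        sum(signal[k] * cmath.exp(sign * 2j * cmath.pi * k * m / n)
            for k in range(n))
        for m in range(n)
    ]
    return [value / n for value in out] if inverse else out

def undersampling_mask(n, factor, center_lines):
    """Keep every `factor`-th line plus a low-frequency block around index 0.

    Index 0 is the zero-frequency line; low frequencies wrap around both ends.
    """
    half = center_lines // 2
    return [(k % factor == 0) or (k <= half) or (k >= n - half)
            for k in range(n)]

def zero_filled_recon(signal, mask):
    """Discard unsampled k-space lines and invert the transform."""
    kspace = dft(signal)
    kept = [value if keep else 0 for value, keep in zip(kspace, mask)]
    return dft(kept, inverse=True)

signal = [float(i % 4) for i in range(16)]
mask = undersampling_mask(16, factor=4, center_lines=4)
acceleration = len(mask) / sum(mask)
recon = zero_filled_recon(signal, mask)
print(f"sampled lines: {sum(mask)} of {len(mask)}, acceleration: {acceleration:.1f}")
```

Acquisition time scales roughly with the number of sampled lines, so a reconstruction method that tolerates a sparser mask translates directly into lower imaging latency.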

Nowadays, volumetric imaging using modalities such as CT, MRI, and PET is routinely used in clinical practice for treatment verification and image guidance. Although deep learning-based algorithms have greatly augmented the power of these volumetric imaging techniques to provide better healthy tissue sparing and clinical gains, several limitations still hinder their practical implementation. These limitations are discussed later in this review.

AI-based IGRT benefits

Fractionated radiotherapy usually takes several weeks, and significant anatomical changes may be seen between treatment fractions. Studies have shown that substantial organ motion and positioning errors may occur during a course of radiotherapy, even for patients positioned with invasive immobilization techniques (98,99). For every fraction, it is therefore necessary to use treatment verification or image guidance to mitigate both interfraction and intrafraction motion (27,100). Compared with previous 2D IGRT practice, AI-based IGRT algorithms for 3D volumetric imaging make the geometric uncertainties and motion that arise during treatment more apparent. Moreover, they provide more accurate tumor localization as well as measurement of tumor changes in size, shape, and position. For example, both interfraction variation and breathing-induced intrafraction motion can be evaluated in real time by single-view volumetric imaging during radiotherapy (90). In addition, single-view volumetric imaging could be combined with deep learning to calculate radiation dose and to replan for adaptive radiotherapy.

By increasing geometric precision, the irradiated volumes of the OARs around the tumor can be reduced, resulting in a reduction in the amount of healthy tissue treated. Unlike classical fiducial-based approaches, which are invasive and prolong the treatment procedure, AI-based IGRT eliminates the need for fiducial implantation (22,74).

With AI-based volumetric imaging for IGRT, it is possible to perform real-time soft-tissue registration without fiducials, making image guidance based on soft tissue (such as target volumes and OARs) more practical for routine clinical use than it was previously. With AI-based 2D imaging for IGRT, it is possible to track not only the PTV but also the GTV in real time. Hence, the safety margin that accounts for motion can be significantly reduced, and the irradiation of normal tissue can be decreased. Consequently, the probability of toxic effects on healthy tissue can be lowered, and the dose to the tumor might be escalated to increase the probability of tumor control. On the other hand, even without dose escalation or a reduction in the target margin, target positioning verification based on real-time AI-based IGRT approaches can still increase the probability that the prescribed dose is actually delivered and increase confidence in the treatment.
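The link between positioning uncertainty and margin size can be illustrated numerically. The sketch below uses the widely quoted van Herk population margin recipe, margin = 2.5Σ + 0.7σ, where Σ and σ are the standard deviations of the systematic and random errors. This recipe is not part of the present review and is introduced here only as an assumption for illustration, and the error values are hypothetical.

```python
def ptv_margin_mm(systematic_sd_mm, random_sd_mm):
    """Margin from the van Herk recipe: 2.5 * Sigma + 0.7 * sigma (all in mm)."""
    return 2.5 * systematic_sd_mm + 0.7 * random_sd_mm

# Hypothetical error budgets without and with real-time image guidance.
without_guidance = ptv_margin_mm(systematic_sd_mm=3.0, random_sd_mm=3.0)
with_guidance = ptv_margin_mm(systematic_sd_mm=1.5, random_sd_mm=1.5)
print(f"margin without guidance: {without_guidance:.1f} mm")
print(f"margin with guidance:    {with_guidance:.1f} mm")
```

Under this recipe, halving both error components halves the margin, and because the irradiated volume grows rapidly with margin width, even modest reductions can spare a substantial amount of healthy tissue.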

With respect to clinical impact, treatment replanning and image guidance based on AI-based IGRT could reduce toxic effects and the risk of recurrence. These AI-based image guidance techniques have the potential to enable the use of radiotherapy in scenarios in which it was not previously possible. For example, single-view volumetric imaging makes real-time adaptive radiotherapy possible using a commercially available OBI system (90). The approaches usually operate in the image domain, which is quite flexible, and they can therefore be applied to different sites, including head-and-neck, prostate, pancreas, and upper abdominal cancers that are readily affected by respiratory motion.

AI-based IGRT limitations

Although the technological advances in AI-based IGRT hold the potential to improve the therapeutic ratio and clinical outcomes, few of these technologies or strategies have been implemented in routine practice so far. AI-based approaches, especially deep learning, are data-driven and usually data-hungry. To achieve good performance, sufficient training data need to be collected and, in some cases, carefully labeled by hand. This is not an easy task, and the training datasets may be limited in many cases. More importantly, the performance of AI-based approaches also depends on the distribution of the training data. For test data that fall outside the distribution of the training data, the predictive model usually yields inferior results. Therefore, many of these approaches are not yet robust enough for real-world use, because every clinical case is unique (101).

Unlike classical machine learning algorithms, deep learning-based approaches are regarded as “black boxes” and lack good interpretability. Hence, attention needs to be paid to inappropriate margin reduction and overconfidence. To date, almost all AI-based IGRT studies have been retrospective, and few methods have been applied in clinical practice. Of note, before being released for commercial use, AI-based IGRT algorithms should be subjected to premarket review and postmarket surveillance. The premarket review will require a demonstration of reasonable assurance of safety and effectiveness (within the acceptable tolerance limits for target positioning), and should establish a clear expectation that the AI-based algorithms continually manage patient risks throughout the lifecycle of the application. For postmarket surveillance, model deployment and performance need to be monitored closely. Risk management approaches, among others, should be incorporated into the development, validation, and execution of algorithm changes.

AI-based IGRT techniques need to be further evaluated. Instead of clinically relevant metrics, many deep learning-based approaches are evaluated using metrics that originated in computer science (such as structural similarity). The results provided by these metrics are usually not clinically meaningful, and it is unclear to what extent the outputs of these methods can be relied on in practice. In addition, some practical issues are often neglected in AI-based IGRT studies and should be considered in real-world applications. For example, when CT images are synthesized from daily CBCT images, the CT and CBCT images have different fields of view. To perform OAR delineation and treatment replanning, the synthetic CT images may need to have the same field of view as the original planning CT images.

Summary

Highly conformal beam delivery techniques have been developed over the past decades, and image guidance is a stringent requirement for fully exploiting them. In this paper, we reviewed the applications of AI-based algorithms in IGRT, highlighted some important trends in research and development, and discussed the implications of these algorithms for the future clinical practice of radiotherapy. In particular, the applications of AI-based IGRT techniques based on 2D imaging and 3D volumetric imaging were reviewed and analyzed, and their benefits and limitations were discussed. In summary, these techniques have the potential to monitor tumor motion, reduce treatment uncertainty, and improve treatment precision. They also allow more healthy tissue to be spared while keeping tumor coverage the same or better. Looking forward, however, much remains to be done to translate AI-based algorithms into clinical practice and to maximize the utility and robustness of AI for the benefit of cancer patients.

Acknowledgments

Funding: This work was partially supported by Medical Big Data and AI R&D project (No. 2019MBD-043).

Ethical Statement: The authors are accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved.

Open Access Statement: This is an Open Access article distributed in accordance with the Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License (CC BY-NC-ND 4.0), which permits the non-commercial replication and distribution of the article with the strict proviso that no changes or edits are made and the original work is properly cited (including links to both the formal publication through the relevant DOI and the license). See: https://creativecommons.org/licenses/by-nc-nd/4.0/.

Footnotes

Provenance and Peer Review: With the arrangement by the Guest Editors and the editorial office, this article has been reviewed by external peers.

Conflicts of Interest: All authors have completed the ICMJE uniform disclosure form (available at https://dx.doi.org/10.21037/qims-21-199). The special issue “Artificial Intelligence for Image-guided Radiation Therapy” was commissioned by the editorial office without any funding or sponsorship. The authors have no other conflicts of interest to declare.

References

- 1. Staffurth J, Radiotherapy Development Board. A review of the clinical evidence for intensity-modulated radiotherapy. Clin Oncol (R Coll Radiol) 2010;22:643-57. doi:10.1016/j.clon.2010.06.013

- 2. Otto K. Volumetric modulated arc therapy: IMRT in a single gantry arc. Med Phys 2008;35:310-7. doi:10.1118/1.2818738

- 3. Crooks SM, Wu X, Takita C, Watzich M, Xing L. Aperture modulated arc therapy. Phys Med Biol 2003;48:1333-44. doi:10.1088/0031-9155/48/10/307

- 4. Yu CX. Intensity-modulated arc therapy with dynamic multileaf collimation: an alternative to tomotherapy. Phys Med Biol 1995;40:1435-49. doi:10.1088/0031-9155/40/9/004

- 5. Palma DA, Olson R, Harrow S, Gaede S, Louie AV, Haasbeek C, et al. Stereotactic ablative radiotherapy versus standard of care palliative treatment in patients with oligometastatic cancers (SABR-COMET): a randomised, phase 2, open-label trial. Lancet 2019;393:2051-8. doi:10.1016/S0140-6736(18)32487-5

- 6. Timmerman R, Xing L. Image Guided and Adaptive Radiation Therapy. Baltimore: Lippincott Williams & Wilkins; 2009.

- 7. Timmerman RD, Kavanagh BD, Cho LC, Papiez L, Xing L. Stereotactic body radiation therapy in multiple organ sites. J Clin Oncol 2007;25:947-52. doi:10.1200/JCO.2006.09.7469

- 8. Xing L, Thorndyke B, Schreibmann E, Yang Y, Li TF, Kim GY, Luxton G, Koong A. Overview of image-guided radiation therapy. Med Dosim 2006;31:91-112. doi:10.1016/j.meddos.2005.12.004

- 9. Dawson LA, Sharpe MB. Image-guided radiotherapy: rationale, benefits, and limitations. Lancet Oncol 2006;7:848-58. doi:10.1016/S1470-2045(06)70904-4

- 10. Verellen D, De Ridder M, Linthout N, Tournel K, Soete G, Storme G. Innovations in image-guided radiotherapy. Nat Rev Cancer 2007;7:949-60. doi:10.1038/nrc2288

- 11. Jaffray DA. Image-guided radiotherapy: from current concept to future perspectives. Nat Rev Clin Oncol 2012;9:688-99. doi:10.1038/nrclinonc.2012.194

- 12. Xing L, Giger ML, Min JK. Artificial Intelligence in Medicine: Technical Basis and Clinical Applications. London, UK: Academic Press; 2020.

- 13. Liu F, Yadav P, Baschnagel AM, McMillan AB. MR-based treatment planning in radiation therapy using a deep learning approach. J Appl Clin Med Phys 2019;20:105-14. doi:10.1002/acm2.12554

- 14. Huynh E, Hosny A, Guthier C, Bitterman DS, Petit SF, Haas-Kogan DA, Kann B, Aerts HJWL, Mak RH. Artificial intelligence in radiation oncology. Nat Rev Clin Oncol 2020;17:771-81. doi:10.1038/s41571-020-0417-8

- 15. Mardani Korani M, Dong P, Xing L. Deep‐Learning Based Prediction of Achievable Dose for Personalizing Inverse Treatment Planning. Med Phys 2016;43:3724. doi:10.1118/1.4957369

- 16. Ibragimov B, Pernus F, Strojan P, Xing L. Machine‐Learning Based Segmentation of Organs at Risks for Head and Neck Radiotherapy Planning. Med Phys 2016;43:3883. doi:10.1118/1.4958186

- 17. Ibragimov B, Xing L. Segmentation of organs-at-risks in head and neck CT images using convolutional neural networks. Med Phys 2017;44:547-57. doi:10.1002/mp.12045

- 18. Ibragimov B, Toesca D, Chang D, Koong A, Xing L. Combining deep learning with anatomical analysis for segmentation of the portal vein for liver SBRT planning. Phys Med Biol 2017;62:8943-58. doi:10.1088/1361-6560/aa9262

- 19. Ibragimov B, Korez R, Likar B, Pernus F, Xing L, Vrtovec T. Segmentation of Pathological Structures by Landmark-Assisted Deformable Models. IEEE Trans Med Imaging 2017;36:1457-69. doi:10.1109/TMI.2017.2667578

- 20. Arık SÖ, Ibragimov B, Xing L. Fully automated quantitative cephalometry using convolutional neural networks. J Med Imaging (Bellingham) 2017;4:014501. doi:10.1117/1.JMI.4.1.014501

- 21. Zhao W, Capaldi D, Chuang C, Xing L. Fiducial-Free Image-Guided Spinal Stereotactic Radiosurgery Enabled Via Deep Learning. Int J Radiat Oncol Biol Phys 2020;108:e357. doi:10.1016/j.ijrobp.2020.07.2348

- 22. Zhao W, Han B, Yang Y, Buyyounouski M, Hancock SL, Bagshaw H, Xing L. Incorporating imaging information from deep neural network layers into image guided radiation therapy (IGRT). Radiother Oncol 2019;140:167-74. doi:10.1016/j.radonc.2019.06.027

- 23. Li X, Chen H, Qi X, Dou Q, Fu CW, Heng PA. H-DenseUNet: Hybrid Densely Connected UNet for Liver and Tumor Segmentation From CT Volumes. IEEE Trans Med Imaging 2018;37:2663-74. doi:10.1109/TMI.2018.2845918

- 24. Chen AM, Farwell DG, Luu Q, Donald PJ, Perks J, Purdy JA. Evaluation of the planning target volume in the treatment of head and neck cancer with intensity-modulated radiotherapy: what is the appropriate expansion margin in the setting of daily image guidance? Int J Radiat Oncol Biol Phys 2011;81:943-9. doi:10.1016/j.ijrobp.2010.07.017

- 25. Kubo HD, Hill BC. Respiration gated radiotherapy treatment: a technical study. Phys Med Biol 1996;41:83-91. doi:10.1088/0031-9155/41/1/007

- 26. Shirato H, Shimizu S, Kunieda T, Kitamura K, Van Herk M, Kagei K, Nishioka T, Hashimoto S, Fujita K, Aoyama H, Tsuchiya K, Kudo K, Miyasaka K. Physical aspects of a real-time tumor-tracking system for gated radiotherapy. Int J Radiat Oncol Biol Phys 2000;48:1187-95. doi:10.1016/S0360-3016(00)00748-3

- 27. Cai J, Chang Z, Wang Z, Paul Segars W, Yin FF. Four-dimensional magnetic resonance imaging (4D-MRI) using image-based respiratory surrogate: a feasibility study. Med Phys 2011;38:6384-94. doi:10.1118/1.3658737

- 28. Shirato H, Shimizu S, Shimizu T, Nishioka T, Miyasaka K. Real-time tumour-tracking radiotherapy. Lancet 1999;353:1331-2. doi:10.1016/S0140-6736(99)00700-X

- 29. Zhang K, Tian Y, Li M, Men K, Dai J. Performance of a multileaf collimator system for a 1.5T MR-linac. Med Phys 2021;48:546-55. doi:10.1002/mp.14608

- 30. Adler JR, Jr, Chang SD, Murphy MJ, Doty J, Geis P, Hancock SL. The Cyberknife: a frameless robotic system for radiosurgery. Stereotact Funct Neurosurg 1997;69:124-8. doi:10.1159/000099863

- 31. Jaffray DA, Siewerdsen JH, Wong JW, Martinez AA. Flat-panel cone-beam computed tomography for image-guided radiation therapy. Int J Radiat Oncol Biol Phys 2002;53:1337-49. doi:10.1016/S0360-3016(02)02884-5

- 32. Mackie TR, Balog J, Ruchala K, Shepard D, Aldridge S, Fitchard E, Reckwerdt P, Olivera G, McNutt T, Mehta M. Tomotherapy. Semin Radiat Oncol 1999;9:108-17. doi:10.1016/S1053-4296(99)80058-7

- 33. Liu Z, Fan J, Li M, Yan H, Hu Z, Huang P, Tian Y, Miao J, Dai J. A deep learning method for prediction of three-dimensional dose distribution of helical tomotherapy. Med Phys 2019;46:1972-83. doi:10.1002/mp.13490

- 34. Xu S, Wu Z, Yang C, Ma L, Qu B, Chen G, Yao W, Wang S, Liu Y, Li XA. Radiation-induced CT number changes in GTV and parotid glands during the course of radiation therapy for nasopharyngeal cancer. Br J Radiol 2016;89:20140819. doi:10.1259/bjr.20140819

- 35. Klüter S. Technical design and concept of a 0.35 T MR-Linac. Clin Transl Radiat Oncol 2019;18:98-101. doi:10.1016/j.ctro.2019.04.007

- 36. Yu JB, Brock KK, Campbell AM, Chen AB, Diaz R, Escorcia FE, Gupta G, Hrinivich WT, Joseph S, Korpics M, Onderdonk BE, Pandit-Taskar N, Wood BJ, Woodward WA. Proceedings of the ASTRO-RSNA Oligometastatic Disease Research Workshop. Int J Radiat Oncol Biol Phys 2020;108:539-45.

- 37. LeCun Y, Bengio Y, Hinton G. Deep learning. Nature 2015;521:436-44. doi:10.1038/nature14539

- 38. Valdes G, Scheuermann R, Hung CY, Olszanski A, Bellerive M, Solberg TD. A mathematical framework for virtual IMRT QA using machine learning. Med Phys 2016;43:4323. doi:10.1118/1.4953835

- 39. Carlson JN, Park JM, Park SY, Park JI, Choi Y, Ye SJ. A machine learning approach to the accurate prediction of multi-leaf collimator positional errors. Phys Med Biol 2016;61:2514-31. doi:10.1088/0031-9155/61/6/2514

- 40. Li Q, Chan MF. Predictive time-series modeling using artificial neural networks for Linac beam symmetry: an empirical study. Ann N Y Acad Sci 2017;1387:84-94. doi:10.1111/nyas.13215

- 41. Li J, Wang L, Zhang X, Liu L, Li J, Chan MF, Sui J, Yang R. Machine Learning for Patient-Specific Quality Assurance of VMAT: Prediction and Classification Accuracy. Int J Radiat Oncol Biol Phys 2019;105:893-902. doi:10.1016/j.ijrobp.2019.07.049

- 42. Valdes G, Chan MF, Lim SB, Scheuermann R, Deasy JO, Solberg TD. IMRT QA using machine learning: A multi-institutional validation. J Appl Clin Med Phys 2017;18:279-84. doi:10.1002/acm2.12161

- 43. Chan MF, Witztum A, Valdes G. Integration of AI and Machine Learning in Radiotherapy QA. Front Artif Intell 2020;3:577620. doi:10.3389/frai.2020.577620

- 44. Isaksson M, Jalden J, Murphy MJ. On using an adaptive neural network to predict lung tumor motion during respiration for radiotherapy applications. Med Phys 2005;32:3801-9. doi:10.1118/1.2134958

- 45. Kakar M, Nyström H, Aarup LR, Nøttrup TJ, Olsen DR. Respiratory motion prediction by using the adaptive neuro fuzzy inference system (ANFIS). Phys Med Biol 2005;50:4721-8. doi:10.1088/0031-9155/50/19/020

- 46. Murphy MJ, Pokhrel D. Optimization of an adaptive neural network to predict breathing. Med Phys 2009;36:40-7. doi:10.1118/1.3026608

- 47. Zhao W, Patil I, Han B, Yang Y, Xing L, Schüler E. Beam data modeling of linear accelerators (linacs) through machine learning and its potential applications in fast and robust linac commissioning and quality assurance. Radiother Oncol 2020;153:122-9. doi:10.1016/j.radonc.2020.09.057

- 48. Du M, Liu N, Hu X. Techniques for interpretable machine learning. Commun ACM 2019;63:68-77. doi:10.1145/3359786

- 49. Brianzoni E, Rossi G, Ancidei S, Berbellini A, Capoccetti F, Cidda C, D'Avenia P, Fattori S, Montini GC, Valentini G, Proietti A, Algranati C. Radiotherapy planning: PET/CT scanner performances in the definition of gross tumour volume and clinical target volume. Eur J Nucl Med Mol Imaging 2005;32:1392-9. doi:10.1007/s00259-005-1845-5

- 50. Navarria P, Reggiori G, Pessina F, Ascolese AM, Tomatis S, Mancosu P, Lobefalo F, Clerici E, Lopci E, Bizzi A, Grimaldi M, Chiti A, Simonelli M, Santoro A, Bello L, Scorsetti M. Investigation on the role of integrated PET/MRI for target volume definition and radiotherapy planning in patients with high grade glioma. Radiother Oncol 2014;112:425-9. doi:10.1016/j.radonc.2014.09.004

- 51. Alongi P, Laudicella R, Desideri I, Chiaravalloti A, Borghetti P, Quartuccio N, Fiore M, Evangelista L, Marino L, Caobelli F, Tuscano C, Mapelli P, Lancellotta V, Annunziata S, Ricci M, Ciurlia E, Fiorentino A. Positron emission tomography with computed tomography imaging (PET/CT) for the radiotherapy planning definition of the biological target volume: PART 1. Crit Rev Oncol Hematol 2019;140:74-9. doi:10.1016/j.critrevonc.2019.01.011

- 52. Mirzakhanian L, Bassalow R, Zaks D, Huntzinger C, Seuntjens J. IAEA-AAPM TRS-483-based reference dosimetry of the new RefleXion biology-guided radiotherapy (BgRT) machine. Med Phys 2021;48:1884-92. doi:10.1002/mp.14631

- 53. Fan Q, Nanduri A, Zhu L, Mazin S. TU‐G‐BRA‐04: Emission Guided Radiation Therapy: A Simulation Study of Lung Cancer Treatment with Automatic Tumor Tracking Using a 4D Digital Patient Model. Med Phys 2012;39:3922. doi:10.1118/1.4736008

- 54. Fan Q, Nanduri A, Mazin S, Zhu L. Emission guided radiation therapy for lung and prostate cancers: a feasibility study on a digital patient. Med Phys 2012;39:7140-52. doi:10.1118/1.4761951

- 55. Wu J, Aguilera T, Shultz D, Gudur M, Rubin DL, Loo BW, Jr, Diehn M, Li R. Early-Stage Non-Small Cell Lung Cancer: Quantitative Imaging Characteristics of (18)F Fluorodeoxyglucose PET/CT Allow Prediction of Distant Metastasis. Radiology 2016;281:270-8. doi:10.1148/radiol.2016151829

- 56. Wu J, Gensheimer MF, Dong X, Rubin DL, Napel S, Diehn M, Loo BW, Jr, Li R. Robust Intratumor Partitioning to Identify High-Risk Subregions in Lung Cancer: A Pilot Study. Int J Radiat Oncol Biol Phys 2016;95:1504-12. doi:10.1016/j.ijrobp.2016.03.018

- 57. Ferrante E, Dokania PK, Silva RM, Paragios N. Weakly Supervised Learning of Metric Aggregations for Deformable Image Registration. IEEE J Biomed Health Inform 2019;23:1374-84. doi:10.1109/JBHI.2018.2869700

- 58. Xia KJ, Yin HS, Wang JQ. A novel improved deep convolutional neural network model for medical image fusion. Cluster Comput 2019;22:1515-27. doi:10.1007/s10586-018-2026-1

- 59. Yu H, Zhou X, Jiang H, Kang H, Wang Z, Hara T, Fujita H. Learning 3D non-rigid deformation based on an unsupervised deep learning for PET/CT image registration. Proc. SPIE 10953, Medical Imaging 2019: Biomedical Applications in Molecular, Structural, and Functional Imaging, 109531X (15 March 2019).

- 60. Fan J, Cao X, Wang Q, Yap PT, Shen D. Adversarial learning for mono- or multi-modal registration. Med Image Anal 2019;58:101545. doi:10.1016/j.media.2019.101545

- 61. Fu Y, Wang T, Lei Y, Patel P, Jani AB, Curran WJ, Liu T, Yang X. Deformable MR-CBCT prostate registration using biomechanically constrained deep learning networks. Med Phys 2021;48:253-63. doi:10.1002/mp.14584

- 62. Wu G, Kim M, Wang Q, Munsell BC, Shen D. Scalable High-Performance Image Registration Framework by Unsupervised Deep Feature Representations Learning. IEEE Trans Biomed Eng 2016;63:1505-16. doi:10.1109/TBME.2015.2496253

- 63. Liao R, Miao S, de Tournemire P, Grbic S, Kamen A, Mansi T, Comaniciu D. An artificial agent for robust image registration. Proceedings of the AAAI Conference on Artificial Intelligence; 2017.

- 64. Eppenhof KAJ, Pluim JPW. Pulmonary CT Registration Through Supervised Learning With Convolutional Neural Networks. IEEE Trans Med Imaging 2019;38:1097-105. doi:10.1109/TMI.2018.2878316

- 65. Fu Y, Lei Y, Wang T, Higgins K, Bradley JD, Curran WJ, Liu T, Yang X. LungRegNet: An unsupervised deformable image registration method for 4D-CT lung. Med Phys 2020;47:1763-74. doi:10.1002/mp.14065

- 66. Ng JA, Booth JT, Poulsen PR, Fledelius W, Worm ES, Eade T, Hegi F, Kneebone A, Kuncic Z, Keall PJ. Kilovoltage intrafraction monitoring for prostate intensity modulated arc therapy: first clinical results. Int J Radiat Oncol Biol Phys 2012;84:e655-61. doi:10.1016/j.ijrobp.2012.07.2367

- 67. Soete G, Verellen D, Michielsen D, Vinh-Hung V, Van de Steene J, Van den Berge D, De Roover P, Keuppens F, Storme G. Clinical use of stereoscopic X-ray positioning of patients treated with conformal radiotherapy for prostate cancer. Int J Radiat Oncol Biol Phys 2002;54:948-52. doi:10.1016/S0360-3016(02)03027-4

- 68. Xie Y, Djajaputra D, King CR, Hossain S, Ma L, Xing L. Intrafractional motion of the prostate during hypofractionated radiotherapy. Int J Radiat Oncol Biol Phys 2008;72:236-46. doi:10.1016/j.ijrobp.2008.04.051

- 69. van der Horst A, Wognum S, Dávila Fajardo R, de Jong R, van Hooft JE, Fockens P, van Tienhoven G, Bel A. Interfractional position variation of pancreatic tumors quantified using intratumoral fiducial markers and daily cone beam computed tomography. Int J Radiat Oncol Biol Phys 2013;87:202-8. doi:10.1016/j.ijrobp.2013.05.001

- 70. Budiharto T, Slagmolen P, Haustermans K, Maes F, Junius S, Verstraete J, Oyen R, Hermans J, Van den Heuvel F. Intrafractional prostate motion during online image guided intensity-modulated radiotherapy for prostate cancer. Radiother Oncol 2011;98:181-6. doi:10.1016/j.radonc.2010.12.019

- 71. Moseley DJ, White EA, Wiltshire KL, Rosewall T, Sharpe MB, Siewerdsen JH, Bissonnette JP, Gospodarowicz M, Warde P, Catton CN, Jaffray DA. Comparison of localization performance with implanted fiducial markers and cone-beam computed tomography for on-line image-guided radiotherapy of the prostate. Int J Radiat Oncol Biol Phys 2007;67:942-53. doi:10.1016/j.ijrobp.2006.10.039

- 72. Li R, Mok E, Chang DT, Daly M, Loo BW, Jr, Diehn M, Le QT, Koong A, Xing L. Intrafraction verification of gated RapidArc by using beam-level kilovoltage X-ray images. Int J Radiat Oncol Biol Phys 2012;83:e709-15. doi:10.1016/j.ijrobp.2012.03.006

- 73. Li R, Han B, Meng B, Maxim PG, Xing L, Koong AC, Diehn M, Loo BW, Jr. Clinical implementation of intrafraction cone beam computed tomography imaging during lung tumor stereotactic ablative radiation therapy. Int J Radiat Oncol Biol Phys 2013;87:917-23. doi:10.1016/j.ijrobp.2013.08.015

- 74. Zhao W, Shen L, Han B, Yang Y, Cheng K, Toesca DAS, Koong AC, Chang DT, Xing L. Markerless Pancreatic Tumor Target Localization Enabled By Deep Learning. Int J Radiat Oncol Biol Phys 2019;105:432-9. doi:10.1016/j.ijrobp.2019.05.071

- 75. Dhont J, Verellen D, Mollaert I, Vanreusel V, Vandemeulebroucke J. RealDRR - Rendering of realistic digitally reconstructed radiographs using locally trained image-to-image translation. Radiother Oncol 2020;153:213-9. doi:10.1016/j.radonc.2020.10.004

- 76. Birkfellner W, Stock M, Figl M, Gendrin C, Hummel J, Dong S, Kettenbach J, Georg D, Bergmann H. Stochastic rank correlation: a robust merit function for 2D/3D registration of image data obtained at different energies. Med Phys 2009;36:3420-8. doi:10.1118/1.3157111

- 77. Gendrin C, Furtado H, Weber C, Bloch C, Figl M, Pawiro SA, Bergmann H, Stock M, Fichtinger G, Georg D, Birkfellner W. Monitoring tumor motion by real time 2D/3D registration during radiotherapy. Radiother Oncol 2012;102:274-80. doi:10.1016/j.radonc.2011.07.031

- 78. Hou B, Alansary A, McDonagh S, Davidson A, Rutherford M, Hajnal JV, Rueckert D, Glocker B, Kainz B. Predicting slice-to-volume transformation in presence of arbitrary subject motion. International Conference on Medical Image Computing and Computer-Assisted Intervention; Springer, 2017.

- 79. Foote MD, Zimmerman BE, Sawant A, Joshi SC. Real-Time 2D-3D Deformable Registration with Deep Learning and Application to Lung Radiotherapy Targeting. In: Chung A, Gee J, Yushkevich P, Bao S. editors. Information Processing in Medical Imaging. IPMI 2019. Lecture Notes in Computer Science, vol 11492. Cham: Springer, 2019.

- 80. Miao S, Piat S, Fischer P, Tuysuzoglu A, Mewes P, Mansi T, Liao R. Dilated FCN for multi-agent 2d/3d medical image registration. Proceedings of the AAAI Conference on Artificial Intelligence, 2018.

- 81. Yang X, Lei Y, Wang T, Liu Y, Tian S, Dong X, Jiang X, Jani A, Curran WJ, Jr, Patel PR, Liu T. CBCT-guided Prostate Adaptive Radiotherapy with CBCT-based Synthetic MRI and CT. Int J Radiat Oncol Biol Phys 2019;105:S250. doi:10.1016/j.ijrobp.2019.06.372

- 82. Jiang Z, Yin FF, Ge Y, Ren L. A multi-scale framework with unsupervised joint training of convolutional neural networks for pulmonary deformable image registration. Phys Med Biol 2020;65:015011. doi:10.1088/1361-6560/ab5da0

- 83. Liang X, Zhao W, Hristov DH, Buyyounouski MK, Hancock SL, Bagshaw H, Zhang Q, Xie Y, Xing L. A deep learning framework for prostate localization in cone beam CT-guided radiotherapy. Med Phys 2020;47:4233-40. doi:10.1002/mp.14355

- 84. Chen L, Liang X, Shen C, Jiang S, Wang J. Synthetic CT generation from CBCT images via deep learning. Med Phys 2020;47:1115-25. doi:10.1002/mp.13978

- 85. Liang X, Chen L, Nguyen D, Zhou Z, Gu X, Yang M, Wang J, Jiang S. Generating synthesized computed tomography (CT) from cone-beam computed tomography (CBCT) using CycleGAN for adaptive radiation therapy. Phys Med Biol 2019;64:125002. doi:10.1088/1361-6560/ab22f9

- 86. Elmahdy MS, Jagt T, Zinkstok RT, Qiao Y, Shahzad R, Sokooti H, Yousefi S, Incrocci L, Marijnen CAM, Hoogeman M, Staring M. Robust contour propagation using deep learning and image registration for online adaptive proton therapy of prostate cancer. Med Phys 2019;46:3329-43. doi:10.1002/mp.13620

- 87. Liang X, Bibault JE, Leroy T, Escande A, Zhao W, Chen Y, Buyyounouski MK, Hancock SL, Bagshaw H, Xing L. Automated contour propagation of the prostate from pCT to CBCT images via deep unsupervised learning. Med Phys 2021;48:1764-70. doi:10.1002/mp.14755

- 88. Dai X, Lei Y, Wang T, Dhabaan AH, McDonald M, Beitler JJ, Curran WJ, Zhou J, Liu T, Yang X. Head-and-neck organs-at-risk auto-delineation using dual pyramid networks for CBCT-guided adaptive radiotherapy. Phys Med Biol 2021;66:045021. doi:10.1088/1361-6560/abd953

- 89. Lei Y, Wang T, Tian S, Dong X, Jani AB, Schuster D, Curran WJ, Patel P, Liu T, Yang X. Male pelvic multi-organ segmentation aided by CBCT-based synthetic MRI. Phys Med Biol 2020;65:035013. doi:10.1088/1361-6560/ab63bb

- 90. Shen L, Zhao W, Xing L. Patient-specific reconstruction of volumetric computed tomography images from a single projection view via deep learning. Nat Biomed Eng 2019;3:880-8. doi:10.1038/s41551-019-0466-4

- 91. Mutic S, Dempsey JF. The ViewRay system: magnetic resonance-guided and controlled radiotherapy. Semin Radiat Oncol 2014;24:196-9. doi:10.1016/j.semradonc.2014.02.008

- 92. Winkel D, Bol GH, Kroon PS, van Asselen B, Hackett SS, Werensteijn-Honingh AM, Intven MPW, Eppinga WSC, Tijssen RHN, Kerkmeijer LGW, de Boer HCJ, Mook S, Meijer GJ, Hes J, Willemsen-Bosman M, de Groot-van Breugel EN, Jürgenliemk-Schulz IM, Raaymakers BW. Adaptive radiotherapy: The Elekta Unity MR-linac concept. Clin Transl Radiat Oncol 2019;18:54-9. doi:10.1016/j.ctro.2019.04.001

- 93. Cerviño LI, Du J, Jiang SB. MRI-guided tumor tracking in lung cancer radiotherapy. Phys Med Biol 2011;56:3773-85. doi:10.1088/0031-9155/56/13/003

- 94. Capaldi DPI, Guo F, Xing L, Parraga G. Pulmonary Ventilation Maps Generated with Free-breathing Proton MRI and a Deep Convolutional Neural Network. Radiology 2021;298:427-38. doi:10.1148/radiol.2020202861

- 95. Terpstra ML, Maspero M, d'Agata F, Stemkens B, Intven MPW, Lagendijk JJW, van den Berg CAT, Tijssen RHN. Deep learning-based image reconstruction and motion estimation from undersampled radial k-space for real-time MRI-guided radiotherapy. Phys Med Biol 2020;65:155015. doi:10.1088/1361-6560/ab9358

- 96. Wu Y, Ma Y, Capaldi DP, Liu J, Zhao W, Du J, Xing L. Incorporating prior knowledge via volumetric deep residual network to optimize the reconstruction of sparsely sampled MRI. Magn Reson Imaging 2020;66:93-103. doi:10.1016/j.mri.2019.03.012

- 97. Mardani M, Gong E, Cheng JY, Vasanawala SS, Zaharchuk G, Xing L, Pauly JM. Deep Generative Adversarial Neural Networks for Compressive Sensing MRI. IEEE Trans Med Imaging 2019;38:167-79. doi:10.1109/TMI.2018.2858752

- 98. Langen KM, Jones DT. Organ motion and its management. Int J Radiat Oncol Biol Phys 2001;50:265-78. doi:10.1016/S0360-3016(01)01453-5

- 99. van Lin EN, van der Vight LP, Witjes JA, Huisman HJ, Leer JW, Visser AG. The effect of an endorectal balloon and off-line correction on the interfraction systematic and random prostate position variations: a comparative study. Int J Radiat Oncol Biol Phys 2005;61:278-88. doi:10.1016/j.ijrobp.2004.09.042

- 100. Keall PJ, Nguyen DT, O'Brien R, Zhang P, Happersett L, Bertholet J, Poulsen PR. Review of Real-Time 3-Dimensional Image Guided Radiation Therapy on Standard-Equipped Cancer Radiation Therapy Systems: Are We at the Tipping Point for the Era of Real-Time Radiation Therapy? Int J Radiat Oncol Biol Phys 2018;102:922-31. doi:10.1016/j.ijrobp.2018.04.016

- 101. Heaven D. Why deep-learning AIs are so easy to fool. Nature 2019;574:163-6. doi:10.1038/d41586-019-03013-5