Abstract

Due to the irregular shapes, varying sizes and indistinguishable boundaries between normal and infected tissues, accurately segmenting COVID-19 infected lesions on CT images remains a challenging task. In this paper, a novel segmentation scheme is proposed for COVID-19 infections that enhances supervised information and fuses multi-scale feature maps of different levels within an encoder-decoder architecture. To this end, a deep collaborative supervision (Co-supervision) scheme is proposed to guide the network to learn edge and semantic features. More specifically, an Edge Supervised Module (ESM) is first designed to highlight low-level boundary features by incorporating edge supervised information into the initial stages of down-sampling. Meanwhile, an Auxiliary Semantic Supervised Module (ASSM) is proposed to strengthen high-level semantic information by integrating mask supervised information into the later stages. Then an Attention Fusion Module (AFM) is developed to fuse multi-scale feature maps of different levels using an attention mechanism, reducing the semantic gaps between high-level and low-level feature maps. Finally, the effectiveness of the proposed scheme is demonstrated on four different COVID-19 CT datasets. The results show that all three proposed modules are promising. Compared with the baseline (ResUNet), using ESM, ASSM or AFM alone increases the Dice score on our dataset by 1.12%, 1.95% and 1.63%, respectively, while integrating all three modules increases it by 3.97%. Compared with existing approaches on various datasets, the proposed method obtains better segmentation performance on several main metrics and achieves the best generalization and overall performance.

Keywords: Semantic segmentation, Multi-scale features, Attention mechanism, Feature fusion, COVID-19

1. Introduction

Since the outbreak of COVID-19 in December 2019, the disease has spread rapidly around the world, causing millions of casualties and enormous economic losses. Rapid diagnosis is of great significance for the assessment and staging of COVID-19 infection [1], [2], [3]. Nucleic acid testing is the “gold standard” for diagnosing COVID-19, but its results are easily affected by the quality of sample collection, and it is also time-consuming. Therefore, imaging methods such as CT and X-ray are still commonly used for diagnosis. In particular, combining artificial intelligence (AI) with medical imaging has been proposed to assist the diagnosis of COVID-19 in clinical practice, and deep learning-based methods have become a hot topic in the detection and segmentation of COVID-19 infected areas. For example, a modified inception neural network was trained on Regions of Interest (RoIs) instead of whole CT images to distinguish COVID-19 patients from a control group [7]. Amyar et al. [5] proposed a multitask deep learning model to jointly identify COVID-19 patients and segment COVID-19 lesions from chest CT images. Oulefki et al. [10] presented an automated tool for segmenting and measuring COVID-19 lung infection in chest CT imagery. Because segmenting the infected lung region is a necessary initial step in lung image analysis, image segmentation algorithms have also been proposed for specific application scenarios. For instance, an improved Inf-Net was proposed to segment the infected areas of the novel coronavirus, together with a semi-supervised training method that addresses the shortage of labeled CT images and improves segmentation performance [6].
Currently, most methods focus on detection and classification, while relatively few address the semantic segmentation of infections on CT slices [4], which greatly limits the assessment and staging of COVID-19 infection. Therefore, it is necessary to develop segmentation methods for COVID-19 infection regions that take CT imaging characteristics into account, so that the lesions can be further analyzed quantitatively.

However, accurately segmenting COVID-19 infected lesions on CT images is still challenging for the following reasons.

-

1.

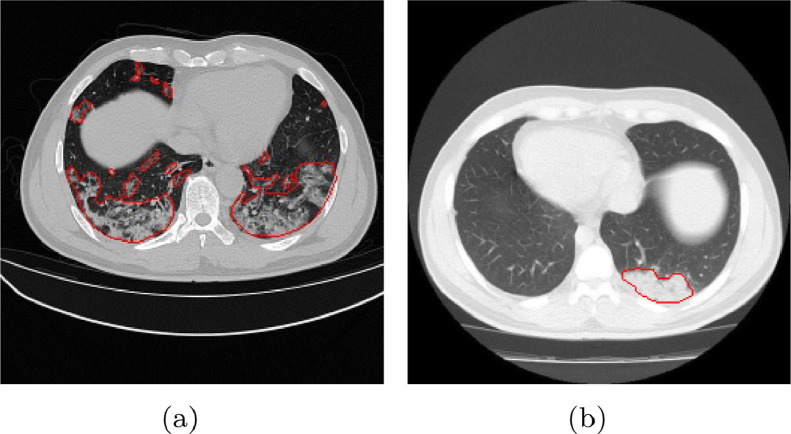

The infections have irregular boundary, different sizes and shapes from slice to slice on CT images (shown in Fig. 1 a). It would easily lead to missing some small ground-glass lesions or generating excessive over-segmentation for the infections on CT images.

-

2.

There seems to be no discernible difference between infections and normal tissues (shown in Fig. 1b). It is unaffected for the detection or classification, but it can decrease segmentation accuracy and quantified quality.

-

3.

The existing semantic segmentation approaches like the encoder-decoder structure exist a “semantic gap” between low-level visual features and high-level semantic concepts, which greatly limits the efficiency of semantic segmentation.

Fig. 1.

An illustration of the challenges in identifying the infected lesions (contours in red) of COVID-19 on CT images. (a) The infections have various scales and shapes. (b) There is no obvious difference between normal and infected tissues. (For interpretation of the references to color in this figure legend, the reader is referred to the web version of this article.)

To address these issues, this paper proposes a novel segmentation scheme for COVID-19 infections based on the encoder-decoder architecture [11], which collaboratively enhances supervised information at different levels and fuses feature maps of different scales. For the proposed deep collaborative supervision scheme, we propose an Edge Supervised Module (ESM) and an Auxiliary Semantic Supervised Module (ASSM) to guide the network to learn edge and semantic features, respectively, in the encoding stage. For the multi-scale feature maps, an Attention Fusion Module (AFM) applied in the decoding stage is proposed to reduce the semantic gaps between high-level and low-level feature maps; this attention fusion strategy takes full advantage of context information at different scales. Finally, a series of experiments is conducted on COVID-19 datasets to verify the effectiveness of the proposed scheme. The results show that our method obtains better segmentation performance for COVID-19 infections than existing approaches. The main contributions of this paper are listed as follows.

• An ESM is put forward to highlight low-level boundary features. Edge supervised information is incorporated into the initial stages of down-sampling, and the proposed edge supervised loss function allows the network to capture rich spatial information at various scales.

• An ASSM is proposed to enhance high-level semantics from feature maps of different scales. Mask supervised information is introduced into the later stages of down-sampling, and a corresponding auxiliary semantic loss function is defined to extract sufficient semantic information from infections of various scales on COVID-19 CT images.

• An AFM is developed to fuse feature maps of various scales from the up-sampling stage. An attention mechanism is used to reduce the semantic gaps between high-level and low-level feature maps, so as to strengthen and supplement the detailed information lost in high-level representations.

• A joint loss function is constructed by combining the edge supervised loss, the auxiliary semantic supervised loss and the fusion loss. It guides the network to achieve deep collaborative supervision of edges and semantics and promotes the efficient fusion of multi-scale feature maps from different levels.

This paper is organized as follows. Section 2 reviews related work. Section 3 describes the proposed method in detail, including the Edge Supervised Module (ESM), the Auxiliary Semantic Supervised Module (ASSM) and the Attention Fusion Module (AFM). Section 4 presents the experiments, results and discussion, and Section 5 concludes this work.

2. Related works

In this section, we provide a short review of previous studies on network models, edge supervision, multi-scale object recognition, and attention mechanism.

2.1. Network models

Deep network models are hierarchical feature learning methods that learn multiple levels of representation to model complex relationships in data; higher-level features and concepts are defined in terms of lower-level ones, and such a hierarchy of features is called a deep architecture [12]. Usually, the first layers learn low-level features such as intensity, color, lines, dots and curves, and the layers closer to the output learn high-level features such as objects and shapes. For example, from AlexNet [13] and VGG [14] to ResNet [15], feature extraction has become increasingly powerful as networks have grown deeper. Accordingly, deeper networks provide powerful feature extraction for semantic segmentation tasks and can greatly improve segmentation accuracy.

Since FCN [16] was proposed, other semantic segmentation networks have attempted to improve this architecture by adding new modules that address the lack of spatial and contextual information. For example, U-Net [11] greatly improves on FCN mainly by adding skip connections. PSPNet [17] employs a pyramid pooling module to exploit global context information, which improves segmentation accuracy for targets at different scales. DeepLabV3+ [18] combines the advantages of the Spatial Pyramid Pooling (SPP) module and the encoder-decoder structure; it further explores the Xception model and applies depthwise separable convolution to both the Atrous Spatial Pyramid Pooling (ASPP) and decoder modules. PSANet [19] captures pixel-level relationships and relative position information in the spatial dimension through convolution layers. In addition, EncNet [21] introduces a channel attention mechanism to capture global context.

Although many advanced network structures have emerged for semantic segmentation, U-Net and its derivatives are still the most popular architectures and have been widely applied in the medical imaging community [27], [37]. However, despite their outstanding overall performance in medical image segmentation, U-Net-based architectures are lacking in certain aspects. For example, although high-level feature maps can be refined by concatenating low-level and high-level feature maps through skip connections, it remains very difficult to reduce the semantic gap between low-level visual features and high-level semantic features. Thus, in this work we select ResUNet as the backbone and attempt to develop a novel segmentation architecture for the COVID-19 segmentation task.

2.2. Edge supervision and multi-scale object recognition

Edge information, as an important image feature, is drawing increasing attention in the deep learning community because it is conducive to extracting object contours in segmentation tasks. For example, Fan et al. [6] use explicit edge attention to model boundaries and enhance representations. Wu et al. [22] proposed a novel edge-aware salient object detection method that passes messages between the two tasks in both directions and refines multi-level edge and segmentation features. ET-Net [23] integrates edge detection and object segmentation into one deep learning network and embeds an edge attention representation to supervise the segmentation prediction. In general, edge information can provide useful fine-grained constraints to guide feature extraction in semantic segmentation tasks. However, high-level feature maps contain little edge information, whereas low-level layers contain richer object boundaries.

For the multi-scale object recognition problem, a common practice in computer vision is to exploit multiple levels of coarse and fine-grained semantic features by adopting different network structures. For example, convolution and pooling operations applied to the original image yield feature maps of different sizes, which is similar to constructing a pyramid in the feature space of the image. Feature Pyramid Networks (FPN) [24] is one of the most typical examples: it adopts a top-down architecture with lateral connections to build high-level semantic feature maps at all scales. It has demonstrated significant improvements as a generic feature extractor in detection tasks and has been widely applied in different detection architectures, such as Faster R-CNN [25] and Mask R-CNN [26].

It is widely known that low-level feature maps focus on detailed information, while high-level feature maps focus on semantic information. More specifically, the encoding path is mainly used for feature extraction, and its features are organized hierarchically: as down-sampling deepens, spatial resolution decreases while semantics are strengthened. Notably, FPN [24] and U-Net [11] both adopt an encoder-decoder architecture, but they are applied to object detection and semantic segmentation, respectively. The main difference is that FPN [24] has multiple prediction layers for features of various scales. Inspired by this, we attempt to exploit sufficient multi-scale context information from different levels of the encoder in this work. Low-level detailed feature maps carry rich spatial information and can strengthen the boundaries of the infected regions, while high-level semantic feature maps carry position information and can locate the infected regions.

2.3. Attention mechanism

An attention mechanism emphasizes the features that need attention according to the context of the feature maps. Normally, it is used to highlight important context channel-wise or spatially [7], [8], while suppressing irrelevant context. For example, Fu et al. [28] proposed the Dual Attention Network (DAN), in which two attention modules are introduced: one captures the spatial dependence between any two positions in the feature maps, and a similar self-attention mechanism captures the channel dependence between any two channels, updating each channel with a weighted sum of all channels. Huang et al. [29] proposed the Criss-Cross Network (CCNet) to capture such contextual information more efficiently: for each pixel, a criss-cross attention module gathers the context information on its criss-cross path. Non-local operations, proposed by Wang et al. [30], directly capture long-range dependencies by computing the interaction between any two positions. Besides, attention mechanisms are also used to aggregate features of different levels to bridge the semantic gaps between low-level features and high-level semantics. For example, Li et al. [31] proposed Gated Fully Fusion (GFF) to fully fuse multi-level feature maps controlled by learned gate maps, bridging the gap between high-resolution, low-semantic features and low-resolution, high-semantic features. Inspired by this, we adopt an attention mechanism to fuse feature maps of various levels; the proposed AFM reduces the semantic gaps between high-level and low-level feature maps, so as to strengthen and supplement the detailed information missing from high-level representations.

3. Methods

In this section, we first present the proposed network architecture and then describe the three proposed modules, ESM, ASSM and AFM, in detail.

3.1. Proposed network architecture

As mentioned above, U-Net [11] and FPN [24] share a similar encoder-decoder structure for multi-scale vision tasks, consisting of a contracting path that captures context and a symmetric expanding path that enables precise localization. U-Net [11] creates an information propagation path that allows signals to propagate between low and high levels by copying low-level features to the corresponding high levels. Although U-Net and its variants achieve good segmentation performance, edge information decreases and the number of channels increases along the down-sampling of the contracting path. Both effects can cause useful information to be lost, so that sufficient information cannot be exploited from all scales and segmentation performance degrades. FPN [24] overcomes these drawbacks and retains multi-scale contextual information by using multiple prediction layers, one for each up-sampling layer. Based on this idea, we propose a novel segmentation scheme for COVID-19 infections.

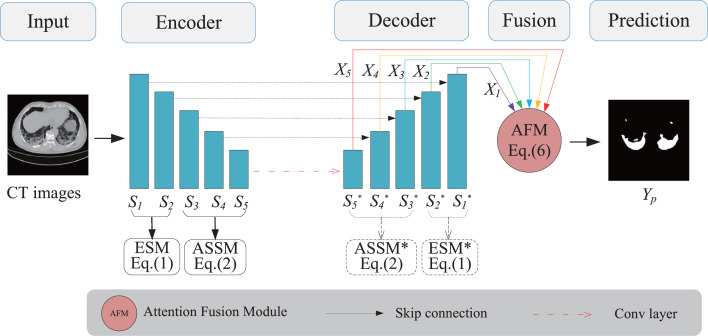

Fig. 2 illustrates the proposed network architecture. First, we collaboratively enhance the supervised information by introducing edge and semantic information into the encoding stage. Note that the initial stages are used for edge supervision and the later stages for semantic supervision; together they cover the whole down-sampling path, i.e., the number of low-level stages plus the number of high-level stages equals the total number of encoder stages. Low-level feature maps from shallow layers have high resolution but limited semantics, whereas high-level feature maps from deep layers have low spatial resolution and lack detailed information such as object boundaries. When choosing which levels receive which type of supervision, there is therefore a trade-off between edge supervision and semantic supervision, and we call this scheme “collaborative supervision” (“Co-supervision”). We then fuse multi-scale feature maps of different levels from the decoding stage of the encoder-decoder framework (as in U-Net). Since low-level detailed feature maps have high resolution and capture rich spatial information such as object boundaries, we design an ESM to highlight low-level boundary features by incorporating edge supervised information into the initial stages of down-sampling in the encoder (see Fig. 2). Since high-level semantic feature maps embody position information such as object concepts, we present an ASSM to strengthen high-level semantic information by integrating object mask supervised information into the later stages (see Fig. 2). Finally, the feature maps of various scales obtained in the up-sampling stage are fused by an attention mechanism to achieve good segmentation performance for COVID-19 infections.

Fig. 2.

An illustration of the overall network architecture. The proposed architecture comprises ESM, ASSM and AFM built on an encoder-decoder structure. (1) ESM further highlights the low-level features in the initial shallow layers of the encoder and captures more detailed information such as object boundaries. (2) ASSM strengthens high-level semantic information by integrating object mask supervised information into the later stages of the encoder. (3) Finally, AFM fuses multi-scale feature maps of different levels in the decoder.

3.2. Edge supervised module (ESM)

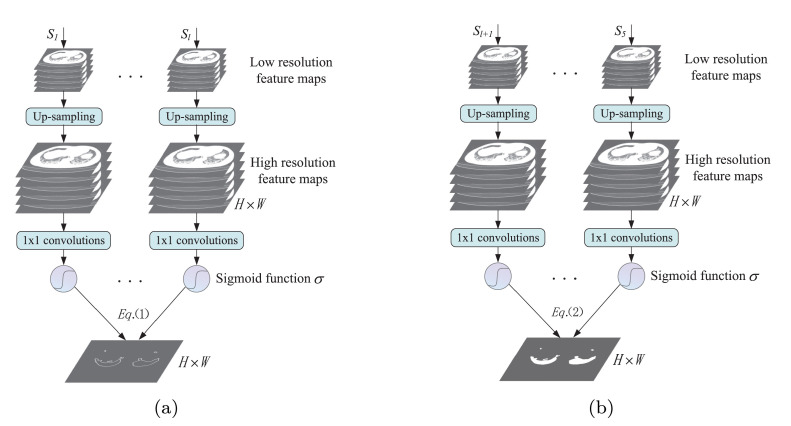

Many studies [22], [23] show that edge information can provide effective constraints on feature extraction in segmentation tasks. To supplement the edge information lost during down-sampling, we propose ESM to further highlight object boundary features in the low-level layers. Low-level feature maps from shallow layers have high resolution and contain detailed information (including edge information), and this detailed information is easily lost in the initial stages of down-sampling; ESM is designed to capture more of it, such as object boundaries. Specifically, we guide the network to extract edge features from the initial stages (see Fig. 2) by defining an edge supervised loss function. To this end, the output feature maps of each initial stage are first resized to the size of the original image by bilinear interpolation up-sampling. The resulting large feature maps of each stage in ESM are then reduced to a single feature map by a convolution operation. Finally, each pixel value is converted to a probability by the Sigmoid function (Fig. 3a), yielding an edge prediction image of the same size as the input image. Accordingly, the edge supervised loss function is defined based on the Dice coefficient as follows.

$$\mathcal{L}_{edge} = \sum_{i=1}^{N_e} \alpha_i \left(1 - \frac{2\,|P^{e}_{i} \cap G^{e}|}{|P^{e}_{i}| + |G^{e}|}\right) \qquad (1)$$

where $P^{e}_{i}$ is the edge prediction image obtained by bilinear interpolation up-sampling in the $i$-th stage, $G^{e}$ is the corresponding Ground Truth (GT) edge image, generated from the segmentation mask, $N_e$ is the number of stages used for edge supervision in the ESM, and $\alpha_i$ ($i = 1, \dots, N_e$) is the weight coefficient of the $i$-th stage. Through skip connections and AFM, the edge features in the high-level feature maps can also be strengthened.
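The paper states only that the edge GT is generated from the segmentation mask and that Eq. (1) is a weighted, Dice-based loss over the edge-supervised stages. As a hedged sketch, the snippet below assumes the edge is the inner boundary of the mask (foreground pixels with at least one 4-connected background neighbour) and uses a soft Dice loss with a small smoothing constant; `edge_from_mask`, `dice_loss`, `edge_supervised_loss`, `eps` and the example weights are illustrative assumptions, not the authors' code.

```python
import numpy as np


def edge_from_mask(mask):
    """Assumed edge GT: foreground pixels that touch the background (4-connectivity)."""
    m = mask.astype(bool)
    p = np.pad(m, 1, mode="constant", constant_values=False)
    # A pixel is interior if it and its up/down/left/right neighbours are all foreground.
    interior = m & p[:-2, 1:-1] & p[2:, 1:-1] & p[1:-1, :-2] & p[1:-1, 2:]
    return (m & ~interior).astype(np.float64)


def dice_loss(pred, gt, eps=1e-6):
    """Soft Dice loss 1 - 2|P∩G| / (|P| + |G|) on probability maps."""
    inter = np.sum(pred * gt)
    return 1.0 - (2.0 * inter + eps) / (np.sum(pred) + np.sum(gt) + eps)


def edge_supervised_loss(edge_preds, edge_gt, alphas):
    """Weighted sum of per-stage Dice losses, one term per edge-supervised stage."""
    return sum(a * dice_loss(p, edge_gt) for a, p in zip(alphas, edge_preds))


mask = np.zeros((5, 5))
mask[1:4, 1:4] = 1                       # a 3x3 lesion
edge = edge_from_mask(mask)              # ring of 8 boundary pixels
# Stage 1 predicts the edge perfectly, stage 2 predicts nothing.
loss = edge_supervised_loss([edge, np.zeros_like(edge)], edge, alphas=[0.5, 0.5])
```

With equal weights, a perfect stage contributes zero and an empty stage contributes almost its full weight, so the weights let earlier or later edge stages be emphasized.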

Fig. 3.

An illustration of ESM and ASSM. First, the low-resolution feature maps of each stage are resized to the size of the input image by bilinear interpolation up-sampling. Then all high-resolution feature maps are reduced to a single feature map by convolution. Finally, each pixel value of the resulting feature map is converted to a probability by the Sigmoid function, giving the prediction image of that stage. (a) ESM: edge supervision is achieved by comparing the edge prediction image with the corresponding edge Ground Truth (GT) based on Eq. (1). (b) ASSM: auxiliary semantic supervision is achieved by comparing the coarse segmented image with the corresponding GT segmentation mask based on Eq. (2).
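The side-output head shared by ESM and ASSM (bilinear up-sampling, channel reduction, Sigmoid) can be sketched in NumPy as follows. This is a minimal sketch under our own assumptions: the channel reduction is taken to be a 1×1 convolution (a weighted sum over channels plus a bias), interpolation uses align-corners sampling, and `bilinear_upsample` and `side_output` are hypothetical names. In PyTorch the same steps would typically use `torch.nn.functional.interpolate(..., mode="bilinear")`, an `nn.Conv2d(C, 1, kernel_size=1)` and `torch.sigmoid`.

```python
import numpy as np


def bilinear_upsample(x, out_h, out_w):
    """Bilinearly resize a (C, h, w) array to (C, out_h, out_w), align-corners style."""
    _, h, w = x.shape
    ys = np.linspace(0, h - 1, out_h)
    xs = np.linspace(0, w - 1, out_w)
    y0 = np.floor(ys).astype(int)
    x0 = np.floor(xs).astype(int)
    y1 = np.minimum(y0 + 1, h - 1)
    x1 = np.minimum(x0 + 1, w - 1)
    wy = (ys - y0)[None, :, None]  # vertical interpolation weights
    wx = (xs - x0)[None, None, :]  # horizontal interpolation weights
    top = x[:, y0][:, :, x0] * (1 - wx) + x[:, y0][:, :, x1] * wx
    bottom = x[:, y1][:, :, x0] * (1 - wx) + x[:, y1][:, :, x1] * wx
    return top * (1 - wy) + bottom * wy


def side_output(feat, weight, bias, out_size):
    """Hypothetical side-output head: up-sample, 1x1 conv to one channel, Sigmoid."""
    up = bilinear_upsample(feat, *out_size)                    # (C, H, W)
    logits = np.tensordot(weight, up, axes=([0], [0])) + bias  # (H, W)
    return 1.0 / (1.0 + np.exp(-logits))                       # per-pixel probability


# Usage: a 64-channel 32x32 stage output mapped to a 128x128 probability map.
rng = np.random.default_rng(0)
feat = rng.standard_normal((64, 32, 32))
edge_prob = side_output(feat, 0.1 * rng.standard_normal(64), 0.0, (128, 128))
```

The same head yields an edge map for ESM or a coarse mask for ASSM; only the supervision target differs.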

3.3. Auxiliary semantic supervised module (ASSM)

For multi-scale object segmentation, multi-level loss functions are used to build receptive fields of different sizes for different layers of the network. For example, FPN [24] uses multi-level auxiliary losses to detect objects at different scales, which was a great breakthrough in multi-scale object detection. Inspired by this, we develop an ASSM based on a similar strategy. Specifically, semantic information is gradually strengthened along the down-sampling process in the encoder, and the high-level feature maps have rich semantics but low spatial resolution without detailed information. Different layers contain semantic features of different levels according to the feature hierarchy of the contracting path. We therefore define an auxiliary semantic loss function in the later stages of the encoder (see Fig. 2) to reduce the semantic gaps between high-level and low-level feature maps. Eventually, the semantics of low-level features can be strengthened through multi-scale skip connections and AFM, which also reduces the background noise in the low-level feature maps.

Similar to the steps in ESM, a coarse segmented image of the same size as the input image, giving the probability of each pixel, is obtained through bilinear interpolation, convolution and the Sigmoid function (Fig. 3b). The auxiliary semantic loss function is then defined based on the Dice coefficient as follows.

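$$\mathcal{L}_{aux} = \sum_{j=1}^{N_s} \beta_j \left(1 - \frac{2\,|P^{s}_{j} \cap G|}{|P^{s}_{j}| + |G|}\right) \qquad (2)$$

where $P^{s}_{j}$ and $G$ are the coarse segmented image obtained in the $j$-th semantically supervised stage of the encoder and the GT segmentation mask, respectively, $N_s$ is the number of later encoder stages used for auxiliary semantic supervision, and $\beta_j$ ($j = 1, \dots, N_s$) is the weight coefficient of the $j$-th stage.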
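Because the edge-supervised and semantically supervised stages together cover the whole encoder, both losses can be computed from a single list of per-stage side outputs split at a boundary index. The sketch below is our own illustration of that Co-supervision split: `co_supervision_losses`, `n_edge` and the uniform default weights are hypothetical, and each side output is assumed to already be a full-resolution probability map produced as in Fig. 3.

```python
import numpy as np


def dice_loss(pred, gt, eps=1e-6):
    """Soft Dice loss 1 - 2|P∩G| / (|P| + |G|)."""
    inter = np.sum(pred * gt)
    return 1.0 - (2.0 * inter + eps) / (np.sum(pred) + np.sum(gt) + eps)


def co_supervision_losses(side_preds, n_edge, edge_gt, mask_gt, alphas=None, betas=None):
    """Split encoder side outputs into edge-supervised (early) and semantic (late) stages."""
    edge_preds, sem_preds = side_preds[:n_edge], side_preds[n_edge:]
    if alphas is None:  # hypothetical default: uniform stage weights
        alphas = [1.0 / max(len(edge_preds), 1)] * len(edge_preds)
    if betas is None:
        betas = [1.0 / max(len(sem_preds), 1)] * len(sem_preds)
    l_edge = sum(a * dice_loss(p, edge_gt) for a, p in zip(alphas, edge_preds))
    l_aux = sum(b * dice_loss(p, mask_gt) for b, p in zip(betas, sem_preds))
    return l_edge, l_aux


# Example: a 5-stage encoder, stages 1-2 edge-supervised, stages 3-5 mask-supervised.
mask_gt = np.zeros((8, 8))
mask_gt[2:6, 2:6] = 1
edge_gt = mask_gt.copy()
edge_gt[3:5, 3:5] = 0                       # boundary ring of the 4x4 lesion
side_preds = [edge_gt, edge_gt, mask_gt, mask_gt, np.full((8, 8), 0.5)]
l_edge, l_aux = co_supervision_losses(side_preds, n_edge=2, edge_gt=edge_gt, mask_gt=mask_gt)
```

Moving `n_edge` up or down is one way to explore the trade-off between edge and semantic supervision described in Section 3.1.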

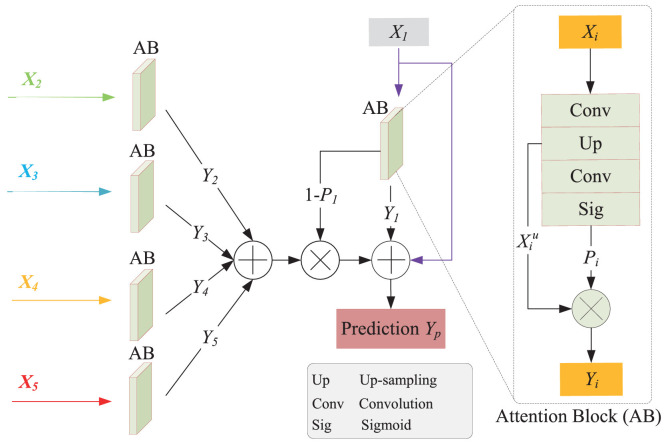

3.4. Attention fusion module (AFM)

As mentioned above, high-level features are very effective in semantic segmentation tasks. However, high-level feature maps easily lead to inferior results for small or thin objects, because convolution and pooling operations discard detailed information and leave the high-level feature maps with coarse resolution. To compensate for the detailed information lost in high-level representations, low-level features need to be introduced. However, full-scale skip connections can only combine low-level details and high-level semantics from feature maps of different scales at the same level, and the semantic gaps among different levels hamper the effectiveness of semantic segmentation. We therefore propose the AFM to fuse multi-scale feature maps of different levels with an attention mechanism, strengthening and supplementing the detailed information lost in high-level representations.

Gated Fully Fusion (GFF) [31] selectively fuses features from multiple levels using gates in a fully connected way, weighting each spatial position by means of skip connections. Inspired by this idea, an attention mechanism is incorporated into the AFM to aggregate features of different levels, aiming to reduce the semantic gaps between low-level and high-level features. The corresponding attention mechanism is illustrated in Fig. 4. In the standard U-Net, the segmentation map is obtained directly from the top feature map $X_{top} \in \mathbb{R}^{C \times H \times W}$ of the expansive path, where $C$, $H$ and $W$ are the number of channels, the height and the width, respectively. $X_{top}$ has high spatial resolution because the output must have the same resolution as the input image, but the multiple down-sampling and up-sampling operations of the deep network introduce errors in, and loss of, detailed information. Therefore, in addition to strengthening the top feature map $X_{top}$, we aggregate the feature maps of the other decoder levels to supplement the detailed information lost through filtering and pooling operations.

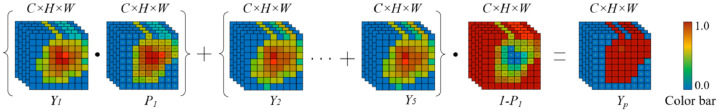

Fig. 4.

An illustration of the attention mechanism. $\hat{X}_i$ denotes the intermediate result of up-sampling the feature map $X_i$ by bilinear interpolation; its 2D size equals that of the input image.

More precisely, the attention block (AB) applied to the top feature map $X_{top}$ yields a confidence map $C_{top}$ with values in $[0, 1]$. Points with high confidence are more likely to retain their original feature values, and vice versa. Correspondingly, the lost detailed information is represented by the complementary map $1 - C_{top}$, in which a higher value means that less object information is retained. Thus, we strengthen the top feature map through the element-wise (dot) product of $C_{top}$ and $X_{top}$, and supplement the lost detailed information through the element-wise product of $1 - C_{top}$ and the sum of the other feature maps. The procedure of the attention block is illustrated in Fig. 5, and the final prediction result is defined as follows.

$$Y = C_{top} \odot X_{top} + (1 - C_{top}) \odot \sum_{i \neq top} Z_i \qquad (3)$$

where $Z_i$ is the output of the attention block applied to the corresponding feature map $X_i$. Each $X_i$ is first up-sampled to the size of the input image by bilinear interpolation, and $Z_i$ is then obtained by processing the up-sampled intermediate result $\hat{X}_i$ with the attention block:

$$Z_i = \mathcal{A}(\hat{X}_i) \qquad (4)$$

where $\mathcal{A}(\cdot)$ is the attention function.
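Following the description of Eq. (3), in which the top feature map is weighted by its own confidence map and the low-confidence regions are filled in with the sum of the other levels' attention outputs, the fusion step can be sketched as below. The sketch is hypothetical: `afm_fuse` is our name, the top-level confidence map is assumed to be a single-channel map in [0, 1] broadcast over channels, and the other levels' outputs are assumed to already share the size and channel count of the top feature map.

```python
import numpy as np


def afm_fuse(x_top, c_top, others):
    """Fuse decoder levels as C * X_top + (1 - C) * sum(Z_i).

    x_top:  (C, H, W) top decoder feature map
    c_top:  (H, W) confidence map of the top level, values in [0, 1]
    others: list of (C, H, W) attention outputs of the other levels,
            already up-sampled to H x W (assumed channel count equal to x_top)
    """
    c = c_top[None, :, :]                          # broadcast over channels
    supplement = np.sum(np.stack(others), axis=0)  # sum of the other levels
    return c * x_top + (1.0 - c) * supplement


x_top = np.ones((2, 4, 4))
c_top = np.zeros((4, 4))
c_top[:, :2] = 1.0                 # left half trusted, right half not
others = [np.full((2, 4, 4), 0.25), np.full((2, 4, 4), 0.5)]
y = afm_fuse(x_top, c_top, others)
```

Where the top level is confident, its features pass through unchanged; where it is not, the output comes entirely from the supplementary levels.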

Fig. 5.

The procedure of the attention block. The color bar shows the confidence values, with red and blue denoting 1 and 0, respectively. (For interpretation of the references to color in this figure legend, the reader is referred to the web version of this article.)

The specific process is as follows.

1. Each up-sampled feature map is processed by an attention block.

2. A convolution reduces the number of channels to 64, giving the feature maps of that level.

3. The resolution is then resized to that of the input image by bilinear interpolation.

4. After a further convolution and the Sigmoid function σ, a confidence map is obtained, and the confidence output of the level is computed as the element-wise (dot) product of this map and the feature maps. Note that the top feature map is taken as the main prediction, while the confidence outputs of the other levels only supplement it. Where the confidence of the top level is low, the lost information is compensated by the element-wise product of the complementary map (one minus the top-level confidence) and the sum of the confidence outputs of the other levels.

5. Finally, the final prediction result is obtained by summing these terms, as in Eq. (3). The specific process is shown in Algorithm 1.

Algorithm 1.

Fusion algorithm.
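The attention-block steps listed above can be sketched for a single decoder level as follows. This is a minimal NumPy illustration, not the authors' Algorithm 1: both convolutions are assumed to be 1×1 (weight matrices over channels), the reduced channel count of 64 follows step 2, resizing uses an align-corners bilinear helper, and `attention_block` and its parameter names are hypothetical.

```python
import numpy as np


def resize_bilinear(x, out_h, out_w):
    """Align-corners bilinear resize of a (C, h, w) array."""
    _, h, w = x.shape
    ys, xs = np.linspace(0, h - 1, out_h), np.linspace(0, w - 1, out_w)
    y0, x0 = np.floor(ys).astype(int), np.floor(xs).astype(int)
    y1, x1 = np.minimum(y0 + 1, h - 1), np.minimum(x0 + 1, w - 1)
    wy, wx = (ys - y0)[None, :, None], (xs - x0)[None, None, :]
    top = x[:, y0][:, :, x0] * (1 - wx) + x[:, y0][:, :, x1] * wx
    bottom = x[:, y1][:, :, x0] * (1 - wx) + x[:, y1][:, :, x1] * wx
    return top * (1 - wy) + bottom * wy


def attention_block(x, w_reduce, w_conf, b_conf, out_size):
    """Hypothetical attention block for one decoder level.

    x:        (C_in, h, w) decoder feature map
    w_reduce: (64, C_in) 1x1 conv weights reducing channels to 64 (step 2)
    w_conf:   (64,) 1x1 conv weights producing one confidence channel (step 4)
    """
    f = np.tensordot(w_reduce, x, axes=([1], [0]))          # step 2: (64, h, w)
    f = resize_bilinear(f, *out_size)                       # step 3: (64, H, W)
    logits = np.tensordot(w_conf, f, axes=([0], [0])) + b_conf
    conf = 1.0 / (1.0 + np.exp(-logits))                    # step 4: (H, W) in (0, 1)
    return conf[None] * f, conf                             # confidence output, map


rng = np.random.default_rng(1)
x = rng.standard_normal((128, 16, 16))
z, conf = attention_block(x, 0.05 * rng.standard_normal((64, 128)),
                          0.05 * rng.standard_normal(64), 0.0, (64, 64))
```

The returned map plays the role of the level's confidence, and the product with the reduced features is the confidence output that is summed in the fusion step.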

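The loss function for fusion is defined as follows:

$$\mathcal{L}_{fuse} = 1 - \frac{2\,|Y \cap G|}{|Y| + |G|} \qquad (5)$$

where $Y$ is the fused prediction given by Eq. (3) and $G$ represents the COVID-19 ground truth. The overall loss function is

$$\mathcal{L} = \mathcal{L}_{fuse} + \lambda_1 \mathcal{L}_{edge} + \lambda_2 \mathcal{L}_{aux} \qquad (6)$$

where $\mathcal{L}_{edge}$ and $\mathcal{L}_{aux}$ are the edge supervised loss of Eq. (1) and the auxiliary semantic loss of Eq. (2), and $\lambda_1$ and $\lambda_2$ are weight coefficients.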

Because negative (background) pixels greatly outnumber infected pixels in this class-imbalanced setting, the cross-entropy loss tends to be dominated by the background. Therefore, we use the Dice loss to supervise the predictions against the labels in our experiments. To achieve deep fusion and supervision of features at different levels, the overall loss function integrates ESM, ASSM and AFM, as given in Eq. (6).
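To illustrate the imbalance argument, the toy example below (our own, with made-up numbers) compares binary cross-entropy and Dice loss for a prediction that assigns a uniformly low probability to every pixel of a slice in which infection covers only 1% of the pixels. The cross-entropy stays small because background pixels dominate the average, whereas the Dice loss stays close to 1. `total_loss` assumes, as one plausible reading of Eq. (6), that the fusion loss is unweighted and the edge and auxiliary losses are scaled by the two weight coefficients; the default weights are placeholders, not the paper's values.

```python
import numpy as np


def bce(pred, gt, eps=1e-7):
    """Mean binary cross-entropy over all pixels."""
    p = np.clip(pred, eps, 1 - eps)
    return float(np.mean(-(gt * np.log(p) + (1 - gt) * np.log(1 - p))))


def dice_loss(pred, gt, eps=1e-6):
    """Soft Dice loss 1 - 2|P∩G| / (|P| + |G|)."""
    inter = np.sum(pred * gt)
    return float(1.0 - (2.0 * inter + eps) / (np.sum(pred) + np.sum(gt) + eps))


def total_loss(l_fuse, l_edge, l_aux, lambda1=1.0, lambda2=1.0):
    """Assumed form of Eq. (6): fusion loss plus weighted edge and auxiliary losses."""
    return l_fuse + lambda1 * l_edge + lambda2 * l_aux


gt = np.zeros((100, 100))
gt[:10, :10] = 1                      # infection covers 1% of the slice
pred = np.full_like(gt, 0.01)         # near "all background" prediction
print(bce(pred, gt), dice_loss(pred, gt))
```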

4. Experiments

4.1. Datasets and baselines

We collect the COVID-19 segmentation dataset from two sources. One is from [32] and includes more than 900 CT images, about 400 of which contain infections. The other is from [33] and contains 3D CT images of 20 patients, from which 3686 images are obtained by converting the 3D volumes into 2D slices. Because both datasets are small, the two sources are combined into a total of 4449 2D slices, of which 4000 are used for training and 449 for testing. The GT contains four categories, representing background, ground glass, consolidation and pleural effusion, respectively. Owing to the imbalance of infection categories in the dataset (for example, only a few slices contain pleural effusion), we merge all types of infection into one class. Considering the limitation of GPU memory, we resize the images by bilinear interpolation, and Z-score normalization is then applied. In addition, to further verify the effectiveness and generalization ability of the proposed method, we select three additional public COVID-19 datasets for testing and comparison: MosMedData [42], UESTC-COVID-19 [41] and COVID-ChestCT [43]. MosMedData contains 100 axial CT images from more than 40 patients with COVID-19, comprising 829 slices (see Morozov et al. [42] for details). UESTC-COVID-19 contains CT scans (3D volumes) of 50 patients diagnosed with COVID-19 from 10 different hospitals (see Wang et al. [41] for details). COVID-ChestCT is a small dataset containing 20 CT scans of patients diagnosed with COVID-19, together with segmentations of lungs and infections made by experts (see Cohen et al. [43] for details).
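The preprocessing described above (slicing 3D volumes into 2D images, bilinear resizing and Z-score normalization) can be sketched as follows. The target resolution is not given in this text, so `target=(256, 256)` is a hypothetical placeholder; per-slice normalization is also our assumption (statistics could instead be computed per volume or over the training set), and `volume_to_slices` and `preprocess` are illustrative names.

```python
import numpy as np


def resize_bilinear_2d(img, out_h, out_w):
    """Align-corners bilinear resize of a 2D array."""
    h, w = img.shape
    ys, xs = np.linspace(0, h - 1, out_h), np.linspace(0, w - 1, out_w)
    y0, x0 = np.floor(ys).astype(int), np.floor(xs).astype(int)
    y1, x1 = np.minimum(y0 + 1, h - 1), np.minimum(x0 + 1, w - 1)
    wy, wx = (ys - y0)[:, None], (xs - x0)[None, :]
    top = img[y0][:, x0] * (1 - wx) + img[y0][:, x1] * wx
    bottom = img[y1][:, x0] * (1 - wx) + img[y1][:, x1] * wx
    return top * (1 - wy) + bottom * wy


def zscore(img, eps=1e-8):
    """Z-score normalization: zero mean, unit standard deviation."""
    return (img - img.mean()) / (img.std() + eps)


def volume_to_slices(volume):
    """Split a (D, H, W) CT volume into D axial 2D slices."""
    return [volume[k] for k in range(volume.shape[0])]


def preprocess(slice_2d, target=(256, 256)):  # target size is a placeholder
    return zscore(resize_bilinear_2d(slice_2d.astype(np.float64), *target))


rng = np.random.default_rng(0)
volume = rng.normal(-500.0, 300.0, size=(4, 512, 512))  # synthetic HU-like values
slices = [preprocess(s) for s in volume_to_slices(volume)]
```

Label masks would normally be resized with nearest-neighbour interpolation instead, so that class labels are not blended.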

We select ResUNet as the backbone of the proposed network, in which the down-sampling path of U-Net is replaced with ResNet. To verify the effectiveness of the proposed scheme, we compare it with popular medical image segmentation models, namely U-Net [11], UNet++ [9] and Attention U-Net [34], and with two cutting-edge semantic segmentation models, DeepLabV3+ [18] and PSPNet [17].

4.2. Evaluation metrics and experimental settings

We adopt three metrics to evaluate our method: the Dice similarity coefficient, Sensitivity (Sens.) and Precision (Prec.). In addition, we introduce three widely used metrics from the object detection field to evaluate detection and segmentation performance: Structure Measure [35], Enhanced-alignment Measure [36] and Mean Absolute Error. In our evaluation, we take the fused output $Y$ as the final prediction and measure the similarity/dissimilarity between $Y$ and the ground truth $G$ as follows.

-

•Dice similarity coefficient: it is used to measure the proportion of intersection between and , which is defined as follows.

(7) -

•Structure Measure ($S_\alpha$): it measures the structural similarity between the prediction $S_p$ and the ground truth $G$. It is more consistent with the human visual system and is defined as

$$S_\alpha = \alpha \, S_o + (1 - \alpha) \, S_r \tag{8}$$

where $S_o$ and $S_r$ are the object-aware and region-aware similarities, respectively, and $\alpha$ is a balance factor between $S_o$ and $S_r$. We report $S_\alpha$ using the default setting ($\alpha = 0.5$) suggested in the original paper.

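•Sensitivity (Sens.): it measures the proportion of actual infection pixels that are correctly predicted as infection, that is, the probability of not missing an infected region. It is given by

$$\mathrm{Sens.} = \frac{TP}{TP + FN} \tag{9}$$

where $TP$ and $FN$ denote the numbers of true-positive and false-negative pixels, respectively.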

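•Precision (Prec.): it measures the proportion of pixels predicted as infection that are actually infected, that is, the probability that a predicted infection is not a false alarm. It is given by

$$\mathrm{Prec.} = \frac{TP}{TP + FP} \tag{10}$$

where $TP$ and $FP$ denote the numbers of true-positive and false-positive pixels, respectively.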

•Enhanced-alignment Measure ($E_\phi$): it is a recently proposed metric that evaluates both local and global similarity between two binary maps. It is given by

$$E_\phi = \frac{1}{w \times h} \sum_{x=1}^{w} \sum_{y=1}^{h} \phi(x, y) \tag{11}$$

where $w$ and $h$ are the width and height of the ground truth $G$, $(x, y)$ denotes the coordinate of each pixel in $G$, and $\phi$ is the enhanced alignment matrix. We obtain a set of $E_\phi$ values by converting the prediction $S_p$ into a binary mask with thresholds from 0 to 255. In our experiments, we report the mean $E_\phi$ over all thresholds.

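•Mean Absolute Error (MAE): it measures the pixel-wise error between the prediction $S_p$ and the ground truth $G$, and is defined as

$$\mathrm{MAE} = \frac{1}{w \times h} \sum_{x=1}^{w} \sum_{y=1}^{h} \left| S_p(x, y) - G(x, y) \right| \tag{12}$$

where $w$ and $h$ are the width and height of $G$.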
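The sketch below implements the pixel-level metrics of Eqs. (7) and (9) to (12) in plain Python for a single 2D slice. It is illustrative only and rests on several assumptions:

- The prediction holds probabilities in [0, 1] and the ground truth holds 0/1 labels.
- Dice, Sens. and Prec. are computed after thresholding the prediction at 0.5. This threshold is an assumption.
- The E-measure follows the alignment and enhanced-alignment formulation of Fan et al. [36], including its special handling of all-background and all-foreground ground truths.

The S-measure is omitted because of its length. All function names are hypothetical.

```python
def _flatten(m):
    """Flatten a 2D list into a 1D list."""
    return [v for row in m for v in row]


def _confusion(pred, gt, thr=0.5):
    """Pixel-level TP, FP and FN after thresholding the prediction at `thr`."""
    p = [1 if v >= thr else 0 for v in _flatten(pred)]
    g = [1 if v >= 0.5 else 0 for v in _flatten(gt)]
    tp = sum(1 for a, b in zip(p, g) if a and b)
    fp = sum(1 for a, b in zip(p, g) if a and not b)
    fn = sum(1 for a, b in zip(p, g) if b and not a)
    return tp, fp, fn


def dice(pred, gt, eps=1e-8):
    """Eq. (7): 2|S_p & G| / (|S_p| + |G|), with |S_p| = TP + FP, |G| = TP + FN."""
    tp, fp, fn = _confusion(pred, gt)
    return 2 * tp / (2 * tp + fp + fn + eps)


def sensitivity(pred, gt, eps=1e-8):
    """Eq. (9): TP / (TP + FN)."""
    tp, _, fn = _confusion(pred, gt)
    return tp / (tp + fn + eps)


def precision(pred, gt, eps=1e-8):
    """Eq. (10): TP / (TP + FP)."""
    tp, fp, _ = _confusion(pred, gt)
    return tp / (tp + fp + eps)


def mae(pred, gt):
    """Eq. (12): mean absolute pixel-wise difference between S_p and G."""
    p, g = _flatten(pred), _flatten(gt)
    return sum(abs(a - b) for a, b in zip(p, g)) / len(p)


def _enhanced_alignment(fm, gt):
    """Mean of the enhanced alignment matrix for flat binary lists fm and gt."""
    n = len(gt)
    if sum(gt) == 0:  # all-background GT: score agreement on background
        return sum(1 - v for v in fm) / n
    if sum(gt) == n:  # all-foreground GT: score agreement on foreground
        return sum(fm) / n
    mu_fm, mu_gt = sum(fm) / n, sum(gt) / n
    total = 0.0
    for a, b in zip(fm, gt):
        da, db = a - mu_fm, b - mu_gt                   # bias matrices
        xi = 2 * da * db / (da * da + db * db + 1e-8)   # alignment matrix
        total += (1 + xi) ** 2 / 4                      # enhanced alignment
    return total / n


def e_measure(pred, gt):
    """Eq. (11), averaged over binarization thresholds 0..255."""
    p = _flatten(pred)
    g = [1 if v >= 0.5 else 0 for v in _flatten(gt)]
    scores = []
    for t in range(256):
        fm = [1 if v * 255 >= t else 0 for v in p]
        scores.append(_enhanced_alignment(fm, g))
    return sum(scores) / len(scores)
```

Dice is written through TP, FP and FN because $|S_p \cap G| = TP$, $|S_p| = TP + FP$ and $|G| = TP + FN$ for binary maps. This also makes its relation to Eqs. (9) and (10) explicit.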

The hyper-parameters used in the experiments were selected by trial and error and are listed in Table 1. The learning rate is initially set to 1e-4 and is halved when the test loss does not improve for 25 epochs. Early stopping is used to avoid over-fitting. All experiments are conducted on a desktop computer with an Intel Xeon E3-1230 v5 3.40 GHz processor (8 threads) and a GeForce GTX 1070 graphics card. The networks are implemented in PyTorch and trained on the GPU with the Adam optimizer. Each experiment is run three times, and we report the mean and standard deviation.
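The learning-rate schedule, early stopping and run-level statistics described above can be sketched as a small controller. This is a hypothetical illustration, not the authors' code. The class and parameter names are ours, and the monitored value is whatever loss is passed in. The sample (n - 1) standard deviation is assumed for the three-run summary. The text gives 25 epochs for both the learning-rate patience and early stopping, so the sketch keeps them as separate parameters. In PyTorch, `torch.optim.lr_scheduler.ReduceLROnPlateau` with `factor=0.5` provides the learning-rate part, although it counts non-improving epochs slightly differently from this sketch.

```python
from statistics import mean, stdev


class PlateauController:
    """Halve the LR after `lr_patience` epochs without improvement and request
    a stop after `stop_patience` epochs without improvement (hypothetical)."""

    def __init__(self, lr=1e-4, factor=0.5, lr_patience=25, stop_patience=25):
        self.lr = lr
        self.factor = factor
        self.lr_patience = lr_patience
        self.stop_patience = stop_patience
        self.best = float("inf")
        self.since_best = 0    # epochs since the last improvement
        self.since_reduce = 0  # epochs since the last improvement or LR cut

    def step(self, loss):
        """Record one epoch's loss; return True if training should stop."""
        if loss < self.best:
            self.best = loss
            self.since_best = 0
            self.since_reduce = 0
        else:
            self.since_best += 1
            self.since_reduce += 1
            if self.since_reduce >= self.lr_patience:
                self.lr *= self.factor
                self.since_reduce = 0
        return self.since_best >= self.stop_patience


def summarize(runs):
    """Mean and sample standard deviation of one metric over repeated runs."""
    return mean(runs), stdev(runs)


if __name__ == "__main__":
    ctrl = PlateauController(lr_patience=2, stop_patience=4)
    for epoch, loss in enumerate([1.0, 0.9, 0.95, 0.97, 0.96, 0.99]):
        if ctrl.step(loss):
            print(f"stop at epoch {epoch}, lr = {ctrl.lr:g}")
            break
    print(summarize([0.1, 0.2, 0.3]))  # toy values, not results from the paper
```

With equal patience values, as in the text, this sketch stops at the same epoch as the first learning-rate reduction. Keeping the two counters separate makes that interaction easy to inspect.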

Table 1.

Hyperparameter setting.

| Parameters | Values |
|---|---|
| Input image size | |
| batch_size | 8 |
| learning rate | 1e-4 (initial) |
| Early stopping | 25 epochs |
| 0.8 | |
| 0.4 | |
| () | 1 |
| () | 1 |

4.3. Experimental results

4.3.1. Quantitative results

We conduct a series of comparison experiments on our dataset; the results are shown in Table 2. As Table 2 shows, the proposed method achieves the best performance among these methods in , and . In the main metric, the Dice coefficient, our method improves by around 4.4% over U-Net [11] and 1.44% over Inf-Net [6]. UNet++ [9] and Attention U-Net [34] are among the strongest U-Net-based methods in medical image processing. Inf-Net [6], CE-Net [38] and CPFNet [40] are recent state-of-the-art methods for medical image segmentation. These results suggest that the proposed scheme is effective and competitive. They also suggest that it can fuse multi-scale and multi-level features to segment COVID-19 infections accurately.

Table 2.

Comparisons between different networks on our dataset. Bold black text represents the best results.

We further analyze how edge supervision at different levels affects segmentation performance by adding or removing edge supervision in the low-level features. To facilitate the analysis, ResUNet with Co-supervision and Fusion Model (ResUNet_CF) denotes the variant in which the first levels (i.e., ) use ESM. The remaining levels (i.e., ) adopt ASSM in the Co-supervision, where is the number of down-sampling operations ( here). The results are listed in Table 3. The Dice coefficient first rises and then declines as the number of edge-supervised levels increases from 1 to 5. When (i.e., ResUNet_CF), the proposed method obtains the best segmentation performance. Increasing the number of edge-supervised levels strengthens low-level boundary features, while decreasing it strengthens high-level semantic features. At , the numbers of low-level and high-level supervised levels are balanced (i.e., the use of context is balanced against localization accuracy). Consequently, ResUNet_CF surpasses the other ResUNet_CF variants in most metrics, such as , , and . In this configuration, ESM and ASSM extract low-level details and high-level semantics from feature maps at different levels, which AFM then fuses.
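The configurations compared in Table 3 can be made concrete with a tiny helper. This is a sketch under stated assumptions. The encoder is assumed to have N = 5 levels, because N itself is missing from the text and 5 is suggested by the range 1 to 5. The first k levels use ESM and the remaining N - k levels use ASSM. The function name is hypothetical.

```python
def supervision_layout(k, n_levels=5):
    """Return the module used at each encoder level: ESM for the first k
    levels, ASSM for the remaining n_levels - k (hypothetical helper)."""
    if not 0 <= k <= n_levels:
        raise ValueError("k must satisfy 0 <= k <= n_levels")
    return ["ESM" if level < k else "ASSM" for level in range(n_levels)]


if __name__ == "__main__":
    # The five variants of Table 3, assuming N = 5.
    for k in range(1, 6):
        print(f"k = {k}: {supervision_layout(k)}")
```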

Table 3.

The results of different numbers of edge supervised on our dataset. Bold black text represents the best results.

| Methods | (%) | (%) | | | | |
|---|---|---|---|---|---|---|
| ResUNet_CF | | | | | | |
| ResUNet_CF | | | | | | |
| ResUNet_CF | | | | | | |
| ResUNet_CF | | | | | | |
| ResUNet_CF | | | | | | |

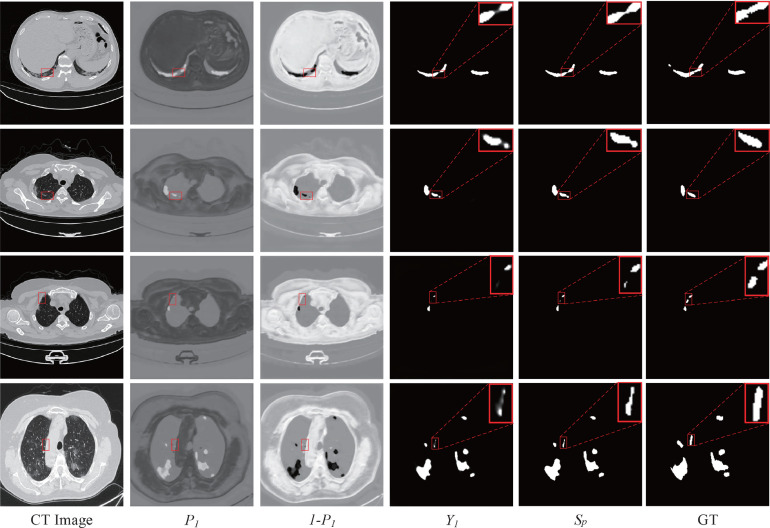

4.3.2. Qualitative results

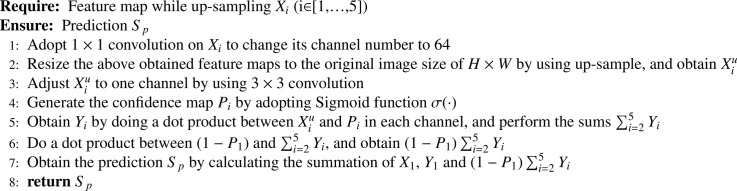

To further demonstrate the effectiveness of the proposed scheme, we visualize the predictions of different networks. As shown in Fig. 6, our method visibly outperforms the baseline methods in lung infection segmentation. Our results contain far fewer mis-segmented tissues, whereas the baseline U-Net and the other methods show many missed and incorrectly segmented regions. For the infection edge marked with a red box, for instance, our method obtains a complete edge that is much closer to the ground truth in edge detail. This benefits from the more detailed edge information provided by the proposed ESM. In the regions marked by the blue box, our method also avoids over-segmentation, under-segmentation and incorrect segmentation. In particular, in the rows, only our method and DeepLabV3+ correctly detect the small infection (marked by the blue box). Our method also outperforms DeepLabV3+ in the edge details of large targets (marked by the red box). This is because it provides receptive fields of different sizes and therefore segments objects of different scales well.

Fig. 6.

Visual qualitative comparison of lung infection segmentation results among U-Net, PSPNet, DeepLabv3+, Inf-Net and the proposed method. Column 1: the original CT image; Column 2: U-Net; Column 3: PSPNet; Column 4: DeepLabv3+; Column 5: Inf-Net; Column 6: our method; Column 7: the corresponding ground truth (GT).

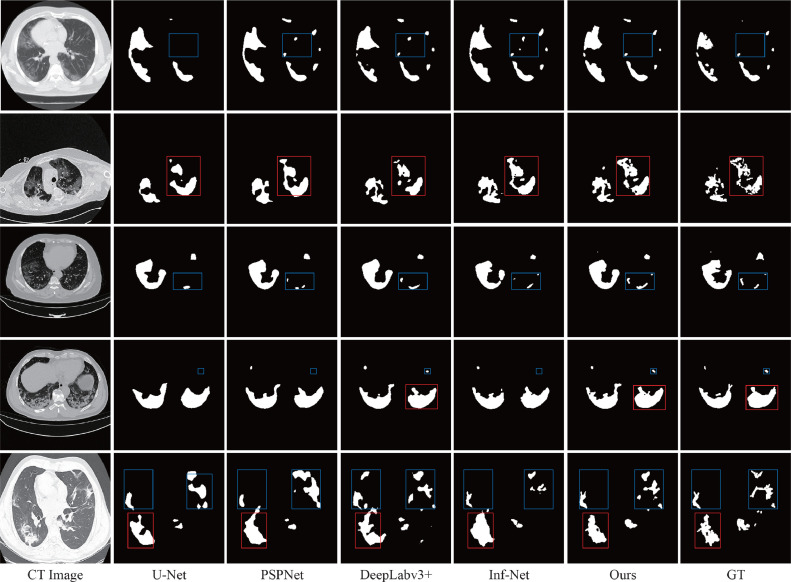

Along the down-sampling path of U-Net, edge feature information gradually decreases while semantic information becomes richer. To verify this, we visualize the feature maps of different levels (i.e., from to ) in ResUNet_CF. As shown in Fig. 7, the low-level feature maps ( and ) contain more details, and the feature map in is the closest to the edge GT. As down-sampling deepens, edge feature information becomes less obvious, and more semantic information can be extracted from the high-level feature maps, as shown in . This demonstrates that ESM at low levels and ASSM at high levels are effective for such a difficult segmentation task.

Fig. 7.

Visualization of each stage supervised by ESM. Column 1: the original CT image; Columns 2 to 6: to ; Column 7: the corresponding edge ground truth (GT).

4.4. Ablation experiments

To further test the validity of the proposed modules, we conduct a series of comparison experiments on our dataset. These experiments use various combinations of ESM, ASSM and AFM on top of the baseline ResUNet. The results are shown in the third row of Table 4: each module independently improves the Dice coefficient of infection segmentation. Compared with the baseline ResUNet without any additional module, ASSM alone yields the largest improvement, followed by AFM. The pairwise combinations of ESM, ASSM and AFM outperform the corresponding single modules, and the combination of ASSM and AFM performs slightly better than that of ESM and AFM. Finally, combining all three modules gives the best performance, presumably because the modules are complementary. These results suggest that the architecture may also be applicable to other segmentation tasks.

Table 4.

Ablation experiments on our dataset. Bold black text represents the best results.

| Baseline | ESM | ASSM | ASSM | ESM | AFM | |
|---|---|---|---|---|---|---|
| ResUnet | | | | | | |

To test the effects of the proposed modules in the decoder, ESM and ASSM are applied separately or jointly in the up-sampling path. For convenience, indicates the corresponding modules and stages in the up-sampling path (shown in Fig. 2). Because the encoder and decoder are symmetric, ESM and ASSM are placed symmetrically in the up-sampling path: ESM in the low levels (i.e., to ) and ASSM in the high levels (i.e., to ). The experimental results are shown in the fourth row of Table 4. Compared with the baseline method, the Dice coefficient improves to some extent when these modules are adopted separately or jointly in the up-sampling path. The combination of all three modules obtains the second-best segmentation performance. However, performance with the modules in the up-sampling path is generally slightly worse than with them in the down-sampling path. The Co-supervision scheme can thus guide the network to learn edge and semantic features in both paths, but its effect is more noticeable in the down-sampling path. The reason is that the down-sampling levels are closer to the original input and therefore contain richer primitive feature information than the up-sampling levels. In contrast, edge and semantic information suffer some loss and noise when higher-resolution layers are reconstructed by bilinear interpolation up-sampling. Accordingly, supervision at the levels of the down-sampling path is stronger than at those of the up-sampling path.
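To illustrate the point about bilinear up-sampling, the sketch below up-samples a binary edge that is sharp to one pixel. It is a minimal, hypothetical helper, not taken from the paper. It uses a corner-aligned sampling grid, which matches PyTorch's `align_corners=True` convention. By default, `torch.nn.functional.interpolate` uses `align_corners=False`, which places the samples slightly differently. Plain Python lists keep the sketch dependency-free.

```python
def bilinear_resize(img, out_h, out_w):
    """Bilinear resize of a 2D list using a corner-aligned sampling grid."""
    in_h, in_w = len(img), len(img[0])
    out = []
    for i in range(out_h):
        # Position of output row i on the input grid (first/last rows aligned).
        y = i * (in_h - 1) / (out_h - 1) if out_h > 1 else 0.0
        y0 = int(y)
        y1 = min(y0 + 1, in_h - 1)
        wy = y - y0
        row = []
        for j in range(out_w):
            x = j * (in_w - 1) / (out_w - 1) if out_w > 1 else 0.0
            x0 = int(x)
            x1 = min(x0 + 1, in_w - 1)
            wx = x - x0
            # Interpolate along x on the two neighbouring rows, then along y.
            top = (1 - wx) * img[y0][x0] + wx * img[y0][x1]
            bottom = (1 - wx) * img[y1][x0] + wx * img[y1][x1]
            row.append((1 - wy) * top + wy * bottom)
        out.append(row)
    return out


if __name__ == "__main__":
    sharp_edge = [[0, 0, 1, 1]]  # background | foreground
    print(bilinear_resize(sharp_edge, 1, 7))
```

Suppose the 1 x 4 row `[0, 0, 1, 1]` is up-sampled to width 7. The new pixel that lands between the last background pixel and the first foreground pixel receives the value 0.5. Interpolation thus turns a crisp boundary into a soft ramp, which is one way edge information can become blurred in the decoder.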

Interestingly, however, segmentation performance falls below the baseline when the Co-supervision scheme is applied in both the down-sampling and up-sampling paths simultaneously. The fourth row of Table 4 shows these results. The combination of ESM, ESM and AFM improves on the baseline by about 1%, but all other combinations across the two paths perform worse than the baseline. The most probable cause is conflict and interference between the Co-supervision of the down-sampling and up-sampling paths. The down-sampling path (i.e., encoder) encodes the input image into feature representations at multiple levels, thereby capturing image context such as edge details. The up-sampling path (i.e., decoder) semantically projects the discriminative lower-resolution features learned by the encoder onto the higher-resolution pixel space to obtain precise localization. Correspondingly, the loss function emphasizes edge details in the encoder path and localization in the decoder path. However, all feature maps of the decoder are derived from the encoder through concatenation and up-sampling. This leads to conflict and interference between the encoder and decoder when the Co-supervision modules are applied in both paths simultaneously.

4.5. Comparison of fusion methods

Multilevel feature fusion integrates feature maps from different levels to enrich the feature information. Traditional fusion approaches usually use feature addition or concatenation. Addition sums multiple feature maps element-wise into a single map, so the amount of information in each feature channel increases while the number of channels stays the same. Concatenation stacks the maps along the channel dimension, so the number of features describing the image increases while the information in each individual channel does not. To further verify the advantage of the proposed AFM, we carry out a series of comparison experiments in which only the fusion approach is changed; the results are shown in Table 5. The proposed AFM surpasses the other two methods in all metrics except . The reason is that addition and concatenation fuse all feature maps evenly, giving each the same importance. This is unreasonable because feature representations differ greatly across levels. Simple addition or concatenation cannot adaptively supplement high-level semantic features with low-level fine details. Between the two traditional approaches, concatenation lets the subsequent convolution layer learn to down-weight feature maps with poor semantics while retaining the semantically rich channels. Addition, in contrast, can weaken the discrimination of features through simple pixel-wise summation. Therefore, concatenation fusion surpasses addition.
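The shape difference between the two traditional fusion operations can be shown with a toy example. The same example shows why concatenation followed by a convolution is more flexible than addition. Here a feature map is a list of channels, and each channel is a flat list of pixel values. The helper `conv1x1` is a hypothetical stand-in for a learned 1x1 convolution with one output channel. With weights [1, 1] it reproduces element-wise addition exactly. Other weights, such as [1, 0], let the layer suppress one input, which is the flexibility described above. The proposed AFM is not reproduced here.

```python
def fuse_add(a, b):
    """Element-wise addition: same channel count, each channel sums two inputs."""
    if len(a) != len(b):
        raise ValueError("addition requires the same number of channels")
    return [[x + y for x, y in zip(ca, cb)] for ca, cb in zip(a, b)]


def fuse_concat(a, b):
    """Channel concatenation: channel count grows, each channel is unchanged."""
    return [list(c) for c in a] + [list(c) for c in b]


def conv1x1(channels, weights):
    """Stand-in for a learned 1x1 convolution with one output channel:
    a per-pixel weighted sum over the input channels (no bias)."""
    if len(weights) != len(channels):
        raise ValueError("one weight per input channel is required")
    n = len(channels[0])
    return [sum(w * ch[i] for w, ch in zip(weights, channels)) for i in range(n)]


if __name__ == "__main__":
    low = [[1.0, 2.0, 3.0]]      # one low-level channel, three pixels
    high = [[10.0, 20.0, 30.0]]  # one high-level channel
    stacked = fuse_concat(low, high)
    print(len(fuse_add(low, high)), len(stacked))  # channel counts
    print(conv1x1(stacked, [1.0, 1.0]))            # same values as addition
    print(conv1x1(stacked, [1.0, 0.0]))            # ignores the second input
```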

Table 5.

The results of different fusion methods on our dataset.

| Methods | (%) | (%) | | | | |
|---|---|---|---|---|---|---|
| Add | | | | | | |
| Concatenate | | | | | | |
| Attention | | | | | | |

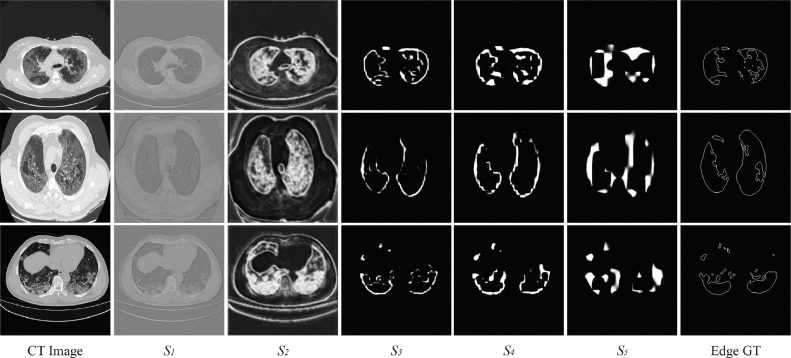

Fig. 8 illustrates the fusion process of the attention mechanism. The major result is processed only by the attention block (AB), so it is the output closest to the baseline's segmentation prediction. The final prediction is obtained by fusing feature maps from multiple levels. By combining the complementary strengths of these levels, it aims to achieve both high resolution and rich semantics. The final prediction is clearly more complete than the major result and loses less information. The obtained confidence map emphasizes the major result so that most of its information is retained. As a complement to this confidence map, the confidence map of the lost detailed information focuses on the details missing from the major result. It exploits spatial and semantic features from different levels to recover these details through fusion. Thus, the proposed method overcomes the under-segmentation problem of the baseline and retains multi-scale contextual information from multiple levels.
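One simple way to combine a confidence map with its complement is a gated sum: $P = C \odot M + (1 - C) \odot D$. Here $M$ is the major result, $D$ is a detail-recovering prediction from lower levels, and $C$ is a confidence map. The sketch below implements that formula as an assumption for illustration. The symbols, the sigmoid gate and the function names are ours; this is not the AFM as defined by the authors.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function mapping a logit into (0, 1)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def confidence_fusion(major, detail, conf_logits):
    """P = C * M + (1 - C) * D, pixel by pixel, with C = sigmoid(logits)."""
    fused = []
    for m_row, d_row, z_row in zip(major, detail, conf_logits):
        row = []
        for m, d, z in zip(m_row, d_row, z_row):
            c = sigmoid(z)                     # trust placed in the major result M
            row.append(c * m + (1.0 - c) * d)  # the complement 1 - C admits detail D
        fused.append(row)
    return fused


if __name__ == "__main__":
    major = [[0.9, 0.1]]    # the major result misses the second pixel
    detail = [[0.2, 0.8]]   # the lower-level detail map responds there
    logits = [[4.0, -4.0]]  # high confidence in M at pixel 1, low at pixel 2
    print(confidence_fusion(major, detail, logits))
```

Because $C$ and $1 - C$ sum to one at every pixel, the fused value always lies between the two inputs. Where the confidence in the major result is low, the detail map takes over.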

Fig. 8.

Visual results of the fusion process based on the proposed AFM. Column 1: the original CT image; Column 2: the obtained confidence map ; Column 3: the confidence map of the lost detailed information; Column 4: the major result from the top feature map through the attention block (AB); Column 5: the final prediction result ; Column 6: the corresponding ground truth (GT).

4.6. Comparisons on other COVID-19 datasets

To further verify the effectiveness and generalization ability of our method, we conduct comparison experiments on MosMedData [42], UESTC-COVID-19 [41] and COVID-ChestCT [43]. We select three important metrics for evaluating COVID-19 lung infection segmentation: , and . The results are shown in Tables 6, 7 and 8. On MosMedData, our method is slightly superior to Attention U-Net [34] and UNet++ [9] in Dice. It outperforms its nearest competitor, F3Net [20], by 3.06% in Sensitivity () and achieves the best performance among these three methods in all metrics (Table 6). On UESTC-COVID-19, our method is slightly better than its nearest competitor in and , and slightly lower in Sensitivity (). Overall, it obtains the best comprehensive performance among these methods (Table 7). On COVID-ChestCT, our method ranks first, first and third in Sensitivity (), and , respectively, and again achieves the best overall performance (Table 8). In summary, our method ranks among the top three on every dataset in all metrics and has the best comprehensive performance compared with the other methods.

Table 6.

Performance comparisons between different methods on MosMedData. Bold black text represents the best results.

Table 7.

Performance comparisons between different methods on UESTC-COVID-19. Bold black text represents the best results.

Table 8.

Performance comparisons between different methods on COVID-ChestCT. Bold black text represents the best results.

5. Conclusion

Accurately segmenting COVID-19 infected lesions on CT images remains challenging. The lesions have irregular shapes and varied sizes, and the boundaries between normal and infected tissues are hard to distinguish. In this paper, we propose a novel scheme for segmenting COVID-19 infections on CT images. To this end, we propose three modules for deep collaborative supervision and attention fusion based on ResUnet. To verify the effectiveness of the proposed scheme, we conduct a series of experiments on four COVID-19 datasets. The results show that our method achieves the best performance on most of the datasets in metrics such as , Sensitivity () and , and generalizes better than the existing approaches.

The proposed technique has four advantages. Firstly, it captures rich spatial information at various scales through an edge supervised module (ESM). ESM incorporates edge supervised information into the initial stage of down-sampling in the ResUnet framework. Because low-level layers contain richer object boundaries, they are used to define the edge supervised loss function and to capture spatial detail. The main benefit of this module is that it highlights low-level boundary features and provides useful fine-grained constraints to guide feature extraction in semantic segmentation tasks. Secondly, the method explores semantic information from infections of various scales on COVID-19 CT images through an auxiliary semantic supervised module (ASSM). ASSM integrates appearance supervised information into the later stage of down-sampling, and its main advantage is that it strengthens high-level semantic information during feature extraction. Thirdly, we propose an attention fusion module (AFM) that fuses multi-scale feature maps of different levels in the up-sampling stage. AFM reduces the semantic gaps between high-level and low-level feature maps, and its main advantage is that it supplements the detailed information lost in high-level representations. Lastly, we construct a joint loss function that combines the edge supervised loss, the auxiliary semantic supervised loss and the fusion loss. The joint loss guides the network in learning the features of COVID-19 infections, thereby achieving deep collaborative supervision of edges and semantics. It also encourages the effective fusion of multi-scale feature maps of different levels.
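A joint loss of this kind can be sketched as a weighted sum of binary cross-entropy terms: $L = L_{fuse} + \lambda_e \sum_i L_{edge,i} + \lambda_s \sum_j L_{sem,j}$. This is a hypothetical illustration, and several choices are ours rather than the authors':

- binary cross-entropy for every term;
- the weights `lambda_edge` and `lambda_sem`;
- the assumption that every side output has already been resized to the label resolution.

The authors' released code remains the authoritative definition.

```python
import math


def bce(pred, target, eps=1e-7):
    """Mean binary cross-entropy over flat lists of probabilities and 0/1 labels."""
    total = 0.0
    for p, t in zip(pred, target):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        total -= t * math.log(p) + (1 - t) * math.log(1.0 - p)
    return total / len(pred)


def joint_loss(fused_pred, mask, edge_preds, edge_gt, sem_preds,
               lambda_edge=1.0, lambda_sem=1.0):
    """Hypothetical joint loss: fusion term plus weighted edge and semantic terms.

    fused_pred: final prediction; mask: infection mask (flat lists).
    edge_preds: ESM side outputs, each compared with the edge map edge_gt.
    sem_preds: ASSM side outputs, each compared with the infection mask.
    """
    loss = bce(fused_pred, mask)
    loss += lambda_edge * sum(bce(p, edge_gt) for p in edge_preds)
    loss += lambda_sem * sum(bce(p, mask) for p in sem_preds)
    return loss


if __name__ == "__main__":
    mask, edges = [1, 1, 0, 0], [0, 1, 0, 0]
    print(joint_loss([0.9, 0.8, 0.2, 0.1], mask,
                     edge_preds=[[0.1, 0.7, 0.2, 0.1]], edge_gt=edges,
                     sem_preds=[[0.8, 0.6, 0.3, 0.2]]))
```

Summing one term per supervised level makes every ESM and ASSM output receive its own gradient signal. The lambda weights then trade edge supervision against semantic supervision.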

Although our network segments the overall infection region well, this is not sufficient to estimate the severity of COVID-19 infection, which requires finer segmentation of the different infection regions. In the future, we may collect more COVID-19 data and consider recognizing disease severity according to the area, size and location of the infections. The code is publicly available at https://github.com/slz674763180/COVID19. For reproducibility, the package includes the three proposed modules and the joint loss function.

Declaration of Competing Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Acknowledgments

The authors would like to express their appreciation to the referees for their helpful comments and suggestions. This work was supported in part by Zhejiang Provincial Natural Science Foundation of China (Grant nos. LGF20H180002 and GF22F037921), in part by National Natural Science Foundation of China (Grant nos. 61802347, 61801428 and 61972354), the National Key Research and Development Program of China (Grant no. 2018YFB1305202), and the Microsystems Technology Key Laboratory Foundation of China.

Biographies

Haigen Hu received the Ph.D. degree in Control Theory and Control Engineering from Tongji University, Shanghai, China, in 2013. He completed postdoctoral research at the LITIS Laboratory, Université de Rouen, France, in 2019. He is currently an associate professor in the College of Computer Science and Technology at Zhejiang University of Technology, China. His current research interests include deep learning, computer vision and medical image processing.

Leizhao Shen was born in Zhejiang in 1996 and received a bachelor's degree from Zhejiang University of Technology in 2018. Since 2018, he has been a postgraduate student at Zhejiang University of Technology, Zhejiang, China. His current research interests include machine learning (deep learning) and medical image processing.

Qiu Guan is currently a Professor and PhD supervisor with the College of Computer Science and Technology, Zhejiang University of Technology, Hangzhou, China. Her research interests include computer vision and medical image computing and understanding. Sponsored by the China Scholarship Council in 2007, she spent one year as a visiting scholar at University College London, UK, where she focused on medical image processing. She has carried out a number of national (NSF61103140, U20A20171, 60870002) and provincial (LY21F020027, 2015C33073, 2014C33110, 2010C33095) research projects in these fields as PI or co-PI. These projects have resulted in a number of papers published in international journals and conference proceedings.

Xiaoxin Li received the B.E. and M.E. degrees from the Wuhan University of Technology, Wuhan, China, in 2002 and 2005, respectively, and the Ph.D. degree from the South China University of Technology, Guangzhou, China, in 2009. Since 2009, he has been a Postdoctoral Research Fellow with the Center for Computer Vision, School of Mathematics and Computational Science, Sun Yat-Sen University, Guangzhou, China. He is also currently a Lecturer with the College of Computer Science and Technology, Faculty of Information Technology, Zhejiang University of Technology, Hangzhou, China. His current research interests include image processing, statistical data analysis, and bioinformatics.

Qianwei Zhou (A17-M19) received the Ph.D. degree in communication and information systems from the Shanghai Institute of Microsystem and Information Technology, University of Chinese Academy of Sciences, Shanghai, China, in 2014. In July 2014, he joined Zhejiang University of Technology, Hangzhou, China, where he is currently a Research Scientist in the College of Computer Science. His research has been published in many international journals, including the IEEE Transactions on Industrial Electronics, IEEE Transactions on Magnetics, IEEE Signal Processing Letters, IEEE Transactions on Instrumentation and Measurement, and Journal of Sound and Vibration. His research interests include the intersection of machine learning and computer-aided design, Internet-of-Things-related signal processing, pattern recognition, and algorithms for the understanding of medical images.

Su Ruan (Senior Member, IEEE) received the M.S. and the Ph.D. degrees in image processing from the University of Rennes, France, in 1989 and 1993, respectively. From 2003 to 2010, she was a Full Professor with the University of Reims Champagne-Ardenne, France. She is currently a Full Professor with the Department of Medicine, and the Leader of the QuantIF Team, LITIS Research Laboratory, University of Rouen, France. Her research interests include pattern recognition, machine learning, information fusion, and medical imaging.(Based on document published on 8 April 2021).

References

- 1. Wang C., Horby P.W., Hayden F.G., Gao G.F. A novel coronavirus outbreak of global health concern. Lancet. 2020;395(10223):470–473. doi: 10.1016/S0140-6736(20)30185-9.

- 2. Huang C., Wang Y., et al. Clinical features of patients infected with 2019 novel coronavirus in Wuhan, China. Lancet. 2020;395(10223):497–506. doi: 10.1016/S0140-6736(20)30183-5.

- 3. He K., Zhao W., Xie X., Ji W., Liu M., Tang Z., Shi Y., Shi F., Gao Y., Liu J., Zhang J., Shen D. Synergistic learning of lung lobe segmentation and hierarchical multi-instance classification for automated severity assessment of COVID-19 in CT images. Pattern Recognit. 2021;113:107828. doi: 10.1016/j.patcog.2021.107828.

- 4. Rorat M., Jurek T., Simon K., Guziński M. Value of quantitative analysis in lung computed tomography in patients severely ill with COVID-19. PLoS One. 2021;16(5):e0251946. doi: 10.1371/journal.pone.0251946.

- 5. Amyar A., Modzelewski R., Li H., Ruan S. Multi-task deep learning based CT imaging analysis for COVID-19 pneumonia: classification and segmentation. Comput. Biol. Med. 2020;126:104037. doi: 10.1016/j.compbiomed.2020.104037.

- 6. Fan D.P., Zhou T., Ji G.P., et al. Inf-Net: automatic COVID-19 lung infection segmentation from CT scans. IEEE Trans. Med. Imaging (TMI). 2020;39(8):2626–2637. doi: 10.1109/TMI.2020.2996645.

- 7. Wang S., Kang B., Ma J., et al. A deep learning algorithm using CT images to screen for corona virus disease (COVID-19). Eur. Radiol. 2021;31(8):6096–6104. doi: 10.1007/s00330-021-07715-1.

- 8. Chen J., Wu L., Zhang J., et al. Deep learning-based model for detecting 2019 novel coronavirus pneumonia on high-resolution computed tomography. Sci. Rep. 2020;10(1):19196. doi: 10.1038/s41598-020-76282-0.

- 9. Zhou Z., Rahman Siddiquee M.M., Tajbakhsh N., Liang J. UNet++: a nested U-Net architecture for medical image segmentation. Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support. Vol. 11045. 2018; pp. 3–11.

- 10. Oulefki A., Agaian S., Trongtirakul T., Laouar A.K. Automatic COVID-19 lung infected region segmentation and measurement using CT-scans images. Pattern Recognit. 2021;114:107747. doi: 10.1016/j.patcog.2020.107747.

- 11. Ronneberger O., Fischer P., Brox T. U-Net: convolutional networks for biomedical image segmentation. Medical Image Computing and Computer Assisted Intervention (MICCAI), Munich, Germany. Vol. 9351. 2015; pp. 234–241.

- 12. Deng L., Yu D. Deep learning: methods and applications. Found. Trends Signal Process. 2013;7(3–4):197–387.

- 13. Krizhevsky A., Sutskever I., Hinton G.E. Imagenet classification with deep convolutional neural networks. Advances in Neural Information Processing Systems, California, USA. 2012; pp. 1097–1105.

- 14. Simonyan K., Zisserman A. Very deep convolutional networks for large-scale image recognition. Computer Vision and Pattern Recognition (CVPR), Columbia, USA. 2014.

- 15. He K., Zhang X., Ren S., Sun J. Deep residual learning for image recognition. Computer Vision and Pattern Recognition (CVPR), Las Vegas, USA. 2016; pp. 770–778.

- 16. Long J., Shelhamer E., Darrell T. Fully convolutional networks for semantic segmentation. Computer Vision and Pattern Recognition (CVPR), Boston, USA. 2015; pp. 3431–3440.

- 17. Zhao H., Shi J., Qi X., Wang X., Jia J. Pyramid scene parsing network. Conference on Computer Vision and Pattern Recognition (CVPR), Hawaii, USA. 2017; pp. 2881–2890.

- 18. Chen L.-C., Zhu Y., Papandreou G., Schroff F., Adam H. Encoder decoder with atrous separable convolution for semantic image segmentation. European Conference on Computer Vision (ECCV), Munich, Germany. 2018; pp. 801–818.

- 19. Zhao H., Zhang Y., Liu S., Shi J., Loy C.C., Lin D., Jia J. Psanet: point-wise spatial attention network for scene parsing. European Conference on Computer Vision (ECCV), Munich, Germany. 2018; pp. 267–283.

- 20. Wei J., Wang S., Huang Q. F3Net: fusion, feedback and focus for salient object detection. AAAI Conference on Artificial Intelligence (AAAI 2020). 2020; pp. 12321–12328.

- 21. Zhang H., Dana K., Shi J., Zhang Z., Wang X., Tyagi A., Agrawal A. Context encoding for semantic segmentation. Computer Vision and Pattern Recognition (CVPR), Utah, USA. 2018; pp. 7151–7160.

- 22. Wu Z., Su L., Huang Q. Stacked cross refinement network for edge-aware salient object detection. International Conference on Computer Vision (ICCV), Seoul, South Korea. 2019; pp. 7264–7273.

- 23. Zhang Z., Fu H., Dai H., Shen J., Pang Y., Shao L. ET-Net: a generic edge-attention guidance network for medical image segmentation. Medical Image Computing and Computer Assisted Intervention (MICCAI), Shenzhen, China. Vol. 11764. 2019.

- 24. Lin T.-Y., Dollár P., Girshick R.B., He K., Hariharan B., Belongie S.J. Feature pyramid networks for object detection. 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 2017; pp. 936–944.

- 25. Ren S., He K., Girshick R., Sun J. Faster R-CNN: towards real-time object detection with region proposal networks. Advances in Neural Information Processing Systems. 2015; pp. 91–99.

- 26. He K., Gkioxari G., Dollár P., Girshick R. Mask R-CNN. Proceedings of the IEEE International Conference on Computer Vision (ICCV). 2017; pp. 2961–2969.

- 27. Hu H., Guan Q., Chen S., Ji Z., Yao L. Detection and recognition for life state of cell cancer using two-stage cascade CNNs. IEEE/ACM Trans. Comput. Biol. Bioinform. 2020;17(3):887–898. doi: 10.1109/TCBB.2017.2780842.

- 28. Fu J., Liu J., Tian H., Li Y., Bao Y., Fang Z., Lu H. Dual attention network for scene segmentation. Conference on Computer Vision and Pattern Recognition (CVPR), California, USA. 2019; pp. 3146–3154.

- 29. Huang Z., Wang X., Huang L., Huang C., Wei Y., Liu W. CCNet: criss-cross attention for semantic segmentation. International Conference on Computer Vision (ICCV), Seoul, South Korea. 2019; pp. 603–612.

- 30. Wang X., Girshick R., Gupta A., He K. Non-local neural networks. Conference on Computer Vision and Pattern Recognition (CVPR), Utah, USA. 2018; pp. 7794–7803.

- 31. Li X., Zhao H., Han L., Tong Y., Yang K. GFF: gated fully fusion for semantic segmentation. Association for the Advancement of Artificial Intelligence (AAAI), New York, USA. 2019.

- 32. COVID-19 CT segmentation dataset, 2020, https://medicalsegmentation.com/covid19/.

- 33. COVID-19 CT segmentation dataset, 2020, https://gitee.com/junma11/COVID-19-CT-Seg-Benchmark.

- 34. Oktay O., Schlemper J., et al. Attention U-Net: learning where to look for the pancreas. International Conference on Medical Imaging with Deep Learning (MIDL). 2018.

- 35. Fan D.-P., Cheng M.-M., Liu Y., Li T., Borji A. Structure-measure: a new way to evaluate foreground maps. IEEE International Conference on Computer Vision (ICCV). 2017; pp. 4548–4557.

- 36. Fan D.-P., Gong C., Cao Y., Ren B., Cheng M.-M., Borji A. Enhanced-alignment measure for binary foreground map evaluation. International Joint Conference on Artificial Intelligence (IJCAI), Stockholm. 2018; pp. 698–704.

- 37. Hu H., Liu A., Zhou Q., Guan Q., Li X., Chen Q. An adaptive learning method of anchor shape priors for biological cells detection and segmentation. Comput. Methods Prog. Biomed. 2021;208:106260. doi: 10.1016/j.cmpb.2021.106260.

- 38. Gu Z., Cheng J., Fu H., et al. CE-Net: context encoder network for 2D medical image segmentation. IEEE Trans. Med. Imaging. 2019;38(10):2281–2292. doi: 10.1109/TMI.2019.2903562.

- 39. Zhang F., Chen Y., Li Z., Hong Z., Liu J., Ma F., Han J., Ding E. ACFNet: attentional class feature network for semantic segmentation. 2019 IEEE/CVF International Conference on Computer Vision (ICCV). 2019; pp. 6797–6806.

- 40. Feng S., Zhao H., Shi F., et al. CPFNet: context pyramid fusion network for medical image segmentation. IEEE Trans. Med. Imaging. 2020;39:3008–3018. doi: 10.1109/TMI.2020.2983721.

- 41. Wang G., Liu X., Li C., Xu Z., Ruan J., Zhu H., Meng T., Li K., Huang N., Zhang S. A noise-robust framework for automatic segmentation of COVID-19 pneumonia lesions from CT images. IEEE Trans. Med. Imaging. 2020;39(8):2653–2663. doi: 10.1109/TMI.2020.3000314.

- 42. Morozov S.P., Andreychenko A.E., Blokhin I.A., et al. Mosmeddata: data set of 1110 chest CT scans performed during the COVID-19 epidemic. Digit. Diagn. 2020;1(1):49–59. doi: 10.17816/DD46826.

- 43. Cohen J.P., Morrison P., Dao L., et al. COVID-19 image data collection: prospective predictions are the future. 2020. arXiv:2006.11988. https://github.com/ieee8023/covid-chestxray-dataset.