Abstract

Simple Summary

In this study, we established four deep convolutional neural network (DCNN) models (AlexNet, ResNet101, DenseNet201, and InceptionV3) to predict drug-sensitive mutations from histopathology images of gastrointestinal stromal tumors (GISTs). The treatment of these tumors depends on the mutational subtype of the KIT/PDGFRA genes. Previous studies have rarely focused on mesenchymal tumors or on mutational subtypes. More than 5000 images of 365 GISTs from three independent laboratories were used to build the models. DenseNet201 achieved an accuracy of 87%, while the accuracies of AlexNet, InceptionV3, and ResNet101 were 75%, 81%, and 86%, respectively. Cross-institutional inconsistency and the contributions of image color and subcellular components were also analyzed.

Abstract

Gastrointestinal stromal tumors (GISTs) are common mesenchymal tumors, and their effective treatment depends upon the mutational subtype of the KIT/PDGFRA genes. We established deep convolutional neural network (DCNN) models to rapidly predict drug-sensitive mutation subtypes from images of pathological tissue. A total of 5153 pathological images of 365 different GISTs from three different laboratories were collected and divided into training and validation sets. A transfer learning mechanism based on DCNNs was used with four different network architectures to identify cases with drug-sensitive mutations. The accuracy ranged from 75% to 87%. However, cross-institutional inconsistency was observed. Using gray-scale images resulted in a 7-percentage-point drop in accuracy (accuracy 80%, sensitivity 87%, specificity 73%). Using images containing only nuclei (accuracy 81%, sensitivity 87%, specificity 73%) or only cytoplasm (accuracy 79%, sensitivity 88%, specificity 67%) produced drops of 6 and 8 percentage points, respectively, suggesting buffering effects across subcellular components in DCNN interpretation. The proposed DCNN model inferred cases with drug-sensitive mutations with high accuracy, and the contributions of image color and subcellular components were revealed. These results may help to develop a cheaper and quicker screening method for tumor gene testing.

Keywords: gastrointestinal stromal tumor, KIT, PDGFRA, deep convolutional neural network, machine learning

1. Introduction

Mutational analysis of cancer cells is a key, and usually rate-determining, step in targeted therapy. In most circumstances, pathologists diagnose cancers by microscopic examination of histopathology slides to assess tumor morphology, and then select representative tumor areas for nucleic acid extraction and genetic analysis of drug-sensitive mutations. While morphology is the basis for diagnosis, genes are usually the major determinant of treatment. The acquisition of mutational data, however, is usually time consuming, especially in local hospitals where gene sequencing equipment is not available. One approach to reducing the time required to identify drug-sensitive mutations is to use deep learning algorithms that infer mutations directly from tumor morphology. Several studies have addressed this issue of morphology–genotype correlation in cancers [1,2,3,4,5,6]. However, these studies primarily focused on identifying the presence of specific mutations in carcinomas, without considering that, in some circumstances, the mutational subtype, not merely the presence or absence of a mutation, is the main determinant of effective treatment.

In this study, gastrointestinal stromal tumors (GISTs) were chosen as the subject for the following reasons. (1) GIST is the most common mesenchymal malignancy of the gastrointestinal tract, so reducing patients' waiting time has a significant clinical impact. (2) The target genes of this tumor, the receptor tyrosine kinase genes KIT and PDGFRA, have a complex pattern of mutation, which makes morphology–genotype correlation extremely difficult for human perception. (3) Most GISTs show consistent morphological features across the whole tumor, making it easy to obtain representative microscopic images. This exempts pathologists from submitting whole-slide images and greatly enhances the efficiency of data transmission. (4) The target gene, KIT/PDGFRA, is the major protein-producing gene in GIST, and its mutation is expected to generate considerable morphological variation, which may facilitate detection. (5) Deep learning studies of mesenchymal tumors are rare [2]; mesenchymal tumors comprise an extremely heterogeneous group of diseases, with only a few cases in each category in open image databases. (6) In GISTs, the mutational subtype is more important than the presence of a mutation itself. For example, mutations in exon 11 of the KIT gene are usually drug-sensitive, while mutations in exon 9 are usually drug-resistant [7]. Genotype–phenotype studies relating mutational subtypes to histology have rarely been performed.

Conventional computer-aided diagnosis systems rely on communication between physicians and computer scientists: physicians describe the findings useful for diagnosis, and computer scientists translate them into formulas and metrics. Quantitative features are then extracted from medical images and analyzed using machine learning algorithms, a branch of artificial intelligence (AI). Recently, deep convolutional neural networks (DCNNs) have been widely used to automatically generate and combine image features [8] and have improved decision making in areas such as tumor and microorganism detection, evaluation of malignancy, and prediction of prognosis [9,10,11,12]. Instead of relying on handcrafted features, DCNNs extract relevant features through multiple convolutional layers. A previous study using a DCNN model achieved an accuracy of 95.3%, a sensitivity of 93.5%, and a specificity of 96.3% for the detection of bacilli in whole-slide pathology images [9]. Such accuracy can accelerate the diagnostic process and improve its efficiency. Quantifying the rich information embedded in histopathology images with DCNNs permits genomic-based correlation and measurement for the prediction of disease prognosis [8].

Furthermore, by stacking multiple convolutional, max-pooling, and fully connected layers, DCNNs can identify and interpret the underlying patterns used for classification tasks. Large numbers of features are extracted and combined into representations ranging from low-level object edges to high-level, meaningful interpretations, which minimizes the manual engineering of image features. However, deep learning requires a large number of training images, and when a model is established for a new task, collecting data and training the network are time consuming. Transfer learning can help address this problem. It is especially valuable for medical images, given the time and cost involved in obtaining data, patient consent, and institutional review board approval. Knowledge learned from large collections of natural images, such as ImageNet, provides substantial general-purpose image features [13,14], and transferring the pre-trained weights to the target task accelerates the learning process.
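The reuse of pre-trained weights can be illustrated with a deliberately tiny Python sketch of the feature-extraction form of transfer learning: a frozen feature extractor is reused unchanged, and only a new classification head is trained on the target task. Everything here is a hypothetical stand-in: `FROZEN_WEIGHTS` plays the role of ImageNet-pre-trained convolutional layers, and the toy data replace image patches. The study itself transferred ImageNet weights into full networks in MATLAB and continued training them (fine-tuning), so this is an illustration of the idea, not the authors' pipeline.

```python
import math

# Hypothetical stand-in for pre-trained layers: these weights are frozen
# and are never updated while training on the target task.
FROZEN_WEIGHTS = [[1.0, -1.0], [0.5, 0.5]]


def extract_features(x):
    """Frozen feature extractor: ReLU of a fixed linear layer."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in FROZEN_WEIGHTS]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_head(samples, labels, lr=0.1, epochs=200):
    """Train only a new logistic-regression head on the frozen features (plain SGD)."""
    feats = [extract_features(x) for x in samples]  # computed once: the extractor is fixed
    w = [0.0] * len(feats[0])
    b = 0.0
    for _ in range(epochs):
        for f, y in zip(feats, labels):
            p = sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b)
            grad = p - y  # gradient of binary cross-entropy w.r.t. the logit
            w = [wi - lr * grad * fi for wi, fi in zip(w, f)]
            b -= lr * grad
    return w, b


def predict_proba(x, w, b):
    f = extract_features(x)
    return sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b)


# Toy target task (hypothetical): label 1 when the first coordinate exceeds the second.
X = [(2, 0), (3, 1), (0, 2), (1, 3)]
y = [1, 1, 0, 0]
w, b = train_head(X, y)
print([round(predict_proba(x, w, b)) for x in X])  # [1, 1, 0, 0]
```

Only `w` and `b` change during training; in full fine-tuning, as used in the study, the transferred weights would also be updated, typically with a small learning rate.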

In this study, GIST histopathology slides were collected from three different laboratories, and images were registered by two independent pathologists. A prediction model was constructed using DCNNs. The deep learning system identified drug-sensitive mutations from tumor cell morphology with an accuracy of more than 85%. Subsequent subcellular studies involving nuclear or cytoplasmic subtraction suggested that the relevant cellular alterations were not confined to either the nucleus or the cytoplasm. We also investigated the impact of using color versus gray-scale images on prediction accuracy. Our study expands the potential application of AI in daily medical practice.

2. Materials and Methods

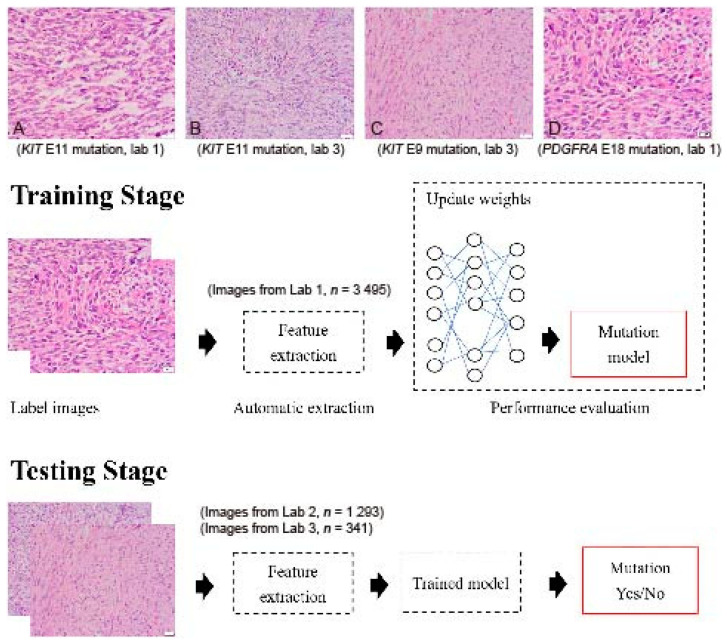

GIST histopathology sections, 4 µm thick and stained with hematoxylin and eosin (H&E), were collected from three independent laboratories: Lab 1 (Dr. C.W.L), Lab 2 (Dr. H.Y.H), and Lab 3 (Dr. P.W.F). A total of 5153 images from 365 tumors were collected, including cases with KIT exon 11 mutations (drug-sensitive), KIT exon 9 mutations (drug-insensitive), other KIT mutations (variable drug sensitivity), PDGFRA mutations (variable drug sensitivity), and KIT/PDGFRA wild-type (WT) cases (drug-insensitive) (Figure 1A–D). The numbers of cases and images for each mutational pattern are listed in Table 1, and the overall workflow is shown in Figure 1. For each tumor, an average of 15 representative high-power field (400×) images were selected and registered by two pathologists. Areas of necrosis, heavy inflammation, and section artifacts were avoided, and images were taken from the tumor region only, excluding adjacent normal tissue. The images were then uploaded to a cloud server for subsequent analysis. The study was approved by the Institutional Review Board of Fu Jen Catholic University (No. C107134, approved on 20 June 2019).

Figure 1.

Flowchart of the implemented deep convolutional neural network. Images were collected from 3 independent laboratories (Lab 1, Lab 2, and Lab 3) using 4 µm thick, hematoxylin and eosin (H&E)-stained slides. (A) An example image of a GIST with a KIT exon 11 mutation from Lab 1 (400×). (B) An example image of a GIST with a KIT exon 11 mutation from Lab 3 (400×). (C) An example image of a GIST with a KIT exon 9 mutation from Lab 3 (400×). (D) An example image of a GIST with a PDGFRA exon 18 mutation from Lab 1 (400×). Although similar staining protocols were used, the overall hue differs between laboratories. A total of 5129 images were enrolled in the study. Four different DCNNs, AlexNet, InceptionV3, ResNet, and DenseNet, were used with transfer learning from ImageNet. The transferred DCNN model generated the probability that each image contained a drug-sensitive mutation; a probability higher than 0.5 was regarded as positive. The dataset was randomly separated into 10 equal-sized subsets. In each iteration, one subset was held out to test the model trained on the remaining nine subsets.

Table 1.

Breakdown of mutational patterns and numbers of cases and images in each dataset.

| Mutational Pattern | Dataset 1 (Lab 1) Cases | Dataset 1 (Lab 1) Images | Dataset 2 (Lab 2) Cases | Dataset 2 (Lab 2) Images | Dataset 3 (Lab 3) Cases | Dataset 3 (Lab 3) Images | Sum (Cases) | Sum (Images) |
|---|---|---|---|---|---|---|---|---|
| KIT E11 | 125 | 1959 | 92 | 893 | 15 | 198 | 232 | 3050 |
| KIT E9 | 34 | 684 | 2 | 14 | 5 | 104 | 41 | 802 |
| KIT Non-E9 non-E11 | 5 | 130 | 0 | 0 | 1 | 16 | 6 | 146 |
| PDGFRA | 15 | 271 | 6 | 68 | 0 | 0 | 21 | 339 |
| KIT/PDGFRA WT | 29 | 473 | 35 | 320 | 1 | 23 | 65 | 816 |
| Sum | 208 | 3517 (3495) * | 135 | 1295 (1293) * | 22 | 341 (341) * | 365 | 5153 (5129) * |

* Numbers in parentheses are the images that actually entered the DCNN computation after images with artifacts were excluded.

Mutational data for each tumor were obtained by Sanger sequencing as previously described [15,16]. The tumors were separated into two groups: the "drug-sensitive" group (KIT exon 11 mutations) (Figure 1A,B) and the "other" group (KIT exon 9 mutations, other KIT mutations, PDGFRA mutations, and WT cases) (Figure 1C,D). The aim of this study was to determine whether cases with drug-sensitive KIT exon 11 mutations could be predicted by the deep learning system directly from tumor cell morphology.
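The two-group labeling can be made concrete with the image counts in Table 1. The Python sketch below maps each mutational pattern to a binary class and computes the pooled class balance, which also gives the accuracy a trivial classifier would reach by always predicting the majority class. The dictionary keys and function names are our own illustrative choices, and the counts are taken before artifact exclusion, so the figures are approximate.

```python
# Image counts per mutational pattern, summed over Labs 1-3
# (Table 1, before artifact exclusion).
IMAGE_COUNTS = {
    "KIT E11": 3050,
    "KIT E9": 802,
    "KIT non-E9 non-E11": 146,
    "PDGFRA": 339,
    "KIT/PDGFRA WT": 816,
}

# Only KIT exon 11 mutations form the positive ("drug-sensitive") class.
DRUG_SENSITIVE = {"KIT E11"}


def binary_label(pattern):
    """Return 1 for the drug-sensitive group and 0 for the 'other' group."""
    return 1 if pattern in DRUG_SENSITIVE else 0


def class_counts(counts):
    """Total images in the positive and negative classes."""
    positives = sum(n for p, n in counts.items() if binary_label(p) == 1)
    negatives = sum(n for p, n in counts.items() if binary_label(p) == 0)
    return positives, negatives


pos, neg = class_counts(IMAGE_COUNTS)
majority_baseline = max(pos, neg) / (pos + neg)
print(pos, neg, round(majority_baseline, 3))  # 3050 2103 0.592
```

A pooled majority-class accuracy of roughly 59% is a useful reference point when reading the accuracies reported in Tables 2 to 4.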

In the experiment, the data from each laboratory were used as a training set (Lab 1), a first validation set (Lab 2), and a second validation set (Lab 3). Four DCNNs, AlexNet [17], Inception V3 [18], ResNet101 [19], and DenseNet201 [20], were used with transfer learning, and their performances were evaluated. The parameters trained on ImageNet were transferred into AlexNet, Inception V3, ResNet101, and DenseNet201 for the GIST mutation prediction task. The training settings used in MATLAB (MathWorks Inc.) were an initial learning rate of 0.001, chosen so that the weights approach the optimum smoothly; a maximum of 4 epochs, as the learning curve had converged by then; and a mini-batch size of 64, to balance memory use and computation. The transferred DCNN model generated the probability that each image contained a drug-sensitive mutation, and a probability higher than 0.5 was regarded as positive. To evaluate the generalization ability of the networks, 10-fold cross-validation was used [21]: the dataset was randomly separated into 10 equal-sized subsets, and in each iteration, one subset was held out to test the model trained on the remaining nine subsets. Original color images and the corresponding gray-scale images were prepared as input sources, as well as images containing only cell nuclei and images without cell nuclei [2].
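The 10-fold cross-validation described above can be sketched in Python with only the standard library. This is an illustrative assumption rather than the authors' MATLAB code: `k_fold_indices` is a hypothetical helper, and the fixed seed is arbitrary. The example uses the 3495 usable Lab 1 images from Table 1.

```python
import random


def k_fold_indices(n_samples, k=10, seed=0):
    """Yield (train_idx, test_idx) pairs for k-fold cross-validation.

    Indices are shuffled once and split into k nearly equal folds; each fold
    serves once as the test set while the other k - 1 folds form the training set.
    """
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # Spread the remainder so that fold sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        yield train_idx, test_idx
        start += size


# Example: the 3495 usable Lab 1 images (Table 1).
folds = list(k_fold_indices(3495, k=10))
print([len(test) for _, test in folds])  # [350, 350, 350, 350, 350, 349, 349, 349, 349, 349]
```

Splitting by image index, as here, can place images of the same tumor in both the training and test folds; grouping indices by case before splitting avoids that. The text above does not state which of the two was done.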

3. Results

Sample GIST images with different mutations from different laboratories are presented in Figure 1A–D. Although similar staining protocols were used, the images from different laboratories had slightly different color hues.

Table 2 shows the prediction accuracies of the different DCNN models with transfer learning on the combination of all three datasets (70–85%) and on the largest dataset, Lab 1 (75–87%). Of the four DCNN models, DenseNet201 produced the best results; overall, the Lab 1 model established with DenseNet201 provided the highest prediction accuracy (87%). Testing this Lab 1 DenseNet201 model on the Lab 2 and Lab 3 datasets produced testing accuracies of 66% and 65%, respectively, whereas models trained individually on the Lab 2 and Lab 3 datasets reached accuracies of 79% and 94%, respectively (Table 3). This drop (from 87% to 66% and 65%) and recovery (to 79% and 94%) of accuracy when models were applied across laboratories indicated the presence of cross-institutional inconsistency.

Table 2.

Accuracies of the different DCNNs on the combination of the three datasets (Lab 1, Lab 2, and Lab 3) and on the largest dataset (Lab 1).

| DCNN | Combination (Lab 1, Lab 2, and Lab 3) | Lab 1 |
|---|---|---|
| AlexNet | 70% | 75% |
| Inception V3 | 77% | 81% |
| ResNet101 | 84% | 86% |
| DenseNet201 | 85% | 87% |

Table 3.

Accuracies of the Lab 1 model (DenseNet201) on the Lab 2 and Lab 3 datasets, and of models built using the Lab 2 and Lab 3 datasets.

| Dataset | Testing Accuracy (Lab 1 Model) | Accuracy of Model Trained on This Dataset |
|---|---|---|
| Lab 2 | 66% | 79% |
| Lab 3 | 65% | 94% |

To address this issue and to test which elements of the input images had the greatest impact on prediction accuracy, gray-scale images were prepared to estimate the discriminative power of the staining colors. Prediction accuracy dropped from 87% to 80% when the input color images were transformed into gray-scale images. Similar approaches were applied to investigate the contributions of the cell nuclei and cytoplasm to classification: accuracy dropped from 87% to 81% when nucleus-only images were input, and from 87% to 79% when the nuclei were removed from the input images. The results are shown in Table 4.
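The gray-scale and nucleus-only/nucleus-removed inputs can be sketched in Python on images stored as lists of rows of (R, G, B) tuples. Two assumptions are labeled here: the gray conversion uses the weights documented for MATLAB's `rgb2gray` (0.2989, 0.5870, 0.1140, close to the ITU-R BT.601 luma weights), although the text does not state which conversion was used; and the nucleus mask is taken as given, since the segmentation step (the study cites [2]) is not reproduced. `to_gray`, `apply_mask`, and the white fill value are hypothetical choices.

```python
def to_gray(image):
    """Convert an RGB image (list of rows of (R, G, B) tuples) to a single gray channel.

    Weights are those documented for MATLAB's rgb2gray (an assumption here).
    """
    return [[round(0.2989 * r + 0.5870 * g + 0.1140 * b) for (r, g, b) in row] for row in image]


def apply_mask(image, nucleus_mask, keep_nuclei=True, fill=(255, 255, 255)):
    """Keep only nucleus pixels (keep_nuclei=True) or only non-nucleus pixels.

    Pixels that are not kept are replaced by `fill` (white, an assumption meant
    to mimic empty slide background).
    """
    return [
        [px if is_nucleus == keep_nuclei else fill for px, is_nucleus in zip(img_row, mask_row)]
        for img_row, mask_row in zip(image, nucleus_mask)
    ]


# Hypothetical 1 x 2 image: a bluish (hematoxylin-rich) nucleus pixel and a pinkish cytoplasm pixel.
image = [[(50, 40, 120), (230, 150, 180)]]
mask = [[True, False]]  # assumed output of a separate nucleus segmentation step

print(to_gray(image))                              # [[52, 177]]
print(apply_mask(image, mask, keep_nuclei=True))   # [[(50, 40, 120), (255, 255, 255)]]
print(apply_mask(image, mask, keep_nuclei=False))  # [[(255, 255, 255), (230, 150, 180)]]
```

Pretrained networks such as DenseNet201 expect three input channels, so a gray image is usually replicated across channels before it is fed to the network.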

Table 4.

Performance of DenseNet201 trained on the Lab 1 dataset using gray-scale images, nucleus-only images, and images without cell nuclei.

| Input Images | Accuracy | Sensitivity | Specificity | Positive Predictive Value | Negative Predictive Value | AUC |
|---|---|---|---|---|---|---|
| Gray-scale | 80% | 87% | 73% | 79% | 83% | 0.8645 |
| Only cell nuclei | 81% | 87% | 73% | 80% | 83% | 0.8832 |
| Without cell nuclei | 79% | 88% | 67% | 77% | 83% | 0.8562 |
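Table 4 reports accuracy, sensitivity, specificity, positive and negative predictive values, and AUC. A minimal Python sketch of how these quantities can be computed from per-image model outputs, assuming the 0.5 threshold stated in the Methods; the function names and scores are hypothetical, and the study's own MATLAB computation is not described in detail. AUC is computed with the rank (Mann-Whitney) formulation, which equals the trapezoidal area under the empirical ROC curve.

```python
def _ratio(num, den):
    """Safe division: return NaN when the denominator is zero."""
    return num / den if den else float("nan")


def binary_metrics(probabilities, labels, threshold=0.5):
    """Threshold per-image probabilities and compute confusion-matrix metrics.

    A probability strictly greater than `threshold` counts as a positive
    (drug-sensitive) prediction.
    """
    tp = fp = tn = fn = 0
    for p, y in zip(probabilities, labels):
        predicted_positive = p > threshold
        if predicted_positive and y == 1:
            tp += 1
        elif predicted_positive and y == 0:
            fp += 1
        elif not predicted_positive and y == 0:
            tn += 1
        else:
            fn += 1
    return {
        "accuracy": _ratio(tp + tn, tp + fp + tn + fn),
        "sensitivity": _ratio(tp, tp + fn),  # true-positive rate
        "specificity": _ratio(tn, tn + fp),  # true-negative rate
        "ppv": _ratio(tp, tp + fp),          # positive predictive value
        "npv": _ratio(tn, tn + fn),          # negative predictive value
    }


def roc_auc(probabilities, labels):
    """AUC as the probability that a random positive outranks a random negative (ties count 1/2)."""
    pos = [p for p, y in zip(probabilities, labels) if y == 1]
    neg = [p for p, y in zip(probabilities, labels) if y == 0]
    wins = sum(1.0 if a > b else 0.5 if a == b else 0.0 for a in pos for b in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical scores for six images (1 = drug-sensitive, 0 = other).
probs = [0.9, 0.7, 0.8, 0.5, 0.6, 0.3]
labels = [1, 1, 1, 0, 0, 0]
print(binary_metrics(probs, labels))
print(roc_auc(probs, labels))  # 1.0
```

In this toy example, the image scored exactly 0.5 counts as negative because the rule requires a probability higher than 0.5; AUC, unlike the other metrics, does not depend on the threshold.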

4. Discussion

In this study, we used several DCNN architectures to identify a direct link between tumor cell morphology and the mutational status of specific genes. Several recent studies have addressed a similar issue, phenotype–genotype correlation in cancers, using deep learning systems [1,2,3,4,5,6]. However, these studies included only a few cases of mesenchymal tumors [2]. The largest such dataset to date, reported by Fu et al. in 2020 [2], incorporated 200 malignant mesenchymal tumor (sarcoma) cases. Sarcoma is an extremely heterogeneous group of diseases, encompassing more than 50 entities. Although a breakdown of sarcoma types was not provided in that study, the finding that MDM2, TP53, and RB1 were discriminative genes suggests that a significant portion of the dataset comprised liposarcomas and complex-karyotype sarcomas such as leiomyosarcoma and osteosarcoma, which are currently not subjects of targeted therapy. Xu et al. conducted a radiogenomic study on GISTs in 2018 and reported that GISTs with and without KIT exon 11 mutations could be discriminated by AI texture analysis of enhanced computed tomography images [22]. However, only four cases of GIST without KIT exon 11 mutations were included in the validation cohort of that study, which limits the interpretation [22].

As mentioned in the Introduction, we chose GIST as the subject for several reasons. Tumorigenesis in cancer usually involves a stepwise accumulation of hundreds to thousands of genetic mutations, some of which can be expected to contribute to morphological changes to varying degrees. Owing to the intratumoral heterogeneity common in cancers, the same tumor usually contains regions with different morphologies. Given this diversity, establishing a link between certain mutations of a gene and certain patterns of morphological change may require thousands of input images. Submitting whole-slide images for analysis may be beneficial because several morphological patterns can be included simultaneously, which exempts pathologists from selecting representative images of each pattern. However, whole-slide images impose heavy loads on internet transmission, and analyzing such large amounts of data is time consuming, making this approach less appealing in daily practice. GISTs have the advantages of a low mutational burden and low intratumoral heterogeneity, making them suitable subjects for this pioneering study [23,24]. Clinicians can conveniently upload representative high-power field images in .jpg format, which are usually <1000 KB in size, for analysis on a server. Because the drug-target genes (KIT/PDGFRA) in GISTs are the major protein-producing genes in these cells, based on their high transcription levels in the gene expression profile [25], they are expected to have a major impact on morphological alterations; therefore, the number of input images needed to reach a meaningful prediction may be reduced. The result of our study is encouraging: with ~5000 images, a prediction accuracy greater than 80% was obtained.

Among the DCNNs tested, DenseNet201 performed best and would be a good choice for clinical application. However, model training required heavy computation, and a large amount of data had to be collected for the best fit. These are the two main drawbacks compared with conventional image processing and feature extraction methods [26,27]. Nevertheless, automatic feature extraction and combination in DCNNs reduce misunderstandings and time-consuming communication between pathologists and computer scientists, especially for gene mutation tasks, in which subtle information in pathological images is difficult to discover with the human eye.

In this study, we found cross-institutional inconsistency when applying DCNN models. This reduction in prediction accuracy across institutions may be caused by differences in slide quality, staining procedures and reagents, batch effects (such as humidity and pH), and the degree of slide color fading during storage. To explore the cause of the inconsistency, we hypothesized that the colors in tissue images play an important role in DCNN interpretation, since different laboratories usually have similar but slightly different staining protocols and environments. Pathologists rely on H&E sections to make correct diagnoses. Hematoxylin (H) is the major dye that gives nuclei a blue color, and eosin (E) is the major dye that gives cytoplasm a red color [28]. Within this color spectrum (from deep to light, from blue to red, and their mixture, purple), pathologists gain experience linking certain color combinations to certain diagnoses. Transforming color images into gray-scale images reduced the prediction rate, indicating that image color did play a role in DCNN interpretation. Paradoxically, the prediction rate improved when all the images were combined for analysis after gray-scale transformation. Because this transformation removes the color inconsistency between laboratories, which is usually due to different staining protocols and environmental factors such as humidity and temperature, this finding suggests that such inconsistency may play an even more important role in DCNN interpretation error. The importance of color calibration of input images is therefore clear [29].
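Color calibration can take many forms. As one simple illustration, not a method used in this study, the Python sketch below shifts and scales each RGB channel of a source image so that its mean and standard deviation match those of a reference image, for example one from another laboratory. Reinhard-style color transfer performs the same mean/standard-deviation matching in the lαβ color space, and stain-specific normalization methods go further; the RGB-space simplification and the function names are assumptions.

```python
import statistics


def channel_stats(image):
    """Per-channel (mean, population standard deviation) of an RGB image (list of rows)."""
    channels = list(zip(*(px for row in image for px in row)))
    return [(statistics.fmean(c), statistics.pstdev(c)) for c in channels]


def match_color_stats(source, reference):
    """Match each RGB channel of `source` to the reference mean/std, clipped to 0-255."""
    src = channel_stats(source)
    ref = channel_stats(reference)
    out = []
    for row in source:
        new_row = []
        for px in row:
            new_px = []
            for value, (s_mean, s_std), (r_mean, r_std) in zip(px, src, ref):
                scale = r_std / s_std if s_std > 0 else 1.0  # leave flat channels unscaled
                v = (value - s_mean) * scale + r_mean
                new_px.append(min(255, max(0, round(v))))
            new_row.append(tuple(new_px))
        out.append(new_row)
    return out


# Hypothetical two-pixel images from two laboratories with different staining hues.
source = [[(100, 50, 150), (200, 150, 250)]]
reference = [[(120, 60, 160), (160, 100, 200)]]
print(match_color_stats(source, reference))  # [[(120, 60, 160), (160, 100, 200)]]
```

Computing statistics over whole images, as here, also normalizes away genuine differences in tissue composition; practical pipelines therefore often estimate them on tissue regions only or use stain-aware methods.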

As previously mentioned, an important factor affecting slide staining color is stain fading in archived and aged samples. Color fading may be asymmetric, uneven, or disproportionate, and its extent and severity may be affected by slide storage conditions (humidity, temperature, etc.), staining protocols, the quality of the staining reagents, intrinsic tissue factors, and extrinsic artifacts. Most studies, including this one, use de-identified data for which slide age is unknown. This limitation again stresses the importance of color control in digital imaging, and the United States Food and Drug Administration has released guidance on the management of color reproducibility in digital pathology images [30]. We plan to apply further image processing techniques to a future image database with time stamps to clarify this issue.

Surgical pathologists are greatly interested in the major subcellular locations of morphological changes caused by certain mutations. When the nuclei were segmented out, a notable drop in prediction rate was observed for both nucleus-only and cytoplasm-only images. However, the reduction was limited, leading to the hypothesis that both nuclei and cytoplasm are major subcellular locations of morphological alterations, and that combining these two components generates synergistic and buffering effects on accurate prediction.

State-of-the-art machine learning models continue to broaden the scope of medical applications and can help bridge technical gaps between pathology laboratories. In areas where local hospitals cannot afford molecular laboratories, prediction models can help doctors obtain their patients' tumor mutational data in minutes, which greatly accelerates treatment. Although the result of this pioneering study is encouraging, challenges remain. Larger studies with more cases are needed to enhance prediction accuracy, to expand the classification subcategories, and to include cases with rare mutational patterns. Novel mutations are unlikely to be predicted by machine learning models, and doctors should always be alert to cases with unusual clinical or pathological presentations.

5. Conclusions

In summary, we demonstrated that a deep learning system can be used to establish a link between cellular morphology and certain gene mutations. This approach helps to reduce the waiting time for mutational analysis and may benefit patients by facilitating targeted therapy in the future.

Acknowledgments

We thank Yih-Leong Chang of National Taiwan University Hospital for administrative support with this study. The authors also thank all the technical staff of the Department of Pathology at Fu Jen Catholic University Hospital for their contributions.

Author Contributions

C.-W.L.: Conceptualization; Data curation; Formal analysis; Funding acquisition; Investigation; Methodology; Resources; Software; Validation; Visualization; Roles/Writing-original draft. P.-W.F.: Data curation; Formal analysis; Investigation; Resources. H.-Y.H.: Data curation; Formal analysis; Investigation; Resources. C.-M.L.: Data curation; Project administration; Methodology; Resources; Supervision; Software; Validation; Visualization; Roles/Writing-original draft; Writing-review & editing. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Ministry of Science and Technology of Taiwan [grant numbers NSC102-2320-B-002 to C.-W.L.] and Fu Jen Catholic University Hospital [grant number PL-20190800X to C.-W.L.].

Institutional Review Board Statement

The study was conducted according to the guidelines of the Declaration of Helsinki, and approved by the Institutional Review Board of Fu Jen Catholic University (No. C107134; 20 June 2019).

Informed Consent Statement

Informed consent was waived for this study because anonymized images from an archived pathology tissue bank were used.

Data Availability Statement

The data presented in this study are available on request from the corresponding author. The data are not publicly available due to ethical restriction on image distribution.

Conflicts of Interest

The authors declare no conflict of interest.

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

1. Krause J., Grabsch H.I., Kloor M., Jendrusch M., Echle A., Buelow R.D., Boor P., Luedde T., Brinker T.J., Trautwein C., et al. Deep learning detects genetic alterations in cancer histology generated by adversarial networks. J. Pathol. 2021;254:70–79. doi: 10.1002/path.5638.

2. Fu Y., Jung A.W., Torne R.V., Gonzalez S., Vöhringer H., Shmatko A., Yates L.R., Jimenez-Linan M., Moore L., Gerstung M. Pan-cancer computational histopathology reveals mutations, tumor composition and prognosis. Nat. Cancer. 2020;1:800–810. doi: 10.1038/s43018-020-0085-8.

3. Kather J.N., Heij L.R., Grabsch H.I., Loeffler C., Echle A., Muti H.S., Krause J., Niehues J.M., Sommer K.A.J., Bankhead P., et al. Pan-cancer image-based detection of clinically actionable genetic alterations. Nat. Cancer. 2020;1:789–799. doi: 10.1038/s43018-020-0087-6.

4. Chen M., Zhang B., Topatana W., Cao J., Zhu H., Juengpanich S., Mao Q., Yu H., Cai X. Classification and mutation prediction based on histopathology H&E images in liver cancer using deep learning. NPJ Precis. Oncol. 2020;4:1–7. doi: 10.1038/s41698-020-0120-3.

5. Kather J.N., Pearson A.T., Halama N., Jäger D., Krause J., Loosen S.H., Marx A., Boor P., Tacke F., Neumann U.P., et al. Deep learning can predict microsatellite instability directly from histology in gastrointestinal cancer. Nat. Med. 2019;25:1054–1056. doi: 10.1038/s41591-019-0462-y.

6. Coudray N., Ocampo P.S., Sakellaropoulos T., Narula N., Snuderl M., Fenyö D., Moreira A.L., Razavian N., Tsirigos A. Classification and mutation prediction from non–small cell lung cancer histopathology images using deep learning. Nat. Med. 2018;24:1559–1567. doi: 10.1038/s41591-018-0177-5.

7. Demetri G.D., von Mehren M., Antonescu C.R., DeMatteo R.P., Ganjoo K.N., Maki R.G., Pisters P.W., Raut C.P., Riedel R.F., Schuetze S., et al. NCCN task force report: Update on the management of patients with gastrointestinal stromal tumors. J. Natl. Compr. Canc. Netw. 2010;8(Suppl. 2):S1–S41; quiz S42–S44. doi: 10.6004/jnccn.2010.0116.

8. Madabhushi A., Lee G. Image analysis and machine learning in digital pathology: Challenges and opportunities. Med. Image Anal. 2016;33:170–175. doi: 10.1016/j.media.2016.06.037.

9. Lo C., Wu Y., Li Y.J., Lee C. Computer-aided bacillus detection in whole-slide pathological images using a deep convolutional neural network. Appl. Sci. 2020;10:4059. doi: 10.3390/app10124059.

10. Chiang T., Huang Y., Chen R., Huang C., Chang R. Tumor detection in automated breast ultrasound using 3-D CNN and prioritized candidate aggregation. IEEE Trans. Med. Imaging. 2018;38:240–249. doi: 10.1109/TMI.2018.2860257.

11. Mukherjee P., Zhou M., Lee E., Schicht A., Balagurunathan Y., Napel S., Gillies R., Wong S., Thieme A., Leung A., et al. A shallow convolutional neural network predicts prognosis of lung cancer patients in multi-institutional computed tomography image datasets. Nat. Mach. Intell. 2020;2:274–282. doi: 10.1038/s42256-020-0173-6.

12. Litjens G., Sánchez C.I., Timofeeva N., Hermsen M., Nagtegaal I., Kovacs I., Hulsbergen-van de Kaa C., Bult P., van Ginneken B., van der Laak J. Deep learning as a tool for increased accuracy and efficiency of histopathological diagnosis. Sci. Rep. 2016;6:26286. doi: 10.1038/srep26286.

13. Samala R.K., Chan H., Hadjiiski L.M., Helvie M.A., Cha K.H., Richter C.D. Multi-task transfer learning deep convolutional neural network: Application to computer-aided diagnosis of breast cancer on mammograms. Phys. Med. Biol. 2017;62:8894–8908. doi: 10.1088/1361-6560/aa93d4.

14. Lo C., Chen Y., Weng R., Hsieh K.L. Intelligent glioma grading based on deep transfer learning of MRI radiomic features. Appl. Sci. 2019;9:4926. doi: 10.3390/app9224926.

15. Corless C.L., McGreevey L., Haley A., Town A., Heinrich M.C. KIT Mutations are Common in Incidental Gastrointestinal Stromal Tumors One Centimeter Or Less in Size. Am. J. Pathol. 2002;160:1567–1572. doi: 10.1016/S0002-9440(10)61103-0.

16. Heinrich M.C., Corless C.L., Duensing A., McGreevey L., Chen C.J., Joseph N., Singer S., Griffith D.J., Haley A., Town A., et al. PDGFRA Activating Mutations in Gastrointestinal Stromal Tumors. Science. 2003;299:708–710. doi: 10.1126/science.1079666.

17. Krizhevsky A., Sutskever I., Hinton G.E. ImageNet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012;25:1097–1105. doi: 10.1145/3065386.

18. Szegedy C., Liu W., Jia Y., Sermanet P., Reed S., Anguelov D., Erhan D., Vanhoucke V., Rabinovich A. Going deeper with convolutions. arXiv:1409.4842. 2015.

19. He K., Zhang X., Ren S., Sun J. Deep Residual Learning for Image Recognition; Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR); Las Vegas, NV, USA. 27–30 June 2016; pp. 770–778.

20. Huang G., Liu S., Van der Maaten L., Weinberger K.Q. CondenseNet: An Efficient DenseNet Using Learned Group Convolutions; Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; Salt Lake City, UT, USA. 18–23 June 2018; pp. 2752–2761.

21. Field A. Discovering Statistics Using IBM SPSS Statistics. Sage; Newcastle upon Tyne, UK: 2013.

22. Xu F., Ma X., Wang Y., Tian Y., Tang W., Wang M., Wei R., Zhao X. CT texture analysis can be a potential tool to differentiate gastrointestinal stromal tumors without KIT exon 11 mutation. Eur. J. Radiol. 2018;107:90–97. doi: 10.1016/j.ejrad.2018.07.025.

23. Hatakeyama K., Nagashima T., Ohshima K., Ohnami S., Ohnami S., Shimoda Y., Serizawa M., Maruyama K., Naruoka A., Akiyama Y., et al. Mutational burden and signatures in 4000 Japanese cancers provide insights into tumorigenesis and response to therapy. Cancer Sci. 2019;110:2620–2628. doi: 10.1111/cas.14087.

24. Liang C., Yang C., Flavin R., Fletcher J.A., Lu T.P., Lai I.R., Li Y.I., Chang Y.L., Lee J.C. Loss of SFRP1 expression is a key progression event in gastrointestinal stromal tumor pathogenesis. Hum. Pathol. 2021;107:69–79. doi: 10.1016/j.humpath.2020.10.010.

25. Yamaguchi U., Nakayama R., Honda K., Ichikawa H., Hasegawa T., Shitashige M., Ono M., Shoji A., Sakuma T., Kuwabara H., et al. Distinct gene expression-defined classes of gastrointestinal stromal tumor. J. Clin. Oncol. 2008;26:4100–4108. doi: 10.1200/JCO.2007.14.2331.

26. Lo C., Chen C., Yeh Y., Chang C., Yeh H. Quantitative Analysis of Melanosis Coli Colonic Mucosa using Textural Patterns. Appl. Sci. 2020;10:404. doi: 10.3390/app10010404.

27. Lo C.M., Weng R.C., Cheng S.J., Wang H.J., Hsieh K.L. Computer-Aided Diagnosis of Isocitrate Dehydrogenase Genotypes in Glioblastomas from Radiomic Patterns. Medicine. 2020;99:e19123. doi: 10.1097/MD.0000000000019123.

28. Kleczek P., Jaworek-Korjakowska J., Gorgon M. A Novel Method for Tissue Segmentation in High-Resolution H&E-Stained Histopathological Whole-Slide Images. Comput. Med. Imaging Graph. 2020;79:101686. doi: 10.1016/j.compmedimag.2019.101686.

29. Bautista P.A., Hashimoto N., Yagi Y. Color standardization in whole slide imaging using a color calibration slide. J. Pathol. Inform. 2014;5:4. doi: 10.4103/2153-3539.126153.

30. Clarke E.L., Treanor D. Colour in Digital Pathology: A Review. Histopathology. 2017;70:153–163. doi: 10.1111/his.13079.
