Significance

Video is an increasingly common source of political information. Although conventional wisdom suggests that video is much more persuasive than other communication modalities such as text, this assumption has seldom been tested in the political domain. Across two large-scale randomized experiments, we find clear evidence that “seeing is believing”: individuals are more likely to believe an event took place when shown information in video versus textual form. When it comes to persuasion, however, the advantage of video over text is markedly less pronounced, with only small effects on attitudes and behavioral intentions. Together, these results challenge popular narratives about the unparalleled persuasiveness of political video versus text.

Keywords: political persuasion, communication modality, video, text, generalizability

Abstract

Concerns about video-based political persuasion are prevalent in both popular and academic circles, predicated on the assumption that video is more compelling than text. To date, however, this assumption remains largely untested in the political domain. Here, we provide such a test. We begin by drawing a theoretical distinction between two dimensions for which video might be more efficacious than text: 1) one’s belief that a depicted event actually occurred and 2) the extent to which one’s attitudes and behavior are changed. We test this model across two high-powered survey experiments varying exposure to politically persuasive messaging (total n = 7,609 Americans; 26,584 observations). Respondents were shown a selection of persuasive messages drawn from a diverse sample of 72 clips. For each message, they were randomly assigned to one of three conditions: a short video, a detailed transcript of the video, or a control condition. Overall, we find that individuals are more likely to believe an event occurred when it is presented in video versus textual form, but the impact on attitudes and behavioral intentions is much smaller. Importantly, for both dimensions, these effects are highly stable across messages and respondent subgroups. Moreover, when it comes to attitudes and engagement, the difference between the video and text conditions is comparable to, if not smaller than, the difference between the text and control conditions. Taken together, these results call into question widely held assumptions about the unique persuasive power of political video over text.

With the rise of social media, video is more important than ever as a means of political persuasion (1). Although video has long been a popular tool for political communication (2), video-sharing websites such as YouTube have created new opportunities for individuals to seek out and encounter political content online (3–5). This growing prominence of video as a political medium raises concerns that for many people “seeing is believing”—and therefore that video may be especially persuasive relative to the more traditional modality of text. These fears are particularly acute given the newfound ability of artificial intelligence to generate ultra-realistic “deepfake” videos of events that never occurred (6, 7).

Nevertheless, despite the widely held intuition that video is more believable and compelling than text, surprisingly little research to date has examined whether this assumption applies in political contexts. Outside the domain of politics, a long line of research has yielded inconclusive findings regarding the impact of video versus text on recall of factual information (e.g., refs. 8–11), engagement with and attention to message content (12–14), and opinion change (15, 16). Within the political realm, the relative persuasive advantage of video versus text likewise remains an open question (17–19). Although some scholars argue that video’s audiovisual components can improve the recall of political information (20, 21) and facilitate persuasion (22–25), others suggest that textual information may more effectively mobilize political action (26).

Furthermore, whereas past research suggests that persuasive writing (e.g., print news, op-eds) can cause sizable and lasting changes in policy attitudes (27–29), video-based persuasion—particularly in the form of political advertising—seems to have, at most, small and short-lived effects on candidate evaluations and voting intentions (30–32, although see ref. 33). Taken at face value, these results might imply a persuasive advantage of text over video. However, it is difficult to draw comparisons between these two strands of research, which tend to employ different methodologies and study different outcome variables. For instance, prior studies that examine the effects of political advertising on voting behavior may detect less persuasion than text-based studies focused on policy attitudes simply because individuals have stronger prior beliefs about candidates versus issues (34, 35). As such, it remains unclear whether political video can meaningfully persuade the public and, if so, whether its effects exceed those of equivalent text modalities.

Here, we directly test whether political video is more compelling than text. We begin with the observation that an enhanced impact of video (relative to text) could occur across either or both of two distinct dimensions. First, there is the belief that the information being conveyed is genuine—for example, that a depicted event actually occurred or that a speaker actually made a particular claim. Second, there is the extent to which this information is persuasive—that is, the extent to which the information alters individuals’ attitudes or behavior. These two dimensions are theoretically dissociable in that individuals might believe a claim was made but not find that claim persuasive, or they might not believe a claim was made but nonetheless update their attitudes (e.g., because the presented information resonates with their prior beliefs).

We hypothesize that communication modality strongly influences the first dimension but may have a lesser impact on the second. Previous work suggests that the ease with which information is processed (its “fluency”) shapes its perceived truthfulness (36, 37). To the extent that videos are easier to process than text, they may therefore feel intuitively more believable (for a discussion, see ref. 38). As a result, it is perhaps unsurprising that multimodal misinformation is often viewed as highly credible (38–40)—especially relative to textual misinformation (refs. 25 and 41, though see ref. 42). However, even if individuals more readily believe that an event occurred if it is shown on video versus relayed in text, this increased credibility may or may not have downstream consequences for attitudes and behavior, particularly when it comes to highly polarized issues. By decomposing the impact of video versus text into these two elements, we can more precisely identify the mechanisms that underlie previously documented communication modality effects.

To test this conceptual model, we conducted two high-powered survey experiments with diverse US samples. The first of these studies was fielded on Lucid between March and April of 2021 (Study 1; n = 4,266; 16,735 total observations), and the second was fielded on Dynata in May of 2021 (Study 2; n = 3,343; 9,849 total observations). In both studies, respondents were presented with a random selection of persuasive messages. For each message, respondents were randomly assigned to watch a short video clip, read an annotated transcript of the video clip, or receive no new information (the control condition). We employed a within-subject design in which respondents could be assigned to different experimental conditions for each of the messages they were shown. We sought to include a wide variety of policy-relevant clips in order to understand the general effect of video versus text across subject areas (43, 44); to this end, across our two studies, we incorporated a total of 72 persuasive messages spanning a range of topics and varying in the extent to which their content was political or nonpolitical. For Study 1, we examined 48 messages, all drawn from the Peoria Project’s database of professionally produced political ads. These videos were uniformly political but encompassed a diverse array of hot-button issues (e.g., climate change, healthcare, the minimum wage). In contrast, for Study 2, we focused on a single issue: the COVID-19 pandemic. We compiled a set of 24 clips, each of which was widely viewed on YouTube during the peak of the pandemic in the United States (following guidance from ref. 45). Although some of these clips were again political in nature (e.g., an MSNBC clip of former president Donald Trump discussing testing rates), many were not (e.g., a demonstration of the benefits of handwashing in preventing the spread of the virus). Additional details about the stimulus set are available in SI Appendix, Stimulus Selection.

We examined responses to these messages across four categories of outcomes. In line with our proposed two-dimensional model, we first evaluated the believability of each message by asking respondents in the two treatment conditions to rate the extent to which they thought the events shown in the video or described in the transcript actually took place. For example, for a clip that contained footage of Senator Bernie Sanders advocating for free public college, we asked respondents to rate the extent to which they believed Sanders “actually said that tuition to public colleges and universities should be free.” We then measured the persuasiveness of each message by having all respondents—in both the treatment and control groups—report their level of agreement with the message’s core persuasive claim (e.g., their agreement that tuition to public colleges and universities should be free). As a secondary measure of persuasion, we then asked respondents in the two treatment groups to indicate their likelihood of sharing the message with a friend or colleague. Finally, for all respondents, we assessed personal engagement using two measures: respondents’ interest in learning more about the message’s topic and their perceptions of the importance of the message’s topic relative to other issues (Study 1 only).

Results

To test our main hypotheses, we fit a series of Bayesian multilevel linear regression models given the clustered structure of the underlying data (46–48). For each outcome variable, our primary quantity of interest is the average treatment effect (ATE) of exposure to video versus text. The dependent variables in all cases are standardized (see SI Appendix, Results Using Untransformed Dependent Variables for results using the untransformed dependent variables); the reported treatment effects are thus expressed in units of SD. Following our preregistration, we allow the ATE (among other parameters) to vary across both messages and respondents, and we specify vague, weakly informative prior distributions for all model parameters. For each of our analyses, we report the posterior median and the 95% highest posterior density interval (HPDI). The HPDI is the narrowest region that covers the value of the ATE with a 95% probability given the data and model. Full model specifications and diagnostics are reported in SI Appendix, Model Specification and Diagnostics.
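The two summary steps described above, standardizing an outcome and taking the narrowest 95% interval of a set of posterior draws, can be sketched as follows. This is a minimal illustration in Python, not the authors' brms code: the function names are hypothetical, the use of the sample SD is an assumed convention, and the draws are simulated stand-ins rather than the study's posterior.

```python
import math
import random
import statistics


def standardize(values):
    """Rescale ratings to mean 0 and SD 1 (sample SD; an assumed convention)."""
    mean = statistics.fmean(values)
    sd = statistics.stdev(values)
    return [(v - mean) / sd for v in values]


def hpdi(draws, mass=0.95):
    """Return the narrowest interval that contains `mass` of the draws."""
    xs = sorted(draws)
    n = len(xs)
    k = math.ceil(mass * n)  # number of draws the interval must cover
    if not 1 <= k <= n:
        raise ValueError("mass must lie in (0, 1] and draws must be non-empty")
    # Slide a window of k consecutive sorted draws; keep the narrowest one.
    start = min(range(n - k + 1), key=lambda i: xs[i + k - 1] - xs[i])
    return xs[start], xs[start + k - 1]


# Simulated stand-in for posterior draws of an ATE (not the study's posterior).
rng = random.Random(0)
draws = [rng.gauss(0.26, 0.02) for _ in range(4000)]
low, high = hpdi(draws)
print(f"median = {statistics.median(draws):.2f}, 95% HPDI = [{low:.2f}, {high:.2f}]")
```

For a symmetric posterior the HPDI is close to the equal-tailed interval; the two diverge when the posterior is skewed, which is when the "narrowest region" definition matters.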

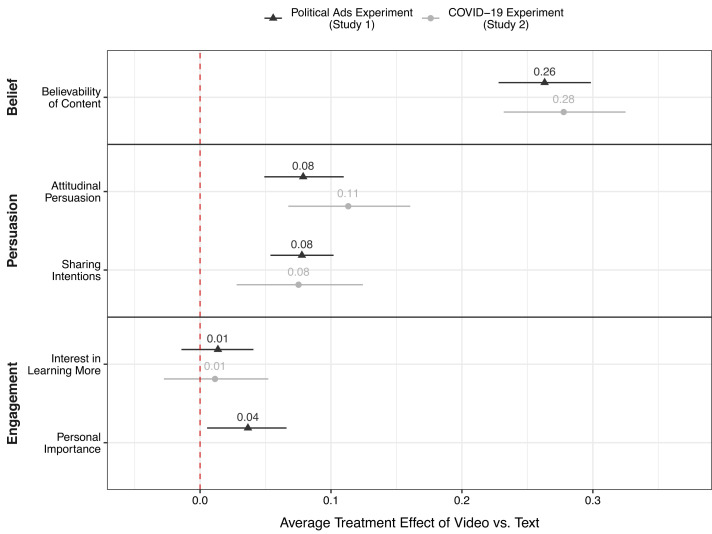

Overall, the effects of video versus text are remarkably similar across our two studies (Fig. 1). In both cases, we observe a positive effect of video versus text on the first dimension of our model: respondents’ belief that the presented information is authentic. Specifically, we find that respondents in the video condition are, on average, more likely to believe the events depicted in a message actually occurred, relative to respondents in the text condition (Study 1: 0.26 SD, 95% HPDI = [0.23, 0.30]; Study 2: 0.28 SD, 95% HPDI = [0.23, 0.33]). Therefore, as expected, video seems modestly but meaningfully more believable than text. However, the effects are much smaller for the second dimension of our model: the extent to which the presented information is persuasive. In particular, we find a precisely estimated but small effect of video versus text on respondents’ attitudes (Study 1: 0.08 SD, 95% HPDI = [0.05, 0.11]; Study 2: 0.11 SD, 95% HPDI = [0.07, 0.16]) and intended sharing behavior (Study 1: 0.08 SD, 95% HPDI = [0.05, 0.10]; Study 2: 0.08 SD, 95% HPDI = [0.03, 0.12]). Critically, the estimated credible intervals for these two outcome variables rule out ATEs larger than 0.16 SD with greater than 95% probability, whereas the credible intervals for the belief variable suggest ATEs of at least 0.23 SD. Thus, the effect of video versus text appears substantially larger for belief than for persuasion. Moreover, we find minimal evidence that video is more engaging than text, either in terms of respondents’ interest in learning more about the message (Study 1: 0.01 SD, 95% HPDI = [−0.01, 0.04]; Study 2: 0.01 SD, 95% HPDI = [−0.03, 0.05]) or the perceived importance of the message’s topic (Study 1: 0.04 SD, 95% HPDI = [0.01, 0.07]). In sum, although video may be more believable than text, this enhanced credibility does not seem to be accompanied by a commensurate increase in persuasion or personal engagement.
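The comparison of interval bounds in the preceding paragraph can be reproduced directly from the reported numbers. The short Python check below simply copies the 95% HPDIs quoted above; the variable names are illustrative.

```python
# Reported 95% HPDIs (lower, upper) in SD units, copied from the text above.
belief = {"Study 1": (0.23, 0.30), "Study 2": (0.23, 0.33)}
attitudes = {"Study 1": (0.05, 0.11), "Study 2": (0.07, 0.16)}
sharing = {"Study 1": (0.05, 0.10), "Study 2": (0.03, 0.12)}

# Largest upper bound across the two persuasion outcomes.
largest_persuasion_upper = max(
    upper for outcome in (attitudes, sharing) for _, upper in outcome.values()
)
# Smallest lower bound for the belief outcome.
smallest_belief_lower = min(lower for lower, _ in belief.values())

print(largest_persuasion_upper)  # 0.16
print(smallest_belief_lower)     # 0.23
print(smallest_belief_lower > largest_persuasion_upper)  # True: the intervals do not overlap
```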

Fig. 1.

ATE of assignment to the video versus text condition on ratings of believability, persuasion, and personal engagement. All estimates are expressed in units of SD. The point estimate of the ATE is based on the posterior median; specifically, we compute the median of the posterior distribution on the fixed effect parameter for the dummy variable indicating assignment to the video versus text condition. 95% HPDIs are displayed. Note that ratings of personal importance are only available for Study 1.

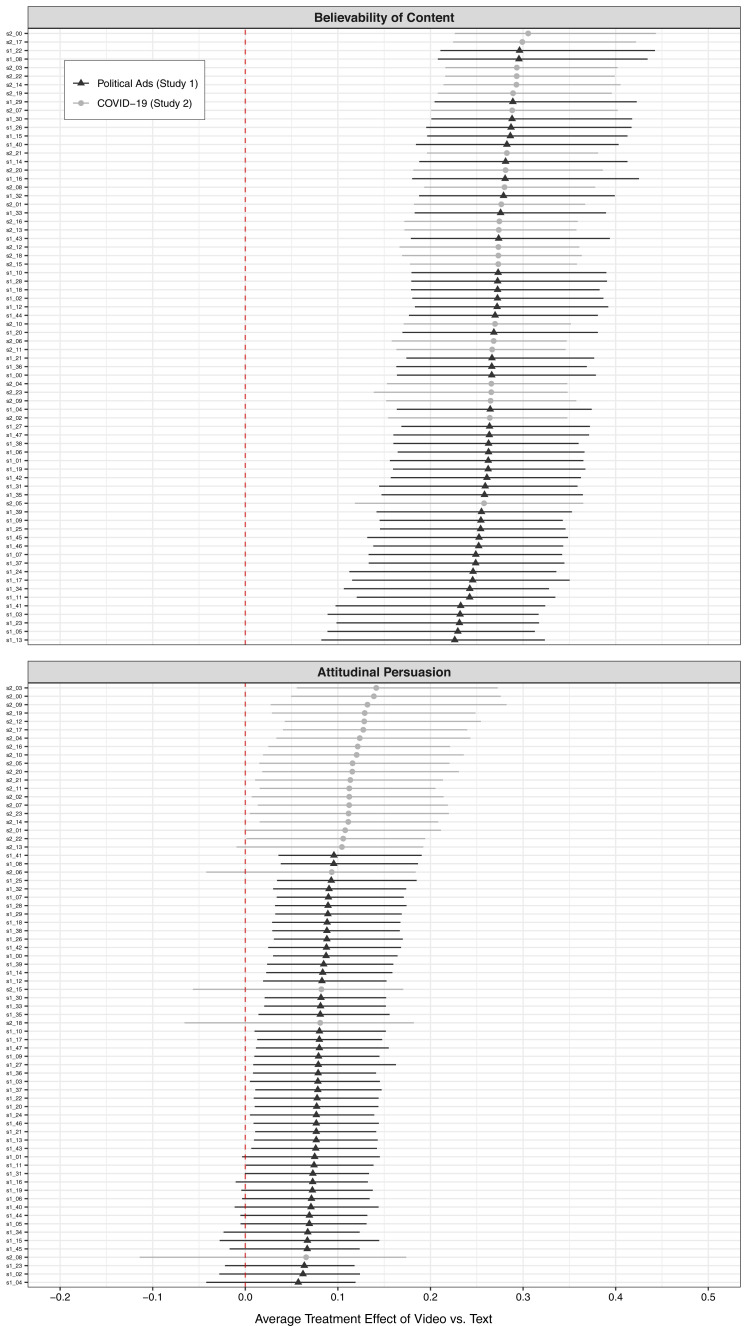

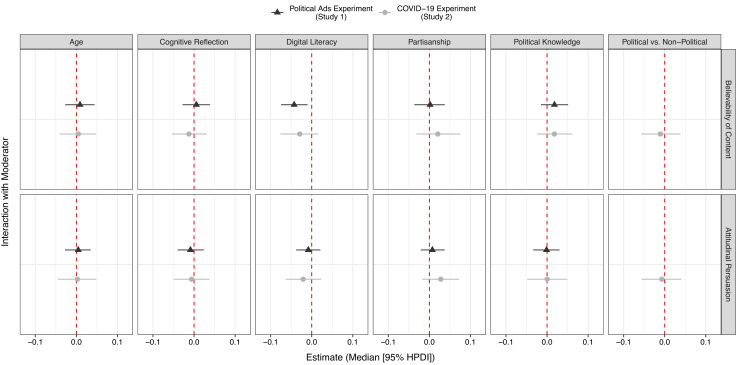

These aggregate patterns are likewise apparent for individual stimuli. When examining the ATEs of video versus text for each of our 72 persuasive messages, we find that the strongest ATE on attitudes remains smaller than the weakest ATE on beliefs (Fig. 2). In other words, we find that, across the board, the relative believability of video versus text is greater than the relative persuasiveness of these two modalities. Furthermore, for Study 2, we find few differences in treatment effects when comparing political and nonpolitical messages about COVID-19, suggesting that these limited persuasion effects are not confined to overtly political content (rightmost column of Fig. 3). Finally, the treatment effects appear quite stable across respondent subgroups (Fig. 3); we do not observe consistent differences in the persuasiveness of video versus text based on demographic traits (e.g., age), political attributes (e.g., partisanship, political knowledge), or personal dispositions (e.g., cognitive reflection). Altogether, our results therefore appear to be highly generalizable across different types of messages and across different subpopulations.

Fig. 2.

ATE of assignment to the video versus text condition disaggregated by persuasive message. All estimates are expressed in units of SD. Posterior medians and 95% HPDIs are displayed. Note that the message-specific ATEs in these comparisons are mildly regularized, improving their out-of-sample predictive accuracy on average (47). Additional information about each message is available in SI Appendix, Stimulus Selection.

Fig. 3.

Differences in the ATE of assignment to the video versus text condition based on respondent characteristics (age, cognitive reflection, digital literacy, partisanship, and political knowledge) and message characteristics (political versus nonpolitical, Study 2 only). The moderator variables in all cases are standardized, and the posterior medians and 95% HPDIs are displayed. A full summary of the moderator analyses is provided in SI Appendix, Moderator Models.

Importantly, however, the relatively small differences between video and text should not be taken as evidence that neither form of appeal is persuasive. On the contrary, both video and text seem to affect political attitudes and engagement. Using the control group as a benchmark, we find that exposure to text corresponds to a small but detectable change in respondents’ attitudes (Study 1: 0.07 SD, 95% HPDI = [0.04, 0.11]; Study 2: 0.14 SD, 95% HPDI = [0.08, 0.20]; see SI Appendix, Comparison to Control Condition). This difference in persuasion between the text and control conditions is similar to, if not larger than, the difference between video and text, suggesting that both forms of persuasive messaging have the potential to shape public opinion. Moreover, these two modalities do not meaningfully differ from one another in their effect on personal engagement, despite both increasing engagement relative to a true control in which no information was displayed. In Study 1, for example, compared to the control group, video and text have similar effects on respondents’ interest in learning more about the message’s topic (video versus control: 0.05 SD, 95% HPDI = [0.01, 0.09]; text versus control: 0.04 SD, 95% HPDI = [0.02, 0.08]) and perceptions of the importance of the message’s topic (video versus control: 0.15 SD, 95% HPDI = [0.11, 0.19]; text versus control: 0.11 SD, 95% HPDI = [0.08, 0.15]). Altogether, these results suggest that video has only a small added benefit over text when it comes to political attitudes and personal engagement.

Discussion

Across two large-scale studies and 72 video clips, we find that video has a significant, albeit modest, effect on the first dimension of our proposed model: whether presented information is believed to be real. Critically, however, for the second dimension of our model (whether this information is persuasive), video has a much smaller impact on individuals’ attitudes and sharing intentions relative to text. Even if video-based information is more believable than text, this heightened credibility does not seem to guarantee a similar boost in political persuasion. Our findings thus speak to the importance of distinguishing between believability and persuasion when studying media effects. Although conventional wisdom often assumes an intrinsic link between these two constructs, our results suggest that believability and persuasion are far from interchangeable.

Moreover, the relative persuasive advantage of video versus text may in fact be even smaller outside an experimental context. In both of our studies, the text-based treatments were presented in the form of a detailed transcript containing an exact replication of the audio output as well as a comprehensive description of key visual cues. In reality, politically persuasive writing may be structured quite differently (e.g., as a news article or opinion piece). To the extent that these more realistic formats are more compelling than a rote transcription, our studies likely overestimate the differences between video versus text and underestimate the differences between the text and control conditions. However, despite these concerns about external validity, our text-based treatments offer a crucial benefit: they allow us to hold constant the information presented across the two treatment groups. This design enables us to parse the persuasive effect of video that is attributable to the information it contains from the effect that is due to other factors (e.g., affective or visual cues). With this perspective in mind, we find that a substantial portion—as much as half—of video’s (relatively small) persuasive effect is tied to noninformational aspects of the medium. However, we cannot discern which features of video have the strongest influence on political persuasion; future work should take up this question.
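The "as much as half" figure can be approximated from the attitude estimates reported in Results. The sketch below is a back-of-the-envelope calculation in Python. It assumes that the video-versus-control effect is roughly the sum of the text-versus-control and video-versus-text posterior medians, and it ignores posterior uncertainty. The variable names are illustrative.

```python
# Posterior medians for attitudes (SD units), copied from Results.
video_vs_text = {"Study 1": 0.08, "Study 2": 0.11}
text_vs_control = {"Study 1": 0.07, "Study 2": 0.14}

for study in video_vs_text:
    # Assumption: effects add, so video vs. control = (text vs. control) + (video vs. text).
    video_vs_control = text_vs_control[study] + video_vs_text[study]
    noninformational_share = video_vs_text[study] / video_vs_control
    print(study, round(noninformational_share, 2))
# Study 1 0.53
# Study 2 0.44
```

Under these assumptions, roughly 44% to 53% of video's total effect on attitudes comes from the step from text to video, which holds the information constant and therefore reflects noninformational features of the medium.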

Given these results, basic assumptions about the primacy of political video over text should perhaps be revisited. One important implication of these findings is that current concerns about the unparalleled persuasiveness of video-based misinformation, including deepfakes, may be somewhat premature. In addition, these findings add nuance to longstanding debates regarding the efficacy of political advertising. Although previous studies find that political ads, particularly in video form, typically have small and fleeting effects on candidate evaluations and voting behavior, our results suggest that both video- and text-based ads have the potential to sway the public’s policy opinions, even for highly polarized topics like climate change, reproductive rights, or the COVID-19 pandemic. When exposed to persuasive content, members of the public tend to update their issue attitudes in the direction of the message, regardless of whether this content is delivered in video or textual form.

It should be noted, however, that although we observe only small differences in the persuasiveness of video versus text across our two studies, the effects of these two modalities may diverge more sharply outside an experimental context. In particular, it is possible that video is more attention grabbing than text, such that people scrolling on social media are more likely to attend to and therefore be exposed to video versus text. As a result, even if video has only a limited persuasive advantage over text within a controlled, forced-choice setting, it could still exert an outsized effect on attitudes and behavior in an environment where it receives disproportionate attention. Efforts to integrate this attentional component of media consumption into future studies may prove informative. Understanding how individuals interact with video versus text content can also help clarify why certain types of content are more or less likely to spread via social media. To this end, in both of our studies, we measured individuals’ stated likelihood of sharing each message with a friend or colleague. However, measures of real-world, rather than self-reported, sharing behavior may be able to better illuminate how video versus text content diffuses online. Field experiments administered directly on social media platforms may be helpful in this regard.

Overall, our results suggest minimal heterogeneity in the persuasiveness of video versus text across messages and respondent subgroups. That these effects are generally stable across messages is especially notable. Although our videos do not constitute a representative sample of all politically persuasive content, we sampled a large and relatively diverse set of clips. Consequently, if there is a powerful effect of video over text—as is often assumed—we would expect to observe it in our experiments. Nonetheless, future research should investigate whether our findings persist across an even more expansive stimulus set. In particular, although we observe clear effects of video on belief in unmanipulated content, future work should explore whether our results replicate in the context of misinformation, including deepfakes as well as so-called “shallow” or “cheap” fakes that use simple editing tricks to craft deceptive footage. In addition, future work should investigate the durability of these effects; although video appears marginally more persuasive than text immediately after exposure, these differences may dissipate over time. Finally, the conceptual model we introduce differentiating belief and persuasion can guide a wide range of future research on media effects both within and outside the political domain.

Materials and Methods

Data, study materials, and the replication code are available online (61). All hypotheses and analyses were preregistered (https://osf.io/uy8wc for Study 1; https://osf.io/5se6k for Study 2).

Participants.

For the political ads study (Study 1), we contracted with Lucid to recruit a diverse nonprobability sample of the American public (49) with a preregistered sample size of 4,000 respondents. A total of 8,381 respondents entered the study, 7,982 consented to participate, and 4,629 passed a series of technical checks confirming their ability to watch video content. Following our preregistration, we retained all respondents who viewed at least one experimental stimulus (n = 4,266; mean age = 54; 61.3% female). For the COVID-19 study (Study 2), we preregistered a sample size of 2,500 respondents, recruited via Dynata, but ended up including over 700 additional respondents to address concerns about the representativeness of the initial sample. In total, 6,082 respondents started the survey, 5,713 consented to participate, and 3,698 successfully completed the same technical checks as in Study 1. As before, we retained all respondents who viewed at least one experimental stimulus (n = 3,343; mean age = 40; 54.9% female). See SI Appendix, Sample Demographics for more information about sample demographics.

For both studies, we sought to recruit an attentive sample of respondents given recent concerns about rising rates of inattentiveness on online survey panels (50, 51). To filter out disengaged respondents, we required all respondents to pass a stringent audiovisual check confirming their ability and willingness to watch video content before proceeding to the rest of the study. Specifically, immediately after providing informed consent, respondents were shown a brief test clip and were then asked two questions to determine whether they both watched and listened to this clip. Respondents who failed to answer these questions correctly after two attempts were removed from our sample. SI Appendix, Survey Questionnaire describes this procedure in greater detail. In addition, the survey also included two instructional manipulation checks (“screeners”; see ref. 52) designed to assess respondent inattentiveness; when we stratify participants based on their responses to these two screener items, we find largely similar treatment effects across groups (see SI Appendix, Responses by Attentiveness).

Experimental Procedure.

Both studies employed a nearly identical experimental procedure. Respondents first answered a series of pretreatment questions, including a standard demographic battery, a four-item political knowledge scale (53), a four-item Cognitive Reflection Test (54, 55), and a six-item digital literacy scale (56).

After completing these items, respondents were assigned to view a random selection of messages presented in random order. For Study 1, respondents were shown four messages (from a list of 48), and for Study 2, respondents were shown three messages (from a list of 24). To avoid spillover across similar topics, we grouped these messages into high-level categories, and respondents were allowed to view only a single message from within a given category. For each message, respondents were then randomly assigned to one of three conditions: 1) the video condition, in which they were asked to watch a short video clip, 2) the text condition, in which they were asked to read a detailed transcription of the video clip, and 3) the control condition, in which no new information was provided. Further details about the randomization procedure can be found in SI Appendix, Randomization Procedure. Finally, after viewing each message, respondents completed a series of outcome variables described below in Measures.
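The assignment logic described above can be sketched as follows. This is an illustration under stated assumptions, not the authors' randomization code (which is documented in SI Appendix, Randomization Procedure). The category names and message IDs are hypothetical, and both categories and conditions are assumed to be drawn with equal probability.

```python
import random

CONDITIONS = ("video", "text", "control")

# Hypothetical catalog: category -> message IDs (placeholders, not the real stimuli).
CATALOG = {
    "climate": ["msg_01", "msg_02"],
    "healthcare": ["msg_03", "msg_04"],
    "minimum_wage": ["msg_05", "msg_06"],
    "immigration": ["msg_07", "msg_08"],
    "taxes": ["msg_09", "msg_10"],
}


def assign_messages(catalog, n_messages, rng):
    """Draw one message from each of n_messages distinct categories,
    shuffle their order, and assign each an independent random condition."""
    categories = rng.sample(sorted(catalog), n_messages)  # no category repeats
    chosen = [rng.choice(catalog[category]) for category in categories]
    rng.shuffle(chosen)  # random presentation order
    # Assumption: the three conditions are equally likely for every message.
    return [(message, rng.choice(CONDITIONS)) for message in chosen]


# Study 1 showed four messages per respondent; Study 2 showed three.
schedule = assign_messages(CATALOG, n_messages=4, rng=random.Random(2021))
for message, condition in schedule:
    print(message, condition)
```

Because the condition is drawn separately for each message, the same respondent can see one message as video, another as text, and a third in the control condition, which is what makes the design within-subject.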

For Study 1, all experimental stimuli were drawn from the Peoria Project’s database of politically persuasive messages (https://thepeoriaproject.org/videos/). For Study 2, we instead compiled our own set of widely viewed YouTube clips about the COVID-19 pandemic. See SI Appendix, Stimulus Selection for a full description of the stimulus selection process.

Measures.

We measured each of our dependent variables using five-point scales. Note that our outcome variables were all based on single-item scales. Although multi-item scales have important benefits, we opted to use single-item scales in light of concerns about the overall length of the survey instrument and the associated risks of respondent attrition and response satisficing (57).

First, we asked respondents in the video and text conditions to rate the believability of the content—that is, their perceptions of whether the depicted events actually occurred—using the following format: “To what extent do you believe [the speaker] actually [made a particular claim from the message]?” (1 = Definitely did not happen, 5 = Definitely happened). Second, we asked respondents in all three conditions to rate their attitudes regarding the subject of the message—that is, whether they agreed or disagreed with the core persuasive claim—using the following format: “Please rate the extent to which you agree or disagree with the following statement: [Persuasive claim from the message]” (1 = Strongly disagree, 5 = Strongly agree). Third, in Study 1, we asked all respondents how important the message’s topic was using the following format: “How important is [the subject of the persuasive message] to you, relative to other issues?” (1 = Not at all important, 5 = Extremely important). Fourth, we asked all respondents to rate their interest in learning more about the message’s topic using the following format: “To what extent are you interested in learning more about [the subject of the persuasive message]?” (1 = Not at all interested, 5 = Extremely interested). Lastly, we asked respondents in the video and text conditions to rate their likelihood of sharing the content with others: “How likely would you be to share the message you just saw with a friend or colleague?” (1 = Not at all likely, 5 = Extremely likely). The exact wording of all outcome measures is available in SI Appendix, Survey Questionnaire.

Analysis Strategy.

To test our main hypotheses, we fit a series of Bayesian multilevel linear regression models using the brms package in R (58, 59). For models comparing the effects of video versus text, our primary quantity of interest is the parameter on a dummy variable indicating assignment to the video versus text condition, representing the ATE of exposure to video versus text. However, for our measures of attitudinal persuasion and personal engagement, we also include a second dummy variable indicating assignment to the control versus text condition. We allow the parameters on these two dummy variables (and the intercept) to vary across both respondents and messages (that is, a “maximal” random effects structure; see ref. 60).
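One way to write the model described above is given below. The notation is ours and is offered as a sketch: the Gaussian residual and the unstructured covariance matrices are assumptions, and the exact specification and priors are in SI Appendix, Model Specification and Diagnostics. Here $i$ indexes observations, $r[i]$ the respondent, and $m[i]$ the message, with text as the reference condition.

```latex
\begin{aligned}
y_{i} &= \bigl(\beta_0 + u_{0,r[i]} + w_{0,m[i]}\bigr)
       + \bigl(\beta_1 + u_{1,r[i]} + w_{1,m[i]}\bigr)\,\mathrm{Video}_i
       + \bigl(\beta_2 + u_{2,r[i]} + w_{2,m[i]}\bigr)\,\mathrm{Control}_i
       + \varepsilon_i, \\
\mathbf{u}_r &\sim \mathcal{N}(\mathbf{0}, \Sigma_{\text{resp}}), \qquad
\mathbf{w}_m \sim \mathcal{N}(\mathbf{0}, \Sigma_{\text{msg}}), \qquad
\varepsilon_i \sim \mathcal{N}(0, \sigma^2).
\end{aligned}
```

In this parameterization, $\beta_1$ is the ATE of video versus text, $-\beta_2$ is the effect of text versus control, and $\beta_1 - \beta_2$ is the effect of video versus control. For believability and sharing, which were measured only in the two treatment conditions, the $\mathrm{Control}_i$ terms drop out.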

For our moderator models, we again fit Bayesian multilevel linear regression models but, for each potential moderator, linearly interact the treatment indicators with the (standardized) moderator variable. For models assessing heterogeneity based on respondent characteristics (e.g., age, partisanship), we allow all parameters to vary across messages but allow only the intercept and ATE parameters to vary across respondents given that the moderator variables are measured at the respondent level and therefore do not vary for a given respondent across messages. The reverse is true for models assessing heterogeneity based on message-specific attributes (e.g., political versus nonpolitical videos). Additional details about the model specifications can be found in SI Appendix, Model Specification and Diagnostics.

We obtain satisfactory convergence for most parameters and models (SI Appendix, Model Diagnostics: Summary Tables and Model Diagnostics: Trace Plots). However, because we had relatively few observations per respondent, in several cases, the sampling chains for the respondent-level random effects were not sufficiently well mixed. As a robustness check, we refit all of our models without the respondent-level random effects and find estimates nearly identical to those in the full, preregistered models (for a side-by-side comparison, see SI Appendix, Models without Respondent-Level Random Effects). We thus report the estimates from our original specifications here.

Political Classification.

For Study 2, we included a mix of both political and nonpolitical messages related to the COVID-19 pandemic. We used crowd-sourced classification (n = 164 respondents, recruited via Amazon Mechanical Turk) to categorize the content of each video based on a two-stage process. First, respondents were asked, in their opinion, whether the video was political or nonpolitical. If they selected one of these two options, they were then asked how confident they were in this classification using a five-point scale. If they selected “Not sure,” they were then asked whether, if forced to choose, they thought the video was political, nonpolitical, or if they were still unsure. Responses to these two sets of questions were recoded into a 13-point score ranging from −6 (Extremely confident nonpolitical) to 6 (Extremely confident political).
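The text does not spell out the exact recoding rule, so the Python sketch below shows one coding that is consistent with the description: confident classifications map to ±2 through ±6, forced choices after "Not sure" map to ±1, and a respondent who remains unsure maps to 0, giving 13 points in total. The function name, argument names, and response labels are hypothetical.

```python
def political_score(first_choice, confidence=None, forced_choice=None):
    """Map a two-stage classification onto -6..6 (an assumed coding, see lead-in).

    first_choice:  "political", "nonpolitical", or "not sure"
    confidence:    1-5, asked only after a political/nonpolitical answer
    forced_choice: "political", "nonpolitical", or "unsure", asked only after "not sure"
    """
    sign = {"political": 1, "nonpolitical": -1}
    if first_choice in sign:
        if confidence not in range(1, 6):
            raise ValueError("confidence must be an integer from 1 to 5")
        return sign[first_choice] * (confidence + 1)  # magnitudes 2..6
    if first_choice == "not sure":
        return sign.get(forced_choice, 0)  # +1, -1, or 0 if still unsure
    raise ValueError(f"unexpected first_choice: {first_choice!r}")


print(political_score("political", confidence=5))               # 6
print(political_score("nonpolitical", confidence=5))            # -6
print(political_score("not sure", forced_choice="political"))   # 1
print(political_score("not sure", forced_choice="unsure"))      # 0
```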

Consent and Ethics.

All respondents provided informed consent, and both studies were approved as exempt by the Massachusetts Institute of Technology (MIT) Committee on the Use of Humans as Experimental Subjects (protocols no. E-3075 and E-3150). After completing the experimental portion of the survey, respondents were debriefed about the goals of the study and were told that the content they were shown was, to the best of our knowledge, authentic. Additionally, at two points in Study 2, we informed respondents that some of the messages they were shown were produced at the beginning of the pandemic (as early as January 2020) and might contain out-of-date information. First, prior to the experimental portion of the study, we told respondents that some of the content they might see was created earlier in the pandemic and might not reflect current events or public health guidance. Then, at the end of the study, we again reminded respondents about the timing of the clips and provided links to up-to-date information from the Centers for Disease Control and Prevention.

Acknowledgments

We thank Paige Bollen, Naylah Canty, Emily Huang, Paul Irvine, John Jones, Liberty Ladd, Ji Min Lee, Rorisang Lekalake, Anna Weissman, and Nicole Wilson for research assistance; Reagan Martin for video editing assistance; and Rocky Cole, Yasmin Green, Andrew Gully, Zach Markovich, and participants at the MIT Behavioral Research Laboratory for helpful comments and advice. We are especially grateful to Jonathan Zong for his involvement in the design and development of our pilot study. We gratefully acknowledge funding from Jigsaw.

Footnotes

The authors declare no competing interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at https://www.pnas.org/lookup/suppl/doi:10.1073/pnas.2114388118/-/DCSupplemental.

Data Availability

Datasets and study materials have been deposited in the Open Science Framework (https://osf.io/xwmqn/) (61).

References

- 1. Pew Research Center, “State of the news media 2014: News video on the web: A growing, if uncertain, part of news” (Pew Research Center, 2014).

- 2. Fowler E. F., Franz M. M., Ridout T. N., Political Advertising in the United States (Routledge, ed. 1, 2016).

- 3. Harwell D., How YouTube is shaping the 2016 presidential election. The Washington Post, 25 March 2016. https://www.washingtonpost.com/news/the-switch/wp/2016/03/25/inside-youtubes-explosive-transformation-of-american-politics/. Accessed 30 June 2021.

- 4. Shearer E., Gottfried J., News use across social media platforms 2017. Pew Research Center, 7 September 2017. https://www.pewresearch.org/journalism/2017/09/07/news-use-across-social-media-platforms-2017/. Accessed 30 June 2021.

- 5. Stocking G., van Kessel P., Barthel M., Matsa K. E., Khuzam M., Many Americans get news on YouTube, where news organizations and independent producers thrive side by side. Pew Research Center, 28 September 2020. https://www.pewresearch.org/journalism/2020/09/28/many-americans-get-news-on-youtube-where-news-organizations-and-independent-producers-thrive-side-by-side/. Accessed 30 June 2021.

- 6. CNN Business, When seeing is no longer believing: Inside the Pentagon’s race against deepfake videos. CNN, 2019. https://www.cnn.com/interactive/2019/01/business/pentagons-race-against-deepfakes/. Accessed 22 March 2020.

- 7. Rothman J., In the age of A.I., is seeing still believing? New Yorker, 5 November 2018. https://www.newyorker.com/magazine/2018/11/12/in-the-age-of-ai-is-seeing-still-believing. Accessed 22 March 2020.

- 8. Corston R., Colman A. M., Modality of communication and recall of health-related information. J. Health Psychol. 2, 185–194 (1997).

- 9. Furnham A., Gunter B., Effects of time of day and medium of presentation on immediate recall of violent and non-violent news. Appl. Cogn. Psychol. 1, 255–262 (1987).

- 10. Jones M. Y., Pentecost R., Requena G., Memory for advertising and information content: Comparing the printed page to the computer screen. Psychol. Mark. 22, 623–648 (2005).

- 11. Yadav A., et al., If a picture is worth a thousand words is video worth a million? Differences in affective and cognitive processing of video and text cases. J. Comput. High. Educ. 23, 15–37 (2011).

- 12. Fishfader V. L., Howells G. N., Katz R. C., Teresi P. S., Evidential and extralegal factors in juror decisions: Presentation mode, retention, and level of emotionality. Law Hum. Behav. 20, 565–572 (1996).

- 13. Mohammadi G., Park S., Sagae K., Vinciarelli A., Morency L.-P., “Who is persuasive?: The role of perceived personality and communication modality in social multimedia” in Proceedings of the 15th ACM on International Conference on Multimodal Interaction (ACM Press, 2013), pp. 19–26.

- 14. Pezdek K., Avila‐Mora E., Sperry K., Does trial presentation medium matter in jury simulation research? Evaluating the effectiveness of eyewitness expert testimony. Appl. Cogn. Psychol. 24, 673–690 (2010).

- 15. Chaiken S., Eagly A. H., Communication modality as a determinant of message persuasiveness and message comprehensibility. J. Pers. Soc. Psychol. 34, 605–614 (1976).

- 16. Chaiken S., Eagly A. H., Communication modality as a determinant of persuasion: The role of communicator salience. J. Pers. Soc. Psychol. 45, 241–256 (1983).

- 17. Andreoli V., Worchel S., Effects of media, communicator, and message position on attitude change. Public Opin. Q. 42, 59–70 (1978).

- 18. Pfau M., Holbert R. L., Zubric S. J., Pasha N. H., Lin W.-K., Role and influence of communication modality in the process of resistance to persuasion. Media Psychol. 2, 1–33 (2000).

- 19. Worchel S., Andreoli V., Eason J., Is the medium the message? A study of the effects of media, communicator, and message characteristics on attitude change. J. Appl. Soc. Psychol. 5, 157–172 (1975).

- 20. Graber D. A., Seeing is remembering: How visuals contribute to learning from television news. J. Commun. 40, 134–156 (1990).

- 21. Prior M., Visual political knowledge: A different road to competence? J. Polit. 76, 41–57 (2014).

- 22. Glasford D. E., Seeing is believing: Communication modality, anger, and support for action on behalf of out-groups. J. Appl. Soc. Psychol. 43, 2223–2230 (2013).

- 23. Goldberg M. H., et al., The experience of consensus: Video as an effective medium to communicate scientific agreement on climate change. Sci. Commun. 41, 659–673 (2019).

- 24. Grabe M. E., Bucy E. P., Image Bite Politics: News and the Visual Framing of Elections (Oxford University Press, ed. 1, 2009).

- 25. Sundar S. S., Molina M. D., Cho E., Seeing is believing: Is video modality more powerful in spreading fake news via online messaging apps? J. Comput. Mediat. Commun., https://doi.org/10.1093/jcmc/zmab010 (1 August 2021).

- 26. Powell T. E., Boomgaarden H. G., De Swert K., de Vreese C. H., Video killed the news article? Comparing multimodal framing effects in news videos and articles. J. Broadcast. Electron. Media 62, 578–596 (2018).

- 27. Chong D., Druckman J. N., Dynamic public opinion: Communication effects over time. Am. Polit. Sci. Rev. 104, 663–680 (2010).

- 28. Coppock A., Ekins E., Kirby D., The long-lasting effects of newspaper op-eds on public opinion. Quart. J. Polit. Sci. 13, 59–87 (2018).

- 29. Kuziemko I., Norton M. I., Saez E., Stantcheva S., How elastic are preferences for redistribution? Evidence from randomized survey experiments. Am. Econ. Rev. 105, 1478–1508 (2015).

- 30. Coppock A., Hill S. J., Vavreck L., The small effects of political advertising are small regardless of context, message, sender, or receiver: Evidence from 59 real-time randomized experiments. Sci. Adv. 6, eabc4046 (2020).

- 31. Gerber A. S., Gimpel J. G., Green D. P., Shaw D. R., How large and long-lasting are the persuasive effects of televised campaign ads? Results from a randomized field experiment. Am. Polit. Sci. Rev. 105, 135–150 (2011).

- 32. Hill S. J., Lo J., Vavreck L., Zaller J., How quickly we forget: The duration of persuasion effects from mass communication. Polit. Commun. 30, 521–547 (2013).

- 33. Huber G. A., Arceneaux K., Identifying the persuasive effects of presidential advertising. Am. J. Pol. Sci. 51, 957–977 (2007).

- 34. Broockman D., Kalla J., When and why are campaigns’ persuasive effects small? Evidence from the 2020 US Presidential Election. OSF [Preprint] (2020). (Accessed 30 June 2021).

- 35. DellaVigna S., Gentzkow M., Persuasion: Empirical evidence. Annu. Rev. Econ. 2, 643–669 (2010).

- 36. Alter A. L., Oppenheimer D. M., Uniting the tribes of fluency to form a metacognitive nation. Pers. Soc. Psychol. Rev. 13, 219–235 (2009).

- 37. Reber R., Schwarz N., Effects of perceptual fluency on judgments of truth. Conscious. Cogn. 8, 338–342 (1999).

- 38. Vaccari C., Chadwick A., Deepfakes and disinformation: Exploring the impact of synthetic political video on deception, uncertainty, and trust in news. Soc. Media Soc. 6, 1–13 (2020).

- 39. Dobber T., Metoui N., Trilling D., Helberger N., de Vreese C., Do (microtargeted) deepfakes have real effects on political attitudes? Int. J. Press. 26, 69–91 (2020).

- 40. Ternovski J., Kalla J., Aronow P. M., Deepfake warnings for political videos increase disbelief but do not improve discernment: Evidence from two experiments. OSF [Preprint] (2021). (Accessed 30 June 2021).

- 41. Hameleers M., Powell T. E., van der Meer T. G. L. A., Bos L., A picture paints a thousand lies? The effects and mechanisms of multimodal disinformation and rebuttals disseminated via social media. Polit. Commun. 37, 281–301 (2020).

- 42. Barari S., Lucas C., Munger K., Political deepfakes are as credible as other fake media and (sometimes) real media. OSF [Preprint] (2021). (Accessed 30 June 2021).

- 43. Reeves B., Yeykelis L., Cummings J. J., The use of media in media psychology. Media Psychol. 19, 49–71 (2016).

- 44. Wells G. L., Windschitl P. D., Stimulus sampling and social psychological experimentation. Pers. Soc. Psychol. Bull. 25, 1115–1125 (1999).

- 45. Li H. O.-Y., Bailey A., Huynh D., Chan J., YouTube as a source of information on COVID-19: A pandemic of misinformation? BMJ Glob. Health 5, e002604 (2020).

- 46. Brauer M., Curtin J. J., Linear mixed-effects models and the analysis of nonindependent data: A unified framework to analyze categorical and continuous independent variables that vary within-subjects and/or within-items. Psychol. Methods 23, 389–411 (2018).

- 47. McElreath R., Statistical Rethinking: A Bayesian Course with Examples in R and STAN (Chapman and Hall/CRC, ed. 2, 2020).

- 48. Yarkoni T., The generalizability crisis. Behav. Brain Sci., 1–37 (2020) 10.1017/S0140525X20001685.

- 49. Coppock A., McClellan O. A., Validating the demographic, political, psychological, and experimental results obtained from a new source of online survey respondents. Res. Polit. 6, 1–14 (2019).

- 50. Aronow P. M., Kalla J., Orr L., Ternovski J., Evidence of rising rates of inattentiveness on lucid in 2020. OSF [Preprint] (2020). (Accessed 5 October 2021).

- 51. Peyton K., Huber G. A., Coppock A., The generalizability of online experiments conducted during the COVID-19 pandemic. J. Exp. Polit. Sci., 1–16 (2021). https://www.cambridge.org/core/journals/journal-of-experimental-political-science/article/generalizability-of-online-experiments-conducted-during-the-covid19-pandemic/977D0A898CD4EA803ABE474A49B719E0. Accessed 5 October 2021.

- 52. Berinsky A. J., Margolis M. F., Sances M. W., Separating the shirkers from the workers? Making sure respondents pay attention on self-administered surveys. Am. J. Pol. Sci. 58, 739–753 (2014).

- 53. Delli Carpini M. X., Keeter S., What Americans Know about Politics and Why It Matters (Yale University Press, 1996).

- 54. Frederick S., Cognitive reflection and decision making. J. Econ. Perspect. 19, 25–42 (2005).

- 55. Thomson K. S., Oppenheimer D. M., Investigating an alternate form of the cognitive reflection test. Judgm. Decis. Mak. 11, 99–113 (2016).

- 56. Guess A. M., Munger K., Digital literacy and online political behavior. OSF [Preprint] (2020). (Accessed 7 August 2020).

- 57. Bergkvist L., Rossiter J. R., The predictive validity of multiple-item versus single-item measures of the same constructs. J. Mark. Res. 44, 175–184 (2007).

- 58. Bürkner P.-C., brms: An R package for Bayesian multilevel models using Stan. J. Stat. Softw. 80, 1–28 (2017).

- 59. Stan Development Team, Stan Modeling Language User’s Guide and Reference Manual, version 2.27 (2020). https://mc-stan.org (Accessed 30 June 2021).

- 60. Barr D. J., Levy R., Scheepers C., Tily H. J., Random effects structure for confirmatory hypothesis testing: Keep it maximal. J. Mem. Lang. 68, 255–278 (2013).

- 61. Wittenberg C., Tappin B. M., Berinsky A. J., Rand D. G., Replication Data for “The (minimal) persuasive advantage of political video over text.” Open Science Framework (OSF). https://osf.io/xwmqn/. Deposited 14 October 2021.
