Abstract

Three-dimensional convolutional neural networks (3D CNN), a branch of artificial intelligence (AI), are potent in image processing and recognition, using deep learning to perform generative and descriptive tasks. Compared to its predecessors, the advantage of a CNN is that it automatically detects the important features without any human supervision. A 3D CNN is used to extract features in three dimensions, where the input is a 3D volume or a sequence of 2D pictures, e.g., slices in a cone-beam computed tomography (CBCT) scan. The main aim was to bridge interdisciplinary cooperation between forensic medical experts and deep learning engineers, with an emphasis on engaging clinical forensic experts who may have only basic knowledge of advanced artificial intelligence techniques but are interested in implementing them to advance forensic research. This paper introduces a novel workflow of 3D CNN analysis of full-head CBCT scans. The authors explore current methods and design customized 3D CNN application methods for particular forensic research from five perspectives: (1) sex determination, (2) biological age estimation, (3) 3D cephalometric landmark annotation, (4) growth vectors prediction, (5) facial soft-tissue estimation from the skull and vice versa. In conclusion, 3D CNN application can be a watershed moment in forensic medicine, leading to unprecedented improvement of forensic analysis workflows based on 3D neural networks.

Keywords: forensic medicine, forensic dentistry, forensic anthropology, 3D CNN, AI, deep learning, biological age determination, sex determination, 3D cephalometric, AI face estimation, growth prediction

1. Introduction

Conventional forensic analysis is based on the forensic expert's manual extraction of information. The forensic expert provides opinions grounded in current medical and other knowledge combined with personal work experience. This is not only time-consuming but also frequently affected by subjective factors that are tough to overcome [1].

The main purpose of this paper is to analyze and introduce a very promising line of research applicable to forensic anthropology and various traditional sectors of forensic medicine. The application of artificial intelligence (AI) is a new trend in forensic medicine and a possible watershed moment for the whole forensic field [1,2,3,4,5,6].

This chapter explains basic terminology, principles and the current horizon of knowledge. The methodology chapter presents the novel clinical workflow based on implementing three-dimensional convolutional neural network (3D CNN) algorithms [7,8,9]. The input is full-head cone-beam computed tomography (CBCT) scans in the Digital Imaging and Communications in Medicine (DICOM) format [9,10,11,12,13,14]. The methodology chapter describes technical data preparation for 3D CNN utilization in the following practical aspects of forensic medicine:

Biological age determination [7,8,15,16,17,18,19,20,21,22,23,24,25,26,27,28,29,30,31]

Automatized 3D cephalometric landmark annotation [41,42,43,44,45,46,47,48,49,50,51,52,53,54,55,56,57,58]

Soft-tissue face prediction from skull and in reverse [59,60,61,62,63,64,65,66,67,68,69,70,71,72,73,74,75,76,77]

Facial growth vectors prediction [13,59,78,79,80,81,82,83,84,85,86,87,88,89,90]

The result of this paper is a detailed guide for forensic scientists to implement features of 3D CNN in forensic research and analyses of their own (in the five themes described above). The resulting practical concept, a possible workflow, should be useful for any forensic expert interested in implementing this advanced artificial intelligence feature. This study is based on a worldwide review of 3D CNN use-cases that apply to clinical aspects of forensic medicine.

This article's secondary objective is to inspire forensic experts and bring them closer to implementing three-dimensional convolutional neural networks (3D CNN) in their forensic research in the fields of age, sex, face and growth determination.

1.1. Basic Terminology and Principles in Era of AI Enhanced Forensic Medicine

Artificial intelligence has brought new vigor to forensic medicine, but at the same time also some challenges. AI and forensic medicine are developing collaboratively, and advanced AI implementation has until now required extensive interdisciplinary cooperation. In the era of big data [3], forensic experts should become familiar with these advanced algorithms and understand the technical terms used.

For many forensic experts, the current benefits of advanced AI processes are still unknown. For example, automated AI algorithms for skull damage detection from CT [91] or soft-tissue prediction of a face from the skull [66,67,89,92] are still a mystery to many outstanding forensic scientists. Enabling them would catapult forensic research to a new era [1].

A Convolutional Neural Network (CNN) is a Deep Learning algorithm that can take in an input image, assign importance (learnable weights and biases) to various aspects/objects in the image, and differentiate one from the other.

CNN is an efficient recognition algorithm that is widely used in pattern recognition and image processing. It has many advantages, such as a simple structure, fewer training parameters and adaptability. CNN is a supervised type of deep learning, most often used in image recognition and computer vision (Figure 1a,b).

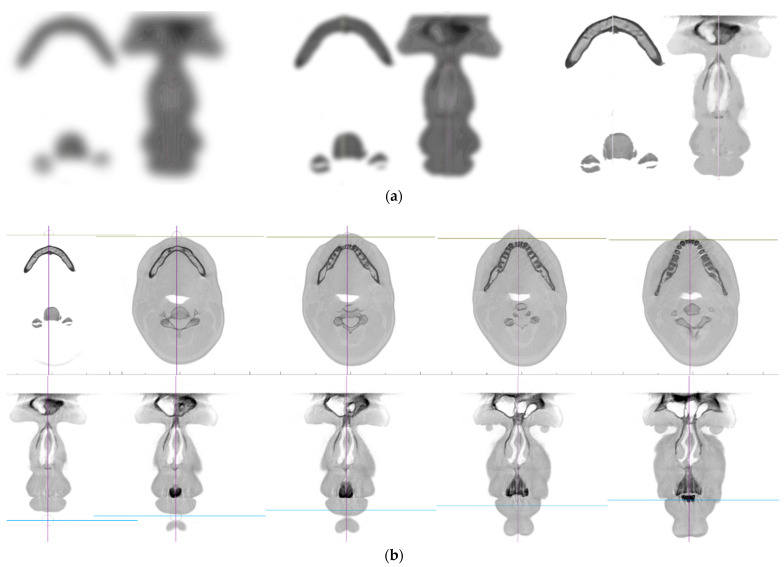

Figure 1.

(a) Recognition of objects. Try, using your imagination, to recognize the objects on the three blurred variants of the same anatomical slice. Convolutional Neural Networks (CNNs) work similarly to our visual brain when trying to recognize these objects. (b) Our recognition of objects in the picture is significantly improved when more layers (slices) are added, thus providing further context with the 3rd dimension. The top row shows a recognizable intersection of the mandible and vertebra, and the lower row shows a recognizable slice of the face. 3D CNN recognition is similarly improved by providing the context of depth.

Compared to its predecessors, the main advantage of CNN is that it automatically detects the crucial features without any human supervision. For example, given many pictures of cats and dogs, it learns distinctive features for each class. CNN is also computationally efficient.

A 3D CNN is used to extract features in three dimensions or to establish a relationship between three dimensions. It is simply the 3D equivalent of a 2D CNN: it takes as input a 3D volume or a sequence of 2D frames (e.g., a CBCT scan).

In terms of Neural Networks and Deep Learning: Convolutions are filters (matrix/vectors) with learnable parameters used to extract low-dimensional features from input data. They have the property to preserve the spatial or positional relationships between input data points.

2D CNNs predict segmentation maps for DICOM slices in a single anatomical plane, so they cannot use context from adjacent slices. 3D CNNs address this issue by using 3D convolutional kernels to make segmentation predictions for a volumetric patch of a scan (Figure 2).

Figure 2.

Comparison of a 2D CNN (above) and a 3D CNN (below). A 3D CNN works with the 3rd dimension and can reconstruct shapes from the 2D CBCT slices. When the 3rd dimension of a sequence of 2D pictures is time, we speak of a common video sequence, which can also be a subject of 3D CNN analysis.

In 3D convolution, a 3D filter can move in all three directions (height, width and depth of the volume). At each position, the element-wise multiplication and addition provide one number. Since the filter slides through a 3D space, the output numbers are also arranged in a 3D space. The output is then 3D data.
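The sliding-filter operation described above can be illustrated with a minimal sketch in plain Python. It is not the implementation used in TensorFlow, PyTorch or MONAI; the function name `conv3d`, the nested-list representation of the volume, the absence of padding and a stride of 1 are assumptions made for illustration. As in common deep learning libraries, the kernel is not flipped, so strictly speaking the operation is a cross-correlation.

```python
def conv3d(volume, kernel):
    """Slide a 3D kernel over a 3D volume (no padding, stride 1).

    Both arguments are nested lists indexed as [depth][height][width].
    Hypothetical helper for illustration only.
    """
    d, h, w = len(volume), len(volume[0]), len(volume[0][0])
    kd, kh, kw = len(kernel), len(kernel[0]), len(kernel[0][0])
    output = []
    for z in range(d - kd + 1):
        plane = []
        for y in range(h - kh + 1):
            row = []
            for x in range(w - kw + 1):
                # Element-wise multiplication and addition give one number.
                acc = 0.0
                for dz in range(kd):
                    for dy in range(kh):
                        for dx in range(kw):
                            acc += volume[z + dz][y + dy][x + dx] * kernel[dz][dy][dx]
                row.append(acc)
            plane.append(row)
        output.append(plane)
    return output


# Toy input: a 3x3x3 volume of ones and a 2x2x2 averaging kernel.
volume = [[[1.0] * 3 for _ in range(3)] for _ in range(3)]
kernel = [[[0.125] * 2 for _ in range(2)] for _ in range(2)]
result = conv3d(volume, kernel)  # the output is again 3D data, here 2x2x2
```

In this toy case the output shrinks from 3 to 2 voxels along each axis because no padding is applied; a trained network learns the kernel values instead of using a fixed averaging kernel.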

The recognition of similar structures from the CBCT is based on their similar opacity on the X-ray, classified by the Hounsfield scale. The process of defining ranges for particular tissues is called “thresholding”, which precedes the final segmentation (Figure 3). Setting different thresholds in the segmentation preprocessing step allows segmentation of different structures such as soft tissues (skin, airway, sinuses), nerves (inferior alveolar nerve, dental pulp), bones (mandible, maxilla or cervical vertebrae) and many others (Figure 4).
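As a rough illustration of thresholding, the sketch below builds a binary mask from a small volume of Hounsfield values. The function name `threshold_mask` and the numeric range are hypothetical placeholders chosen for the example, not thresholds used in this study; suitable ranges would have to be chosen for the data at hand.

```python
def threshold_mask(volume_hu, lower, upper):
    """Return a binary mask (1 = inside the range) for a nested-list volume.

    volume_hu is indexed as [slice][row][column] and holds Hounsfield values.
    Hypothetical helper for illustration only.
    """
    return [
        [[1 if lower <= value <= upper else 0 for value in row] for row in plane]
        for plane in volume_hu
    ]


# Toy volume with one slice: air-like, soft-tissue-like and two bone-like voxels.
toy_volume = [[[-1000, 40], [700, 1500]]]

# Assumed, illustrative range for dense structures.
bone_mask = threshold_mask(toy_volume, lower=300, upper=3000)
```

Running the same function with a different range on the same volume would yield a mask for a different structure, which is the idea behind setting different thresholds for different tissues.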

Figure 3.

Example of the process of defining ranges for particular visualized tissues, called “thresholding”.

Figure 4.

Examples of the segmentation process on CBCT data, based on defining ranges for particular tissues and thus defining 3D structures such as the airway, nerve canal, face surface or bone structures.

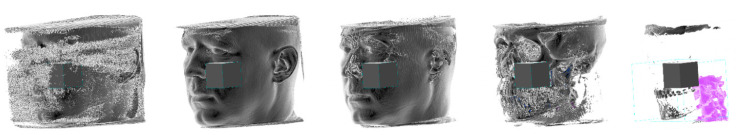

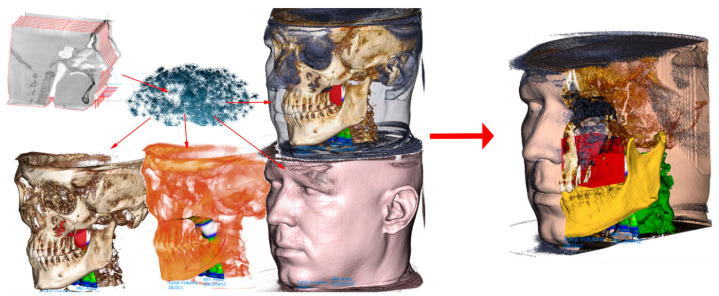

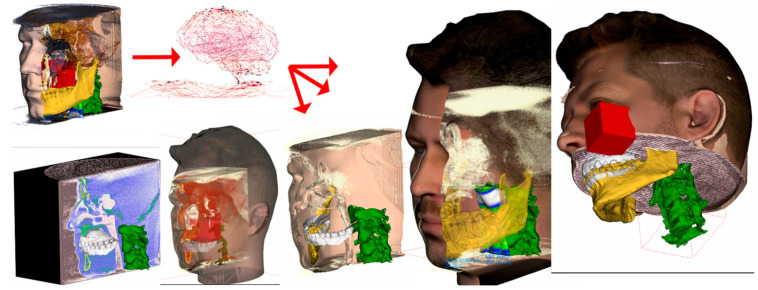

The segmentation of original CBCT data can result in the definition of various 3D structures involved in 3D CNN training, or these 3D structures can serve as anchors for mapping another 3D scan, such as an intraoral optical scan or extraoral scan that includes texture. All these three data sources can be merged, and the 3D CNN network can work with unprecedented data that include wider face regions from face scan or morphological information on teeth and gums (Figure 5).

Figure 5.

Example of 3D data augmentation in the sense of mapping other 3D scans onto the segmented structures: a facial 3D scan with texture mapped onto the segmented face surface from the CBCT, and an intraoral scan of teeth and gums mapped onto tooth surfaces from the CBCT, finally merged into a complex set of 3D models. Training of a 3D CNN with such a complex 3D virtualized model has never been performed before and is worth consideration.

1.2. Overview of Used Artificial Intelligence for Forensic Age and Sex Determination

Traditional forensic analyses of age, gender and facial appearance are based on a forensic expert manually acquiring information; the identification rests on the expert's medical and biological knowledge and mathematical calculations [93,94,95]. In forensic outputs, the investigator's experience, subjectivity, fatigue and emotions play a role [93,94,95]. Training a forensic expert well on thousands of skulls of all possible ethnicities would take a lifetime. Possible bias stemming from fatigue, a limited training dataset, emotional engagement or human calculation error cannot be completely eradicated with a human forensic expert. Implementation of artificial intelligence (AI) can limit all these sources of possible bias. Machine learning works with models that mimic neurons in the brain, can learn from experience and can solve complex problems. It is not influenced by subjective judgment, does not become tired and does not use emotions, and thus can work more efficiently [96,97,98].

AI usage is not without risks of undesired side effects. AI may become biased in the same way as a human forensic expert, depending on the source data used for AI training [99]. Obermeyer et al. found evidence of bias in a healthcare algorithm responsible for 200 million people, which systemically prevented almost 30% of eligible black patients from receiving additional care by giving lower risk scores to black patients than white patients with equal diagnoses [100].

Many studies in forensic science have been conducted in recent years, and some recent studies are beginning to focus on neural networks [101]. These studies were mainly aimed at determining the age and sex of postmortem skeletal remains and living people. Age and gender assessment is used to identify victims, determine criminal liability or identify persons without legal documentation [8,102]. There is considerable interest in accelerating identification procedures, and experts are applying machine learning in forensic procedures. They use X-ray images [103,104,105,106,107,108], MRI images [8,109], photography [90,110,111] and CT scans [112,113,114,115,116,117] of the head or other structures such as the collarbone, femur or teeth, and use databases to teach artificial intelligence to identify people's age or gender. Pham et al. [113] examined age estimation using the femur and mandible with neural networks. The femur could play a key role in predicting adulthood, especially the shape of the femoral head and bone densitometry. They used 814 whole-body post-mortem computed tomography (CT) scans: 619 men and 195 women aged 20 to 70 years. They omitted subjects with fractures. Each CT output was in Digital Imaging and Communications in Medicine (DICOM) format [11,12]. The extracted femur and mandible data were preprocessed to create a 3D voxel volume that was fed into a neural network model. Using this approach, the mean absolute error (MAE) of the mandible age identification was 7.07 years, and the MAE calculated from a femur age determination was 5.74 years. The combination of both approaches reached the best result, MAE = 5.15 years. CT scans were also used for learning and age determination in a study by Farhadian et al. [115]. The AI estimated age after learning from CT scans of the canine teeth of 300 subjects aged 14 to 60 years. In this study, they compared the methodology of neural networks with a regression model. 
The MAE for neural networks was 4.12 years, and the MAE for the regression model was 8.17 years, which demonstrated the higher accuracy of neural networks. Mauer et al. [102] aimed to develop a fully automated and computerized method for age estimation based on 3D magnetic resonance imaging (MRI) of the knee. They had 185 coronal and 404 sagittal MR images of Caucasian men aged 13 to 21 years. The best result obtained was a MAE of 0.67 ± 0.49 years and an accuracy of 90.9%. This suggests that age is determined more accurately in a group with a narrower age range. Stern et al. performed a similar study [109], where 328 MR images were used for training neural networks and subsequent age estimation. Age was reported with a MAE of 0.37 ± 0.51 years for the age range of individuals ≤ 18 years.

Several research teams have tried neural network learning based on X-ray images [103,104,108]. Guo et al. [103] used 10,257 samples of dental orthopantomograms and, similar to Farhadian et al. [115], compared logistic regression linear models for each legal age limit (14, 16 and 18 years) with the neural network. The results showed that neural networks work better (linear regression models: 92.5%, 91.3% and 91.8%; neural networks: 95.9%, 95.4% and 92.3% success rate for age limits 14, 16 and 18 years). Stepanovsky et al. [105] used 976 orthopantomograms (662 men, 314 women) of people aged 2.7 to 20.5 years to train neural networks. The results were very favorable, and the mean absolute error (MAE) was below 0.7 years for both men and women. Vila-Blanco et al. [106] used landmarks on the mandible to search for patterns by neural networks. The age estimate reached an accuracy of 87.8%, and the MAE was only 1.57 years. De Tobel et al. [107] used panoramic radiographs of molars to estimate age. The reported result was, on average, MAE = 0.5. Boedi et al. [108] later conducted a similar study with similar results. Li et al. [104] used 1875 X-ray images of the pelvis as a basis for evaluating bone age through deep learning. The age of the people whose X-rays were used to train the model was 10 to 25 years. The performance of the model was MAE = 0.94 years.

More studies modelled gender determination using AI. Bewes et al. [42] used neural networks for this purpose with a detection accuracy of 95%. However, they trained them on 900 skull CT scans. Oner et al. [114] achieved the same goal by using CT images of the sternum projected onto the orthogonal plane to train neural networks. They used 422 thin sections of thoracic CT scans (213 females, 209 males) with an age range of 27–60 years. The accuracy of gender prediction was 0.906, with a confidence interval of 94%. The success rate was higher than that achieved by linear models. Etli et al. [116] compared several methods in their study. They used CT scans with sacral and coccyx metric parameters of 480 patients. They used one-dimensional discriminant analysis, linear discriminant functional analysis, sequential analysis of discriminant function and multilayer perceptron neural networks. The maximum accuracy for each method was 67.1% for one-dimensional discriminant analysis, 82.5% for linear analysis of the discriminant function, 78.8% for sequential analysis of the discriminant function, and 86.3% for multilayer perceptron neural networks.

Gender classification was also discussed by Liew et al. [111]. They used grayscale images of 200 men and 200 women for analysis. The classification performance reached 98.75% and 99.38% on the facial databases SUMS and AT&T. To estimate the sex of infants, Ortega et al. [110] used 2D photographs of the ilium of 135 individuals aged 5 months to 6 years. The accuracy was 59% compared to 61% for the specialist. In addition, Porto et al. [88] sought to determine the legal age of offenders at 14 and 18 years, as did Guo et al. [103]. They worked with a database of photographs of 18,000 faces of men and women, using photo-anthropometric indices from cephalometric landmarks marked and checked by forensic experts. The accuracy of age determination by neural networks was 0.72 with an age interval of 5 years, and for the estimation of the age group it was higher than 0.93 and 0.83 for the threshold values of 14 and 18 years.

In most of the studies above, neural networks determined age or gender more accurately than the commonly used methods they were compared with. Therefore, we emphasize their use in forensic practice [9,46,50,117].

Regarding skeletal age estimation for forensic purposes, we consider it useful to direct the 3D CNN to particular areas of the head and neck. Various experts have published research on age estimation by measuring open apices in teeth, the stage of teeth eruption (frequently of third molars) or the canine tooth/pulp ratio [6,17,18,20,21,23,24,25,27,29,31]. In general, teeth are frequently used for age assessment, but they are not the only structures in the skull to be considered. It is known that the shape of the frontal sinus can be an essential tool in personal forensic identification and is linked, together with the cranial base, to growth changes that can be evaluated [6,118]. Another typical location for skeletal age assessment in X-ray diagnostics of the head and neck region is the stage of cervical vertebrae maturation [23,119]. Deep learning has already been implemented in this area [83]. Extensive research on expert skeletal age estimation has been published by Pinchi et al. [120,121,122,123,124,125,126], mainly combining dental and skeletal findings. If the 3D CNN fails to identify these valuable areas, we still have the opportunity to direct its focus to them.

Regarding forensic medico-legal aspects, the perspective on natural development estimated by AI algorithms is always relevant, especially in situations of trauma or other damage that interfered with this estimated development. AI is now used to evaluate CT scans of lungs and to predict the deterioration of COVID-19 patients in the emergency department [127,128,129].

In this case, 3D CNN algorithms can not only automatically evaluate hard-tissue structures but also search for inapparent damage that could have been responsible for a sudden death incident [91,130].

1.3. Artificial Intelligence Implementation in 3D Cephalometric Landmark Identification

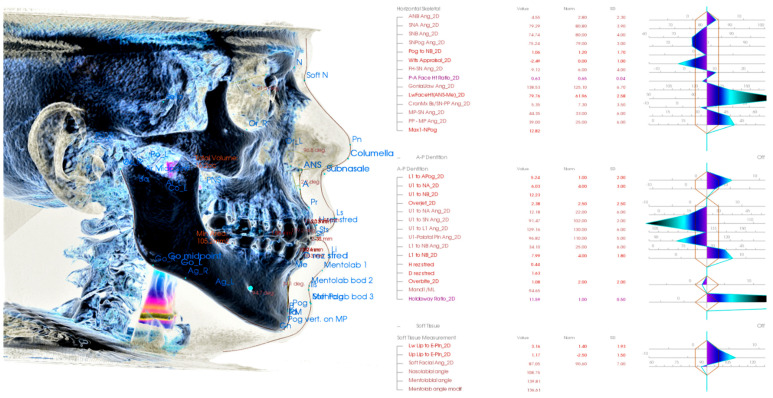

Analysis of complex cranial and facial structures is a domain of orthodontics. Historically, cephalometric analyses have been fundamental for proper treatment planning; they represent lines, angles and planes on the maxillofacial structures that are identifiable especially on X-ray (typically lateral X-ray). There is massive research regarding cephalometric parameters and their values. The points are defined by an observer, and the resulting interobserver error is the main weakness of cephalometric analysis (Figure 6). Anthropometry in Forensic Medicine and Forensic Science is frequently used for sex and biological age determination and other purposes [129,130,131].

Figure 6.

Example of 3D cephalometric analysis where the orthodontist identifies more than 50 points and the hard and soft tissues are analyzed. Humans chose these points as the most reproducible on X-ray. They might not be ideal representatives of head and neck structures linked with biological ageing or sexual dimorphism.

As the various cephalometric parameters (angles, ratios and distances) have been well researched, and some are proven to be related to age, sex or growth, they are a frequent springboard for many research studies focused on facial parameters. Implementation of AI in cephalometric analysis has been published [132,133,134,135,136]. The question is whether trained 3D CNNs will find even better regions and soft- and hard-tissue features on CBCTs when autonomously searching for links between voxel structures and age or sex. Either way, a reliable automatized 3D cephalometric algorithm that precisely identifies particular points with extreme repeatability would be a helpful tool, not intended to replace humans in the identification of cephalometric points. However, human error, such as the interobserver error, is impossible to eliminate completely.

1.4. Artificial Intelligence Implementation in Soft-Tissue Face Prediction from Skull and Vice Versa

Reconstruction of the face from the skull is an age-old desire of forensic experts. Current methods that do not implement AI are very limited. Prediction of soft tissues according to the hard tissues of the skull, and vice versa, can be significantly improved by big-data training of 3D CNN with supplementary metadata about age, sex, BMI or ethnicity. New algorithms to perform facial reconstruction from a given skull have a forensic application in helping the identification of skeletal remains when additional information is unavailable [29,64,66,67,68,69,70,72,73,85,86,88,89,92,137]. Implementation of 3D CNN can also unintentionally open a Pandora's box of guided improvement of the morphology of the facial soft tissues. From a socio-psychological standpoint, this is regarded as an important therapeutic goal in modern orthodontic treatments. Currently, many of the algorithms implemented in commercially available software predict profile changes based on the incorrect assumption that the amounts of hard-tissue and soft-tissue movement are proportional [82].

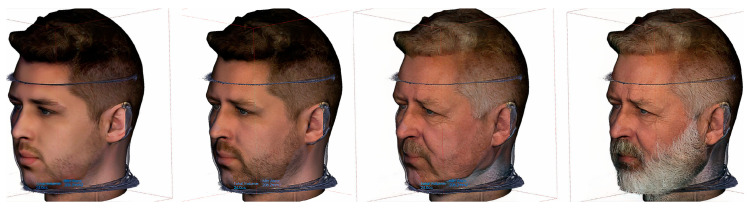

The beauty industry has seen rapid growth in multiple countries, and the analysis and assessment of facial attractiveness have received attention from scientists, physicians and artists because of its applications in entertainment, digital media, plastic surgery and cosmetics. Techniques used to assess facial beauty consider facial ratios and facial qualities as elements to predict facial beauty [81,82,138,139,140]. A popular free app using AI is FaceApp, which uses neural networks to enhance, age or otherwise change 2D digital photos uploaded by its users (Figure 7). The 3D CNN approach has not yet been implemented, despite iPhones having a 3D lidar scanner to acquire a 3D soft-tissue scan of the user's face. From a forensic aspect, this era of digital 2D face manipulation brought deep-fake videos and images. Detecting manipulated facial images and videos is an increasingly important topic in digital media forensics [118,141]. Any face can be used in a fake video, and unlimited numbers of nearly authentic pictures, including fake social media profiles, can be created. AI is used in forensic evaluation for facial forgery detection and manipulated region localization [118].

Figure 7.

Example of CNN use in the FaceApp AI application to render the face mapped on CBCT to look younger or older. The algorithms changed just the texture and not the 3D mask; however, this is probably only a matter of time. 2D face morphing based on AI and face swapping in popular videos have been available and popular for a couple of years already. The original face is the 2nd one.

This paper's most complex AI application is the final, 5th theme: “Facial growth vectors prediction”. The authors of this paper addressed it for various reasons. Firstly, it is fundamentally different from the first four themes. Secondly, it requires the most complex implementation of AI strategies. To our knowledge, this is only the second paper in the world that handles the problem of facial growth prediction with ML methods and absolutely the first paper to consider a 3D CNN for facial growth predictions.

Compared to typical forensic topics such as human remains reconstruction and identification or age and sex determination, prediction of natural growth is a rather less familiar topic, mainly because, despite numerous research attempts to predict facial growth, a satisfactory method has not been established yet, and the problem still poses a challenge for medical experts [142,143,144]. Predicting natural growth and later ageing is relevant both for orthodontic therapy planning and from a forensic aspect. Any damage to the head and neck region that would affect otherwise natural growth or simple ageing could be evaluated. In the future, the effect of such a trauma could be forensically evaluated quite accurately.

In 1971 Hirschfeld and Moyers published an article named “Prediction of craniofacial growth: the state of the art” [144]. This was one of the first attempts at facial growth prediction. The authors concluded that there are many reasons why we fail to predict craniofacial growth, and some of those they named have persisted until today. They suspected that we have not always measured the right thing. They also pointed out the lack of biological meaning for many traditional cephalometric measures. They also pointed to the heritability of attained growth in the face and predicted the future importance of craniofacial genetics. The years that followed proved them correct in many aspects. Since these first attempts to predict the facial growth direction over half a century ago, we have not become much better at facial growth prediction [142]. The complexity of the problem is challenging.

The only study focused on predicting the facial growth direction with machine learning methods that has been published so far is a 2021 paper, with its pre-print, by Stanislaw Kazmierczak et al. [90,145]. The outcomes of this paper are not impressive regarding facial growth prediction, but its method of evaluation is inspiring. The authors of this novel paper [94] performed feature selection and pointed out the attribute that plays a central role in facial growth. Then they applied data augmentation (DA) methods. This study is discussed in more detail later in this paper.

2. 3D Convolutional Neural Networks and Methods of Their Use in Forensic Medicine

2.1. Hardware and Software Used

CBCT scans analyzed for this paper were made on one machine, the i-CAT™ FLX V17, with a Field of View (FOV) of 23 cm × 17 cm and the technical parameters and settings listed in Table 1.

Table 1.

Full-head CBCT scans were made with i-CAT™ FLX V17 with these settings.

| Parameter | Setting |
|---|---|
| Sensor Type | Amorphous Silicon Flat Panel Sensor with CsI Scintillator |
| Grayscale Resolution | 16-bit |
| Voxel Size | 0.3 mm |
| Collimation | Electronically controlled fully adjustable collimation |
| Scan Time | 17.8 s |
| Exposure Type | Pulsed |
| Field-of-View | 23 cm × 17 cm |
| Reconstruction Shape | Cylinder |
| Reconstruction Time | Less than 30 s |
| Output | DICOM |
| Patient Position | Seated |

Medical software used for DICOM data processing and analysis was Invivo™ 6 from Anatomage Inc., Silicon Valley, Thomas Road Suite 150, Santa Clara, CA 95054, USA.

For the AI solution, we used the Python programming language along with three deep learning libraries: TensorFlow 2, PyTorch and MONAI. As for the hardware, the whole AI system is powered by multiple GPUs.

2.2. Main Tasks Definitions

Task 1—Age estimation from whole 3D CT scan image

Definition: the task is to estimate the approximate age of a person from a whole head 3D CBCT scan

Proposed method: build a regression model represented by a 3D deep neural network with a current state-of-the-art network architecture as a backbone

Metrics: Mean Absolute Error (MAE) and Mean Squared Error (MSE) (see Section Evaluation)
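The two regression metrics named above can be sketched in a few lines of Python. The function names and the ages in the example are hypothetical and serve only to show the arithmetic; they are not results of this study.

```python
def mean_absolute_error(y_true, y_pred):
    """Average of the absolute differences between true and predicted values."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def mean_squared_error(y_true, y_pred):
    """Average of the squared differences; penalizes large errors more strongly."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


# Made-up chronological ages and model estimates (years).
true_ages = [20, 35, 50]
predicted_ages = [22, 30, 51]

mae = mean_absolute_error(true_ages, predicted_ages)  # (2 + 5 + 1) / 3
mse = mean_squared_error(true_ages, predicted_ages)   # (4 + 25 + 1) / 3
```

MAE is expressed in the same unit as the target (years), which makes it directly comparable with the MAE values reported in the studies reviewed in the introduction.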

Task 2—Sex classification from thresholded soft and hard tissues

Definition: the task is to classify input 3D CBCT scans (whole head or experimentally segmented parts) into one of 2 predefined categories—female and male

Proposed method: build a classification model represented by a 3D deep neural network that is based on convolutional layers and outputs class probabilities for both targets

Metrics: Accuracy and Confusion Matrix (CM) (other metrics such as precision, recall and F1 score will be evaluated in a later phase)
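A minimal sketch of how accuracy and a confusion matrix could be computed for the two classes is given below. The helper names and the labels in the example are hypothetical; rows of the matrix correspond to true classes and columns to predicted classes, which is a convention assumed here.

```python
def confusion_matrix(y_true, y_pred, labels=("female", "male")):
    """Count predictions per (true class, predicted class) pair."""
    index = {label: i for i, label in enumerate(labels)}
    matrix = [[0] * len(labels) for _ in labels]
    for truth, prediction in zip(y_true, y_pred):
        matrix[index[truth]][index[prediction]] += 1
    return matrix


def accuracy(matrix):
    """Share of correct predictions: diagonal sum divided by total count."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total


# Made-up ground truth and predictions for five scans.
true_sex = ["female", "female", "male", "male", "male"]
predicted_sex = ["female", "male", "male", "male", "female"]

cm = confusion_matrix(true_sex, predicted_sex)
acc = accuracy(cm)
```

Precision, recall and the F1 score mentioned for the later phase can be derived from the same matrix.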

Task 3—Automatization of cephalometric measurements

Definition: the task is to create an automated system able to tag cephalometric landmarks on a whole-head 3D CT scan

Proposed method: build an object detection model based on a 3D neural network that estimates cephalometric measurements automatically

Metrics: Mean Absolute Error (MAE) and Mean Squared Error (MSE) (see Section Evaluation)

Task 4—Soft-tissue face prediction from skull and vice versa

Definition: the task is to create an automated system that predicts the distance of the face surface from the bone surface according to the estimated age and sex. The 3D CNN is to be trained on whole-head CBCTs of soft-tissue and hard-tissue pairs. CBCTs with trauma and other unnatural deformations shall be excluded.

Proposed method: build a generative model based on Generative Adversarial Network that synthesizes both soft and hard tissues

Metrics: the slice-wise Frechet Inception Distance (see Section Evaluation)

Task 5—Facial growth prediction

Definition: the task is to create an automated system that predicts future morphological change of the face's hard and soft tissues over a defined time. This shall be based on two CBCT input scans of the same individual at two different time points. The second CBCT must not be affected by therapy altering morphology or by an unnatural event. This already defines an extremely challenging condition. There is a high possibility of insufficient datasets and a need for multicentric cooperation for successful training of 3D CNN on this task.

Proposed method: In this final complex task, the proposed method builds on previous tasks. We strongly recommend adding metadata layers on gender, biological age and especially genetics, or letting the CNN determine them by itself. We suggest disregarding the established cephalometric points, lines, angles and planes, as these were defined with regard to lateral X-ray, emphasising good contrast of the bone structures and high reproducibility of the points, and not necessarily focusing on the particular structures most affected by growth. We suggest letting the 3D CNN establish its own observations and focus areas.

We also suggest allowing 3D CNN analysis of genetic predisposition in a smart way: by analysis of a CBCT of the biological parents, if available, or preferably a non-invasive face scan providing at least facial shell data.

2.3. The Data Management

The processing of data in deep learning is crucial for a satisfactory result of any neural network. Currently, most implementations depend on the dominant model-centric approach to AI, which means that developers spend most of their time improving neural networks.

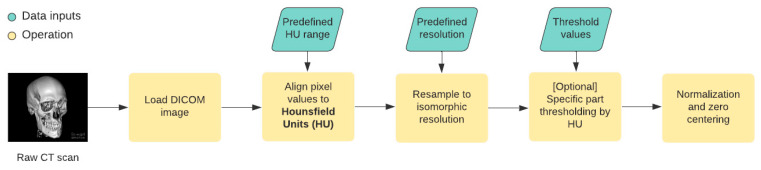

For medical images, various preprocessing steps are recommended. In most cases, the initial steps are as follows (Figure 8):

Loading DICOM files: loading the DICOM files properly ensures that no image quality is lost.

Conversion of pixel values to Hounsfield Units: the Hounsfield Unit (HU) measures the radiodensity of each body tissue. The Hounsfield scale usually ranges from −1000 HU to +3000 HU, and therefore this step ensures that the values in each CT scan do not exceed these thresholds.

Resampling to isotropic resolution: the distance between consecutive slices in each CT scan defines the slice thickness. The thickness depends on the CT device setup, so it varies between scans, which would pose a nontrivial challenge for the neural network. It is therefore necessary to create equally spaced slices.

[Optional] Specific part segmentation: each tissue corresponds to a specific range on the Hounsfield scale, and in some cases we can segment out specific parts of the CT scan by thresholding the image.

Normalization and zero centering: these two steps ensure that the input data fed into the neural network are normalized into the [0, 1] interval (normalization) and are zero centered (achieved by subtracting the mean value of the image pixel values).

Figure 8.

For medical images, there are various preprocessing steps that are recommended.
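The intensity-related steps above can be sketched with NumPy as follows. This is an illustrative outline, not the authors' implementation: the function names are hypothetical, and the default `slope` and `intercept` values are placeholders for the values that would normally be read from the DICOM `RescaleSlope` and `RescaleIntercept` attributes.

```python
import numpy as np

HU_MIN, HU_MAX = -1000.0, 3000.0  # range of the Hounsfield scale quoted in the text


def to_hounsfield(raw, slope=1.0, intercept=-1000.0):
    """Map stored pixel values to HU: HU = raw * slope + intercept.

    The defaults are placeholders; real values come from the DICOM header.
    """
    return raw.astype(np.float32) * slope + intercept


def clip_hu(hu, lo=HU_MIN, hi=HU_MAX):
    """Keep the values inside the expected Hounsfield range."""
    return np.clip(hu, lo, hi)


def normalize(hu, lo=HU_MIN, hi=HU_MAX):
    """Scale clipped HU values linearly into the [0, 1] interval."""
    return (hu - lo) / (hi - lo)


def zero_center(volume):
    """Subtract the mean voxel value so that the volume has zero mean."""
    return volume - volume.mean()
```

Note that zero centering is applied after normalization, so the final values are no longer confined to [0, 1]; they are shifted so that their mean is zero. In practice, the mean is often computed over the whole training set rather than per volume.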

Preprocessing the image dataset before feeding the CNN or other classifiers is important for all imaging modalities. Several preprocessing steps are recommended for medical images before they are fed as input to the deep neural network model, such as (1) artefact removal, (2) normalization, (3) slice timing correction (STC), (4) image registration and (5) bias field correction. While all of steps (1) to (5) help in acquiring reliable results, STC and image registration are very important in the case of 3D medical images (especially nMR and CT images). Artefact removal and normalization are the most frequently performed preprocessing steps across the modalities [146].

2.4. Dataset Specification

This study comprises approximately 500 iCAT CBCT scans of human heads. Each CBCT slice has a spatial resolution of 768 × 768 pixels, and the default device voxel spacing is 0.3 × 0.3 × 0.3 mm.

The subjects are split by sex with a female-to-male ratio of 6:4, and their ages range from 8 to 72 years. The majority (90%) of the subjects are between 18 and 36 years old.

These dataset parameters were used in the considerations for the 3D CNN application concepts stated in Section 2.2, Main tasks definitions.

2.5. Deep Learning Approach

2.5.1. Age Estimation Using 3D Deep Neural Networks

In recent research [7,14] AI-based age estimation has proven to be a successful competitor to classical approaches from forensic medicine. The aim of this study is to create an automated system for age estimation from 3D cranial CT scans. There is an expectation that particular parts of the skull have a decisive impact on the final prediction, and therefore we propose a solution that includes two stages:

Age estimation from the dense tissue layer: we use the whole skull CT scan as an input into the 3D convolutional neural network, which serves as a regression model that estimates a continuous age value for each CT scan separately.

[Experimental] Visualization of network activations that represent regions of interest: the intermediate layers of a neural network often serve as an excellent explanatory tool for producing visual explanation heat maps [113] that highlight the regions that affect the neural network the most.

As for the specific neural network architecture, we derive the backbone from current state-of-the-art research. We primarily consider EfficientNet [147] and DenseNet [148], with their implementations adapted to 3D inputs.

The base of both architectures consists of convolutional layers that serve as feature extraction blocks to obtain specific indicators from the input x, represented as a loaded DICOM image. The extracted feature maps are then forwarded to a fully-connected layer that outputs a single age estimation value:

| ŷ = FC(CL(x)) | (1) |

where CL is an intermediate block consisting of convolutional layers and FC is the fully-connected top part of the network that outputs a single floating-point value.
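To make the composition in Equation (1) concrete, the following toy forward pass uses plain NumPy. It is only a sketch under strong simplifying assumptions: a single 3D convolutional layer with ReLU stands in for the CL block, global average pooling followed by one linear layer stands in for FC, and all weights are hypothetical. It is not EfficientNet or DenseNet, which would normally be built in a deep learning framework.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def conv_block(volume, kernels):
    """CL(x): valid 3D convolution (cross-correlation) followed by ReLU.

    volume:  (D, H, W) array
    kernels: (C, k, k, k) array of C cubic filters
    returns: (C, D-k+1, H-k+1, W-k+1) feature maps
    """
    k = kernels.shape[-1]
    windows = sliding_window_view(volume, (k, k, k))  # (D', H', W', k, k, k)
    features = np.einsum("dhwijk,cijk->cdhw", windows, kernels)
    return np.maximum(features, 0.0)


def estimate_age(volume, kernels, fc_weights, fc_bias):
    """y_hat = FC(CL(x)): pool each feature map, then apply one linear layer."""
    features = conv_block(volume, kernels)
    pooled = features.mean(axis=(1, 2, 3))  # global average pooling, shape (C,)
    return float(pooled @ fc_weights + fc_bias)
```

The single floating-point output corresponds to the estimated age; during training, the weights would be fitted by minimizing a regression loss against the known ages.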

2.5.2. Sex Classification Using 3D Deep Neural Networks

The determination of sex from human remains is a challenging task in various fields such as archeology, physical anthropology and forensics because there is no proven method that reliably leads to a correct classification.

The use of AI in this field is highly desirable as manual determination is often very complex and time-consuming. The objectiveness of the deep learning approach can also eliminate human bias leading to reliable software products.

The sex classification is carried out similarly to the previous age estimation approach, but the objective of this task is to classify the final outputs of the neural network into 2 classes, female and male. For this purpose, we use the softmax activation function as the last operation to obtain class probabilities for both targets. The computation is as follows:

| ŷ = α(FC(CL(x))) | (2) |

where CL and FC represent the convolutional and fully-connected blocks of the neural network and α denotes the softmax activation function.
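The softmax step that turns the two raw network outputs into class probabilities can be illustrated as follows. This is a minimal sketch; the class order in `CLASSES` and the function `classify_sex` are assumptions made for the example only.

```python
import math

CLASSES = ("female", "male")  # assumed order of the two network outputs


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    shift = max(logits)  # subtracting the maximum improves numerical stability
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify_sex(logits):
    """Return the most probable class and the probabilities of both classes."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return CLASSES[best], probs
```

Because the two probabilities always sum to one, the predicted class is simply the one whose probability exceeds 0.5.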

In the experimental part, the input x would consist of 2 separate inputs: the segmented skull and the segmented soft tissue (skin), obtained by setting different thresholds in the segmentation preprocessing step.

2.5.3. Automatization of Cephalometric Analysis

The cephalometric analysis aims to set landmarks on CT (CBCT) scans, which serve as an important factor in the alignment of a skull. These measurements can also be used as surgery planning parameters or for pre- and post-surgery comparisons [149,150].

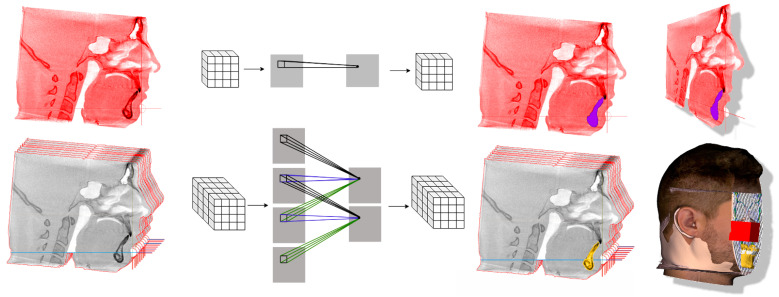

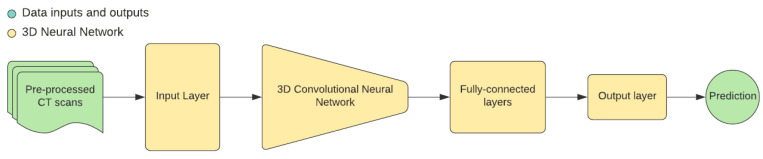

The idea behind this approach is to use 3D convolutional neural networks for fully automated cephalometric analysis. Networks aim to output probabilistic estimations for each cephalometric landmark and then create a projection of these estimations into a real skull CT scan (Figure 9).

Figure 9.

Pipeline from pre-processed CBCT scans to prediction on 3D CNN.

Two approaches come into consideration:

Landmarks estimation in the whole CT scan image: in this approach, a probability estimation for every landmark is assigned to each voxel in the CT scan.

Landmarks estimation for selected regions of interest: assuming that each landmark corresponds to a specific area, we could add another preprocessing step, a slice cut, where each slice would be a template-based region fed into a neural network. We can determine the expected landmark detection for each slice independently, which should help the final model performance.
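For the first approach, one common way to turn a per-voxel probability map into a landmark position is to take the voxel with the highest probability. The sketch below assumes that the network outputs one 3D heat map per landmark; the function name is hypothetical, and the default spacing of 0.3 mm is taken from the dataset specification.

```python
import numpy as np


def landmark_from_heatmap(heatmap, spacing_mm=(0.3, 0.3, 0.3)):
    """Locate a landmark as the most probable voxel of a 3D heat map.

    Returns the voxel index, the position in millimetres and the
    probability at that voxel.
    """
    index = np.unravel_index(np.argmax(heatmap), heatmap.shape)
    position_mm = tuple(float(i * s) for i, s in zip(index, spacing_mm))
    return tuple(int(i) for i in index), position_mm, float(heatmap[index])
```

The returned probability can serve as a simple confidence score, for example to flag landmarks that need manual review.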

2.5.4. Neural Networks Architectures and Clinical Data Pre-Processing

Recently, CNNs have been successfully applied in widespread medical image analysis and achieved significant benefits [9,59,115,141,151]. We investigated the design of a 3D CNN with backbones based on ResNet, MobileNet, and SqueezeNet models, which have proven to be the most efficient and widely used in various applications. One of the preferable architectures is based on 3D ResNet34, used for mandible segmentation in the research of Pham et al. 2021 [113].

We have considered various approaches:

Use whole 3D CT scan as an input into the neural network and output one value for age estimation as floating value and one for sex classification as a binary value.

Segment out the mandible and use it as input into the neural network. Output is the same as in the previous task.

(experimental) Use a whole 3D CT scan as input into the neural network and output multiple values representing specific skull features. Then use these values as input into another machine learning model to estimate age and sex.

Suppose we take an example of mandible segmentation from DICOM. The first step is to load the DICOM files and then add any missing metadata, particularly the slice thickness, that is, the pixel size in the Z direction, which is obtained from the DICOM file. The unit of measurement in CBCT scans is the Hounsfield Unit (HU), which is a measure of radiodensity. Thus, the stored pixel values shall be converted to HU. Subsequently, the volume shall be resampled to an isotropic resolution to remove the variance in scanner resolution. The slice thickness refers to the distance between consecutive slices (when viewing a 3D image as a collection of 2D slices) and varies between scans.
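Resampling to an equal voxel spacing along all three axes can be sketched as follows. For brevity, this example uses nearest-neighbour sampling with plain NumPy indexing; in practice, trilinear or spline interpolation is more common. The function name and its parameters are hypothetical.

```python
import numpy as np


def resample_isotropic(volume, spacing, new_spacing):
    """Resample a 3D volume to the same spacing along every axis.

    volume:      (D, H, W) array
    spacing:     current voxel spacing per axis, e.g. (0.6, 0.3, 0.3) mm
    new_spacing: target spacing in the same unit, e.g. 0.3
    """
    new_shape = [int(round(n * s / new_spacing))
                 for n, s in zip(volume.shape, spacing)]
    index = []
    for n_old, n_new, s in zip(volume.shape, new_shape, spacing):
        # Centre of every new voxel expressed in old voxel coordinates.
        centers = (np.arange(n_new) + 0.5) * new_spacing / s
        index.append(np.clip(np.floor(centers).astype(int), 0, n_old - 1))
    # np.ix_ builds an open mesh so that each axis is indexed independently.
    return volume[np.ix_(*index)]
```

For a scan with a slice thickness of 0.6 mm and an in-plane spacing of 0.3 mm, resampling to 0.3 mm doubles the number of slices and leaves the in-plane resolution unchanged.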

The final preprocessing steps are bone segmentation and pixel normalization. Mandible bone extraction is complex because the surrounding bone has to be removed. Binary thresholding and a morphological opening operation shall be applied to each slice.

The morphological opening operation is an essential technique in image processing, achieved by erosion followed by dilation of an image. This technique helps to remove small objects from an image while retaining the larger parts. To obtain the mandible bone part, the largest areas after morphological opening shall be kept. Finally, all the slices shall be stacked together to obtain the mandible voxels.
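Thresholding followed by morphological opening on a single slice can be sketched as follows. This is an illustration with a square structuring element written in plain NumPy; the threshold of 400 HU is a hypothetical value chosen for the example, and the selection of the largest connected areas is not shown because it requires an additional labelling step.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def _neighbourhoods(mask, size):
    """Return every size x size neighbourhood of a 2D mask (padded with False)."""
    pad = size // 2
    padded = np.pad(mask, pad, mode="constant", constant_values=False)
    return sliding_window_view(padded, (size, size))


def binary_opening(mask, size=3):
    """Erosion followed by dilation with a square structuring element."""
    eroded = _neighbourhoods(mask, size).all(axis=(-2, -1))
    return _neighbourhoods(eroded, size).any(axis=(-2, -1))


def bone_mask(slice_hu, threshold_hu=400.0, size=3):
    """Threshold one slice in HU, then remove small objects by opening."""
    return binary_opening(slice_hu > threshold_hu, size)
```

Objects smaller than the structuring element, such as isolated bright pixels, disappear during erosion and are not restored by dilation, whereas larger regions recover their original extent.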

2.6. Evaluation

All approaches are evaluated in a classical machine learning manner: the dataset is split into three parts, namely train, validation and test splits. The test split mainly serves as a benchmarking set for comparing our results with other approaches.
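A reproducible three-way split can be sketched as follows. The fractions of 70/15/15 and the fixed seed are assumptions for illustration, as the text does not specify them; splitting by scan identifier assumes one scan per subject, so that no subject appears in more than one split.

```python
import random


def split_dataset(scan_ids, train_frac=0.7, val_frac=0.15, seed=42):
    """Shuffle the scan identifiers and cut them into train/validation/test."""
    ids = list(scan_ids)
    random.Random(seed).shuffle(ids)  # fixed seed keeps the split reproducible
    n_train = round(len(ids) * train_frac)
    n_val = round(len(ids) * val_frac)
    train = ids[:n_train]
    val = ids[n_train:n_train + n_val]
    test = ids[n_train + n_val:]
    return train, val, test
```

With a skewed age distribution such as the one in this dataset, a split stratified by sex and age group may be preferable to a purely random one.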

2.6.1. Regression Models

When dealing with regression models in the deep learning field, we usually take into consideration two main regression metrics: Mean Absolute Error (MAE) and Mean Squared Error (MSE). Both metrics calculate the error between the predictions, denoted as ŷ, and the ground truth labels, denoted as y.

MAE is defined as the mean of the absolute differences between y and ŷ over all n predictions:

| $\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n} \lvert y_i - \hat{y}_i \rvert$ | (3) |

while MSE is defined as the mean of the squares of the errors, where the error is defined as the difference between y and ŷ:

| $\mathrm{MSE} = \frac{1}{n}\sum_{i=1}^{n} \left( y_i - \hat{y}_i \right)^2$ | (4) |

The regression tasks are primarily related to Task 1 (age estimation) and Task 3 (automated cephalometric analysis).
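Both regression metrics are straightforward to compute. The following sketch uses plain Python lists; the ages in the usage example are invented for illustration only.

```python
def mean_absolute_error(y_true, y_pred):
    """Mean of the absolute differences between labels and predictions."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def mean_squared_error(y_true, y_pred):
    """Mean of the squared differences between labels and predictions."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


if __name__ == "__main__":
    ages_true = [20.0, 30.0, 40.0]   # hypothetical ground-truth ages in years
    ages_pred = [22.0, 27.0, 40.0]   # hypothetical network outputs
    print(mean_absolute_error(ages_true, ages_pred))
    print(mean_squared_error(ages_true, ages_pred))
```

MAE is expressed in the same unit as the target (years for age estimation), whereas MSE penalizes large errors more strongly because the differences are squared.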

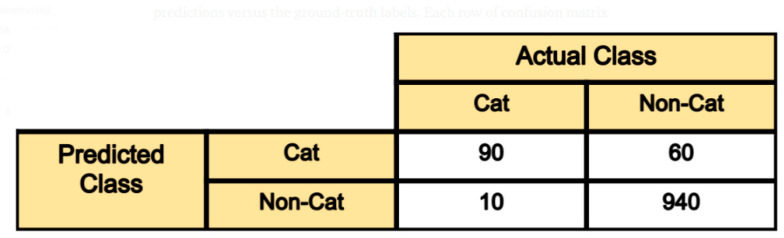

2.6.2. Classification Models

In order to evaluate the classification task, which in our case is represented by Task 2 (sex classification), we need to consider the distribution of male and female samples in our dataset. As the distribution is approximately 6:4 (only mildly imbalanced), we can calculate the overall accuracy and the corresponding confusion matrix (CM). In a later phase, we can also test other metrics such as precision, recall or F1 score.

The accuracy is defined simply as the number of correct predictions divided by the total number of predictions. More informative is the CM, a tabular visualization of the model predictions for each class separately (Figure 10).

Figure 10.

Confusion matrix for 2 classes image classification—Cat and Non-Cat. Each row corresponds to the predicted class from neural network output. In case of a class Cat 90 samples were correctly classified as Cat and 60 samples were incorrectly classified as Non-Cat.
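The entries of a two-class confusion matrix and the metrics derived from them can be computed as in the following sketch. The function names and the labels used in any call are illustrative assumptions; undefined ratios (division by zero) are reported as 0.0 here.

```python
def confusion_counts(y_true, y_pred, positive):
    """Count true/false positives and negatives for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)
    return tp, fp, fn, tn


def classification_metrics(y_true, y_pred, positive):
    """Accuracy, precision, recall and F1 score for the positive class."""
    tp, fp, fn, tn = confusion_counts(y_true, y_pred, positive)
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}
```

Reporting precision and recall per class alongside accuracy shows whether the errors are concentrated in one of the two classes.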

3. Resulting Summary of Proposed Approach for Utilization of 3D CNN in Investigated Aspects of Forensic Medicine

This chapter presents a summary of the outcomes of the detailed research in the previous sections of this paper. The investigation of 3D CNN modalities, their features, advantages and disadvantages, and the clinical requirements for implementation in the field of forensic medicine has led to the following proposed designs (a guide) for future forensic research based on 3D CNN analyses.

Table 2 presents a condensed summary of the recommended approaches for 3D CNN implementations in various forensic topics. The expected input data is a minimal dataset of 500 full-head CBCT scans, described in more detail in the previous sections.

Table 2.

Guide of recommended designs for 3D CNN implementations in various forensic topics.

| Area of Forensic Research | Proposed Method | Metrics |
|---|---|---|
| Biological age determination | Regression model by 3D deep CNN | MAE, MSE |
| Sex determination | Deep 3D CNN with convolutional layers that outputs class probabilities for both targets | CM and derived metrics such as precision, recall and F1 score |
| 3D cephalometric analysis | Object detection model on 3D CNN that automatically estimates cephalometric measurements | MAE, MSE |
| Face prediction from skull | Model based on a Generative Adversarial Network that synthesizes soft/hard tissues | Slice-wise Fréchet Inception Distance |
| Facial growth prediction | Based on methods stated above ¹ | Another ¹ |

¹ Method and metrics are not proposed from the current state of knowledge for Facial growth prediction and need further consideration upon clinical experience from 3D CNN applications.

4. Discussion

The authors of this paper have no doubt that the practical implementation of 3D CNN, as another evolutionary step in advanced AI, will be a watershed moment in the fields of forensic medicine dealing with morphological aspects.

With CT or CBCT (DICOM) considered as data input, the implementation of 3D CNN algorithms opens unique opportunities in the areas of:

- Biological age determination
- Sex determination
- Automatized, precise and reliable:
  - 3D cephalometric analysis of soft and hard tissues
  - 3D face prediction from the skull (soft-tissues) and vice versa
  - Search for hidden damage in post-mortem high-resolution CT images
  - Asymmetry and disproportionality evaluation
- Predictions of:
  - Hard-tissue and soft tissue growth
  - Aging in general
  - Ideal face proportions respecting golden ratio proportions
- 3D reconstructions of:
  - Missing parts of the skull or face
  - 3D dental fingerprints for identification with 2D dental records

First clinical applications of 3D CNN have shown [91,113,115,126,150] that the algorithms can be successfully used in CT analysis and in the identification of specific diseases such as Alzheimer's disease or COVID-19, as these have a specific representation on X-ray images. With a high probability bordering on certainty, the future development of advanced 3D CNN will result in sophisticated automatized algorithms processing 3D diagnostic data similarly to the trained human eye of the forensic expert. These algorithms will automatically process 3D diagnostic data such as CT or NMR, searching for patterns they were trained to see. They will recognize unseen details of hidden damage or representations of rare diseases when trained to do so. At the next level, they will approximate findings to become an ultimate autopsy tool even for unknown diseases [36,113,126,152].

The limitation of this paper is that practical examination of the proposed directions for 3D CNN implementations will require some time. Currently, there are many different 3D CNN in development, and actually, this is where most of the research activity is carried out [151,153,154,155].

Another limitation of this study is the high level of dynamics of research and development in this field of advanced AI implementations. The velocity in training the 3D CNN is high, and it is possible that a better approach can be recognized in the process.

An interesting limitation of 3D CNN usage is the known fact [99] that any AI may become biased in the same way as a human forensic expert does, and not only in the context of a criminal trial. This depends on the source data used for AI training [99] and is elaborated in more context in Section 1.2. On the other hand, in many forensic cases we need to achieve the highest probabilities, bordering on certainty. Here a respected and internationally recognized algorithm might become a useful tool for achieving unprecedented levels of probability superior to human evaluation. However, this development is a possibility, not a certainty.

The final limitation of implementing the suggested designs for 3D CNN implementation for forensic researchers is the physical and legal availability of big data necessary for 3D CNN training. This can be solved with multicentric cooperation.

Many CNNs that process DICOM data already exist and are available for use [11,12,14]. Researchers this year have already achieved significant milestones in multiclass CBCT image segmentation for orthodontics with Deep Learning. They trained and validated a mixed-scale dense convolutional neural network for multiclass segmentation of the jaw, the teeth, and the background in CBCT scans [153]. This study showed that multiclass segmentation of jaw and teeth was accurate, and its performance was comparable to binary segmentation. This is important because it strongly reduces the time required to segment multiple anatomic structures in CBCT scans.

In our efforts, we have faced the issue of CBCT scan distortion caused by metal artefacts (mostly by amalgam dental fillings). Fortunately, a novel coarse-to-fine segmentation framework based on 3D CNN and recurrent SegUnet was recently published for mandible segmentation in CBCT scans. Moreover, the reported experiments indicate that the proposed algorithm can provide more accurate and robust segmentation results for different imaging techniques than the state-of-the-art models on the three datasets used in that work [156].

As there already exists a fully automated method for 3D individual tooth identification and segmentation from dental CBCT [154], these algorithms can be combined.

The most complex area covered by this paper is a 3D prediction of growth and in a wider perspective of ageing. It is known that this process is laden with various variables including hormonal (sex) [142,143,157,158,159] and functional aspects (bad habits) [160,161,162], as well as genetics [163,164,165,166].

The only published study focused on predicting the facial growth direction with Machine Learning methods is that of Kazmierczak et al. 2021 [90,145]. The outcomes of that paper are limited with regard to facial growth prediction. Its authors performed feature selection and pointed out the attribute that plays a central role in facial growth. Then they applied data augmentation (DA) methods.

The principal weakness of that study is probably not the method but the input. The authors used only 2D lateral X-rays of various qualities and sizes. In addition, the evaluation was performed in only one 2D projection. The researchers focused on the angle between the Sella-Nasion line and the mandibular plane, formed by connecting the point gonion to gnathion at the inferior border of the mandible (SN/MP). They engaged an orthodontic expert to identify approximately 20 characteristic anatomic landmarks on the lateral cephalogram (LC) of each subject. These were assessed manually. Some of the landmarks define angles which, from the clinical perspective, have special significance. As far as facial growth direction is concerned, there are no standardized measurements available in the literature to evaluate. The focus of supervised ML on established cephalometric parameters might be wrong, because these were originally chosen as well-distinguished points on lateral X-rays with a priority of high reproducibility. So, as considered by Hirschfeld and Moyers more than 50 years ago, we might be looking in the wrong places. Prediction of the change of SN/MP also oversimplifies the problem. The questions from the past remain, and facial growth prediction remains a complex mystery. The application of 3D CNN to this most complex task is described in more technical detail in the definition of Task 5 and discussed in the context of addressing other variables such as biological age, sex and genetics.
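For readers less familiar with cephalometry, the SN/MP angle mentioned above is simply the angle between two lines, each defined by a pair of landmarks. The sketch below shows how such an angle could be computed from landmark coordinates in 2D or 3D; the function name and the coordinates in the usage example are hypothetical and do not come from the cited study.

```python
import math


def line_angle_deg(p1, p2, q1, q2):
    """Acute angle in degrees between line p1-p2 and line q1-q2."""
    u = [b - a for a, b in zip(p1, p2)]
    v = [b - a for a, b in zip(q1, q2)]
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    # The absolute value makes the result independent of line direction;
    # clamping guards against rounding slightly above 1.
    cosine = min(1.0, abs(dot) / (norm_u * norm_v))
    return math.degrees(math.acos(cosine))


if __name__ == "__main__":
    # Hypothetical 2D landmark coordinates in millimetres.
    sella, nasion = (0.0, 0.0), (70.0, 0.0)
    gonion, gnathion = (5.0, -75.0), (75.0, -115.0)
    print(line_angle_deg(sella, nasion, gonion, gnathion))
```

A single angle of this kind summarizes the relation of only four landmarks, which illustrates why the text considers it an oversimplification of three-dimensional growth.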

5. Conclusions

In conclusion, we can assume that the 3D CNN, as an advanced AI feature, will shift the paradigm in all areas researched in this paper. Forensic experts are now guided to step into the era of artificial intelligence as a helpful tool for research and possibly even future routine forensic analyses. Proposed methods and metrics for 3D CNN application on particular forensic topics (Biological age determination, Sex determination, 3D cephalometric analysis and Face prediction from skull), summarized in resulting Table 2, can be used as the initial guide. Forensic 3D reconstructions using artificial intelligence will be new, exciting and practically usable methods.

The implementation of advanced AI still requires interdisciplinary cooperation; with understanding, however, it can be used to crack unsolved mysteries. It is definitely not a trend that can be ignored.

Acknowledgments

We acknowledge the technological support of the Cognexa software company and the digital dental lab infrastructure of 3Dent Medical s.r.o., as well as the dental clinic Sangre Azul s.r.o.

Abbreviations

| Abbreviation | Meaning |
|---|---|
| 3D CNN | Three-Dimensional Convolutional Neural Network |
| AI | Artificial Intelligence |
| CBCT | Cone-Beam Computer Tomography |
| CM | Confusion Matrix |
| DICOM | Digital Imaging and Communications in Medicine |
| DA | Data Augmentation |
| FOV | Field of View |
| HU | Hounsfield Unit |
| ML | Machine Learning |
| MAE | Mean Absolute Error |
| MSE | Mean Squared Error |
| nMR | Nuclear Magnetic Resonance |
| STC | Slice Timing Correction |

Author Contributions

Conceptualization, A.T., H.S.K., V.K., S.K., R.B., N.M., P.K., K.M.K., M.P. and I.V.; methodology, A.T., H.S.K., V.K., S.K., R.B., N.M., P.K., K.M.K., M.P. and I.V.; software, A.T., H.S.K., S.K. and I.V.; validation, A.T., H.S.K., V.K., S.K. and I.V.; formal analysis, A.T., H.S.K., V.K., S.K. and I.V.; investigation, A.T., H.S.K., V.K., S.K. and I.V.; resources, A.T.; data curation, A.T.; writing (original draft preparation), A.T., H.S.K., V.K., S.K., R.B., N.M., P.K., K.M.K., M.P. and I.V.; writing (review and editing), A.T., H.S.K., V.K., S.K., R.B., N.M., P.K., K.M.K., M.P. and I.V.; visualization, A.T.; supervision, A.T., H.S.K., S.K. and I.V.; project administration, A.T.; funding acquisition, A.T., H.S.K., S.K. and I.V. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the KEGA grant agency of the Ministry of Education, Science, Research, and Sport of the Slovak Republic (Grant No. 081UK-4/2021).

Institutional Review Board Statement

The study was conducted according to the guidelines of the Declaration of Helsinki, and no approval was necessary by the Ethics Committee. Ethical review and approval were waived for this study, due to the fact that no experimental materials or approaches were used.

Informed Consent Statement

Written informed consent was obtained from all subjects involved in the study.

Data Availability Statement

We fully adhere to Data Availability Statements in section “MDPI Research Data Policies” at https://www.mdpi.com/ethics (accessed on 1 November 2021).

Conflicts of Interest

The authors declare no conflict of interest.

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1.Ya-ting F.A., Qiong L.A., Tong X.I. New Opportunities and Challenges for Forensic Medicine in the Era of Artificial Intelligence Technology. J. Forensic Med. 2020;36:77. doi: 10.12116/J.ISSN.1004-5619.2020.01.016. [DOI] [PubMed] [Google Scholar]

- 2.Khanagar S.B., Vishwanathaiah S., Naik S., Al-Kheraif A.A., Devang Divakar D., Sarode S.C., Bhandi S., Patil S. Application and Performance of Artificial Intelligence Technology in Forensic Odontology—A Systematic Review. Leg. Med. 2021;48:101826. doi: 10.1016/j.legalmed.2020.101826. [DOI] [PubMed] [Google Scholar]

- 3.Lefèvre T. Big Data in Forensic Science and Medicine. J. Forensic Leg. Med. 2018;57:1–6. doi: 10.1016/j.jflm.2017.08.001. [DOI] [PubMed] [Google Scholar]

- 4.Pathak M., Narang H. Application of Artificial Intelligence in the Field of Forensic Medicine. Indian J. Forensic Med. Toxicol. 2021;15 doi: 10.37506/IJFMT.V15I4. [DOI] [Google Scholar]

- 5.Franke K., Srihari S.N. Computational Forensics. Vol. 5158. Springer; Berlin/Heidelberg, Germany: 2008. Computational Forensics: An Overview; pp. 1–10. [DOI] [Google Scholar]

- 6.Cossellu G., de Luca S., Biagi R., Farronato G., Cingolani M., Ferrante L., Cameriere R. Reliability of Frontal Sinus by Cone Beam-Computed Tomography (CBCT) for Individual Identification. Radiol. Med. 2015;120:1130–1136. doi: 10.1007/s11547-015-0552-y. [DOI] [PubMed] [Google Scholar]

- 7.der Mauer M.A., Well E.J., Herrmann J., Groth M., Morlock M.M., Maas R., Säring D. Automated Age Estimation of Young Individuals Based on 3D Knee MRI Using Deep Learning. Int. J. Leg. Med. 2020;135:649–663. doi: 10.1007/s00414-020-02465-z. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Armanious K., Abdulatif S., Bhaktharaguttu A.R., Küstner T., Hepp T., Gatidis S., Yang B. Organ-Based Chronological Age Estimation Based on 3D MRI Scans; Proceedings of the 28th European Signal Processing Conference; Amsterdam, The Netherlands. 24–28 August 2021; pp. 1225–1228. [DOI] [Google Scholar]

- 9.Souadih K., Belaid A., Salem D. Automatic Segmentation of the Sphenoid Sinus in CT-Scans Volume with DeepMedics 3D CNN Architecture. Med. Technol. J. 2019;3:334–346. doi: 10.26415/2572-004X-vol3iss1p334-346. [DOI] [Google Scholar]

- 10.Dubey C., Raj S., Munuswamy S., Katta A., Chetty G. Semantic Segmentation Using Deep Neural Networks in Medicine—A Survey. ISO. 2018;3:39–48. [Google Scholar]

- 11.Du R., Vardhanabhuti V. 3D-RADNet: Extracting Labels from DICOM Metadata for Training General Medical Domain Deep 3D Convolution Neural Networks. Proc. Mach. Learn. Res. 2020;121:174–192. [Google Scholar]

- 12.Fajar A., Sarno R., Fatichah C., Fahmi A. Reconstructing and Resizing 3D Images from DICOM Files. J. King Saud Univ. Comput. Inf. Sci. 2020 doi: 10.1016/j.jksuci.2020.12.004. [DOI] [Google Scholar]

- 13.da Silva R.D.C., Jenkyn T., Carranza V. Convolutional Neural Networks and Geometric Moments to Identify the Bilateral Symmetric Midplane in Facial Skeletons from CT Scans. Biology. 2021;10:182. doi: 10.3390/biology10030182. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Singh S.P., Wang L., Gupta S., Goli H., Padmanabhan P., Gulyás B. 3D Deep Learning on Medical Images: A Review. Sensors. 2020;20:5097. doi: 10.3390/s20185097. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Armanious K., Abdulatif S., Shi W., Salian S., Küstner T., Weiskopf D., Hepp T., Gatidis S., Yang B. Age-Net: An MRI-Based Iterative Framework for Biological Age Estimation. arXiv. 2009 doi: 10.1109/TMI.2021.3066857.10765.14-37 [DOI] [PubMed] [Google Scholar]

- 16.Sajedi H., Pardakhti N. Age Prediction Based on Brain MRI Image: A Survey. J. Med Syst. 2019;43:1–30. doi: 10.1007/s10916-019-1401-7. [DOI] [PubMed] [Google Scholar]

- 17.de Luca S., Alemán I., Bertoldi F., Ferrante L., Mastrangelo P., Cingolani M., Cameriere R. Age Estimation by Tooth/Pulp Ratio in Canines by Peri-Apical X-Rays: Reliability in Age Determination of Spanish and Italian Medieval Skeletal Remains. J. Archaeol. Sci. 2010;37:3048–3058. doi: 10.1016/j.jas.2010.06.034. [DOI] [Google Scholar]

- 18.Cameriere R., Ferrante L., Cingolani M. Age Estimation in Children by Measurement of Open Apices in Teeth. Int. J. Leg. Med. 2006;120:49–52. doi: 10.1007/s00414-005-0047-9. [DOI] [PubMed] [Google Scholar]

- 19.Hostiuc S., Diaconescu I., Rusu M.C., Negoi I. Age Estimation Using the Cameriere Methods of Open Apices: A Meta-Analysis. Healthcare. 2021;9:237. doi: 10.3390/healthcare9020237. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Cameriere R., de Luca S., Egidi N., Bacaloni M., Maponi P., Ferrante L., Cingolani M. Automatic Age Estimation in Adults by Analysis of Canine Pulp/Tooth Ratio: Preliminary Results. J. Forensic Radiol. Imaging. 2015;3:61–66. doi: 10.1016/j.jofri.2014.10.001. [DOI] [Google Scholar]

- 21.Rai B., Kaur J., Cingolani M., Ferrante L., Cameriere R. Age Estimation in Children by Measurement of Open Apices in Teeth: An Indian Formula. Int. J. Leg. Med. 2010;124:237–241. doi: 10.1007/s00414-010-0427-7. [DOI] [PubMed] [Google Scholar]

- 22.Cameriere R., Ferrante L. Canine Pulp Ratios in Estimating Pensionable Age in Subjects with Questionable Documents of Identification. Forensic Sci. Int. 2011;206:132–135. doi: 10.1016/j.forsciint.2010.07.025. [DOI] [PubMed] [Google Scholar]

- 23.Cameriere R., Giuliodori A., Zampi M., Galić I., Cingolani M., Pagliara F., Ferrante L. Age Estimation in Children and Young Adolescents for Forensic Purposes Using Fourth Cervical Vertebra (C4) Int. J. Leg. Med. 2015;129:347–355. doi: 10.1007/s00414-014-1112-z. [DOI] [PubMed] [Google Scholar]

- 24.Cameriere R., Pacifici A., Pacifici L., Polimeni A., Federici F., Cingolani M., Ferrante L. Age Estimation in Children by Measurement of Open Apices in Teeth with Bayesian Calibration Approach. Forensic Sci. Int. 2016;258:50–54. doi: 10.1016/j.forsciint.2015.11.005. [DOI] [PubMed] [Google Scholar]

- 25.de Micco F., Martino F., Velandia Palacio L.A., Cingolani M., Campobasso C. pietro Third Molar Maturity Index and Legal Age in Different Ethnic Populations: Accuracy of Cameriere’s Method. Med. Sci. Law. 2021;61:105–112. doi: 10.1177/0025802419900686. [DOI] [PubMed] [Google Scholar]

- 26.Scendoni R., Cingolani M., Giovagnoni A., Fogante M., Fedeli P., Pigolkin Y.I., Ferrante L., Cameriere R. Analysis of Carpal Bones on MR Images for Age Estimation: First Results of a New Forensic Approach. Forensic Sci. Int. 2020;313 doi: 10.1016/j.forsciint.2020.110341. [DOI] [PubMed] [Google Scholar]

- 27.Cameriere R., Santoro V., Roca R., Lozito P., Introna F., Cingolani M., Galić I., Ferrante L. Assessment of Legal Adult Age of 18 by Measurement of Open Apices of the Third Molars: Study on the Albanian Sample. Forensic Sci. Int. 2014;245:205.e1–205.e5. doi: 10.1016/j.forsciint.2014.10.013. [DOI] [PubMed] [Google Scholar]

- 28.de Luca S., Mangiulli T., Merelli V., Conforti F., Velandia Palacio L.A., Agostini S., Spinas E., Cameriere R. A New Formula for Assessing Skeletal Age in Growing Infants and Children by Measuring Carpals and Epiphyses of Radio and Ulna. J. Forensic Leg. Med. 2016;39:109–116. doi: 10.1016/j.jflm.2016.01.030. [DOI] [PubMed] [Google Scholar]

- 29.Cameriere R., de Luca S., Biagi R., Cingolani M., Farronato G., Ferrante L. Accuracy of Three Age Estimation Methods in Children by Measurements of Developing Teeth and Carpals and Epiphyses of the Ulna and Radius. J. Forensic Sci. 2012;57:1263–1270. doi: 10.1111/j.1556-4029.2012.02120.x. [DOI] [PubMed] [Google Scholar]

- 30.Cameriere R., de Luca S., de Angelis D., Merelli V., Giuliodori A., Cingolani M., Cattaneo C., Ferrante L. Reliability of Schmeling’s Stages of Ossification of Medial Clavicular Epiphyses and Its Validity to Assess 18 Years of Age in Living Subjects. Int. J. Leg. Med. 2012;126:923–932. doi: 10.1007/s00414-012-0769-4. [DOI] [PubMed] [Google Scholar]

- 31.Cameriere R., de Luca S., Cingolani M., Ferrante L. Measurements of Developing Teeth, and Carpals and Epiphyses of the Ulna and Radius for Assessing New Cut-Offs at the Age Thresholds of 10, 11, 12, 13 and 14 Years. J. Forensic Leg. Med. 2015;34:50–54. doi: 10.1016/j.jflm.2015.05.006. [DOI] [PubMed] [Google Scholar]

- 32.Afrianty I., Nasien D., Kadir M.R.A., Haron H. Advances in Computer Science and its Applications. Vol. 279. Springer; Berlin/Heidelberg, Germany: 2014. Backpropagation Neural Network for Sex Determination from Patella in Forensic Anthropology; pp. 723–728. [DOI] [Google Scholar]

- 33.Pötsch-Schneider L., Endris R., Schmidt H. Discriminant Analysis of the Mandible for Sex Determination. Z. Fur Rechtsmedizin. J. Leg. Med. 1985;94:21–30. doi: 10.1007/BF00200520. [DOI] [PubMed] [Google Scholar]

- 34.el Morsi D.A., Gaballah G., Tawfik M.W. Sex Determination in Egyptian Population from Scapula by Computed Tomography. J Forensic Res. 2017;8:376. doi: 10.4172/2157-7145.1000376. [DOI] [Google Scholar]

- 35. Kalmey J., Rathbun T. Sex Determination by Discriminant Function Analysis of the Petrous Portion of the Temporal Bone. J. Forensic Sci. 1996;41:865–867. doi: 10.1520/JFS14013J.

- 36. Sanchez L., Grajeda C., Baggili I., Hall C. A Practitioner Survey Exploring the Value of Forensic Tools, AI, Filtering, & Safer Presentation for Investigating Child Sexual Abuse Material (CSAM). Digit. Investig. 2019;29:S124–S142. doi: 10.1016/j.diin.2019.04.005.

- 37. Teixeira W.R. Sex Identification Utilizing the Size of the Foramen Magnum. Am. J. Forensic Med. Pathol. 1982;3:203–206. doi: 10.1097/00000433-198209000-00003.

- 38. Randhawa K., Narang R.S., Arora P.C. Study of the Effect of Age Changes on Lip Print Pattern and Its Reliability in Sex Determination. J. Forensic Odonto-Stomatol. 2011;29:45.

- 39. Bewes J., Low A., Morphett A., Pate F.D., Henneberg M. Artificial Intelligence for Sex Determination of Skeletal Remains: Application of a Deep Learning Artificial Neural Network to Human Skulls. J. Forensic Leg. Med. 2019;62:40–43. doi: 10.1016/j.jflm.2019.01.004.

- 40. Regina Silva Lucena dos Santos E., Paulo Feitosa de Albuquerque P., Virgínio de Albuquerque P., Duarte Ribeiro de Oliveira B., Caiaffo V. Determination of Sex Based on the Morphometric Evaluation of the Proximal Tibia [Determinación del Sexo Basada en la Evaluación Morfométrica de la Tibia Proximal]. Int. J. Morphol. 2018;36:104–108. doi: 10.4067/S0717-95022018000100104.

- 41. Hwang H.-W., Park J.-H., Moon J.-H., Yu Y., Kim H., Her S.-B., Srinivasan G., Aljanabi M.N.A., Donatelli R.E., Lee S.-J. Automated Identification of Cephalometric Landmarks: Part 2-Might It Be Better Than Human? Angle Orthod. 2020;90:69–76. doi: 10.2319/022019-129.1.

- 42. Lee S.M., Kim H.P., Jeon K., Lee S.-H., Seo J.K. Automatic 3D Cephalometric Annotation System Using Shadowed 2D Image-Based Machine Learning. Phys. Med. Biol. 2019;64:055002. doi: 10.1088/1361-6560/ab00c9.

- 43. Montúfar J., Romero M., Scougall-Vilchis R.J. Hybrid Approach for Automatic Cephalometric Landmark Annotation on Cone-Beam Computed Tomography Volumes. Am. J. Orthod. Dentofac. Orthop. 2018;154:140–150. doi: 10.1016/j.ajodo.2017.08.028.

- 44. Montúfar J., Romero M., Scougall-Vilchis R.J. Automatic 3-Dimensional Cephalometric Landmarking Based on Active Shape Models in Related Projections. Am. J. Orthod. Dentofac. Orthop. 2018;153:449–458. doi: 10.1016/j.ajodo.2017.06.028.

- 45. Codari M., Caffini M., Tartaglia G.M., Sforza C., Baselli G. Computer-Aided Cephalometric Landmark Annotation for CBCT Data. Int. J. Comput. Assist. Radiol. Surg. 2016;12:113–121. doi: 10.1007/s11548-016-1453-9.

- 46. Baksi S., Freezer S., Matsumoto T., Dreyer C. Accuracy of an Automated Method of 3D Soft Tissue Landmark Detection. Eur. J. Orthod. 2020 doi: 10.1093/ejo/cjaa069.

- 47. Ed-Dhahraouy M., Riri H., Ezzahmouly M., Bourzgui F., el Moutaoukkil A. A New Methodology for Automatic Detection of Reference Points in 3D Cephalometry: A Pilot Study. Int. Orthod. 2018;16:328–337. doi: 10.1016/j.ortho.2018.03.013.

- 48. Croquet B., Matthews H., Mertens J., Fan Y., Nauwelaers N., Mahdi S., Hoskens H., el Sergani A., Xu T., Vandermeulen D., et al. Automated Landmarking for Palatal Shape Analysis Using Geometric Deep Learning. Orthod. Craniofac. Res. 2021 doi: 10.1111/ocr.12513.

- 49. Lachinov D., Getmanskaya A., Turlapov V. Cephalometric Landmark Regression with Convolutional Neural Networks on 3D Computed Tomography Data. Pattern Recognit. Image Anal. 2020;30:512–522. doi: 10.1134/S1054661820030165.

- 50. Dot G., Rafflenbeul F., Arbotto M., Gajny L., Rouch P., Schouman T. Accuracy and Reliability of Automatic Three-Dimensional Cephalometric Landmarking. Int. J. Oral Maxillofac. Surg. 2020;49:1367–1378. doi: 10.1016/j.ijom.2020.02.015.

- 51. Bermejo E., Taniguchi K., Ogawa Y., Martos R., Valsecchi A., Mesejo P., Ibáñez O., Imaizumi K. Automatic Landmark Annotation in 3D Surface Scans of Skulls: Methodological Proposal and Reliability Study. Comput. Methods Programs Biomed. 2021;210:106380. doi: 10.1016/j.cmpb.2021.106380.

- 52. Lee J.-H., Yu H.-J., Kim M., Kim J.-W., Choi J. Automated Cephalometric Landmark Detection with Confidence Regions Using Bayesian Convolutional Neural Networks. BMC Oral Health. 2020;20:270. doi: 10.1186/s12903-020-01256-7.

- 53. Kang S.H., Jeon K., Kang S.-H., Lee S.-H. 3D Cephalometric Landmark Detection by Multiple Stage Deep Reinforcement Learning. Sci. Rep. 2021;11:17509. doi: 10.1038/s41598-021-97116-7.

- 54. Juneja M., Garg P., Kaur R., Manocha P., Prateek, Batra S., Singh P., Singh S., Jindal P. A Review on Cephalometric Landmark Detection Techniques. Biomed. Signal Process. Control. 2021;66:102486. doi: 10.1016/j.bspc.2021.102486.

- 55. Yun H.S., Hyun C.M., Baek S.H., Lee S.-H., Seo J.K. Automated 3D Cephalometric Landmark Identification Using Computerized Tomography. arXiv. 2020. arXiv:2101.05205. doi: 10.1371/journal.pone.0275114.

- 56. Silva T.P., Hughes M.M., Menezes L.D., de Melo M.D., Takeshita W.M., Freitas P.H. Artificial Intelligence-Based Cephalometric Landmark Annotation and Measurements According to Arnett’s Analysis: Can We Trust a Bot to Do That? Dentomaxillofac. Radiol. 2021:20200548. doi: 10.1259/dmfr.20200548.

- 57. Yun H.S., Jang T.J., Lee S.M., Lee S.-H., Seo J.K. Learning-Based Local-to-Global Landmark Annotation for Automatic 3D Cephalometry. Phys. Med. Biol. 2020;65:085018. doi: 10.1088/1361-6560/ab7a71.

- 58. Kang S.H., Jeon K., Kim H.-J., Seo J.K., Lee S.-H. Automatic Three-Dimensional Cephalometric Annotation System Using Three-Dimensional Convolutional Neural Networks: A Developmental Trial. Comput. Methods Biomech. Biomed. Eng. Imaging Vis. 2019;8:210–218. doi: 10.1080/21681163.2019.1674696.

- 59. Kute R.S., Vyas V., Anuse A. Association of Face and Facial Components Based on CNN and Transfer Subspace Learning for Forensics Applications. SN Comput. Sci. 2020;1:1–16. doi: 10.1007/s42979-020-00280-2.

- 60. Knyaz V.A., Maksimov A.A., Novikov M.M., Urmashova A.V. Automatic Anthropological Landmarks Recognition and Measurements. ISPAr. 2021;4421:137–142. doi: 10.5194/isprs-archives-XLIV-2-W1-2021-137-2021.

- 61. Guyomarc P., Dutailly B., Charton J., Santos F., Desbarats P., Coqueugniot H. Anthropological Facial Approximation in Three Dimensions (AFA3D): Computer-Assisted Estimation of the Facial Morphology Using Geometric Morphometrics. J. Forensic Sci. 2014;59:1502–1516. doi: 10.1111/1556-4029.12547.

- 62. Barbero-García I., Pierdicca R., Paolanti M., Felicetti A., Lerma J.L. Combining Machine Learning and Close-Range Photogrammetry for Infant’s Head 3D Measurement: A Smartphone-Based Solution. Measurement. 2021;182:109686. doi: 10.1016/j.measurement.2021.109686.

- 63. Wen Y., Mingquan Z., Pengyue L., Guohua G., Xiaoning L., Kang L. Ancestry Estimation of Skull in Chinese Population Based on Improved Convolutional Neural Network; Proceedings of the 2020 IEEE International Conference on Bioinformatics and Biomedicine, BIBM; Online Conference. 16–19 December 2020; pp. 2861–2867.

- 64. Liu X., Zou Y., Kuang H., Ma X. Face Image Age Estimation Based on Data Augmentation and Lightweight Convolutional Neural Network. Symmetry. 2020;12:146. doi: 10.3390/sym12010146.

- 65. Fatima A., Shahid A.R., Raza B., Madni T.M., Janjua U.I. State-of-the-Art Traditional to the Machine- and Deep-Learning-Based Skull Stripping Techniques, Models, and Algorithms. J. Digit. Imaging. 2020;33:1443–1464. doi: 10.1007/s10278-020-00367-5.

- 66. Tu P., Book R., Liu X., Krahnstoever N., Adrian C., Williams P. Automatic Face Recognition from Skeletal Remains; Proceedings of the 2007 IEEE Conference on Computer Vision and Pattern Recognition; Minneapolis, MN, USA. 17–22 June 2007.

- 67. Curtner R.M. Predetermination of the Adult Face. Am. J. Orthod. 1953;39:201–217. doi: 10.1016/0002-9416(53)90182-1.

- 68. Albert A.M., Ricanek K., Patterson E. A Review of the Literature on the Aging Adult Skull and Face: Implications for Forensic Science Research and Applications. Forensic Sci. Int. 2007;172:1–9. doi: 10.1016/j.forsciint.2007.03.015.

- 69. Zhang D., Wu Z., Wang X., Lv C., Liu N. 3D Skull and Face Similarity Measurements Based on a Harmonic Wave Kernel Signature. Vis. Comput. 2020;37:749–764. doi: 10.1007/s00371-020-01946-x.

- 70. Jain A., Singh R., Vatsa M. Face Recognition for Forensics. Available online: https://repository.iiitd.edu.in/xmlui/handle/123456789/593?show=full (accessed on 1 November 2021).

- 71. Ma Q., Kobayashi E., Fan B., Nakagawa K., Sakuma I., Masamune K., Suenaga H. Automatic 3D Landmarking Model Using Patch-Based Deep Neural Networks for CT Image of Oral and Maxillofacial Surgery. Int. J. Med. Robot. Comput. Assist. Surg. 2020;16:e2093. doi: 10.1002/rcs.2093.

- 72. Tin M.L. Machine, Discourse and Power: From Machine Learning in Construction of 3D Face to Art and Creativity. Adv. Intell. Syst. Comput. 2020:517–523. doi: 10.1007/978-3-030-39512-4_81.

- 73. Knyaz V.A., Kniaz V.V., Novikov M.M., Galeev R.M. Machine Learning for Approximating Unknown Face. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2020;43:857–862. doi: 10.5194/isprs-archives-XLIII-B2-2020-857-2020.

- 74. Rajesh Kumar B. Region of Interest Feature Extraction in Facial Expressions with Convolutional Neural Network Classification. ICTACT J. Data Sci. Mach. Learn. 2020;2:149–152.

- 75. Mashouri P., Skreta M., Phillips J., McAllister D., Roy M., Senkaiahliyan S., Brudno M., Singh D. 3D Photography Based Neural Network Craniosynostosis Triaging System. Proc. Mach. Learn. Res. 2020;136:226–237.

- 76. Lo L.J., Yang C.T., Ho C.T., Liao C.H., Lin H.H. Automatic Assessment of 3-Dimensional Facial Soft Tissue Symmetry Before and After Orthognathic Surgery Using a Machine Learning Model: A Preliminary Experience. Ann. Plast. Surg. 2021;86:S224–S228. doi: 10.1097/SAP.0000000000002687.

- 77. Hung K., Yeung A.W.K., Tanaka R., Bornstein M.M. Current Applications, Opportunities, and Limitations of AI for 3D Imaging in Dental Research and Practice. Int. J. Environ. Res. Public Health. 2020;17:4424. doi: 10.3390/ijerph17124424.

- 78. Dalvit Carvalho da Silva R., Jenkyn T.R., Carranza V.A. Development of a Convolutional Neural Network Based Skull Segmentation in MRI Using Standard Tesselation Language Models. J. Pers. Med. 2021;11:310. doi: 10.3390/jpm11040310.

- 79. Bekhouche S.E., Dornaika F., Benlamoudi A., Ouafi A., Taleb-Ahmed A. A Comparative Study of Human Facial Age Estimation: Handcrafted Features vs. Deep Features. Multimed. Tools Appl. 2020;79:26605–26622. doi: 10.1007/s11042-020-09278-7.

- 80. Punyani P., Gupta R., Kumar A. Neural Networks for Facial Age Estimation: A Survey on Recent Advances. Artif. Intell. Rev. 2019;53:3299–3347. doi: 10.1007/s10462-019-09765-w.

- 81. Liu H., Lu J., Feng J., Zhou J. Label-Sensitive Deep Metric Learning for Facial Age Estimation. IEEE Trans. Inf. Forensics Secur. 2018;13:292–305. doi: 10.1109/TIFS.2017.2746062.

- 82. Lin H.H., Lo L.J., Chiang W.C. A Novel Assessment Technique for the Degree of Facial Symmetry before and after Orthognathic Surgery Based on Three-Dimensional Contour Features Using Deep Learning Algorithms. PervasiveHealth Pervasive Comput. Technol. Healthc. 2019:170–173. doi: 10.1145/3326172.3326222.

- 83. Makaremi M., Lacaule C., Mohammad-Djafari A. Deep Learning and Artificial Intelligence for the Determination of the Cervical Vertebra Maturation Degree from Lateral Radiography. Entropy. 2019;21:1222. doi: 10.3390/e21121222.

- 84. Ramanathan N., Chellappa R., Biswas S. Computational Methods for Modeling Facial Aging: A Survey. J. Vis. Lang. Comput. 2009;20:131–144. doi: 10.1016/j.jvlc.2009.01.011.

- 85. Dou P., Shah S.K., Kakadiaris I.A. End-to-End 3D Face Reconstruction with Deep Neural Networks; Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; Honolulu, HI, USA. 21–26 July 2017; pp. 5908–5917.

- 86. Fu Y., Guo G., Huang T.S. Age Synthesis and Estimation via Faces: A Survey. IEEE Trans. Pattern Anal. Mach. Intell. 2010;32:1955–1976. doi: 10.1109/TPAMI.2010.36.

- 87. Sai P.K., Wang J.G., Teoh E.K. Facial Age Range Estimation with Extreme Learning Machines. Neurocomputing. 2015;149:364–372. doi: 10.1016/j.neucom.2014.03.074.

- 88. Porto L.F., Lima L.N., Franco A., Pianto D., Machado C.E., de Barros Vidal F. Estimating Sex and Age from a Face: A Forensic Approach Using Machine Learning Based on Photo-Anthropometric Indexes of the Brazilian Population. Int. J. Leg. Med. 2020;134:2239–2259. doi: 10.1007/s00414-020-02346-5.

- 89. Ramanathan N., Chellappa R., Biswas S. Age Progression in Human Faces: A Survey. J. Vis. Lang. Comput. 2009;15:3349–3361.