Abstract

Changes in retinal blood vessels, such as in tortuosity, branching angles, or diameter, have been shown to provide evidence of ophthalmic disease. Although many enhancement filters are widely used, the Jerman filter responds effectively at vessels, edges, and bifurcations and improves the visualization of structures. The curvelet transform, in turn, is specifically designed to associate scale with orientation and can be used to recover images from noisy data by curvelet shrinkage. This paper describes a method to further improve the performance of the curvelet transform: a distinctive fusion of the curvelet transform and the Jerman filter is presented for retinal blood vessel segmentation, and mean-C thresholding is employed for the segmentation itself. The suggested method achieves average accuracies of 0.9600 and 0.9559 on DRIVE and CHASE_DB1, respectively. Simulation results show better performance and faster execution of the suggested scheme compared with similar approaches in the literature.

Keywords: blood vessel segmentation, curvelet transform, Jerman filter, mean-C thresholding

1. Introduction

Evaluation of the physical features of the retinal vascular structure can reveal the pathological changes caused by ocular diseases. Delineation of the retinal vasculature is important for the analysis, treatment, visualization, assessment, and clinical study of ophthalmic diseases such as retinal artery occlusion, diabetic retinopathy, hypertension, and choroidal neovascularization [1,2]. Blood vessels are prominent and largely stable structures of the retina that can be observed directly in vivo. The effectiveness of treatment for ophthalmologic disorders depends on the prompt recognition of changes in retinal pathology. Manual labelling of retinal blood vessels is a tedious procedure that requires training and skill. Computerized segmentation offers reliability and accuracy and reduces the time a physician or skilled technician spends on manual mapping. Thus, an automatic and reliable approach to vessel segmentation would be beneficial for the early recognition and characterization of morphological changes in the retinal vasculature. In general, feature representation and extraction in retinal images is a difficult task. The main difficulties are illumination changes, insufficient contrast, noise, and anatomical variability between individual patients.

Many filters have been suggested for the enhancement of retinal blood vessels. Jerman et al. have recommended an improved multiscale vesselness filter, based on the ratio of multiscale Hessian eigenvalues, that produces a uniform and stable response in all vascular structures and accurately enhances the boundary between the vascular structure and the background; it later became known as the Jerman filter [3,4]. They evaluated the proposed enhancement filter on the High-Resolution Fundus (HRF) image database. However, their method is limited to making the enhancement of the retinal vasculature more uniform. Frangi et al. have suggested a multiscale filtering approach for vessel enhancement, known as the Frangi filter [5].

Among the human organs, the retina is the only place where blood vessels can be imaged directly and noninvasively in vivo. Blood vessels play a vital role in automatic retinal disease recognition, as they are a central subject of screening systems. Precise segmentation and analysis of blood vessel length, thickness, and orientation can simplify the assessment of retinopathy of prematurity, the recognition of arteriolar narrowing, and the assessment of vessel diameter for the detection of ailments such as hypertension, diabetes, and arteriosclerosis [6].

On the other hand, many researchers have shown the importance of multiresolution analysis, particularly for the enhancement of retinal blood vessels [7,8]. Among multiresolution techniques, the curvelet transform is an important tool for vessel enhancement, and a few efforts have been made to improve its performance by extending it in various ways for the segmentation of retinal images. Esmaeili et al. have offered a new technique for enhancing retinal blood vessels using the curvelet transform [9]. In 2011, Miri and Mahloojifar used the curvelet transform, together with multistructure morphology operators, to detect retinal image edges effectively [10]. In 2016, Aghamohamadian-Sharba et al. used the curvelet transform to grade retinal blood vessel tortuosity automatically [11]. In 2014, Kar et al. combined the curvelet transform with a matched filter and conditional fuzzy entropy for the extraction of blood vessels [12]. In 2016, the same authors suggested another method for blood vessel extraction that combines the curvelet transform with a matched filter and kernel fuzzy c-means [13]. Notable supervised and unsupervised works on retinal vessel segmentation are discussed below.

Numerous principles and approaches for retinal vessel segmentation have been described in the literature. Fraz et al. have given a detailed review of the various approaches available for retinal vessel segmentation [14]. Retinal blood vessel segmentation methods can be grouped into approaches based on pattern recognition, vessel tracking, matched filtering, multiscale analysis, morphological processing, and model-based algorithms. Pattern recognition techniques can further be divided into two classes: supervised and unsupervised approaches. Supervised approaches use ground-truth data to train a classifier on features of the blood vessels. These approaches include principal component analysis [15], neural networks [16], k-nearest neighbour classifiers [17], and support vector machines (SVM) [18]. Unsupervised approaches include matched filtering combined with spatially weighted fuzzy c-means clustering [19], a radius-based clustering algorithm [20], and maximum likelihood estimation of vessel parameters [21].

In most vessel segmentation methods, the green channel of the image is used because it has low noise and high contrast. Soares et al. have recommended an approach that classifies pixels as vessel or nonvessel using supervised classification [22]. Lupascu et al. have used AdaBoost to construct a classifier [23]. Chaudhuri et al. have proposed a matched filtering method that convolves the image with 2D templates designed to model the characteristics of the vasculature [24]. Kovacs and Hajdu have also suggested an approach based on template matching and contour reconstruction [25]. Annunziata et al. have suggested an approach that deals with the presence of exudates in retinal images [26]. Zamperini et al. have classified vessels by considering contrast, size, position, and colour and by examining nearby background pixels [27]. Relan et al. have used a Gaussian mixture model with expectation-maximization clustering to classify vessels [28]. Dashtbozorg et al. have suggested a new classification approach based on the geometry of the vessels [29]. Estrada et al. have used a graph-theoretical method that extends a global likelihood model [30]. Relan et al. have employed a least-squares support vector machine to classify arteries and veins using four colour features [31].

Vascular tortuosity measurement is important for the diagnosis of diabetes and of some diseases related to the central nervous system. Hart et al. have suggested tortuosity measures for classifying vessel segments and networks and have also summarized previous works [32]. Grisan et al. have recommended a tortuosity density measure that handles vessel segments of various lengths by summing the contributions of each local turn [33]. Lotmar et al. proposed one of the first approaches of this kind, which is still extensively used [34]. Poletti et al. have suggested combined approaches for image-level tortuosity estimation [35]. Oloumi et al. have considered angle variation for tortuosity evaluation in the diagnosis of retinopathy [36]. Lisowska et al. have compared five approaches based on different principles [37]. Perez-Rovira et al. have suggested a complete system for vessel analysis that uses the tortuosity measure of Trucco et al. [38,39].

Azzopardi et al. have recommended vessel extraction using the B-COSFIRE (Combination of Shifted Filter Responses) technique, which achieves rotation invariance efficiently through shifting operations [40]. Mapayi et al. have suggested blood vessel segmentation using GLCM (Gray-Level Co-occurrence Matrix) energy information with an adaptive thresholding technique [41]. Zhao et al. have proposed an infinite perimeter active contour model that combines intensity information and a local phase-based enhancement map for vessel extraction [42]. Zhang et al. have suggested segmenting blood vessels in two ways: one uses the LID (left-invariant rotating derivative) frame, and the other uses the LAD (locally adaptive derivative) frame. Multiscale filtering with the LID or LAD frame produces enhanced images for vessel extraction [43].

Tan et al. have proposed an automated approach for extracting the retinal vasculature in which the retinal images are filtered with a bank of Gabor kernels. The outputs are combined into a maximal image, which is thinned into a network of one-pixel-wide lines and then examined and clipped to locate forks and branches. Finally, the Ramer–Douglas–Peucker algorithm is employed to find salient points [44]. Farokhian et al. have designed new sets of Gabor filters using the imperialism competitive algorithm for blood vessel segmentation [45]. Orlando et al. have recommended vessel segmentation with a conditional random field model [46]. Rodrigues and Marengoni have employed a graph-based technique using Dijkstra’s shortest path algorithm and a statistical t-distribution to extract blood vessels [47]. Jiang et al. have recommended a fully convolutional network pretrained through transfer learning for blood vessel segmentation [48]. Khomri et al. have proposed a vessel segmentation method using the Elite-guided Multi-Objective Artificial Bee Colony (EMOABC) algorithm [49].

Memari et al. have suggested fuzzy c-means clustering integrated with level sets for blood vessel segmentation. They employed contrast-limited adaptive histogram equalisation, mathematical morphology combined with a matched filter, the Gabor filter, and the Frangi filter to reduce noise and enhance the retinal images [50]. Sundaram et al. have integrated several existing techniques, such as morphological operations, the bottom-hat transform, the multiscale vessel enhancement (MSVE) algorithm, and image fusion, for retinal vessel segmentation [51]. Recently, Dash and Senapati have introduced an extension of the discrete wavelet transform (DWT) by combining it with the Coye filter [52]. Dash et al. have enhanced the image features with homomorphic filtering and then extracted the vessels with the K-means clustering method [53]. Likewise, many other works in the literature address the improved detection of health conditions using machine learning [54,55,56,57,58] and deep learning [59,60,61,62,63,64].

Glaucoma is the second leading cause of irreversible blindness worldwide. Its progression can be controlled by early detection. Glaucoma leads to deterioration of the optic nerve. The ratio of the optic cup to the optic disc, known as the cup-to-disc ratio (CDR), is one of the important and standard measures for identifying glaucoma. Trained ophthalmologists determine the CDR manually, which restricts mass screening for early detection of glaucoma. The exact CDR is difficult to determine if the optic cup and optic disc are not clearly distinguishable [65]. Therefore, an optic disc enhancement technique is highly desirable. The suggested work can improve the visibility of the optic disc and optic cup for glaucoma identification.

Although deep learning has recently been applied successfully to retinal blood vessel segmentation, there is still considerable room to improve traditional unsupervised approaches. Wang et al. have recommended the Context Spatial U-Net for blood vessel segmentation [66]. In general, designing a contrast enhancement method whose output is free of visual artifacts is impracticable. Selecting a particular enhancement method is also challenging because there are no reliable parameters for evaluating the quality of the output image.

Even though most of these approaches have produced prominent results, they often face complications caused by noise and the imprecise nature of retinal vessel images. Numerous critical challenges therefore remain to be addressed, for instance, false positives, poor connectivity of retinal vessels, and limited accuracy under noisy conditions. Although a few efforts have been made to enhance retinal vessels using the curvelet transform, improving the performance of the curvelet transform remains a challenging task.

Because its coefficients can be modified selectively, the curvelet transform can enhance edges precisely. The multistructure-element approach is directional, which makes it an effective tool for edge detection. Curvelet decomposition both denoises the image and highlights edges and vessel curvature. The Jerman filter produces a uniform and stable response in all vascular structures and accurately enhances the boundary between the vascular structure and the background.

In this paper, a new approach is proposed that combines the advantages of the Jerman filter and the curvelet transform for retinal vessel enhancement and uses mean-C thresholding for segmentation. Integrating these two techniques is intended to improve the performance of the curvelet transform.

The paper is organized as follows: Section 2 discusses the methodology in detail. Results and comparisons are given and discussed in Section 3. In Section 4, the conclusions are drawn.

2. Materials and Methods

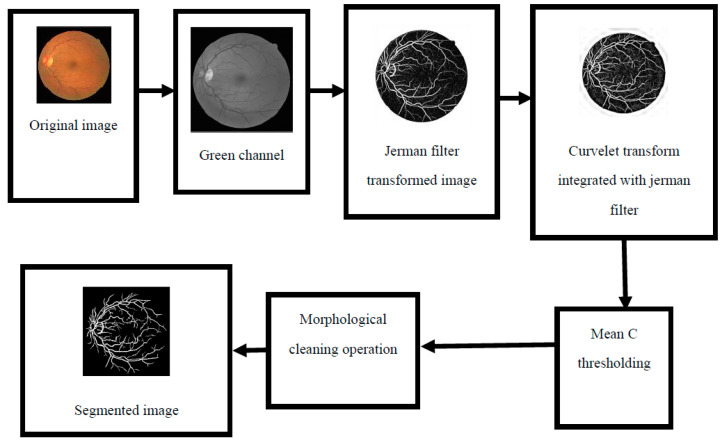

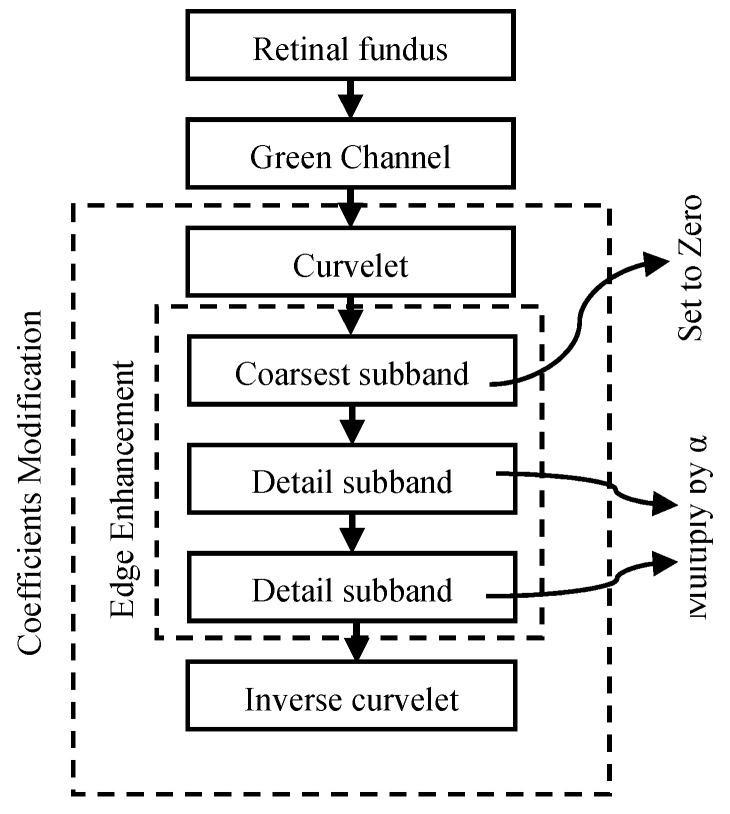

The suggested retinal blood vessel extraction system is based on the curvelet transform integrated with the Jerman filter [4]. The main purpose of combining the two techniques is to further improve the performance of the traditional curvelet transform for the enhancement of retinal blood vessels. Figure 1 shows the functional block diagram of the suggested segmentation scheme; the approach consists of three stages, which are described in detail below.

Figure 1.

Schematic outline of the suggested methodology.

2.1. Preprocessing

Because retinal images are captured through the pupil, they suffer from uneven illumination, and vessels in dark, low-contrast areas are difficult to discern. The preprocessing stages are therefore critical for extracting as many fine vessels as possible, and the overall image quality must be improved through preprocessing. To this end, the suggested method combines a Jerman filter with the curvelet transform to improve the enhancement of blood vessels.

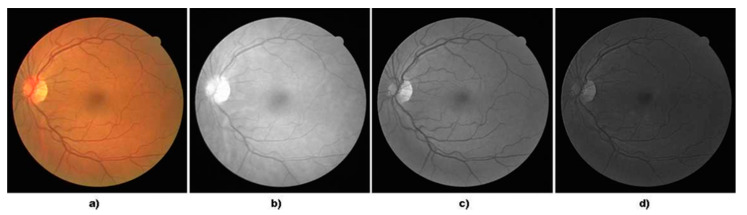

As Figure 2 shows, the vessels are more distinguishable in the green channel than in the red and blue channels. Hence, the green channel is used throughout the vessel extraction process.

Figure 2.

(a) Colour Image, (b) Red Channel, (c) Green Channel, (d) Blue Channel.

2.1.1. Enhancement of Vasculature by Jerman Filter

Although various enhancement filters are widely used, their responses are not uniform across vessels of different radii. A close-to-uniform response over the entire vascular structure is achieved with a filter based on the ratio of multiscale Hessian eigenvalues.

To cope with variations in the intensity and shape of the target structures, noise, and similar effects, the indicator function is approximated by smooth enhancement functions. A multiscale filter response F(x) is then obtained at each point x by maximizing a specified enhancement function f over a range of scales s:

$$F(\mathbf{x}) = \max_{s_{\min} \le s \le s_{\max}} f\big(H(\mathbf{x}, s)\big) \tag{1}$$

where H(x, s) is the Hessian of the image at point x and scale s.

The vasculature consists mostly of elongated vessels, together with rounded structures such as bending vessels, bifurcations, and vascular pathologies (for example, aneurysms). Elongated tubular structures such as vessels can be enhanced using the eigenvalues λm of H(x, s), m = 1, 2, …, E, which for the 2 × 2 Hessian matrices of 2D images can be computed quickly in closed form. For 2D images (E = 2), with the eigenvalues ordered so that |λ1| ≤ |λ2|, elongated structures are characterized by |λ2| >> |λ1|, where λ2 is negative for a bright structure on a dark background and positive for a dark structure on a bright background. Eigenvalue relationships for differently shaped structures can be derived in the same way.
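As an illustration of the closed-form eigenvalue computation described above, the Python sketch below computes λ1 and λ2 at every pixel of a 2D image. It assumes SciPy Gaussian derivative filters with scale normalisation by s², a common convention that is not necessarily the one used in the original implementation; the function name `hessian_eigenvalues` is hypothetical.

```python
import numpy as np
from scipy.ndimage import gaussian_filter


def hessian_eigenvalues(image, s):
    """Closed-form eigenvalues of the 2x2 Hessian H(x, s) at every pixel.

    Returns (l1, l2) ordered so that |l1| <= |l2|. Second derivatives use
    Gaussian derivative filters with standard deviation s, multiplied by
    s**2 for scale normalisation (an assumed, common convention).
    """
    img = np.asarray(image, dtype=float)
    hxx = gaussian_filter(img, s, order=(0, 2)) * s**2  # d2/dx2 along columns
    hyy = gaussian_filter(img, s, order=(2, 0)) * s**2  # d2/dy2 along rows
    hxy = gaussian_filter(img, s, order=(1, 1)) * s**2  # mixed derivative
    # Eigenvalues of [[hxx, hxy], [hxy, hyy]]: half-trace +/- sqrt(discriminant)
    half_trace = (hxx + hyy) / 2.0
    disc = np.sqrt(((hxx - hyy) / 2.0) ** 2 + hxy**2)
    mu_a, mu_b = half_trace - disc, half_trace + disc
    # Sort by magnitude so that |l1| <= |l2|
    swap = np.abs(mu_a) > np.abs(mu_b)
    l1 = np.where(swap, mu_b, mu_a)
    l2 = np.where(swap, mu_a, mu_b)
    return l1, l2


# Example: a dark vertical line (vessel-like) on a bright background
img = np.ones((64, 64))
img[:, 32] = 0.0
l1, l2 = hessian_eigenvalues(img, 2.0)
print(l2[32, 32] > 0, abs(l1[32, 32]) < abs(l2[32, 32]))
```

On the dark line, λ2 comes out positive while |λ1| stays close to zero, which matches the sign convention for dark structures on a bright background.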

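Frangi et al. (1998) suggested the following function, in which the factor involving R_B suppresses rounded structures [5]:

$$\nu_F = \left(1 - \exp\left(-\frac{R_A^2}{2\alpha^2}\right)\right)\exp\left(-\frac{R_B^2}{2\beta^2}\right)\left(1 - \exp\left(-\frac{S^2}{2K^2}\right)\right),$$

with $R_A = \dfrac{|\lambda_2|}{|\lambda_3|}$ and $R_B = \dfrac{|\lambda_1|}{\sqrt{|\lambda_2 \lambda_3|}}$, where the eigenvalues are ordered so that |λ1| ≤ |λ2| ≤ |λ3| and β controls the sensitivity of R_B.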

Jerman et al. (2016) removed this factor and reduced Frangi’s function to:

$$\nu_F = \left(1 - \exp\left(-\frac{R_A^2}{2\alpha^2}\right)\right)\left(1 - \exp\left(-\frac{S^2}{2K^2}\right)\right) \tag{2}$$

where $S = \sqrt{\sum_{i=1}^{D} \lambda_i^2}$ is the second-order measure of image structure, D is the image dimension, and R_A discriminates between tubular and planar structures. Parameters α and K control the sensitivity of R_A and S, respectively. In 2D, the corresponding reduced Frangi function comprises only the second factor, which is given as:

$$\nu_F = 1 - \exp\left(-\frac{S^2}{2K^2}\right) \tag{3}$$

where $S = \sqrt{\lambda_1^2 + \lambda_2^2}$.

The original enhancement function of Sato et al. (2000) contains an additional factor, governed by an exponent δ ≥ 0, that depends on λ1 and suppresses rounded structures [67]. By eliminating this factor, Jerman et al. (2016) derived [4]:

$$\nu_S = |\lambda_3|\left(\frac{\lambda_2}{\lambda_3}\right)^{\gamma} \tag{4}$$

where γ controls the sensitivity.

Li et al. (2003) suggested a similar enhancement function without suppression of spherical structures [7]:

$$\nu_L = \frac{\lambda_2^2}{|\lambda_3|} \tag{5}$$

which can be factored into $\nu_L = |\lambda_2| \cdot \dfrac{|\lambda_2|}{|\lambda_3|}$. According to Li et al. (2003), the first factor represents the magnitude and the second the likelihood of an elongated structure.

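All of the enhancement functions above are, in one way or another, proportional to the magnitude or squared magnitude of λ2 or λ3. By using the first-order approximation $1 - \exp(-x) \approx x$, valid for small x, for the second factor of Frangi’s function, Jerman et al. (2016) derived [4]:

$$\nu_F \approx \left(1 - \exp\left(-\frac{R_A^2}{2\alpha^2}\right)\right)\frac{\lambda_1^2 + \lambda_2^2 + \lambda_3^2}{2K^2} \tag{6}$$

Since |λ1| ≈ 0 for elongated structures, this shows that Frangi’s function is proportional to the squared magnitudes of λ2 and λ3.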
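The proportionality argument rests on the first-order approximation 1 − exp(−x) ≈ x. The short Python check below compares the exact second factor of Frangi’s function with S²/(2K²) for a few values of S; K = 1 and the S values are arbitrary illustrative choices, not parameters from the paper.

```python
import numpy as np


def frangi_s_factor(S, K):
    """Exact second factor of Frangi's function, 1 - exp(-S^2 / (2 K^2)).

    -expm1(-x) equals 1 - exp(-x) but keeps precision when x is tiny.
    """
    x = np.asarray(S, dtype=float) ** 2 / (2.0 * K**2)
    return -np.expm1(-x)


def frangi_s_factor_approx(S, K):
    """First-order approximation S^2 / (2 K^2), valid when S is small compared with K."""
    return np.asarray(S, dtype=float) ** 2 / (2.0 * K**2)


K = 1.0  # illustrative value, not taken from the paper
S = np.array([0.01, 0.05, 0.1, 0.5])
exact = frangi_s_factor(S, K)
rel_err = np.abs(frangi_s_factor_approx(S, K) - exact) / exact
print(rel_err)
```

The relative error is roughly S²/(4K²) for small S, so the quadratic dependence on the eigenvalues is a small-response approximation and degrades as S approaches K.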

This dependence of the enhancement functions on the magnitude of λ2 or λ3 serves mainly to suppress noise in image regions with low, uniform intensity, where all eigenvalues have small and similar magnitudes.

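To regularize the value of λ3 at each scale s, the following formulation is used:

$$\lambda_\rho(s) = \begin{cases} \lambda_3, & \text{if } \lambda_3 > \tau \max_{\mathbf{x}} \lambda_3(\mathbf{x}, s), \\ \tau \max_{\mathbf{x}} \lambda_3(\mathbf{x}, s), & \text{if } 0 < \lambda_3 \le \tau \max_{\mathbf{x}} \lambda_3(\mathbf{x}, s), \\ 0, & \text{otherwise}, \end{cases} \tag{7}$$

where τ is the cut-off threshold between 0 and 1.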
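Eq. (7) can be evaluated at a single scale with a few array operations. This Python sketch assumes the eigenvalue array has already been sign-adjusted so that the structures of interest are positive; the function name `regularize_lambda` is hypothetical.

```python
import numpy as np


def regularize_lambda(lam3, tau):
    """Eq. (7) at a single scale.

    lam3 : array holding the eigenvalue to be regularized at every pixel,
           assumed sign-adjusted so that structures of interest are positive.
    tau  : cut-off threshold between 0 and 1.
    """
    lam3 = np.asarray(lam3, dtype=float)
    cut = tau * lam3.max()                      # tau * max_x lambda_3(x, s)
    lam_rho = np.where(lam3 > cut, lam3, cut)   # weak responses raised to the cut-off
    return np.where(lam3 <= 0, 0.0, lam_rho)    # non-positive values set to zero


print(regularize_lambda(np.array([-1.0, 0.1, 0.5, 2.0]), 0.5))
```

With τ = 0.5 the cut-off is 1.0, so the weak positive values 0.1 and 0.5 are both lifted to 1.0, the strong value 2.0 is kept, and the negative value is zeroed. Lifting weak responses to a common floor is what lets faint and strong vessels be treated comparably in the subsequent eigenvalue ratio.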

With this eigenvalue regularization, the enhancement function can be defined independently of the relative brightness of the structures of interest as follows:

$$\nu_P = \lambda_2^2\,(\lambda_\rho - \lambda_2)\left(\frac{3}{\lambda_2 + \lambda_\rho}\right)^{3} \tag{8}$$

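Accordingly, Jerman et al. (2016) derived the enhancement filter function as [4]:

$$\nu_P = \begin{cases} 0, & \text{if } \lambda_2 \le 0 \ \lor\ \lambda_\rho \le 0, \\ 1, & \text{if } \lambda_2 \ge \lambda_\rho/2 > 0, \\ \lambda_2^2\,(\lambda_\rho - \lambda_2)\left(\dfrac{3}{\lambda_2 + \lambda_\rho}\right)^{3}, & \text{otherwise}. \end{cases} \tag{9}$$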
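A compact Python sketch of Eqs. (1), (7), and (9) for 2D images follows. Several details are our assumptions rather than statements from the text: in 2D, the largest-magnitude Hessian eigenvalue plays the role of both λ2 and λ3; dark vessels on a bright background (as in the green channel) already have positive eigenvalues, with the sign flipped for bright structures; and the multiscale output is normalised by its maximum. The scales and τ are illustrative defaults, and all function names are hypothetical.

```python
import numpy as np
from scipy.ndimage import gaussian_filter


def largest_hessian_eigenvalue(img, s):
    """Largest-magnitude eigenvalue of the scale-normalised 2x2 Hessian."""
    hxx = gaussian_filter(img, s, order=(0, 2)) * s**2
    hyy = gaussian_filter(img, s, order=(2, 0)) * s**2
    hxy = gaussian_filter(img, s, order=(1, 1)) * s**2
    half_trace = (hxx + hyy) / 2.0
    disc = np.sqrt(((hxx - hyy) / 2.0) ** 2 + hxy**2)
    mu_a, mu_b = half_trace - disc, half_trace + disc
    return np.where(np.abs(mu_a) > np.abs(mu_b), mu_a, mu_b)


def jerman_response(lam2, lam_rho):
    """Element-wise evaluation of Eq. (9)."""
    lam2 = np.asarray(lam2, dtype=float)
    lam_rho = np.asarray(lam_rho, dtype=float)
    with np.errstate(divide="ignore", invalid="ignore", over="ignore"):
        nu = lam2**2 * (lam_rho - lam2) * (3.0 / (lam2 + lam_rho)) ** 3
    nu = np.where((lam2 >= lam_rho / 2.0) & (lam_rho > 0), 1.0, nu)  # second case
    nu = np.where((lam2 <= 0) | (lam_rho <= 0), 0.0, nu)             # first case
    return np.nan_to_num(nu, nan=0.0, posinf=0.0, neginf=0.0)


def jerman_vesselness_2d(image, scales=(1.0, 2.0, 3.0), tau=0.75, bright_on_dark=False):
    """Per scale: Eq. (7) then Eq. (9); across scales: the maximum of Eq. (1)."""
    img = np.asarray(image, dtype=float)
    out = np.zeros_like(img)
    for s in scales:
        lam = largest_hessian_eigenvalue(img, s)
        if bright_on_dark:
            lam = -lam  # make the structures of interest positive
        cut = tau * lam.max()
        lam_rho = np.where(lam > cut, lam, cut)     # Eq. (7), first two cases
        lam_rho = np.where(lam <= 0, 0.0, lam_rho)  # Eq. (7), last case
        out = np.maximum(out, jerman_response(lam, lam_rho))
    peak = out.max()
    return out / peak if peak > 0 else out
```

Because Eq. (9) saturates at 1 once λ2 reaches half of λρ, a lower τ lifts more weak responses to the saturated value, which is consistent with the τ-dependent appearance reported for Figure 3.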

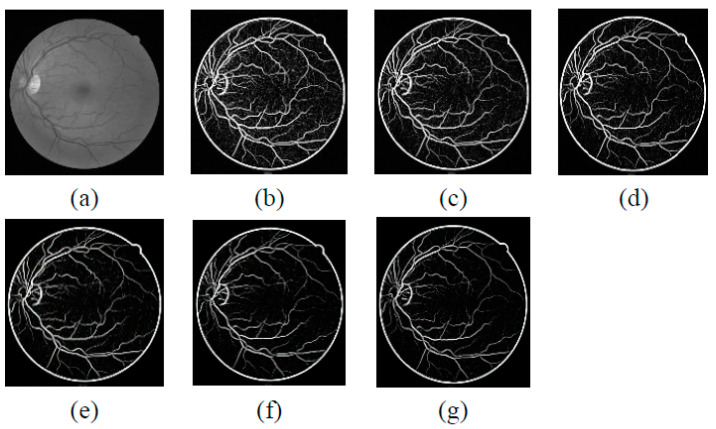

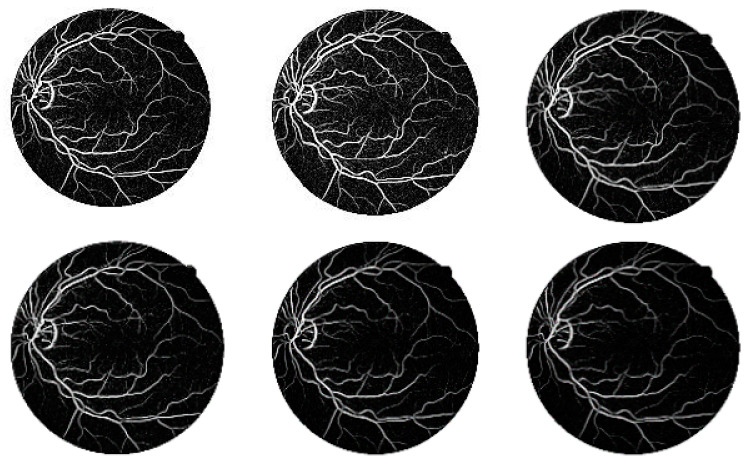

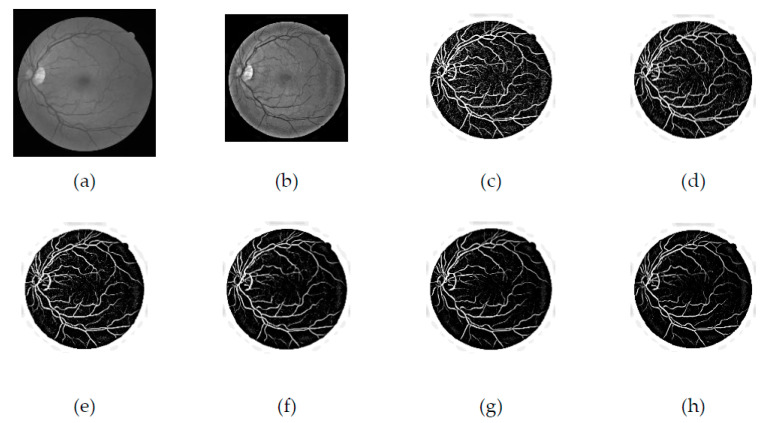

The resulting enhancement function is based on a ratio of eigenvalues, and its response values range from 0 to 1. Figure 3a presents the green channel, and Figure 3b–g show the images obtained by applying the Jerman filter to the green channel for different values of τ. Figure 4 displays the filtered images after the disk is removed.

Figure 3.

Comparison of green channel- and Jerman-filtered images with different τ values. (a) Green channel, (b) τ = 0.5, (c) τ = 0.6, (d) τ = 0.7, (e) τ = 0.8, (f) τ = 0.9, (g) τ = 1.

Figure 4.

Retinal images obtained from a Jerman filter after the disks are removed for different τ values.

2.1.2. Enhancement of Vasculature by Curvelet Transform

The Jerman-filtered images are further processed with the curvelet transform. The reasons for choosing the curvelet transform are explained below.

In the curvelet transform, curvelets are designed to capture curves using only a small number of coefficients, so curve discontinuities are handled well. The main advantages of the curvelet transform are its sensitivity to directional edges and contours and its ability to represent them with a few sparse nonzero coefficients. Thus, compared with the wavelet transform, the curvelet transform can represent edges and curves efficiently with fewer coefficients. Furthermore, the curvelet transform can be used to enhance the contrast of an image by highlighting its edges at several scales and orientations.

The details of mathematical formulations are discussed as follows.

Donoho and Duncan (2000) described a curvelet transform derived from the ridgelet transform [8]. The curvelet transform is well suited to objects that are smooth away from discontinuities along curves. The multiwavelet transformation offers better spatial and spectral localization of an image than other multiscale representations. Here, the curvelet transform via wrapping is used because it is faster and computationally less complex. In this method, the Fourier plane is divided into concentric rings, referred to as scales, and each ring is further divided into angular segments, referred to as orientations. Each combination of scale and angular segment forms a parabolic wedge. Because these wedges capture structural activity in the frequency domain, high anisotropy and directional sensitivity are inherent characteristics of the curvelet transform. The curvelet coefficients are then obtained by applying the inverse FFT to each scale and angle. The curvelet transform consists of four stages and is implemented as follows.
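To make the scale and orientation tiling concrete, the Python sketch below labels every FFT sample of an n × n image with a (scale, wedge) pair. It is a deliberately simplified, hard-edged tiling for illustration: it uses dyadic Cartesian (square) coronae, as the wrapping implementation of the fast discrete curvelet transform does, but a fixed number of angles per scale, whereas the actual transform uses smooth windows and increases the number of angles at finer scales. The function name `wedge_labels` and its defaults are hypothetical.

```python
import numpy as np


def wedge_labels(n, n_scales=4, n_angles=16):
    """Label each 2D FFT sample of an n x n image with a (scale, wedge) pair.

    Simplified hard-edged tiling for illustration: scale 0 is the central
    low-pass square, scales 1..n_scales-1 are dyadic Cartesian coronae, and
    every corona is cut into n_angles equal angular sectors.
    """
    freqs = np.fft.fftshift(np.fft.fftfreq(n))           # cycles/sample in [-0.5, 0.5)
    w1, w2 = np.meshgrid(freqs, freqs, indexing="ij")
    radius = np.maximum(np.abs(w1), np.abs(w2))           # square "radius" of each sample
    edges = 0.5 / 2.0 ** np.arange(n_scales - 1, 0, -1)   # e.g. [1/16, 1/8, 1/4]
    scale = np.searchsorted(edges, radius, side="right")  # 0 = coarsest, n_scales-1 = finest
    angle = np.mod(np.arctan2(w2, w1), 2.0 * np.pi)
    wedge = np.floor(angle / (2.0 * np.pi / n_angles)).astype(int)
    wedge = np.minimum(wedge, n_angles - 1)               # guard against angle == 2*pi
    wedge[scale == 0] = 0                                 # coarse scale is not split by angle
    return scale, wedge


scale, wedge = wedge_labels(64)
print(np.unique(scale), np.unique(wedge[scale == 3]).size)
```

Each labelled region corresponds to one group of curvelet coefficients; in the real transform, the windowed and wrapped data of each wedge is passed through an inverse FFT to obtain that group.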

First, in the subband decomposition stage, the image is decomposed into log2 N wavelet subbands (N being the image size), and curvelet subbands are then formed by partial reconstruction from these wavelet subbands at various levels. The subband decomposition is denoted as:

$$f \mapsto \left(P_0 f,\ \Delta_1 f,\ \Delta_2 f,\ \ldots\right)$$

where P0 is a low-pass filter and Δ1, Δ2, … are band-pass (high-pass) filters.
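The subband decomposition stage can be illustrated with a layered split whose parts add back up to the image. In this Python sketch, difference-of-Gaussians bands stand in for the wavelet filters of the actual transform; that substitution, the σ = 2^s schedule, and the function name `subband_decompose` are illustrative assumptions.

```python
import numpy as np
from scipy.ndimage import gaussian_filter


def subband_decompose(f, n_levels=4):
    """Split f into a low-pass layer P0 f and band-pass layers Delta_s f.

    Difference-of-Gaussians bands stand in for the wavelet filters of the
    actual transform (an illustrative substitution). The layers telescope,
    so P0 f + sum_s Delta_s f reproduces f.
    """
    f = np.asarray(f, dtype=float)
    smoothed = [f] + [gaussian_filter(f, sigma=2.0**s) for s in range(n_levels)]
    bands = [smoothed[s] - smoothed[s + 1] for s in range(n_levels)]  # finest band first
    lowpass = smoothed[-1]
    return lowpass, bands


rng = np.random.default_rng(0)
image = rng.random((32, 32))
low, bands = subband_decompose(image, n_levels=3)
print(len(bands), np.allclose(low + sum(bands), image))
```

The exact reconstruction is what allows each band to be processed separately in the later stages and recombined afterwards.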

The image is thus divided into resolution layers Δs f, each containing details at different frequencies. In the next stage, smooth partitioning, every subband is smoothly windowed into ‘squares’ of a suitable size. The grid of dyadic squares is defined as:

$$Q_{(s,k_1,k_2)} = \left[\frac{k_1}{2^s}, \frac{k_1+1}{2^s}\right) \times \left[\frac{k_2}{2^s}, \frac{k_2+1}{2^s}\right) \tag{10}$$

Let P be a smooth windowing function. For every dyadic square I, P_I is a displacement of P localized near I. Multiplying Δs f by P_I yields a smooth dissection of the function into ‘squares’:

$$h_I = P_I\,\Delta_s f \tag{11}$$

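This stage applies the windowing partition to each subband isolated in the previous step:

$$\Delta_s f \mapsto \left(P_I\,\Delta_s f\right)_{I \in \mathcal{Q}_s} \tag{12}$$

where $\mathcal{Q}_s$ is the set of dyadic squares at scale s.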

In the next stage, renormalization, every resulting square is renormalized to unit scale. For a dyadic square I = Q(s, k1, k2), the operator T_I is defined below; its inverse transports and renormalizes f so that the part of the input supported near I becomes the part of the output supported near the unit square.

$$(T_I f)(x_1, x_2) = 2^{s} f\left(2^{s} x_1 - k_1,\ 2^{s} x_2 - k_2\right) \tag{13}$$

Each square obtained in the previous stage is thus renormalized to unit scale:

$$g_I = T_I^{-1} h_I \tag{14}$$

where T_I is the transport operator and T_I^{-1} is its inverse.
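The dyadic squares of Eq. (10) and the transport operator of Eq. (13) can be checked on ordinary Python functions of two variables. In this sketch, `dyadic_square` and `transport_operators` are hypothetical helper names; T_inv is derived by inverting Eq. (13), so composing it with T returns the original function.

```python
from fractions import Fraction


def dyadic_square(s, k1, k2):
    """Eq. (10): the square Q(s, k1, k2) as exact (x1-interval, x2-interval)."""
    h = Fraction(1, 2**s)
    return (k1 * h, (k1 + 1) * h), (k2 * h, (k2 + 1) * h)


def transport_operators(s, k1, k2):
    """Return (T, T_inv) for the square Q(s, k1, k2), acting on callables f(x1, x2).

    T follows Eq. (13): (T f)(x1, x2) = 2**s * f(2**s x1 - k1, 2**s x2 - k2).
    T_inv maps a function living near the square to one living near the unit square.
    """
    scale = 2.0**s

    def T(f):
        return lambda x1, x2: scale * f(scale * x1 - k1, scale * x2 - k2)

    def T_inv(g):
        return lambda y1, y2: g((y1 + k1) / scale, (y2 + k2) / scale) / scale

    return T, T_inv


# Renormalize the indicator of Q(2, 1, 3) = [1/4, 1/2) x [3/4, 1)
(a1, b1), (a2, b2) = dyadic_square(2, 1, 3)
T, T_inv = transport_operators(2, 1, 3)
h = lambda x1, x2: 1.0 if (a1 <= x1 < b1 and a2 <= x2 < b2) else 0.0
g = T_inv(h)
print(g(0.5, 0.5), g(1.5, 0.5))
```

After renormalization, the indicator of the small square becomes a function supported on the unit square (scaled by 2^−s), which is the form the subsequent analysis operates on.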

Finally, the inverse curvelet transform is applied to obtain the curvelet-enhanced image.

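The digital curvelet transform of a 2D image f(x, y), with 0 < x ≤ M and 0 < y ≤ N, gives a set of curvelet coefficients:

$$C(s, \theta, k_1, k_2) = \sum_{0 < x \le M}\ \sum_{0 < y \le N} f(x, y)\, \overline{\varphi_{s,\theta,k_1,k_2}(x, y)} \tag{15}$$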

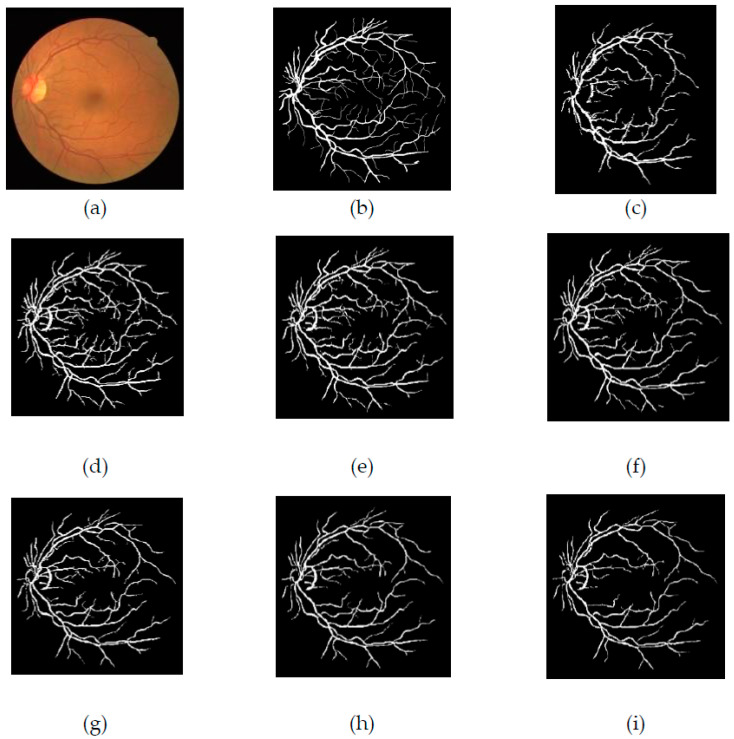

Here, s is the scale (the decomposition level), θ is the orientation, k1 and k2 give the spatial location of the curvelet, φ is the curvelet function, and f(x, y) is the image in the spatial domain. As the decomposition level increases, the curvelets become thinner and sharper. The general steps of the curvelet transform are shown schematically in Figure 5, and the enhanced images obtained with the curvelet transform are presented in Figure 6. Figure 6b shows the curvelet-transformed green channel, and Figure 6c–h show the retinal images enhanced by the Jerman filter combined with the curvelet transform.

Figure 5.

General steps of curvelet transform.

Figure 6.

Effect of curvelet transform on green channel- and Jerman-filtered images (a) Green channel, (b) Curvelet transformed image of green channel, (c–h) Jerman filter integrated with curvelet transform for different values of τ.

2.2. Mean-C Thresholding

In this research, the mean-C thresholding method is used, in which a threshold is computed for every pixel from local statistics such as the mean or median, so the threshold changes from pixel to pixel. The main benefit of this approach is that it can be applied to unevenly illuminated images. The steps of mean-C thresholding are described below.

The mean-C thresholding proceeds in two steps: background elimination and vessel segmentation. For background elimination, a mean image is first generated by convolving the enhanced image with a mean filter of window size W. This averaging filter smooths the background of the poorly illuminated image. The mean-filtered image is then subtracted from the enhanced image to produce a difference image, which is binarised with an appropriate threshold value C. The values of the parameters W and C are chosen empirically [58].

Initially, a mean filter with window size W × W is chosen.

The transformed image obtained from all the previous processes is convolved with the mean filter.

The difference between the convolved image and the transformed image gives a new difference image.

The difference image is thresholded with the constant value C, which is fixed experimentally at 0.039.

The complement of the thresholded image is computed.
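The thresholding steps above can be sketched in a few lines of Python. Assumptions: the enhanced image is scaled to [0, 1]; the window size W = 15 is an illustrative value, since W is not reported in this section; and vessel polarity is handled by a flag instead of an explicit complement, because which side of the difference image corresponds to vessels depends on conventions the text does not spell out. `mean_c_threshold` is a hypothetical name.

```python
import numpy as np
from scipy.ndimage import uniform_filter


def mean_c_threshold(enhanced, window=15, c=0.039, vessels_bright=True):
    """Mean-C thresholding: compare each pixel with its local W x W mean.

    enhanced       : 2D array, assumed scaled to [0, 1]
    window         : W, side of the square mean filter (illustrative value)
    c              : constant C (0.039 in the text)
    vessels_bright : polarity of vessels in the enhanced image (assumption);
                     flipping it plays the role of the complement step.
    """
    img = np.asarray(enhanced, dtype=float)
    local_mean = uniform_filter(img, size=window, mode="reflect")  # mean image
    diff = img - local_mean if vessels_bright else local_mean - img  # difference image
    return diff > c  # True marks vessel pixels


# A bright one-pixel "vessel" on a dark background
img = np.zeros((40, 40))
img[:, 10] = 1.0
mask = mean_c_threshold(img)
print(mask[:, 10].all(), mask[:, 30].any())
```

Because the threshold is measured relative to the local mean, a slowly varying illumination gradient shifts the pixel and its mean together and largely cancels in the difference image.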

2.3. Summary of the Proposed Method

The summary of the contribution of the suggested method is given below.

Step 1: Extract the green channel from the fundus image.

Step 2: Apply the Jerman filter with different values of τ, the cut-off threshold between 0 and 1. In the experiments, τ varies from 0.5 to 1.

Step 3: Apply the curvelet transform to the Jerman-filter-enhanced images to improve the performance of the curvelet transform.

Step 4: Apply a curvelet decomposition with s = 5 levels and θ = 16 orientations to each image individually (as described in Equation (15)). This gives a set of curvelet coefficients for each decomposition level (i.e., for s = 1, 2, …, 5). As the level of decomposition increases, the curvelets become thinner and finer; hence, s = 1 corresponds to the coarse region, and s = 5 corresponds to the finest, high-frequency region. Increasing θ increases the number of coefficients significantly. Hence, the number of decomposition levels and orientations are chosen carefully to achieve the best representation of curves and singularities with minimum complexity.

Step 5: Image enhancement is then performed by modifying the curvelet coefficients so that the high-frequency curvatures and edges are emphasized and the low-frequency coarse regions are de-emphasized. This coefficient modification scheme is unique and specific to the problem. All coefficients for s = 1 are set to zero, and the coefficients for all other values of s are multiplied by α, which is fixed at 1.2.

Step 6: Apply the inverse curvelet transform to the modified set of curvelet coefficients to produce the enhanced image. Because the coefficient modification sets all coarse-level coefficients to zero and amplifies the fine-level coefficients, the background appears darker in the reconstructed image, and the curvatures and edges are highlighted. Various authors have suggested other strategies for coefficient modification; however, the suggested modification is simple and straightforward and gives efficient enhancement.

Step 7: Apply mean-C thresholding to the curvelet-enhanced images to produce the vessel network.

Step 8: The mean-C thresholding result contains some small, disconnected, vessel-like structures, which may be due to noise. Thus, postsegmentation fine-tuning is performed with a morphological opening operation, which removes these artefacts.
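Steps 5 and 8 can be sketched independently of any particular curvelet library. In the Python sketch below, the coefficients are assumed to be stored as a list indexed by level (entry 0 is s = 1), each holding a list of per-orientation arrays; this nested layout is an illustrative assumption, not the API of a specific package. The 3 × 3 structuring element for the opening is also an assumed value, since the text does not state its size, and both function names are hypothetical.

```python
import numpy as np
from scipy.ndimage import binary_opening


def modify_curvelet_coefficients(coeffs, alpha=1.2):
    """Step 5: zero the coarsest level (s = 1) and scale all finer levels by alpha.

    coeffs is assumed to be a list indexed by level (coeffs[0] is s = 1), each
    entry being a list of per-orientation arrays. This nested layout is an
    assumption for illustration, not the API of a specific curvelet package.
    """
    modified = []
    for level, wedges in enumerate(coeffs):
        factor = 0.0 if level == 0 else alpha
        modified.append([factor * np.asarray(w) for w in wedges])
    return modified


def remove_small_artefacts(mask, size=3):
    """Step 8: morphological opening with a size x size square (size is assumed)."""
    structure = np.ones((size, size), dtype=bool)
    return binary_opening(np.asarray(mask, dtype=bool), structure=structure)


mask = np.zeros((20, 20), dtype=bool)
mask[2, 2] = True          # isolated noise pixel
mask[8:13, 8:13] = True    # larger connected structure
opened = remove_small_artefacts(mask)
print(opened[2, 2], opened[8:13, 8:13].all())
```

An opening with a 3 × 3 square also erases any structure narrower than three pixels, including genuine thin capillaries, so the element size trades artefact removal against loss of fine vessels.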

3. Results

The performance of the recommended approach is analysed and compared with other approaches on the publicly available DRIVE and CHASE_DB1 databases. The DRIVE images are divided into a training set and a test set, each consisting of 20 colour retinal images with corresponding masks and manual segmentations (the test images are segmented manually by two observers). The manual segmentation of the first observer is treated as the ground truth. The training set is usually used to train supervised methods; because the suggested scheme is unsupervised, only the test set is considered.

The CHASE_DB1 (Child Heart and Health Study in England) dataset contains retinal images of both eyes of children. The images were taken with a 30° field of view at a resolution of 960 × 999 pixels using a hand-held NM-200-D fundus camera. They exhibit nonuniform background illumination and poorly contrasted blood vessels. The segmentations of the first of the two observers are used as the ground truth.

To analyse and quantify the method’s efficiency, the segmented result is compared with the ground truth, and performance measures such as sensitivity, specificity, accuracy, and precision are computed as defined below. Accuracy is the ability of the algorithm to classify vessel and nonvessel pixels correctly; it is the proportion of true positives and true negatives among all evaluated pixels and reflects the overall correctness of the segmentation result:

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{16}$$

Sensitivity quantifies the ability of the method to identify vessel pixels correctly:

$$\mathrm{Sensitivity} = \frac{TP}{TP + FN} \tag{17}$$

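Specificity measures the ability to identify background pixels correctly, and precision is the fraction of pixels labelled as vessel that are true vessel pixels:

$$\mathrm{Specificity} = \frac{TN}{TN + FP} \tag{18}$$

$$\mathrm{Precision} = \frac{TP}{TP + FP} \tag{19}$$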

where TP, TN, FP, and FN are defined as follows:

True positive (TP): the number of pixels correctly identified as vessel.

False positive (FP): the number of pixels incorrectly identified as vessel.

True negative (TN): the number of pixels correctly identified as background.

False negative (FN): the number of pixels incorrectly identified as background.
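The four measures follow directly from the pixel counts. This Python sketch computes them from binary prediction and ground-truth masks; restricting the counts to the field-of-view (FOV) mask is a common practice assumed here, since the text does not say which pixels are evaluated, and `segmentation_metrics` is a hypothetical name.

```python
import numpy as np


def segmentation_metrics(pred, truth, fov=None):
    """Sensitivity, specificity, accuracy, and precision from binary masks.

    pred, truth : arrays of the same shape (nonzero = vessel)
    fov         : optional boolean mask of pixels to evaluate (assumption:
                  counts are restricted to the field of view when given)
    """
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    if fov is None:
        fov = np.ones(pred.shape, dtype=bool)
    p, t = pred[fov], truth[fov]
    tp = np.sum(p & t)    # vessel pixels labelled vessel
    tn = np.sum(~p & ~t)  # background pixels labelled background
    fp = np.sum(p & ~t)   # background pixels labelled vessel
    fn = np.sum(~p & t)   # vessel pixels labelled background
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "precision": tp / (tp + fp),
    }


print(segmentation_metrics([1, 1, 0, 0], [1, 0, 1, 0]))
```

If a mask contains no predicted or no true vessel pixels, the corresponding ratio becomes 0/0, which NumPy reports as nan with a warning.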

The first experiment is carried out with the original curvelet transform on both the DRIVE and CHASE_DB1 databases. Table 1 shows the performance measures of retinal blood vessel segmentation using the original curvelet transform with mean-C thresholding on the DRIVE database. The average sensitivity, specificity, and accuracy are 0.6687, 0.9835, and 0.9557, respectively. Similarly, Table 2 shows the performance measures of the original curvelet transform on the CHASE_DB1 database, for which the average sensitivity, specificity, and accuracy are 0.6160, 0.9694, and 0.9432, respectively.

Table 1.

Performance evaluation of the original curvelet transform on the DRIVE database.

| Image | Sensitivity | Specificity | Accuracy | Precision |
|---|---|---|---|---|
| Retina 1 | 0.689029 | 0.983848 | 0.957543 | 0.806914 |
| Retina 2 | 0.681326 | 0.98826 | 0.956828 | 0.868788 |
| Retina 3 | 0.662299 | 0.982472 | 0.950555 | 0.807091 |
| Retina 4 | 0.612176 | 0.994229 | 0.959083 | 0.914874 |
| Retina 5 | 0.625776 | 0.99155 | 0.957283 | 0.884459 |
| Retina 6 | 0.619286 | 0.98656 | 0.950812 | 0.832454 |
| Retina 7 | 0.645363 | 0.982846 | 0.952006 | 0.790952 |
| Retina 8 | 0.646095 | 0.981693 | 0.952819 | 0.768638 |
| Retina 9 | 0.64762 | 0.985057 | 0.95771 | 0.792622 |
| Retina 10 | 0.619679 | 0.989112 | 0.958707 | 0.836174 |
| Retina 11 | 0.663699 | 0.98082 | 0.952431 | 0.772854 |
| Retina 12 | 0.690874 | 0.979726 | 0.954785 | 0.763055 |
| Retina 13 | 0.560464 | 0.990786 | 0.948715 | 0.868271 |
| Retina 14 | 0.751659 | 0.969751 | 0.952118 | 0.686101 |
| Retina 15 | 0.714534 | 0.973168 | 0.954658 | 0.672419 |
| Retina 16 | 0.680373 | 0.984199 | 0.956767 | 0.810371 |
| Retina 17 | 0.665984 | 0.977015 | 0.950761 | 0.727612 |
| Retina 18 | 0.703259 | 0.97927 | 0.957401 | 0.744855 |
| Retina 19 | 0.804903 | 0.986169 | 0.971133 | 0.840365 |
| Retina 20 | 0.69116 | 0.983526 | 0.962026 | 0.769065 |

Table 2.

Performance evaluation of the original curvelet transform on the CHASE_DB1 database.

| Image | Sensitivity | Specificity | Accuracy | Precision |
|---|---|---|---|---|
| Retina 1 | 0.605098 | 0.969179 | 0.943788 | 0.595448 |
| Retina 2 | 0.588396 | 0.957322 | 0.923329 | 0.526972 |
| Retina 3 | 0.670129 | 0.969808 | 0.946311 | 0.653783 |
| Retina 4 | 0.622163 | 0.978558 | 0.947489 | 0.697793 |
| Retina 5 | 0.632404 | 0.971317 | 0.943688 | 0.651083 |
| Retina 6 | 0.582326 | 0.978046 | 0.944288 | 0.66521 |
| Retina 7 | 0.609928 | 0.968976 | 0.94101 | 0.624149 |
| Retina 8 | 0.613154 | 0.972105 | 0.947597 | 0.595198 |
| Retina 9 | 0.600965 | 0.970603 | 0.951395 | 0.567225 |
| Retina 10 | 0.597453 | 0.963862 | 0.937151 | 0.588464 |
| Retina 11 | 0.619103 | 0.961833 | 0.940899 | 0.556381 |
| Retina 12 | 0.592585 | 0.965196 | 0.937655 | 0.564901 |
| Retina 13 | 0.597962 | 0.973663 | 0.947481 | 0.576893 |
| Retina 14 | 0.692803 | 0.972191 | 0.95296 | 0.648084 |
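
The CHASE_DB1 averages quoted in the text can be checked directly against the per-image values in Table 2. The short script below (variable and function names are ours) averages each column and also truncates the result to four decimals; the means agree with the reported 0.6160, 0.9694, and 0.9432 once the fourth decimal is truncated rather than rounded.

```python
import math
from statistics import mean

# Per-image values transcribed from Table 2 (original curvelet transform, CHASE_DB1).
TABLE_2 = {
    "sensitivity": [0.605098, 0.588396, 0.670129, 0.622163, 0.632404, 0.582326,
                    0.609928, 0.613154, 0.600965, 0.597453, 0.619103, 0.592585,
                    0.597962, 0.692803],
    "specificity": [0.969179, 0.957322, 0.969808, 0.978558, 0.971317, 0.978046,
                    0.968976, 0.972105, 0.970603, 0.963862, 0.961833, 0.965196,
                    0.973663, 0.972191],
    "accuracy": [0.943788, 0.923329, 0.946311, 0.947489, 0.943688, 0.944288,
                 0.94101, 0.947597, 0.951395, 0.937151, 0.940899, 0.937655,
                 0.947481, 0.95296],
}


def truncate(value, digits=4):
    """Cut (rather than round) a value to the given number of decimals."""
    factor = 10**digits
    return math.floor(value * factor) / factor


averages = {name: mean(values) for name, values in TABLE_2.items()}
for name, value in averages.items():
    print(f"{name}: mean = {value:.6f}, truncated = {truncate(value):.4f}")
```

The mean specificity is about 0.96948, which rounds to 0.9695 but truncates to the 0.9694 quoted above.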

The second experiment evaluates the proposed curvelet transform integrated with the Jerman filter. As shown in Table 3, the average sensitivity, specificity, and accuracy improve to 0.7528, 0.9933, and 0.9600, respectively, on the DRIVE database, and to 0.7078, 0.9850, and 0.9559, respectively, on the CHASE_DB1 database. All performance parameters are calculated for τ values between 0.5 and 1, and the average values are computed from the highest values achieved over these τ values. Although the cut-off threshold τ can in principle be varied from zero to one, it is observed experimentally that a τ value below 0.5 suppresses elongated structures, whereas a high τ value suppresses the bifurcation response. The best segmentation is obtained at the lower end of this range, i.e., τ = 0.5, and the segmentation quality degrades as τ increases. Because illumination varies from image to image, several τ values are used in the experiment, so that each image can be enhanced and a suitable τ fixed. The enhancement function of the multiscale filter (Function (1)) is computed over scales from s_min = 3 to s_max = 16 pixels with a step of 0.5.
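
The role of τ can be made concrete with a short sketch of a 2D Jerman-style vesselness filter. This is a minimal illustration based on our reading of the 2D formulation in Jerman et al. [4], not the authors' implementation: the Hessian eigenvalue of larger magnitude, λ2, is regularized to λρ by lifting positive values below τ·max(λ2) to τ·max(λ2); the response λ2²(λρ − λ2)·27/(λ2 + λρ)³ is saturated to 1 where λ2 ≥ λρ/2; and the maximum is taken over scales. The function name `jerman_vesselness_2d`, the `bright_on_dark` flag, and the use of SciPy Gaussian derivatives are our assumptions, and the curvelet stage is not shown.

```python
import numpy as np
from scipy import ndimage


def jerman_vesselness_2d(image, sigmas, tau=0.5, bright_on_dark=True):
    """Hypothetical 2D Jerman-style vesselness sketch (not the authors' code).

    image: 2D array; sigmas: Gaussian scales in pixels; tau: cut-off in (0, 1].
    bright_on_dark=True targets bright vessels on a dark background.
    """
    image = np.asarray(image, dtype=float)
    response = np.zeros_like(image)
    for s in sigmas:
        # Scale-normalised second derivatives d2/dr2, d2/drdc, d2/dc2.
        hrr = s**2 * ndimage.gaussian_filter(image, s, order=(2, 0))
        hrc = s**2 * ndimage.gaussian_filter(image, s, order=(1, 1))
        hcc = s**2 * ndimage.gaussian_filter(image, s, order=(0, 2))
        # Closed-form eigenvalues of the symmetric 2x2 Hessian.
        half_trace = 0.5 * (hrr + hcc)
        root = np.sqrt((0.5 * (hrr - hcc)) ** 2 + hrc**2)
        e1, e2 = half_trace + root, half_trace - root
        # lambda2: eigenvalue of larger magnitude (curvature across the vessel).
        lam2 = np.where(np.abs(e1) >= np.abs(e2), e1, e2)
        if bright_on_dark:
            lam2 = -lam2  # bright ridges have negative curvature; make it positive
        # Regularisation: small positive values are lifted to tau * max(lambda2).
        cutoff = tau * lam2.max()
        lam_rho = np.where((lam2 > 0) & (lam2 <= cutoff), cutoff, lam2)
        lam_rho = np.where(lam2 <= 0, 0.0, lam_rho)
        with np.errstate(divide="ignore", invalid="ignore"):
            # (3 / (lam2 + lam_rho))**3 written as 27 / (lam2 + lam_rho)**3.
            v = lam2**2 * (lam_rho - lam2) * 27.0 / (lam2 + lam_rho) ** 3
        v[(lam2 >= lam_rho / 2) & (lam_rho > 0)] = 1.0  # saturate strong responses
        v[(lam2 <= 0) | (lam_rho <= 0)] = 0.0          # no vessel-like curvature
        v[~np.isfinite(v)] = 0.0
        response = np.maximum(response, v)             # keep the best scale
    return response
```

To mirror the scales quoted above one could pass `sigmas=np.arange(3.0, 16.5, 0.5)`, although whether the paper's s denotes the Gaussian σ used here is an assumption. For a green-channel fundus image, where vessels are darker than the background, `bright_on_dark=False` (or an inverted image) would be the appropriate setting.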

Table 3.

Performance comparison of the suggested approach with approaches from the literature.

| Approach | Year | Sensitivity (DRIVE) | Sensitivity (CHASE_DB1) | Specificity (DRIVE) | Specificity (CHASE_DB1) | Accuracy (DRIVE) | Accuracy (CHASE_DB1) |
|---|---|---|---|---|---|---|---|
| Kar et al. [12] | 2016 | 0.7548 | -- | 0.9792 | -- | 0.9616 | -- |
| Azzopardi et al. [40] | 2015 | 0.7655 | 0.7585 | 0.9704 | 0.9587 | 0.9442 | 0.9387 |
| Mapayi et al. [41] | 2015 | 0.7650 | -- | 0.9724 | -- | 0.9511 | -- |
| Zhao et al. [42] | 2015 | 0.742 | -- | 0.982 | -- | 0.954 | -- |
| Zhang et al. [43] | 2016 | 0.7473 / 0.7743 | 0.7626 / 0.7277 | 0.9764 / 0.9725 | 0.9661 / 0.9712 | 0.9474 / 0.9476 | 0.945 / -- |
| Tan et al. [44] | 2016 | 0.7743 | 0.7626 | 0.9725 | 0.9661 | 0.9476 | 0.9452 |
| Farokhian et al. [45] | 2017 | 0.693 | -- | 0.979 | -- | 0.939 | -- |
| Orlando et al. [46] | 2017 | 0.7897 | 0.7277 | 0.9684 | 0.9712 | -- | -- |
| Rodrigues and Marengoni [47] | 2017 | 0.7223 | -- | 0.9636 | -- | 0.9472 | -- |
| Jiang et al. [48] | 2018 | 0.7121 | 0.7217 | 0.9832 | 0.9770 | 0.9593 | 0.9591 |
| Khomri et al. [49] | 2018 | 0.739 | -- | 0.974 | -- | 0.945 | -- |
| Memari et al. [50] | 2019 | 0.761 | 0.738 | 0.981 | 0.968 | 0.961 | 0.939 |
| Sundaram et al. [51] | 2019 | 0.69 | 0.71 | 0.94 | 0.96 | 0.93 | 0.95 |
| Dash and Senapati [52] | 2020 | 0.7403 | -- | 0.9905 | -- | 0.9661 | -- |
| Dash et al. [53] | 2020 | 0.7203 | 0.6454 | 0.9871 | 0.9799 | 0.9581 | 0.9609 |
| Original curvelet transform | -- | 0.6687 | 0.6160 | 0.9835 | 0.9694 | 0.9557 | 0.9432 |
| Suggested approach (Jerman filter integrated with curvelet transform) | -- | 0.7528 | 0.7078 | 0.9933 | 0.9850 | 0.9600 | 0.9559 |

4. Discussion

The images of the DRIVE and CHASE_DB1 databases segmented by the suggested approach are shown in Figure 7 and Figure 8, respectively. Figure 7a shows the first original retinal image of the DRIVE database, and Figure 7b is the corresponding ground truth image. Figure 7c shows the vessels extracted with the curvelet transform enhancement method. Figure 7d–i show the vessels extracted by the suggested method for different τ values of the Jerman filter. The figures show clearly that, as τ increases from 0.5 to 1 in steps of 0.1, small branching vessels become disconnected from the main vessels and the accuracy decreases correspondingly. With τ = 0.5, vessel connectivity is well preserved, as can be seen in Figure 7d. Furthermore, comparing the extracted vessels in Figure 7d with the ground truth in Figure 7b shows that thin vessels appear more prominently in Figure 7d.
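
The fading of thin branches as τ grows is consistent with how τ enters the 2D Jerman response, as we understand it from Jerman et al. [4]. The sketch below evaluates that response for a single pixel whose cross-vessel eigenvalue λ2 is 20% of the image maximum, a hypothetical weak, thin vessel; the function name `jerman_response` and the numbers are illustrative only and are not taken from the experiments.

```python
def jerman_response(lam2, tau, lam2_max):
    """Hypothetical scalar form of the 2D Jerman response for one pixel.

    lam2: cross-vessel Hessian eigenvalue (sign set so that vessels are > 0);
    lam2_max: maximum of lam2 over the image; tau: cut-off threshold.
    """
    if lam2 <= 0:
        return 0.0
    # Regularised eigenvalue: weak responses are lifted to tau * lam2_max.
    lam_rho = max(lam2, tau * lam2_max)
    if lam2 >= lam_rho / 2:
        return 1.0  # strong relative to lam_rho: saturated response
    return lam2**2 * (lam_rho - lam2) * 27.0 / (lam2 + lam_rho) ** 3


for tau in (0.5, 0.6, 0.7, 0.8, 0.9, 1.0):
    print(f"tau = {tau:.1f}: response = {jerman_response(0.2, tau, 1.0):.3f}")
```

For this pixel the response falls from about 0.94 at τ = 0.5 to exactly 0.5 at τ = 1, because a larger τ raises λρ and makes the weak λ2 look relatively smaller; after thresholding, such pixels are the first to drop out, which breaks the connection between thin branches and the main vessels.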

Figure 7.

(a) Original image of DRIVE dataset, (b) Ground truth image, (c) Segmented image from original curvelet, (d) Segmented image of the suggested technique with τ = 0.5, (e) Segmented image of the suggested technique with τ = 0.6, (f) Segmented image of the suggested technique with τ = 0.7, (g) Segmented image of the suggested technique with τ = 0.8, (h) Segmented image of the suggested technique with τ = 0.9, (i) Segmented image of the suggested technique with τ = 1.

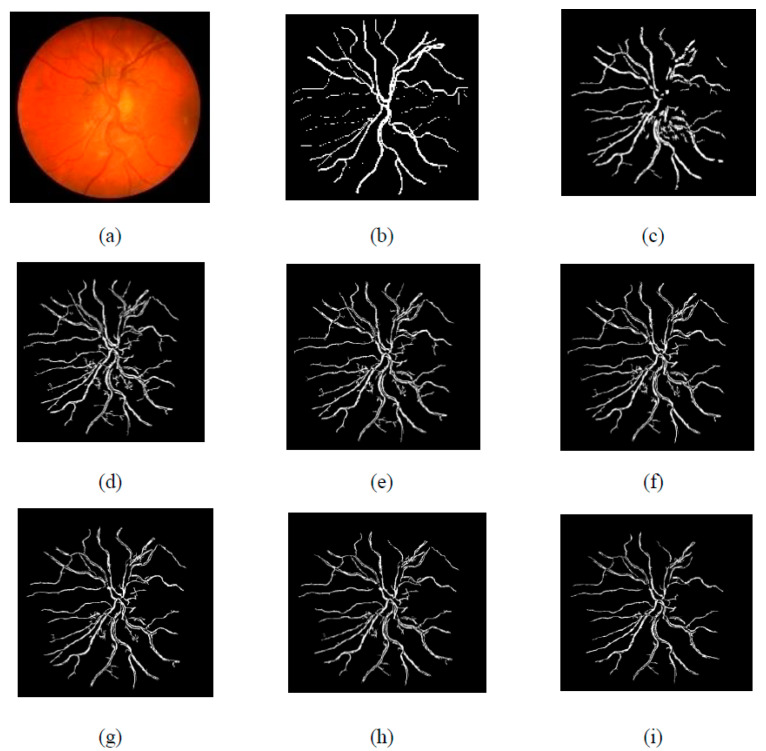

Figure 8.

(a) Original image of CHASE_DB1 dataset, (b) Ground truth image, (c) Segmented image from original curvelet, (d) Segmented image of the suggested technique with τ = 0.5, (e) Segmented image of the suggested technique with τ = 0.6, (f) Segmented image of the suggested technique with τ = 0.7, (g) Segmented image of the suggested technique with τ = 0.8, (h) Segmented image of the suggested technique with τ = 0.9, (i) Segmented image of the suggested technique with τ = 1.

In Figure 8, Figure 8a shows the first original retinal image of the CHASE_DB1 database, and Figure 8b is the corresponding ground truth image. Figure 8c shows the vessels extracted with the curvelet transform enhancement method, and Figure 8d–i show the vessels extracted by the suggested method for different τ values of the Jerman filter. All observations made for the DRIVE database in Figure 7 apply equally to the CHASE_DB1 results in Figure 8.

Thus, it is observed experimentally that the existing curvelet transform enhancement method fails to extract many thin vessels accurately. A main advantage of the suggested approach is that it requires no postprocessing module after segmentation.

Table 3 compares the performance measures of the suggested approach (computed with τ = 0.5) with those of other approaches presented in the literature. The performance of the proposed technique on the DRIVE and CHASE_DB1 datasets is compared in terms of sensitivity, specificity, and accuracy with the techniques of Kar et al. [12], Azzopardi et al. [40], Mapayi et al. [41], Zhao et al. [42], Zhang et al. [43], Tan et al. [44], Farokhian et al. [45], Orlando et al. [46], Rodrigues and Marengoni [47], Jiang et al. [48], Khomri et al. [49], Memari et al. [50], Sundaram et al. [51], Dash and Senapati [52], and Dash et al. [53]. The comparison shows that the suggested method achieves higher accuracy than many state-of-the-art methods while retaining comparable sensitivity and specificity values.

Since the aim of this work is to improve the traditional curvelet transform, Table 3 shows a clear improvement of the suggested method over the traditional curvelet transform technique on both the DRIVE and CHASE_DB1 databases. Furthermore, among the compared state-of-the-art methods, the suggested approach achieves the highest specificity on both databases, with accuracy comparable to the best of them.

5. Conclusions

This paper proposes a new technique that makes the curvelet transform approach more robust for retinal blood vessel segmentation by integrating it with the Jerman filter, evaluated for different cut-off threshold values (τ) of the Jerman filter. Mean-C thresholding is employed for segmentation. The integration of the Jerman filter and curvelet decomposition strongly enhances both thick and thin vessels and hence delivers better segmentation performance than the original curvelet transform. Simulation results on two public databases, DRIVE and CHASE_DB1, show that the suggested integrated scheme detects blood vessels effectively, achieving the highest specificity among the compared approaches together with competitive accuracy. The achieved average sensitivity, specificity, and accuracy of the segmented images are 0.7528, 0.9933, and 0.9600 on DRIVE and 0.7078, 0.9850, and 0.9559 on CHASE_DB1, respectively.

Additionally, because the proposed approach is an unsupervised technique that does not need any training data, it may be suitable for real-time scenarios.

Although the segmentation performance is promising, it could be improved further by selecting an optimal set of curvelet coefficients. In future work, the approach can be extended to classify abnormal and healthy images by incorporating deep-learning-based classifiers.

Acknowledgments

Jana Shafi would like to thank the Deanship of Scientific Research, Prince Sattam bin Abdul Aziz University, for supporting this work.

Author Contributions

The individual contributions are as follows: Conceptualization, S.D., S.V., K. and M.S.K.; Data curation, K., M.S.K., M.W. and J.S.; Formal analysis, S.D., J.S., S.V., M.F.I. and M.W.; Funding acquisition, M.F.I. and M.W.; Investigation, S.D., S.V., K., M.S.K., M.W. and M.F.I.; Methodology, S.D., S.V., K., M.S.K., M.W., J.S. and M.F.I.; Project administration, M.F.I. and M.W.; Resources, M.F.I., M.W. and J.S.; Software, S.D., S.V., K. and M.S.K.; Supervision, J.S., S.V., M.F.I. and M.W.; Validation, S.D., S.V., K., M.S.K., J.S., M.F.I. and M.W. All authors have read and agreed to the published version of the manuscript.

Funding

The authors acknowledge contribution to this project from the Rector of the Silesian University of Technology under a pro-quality grant no. 09/020/RGJ21/0007.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Publicly available datasets were analysed in this study.

Conflicts of Interest

The authors declare no conflict of interest.

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

1. Zhou L., Rzeszotarski M.S., Singerman L.J., Chokreff J.M. The detection and quantification of retinopathy using digital angiograms. IEEE Trans. Med. Imaging. 1994;13:619–626. doi: 10.1109/42.363106.

2. Teng T., Lefley M., Claremont D. Progress towards automated diabetic ocular screening: A review of image analysis and intelligent systems for diabetic retinopathy. Med. Biol. Eng. Comput. 2002;40:2–13. doi: 10.1007/BF02347689.

3. Jerman T., Pernuš F., Likar B., Špiclin Z. Beyond Frangi: An improved multiscale vesselness filter, in Medical Imaging: Image Processing. Int. Soc. Opt. Photo. 2015;9413:94132A. doi: 10.1117/12.2081147.

4. Jerman T., Pernuš F., Likar B., Špiclin Z. Enhancement of vascular structures in 3D and 2D angiographic images. IEEE Trans. Med. Imaging. 2016;35:2107–2118. doi: 10.1109/TMI.2016.2550102.

5. Frangi A.F., Niessen W.J., Vincken K.L., Viergever M.A. Multiscale vessel enhancement filtering; Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention; Cambridge, MA, USA. 11–13 October 1998; Volume 1496, pp. 130–137. Springer; Berlin/Heidelberg, Germany: 2006.

6. Liew G., Wang J.J. Retinal vascular signs: A window to the heart? Rev. Esp. Cardiol. 2011;64:515–521. doi: 10.1016/j.recesp.2011.02.014.

7. Li Q., Sone S., Doi K. Selective enhancement filters for nodules, vessels, and airway walls in two- and three-dimensional CT scans. Med. Phys. 2003;30:2040–2051. doi: 10.1118/1.1581411.

8. Donoho D.L., Duncan M.R. Digital curvelet transform: Strategy, implementation, and experiments; Proceedings of the Wavelet Applications VII; Orlando, FL, USA. 5 April 2000; pp. 12–30.

9. Esmaeili M., Rabbani H., Mehri A., Dehghani A. Extraction of retinal blood vessels by curvelet transform; Proceedings of the 16th IEEE International Conference on Image Processing (ICIP); Cairo, Egypt. 7–10 November 2009; pp. 3353–3356.

10. Miri M.S., Mahloojifar A. Retinal image analysis using curvelet transform and multistructure elements morphology by reconstruction. IEEE Trans. Biomed. Eng. 2011;58:1183–1192. doi: 10.1109/TBME.2010.2097599.

11. Aghamohamadian-Sharbaf M., Pourreza H.R., Banaee T. A novel curvature-based algorithm for automatic grading of retinal blood vessel tortuosity. IEEE J. Biomed. Health Inform. 2015;20:586–595. doi: 10.1109/JBHI.2015.2396198.

12. Kar S.S., Maity S.P. Blood vessel extraction and optic disc removal using curvelet transform and kernel fuzzy c-means. Comput. Biol. Med. 2016;70:174–189. doi: 10.1016/j.compbiomed.2015.12.018.

13. Kar S.S., Maity S.P., Delpha C. Retinal blood vessel extraction using curvelet transform and conditional fuzzy entropy; Proceedings of the 22nd European Signal Processing Conference (EUSIPCO); Lisbon, Portugal. 1–5 September 2014; pp. 1821–1825.

14. Fraz M.M., Remagnino P., Hoppe A., Uyyanonvara B., Rudnicka A.R., Owen C.G., Barman S.A. Blood vessel segmentation methodologies in retinal images–a survey. Comput. Methods Programs Biomed. 2012;108:407–433. doi: 10.1016/j.cmpb.2012.03.009.

15. Sinthanayothin C., Boyce J.F., Cook H.L., Williamson T.H. Automated localisation of the optic disc, fovea, and retinal blood vessels from digital colour fundus images. Br. J. Ophthalmol. 1999;83:902–910. doi: 10.1136/bjo.83.8.902.

16. Marín D., Aquino A., Gegúndez-Arias M.E., Bravo J.M. A new supervised method for blood vessel segmentation in retinal images by using gray-level and moment invariants-based features. IEEE Trans. Med. Imaging. 2010;30:146–158. doi: 10.1109/TMI.2010.2064333.

17. Staal J., Abràmoff M.D., Niemeijer M., Viergever M.A., Van Ginneken B. Ridge-based vessel segmentation in color images of the retina. IEEE Trans. Med. Imaging. 2004;23:501–509. doi: 10.1109/TMI.2004.825627.

18. Ricci E., Perfetti R. Retinal blood vessel segmentation using line operators and support vector classification. IEEE Trans. Med. Imaging. 2007;26:1357–1365. doi: 10.1109/TMI.2007.898551.

19. Kande G.B., Subbaiah P.V., Savithri T.S. Unsupervised fuzzy based vessel segmentation in pathological digital fundus images. J. Med. Sys. 2010;34:849–858. doi: 10.1007/s10916-009-9299-0.

20. Salem S.A., Salem N.M., Nandi A.K. Segmentation of retinal blood vessels using a novel clustering algorithm (RACAL) with a partial supervision strategy. Med. Biol. Eng. Comput. 2007;45:261–273. doi: 10.1007/s11517-006-0141-2.

21. Ng J., Clay S.T., Barman S.A., Fielder A.R., Moseley M.J., Parker K.H., Paterson C. Maximum likelihood estimation of vessel parameters from scale space analysis. Image Vis. Comput. 2010;28:55–63. doi: 10.1016/j.imavis.2009.04.019.

22. Soares J.V., Leandro J.J., Cesar R.M., Jelinek H.F., Cree M.J. Retinal vessel segmentation using the 2-D Gabor wavelet and supervised classification. IEEE Trans. Med. Imaging. 2006;25:1214–1222. doi: 10.1109/TMI.2006.879967.

23. Lupascu C.A., Tegolo D., Trucco E. FABC: Retinal vessel segmentation using AdaBoost. IEEE Trans. Inf. Technol. Biomed. 2010;14:1267–1274. doi: 10.1109/TITB.2010.2052282.

24. Chaudhuri S., Chatterjee S., Katz N., Nelson M., Goldbaum M. Detection of blood vessels in retinal images using two-dimensional matched filters. IEEE Trans. Med. Imaging. 1989;8:263–269. doi: 10.1109/42.34715.

25. Kovács G., Hajdu A. A self-calibrating approach for the segmentation of retinal vessels by template matching and contour reconstruction. Med. Image Anal. 2016;29:24–46. doi: 10.1016/j.media.2015.12.003.

26. Annunziata R., Garzelli A., Ballerini L., Mecocci A., Trucco E. Leveraging multiscale hessian-based enhancement with a novel exudate inpainting technique for retinal vessel segmentation. IEEE J. Biomed. Health Inform. 2016;20:1129–1138. doi: 10.1109/JBHI.2015.2440091.

27. Zamperini A., Giachetti A., Trucco E., Chin K.S. Effective features for artery-vein classification in digital fundus images; Proceedings of the 25th IEEE International Symposium on Computer-Based Medical Systems (CBMS); Rome, Italy. 20–22 June 2012; pp. 1–6.

28. Relan D., MacGillivray T., Ballerini L., Trucco E. Retinal vessel classification: Sorting arteries and veins; Proceedings of the 35th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC); Osaka, Japan. 3–7 July 2013; pp. 7396–7399.

29. Dashtbozorg B., Mendonça A.M., Campilho A. An automatic graph-based approach for artery/vein classification in retinal images. IEEE Trans. Image Process. 2014;23:1073–1083. doi: 10.1109/TIP.2013.2263809.

30. Estrada R., Allingham M.J., Mettu P.S., Cousins S.W., Tomasi C., Farsiu S. Retinal artery-vein classification via topology estimation. IEEE Trans. Med. Imaging. 2015;34:2518–2534. doi: 10.1109/TMI.2015.2443117.

31. Relan D., MacGillivray T., Ballerini L., Trucco E. Automatic retinal vessel classification using a least square-support vector machine in VAMPIRE; Proceedings of the 36th Annual International Conference of the IEEE Engineering in Medicine and Biology Society; Chicago, IL, USA. 26–30 August 2014; pp. 142–145.

32. Hart W.E., Goldbaum M., Côté B., Kube P., Nelson M.R. Measurement and classification of retinal vascular tortuosity. Int. J. Med. Inform. 1999;53:239–252. doi: 10.1016/S1386-5056(98)00163-4.

33. Grisan E., Foracchia M., Ruggeri A. A novel method for the automatic grading of retinal vessel tortuosity. IEEE Trans. Med. Imaging. 2008;27:310–319. doi: 10.1109/TMI.2007.904657.

34. Lotmar W., Freiburghaus A., Bracher D. Measurement of vessel tortuosity on fundus photographs. Albrecht Graefes Arch. Klin. Ophthalmol. 1979;211:49–57. doi: 10.1007/BF00414653.

35. Poletti E., Grisan E., Ruggeri A. Image-level tortuosity estimation in wide-field retinal images from infants with retinopathy of prematurity; Proceedings of the Annual International Conference of the IEEE Engineering in Medicine and Biology Society; San Diego, CA, USA. 28 August–1 September 2012; pp. 4958–4961.

36. Oloumi F., Rangayyan R.M., Ells A.L. Assessment of vessel tortuosity in retinal images of preterm infants; Proceedings of the 36th Annual International Conference of the IEEE Engineering in Medicine and Biology Society; Chicago, IL, USA. 26–30 August 2014; pp. 5410–5413.

37. Lisowska A., Annunziata R., Loh G.K., Karl D., Trucco E. An experimental assessment of five indices of retinal vessel tortuosity with the RET-TORT public dataset; Proceedings of the 36th Annual International Conference of the IEEE Engineering in Medicine and Biology Society; Chicago, IL, USA. 26–30 August 2014; pp. 5414–5417.

38. Perez-Rovira A., MacGillivray T., Trucco E., Chin K.S., Zutis K., Lupascu C., Tegolo D., Giachetti A., Wilson P.J., Doney A., et al. VAMPIRE: Vessel assessment and measurement platform for images of the Retina; Proceedings of the 2011 Annual International Conference of the IEEE Engineering in Medicine and Biology Society; Boston, MA, USA. 30 August–3 September 2011; pp. 3391–3394.

39. Trucco E., Azegrouz H., Dhillon B. Modeling the tortuosity of retinal vessels: Does caliber play a role? IEEE Trans. Biomed. Eng. 2010;57:2239–2247. doi: 10.1109/TBME.2010.2050771.

40. Azzopardi G., Strisciuglio N., Vento M., Petkov N. Trainable COSFIRE filters for vessel delineation with application to retinal images. Med. Image Anal. 2015;19:46–57. doi: 10.1016/j.media.2014.08.002.

41. Mapayi T., Viriri S., Tapamo J.R. Adaptive thresholding technique for retinal vessel segmentation based on GLCM-energy information. Comput. Math Methods Med. 2015:597475. doi: 10.1155/2015/597475.

42. Zhao Y., Rada L., Chen K., Harding S.P., Zheng Y. Automated vessel segmentation using infinite perimeter active contour model with hybrid region information with application to retinal images. IEEE Trans. Med. Imaging. 2015;34:1797–1807. doi: 10.1109/TMI.2015.2409024.

43. Zhang J., Dashtbozorg B., Bekkers E., Pluim J.P., Duits R., ter Haar Romeny B.M. Robust retinal vessel segmentation via locally adaptive derivative frames in orientation scores. IEEE Trans. Med. Imaging. 2016;35:2631–2644. doi: 10.1109/TMI.2016.2587062.

44. Tan J.H., Acharya U.R., Chua K.C., Cheng C., Laude A. Automated extraction of retinal vasculature. Med. Phys. 2016;43:2311–2322. doi: 10.1118/1.4945413.

45. Farokhian F., Yang C., Demirel H., Wu S., Beheshti I. Automatic parameters selection of Gabor filters with the imperialism competitive algorithm with application to retinal vessel segmentation. Biocybern. Biomed. Eng. 2017;37:246–254. doi: 10.1016/j.bbe.2016.12.007.

46. Orlando J.I., Prokofyeva E., Blaschko M.B. A discriminatively trained fully connected conditional random field model for blood vessel segmentation in fundus images. IEEE Trans. Biomed. Eng. 2016;64:16–27. doi: 10.1109/TBME.2016.2535311.

47. Rodrigues L.C., Marengoni M. Segmentation of optic disc and blood vessels in retinal images using wavelets, mathematical morphology and Hessian-based multi-scale filtering. Biomed. Signal Process. Control. 2017;36:39–49. doi: 10.1016/j.bspc.2017.03.014.

48. Jiang Z., Zhang H., Wang Y., Ko S.B. Retinal blood vessel segmentation using fully convolutional network with transfer learning. Comput. Med. Imaging Graph. 2018;68:1–15. doi: 10.1016/j.compmedimag.2018.04.005.

49. Khomri B., Christodoulidis A., Djerou L., Babahenini M.C., Cheriet F. Retinal blood vessel segmentation using the elite-guided multi-objective artificial bee colony algorithm. IET Image Process. 2018;12:2163–2171. doi: 10.1049/iet-ipr.2018.5425.

50. Memari N., Saripan M.I., Mashohor S., Moghbel M. Retinal blood vessel segmentation by using matched filtering and fuzzy c-means clustering with integrated level set method for diabetic retinopathy assessment. J. Med. Biol. Eng. 2019;39:713–731. doi: 10.1007/s40846-018-0454-2.

51. Sundaram R., Ravichandran K.S., Jayaraman P., Venketaraman B. Extraction of blood vessels in fundus images of retina through hybrid segmentation approach. Mathematics. 2019;7:169. doi: 10.3390/math7020169.

52. Dash S., Senapati M.R. Enhancing detection of retinal blood vessels by combined approach of DWT, Tyler Coye and Gamma correction. Biomed. Signal Process. Control. 2020;57:101740. doi: 10.1016/j.bspc.2019.101740.

53. Dash S., Senapati M.R., Sahu P.K., Chowdary P.S.R. Illumination normalized based technique for retinal blood vessel segmentation. Int. J. Imaging Syst. Technol. 2020;31:351–363. doi: 10.1002/ima.22461.

54. Rani G., Oza M.G., Dhaka V.S., Pradhan N., Verma S., Joel J.P.C. Applying deep learning-based multi-modal for detection of coronavirus. Multimedia Syst. 2021;18:1–24. doi: 10.1007/s00530-021-00824-3.

55. Sharma T., Verma S., Kavita. Prediction of heart disease using Cleveland dataset: A machine learning approach. Int. J. Rec. Res. Asp. 2017;4:17–21.

56. Sharma T., Srinivasu P.N., Ahmed S., Alhumam A., Kumar A.B., Ijaz M.F. An AW-HARIS Based Automated Segmentation of Human Liver Using CT Images. CMC-Comput. Mater. Contin. 2021;69:3303–3319.

57. Singh A.P., Pradhan N.R., Luhach A.K., Agnihotri S., Jhanjhi N.Z., Verma S., Kavita, Ghosh U., Roy D.S. A novel patient-centric architectural frame work for blockchain-enabled health care applications. IEEE Trans. Ind. Inform. 2021;17:5779–5789. doi: 10.1109/TII.2020.3037889.

58. Li W., Chai Y., Khan F., Jan S.R.U., Verma S., Menon V.G., Kavita, Li X. A comprehensive survey on machine learning-based big data analytics for IOT-enabled healthcare system. Mob. Netw. Appl. 2021;26:234–252. doi: 10.1007/s11036-020-01700-6.

59. Dash J., Bhoi N. A thresholding based technique to extract retinal blood vessels from fundus images. Future Comput. Inform. J. 2017;2:103–109. doi: 10.1016/j.fcij.2017.10.001.

60. Ijaz M.F., Attique M., Son Y. Data-Driven Cervical Cancer Prediction Model with Outlier Detection and Over-Sampling Methods. Sensors. 2020;20:2809. doi: 10.3390/s20102809.

61. Srinivasu P.N., SivaSai J.G., Ijaz M.F., Bhoi A.K., Kim W., Kang J.J. Classification of Skin Disease Using Deep Learning Neural Networks with MobileNet V2 and LSTM. Sensors. 2021;21:2852. doi: 10.3390/s21082852.

62. Mandal M., Singh P.K., Ijaz M.F., Shafi J., Sarkar R. A Tri-Stage Wrapper-Filter Feature Selection Framework for Disease Classification. Sensors. 2021;21:5571. doi: 10.3390/s21165571.

63. Ijaz M.F., Alfian G., Syafrudin M., Rhee J. Hybrid Prediction Model for Type 2 Diabetes and Hypertension Using DBSCAN-Based Outlier Detection, Synthetic Minority over Sampling Technique (SMOTE), and Random Forest. Appl. Sci. 2018;8:1325. doi: 10.3390/app8081325.

64. Gandam A., Sidhu J.S., Verma S., Jhanjhi N.Z., Nayyar A., Abouhawwash M., Nam Y. An efficient post-processing adaptive filtering technique to rectifying the flickering effects. PLoS ONE. 2021;16:e0250959. doi: 10.1371/journal.pone.0250959.

65. Almaroa A., Burman R., Raahemifar K., Lakshminarayanan V. Optic disc and optic cup segmentation methodologies for glaucoma image detection: A survey. J. Opthalmol. 2015;2015:180972. doi: 10.1155/2015/180972.

66. Wang B., Wang S., Qiu S., Wei W., Wag H., He H. CSU-Net: A context Spatial U-Net for accurate blood vessel segmentation in fundus images. IEEE J. Biomed. Health Inform. 2021;25:1128–1138. doi: 10.1109/JBHI.2020.3011178.

67. Sato Y., Westin C.F., Bhalerao A., Nakajima S., Shiraga N., Tamura S., Kikinis R. Tissue classification based on 3D local intensity structures for volume rendering. IEEE Trans. Vis. Comput. Graph. 2000;6:160–180. doi: 10.1109/2945.856997.
