Abstract

Amid the flood of fake news on Coronavirus disease of 2019 (COVID-19), now referred to as the COVID-19 infodemic, it is critical to understand the nature and characteristics of the infodemic. It not only alters individual perception and shifts behavior, for example toward irrational preventative actions, but also presents an imminent threat to public safety and health. In this study, we build on First Amendment theory, integrate text and network analytics, and deploy a three-pronged approach to develop a deeper understanding of the COVID-19 infodemic. The first prong uses Latent Dirichlet Allocation (LDA) to identify topics and key themes that emerge in COVID-19 fake and real news. The second prong compares and contrasts different emotions in fake and real news. The third prong uses network analytics to understand various network-oriented characteristics embedded in COVID-19 real and fake news, such as PageRank, betweenness centrality, eccentricity and closeness centrality. This study carries important implications for building next-generation trustworthy technology by providing strong guidance for the design and development of fake news detection and recommendation systems for coping with the COVID-19 infodemic. Additionally, based on our findings, we provide actionable, system-focused guidelines for dealing with immediate and long-term threats from the COVID-19 infodemic.

Keywords: COVID-19, Infodemic, Fake News, Natural language processing, Text analytics, Network analytics

1. Introduction

On February 15th, 2020, World Health Organization (WHO) Director-General Tedros Adhanom Ghebreyesus said at the Munich Security Conference: “We’re not just fighting an epidemic; we’re fighting an infodemic.” Alongside the disease itself, fake news regarding its origin, prevention, cures, diagnostic procedures, and protective measures has been spreading unchecked on the Internet (Bastani & Bahrami, 2020; Huang & Carley, 2020; Tasnim, Hossain, & Mazumder, 2020). In one study, nearly half of the citizens surveyed reported that they had encountered pandemic-related fake news (Casero-Ripollés, 2020).

Failure to stop the spread of fake news on coronavirus disease of 2019 (COVID-19) has resulted in panic, fear, and chaos within society (Singh et al., 2020). The consumption of fake news is affecting many aspects of our society. For example, an official in Iran’s Legal Medicine Organization stated that 796 people died from alcohol poisoning in Iran as a result of social media rumors that alcohol cures the virus (Spring, 2020). A 5-year-old boy went blind after his parents gave him strong alcohol to fight the virus. Another prominent example is the 5G conspiracy theory, promoted on social media by celebrities such as John Cusack and Woody Harrelson and by a former Nigerian senator (Satariano & Alba, 2020). The theory links 5G towers with the spread of the new coronavirus and was responsible for the burning of about 80 mobile towers and for verbal and physical assaults on many telecommunication employees in the UK, including one engineer who was stabbed and hospitalized (Reichert, 2020). COVID-19 has provided a tremendous opportunity for fake news to spread and harm public safety through falsehoods about issues such as the origin of the virus, harms caused by vaccines, COVID-19 prevention and control procedures, and the number of fatalities (Ball & Maxmen, 2020). Last but not least, the spread of fake news also fuels racism and the panic buying of medical supplies and drugs (Cuan-Baltazar et al., 2020; Depoux et al., 2020).

Given the rapid and wide spread of COVID-19 fake news, it is crucial to understand the nature and characteristics of the COVID-19 infodemic in order to inform the design and development of fake news detection and recommendation systems. However, understanding the subtle nature of fake news is challenging for several reasons. First, fake news usually mingles with real news, which makes it difficult for people to distinguish truth from falsehood. Second, fake news quickly goes viral and real news struggles to keep pace; a recent study has shown that fake news spreads faster than real news (Vosoughi et al., 2018). There is a call for increased research efforts to identify and control COVID-19 fake news (Editorial, 2020). However, such efforts are hampered by a combination of factors, such as the lack of a deeper understanding of specific COVID-19 fake news topics at the human level and ineffective Artificial Intelligence (AI) tools for COVID-19 fake news detection at the system level.

Despite the increasingly prevalent influence of fake news, it is not a new phenomenon and falls under the governance structure of the well-established First Amendment theory (Syed, 2017). This theory views the news space as a “marketplace of ideas” and argues that counterspeech, or more speech about real news, is the remedy for fake news. However, the counterspeech approach has been criticized as ineffective for curbing fake news in the digital media environment (Syed, 2017). It is not clear how technologies could be leveraged to increase the effectiveness of counterspeech against fake news. In addition, following the suggestion by Alvesson and Sandberg (2011), we scrutinize and challenge two implicit assumptions of the extant fake news literature to advance fake news research. The first assumption is that bounded rationality, due to limited information or mental capacity, is the primary reason why people believe and distribute fake news. People are assumed to avoid reading fake news when given labels that tell them whether the news is real or fake. Driven by this assumption, many recent studies focus on correctly predicting fake news and attaching credibility labels to online news (Mena, 2020), while ignoring other important causes of the consumption and spread of fake news, such as the inherent confirmation bias of human beings. People tend to seek and interpret information that confirms their existing beliefs (Nickerson, 1998). Some scholars note that confirmation bias is prominent among news readers; people are more likely to read and spread fake news that echoes their beliefs (Kim et al., 2019). Therefore, solutions from fake news research should go beyond fake news identification and labeling and extend to facilitating counterspeech at the network level, so that individuals have a reduced chance of forming new biases or reinforcing preexisting ones.
The second assumption is the simple ideological denotation of fake news as bad, which ignores how the generation, distribution and consumption of real news may learn from the viral mechanisms of fake news. For example, fake news producers have an increased capability to target their audience (Napoli, 2018) and often use bots to further spread false information (Huang & Carley, 2020), which reduces news consumers’ exposure to real news. It is important to consider these viral mechanisms when designing technical solutions that support counterspeech. For example, bots could also be used to propagate real COVID-19 news as one form of counterspeech against fake news.

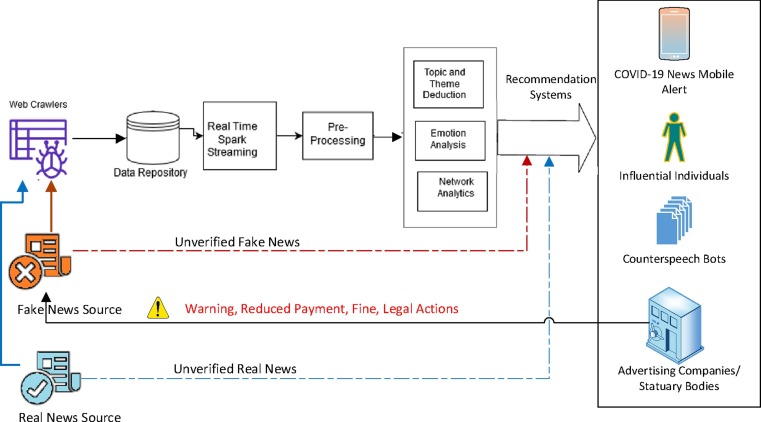

Therefore, this study has three major objectives. The first is to review prior research on three important aspects of COVID-19 fake news that motivate this study: 1) the reasons people believe and disseminate COVID-19 fake news, 2) COVID-19 fake news spreading patterns, and 3) strategies used for combating COVID-19 fake news. The second objective is to compare and contrast COVID-19 real and fake news in both content (i.e., topics, themes and emotions) and network characteristics. The third objective is to propose strategies centered on a trustworthy AI system that supports the “counterspeech” approach of First Amendment theory at both the individual and network levels, so that more real news permeates the media space. For example, influential individuals (e.g., celebrities) and real news bots could be used to proactively disseminate real news. Advertising companies also have a social responsibility to reduce the distribution of fake news by news agencies, for example by issuing warnings or reducing payments. At the individual level, recommendation systems could be leveraged to differentiate real and fake news and suggest real news to individual readers. Given the lack of data on COVID-19 fake news and the novelty of this particular pandemic, there is a paucity of research in all three related areas. This study addresses two questions: 1) How does fake news differ from real news in content and network characteristics in the context of COVID-19? and 2) How could AI systems be used to promote counterspeech against fake news?

We propose trustworthy fake news detection, alert and recommendation systems that support “counterspeech” and build on First Amendment theory. The core of the systems combines several analytics approaches to improve fake news identification, and this core is embedded in recommendation systems aimed at the general population, influential individuals, counterspeech bots, advertising companies and statutory bodies. The core of the proposed systems was validated by analyzing 2,049 fake news articles and 12,490 real news articles from news websites using LDA topic modeling, emotion analysis and network analytics.

This study advances the fake news literature in two ways. First, based on the findings from the three-pronged analytical approach, we provide a specific contextual understanding of the differences between fake and real news related to COVID-19 in topics, emotions and network characteristics. By comparing and contrasting our findings with those in the fake news literature, we note the importance of context in fake news detection. Second, the holistic approach proposed in this study integrates fake news detection, alerts and recommendations for different stakeholders (e.g., celebrities, counterspeech bots, advertising companies, and statutory bodies). Such a holistic approach moves beyond the central assumption underlying extant fake news research that the bounded rationality of news readers accounts for the virality of fake news. Instead, our approach not only addresses bounded rationality through improved fake news detection but also alleviates the effect of the inherent confirmation bias of human beings by leveraging counterspeech, i.e., infiltrating the digital media space with more speech about real news. Thus, our theory-based counterspeech approach opens a new avenue for future research on fake news.

The remainder of the paper is organized as follows: in the next section, we review the literature of First Amendment Theory and counterspeech, as well as extant research on COVID-19 fake news. In the following section, we elaborate our research method encompassing data acquisition, pre-processing, and text and network analytics. Next, we report our findings from text and network analytics, followed by a discussion of behavioral, design and theoretical implications. Finally, we conclude with major findings and contributions.

2. Literature review

2.1. First Amendment theory and counterspeech

Prior studies have applied different theories to combat fake news and other forms of online deception, such as Interpersonal Deception theory (IDT), Four-factor theory (FFT), Leakage theory (LT), Information Manipulation theory (IMT), the Competence Model of Fraud Detection (CM), and Reputation theory (RT) (Siering et al., 2016; Zhang et al., 2016; Kim et al., 2019). For example, Siering et al. (2016) propose content-based and linguistic cues for detecting fraud on crowdfunding platforms based on IDT, FFT, LT, IMT and CM. Zhang et al. (2016) integrate verbal and nonverbal cues to better detect fake online reviews based on IDT. Kim et al. (2019) draw upon RT and argue that source rating is a viable approach for reducing the consumption of fake news during its spreading phase. Despite offering new insights into fake news detection, these studies assume that people will avoid reading online fake news or deceptive information that is flagged as fake or has low credibility ratings. Such an assumption ignores the inherent confirmation bias of human beings, who tend to selectively read and spread fake news that aligns with their existing beliefs, thereby reducing the effectiveness of fake news detection techniques, especially when the media space is permeated with fake news. Individual news readers will likely bypass the suggestions of fake news detection systems, or even consider those suggestions not credible, if they are inundated with more fake news that echoes their beliefs than real news. To win the battle against fake news, it is critical to shift the research paradigm from fake news detection to increasing the speech about real news in the media space, that is, to leveraging the counterspeech approach of First Amendment theory (FAT).

FAT seeks to promote the values of freedom of expression; it governs not only public mass communication platforms such as radio and TV channels but also more recent private online social media such as Facebook and Twitter, where regular users can publish content (Syed, 2017). FAT embraces the marketplace of ideas as its underlying doctrine (Napoli, 2018). The marketplace metaphor imagines the media space as a marketplace where real news competes with false news and prevails by infiltrating the marketplace with a multiplicity of real news (i.e., counterspeech) rather than through governmental banning of fake news. The counterspeech approach was effective when the media space was dominated by traditional mass communication channels with high entry barriers for fake news generators (Napoli, 2018). However, as digital media have lowered these entry barriers, the generation and dispersion of fake news are outpacing those of real news, which not only increases individuals’ exposure to fake news but also biases their views and erodes their trust in real news. The counterspeech approach is becoming less effective for curtailing the influence of fake news without joint intervention in the media space, through technologies and incentives, by technology and advertising companies. It is critical to design solutions that holistically address the generation, dispersion and consumption of fake news and regain the media space for healthy counterspeech. In this study, we focus on a holistic solution centered on a trustworthy AI system that supports the “counterspeech” approach, and we provide guidelines for enforcing the systems, such as the need for advertising companies to align incentives so that they favor the generation and distribution of real news over fake news.

2.2. Why people believe and disseminate COVID-19 fake news

Since the COVID-19 outbreak, researchers have investigated COVID-19 fake news, and some have examined why people believe and disseminate it. For example, Pennycook, McPhetres, Zhang, Lu, and Rand (2020b) found that people shared false news about COVID-19 partly because they did not think adequately about the accuracy of the content before deciding to share it. A subsequent study from this group suggested that “being reflective, numerate, skeptical, and having basic science knowledge (or some combination of these things) is important for the ability to identify false information about the virus” (Pennycook et al., 2020a). Motta et al. (2020) reported that people who consumed news from specific sources during the early stages of the pandemic were more likely to uphold COVID-19 fake news; these individuals were more likely to believe that public health experts (e.g., CDC, WHO, etc.) exaggerated the severity of the pandemic. Laato et al. (2020) concluded that an individual’s online information trust and perceived information overload were positively correlated with their tendency to share unverified information during the COVID-19 pandemic. All these studies implicitly assume that bounded rationality, due to limited information and mental capacity, is the underlying cause of fake news consumption and distribution; thus, news readers will avoid reading fake news if they know whether a news item is fake or real. It is critical to go beyond this assumption and consider alternative explanations to advance fake news research. In this study, we seek not only to alleviate the bounded rationality of news readers through fake news detection but also to address confirmation bias through a theory-based counterspeech approach enabled by holistic fake news detection and recommendation systems.

2.3. Spreading pattern of COVID-19 fake news

Some researchers have examined the spreading patterns of COVID-19 fake news. Cinelli et al. (2020) found that the spread of information is driven by the interaction paradigm set by specific social media platforms and/or by the interaction patterns of users engaged in the topic. Huang and Carley (2020) pointed out that tweets including fake news URLs and stories are more likely to be disseminated by regular users than by news agencies and governments. Many of these regular users are bots, which account for the majority of retweets of content sourced from fake news websites. Based on an analysis of 225 pieces of fake news identified by fact-checking tools, Brennen, Simon, Howard, and Nielsen (2020) found that 20% of them were shared by politicians, celebrities, and other public figures (top-down), yet these accounted for 69% of total social media engagement. Most fake news items were still shared by regular users (bottom-up) but generated less engagement. These prior studies suggest the key roles of bots and influential individuals in spreading fake news: most fake news written by regular users spreads little, while a small percentage becomes viral or engages a large audience because of the amplifying role of bots and/or influential people. The effect of bots and influential individuals has yet to be considered in the design of solutions that support counterspeech.

2.4. Strategies to combat fake news

Researchers have proposed strategies for combating COVID-19 fake news. Bastani and Bahrami (2020) recommended an active and effective presence of health experts and professionals on social media during the crisis, together with improved public health literacy, as the key strategies for curbing fake news. Pennycook et al. (2020b) recommended implementing accuracy nudges on social media platforms to help combat the spread of fake news. However, accuracy nudges rely on fact-checkers. Even though more and more fact-checkers have responded to COVID-19 fake news (Brennen et al., 2020), it is challenging for them to keep up with the massive amount of fake news produced during the pandemic (Van Bavel et al., 2020). Other scholars attempt to combat fake news by tracking down its sources. For example, Morales et al. (2020) proposed a keystroke biometrics recognition system for content de-anonymization that uses typing behavior to link multiple accounts belonging to the same user who is generating fake content. Sharma et al. (2020) designed a dashboard that provides topic, sentiment, and misinformation analyses of COVID-19 fake news drawn from Twitter posts in order to track fake news on Twitter.

These prior studies have shed important light on the reasons for the COVID-19 infodemic, its spreading patterns and countermeasures. However, there is still an urgent need to develop mitigation systems and strategies for coping with COVID-19 fake news. Prior research on fake news mostly comes from political contexts or uses a plethora of empirical or modeling approaches to investigate issues such as the impact on financial indicators, the efficacy of warning statements attached to fake news titles, and mathematical modeling of fake news propagation; examples include recent studies by Clarke et al. (2020), Moravec et al. (2020), Papanastasiou (2020), and Pennycook et al. (2021). Limited research, such as Sharma et al. (2020) and Xue et al. (2020), has attempted to understand the topics and trends of COVID-19 fake news using topic modeling and sentiment analysis. No prior studies have systematically compared and contrasted fake and real news related to COVID-19 using mixed methods that integrate topic modeling, discrete emotion analysis and network analytics. Further, extant research mostly focuses on fake news distributed over social media such as Facebook and Twitter, where content is mostly generated by individual users. Besides social media, COVID-19 fake news can also be created and propagated by fake news websites, where content is created by news agencies. Little research has systematically compared fake news with real news on COVID-19 written by professionals such as news agencies. To fill this research gap, this study compares and contrasts fake and real news about COVID-19 on news websites based on topic modeling, emotion analysis and network characterization. A deeper insight into the inherent characteristics and compositional differences between COVID-19 fake news and real news will help develop mitigation systems and coping strategies from a pragmatic perspective.
Our findings also have important implications for improving the effectiveness of counterspeech, or more communication of real news about COVID-19, and for the design of centralized surveillance systems that detect and impede the flow of fake news.

3. Research method

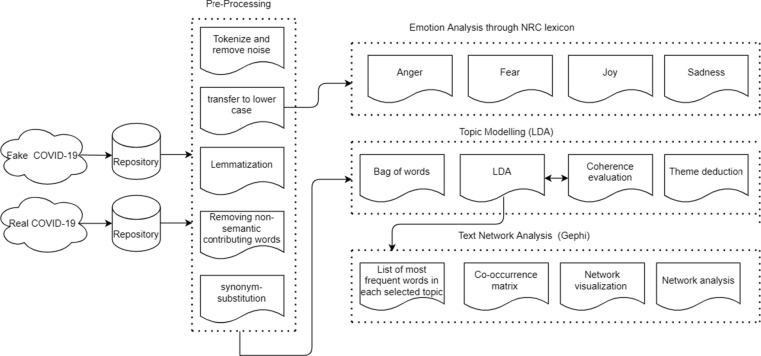

This study leverages natural language processing (NLP), topic modeling, and text network analysis to compare and contrast fake and real news related to COVID-19. Recent studies have shown that various NLP and topic modeling approaches can lead to pragmatic solutions (Li et al., 2020). Our method consists of three broad phases: data acquisition, preprocessing, and text analytics. Fig. 1 describes the overall text analytic process deployed in this study.

Fig. 1.

Text Analytic Process used in this study.

3.1. Data acquisition

We built separate data repositories for fake news and real news using a web crawling program from March 2020 to May 2020. Fake news was gathered from a vetted list of websites reported by a plethora of sources such as Wikipedia and https://www.factcheck.org/. Real news was gathered from the news outlets listed in Table 1; prior research has used these websites as sources of legitimate news (Zhang, Gupta, Kauten, Deokar, & Qin, 2019). Custom crawlers were written in Python using the lxml, requests, and csv libraries together with XPath expressions. The crawlers were customized for each website so that advertisements and other noise would not be collected, and they extracted the data into Excel files. Each extracted data field was manually inspected to ensure that no garbage data was collected. Table 1 summarizes the news sources used in this study and the number of news articles incorporated into our data repositories.
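The per-site crawler design described above can be sketched as follows. This is a minimal illustration, not the study's actual crawler: the site name `example-site`, the `SITE_XPATHS` configuration and its XPath selectors are hypothetical placeholders, and the sketch writes CSV rather than Excel. Restricting extraction to per-site XPath expressions is what keeps advertisements and navigation text out of the records.

```python
import csv
from typing import Dict, List, Union

import requests
from lxml import html

# Hypothetical per-site configuration: one XPath per field. The expressions
# must end in text() and be written by inspecting each site's markup.
SITE_XPATHS: Dict[str, Dict[str, str]] = {
    "example-site": {
        "title": "//h1[@class='headline']/text()",
        "body": "//div[@class='article-body']//p/text()",
    },
}


def parse_article(page: Union[str, bytes], xpaths: Dict[str, str]) -> Dict[str, str]:
    """Keep only the configured fields, so ads and navigation text are skipped."""
    tree = html.fromstring(page)
    record = {}
    for field, expr in xpaths.items():
        # Join the matched text nodes, dropping whitespace-only fragments.
        parts = [p.strip() for p in tree.xpath(expr) if p.strip()]
        record[field] = " ".join(parts)
    return record


def crawl(urls: List[str], site: str, out_path: str) -> None:
    """Fetch each URL, extract its fields, and write one CSV row per article."""
    xpaths = SITE_XPATHS[site]
    with open(out_path, "w", newline="", encoding="utf-8") as fh:
        writer = csv.DictWriter(fh, fieldnames=["url", *xpaths])
        writer.writeheader()
        for url in urls:
            resp = requests.get(url, timeout=30)
            resp.raise_for_status()
            # Pass raw bytes so lxml can honour the page's declared encoding.
            writer.writerow({"url": url, **parse_article(resp.content, xpaths)})
```

Given a page containing a headline, an advertisement block and two article paragraphs, `parse_article` returns only the title and the joined body text; the advertisement is never matched by the configured XPaths.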

Table 1.

Source websites and count of fake (See Appendix D) and legitimate news articles about COVID-19.

| Source Website | COVID-19 Real News | COVID-19 Fake News |
|---|---|---|
| NYPost | 7513 | |
| Reuters | 3720 | |
| Vox | 900 | |
| NYtimes | 160 | |
| BBC | 102 | |
| Time | 95 | |
| Jimbakkershow | | 619 |
| Newspunch | | 450 |
| Clashdaily | | 321 |
| Theepochtimes | | 312 |
| Infowars | | 277 |
| Redstate | | 34 |
| Dcgazette | | 29 |
| Intellihub | | 7 |

3.2. Data pre-processing

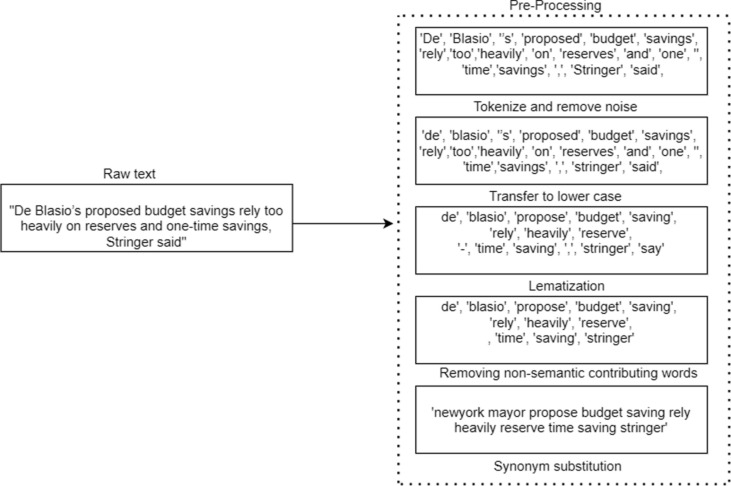

Before performing text analytics, we applied several NLP preprocessing steps to the original text using the spaCy package in Python (Honnibal & Montani, 2017). First, we tokenized each document and converted it to lowercase. Second, we eliminated tokens that bear no semantic value, such as stop words (e.g., that, the, who), URLs, email addresses and special characters such as question marks and exclamation marks. Third, we eliminated words that were present in all articles (such as “coronavirus”) or add no specific value (such as “say”) but were not filtered out as stop words. We also replaced some tokens with their synonyms; for example, “bill de blasio” and “de blasio” were replaced with “newyork mayor.” Fig. 2 shows an example of how a raw data record is ingested and taken through a series of preprocessing phases such as tokenization, lemmatization and synonym substitution.
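A minimal sketch of these preprocessing steps with spaCy follows. It assumes a blank English pipeline (`spacy.blank("en")`), which supplies the tokenizer and spaCy's default stop-word list but no lemmatizer; with a trained pipeline such as `en_core_web_sm`, `tok.lemma_` could be appended instead of `tok.text`. The synonym list and the domain stop words (`SYNONYMS`, `DOMAIN_STOPWORDS`) are illustrative, built only from the examples in the text; without lemmatization, inflected forms such as "says" are not caught by the domain list.

```python
import spacy

# Illustrative synonym map; longer phrases come first so "bill de blasio"
# is replaced before its substring "de blasio".
SYNONYMS = [
    ("bill de blasio", "newyork mayor"),
    ("de blasio", "newyork mayor"),
]
# Corpus-specific words that survive the stop-word list but add no value.
DOMAIN_STOPWORDS = {"coronavirus", "say"}

# Blank English pipeline: tokenizer plus lexical attributes (is_stop,
# like_url, ...) without downloading a trained model.
nlp = spacy.blank("en")


def preprocess(text: str) -> list[str]:
    """Lowercase, substitute synonyms, tokenize, and drop low-value tokens."""
    text = text.lower()
    for phrase, replacement in SYNONYMS:
        text = text.replace(phrase, replacement)
    tokens = []
    for tok in nlp(text):
        # Stop words, punctuation such as "?" and "!", and whitespace.
        if tok.is_stop or tok.is_punct or tok.is_space:
            continue
        # URLs and email addresses.
        if tok.like_url or tok.like_email:
            continue
        if tok.text in DOMAIN_STOPWORDS:
            continue
        tokens.append(tok.text)
    return tokens
```

For example, `preprocess("Bill de Blasio says the coronavirus is spreading! See https://example.com")` begins with `["newyork", "mayor", ...]` and contains no stop words, URL, punctuation or "coronavirus".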

Fig. 2.

Application of data pre-processing on a raw data record.

3.3. Text analytics

We first performed semantic analysis, using topic modeling to derive topics and themes and emotion analysis to understand the emotions in fake and real news. We then extracted the top words from each topic to build separate text networks for fake and real news and compared the network patterns of the two types of news based on several key network parameters.
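The text does not specify how edges in the text networks are defined. The sketch below assumes, purely for illustration, that nodes are the top terms and that an edge links two terms whenever they co-occur in the same document, weighted by the number of such documents. It then uses NetworkX to compute the node-level measures named in the abstract (PageRank, betweenness, closeness and eccentricity). Eccentricity is only defined for connected graphs, so all measures are computed on the largest connected component; `build_text_network` and `network_metrics` are hypothetical helper names.

```python
from itertools import combinations

import networkx as nx


def build_text_network(docs_tokens, top_terms):
    """Co-occurrence network over a fixed set of top terms.

    An edge links two terms that appear in the same document; its weight
    counts how many documents they share (an assumed edge definition).
    """
    vocab = set(top_terms)
    G = nx.Graph()
    G.add_nodes_from(vocab)
    for tokens in docs_tokens:
        present = sorted(vocab.intersection(tokens))
        for u, v in combinations(present, 2):
            if G.has_edge(u, v):
                G[u][v]["weight"] += 1
            else:
                G.add_edge(u, v, weight=1)
    return G


def network_metrics(G):
    """Node-level measures computed on the largest connected component."""
    core = G.subgraph(max(nx.connected_components(G), key=len)).copy()
    return {
        "pagerank": nx.pagerank(core, weight="weight"),
        "betweenness": nx.betweenness_centrality(core),
        "closeness": nx.closeness_centrality(core),
        # Unweighted hop distance to the farthest node; needs a connected graph.
        "eccentricity": nx.eccentricity(core),
    }
```

Running the same two functions on the fake and real news corpora yields directly comparable distributions of each measure; isolated terms (for instance, a bigram that never co-occurs with another top term) drop out of the component-level metrics.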

3.3.1. Topic modeling

We used Latent Dirichlet Allocation (LDA), an unsupervised topic modeling technique for identifying latent topics in a collection of documents (corpus). LDA is regarded as among the most effective topic modeling techniques and has been widely used for both confirmatory and exploratory purposes (Lu et al., 2011; Li et al., 2016). We used a bag-of-words (BOW) representation as input to LDA for generating latent topics in the corpus, such that every topic consists of word combinations that capture the context of their usage and each document can be mapped to multiple topics (Blei, Ng, & Jordan, 2003). LDA was implemented using the scikit-learn (sklearn) package in Python. To our knowledge, no prior studies have used latent topic deduction approaches to compare COVID-19 fake news and real news. LDA was applied separately to identify topics in fake and real news. We aim to reveal underlying themes and recognize the strategies that fake news writers use to lure people into reading and spreading fake news about COVID-19. Moreover, comparing and contrasting the latent topics of these two types of news may help generate useful insights for raising reader awareness of fake news distributed over online news media. Finally, such insights could be used to develop smart alert systems for citizens.

In particular, we used bigrams, formed by concatenating two adjacent words (e.g., ‘New York’), as our unit of analysis (i.e., tokens). Wallach (2006) pointed out that bigrams are better units of analysis for topic modeling than single-word tokens such as ‘New’ and ‘York’, since bigrams better maintain the semantic value of words and capture context within documents while balancing the dimensionality of the constructed vocabulary. In line with typical topic modeling practice, we also filtered out bigrams that occur in less than 1 percent or more than 95 percent of the documents, since they provide little semantic value for extracting topics. One major challenge in running LDA is deciding the number of topics (K) to extract, since there is no agreed formula for determining the optimal value of K (Savov et al., 2020). K can be determined qualitatively and/or quantitatively (Mortenson & Vidgen, 2016). The qualitative approach largely relies on the subjective interpretation of topics and selects a K value that generates a meaningful set of topics. At the same time, the qualitative approach can be supported by quantitative distances among topics, such that a good K value separates different topics. In this study, we employed pyLDAvis, a web-based visualization package (Sievert & Shirley, 2014; Mabey, 2018), which enabled a mixed approach to determining K: we examined both the meaning of each topic from the bigram bar charts (see Appendix A) and the distances among topic clusters on an intertopic distance map (Fig. 3). We ran bigram LDA with different K values (i.e., 9, 8, 7, 6, and 5) and stopped at K = 5 because it yielded a set of non-overlapping and meaningful topics in both datasets (fake and real).

In the final step, two experts qualitatively checked the coherence and interpretability of the topics and extracted the themes within each topic. Our overall LDA process is consistent with prior studies (e.g., Ellmann, Oeser, Fucci, & Maalej, 2017; Zhao, Zhang, & Wu, 2019). Appendix 1A and 1B provide details of the topic term distributions for each of the five topics obtained with this process for fake and real news, respectively. Two researchers independently went through the terms of the five fake news topics and the five real news topics in Appendix 1A and 1B to interpret these latent topics and deduce themes within each topic; this process was carried out separately for fake and real news. The two researchers' themes were then compared to reach consensus, and they matched at a rate of 95 percent. The resulting themes are summarized in Table 2 and Table 3 and reported in the Results section.

Table 2.

Fake news topics and themes.

| Topic No. | Key Terms | Theme Deduction | Percentage of Tokens |
|---|---|---|---|
| 1 | US, NJ, LA, NY, Anthony Fauci, CDC, white house, health officials | Localized Epicenters and hotspot | 23.4% |
| | | Disease control, Scientific agencies or individual providing policy guidance or fact checking | |
| 2 | South Korea, Hong Kong, Mainland China, Saudi Arabia, Cruise (diamond princess), European country | Global Epicenter or location outside US | 22.3% |
| | confirm case, new case, report case, test positive, million people | Intensity of disease spread, disease statistics | |
| | infect people, infectious disease, flu like, new infection | Disease Symptoms | |
| 3 | World Health Organization (WHO), Chinese government, Wuhan China, Chinese virus, virus originate | Pandemic origin and Global Agencies responsible for curbing virus (e.g. WHO) | 19.6% |
| | human to human transmission | Mode of transmission | |
| 4 | ccp virus, party virus, ccp chinese, chinese communist | Virus origin | 18.1% |
| | virus pandemic, grocery store, cause disease, food supply, south dakota | Spread of virus | |
| | social distance, police officer, health safety, distance guideline | Prevention and disease mitigation | |
| 5 | small business, American people, toilet paper, hand sanitizer | Impact on business and individual life | 16.6% |
| | house speaker, federal government, stimulus package, relief package | Federal relief | |

Table 3.

Real news topics and themes.

| Topic No. | Key Terms | Theme Deduction | Percentage of Tokens |
|---|---|---|---|
| 1 | test positive, public health, health official, social distance, disease control, control prevention, wash hand, self-quarantine | Preventative measures | 22.8% |
| | people die, infectious disease, intensive care, high risk, death toll | Severity of disease | |
| | nursing home, cruise ship | High occurrence place | |
| 2 | New York, White House, New York Mayor, New Yorker, Federal Government, Trump administration, New Jersey, task force, press conference, executive order | Responses of federal government and New York | 20.3% |
| | protective equipment, medical supply, hand sanitizer, face mask, essential worker, healthcare worker | Key resources for fighting COVID-19 | |
| 3 | small business, work home, social medium, high school, toilet paper, mental health, grocery store, stay home, spring training, regular training, major league, play games | Impact on small business, overall human well-being (mental, physical and social) and sports and games | 20.2% |
| 4 | United States, Hong Kong, World Health Organization, South Korea, New Zealand, South Africa, case death, health minister, million people, new case, confirm case | Spread statistics of COVID-19 in US and other countries | 18.6% |
| | stay home, home order, social distancing, state emergency, lockdown measure, travel restriction | Governmental/state orders issued to slow down the spread of disease | |
| 5 | central bank, wall street, billion euro, oil price, interest rate, federal reserve, financial crisis, stock market, supply chain, global economy, unemployment rate | Economic and societal impact in US and globally | 18.1% |
| | stimulus package, unemployment benefits | Government stimulus program and relief efforts | |

Fig. 3 shows the intertopic distance map of the fake news and real news topics on COVID-19 identified in this study. Orange and light blue denote fake news and real news, respectively. Each circle corresponds to one news topic (see Table 2 and Table 3 for the detailed topics of fake and real news). The size of a circle indicates the prevalence of that topic in the corpus, with larger circles containing more word tokens. For instance, topic 1 of fake news is more prevalent than topic 5. The distance between two circles reflects the similarity of the two topics, such that a shorter distance indicates a higher level of similarity. For example, topic 1 of fake news is more similar to topic 2 than to topic 5.
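The intertopic distances behind a map like Fig. 3 can be illustrated with a small sketch. Tools such as LDAvis and pyLDAvis compute pairwise Jensen-Shannon divergences between topic-term distributions and, by default, project them to two dimensions with classical multidimensional scaling (principal coordinate analysis). The sketch below is an illustration under stated assumptions, not the exact pipeline used for Fig. 3. It uses the square root of the Jensen-Shannon divergence (a true metric), a hand-written classical MDS in NumPy, and a made-up three-topic, three-term `phi` matrix. The function names `js_distance` and `intertopic_map` are hypothetical.

```python
import numpy as np


def js_distance(p: np.ndarray, q: np.ndarray) -> float:
    """Square root of the base-2 Jensen-Shannon divergence between two distributions."""
    m = 0.5 * (p + q)

    def kl(a: np.ndarray, b: np.ndarray) -> float:
        mask = a > 0  # terms with a == 0 contribute nothing (0 * log 0 = 0)
        return float(np.sum(a[mask] * np.log2(a[mask] / b[mask])))

    jsd = 0.5 * kl(p, m) + 0.5 * kl(q, m)
    return float(np.sqrt(max(jsd, 0.0)))  # guard against tiny negative rounding


def intertopic_map(phi: np.ndarray, dims: int = 2) -> np.ndarray:
    """Classical MDS (principal coordinate analysis) of topic-term distributions.

    phi: (n_topics, n_terms) array whose rows each sum to 1.
    Returns an (n_topics, dims) array of coordinates for plotting.
    """
    k = phi.shape[0]
    dist = np.array([[js_distance(phi[i], phi[j]) for j in range(k)] for i in range(k)])
    center = np.eye(k) - np.ones((k, k)) / k      # centering matrix
    gram = -0.5 * center @ (dist ** 2) @ center   # double-centered squared distances
    eigvals, eigvecs = np.linalg.eigh(gram)       # eigenvalues in ascending order
    top = np.argsort(eigvals)[::-1][:dims]        # indices of the largest eigenvalues
    scale = np.sqrt(np.clip(eigvals[top], 0.0, None))
    return eigvecs[:, top] * scale


# Hypothetical topic-term probabilities (rows = topics, columns = terms).
phi = np.array([[0.7, 0.2, 0.1],
                [0.1, 0.8, 0.1],
                [0.3, 0.3, 0.4]])
coords = intertopic_map(phi)
```

Points that lie close together correspond to topics with similar term distributions. In the published map, circle areas additionally encode topic prevalence, which this sketch does not compute.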

Fig. 3.

Intertopic distance map (via multidimensional scaling) of fake and real news.

3.3.2. Emotion analysis

Besides extracting topics and themes, we are also interested in the emotions embedded in fake and real news. Following the suggestion of a recent review of basic emotions by Gu, Wang, Patel, Bourgeois, and Huang (2019), we analyzed four basic emotions (i.e., anger, fear/anxiety, sadness and joy). Basic emotions are considered to be universal across cultures and act as the basis for forming complex emotions (Power 2006). They can further elicit adaptive responses that aid human survival (Lazarus 1991). For example, fear is associated with threat avoidance, anger increases the tendency to fight, and sadness often results in continued rumination on negative thoughts. Focusing on these discrete basic emotions may provide a fundamental understanding of the sentiments embedded in fake and real news and their impact.

To identify the four basic emotions, we adopted the National Research Council Canada (NRC) emotion lexicon, which lists English words and their associations with different emotions (Mohammad and Turney 2013). We performed the emotion analysis using the Linguistic Inquiry and Word Count (LIWC2015) text analysis software, since LIWC has been widely validated by prior studies (Pietraszkiewicz et al., 2019). LIWC2015 is considered the gold standard for quantifying psychological content in natural language, including people’s cognitions and emotions (LIWC). Using the standard LIWC2015 tool to extract the four basic emotions allows our emotion analysis of COVID-19 fake news to build on the existing body of knowledge.

LIWC2015 was loaded with the NRC emotion lexicon to enhance its capabilities. The pre-processed data were then fed to LIWC2015 and tokenized into single-word tokens. LIWC2015 then automatically counts the number of words associated with each of the four basic emotions and computes the percentage of occurrence of each emotion in each document. Each of the four emotion variables analyzed in this study is thus measured as the proportion of words in a news document that fall into that emotion category, relative to the total number of words in the document. For example, a value of 3 for the fear emotion of a news document means that fear words make up 3% of that document. Therefore, the four emotion variables are on a ratio scale with equal intervals and a meaningful absolute zero point.
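The per-document scoring described above amounts to a dictionary lookup followed by a ratio. The minimal Python sketch below illustrates that computation under explicit assumptions: `TOY_LEXICON` is a made-up, hypothetical four-category word list standing in for the NRC emotion lexicon (which is not reproduced here), the tokenizer is a naive regular expression rather than LIWC2015's own, and LIWC features such as wildcard word stems are not modeled. As in the NRC lexicon, one word may belong to several emotion categories.

```python
import re
from typing import Dict, Set

# Hypothetical toy lexicon for illustration only; it is NOT the NRC emotion lexicon.
TOY_LEXICON: Dict[str, Set[str]] = {
    "fear": {"dangerous", "death", "threat", "panic"},
    "anger": {"arrest", "outrage", "blame"},
    "sadness": {"goodbye", "loss", "death"},  # a word may map to several emotions
    "joy": {"relief", "hope", "recover"},
}


def emotion_percentages(text: str, lexicon: Dict[str, Set[str]]) -> Dict[str, float]:
    """Percentage of word tokens in `text` that belong to each emotion category."""
    tokens = re.findall(r"[a-z']+", text.lower())  # naive single-word tokenizer
    total = len(tokens)
    if total == 0:
        return {emotion: 0.0 for emotion in lexicon}
    return {
        emotion: 100.0 * sum(1 for t in tokens if t in words) / total
        for emotion, words in lexicon.items()
    }
```

For instance, the four-token text "Panic, death and hope" scores 50.0 for fear (two of four tokens), 25.0 for sadness, 25.0 for joy and 0.0 for anger, which matches the ratio-scale interpretation above.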

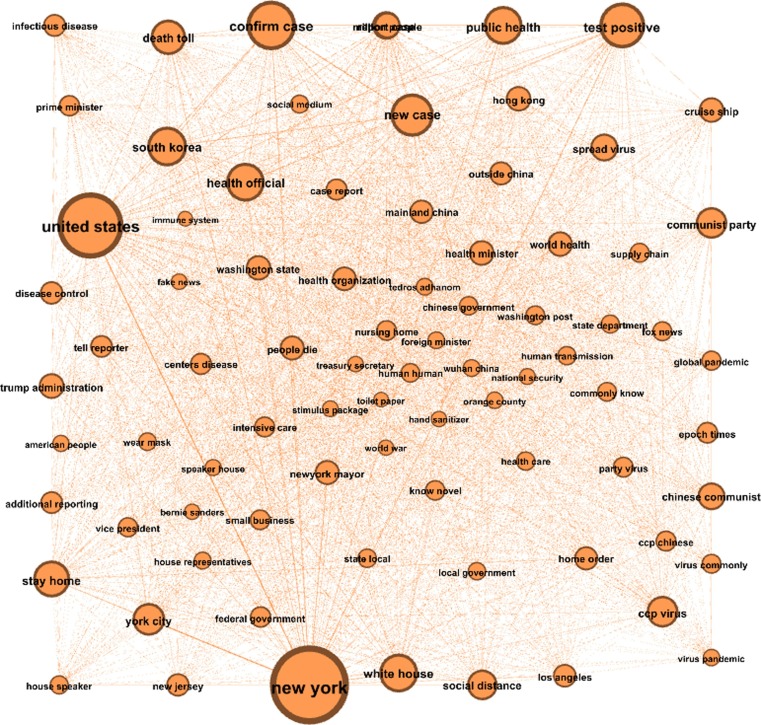

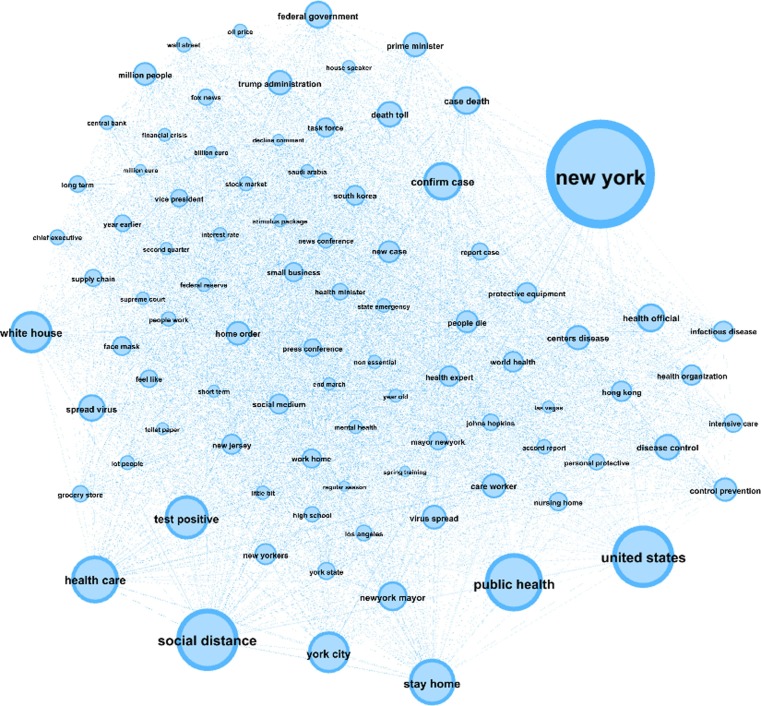

3.3.3. Text network analysis

In addition to topic modeling, we performed text network analysis to quantify and differentiate the network properties of fake and real news, which may provide important insights for designing fake news detection systems that filter news traffic at Internet service providers or on online media platforms such as Facebook and Twitter. Text network analysis identifies the relations between different textual entities, such as the frequency and co-occurrence of words (Popping, 2000), which makes it possible to interpret the linkages between those entities (Platanou et al. 2018). In this study, we used a co-occurrence network in which nodes represent tokenized words (i.e., topic terms) and edges reflect the extent of co-occurrence between the words, assuming that words co-existing more often in the same document have a stronger semantic relation than those co-existing less frequently (Ineichen & Christen, 2015). To conduct the network analysis, we employed Gephi (Yun and Park 2018), an open-source software tool that provides both network visualization and a wide range of quantitative network measures such as betweenness centrality and closeness centrality. The input to Gephi is a list of the top words in the topics extracted by the bigram LDA analysis above, since we aim to focus on the key words characterizing the topics and avoid clutter in the text network. Specifically, we selected the 20 most frequent words from each of the five topics generated by bigram LDA, which resulted in two separate lists of 100 words, one for the fake news network and one for the real news network.
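A document-level co-occurrence network of the kind described above can be sketched in a few lines of Python. In this illustrative sketch, `cooccurrence_edges` and `write_gephi_edge_csv` are hypothetical helper names, each document is assumed to be already reduced to a list of bigram tokens, and an edge weight counts the number of documents in which both terms appear. The CSV uses the `Source`, `Target` and `Weight` column headers that Gephi's spreadsheet importer recognizes for edge tables; the graph type (undirected) is chosen in the import dialog.

```python
import csv
from collections import Counter
from itertools import combinations
from typing import Iterable, List, Set


def cooccurrence_edges(docs: Iterable[List[str]], vocabulary: Set[str]) -> Counter:
    """Undirected weighted co-occurrence edges among `vocabulary` terms.

    Each document adds 1 to the weight of every pair of distinct vocabulary
    terms that both appear in it (document-level co-occurrence).
    """
    weights: Counter = Counter()
    for tokens in docs:
        present = sorted(vocabulary.intersection(tokens))  # sorting gives a canonical (u, v) order
        for u, v in combinations(present, 2):
            weights[(u, v)] += 1
    return weights


def write_gephi_edge_csv(weights: Counter, path: str) -> None:
    """Write an edge table with Source, Target and Weight columns."""
    with open(path, "w", newline="", encoding="utf-8") as fh:
        writer = csv.writer(fh)
        writer.writerow(["Source", "Target", "Weight"])
        for (u, v), w in sorted(weights.items()):
            writer.writerow([u, v, w])
```

Restricting `vocabulary` to the top terms of each topic, as done in this study, keeps the resulting network small enough to inspect visually.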

4. Results

4.1. Topic analysis and theme deduction

After running bigram-based LDA, we extracted five topics from fake news and five topics from real news. The weight parameter of the term relevance metric, lambda, was set to 0.6, the value suggested by Sievert and Shirley (2014). Table 2 shows the topics and themes that emerged from the fake news corpus. The size of the fake news topics, measured as the percentage of tokens in the fake news corpus, ranges from 16.6 to 23.4 percent. The first fake news topic is the largest and consists of two themes. One theme focuses on localized pandemic epicenters and hotspots within the US that had the greatest disease spread. The other theme focuses on agencies or individuals who serve as fact checkers or guardians of truth. The second fake news topic consists of three themes focusing on global epicenters important for the spread of COVID-19, as well as disease characteristics such as symptoms and intensity of spread. The third fake news topic mostly revolves around the pandemic origin, the mode of transmission, and global agencies (such as WHO) that work toward curbing the virus. The fourth topic focuses predominantly on three themes. The first is the misconception regarding the virus origin: several fake news articles focus on China and other purported originators of the virus. The second theme concerns the spread of the virus in settings such as food delivery and grocery stores. The third theme hinges on specific disease prevention and mitigation measures. Finally, the fifth topic focuses on the societal and economic impact of COVID-19 along with the federal government’s financial relief efforts.
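For reference, Sievert and Shirley (2014) define the relevance of term w to topic k as r(w, k | λ) = λ log φ_kw + (1 - λ) log(φ_kw / p_w), where φ_kw is the probability of w under topic k and p_w is the marginal probability of w in the corpus. Setting λ = 1 ranks terms purely by within-topic probability, while smaller values favor terms that are distinctive for the topic. The Python sketch below implements this formula; the helper names `relevance` and `top_terms` and the two-term probabilities used to exercise it are hypothetical and are not taken from our corpus.

```python
import math
from typing import Dict, List


def relevance(phi_kw: float, p_w: float, lam: float = 0.6) -> float:
    """Term relevance: lam * log(phi_kw) + (1 - lam) * log(phi_kw / p_w)."""
    return lam * math.log(phi_kw) + (1.0 - lam) * math.log(phi_kw / p_w)


def top_terms(topic_term: Dict[str, float], marginal: Dict[str, float],
              lam: float = 0.6, n: int = 5) -> List[str]:
    """Rank a topic's terms by relevance and return the n highest-ranked terms."""
    scored = {w: relevance(phi, marginal[w], lam)
              for w, phi in topic_term.items() if phi > 0}
    return sorted(scored, key=scored.get, reverse=True)[:n]
```

With hypothetical values φ = 0.10 and p = 0.01 for "ccp virus" and φ = 0.20 and p = 0.20 for "people", λ = 1 ranks "people" first, whereas λ = 0.6 ranks the more topic-specific "ccp virus" first.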

Table 3 summarizes the topics and themes in the real news corpus. The size of these five real news topics varies from 18.1 to 22.8 percent, which is comparable to that of the fake news topics. Topic 1 focuses on the health hazards of the virus and preventative measures for reducing the risk of infection. Topic 2 is about governmental responses to the pandemic, from communication to securing critical medical resources. Topic 3 covers the impact of the pandemic on small businesses and individual lives. Topic 4 is about the statistics of the disease spreading in the US and globally and the regulations enforced to curtail its spread. Topic 5 centers on the economic and societal impact and the federal government’s financial relief measures to help businesses and unemployed individuals.

From the above, fake news and real news share some common themes such as health hazards, spread statistics and countermeasures. At the same time, we note some major differences in topic themes between fake and real news. Real news does not have themes on the role of WHO in the spread of the virus or on the origin of the virus, i.e., China and/or the Chinese Communist Party. In addition, real news seems to have more coverage of global impact, such as the global economy and oil prices, than fake news. Furthermore, fake news is more opinion-based, while real news focuses more on objective content.

4.2. Emotion analysis results

Besides topics and themes, we further compared and contrasted fake and real news based on four discrete basic emotions, i.e., anger, fear, sadness and joy. Table 4 shows the mean and standard deviation of the four emotions and the Mann-Whitney U test results comparing the emotion distributions of fake news and real news. The Mann-Whitney U test, also known as the Wilcoxon rank-sum test, is a non-parametric test for comparing two independent samples. We used this test since it does not require the assumptions of normality and equal variance and is particularly suitable when the two samples differ in size.
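The U statistic itself is straightforward to compute from pooled ranks. The following Python sketch is illustrative only: it is not the software used to produce Table 4, it uses the large-sample normal approximation with a tie correction and no continuity correction, and `mann_whitney_u` and `average_ranks` are hypothetical helper names. Statistical packages also differ in whether they report U for the first sample or the smaller of the two U values, so the output of this sketch need not match a given package's convention.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the average of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # extend j to the last sorted position holding the same value as position i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1  # mean of 1-based positions i+1 .. j+1
        for pos in range(i, j + 1):
            ranks[order[pos]] = avg_rank
        i = j + 1
    return ranks


def mann_whitney_u(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Return (U for sample x, two-sided p-value from the normal approximation)."""
    n1, n2 = len(x), len(y)
    if n1 == 0 or n2 == 0:
        raise ValueError("both samples must be non-empty")
    pooled = list(x) + list(y)
    n = n1 + n2
    ranks = average_ranks(pooled)
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    mean_u = n1 * n2 / 2
    # tie correction: subtract sum(t^3 - t) over groups of t tied values
    tie_term = sum(t ** 3 - t for t in Counter(pooled).values())
    var_u = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if var_u <= 0:  # every observation tied: no evidence of a difference
        return u1, 1.0
    z = (u1 - mean_u) / math.sqrt(var_u)
    p_two_sided = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return u1, p_two_sided
```

Because the test works on ranks rather than raw emotion percentages, a few documents with extreme emotion scores cannot dominate the comparison.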

Table 4.

Emotions for real and fake news.

| Emotion | Fake News Mean | Fake News SD | Real News Mean | Real News SD | Mann-Whitney U Statistic | p |
|---|---|---|---|---|---|---|
| Anger | 1.49 | 1.05 | 1.17 | 0.94 | 10828002.50 | 0.00 |
| Fear | 2.75 | 1.43 | 2.19 | 1.36 | 10185323.50 | 0.00 |
| Sadness | 1.81 | 1.04 | 1.66 | 1.07 | 12292045.00 | 0.00 |
| Joy | 1.17 | 0.96 | 1.19 | 0.94 | 13325627.00 | 0.10 |

From Table 4, in both fake and real news, fear is the dominant negative emotion, followed by sadness. Some examples of fear are “coronavirus pandemic will push an additional 130 million people to the brink of starvation”, “more dangerous than a nuclear attack”, “the majority of those cases end up in the intensive care unit”, and “the coronavirus pandemic has exacerbated the problem of scarce burial space in cities”. Some examples of sadness include “my friends are out of work. One of my friends had to terminate hundreds of her employees and give them their last paycheck”, and “had a case where a mom was already in the icu and the daughter, who was obese, came in …asked staff to wheel her by her mom’s room so she could say goodbye before she herself was intubated. we knew the mother was going to pass away.”

Among the three negative emotions, the level of anger is the lowest for both fake and real news. At the same time, we found that the concentration of all three negative emotions is higher in fake news than in real news. Some examples of anger are “states closed gun shops and promise to arrest you if you leave your home”, “california officials … have been emptying prisons, … to prevent the spread of the coronavirus …release of seven sex offenders who are considered high risk criminals” and “too slow to respond to the coronavirus crisis”. We also found that joy is the least common emotion and shows no significant difference in concentration between the two types of news. Our results regarding anger and fear are in line with the literature showing that deceptive messages overall have a stronger association with negative emotions (Newman et al. 2003). These negative emotions may help fake messages spread quickly, as found by Wu, Tan, Kleinberg, and Macy (2011) in the context of tweets. However, our findings about sadness and joy diverge from those of Vosoughi et al. (2018), who found that true stories distributed over Twitter are associated with more joy and sadness than false stories. The inconsistent findings about sadness and joy may result from differences in the media space (i.e., Twitter vs. news websites) and/or the topic of the news (non-COVID-19 vs. COVID-19). Future fake news studies should also go beyond the valence (i.e., positive vs. negative) of emotions and focus more on discrete emotions to better differentiate these two types of news.

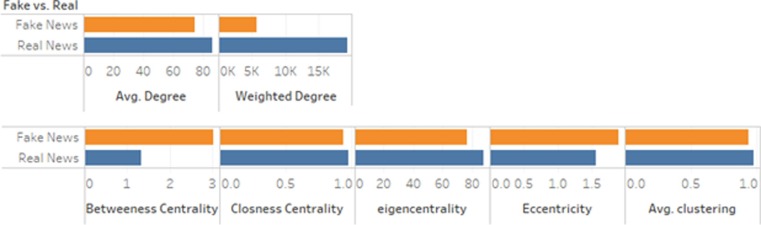

4.3. Network analytics results

While the topic modeling and emotion analysis approaches provide valuable insights into various characteristics of real and fake news along with the emergent themes, they do not explain the inter-topical connectedness and network properties embedded within the news. The network characteristics of COVID-19 fake and real news provide unique insights into the structural makeup and linkage properties of important topics within the entire corpus. We derive separate undirected networks for fake and real news, with nodes representing bigrams obtained using bag-of-words approaches and edges between two nodes indicating whether the two bigrams co-occur in a single news item. We then compare various network metrics for COVID-19 fake and real news. Fig. 4 summarizes the differences between fake and real news on six key network parameters: Average Degree, Average Weighted Degree, Average Betweenness Centrality, Closeness Centrality, Eigenvector Centrality, and Eccentricity.

Fig. 4.

Comparison of fake and real news based on network characteristics.

The average degree of a network, i.e., the average number of distinct nodes that each node is connected to through its edges, is higher for real news (86 for real vs. 75 for fake). This may suggest that a single fake news item often focuses on a less diverse set of topics, which may help keep the reader’s attention on a relatively narrow set of topics rather than the much wider range of connected topics found in real news. For example, the fake news item posted by Walker (2020) only covers topic 4 in Table 2, regarding the origin and spread of the virus. The higher average degree for real news shows that, on average, its topics are more connected and are discussed in the context of other topics. Therefore, a single real news item often covers more than one related topic. For example, the real news item posted by Barone (2020) covers the restriction on meat purchases at Kroger (i.e., topic 3 in Table 3) while, at the same time, emphasizing the need to enforce the preventative guidelines of the CDC (i.e., topic 1 in Table 3). Likewise, we found that the average weighted degree is higher for the real news network than for the fake news network (19303 for real vs. 5757 for fake). Weighted degree reflects the frequency of ties, which serves as the weight of an edge between any two connected nodes. It is computed by first summing the weights of the ties that each node shares with the other connected nodes in the network and then taking the mean over the entire network. This implies that ties are stronger in real news than in fake news, which again suggests that a single real news item tends to cover a wider range of related topics. These measures could serve as distinguishing features for developing network-based recommendation systems. Fig. 5a and Fig. 5b depict the entire fake and real news networks by weighted degree, with node size describing the importance of a node within the network.
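Both averages can be computed directly from a weighted edge list, since the average degree equals 2|E|/|V| and the average weighted degree equals twice the total edge weight divided by |V|. The sketch below assumes a hypothetical mapping from undirected `(u, v)` node pairs to co-occurrence weights, with each edge listed once and no self-loops; nodes without any edge are not counted, which is an assumption of the sketch. The helper name `degree_stats` is hypothetical.

```python
from collections import defaultdict
from typing import Dict, Tuple


def degree_stats(weights: Dict[Tuple[str, str], float]) -> Tuple[float, float]:
    """Return (average degree, average weighted degree) of an undirected graph.

    `weights` maps each undirected edge (u, v), listed once, to its weight.
    Only nodes that appear in at least one edge are counted.
    """
    degree: Dict[str, int] = defaultdict(int)
    strength: Dict[str, float] = defaultdict(float)
    for (u, v), w in weights.items():
        for node in (u, v):
            degree[node] += 1    # one more distinct neighbor
            strength[node] += w  # add the tie frequency
    n = len(degree)
    if n == 0:
        return 0.0, 0.0
    return sum(degree.values()) / n, sum(strength.values()) / n
```

For a triangle whose edges have weights 2, 1 and 3, every node has degree 2 and the node strengths are 3, 5 and 4, giving an average degree of 2.0 and an average weighted degree of 4.0.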

Fig. 5a.

Weighted degree for fake news.

Fig. 5b.

Weighted degree for real news.

Betweenness centrality measures how often a particular bigram lies on the shortest paths between pairs of other bigrams. We found the average betweenness centrality to be higher for fake news (approx. 3 for fake vs. approx. 1.3 for real). This implies that more terms within fake news serve as bridges between other terms, whereas real news has fewer nodes that serve this purpose. Appendix B shows the betweenness centrality for the entire fake and real news networks, respectively, with the importance of each node reflected by the size of its circle.
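The sketch below computes unnormalized betweenness centrality with Brandes' algorithm, treating the co-occurrence network as unweighted and undirected. Both are assumptions of the sketch; it is not Gephi's implementation, and `betweenness` is a hypothetical helper name. Each unordered pair of endpoints is counted once, so on a path a-b-c the middle node scores 1.

```python
from collections import deque
from typing import Dict, List


def betweenness(adj: Dict[str, List[str]]) -> Dict[str, float]:
    """Unnormalized betweenness of an unweighted, undirected graph (Brandes' algorithm)."""
    bc = {v: 0.0 for v in adj}
    for s in adj:
        stack: List[str] = []
        preds: Dict[str, List[str]] = {v: [] for v in adj}
        sigma = dict.fromkeys(adj, 0)  # number of shortest paths from s
        sigma[s] = 1
        dist = dict.fromkeys(adj, -1)  # -1 marks "not yet reached"
        dist[s] = 0
        queue = deque([s])
        while queue:  # breadth-first search from s
            v = queue.popleft()
            stack.append(v)
            for w in adj[v]:
                if dist[w] < 0:
                    dist[w] = dist[v] + 1
                    queue.append(w)
                if dist[w] == dist[v] + 1:  # v precedes w on a shortest path
                    sigma[w] += sigma[v]
                    preds[w].append(v)
        delta = dict.fromkeys(adj, 0.0)
        while stack:  # accumulate dependencies in order of decreasing distance
            w = stack.pop()
            for v in preds[w]:
                delta[v] += sigma[v] / sigma[w] * (1 + delta[w])
            if w != s:
                bc[w] += delta[w]
    # every unordered pair was counted from both endpoints, so halve the totals
    return {v: c / 2 for v, c in bc.items()}
```

The network-level figure in Fig. 4 would then correspond to the mean of these per-node values, under whatever normalization the analysis tool applies.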

Closeness centrality is based on the average shortest geodesic distance (number of edges) from a given node to all other nodes in the network. Results show that closeness centrality is only marginally higher for real news; it is therefore not a good metric for inclusion in the design of COVID-19 fake news detection or recommendation systems. We evaluated the eigenvector centrality metric for both fake and real news networks and found the value to be smaller for the fake news network. Eigenvector centrality measures the connection of a node to other popular nodes within the network. In other words, it considers not only how many bigrams a particular bigram of interest is connected with but also the degree of the bigrams connected with it, i.e., the importance of friends of friends. This was found to be higher for fake news, implying that fake news includes more repetitive arguments in a single news item. The average eccentricity, i.e., the distance from a given node to the farthest node within the network, was found to be higher in fake news. The difference in eccentricity suggests that the fake news network is overall less connected than the real news network, which again suggests that a single fake news item often covers a narrow set of topics. On the other hand, the average clustering coefficient measures, for each vertex, the density of its 1.5-degree egocentric network (Hansen, Shneiderman, Smith, & Himelboim, 2020), averaged over all vertices. This value is higher if a bigram is connected to other bigrams (alters) that are also connected among themselves; in other words, friends of friends are connected. The real news network was found to have a higher average clustering coefficient, suggesting an overall higher level of connectedness. Such a situation is likely to be more common in real news owing to the presence of plausible arguments and supporting evidence drawn from other topics. This shows that the tendency of nodes to form cliques is slightly higher in real news and provides evidence that COVID-19 fake news is meant to appeal to a narrow set of topics of interest, while real news caters to a broad set of topics. Appendix B shows the fake and real news networks based on the eccentricity measure.
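The distance-based measures discussed above can be illustrated with breadth-first search on an unweighted, undirected adjacency map. In this hypothetical sketch, closeness is reported as the reciprocal of the mean distance to reachable nodes (tools differ here: some report the mean distance itself), eccentricity is the largest shortest-path distance from a node, and the clustering coefficient is the standard local coefficient, i.e., the number of links among a node's neighbors divided by the number of possible links, which corresponds to the density of the 1.5-degree ego network once the ego itself is excluded. Network-level values such as those in Fig. 4 would be averages of these per-node scores.

```python
from collections import deque
from itertools import combinations
from typing import Dict, Set


def bfs_distances(adj: Dict[str, Set[str]], source: str) -> Dict[str, int]:
    """Shortest-path distances (in edges) from `source` to every reachable node."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        v = queue.popleft()
        for w in adj[v]:
            if w not in dist:
                dist[w] = dist[v] + 1
                queue.append(w)
    return dist


def closeness(adj: Dict[str, Set[str]], v: str) -> float:
    """Reciprocal of the mean distance from v to the other reachable nodes."""
    others = [d for node, d in bfs_distances(adj, v).items() if node != v]
    return len(others) / sum(others) if others else 0.0


def eccentricity(adj: Dict[str, Set[str]], v: str) -> int:
    """Distance from v to the farthest reachable node."""
    return max(bfs_distances(adj, v).values())


def clustering(adj: Dict[str, Set[str]], v: str) -> float:
    """Share of possible links among v's neighbors that actually exist."""
    neighbors = adj[v]
    k = len(neighbors)
    if k < 2:
        return 0.0
    links = sum(1 for a, b in combinations(neighbors, 2) if b in adj[a])
    return links / (k * (k - 1) / 2)
```

In a triangle a-b-c with a pendant node d attached to c, node c has closeness 1.0, eccentricity 1 and clustering 1/3, while node a has closeness 0.75, eccentricity 2 and clustering 1.0.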

We performed an additional comparative analysis of the real and fake news data based on graph density and modularity (Table 5). The real news network was found to have a slightly higher density than the fake news network. Density is a measure of the completeness of a network and is computed as the ratio of the number of edges present to the total number of possible edges within the network. The higher density of the real news network could be attributed to more comprehensive and in-depth coverage in a news item. Modularity, on the other hand, is another measure of the structural strength of a network and indicates how well the network can be divided into smaller clusters, or modules. These modules contain nodes that have stronger ties with nodes within the same module but weaker ties with nodes in different modules. Fake news has higher modularity than real news, which may again suggest the more frequent use of repetitive arguments in fake news.
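Density and modularity can be sketched in the same style. The Python below is a minimal illustration under stated assumptions: `density` and `modularity` are hypothetical helper names, and `modularity` evaluates Newman's Q for a partition supplied by the caller, whereas Gephi searches for a high-modularity partition itself using the Louvain method. Here Q = Σ_c [W_in(c)/m - (S(c)/2m)^2], where m is the total edge weight, W_in(c) is the weight of edges inside community c, and S(c) is the total weighted degree of the nodes in c.

```python
from collections import defaultdict
from typing import Dict, Tuple


def density(n_nodes: int, n_edges: int) -> float:
    """Edges present divided by edges possible in a simple undirected graph."""
    return n_edges / (n_nodes * (n_nodes - 1) / 2) if n_nodes > 1 else 0.0


def modularity(weights: Dict[Tuple[str, str], float], community: Dict[str, int]) -> float:
    """Newman's modularity Q of a given partition of a weighted undirected graph."""
    m = sum(weights.values())                   # total edge weight
    internal: Dict[int, float] = defaultdict(float)
    strength: Dict[int, float] = defaultdict(float)
    for (u, v), w in weights.items():
        strength[community[u]] += w             # each edge adds w to both endpoints
        strength[community[v]] += w
        if community[u] == community[v]:
            internal[community[u]] += w         # weight of edges inside the community
    return sum(internal[c] / m - (strength[c] / (2 * m)) ** 2 for c in strength)
```

For a made-up graph of two disconnected triangles with unit weights, each triangle treated as its own community, Q = 2 × (3/6 - (6/12)^2) = 0.5 and the density is 6/15 = 0.4.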

Table 5.

Additional network characteristics for COVID-19 real and fake news.

| Network Characteristics | Real | Fake |
|---|---|---|
| Graph density | 0.97 | 0.926 |
| Modularity | 0.118 | 0.158 |

5. Discussion

Extant studies have largely examined fake news on social media platforms such as Twitter and Facebook. Our study represents an initial endeavor to explore fake news from news websites, which lays the groundwork for future studies along these lines. More importantly, our study systematically compared and contrasted COVID-19 fake news and real news using topic modeling, emotion analysis and network analytics, which provides important behavioral, design and theoretical implications for improving the effectiveness of counterspeech against COVID-19 fake news and for the design of centralized surveillance systems that curtail the spread of fake news.

5.1. Behavioral implications

First Amendment theory contends that counterspeech, i.e., more speech in the form of real news, is the principal remedy against fake news. Our findings from LDA topic modeling and network analysis carry important behavioral implications for supporting counterspeech through real news generation and distribution. First, the results of the network analysis suggest that COVID-19 real news has much more topic overlap than COVID-19 fake news. Each real news item often consists of more than one topic targeting a mixed audience, while an individual fake news item tends to have a more focused topic, catering to the interests of a specific audience. This narrow audience focus helps COVID-19 fake news writers craft more effective messages based on who the audience is and what they want, which, to a certain extent, explains why COVID-19 fake news goes viral. In the era of the COVID-19 pandemic, real news writers carry a critical social responsibility to inform and educate the public about the truth. To win the war against the COVID-19 infodemic, where real news and fake news compete for audience attention in the same digital space, writers of COVID-19 real news will have to better understand the different needs of news audiences and create different messages for subsets of audiences instead of broadcasting to the general audience. Real news created around more focused topics may help arouse individual interest and further enhance readers’ desire to spread COVID-19 real news, increasing the effectiveness of counterspeech. Readers may also choose to subscribe to focused COVID-19 topics that they are interested in and be notified of updates. In particular, real news companies could work closely with Google, Twitter and Facebook to identify COVID-19 topics on social media that are just starting to trend and write more focused, truthful news to reduce the noise of COVID-19 fake news in the digital space.
In addition, influential individuals such as celebrities play a key role in spreading news content and generate most of the social media engagement (Brennen et al., 2020). Therefore, just as these influential individuals may spread fake news, they could also be leveraged to spread more COVID-19 real news or to launch counterspeech against the spread of COVID-19 fake news. Real news companies such as the New York Times need to collaborate closely with these public figures to distribute the truth about COVID-19 through social media platforms.

5.2. Design implications

This study has strong implications for the development of autonomous, trustworthy COVID-19 fake news detection systems that use the approaches demonstrated in this study as their core. Most recent efforts to develop AI-based fake news detection approaches have focused on specific areas (such as politics) and, therefore, bear little contextual relevance to COVID-19. Such systems are incapable of recognizing COVID-19 fake news due to a lack of specific contextual understanding derived from topics, themes, emotions, and network characteristics. Hence, the findings from other fake news detection systems may not generalize to COVID-19 situations, rendering them ineffective for COVID-19 fake news detection. The cost of such a failure is high, since the consumption of COVID-19 fake news not only skews an individual’s decision-making but also has serious societal implications.

Further, most recent fake news detection models developed for contexts other than COVID-19 utilize only a limited set of methodological approaches. For example, Zhang et al. (2019) developed FEND for fake news detection using topic modeling on a general political dataset. Cook, Waugh, Abdipanah, Hashemi, and Rahman (2014) used network analytic approaches to understand information propagation on social media for veracity evaluation in a political campaign. Using a combination of methodological approaches can foster improved identification of COVID-19 fake news and of the non-obvious inter-topical relationships embedded in it. This study provides the theoretical and methodological grounding for developing such ‘systems of the future’ that use a combination of analytics approaches to promote healthy counterspeech against the COVID-19 infodemic. Such systems could be designed as real-time systems that use a high-performance computing paradigm, with the three-pronged analytical approach proposed in this study at their core. Topic modeling, emotion analysis and network analytics could be performed dynamically to build up-to-date, distinctive digital fingerprints of COVID-19 fake news on the fly. Real-time recommendation systems could check each unverified COVID-19 news item against these fingerprints, decide on its credibility and send recommendations to different stakeholders.

Fig. 6 describes the schematic of the proposed real-time, AI-based, trustworthy fake news detection, alert and recommendation systems for the COVID-19 and post-COVID-19 stages. Web crawlers scrape various websites to populate a repository of vetted COVID-19 fake and real news (Appendix C). This repository could then be used as the data source for real-time analytics. The real-time detection system could be implemented using the Resilient Distributed Dataset (RDD) or DataFrame processing structures of an in-memory system such as Apache Spark, whose Spark Streaming component supports time-window processing. After pre-processing, data are fed to the three components of the model presented in this study. The first component performs linguistic feature extraction, topic modeling and thematic analysis to identify distinguishing features of COVID-19 fake and real news using the approaches described in this study. The second component of the proposed comprehensive model performs emotion analysis. While prior research has looked into sentiment analysis of fake news in different contexts, models that support emotion analysis of COVID-19 news provide unique insights into emotional characteristics such as anger, fear and sadness that, as seen in this study, vary across fake and real news. Finally, the third component provides insights into the connectedness and network characteristics of topics within COVID-19 fake news, thus providing guidelines for developing an effective recommendation system. Such approaches could be combined with various document similarity measures. This system will provide improved COVID-19 alerts and recommendations that could be routed to the general population, influential individuals (e.g., celebrities), counterspeech bots, advertising companies, statutory bodies, and other stakeholders such as fact-checking websites. As the COVID-19 pandemic passes through successive phases, fake news topics will change, so these models will need continuous updates to remain relevant for the COVID-19 and post-COVID-19 phases.
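As a rough illustration of checking an unverified item against fake and real news fingerprints with a document similarity measure, the sketch below compares sparse feature vectors by cosine similarity. Everything here is hypothetical: the feature names, the idea of summarizing each class by a single centroid vector, the helper names `cosine` and `closer_fingerprint`, and the `margin` threshold are illustrative assumptions rather than part of the design in Fig. 6. In practice, features on different scales (e.g., emotion percentages and network metrics) would need to be normalized first.

```python
import math
from typing import Dict

Vector = Dict[str, float]  # sparse feature vector: feature name -> value


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity of two sparse vectors (0.0 if either is all zeros)."""
    dot = sum(value * b.get(key, 0.0) for key, value in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def closer_fingerprint(item: Vector, fake_fp: Vector, real_fp: Vector,
                       margin: float = 0.0) -> str:
    """Label an item by whichever hypothetical fingerprint it is more similar to."""
    sim_fake = cosine(item, fake_fp)
    sim_real = cosine(item, real_fp)
    if sim_fake - sim_real > margin:
        return "likely fake"
    if sim_real - sim_fake > margin:
        return "likely real"
    return "uncertain"  # too close to call: route to human fact checkers
```

A positive `margin` trades coverage for caution: more items fall into the "uncertain" band, which fits the guideline below of keeping human expertise in the loop.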

Fig. 6.

Trustworthy Real-time AI systems for fake news detection, alert and recommendation for COVID-19 and post COVID-19 stages.

Based on the findings of this study and the proposed holistic design in Fig. 6, we provide the following seven actionable guidelines to combat COVID-19 fake news. First, as evident from the topic and thematic analysis, we need a continuously updated knowledge repository of COVID-19 facts on topics such as the effectiveness of masks, virus origins, spreading mechanisms, disease symptoms, and prevention and mitigation mechanisms (e.g., social distancing and masks). Second, as suggested by the emotion analysis, COVID-19 fake news carries stronger negative emotions than real news. This is likely to continue as we go through the various phases of the pandemic lifecycle. The designed systems and human intelligence need to be sensitive to these emotions for improved recognition of COVID-19 fake news. Third, the network analytics approach demonstrated in this study provides deep insights into how COVID-19 fake news terms are intertwined within different fake news topics. Future fake news detection systems will improve by considering network features such as weighted degree, betweenness centrality and eccentricity, which add depth to detection tools beyond semantics. Fourth, the detection of COVID-19 fake news can be facilitated by techniques that assess the extent of subjectivity and objectivity, such that news with higher subjectivity could be flagged. Fifth, specialized vocabularies and dictionaries are needed to develop effective machine-based fake news detection systems. The results of this study provide an initial list for developing such systems of the future. These vocabularies and dictionaries have to be continuously updated as the COVID-19 pandemic evolves through its lifecycle stages. Sixth, human intelligence and domain expertise need to be integrated into holistic AI-based systems to augment system performance. Examples include checking the authenticity of news and determining the shelf life of historical data and of scientific facts about vaccine risks and benefits. Lastly, to promote healthy counterspeech about COVID-19 in the digital media space, COVID-19 recommendation systems need to push information about news credibility, and alerts about high-risk fake news with severe consequences, to a variety of stakeholders. For example, members of the general population and celebrities who receive mobile alerts about high-risk COVID-19 fake news would be less likely to consume and share it, which helps deter the flow of fake news. Technology companies and governmental agencies could also consider using counterspeech bots to amplify the dissemination of COVID-19 real news. In addition, to win the war against the COVID-19 infodemic, it is necessary to jointly involve advertising companies and statutory bodies to enforce incentive alignment mechanisms and sanctions and to hold fake news sources accountable. For example, websites generating and/or posting COVID-19 fake news could receive sanctions such as warnings, reduced payments, fines or even closure.

5.3. Theoretical implications

Following the guidelines of Alvesson and Sandberg (2011), this study challenges the assumption of bounded rationality used in the extant fake news literature to explain why people believe and distribute fake news. As a result of this assumption, current research efforts to resolve the issue of fake news center on providing credibility labels for online news while ignoring the prevalent influence of confirmation bias on news readers. We argue that solutions to the viral phenomenon of fake news should go beyond fake news identification/labeling and be extended to facilitate counterspeech in the digital media space, such that individuals have less exposure to fake news and thus less opportunity to exercise confirmation bias. In addition, our study used First Amendment Theory (FAT) as its foundation and argued that it is critical to design solutions that holistically address the generation, dispersion and consumption of fake news and regain the media space for healthy counterspeech. In particular, we extended the counterspeech approach of FAT from the context of traditional mass communication to the context of digital media as a solution to combat fake news. We also proposed detailed suggestions centered on a trustworthy AI system to elaborate the counterspeech approach of FAT in the digital media space (Fig. 6). Our suggestions emphasize not only technical measures based on text and network analytics but also the involvement of key stakeholders, e.g., celebrities, advertising companies and statutory bodies. Therefore, our theory-based counterspeech approach provides a much-needed new perspective for future research on fake news and could serve as the foundation for future studies to build on the counterspeech approach of FAT.

Finally, we would like to point out some limitations of this study and related avenues for future research. One limitation relates to the time frame of the news items analyzed: we only collected data in the early stage of COVID-19, up until early May 2020. Future research could collect more COVID-19 news and perform time-series analysis to examine the potential evolution of topics and emotions across different stages of the pandemic. Moreover, our study only examines COVID-19 fake and real news written by professionals. Our findings have yet to be verified by future studies across different digital media platforms such as Twitter and YouTube. For example, our findings from topic modeling and network analytics might not fully extend to tweets disseminated on social media, since tweets can contain at most 280 characters. Lastly, we only analyzed news written in English. It would be interesting for future studies to corroborate our findings using multilingual analysis.

6. Conclusion

We are now inundated by the COVID-19 infodemic, and much remains to be accomplished in our battle against it, both now and in the years to come, on issues related to COVID-19 causes and treatments, vaccine adoption and post-COVID symptoms. This paper examines the nature and characteristics of COVID-19 fake news and real news and identifies distinguishing features of COVID-19 fake news in three dimensions: topics and themes, emotions, and network characteristics. A deeper understanding of fake news features across these dimensions, together with First Amendment Theory, lays the foundation for designing trustworthy real-time AI-based detection, alert and recommendation systems for COVID-19 fake news and improves the understanding of the behavioral characteristics embedded within COVID-19 fake and real news.

Declaration of Competing Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Biographies

Ashish Gupta is Professor of Business Analytics in the Raymond J. Harbert College of Business at Auburn University. His research interests are in the areas of misinformation, data analytics, healthcare informatics, sports analytics, and organizational and individual performance. His recent articles have appeared in journals such as Journal of Business Research, European Journal of Information Systems, Decision Sciences Journal, European Journal of Operational Research, MIT Sloan Management Review, Risk Analysis, Journal of the American Medical Informatics Association, Journal of Biomedical Informatics, IEEE Transactions, Information Systems Journal, Decision Support Systems, Information Systems Frontiers, Communications of the Association for Information Systems, etc. He has published 5 edited books. Professor Gupta’s research has been supported by various grant agencies such as THEC, DHS, and several private organizations.

Han Li is an Associate Professor of MIS and information assurance at the University of New Mexico, USA. She received her doctorate in management information systems from Oklahoma State University. She has published in Journal of the Association for Information Systems, Decision Sciences, Decision Support Systems, Operations Research, European Journal of Information Systems, Information Systems Journal, European Journal of Operational Research, Journal of Organizational and End User Computing, Journal of Computer Information Systems, and other journals. She is an associate editor for Journal of Electronic Commerce Research. Her current research interests include fake news, health IT, privacy and confidentiality, data and information security, and the adoption and post-adoption of information technology.

Alireza Farnoush is a doctoral student in the Department of Industrial and Systems Engineering. His research focuses on text mining applications.

Wenting ‘Kayla’ Jiang is a doctoral student in the Department of Systems and Technology within the College of Business. Her research focuses on misinformation and text mining.

Footnotes

Supplementary data to this article can be found online at https://doi.org/10.1016/j.jbusres.2021.11.032.

Appendices A, B, C and D. Supplementary material


References

- Alvesson Mats, Sandberg Jorgen. Generating research questions through problematization. Academy of Management Review. 2011;36(2):247–271.

- Ball Philip, Maxmen Amy. The epic battle against coronavirus misinformation and conspiracy theories. Nature. 2020;581(7809):371–374. doi: 10.1038/d41586-020-01452-z.

- Bastani Peivand, Bahrami Mohammad Amin. COVID-19 Related Misinformation on Social Media: A Qualitative Study from Iran. Journal of Medical Internet Research. 2020. doi: 10.2196/18932.

- Zhao Yuehua, Zhang Jin, Wu Min. Finding Users’ Voice on Social Media: An Investigation of Online Support Groups for Autism-Affected Users on Facebook. International Journal of Environmental Research and Public Health. 2019;16(23):4804. doi: 10.3390/ijerph16234804.

- Sievert Carson, Shirley Kenneth. LDAvis: A method for visualizing and interpreting topics. Proceedings of Workshop on Interactive Language Learning, Visualization, and Interfaces, Association for Computational Linguistics. 2014:63–70.

- Blei David M., Ng Andrew Y., Jordan Michael I. Latent dirichlet allocation. Journal of Machine Learning Research. 2003;3(Jan):993–1022.

- Brennen J. Scott, Simon Felix M., Howard Philip N., Nielsen Rasmus-Kleis. Types, sources, and claims of Covid-19 misinformation. Reuters Institute. 2020;7(3):1.

- Casero-Ripollés Andreu. Impact of Covid-19 on the media system. Communicative and democratic consequences of news consumption during the outbreak. El profesional de la información. 2020;29(2).

- Cinelli Matteo, Quattrociocchi Walter, Valensise Carlo Michele, Brugnoli Emanuele, Schmidt Ana Lucia, Zola Paola.…Scala Antonio. The COVID-19 social media infodemic. Scientific Reports. 2020;10(1):1–10.

- Clarke Jonathan, Chen Hailiang, Du Ding, Hu Yu Jeffrey. Fake news, investor attention, and market reaction. Information Systems Research. 2020;32(1):35–52.

- Cook David M., Waugh Benjamin, Abdipanah Maldini, Hashemi Omid, Rahman Abdul Rahman. Twitter deception and influence: Issues of identity, slacktivism, and puppetry. Journal of Information Warfare. 2014;13(1):58–71.

- Cuan-Baltazar Jose Yunam, Muñoz-Perez Maria José, Robledo-Vega Carolina, Pérez-Zepeda Maria Fernanda, Soto-Vega Elena. Misinformation of COVID-19 on the internet: infodemiology study. JMIR Public Health and Surveillance. 2020;6(2). doi: 10.2196/18444.

- Depoux Anneliese, Martin Sam, Karafillakis Emilie, Preet Raman, Wilder-Smith Annelies, Larson Heidi. The pandemic of social media panic travels faster than the COVID-19 outbreak Editorial. Coronavirus misinformation needs researchers to respond. Nature. 2020;581(7809):355–356.

- Gu Simeng, Wang Fushun, Patel Nitesh P., Bourgeois James A., Huang Jason H. A model for basic emotions using observations of behavior in Drosophila. Frontiers in Psychology. 2019;10:781. doi: 10.3389/fpsyg.2019.00781.

- Hansen Derek L., Shneiderman Ben, Smith Marc A., Himelboim Itai. Chapter 3 - Social network analysis: Measuring, mapping, and modeling collections of connections. In: Hansen Derek L., Shneiderman Ben, Smith Marc A., Himelboim Itai, editors. Analyzing Social Media Networks with NodeXL. Second Edition. Morgan Kaufmann; 2020. pp. 31–51.

- Honnibal Matthew, Montani Ines. spaCy 2: Natural language understanding with Bloom embeddings, convolutional neural networks and incremental parsing. To appear. 2017;7(1):411–420.

- Ineichen Christian, Christen Markus. Analyzing 7000 texts on deep brain stimulation: What do they tell us? Frontiers in Integrative Neuroscience. 2015;9:52. doi: 10.3389/fnint.2015.00052.

- Kim Antino, Moravec Patricia L., Dennis Alan R. Combating fake news on social media with source ratings: The effects of user and expert reputation ratings. Journal of Management Information Systems. 2019;36(3):931–968.

- Laato Samuli, Islam A.K.M. Najmul, Islam Muhammad Nazrul, Whelan Eoin. What drives unverified information sharing and cyberchondria during the COVID-19 pandemic? European Journal of Information Systems. 2020;29(3):288–305.

- Lazarus R.S. Emotion and Adaptation. Oxford University Press; New York: 1991.

- Li Weifeng, Chen Hsinchun, Nunamaker Jay F. Identifying and profiling key sellers in cyber carding community: AZSecure text mining system. Journal of Management Information Systems. 2016;33(4):1059–1086.

- Li Yan, Thomas Manoj A., Liu Dapeng. From semantics to pragmatics: Where IS can lead in Natural Language Processing (NLP) research. European Journal of Information Systems. 2021;30(5):569–590.

- Ellmann Mathias, Oeser Alexander, Fucci Davide, Maalej Walid. Find, understand, and extend development screencasts on YouTube. Proceedings of the 3rd ACM SIGSOFT International Workshop on Software Analytics. 2017:1–7.

- Huang Binxuan, Carley Kathleen M. Disinformation and misinformation on Twitter during the novel coronavirus outbreak. arXiv preprint arXiv:2006.04278. 2020.

- LIWC. Discover LIWC2015. Retrieved from liwc.wpengine.com. Accessed August 10, 2021.

- Lu Yue, Mei Qiaozhu, Zhai ChengXiang. Investigating task performance of probabilistic topic models: An empirical study of PLSA and LDA. Information Retrieval. 2011;14(2):178–203.

- Mena Paul. Cleaning up social media: The effect of warning labels on likelihood of sharing false news on Facebook. Policy & Internet. 2020;12(2):165–183.

- Mohammad Saif M., Turney Peter D. Crowdsourcing a Word-Emotion Association Lexicon. Computational Intelligence. 2013;29(3):436–465.

- Morales Aythami, Acien Alejandro, Fierrez Julian, Monaco John V., Tolosana Ruben, Vera Ruben, Ortega-Garcia Javier. Keystroke biometrics in response to fake news propagation in a global pandemic. IEEE 44th Annual Computers, Software, and Applications Conference (COMPSAC). 2020:1604–1609.

- Moravec Patricia L., Kim Antino, Dennis Alan R. Appealing to sense and sensibility: System 1 and system 2 interventions for fake news on social media. Information Systems Research. 2020;31(3):987–1006.

- Mortenson Michael J., Vidgen Richard. A computational literature review of the technology acceptance model. International Journal of Information Management. 2016;36(6):1248–1259.

- Motta Matt, Stecula Dominik, Farhart Christina. How right-leaning media coverage of COVID-19 facilitated the spread of misinformation in the early stages of the pandemic in the US. Canadian Journal of Political Science/Revue canadienne de science politique. 2020;53(2):335–342.

- Napoli Philip M. What if more speech is no longer the solution: First Amendment theory meets fake news and the filter bubble. Fed Comm LJ. 2018;70:55.

- Newman Matthew L., Pennebaker James W., Berry Diane S., Richards Jane M. Lying words: Predicting deception from linguistic styles. Personality and Social Psychology Bulletin. 2003;29(5):665–675. doi: 10.1177/0146167203029005010.

- Nickerson Raymond S. Confirmation bias: A ubiquitous phenomenon in many guises. Review of General Psychology. 1998;2(2):175–220.

- Papanastasiou Yiangos. Fake News Propagation and Detection: A Sequential Model. Management Science. 2020;66(5):1826–1846.

- Pennycook Gordon, McPhetres Jonathon, Bago Bence, Rand David G. Beliefs about COVID-19 in Canada, the United Kingdom, and the United States: A novel test of political polarization and motivated reasoning. Personality and Social Psychology Bulletin. 2021:1–16. doi: 10.1177/01461672211023652. In press.

- Pennycook Gordon, Bear Adam, Collins Evan T., Rand David G. The implied truth effect: Attaching warnings to a subset of fake news headlines increases perceived accuracy of headlines without warnings. Management Science. 2020;66(11):4944–4957.

- Pennycook Gordon, McPhetres Jonathon, Zhang Yunhao, Lu Jackson G., Rand David G. Fighting COVID-19 misinformation on social media: Experimental evidence for a scalable accuracy-nudge intervention. Psychological Science. 2020;31(7):770–780. doi: 10.1177/0956797620939054.

- Pietraszkiewicz Agnieszka, Formanowicz Magdalena, Senden Marie Gustafsson, Boyd Ryan L., Sikstrom Sverker, Sczesny Sabine. The big two dictionaries: Capturing agency and communion in natural language. European Journal of Social Psychology. 2019;49(5):871–887.

- Platanou Kalliopi, Mäkelä Kristiina, Beletskiy Anton, Colicev Anatoli. Using online data and network-based text analysis in HRM research. Journal of Organizational Effectiveness: People and Performance. 2018;5(1):81–97.

- Popping Roel. Computer-assisted text analysis. Sage Publications; 2000.

- Power M.J. The structure of emotion: An empirical comparison of six models. Cognition & Emotion. 2006;20(5):694–713.

- Reichert C. 5G coronavirus conspiracy theory leads to 77 mobile towers burned in UK, report says. CNet Health and Wellness. 2020.

- Satariano Adam, Alba Davey. Burning cell towers, out of baseless fear they spread the virus. The New York Times. 2020.

- Savov Pavel, Jatowt Adam, Nielek Radoslaw. Identifying breakthrough scientific papers. Information Processing & Management. 2020;57(2):102168. doi: 10.1016/j.ipm.2019.102168.

- Siering Michael, Koch Jascha-Alexander, Deokar Amit V. Detecting fraudulent behavior on crowdfunding platforms: The role of linguistic and content-based cues in static and dynamic contexts. Journal of Management Information Systems. 2016;33(2):421–455.

- Singh Lisa, Bansal Shweta, Bode Leticia, Budak Ceren, Chi Guangqing, Kawintiranon Kornraphop, Padden Colton, Vanarsdall Rebecca, Vraga Emily, Wang Yanchen. A first look at COVID-19 information and misinformation sharing on Twitter. arXiv preprint arXiv:2003.13907. 2020.

- Syed Nabiha. Real talk about fake news: Towards a better theory for platform governance. Yale LJF. 2017;127:337.